Progressive von Mises–Fisher Filtering Using Isotropic Sample Sets for Nonlinear Hyperspherical Estimation

Abstract

1. Introduction

2. Preliminaries

2.1. General Conventions of Notations

2.2. The von Mises–Fisher Distribution

2.3. Geometric Structure of Hyperspherical Manifolds

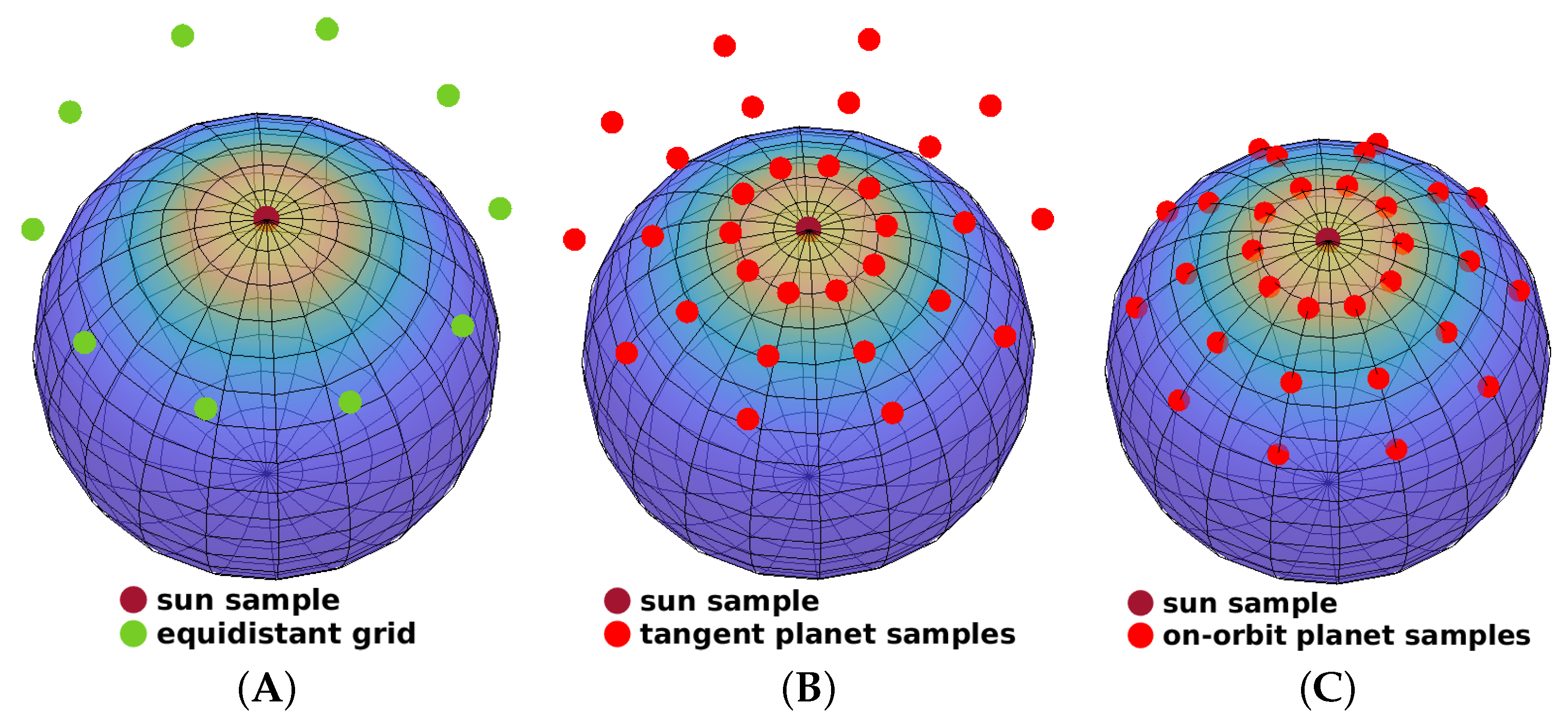

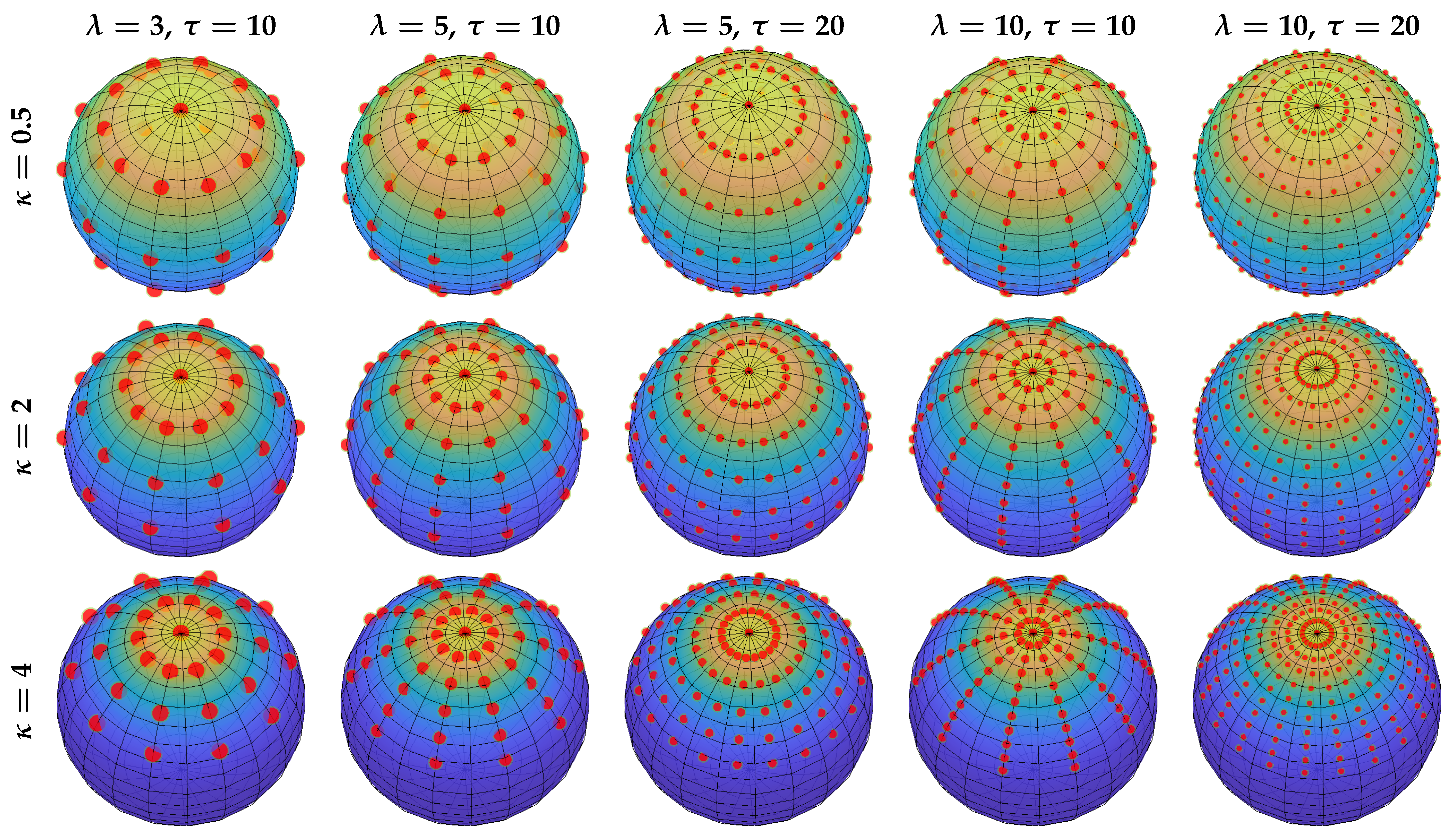

3. Isotropic Deterministic Sampling

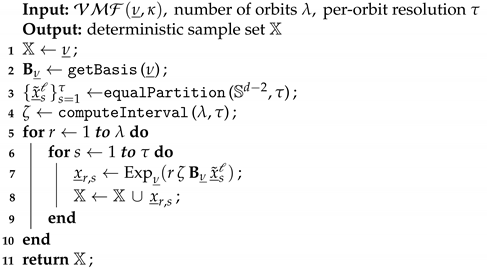

Algorithm 1: Isotropic Deterministic Sampling

3.1. Numerical Solution for Equation (5)

3.2. Example

4. Progressive Unscented von Mises–Fisher Filtering

4.1. Task Formulation

4.2. Prediction Step for Nonlinear von Mises–Fisher Filtering

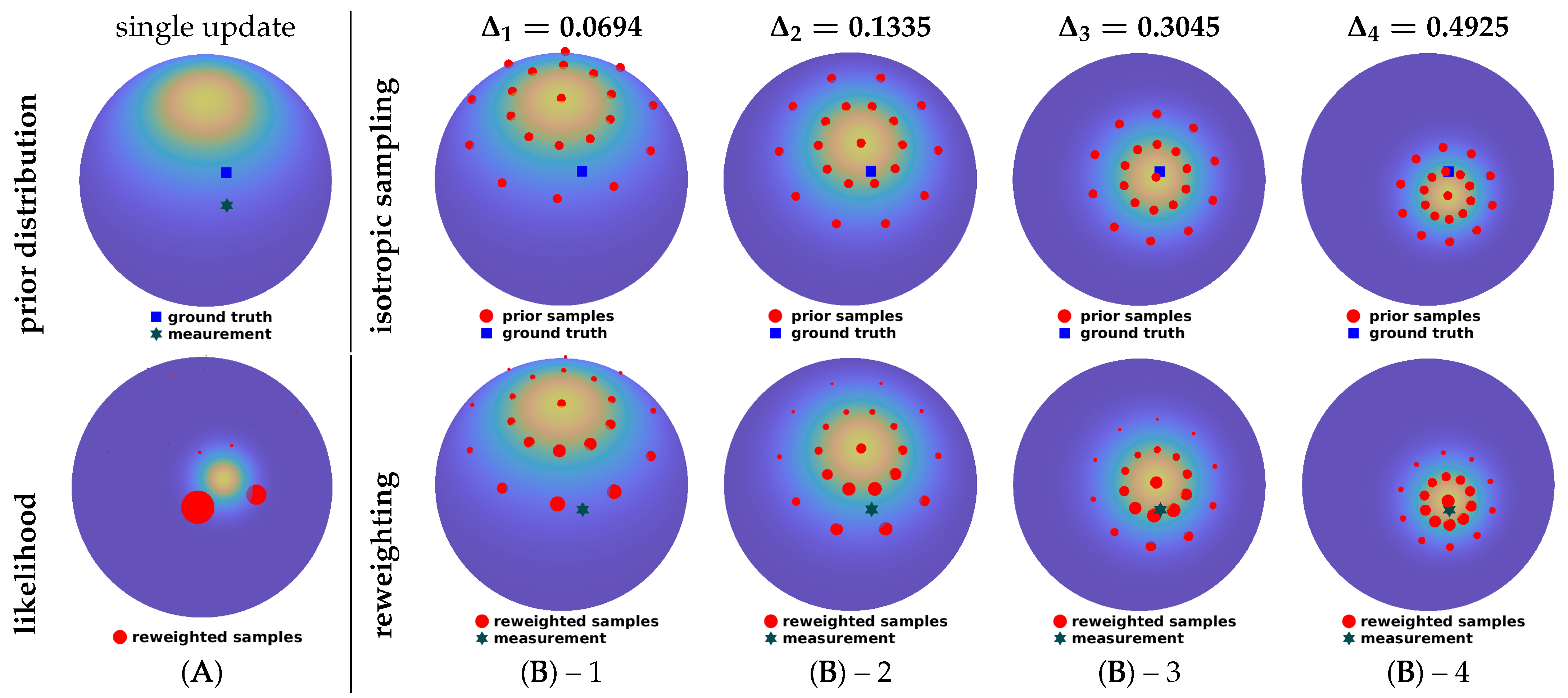

4.3. Deterministic Progressive Update Using Isotropic Sample Sets

Algorithm 2: Isotropic Progressive Update

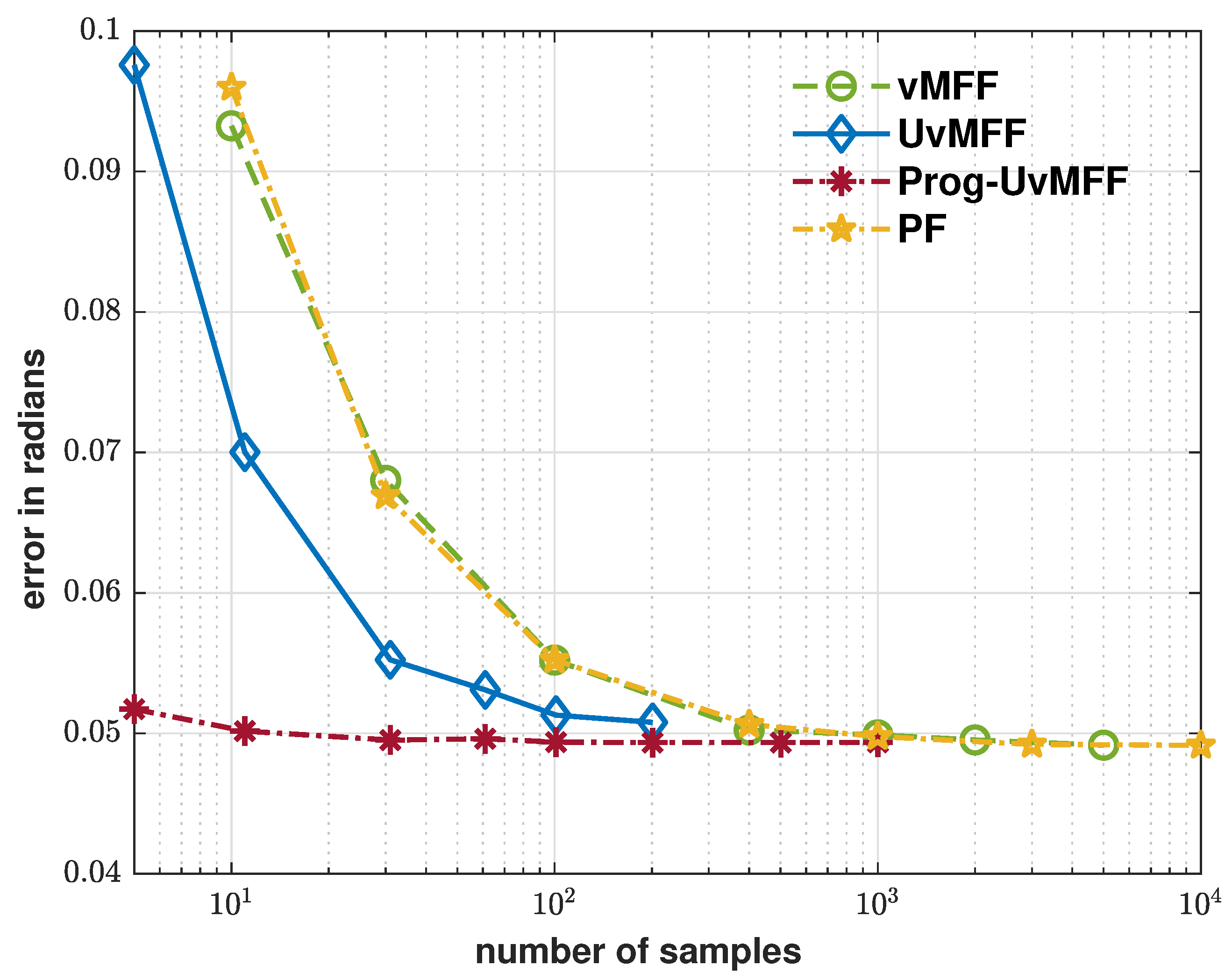

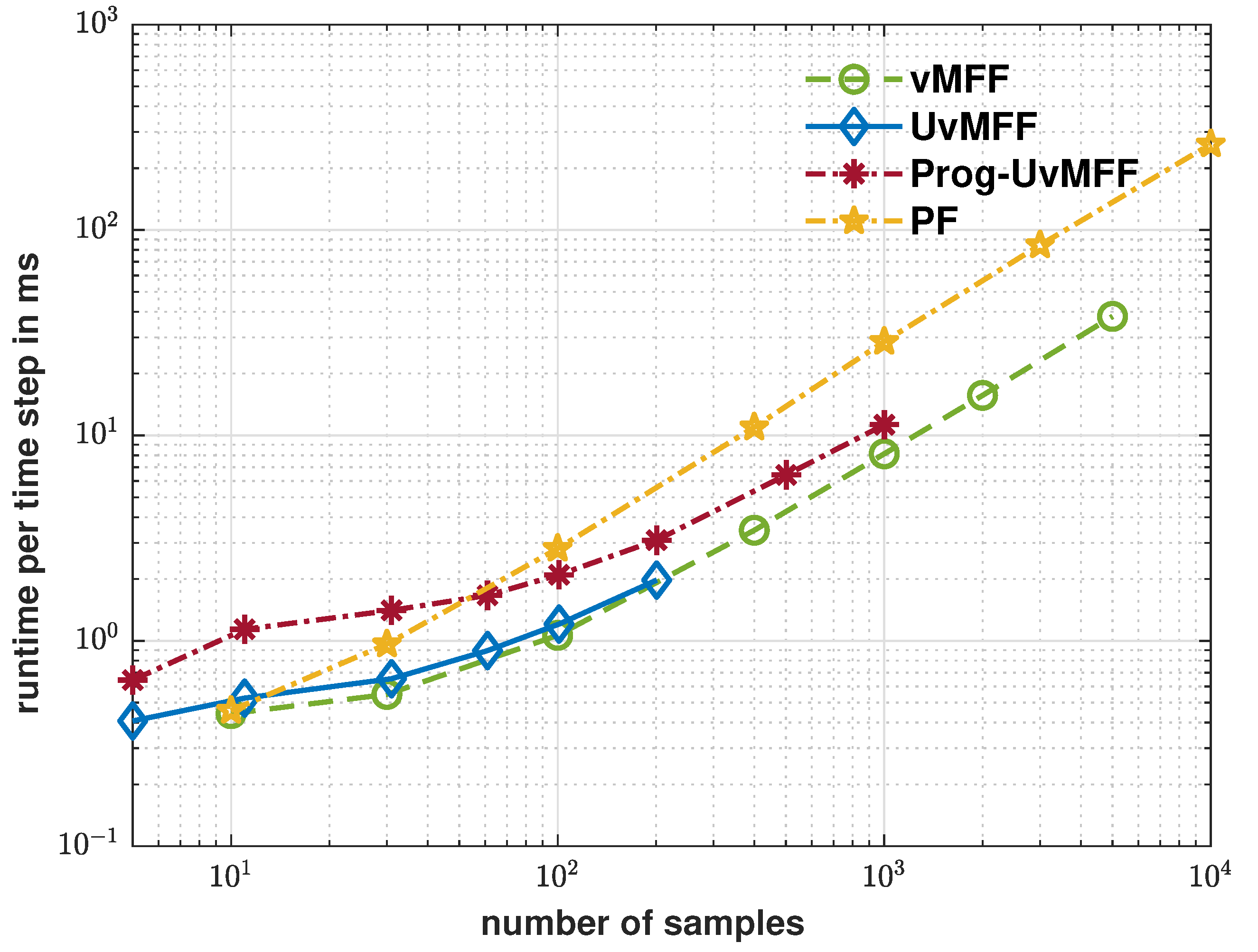

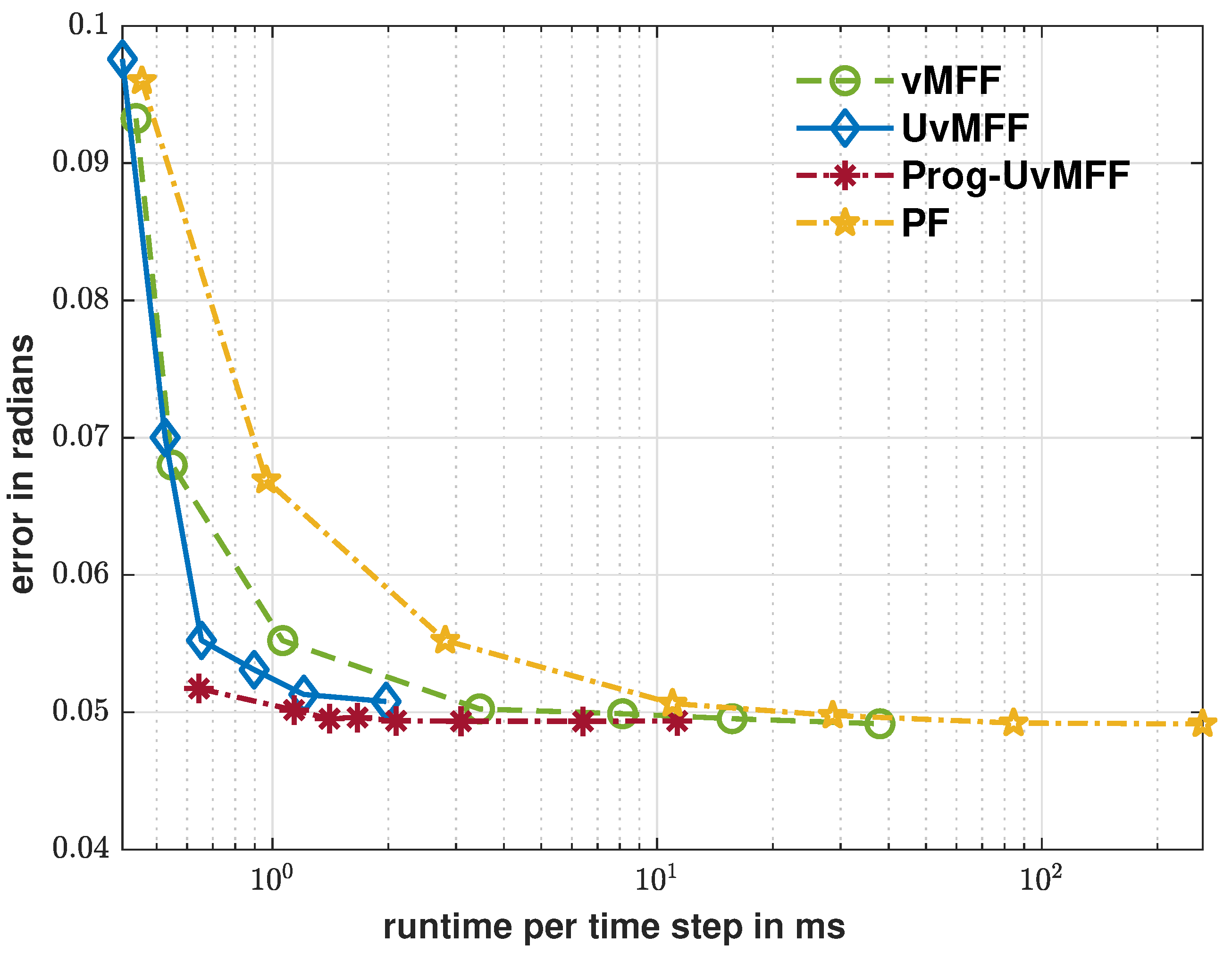

5. Evaluation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, K.; Pfaff, F.; Hanebeck, U.D. Progressive von Mises–Fisher Filtering Using Isotropic Sample Sets for Nonlinear Hyperspherical Estimation. Sensors 2021, 21, 2991. https://doi.org/10.3390/s21092991
