Combining RSSI and Accelerometer Features for Room-Level Localization

Abstract

1. Introduction

- Testing how effective accelerometer measurements are for the room-level localization task, which is in essence a classification problem.

- Examining the performance of features extracted from RSSI readings, based on the features suggested in [8].

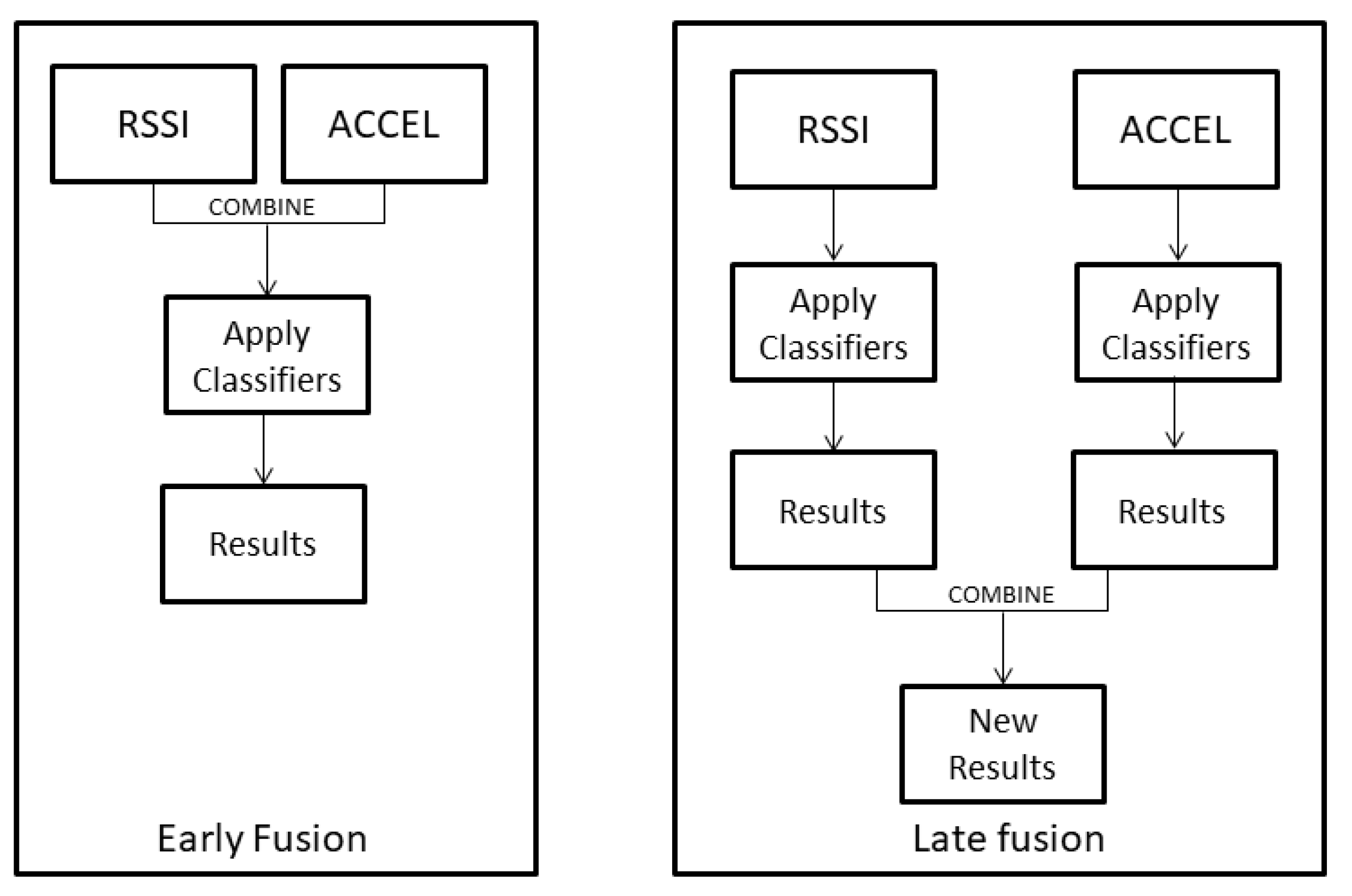

- Assessing the joint performance of RSSI and accelerometer data in room-level localization by combining them either at an early level or at the results level. To this end, we applied several ensemble learning methods that are rarely used for such tasks but are very common in the analysis of wearable sensor data for problems such as activity recognition or fall detection.

- We manipulated the initial dataset [11] in two different ways, which we later refer to as evaluation protocols, to apply the aforementioned framework. Finally, we compare the individual performance of the RSSI and accelerometer features with the performance of the concatenated features (early fusion) and of the late fusion algorithms.
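The difference between the two fusion levels named in the list above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the toy class probabilities, and the weights are hypothetical and chosen only for illustration. Early fusion concatenates the two feature vectors before a single classifier is trained, whereas late fusion combines the class scores produced by one classifier per modality.

```python
from typing import List, Sequence


def early_fusion(rssi_features: Sequence[float],
                 acc_features: Sequence[float]) -> List[float]:
    """Early fusion: concatenate both feature vectors into one input vector."""
    return list(rssi_features) + list(acc_features)


def late_fusion(prob_vectors: Sequence[Sequence[float]],
                weights: Sequence[float]) -> int:
    """Late fusion: weighted average of per-modality class scores, then argmax."""
    n_classes = len(prob_vectors[0])
    total = sum(weights)
    fused = [
        sum(w * probs[c] for w, probs in zip(weights, prob_vectors)) / total
        for c in range(n_classes)
    ]
    return max(range(n_classes), key=fused.__getitem__)


# Hypothetical class probabilities for three rooms from two separate classifiers.
rssi_probs = [0.6, 0.3, 0.1]
acc_probs = [0.1, 0.6, 0.3]

# Plain averaging gives both modalities the same weight.
averaged_room = late_fusion([rssi_probs, acc_probs], [1.0, 1.0])

# Weighted fusion trusts the (hypothetically) more accurate RSSI classifier more.
weighted_room = late_fusion([rssi_probs, acc_probs], [0.9, 0.3])

print(averaged_room, weighted_room)
```

In this toy case the two schemes disagree: averaging follows the accelerometer classifier, while the weighted variant follows the RSSI classifier, which is why the choice of weights matters in late fusion.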

2. Related Work

3. Methods

- (1) Initialization of the population of chromosomes.

- (2) Selection of the part of the population that survives, using the fitness function as the criterion.

- (3) Creation of a new generation of chromosomes through a combination of genetic operators: crossover and mutation.

  - Crossover is a genetic operation used to combine two parents to create a new chromosome.

  - Mutation is a genetic operation used to maintain diversity from one generation to the next.

- (4) Repetition of steps 2 and 3 until a termination condition is reached.
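The four steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the configuration used in the paper: the population size, number of generations, mutation rate, uniform crossover, Gaussian mutation, fixed-budget termination, and the toy validation data are all hypothetical. Each chromosome is taken to hold one fusion weight per classifier, and the fitness is the accuracy of the weighted late fusion on a validation set.

```python
import random
from typing import Callable, List, Sequence

Chromosome = List[float]  # one fusion weight per classifier (assumption)


def fusion_accuracy(weights: Sequence[float],
                    probs_per_model: Sequence[Sequence[Sequence[float]]],
                    labels: Sequence[int]) -> float:
    """Fitness: accuracy of the weighted sum of the models' class probabilities."""
    correct = 0
    for i, label in enumerate(labels):
        n_classes = len(probs_per_model[0][i])
        fused = [sum(w * model[i][c] for w, model in zip(weights, probs_per_model))
                 for c in range(n_classes)]
        if max(range(n_classes), key=fused.__getitem__) == label:
            correct += 1
    return correct / len(labels)


def run_ga(fitness: Callable[[Chromosome], float], n_genes: int,
           pop_size: int = 20, generations: int = 30,
           mutation_rate: float = 0.2, seed: int = 0) -> Chromosome:
    rng = random.Random(seed)
    # (1) Initialization: random weights in [0, 1].
    population = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]
    # (4) Repeat steps 2 and 3; the termination condition here is a fixed budget.
    for _ in range(generations):
        # (2) Selection: the fitter half of the population survives.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        # (3) New generation through crossover and mutation.
        children: List[Chromosome] = []
        while len(survivors) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            # Crossover: each gene is copied from one of the two parents.
            child = [rng.choice(genes) for genes in zip(parent_a, parent_b)]
            # Mutation: occasionally perturb a gene, clipped to [0, 1].
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, 0.1)))
                     if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = survivors + children
    return max(population, key=fitness)


# Toy validation data (hypothetical): two rooms, four samples.
labels = [0, 1, 0, 1]
rssi_probs = [[0.7, 0.3], [0.3, 0.7], [0.7, 0.3], [0.3, 0.7]]  # always correct
acc_probs = [[0.4, 0.6], [0.6, 0.4], [0.4, 0.6], [0.6, 0.4]]   # always wrong

best = run_ga(lambda w: fusion_accuracy(w, [rssi_probs, acc_probs], labels),
              n_genes=2)
print(best, fusion_accuracy(best, [rssi_probs, acc_probs], labels))
```

Keeping the survivors unchanged in the next generation means the best fitness found so far is never lost between generations; other selection schemes (for example, tournament or roulette-wheel selection) would be equally valid instances of step 2.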

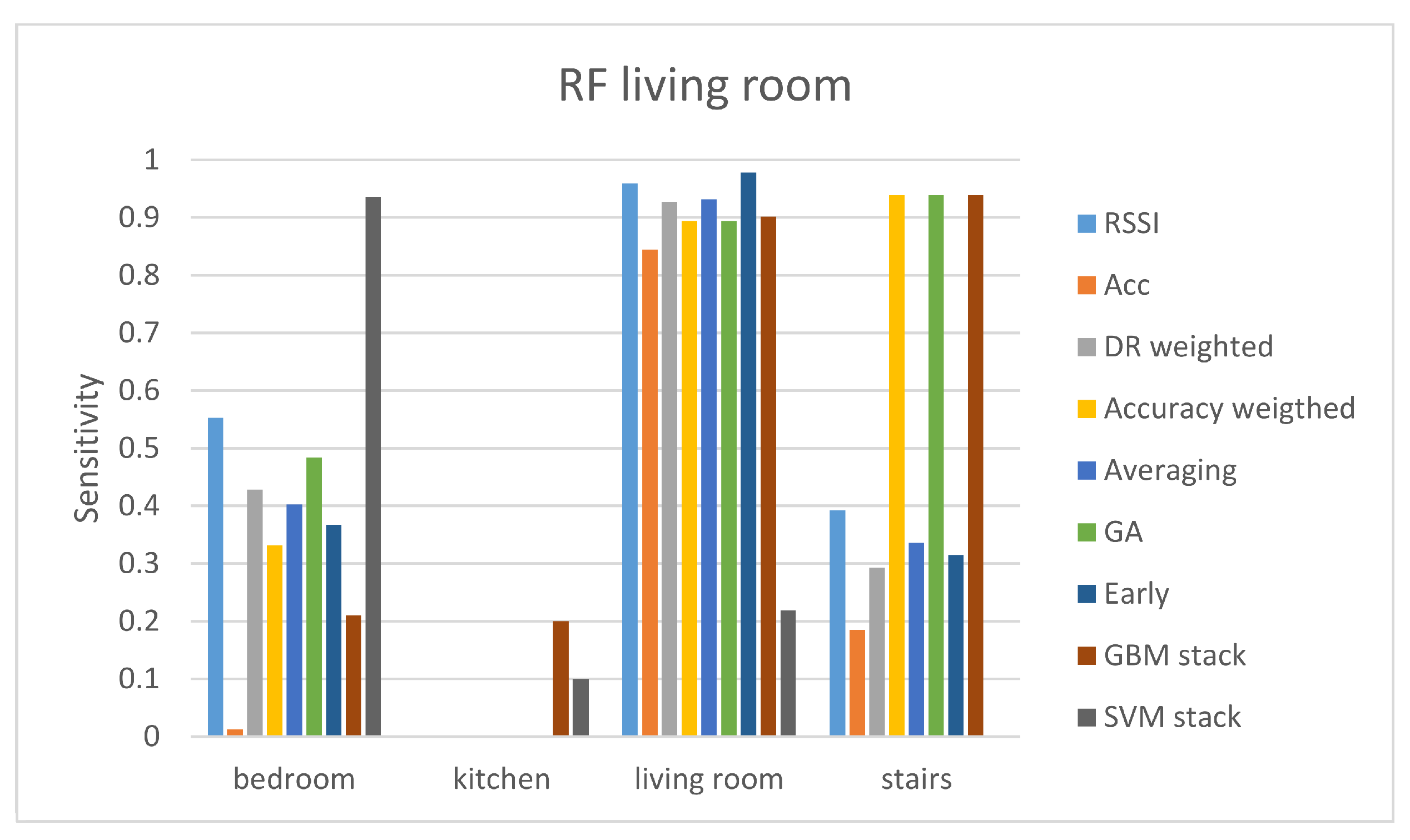

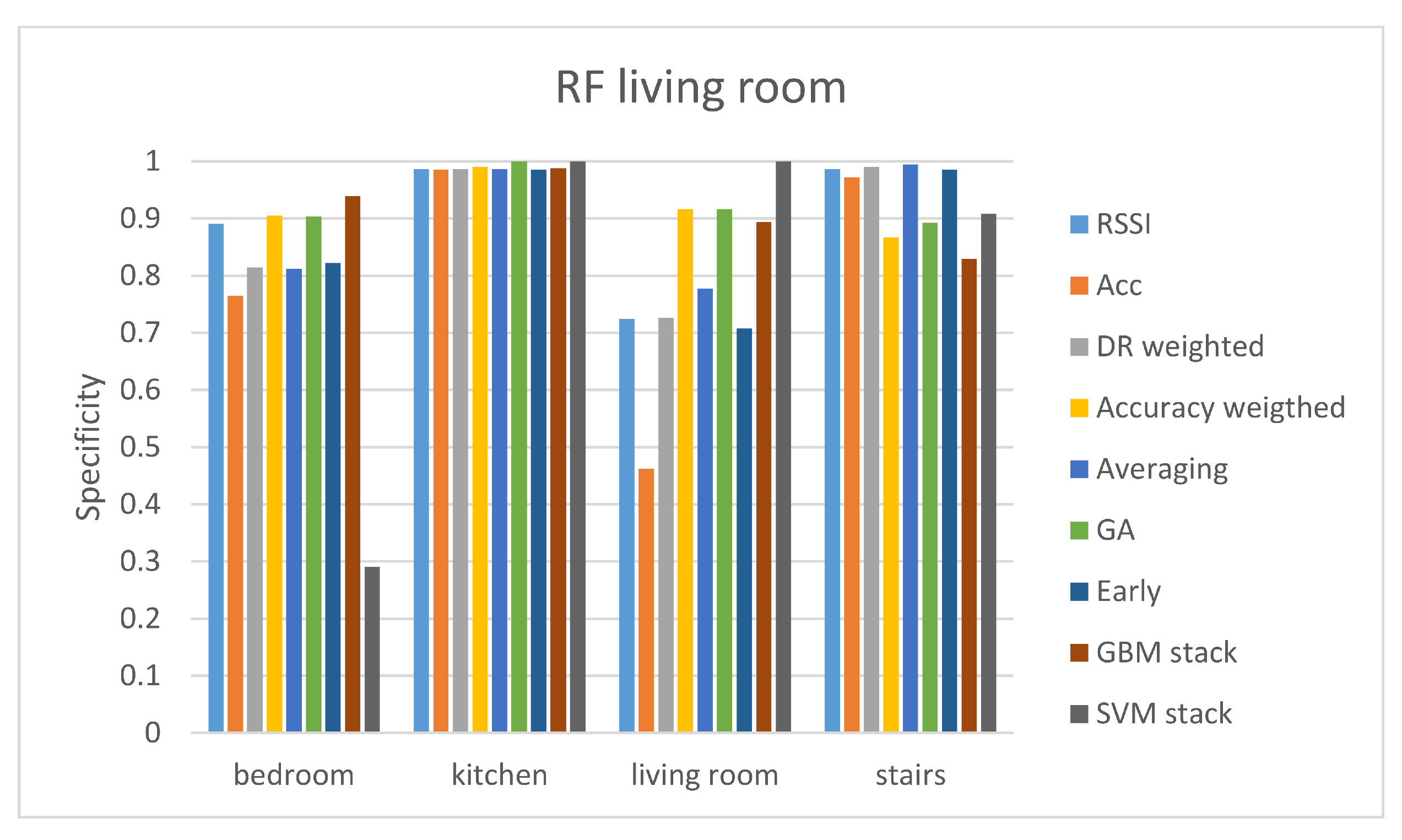

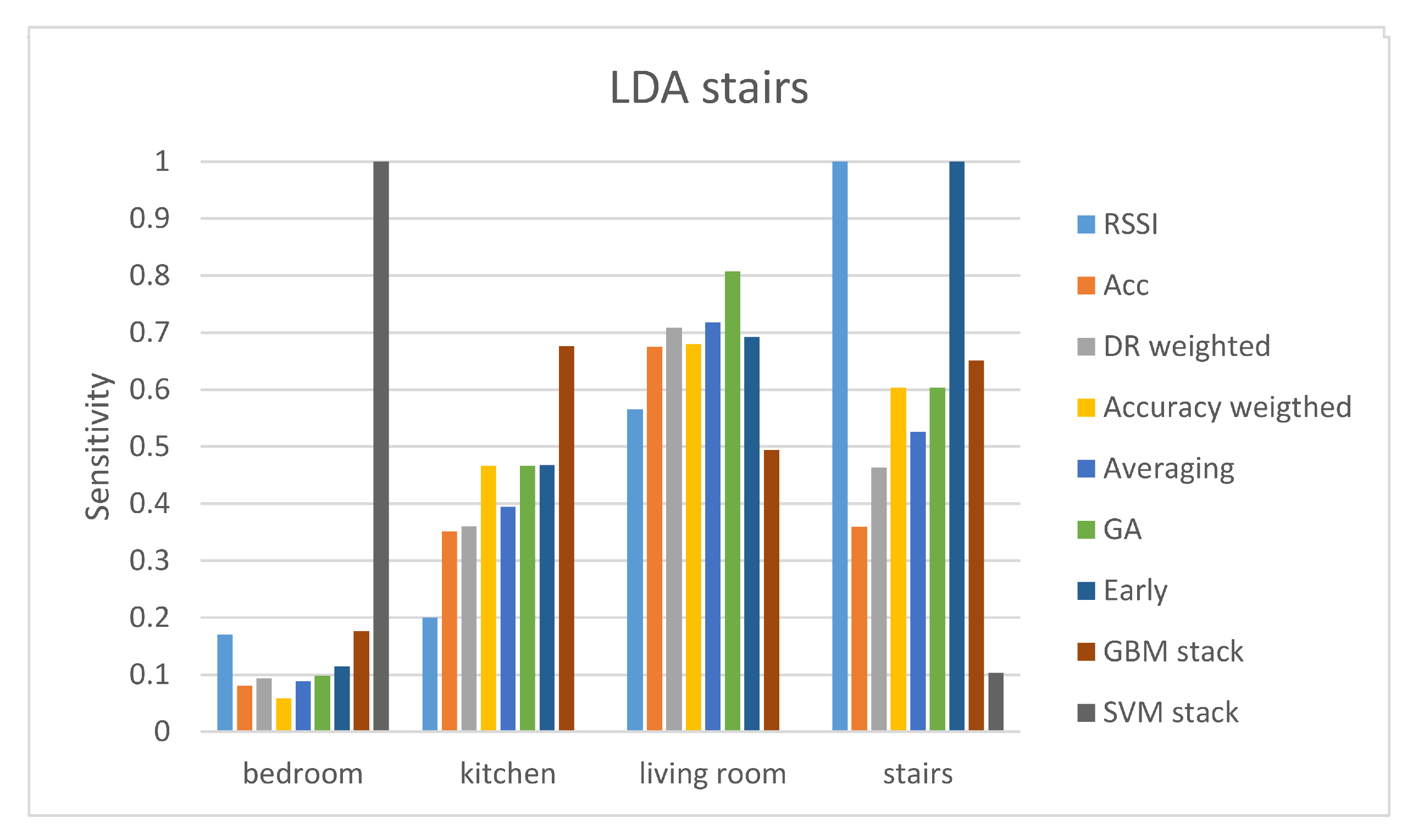

4. Results

4.1. Data

4.2. Experimental Results

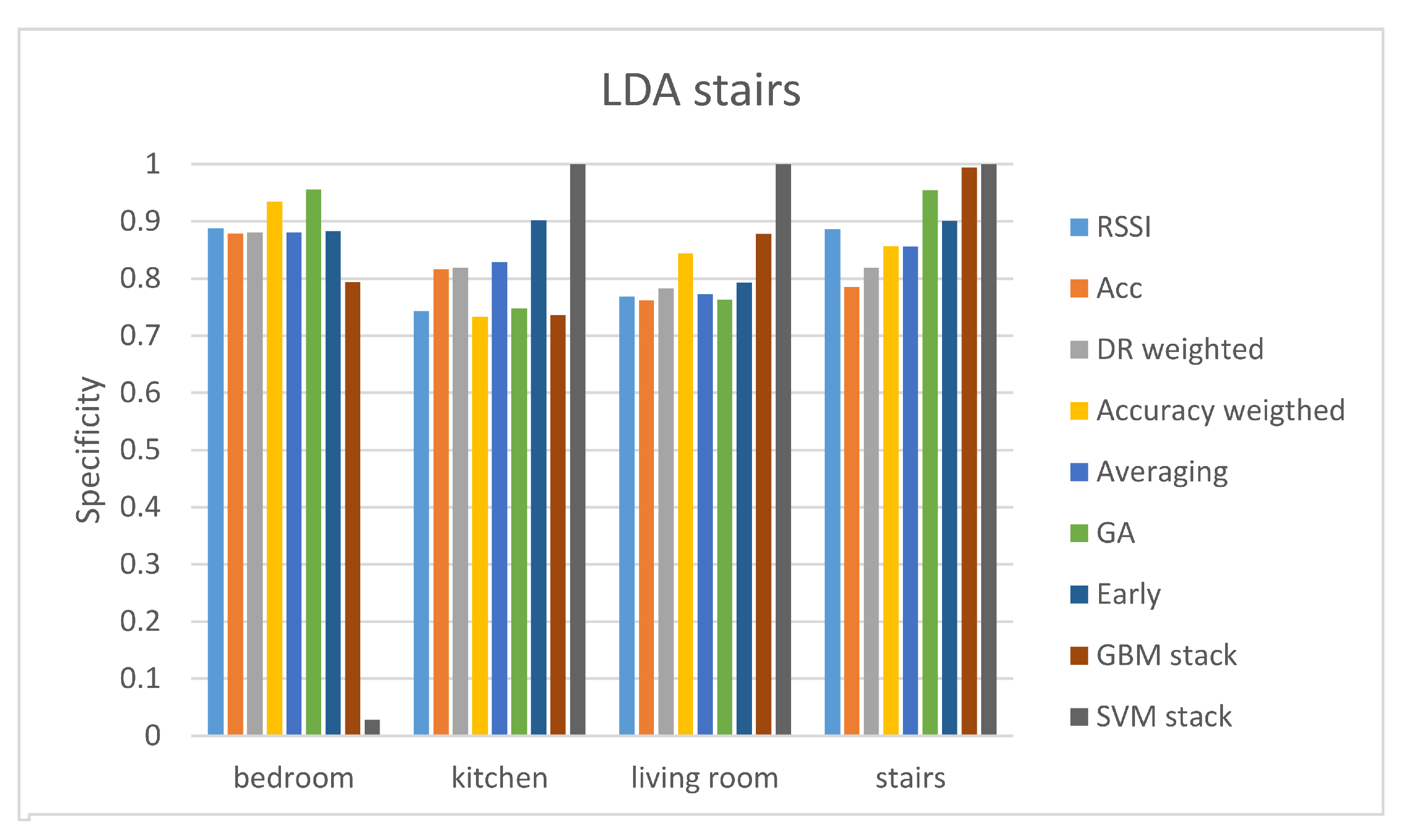

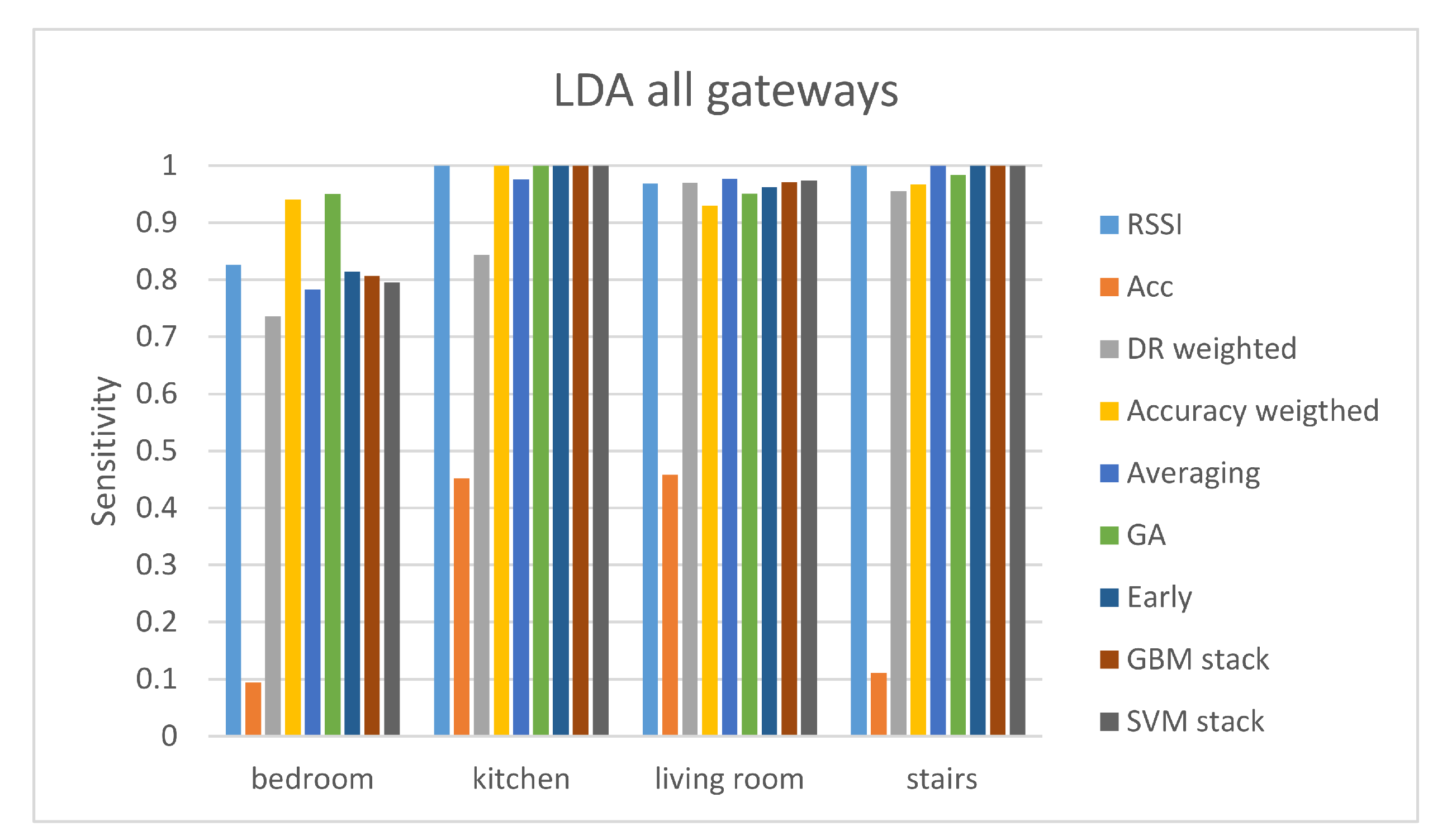

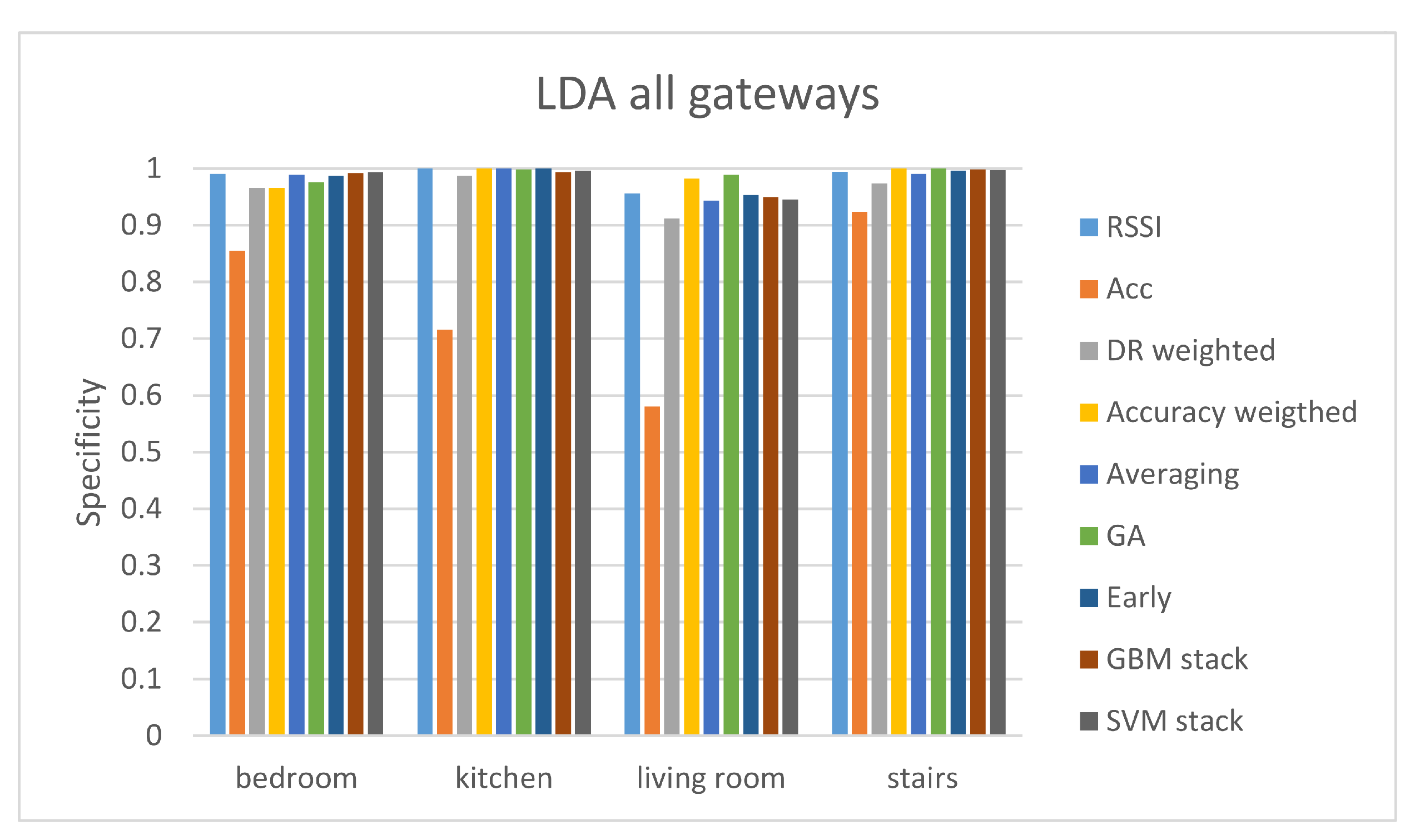

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. McConville, R.; Byrne, D.; Craddock, I.; Piechocki, R.; Pope, J.; Santos-Rodriguez, R. Understanding the quality of calibrations for indoor localisation. In Proceedings of the 2018 IEEE 4th World Forum on Internet of Things (WF-IoT), Singapore, 5–8 February 2018; pp. 676–681.

2. Hossain, M.S. Cloud-supported cyber–physical localization framework for patients monitoring. IEEE Syst. J. 2015, 11, 118–127.

3. Correa, A.; Barcelo, M.; Morell, A.; Vicario, J.L. A review of pedestrian indoor positioning systems for mass market applications. Sensors 2017, 17, 1927.

4. Ansari, A.R.; Saeed, N.; Haq, M.I.U.; Cho, S. Accurate 3D localization method for public safety applications in vehicular ad-hoc networks. IEEE Access 2018, 6, 20756–20763.

5. Kyritsis, A.I.; Kostopoulos, P.; Deriaz, M.; Konstantas, D. A BLE-based probabilistic room-level localization method. In Proceedings of the 2016 International Conference on Localization and GNSS (ICL-GNSS), Barcelona, Spain, 28–30 June 2016; pp. 1–6.

6. Kozlowski, M.; Byrne, D.; Santos-Rodriguez, R.; Piechocki, R. Data Fusion for robust indoor localisation in digital health. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), Barcelona, Spain, 15–18 April 2018; pp. 302–307.

7. Sadowski, S.; Spachos, P. RSSI-based indoor localization with the internet of things. IEEE Access 2018, 6, 30149–30161.

8. McConville, R.; Archer, G.; Craddock, I.; Kozłowski, M.; Piechocki, R.; Pope, J.; Santos-Rodriguez, R. Vesta: A digital health analytics platform for a smart home in a box. Future Gener. Comput. Syst. 2021, 114, 106–119.

9. Tudor-Locke, C.; Barreira, T.V.; Schuna, J.M., Jr. Comparison of step outputs for waist and wrist accelerometer attachment sites. Med. Sci. Sport. Exerc. 2015, 47, 839–842.

10. Kozłowski, M.; Santos-Rodríguez, R.; Piechocki, R. Sensor modalities and fusion for robust indoor localisation. EAI Endorsed Trans. Ambient Syst. 2019, 6.

11. McConville, R.; Byrne, D.; Craddock, I.; Piechocki, R.; Pope, J.; Santos-Rodriguez, R. A dataset for room level indoor localization using a smart home in a box. Data Brief 2019, 22, 1044–1051.

12. Bahl, P.; Padmanabhan, V.N. RADAR: An in-building RF-based user location and tracking system. In Proceedings of the IEEE INFOCOM 2000, Conference on Computer Communications, Nineteenth Annual Joint Conference of the IEEE Computer and Communications Societies (Cat. No. 00CH37064), Tel Aviv, Israel, 26–30 March 2000; Volume 2, pp. 775–784.

13. Husen, M.N.; Lee, S. Indoor human localization with orientation using WiFi fingerprinting. In Proceedings of the 8th International Conference on Ubiquitous Information Management and Communication, Siem Reap, Cambodia, 9–11 January 2014; pp. 1–6.

14. Poulose, A.; Han, D.S. Hybrid Deep Learning Model Based Indoor Positioning Using Wi-Fi RSSI Heat Maps for Autonomous Applications. Electronics 2021, 10, 2.

15. Ssekidde, P.; Steven Eyobu, O.; Han, D.S.; Oyana, T.J. Augmented CWT Features for Deep Learning-Based Indoor Localization Using WiFi RSSI Data. Appl. Sci. 2021, 11, 1806.

16. Wang, J.; Hwang, J.G.; Peng, J.; Park, J.; Park, J.G. Gaussian Filtered RSSI-based Indoor Localization in WLAN using Bootstrap Filter. In Proceedings of the 2021 International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Korea, 31 January–3 February 2021; pp. 1–4.

17. Coviello, G.; Avitabile, G. Multiple synchronized inertial measurement unit sensor boards platform for activity monitoring. IEEE Sens. J. 2020, 20, 8771–8777.

18. Evennou, F.; Marx, F. Advanced integration of WiFi and inertial navigation systems for indoor mobile positioning. EURASIP J. Adv. Signal Process. 2006, 2006, 86706.

19. Yoon, P.K.; Zihajehzadeh, S.; Kang, B.S.; Park, E.J. Adaptive Kalman filter for indoor localization using Bluetooth Low Energy and inertial measurement unit. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 825–828.

20. Malyavej, V.; Kumkeaw, W.; Aorpimai, M. Indoor robot localization by RSSI/IMU sensor fusion. In Proceedings of the 2013 10th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Krabi, Thailand, 15–17 May 2013; pp. 1–6.

21. Poulose, A.; Kim, J.; Han, D.S. A sensor fusion framework for indoor localization using smartphone sensors and Wi-Fi RSSI measurements. Appl. Sci. 2019, 9, 4379.

22. Li, W.W.L.; Iltis, R.A.; Win, M.Z. A smartphone localization algorithm using RSSI and inertial sensor measurement fusion. In Proceedings of the 2013 IEEE Global Communications Conference (GLOBECOM), Atlanta, GA, USA, 9–13 December 2013; pp. 3335–3340.

23. Xu, Y.; Shen, T.; Chen, X.Y.; Bu, L.L.; Feng, N. Predictive adaptive Kalman filter and its application to INS/UWB-integrated human localization with missing UWB-based measurements. Int. J. Autom. Comput. 2019, 16, 604–613.

24. Brigalda, D.; Tapus, N. Indoor localization with correction and validation. In Proceedings of the 2020 19th RoEduNet Conference: Networking in Education and Research (RoEduNet), Bucharest, Romania, 11–12 December 2020; pp. 1–6.

25. Jeon, J.S.; Kong, Y.; Nam, Y.; Yim, K. An indoor positioning system using bluetooth RSSI with an accelerometer and a barometer on a smartphone. In Proceedings of the 2015 10th International Conference on Broadband and Wireless Computing, Communication and Applications (BWCCA), Krakow, Poland, 4–6 November 2015; pp. 528–531.

26. Grzechca, D.; Wróbel, T.; Bielecki, P. Indoor localization of objects based on RSSI and MEMS sensors. In Proceedings of the 2014 14th International Symposium on Communications and Information Technologies (ISCIT), Incheon, Korea, 24–26 September 2014; pp. 143–146.

27. Lv, X.; Mourad-Chehade, F.; Snoussi, H. Fingerprinting-based localization using accelerometer information in wireless sensor networks. In Proceedings of the 2013 IEEE Global Communications Conference (GLOBECOM), Atlanta, GA, USA, 9–13 December 2013; pp. 510–515.

28. Jiang, Y.; Pan, X.; Li, K.; Lv, Q.; Dick, R.P.; Hannigan, M.; Shang, L. Ariel: Automatic wi-fi based room fingerprinting for indoor localization. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 441–450.

29. Li, J.; Xie, Z.; Sun, X.; Tang, J.; Liu, H.; Stankovic, J.A. An automatic and accurate localization system for firefighters. In Proceedings of the 2018 IEEE/ACM Third International Conference on Internet-of-Things Design and Implementation (IoTDI), Orlando, FL, USA, 17–20 April 2018; pp. 13–24.

30. Gunes, H.; Piccardi, M. Affect recognition from face and body: Early fusion vs. late fusion. In Proceedings of the 2005 IEEE International Conference on Systems, Man and Cybernetics, Waikoloa, HI, USA, 12 October 2005; Volume 4, pp. 3437–3443.

31. Maghsoudi, Y.; Alimohammadi, A.; Zoej, M.V.; Mojaradi, B. Weighted combination of multiple classifiers for the classification of hyperspectral images using a genetic algorithm. In ISPRS Commission I Symposium “From Sensors to Imagery”; Citeseer: Marne-la-Vallée, France, 2006.

32. Tsanousa, A.; Meditskos, G.; Vrochidis, S.; Kompatsiaris, I. A weighted late fusion framework for recognizing human activity from wearable sensors. In Proceedings of the 2019 10th International Conference on Information, Intelligence, Systems and Applications (IISA), Patras, Greece, 15–17 July 2019; pp. 1–8.

33. Goldberg, D.E.; Deb, K. Special issue on genetic algorithms. Comput. Methods Appl. Mech. Eng. 2000, 186, 121–124.

34. Su, X.; Tong, H.; Ji, P. Activity recognition with smartphone sensors. Tsinghua Sci. Technol. 2014, 19, 235–249.

35. Yerushalmy, J. Statistical problems in assessing methods of medical diagnosis, with special reference to X-ray techniques. In Public Health Reports 1896–1970; Sage Publications, Inc.: Thousand Oaks, CA, USA, 1947; pp. 1432–1449.

36. Pope, J.; McConville, R.; Kozlowski, M.; Fafoutis, X.; Santos-Rodriguez, R.; Piechocki, R.J.; Craddock, I. Sphere in a box: Practical and scalable EurValve activity monitoring smart home kit. In Proceedings of the 2017 IEEE 42nd Conference on Local Computer Networks Workshops (LCN Workshops), Singapore, 9 October 2017; pp. 128–135.

37. Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; Volume 1, pp. 278–282.

38. Hodges, J.L. Discriminatory Analysis; Number 11; USAF School of Aviation Medicine: Dayton, OH, USA, 1950.

39. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

40. Ripley, B.D. Modern Applied Statistics with S. In Statistics and Computing; Springer: New York, NY, USA, 2002.

| Features | | | |
|---|---|---|---|
| Mean | Standard deviation | 25% quantile | Skewness |
| Median | Minimum | 75% quantile | Kurtosis |
| Variance | Maximum | Interquartile range | |
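As an illustration of how the eleven statistics in the table above could be computed for one window of sensor readings, here is a minimal sketch that uses only the Python standard library. It is an assumption-laden example rather than the authors' feature extraction code: the function name `window_features`, the sample RSSI values, the use of the population variance, the inclusive quantile method, and the non-excess definition of kurtosis are all choices made here for illustration.

```python
import statistics
from typing import Dict, Sequence


def window_features(window: Sequence[float]) -> Dict[str, float]:
    """Summary statistics for one window of readings (needs at least 2 values)."""
    n = len(window)
    mean = statistics.fmean(window)
    # Population variance is an assumption; the sample variance is equally plausible.
    variance = statistics.pvariance(window, mu=mean)
    std = variance ** 0.5
    # Quartiles with linear interpolation between the sorted data points.
    q25, _, q75 = statistics.quantiles(window, n=4, method="inclusive")
    # Third and fourth central moments for the shape statistics.
    m3 = sum((x - mean) ** 3 for x in window) / n
    m4 = sum((x - mean) ** 4 for x in window) / n
    return {
        "mean": mean,
        "median": statistics.median(window),
        "variance": variance,
        "std": std,
        "min": min(window),
        "max": max(window),
        "q25": q25,
        "q75": q75,
        "iqr": q75 - q25,
        "skewness": m3 / std ** 3 if std > 0 else 0.0,
        # Non-excess kurtosis (a normal distribution would give 3).
        "kurtosis": m4 / variance ** 2 if std > 0 else 0.0,
    }


# Hypothetical window of RSSI readings in dBm from one access point.
features = window_features([-70.0, -68.0, -75.0, -71.0, -66.0])
print(features)
```

Applying the same function to every access point (or every accelerometer axis) and concatenating the resulting values yields one feature vector per window.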

| Classifier | RSSI | Acc | DR Weighted Fusion | Accuracy Weighted Fusion | Early Fusion | Averaging | GA Weighted |
|---|---|---|---|---|---|---|---|
| KNN | 0.7506 | 0.4906 | 0.6622 | 0.7399 | 0.7466 | 0.6997 | 0.7614 |
| LDA | 0.6769 | 0.5402 | 0.5898 | 0.6662 | 0.6099 | 0.5416 | 0.7185 |
| RF | 0.7895 | 0.5201 | 0.7212 | 0.7668 | 0.7131 | 0.7439 | 0.7989 |
| SVM | 0.7386 | 0.1367 | 0.5268 | 0.7265 | 0.5389 | 0.5979 | 0.7212 |

| Stacking Algorithms | KNN | LDA | RF | SVM |
|---|---|---|---|---|
| SVM | 0.6769 | 0.6501 | 0.3539 | 0.4638 |
| GBM | 0.7319 | 0.7721 | 0.7493 | 0.5550 |

| Classifier | RSSI | Acc | DR Weighted Fusion | Accuracy Weighted Fusion | Early Fusion | Averaging | GA Weighted |
|---|---|---|---|---|---|---|---|
| KNN | 0.4447 | 0.2304 | 0.3502 | 0.4539 | 0.5184 | 0.3871 | 0.4677 |
| LDA | 0.4954 | 0.4470 | 0.4908 | 0.5369 | 0.6198 | 0.5161 | 0.5922 |
| RF | 0.4516 | 0.4055 | 0.4839 | 0.5138 | 0.5276 | 0.4792 | 0.5553 |
| SVM | 0.5092 | 0.4217 | 0.3433 | 0.4147 | 0.5323 | 0.3433 | 0.4378 |

| Stacking Algorithms | KNN | LDA | RF | SVM |
|---|---|---|---|---|
| SVM | 0.3249 | 0.1429 | 0.2419 | 0.3065 |
| GBM | 0.4470 | 0.5392 | 0.4355 | 0.5691 |

| Classifier | RSSI | Acc | DR Weighted Fusion | Accuracy Weighted Fusion | Early Fusion | Averaging | GA Weighted |
|---|---|---|---|---|---|---|---|
| KNN | 0.9526 | 0.9544 | 0.7807 | 0.9807 | 0.9561 | 0.9807 | 0.9860 |
| LDA | 0.8877 | 0.8018 | 0.7211 | 0.9298 | 0.5579 | 0.8439 | 0.9333 |
| RF | 0.9825 | 0.8105 | 0.6982 | 0.9912 | 0.9842 | 0.9842 | 0.9912 |
| SVM | 0.9754 | 0.9456 | 0.4825 | 0.9509 | 0.9561 | 0.9509 | 0.6175 |

| Stacking Algorithms | KNN | LDA | RF | SVM |
|---|---|---|---|---|
| SVM | 0.9649 | 0.9807 | 0.5782 | 0.7346 |
| GBM | 0.9543 | 0.8875 | 0.9859 | 0.9684 |

| Classifier | RSSI | Acc | DR Weighted Fusion | Accuracy Weighted Fusion | Early Fusion | Averaging | GA Weighted |
|---|---|---|---|---|---|---|---|
| KNN | 0.8372 | 0.4469 | 0.5448 | 0.8290 | 0.2414 | 0.7586 | 0.8524 |
| LDA | 0.8717 | 0.5228 | 0.5559 | 0.7917 | 0.7131 | 0.6221 | 0.8855 |
| RF | 0.8428 | 0.2869 | 0.4648 | 0.8303 | 0.4966 | 0.7393 | 0.8428 |
| SVM | 0.8593 | 0.2234 | 0.5214 | 0.8497 | 0.2414 | 0.6497 | 0.8386 |

| Stacking Algorithms | KNN | LDA | RF | SVM |
|---|---|---|---|---|
| SVM | 0.7793 | 0.8359 | 0.6455 | 0.6759 |
| GBM | 0.7945 | 0.8276 | 0.6386 | 0.6703 |

| Classifier | RSSI | Acc | DR Weighted Fusion | Accuracy Weighted Fusion | Early Fusion | Averaging | GA Weighted |
|---|---|---|---|---|---|---|---|
| KNN | 0.9390 | 0.3009 | 0.8573 | 0.9351 | 0.9364 | 0.8936 | 0.9429 |
| LDA | 0.9610 | 0.3307 | 0.8832 | 0.9598 | 0.9572 | 0.9481 | 0.9715 |
| RF | 0.9455 | 0.3929 | 0.7937 | 0.9351 | 0.9364 | 0.8716 | 0.9468 |
| SVM | 0.9416 | 0.3942 | 0.7886 | 0.9274 | 0.9572 | 0.8690 | 0.9429 |

| Stacking Algorithms | KNN | LDA | RF | SVM |
|---|---|---|---|---|
| SVM | 0.8872 | 0.9572 | 0.9183 | 0.9092 |
| GBM | 0.9339 | 0.9585 | 0.9429 | 0.9092 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsanousa, A.; Xefteris, V.-R.; Meditskos, G.; Vrochidis, S.; Kompatsiaris, I. Combining RSSI and Accelerometer Features for Room-Level Localization. Sensors 2021, 21, 2723. https://doi.org/10.3390/s21082723
