1. Introduction

Human Activity Recognition (HAR) is regarded as an indispensable technology in many Human–Computer Interaction (HCI) applications, such as smart homes, health care, security surveillance, virtual reality, and location-based services (LBS) [1,2]. Traditional human activity sensing approaches are wearable sensor-based methods [3,4] and camera (vision)-based methods [5,6]. While promising and widely used, these device-based approaches each have drawbacks that make them unsuitable for some application scenarios. For instance, the wearable sensor-based method works only if the users are carrying the sensors, such as smartphones, smart shoes, or smartwatches with built-in inertial measurement units (IMUs), including gyroscopes, accelerometers, and magnetometers, which is inconvenient for constant use. In addition, although the camera (vision)-based method can achieve satisfactory accuracy, it is limited by privacy leakage and by its dependence on line-of-sight (LOS) and lighting conditions. Moreover, both methods require dedicated devices, which are costly, and the durability of these devices is another critical factor that should be considered.

Recently, Wi-Fi-based human activity recognition has attracted extensive attention in both academia and industry, becoming one of the most popular device-free sensing (DFS) technologies [7,8]. Compared with other wireless signals, such as Frequency Modulated Continuous Wave (FMCW) [9,10], millimeter-wave (MMW) [11,12], and Ultra Wide Band (UWB) [13,14,15,16,17], Wi-Fi has one prominent advantage: it is ubiquitous in people’s daily lives. Leveraging commercial off-the-shelf (COTS) devices, Wi-Fi-based human activity recognition obviates the need for additional specialized hardware. Beyond this, it shares the merits of other wireless signals, including the capability to operate in darkness and in non-line-of-sight (NLOS) situations while better protecting users’ privacy. As a result, research on Wi-Fi-based human activity recognition has proliferated rapidly over the past decade [18,19,20,21].

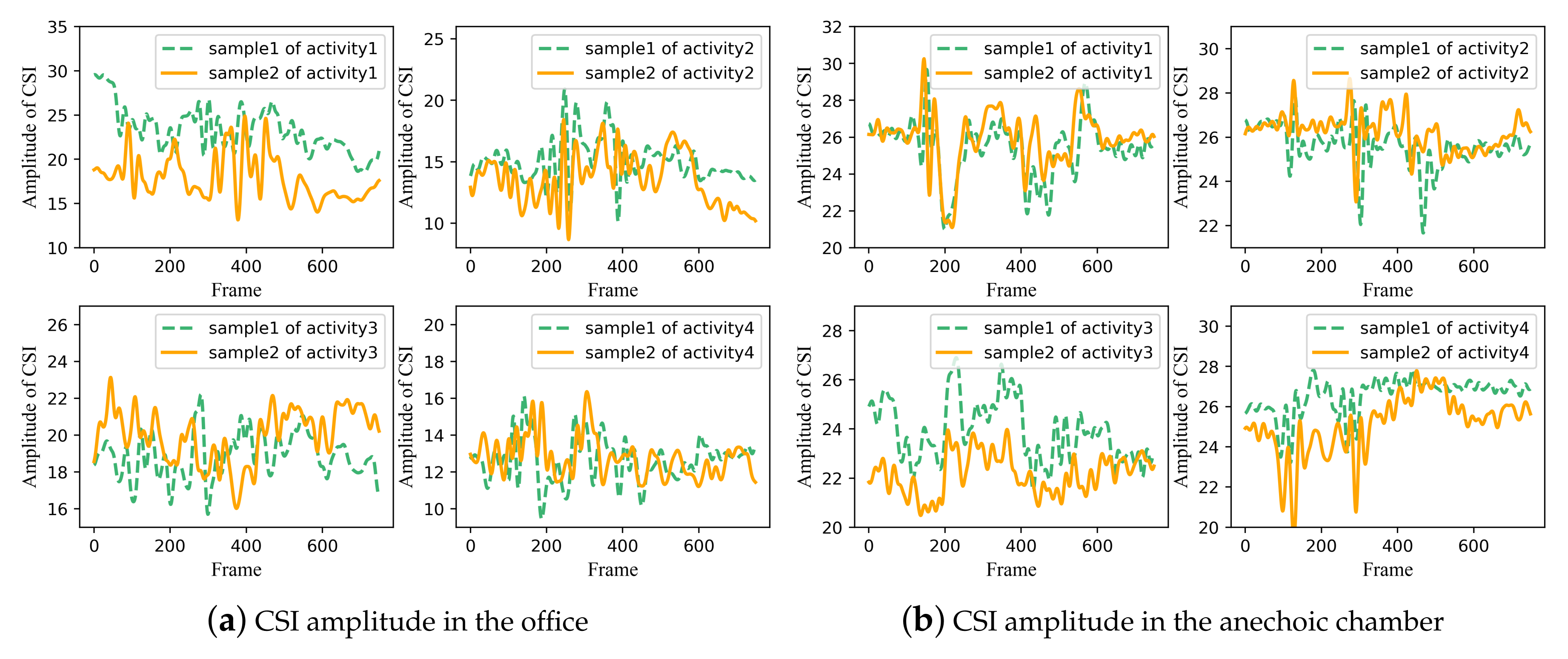

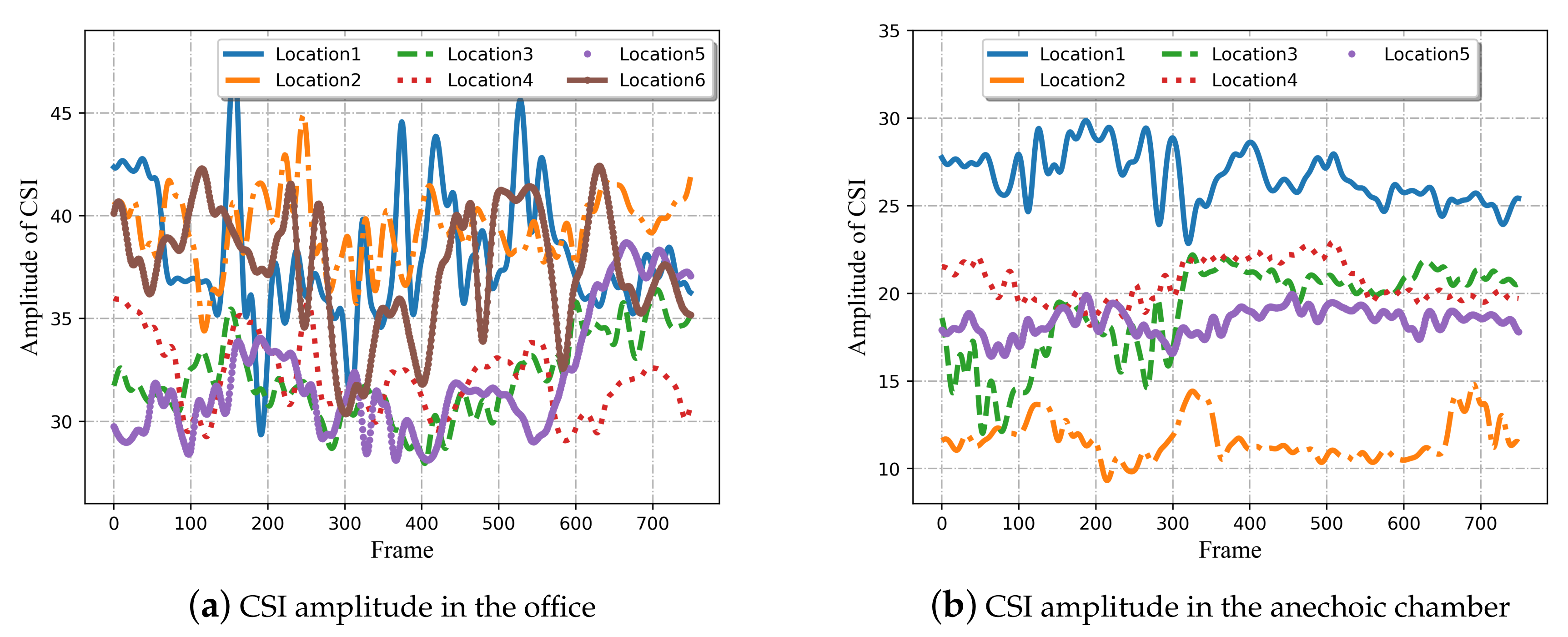

Previous attempts at Wi-Fi-based sensing have yielded great achievements, such as E-eyes [22] and CARM [23]. However, the major challenge of generalization has not been fully explored or solved. For instance, when deployed in a room, the system must work well at every location rather than only at a specified location. Location-independent sensing is therefore one of the most necessary generalization capabilities. It can also be regarded as the ability of a method to transfer among different locations, and it is a crucial factor in determining whether the technology can be commercialized. According to the principle of wireless perception, human activities at different locations affect signal transmission differently. Specifically, activities conducted at different locations change the propagation paths of the wireless signal in different ways, leading to diverse multipath superimposed signals at the receiver. These signals have different data distributions, which can be treated as different domains. Hence, a human activity recognition model trained in one domain will not work well in the other domains. The most obvious solution is to provide abundant data for each domain so that the model learns the characteristics of activities in every domain. However, collecting a large amount of data in practical applications is labor-intensive and time-consuming and results in a poor user experience. Therefore, a method that solves the location-independent perception problem with as few samples as possible while achieving outstanding generalization performance is desired.

Some solutions have been proposed to solve the above problems, and remarkable progress has been made, which lays a solid foundation for realizing location-independent sensing with good generalization ability. The solutions fall into the following four categories: (1) generating virtual data samples for each location [24], (2) separating the activity signal from the background [25,26], (3) extracting domain-independent features [27], and (4) domain adaptation and transfer learning. Some approaches involving other domains (such as environment, orientation, and person) can also be grouped into these four categories; however, they pay less attention to location-independent sensing [28,29,30,31]. Although the above methods promote the transition of device-free human activity recognition from academic research to industrial application, some limitations remain. WiAG [24] requires the user to hold a smartphone in hand for one of the training sample collections in order to estimate the parameters. Widar 3.0 [27] is limited by the number of links and by complex parameter estimation methods. FALAR [25] benefits from its development of a new OpenWrt firmware that can obtain fine-grained Channel State Information (CSI) for all 114 subcarriers, improving data resolution. Similarly, high transmission rates of the perception signal (such as 2500 packets/s in Lu et al. [26]) can also boost the resolution. As described by Zhou et al. [30], a low sampling rate may miss some key information, which accounts for the deterioration in system performance. However, using shorter packets helps reduce latency and has less impact on communication. Detailed discussions of the effect of different sampling rates on sensing accuracy can be found in the evaluations in [27,30]. In summary, a location-independent method that can work with a small number of antennas and subcarriers as well as a low data transmission rate is required.

This work aims to realize device-free location-independent human activity recognition using as few samples as possible. That is, a model trained with source domain data samples should perform well on the target domain given only very few data samples. We describe our task as a few-shot learning problem, which improves the performance of the model in an unseen domain when the amount of available data is relatively small [32]. The task is also consistent with meta learning, whose core idea is learning to learn [33]. Both have been successfully applied in a variety of fields to solve classification tasks. Inspired by the matching network, a typical meta learning approach, we apply the learning method obtained from the source domain to the target domain by means of metric learning [34,35]. We assume that, although there is no stable feature that describes a class of actions well, we can still identify its category by maximizing the inter-class variations and minimizing the intra-class differences. Judging the category of a sample by calculating a distance can be regarded as a learning method. To realize location-independent sensing, we expect to learn not only the discriminative feature representation specific to our task but also the distance function and metric relationships that can infer the label with a confident margin.

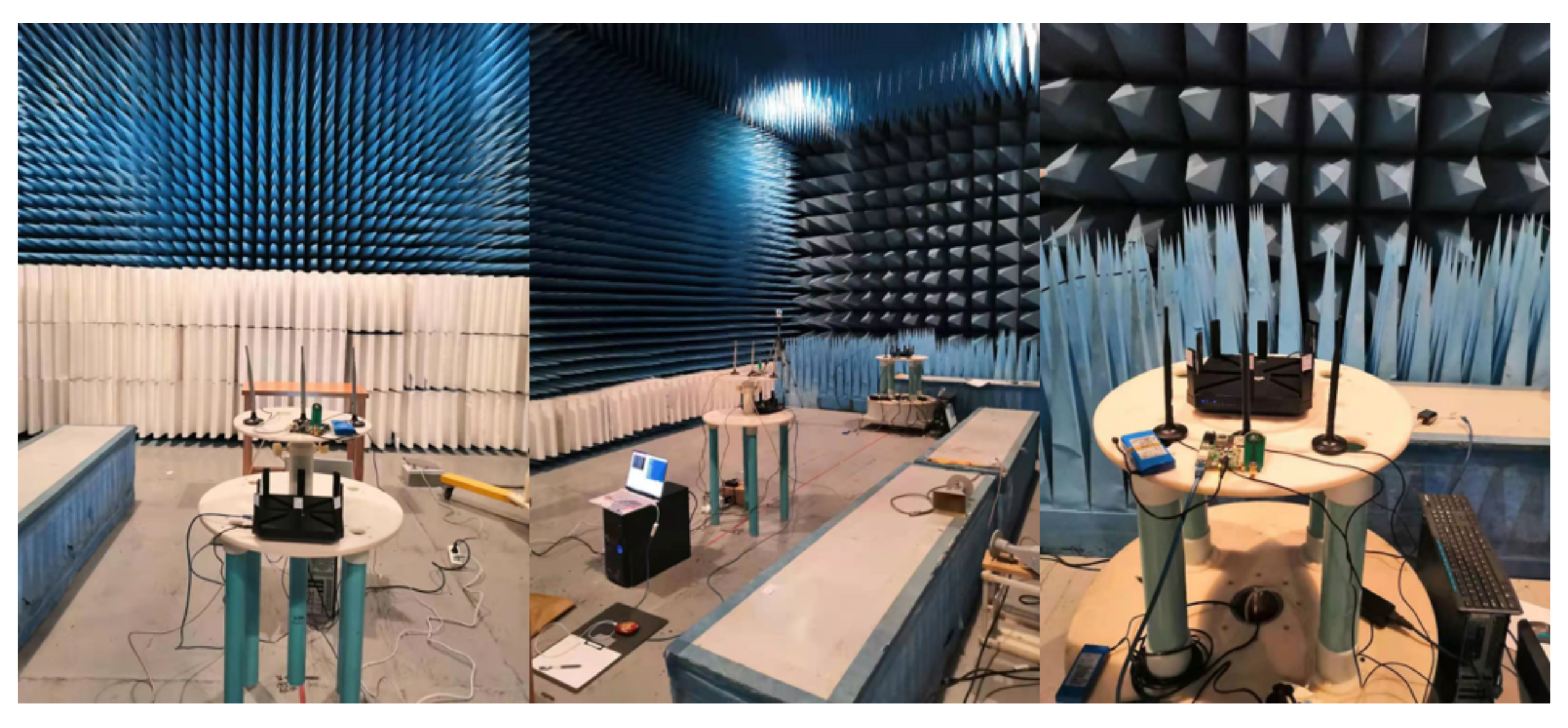

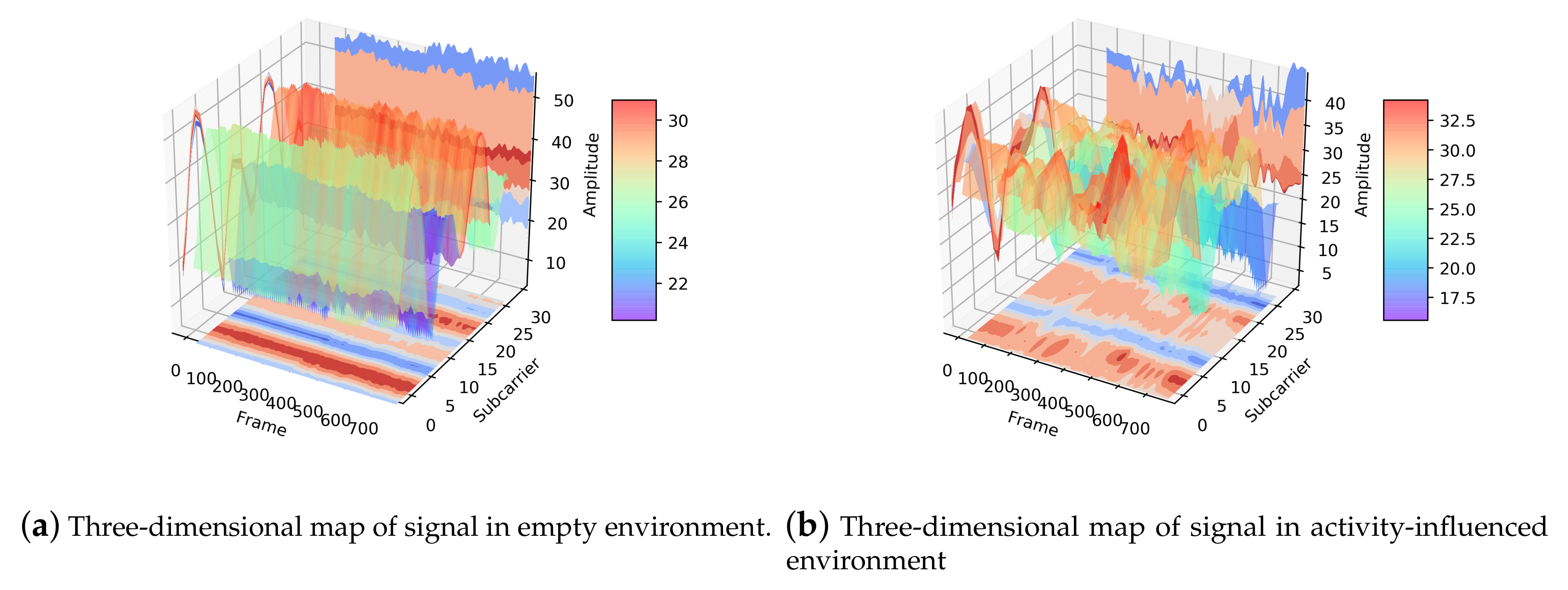

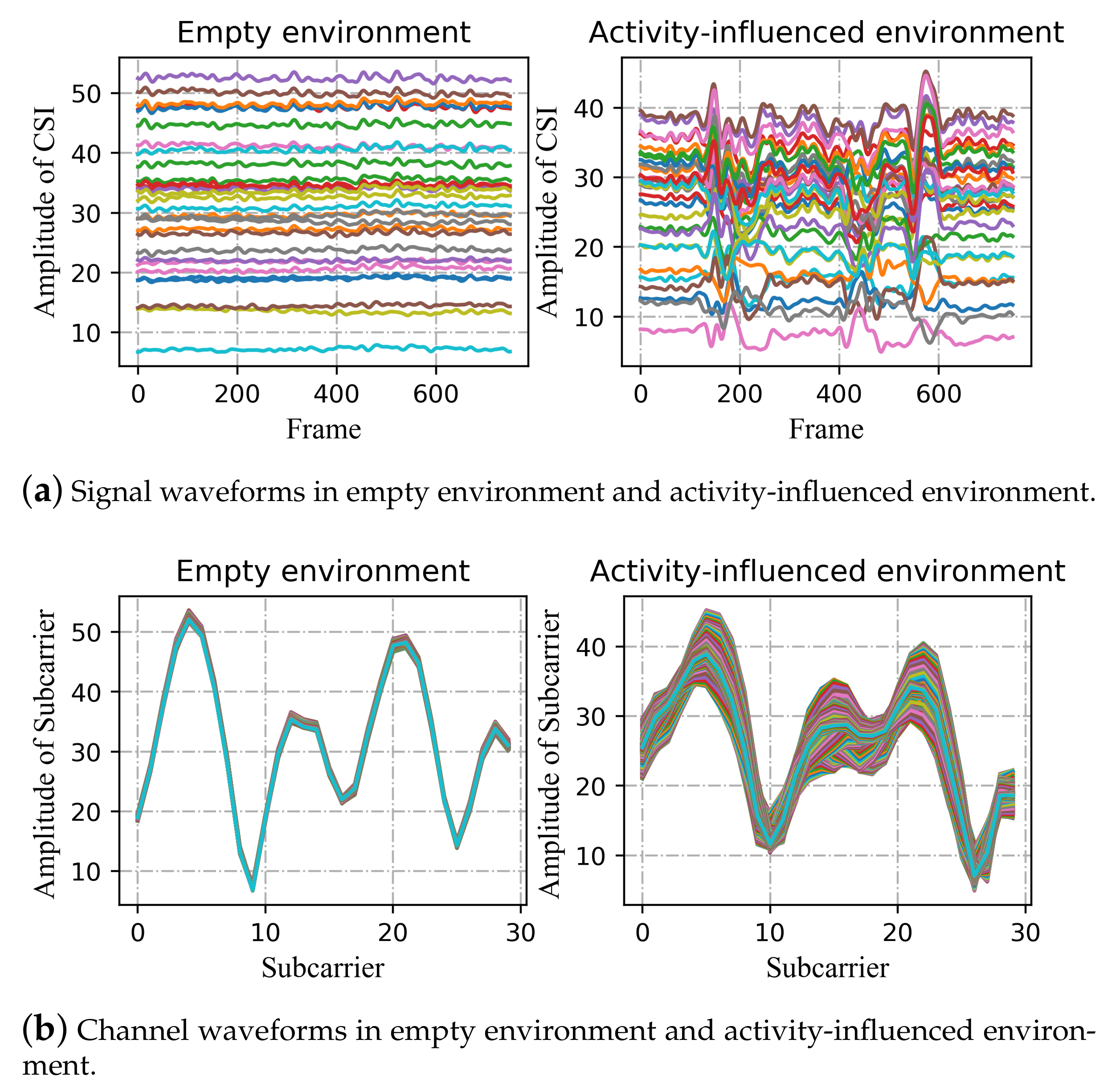

In this paper, we first comprehensively and visually investigate the effects of the same activity at different locations on wireless signal transmission. We also analyze the signal received on different antennas and subcarriers with different sampling rates. Moreover, we discuss how different locations affect signal transmission in the absence of other influencing variables by utilizing data collected in an anechoic chamber. Then, we propose a device-free location-independent human activity recognition system named WiLiMetaSensing, which is based on meta learning to enable few-shot sensing. A Convolutional Neural Network (CNN) and Long Short-Term Memory (LSTM) are introduced for feature representation. Unlike the traditional LSTM-based feature extraction process for Wi-Fi signals, in this paper the memory capacity of the LSTM is utilized to retain the valuable information of the samples from all the activities. In addition, an attention mechanism-based metric learning method is used to learn the metric relations between activities of the same or different categories. Finally, extensive experiments are conducted to explore the recognition performance of the proposed system. The evaluation covers single location, mixed locations, and location-independent sensing. Unlike existing evaluations, we reduce the sampling rate, the number of subcarriers, and the number of antennas. Experiments show that WiLiMetaSensing achieves satisfying results with robust performance in a variety of situations.

3. WiLiMetaSensing

In this section, we provide a detailed introduction to the proposed WiLiMetaSensing system. We first present the system overview. Then, a CNN-LSTM-based feature representation method is described. Finally, an attention mechanism enhanced metric learning-based human activity recognition method is presented.

3.1. System Overview

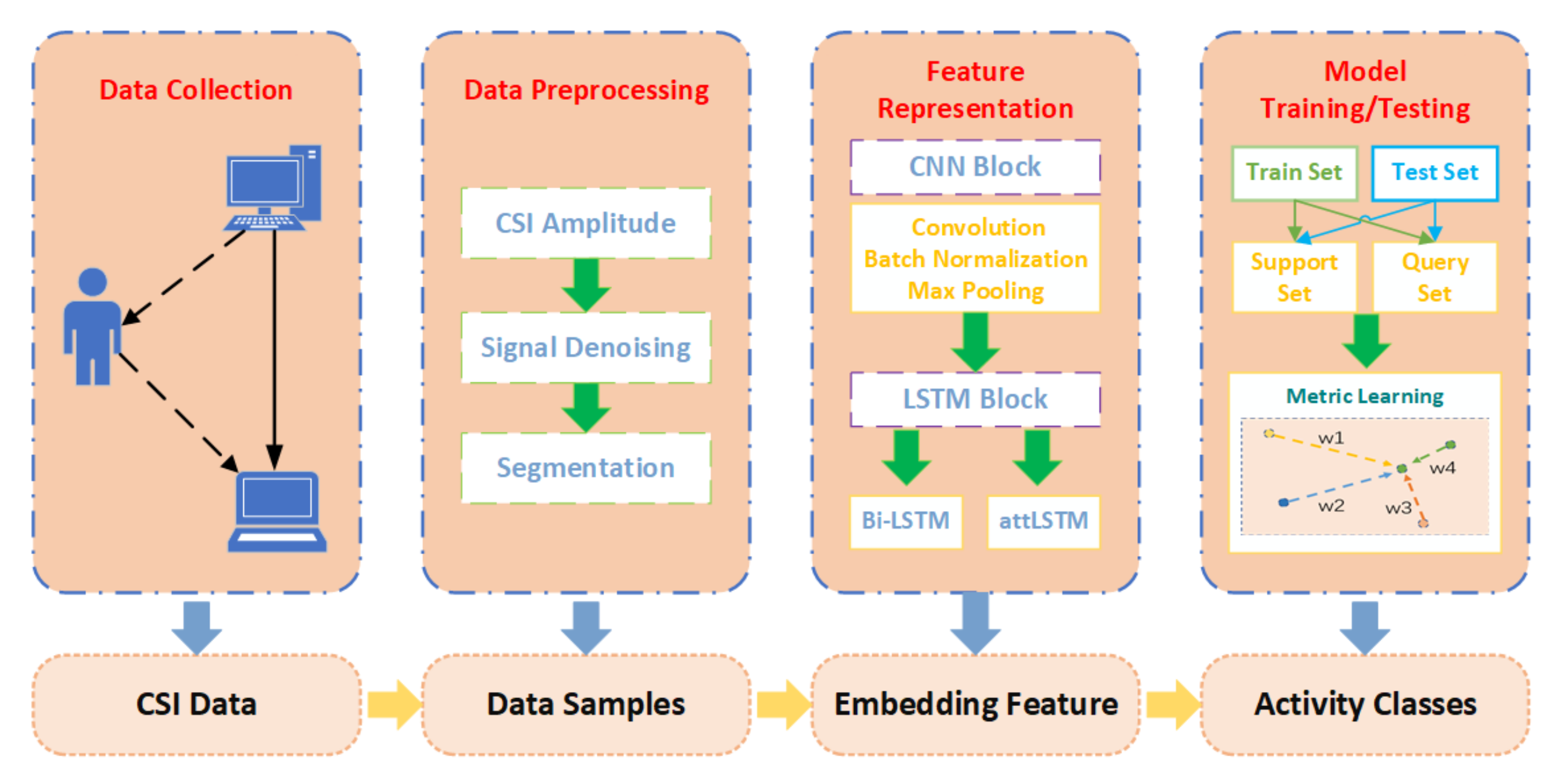

The workflow of the location-independent human activity recognition system WiLiMetaSensing is shown in Figure 10. It consists of four parts: data collection, data preprocessing, feature representation, and model training/testing. In the data collection phase, we collect the raw CSI measurements, which describe the changes in the environment. In the data preprocessing step, the amplitude is calculated from the raw complex CSI. Because the raw data are noisy, a fifth-order low-pass Butterworth filter is used for denoising. The collected data are then divided into samples of size time × subcarrier, that is, the number of frames corresponding to an activity by the number of subcarriers. Next, we map the data samples to a high-dimensional embedding space through CNN and LSTM to obtain the feature representation. Finally, to achieve location-independent perception with as few samples as possible, we treat the task as a few-shot learning problem and propose a human activity recognition method based on metric learning. The following subsections introduce the system in detail.
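The preprocessing step can be sketched as follows. This is a minimal illustration rather than the system's actual code: the function name `preprocess_csi`, the cutoff frequency `cutoff_hz`, the use of zero-phase filtering, and the fixed-length segmentation of a contiguous recording are all assumptions, since the text specifies only the filter order and type.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def preprocess_csi(raw_csi, frames_per_sample, fs=200.0, cutoff_hz=20.0):
    """Amplitude extraction, low-pass denoising, and segmentation.

    raw_csi: complex array of shape (time, subcarrier).
    frames_per_sample: frames in one activity sample (assumed fixed length).
    fs: packet rate in Hz; cutoff_hz is an assumed value, not from the text.
    """
    # Amplitude of the complex CSI.
    amplitude = np.abs(raw_csi)

    # Fifth-order low-pass Butterworth filter, applied along the time axis.
    b, a = butter(5, cutoff_hz, btype="low", fs=fs)
    filtered = filtfilt(b, a, amplitude, axis=0)

    # Cut the stream into samples of size time x subcarrier.
    n_samples = filtered.shape[0] // frames_per_sample
    trimmed = filtered[: n_samples * frames_per_sample]
    return trimmed.reshape(n_samples, frames_per_sample, filtered.shape[1])
```

For example, a recording of 1400 frames over 30 subcarriers with `frames_per_sample=700` yields an array of shape (2, 700, 30).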

3.2. CNN-LSTM-Based Feature Representation

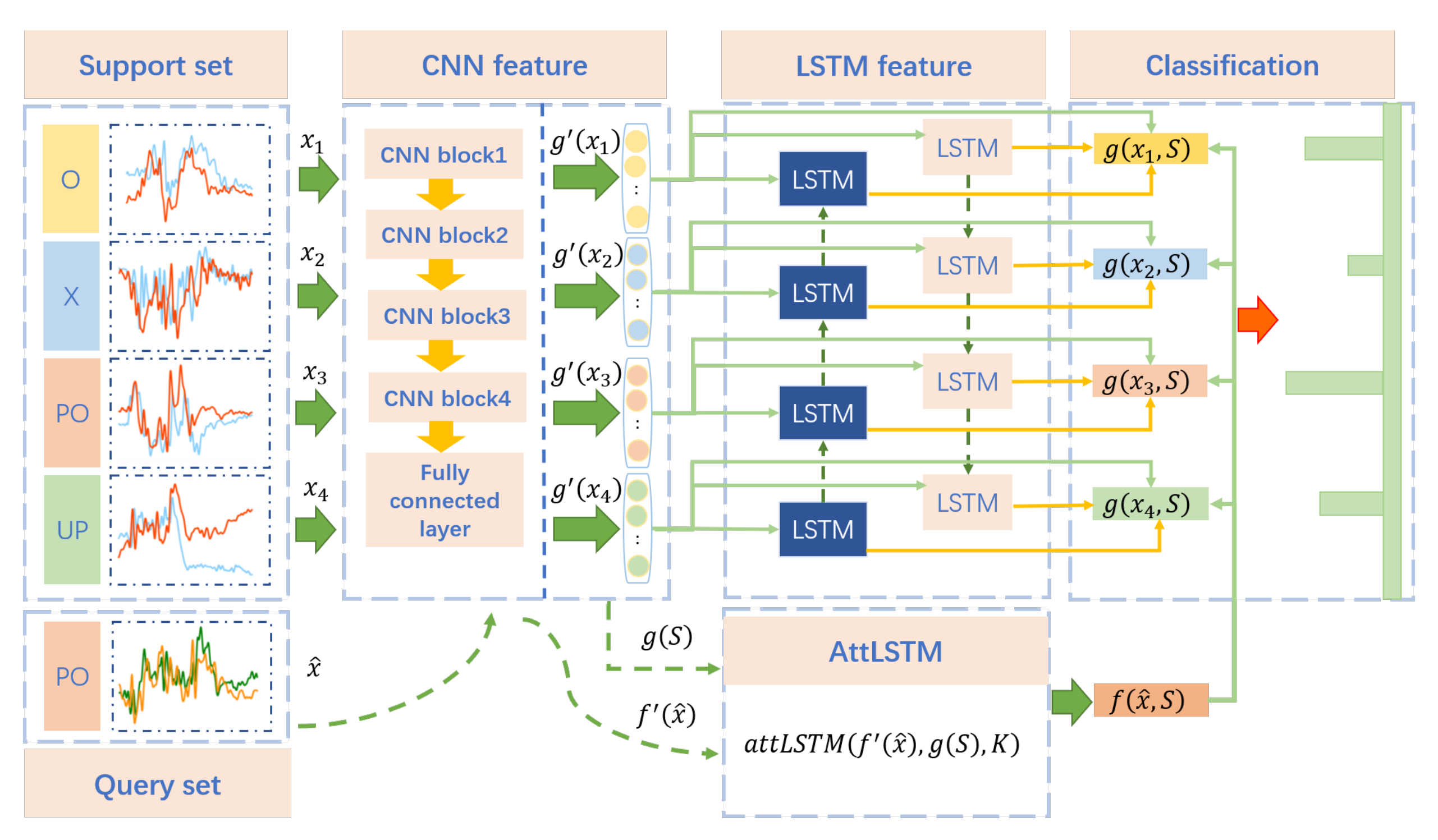

In this section, to extract activity-specific and location-independent features from input samples for few-shot learning, deep learning methods including CNN and LSTM are introduced for feature representation, as shown in Figure 11. Following the learning strategy of meta learning, the data samples are divided into two parts, the support set and the query set, with the same data selection strategy, which will be presented in detail in the next section.

We use $x_i$ and $\hat{x}$ to denote the samples from the support set and the query set, respectively. $S$ indicates the support set, which is made up of samples from $n$ categories with $k$ samples for each class. $g$ and $f$ are modeled to achieve the feature representation of $x_i$ and $\hat{x}$, respectively, each fully conditioned on the support set.

The feature embedding function $g$ for each sample $x_i$ can be expressed as:

The samples are first mapped to high-dimensional embedding space through CNN to capture the feature in subcarrier and time dimensions. Specifically, the embedding model is made up of a cascade of blocks, each including a convolutional layer, a batch normalization layer, and a MaxPooling layer, followed by a fully-connected layer. The activation function is a rectified linear unit (ReLU).

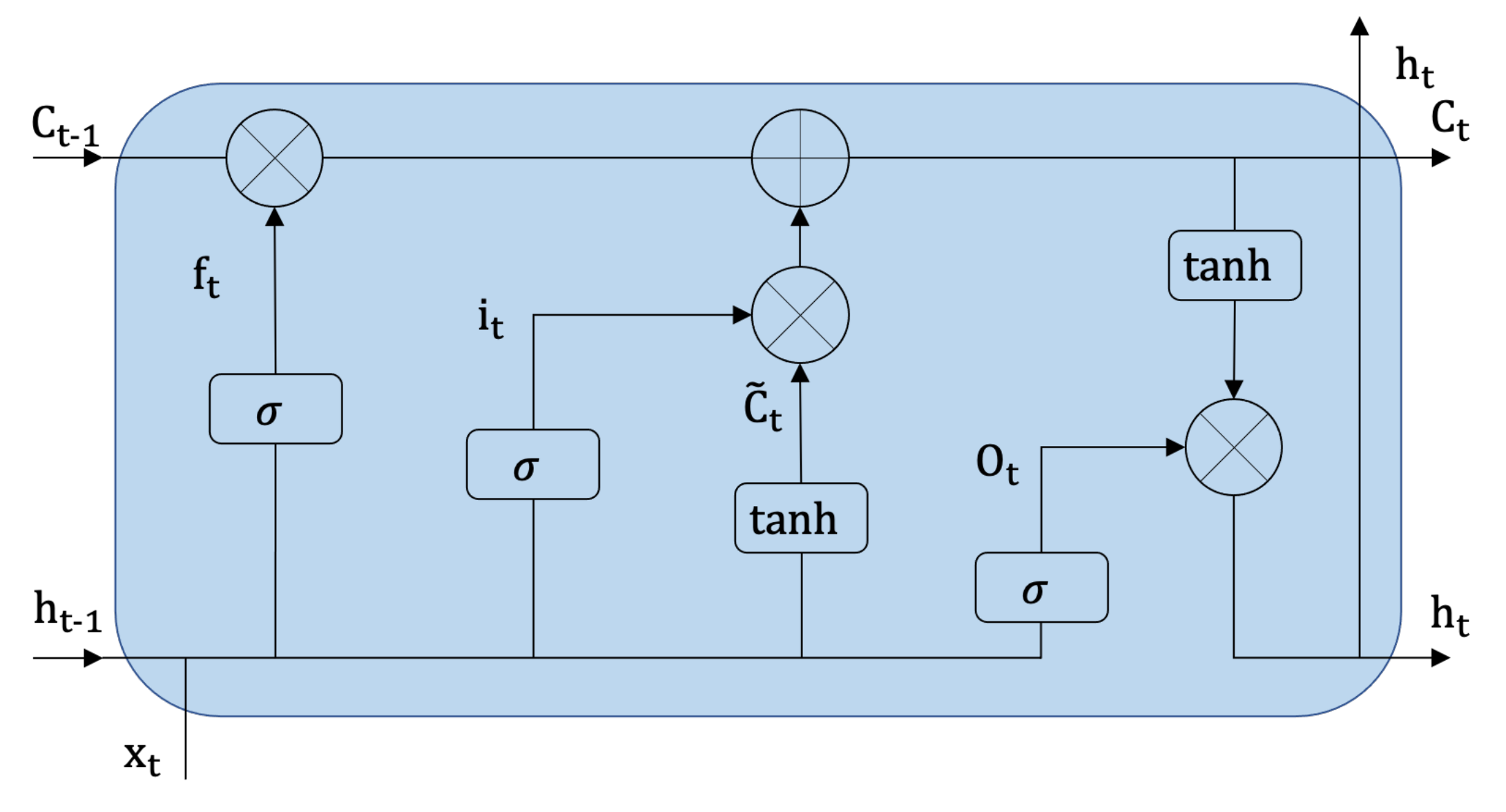

The samples embedded by the CNN form a sequence, which serves as the input of a bidirectional long short-term memory (Bi-LSTM) network consisting of a forward LSTM and a backward LSTM. The basic structure of the LSTM is shown in Figure 12. It consists of three control gates: an input gate $i_t$, a forget gate $f_t$, and an output gate $o_t$. In addition, a memory cell $c_t$ and a hidden unit $h_t$ are also significant components. With the current input $x_t$, the hidden state $h_{t-1}$, and the cell state $c_{t-1}$ at time $t-1$, the LSTM parameters at timestep $t$ can be calculated as follows:

where $W_i$, $W_f$, $W_o$ are the weights and $b_i$, $b_f$, $b_o$ are the biases of the three gates. $\sigma$ and tanh denote the sigmoid and hyperbolic tangent activation functions, respectively. × stands for element-wise multiplication.
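The gate updates described above correspond to the standard LSTM formulation. As a sketch, assuming the common form in which the previous hidden state and the current input are concatenated, and introducing a candidate cell state $\tilde{c}_t$ with its own weight $W_c$ and bias $b_c$ (symbols not named in the text), the updates can be written as:

```latex
\begin{aligned}
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right),\\
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right),\\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right),\\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right),\\
c_t &= f_t \times c_{t-1} + i_t \times \tilde{c}_t,\\
h_t &= o_t \times \tanh(c_t).
\end{aligned}
```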

Through the forget gate, the previous memory cell can be selectively forgotten. The input gate controls the current input, while the output gate determines how the memory cell is converted to a hidden unit. However, a single LSTM network processes the sequential data in one direction only, so only part of the available features can be utilized. Therefore, the Bi-LSTM is leveraged to merge the information from both directions of the sequence. The final hidden vector of the Bi-LSTM at time $t$ can be expressed as:

$$h_t = \overrightarrow{h}_t \oplus \overleftarrow{h}_t$$

where ⊕ is the concatenation operation, and $\overrightarrow{h}_t$ and $\overleftarrow{h}_t$ are the outputs (hidden vectors) of the forward LSTM and the backward LSTM, respectively.
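The bidirectional pass can be illustrated with a small NumPy sketch. It assumes the standard LSTM cell, with the four gate weight matrices stacked into one matrix `W` and a candidate-cell term that the text does not name. The function names and the weight layout are hypothetical and are not taken from the system's implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step; W has shape (4*H, H+D), rows stacked as i, f, o, candidate."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    i = sigmoid(z[0:H])            # input gate
    f = sigmoid(z[H:2 * H])        # forget gate
    o = sigmoid(z[2 * H:3 * H])    # output gate
    c_tilde = np.tanh(z[3 * H:])   # candidate cell state
    c = f * c_prev + i * c_tilde   # element-wise products
    h = o * np.tanh(c)
    return h, c


def run_lstm(xs, W, b, hidden):
    """Run one direction over a sequence xs of shape (T, D); returns (T, H)."""
    h, c = np.zeros(hidden), np.zeros(hidden)
    outputs = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, b)
        outputs.append(h)
    return np.stack(outputs)


def bilstm(xs, W_fwd, b_fwd, W_bwd, b_bwd, hidden):
    """Concatenate forward and backward hidden vectors at every position."""
    forward = run_lstm(xs, W_fwd, b_fwd, hidden)
    # The backward LSTM reads the reversed sequence; re-reverse to align positions.
    backward = run_lstm(xs[::-1], W_bwd, b_bwd, hidden)[::-1]
    return np.concatenate([forward, backward], axis=1)
```

With an input sequence of shape (T, D) and hidden size H, the result has shape (T, 2H), one concatenated vector per sequence position.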

Through the above CNN-LSTM feature representation, we aim to leverage the common characteristics of different activities to calibrate the high-dimensional embedding of each sample. In other words, the feature representation of a sample from one class can use the information of samples from the other classes. The received CSI measurements contain not only dynamic activity information but also static environment information and varying location information. Therefore, samples in every category share some common background features. We hope that the model can learn and memorize the common characteristics of different types of activities, as well as the distinct information of different categories. The distinct information can be utilized to increase the inter-class distance and reduce the intra-class distance.

The embedding function $f$ for a query sample $\hat{x}$ is defined as follows:

where $f'$ is a neural network, the same as $g'$. $K$ denotes the number of “processing” steps, following the work of Vinyals et al. [37]. $g(S)$ represents the embedding function $g$ applied to each element $x_i$ from the set $S$. Thus, the state after $k$ processing steps is as follows:

Note that the LSTM in both $g$ and $f$ follows the same LSTM implementation defined by Sutskever et al. [38].
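For reference, the matching-network formulation of Vinyals et al. [37], which the description above follows closely, writes the query embedding and its processing steps as shown below. This is a sketch of that formulation and may not match the exact notation used for this system. The read-out vector $r_{k-1}$, an attention-weighted sum over the support embeddings, and the intermediate state $\hat{h}_k$ are symbols taken from that work.

```latex
\begin{aligned}
f(\hat{x}, S) &= \mathrm{attLSTM}\left(f'(\hat{x}),\, g(S),\, K\right),\\
\hat{h}_k,\, c_k &= \mathrm{LSTM}\left(f'(\hat{x}),\, [h_{k-1}, r_{k-1}],\, c_{k-1}\right),\\
h_k &= \hat{h}_k + f'(\hat{x}),\\
r_{k-1} &= \sum_{i=1}^{|S|} a\left(h_{k-1}, g(x_i)\right) g(x_i),\\
a\left(h_{k-1}, g(x_i)\right) &= \mathrm{softmax}\left(h_{k-1}^{\top} g(x_i)\right).
\end{aligned}
```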

3.3. Metric Learning-Based Human Activity Recognition

Our location-independent activity recognition task can be described as a few-shot learning problem and a meta learning task. Meta learning trains the model on a large number of tasks so that it learns faster on new tasks with a small amount of data. Traditional meta learning and few-shot learning methods apply the model learned from some classes (source domain) to other, new classes (target domain) with very few samples from the new classes. In contrast, our work applies the model to data with the same labels but a different data distribution.

Meta learning includes a training process and a testing process, called meta-training and meta-testing. In our task, samples from some of the locations are selected as the source domain data, while samples from the other locations are the target domain data. Both the source domain data and the target domain data are divided into a support set and a query set with the same data set selection strategy.

Assume that there is a source domain sample set $S$ with $n$ classes and a target domain set $T$ with the same $n$ classes. We randomly select a support set and a query set from each of $S$ and $T$. $m$ and $l$ (source domain) and $k$ and $t$ (target domain) are the numbers of samples picked from each class for these sets. This is the so-called $k$-shot learning. More precisely, leveraging the support set from the source domain, we learn a function that maps test samples $\hat{x}$ from the source-domain query set to a probability distribution $P$ over outputs $\hat{y}$. $P$ is a probability distribution parameterized by a CNN-LSTM feature representation neural network and a classifier. In the target domain, when a new support set is given, we can simply use the function $P$ to make a prediction $\hat{y}$ for each test sample $\hat{x}$ from the target-domain query set. In short, the prediction of the label $\hat{y}$ for an unseen sample $\hat{x}$ given a support set can be expressed as:

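A simple method to predict $\hat{y}$ is to calculate a linear combination of the labels in the support set as follows:

$$\hat{y} = \sum_{i} a(\hat{x}, x_i)\, y_i$$

where $x_i$ and $y_i$ are the samples and labels of the support set, and $a$ is an attention mechanism given by:

$$a(\hat{x}, x_i) = \frac{e^{c\left(f(\hat{x}),\, g(x_i)\right)}}{\sum_{j} e^{c\left(f(\hat{x}),\, g(x_j)\right)}}$$

It is a softmax over the cosine similarity $c$ of the embedding functions $f$ and $g$, which are the feature representation neural networks. The cosine similarity is calculated as:

$$c\left(f(\hat{x}), g(x_i)\right) = \frac{f(\hat{x}) \cdot g(x_i)}{\lVert f(\hat{x}) \rVert \, \lVert g(x_i) \rVert}$$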
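The attention-based prediction can be sketched in a few lines of NumPy. The sketch assumes that the embeddings $f(\hat{x})$ and $g(x_i)$ have already been computed and that labels are integer class indices encoded as one-hot vectors. The function names are illustrative only.

```python
import numpy as np


def cosine_similarity(query_emb, support_emb):
    """Cosine similarity between one query embedding and each support embedding."""
    q = query_emb / np.linalg.norm(query_emb)
    s = support_emb / np.linalg.norm(support_emb, axis=1, keepdims=True)
    return s @ q


def attention_predict(query_emb, support_emb, support_labels, n_classes):
    """Softmax attention over cosine similarities, then a weighted sum of labels."""
    c = cosine_similarity(query_emb, support_emb)
    e = np.exp(c - c.max())              # subtract the max for numerical stability
    a = e / e.sum()                      # attention weights over the support set
    one_hot = np.eye(n_classes)[support_labels]
    probs = a @ one_hot                  # linear combination of the support labels
    return probs, int(np.argmax(probs))
```

Because the attention weights sum to one and the labels are one-hot, `probs` is a valid distribution over the classes, and the predicted class is the one that receives the largest total attention.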

The training procedure is episode-based, a form of meta learning in which the model learns to learn from a given support set to minimize a loss over a batch. More specifically, we define a task $T$ as a distribution over possible label sets $L$ (four activities in our experiment). To form an “episode” to compute gradients and update our model, we first sample $L$ from $T$ (e.g., $L$ could be the label set {X, O, PO, UP}). We then use $L$ to sample the support set $S$ and a batch $B$ (i.e., both $S$ and $B$ are labelled examples of X, O, PO, UP). The network is then trained to minimize the error in predicting the labels in the batch $B$ conditioned on the support set $S$. More precisely, the training objective is as follows:

where $\theta$ represents the parameters of the embedding functions $f$ and $g$.
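In the matching-network formulation of Vinyals et al. [37], this objective is commonly written as $\theta = \arg\max_{\theta} E_{L \sim T}\left[E_{S \sim L,\, B \sim L}\left[\sum_{(x, y) \in B} \log P_{\theta}(y \mid x, S)\right]\right]$; whether the system uses exactly this form is an assumption. The construction of one episode can be sketched as below. The function `sample_episode`, the `data_by_label` dictionary, and the parameter `batch_per_class` are hypothetical names, and the label set is fixed to the four activities rather than sampled.

```python
import random

ACTIVITIES = ["X", "O", "PO", "UP"]  # label set L used in the experiments


def sample_episode(data_by_label, k_shot, batch_per_class, rng):
    """Draw a support set S and a disjoint batch B for one training episode.

    data_by_label: dict mapping each activity label to a list of samples.
    Returns two lists of (sample, label) pairs.
    """
    support, batch = [], []
    for label in ACTIVITIES:
        # Sample without replacement so S and B never share a sample.
        picked = rng.sample(data_by_label[label], k_shot + batch_per_class)
        support += [(x, label) for x in picked[:k_shot]]
        batch += [(x, label) for x in picked[k_shot:]]
    return support, batch
```

In each training iteration, the model would predict the labels of `batch` conditioned on `support`, and the resulting loss would be used to update the parameters of both embedding functions.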

4. Evaluation

In this section, we evaluate the performance of the proposed WiLiMetaSensing system through extensive experiments. The evaluation contains the following three parts. First, we explore the feasibility and effectiveness of our system. Then, we investigate the system modules. Finally, the robustness of the system is discussed by demonstrating the influence of different data samples.

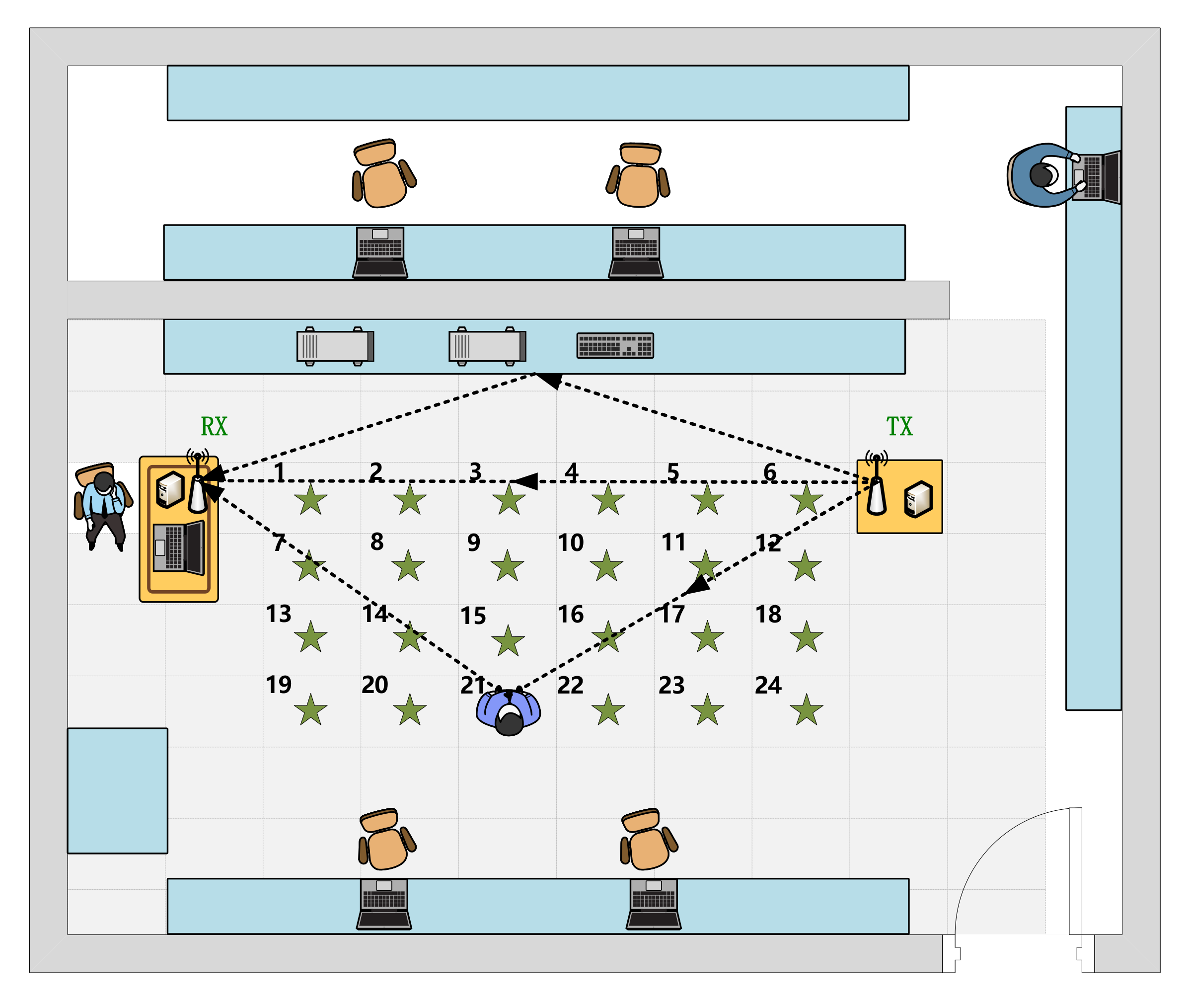

4.1. Experiment Setup

We first evaluate the performance of our sensing method in the traditional settings, namely single location sensing and mixed locations sensing. In addition, we validate the effectiveness of location-independent sensing. There are 50 samples for each activity at each location for each person, 60% of which are randomly selected as the training set, 20% as the validation set, and the rest as the testing set. For single location sensing, we train and test at the same location. For mixed locations sensing, we use the activities from all the locations for training and testing. For location-independent sensing, we report the overall average accuracy with four locations for training and 24 locations for testing. In this section, we report the overall accuracy for one-shot learning using samples that last 3.5 s at a sampling rate of 200 frames/s with 90 subcarriers. Following the training strategy of the meta learning method, when we test k-shot learning, we set the number of samples in each category of the support set to k for the testing sets. The support sets of the training and validation sets are configured in the same way as those of the testing sets.
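The 60/20/20 split of the 50 samples per activity, location, and person can be sketched as follows. The helper name `split_indices` and the fixed random seed are assumptions made for illustration.

```python
import random


def split_indices(n_samples=50, train_frac=0.6, val_frac=0.2, seed=0):
    """Randomly split sample indices into training, validation, and testing sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_frac)
    n_val = round(n_samples * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # the rest
    return train, val, test
```

With the default arguments, the 50 samples are divided into 30 for training, 10 for validation, and 10 for testing.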

Specifically, the CNN embedding module consists of four CNN blocks, each including a convolutional layer, a batch normalization layer, and a max-pooling layer, followed by a fully-connected layer with 64 neurons. In addition, 64 filters with the kernel size are used. In the Bi-LSTM embedding module, the number of hidden units is $n \times k$, which is the number of activities multiplied by the k-shot. The input size of the Bi-LSTM is determined by the dimension of the fully-connected layer, which is 64. The number of hidden layers is 1. The hidden size (the dimension of the hidden layer) is 32, while in attLSTM it is 64. We minimize the cross-entropy loss function with Adam to optimize the model parameters. The exponential decay rates $\beta_1$ and $\beta_2$ are empirically set to 0.9 and 0.999. The learning rate is set to 0.0001. The total number of training iterations is 300. The batch size is set to 16. Unless otherwise specified, the following evaluations follow the above settings.

4.2. Overall Performance

Table 2 illustrates the recognition average accuracy of our method compared with the traditional deep learning method CNN and WiHand [

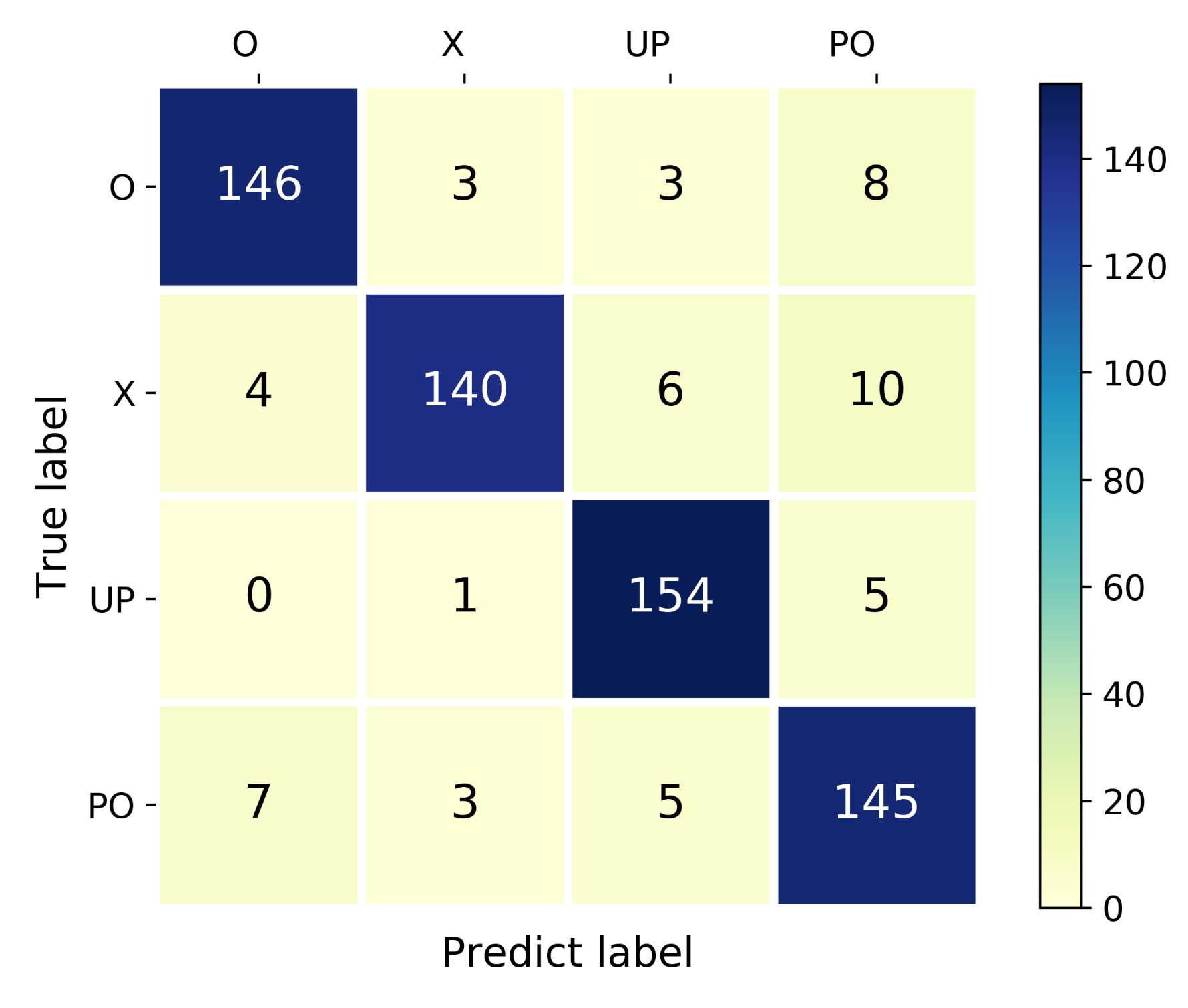

25]. WiHand is based on the low rank and sparse decomposition (LRSD) algorithm and extracts the histogram of the gesture CSI profile as the features, which outperforms the other location-independent approach. It can be seen that our system outperforms these two methods in both location-dependent sensing and location-independent sensing. All the methods can recognize with high accuracy for single location sensing and mixed locations sensing. For the location-independent sensing, WiLiMetaSensing can also obtain an average 91.11% recognition accuracy, which is about 7% higher than CNN, and about 9% higher than WiHand. Specifically, the confusion matrix of a test for our location-independent human activity recognition method is shown in

Figure 13 with a 91.41% accuracy. We can see that all of the activities can be recognized with high accuracy. Note that

Table 2 shows the optimal recognition accuracy of WiHand with 30 subcarriers and 20 features. We analyze the reason why WiHand did not perform as well as the original dataset, including (1) The nine data collection locations of WiHand are relatively close to the TX and RX, while our 24 locations have a wider coverage. (2) The sampling rate of WiHand is 2500 packets/s, which is much larger than our 200 packets/s. (3) WiHand could extract CSI streams of all 56 subcarriers from the customized drivers, while ours is 30 subcarriers. A higher sampling rate and more subcarriers may provide richer fine-grained information. After the matrix decomposition, more activity-related information will be preserved.

4.3. Module Study

Comparison with different feature representation modules. In this section, we explore the effect of the embedding modules g (Bi-LSTM) and f (attLSTM) for the samples from the support set and the query set, respectively. We test one-shot learning using the samples with 90 subcarriers. From Table 3, we can see that both modules enhance the performance of the method. By leveraging all the activity samples from the support set, common features can be obtained to adjust the feature representation so that it pays more attention to the location-independent features. The embedding module for the query set enables the samples in the source domain to effectively calibrate the feature representation of the samples in the target domain.

4.4. Robustness Evaluation

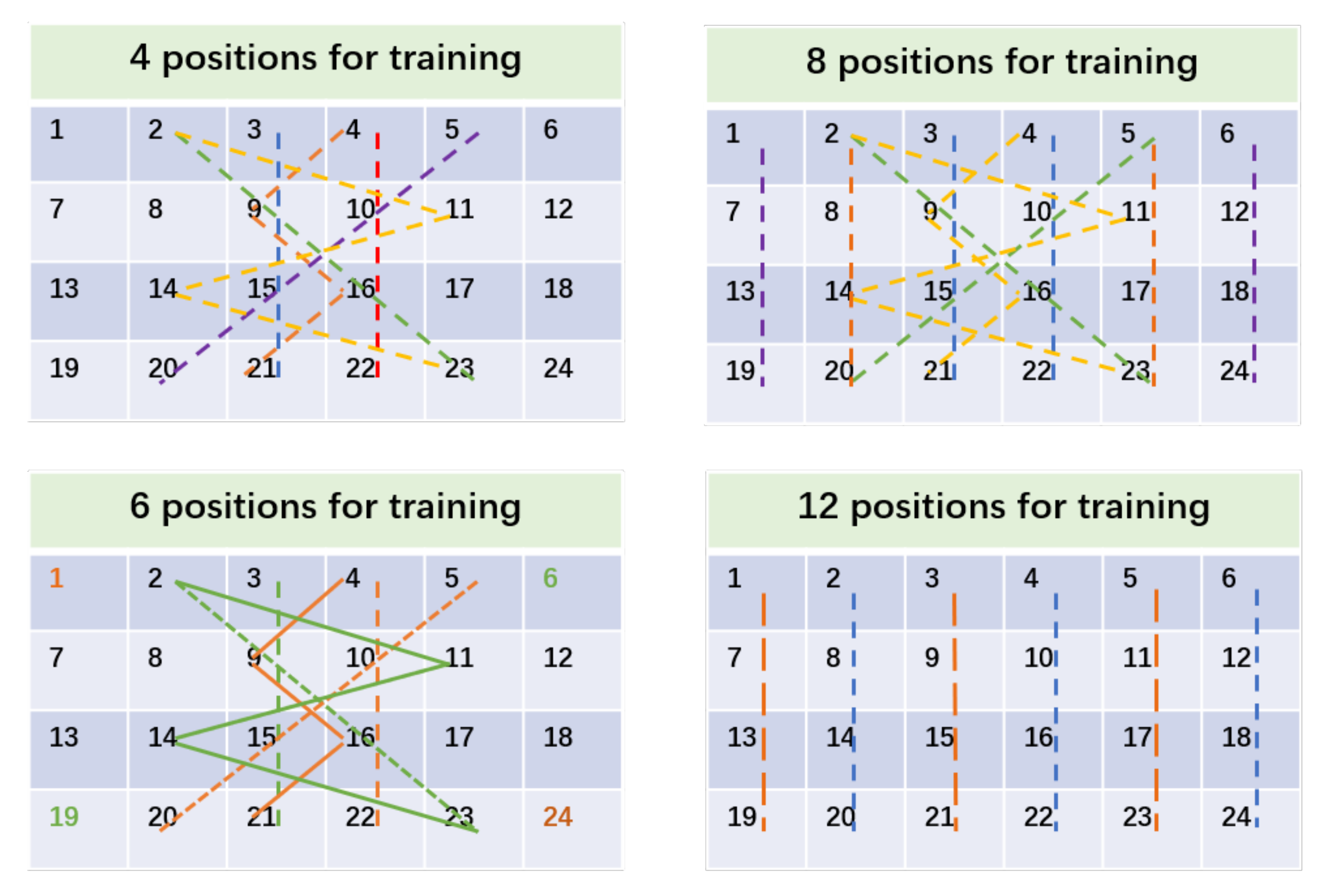

Performance of location-independent sensing with different numbers of training locations. The activity samples at each position have different data distributions. The farther apart two locations are, the more likely their data distributions are to differ widely. Therefore, we want the positions of the training samples to be spread out. We adopt a fixed training position selection strategy, in which the positions are distributed as widely as possible across the entire space instead of clustering together in a line parallel to the transceiver. We choose 4/6/8/12/24 locations for training and 24 locations for testing. The selections of the fixed 4/6/8/12 training positions are depicted in Figure 14. Specifically, for 4/8/12 training locations, the positions that a straight line of a given color passes through, or the inflection points and endpoints of a broken line of the same color, constitute the training samples. For six training locations, the straight or broken lines together with the marked locations of the same color form the training pairs.

As demonstrated in Table 4, when we pick four training locations and 1-shot, the accuracy is 91.11%. When eight training locations and 1-shot are selected, the accuracy is 92.66%. The results indicate that more training positions yield higher recognition accuracy.

Performance of location-independent sensing for samples with different numbers of subcarriers. We explore one-shot human activity recognition with different numbers of subcarriers. As illustrated in Table 5, the recognition accuracy decreases as the number of subcarriers decreases. However, the method still maintains an acceptable recognition rate when there are only 30 subcarriers from one pair of antennas.

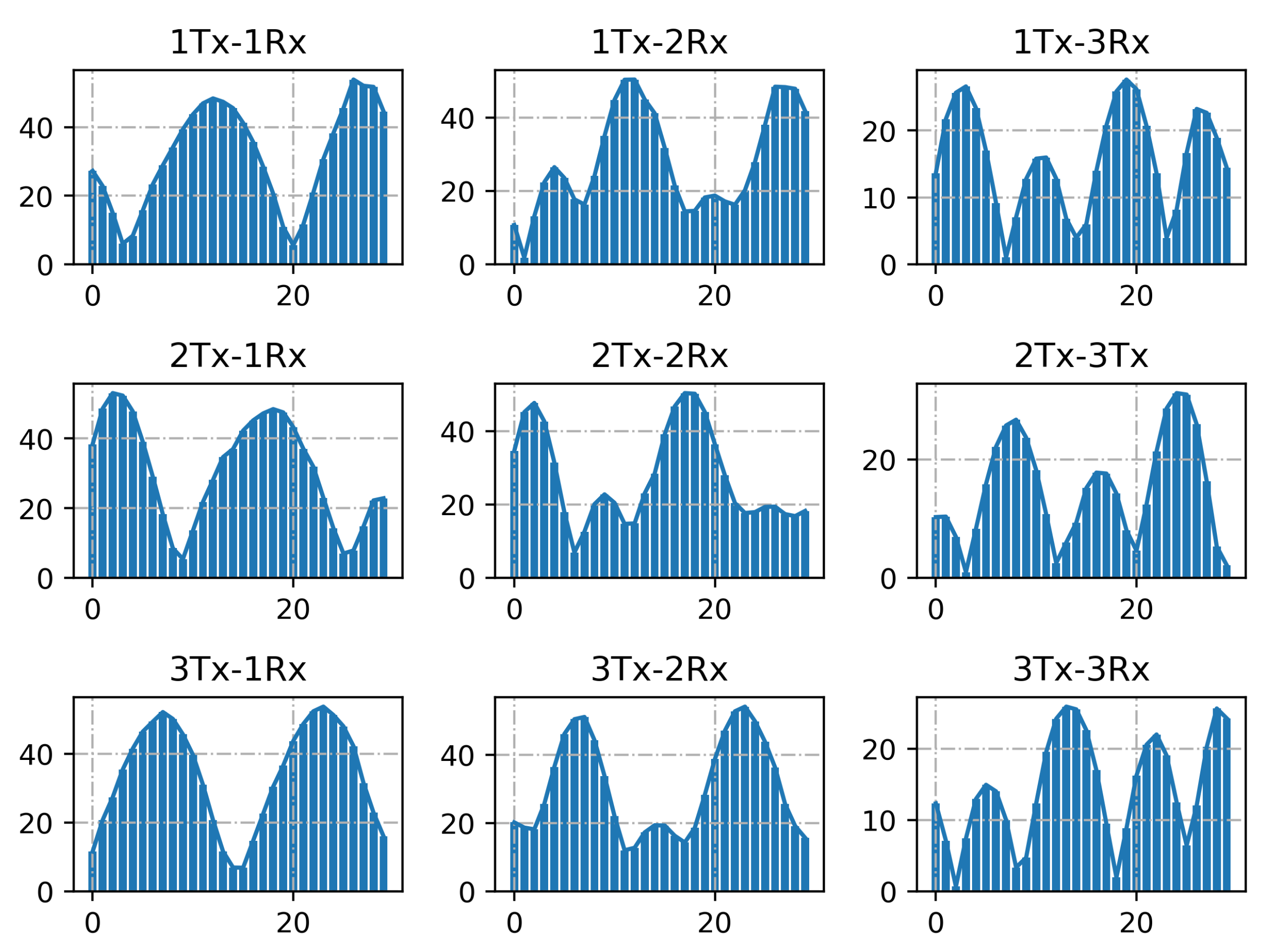

Performance of location-independent sensing for different TX-RX antenna pairs. We investigate the recognition accuracy with 30 subcarriers from different TX-RX antenna pairs. As shown in Table 6, different antenna pairs achieve similar recognition accuracy. The remaining differences reflect that different antenna pairs carry somewhat different information. Therefore, 90 subcarriers, which integrate these features, obtain superior results. Note that, in Table 6, iTX-jRX represents CSI data from the i-th TX and the j-th RX.

Performance of location-independent sensing for different numbers of shots. We explore the number of samples in the support set for testing. As an example, we again select four locations for training and 24 locations for testing. The samples with 90 subcarriers are used. The identification results are listed in Table 7. All the average accuracies are above 90%, and the accuracy increases as the number of shots grows.

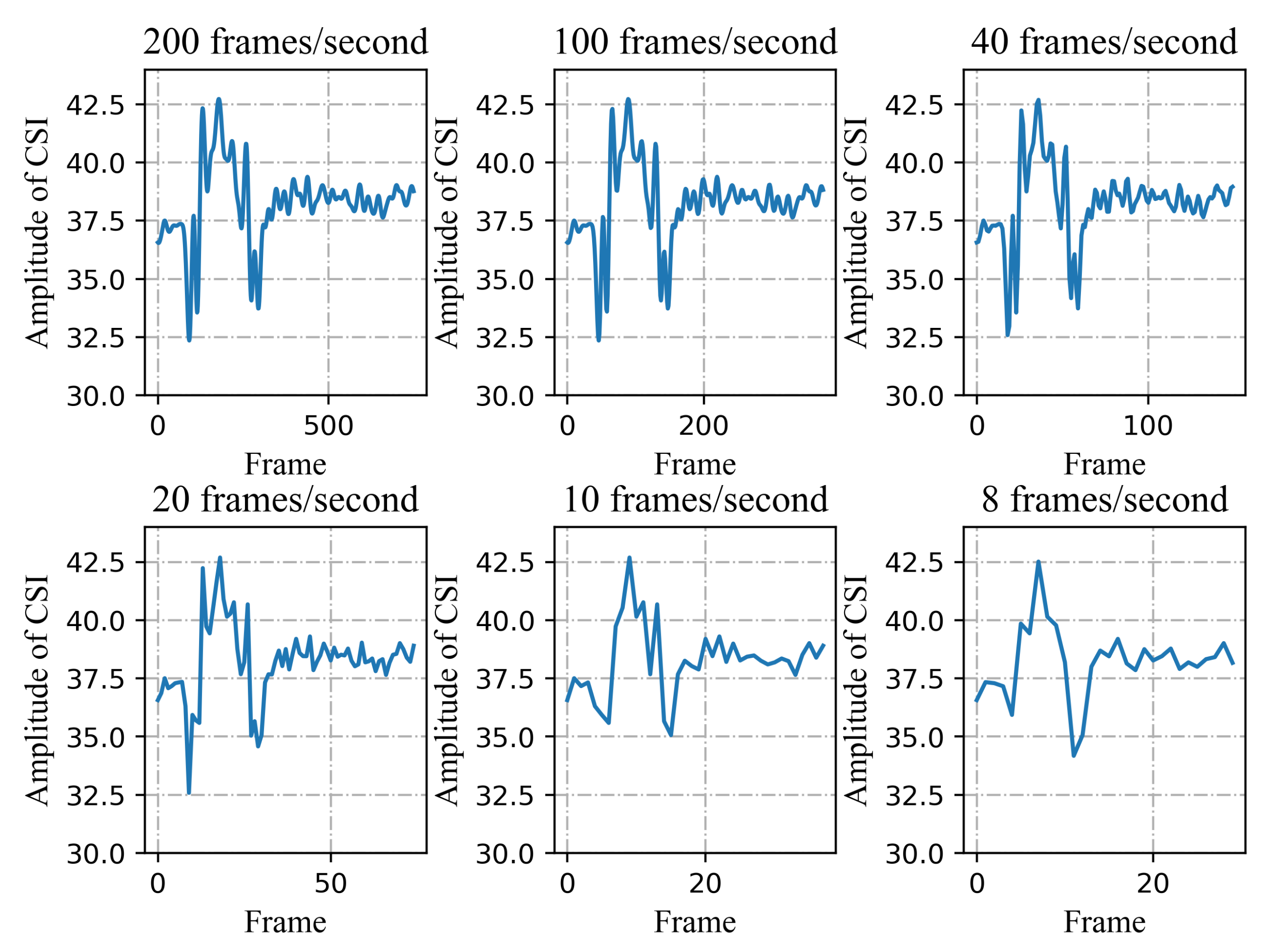

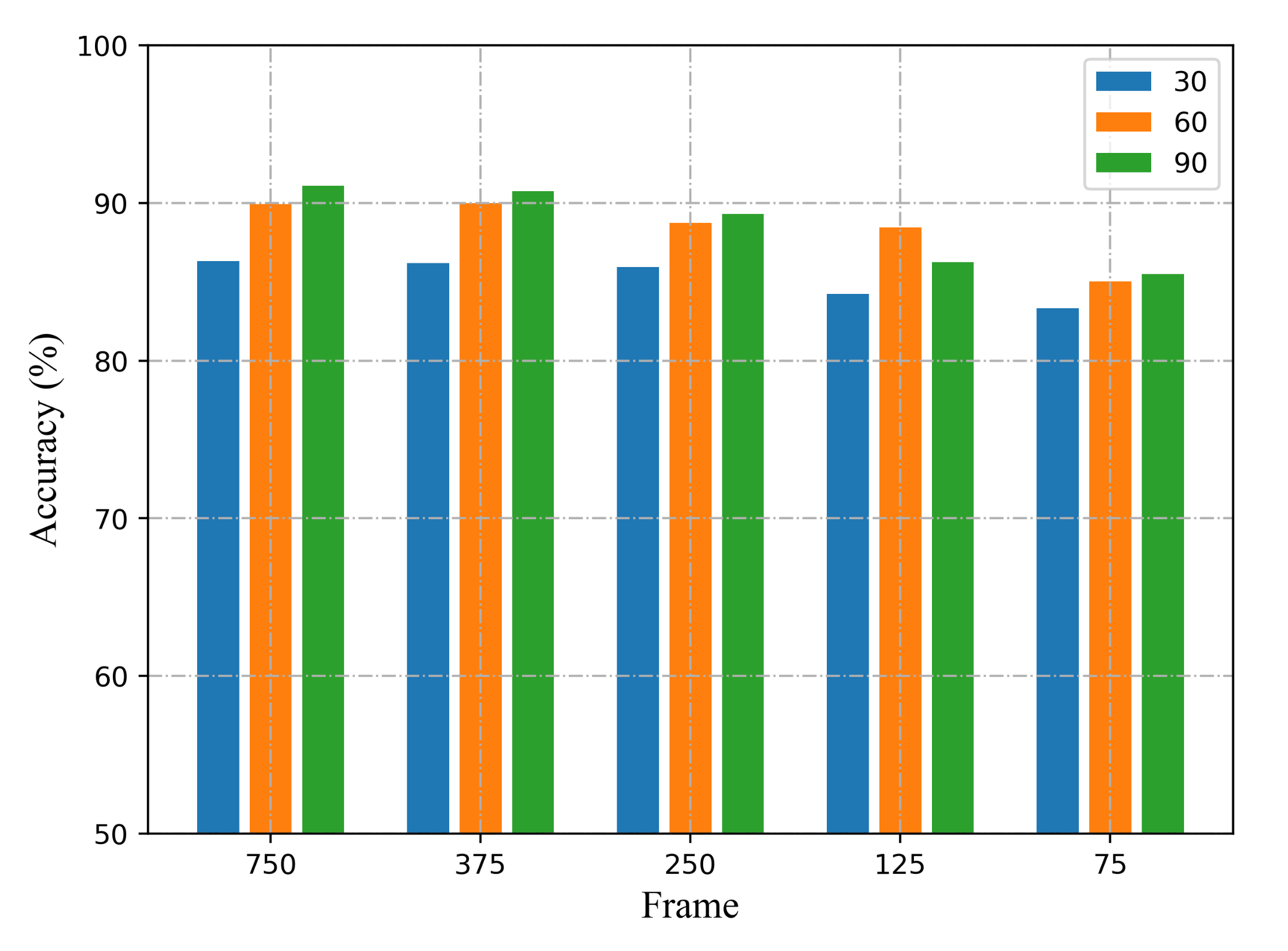

Performance of location-independent sensing for samples with different sampling rates. We collect CSI measurements at the initial transmission rate of 200 packets/s, and down-sample the 750 CSI series to 375, 250, 150, and 75. The one-shot results with different sampling rates are shown in Figure 15. As can be seen, when the sampling rate decreases to 20 frames/s, the method still obtains satisfying accuracy.
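The relation between the series lengths and the effective sampling rates can be checked with a short sketch. It assumes that down-sampling is done by keeping every `factor`-th frame; the text does not state the method, so this plain decimation is an assumption, as are the helper name and the placeholder sample.

```python
import numpy as np


def downsample(sample, factor):
    """Keep every `factor`-th frame along the time axis (axis 0)."""
    return sample[::factor]


base_rate = 200                  # packets/s at collection time
sample = np.zeros((750, 90))     # placeholder sample: 750 frames x 90 subcarriers

for factor in (2, 3, 5, 10):
    reduced = downsample(sample, factor)
    # Series length after down-sampling and the corresponding effective rate.
    print(reduced.shape[0], base_rate / factor)
```

Under this assumption, factors of 2, 3, 5, and 10 reduce the 750 frames to 375, 250, 150, and 75 frames, and a factor of 10 corresponds to the 20 frames/s mentioned above.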