Fourier-Transform-Based Surface Measurement and Reconstruction of Human Face Using the Projection of Monochromatic Structured Light

Abstract

1. Introduction

2. Theoretical Analyses

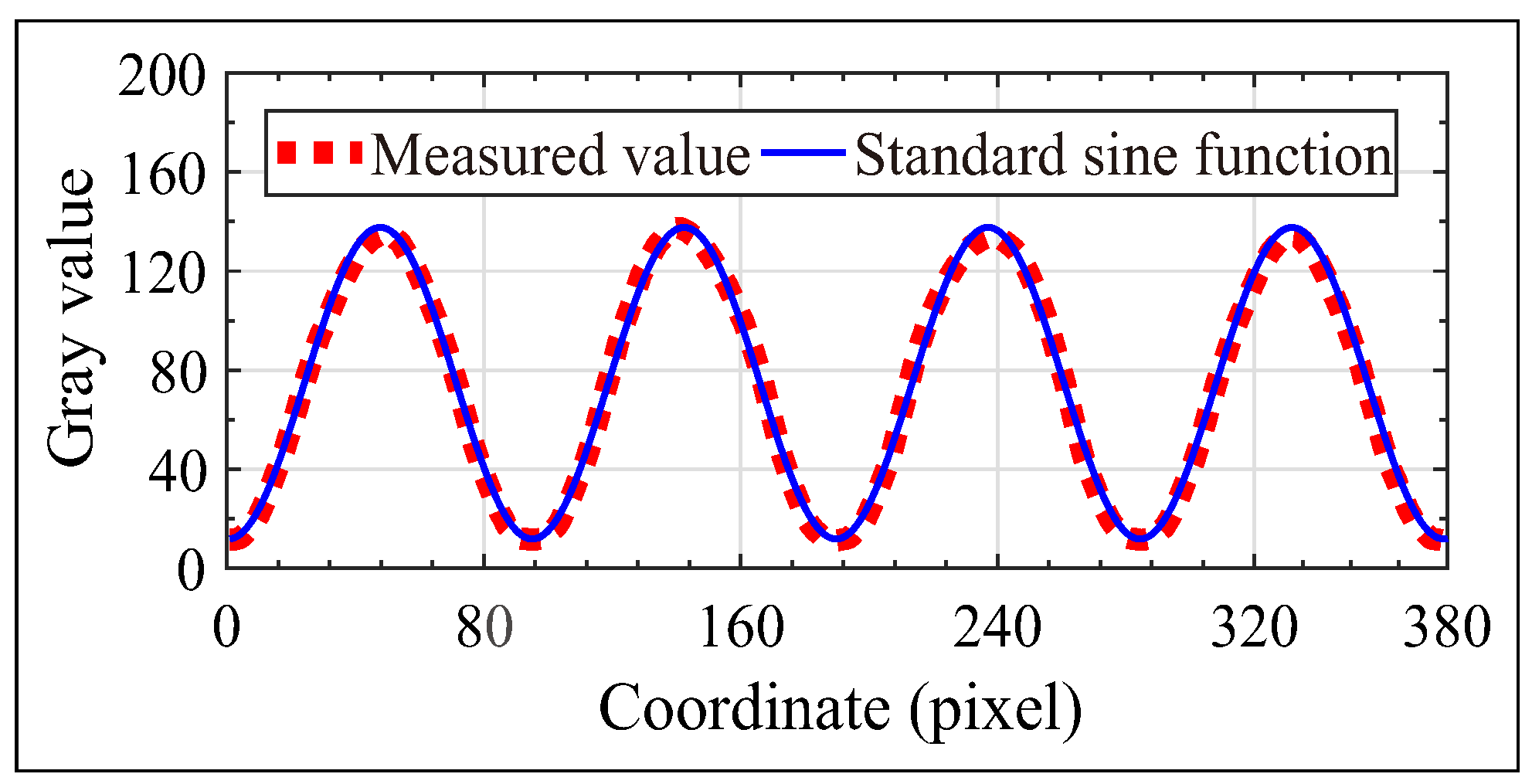

2.1. The Generation and Projection of Sinusoidal Fringe Signal

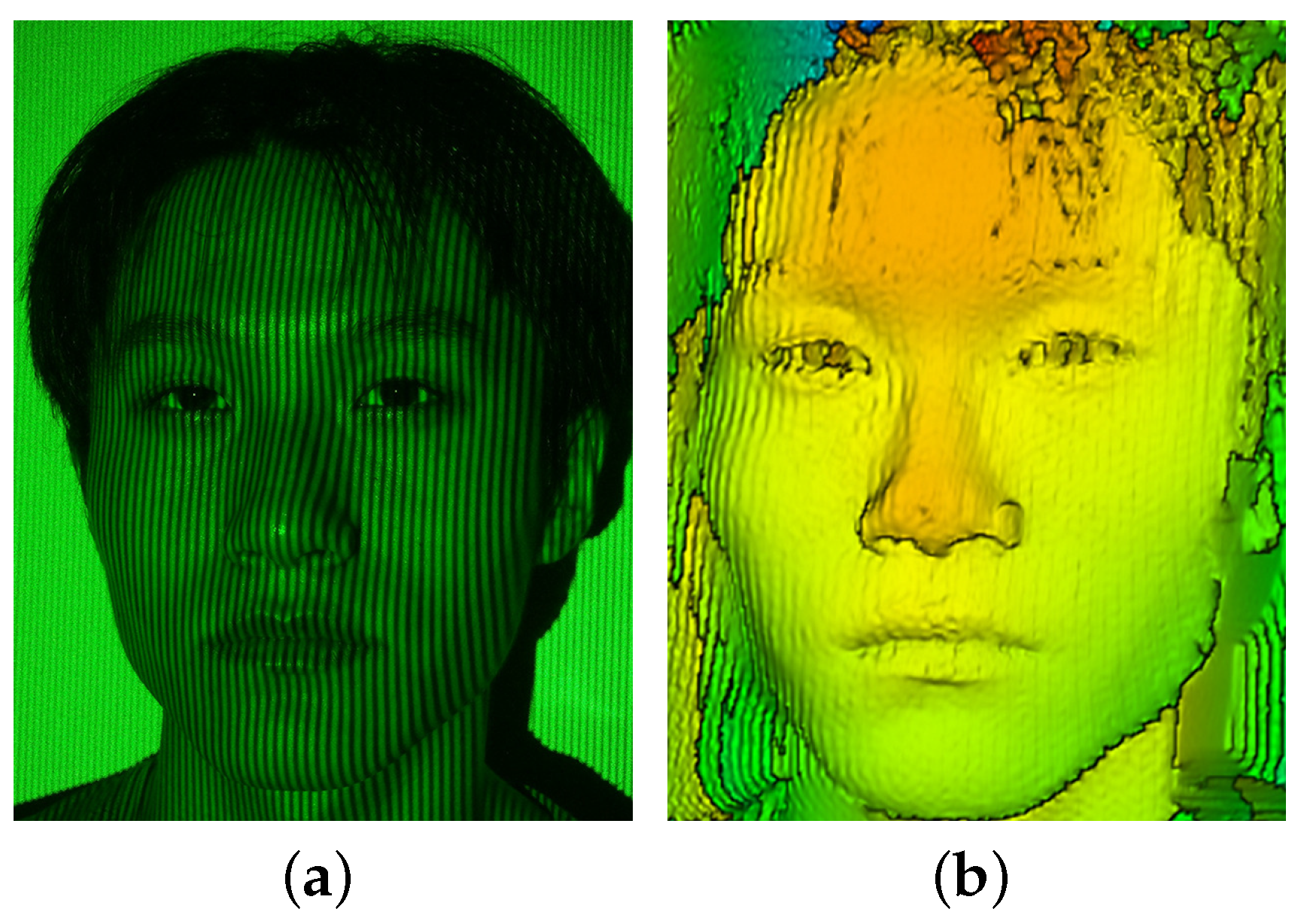

2.2. Imaging Technique and Reconstruction Based on One-Shot FTP

2.2.1. Removing the Effect of Background Illumination Using an Optical Filter

2.2.2. Eliminating the Effect of Multiply-Scattered Photons Using a Polarizer

2.2.3. Reconstruction Based on One-Shot FTP

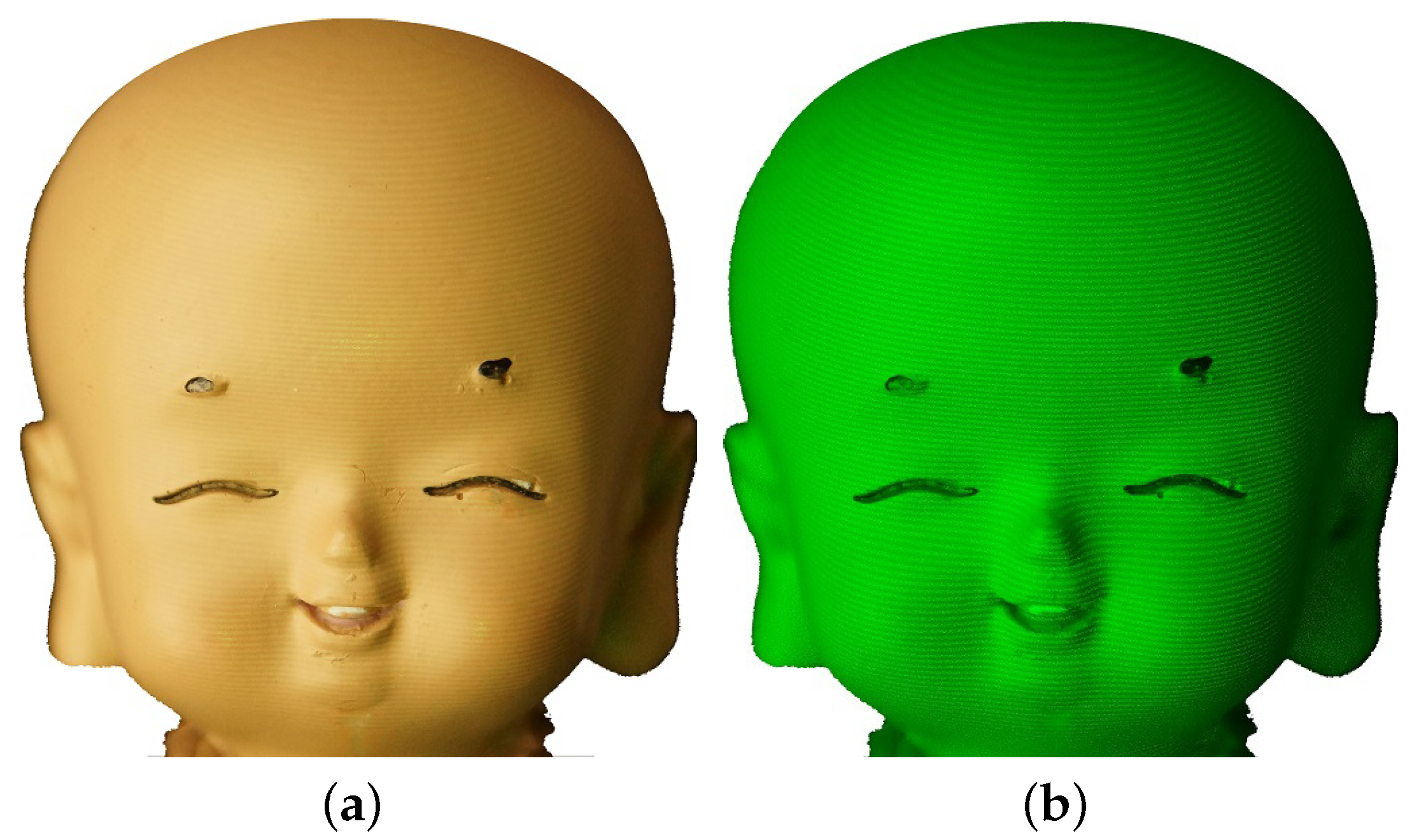

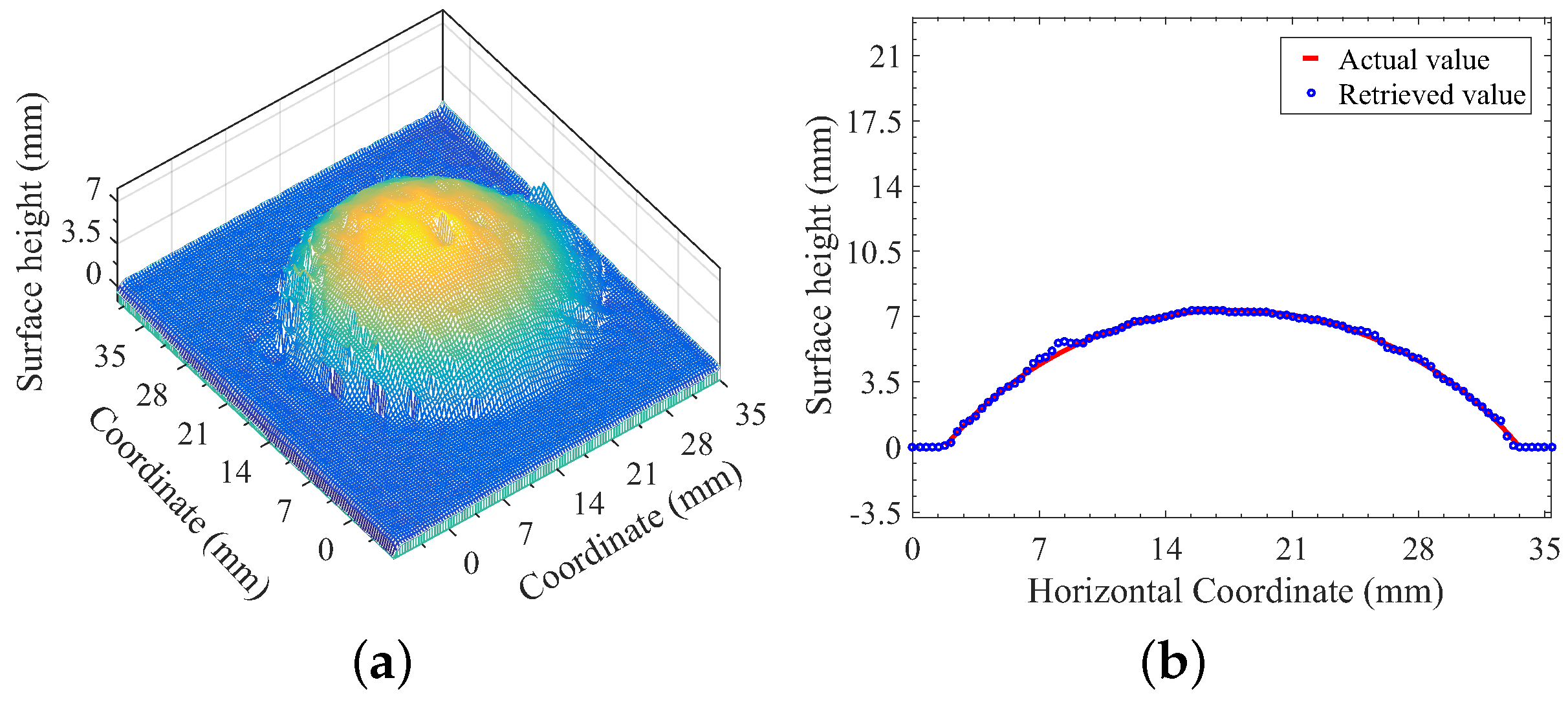

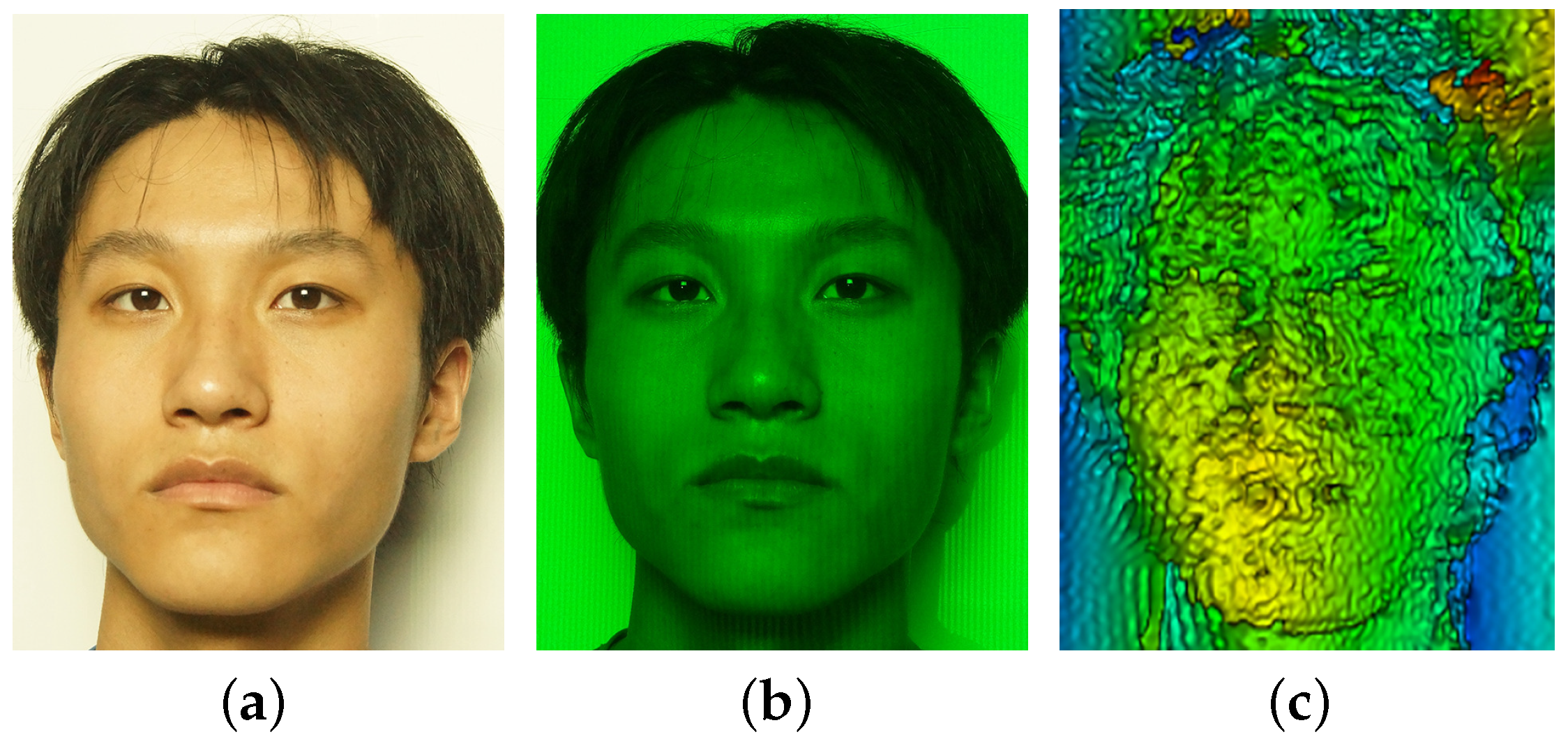

3. Experimental Results of Facial Reconstruction and Discussions

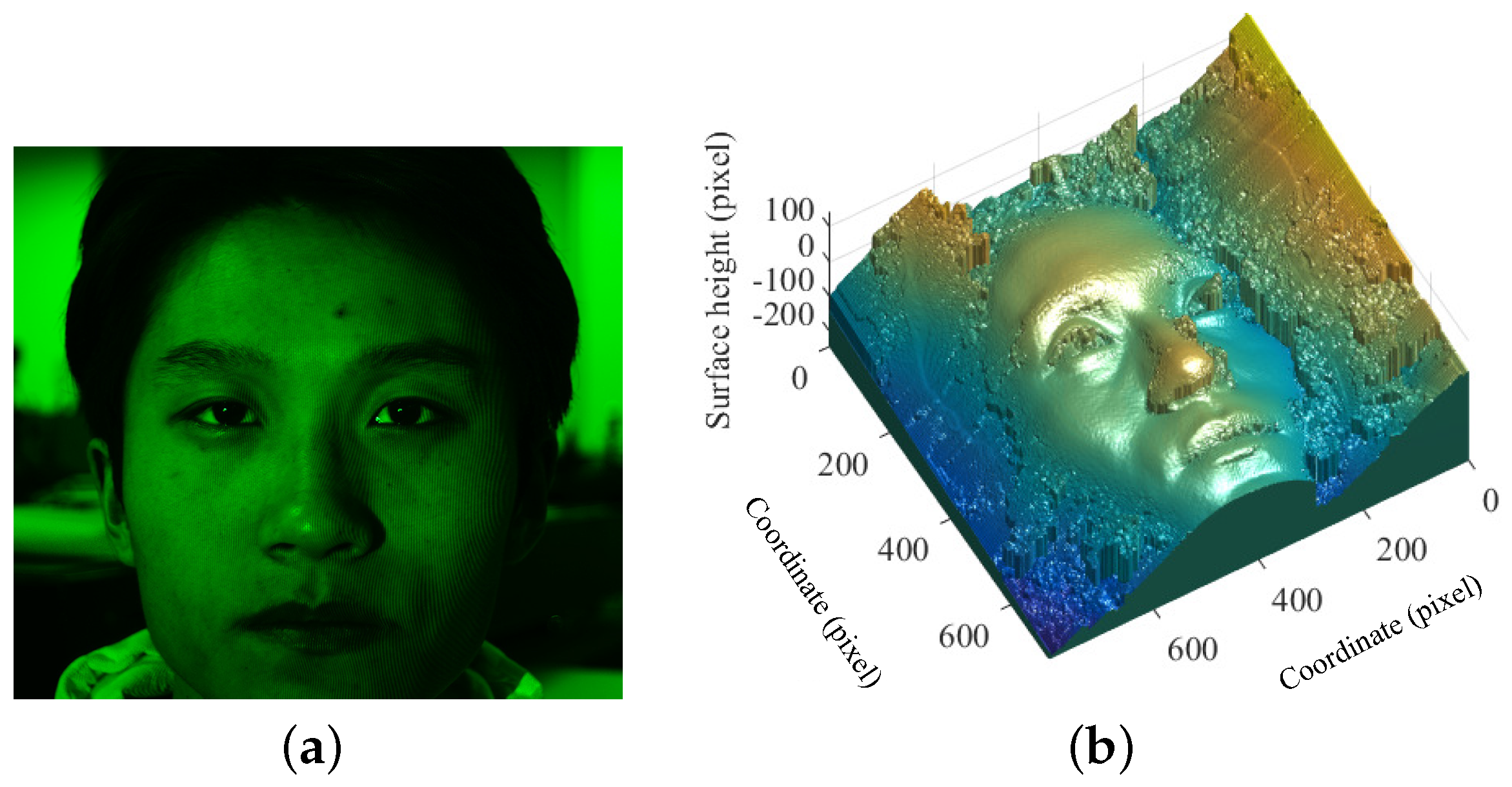

3.1. The Effect of Fringe Contrast

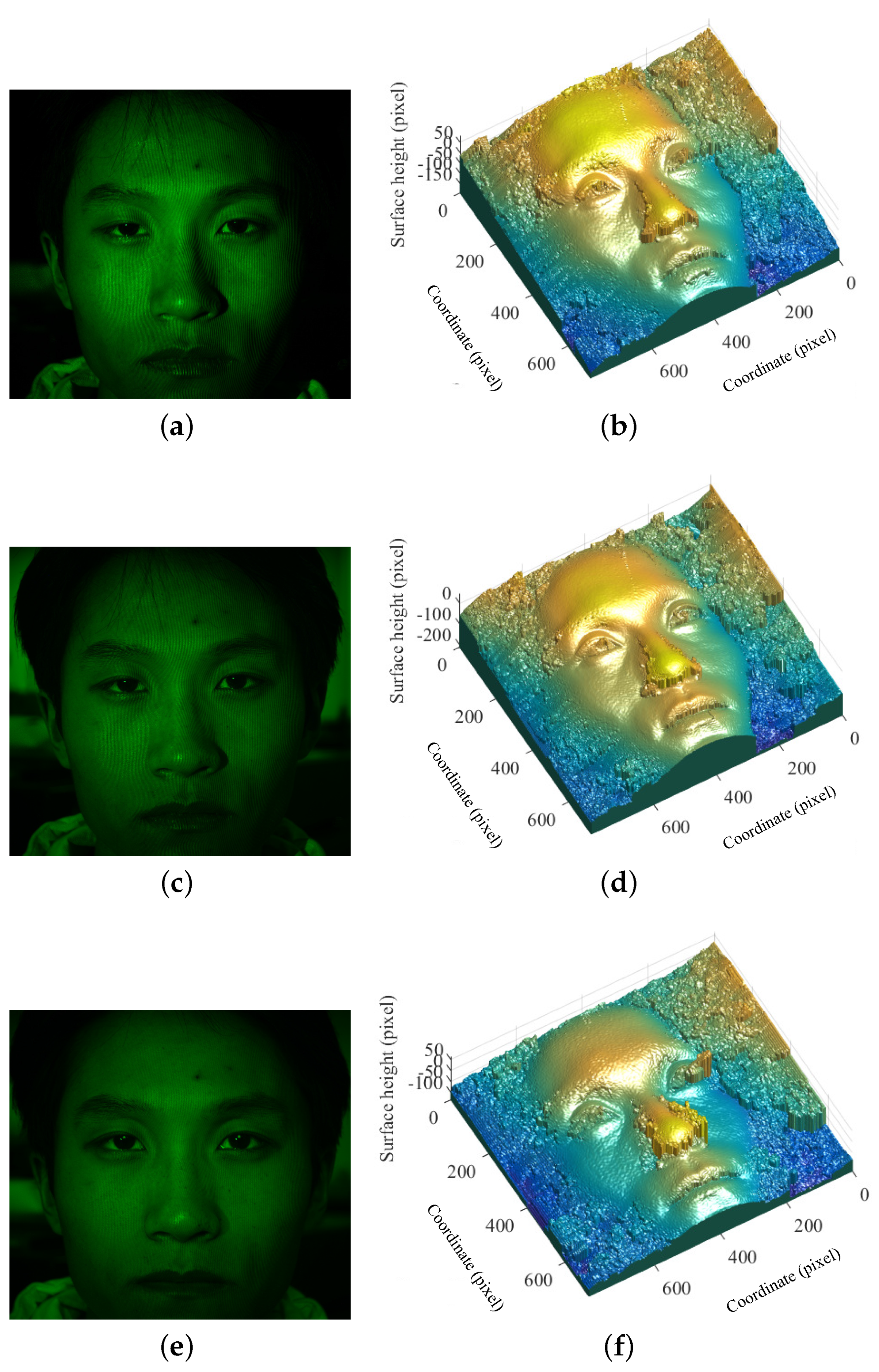

3.2. Reconstruction for Both Static and Dynamic Faces at Higher Spatial Frequency

3.3. Discussions

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, B.; Li, H.; Yue, J.; Shi, P. Fourier-Transform-Based Surface Measurement and Reconstruction of Human Face Using the Projection of Monochromatic Structured Light. Sensors 2021, 21, 2529. https://doi.org/10.3390/s21072529
