Abstract

Accurate object vehicle estimation using radar faces two fundamental problems: measurement uncertainty in calculating an object's position with a virtual polygon box, and latency caused by commercial radar tracking algorithms. We present a data-driven object vehicle estimation scheme that addresses both problems. A radar accuracy model and latency coordination are proposed to reduce the tracking error. We first design data-driven radar accuracy models that depend on the object vehicle's position to improve estimation accuracy. The proposed model handles the measurement uncertainty problem within a feasible set for error covariance. The latency coordination is developed by analyzing the position error as a function of relative velocity: the position error due to latency is stored in a feasible set for relative velocity, and the correction is calculated from the measured relative velocity. After compensating for the measurement uncertainty and latency of the radar system, weighted interpolation is applied to estimate the position of the object vehicle. We validate the proposed scheme through a scenario-based estimation experiment and confirm that it outperforms conventional radar estimation and previous methods.

1. Introduction

Autonomous driving technologies such as collision risk decision, path planning with collision avoidance, lane change systems, and advanced driver assistance systems (ADASs) are attracting attention [1,2,3,4]. These areas are critical not only for research but also for bringing autonomous vehicles to public roads. To improve active safety systems for autonomous driving, the relative positions of surrounding vehicles must be estimated accurately [5,6]. Object vehicle estimation research incorporates various types of sensors, such as radio detection and ranging (radar), light detection and ranging (LiDAR), and cameras. Among these sensors, radar is a reliable vehicle sensor for measuring the motion of surrounding vehicles; its advantages lie in its commercial availability and robustness against environmental variation. Radar sensors have been applied in ADAS functions such as blind-spot detection (BSD) and adaptive cruise control (ACC).

However, radar has intrinsic measurement uncertainties in calculating an object vehicle's position and velocity because it uses a virtual polygon box built from only partial information [7,8,9]. To address this limitation, various filters have been applied to improve radar accuracy. In radar applications, the Kalman filter (KF) and the interacting multiple model (IMM) filter were compared in [10]. A particle filter [11] and an unscented Kalman filter (UKF) [12] for nonlinear systems have been proposed for target tracking using a radar sensor. For object tracking with a radar system, the multiple model approach has been found to provide better filtering performance than a single model [13]. Radar tracking performance has been improved through IMM [14] and convex interpolation [15] by using radar accuracies that vary with the object vehicle position [16]. In [9], the authors proposed an IMM algorithm using extended Kalman filters (EKFs) for multi-target state estimation. In [17], the performances of the IMM and Viterbi algorithms were investigated and compared for radar tracking and detection. A self-adapting variable structure interacting multiple model (VS-IMM) estimation approach combined with an assignment algorithm was presented in [18] for tracking ground targets with constrained motion; the VS-IMM handled motion uncertainties arising from varying dynamic driving situations. In [19], the authors presented a data-driven object tracking approach that trains a deep neural network to learn situation-dependent sensor measurement models.

Another approach to accurate object tracking using radar adds sensors such as cameras and LiDAR. Research fusing radar and camera sensors is described in [20]. In [21], the authors used visual recognition information to improve tracking model selection, data association, and movement classification. In [22], an algorithm estimating the location, size, pose, and motion of a threat vehicle was implemented by fusing information from a stereo camera and millimeter-wave radar sensors. In [23], the authors proposed a fusion architecture using radar, LiDAR, and camera for accurate detection and classification of moving objects. In [24], heuristic fusion with adaptive gating and track-to-track fusion were applied to a forward vehicle tracking system using camera and radar sensors, and the two algorithms were compared. In [25], the authors presented an EKF that reflects the distance characteristics of LiDAR and radar sensors. In [26], the fusion of radar and camera sensor data with a neural network was studied to improve object detection accuracy. In [27], objects were detected and identified from vision and radar sensor data using the YOLOv3 architecture. However, the sensor fusion approach requires more sensors. Although multi-sensor setups can improve estimation performance, they increase vehicle cost. In addition, sensor fusion increases computational cost, which introduces latency [28].

As stated above, applying a filter without additional sensors enables accurate tracking without increasing cost. However, radar latency (processing delay) increases when a filter is used [16,29,30]. This latency increases further depending on the tracking algorithm used in vehicle applications (e.g., point cloud clustering, segmentation, single sensor tracking, multilateration, classification, and filtering) [7,14,31,32]. In this regard, the effect of radar processing latency on the efficiency of detecting, acquiring, and tracking a target was evaluated in [29]. In [33], the authors noted that both measurement delay (i.e., the time elapsed from when a physical event occurs until it is output to the application) and accurate position data of other vehicles are important for future driver assistance systems. In [34], the authors proposed a deep-neural-network-based classification method for automotive radar sensors that takes latency into account. Ultimately, this processing latency causes a tracking error that depends on the relative speed in autonomous driving applications. Therefore, anyone designing an upper-level application should consider processing latency when developing object vehicle estimation for driving safety.

The objective of this paper is to propose an object vehicle estimation scheme that improves radar accuracy. We develop a data-driven scheme that accounts for radar accuracy within a feasible set to solve the measurement uncertainty and latency problems. First, we develop radar accuracy models by comparing radar and ground truth data in each zone; the model for a zone is selected according to where the object vehicle is located. We then address the radar latency problem as a function of relative velocity: the position error for each relative velocity data set is stored at a vertex, and the solution is found within the feasible set for these data sets. Using the radar accuracy models together with latency coordination, weighted interpolation is applied to estimate the object vehicle's position. This approach allows the radar accuracy models to compensate for measurement uncertainty and latency within the feasible set. We verify the utility of the proposed method through scenario-based experiments. The contribution of this paper is the development of an accurate object vehicle estimation scheme that solves the radar measurement uncertainty and latency problems.

The remainder of this paper is organized as follows. Section 2 describes the two problems that must be addressed to improve object vehicle estimation accuracy. Data-driven radar accuracy modeling with occupancy zones is described in Section 3. In Section 4, weighted interpolation is applied to object estimation by considering error characteristics and latency. Section 5 presents the analysis and results of applying the proposed method to vehicle applications and discusses future work. Section 6 presents concluding remarks.

2. Problem Statement

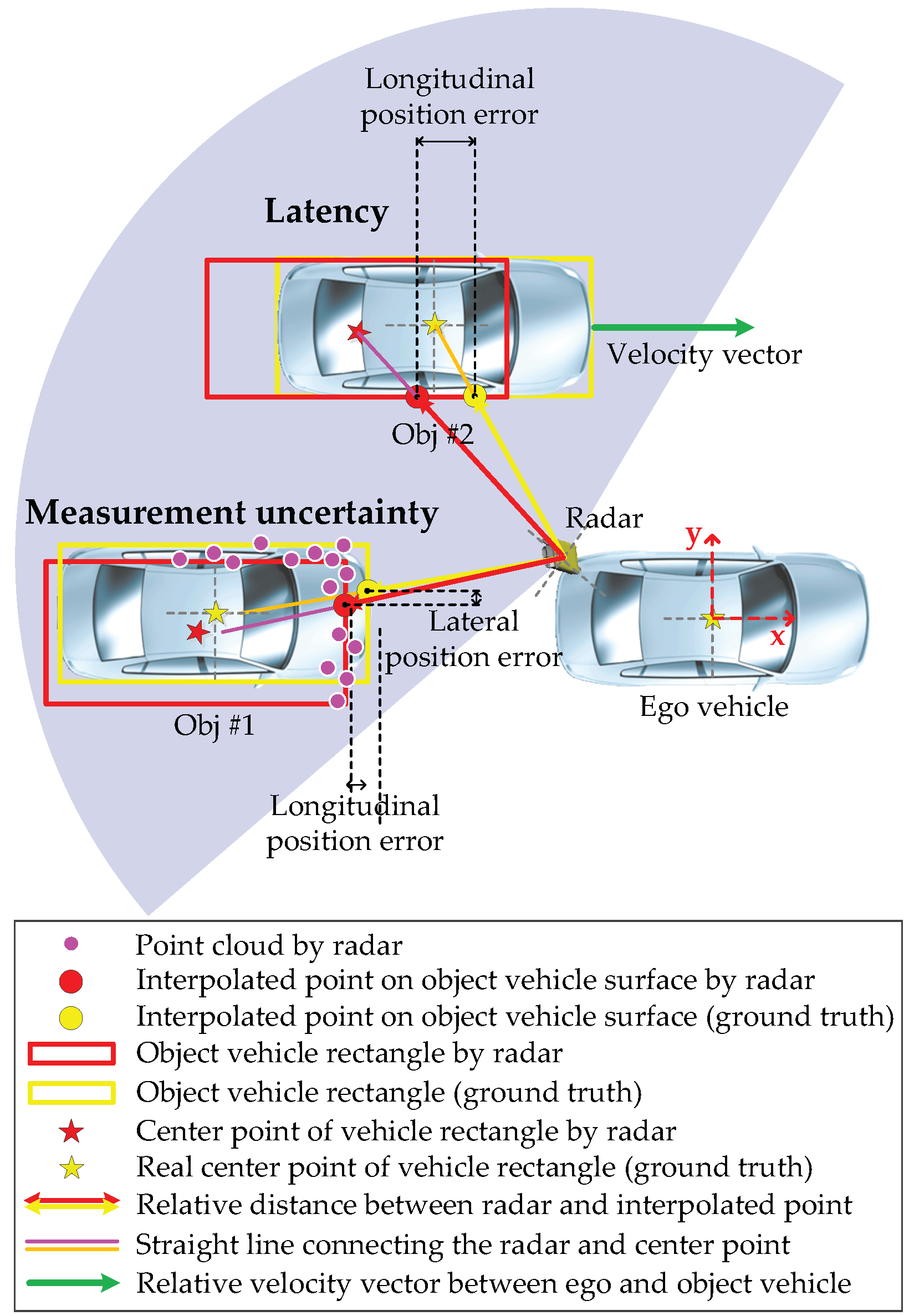

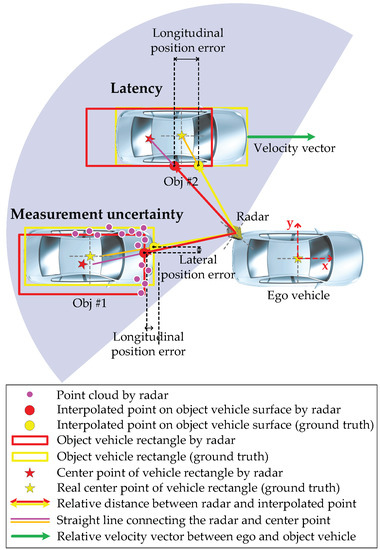

The problem we are interested in is object vehicle estimation that accounts for a radar's measurement uncertainty and latency, as shown in Figure 1. There are two fundamental problems in accurate object vehicle estimation: measurement uncertainty in calculating an object's position with a virtual polygon box, and latency due to the tracking algorithm of a commercial radar. To resolve these problems, we develop a data-driven object vehicle estimation scheme using a radar accuracy modeling method with weighted interpolation. The radar accuracy model is built from the error between the radar data and the ground truth data and takes the relative speed into account. We also demonstrate the utility of our method through experiments.

Figure 1.

Example of object vehicle estimation by radar: measurement uncertainty arises from insufficient point cloud data and classification errors. This error occurs because the radar estimation algorithm (e.g., point cloud clustering, segmentation, single sensor tracking, multilateration, classification, and filtering) can only estimate an object vehicle's size with a virtual polygon box built from partial information [7,8,14,35]. Furthermore, the tracking algorithm of a commercial radar introduces latency that causes position errors, and the error caused by the latency grows with the relative velocity.

These problems are almost undetectable and unknown to those who develop high-level applications such as ADASs. Therefore, we propose a scheme that models these undetectable and unknown error characteristics as noise characteristics in each divided zone, and we design a data-driven object estimation scheme. In addition, we propose data-driven latency coordination to reduce the errors introduced by radar algorithms. We use only the relative position and velocity, which are the only data available to anyone designing an upper-level application.

3. Data-Driven Radar Accuracy Modeling

To improve radar accuracy, we first developed a radar accuracy model. Previous research analyzing radar accuracy [14,15] found that the error characteristics differ according to the mounting angle of the radar and the detection area in which the object is observed [16]. Because the radar error varies with the angle and the detection area, it is difficult to obtain a single error characteristic for the radar's entire detection area. We therefore model these unknown error characteristics as constant within each of several representative detection zones, because the part of the object vehicle detected by the radar is similar for different object vehicles in the same detection area. In other words, each radar unit has a representative model for each zone. Using occupancy zones with these error characteristics reduces the measurement uncertainty of the radar. However, a velocity-dependent error remains because of the radar's latency (the analysis of the experimental data is presented in Section 5.2). This error is caused by the object tracking algorithm of commercial radars [14,29] and is a problem for anyone designing high-level applications that use radar.

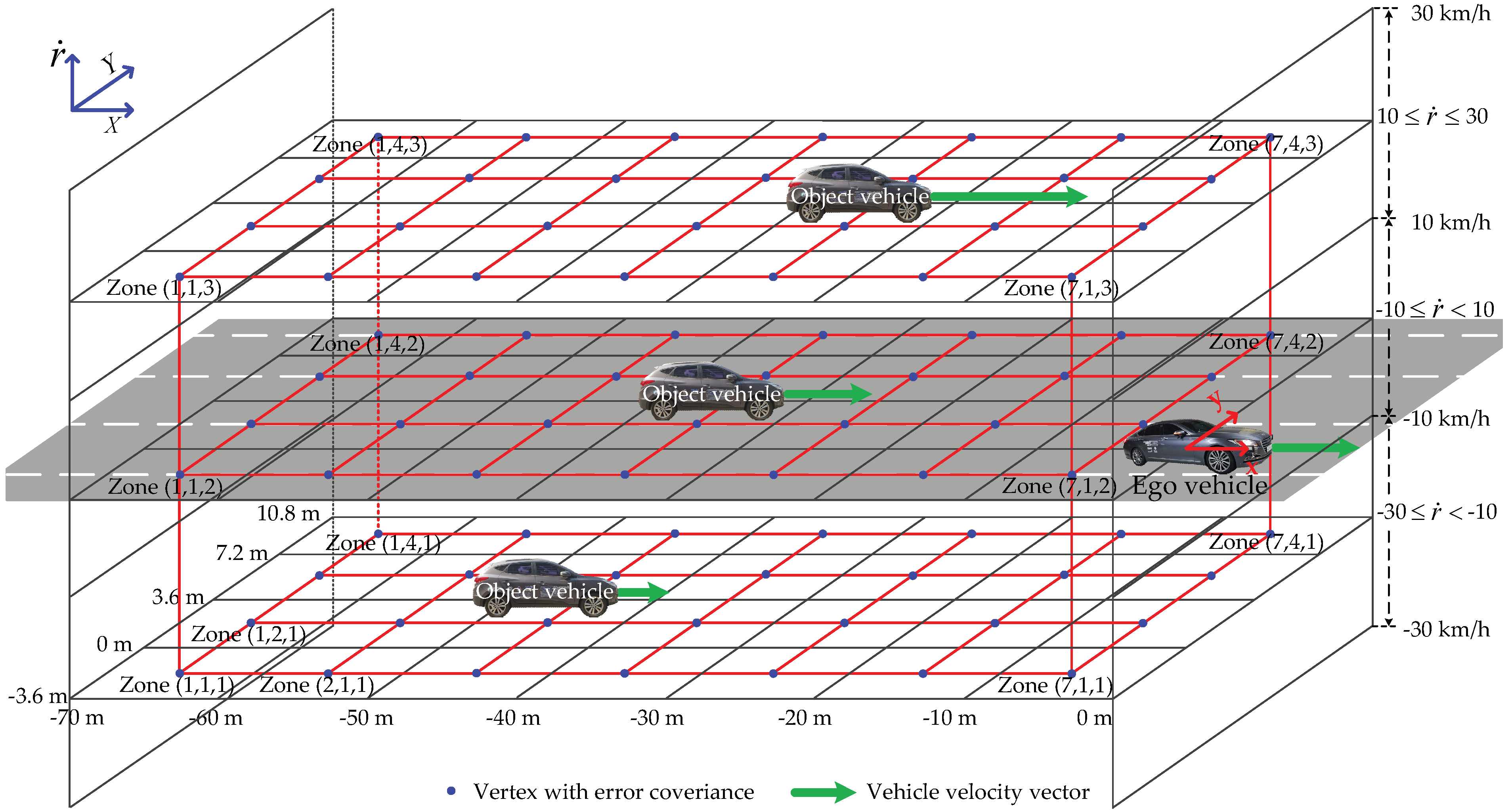

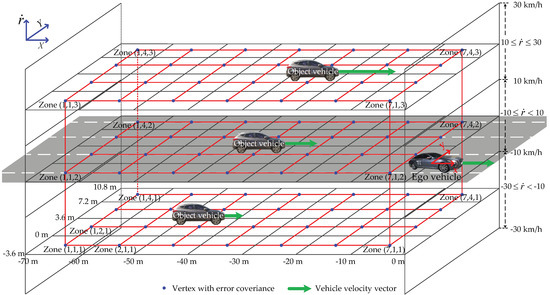

Therefore, we constructed example occupancy zones, as shown in Figure 2, taking into account the detectable area of the radar; the figure indicates the global coordinate frame, the ego vehicle coordinate frame, and the relative vehicle speed. The occupancy zones are divided along the x-axis (using multiples of the overall vehicle length), the y-axis (using the lane spacing), and the z-axis (based on experimental data analysis) of the vehicle coordinate frame. Here, the z-axis is divided into data sets by relative speed. The center point of each divided black quadrangle zone becomes a vertex of the red quadrangle (the feasible set for error covariance), and the error characteristics analyzed in each zone are stored at the corresponding vertex. As shown in Figure 1, the radar accuracy in each occupancy zone is analyzed by comparing the radar sensor data with ground truth (GT). An interpolated point on the object vehicle surface is obtained from the straight line connecting the ego vehicle's radar and the center point of the object vehicle's virtual polygon box. The real center point (ground truth) is calculated from the mount point of the differential global positioning system (DGPS). The longitudinal and lateral position errors are then obtained by comparing the interpolated point with this ground-truth center point.

Figure 2.

Example of a divided occupancy zone configuration: the occupancy zone is created by taking into account the detectable area of the radar, which is divided by the x-axis and y-axis based on the vehicle coordinate frame. The z-axis is divided by data sets for relative velocity. The center point of each divided black quadrangle zone becomes each vertex of the red quadrangle (feasible set for error covariance). Then, the error characteristics analyzed in each zone are stored in each vertex.
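To make the zone lookup concrete, the sketch below maps a radar measurement (longitudinal distance, lateral distance, longitudinal relative speed) to 1-based zone indices (m, n, s) and computes zone centers, which serve as the vertices of the feasible set. The zone edges are hypothetical placeholders (15 m longitudinal cells behind the ego vehicle, 3.5 m lanes, and speed bins mirroring the five velocity data sets analyzed in Section 5.2), not the boundaries used in the paper.

```python
import bisect

# Hypothetical zone edges for illustration only; the paper's actual boundaries are not given.
X_EDGES = [-70.0, -55.0, -40.0, -25.0, -10.0, 0.0]  # longitudinal [m], behind the ego vehicle
Y_EDGES = [-5.25, -1.75, 1.75, 5.25]                # lateral [m], 3.5 m lane width
V_EDGES = [-30.0, -20.0, -10.0, 10.0, 20.0, 30.0]   # longitudinal relative speed [km/h]


def bin_index(edges, value):
    """Return the 1-based bin containing value, or None if it lies outside the edges."""
    if value < edges[0] or value > edges[-1]:
        return None
    # bisect_right gives the number of edges <= value, which is the 1-based bin index.
    i = bisect.bisect_right(edges, value)
    # A value equal to the last edge is assigned to the last bin.
    return min(i, len(edges) - 1)


def zone_of(px, py, vx):
    """Map a radar measurement to zone indices (m, n, s), or None outside the grid."""
    idx = (bin_index(X_EDGES, px), bin_index(Y_EDGES, py), bin_index(V_EDGES, vx))
    return None if None in idx else idx


def zone_center(edges, index):
    """Center of a 1-based bin; zone centers act as vertices of the feasible set."""
    return 0.5 * (edges[index - 1] + edges[index])
```

With these placeholder edges, M = 5, N = 3, and S = 5; for example, a vehicle 42 m behind in the ego lane closing at 15 km/h falls in zone (2, 2, 4).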

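The model for object vehicle estimation can be expressed as a discrete-time state-space model, assuming that the vehicle moves with constant relative velocity in both the longitudinal and lateral directions [36]. With the state $\mathbf{x}_k = [p_{x,k},\ p_{y,k},\ v_{x,k},\ v_{y,k}]^\top$, the state-space model is defined as

$$
\mathbf{x}_{k+1} = A\,\mathbf{x}_k + \mathbf{w}_k, \qquad \mathbf{y}_k = C\,\mathbf{x}_k + \boldsymbol{\nu}_k^{(m,n,s)},
$$

where

$$
A = \begin{bmatrix} 1 & 0 & T_s & 0 \\ 0 & 1 & 0 & T_s \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}, \qquad C = I_4,
$$

with $m \in \{1, \dots, M\}$, $n \in \{1, \dots, N\}$, and $s \in \{1, \dots, S\}$, where $\mathbf{y}_k$ is the output vector at measurement instant $k$, $\mathbf{w}_k$ is the system noise, $\boldsymbol{\nu}_k^{(m,n,s)}$ is the radar measurement accuracy (measurement noise) in zone $(m,n,s)$, $T_s$ is the sampling period, $C$ is the identity matrix, $p_{x,k}$ is the longitudinal relative distance, $p_{y,k}$ is the lateral relative distance, $v_{x,k}$ is the longitudinal relative velocity, $v_{y,k}$ is the lateral relative velocity, $m$ is the longitudinal relative positional zone index, $n$ is the lateral relative positional zone index, $s$ is the zone index for relative velocity, $M$ is the number of zones along the x-axis, $N$ is the number of zones along the y-axis, and $S$ is the number of zones along the z-axis.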
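The sketch below writes out one predict/update cycle of a Kalman filter for a constant-relative-velocity model with an identity output matrix. It assumes the noise covariances do not couple the longitudinal and lateral axes, so the four-state model splits into two independent (position, velocity) filters; the 50 ms sampling period comes from Section 5.1, while the noise values in any call are placeholders. In the scheme described here, `R` would be the zone-dependent (or interpolated) radar accuracy covariance.

```python
T_S = 0.05  # sampling period [s]; 50 ms radar update period from Section 5.1

# Per-axis constant-velocity transition for the state [position, velocity].
A = [[1.0, T_S], [0.0, 1.0]]


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def mat_add(a, b):
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]


def transpose(a):
    return [[a[j][i] for j in range(2)] for i in range(2)]


def inv2(a):
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det], [-a[1][0] / det, a[0][0] / det]]


def kf_step(x, P, z, Q, R):
    """One predict/update cycle for one axis; z is the measured [position, velocity] (C = I)."""
    # Predict with the constant-velocity model.
    x_pred = [x[0] + T_S * x[1], x[1]]
    P_pred = mat_add(mat_mul(mat_mul(A, P), transpose(A)), Q)
    # Update: with C = I the innovation covariance is P_pred + R.
    K = mat_mul(P_pred, inv2(mat_add(P_pred, R)))
    innov = [z[0] - x_pred[0], z[1] - x_pred[1]]
    x_new = [x_pred[i] + K[i][0] * innov[0] + K[i][1] * innov[1] for i in range(2)]
    I_minus_K = [[1.0 - K[0][0], -K[0][1]], [-K[1][0], 1.0 - K[1][1]]]
    P_new = mat_mul(I_minus_K, P_pred)
    return x_new, P_new
```

A smaller `R` for a zone pulls the estimate closer to the radar measurement there, which is how zone-specific accuracy enters the filter.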

Assumption 1.

Radar measurement accuracy has a zero-mean white Gaussian distribution in each zone [37]. The radar measurement accuracy covariance is determined by the characteristics of the sensor, and in each zone it is set based on the analyzed error characteristics. The radar sensor is calibrated in each zone so that the mean position error becomes zero. Therefore, the zero-mean radar error in zone $(m,n,s)$ becomes

$$
\mathbf{e}^{(m,n,s)} = \begin{bmatrix} p_{x,\mathrm{R}} - p_{x,\mathrm{GT}} \\ p_{y,\mathrm{R}} - p_{y,\mathrm{GT}} \end{bmatrix}, \qquad \mathbb{E}\big[\mathbf{e}^{(m,n,s)}\big] = \mathbf{0},
$$

and its covariance is

$$
R^{(m,n,s)} = \mathbb{E}\Big[\mathbf{e}^{(m,n,s)} \big(\mathbf{e}^{(m,n,s)}\big)^{\top}\Big],
$$

where subscript GT represents the ground truth data and subscript R represents the calibrated radar data. Since radar accuracy covariance values are not easy to obtain for every driving situation, we applied covariance values experimentally based on the method presented in [38]. The experimental analysis results with the calibrated radar accuracy are shown in Section 5.2.
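A minimal sketch of the per-zone calibration in Assumption 1: compute the radar-minus-ground-truth position errors of the samples that fall in one zone, treat their mean as the calibration offset, and take the sample covariance of what remains. The sign convention (radar minus ground truth), the unbiased n − 1 normalization, and the function name are assumptions for illustration; the sample values in the test are made up.

```python
def zone_error_stats(radar_xy, gt_xy):
    """Return (mean offset, 2x2 covariance) of radar-minus-GT position errors in one zone."""
    n = len(radar_xy)
    if n < 2 or n != len(gt_xy):
        raise ValueError("need at least two paired samples")
    errs = [(r[0] - g[0], r[1] - g[1]) for r, g in zip(radar_xy, gt_xy)]
    mean = (sum(e[0] for e in errs) / n, sum(e[1] for e in errs) / n)
    # Calibration: removing the mean makes the residual error zero-mean.
    centered = [(e[0] - mean[0], e[1] - mean[1]) for e in errs]
    cxx = sum(c[0] * c[0] for c in centered) / (n - 1)
    cyy = sum(c[1] * c[1] for c in centered) / (n - 1)
    cxy = sum(c[0] * c[1] for c in centered) / (n - 1)
    return mean, [[cxx, cxy], [cxy, cyy]]
```

Running this once per zone offline yields the table of covariances that is later stored at the zone vertices.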

Remark 1.

With the radar measurement accuracy covariance fixed as described above, the system noise covariance is tuned through the KF so that the estimation error in each zone approaches its minimum [39]. The resulting system noise covariance is then set for each zone.

4. Object Tracking with Weighted Interpolation

4.1. Estimation with Error Characteristic

Weighted interpolation in the occupancy zone is applied to state estimation, taking the error characteristics into account. It resolves the ambiguity that arises when the object moves from one zone to another, which is a consequence of dividing the detection area into occupancy zones. To apply weighted interpolation, we create a feasible set, denoted by the red quadrangle, whose vertices are the center points of the black quadrangle zones in Figure 2. The center point of each zone is a vertex of the feasible set for error covariance, and the zone layout takes the lane width into account. The data-driven covariance calculated in the previous section is stored at each zone's vertex. This process is carried out offline using data analyzed in advance.

In online computation, the object vehicle positions $p_x$ and $p_y$ and the relative speed $v_x$ are given by the radar sensor. The data-driven covariance stored at the vertices is then applied to state estimation. The three-dimensional parameter vector $\boldsymbol{\theta} = [p_x,\ p_y,\ v_x]^\top$ can be represented in the polytopic form [15,40,41]:

$$
\boldsymbol{\theta} = \sum_{i=1}^{8} \alpha_i \boldsymbol{\theta}_i,
$$

where

$$
\boldsymbol{\alpha} = [\alpha_1, \dots, \alpha_8]^\top
$$

denotes a weighted interpolation parameter vector satisfying $\alpha_i \ge 0$, $\sum_{i=1}^{8} \alpha_i = 1$, and

$$
\boldsymbol{\theta}_i \in \Theta, \quad i = 1, \dots, 8,
$$

denotes the zone vertices. When selecting the vertices $\boldsymbol{\theta}_i$, we chose the eight vertices in the feasible set $\Theta$ closest to the $p_x$, $p_y$, and $v_x$ measured by the radar, as shown in Figure 2. Then, we obtain

$$
\alpha_i = \frac{\bar{d}_i}{\sum_{j=1}^{8} \bar{d}_j},
$$

where $\bar{d}_i = 1/d_i$, in which $d_i$ is the Euclidean distance between vertex $\boldsymbol{\theta}_i$ and the point $(p_x, p_y, v_x)$ measured by the radar. Using the interpolation parameters $\alpha_i$ with the parameter vector at the eight vertices, we can find an approximate data-driven covariance from the precomputed data-driven covariance $R_i$ obtained under Assumption 1 at each vertex. The approximate data-driven covariance is expressed as follows:

$$
\tilde{R} = \sum_{i=1}^{8} \alpha_i R_i.
$$

From the KF using $\tilde{R}$, we can obtain the estimated object vehicle positions $\hat{p}_x$ and $\hat{p}_y$ [39,42]. This approach keeps the computational cost low because it uses only eight vertices rather than all the zones' vertices. Here, we describe the covariance related to position for object vehicle estimation; the covariance related to velocity can be handled in a similar way, as in [14].
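The online step of this subsection can be sketched as follows: pick the eight stored vertices nearest to the measured (longitudinal distance, lateral distance, relative speed), weight them by normalized inverse Euclidean distance, and blend the 2x2 position covariances stored at those vertices. The inverse-distance weight form, the small `eps` that guards a zero distance, and the function name are assumptions for illustration; the weights are non-negative and sum to one, as the polytopic form requires.

```python
import math


def interpolate_covariance(point, vertices, covariances, k=8, eps=1e-9):
    """Blend per-vertex 2x2 covariances with normalized inverse-distance weights.

    point: measured (p_x, p_y, v_x); vertices: zone centers (p_x, p_y, v_x);
    covariances: 2x2 matrices stored at those vertices, in the same order.
    """
    dists = [math.dist(point, v) for v in vertices]
    nearest = sorted(range(len(vertices)), key=lambda i: dists[i])[:k]
    inv = [1.0 / (dists[i] + eps) for i in nearest]
    total = sum(inv)
    weights = [w / total for w in inv]  # non-negative and summing to one
    blended = [[0.0, 0.0], [0.0, 0.0]]
    for w, i in zip(weights, nearest):
        for r in range(2):
            for c in range(2):
                blended[r][c] += w * covariances[i][r][c]
    return blended, weights
```

Because meters and km/h are mixed in one distance, scaling the three axes before computing distances may be worth considering; the text does not specify how the axes are weighted.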

4.2. Latency Coordination

To solve the aforementioned latency problem, weighted interpolation is applied to state estimation, as in the previous subsection. As stated above, a radar's latency varies with the relative velocity. The velocity region is divided, and the average position error due to latency in each velocity set is stored at a vertex. The detailed data analysis is provided in Section 5.2.

The object vehicle's longitudinal relative velocity $v_x$ and lateral relative velocity $v_y$ are given by the radar sensor. The average position error due to latency stored at the vertex of each velocity region is then applied to state estimation. The two-dimensional parameter vector $\boldsymbol{\phi} = [v_x,\ v_y]^\top$ can be represented in the polytopic form:

$$
\boldsymbol{\phi} = \sum_{i=1}^{2} \beta_i \boldsymbol{\phi}_i,
$$

where

$$
\boldsymbol{\beta} = [\beta_1,\ \beta_2]^\top
$$

denotes the weighted interpolation parameter vector satisfying $\beta_i \ge 0$, $\beta_1 + \beta_2 = 1$, and

$$
\boldsymbol{\phi}_i \in \Phi, \quad i = 1, 2,
$$

denotes the vertices. From the relative velocities $v_x$ and $v_y$ measured by the radar, we chose the two vertices of the feasible set $\Phi$ that match the relative velocity data set. Then, we obtain

$$
\beta_i = \frac{\bar{d}_i}{\bar{d}_1 + \bar{d}_2},
$$

where $\bar{d}_i = 1/d_i$, in which $d_i$ is the Euclidean distance between vertex $\boldsymbol{\phi}_i$ and the relative velocity point $(v_x, v_y)$ measured by the radar. Using the interpolation parameters $\beta_i$ for the relative velocity at the two vertices, we can find an approximate position error by interpolating the precomputed average position errors $\bar{\mathbf{e}}_i$ stored at the vertices:

$$
\tilde{\mathbf{e}} = \sum_{i=1}^{2} \beta_i \bar{\mathbf{e}}_i = \begin{bmatrix} \tilde{e}_x \\ \tilde{e}_y \end{bmatrix}.
$$

Thus, the approximate position error can be calculated for any given relative velocities $v_x$ and $v_y$. The approximate position error $\tilde{\mathbf{e}}$ and the KF estimates $\hat{p}_x$ and $\hat{p}_y$ from the previous subsection directly determine the estimated approximate positions $\check{p}_x$ and $\check{p}_y$:

$$
\check{p}_x = \hat{p}_x - \tilde{e}_x, \qquad \check{p}_y = \hat{p}_y - \tilde{e}_y,
$$

where the position error is taken as radar minus ground truth. The estimated approximate positions $\check{p}_x$ and $\check{p}_y$ are then applied to object vehicle tracking.
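A sketch of the longitudinal latency coordination: one average error is stored per velocity data set, the two stored vertices that bracket the measured longitudinal relative speed are blended with normalized inverse-distance weights, and the blended error is subtracted from the KF position estimate. Assumptions: the vertices sit at the midpoints of the five velocity data sets, the error values are the averages reported in Section 5.2 used as example data, the error is defined as radar minus ground truth, and the lateral term is omitted per Remark 3. The function names are hypothetical.

```python
import bisect

# Assumed vertices: midpoints of the five velocity data sets [km/h], paired with the
# average longitudinal position errors reported in Section 5.2 [m].
V_VERTICES = [-25.0, -15.0, 0.0, 15.0, 25.0]
E_VERTICES = [1.968, 0.709, 0.018, -0.511, -1.793]


def latency_error(vx_kmh, eps=1e-9):
    """Blend the stored errors of the two vertices that bracket vx_kmh."""
    if vx_kmh <= V_VERTICES[0]:
        return E_VERTICES[0]   # clamp below the lowest vertex
    if vx_kmh >= V_VERTICES[-1]:
        return E_VERTICES[-1]  # clamp above the highest vertex
    j = bisect.bisect_right(V_VERTICES, vx_kmh)
    i = j - 1
    # Normalized inverse-distance weights over the two bracketing vertices.
    wi = 1.0 / (abs(vx_kmh - V_VERTICES[i]) + eps)
    wj = 1.0 / (abs(vx_kmh - V_VERTICES[j]) + eps)
    return (wi * E_VERTICES[i] + wj * E_VERTICES[j]) / (wi + wj)


def coordinate_latency(px_kf, vx_kmh):
    """Correct the KF longitudinal estimate, assuming error = radar - ground truth."""
    return px_kf - latency_error(vx_kmh)
```

With only two vertices on a line, normalized inverse-distance weights reduce to ordinary linear interpolation, since each weight equals the distance to the other vertex divided by the total distance.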

5. Application

We experimentally validated how useful the proposed data-driven weighted interpolation algorithm is when applied to object vehicle estimation of an autonomous vehicle.

5.1. Experimental Setup

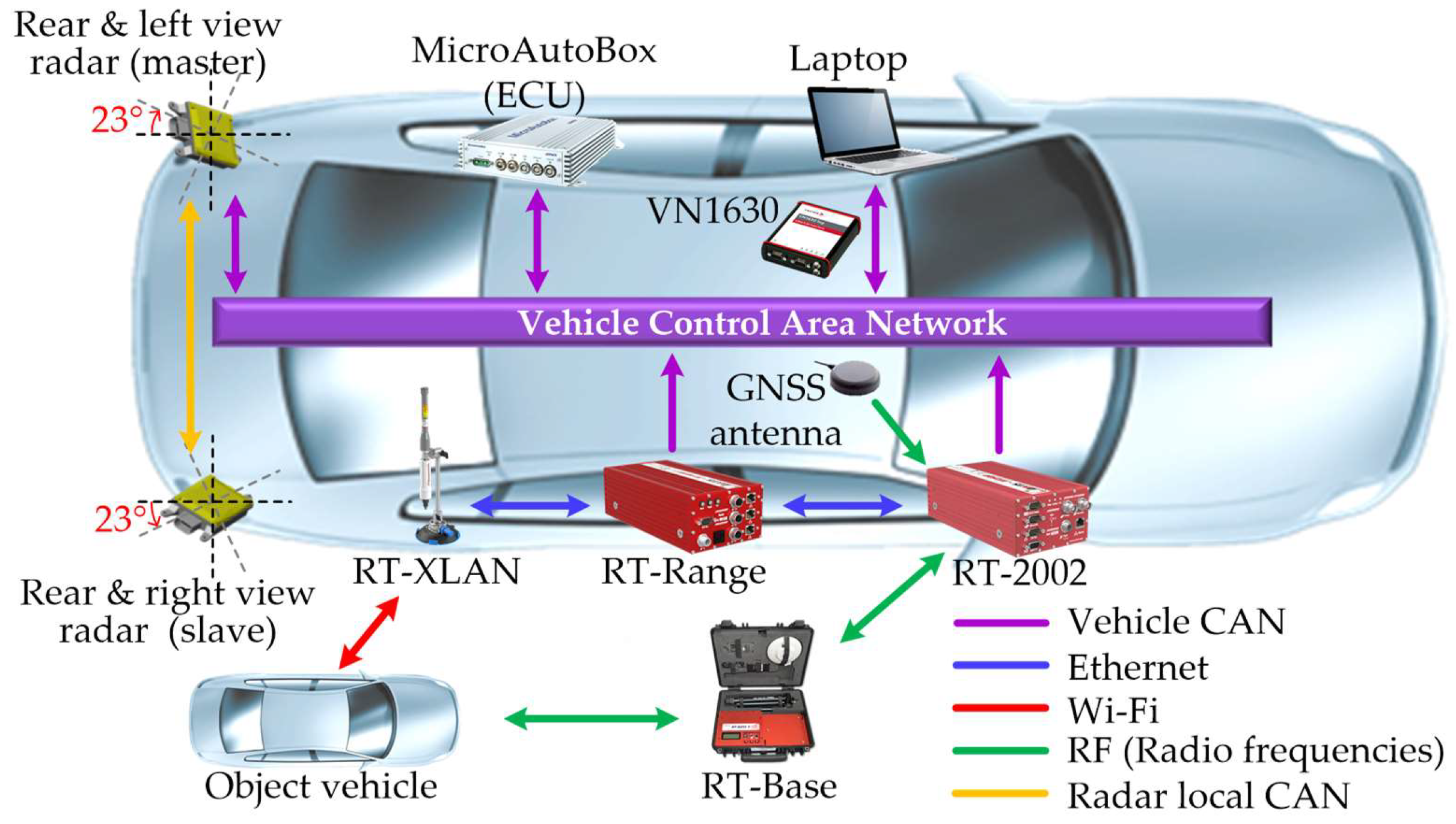

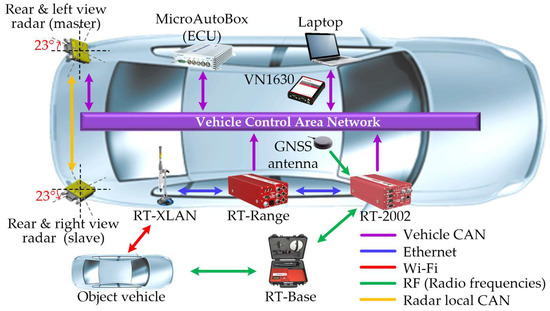

For the experimental setup shown in Figure 3, the ego and object vehicles were a Hyundai Genesis DH and a Hyundai Tucson IX, respectively, as shown in Figure 4. The rear-left and rear-right radars, connected in a master-slave configuration via a local radar controller area network (CAN), were mounted on both sides of the rear of the ego vehicle and rotated 23 degrees outward.

Figure 3.

Hardware configuration of the experimental setup.

Figure 4.

Vehicles used for experiment: (a) ego vehicle: Genesis DH from Hyundai and (b) object vehicle: Tucson IX from Hyundai.

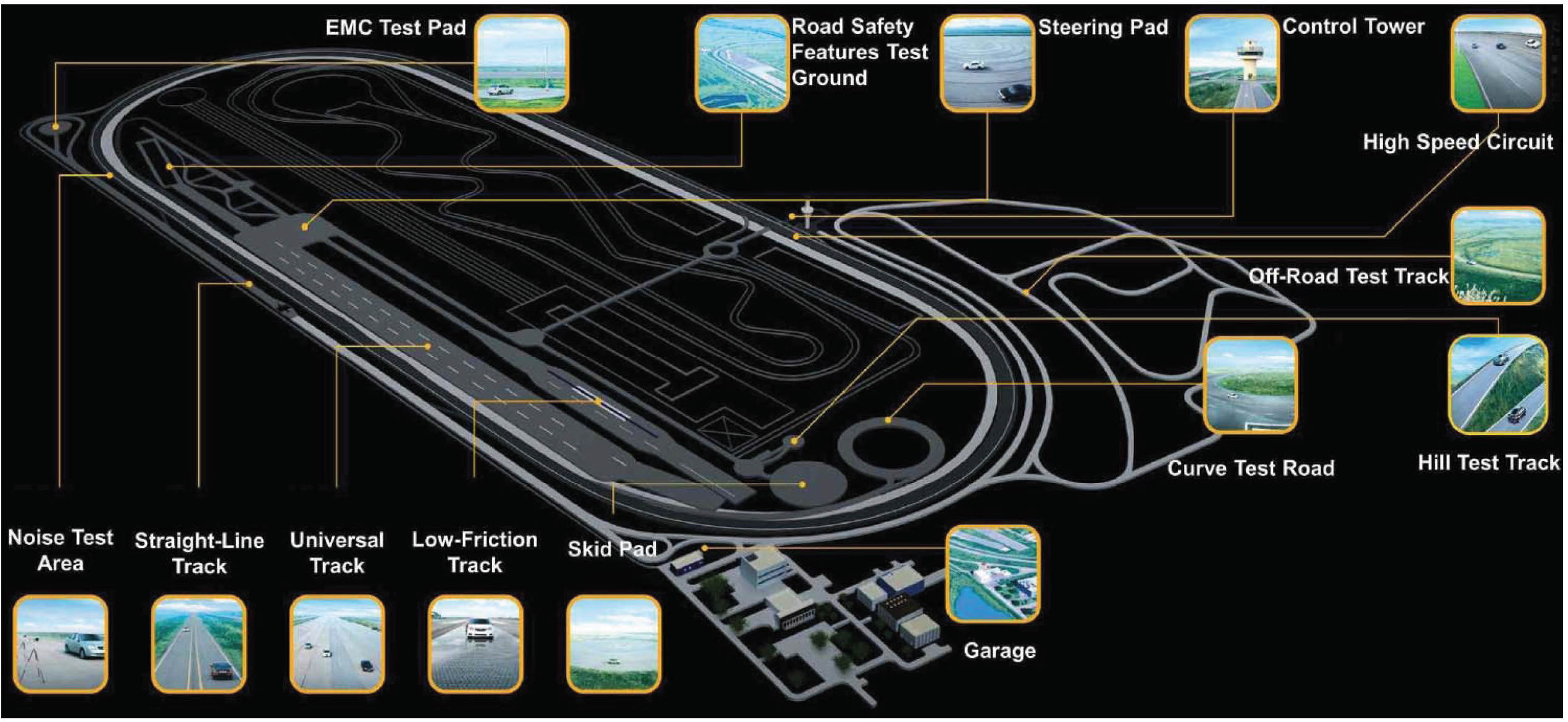

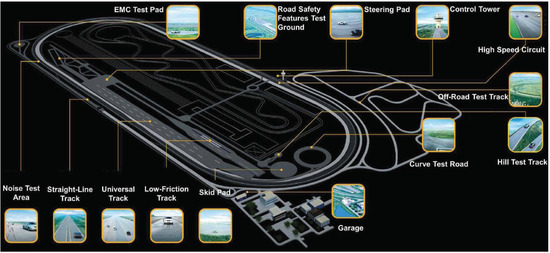

The radar was a 24 GHz BSD radar from Mando-Hella Electronics Corp. (Incheon, South Korea) with an update period of 50 ms and a detection range of up to 70 m. The ground truth data were collected with an update period of 10 ms using a DGPS from OxTS (RT-2002, RT-Range, global navigation satellite system (GNSS) antenna, RT-XLAN, and RT-Base) with a real-time kinematic (RTK) positioning service (0.01 m accuracy). The object vehicle's ground truth data were collected through the RT-Range and RT-XLAN Wi-Fi link. Radar and DGPS data were collected with a dSPACE MicroAutoBox, analyzed with Vector's CANoe and a VN1630 interface, and evaluated using MATLAB/Simulink. The data were recorded while the ego and object vehicles were driven manually on the high-speed circuit of the Korea Automobile Testing & Research Institute (KATRI) in South Korea, as shown in Figure 5.

Figure 5.

Test road: Korea Automobile Testing & Research Institute (KATRI).
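Because the DGPS ground truth (10 ms) and the radar (50 ms) run at different rates, each radar sample has to be paired with a ground-truth value at the same instant before position errors can be computed. The text does not describe this alignment step; the sketch below assumes linear interpolation of the ground-truth signal at each radar timestamp, with timestamps in milliseconds and hypothetical function names.

```python
import bisect


def gt_at(t, gt_times, gt_values):
    """Linearly interpolate a ground-truth signal at time t (gt_times sorted ascending)."""
    if not gt_times[0] <= t <= gt_times[-1]:
        raise ValueError("radar timestamp outside the ground-truth record")
    j = bisect.bisect_left(gt_times, t)
    if gt_times[j] == t:
        return gt_values[j]
    i = j - 1
    frac = (t - gt_times[i]) / (gt_times[j] - gt_times[i])
    return gt_values[i] + frac * (gt_values[j] - gt_values[i])


def pair_samples(radar_times, radar_values, gt_times, gt_values):
    """Return (radar, ground truth) pairs aligned at the radar timestamps."""
    return [(r, gt_at(t, gt_times, gt_values)) for t, r in zip(radar_times, radar_values)]
```

Linear interpolation is a reasonable default at a 10 ms ground-truth period; it also ignores any transmission delay in the Wi-Fi link, which would need separate handling if present.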

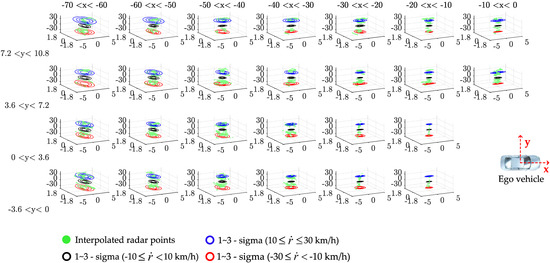

5.2. Radar Accuracy Analysis

The radar accuracy was analyzed by occupancy zone, as shown in Figure 6, by comparing the DGPS and radar data in each divided zone. Each zone shows the probability density function contour of the normal distribution based on DGPS, plotted in blue (10 to 30 km/h), black (−10 to 10 km/h), and red (−30 to −10 km/h) for each speed data set. The radar accuracy differed depending on the relative distance and speed: the error increased as the relative distance and relative velocity between the ego vehicle and the object vehicle increased, and measurement uncertainty depending on the relative distance was observed [14,16]. We collected data in various real driving situations. The radar accuracy analysis was based on a total of 193,324 samples, and the longitudinal relative velocity between the two vehicles ranged from about −30 to 30 km/h.

Figure 6.

Data-driven radar accuracy analysis in the divided occupancy zone: each zone shows the probability density function contour (1σ, 2σ, and 3σ) of the normal distribution for the radar's position error based on differential global positioning system (DGPS). Contour lines are shown in blue, black, and red for each speed data set.

Remark 2.

As the amount of calibrated sensor data increases, the distribution of the measurement noise approaches a Gaussian distribution, as shown in Figure 6. Therefore, the system performs better with more calibrated sensor data. For measurement noise that is not Gaussian, see [43].

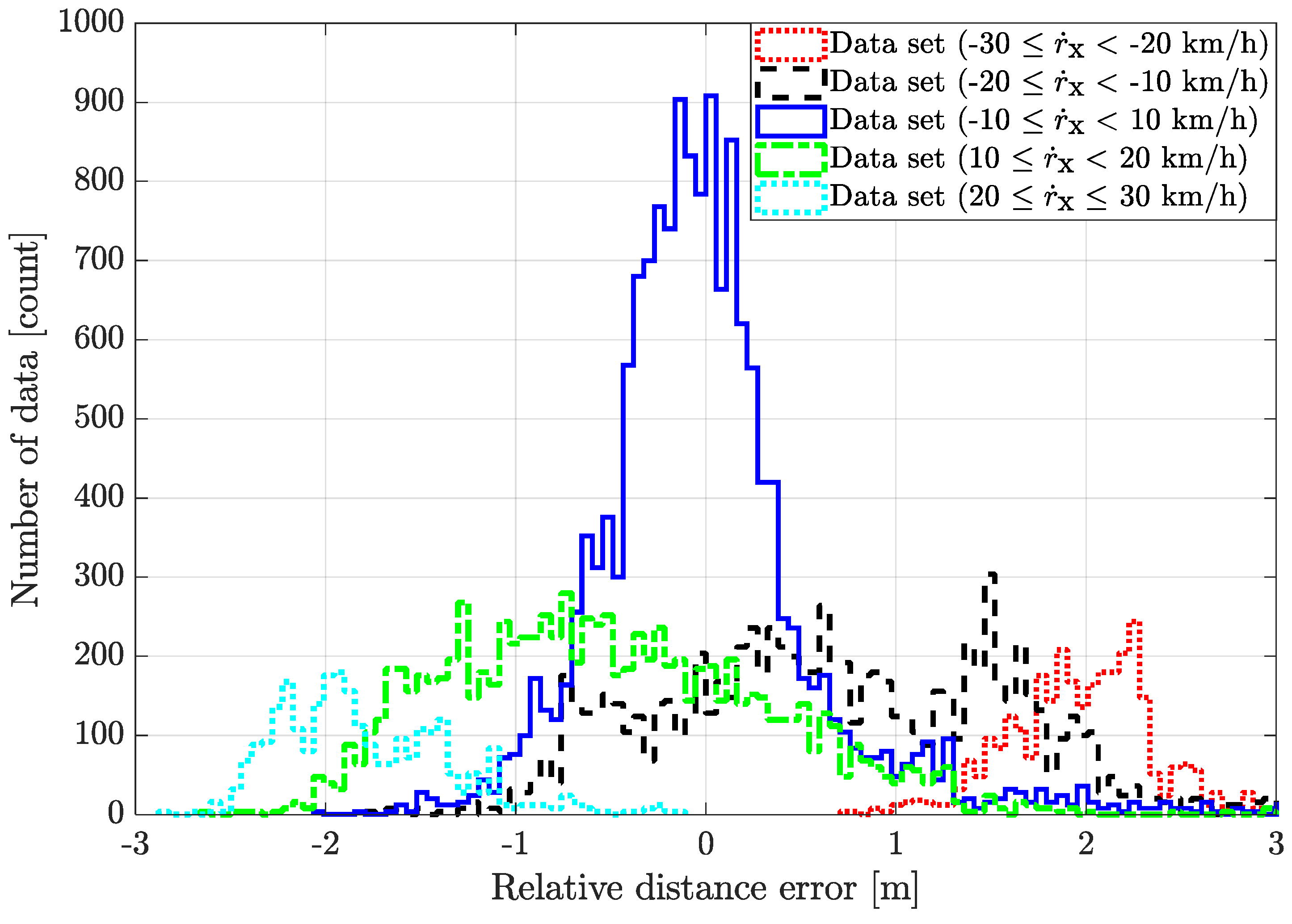

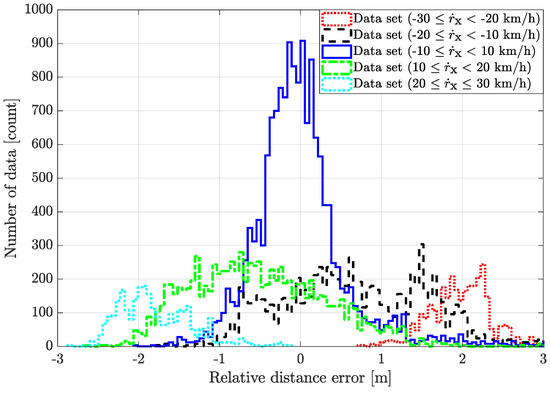

The longitudinal position error, which increases with relative velocity, was due to the latency of the radar, as shown in Figure 7. The average position error of each velocity data set was analyzed as follows:

Figure 7.

Histogram of the radar relative distance error depending on the vehicle speed.

- (i) The average position error of the data set (−30 to −20 km/h) is 1.968 m.
- (ii) The average position error of the data set (−20 to −10 km/h) is 0.709 m.
- (iii) The average position error of the data set (−10 to 10 km/h) is 0.018 m.
- (iv) The average position error of the data set (10 to 20 km/h) is −0.511 m.
- (v) The average position error of the data set (20 to 30 km/h) is −1.793 m.

The calculated position error average by latency was stored at each vertex of the velocity regions. Based on the analysis results, the position error and covariance values of each zone’s error were obtained for the filter design.
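As a rough consistency check (a back-of-the-envelope reading, not an analysis from the paper), dividing each average error by a representative relative speed gives the delay that would produce it if the radar simply reported a position that was a fixed time old. Taking each data set's midpoint speed as representative is an assumption, and the central data set is skipped because its midpoint speed is zero.

```python
# Average longitudinal errors from Section 5.2, keyed by assumed midpoint speed [km/h].
AVG_ERROR_M = {-25.0: 1.968, -15.0: 0.709, 15.0: -0.511, 25.0: -1.793}


def implied_delay_s(v_kmh, err_m):
    """Delay tau such that err = -v * tau, i.e., the radar lags ground truth by tau."""
    v_ms = v_kmh / 3.6  # km/h to m/s
    return -err_m / v_ms


for v, e in AVG_ERROR_M.items():
    print(f"{v:+.0f} km/h -> {implied_delay_s(v, e):.2f} s")
```

The implied delays come out positive for every data set and range from about 0.12 s to 0.28 s, growing with the magnitude of the relative speed, which is consistent with the observation that latency increases with the speed difference between the two vehicles.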

Note that Figure 6 and Figure 7 show results that differ from what is generally understood, so careful attention is required. A radar's point cloud data are accurate in the longitudinal direction and inaccurate in the lateral direction; this holds for the radar's raw data. However, raw point cloud data are difficult for upper-level users to work with. Commercial radars therefore represent each object as a single tracking point through an estimation algorithm (e.g., point cloud clustering, segmentation, single sensor tracking, multilateration, classification, and filtering) [7,8,14,35], and this processing introduces latency (processing delay). The greater the speed difference between the ego vehicle and the object vehicle, the greater the latency, and in a vehicle application with longitudinal velocity, this latency appears as a longitudinal error. As a result, contrary to the general idea that the radar's relative longitudinal distance is more accurate than its relative lateral distance, the experimental comparison with DGPS shows that the longitudinal direction is less accurate than the lateral direction: as the relative speed increases, the longitudinal error increases. Therefore, anyone designing an upper-level application needs to improve the radar accuracy, which is why we used object vehicle estimation with radar accuracy modeling.

Remark 3.

The latency-induced error associated with the relative lateral velocity is insignificant and is therefore not considered [44]. If needed, it can be calculated in the same way as the position error due to latency in the longitudinal direction.

5.3. Scenario-Based Experimental Result

An object vehicle tracking scenario is constructed using data-driven object vehicle estimation with a radar sensor. For a comparative study of object vehicle tracking, we collected radar data while the object vehicle was driving in the detectable area of the rear left radar of the ego vehicle. As stated above, we determined the error characteristics in the occupancy zone by analyzing radar accuracy. Then, the approximate object estimation data were obtained by the data-driven weighted interpolation process using error characteristics data. When using weighted interpolation, the interpolation parameter vector was designed to satisfy and for numerical stability, where and are small values. The proposed process improves the estimation performance of the commercial radar and the previously studied interpolation method [15].

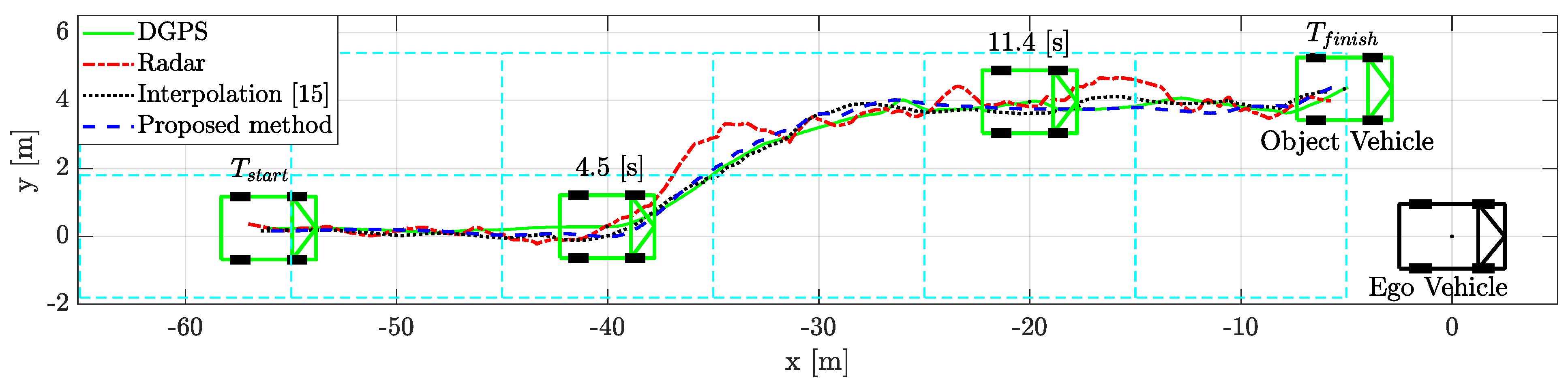

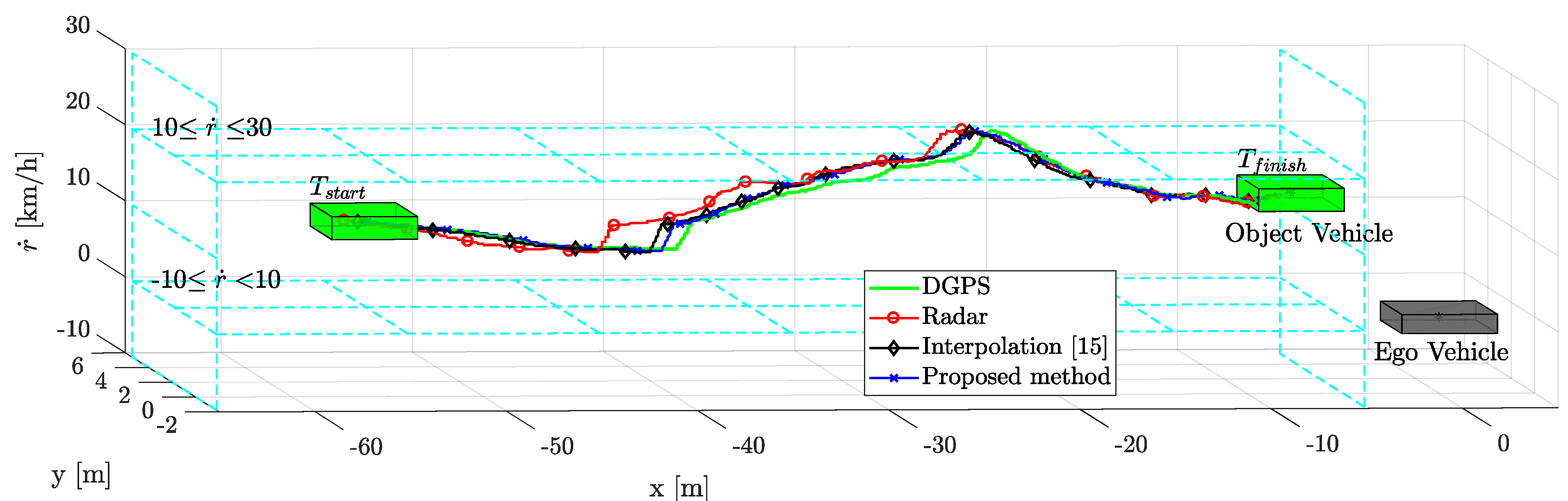

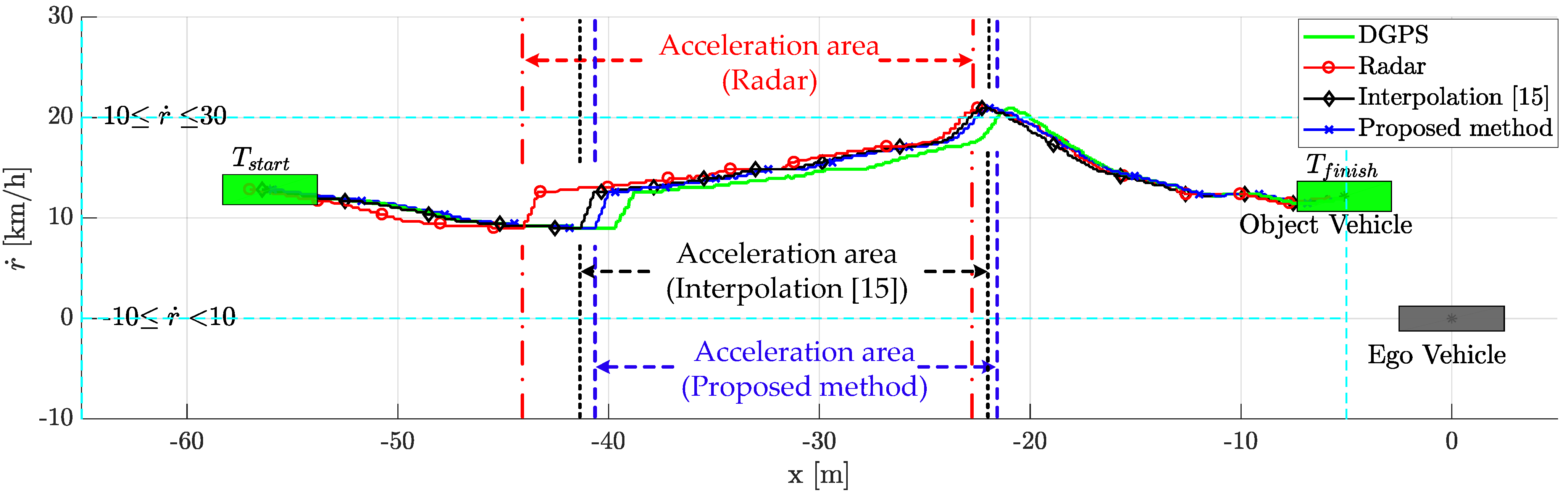

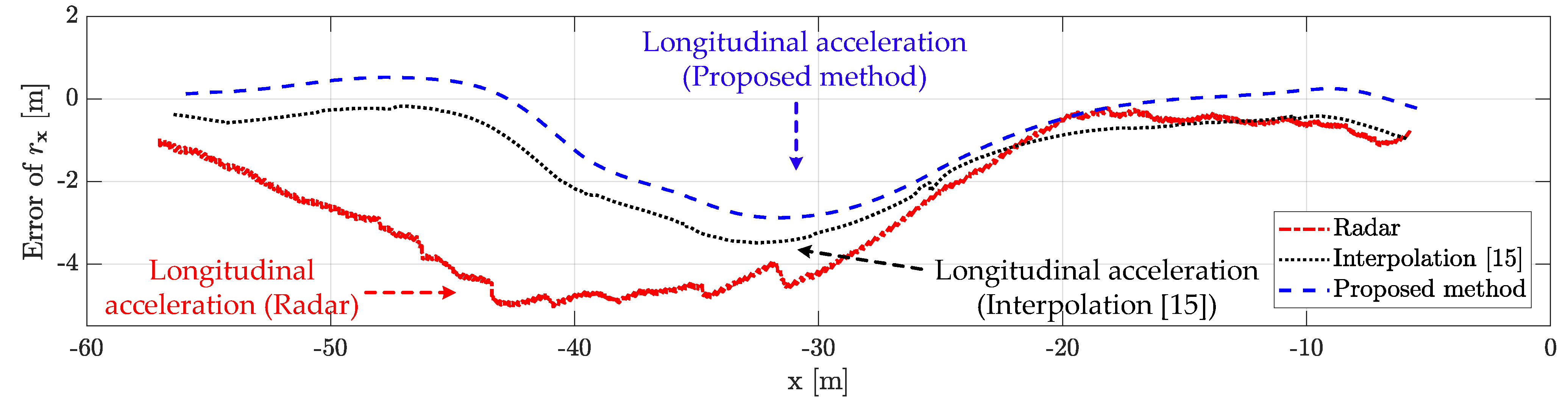

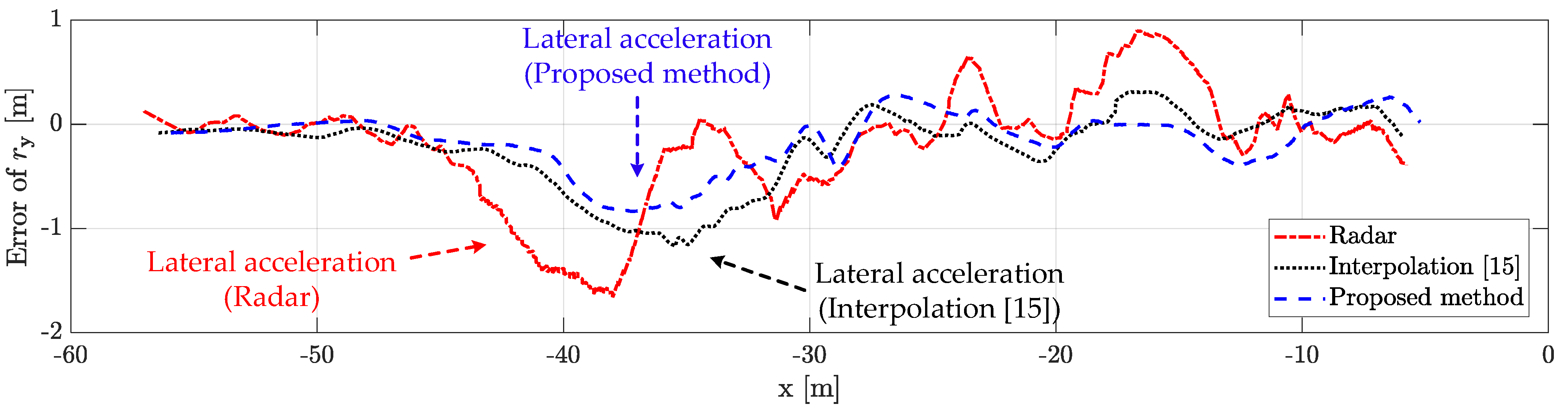

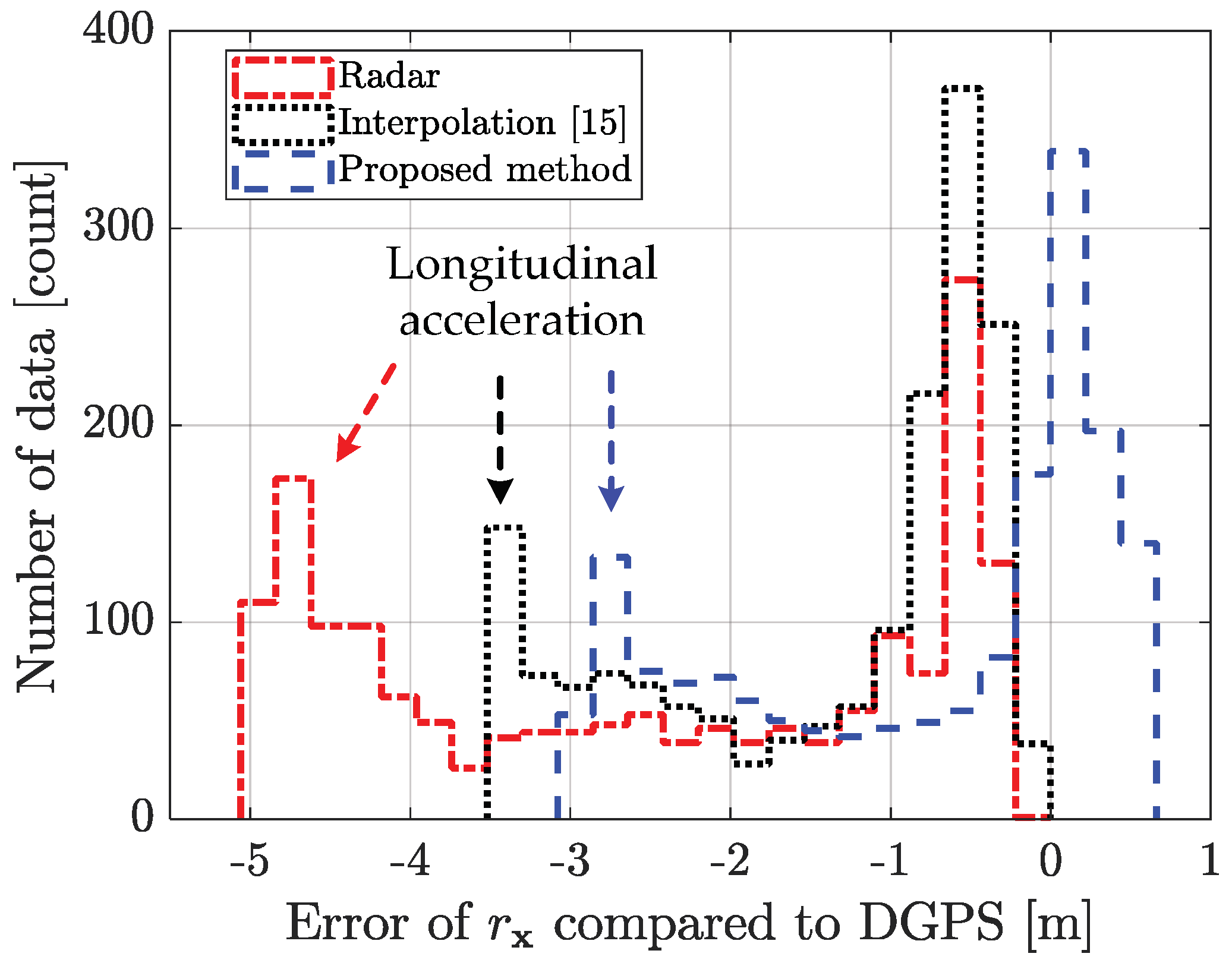

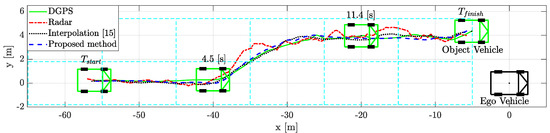

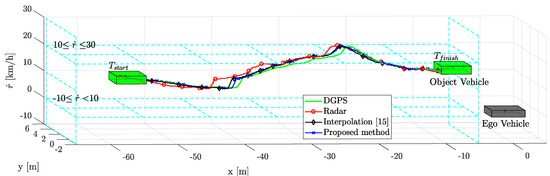

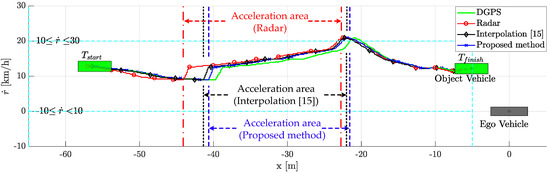

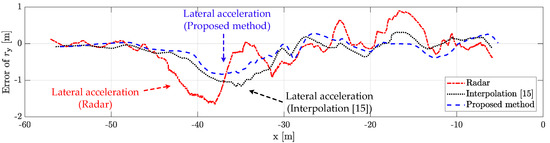

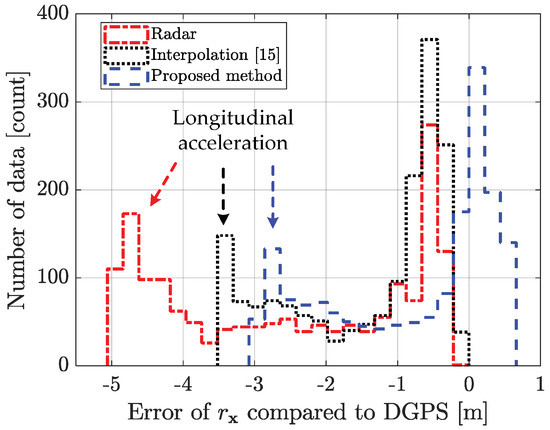

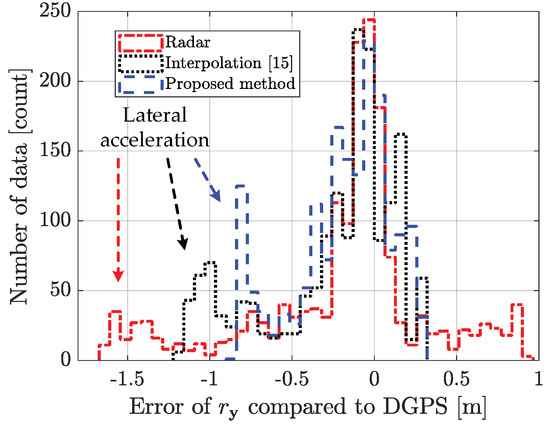

The comparative results of the tracking performance are shown in Figure 8. The proposed weighted interpolation scheme improved object vehicle tracking performance by reducing the estimation error. The scenario-based relative movement of the tracked object is plotted in the top view. The estimate of the proposed weighted interpolation scheme closely followed the DGPS ground truth, whereas object tracking with the commercial radar had a larger tracking error because of measurement uncertainty and radar latency. With DGPS as the reference, the root mean square errors (RMSEs) of the commercial radar and the proposed method are 3.04 m and 1.29 m in the longitudinal position and 0.57 m and 0.32 m in the lateral position, respectively. When estimated with the commercial radar, there is a longitudinal position error of about −5 m and a lateral position error of about −1.5 m between −43 m and −38 m. This is because the latency significantly affects the longitudinal position error; this error is also affected by the measurement uncertainty and the relative acceleration, whose influence is described in detail in Section 5.4. In addition, there is a lateral position error of about 1 m between −17 m and −15 m, which is due to measurement uncertainty. Figure 9 shows object tracking in a 3D view that includes the relative velocity. The object vehicle changed lanes while increasing speed to overtake the ego vehicle. The proposed method outperforms the conventional radar estimation method and the previous interpolation method [15], because the previous interpolation method does not consider the relative speed. The position error is examined in more detail in the next subsection, with Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14.
The proposed weighted interpolation scheme reflects the average position error and covariance for the relative speed, even when there is speed variation. We observed that the proposed weighted interpolation scheme is robust against speed variation and that it outperforms the tracking performance of the commercial radar.
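For reference, the sketch below computes a per-axis RMSE against time-aligned DGPS samples, assuming the standard definition (the text does not state its exact formula). The function name and the sample values in the test are illustrative only.

```python
import math


def rmse(estimates, ground_truth):
    """Root mean square error between two equal-length sequences."""
    if len(estimates) != len(ground_truth) or not estimates:
        raise ValueError("sequences must be non-empty and of equal length")
    sq = [(e - g) ** 2 for e, g in zip(estimates, ground_truth)]
    return math.sqrt(sum(sq) / len(sq))
```

Calling it separately on the longitudinal and lateral coordinates gives one figure per axis, matching how the comparison above is reported.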

Figure 8.

Scenario-based relative movement of object estimation from the top view: when estimated with a commercial radar, the longitudinal and lateral position errors (between −37 and −33 m) and lateral position error (between −17 and −15 m) occurred due to latency and measurement uncertainty.

Figure 9.

Scenario-based relative movement of object estimation with relative speed in a 3D view.

Figure 10.

Scenario-based relative movement for relative longitudinal distance and relative speed replotted from Figure 9: there is an acceleration area because the object vehicle changes lanes with increasing speed to overtake the ego vehicle. After that, the relative speed decreases.

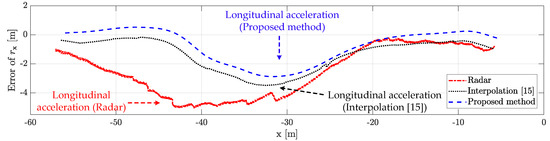

Figure 11.

Longitudinal distance error for scenario-based object estimation: the proposed method outperforms the conventional radar estimation method and the previous interpolation method. However, there is a longitudinal error in all methods due to latency for longitudinal acceleration in the acceleration area.

Figure 12.

Lateral distance error for scenario-based object estimation: the proposed method outperforms the conventional radar estimation method and the previous interpolation method. When measured with radar, the lateral position error is heavily influenced by the measurement uncertainty. However, there is a lateral position error in all methods due to latency for lateral acceleration via the lane change motion of the object vehicle.

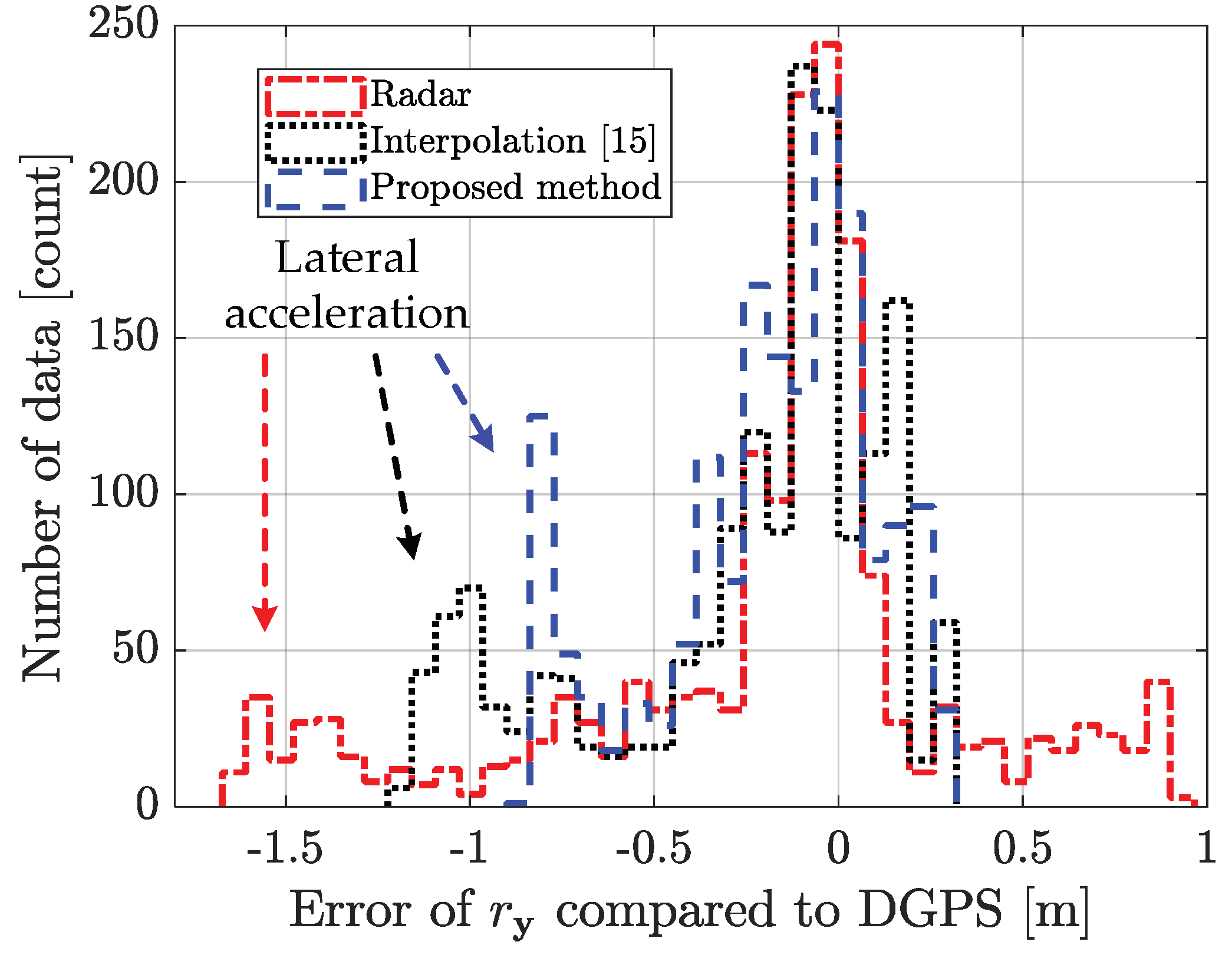

Figure 13.

Histogram of the longitudinal distance error for scenario-based object estimation: using the weighted interpolation method improves the estimation performance statistically. However, there is a longitudinal error due to latency for longitudinal acceleration in the acceleration area.

Figure 14.

Histogram of the lateral distance error for scenario-based object estimation: using the weighted interpolation method improves the estimation performance statistically. However, there is a lateral position error due to latency for lateral acceleration via the lane change motion of the object vehicle.

5.4. Performance Analysis with Limitation

The proposed method outperforms the conventional radar estimation method and the previously researched interpolation method [15]. The performance for the scenario-based experiment is shown in Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14. Figure 10 shows the relative longitudinal distance (x-axis) and relative velocity (y-axis) from Figure 9. There are acceleration areas (areas of increasing relative speed) for the radar, the previously researched interpolation method, and the proposed method. Figure 11 and Figure 12 show the longitudinal and lateral position errors as functions of the x-axis position. Compared to DGPS, the proposed method has a smaller position error than both the conventional radar estimation method and the previous interpolation method. The previous interpolation method still exhibits measurement uncertainty and speed-dependent latency errors because it does not consider speed. When measured with radar, the lateral position error is heavily influenced by the measurement uncertainty. Figure 13 and Figure 14 show histograms of the longitudinal and lateral relative position errors, respectively. The proposed method reduces both the longitudinal errors due to latency and the lateral errors due to measurement uncertainty.

However, all methods show a lateral position error caused by latency under the lateral acceleration of the object vehicle's lane change. Although the weighted interpolation method improves the estimation performance, the proposed method has a limitation: the radar accuracy model is built only on the constant relative velocity model (2), so relative acceleration is not considered. As shown in Figure 11 and Figure 13, the longitudinal position error increases in the acceleration area, and we confirmed that this increase is caused by latency under relative acceleration. Outside the acceleration area, the proposed method reduces the position error. Because the object vehicle also changes lanes within the acceleration area, the lateral position error grows with the relative lateral acceleration, as shown in Figure 12 and Figure 14. We have thus confirmed that relative acceleration causes position errors in the radar output, the previously researched interpolation method, and the proposed method alike.
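Idealized kinematics show why a model built on constant relative velocity leaves a residual error under acceleration. Assume, hypothetically, that the radar output is a pure time delay τ and that the relative acceleration a is constant over that delay. The true displacement during the delay is then vτ + ½aτ², whereas a correction that depends only on relative velocity can recover at most vτ. The sketch below computes the remaining ½aτ² term. It is an illustration under these assumptions, not the paper's data-driven latency coordination, and the delay value used in the demo is invented rather than measured.

```python
def latency_residual(v_rel: float, a_rel: float, tau: float) -> float:
    """Error left after a velocity-only latency compensation (idealized).

    Illustrative assumptions, not from the paper: the radar output is a pure
    delay of `tau` seconds, the relative acceleration `a_rel` is constant over
    the delay, and the compensation shifts the delayed position by v_rel * tau.
    """
    true_shift = v_rel * tau + 0.5 * a_rel * tau ** 2  # exact displacement under constant acceleration
    compensated_shift = v_rel * tau                    # what a constant-velocity correction adds back
    return true_shift - compensated_shift              # equals 0.5 * a_rel * tau**2


if __name__ == "__main__":
    tau = 0.2  # hypothetical delay in seconds
    for a in (0.0, 1.0, 2.0, 4.0):
        print(f"a_rel = {a:.1f} m/s^2 -> residual = {latency_residual(10.0, a, tau):.3f} m")
```

In this setting the residual is independent of the relative velocity and grows linearly with relative acceleration, which is consistent with the larger errors observed inside the acceleration area. For example, `latency_residual(10.0, 2.0, 0.2)` is approximately 0.04 m.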

As future work, methods to reduce the effect of relative acceleration should be investigated. This effect can be reduced by using a relative acceleration model. To this end, we will consider an acceleration model within a multiple-model framework, and we expect the resulting more accurate radar estimation to improve collision risk decision performance.
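The multiple-model direction described above is commonly realized with an interacting multiple model (IMM) estimator, as used for example in [9,10]. The sketch below is hypothetical and does not represent the authors' planned design. It defines constant-velocity (CV) and constant-acceleration (CA) transition matrices on a shared state [position, velocity, acceleration], and it performs the IMM mode-probability update, in which each mode's predicted probability is weighted by the measurement likelihood of its mode-matched filter. The Markov transition matrix and likelihood values are placeholders. For brevity, the mode-matched Kalman filters and the state-mixing step are omitted.

```python
import numpy as np


def cv_transition(dt: float) -> np.ndarray:
    """Constant-velocity model on state [x, v, a]; acceleration is forced to zero."""
    return np.array([[1.0, dt, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 0.0]])


def ca_transition(dt: float) -> np.ndarray:
    """Constant-acceleration model on state [x, v, a]."""
    return np.array([[1.0, dt, 0.5 * dt ** 2],
                     [0.0, 1.0, dt],
                     [0.0, 0.0, 1.0]])


def imm_mode_update(mu: np.ndarray, p_trans: np.ndarray, likelihoods: np.ndarray) -> np.ndarray:
    """IMM mode-probability update.

    mu:          prior mode probabilities, shape (M,)
    p_trans:     Markov matrix, p_trans[i, j] = P(mode j at k | mode i at k-1)
    likelihoods: measurement likelihood of each mode-matched filter, shape (M,)
    """
    c_bar = p_trans.T @ mu           # predicted mode probabilities: c_j = sum_i p_ij * mu_i
    mu_new = likelihoods * c_bar     # weight each mode by how well it explains the measurement
    return mu_new / mu_new.sum()     # renormalize so the probabilities sum to one
```

With equal priors, a sticky transition matrix such as `[[0.9, 0.1], [0.1, 0.9]]`, and a CA likelihood three times that of CV, the update shifts the mode probabilities to 0.25 (CV) and 0.75 (CA). This shift is how such a scheme would favor the acceleration model inside an acceleration area.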

6. Conclusions

This paper proposed a data-driven object vehicle estimation scheme to solve the accuracy problem of radar systems. To account for radar accuracy in object estimation, we first developed an accuracy model that captures the different error characteristics of each zone. The accuracy model was used to address the measurement uncertainty of the radar. We also developed latency coordination for the radar system by analyzing the position error as a function of the relative velocity. The accuracy model and latency coordination were then applied to object vehicle estimation using weighted interpolation. The utility of the proposed method was validated through a scenario-based estimation experiment, in which the proposed data-driven object vehicle estimation outperformed the commercial radar algorithm and the previously researched interpolation method. The proposed method is therefore expected to improve object vehicle estimation accuracy. In future work, an additional acceleration model will be used within a multiple-model framework to reduce the effect of relative acceleration. Accurate object vehicle estimation is a critical building block for the high-level controllers of autonomous vehicles, supporting functions such as collision risk decision, path planning with collision avoidance, and lane change systems.
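The following hypothetical sketch makes the data flow summarized above concrete. It is not the authors' implementation and rests on three assumptions. First, the zone-dependent accuracy model is reduced to a single measurement variance per longitudinal zone. Second, latency coordination is a table of position error (true minus reported) indexed by relative velocity and linearly interpolated. Third, weighted interpolation is an inverse-variance convex combination of the latency-corrected radar measurement and a model prediction. All zone edges, table values, and function names are invented for illustration.

```python
import numpy as np

# Hypothetical accuracy model: measurement variance (m^2) per longitudinal zone.
ZONE_EDGES = np.array([0.0, 20.0, 50.0, 100.0])  # zone boundaries in metres (illustrative)
ZONE_VAR = np.array([0.05, 0.15, 0.40])          # one variance per zone (illustrative)

# Hypothetical latency table: position error (true minus reported, m) vs. relative velocity (m/s).
V_GRID = np.array([-10.0, 0.0, 10.0])
LATENCY_ERR = np.array([-1.5, 0.0, 1.5])


def zone_variance(x: float) -> float:
    """Look up the measurement variance of the zone containing longitudinal range x."""
    idx = np.searchsorted(ZONE_EDGES, x, side="right") - 1
    idx = int(np.clip(idx, 0, len(ZONE_VAR) - 1))  # ranges outside the table use the nearest zone
    return float(ZONE_VAR[idx])


def latency_correction(v_rel: float) -> float:
    """Linearly interpolate the stored latency error at the given relative velocity."""
    return float(np.interp(v_rel, V_GRID, LATENCY_ERR))


def weighted_estimate(meas, pred, pred_var: float, v_rel: float):
    """Inverse-variance convex combination of a corrected radar measurement and a prediction.

    meas, pred: (longitudinal, lateral) positions in metres.
    pred_var:   variance attributed to the prediction (assumed known here).
    """
    x_m = meas[0] + latency_correction(v_rel)  # remove the latency-induced longitudinal offset
    y_m = meas[1]
    r = zone_variance(x_m)                     # measurement variance from the accuracy model
    w = pred_var / (pred_var + r)              # weight on the measurement, always in [0, 1]
    return ((1.0 - w) * pred[0] + w * x_m,
            (1.0 - w) * pred[1] + w * y_m)
```

Because the weight stays in [0, 1], the result is always a convex combination that lies between the prediction and the corrected measurement. It leans toward the measurement in zones where the accuracy model reports a small variance.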

Author Contributions

Conceptualization, W.Y.C.; methodology, W.Y.C.; software, W.Y.C.; validation, J.H.Y. and C.C.C.; formal analysis, W.Y.C.; investigation, W.Y.C. and J.H.Y.; resources, C.C.C.; data curation, W.Y.C. and J.H.Y.; writing—original draft preparation, W.Y.C.; writing—review and editing, W.Y.C. and C.C.C.; visualization, W.Y.C.; supervision, C.C.C.; project administration, W.Y.C. and C.C.C.; funding acquisition, C.C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2021R1A2C2009908, Data-Driven Optimized Autonomous Driving Technology Using Open Set Classification Method) and by the Industrial Source Technology Development Programs (No. 20000293, Road Surface Condition Detection using Environmental and In-Vehicle Sensors) funded by the Ministry of Trade, Industry, and Energy (MOTIE, Korea).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Eskandarian, A. Handbook of Intelligent Vehicles; Springer: London, UK, 2012.

2. Rajamani, R. Vehicle Dynamics and Control; Springer Science & Business Media: New York, NY, USA, 2011.

3. Lin, P.; Choi, W.Y.; Chung, C.C. Local Path Planning Using Artificial Potential Field for Waypoint Tracking with Collision Avoidance. In Proceedings of the International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–7.

4. Choi, W.Y.; Lee, S.H.; Chung, C.C. Robust Vehicular Lane Tracking Control with Winding Road Disturbance Compensator. IEEE Trans. Ind. Inform. 2020.

5. Yang, J.H.; Choi, W.Y.; Chung, C.C. Driving environment assessment and decision making for cooperative lane change system of autonomous vehicles. Asian J. Control 2020, 1–11.

6. Lee, H.; Kang, C.M.; Kim, W.; Choi, W.Y.; Chung, C.C. Predictive risk assessment using cooperation concept for collision avoidance of side crash in autonomous lane change systems. In Proceedings of the International Conference on Control, Automation and Systems, Jeju, Korea, 18–21 October 2017; pp. 47–52.

7. Kellner, D.; Barjenbruch, M.; Klappstein, J.; Dickmann, J.; Dietmayer, K. Tracking of extended objects with high-resolution Doppler radar. IEEE Trans. Intell. Transp. Syst. 2015, 17, 1341–1353.

8. Roos, F.; Kellner, D.; Klappstein, J.; Dickmann, J.; Dietmayer, K.; Muller-Glaser, K.D.; Waldschmidt, C. Estimation of the orientation of vehicles in high-resolution radar images. In Proceedings of the International Conference on Microwaves for Intelligent Mobility, Heidelberg, Germany, 27–29 April 2015; pp. 1–4.

9. Kim, B.; Yi, K.; Yoo, H.J.; Chong, H.J.; Ko, B. An IMM/EKF approach for enhanced multitarget state estimation for application to integrated risk management system. IEEE Trans. Veh. Technol. 2015, 64, 876–889.

10. Yeddanapudi, M.; Bar-Shalom, Y.; Pattipati, K. IMM estimation for multitarget-multisensor air traffic surveillance. Proc. IEEE 1997, 85, 80–96.

11. Gustafsson, F.; Gunnarsson, F.; Bergman, N.; Forssell, U.; Jansson, J.; Karlsson, R.; Nordlund, P.J. Particle filters for positioning, navigation, and tracking. IEEE Trans. Signal Process. 2002, 50, 425–437.

12. Kulikov, G.Y.; Kulikova, M.V. Accurate continuous–discrete unscented Kalman filtering for estimation of nonlinear continuous-time stochastic models in radar tracking. Signal Process. 2017, 139, 25–35.

13. Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation: Theory Algorithms and Software; John Wiley & Sons: Hoboken, NJ, USA, 2004.

14. Choi, W.Y.; Kang, C.M.; Lee, S.H.; Chung, C.C. Radar Accuracy Modeling and Its Application to Object Vehicle Tracking. Int. J. Control Autom. Syst. 2020, 18, 3146–3158.

15. Choi, W.Y.; Yang, J.H.; Lee, S.H.; Chung, C.C. Object Vehicle Tracking by Convex Interpolation with Radar Accuracy. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS), Jeju, Korea, 15–18 October 2019; pp. 1589–1593.

16. Alland, S.; Stark, W.; Ali, M.; Hegde, M. Interference in automotive radar systems: Characteristics, mitigation techniques, and current and future research. IEEE Signal Process. Mag. 2019, 36, 45–59.

17. Averbuch, A.; Itzikowitz, S.; Kapon, T. Radar target tracking-Viterbi versus IMM. IEEE Trans. Aerosp. Electron. Syst. 1991, 27, 550–563.

18. Kirubarajan, T.; Bar-Shalom, Y.; Pattipati, K.R.; Kadar, I. Ground target tracking with variable structure IMM estimator. IEEE Trans. Aerosp. Electron. Syst. 2000, 36, 26–46.

19. Ebert, J.; Gumpp, T.; Münzner, S.; Matskevych, A.; Condurache, A.P.; Gläser, C. Deep Radar Sensor Models for Accurate and Robust Object Tracking. In Proceedings of the International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

20. Alessandretti, G.; Broggi, A.; Cerri, P. Vehicle and guard rail detection using radar and vision data fusion. IEEE Trans. Intell. Transp. Syst. 2007, 8, 95–105.

21. Cho, H.; Seo, Y.W.; Kumar, B.V.; Rajkumar, R.R. A multi-sensor fusion system for moving object detection and tracking in urban driving environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 1836–1843.

22. Wu, S.; Decker, S.; Chang, P.; Camus, T.; Eledath, J. Collision sensing by stereo vision and radar sensor fusion. IEEE Trans. Intell. Transp. Syst. 2009, 10, 606–614.

23. Chavez-Garcia, R.O.; Aycard, O. Multiple Sensor Fusion and Classification for Moving Object Detection and Tracking. IEEE Trans. Intell. Transp. Syst. 2016, 17, 525–534.

24. Kim, K.E.; Lee, C.J.; Pae, D.S.; Lim, M.T. Sensor fusion for vehicle tracking with camera and radar sensor. In Proceedings of the International Conference on Control, Automation and Systems, Jeju, Korea, 18–21 October 2017; pp. 1075–1077.

25. Kim, T.; Park, T.H. Extended Kalman filter (EKF) design for vehicle position tracking using reliability function of radar and lidar. Sensors 2020, 20, 4126.

26. Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A deep learning-based radar and camera sensor fusion architecture for object detection. In Proceedings of the 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 15–17 October 2019; pp. 1–7.

27. Jha, H.; Lodhi, V.; Chakravarty, D. Object detection and identification using vision and radar data fusion system for ground-based navigation. In Proceedings of the International Conference on Signal Processing and Integrated Networks, Noida, India, 7–8 March 2019; pp. 590–593.

28. Muntzinger, M.M.; Aeberhard, M.; Zuther, S.; Maehlisch, M.; Schmid, M.; Dickmann, J.; Dietmayer, K. Reliable automotive pre-crash system with out-of-sequence measurement processing. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 1022–1027.

29. Wielgo, M.; Misiurewicz, J.; Radecki, K. Processing latency effects on resource management in rotating AESA radar. In Proceedings of the 2017 18th International Radar Symposium (IRS), Prague, Czech Republic, 28–30 June 2017; pp. 1–10.

30. Supradeepa, V.; Long, C.M.; Wu, R.; Ferdous, F.; Hamidi, E.; Leaird, D.E.; Weiner, A.M. Comb-based radiofrequency photonic filters with rapid tunability and high selectivity. Nat. Photonics 2012, 6, 186–194.

31. Klotz, M.; Rohling, H. 24 GHz radar sensors for automotive applications. J. Telecommun. Inf. Technol. 2001, 4, 11–14.

32. Parashar, K.N.; Oveneke, M.C.; Rykunov, M.; Sahli, H.; Bourdoux, A. Micro-Doppler feature extraction using convolutional auto-encoders for low latency target classification. In Proceedings of the 2017 IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017; pp. 1739–1744.

33. de Ponte Müller, F. Survey on ranging sensors and cooperative techniques for relative positioning of vehicles. Sensors 2017, 17, 271.

34. Angelov, A.; Robertson, A.; Murray-Smith, R.; Fioranelli, F. Practical classification of different moving targets using automotive radar and deep neural networks. IET Radar Sonar Navig. 2018, 12, 1082–1089.

35. Karunasekera, H.; Wang, H.; Zhang, H. Multiple object tracking with attention to appearance, structure, motion and size. IEEE Access 2019, 7, 104423–104434.

36. Kang, C.M.; Lee, S.H.; Chung, C.C. Vehicle lateral motion estimation with its dynamic and kinematic models based interacting multiple model filter. In Proceedings of the Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 2449–2454.

37. Li, X.R.; Jilkov, V.P. Survey of maneuvering target tracking. Part I. Dynamic models. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1333–1364.

38. Myers, K.; Tapley, B. Adaptive sequential estimation with unknown noise statistics. IEEE Trans. Autom. Control 1976, 21, 520–523.

39. Grewal, M.; Andrews, A. Kalman Filtering: Theory and Practice with MATLAB, 4th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2015.

40. Choi, W.Y.; Kim, D.J.; Kang, C.M.; Lee, S.H.; Chung, C.C. Autonomous vehicle lateral maneuvering by approximate explicit predictive control. In Proceedings of the Annual American Control Conference (ACC), Milwaukee, WI, USA, 27–29 June 2018; pp. 4739–4744.

41. Rühaak, W. A Java application for quality weighted 3-d interpolation. Comput. Geosci. 2006, 32, 43–51.

42. Stubberud, S.C.; Kramer, K.A. Monitoring the Kalman gain behavior for maneuver detection. In Proceedings of the 2017 25th International Conference on Systems Engineering (ICSEng), Las Vegas, NV, USA, 22–24 August 2017; pp. 39–44.

43. Zhang, D.; Xu, Z.; Karimi, H.R.; Wang, Q.G. Distributed filtering for switched linear systems with sensor networks in presence of packet dropouts and quantization. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 64, 2783–2796.

44. Nishigaki, M.; Rebhan, S.; Einecke, N. Vision-based lateral position improvement of radar detections. In Proceedings of the 2012 15th International IEEE Conference on Intelligent Transportation Systems, Anchorage, AK, USA, 16–19 September 2012; pp. 90–97.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).