1. Introduction

Denser site deployment has been regarded as a key enabling technology for supporting the rapid growth of mobile data traffic and meeting the high-data-rate demands of next-generation wireless communication networks [1]. However, conventional base stations (BSs) consume 80% of the electricity [2], because all the radio and baseband processing functions are performed within the BSs in the second-generation (2G) radio access network (RAN) architecture. Subsequently, the radio and baseband processing functions were divided into two separate nodes, i.e., the remote radio head (RRH) and the baseband processing unit (BBU), in the third-generation (3G) and fourth-generation (4G) distributed radio access network (Distributed-RAN) architecture. Nevertheless, Distributed-RAN is ill-suited to handling the tremendous growth in data traffic while delivering high-bandwidth, low-latency, and cost-efficient services [3], and it cannot support the quality of experience (QoE) and quality of service (QoS) demands [4] of fifth-generation (5G) mobile communication systems. Cloud radio access networks (Cloud-RANs) have been regarded as a promising solution, owing to their ability to reduce the capital expenditure (CAPEX) and operational expenditure (OPEX) of network operators through the centralization and cloudification of BBUs and their corresponding RRHs. Cloud-RANs can overcome the limitations of the Distributed-RAN architecture by expanding network scalability, simplifying network management and maintenance, optimizing network performance, reducing energy consumption, and enhancing spectrum efficiency [3]. In a Cloud-RAN architecture, the conventional BSs are physically split into two parts: BBUs, which are grouped as a cloud processing unit (CU) that designs all coordination and energy trading strategies, and the remaining RRHs, which are in charge of all radio frequency (RF) operations [5]. Even though beamforming is designed in the CU, the RRHs consume an enormous portion of electricity to amplify and transmit RF signals to users in order to satisfy their data-rate and energy requirements. Moreover, due to the large number of densely deployed RRHs, each serving a time-varying number of users in a highly dynamic wireless environment, the amount of energy demanded by wireless network operators from the energy generation (EG) plants will be highly variable and statistically unknown over different times of the day. Equipping the RRHs with green energy technology that harvests energy from natural sources, such as wind and sunlight, to power next-generation mobile communication networks can significantly contribute to reducing the global carbon footprint [6]. However, the uncertain nature of the renewable energy supply, coupled with dynamic user energy demand, necessitates integrating the green energy supply with the conventional grid to maximally benefit the network operator [7,8,9,10,11,12,13,14,15]. These random variations in electricity demand increase the OPEX of the energy generation process because the EG plants must maintain an instantaneous balance between the aggregate demand for electricity and the total power generated [7]. Hence, the operators need to routinely control the operation of the wireless network based on the well-known operating characteristics of the conventional EG plants. Any deviation from the operating points of the EG plants to provide compensating variations that maintain this balance increases the total OPEX of the EG plants, which will, in turn, be reflected in the OPEX of the network operators.

The operational time frame of the grid can generally be divided into regulation, load following, and unit commitment. During each of these time frames, suitable reserved energy sources are dispatched to correct the imbalance between generation and demand. The EG sources reserved for load following, which are deployed on a slower time scale than the regulation time frame, are used to accommodate sources of variability and uncertainty, e.g., traffic energy demand and renewable energy generation, during the regular operation of the grid. Although the ramping and energy needed to follow these variations and uncertainties can be supplied by the ancillary energy markets, the insufficient ramping capability of the low-cost base-load conventional power plants can significantly inflate the price of energy, which must then be dispatched by expensive peaking EG units with fast ramp rates. Using conventional regulation units to compensate for uncertain, abrupt ramps in energy demand is among the most expensive services. Hence, efficient control mechanisms must be developed to provide flexibility in the EG fleet and to maintain its cost-aware, reliable operation under variability and uncertainty.

This paper focuses on designing an intelligent control mechanism for the steep ramps in energy demand in wireless cellular networks to minimize the long-term energy cost. We introduce an online learning approach for price-aware energy procurement at RRHs by supplying the load-following EG reserves within advance energy trading offers based on possible forthcoming variations and uncertainties in the energy demand. As the energy demand varies from low to peak values during different hours, the proposed strategy is designed to avoid paying for high peak-time energy cost by purchasing energy in advance at a lower off-peak price to reduce the OPEX. The proposed approach anticipates the future energy demand (surplus) at each RRH and prepares for purchasing (selling) the energy from the hour-ahead/day-ahead market (to the grid) before the actual demand (surplus) emerges. In this way, the EG units will have more time to regulate their electricity generation process according to the demand with slower ramp rates and, consequently, at lower prices.

1.1. Related Works

The authors in [7] first investigated the energy efficiency problem in a coordinated multipoint (CoMP) system powered by a smart grid. They formulated the problem for the proposed system as a simplified two-level Stackelberg game and concluded that such a design significantly reduces the OPEX. Equipping the end-user with renewable energy devices and accounting for the varying electricity price, the authors in [8] developed an energy trading algorithm to maximally benefit the network operator while satisfying the energy demand of end-users in a grid/renewable energy hybrid network. To take advantage of two-way energy trading with the grid and cooperative transmission, the authors in [9] proposed an aggregator-aided joint communication and energy cooperation strategy in CoMP networks powered by both grid and renewable energy. In [10], the authors designed a joint real-time energy trading and cooperative transmission mechanism based on convex optimization techniques in a smart-grid-powered CoMP system. In [11], the authors studied energy trading in a more general setting, including trading among a set of storage units and the grid from the perspective of noncooperative game theory, and they proposed an algorithm that achieves at least one Nash equilibrium point. By assuming the availability of varying hourly profiles of the energy demand of base stations and renewable generation as well as day-ahead knowledge of hourly varying electricity prices, the authors of [12] minimized the electricity bill of cellular base stations powered jointly by a smart grid and locally harvested solar energy. The authors of [13] integrated the CoMP system with a simultaneous wireless information and power transfer (SWIPT) concept and proposed a joint energy trading and partial cooperation design based on sparse beamforming, accounting for limited-capacity backhaul links in a green Cloud-RAN by minimizing the instantaneous energy cost without integrating reinforcement learning. The authors of [14] investigated the optimal power flow problem for smart micro-grids in a distributed manner and adopted an alternating direction method of multipliers to ensure the global optimum of the semidefinite programming (SDP) problem. An early form of the combinatorial multi-armed bandit (CMAB) approach was first explored in [15], which introduced two iterative energy trading algorithms that search for a set of cost-efficient energy packages in ascending and descending order of package sizes, assuming time-invariant wireless channel conditions. Subsequently, the study in [16] proposed a CMAB approach for energy trading in cellular networks that accommodates unpredictable wireless channel conditions to further reduce the total energy cost over a finite time horizon.

1.2. Main Contributions

This paper’s main contributions to real-time energy resource and energy trading in Cloud-RAN environments are summarized as follows:

A joint energy trading and clustering technique that accounts for limited-capacity backhaul links in a green Cloud-RAN with a SWIPT system was proposed in [13]. However, that design was based on myopic optimization via semidefinite programming (SDP) (i.e., minimizing the instantaneous energy cost for the current time only) without any learning process for future demand provisioning. Furthermore, it cannot cope with time-varying system dynamics, since it considers no temporal dynamics of the energy demand and cost and provides no solution for look-ahead energy purchase decisions.

In contrast to [15], this paper develops a combinatorial upper confidence bound (CUCB) algorithm as a prescheduling mechanism to maintain cost-aware, reliable operation in Cloud-RANs and to handle the variability and uncertainty of both the electrical generation and the intrinsic characteristics of the cellular communication system. This paper predicts the best possible combination of energy packages to be purchased for the next time slot by exploring the rewards of new combinations of energy packages within a given number of trials at the current time slot and exploiting the past captured information on the rewards of super arms from the previous time slots to optimize the long-term averaged rewards.

Differently from the system model proposed in [16], this paper considers a downlink Cloud-RAN with SWIPT, where the RRHs concurrently transmit data beams to satisfy the information users and the requested energy beams to the active energy users. Furthermore, this paper also integrates a sparse beamforming technique to iteratively remove the cooperative links between the RRHs and the active information users based on the renewable power budgets and front-haul link capacity limitations at the individual RRHs. This clustering technique has been shown to enhance energy efficiency and decrease the total energy cost of the RRHs in practical Cloud-RANs [17]. In contrast to the CMAB approach of [16], this paper estimates the imminent energy demands by dynamically deciding on an optimal set of super arms, exploring all of the possible minimal combinatorial energy packages to be purchased from the day-ahead market and thus reducing the risk of regret.

This work’s novel contribution is the development of a sequential learning algorithm that adaptively tracks the temporal variations of energy demands and makes predictive decisions on look-ahead energy purchases in dynamically changing environments with unknown statistics to asymptotically minimize the time-averaged overall energy cost in the long run. The proposed algorithm anticipates the future energy demands of the distributed RRHs in the Cloud-RAN and schedules these demands by invoking the various power plants well in advance so that higher energy prices at peak demand times are curtailed. The proposed algorithm does not require any other description of usage patterns or statistical distribution of stochastic events. It performs foresighted optimization based on online learning during the operation. It only uses the past captured data on averaged accumulated rewards for predicting the energy consumption at the next period based on the proposed strategy.

1.3. Organization and Notations

The rest of this paper is structured as follows. The system model for the downlink Cloud-RAN with SWIPT and the energy management model are introduced in Section 2. In Section 3, the problem of real-time collaborative energy trading at an individual time frame is formulated and then transformed into a numerically tractable form. The predictive energy trading strategy is proposed in Section 4. Numerical simulation results are interpreted in Section 5. Finally, Section 6 summarizes the proposed work.

Notation 1. w, , and , respectively, denote a scalar w, a vector , and a positive semidefinite matrix . , , , and indicate the sets of n-by-m dimensional complex matrices, the complex conjugate transpose operators, the trace operators, and the expected value, respectively. represents the -norm of a vector and denotes the number of non-zero entries in the vector. Notice that the duration of a time frame is normalized to one and the normalized energy unit, i.e., , is assumed in this paper. Therefore, in this paper, the terms “power” and “energy” are mutually interchangeable.

2. System Model

Consider a downlink Cloud-RAN with SWIPT from N RRHs towards information users (IUs), active energy users (EUs), and idle EUs over a shared bandwidth. Note that the active EUs located within the energy-serving area of an RRH can harvest energy directly from the energy-carrying signals of that particular RRH. In contrast, the idle EUs located outside the energy-serving area of every RRH can only scavenge energy from the ambient radio frequency signals for self-sustainability [13]. Each RRH is equipped with M antennas, and each IU and EU has a single antenna. Based on perfect knowledge of the channel state information (CSI), the CU coordinates all the resource management and energy trading strategies for the RRHs and delivers all the IUs’ data to the corresponding RRHs via finite-capacity front-haul links. Note that, under the perfect CSI assumption, all the channel properties of the downlink Cloud-RAN communication links, i.e., path loss, scattering, fading, shadowing, etc., are assumed to be perfectly known at both the IU and EU terminals.

Let , , , and denote, respectively, the set of indexes of the RRHs, the IUs, the active EUs, and the idle EUs. The amount of energy flow in this paper depends on the data-rate requirements by the IUs, the wireless energy transfer requirements by the active EUs, and the harvested energy requirements from the environment by the idle EUs, whereas the amount of data flow depends only on the IUs. Let us divide the long-term period T into discrete time slots, indexed as , and define and as the set of indexes of the frames within a time slot and the set of indexes of the learning trials within a frame, respectively. The channel is assumed to vary across frames, but remains invariant within each frame. This paper proposes an online learning algorithm that iteratively alternates between designing the overall transmission strategy using convex optimization and preparing for future energy demand from the day-/hour-ahead market via online learning, i.e., a CMAB approach, to avoid steep ramps in the energy generation plant and to minimize the long-term energy cost.

2.1. Energy Management Model

Similarly to [13], it is assumed that at least one renewable energy generator, i.e., a solar panel and/or a wind turbine, is installed in the vicinity of each RRH. In this setup, none of the RRHs is equipped with any frequently rechargeable storage devices. Furthermore, bidirectional energy trading with the primary grid is enabled at the individual RRHs. Thus, the RRHs can purchase energy in the day-/hour-ahead market during off-peak hours at a lower price and/or in the spot market during peak hours at a higher price, and the surplus energy can also be sold back to the grid at an agreed-upon price. Let , , , and denote, at time slot , the amount of real-time energy purchased from the spot market for the n-th RRH to cover an instantaneous energy shortage, the amount of look-ahead energy purchased from the day-/hour-ahead market at the end of the previous time slot “t − 1”, the amount of surplus energy to be traded back to the primary grid, and the amount of renewable energy generated at the n-th RRH, respectively. In addition, let be the total transmit power and be the total power consumption of the hardware circuits at the n-th RRH. Furthermore, in any frame, the total energy consumption at the n-th RRH, i.e., , is constrained as

From the perspective of supply and demand, let us assume , where , , , and denote the price of purchasing (selling) per unit energy of , , , and generating per unit energy of (by averaging the capital expenses and OPEX of renewable devices over their lifetime), respectively. Then, the cumulative energy cost incurred by the n-th RRH at the k-th trial, of the frame at the time slot , i.e., , is given by
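As a minimal sketch of the energy accounting described above, the following Python snippet checks a per-RRH energy balance and evaluates the resulting cost. All names (`EnergyState`, `Prices`, `is_feasible`, `energy_cost`) are hypothetical and introduced only for illustration. The snippet also assumes that the consumption (transmit plus circuit power) may not exceed the net supply, and that energy sold back to the grid enters the cost as revenue; this is one reading of the text, not a reproduction of the paper's own expressions.

```python
from dataclasses import dataclass


@dataclass
class EnergyState:
    """Per-RRH energy quantities in one frame (normalized units, hypothetical names)."""
    spot: float       # real-time purchase from the spot market
    ahead: float      # look-ahead purchase from the day-/hour-ahead market
    sold: float       # surplus energy traded back to the grid
    renewable: float  # locally generated renewable energy
    tx_power: float   # total transmit power of the RRH
    circuit: float    # hardware circuit power consumption


@dataclass
class Prices:
    """Per-unit prices; the values are supplied by the caller."""
    spot: float
    ahead: float
    sell: float
    renewable: float


def total_consumption(s: EnergyState) -> float:
    """Total consumption = transmit power + circuit power."""
    return s.tx_power + s.circuit


def is_feasible(s: EnergyState, tol: float = 1e-9) -> bool:
    """Assumed balance: consumption must not exceed the net available energy."""
    supply = s.spot + s.ahead + s.renewable - s.sold
    return total_consumption(s) <= supply + tol


def energy_cost(s: EnergyState, p: Prices) -> float:
    """Assumed cost: purchases and renewable generation cost money, sales earn it."""
    return (p.spot * s.spot + p.ahead * s.ahead
            + p.renewable * s.renewable - p.sell * s.sold)
```

With any price set in which the look-ahead price is below the spot price, covering the same shortage through `ahead` instead of `spot` yields a lower `energy_cost`, which is the effect the predictive strategy aims to exploit.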

2.2. Downlink Transmission Model

Let and be defined, respectively, as the set of indexes of the beamforming vector from all RRHs towards the i-th IU, and the e-th active EU, , where and represent the beamformer from the n-th RRH to the i-th IU and the e-th active EU, respectively. In addition, let denote the set of indexes of the channel vector between all RRHs and the i-th IU, where denotes the channel vector from the n-th RRH to the i-th IU. Accordingly, the signal collected at the i-th IU, , can be expressed as the summation of the dedicated information-carrying signal, the inter-user interference induced by the other information beams, the interference caused by the energy-carrying signals assigned to all active EUs, and the additive white Gaussian noise at the i-th IU as

Because energy beams carry no information, only the data of the IUs are delivered via the front-haul links. Without loss of generality, is assumed, and the signal-to-interference-plus-noise ratio (SINR) at the i-th IU, , is formulated as

where indicates the desired power received at the i-th IU and is the required transmit power at the RRHs. Let us define the scheduling arrangements between the i-th IU and the n-th RRH for partial cooperation [18], i.e., , as

where indicates that the i-th IU is not selected to be supported by the n-th RRH and, hence, the front-haul link between the CU and the n-th RRH is not employed for joint data transmission to the i-th IU. Hence, the front-haul link capacity consumption of the n-th RRH is expressed as

where is the achievable data-flow rate (bit/s/Hz) for the i-th IU and directly depends on the transmit power and the wireless channel fading condition. The total energy received by the e-th active EU, , is defined as

where the terms on the right-hand side of (7) represent the intended energy-carrying signal for the e-th active EU, the inter-user interference caused by all other non-desired energy beams, and the inter-user interference caused by information beams, respectively. Let denote the efficiency of converting the harvested RF energy into usable electrical energy, and let indicate the set of indexes of the channel vector between all the RRHs and the e-th active EU. The collective energy that can be harvested from the ambient environment by the z-th idle EU, , is presented as

where represents the set of indexes of the channel vector between all the RRHs and the z-th idle EU.
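The SINR and front-haul definitions described in this subsection can be illustrated with a short Python sketch. It is written under stated assumptions: the channel and beamforming vectors are the aggregated vectors over all RRHs, the achievable rate follows the Shannon formula log2(1 + SINR), and an IU counts as served by an RRH whenever that RRH's beamformer towards it is non-zero. The function names are hypothetical and do not come from the paper.

```python
import math


def inner(h, w):
    """Hermitian inner product h^H w of two equal-length complex vectors."""
    return sum(hk.conjugate() * wk for hk, wk in zip(h, w))


def sinr(i, h_i, info_beams, energy_beams, noise_power):
    """SINR at IU i: desired power over (IU interference + energy-beam interference + noise)."""
    desired = abs(inner(h_i, info_beams[i])) ** 2
    iu_interference = sum(abs(inner(h_i, w)) ** 2
                          for j, w in enumerate(info_beams) if j != i)
    eu_interference = sum(abs(inner(h_i, v)) ** 2 for v in energy_beams)
    return desired / (iu_interference + eu_interference + noise_power)


def achievable_rate(sinr_value):
    """Achievable rate in bit/s/Hz, assuming log2(1 + SINR)."""
    return math.log2(1.0 + sinr_value)


def fronthaul_usage(per_rrh_beams, rates, eps=1e-12):
    """Front-haul load of one RRH: sum of the rates of the IUs it actually serves.

    per_rrh_beams[i] is this RRH's beamformer towards IU i; a beamformer whose
    squared norm does not exceed eps means the IU is not scheduled on this RRH.
    """
    load = 0.0
    for w_ni, rate in zip(per_rrh_beams, rates):
        served = sum(abs(x) ** 2 for x in w_ni) > eps
        if served:
            load += rate
    return load
```

Driving an RRH's beamformer towards a given IU to zero removes that IU's rate from the RRH's front-haul load, which is why a sparse beamforming design can be used to respect per-RRH front-haul capacity limits.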

4. Predictive Energy Trading Strategy

The multi-armed bandit (MAB) problem is expressed as a J-arm system, with each arm being associated with independent and identically distributed (i.i.d.) stochastic rewards. The objective is to maximize the accumulated reward by observing the rewards of new arms during the exploration stage while simultaneously optimizing the decisions among a set of arms based on existing knowledge at the exploitation stage over multiple trials [24]. Let us consider a combinatorial generalization of the classical MAB problem, where a super arm consisting of a set of N base arms, , is played, and the rewards of its relevant base arms are observed individually in each trial [25].

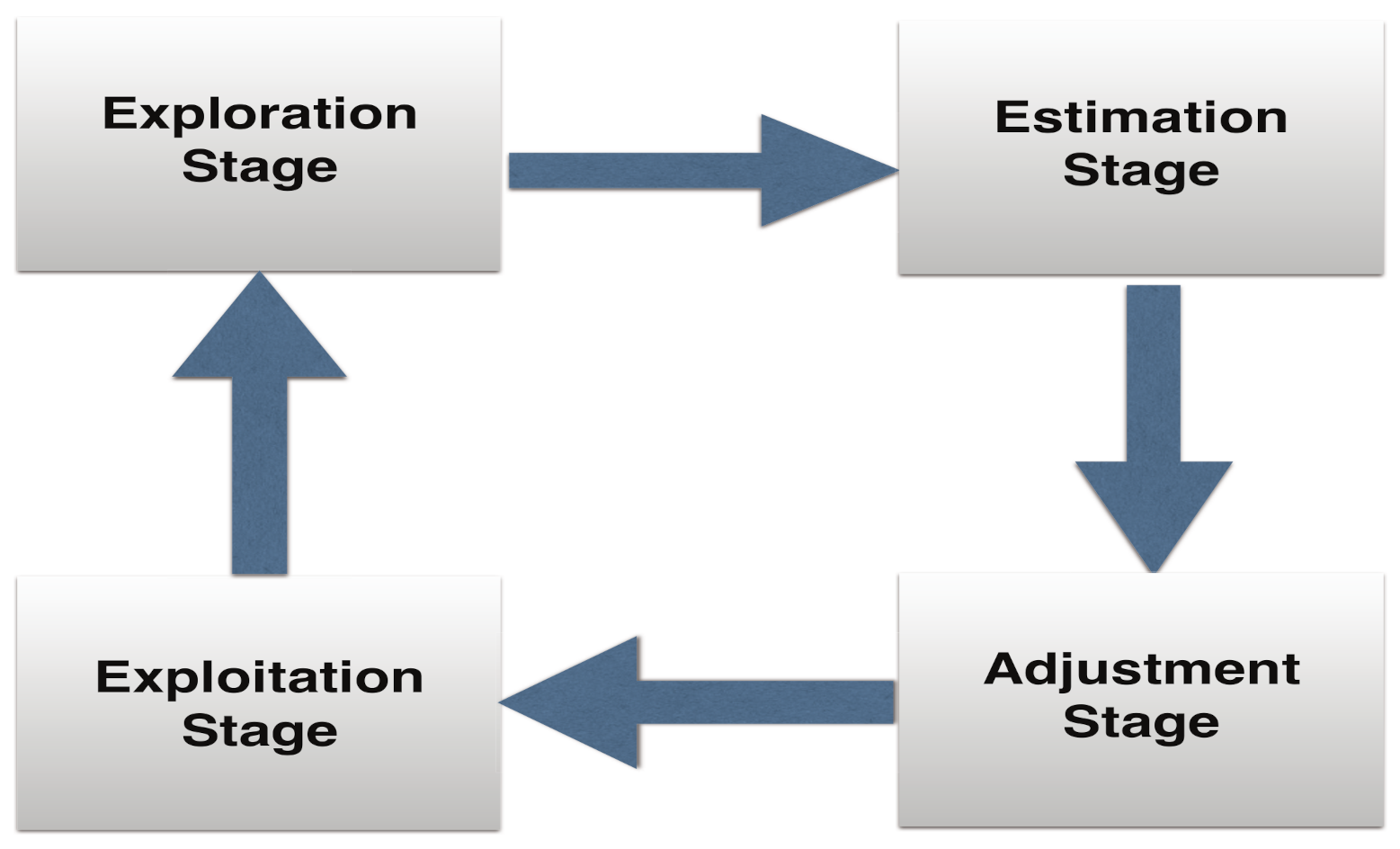

As illustrated in Figure 1, the problem studied in this paper is categorized as a combinatorial MAB problem, where a super arm is composed of N base arms and each base arm corresponds to an energy package purchased for an RRH from the day-/hour-ahead market at each trial before the real-time energy demand. The CU adapts its cooperative energy trading strategies to the intermittent environment in the Cloud-RAN by dynamically forming super arms to maximize the averaged rewards accumulated over the period T, which is equivalent to reducing the averaged energy expense in the long run. Let be defined as a set of indexes for possible energy packages offered in the day-/hour-ahead market by the grid, and let denote all energy packages offered by the grid in the day-/hour-ahead market, where . Furthermore, let represent a super arm, i.e., a set of N energy packages purchased in advance for N RRHs from the day-/hour-ahead market, at the k-th trial. Let us further define the reward for the individual arms at the n-th RRH and the reward for the super arm at the k-th trial as and , respectively, as

where and in (17) are the total energy cost incurred by the n-th RRH at the initial trial and the k-th trial of a frame, respectively. Furthermore, let be defined as the reward vector for the n-th RRH, where , is the reward associated with the p-th energy package in the k-th trial of the f-th frame at the t-th time slot.

In the following, we propose a CUCB-based [25] predictive energy trading strategy, which is shown in Figure 2 and detailed in Algorithms 1 and 2, to find the best possible combination of energy packages to be purchased from the day-/hour-ahead market for the N RRHs for the next time slot. The strategy explores the rewards of new combinations of energy packages within a limited number of trials at the current time slot and exploits the past captured information on the rewards of super arms from the previous time slots, so that the long-term averaged rewards, i.e., the total energy cost in the long run, can be optimized.

Algorithm 1 Super Arm Exploration

1: Initialize: Total number of trials K
2: for
3: Solve problem (16) for a given ,
4: CU calculates as per (2), as per (17), and as per (18).
5: if
6: then .
7: else if the super arm reward of all the RRHs ,
8: then , ,
9: else if the individual reward for the n-th RRH, and ,
10: then ,
11: else .
12: end if
13: Calculate the total energy cost of all the RRHs, as .
14: Calculate the energy package index p at all RRHs from .
15: Update ;
16: Update ;
17: end for
18: Estimated mean reward for K trials .

Algorithm 2 Main Online Learning Algorithm

1: Initialize: Time slot count: ;
2: while do
3: Increment the iteration index ;
4: for
5: if (initial time slot)
6: then Initialize the super arm for the first trial () as ,
7: else ,
8: end if
9: Exploration Stage: Run Algorithm 1
10: Estimation Stage:
11: Calculate the mean reward vector for the frame , where .
12: Adjustment Stage:
13: if (number of times the p-th arm is played)
14: then adjust ,
15: else .
16: end if
17: end for
18: Average adjusted mean reward vector over all frames .
19: Exploitation Stage:
20: Average over accumulated number of time slots, as .
21: For the next time slot: find N optimum arm indexes as , and the updated super arm as .
22: end while

Let and denote the estimated mean reward vector and the adjusted reward vector of individual energy packages, respectively. In the exploration stage within each frame, Algorithm 1 explores new combinations of energy packages (super arms) for the next trial based on the rewards obtained at the current and the previous trials. Once a given number of K trials are completed, the mean rewards for individual energy packages, i.e., , in each frame are estimated. The estimated mean rewards are first adjusted and averaged over a total number of F frames of a time slot as per step 18, then averaged again over the total number of past time slots as per step 20 [26], and finally used to update the super arm , i.e., the optimal set of energy packages purchased from the day-ahead market, to be exploited in the next time slot, as detailed in Algorithm 2.
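The estimation, adjustment, and exploitation stages can be illustrated with a short Python sketch. It is a simplified, hypothetical rendering rather than the paper's algorithm: it assumes the adjustment term sqrt(3 ln t / (2 T_p)) commonly used in the general CUCB framework, it assumes that an arm that has never been played is treated as maximally attractive, and it selects the energy package with the highest adjusted mean reward for each RRH independently. All function and variable names are illustrative.

```python
import math


def adjusted_means(mean_rewards, play_counts, t):
    """CUCB-style optimistic adjustment of per-arm mean rewards.

    mean_rewards[p]: empirical mean reward of energy package p
    play_counts[p]:  number of times package p has been played
    t:               current round (time-slot) index, t >= 1
    """
    adjusted = []
    for mean, count in zip(mean_rewards, play_counts):
        if count > 0:
            bonus = math.sqrt(3.0 * math.log(t) / (2.0 * count))
            adjusted.append(mean + bonus)
        else:
            # Assumption: an unplayed arm is forced to be explored first.
            adjusted.append(math.inf)
    return adjusted


def update_mean(mean, count, reward):
    """Incrementally update an empirical mean with one new observation."""
    count += 1
    mean += (reward - mean) / count
    return mean, count


def select_super_arm(means_per_rrh, counts_per_rrh, t):
    """Pick, for every RRH, the package index with the largest adjusted mean."""
    super_arm = []
    for means, counts in zip(means_per_rrh, counts_per_rrh):
        adj = adjusted_means(means, counts, t)
        super_arm.append(max(range(len(adj)), key=adj.__getitem__))
    return super_arm
```

The exploration bonus shrinks as a package is played more often, so early time slots favor rarely tried packages while later time slots increasingly exploit the packages with the best observed rewards.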

The proposed learning-based algorithm can be considered as a mixed online learning and convex optimization problem with linear matrix inequality constraints. The optimization problem is solved once per learning trial. Therefore, the complexity of the resulting algorithm is determined mainly by the number of iterations required to solve a convex optimization problem, which has polynomial worst-case complexity [22], and by the total number of learning trials, which depends on the dynamic range of variations in the environment.

5. Simulation Results

A downlink Cloud-RAN consisting of three adjacent RRHs with SWIPT towards six single-antenna IUs and six single-antenna EUs was considered in this paper. The Cloud-RAN operated with a channel bandwidth of 20 MHz. Each RRH was equipped with eight antennas, and the RRHs were placed 500 m away from each other. The performance of the proposed scheme was assessed with trials per frame, frames per time slot, time slots, and a total number of energy packages with mW, i.e., mW. The renewable energy generation values at the individual RRHs were , , and W, respectively, at a price of GBP/W. It was assumed that , , and GBP/W. A correlated channel model, , was adopted [17,27], where are zero-mean circularly symmetric complex Gaussian random variables with unit variance, is the spatial covariance matrix, and its -th element is given by

where dBi denotes the antenna gain, represents the path loss model over a distance of d km, is the variance of the complex Gaussian fading coefficient, dB is the log-normal shadowing standard deviation, is the angular offset standard deviation, and is the estimated angle of departure. The simulation parameters were assumed, unless otherwise stated, to be dBm, dBm, dBm, dBm, bits/s/Hz, dBm, dBm [18], and , respectively. The simulation results were obtained using CVX [28] on an Intel i7-3770 CPU at 3.4 GHz with 8 GB RAM, and the running time for each learning trial was approximately seven seconds without parallelization. Our proposed online learning strategy was compared against a baseline design that had no ahead-of-time energy preparation and the non-learning-based design in [13], which always assumes that a fixed set of energy packages is prepared from the day-/hour-ahead market, i.e., mW. For a fair comparison, identical constraints were applied to all the strategies.

Note that the convergence speed of the proposed online learning strategy to achieve its steady-state is based on the total number of learning trials, which also depends on the dynamic range of variations in the environment. Due to the limitations of our simulation tool, we downsized the total number of learning trials and the other simulation parameters according to the scale of our problem size. In a practical scenario, with a large number of users, the resulting amount of look-ahead energy purchased from the day-/hour-ahead market will be increased proportionally, which may increase the number of arms or increase the difference between two adjacent arms, and may also increase the number of learning trials needed to speed up the convergence. Therefore, the practical enlarged scenario does not affect the scalability of the proposed algorithm, as it may only increase the computational burden.

Figure 3a compares the normalized total energy cost over discrete time slots for different strategies at dB. It can be observed that, at its steady state, the proposed strategy achieves performance gains of 43 percent and 11 percent, respectively, as compared with the baseline scheme and the design in [13], since those designs provide no adaptation to the dynamic wireless channel conditions in Cloud-RANs. Figure 3b shows the normalized total energy cost of our proposed strategy at dB. One may observe that the performance of the proposed strategy slightly degrades with increasing target SINR, i.e., from dB to dB. Figure 3c represents the normalized total energy cost of our proposed strategy at dB in a more complex scenario, where it is assumed that the number of per-RRH antennas is six and the renewable energy generation at the individual RRHs ranges over [0.5, 2.5], [0.3, 1.5], and [0.1, 1.0] W, respectively.

It is clear from Figure 3c that the performance of the proposed strategy was slightly degraded compared to Figure 3b, which was simulated in a simpler scenario. However, as the time-slot index increases, the proposed strategy exhibits considerably smaller variations in total energy cost and much better average performance than that of [13] under the same system setup. This validates the ability of our proposed algorithm to adapt to more realistic wireless networks.

Figure 4 presents in detail the procedure by which a super arm is selected in accordance with Algorithms 1 and 2. Figure 4a illustrates the evolution of a super arm, i.e., an optimal set of energy packages purchased for a set of RRHs from the day-/hour-ahead market, over different trials at the fifth time slot. In each trial, a new combination of energy packages is explored on the basis of the individual and the averaged accumulated rewards obtained from the current and the previous trials, as per Algorithm 1. Figure 4b demonstrates the optimal super arm that was selected at the t-th time slot to be exploited as the starting point at the (t + 1)-th time slot, as per Algorithm 2. It can be observed that, from the 15th time slot onwards, nearly identical super arms associated with the highest rewards for the RRHs are selected, which demonstrates the convergence of the proposed algorithm for the given simulation.

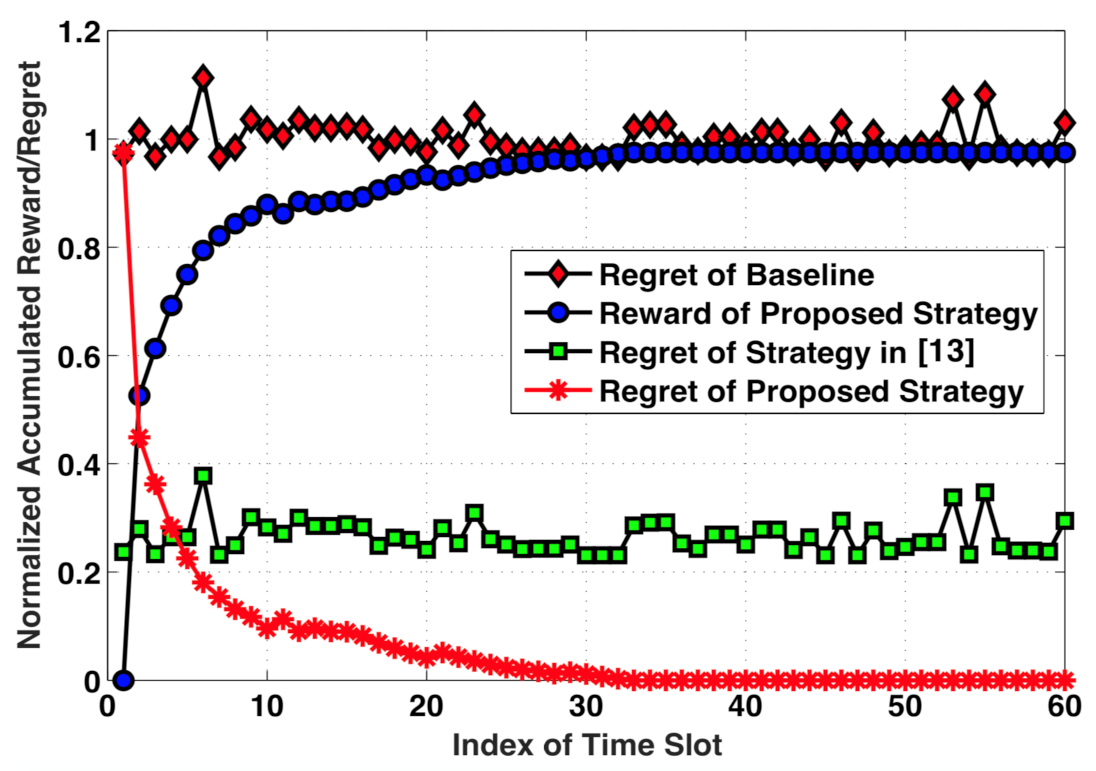

The normalized accumulated reward and regret at each time slot for different strategies are shown in Figure 5. The normalized accumulated reward at time slot t, denoted by , is calculated by averaging the difference between the total energy cost at the t-th time slot and that at the initial time slot over all frames, i.e., . In contrast, the regret of the strategies is defined as the difference in the accumulated reward between always playing the optimal super arm and playing the super arm according to the proposed strategy at the t-th time slot, i.e., , where is the accumulated reward after the convergence. Figure 5 confirms that a significant performance gap exists between the proposed strategy and both the baseline scheme and the design in [13]. One can conclude that, although the regret of the proposed strategy is at its worst at the initial time slot, it declines rapidly with the continuous learning process until convergence, because the proposed strategy learns from the past captured behavior of cooperative energy trading and adapts to the dynamic wireless environment.
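The reward and regret definitions above can be sketched numerically as follows. The sign convention (reward as the cost reduction relative to the initial time slot), the omission of any normalization, and the use of the last observed reward as the converged reference are assumptions made for illustration only. The function names are hypothetical.

```python
def accumulated_reward(costs_t, costs_initial):
    """Average, over all frames, of the cost reduction relative to the initial slot.

    costs_t[f] and costs_initial[f] are the total energy costs in frame f of the
    t-th and the initial time slot, respectively.
    """
    if len(costs_t) != len(costs_initial):
        raise ValueError("both slots must contain the same number of frames")
    diffs = [c0 - ct for ct, c0 in zip(costs_t, costs_initial)]
    return sum(diffs) / len(diffs)


def regret_per_slot(rewards, converged_reward=None):
    """Regret at each slot: converged (assumed optimal) reward minus achieved reward."""
    if converged_reward is None:
        # Assumption: the last slot is taken as the converged reference.
        converged_reward = rewards[-1]
    return [converged_reward - r for r in rewards]
```

Under these assumptions the regret is largest at the initial time slot, where the reward is zero by construction, and it falls to zero once the selected super arm stops changing.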