Traffic Signal Control Using Hybrid Action Space Deep Reinforcement Learning

Abstract

:1. Introduction

2. Literature Review

2.1. Action Space Definitions

2.2. Agent Architecture Specifications

3. Background and Preliminaries

RL for Hybrid Action Space

4. Parameterized Deep Reinforcement Learning Approach for TSC

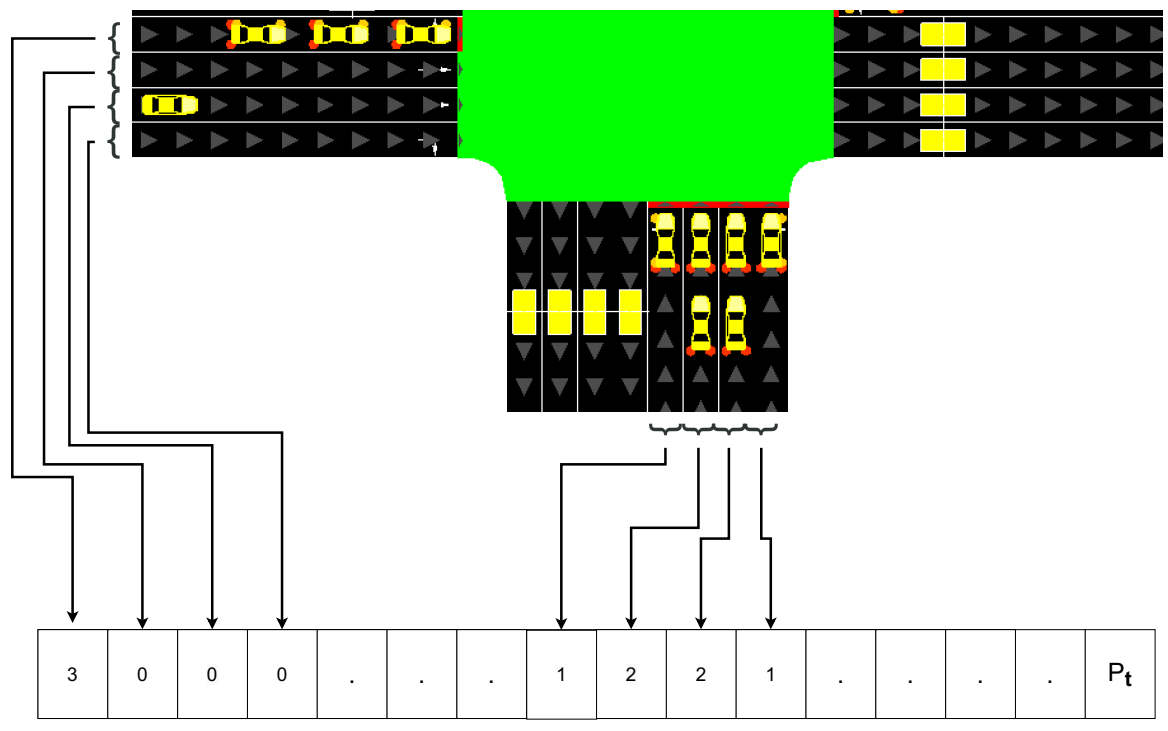

4.1. State Space

4.2. Reward Function

4.3. Action Space

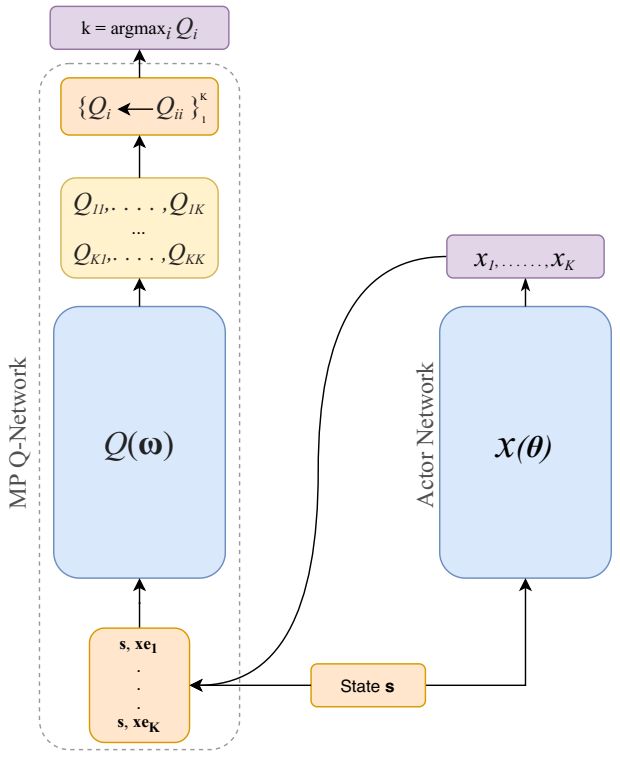

4.4. Agent’s Architecture

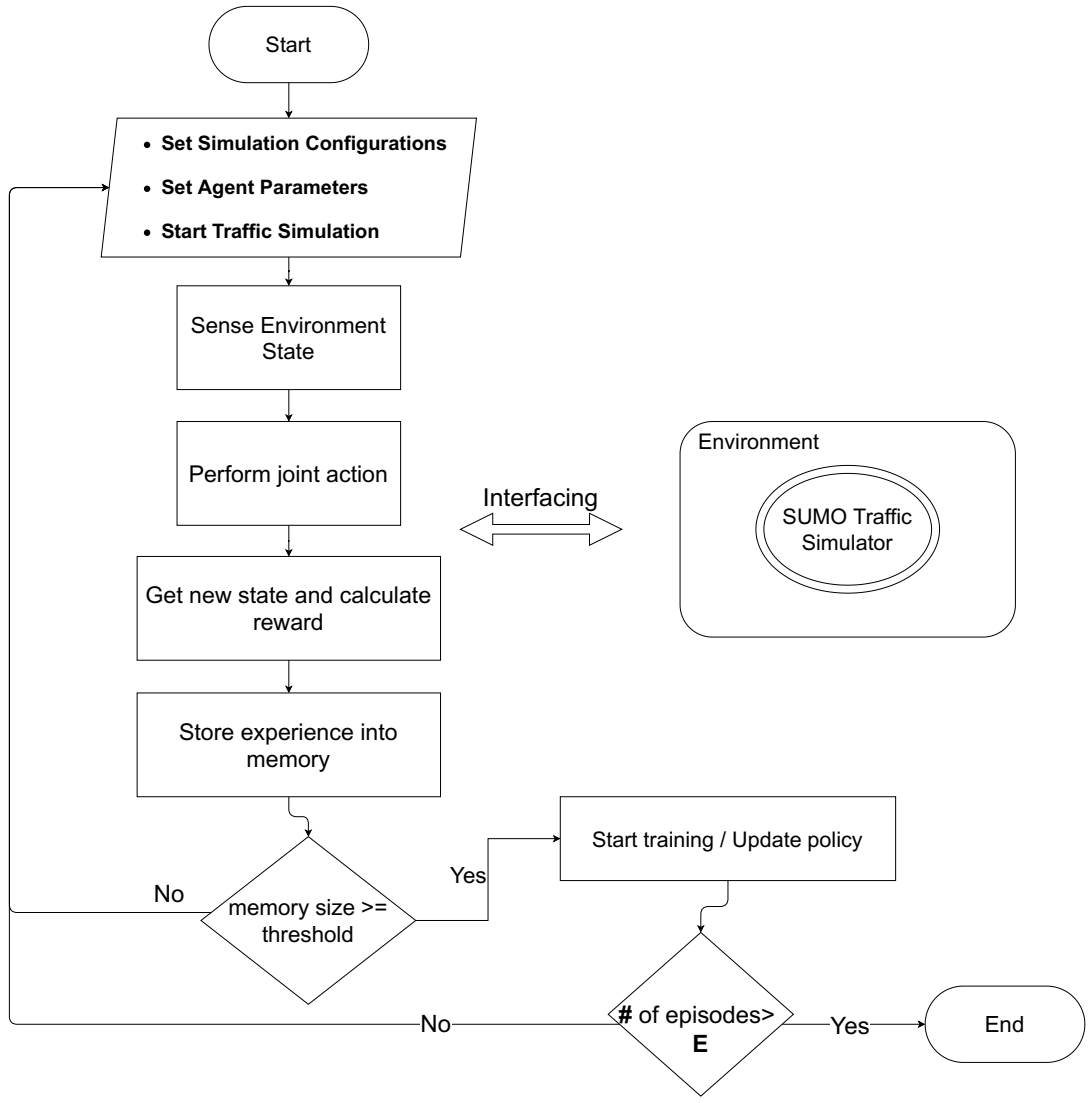

| Algorithm 1 Traffic Signal Control Using Parameterized Deep RL. |

|

5. Experiments

5.1. Experiment Setup

5.2. Parameters and Training Settings

5.3. Performance Evaluation Metrics

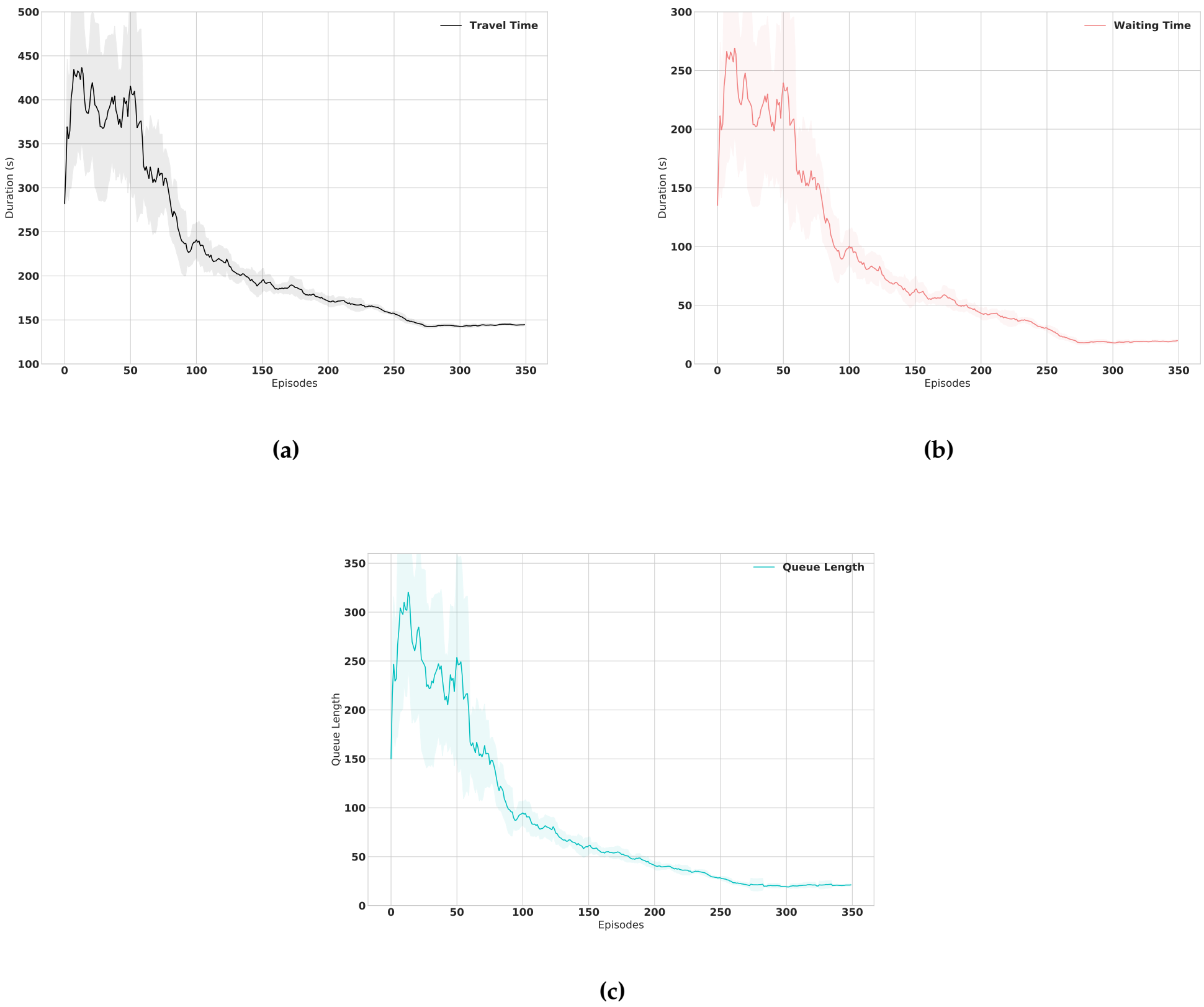

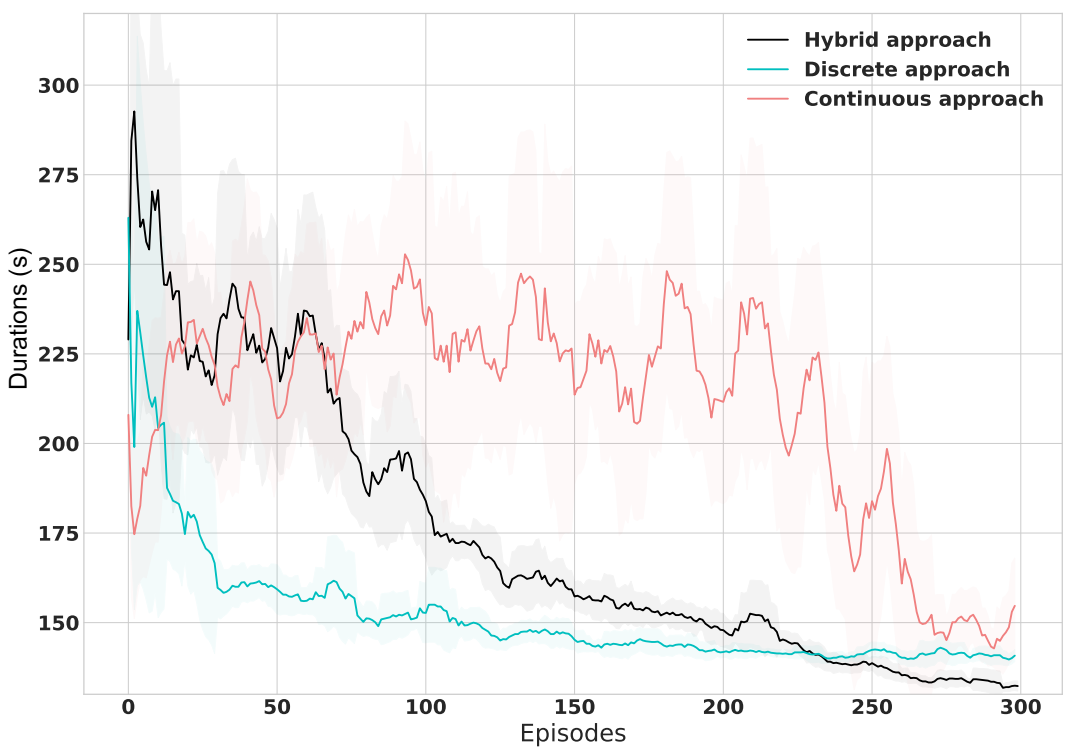

5.3.1. Average Travel Time (ATT).

5.3.2. Average Waiting Time (AWT).

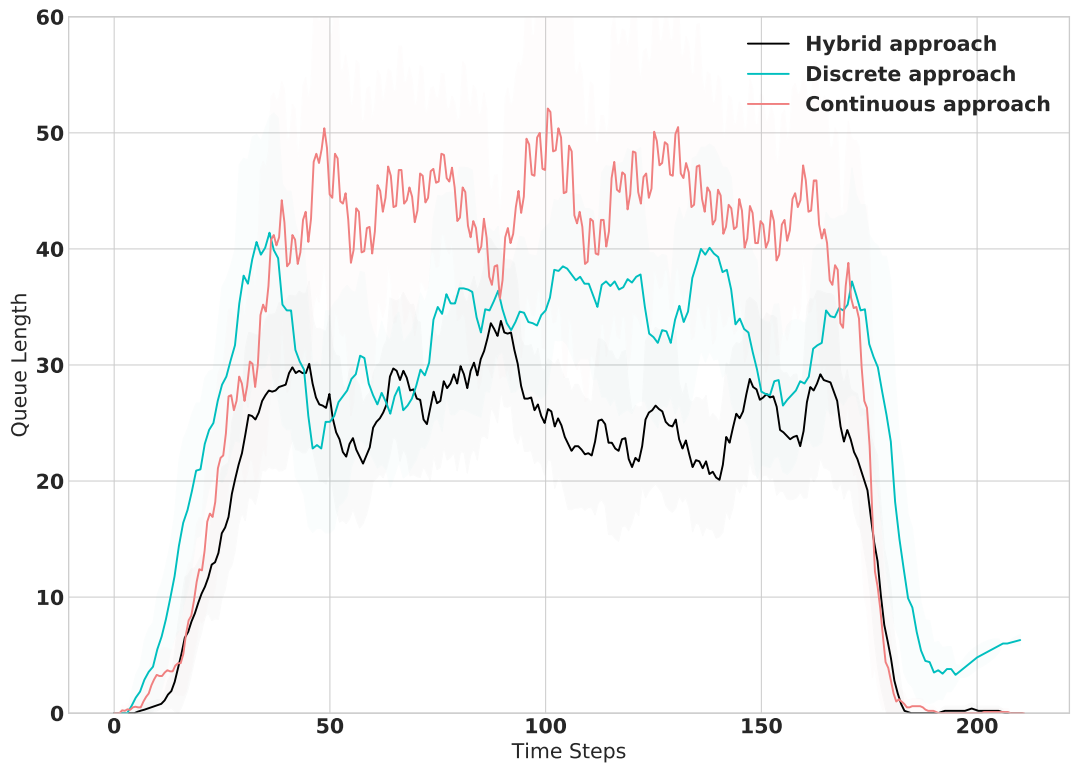

5.3.3. Queue Length (QL).

5.4. Benchmarks

5.4.1. Fixed Time Approach

5.4.2. Discrete Approach

5.4.3. Continuous Approach

5.5. Results and Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- INRIX Scoreboard 2019. PRESS RELEASES. Available online: https://inrix.com/press-releases/2019-traffic-scorecard-uk/ (accessed on 9 February 2021).

- Haydari, A.; Yilmaz, Y. Deep Reinforcement Learning for Intelligent Transportation Systems: A Survey. arXiv 2020, arXiv:2005.00935. [Google Scholar] [CrossRef]

- Lin, Y.; Dai, X.; Li, L.; Wang, F.Y. An Efficient Deep Reinforcement Learning Model for Urban Traffic Control. arXiv 2018, arXiv:1808.01876. [Google Scholar]

- Gregurić, M.; Vujić, M.; Alexopoulos, C.; Miletić, M. Application of Deep Reinforcement Learning in Traffic Signal Control: An Overview and Impact of Open Traffic Data. Appl. Sci. 2020, 10, 4011. [Google Scholar] [CrossRef]

- Genders, W.; Razavi, S. Using a Deep Reinforcement Learning Agent for Traffic Signal Control. arXiv 2016, arXiv:1611.01142. [Google Scholar]

- Casas, N. Deep Deterministic Policy Gradient for Urban Traffic Light Control. arXiv 2017, arXiv:1703.09035. [Google Scholar]

- Gao, J.; Shen, Y.; Liu, J.; Ito, M.; Shiratori, N. Adaptive Traffic Signal Control: Deep Reinforcement Learning Algorithm with Experience Replay and Target Network. arXiv 2017, arXiv:1705.02755. [Google Scholar]

- Guo, M.; Wang, P.; Chan, C.Y.; Askary, S. A Reinforcement Learning Approach for Intelligent Traffic Signal Control at Urban Intersections. arXiv 2019, arXiv:1905.07698. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

- van Hasselt, H.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-learning. arXiv 2015, arXiv:1509.06461. [Google Scholar]

- Wang, Z.; Schaul, T.; Hessel, M.; van Hasselt, H.; Lanctot, M.; de Freitas, N. Dueling Network Architectures for Deep Reinforcement Learning. arXiv 2016, arXiv:1511.06581. [Google Scholar]

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2019, arXiv:1509.02971. [Google Scholar]

- Gu, S.; Lillicrap, T.; Sutskever, I.; Levine, S. Continuous Deep Q-Learning with Model-based Acceleration. arXiv 2016, arXiv:1603.00748. [Google Scholar]

- Masson, W.; Ranchod, P.; Konidaris, G. Reinforcement Learning with Parameterized Actions. arXiv 2015, arXiv:1509.01644. [Google Scholar]

- Xiong, J.; Wang, Q.; Yang, Z.; Sun, P.; Han, L.; Zheng, Y.; Fu, H.; Zhang, T.; Liu, J.; Liu, H. Parametrized Deep Q-Networks Learning: Reinforcement Learning with Discrete-Continuous Hybrid Action Space. arXiv 2018, arXiv:1810.06394. [Google Scholar]

- Wei, H.; Zheng, G.; Yao, H.; Li, Z. IntelliLight: A Reinforcement Learning Approach for Intelligent Traffic Light Control. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; Association for Computing Machinery: New York, NY, USA, 2018; pp. 2496–2505. [Google Scholar] [CrossRef]

- Liu, X.Y.; Ding, Z.; Borst, S.; Walid, A. Deep Reinforcement Learning for Intelligent Transportation Systems. arXiv 2018, arXiv:1812.00979. [Google Scholar]

- Yu, C.; Liu, J.; Nemati, S. Reinforcement Learning in Healthcare: A Survey. arXiv 2020, arXiv:1908.08796. [Google Scholar]

- Wu, J.; Wei, Z.; Li, W.; Wang, Y.; Li, Y.; Sauer, D.U. Battery Thermal- and Health-Constrained Energy Management for Hybrid Electric Bus Based on Soft Actor-Critic DRL Algorithm. IEEE Trans. Ind. Inform. 2021, 17, 3751–3761. [Google Scholar] [CrossRef]

- Gong, Y.; Abdel-Aty, M.; Cai, Q.; Rahman, M.S. Decentralized network level adaptive signal control by multi-agent deep reinforcement learning. Transp. Res. Interdiscip. Perspect. 2019, 1, 100020. [Google Scholar] [CrossRef]

- Wei, H.; Chen, C.; Zheng, G.; Wu, K.; Gayah, V.; Xu, K.; Li, Z. PressLight: Learning Max Pressure Control to Coordinate Traffic Signals in Arterial Network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1290–1298. [Google Scholar] [CrossRef]

- Genders, W. Deep Reinforcement Learning Adaptive Traffic Signal Control. Ph.D. Thesis, McMaster University, Burlington, ON, Canada, 2018. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef]

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.P.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. arXiv 2016, arXiv:1602.01783. [Google Scholar]

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor. arXiv 2018, arXiv:1801.01290. [Google Scholar]

- Hausknecht, M.; Stone, P. Deep Reinforcement Learning in Parameterized Action Space. arXiv 2016, arXiv:1511.04143. [Google Scholar]

- Bester, C.J.; James, S.D.; Konidaris, G.D. Multi-Pass Q-Networks for Deep Reinforcement Learning with Parameterised Action Spaces. arXiv 2019, arXiv:1905.04388. [Google Scholar]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; The MIT Press: London, UK, 2018; p. 47. [Google Scholar]

- Behrisch, M.; Bieker, L.; Erdmann, J.; Krajzewicz, D. SUMO - Simulation of Urban MObility: An overview. In Proceedings of the SIMUL 2011, The Third International Conference on Advances in System Simulation, Barcelona, Spain, 23–29 October 2011; pp. 63–68. [Google Scholar]

- Vidali, A.; Crociani, L.; Vizzari, G.; Bandini, S. A Deep Reinforcement Learning Approach to Adaptive Traffic Lights Management. Available online: http://ceur-ws.org/Vol-2404/paper07.pdf (accessed on 22 August 2020).

- Speed Limits by Country—Wikipedia, The Free Encyclopedia. Available online: https://en.wikipedia.org/wiki/Speed_limits_by_country (accessed on 22 August 2020).

- Tieleman, T.; Hinton, G. Lecture 6.5-Rmsprop: Divide the Gradient by a Running Average of Its Recent Magnitude. Available online: http://www.cs.toronto.edu/~hinton/coursera/lecture6/lec6.pdf (accessed on 24 March 2021).

- Gordon, R.; Tighe, W. Traffic Control Systems Handbook; FEDERAL HIGHWAY ADMINISTRATION: Washington, DC, USA, 2005.

- GEUS, S.D. Utilizing Available Data to Warm Start Online Reinforcement Learning. Master’s Thesis, Vrije Universiteit Amsterdam, Amsterdam, The Netherlands, 2020. [Google Scholar]

| Distribution Type | Configuration | Generated Flow (Vehicles) | Start Time (s) | End Time (s) |

|---|---|---|---|---|

| Weibull Dist | C1 C2 C3 | 1500 4000 C1-C2-C1 | 0 s 0 s 0 s | 3800 s 3800 s 11,000 s |

| Normal Dist | C4 C5 C6 | 1500 4000 C4-C5-C4 | 0 s 0 s 0 s | 3800 s 3800 s 11,000 s |

| Parameter | Description | Value |

|---|---|---|

| N | Number of training episodes | 301 |

| M | Replay Memory | 20,000 |

| Memory Training threshold | 128 | |

| b | Mini-batch size | 64 |

| Actor Learning rate | ||

| ParamActor Learning rate | ||

| Gamma factor | ||

| minimum value of epsilon | ||

| Number of epsilon episodes | 270 | |

| Yellow phase duration | 3 s |

| C1 | C2 | C3 | C4 | C5 | C6 | |

|---|---|---|---|---|---|---|

| Fixed-Time | 164.94 | 254.01 | 217.10 | 165.45 | 255.64 | 223.92 |

| Discrete approach | 142.07 | 149.5 | 147.28 | 139.10 | 147.86 | 145.97 |

| Continuous approach | 148.46 | 167.02 | 160.95 | 137.03 | 160.63 | 154.25 |

| Hybrid approach | 133.61 | 141.05 | 138.33 | 130.59 | 138.98 | 136.89 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bouktif, S.; Cheniki, A.; Ouni, A. Traffic Signal Control Using Hybrid Action Space Deep Reinforcement Learning. Sensors 2021, 21, 2302. https://doi.org/10.3390/s21072302

Bouktif S, Cheniki A, Ouni A. Traffic Signal Control Using Hybrid Action Space Deep Reinforcement Learning. Sensors. 2021; 21(7):2302. https://doi.org/10.3390/s21072302

Chicago/Turabian StyleBouktif, Salah, Abderraouf Cheniki, and Ali Ouni. 2021. "Traffic Signal Control Using Hybrid Action Space Deep Reinforcement Learning" Sensors 21, no. 7: 2302. https://doi.org/10.3390/s21072302

APA StyleBouktif, S., Cheniki, A., & Ouni, A. (2021). Traffic Signal Control Using Hybrid Action Space Deep Reinforcement Learning. Sensors, 21(7), 2302. https://doi.org/10.3390/s21072302