Abstract

In this paper, we present an infrared-microscopy-based approach for locating structures in integrated circuits, in order to automate their secure characterization. An infrared sensor is the key device for internal integrated circuit inspection. Two main issues are addressed. The first concerns the scan of integrated circuits using a motorized optical system composed of an uncooled infrared camera combined with an optical microscope. An automated system is required to focus on the conductive tracks under the silicon layer. This is solved by an autofocus system that analyzes the infrared images through a discrete polynomial image transform, which allows accurate feature detection and yields a focus metric robust against the specific image degradations inherent to the acquisition context. The second issue concerns the location of the structures to be characterized on the conductive tracks. To deal with a large amount of redundancy and noise, a graph-matching method is presented: discriminating graph labels are developed to overcome the redundancy, while a flexible assignment optimizer solves the inexact matching arising from noise in the graphs. The resulting automated location system brings reproducibility to the secure characterization of integrated systems, in addition to gains in accuracy and speed.

1. Introduction

Integrated Circuits (IC) (a glossary is present at the end of this paper) are electronic components used in many fields such as wearable technologies and IoT (Internet of Things). The body of these components is made of highly pure silicon, doped with several materials to create a functional network of electronic parts. During the design process, IC may be inspected using infrared cameras. Silicon has the property of being transparent to infrared light. Specialized cameras embed an indium gallium arsenide (InGaAs) sensor that operates in the Short-Wave Infrared (SWIR) wavelength range from 900 nm to 1700 nm, making it possible to see through the silicon substrates of IC. This kind of camera is widely used in the semiconductor industry as it allows, among other things, defect detection. In this study, we used such an infrared vision system for the particular purpose of characterizing secure IC through physical hacking attempts.

These IC must be submitted to visual inspections prior to any characterization to identify potential critical parts and locate them. The characterization process then utilizes these locations, taking them as targets for physical hacks. This paper proposes a cartography system to automate spatial positioning on the structures of interest inside an IC, thus overcoming several limitations of the manual way. The proposed system is divided into two complementary sides: an autofocus system to solve the Z-dimension of the cartography and a graph-based location system to solve the XY-dimensions. The overall framework deals with critical imaging constraints implied by the experimental context, such as illumination problems and speckle noise.

1.1. Industrial Context

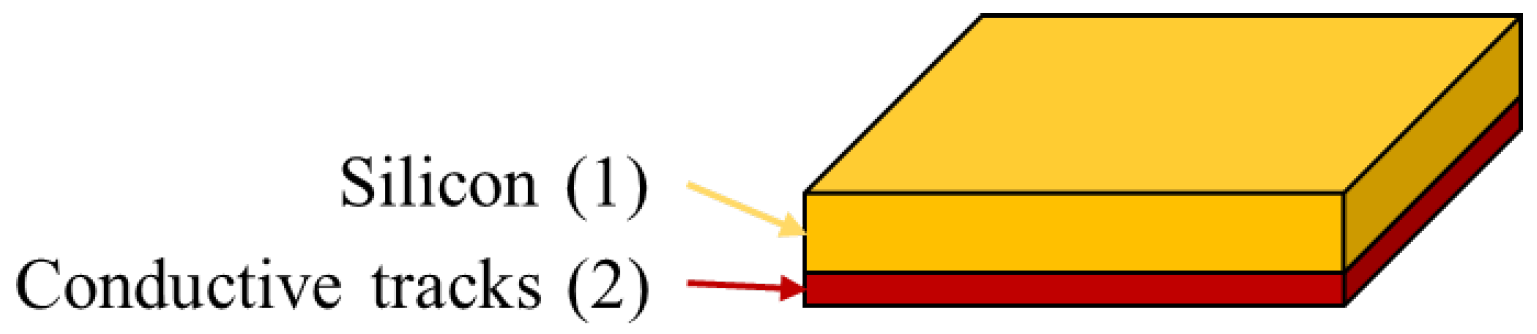

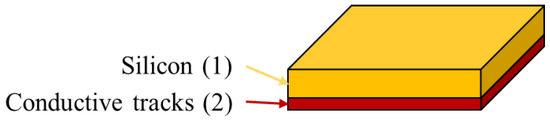

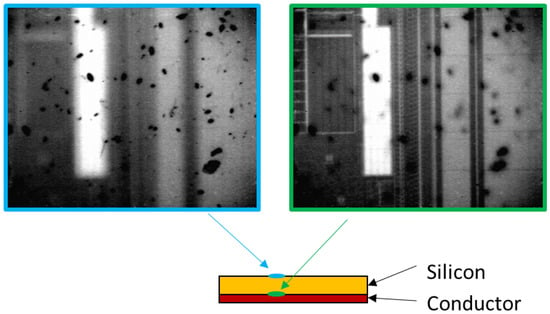

An IC is made of several layers of different materials. In our study, we only consider the two top layers which are: (1) the silicon, (2) a conductive material that forms the conductive tracks and constitutes the heart of the IC (Figure 1). The surface of the conductive material (2) corresponds to the Surface Of Interest (SOI).

Figure 1.

Concept mapping of different materials composing our Integrated Circuit (IC). The conductive tracks (2) are viewed through the silicon layer (1), since the remaining material is opaque to infrared light.

Depending on their targeted application, some IC need to be secured to ensure the privacy of users, and their security needs to be validated. The tasks to characterize a secure IC include the study of the IC’s behavior following a physical disruption. Such a disruption can be obtained by targeting a specific location on the IC that corresponds to internal structures such as memories, CPUs or I/O gates, visible on the SOI.

In this study, we addressed the case of local disruptions, which require a high targeting precision.

Any weakness detected during a secure characterization must be addressed by countermeasures and validated by a second characterization. Validation is the key point that raises the question of reproducibility. The conditions of the analysis must be controlled to ensure its reliability, and to exactly reproduce the same conditions for each characterization step. However, mechanical adjustments for the characterization require precise and careful handling, which makes reproducibility difficult. Usually, every security characterization is initiated manually, whereas the local disruptions must all be conducted with identical setups.

1.2. Vision Context

Targeting these disruptions requires nanoscale precision to accurately target structures on the SOI. For that purpose, we used an optical system composed of an infrared camera coupled to an optical microscope to inspect the SOI under high magnification. The camera was SWIR-sensitive, which allowed us to see the metallic materials through the silicon layer. For this vision technology, some third-party equipment was necessary to emit SWIR light, reflected by the scene and caught by the InGaAs sensor. We chose not to use some thermally insulated equipment, even if it improves image quality.

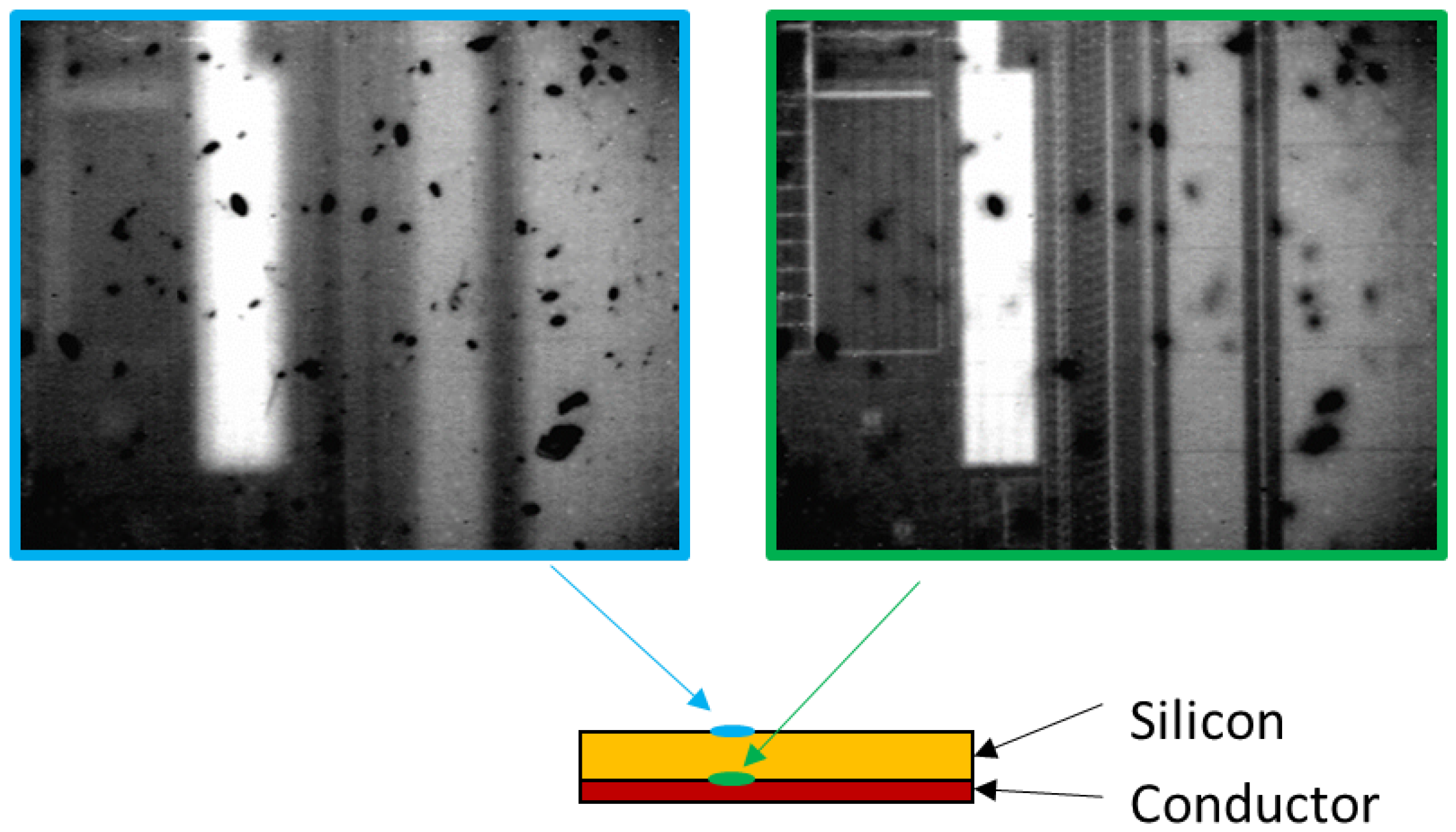

On the one hand, this system gave the necessary precision (±1 μm resolution) to find the target structures for the secure characterization while keeping the IC functional (non-invasive vision). On the other hand, the result was poor image quality: low-contrast, gray-level and noisy images. Speckle noise is inherent to infrared systems; here, it was heavily worsened by optical magnification. Moreover, this sensor, which is not thermally insulated, is subject to the thermal interference of the environment, which disturbs the vision process. This thermal interference comes from the third-party equipment that emits the SWIR light, which is reflected by the conductive materials and thus triggers the InGaAs sensor. Additionally, the image quality depends on the reflectance of the material, which may vary with the technology used to build the IC. The random presence of artifacts or contaminants on the silicon layer may also impair the view of the SOI. These contaminants locally block the light, casting shadows on the SOI beneath them (see Figure 2).

Figure 2.

View of contaminants on the silicon surface (blue), shadowing the conductive tracks (green).

Today, the targeting process that precedes characterization is carried out manually. First, the focus of the camera is adjusted once and the target region to be characterized is determined on the SOI.

Depending on the characterization results, these two steps will be repeated to validate countermeasures. Let us highlight two points here:

- If the IC is tilted or deformed (even by a micron), then the focus may need to be readjusted at every point during the characterization.

- Re-targeting a structure induces imprecision because the human visual perception can vary significantly.

These points are critical for both accuracy and reproducibility purposes. The use of automated processes to locate structures could solve these issues, but such automated processes must also consider the imaging constraints spotted here.

Self-positioning in a coordinate system implies the overall knowledge of this system. For computer vision, self-positioning can be implemented by scanning the scene to create a coordinate system, whereas locating an object requires visual analysis of this object in the scene. Such a process is split into two sub-processes: 3D scan on one side and pattern recognition on the other side.

In natural scenes, several 3D scanner systems have already been developed, generally using several tools to both take a photo and locate it in space to build an image of the scene [1,2]. The optical system generally used has an infinite depth of field, which enables correctly focused acquisitions that require no pre-focusing step. A large part of the advances takes advantage of merging different specialized acquisition systems to strengthen the accuracy of the overall system [3]. On the shape recognition side, solutions are as numerous as their application fields. Shape recognition is nowadays widely investigated in machine learning approaches [4,5,6,7,8].

However, conventional scanning and pattern-recognition approaches are difficult to apply in the context of IC, as the vision systems lead to great constraints. Several vision systems were used for IC inspection, for example X-ray, thermography, Scanning Electron Microscope (SEM), surface acoustic waves or scanning acoustic microscopy [9,10]. Each system has its own pros and cons in this field, as reviewed in [11], but only non-destructive systems that allow internal inspection are applicable in our study. The closest study [12] proposed to use an SEM to produce high-fidelity images, which permits robust pattern recognition [13] as the obtained images are of far better quality than ours. However, this vision system is incompatible with the characterization field as it is destructive. As far as we know and according to our constraints, no image-based method has been proposed to reliably scan the internals of an IC and locate complex structures.

The aim of this paper is to propose a method for overcoming the image quality constraints and automating the XYZ scanning system to address the issue of reproducibility in IC characterization (cf. Section 1.1). In that context, the use of a single uncooled InfraRed (IR) camera with optical magnifiers and without any other third-party material makes our system innovative and solves a great issue.

For that purpose, we firstly present a specialized autofocus system that can efficiently scan the conductive tracks of an IC (Section 2). Secondly, we discuss a graph-matching-based method to automatically locate the structures of interest to be characterized (Section 3). Section 4 presents the resulting framework, and shows that the system we built is relevant and that it is compatible with infrared microscopy for IC.

2. Scanning System for Viewing Integrated Circuits

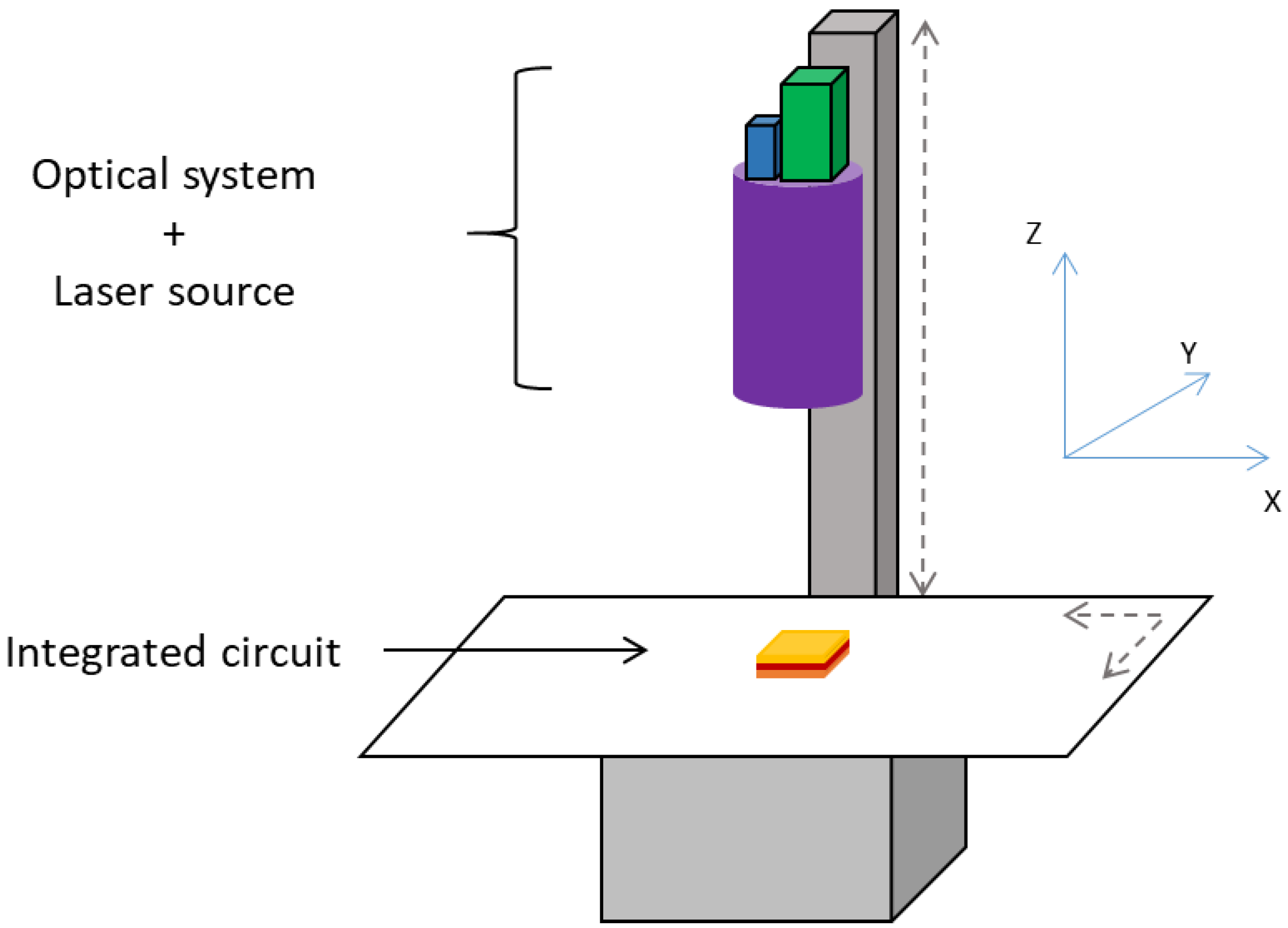

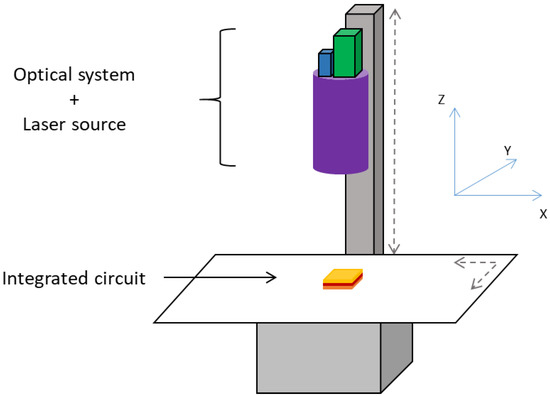

For characterization, we placed an IC in a bench as illustrated in Figure 3. Let $(X, Y, Z)$ be the coordinate system where $X$ and $Y$ correspond to the orthogonal axes of the motors in the horizontal plane, and $Z$ is the distance from the optical system to the plane. The optical system orientation is fixed vertically along $Z$.

Figure 3.

Representation of a bench used for laser-injection-based IC characterization. Each 3D scanning axis is motorized in the 3D coordinate system. The vertical column represents the Z axis, along which the optical system moves thanks to a motor. The horizontal tray, where the IC is placed, is motorized according to the XY plane.

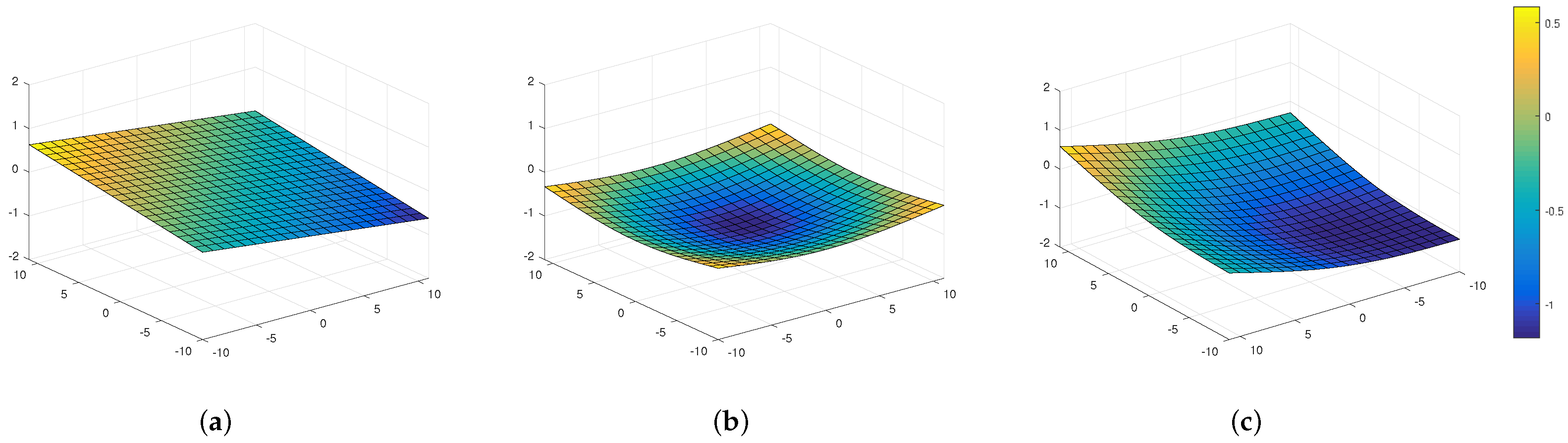

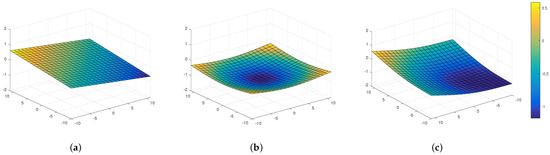

In the context of infrared microscopy, the scan process consists of an iterative stepping procedure where each step follows an exposure time of the optical system to acquire the best possible image. After the iterative stepping procedure, the images are brought together to create a global image of the scanned object. As the optical system is not precise enough to distinguish the height differences between the internal IC structures, the SOI is considered as a flat surface. During manual prepositioning, the IC may end up tilted due to an imperfect adjustment. Moreover, because of design constraints, the SOI can appear curved. Figure 4 shows examples of possible topologies.

Figure 4.

Representation of the surface of a tilted IC (a), a curved IC (b) and both (c).

As in any microscopy system, the more magnified the light, the shorter the focal distance and the shorter the field depth, hence the more important the precision of the focus. Therefore, the focus adjustment is of main interest for an accurate scan under high magnification conditions. In addition, this focus adjustment has to be computed on the fly during the scan process. Hence, we propose to compute the Z focus adjustment with an AutoFocus (AF) system.

2.1. Autofocus Methods

The AF mechanism is a deterministic algorithm used to compute the lens position such that the system is focused on the scene/object of interest. If focused, the image of the scene is sharpest. Two types of AF approach exist—active and passive [14]. An active approach depends on an additional system measuring the distance from the lens to the scene. Knowing the optics-to-object distance and the focal length of the system, the correct lens position is estimated to obtain a focused image [15,16,17,18]. As we do not have such a system, we consider passive approaches, which rely on analyzing the images from the optical system to determine the best distance to the scene. Images should be evaluated as the Human Visual System (HVS) does. Then, the main difficulty is the interpretation of human subjectivity through algorithms that follow objective rules and criteria [19].
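As a minimal sketch of the passive approach described above, the following Python code scores each image of a Z sweep with a focus metric and keeps the position with the highest score. The metric used here (mean squared intensity difference between neighboring pixels) is only an illustrative stand-in and is not one of the metrics discussed in this paper; the names `gradient_energy` and `best_focus` are hypothetical.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # grayscale image stored as rows of pixel intensities


def gradient_energy(image: Image) -> float:
    """Illustrative focus metric: mean squared difference between neighbors."""
    total, count = 0.0, 0
    for r in range(len(image) - 1):
        for c in range(len(image[0]) - 1):
            dx = image[r][c + 1] - image[r][c]  # horizontal difference
            dy = image[r + 1][c] - image[r][c]  # vertical difference
            total += dx * dx + dy * dy
            count += 1
    return total / count if count else 0.0


def best_focus(z_positions: Sequence[float],
               images: Sequence[Image],
               metric: Callable[[Image], float] = gradient_energy) -> float:
    """Return the Z position whose image maximizes the focus metric."""
    scores = [metric(image) for image in images]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return z_positions[best_index]
```

Any other focus metric can be passed through the `metric` argument, which keeps the sweep logic independent of how sharpness is defined.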

Over the years, several AF methods have been proposed in different domains, each one adapted to its specific context such as images of natural scenes, low-contrast images, microscopy, digital holography and so on [20,21,22]. In each case, the concept of sharpness has to be defined to build an objective function. This function, or focus metric, can rely on statistics of the image data in the spatial, frequency and time-frequency domains. Under natural light microscopy, [23] proposed a method to measure the blurriness of calibration charts based on the Bayes-Spectral-Entropy of Discrete Cosine transformed images. The Discrete Cosine Transform was also used in [24,25] to measure the sharpness level, distinguishing high frequencies from medium and low frequencies. A time-frequency analysis was proposed in [26] through the Wavelet Packet Transform, used for its ability to bring out the high frequencies that characterize sharpness in their context. A comparison between sixteen AF methods for microscopy was made in [27], with histogram-, intensity-, statistic-, derivative- and transform-based methods. Each of these methods was proposed to match a specific context and overcome its inherent constraints. These constraints do not match ours, for which a first approach was proposed in [28] based on wavelet decomposition. However, this approach shows weaknesses when the acquisition context gets worse, as it leads to noisier graphs. Image pre-processing could be a solution to this issue [29], but it is time-consuming and, therefore, not a preferred solution in our industrial context. We will see in the next section how we propose to circumvent this issue of noisy graphs.

2.2. Specialized Autofocus for Viewing Integrated Circuits

In our context of focusing on an IC, infrared microscopy raises several constraints. The optical system produces images with low contrast where noise increases with magnification, and where random black spots are visible on the SOI (cf. Section 1.2). These two points make image analysis difficult and make usual AF methods ineffective.

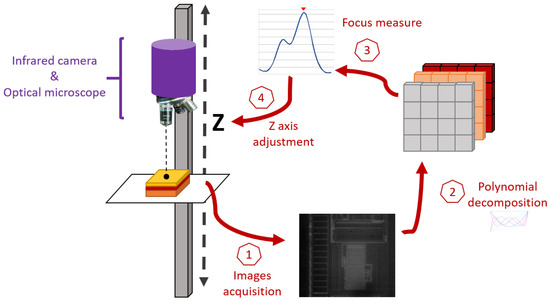

To deal with these specific constraints, an AF was proposed in [30] based on special IC viewing features, analyzed in the time-frequency domain. The Focus Metric based on POlynomial Decomposition (FMPOD) is proposed to evaluate the focus level of images acquired for a varying distance of the optical system from the SOI.

More than conventional multi-resolution analysis such as wavelet transforms, the polynomial transform is a flexible tool used to extract oriented features for a given frequency and scale. Such a transform consists in projecting an image onto a complete basis of orthogonal polynomials. A polynomial basis is defined on the Hilbert vector space equipped with a scalar product such that:

$$\langle F, G \rangle = \iint_{\Omega} F(x, y)\, G(x, y)\, \omega(x, y)\, dx\, dy$$

where $\langle F, G \rangle$ represents the scalar product of functions $F$ and $G$, and $\omega$ is a weighting function with $(x, y) \in \Omega$. The basis is composed of bivariate polynomials:

$$P_{d_1, d_2}(x, y) = \sum_{i=0}^{d_1} \sum_{j=0}^{d_2} a_{i,j}\, x^{i} y^{j}$$

where $d_1$ and $d_2$ are the degrees of variables $x$ and $y$, respectively, and $a_{i,j}$ is the group of real coefficients of the polynomial. To ensure the orthogonality of the basis, its polynomials meet the scalar product condition:

$$\langle P_{d_1, d_2}, P_{d_1', d_2'} \rangle = 0 \quad \text{for } (d_1, d_2) \neq (d_1', d_2')$$

This weighting function defines the basis type (e.g., Legendre, Hermite or Laguerre). Such a basis is noted , where its degree and such that:

As the projection of an image I on is modeled by the scalar product of this image by the base, it is essential to determine for the decomposition expectation and analysis.

Introduced in [31], polynomial image decomposition was used for its ability to extract textural features from images [32,33,34,35,36,37]. This decomposition is highly customizable by the choice of the basis type, its degree, and the discrete domain on which the projection is calculated.

Indeed, the whole polynomial transform process is discretized according to the multi-resolution analysis scheme [38]. The discrete process to transform a 2D function U defined on a domain of size is as follows:

- Given a resolution factor L, we define sub-domains of such that:with the sub-domain indices, whose size is:and such that .

- For each , the projection coefficients are given by:where is the restriction of U in the sub-domain and the polynomials defined in given sub-domain,

- The resulting multi-resolution structure is designed by grouping the projection coefficients according to polynomial degree from the basis used for the projection.

In [30], the use of a Legendre polynomial basis for the FMPOD is justified by its ability to bring out features of interest in infrared images of IC. The authors propose a focus metric by analyzing the directional features highlighted through polynomial decomposition, features considered as relevant to describe a focused image. They found that specific polynomial combinations from the basis allow the analysis of different directional features. The proposed focus metric is computed from the standard deviation of the horizontal and vertical coefficients of this decomposition. It gives robustness to the focus algorithm regardless of the scale, contrast and lighting, even in the presence of several image degradations.
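The idea of a focus metric built from directional polynomial coefficients can be sketched as follows. This is not the FMPOD of [30]: the code assumes a discrete orthonormal basis obtained by Gram-Schmidt on sampled monomials with a uniform weight (a discrete analogue of a Legendre basis), keeps only the two degree-1 polynomials as directional features, and sums the two standard deviations. The block size and all function names are hypothetical choices.

```python
import math
from typing import List

Image = List[List[float]]


def orthonormal_basis_1d(size: int, degree: int) -> List[List[float]]:
    """Gram-Schmidt on monomials sampled on [-1, 1] with a uniform weight."""
    xs = [-1.0 + 2.0 * k / (size - 1) for k in range(size)]
    basis: List[List[float]] = []
    for d in range(degree + 1):
        vec = [x ** d for x in xs]
        for b in basis:  # remove the components along lower-degree polynomials
            dot = sum(v * w for v, w in zip(vec, b))
            vec = [v - dot * w for v, w in zip(vec, b)]
        norm = math.sqrt(sum(v * v for v in vec))
        basis.append([v / norm for v in vec])
    return basis


def block_coefficient(image: Image, top: int, left: int, size: int,
                      basis: List[List[float]], d_row: int, d_col: int) -> float:
    """Project one size x size block onto P_d_row(row) * P_d_col(column)."""
    return sum(image[top + r][left + c] * basis[d_row][r] * basis[d_col][c]
               for r in range(size) for c in range(size))


def std(values: List[float]) -> float:
    """Population standard deviation."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def polynomial_focus_metric(image: Image, block: int = 4) -> float:
    """Sum of the standard deviations of the two degree-1 coefficients."""
    basis = orthonormal_basis_1d(block, 1)
    along_columns, along_rows = [], []
    for top in range(0, len(image) - block + 1, block):
        for left in range(0, len(image[0]) - block + 1, block):
            along_columns.append(
                block_coefficient(image, top, left, block, basis, 0, 1))
            along_rows.append(
                block_coefficient(image, top, left, block, basis, 1, 0))
    return std(along_columns) + std(along_rows)
```

Because the degree-1 polynomials are orthogonal to constants, a uniform image scores close to zero, while sharp transitions that vary from block to block raise the score.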

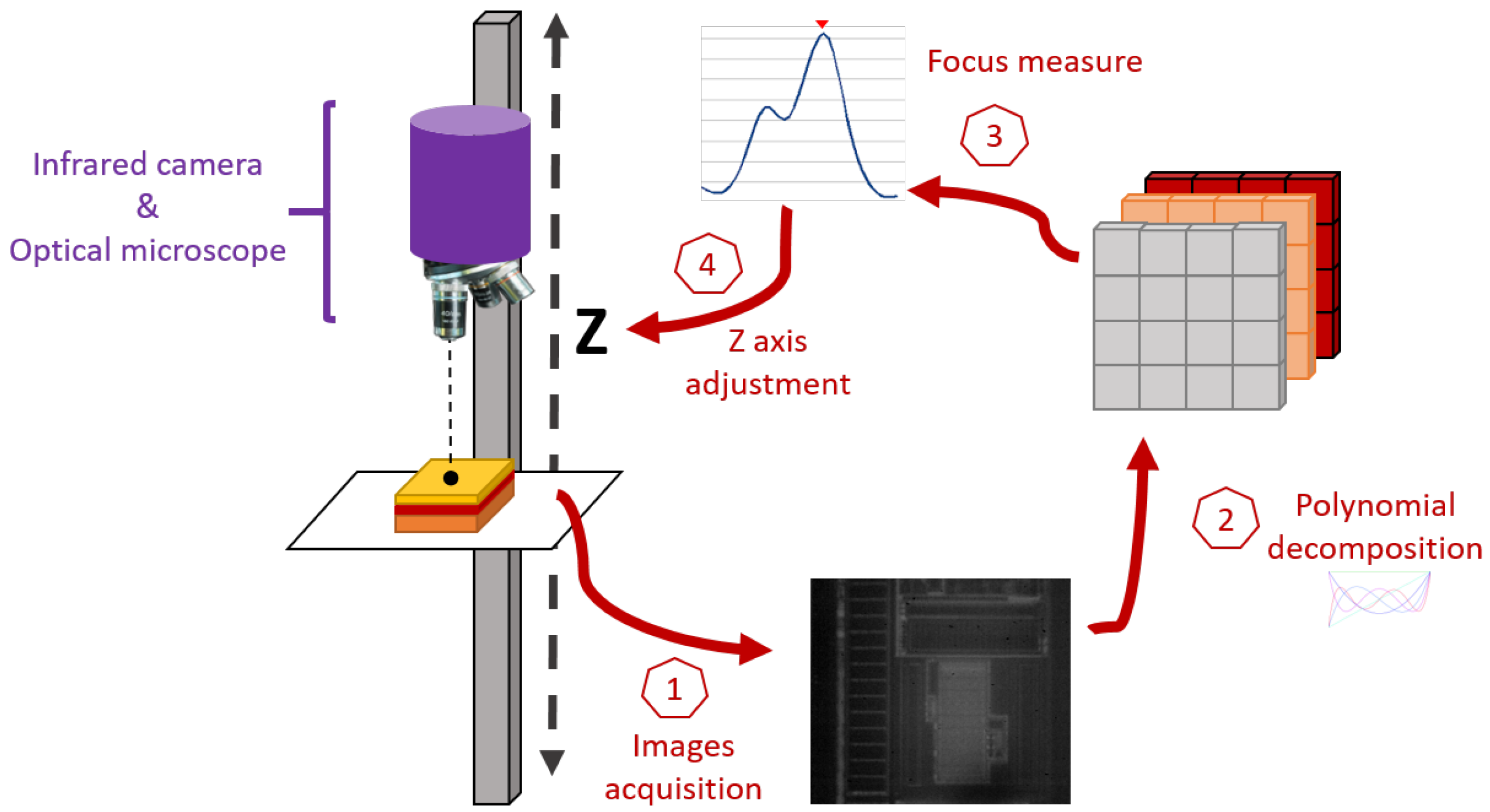

Figure 5 summarizes the autofocus process using FMPOD.

Figure 5.

Representation of the autofocus framework using the Focus Metric based on POlynomial Decomposition (FMPOD): images are acquired at different distances to the IC, decomposed to compute the focus measurement that gives the best focus position.

The FMPOD is robust against impulsive noise and other image degradations that typically occur for infrared IC viewing. Moreover, it is scale-invariant since it stays accurate whatever the magnification. Thus, this autofocus method allows the SOI to be accurately scanned to produce an overall view of it in the IR field. This view enables the automation of the targeting process conducted to disturb an IC structure during a characterization campaign. For that purpose, we investigated a pattern-recognition method to automate the location and the self-positioning of the targeting system on a given structure inside the IC.

3. Pattern Recognition

Today, pattern recognition is widely used with the development of learning-based methods, whose performance is well established. However, such approaches cannot be applied here, as no databases exist about IC structures in our context of infrared viewing. This issue is mostly due to trade secret policies, but also to the fact that, in characterization processes, the studied IC may be prototypes designed with new technologies. Conversely, methods based on signal analysis (e.g., cross-correlation [39]) are efficient for optimal recognition contexts where a template image occurs exactly in a target image, even though linear transforms can be tolerated (such as scale or rotation). In our study, a structure to locate in a target IC is subjected to linear transforms like scale, rotation and intensity variation: from one IC to the next, the material properties may imply lower contrast compared to the reference IC. Moreover, the contaminants described in Section 1.2 could randomly and partially hide structure features (cf. Figure 2). To overcome all these constraints, we investigated another matching method based on graph theory. We studied graphs for pattern recognition in digital imaging, as template retrieval can be translated in terms of graph matching. Interpreting images as graphs allowed us to give a high abstraction level to problems and to break free from linear transforms applied to images. It then allowed us to overcome the context of image acquisition, which may vary substantially. Above all, the key advantage of using graphs comes from the abstraction level of this method: a pattern is identified from a simple scheme of an image, which overrides the need for its imaging view. Indeed, in graph matching, we no longer consider images, as we compare graphs, whatever the data they represent.

3.1. Graph-Based Methods

For decades, graphs have been studied for pattern recognition in digital imaging, as template retrieval in an image can be translated in terms of graph matching. The first and basic approach for graph matching was to consider exact graph matching, that is, the one-to-one correspondence between the nodes of one graph and those of a similar graph modified according to a linear function. In practice, for image analysis, outliers can occur as a consequence of any kind of deformation. An outlier is an unexpected node or edge that has been modified, added or deleted. This case is dealt with by inexact Graph Matching (GM) methods, where the matching is approximated. Therefore, the problem is redefined as finding the best match between two sets of nodes and edges.

Several studies translate graphs into string- or path-like representations using methods such as random walks [40,41,42] or eigenvector decomposition of a graph adjacency matrix [43]. Strongly inspired by the Levenshtein string-edit-distance [44], the Graph Edit Distance (GED) between two paths is defined by the structural modifications needed to obtain one from the other (deleting, adding or modifying nodes and/or edges). Finding the path or sub-path that minimizes the edit cost is equivalent to finding the best matching nodes between graphs. Recently, the GED was reformulated into a Quadratic Assignment Problem (QAP), which is usually used for GM methods [45].
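For readers unfamiliar with the string-edit distance that inspired the GED, here is a short Python sketch of the classical dynamic-programming computation on strings, with unit costs for insertion, deletion and substitution. It illustrates the string case only; the function name is a hypothetical choice and graph-specific edit costs are not modeled.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits turning a into b."""
    previous = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, char_a in enumerate(a, start=1):
        current = [i]  # turning a[:i] into the empty string costs i deletions
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # delete char_a
                               current[j - 1] + 1,       # insert char_b
                               previous[j - 1] + substitution_cost))
        previous = current
    return previous[-1]
```

In the graph setting, the characters are replaced by nodes and edges, and each edit operation carries a cost that depends on the labels involved.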

QAP was designed to solve the assignment of items from two different groups, while minimizing the global cost of each assignment. A general formulation of QAP, still used nowadays for graph matching, was proposed by Lawler [46] and allows the matching problem to be formulated as the maximization of an objective function:

$$X^{*} = \arg\max_{X} \; \operatorname{vec}(X)^{\top} K \operatorname{vec}(X)$$

where $X$ is a binary correspondence matrix, $\operatorname{vec}(X)$ is its column-wise vectorization, and $K$ is a second-order affinity matrix encoding nodes and edges as pairwise similarities. With the aim of achieving better robustness against deformations, several methods were proposed, which consider features of higher orders to build the objective function, and transpose the graph topology into constraints for matching optimization. The problem is that the objective function is not convex, which makes a globally optimal solution hard to achieve. Several methods proposed to relax the problem on the convex space through the Semi-Definite Programming (SDP) method, which ensures an optimal solution [47]. Despite its mathematical consistency, this approach suffers from its high complexity, which is critical for large graphs. Conversely, methods like graduated assignment [46,48,49] try to approximate the solution using gradient ascent. The approximation is guided by a graduated non-convexity procedure that aims to avoid getting stuck in local optima. Another approach proposed in [50], the path-following strategy, alternates convex and concave relaxations to gradually reach an optimal solution. Solutions reached by path-following methods are competitive in terms of precision compared to graduated assignments, and attempts at simplifying their complexity are still ongoing in the doubly-stochastic domain [51].
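To make the quadratic objective concrete, the sketch below evaluates it for a candidate assignment and finds the best one by exhaustive search. Exhaustive search is only feasible for very small graphs and is not one of the relaxation strategies cited above. The affinity matrix K is represented implicitly by a node affinity table (its diagonal) and an edge affinity function (its off-diagonal terms); all names are hypothetical.

```python
from itertools import permutations
from typing import Callable, Sequence, Tuple


def quadratic_score(assignment: Sequence[int],
                    node_affinity: Sequence[Sequence[float]],
                    edge_affinity: Callable[[int, int, int, int], float]) -> float:
    """Objective value for assignment[i] = a (node i of graph 1 -> node a of graph 2).

    Diagonal terms of K are node affinities; off-diagonal terms are
    affinities between the edge (i, j) and the edge (a, b).
    """
    score = 0.0
    for i, a in enumerate(assignment):
        score += node_affinity[i][a]
        for j, b in enumerate(assignment):
            if i != j:
                score += edge_affinity(i, j, a, b)
    return score


def brute_force_match(n1: int, n2: int,
                      node_affinity: Sequence[Sequence[float]],
                      edge_affinity: Callable[[int, int, int, int], float]
                      ) -> Tuple[int, ...]:
    """Try every injective mapping of n1 nodes into n2 nodes (requires n1 <= n2)."""
    return max(permutations(range(n2), n1),
               key=lambda p: quadratic_score(p, node_affinity, edge_affinity))
```

The number of candidate mappings grows factorially with the graph size, which is why the approximate and relaxed solvers discussed in this section are needed in practice.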

Other graph-matching methods avoid the quadratic form of the assignment problem by elevating the graph formulation to a higher subjectivity level. For example, this is the case of methods based on hypergraphs such as described in [52,53], formulated by similarity tensors to create high-order features. In this way, the assignment is solved with linear approximation functions. A recent method [54] was proposed to compute contextual similarities between pairwise nodes and edges using a remastered random walk. This gives high-order features without dealing with hypergraphs, but still linearly solves the assignment. These higher-order methods avoid the quadratic optimization problem, which is an interesting point. For a larger review of the assignment optimization, the reader can refer to [55,56,57].

3.2. Application to Integrated Circuits

In the case of infrared microscopy applied to IC, the recognition of patterns faces two main difficulties. The first is inherent to the data topology, while the second comes from the imaging system. On the one hand, to answer conception constraints such as space and simplicity, the structures visible on the SOI correspond to rectangular shapes. As these structures can be basically described as orthogonal connected edges, data are highly redundant. From this description, it is easy to build graphs, but discriminating features have to be defined. We propose a solution in Section 3.2.1. On the other hand, our infrared images are poorly contrasted, noisy, and can be degraded by contaminants. Consequently, interpreting these images can lead to wrong graphs with outliers. Graphs containing outliers can be matched by using an inexact graph matching algorithm, which must also be compliant with the sub-graph matching technique to efficiently locate a small template graph in a larger one (approximately 10 nodes against 1000).

Section 3.2.2 describes such a flexible method.

3.2.1. From Integrated-Circuit Image to Labeled Graph

Let $G_1 = (N_1, E_1, L_1)$ and $G_2 = (N_2, E_2, L_2)$ be two labeled graphs (directed or not), where $N$ represents a set of nodes, $E$ a set of edges, and $L$ a set of labels for nodes and edges. $G_1$ and $G_2$ correspond to the template image and the IC image, respectively.

Considering electronic structures as characterized by horizontal and vertical connected components, we pre-process our images by:

- anisotropic-like filtering to reduce the infrared granular noise, following the method proposed in [34], based on the polynomial decomposition of an image and its adaptive reconstruction,

- binarizing using an adaptive Gaussian thresholding,

- skeletonizing based on the distance transform [58] and its ridge extraction.

We decompose the skeleton thus obtained into a sum of linear components using the Hough transform [59], and we create nodes as the intersections between two components. Edges are created to link nodes if they belong to the same component. A graph can therefore be a disconnected graph containing connected subgraphs if some electronic structures are fully disconnected from the others. As these graphs are topologically highly redundant due to the design of IC, labels must be computed to discriminate nodes and edges. In the following section, we describe two descriptors, one structural and one textural.
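The graph construction described above can be sketched in a few lines of Python. The sketch assumes that the linear components have already been extracted and are given as axis-aligned segments with integer coordinates; it also assumes that only consecutive nodes along a component are linked, which is one possible reading of the edge rule. Function names are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

Point = Tuple[int, int]
Segment = Tuple[Point, Point]  # axis-aligned segment given by its two end points


def intersection(horizontal: Segment, vertical: Segment) -> Optional[Point]:
    """Crossing point of a horizontal and a vertical segment, if any."""
    (hx1, hy), (hx2, _) = horizontal
    (vx, vy1), (_, vy2) = vertical
    if min(hx1, hx2) <= vx <= max(hx1, hx2) and min(vy1, vy2) <= hy <= max(vy1, vy2):
        return (vx, hy)
    return None


def build_graph(horizontals: List[Segment], verticals: List[Segment]
                ) -> Tuple[Set[Point], Set[Tuple[Point, Point]]]:
    """Nodes are segment intersections; edges link consecutive nodes on a segment."""
    nodes: Set[Point] = set()
    nodes_on_segment: Dict[Tuple[str, int], List[Point]] = {}
    for hi, h in enumerate(horizontals):
        for vi, v in enumerate(verticals):
            point = intersection(h, v)
            if point is not None:
                nodes.add(point)
                nodes_on_segment.setdefault(("h", hi), []).append(point)
                nodes_on_segment.setdefault(("v", vi), []).append(point)
    edges: Set[Tuple[Point, Point]] = set()
    for points in nodes_on_segment.values():
        ordered = sorted(set(points))  # sorts along the segment direction
        edges.update(zip(ordered, ordered[1:]))
    return nodes, edges
```

Segments that cross no other segment produce no node, so isolated structures naturally yield separate connected subgraphs.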

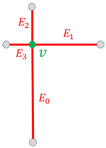

Structural Descriptor

Through this descriptor, we aim to synthesize the structural features of a local connexity relative to nodes and edges. For that purpose, let $S = (s_k)_{k \geq 1}$ be a superincreasing series of positive numbers s.t.

$$s_k > \sum_{i=1}^{k-1} s_i \quad \text{for all } k > 1$$

For each node and edge in the graph, the proposed descriptor consists of a single value computed as a weighted sum of edge lengths connected to an element. It is important to order the edges by length. In other words,

- Considering a node and , the set of its x connected edges, ordered by length its structural descriptor is given bywhere is the length of edge .

- Considering an edge and , the set of its y connected edges, ordered by length its structural descriptor is given by

Finally, for scale-adaptive purpose, each element of the sum is normalized with respect to the greatest one. This process is represented for both nodes and edges in Table 1.
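The structural descriptor can be illustrated with the following Python sketch. It assumes powers of two as the superincreasing series and an ascending ordering of the edge lengths; neither choice is stated in the text, and the function names are hypothetical.

```python
from typing import List, Sequence


def superincreasing_weights(count: int, base: float = 2.0) -> List[float]:
    """1, 2, 4, ...: each term exceeds the sum of all previous terms."""
    return [base ** k for k in range(count)]


def structural_descriptor(edge_lengths: Sequence[float]) -> float:
    """Weighted sum of connected edge lengths, normalized by the longest one."""
    if not edge_lengths:
        return 0.0
    ordered = sorted(edge_lengths)  # assumed ordering: shortest edge first
    longest = ordered[-1]
    weights = superincreasing_weights(len(ordered))
    return sum(w * (length / longest) for w, length in zip(weights, ordered))
```

Because every length is divided by the longest one, scaling all edges by the same factor leaves the descriptor unchanged, and sorting makes it independent of the order in which the edges are listed.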

Table 1.

Structural descriptor computation for a node v and an edge e according to their neighborhood in the graph.

Superincreasing series were originally introduced in 1978 in the context of data encryption keys. They led to the creation of some of the first public-key cryptographic systems, appreciated for their simplicity, but quickly outperformed by advances in cryptography. In our context, the use of such a series is original and allows features to be stored in a single value. Indeed, thanks to the ordering, the weighting allows each value to be saved at a specific scale. Finally, this kind of descriptor has an advantage over classical containers such as vectors due to its simplicity and low storage requirement. Moreover, as we will see in Section 3.2.2, it is far easier to compare values than vectors.

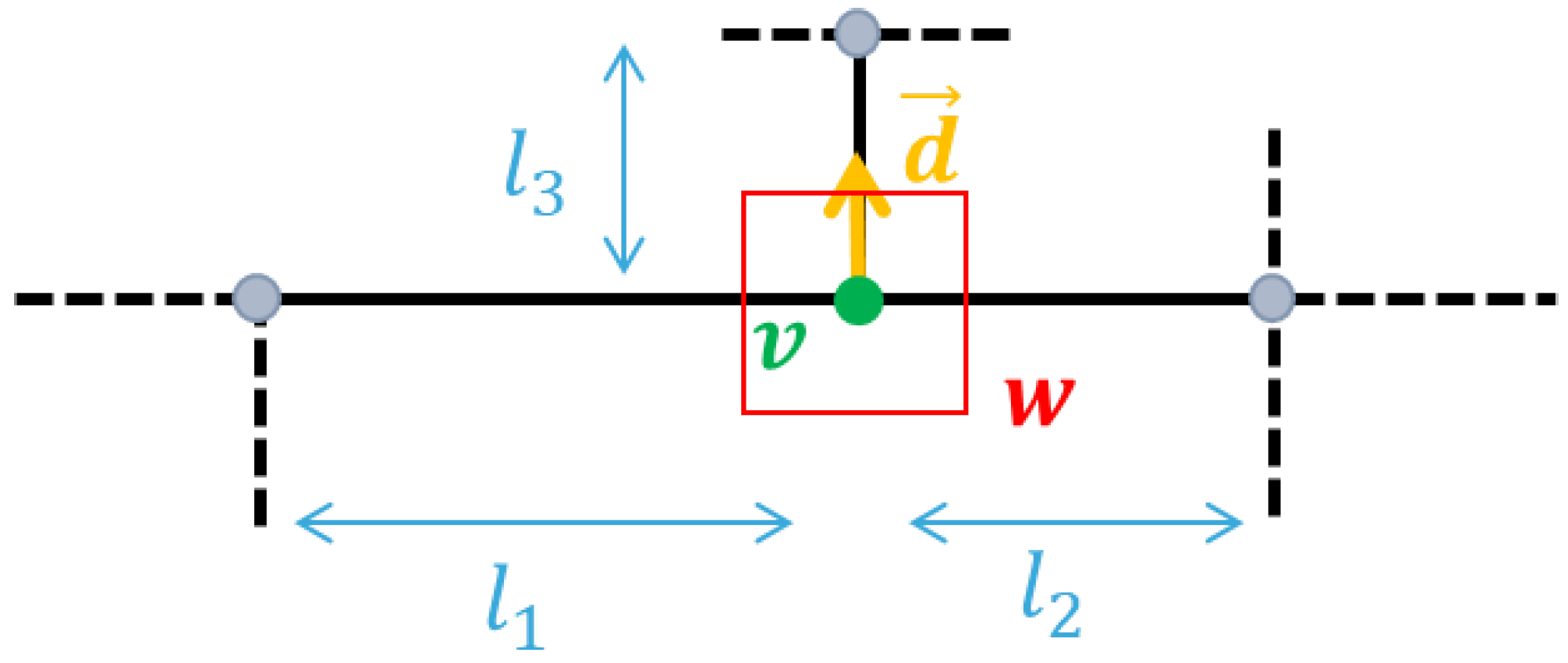

Textural Descriptor

The Histogram of Oriented Gradients (HOG) constitutes a powerful tool for texture description and it has been used extensively over the years. Its effectiveness has been proven by its many applications in shape recognition [60,61,62] thanks to its robust recognition abilities and its ease of use. The textural descriptor used in our study is a histogram of gradients containing nine bins covering the degree interval, according to [60]. This descriptor is computed for a window around each node, as nodes correspond to junction points between electronic line segments. For invariance purposes,

- each window is centered on its corresponding node and of a size related to its smallest connected edges,

- if d is the main orientation provided by its connected edges, the gradients in the window are oriented according to d,

- the window is split into four sub-windows and each of the gradient intensity is weighted according to the global window intensity so that each of their histogram is less sensitive to non-linear brightness [60]. Number four is related to the maximal node connexity (at most four neighbors).

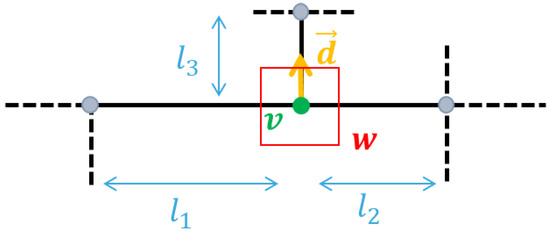

The resulting descriptor is then a vector of $4 \times 9 = 36$ bins. The computation rules are represented in Figure 6.

Figure 6.

Required elements for invariant computation of our textural descriptor for a node v: the histogram is computed for the window W around v, of size proportional to , and whose gradients are relative to the main orientation described by its connected edges.
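The computation of such a textural descriptor can be sketched as follows in Python. Several points are assumptions made for illustration: gradients are computed by central differences, orientations are unsigned and binned over a 180-degree range, the four sub-windows are the quadrants of the window, and the normalization divides by the total gradient magnitude of the window. The function name and parameters are hypothetical.

```python
import math
from typing import List


def hog_descriptor(window: List[List[float]],
                   main_orientation_deg: float = 0.0,
                   bins: int = 9) -> List[float]:
    """Concatenate one orientation histogram per quadrant of a square window."""
    size = len(window)
    half = size // 2
    histograms = [[0.0] * bins for _ in range(4)]
    total = 0.0
    for r in range(1, size - 1):
        for c in range(1, size - 1):
            gx = window[r][c + 1] - window[r][c - 1]
            gy = window[r + 1][c] - window[r - 1][c]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue
            # Orientation relative to the main direction, folded into [0, 180).
            angle = (math.degrees(math.atan2(gy, gx)) - main_orientation_deg) % 180.0
            bin_index = min(int(angle / (180.0 / bins)), bins - 1)
            quadrant = (2 if r >= half else 0) + (1 if c >= half else 0)
            histograms[quadrant][bin_index] += magnitude
            total += magnitude
    flat = [value for histogram in histograms for value in histogram]
    return [value / total for value in flat] if total else flat
```

With the default nine bins, the returned vector has 36 entries, one block of nine per quadrant.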

Finally, these two descriptors are used as labels to add discriminative data for nodes and edges. In that way,

- for each node , its label is bipartite such that

- for each edge , its label is such that .

By this labeling, we aim to collect as much available and relevant information as possible to complete our graphs. Since we are now able to transpose electronic structures as graphs, we can locate any template through graph matching methods.

3.2.2. Matching Method for Integrated Pattern Location

In our study, we need to find some structures specific to IC in IR images. Let us summarize the constraints we have to deal with: graphs can be very noisy, they are structurally very redundant, and the large size difference between graphs implies a huge number of outliers. Let $G_1$ correspond to an IR image containing a partial or full structure of an IC image, and let $G_2$ be the IR image of an IC (potentially unknown) to be characterized. The latter potentially contains a noisy version of the template. $G_1$ is likely to be much smaller than $G_2$ (in size and order).

Among the numerous graph matching methods proposed over the years, we found that the method proposed by Dutta et al. [54] matches our constraints. Indeed, their approach is based on the computation of higher order similarities between pairwise nodes and edges of input graphs. To that end, they computed a Tensor Product Graph (PG), whose nodes and edges consistently model potential matching across the two graphs. They computed a similarity measure for each element of this graph, and applied a diffusion process to it. Then, they computed new contextual similarities from this diffusion process. This approach retains the advantages of the hypergraph-based techniques but without the complexity due to the costly manipulation of tensors. Thanks to this formulation, the matching problem is reduced to a linear optimization to which constraints may be added, such as the number of matches per node, the incidence degree of two associated nodes and so on [63]. We propose investigating this approach for our context, together with the discriminating features previously described. We also propose a numerical threshold to finally validate a match.

The PG of two graphs $G_1 = (V_1, E_1)$ and $G_2 = (V_2, E_2)$ is represented by the ⊗ operator, such that $G_X = G_1 \otimes G_2$, with $G_X = (V_X, E_X)$, where $V_X = V_1 \times V_2$ and $E_X = \{((u_1, u_2), (v_1, v_2)) : (u_1, v_1) \in E_1 \text{ and } (u_2, v_2) \in E_2\}$. Each resulting node and edge corresponds to a structurally possible pairwise match in the assignment problem (as in an association graph). Strong contextual information over nodes and edges can be computed from a PG, using a diffusion method [64] or a random walk [65] to produce neighborhood context information. In the second case, and in a similar way as in [66], once pairwise similarities are used as transition probabilities of a walk, the probabilities of a walker ending on node n at instant t are used to compute a new contextual similarity. In [54], a backtrackless random walk is computed on the PG, which strengthens the new contextual similarity compared to usual walks. The backtrackless walk has been shown to yield more discriminating contextual similarities [67].
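As an illustration of the product graph construction described above, the following minimal sketch builds the tensor product of two small undirected graphs. The function name `tensor_product_graph` and the toy graphs are hypothetical and chosen only for this example; labels and similarities are left out.

```python
from itertools import product


def tensor_product_graph(nodes1, edges1, nodes2, edges2):
    """Build the tensor product of two undirected graphs.

    Each product node (u1, u2) is a candidate match "u1 <-> u2". Two product
    nodes are linked only when both underlying node pairs are edges.
    """
    # Store undirected edges in both directions for fast membership tests.
    adj1 = {(a, b) for a, b in edges1} | {(b, a) for a, b in edges1}
    adj2 = {(a, b) for a, b in edges2} | {(b, a) for a, b in edges2}

    product_nodes = list(product(nodes1, nodes2))
    product_edges = set()
    for (u1, u2), (v1, v2) in product(product_nodes, repeat=2):
        if (u1, v1) in adj1 and (u2, v2) in adj2:
            # A frozenset merges the two orientations of the same edge.
            product_edges.add(frozenset({(u1, u2), (v1, v2)}))
    return product_nodes, product_edges


# Toy example (hypothetical data): a 2-node template and a 3-node path target.
template_nodes, template_edges = ["a", "b"], [("a", "b")]
target_nodes, target_edges = [1, 2, 3], [(1, 2), (2, 3)]
pg_nodes, pg_edges = tensor_product_graph(
    template_nodes, template_edges, target_nodes, target_edges
)
print(len(pg_nodes), "product nodes,", len(pg_edges), "product edges")
```

The product graph grows with the product of the input orders, which is one reason why the size difference between template and target matters in practice.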

In our study, according to the labeling system previously proposed, similarities between nodes , and between edges , are given by:

To prevent confusion, note that e is used for edges and not for exponents (). Finally, these pairwise similarities are reevaluated by the backtrackless walk computed on the association graph . Acting like a diffusion process, and now incorporate contextual information. Once computed, the assignment problem can be reduced to a node and edge selection from according to the contextual similarity and is solved as a constrained linear optimization in polynomial time. Finally, the selected nodes and edges from , namely and , constitute the best match.
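To give an intuition of how a walk on the product graph adds context to pairwise similarities, the sketch below accumulates the decayed weights of all walks of bounded length starting at each product node. It deliberately uses plain walks rather than the backtrackless walks of [54,67], and the function name `walk_context_scores`, the decay factor and the toy weights are assumptions made for illustration only.

```python
def walk_context_scores(weights, max_len=3, decay=0.5):
    """Sum the decayed weights of all walks of length 1..max_len per node.

    weights[i][j] is the pairwise similarity attached to the product-graph
    edge (i, j), or 0.0 when the edge does not exist.
    NOTE: plain walks are used here as a simplification; backtracking steps
    are not excluded.
    """
    n = len(weights)
    scores = [0.0] * n
    reach = [1.0] * n  # total weight of walks of length 0 from each node
    factor = 1.0
    for _ in range(max_len):
        # Extend every walk by one step: reach <- weights * reach.
        reach = [sum(weights[i][j] * reach[j] for j in range(n)) for i in range(n)]
        factor *= decay
        scores = [s + factor * r for s, r in zip(scores, reach)]
    return scores


# Toy product graph (hypothetical): a 3-node path with unit similarities.
toy_weights = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
]
toy_scores = walk_context_scores(toy_weights, max_len=2, decay=0.5)
print(toy_scores)
```

In this toy case the middle node, which is supported by two neighbors, receives a higher contextual score than the end nodes, which is the behavior a diffusion-like process is expected to produce.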

However, the selected nodes and edges could form disconnected sub-graphs, as:

- input graphs may be disconnected and PG preserves connectivity,

- no optimization condition constrains any global connectivity in the solution.

Consequently, a template graph can be matched to several sub-graphs in the target graph. This flexibility is necessary in noisy graphs. To find the final template location, we consider the largest connected sub-graph among all matched sub-graphs. The template graph location is evaluated by projecting it on this final matched sub-graph. The selection is then subjected to a numerical validation by computing the similarity rate:

where x is the size of the node set and y the size of the edge set . These two sets form the selected sub-graph. If is greater than a critical threshold, this solution is validated.
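The selection of the largest connected sub-graph among the matched nodes and edges can be sketched as follows. This is a minimal breadth-first search under the assumption that the matching result is available as a node list and an edge list; the function name `largest_connected_subgraph` and the toy data are hypothetical, and the numerical validation step is not included.

```python
from collections import deque


def largest_connected_subgraph(nodes, edges):
    """Return the node set and edge list of the largest connected component."""
    adjacency = {node: set() for node in nodes}
    for a, b in edges:
        if a in adjacency and b in adjacency:
            adjacency[a].add(b)
            adjacency[b].add(a)

    best, seen = set(), set()
    for start in nodes:
        if start in seen:
            continue
        # Breadth-first exploration of the component containing `start`.
        component, queue = {start}, deque([start])
        seen.add(start)
        while queue:
            current = queue.popleft()
            for neighbor in adjacency[current]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    component.add(neighbor)
                    queue.append(neighbor)
        if len(component) > len(best):
            best = component

    kept_edges = [(a, b) for a, b in edges if a in best and b in best]
    return best, kept_edges


# Toy matching result (hypothetical): two disconnected matched sub-graphs
# and one isolated matched node.
matched_nodes = [1, 2, 3, 4, 5, 6]
matched_edges = [(1, 2), (2, 3), (4, 5)]
kept_nodes, kept_edges = largest_connected_subgraph(matched_nodes, matched_edges)
print(sorted(kept_nodes), kept_edges)
```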

In order to test the robustness of such a location process (i.e., graph matching and projection), we present experimental results over a large data set in the next section.

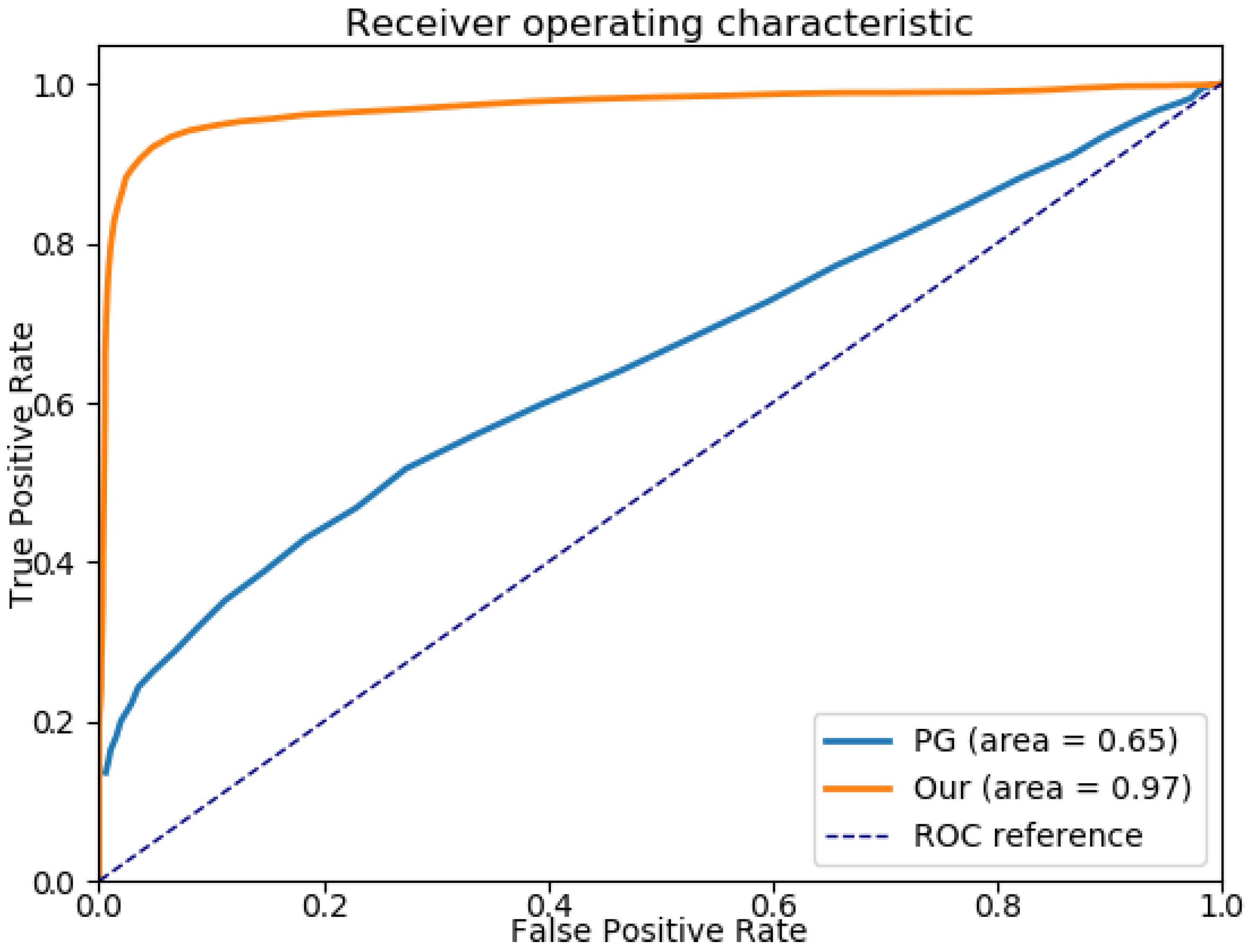

3.2.3. Location Method Validation

As far as we know, no database of infrared views of integrated circuits exists. Moreover, the trade secret context does not allow us to create one. For that reason, we validated our method on synthetic data. In [68], a data set is built from synthetic images that match the practical constraints encountered in our context, where noisy graphs occur. In line with the design of IC, electronic structures are simulated through combinations of random but mutually orthogonal white lines. These templates are corrupted with a random multiplicative noise to simulate thermal noise. Synthetic IC are built from the combination of several templates that are scaled and rotated in black images. The resulting database is then composed of synthetic IC, on which we test our framework for locating synthetic templates.
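A synthetic template of the kind described above could be generated along the following lines. This is only a sketch: the image size, number of lines, noise amplitude and the uniform noise model are assumptions, not the settings used in [68], and the scaling, rotation and composition into a synthetic IC are left out.

```python
import random


def synthetic_template(size=32, n_lines=6, noise=0.2, seed=0):
    """Draw random horizontal/vertical white lines on a black image,
    then apply a multiplicative noise to every pixel."""
    rng = random.Random(seed)
    image = [[0.0] * size for _ in range(size)]
    for _ in range(n_lines):
        fixed = rng.randrange(size)            # row (or column) of the line
        start = rng.randrange(size - 1)        # first pixel of the line
        end = rng.randrange(start + 1, size)   # last pixel of the line
        horizontal = rng.random() < 0.5
        for k in range(start, end + 1):
            if horizontal:
                image[fixed][k] = 1.0
            else:
                image[k][fixed] = 1.0
    # Multiplicative noise (assumed uniform): pixel * (1 + u), u in [-noise, noise],
    # clipped to [0, 1]. Black pixels stay black.
    return [
        [min(1.0, max(0.0, p * (1.0 + rng.uniform(-noise, noise)))) for p in row]
        for row in image
    ]


template_image = synthetic_template()
white_pixels = sum(1 for row in template_image for p in row if p > 0)
print(white_pixels, "non-black pixels")
```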

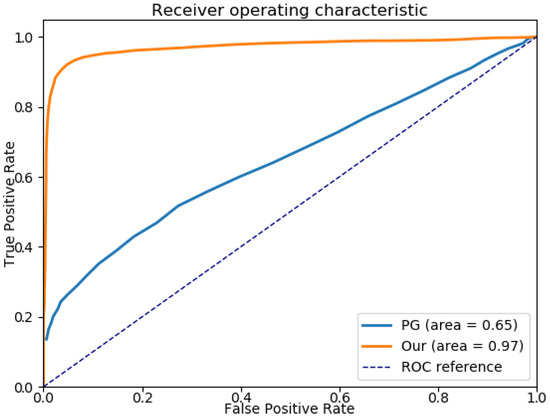

In this experiment, successful location corresponds to both a correct graph matching and a correct projection of this graph on the target one. In [68], we compared the success rate of our framework with the method proposed in [54], based on PG. Their overall performances are presented as true/false rates in Figure 7, where the area under each curve represents the overall location success rate for the corresponding method. The detection rate of the templates following our method is evaluated at approximately 97%, outperforming the one proposed by Dutta et al.

Figure 7.

ROC curves for Dutta’s method and our framework. The area under each curve represents the overall location success rate of the corresponding method.
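For readers who want to reproduce this kind of evaluation, the sketch below computes ROC points and the area under the curve from a list of scores and ground-truth outcomes, assuming the curve is obtained by sweeping a decision threshold over the scores. The scores and labels are invented for illustration and are not the data behind Figure 7.

```python
def roc_curve(scores, labels):
    """Return (fpr, tpr) lists obtained by sweeping a threshold over scores.

    labels[i] is 1 for a true location and 0 otherwise.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label:
            tp += 1
        else:
            fp += 1
        # Emit a point only when the next score differs, so ties are grouped.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            fpr.append(fp / negatives)
            tpr.append(tp / positives)
    return fpr, tpr


def area_under_curve(fpr, tpr):
    """Integrate the ROC curve with the trapezoidal rule."""
    return sum(
        (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2.0
        for i in range(1, len(fpr))
    )


# Hypothetical scores and outcomes, for illustration only.
example_scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
example_labels = [1, 1, 0, 1, 0, 0]
example_fpr, example_tpr = roc_curve(example_scores, example_labels)
example_auc = area_under_curve(example_fpr, example_tpr)
print(round(example_auc, 4))
```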

We did not make a comparison with additional methods, as this is not the purpose of this paper. The interested reader can refer to [51,53,54] for a benchmark of several methods. Moreover, although some other methods could be relevant for our experiment, few of them meet all of our constraints: flexibility to accept outliers, robustness against noise, and a solution in polynomial time.

Building on this validation, we present some practical experiments in Section 4. More specifically, the experiments include template location for two particular cases allowed by our graph-based approach: (1) both template and target images are real images; (2) the target image is a real image, and the template image is synthetic. This second case is critical, as it is only workable through graph matching approaches. Moreover, it makes sense in the experimental context, where prototypes may include unknown structures for which no image acquisition was ever made. Locating an electronic structure from its theoretical shape is then necessary for prototype characterization.

4. Experiments & Results

Implemented for IC secure characterization, the whole process presented in this paper brings automation and reproducibility where human-driven tasks are usually required. In this section, we describe the experiment, from the scan of the IC to be characterized to the location of the structures of interest.

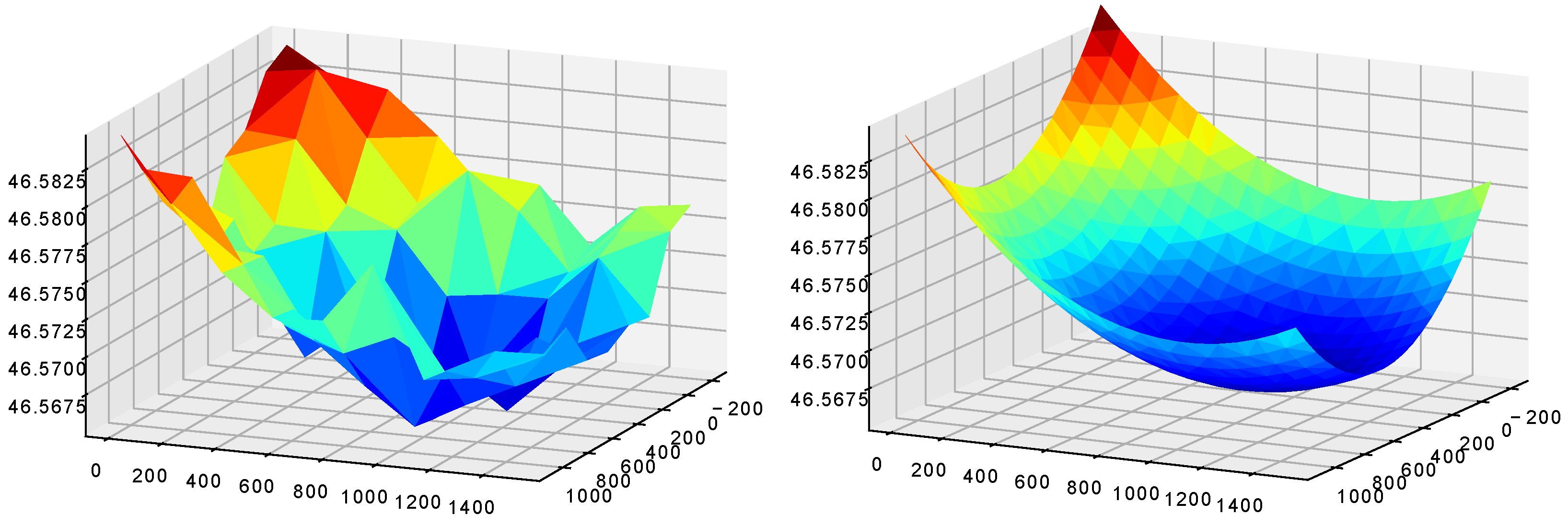

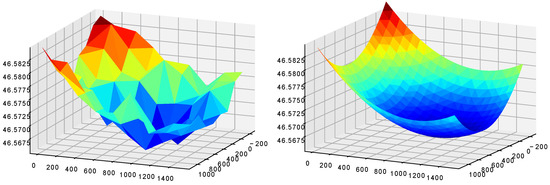

In this case study, we positioned an IC under the optical system such that the SOI was visible through the silicon layer. To optimize the runtime of the scan process, we first precomputed a focus map using the autofocus system presented in Section 2. Several autofocus runs were performed on the SOI. From these samples, we approximated a focus map by projection onto a 3D Gaussian surface, which fits the topology of such an SOI better than a simple quadratic surface. This approximation step allowed us to refine the autofocus while keeping an overall reasonable runtime (around 2 min). Furthermore, it limited the impact of the lack of precision of the autofocus caused by the presence of contaminants and/or the absence of details in the field of view that would help determine whether the focus is correct. Figure 8 shows an example of a focus map.

Figure 8.

The focus sampling (9 × 9) on the SOI (left), and its 3D Gaussian surface approximation (right).
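One possible way to approximate sampled focus positions by a Gaussian surface is sketched below. It assumes an axis-aligned Gaussian without offset and strictly positive focus values, so that taking logarithms turns the fit into a linear least-squares problem; the fitting procedure actually used for Figure 8 is not detailed here, and all names and numerical values in the example are hypothetical.

```python
import math


def solve_linear_system(matrix, rhs):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            ratio = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= ratio * aug[col][k]
    solution = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - tail) / aug[row][row]
    return solution


def gaussian_surface(params, x, y):
    """Evaluate z = A * exp(-(x-x0)^2 / (2 sx^2) - (y-y0)^2 / (2 sy^2))."""
    amplitude, x0, y0, sx, sy = params
    return amplitude * math.exp(
        -((x - x0) ** 2) / (2 * sx**2) - ((y - y0) ** 2) / (2 * sy**2)
    )


def fit_gaussian_surface(samples):
    """Fit the surface above to (x, y, z) samples with z > 0.

    ln z = c0 + c1*x + c2*y + c3*x^2 + c4*y^2 is linear in the coefficients,
    which are obtained from the normal equations.
    """
    rows = [[1.0, x, y, x * x, y * y] for x, y, _ in samples]
    logs = [math.log(z) for _, _, z in samples]
    gram = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    moment = [sum(r[i] * v for r, v in zip(rows, logs)) for i in range(5)]
    c0, c1, c2, c3, c4 = solve_linear_system(gram, moment)
    sx, sy = math.sqrt(-1.0 / (2 * c3)), math.sqrt(-1.0 / (2 * c4))
    x0, y0 = c1 * sx**2, c2 * sy**2
    amplitude = math.exp(c0 + x0**2 / (2 * sx**2) + y0**2 / (2 * sy**2))
    return amplitude, x0, y0, sx, sy


# Hypothetical 9 x 9 focus sampling generated from known parameters.
true_params = (10.0, 4.0, 3.5, 6.0, 5.0)
focus_samples = [
    (x, y, gaussian_surface(true_params, x, y)) for x in range(9) for y in range(9)
]
fitted_params = fit_gaussian_surface(focus_samples)
print([round(p, 3) for p in fitted_params])
```

With noisy autofocus samples, the logarithm gives more weight to small values, so a nonlinear refinement starting from this estimate may be preferable.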

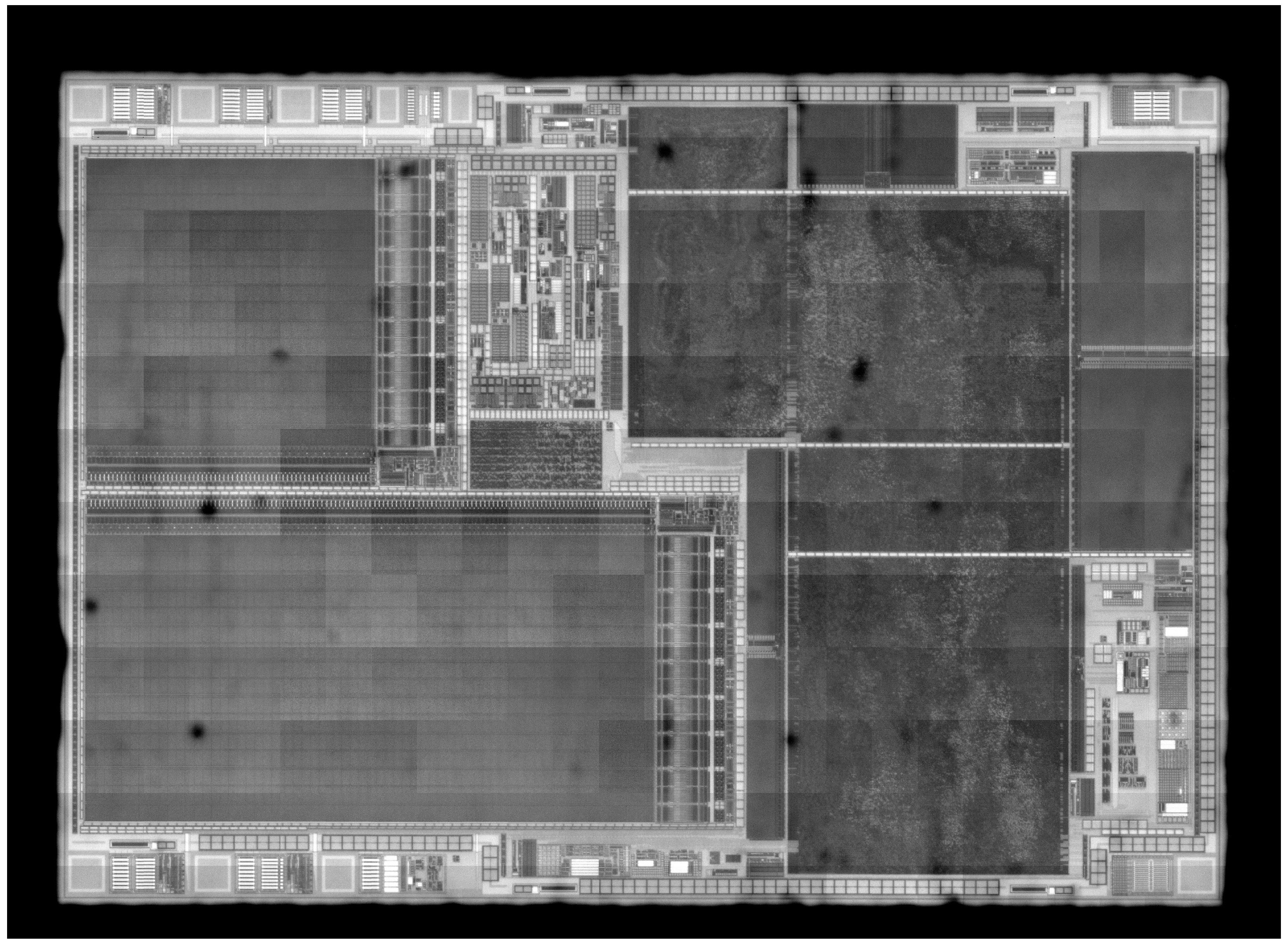

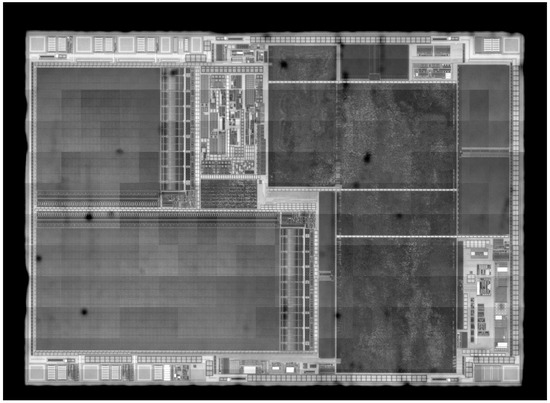

With this focus map, the image acquisition was carried out with a 0.5 s exposure time of the optical system in the iterative stepping scan to cover the entire SOI. To compensate for the possible lack of precision induced by motor stepping, the assembly process cross-correlated the overlapping borders of each image with those of its 4 surrounding images (neighbors). The final image was obtained once each image was stitched to its neighbors with the best alignment. Figure 9 presents the resulting scan of the SOI in the infrared field.

Figure 9.

Resulting scan of the conductive tracks (the SOI) inside an IC under an infrared microscope at 20× magnification. The black spots correspond to contaminants present on the silicon surface. The small intensity gap between the mosaic images is camera dependent.
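The border alignment used during stitching can be illustrated with a simplified sketch: two horizontally adjacent tiles share a band of columns, and the row shift that maximizes the normalized cross-correlation of this band is retained. The restriction to a vertical shift between a single pair of tiles, the function names and the random test scene are assumptions made for brevity.

```python
import math
import random


def normalized_correlation(a, b):
    """Zero-mean normalized cross-correlation of two equally sized lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0


def best_vertical_offset(left, right, overlap, max_shift):
    """Find the row shift of `right` that best aligns it with `left`.

    The last `overlap` columns of `left` are compared with the first
    `overlap` columns of `right` for every shift in [-max_shift, max_shift].
    """
    height = len(left)
    best_shift, best_score = 0, -2.0
    for shift in range(-max_shift, max_shift + 1):
        a, b = [], []
        for row in range(height):
            other = row + shift
            if 0 <= other < height:
                a.extend(left[row][-overlap:])
                b.extend(right[other][:overlap])
        score = normalized_correlation(a, b)
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift, best_score


# Hypothetical scene: the right tile is taken 2 rows higher than the left one,
# and both tiles share 4 columns.
rng = random.Random(1)
scene = [[rng.random() for _ in range(12)] for _ in range(14)]
left_tile = [row[:8] for row in scene[2:12]]
right_tile = [row[4:] for row in scene[0:10]]
shift, score = best_vertical_offset(left_tile, right_tile, overlap=4, max_shift=3)
print(shift, round(score, 3))
```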

We built our graphs from the processed IR image of the IC. First, we applied an anisotropic smoothing through the polynomial decomposition method proposed in [34]. Then, we binarized the image using local adaptive thresholding and detected the horizontal and vertical components of the binary image. The target graph was built from the connectivity analysis of these horizontal and vertical components. Similarly, the template graph was built from the IR image of a structure to detect in the IC. The graph-matching based pattern location presented in Section 3 was used to detect the template graph in the target graph (if the corresponding structure was present). In the following, we show the location result for two use cases where the template graph was created from:

- (1) a photo acquisition of an electronic structure,

- (2) a synthetic image representing an electronic structure.

The first is a classical case where the template is known and has already been encountered, whereas the second case occurs if the template is only theoretically known (for example a prototype). In both cases, the target graph was created from a real IC view. However, for the sake of clarity, the presented images show partial IC views, as the corresponding graphs are dense and contain many details.
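The graph construction from a binarized image can be sketched as follows, under the assumption that each horizontal or vertical run of white pixels is a node and that two nodes are connected when their runs share a pixel. The smoothing, thresholding and labeling steps are not reproduced, and the function names and the tiny test image are hypothetical.

```python
def extract_runs(binary, min_len=2):
    """Return horizontal and vertical runs of 1-pixels as sets of (row, col)."""
    height, width = len(binary), len(binary[0])
    horizontal, vertical = [], []
    for r in range(height):
        run = []
        for c in range(width + 1):  # one extra step to flush the last run
            if c < width and binary[r][c]:
                run.append((r, c))
            else:
                if len(run) >= min_len:
                    horizontal.append(set(run))
                run = []
    for c in range(width):
        run = []
        for r in range(height + 1):
            if r < height and binary[r][c]:
                run.append((r, c))
            else:
                if len(run) >= min_len:
                    vertical.append(set(run))
                run = []
    return horizontal, vertical


def build_graph(binary, min_len=2):
    """Nodes are runs; an edge links a horizontal and a vertical run that intersect."""
    horizontal, vertical = extract_runs(binary, min_len)
    nodes = [("H", i) for i in range(len(horizontal))]
    nodes += [("V", j) for j in range(len(vertical))]
    edges = [
        (("H", i), ("V", j))
        for i, h_run in enumerate(horizontal)
        for j, v_run in enumerate(vertical)
        if h_run & v_run
    ]
    return nodes, edges


# Hypothetical binary image: one horizontal track joined to one vertical track.
binary_image = [
    [1, 1, 1, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
]
graph_nodes, graph_edges = build_graph(binary_image)
print(graph_nodes, graph_edges)
```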

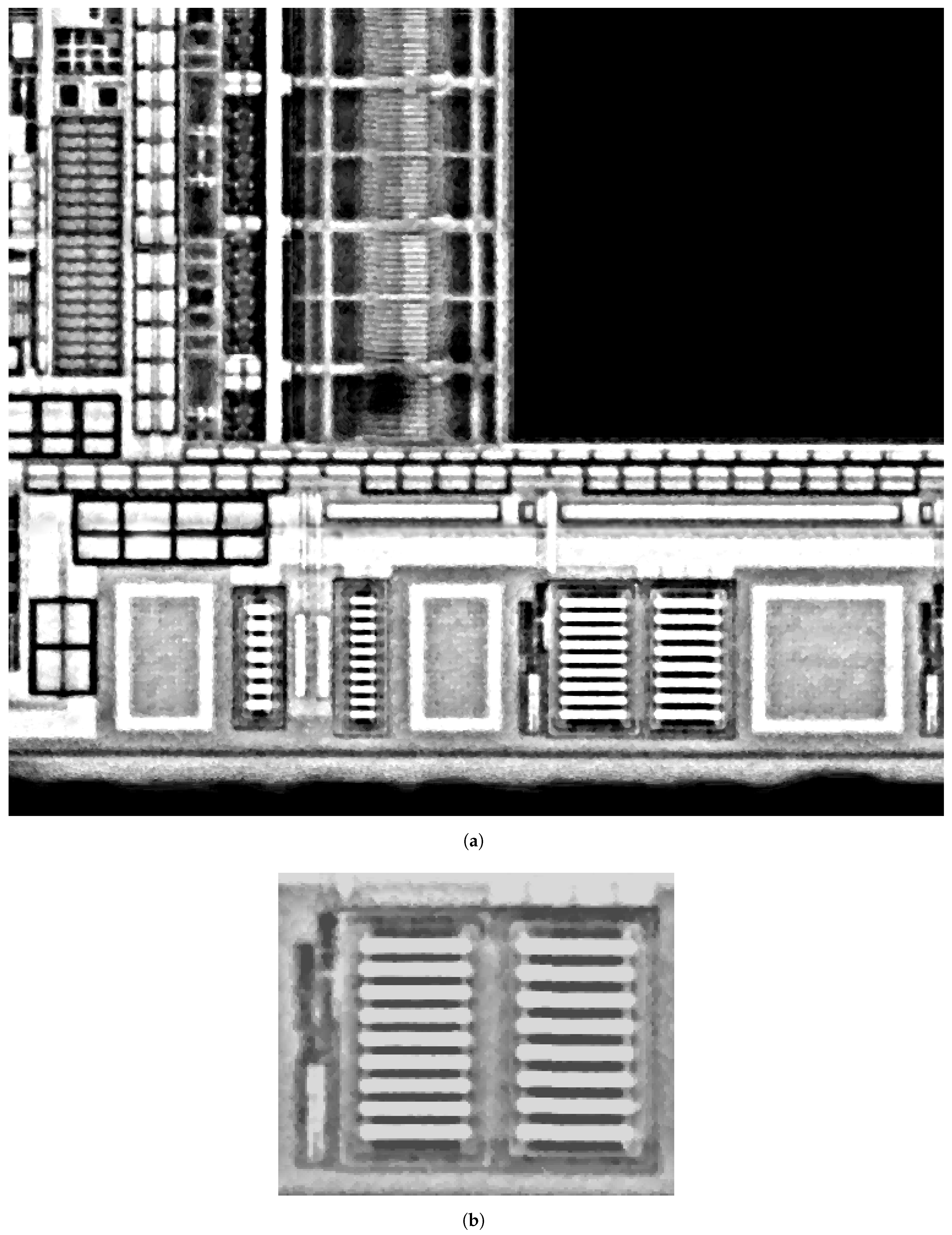

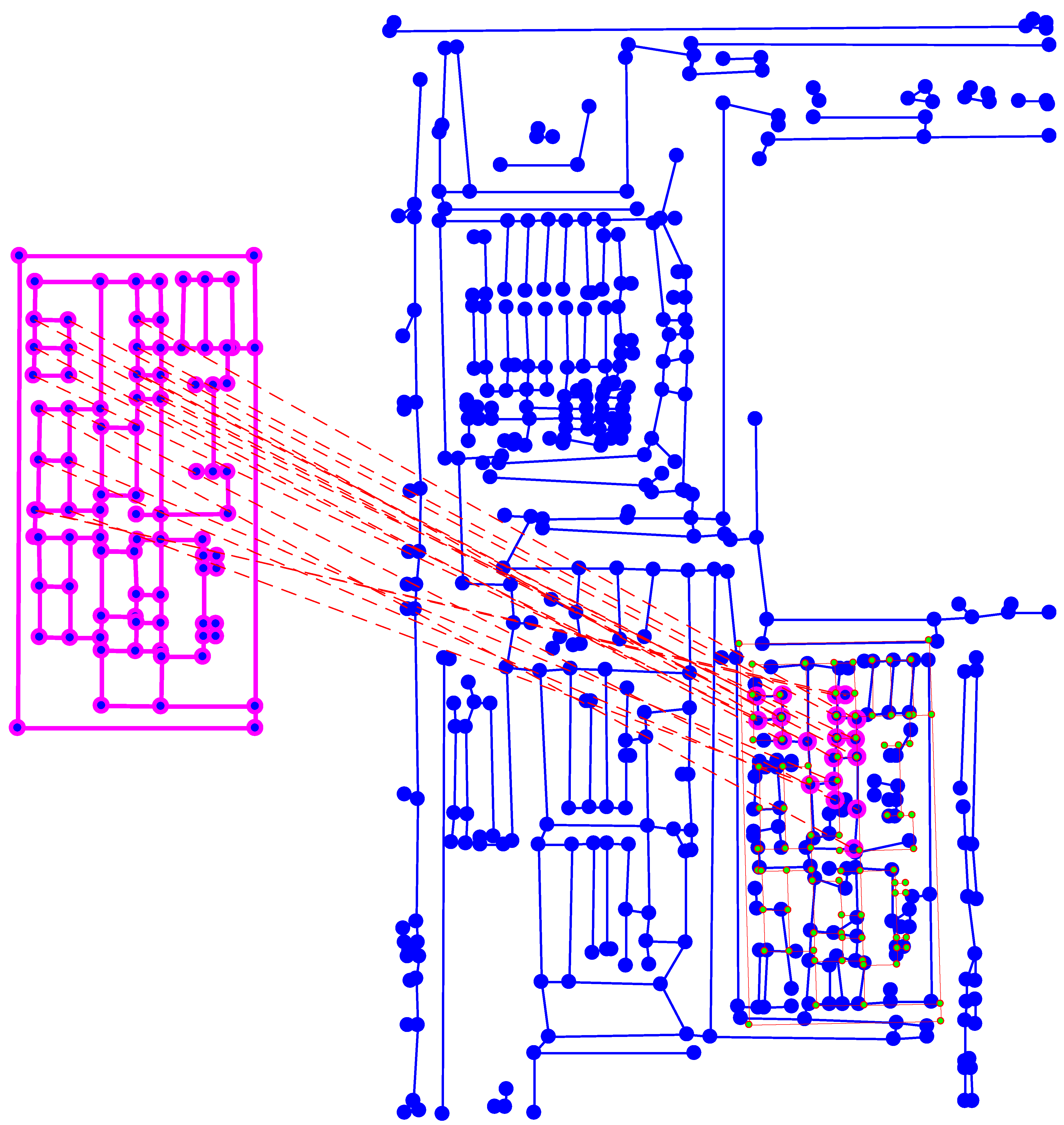

We experimented case (1) for the input template and IC illustrated in Figure 10. The location of this template is presented in Figure 11, where the template projection is confirmed by the similarity rate .

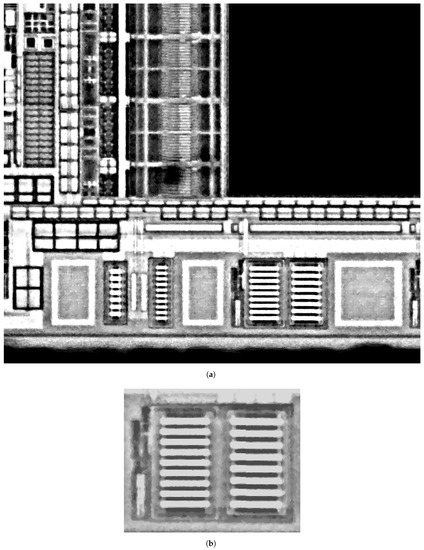

Figure 10.

Image acquisition under an optical magnifier of (a) a partial view of an integrated circuit (target) and (b) an electronic structure of interest (template).

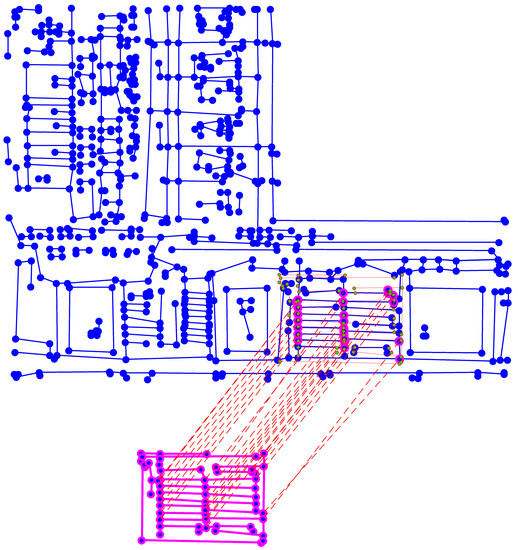

Figure 11.

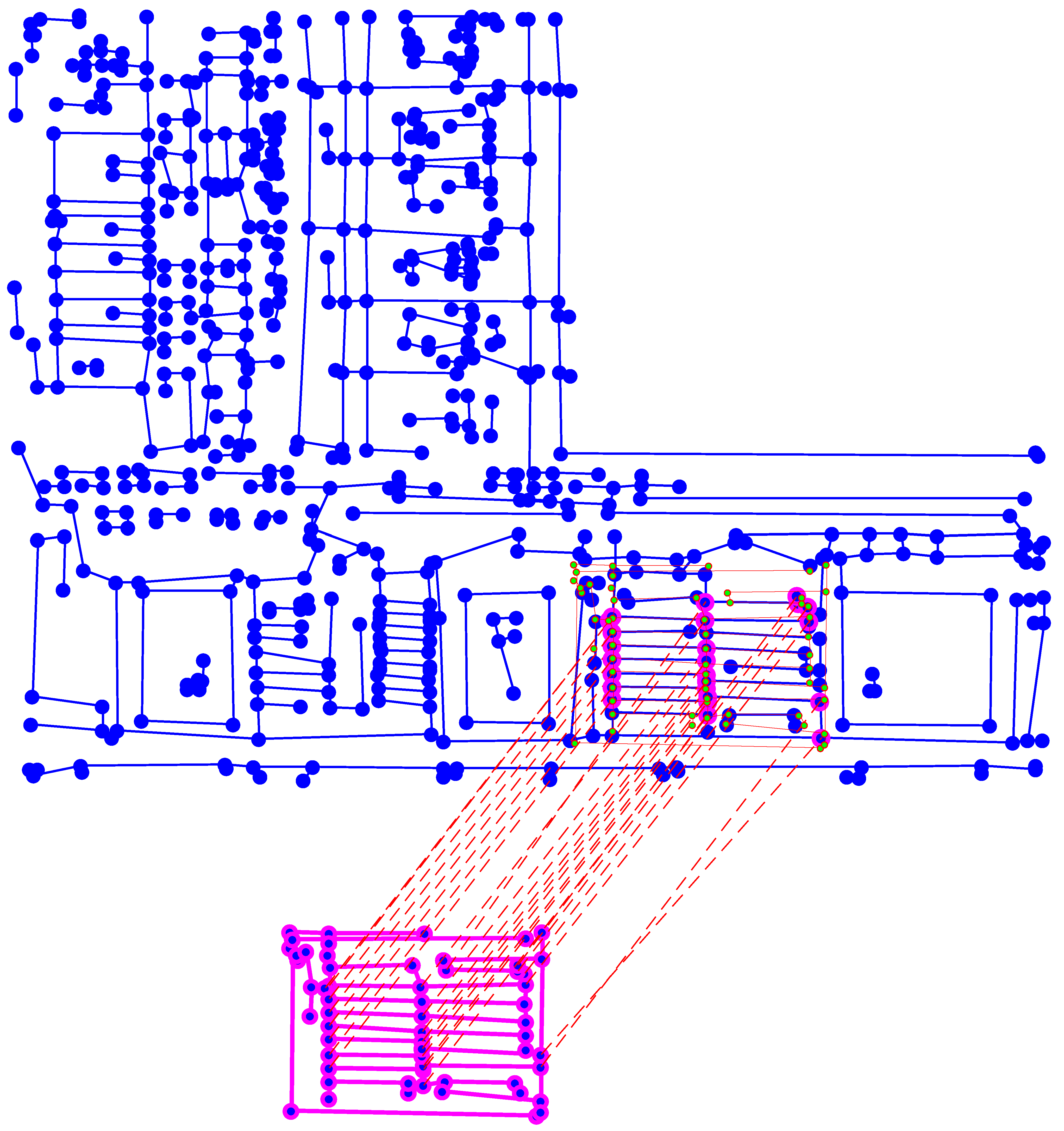

Projection of the template graph (bottom) located in the target graph (top). These graphs were created from the images shown in Figure 10. From the matching, only the largest connected sub-graph is kept (highlighted in pink in the target) and used to estimate the template projection. This projection is represented by the graph with green nodes and red edges. .

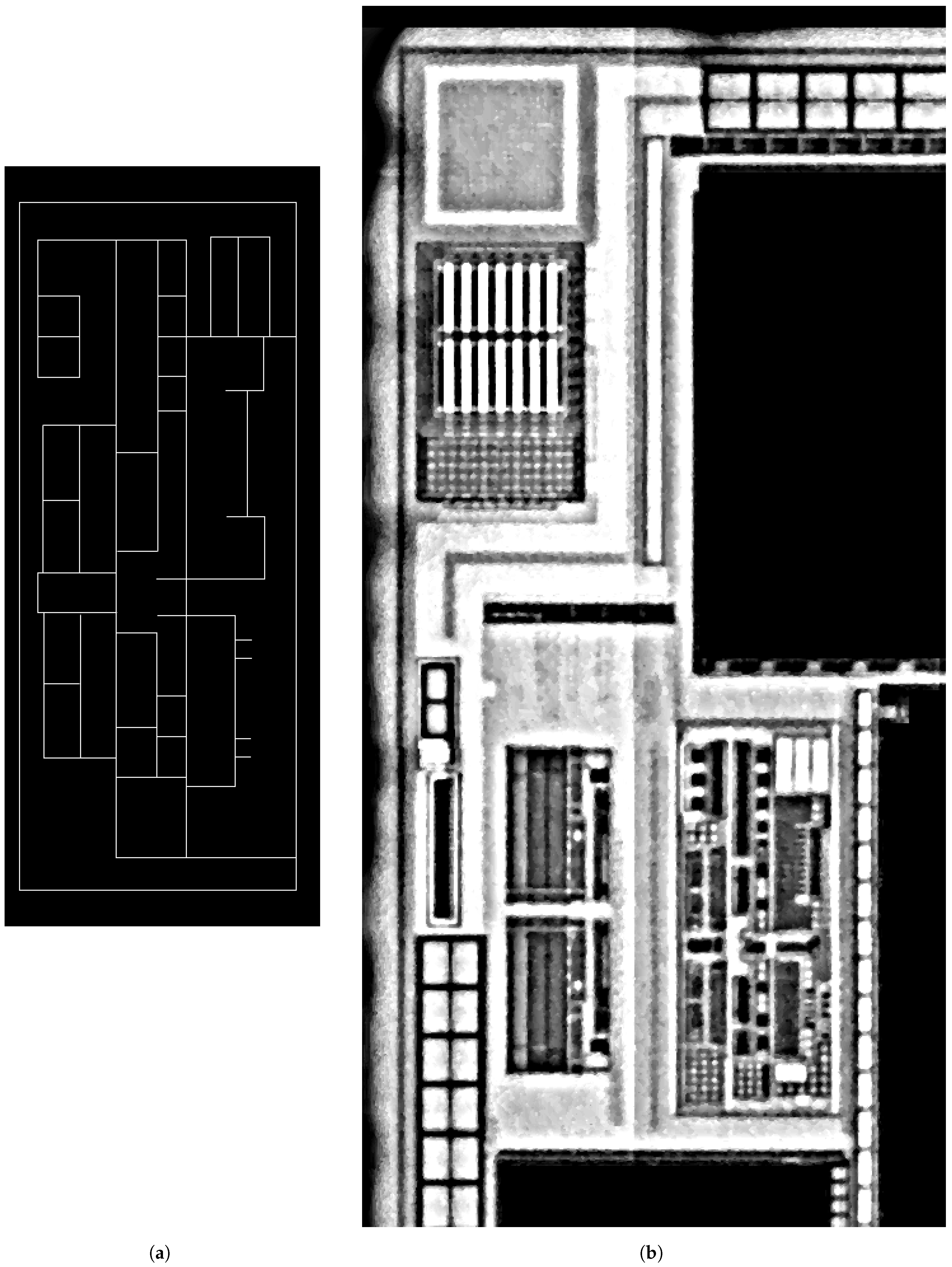

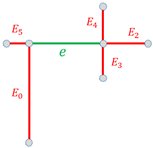

To validate the relevance of our location method in our context, we experimented case (2), where the provided template was a schematic. Figure 12 illustrates the input images while Figure 13 presents the location result, which was numerically validated by . The small number of highlighted matched nodes was a consequence of outliers between the schematic template and the target. This revealed a risk of insufficient connectivity in the matching solution.

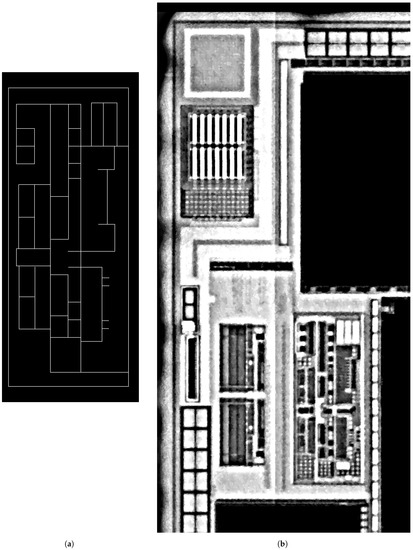

Figure 12.

A schematic template (a) to match and locate in the target image acquired under an optical magnifier (b).

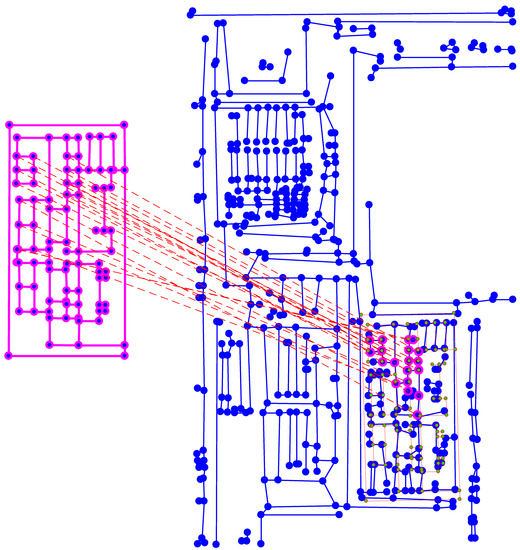

Figure 13.

Projection of the template graph (left) located in the target graph (right). These graphs were created from the images shown in Figure 12. From the matching, only the largest connected sub-graph is kept (highlighted in pink in the target) and used to estimate the template projection. This projection is represented by the graph with green nodes and red edges. .

Both experiments were conducted successfully. Validation over a synthetic database and the experiments presented here may constitute sufficient evidence of the validity of our method and good reason to continue investigating it: the presented results, from the IC viewing system to the template location system, show that our framework achieved the purpose of this study.

5. Conclusions

In the context of IC secure characterization, several tasks “traditionally” accomplished with the human visual system can be automated. This paper proposes state-of-the-art methods, compatible with infrared microscopy constraints, for automating structure recognition (location) in an integrated circuit for the secure characterization process. To that aim, we proposed a framework that uses different tools to scan conductive tracks in an integrated circuit and locate the patterns to be characterized. To conduct an infrared microscopy scan of the integrated circuit, we used a specialized autofocus system that analyzes image features through a discrete polynomial image transformation. Locating the structures of interest from the scan relies on a graph-matching approach whose efficiency demonstrates the relevance of the labeling step and the flexibility of the assignment approach. Moreover, the use of a graph-matching approach allows the location of a template from its design alone, which can be of real interest for companies that work with innovative components. By improving precision in spatial location, the proposed framework ensures reproducibility during integrated-circuit secure characterization.

To go further, the tools proposed in this paper could lead to the automation of additional tasks that usually require the human visual system and its understanding abilities. Firstly, the autofocus system could be used for refining the IC positioning on the characterization bench, for example, for tilt adjustment and rotation correction. It could also be useful for focusing the laser beam, visible as a bright spot on the conductive tracks, and for detecting contaminants, which are visible as black spots. Secondly, the scan process that produces a full view of the IC can be computed through different magnifying lenses. These views could be combined to produce a multi-resolution view, as each magnification provides a different precision level. The use of such a view could save considerable time in the characterization of IC.

Author Contributions

Conceptualization, R.A. and D.M.; methodology, R.A. and D.M.; software, R.A., R.E.M.; validation, R.A., D.M., P.-Y.L., D.F. and J.-L.D.; formal analysis, R.A., D.M., J.-L.D., D.F., P.-Y.L.; investigation, R.A., D.M.; data curation, R.A.; writing—original draft preparation, R.A.; writing, review and editing, R.A., D.M., J.-L.D., D.F., P.-Y.L., and J.-M.B.; visualization, R.A.; supervision, D.M., P.-Y.L., D.F., J.-L.D. and J.-M.B.; project administration, D.M.; funding acquisition, D.M., J.-L.D., D.F., P.-Y.L., and J.-M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Association Nationale de la Recherche et de la Technologie (ANRT) convention number 2017/0167.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Rahaman, H.; Champion, E. To 3D or Not 3D: Choosing a Photogrammetry Workflow for Cultural Heritage Groups. Herit. Sci. 2019, 2, 112.

2. Kang, T.W. 3D Image Scan Automation Planning based on Mobile Rover. J. Korea Acad. Ind. Coop. Soc. 2019, 20, 1–7.

3. Davis, A.; Belton, D.; Helmholz, P.; Bourke, P.; McDonald, J. Pilbara rock art: Laser scanning, photogrammetry and 3D photographic reconstruction as heritage management tools. Herit. Sci. 2017, 5, 1–16.

4. Bulgarevich, D.S.; Tsukamoto, S.; Kasuya, T.; Demura, M.; Watanabe, M. Pattern recognition with machine learning on optical microscopy images of typical metallurgical microstructures. Sci. Rep. 2018, 8, 1–8.

5. Kaur, P.; Krishan, K.; Sharma, S.K.; Kanchan, T. Facial-recognition algorithms: A literature review. Med. Sci. Law 2020, 60, 131–139.

6. Rosyda, S.S.; Purboyo, T.W. A Review of Various Handwriting Recognition Methods. Int. J. Appl. Eng. Res. 2018, 13, 1155–1164.

7. Ngugi, L.C.; Abelwahab, M.; Abo-Zahhad, M. Recent advances in image processing techniques for automated leaf pest and disease recognition—A review. Inf. Process. Agric. 2020.

8. Asgari, S.; Scalzo, F.; Kasprowicz, M. Pattern Recognition in Medical Decision Support. BioMed Res. Int. 2019, 2019, 1–2.

9. Bertocci, F.; Grandoni, A.; Djuric-Rissner, T. Scanning Acoustic Microscopy (SAM): A Robust Method for Defect Detection during the Manufacturing Process of Ultrasound Probes for Medical Imaging. Sensors 2019, 19, 4868.

10. Zhao, G.; Qin, S. High-Precision Detection of Defects of Tire Texture Through X-ray Imaging Based on Local Inverse Difference Moment Features. Sensors 2018, 18, 2524.

11. Aryan, P.; Sampath, S.; Sohn, H. An Overview of Non-Destructive Testing Methods for Integrated Circuit Packaging Inspection. Sensors 2018, 18, 1981.

12. Courbon, F. Retro-Conception Matérielle Partielle Appliquée à L’injection Ciblée de Fautes Laser et à la Détection Efficace de Chevaux de Troie Matériels. Ph.D. Thesis, Mines Saint-Etienne, Saint-Etienne, France, 2015.

13. Courbon, F.; Fournier, J.J.A.; Loubet-Moundi, P.; Tria, A. Combining Image Processing and Laser Fault Injections for Characterizing a Hardware AES. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2015, 34, 928–936.

14. Neumann, B. Autofokussierung. Leitz-Mitt. Wiss. Techn. 1985, 8, 228–232.

15. Neumann, B.; Dämon, A.; Hogenkamp, D.; Beckmann, E.; Kollmann, J. A laser-autofocus for automatic microscopy and metrology. Sens. Actuators 1989, 17, 267–272.

16. Hansard, M.; Lee, S.; Choi, O.; Horaud, R. Time of Flight Cameras: Principles, Methods, and Applications; SpringerBriefs in Computer Science, Springer Science & Business Media: Berlin, Germany, 2012; p. 95.

17. Dutton, N.; Yang, X.; Channon, K. Time of Flight Sensing for Brightness and Autofocus Control in Image Projection Devices. U.S. Patent 2018091784A1, 29 March 2018.

18. Bathe-Peters, M.; Annibale, P.; Lohse, M.J. All-optical microscope autofocus based on an electrically tunable lens and a totally internally reflected IR laser. Opt. Express 2018, 26, 2359–2368.

19. Wang, Z.; Bovik, A.C.; Lu, L. Why is image quality assessment so difficult? In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2002), Orlando, FL, USA, 13–17 May 2002; pp. 3313–3316.

20. Krotkov, E. Focusing. Int. J. Comput. Vis. 1988, 1, 223–237.

21. Xu, X.; Wang, Y.; Tang, J.; Zhang, X.; Liu, X. Robust Automatic Focus Algorithm for Low Contrast Images Using a New Contrast Measure. Sensors 2011, 11, 8281–8294.

22. Fonseca, E.; Fiadeiro, P.; Pereira, M.; Pinheiro, A. Comparative analysis of autofocus functions in digital in-line phase-shifting holography, Autofocus. Appl. Opt. 2016, 55, 7663.

23. Podlech, S. Autofocus by Bayes Spectral Entropy Applied to Optical Microscopy. Microsc. Microanal. 2016, 22, 199–207.

24. Zhang, X.; Wu, H.; Ma, Y. A new auto-focus measure based on medium frequency discrete cosine transform filtering and discrete cosine transform. Appl. Comput. Harmon. Anal. 2016, 40, 430–437.

25. Zhang, Z.; Liu, Y.; Xiong, Z.; Li, J.; Zhang, M. Focus and Blurriness Measure Using Reorganized DCT Coefficients for an Autofocus Application. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 15–30.

26. Fan, Z.; Chen, S.; Hu, H.; Chang, H.; Fu, Q. Autofocus algorithm based on Wavelet Packet Transform for infrared microscopy. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; Volume 5, pp. 2510–2514.

27. Zhang, X.; Jia, C.; Xie, K. Evaluation of Autofocus Algorithm for Automatic Dectection of Caenorhabditis elegans Lipid Droplets. Prog. Biochem. Biophys. (PBB) 2016, 43, 167–175.

28. Abelé, R.; Fronte, D.; Liardet, P.; Boï, J.; Damoiseaux, J.; Merad, D. Autofocus in infrared microscopy. In Proceedings of the 23rd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA 2018), Torino, Italy, 4–7 September 2018; pp. 631–637.

29. Srivastava, A.K.; Kandpal, N. Design and Implementation of a Real-Time Autofocus Algorithm for Thermal Imagers. In Proceedings of the International Conference on Computer Vision and Image Processing (CVIP 2016), Roorkee, India, 26–28 February 2016; Raman, B., Kumar, S., Roy, P.P., Sen, D., Eds.; Springer: Singapore, 2017; Volume 1, pp. 377–387.

30. Abelé, R.; El Moubtahij, R.; Fronte, D.; Liardet, P.Y.; Damoiseaux, J.L.; Boï, J.M.; Merad, D. FMPOD: A Novel Focus Metric Based on Polynomial Decomposition for Infrared Microscopy. IEEE Photonics J. 2019, 11, 1–17.

31. Eden, M.; Unser, M.; Leonardi, R. Polynomial representation of pictures. Signal Process. 1986, 10, 385–393.

32. Kihl, O.; Tremblais, B.; Augereau, B. Multivariate orthogonal polynomials to extract singular points. In Proceedings of the International Conference on Image Processing (ICIP 2008), San Diego, CA, USA, 12–15 October 2008; pp. 857–860.

33. Kihl, O. Modélisations Polynomiales Hiérarchisées Applications à L’analyse de Mouvements Complexes. Ph.D. Thesis, Université de Poitiers, Poitiers, France, 2012.

34. El Moubtahij, R.; Augereau, B.; Tairi, H.; Fernandez-Maloigne, C. A polynomial texture extraction with application in dynamic texture classification. In Proceedings of the Twelfth International Conference on Quality Control by Artificial Vision (CQAV 2015), Le Creusot, France, 3–5 June 2015; Volume 9534, p. 953407.

35. Bordei, C.; Bourdon, P.; Augereau, B.; Carré, P. Polynomial based texture representation for facial expression recognition, polynomial. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2014), Florence, Italy, 4–9 May 2014; pp. 529–533.

36. El Moubtahij, R.; Augereau, B.; Tairi, H.; Fernandez-Maloigne, C. Spatial image polynomial decomposition with application to video classification. J. Electron. Imaging 2015, 24, 061114.

37. El Moubtahij, R. Transformations Polynomiales: Applications à L’estimation de Mouvements et à la Classification de Vidéos. Ph.D. Thesis, Université de Poitiers, Poitiers, France, 2016.

38. Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

39. Lewis, J.P. Fast Normalized Cross-Correlation. Circuits Syst. Signal Process. 2009, 28, 819–843.

40. Robles-Kelly, A.; Hancock, E.R. String Edit Distance, Random Walks And Graph Matching. Int. J. Pattern Recognit. Artif. Intell. 2004, 18, 315–327.

41. Chen, X.; Huo, H.; Huan, J.; Vitter, J.S. Efficient Graph Similarity Search in External Memory. IEEE Access 2017, 5, 4551–4560.

42. Wang, R.; Fang, Y.; Feng, X. Efficient Parallel Computing of Graph Edit Distance. In Proceedings of the 35th IEEE International Conference on Data Engineering Workshops, ICDE Workshops 2019, Macao, China, 8–12 April 2019; pp. 233–240.

43. Robles-Kelly, A.; Hancock, E.R. Graph edit distance from spectral seriation. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 365–378.

44. Levenshtein, V.I. Binary codes capable of correcting deletions, insertions, and reversals. Sov. Phys. Dokl. 1966, 10, 707–710.

45. Bougleux, S.; Brun, L.; Carletti, V.; Foggia, P.; Gaüzère, B.; Vento, M. Graph edit distance as a quadratic assignment problem. Pattern Recognit. Lett. 2017, 87, 38–46.

46. Lawler, E.L. The Quadratic Assignment Problem. Manag. Sci. 1963, 9, 586–599.

47. Lyzinski, V.; Fishkind, D.E.; Fiori, M.; Vogelstein, J.T.; Priebe, C.E.; Sapiro, G. Graph Matching: Relax at Your Own Risk. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 60–73.

48. Gold, S.; Rangarajan, A. A Graduated Assignment Algorithm for Graph Matching. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 377–388.

49. Hazan, E.; Levy, K.Y.; Shalev-Shwartz, S. On Graduated Optimization for Stochastic Non-Convex Problems. In Proceedings of the 33nd International Conference on Machine Learning (ICML 2016), New York City, NY, USA, 19–24 June 2016; Volume 48, pp. 1833–1841.

50. Zaslavskiy, M.; Bach, F.; Vert, J. A Path Following Algorithm for the Graph Matching Problem. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 2227–2242.

51. Zhou, F.; De la Torre, F. Factorized Graph Matching. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1774–1789.

52. Duchenne, O.; Bach, F.R.; Kweon, I.; Ponce, J. A Tensor-Based Algorithm for High-Order Graph Matching. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2383–2395.

53. Yan, J.; Li, C.; Li, Y.; Cao, G. Adaptive Discrete Hypergraph Matching. IEEE Trans. Cybern. 2018, 48, 765–779.

54. Dutta, A.; Lladós, J.; Bunke, H.; Pal, U. Product graph-based higher order contextual similarities for inexact subgraph matching. Pattern Recognit. 2018, 76, 596–611.

55. Yan, J.; Yin, X.C.; Lin, W.; Deng, C.; Zha, H.; Yang, X. A Short Survey of Recent Advances in Graph Matching. In Proceedings of the 2016 ACM on International Conference on Multimedia Retrieval (ICMR 2016), New York, NY, USA, 6–9 June 2016; pp. 167–174.

56. Emmert-Streib, F.; Dehmer, M.; Shi, Y. Fifty years of graph matching, network alignment and network comparison. Inf. Sci. 2016, 346–347, 180–197.

57. Goyal, P.; Ferrara, E. Graph embedding techniques, applications, and performance: A survey. Knowl.-Based Syst. 2018, 151, 78–94.

58. Felzenszwalb, P.F.; Huttenlocher, D. Distance Transforms of Sampled Functions. Theory Comput. 2012, 8, 415–428.

59. Hough, P.V.C. Method and Means For recognizing Complex Patterns. U.S. Patent 3,069,654, 18 December 1962.

60. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

61. McConnell, R.K. Method of and Apparatus for Pattern Recognition. U.S. Patent 4,567,610, 28 January 1986.

62. Soler, J.D.; Beuther, H.; Rugel, M.; Wang, Y.; Clark, P.C.; Glover, S.C.O.; Goldsmith, P.F.; Heyer, M.; Anderson, L.D.; Goodman, A.; et al. Histogram Of Oriented Gradients: A Technique For The Study Of Molecular Cloud Formation. Astron. Astrophys. 2019, 622, A166.

63. Le Bodic, P.; Héroux, P.; Adam, S.; Lecourtier, Y. An integer linear program for substitution-tolerant subgraph isomorphism and its use for symbol spotting in technical drawings. Pattern Recognit. 2012, 45, 4214–4224.

64. Yang, X.; Prasad, L.; Latecki, L.J. Affinity Learning with Diffusion on Tensor Product Graph. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 28–38.

65. Le, T.N.; Luqman, M.M.; Dutta, A.; Héroux, P.; Rigaud, C.; Guérin, C.; Foggia, P.; Burie, J.C.; Ogier, J.M.; Lladós, J.; et al. Subgraph spotting in graph representations of comic book images. Pattern Recognit. Lett. 2018, 112, 118–124.

66. Cho, M.; Lee, J.; Lee, K.M. Reweighted Random Walks for Graph Matching. In Proceedings of the Computer Vision- ECCV 2010-11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Part V. pp. 492–505.

67. Aziz, F.; Wilson, R.C.; Hancock, E.R. Backtrackless Walks on a Graph. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 977–989.

68. Abelé, R.; Damoiseaux, J.; Fronte, D.; Liardet, P.; Boï, J.; Merad, D. Graph Matching Applied For Textured Pattern Recognition. In Proceedings of the IEEE International Conference on Image Processing (ICIP 2020), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 1451–1455.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).