Abstract

We deal with the use of different metrics in the framework of the Singular Value Optimization (SVO) technique for near-field antenna characterization. SVO extracts the maximum amount of information on an electromagnetic field over a certain domain from field samples on an acquisition domain, with a priori information on the source, e.g., support information. It determines the field sample positions by optimizing a functional featuring the singular value dynamics of the radiation operator and representing a measure of the information collected by the field samples. Here, we discuss in detail and compare the use, in the framework of SVO, of different objective functionals and so of different information measures: Shannon number, mutual information, and Fisher information. The numerical results show that they yield a similar performance.

1. Introduction

Extracting the maximum amount of information on an electromagnetic field over a specified domain from field measurements on an acquisition domain, with a priori information on the source, is relevant in a large number of applications.

In optics, we mention the object restoration and image extrapolation problems, in particular, extrapolation outside the pupil from measurements within the pupil [1] as well as image interpolation [2]. In this framework, quantifying the maximum amount of information on a source acquirable from field measurements has long been studied to provide bounds to the information extraction techniques [3,4,5,6,7,8,9,10,11,12,13].

In [3,4,5], the number of significant degrees of freedom of an image is considered as a measure of information and, in [3], its equivalence with the Shannon number is pointed out. The transinformation measure, exploiting the concept of mutual information, is used in [6,7,8,9] to define the information supplied by data samples in a linear imaging system, also accounting for a priori information, which can be then maximized by suitably positioning the acquired samples. The case of both coherent and incoherent imaging has been dealt with in [10]. A formalism for defining, evaluating, and optimizing the degrees of freedom of an optical system has been introduced in [11] and refined in [12]. Finally, the information gained by performing a measurement on a physical system is assessed by the Fisher information in [13].

In microwave and millimeter-wave applications, this problem is of interest for Near-Field/Far-Field (NFFF) [14,15] or Very Near-Field/Far-Field (V-NFFF) [16] transformations and also for electromagnetic compatibility [17].

To this end, an approach for fast NF characterization, formulating the problem as a linear inverse one, has been developed in [14,15,16,17,18]. In this approach, a linear operator links the source to the field measured over the observation domain, so that the source properties can be reconstructed by a proper inversion procedure. When both spectral and spatial support information about the source are available, a reduction, even a remarkable one, of the number of measurement points can be obtained. In particular, in [14,15], the number and the distribution of the optimal measurement locations are determined by means of the Singular Value Optimization (SVO) procedure [14,15,16,17,18,19], aimed at optimizing a proper functional involving the Singular Value Behavior (SVB) of the relevant operator. The effectiveness of the approach has been experimentally validated for several sources and scanning geometries [14,15], leading to a drastic reduction of the number of measurement samples and of the scanning path length with respect to conventional as well as optimized approaches. In more detail, in [14], a plane-polar acquisition geometry is used and results for a Ku-band horn antenna are presented while, in [15], “quasi-raster” and plane-polar multi-frequency scannings are dealt with and a single-ridged broadband horn antenna is characterized. The optimality of SVO, in terms of its capability to reach the same performance as the optimal, virtual receiving antennas provided by the Singular Value Decomposition (SVD) approach, has been recently shown in [19].

In SVO, the SVB is expressed in terms of a quality parameter. Throughout the SVO literature, such a quality parameter has always been expressed in terms of the Shannon number, which indicates the amount of information collected through the measured field [3]. Obviously, other ways to measure the information can be adopted in the SVO procedure. Accordingly, it is interesting to compare the performance of SVO under different metrics (points of view), a comparison that is missing in the literature.

The novelty of this paper is presenting different metrics for SVO related to different objective functionals handling the SVB of the relevant operator. Besides the Shannon number, mutual information [5,6,7,8,9] and Fisher information [20,21,22] metrics will be adopted in the reported analysis.

For applications other than SVO, different information metrics are indeed often used in preconditioning strategies [23] to improve the convergence properties of quasi-Newton optimizers [24], to quantify the ill-posedness of the reconstruction [25], and to explicitly describe the trade-off between accuracy and resolution [26,27].

The paper is organized as follows. In Section 2, the linear inverse problem dealt with in this paper is introduced in an abstract way for a simplified scalar problem. Section 3 first briefly recalls the SVO technique. Then, the SVO functionals arising from the three considered metrics are detailed and discussed. In Section 4, the results are presented. Finally, in Section 5, conclusions follow and future developments are foreseen.

2. The Problem

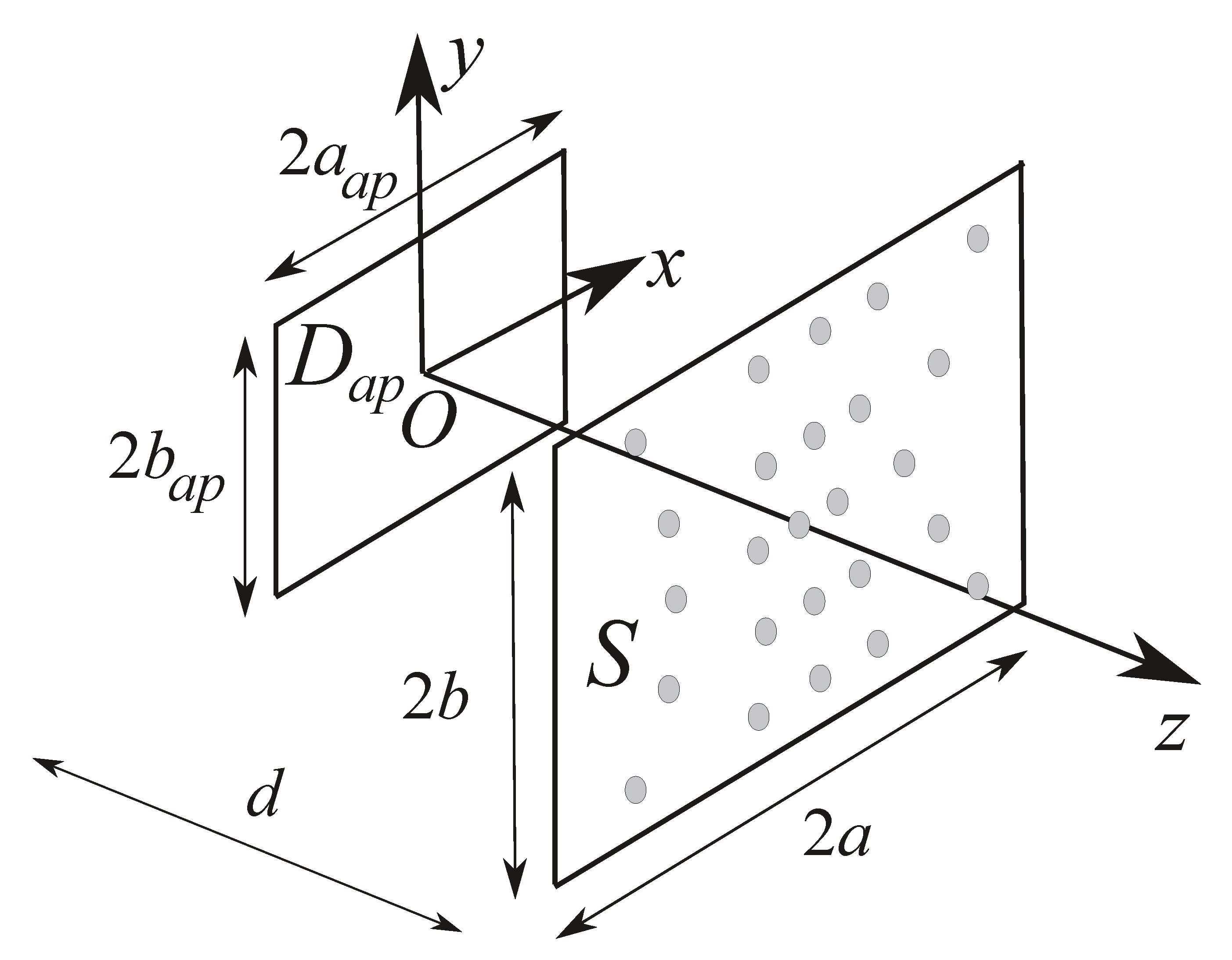

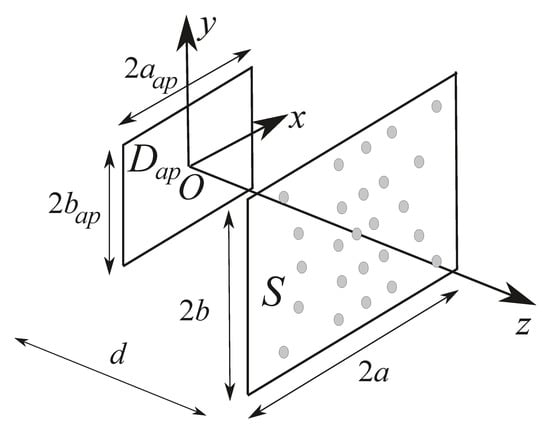

Let us consider the problem in Figure 1 depicting a rectangular aperture with effective shape , sized, located in the plane of a Cartesian reference system , and centred in O. The rectangular aperture represents the Antenna Under Test (AUT).

Figure 1.

Problem geometry.

The radiated field is acquired over a portion S, sized, of a NF plane located in front of the source, orthogonal to the z-axis, and at a distance d from the aperture. A time-harmonic formulation is considered with angular frequency , wavelength , and propagation constant .

The (transverse) aperture field and the transverse field to be measured on S are linearly related, in an abstract form, as:

We assume an ideal, elementary probe sensing individual field components so that, considering the transverse components only, Equation (1) can be re-written as:

Accordingly, we can refer to a scalar problem involving just one Cartesian transverse component:

The linear inverse problem we consider is that of recovering the aperture field from E by solving Equation (3). Once the aperture field is retrieved, the radiated field and, in particular, the FF can be calculated. For the expression of the operator, the reader is referred, for example, to [15].

3. The SVO Approach under Different Perspectives

To solve the problem at hand, two points must be addressed: a discretization strategy making the numerical solution affordable, and the ill-conditioning issue.

3.1. Discretization

To get a discrete version of the problem in Equation (3), as a first step we need to evaluate it at a finite number, say N, of observation points . As a second step, we need to describe the aperture field by a finite number of unknown parameters. To this end, let us observe that, since both and its Plane Wave Spectrum (PWS) have bounded support, the relevant belongs to the finite M-dimensional space spanned by Prolate Spheroidal Wave Functions (PSWFs) , , with a proper space-bandwidth product [14,15,16,17,18], namely:

Details on the representation (4) can be found in [28]. Determining thus amounts to determine the vector .

3.2. Ill-Conditioning

To face ill-conditioning, the “complete” reconstruction of the unknowns must be dismissed and a regularization method must be adopted, e.g., the Truncated SVD (TSVD) or the L-curve strategy [29], to retrieve only the information robustly contained in the data. Furthermore, even though the inverse problem to be solved is linear, a number of data larger than that of the unknowns is required.
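The TSVD regularization mentioned above can be illustrated with a minimal sketch. The matrix `T`, the data vector `e`, the threshold `noise_level`, and all numerical values below are hypothetical and only serve to show how the components robustly contained in the data are retained while the others are discarded; this is not the inversion code used for the results of this paper.

```python
import numpy as np

def tsvd_solve(T, e, noise_level):
    """Truncated-SVD solution of T @ x = e, keeping only SVs above noise_level."""
    U, s, Vh = np.linalg.svd(T, full_matrices=False)
    r = int(np.sum(s > noise_level))          # number of retained components
    coeffs = (U[:, :r].conj().T @ e) / s[:r]  # projections divided by the SVs
    x = Vh[:r].conj().T @ coeffs              # back to the space of the unknowns
    return x, r

rng = np.random.default_rng(0)
# Hypothetical ill-conditioned operator: 12 data, 8 unknowns (more data than unknowns)
U0, _ = np.linalg.qr(rng.standard_normal((12, 8)))
V0, _ = np.linalg.qr(rng.standard_normal((8, 8)))
s0 = 10.0 ** -np.arange(8)                    # SVs decaying from 1 to 1e-7
T = U0 @ np.diag(s0) @ V0.T
# Unknown lying in the span of the first three right singular vectors
x_true = V0[:, :3] @ np.array([1.0, -2.0, 0.5])
e = T @ x_true + 1e-4 * rng.standard_normal(12)  # noisy data
x_hat, r = tsvd_solve(T, e, noise_level=3e-3)
```

With the assumed threshold, only the three largest SVs are retained, so the components associated with the smaller SVs, which would amplify the noise, are filtered out.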

However, before applying regularization, the following question arises: which is the most convenient sampling point distribution from the ill-conditioning point of view? In other words, are all the possible distributions of the sampling points equivalent, or is it possible to select a NF measurement grid able to improve the SVB? Indeed, the SVs of the relevant operator can be properly tuned by changing the number and locations of the sampling points. Henceforth, we will assume the SVs ordered in a decreasing way. In particular, to mitigate the ill-conditioning, the number and the spatial distribution of the NF samples should be chosen as the ones optimizing a functional expressing the degree of conditioning of the operator. This is a way of preconditioning [23], or pre-filtering, or a first form of regularization consisting of shaping the spectrum (SVB) of the operator, and it is what we have called SVO. The quality parameter of the SVB depends on the definition of the functional, but we expect that different quality parameters, if coherently defined, lead to similar results, apart from optimization issues.

It should be finally noticed that the ill-conditioning concept is intimately related to that of the amount of collected information [30]. Accordingly, ill-conditioning can be mitigated by improving the amount of information acquired by the data. In the following, three measures of information will be detailed.

3.3. Condition Number

A first possible definition of the functional is through the condition number of the relevant operator. As known, the condition number is the ratio between the largest and the smallest considered SVs, where r is the number of vector components of the unknowns retained during the inversion. However, what is observed, also by a numerical analysis not shown here for the sake of brevity, is that the smallest retained SV is often scarcely responsive to the sampling locations, opposite to what happens to the larger SVs. In other words, an optimization procedure is typically not able to effectively improve the smallest retained SV, leading to a very poor dynamic. This makes the condition number unreliable as an objective functional to improve the SVB. Fortunately, it is possible to improve the singular values not considered in the condition number. This enables the use of the metrics detailed below.
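As a small illustration of the truncated condition number discussed above, the following sketch computes the ratio between the largest and the r-th SV of a matrix. The function name and the test matrix are hypothetical.

```python
import numpy as np

def truncated_condition_number(T, r):
    """Ratio between the largest SV and the r-th SV (r retained components)."""
    s = np.linalg.svd(T, compute_uv=False)  # SVs in decreasing order
    return s[0] / s[r - 1]

# Hypothetical operator with one very small SV
T = np.diag([4.0, 2.0, 1.0, 1e-6])
cond_3 = truncated_condition_number(T, 3)   # only the three largest SVs retained
cond_4 = truncated_condition_number(T, 4)   # all four SVs retained
```

The figure depends only on two SVs, which is why it cannot reward improvements of the intermediate ones.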

3.4. Shannon Number

To achieve a satisfactory SVB, also improving the degree of conditioning, the following functional, assuming , has been considered up to now in SVO [14,15,16,17,18]:

Functional should be maximized to obtain the sampling. Details on the maximization of can be found, for example, in [18].

To interpret its meaning, let us first observe that, at the numerator, the sum of the SVs is the Shannon number [3]. The subsequent normalization can be easily explained as follows. For the applications of interest, the SVs exhibit a step-like behavior. In other words, they are approximately constant up to a certain index, after which they suddenly fall to zero. Pushing such a behavior to the extreme, let us assume for a moment that, up to that index, the SVs are all equal and that, beyond it, they all vanish. In this case, the normalized sum would furnish the number of non-zero SVs. In the actual case, when the SVs do not exhibit such a sharp behavior, and generalizing the expounded extreme case, the functional (7) can be interpreted as a measure of the number of relevant SVs. Here, by relevant SVs, we mean all those SVs retained by the TSVD, namely, those whose square amplitude is larger than the noise level. This corresponds to the (weighted) dimension of the vector space of the unknowns that can be actually reconstructed from the data [11,12].
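The counting interpretation above can be checked numerically with a minimal sketch. It assumes that the functional is the sum of the SVs normalized by the largest one; the exponent `power` is a hypothetical parameter added here only because a squared-SV variant is an equally plausible reading, and the matrices are illustrative.

```python
import numpy as np

def shannon_functional(T, power=1):
    """Sum of the SVs (raised to `power`) normalized by the largest one."""
    s = np.linalg.svd(T, compute_uv=False)
    return np.sum(s ** power) / s[0] ** power

# Ideal step-like spectrum: five equal SVs, then zeros
T_step = np.diag([2.0] * 5 + [0.0] * 3)
n_step = shannon_functional(T_step)        # counts the non-zero SVs

# Smoother spectrum: the functional becomes a weighted count
T_smooth = np.diag([1.0, 0.9, 0.8, 0.1, 0.01])
n_smooth = shannon_functional(T_smooth)
n_smooth_sq = shannon_functional(T_smooth, power=2)
```

For the step-like spectrum the functional equals the number of non-zero SVs, while for the smoother spectrum the small SVs contribute only marginally.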

3.5. Mutual Information

Using the SVD of , the unknown can be expressed as:

where , , are the right singular vectors. Accordingly, the actual unknowns become the ’s.

By using the SVD expansion, the measured field can be written as:

where the ’s are the left singular vectors of .

In the first place, the presence of additive noise changes Equation (9) into:

where and is the noise vector. Obviously, the noise corrupting the data, depending on its source, can have different statistics and can be differently modeled. Here, we are assuming additive noise.

Let us denote by the Probability Distribution Function (PDF) of the unknown , with , and by the conditional PDF of the data, given the unknown. From such PDFs, we can obtain the unconditional PDF of the data, namely, , and the a posteriori PDF of the unknown, namely, . The quantity expresses the state of knowledge on the unknown , bearing in mind the observed data .

Mutual information is provided by [6]:

In order to determine a manageable closed-form expression for (11), statistical models for the noise and unknown aperture field are needed. A Gaussian model for the noise is easily acceptable, leading to:

Concerning the unknown, in the absence of a priori information, a uniform statistical distribution of the coefficients of the aperture field would be the most natural choice. Such an assumption is typically exploited to provide a statistical interpretation of TSVD in the absence of a priori information, see [31]. However, it should be noticed that, by proper minimization of divergence measures, for example, the Kullback–Leibler divergence [32], a Gaussian distribution can approximate, within certain limits, a uniform one. Consequently, we will assume both Gaussian, namely and:

where and are the covariance matrices of the unknown and of the noise, respectively. According to the above hypotheses, the mutual information I can be written as [6,7,8]:

Under the same hypotheses as in [6,7,8,9], and can be written as and . Accordingly, Equation (14) becomes:

which readily rewrites as:

The expression of the mutual information in Equation (16) has a meaningful interpretation. Indeed, its m-th term compares the power of the m-th vector component of the data, on the SVD basis, with the power of the noise superimposed on the same vector component [11,12]. Accordingly, the m-th term can be interpreted as the contribution to I gathered from the m-th vector component of the unknown when measuring the corresponding vector component of the data [9], and the contributions add up thanks to the exploited hypotheses. Thus, whenever the power of the m-th data component is much smaller than that of the noise, the information associated with the measurement of the m-th component approaches 0. This occurs since the corresponding datum is corrupted by the noise and, when applying the regularization strategy, this component should be filtered out, for example, by a Truncated SVD (TSVD). Therefore, to avoid such a filtering, the power of each data component should be made as large as possible. This corresponds to the idea, already exploited in the Shannon number case, of increasing the SVs as much as possible. This purpose can be pursued by maximizing the mutual information, I.

We stress that the above assumption of uniform statistical distribution of the coefficients of the aperture field and its Gaussian distribution approximation tackle the case when no a priori information is available on the aperture field distribution apart from the rectangular shape and dimensions. In the case when a priori information is available, the above formulation should be updated accordingly and the performance of SVO changes.

Nevertheless, a normalization of the mutual information is convenient, in the same fashion as for the Shannon number case, so that the functional expresses a measure of the (weighted) dimensionality of the actually reconstructable unknown space. For the considered case of mutual information, this can be done by considering the ratio between the contribution gathered by measuring the M vector components of the data and that gathered by measuring the most relevant component, namely, the one associated with the largest singular value (SV) [7]:

Obviously, the dimensionality D depends on the NF sampling points, which can be then chosen to maximize it. Accordingly, using mutual information leads to the optimization of the following functional:

Note that the rationale for optimizing the mutual information functional is essentially the same as that for optimizing the Shannon number functional.
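The mutual information and its normalized version can be sketched as follows. The sketch assumes the standard result for independent Gaussian unknowns and noise with scalar variances, namely that the m-th component contributes one half of the logarithm of one plus the signal-to-noise power ratio of that component. The names `var_unknown` and `var_noise` and the numerical values are hypothetical, and the factor 1/2 (real-valued case) cancels in the normalized ratio.

```python
import numpy as np

def mutual_information_terms(s, var_unknown, var_noise):
    """Per-component contributions 0.5 * log(1 + s_m^2 * var_unknown / var_noise)."""
    return 0.5 * np.log1p(s ** 2 * var_unknown / var_noise)

def mi_dimensionality(T, var_unknown, var_noise):
    """Total mutual information divided by the contribution of the largest SV."""
    s = np.linalg.svd(T, compute_uv=False)  # SVs in decreasing order
    terms = mutual_information_terms(s, var_unknown, var_noise)
    return np.sum(terms) / terms[0]

# Hypothetical spectrum: three SVs well above the noise, one buried in it
T = np.diag([1.0, 1.0, 1.0, 1e-4])
dim = mi_dimensionality(T, var_unknown=1.0, var_noise=1e-2)
buried = mutual_information_terms(np.array([1e-4]), 1.0, 1e-2)[0]
```

The component buried in the noise contributes almost nothing, so the normalized figure is close to the number of components that can be actually reconstructed.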

3.6. Fisher Information

The problem of optimally placing sensors using the Fisher Information Matrix (FIM) has already been addressed in the literature. In particular, in [20,21,22], the maximization of the determinant of the FIM has been used to rank potential sensor locations for on-orbit modal testing. Indeed, sensor locations providing dependent information, which lowers the value of such a determinant, should receive a lower rank and should eventually be deleted. Moreover, in [27], the maximization of the determinant of the FIM has been used to optimize the measurement locations in antenna near-field characterization and, in particular, to determine a probability measure for allocating the probe once the overall number of measurements is fixed. Therefore, we test the use of the Fisher information also in SVO.

Accordingly, under the same hypotheses as in the previous section, we here introduce the FIM, whose generic element is equal to [27,33]:

By using the SVD expansion (9), the ’s can be expressed as:

On resorting to the orthonormality of the ’s, then:

with as the Kronecker symbol.

The introduction of the FIM enables expressing the Cramér–Rao bounds for estimating the k-th component of the unknown by an unbiased estimator [34]. Following [34], the larger the singular values of the FIM, the more accurate the reconstructions.
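As a hedged illustration of the link between the FIM and the Cramér–Rao bounds, the sketch below assumes a diagonal FIM whose k-th entry is the squared k-th SV divided by the noise variance, as would follow from white Gaussian noise and the orthonormality of the singular vectors. Constant factors that depend on the real or complex nature of the data are ignored, and the names and values are hypothetical.

```python
import numpy as np

def cramer_rao_bounds(s, var_noise):
    """Lower bounds on the variance of unbiased estimates, one per component."""
    fim_diag = s ** 2 / var_noise   # assumed diagonal FIM
    return 1.0 / fim_diag           # inverse of a diagonal matrix

s = np.array([1.0, 0.1, 0.01])      # hypothetical SVs
bounds = cramer_rao_bounds(s, var_noise=1e-4)
```

The bound grows as the corresponding SV decreases, so that raising the SVs tightens the achievable accuracy of each component.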

According to the above, in this paper, we consider the maximization of the determinant of the FIM as a further possibility to determine the sampling locations. Since the determinant of the FIM is related to the product of the SVs, the functional to be optimized would be:

However, to prevent very large values and overflow problems during optimization, the log function, which does not change the convexity properties, is first applied to (22). Furthermore, to transform the measure provided by the FIM into a measure of the (weighted) dimensionality of the actually reconstructable unknown space, in the same way as before, (22) is further normalized by its value when only a single data component is exploited. Therefore, the functional to be optimized amounts to:

The considerations made for the Shannon number and mutual information functionals apply also to the Fisher information one.
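A minimal sketch of a log-determinant functional of this kind is given below. It assumes a diagonal FIM with entries equal to the squared SVs divided by the noise variance, so that the log-determinant is a sum of logarithms, and it normalizes by the term of the largest SV. It further assumes a full-rank matrix and a largest SV above the noise level, so that all logarithms are finite and the normalizing term is positive. The function name and values are hypothetical.

```python
import numpy as np

def fisher_functional(T, var_noise):
    """Log-determinant of the assumed diagonal FIM, normalized by its first term."""
    s = np.linalg.svd(T, compute_uv=False)   # SVs in decreasing order
    log_terms = np.log(s ** 2 / var_noise)   # log of each diagonal FIM entry
    return np.sum(log_terms) / log_terms[0]

# Hypothetical spectrum with SVs decaying by a factor of 10
T = np.diag([1.0, 0.1, 0.01])
phi = fisher_functional(T, var_noise=1e-6)

# Equal SVs: the functional counts the components
phi_flat = fisher_functional(np.eye(3), var_noise=1e-2)
```

Working with the sum of logarithms rather than with the product of the SVs avoids the very large or very small values that the determinant itself would take.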

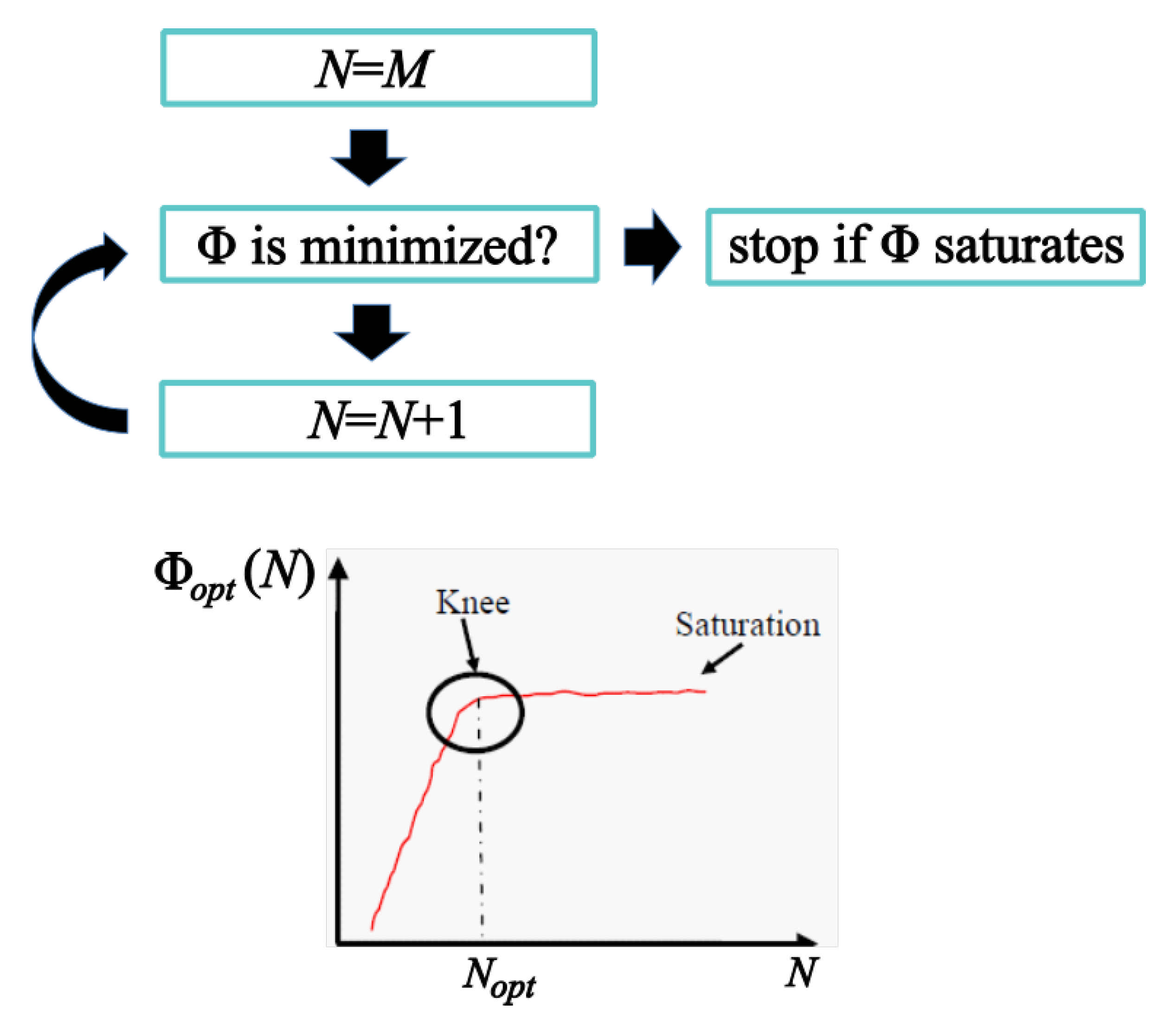

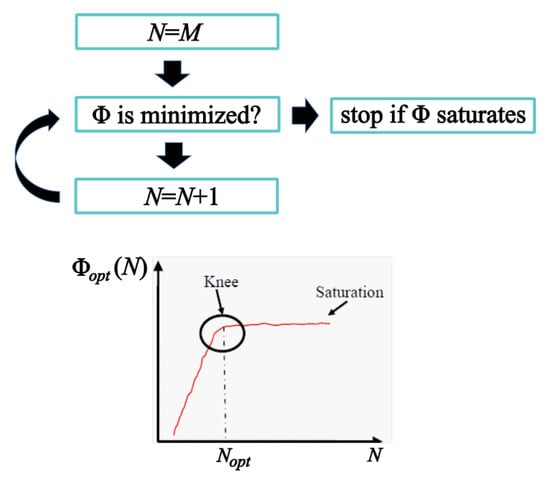

3.7. Determining N and Optimizing the Functionals

Concerning the determination of the number N of sampling points, an iterative approach is adopted, see Figure 2. Starting with N equal to M, N is progressively increased by one unit at each step, namely, a new sample is added, and the spatial distribution of the NF samples optimizing the adopted functional is determined, reaching the optimum functional value for that N. Since the adopted functional provides an information measure, the optimum values are expected to be essentially increasing functions of N. However, a saturation behavior is expected since, beyond a certain threshold, even by adding samples, further information is not gathered. This threshold is the optimal (minimum) number of samples to retrieve as much information as possible about the aperture field from the field samples.

Figure 2.

Flow chart for the determination of the number N of sampling points.
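The iterative determination of N can be sketched as a simple loop with a saturation test. The callable `optimum_for`, the relative tolerance `rel_tol`, and the synthetic saturating curve are all hypothetical: in an actual run, `optimum_for(N)` would perform the optimization of the sample positions for N samples and return the optimum functional value, and the stopping rule actually adopted may differ.

```python
import math

def choose_num_samples(optimum_for, n_start, n_max, rel_tol=1e-2):
    """Add one sample at a time until the optimized functional stops growing."""
    best_prev = optimum_for(n_start)
    for n in range(n_start + 1, n_max + 1):
        best = optimum_for(n)
        # Saturation: the relative gain of the new sample is below tolerance
        if best - best_prev <= rel_tol * abs(best_prev):
            return n - 1, best_prev
        best_prev = best
    return n_max, best_prev

def toy_optimum(n):
    """Synthetic, monotonically saturating stand-in for the optimum value."""
    return 20.0 * (1.0 - math.exp(-n / 4.0))

# Start from N = M = 8 (hypothetical number of unknowns)
n_opt, phi_opt = choose_num_samples(toy_optimum, n_start=8, n_max=40)
```

The tolerance sets where the knee of the curve is declared, so it trades a few extra samples against a small residual gain of information.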

Regarding the maximization of the functionals, the optimization technique must be efficient, since the computational burden can be significant due to the large number of unknowns involved, and effective, since we are interested in the global maximum of the functional and local optimization techniques can get stuck in local optima. In order to simultaneously face both these issues, we resort to the scheme in [14,15,16,17,18]. It should be mentioned that, in [18], a global optimizer was used. However, our experience is that, typically, local optimization, which has been used throughout this paper, already returns satisfactory results.
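To give a feeling for how a local search can improve the SVB by moving the samples, the following toy sketch maximizes a Shannon-type functional over one-dimensional sample positions. It is not the scheme of [14,15,16,17,18]: the operator is a hypothetical matrix of band-limited complex exponentials, the optimizer is a plain stochastic hill climb, and no constraint keeps the positions within a measurement domain.

```python
import numpy as np

def toy_operator(x, num_modes=5, bandwidth=3.0):
    """Hypothetical stand-in for the discretized operator: one row per sample."""
    k = np.linspace(-bandwidth, bandwidth, num_modes)
    return np.exp(1j * np.outer(x, k))

def shannon_of_positions(x):
    """Sum of the SVs normalized by the largest one, for sample positions x."""
    s = np.linalg.svd(toy_operator(x), compute_uv=False)
    return np.sum(s) / s[0]

def hill_climb(x0, functional, step=0.05, iters=500, seed=0):
    """Accept a random perturbation only if it increases the functional."""
    rng = np.random.default_rng(seed)
    x, best = x0.copy(), functional(x0)
    for _ in range(iters):
        candidate = x + step * rng.standard_normal(x.size)
        value = functional(candidate)
        if value > best:
            x, best = candidate, value
    return x, best

x0 = np.linspace(-0.5, 0.5, 6)   # initial, deliberately clustered sampling
x_opt, f_opt = hill_climb(x0, shannon_of_positions)
```

Since a candidate is accepted only when it improves the functional, the returned value is never worse than the initial one, but, as for any local method, it may correspond to a local optimum.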

4. Numerical Results

We present now two test cases to discuss the behavior of SVO when using the three considered information measures, namely, Shannon number, mutual information, and Fisher information. The analysis is performed on numerically generated data to achieve full control of the tests and make a fair comparison among the metrics. In the first test case, say case A, a horn antenna is examined, while, in the second one, say case B, the AUT is a broadside array. In both cases, the radiated field has been obtained numerically by using the commercial software Altair FEKO. The SVO results will be compared against a reference provided by a regularized TSVD inversion exploiting the same PSWFs-based aperture field representation and a standard sampling on S.

4.1. Horn Antenna

In case A, a sized aperture working at 10 GHz and represented with visible PSWFs is considered while the domain S corresponds to a sized NF surface located at . The aperture size will be larger than the physical horn aperture to incorporate the decay to zero region of the horn field on the plane.

The numerically generated data have been corrupted with noise with a Signal to Noise Ratio (SNR) of and the value of has been fixed consequently.
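Corrupting simulated data with noise at a prescribed SNR can be sketched as follows. The sketch assumes circular complex white Gaussian noise and an SNR defined as the ratio between the average signal power and the noise power; the field samples and the 40 dB value are hypothetical and are not the ones used in the test cases.

```python
import numpy as np

def add_noise(field, snr_db, rng):
    """Add complex white Gaussian noise so that the average SNR equals snr_db."""
    signal_power = np.mean(np.abs(field) ** 2)
    noise_power = signal_power / 10.0 ** (snr_db / 10.0)
    # Half of the noise power on the real part, half on the imaginary part
    noise = np.sqrt(noise_power / 2.0) * (
        rng.standard_normal(field.shape) + 1j * rng.standard_normal(field.shape)
    )
    return field + noise, noise_power

rng = np.random.default_rng(0)
field = np.exp(1j * np.linspace(0.0, 20.0, 10000))   # hypothetical field samples
noisy, noise_power = add_noise(field, snr_db=40.0, rng=rng)
measured_snr_db = 10.0 * np.log10(
    np.mean(np.abs(field) ** 2) / np.mean(np.abs(noisy - field) ** 2)
)
```

The returned noise power is the quantity from which a noise variance for the information metrics could be fixed under these assumptions.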

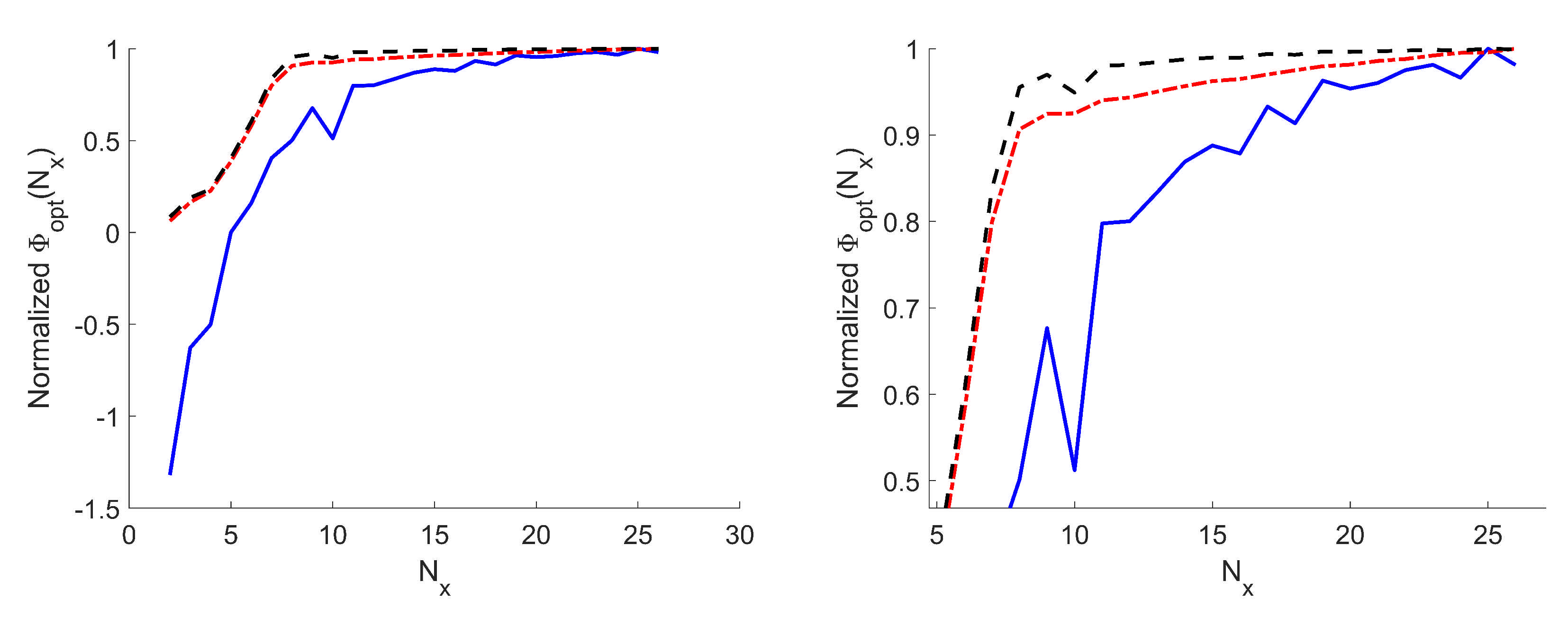

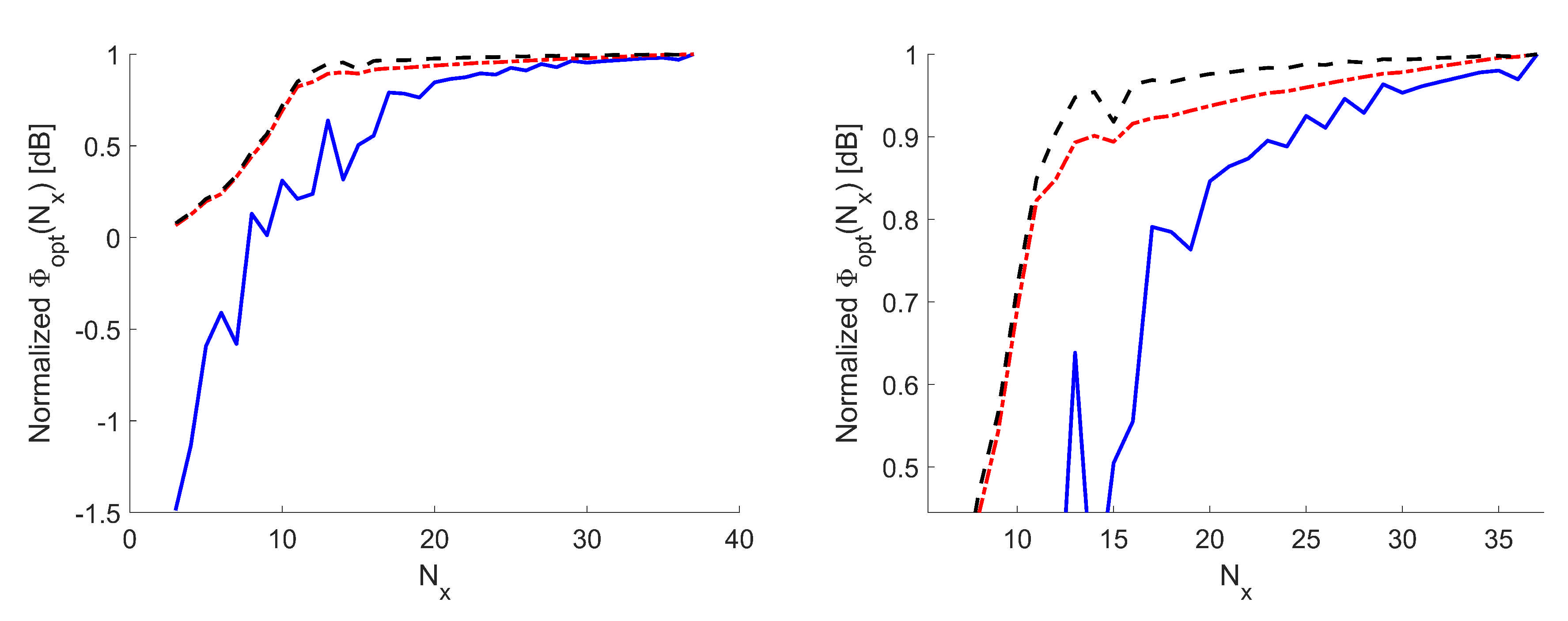

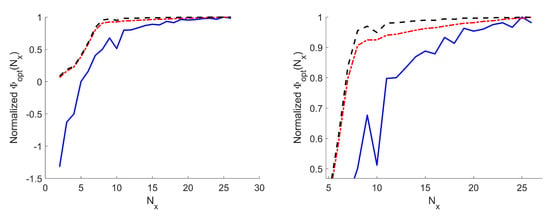

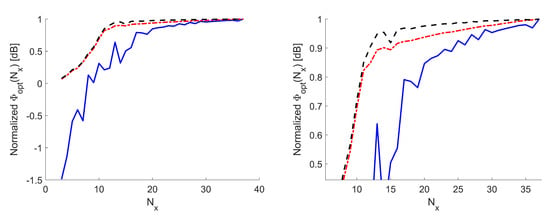

Thanks to the fact that, using the approach in [14,15,16,17,18], the actual sampling grid is obtained as a distortion of a Cartesian grid, to simplify the determination of the optimal value of N, we have set , with and the number of samples along the x- and y-axes, respectively, while the ratio has been set equal to the ratio between the PSWFs needed to expand along x and y, namely . In this way, . Figure 3 illustrates the behavior of in order to proceed to the choice of for case A. As seen in Figure 3, both and are quite regular, while is less smooth due to the local minima issue. For the three metrics, saturation is reached approximately for . In other words, by increasing beyond 19, none of , , significantly increases, meaning that the maximum amount of collectable information is reached with .

Figure 3.

Case A. curves—The curve is reported in a log scale—all curves are normalized to their respective maxima. Blue solid line: Shannon number. Black dashed line: Mutual information. Red dash-dotted line: Fisher information. (Left) full curves. (Right) zoom around the knees.

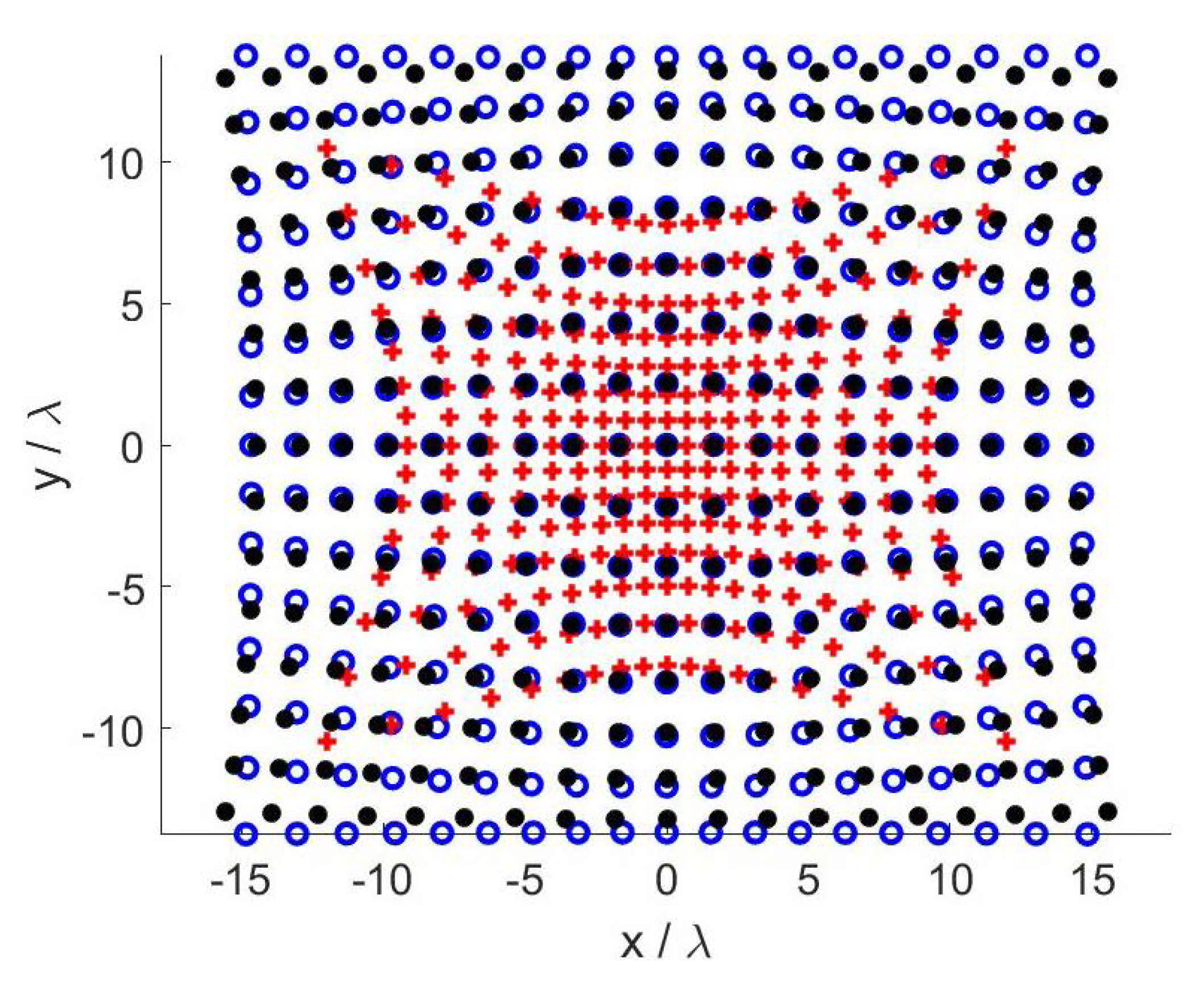

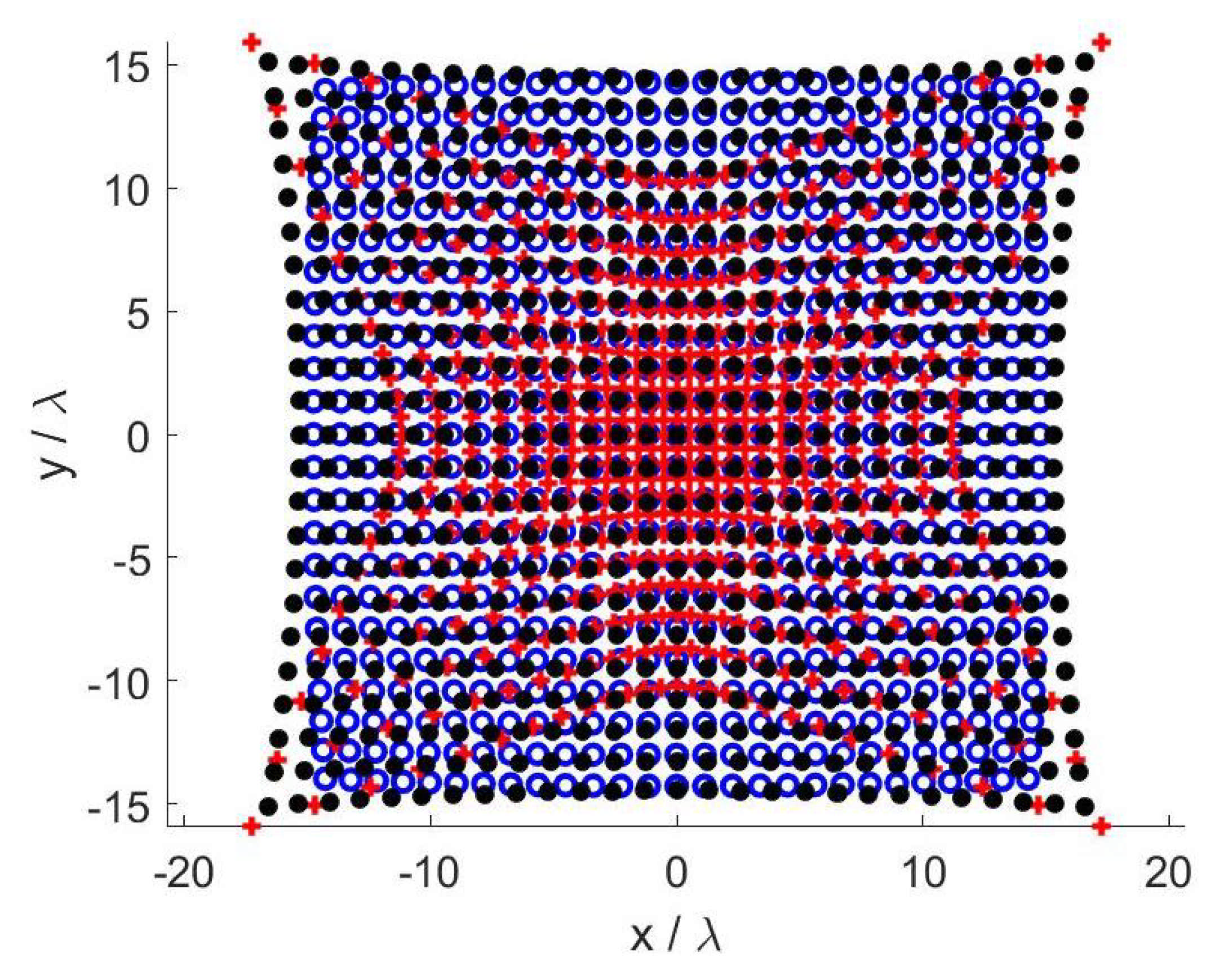

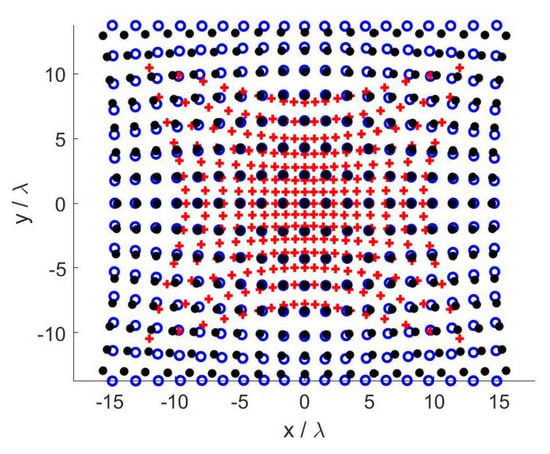

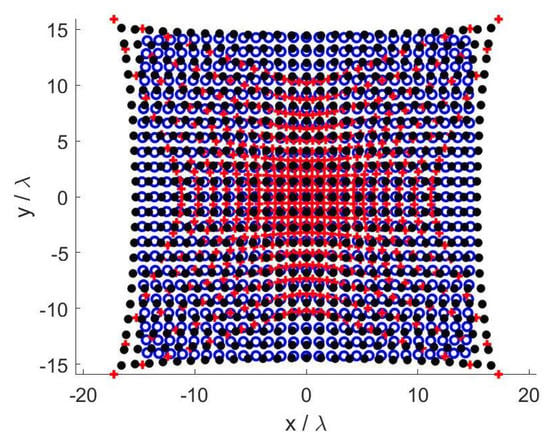

Figure 4 depicts the optimized sampling points for the three SVO applications in case A.

Figure 4.

Case A—Optimized sampling points. Blue circles: Shannon number. Black dots: Mutual information. Red pluses: Fisher information.

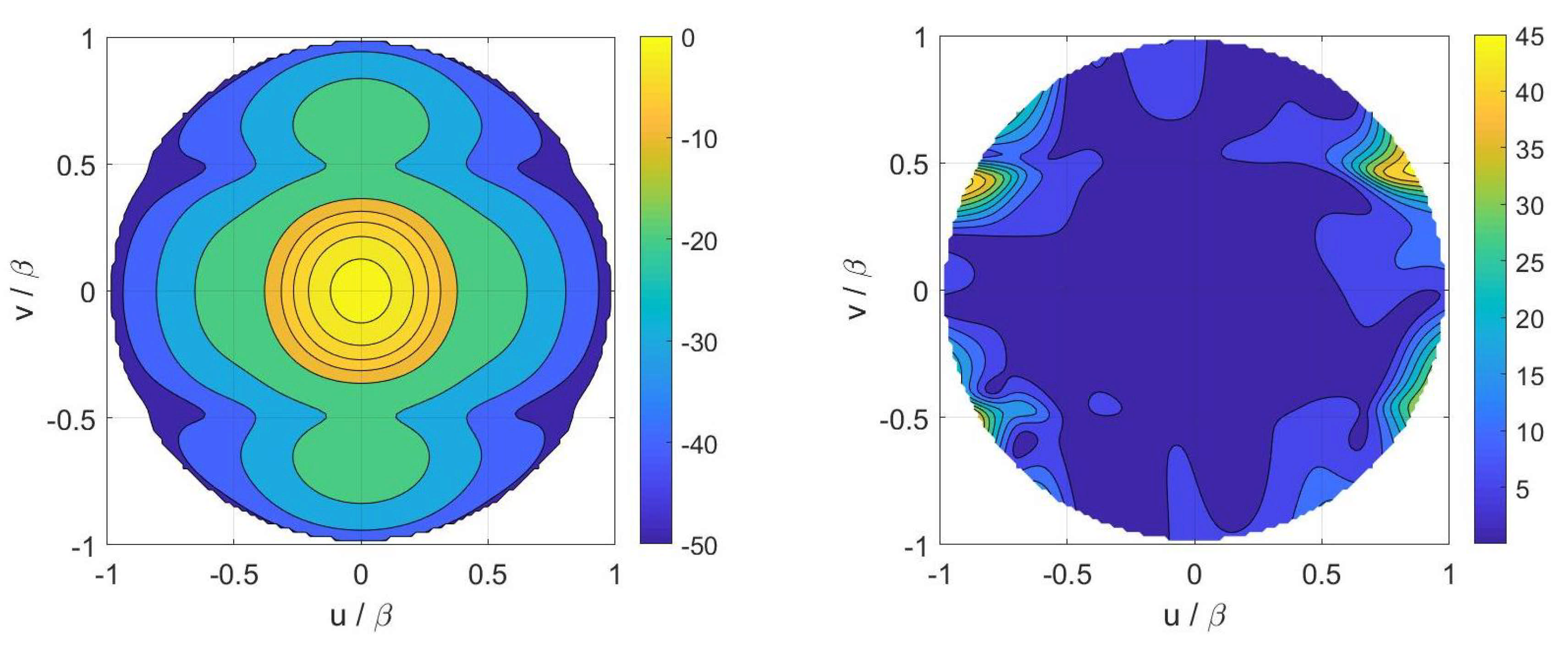

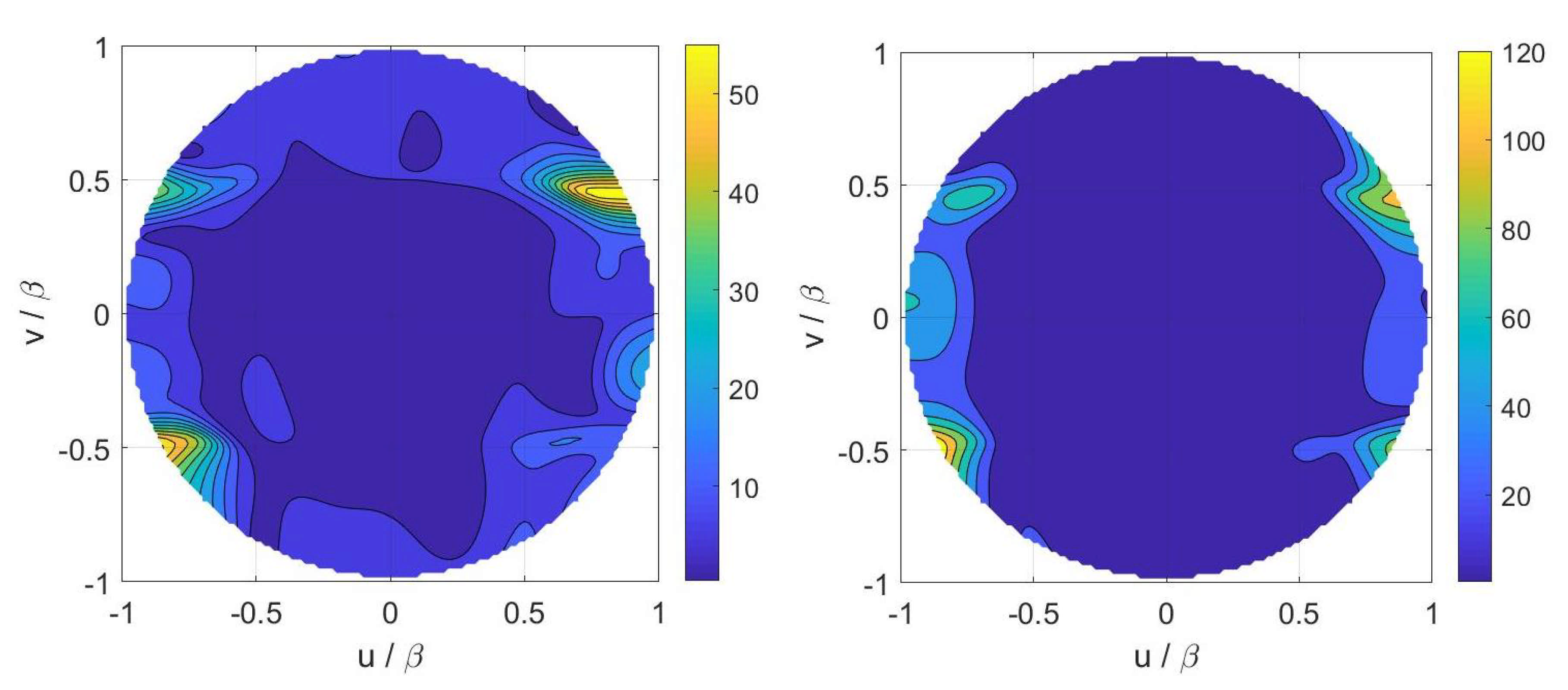

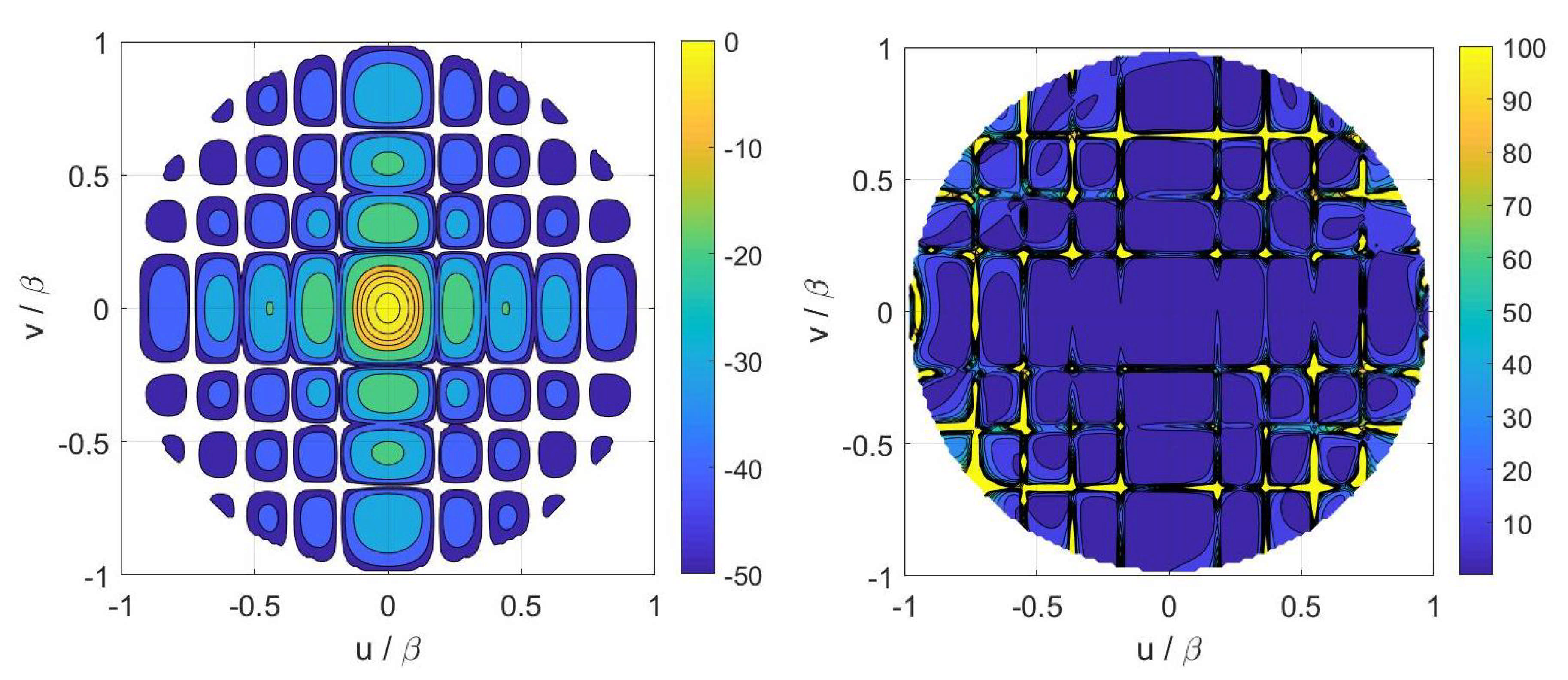

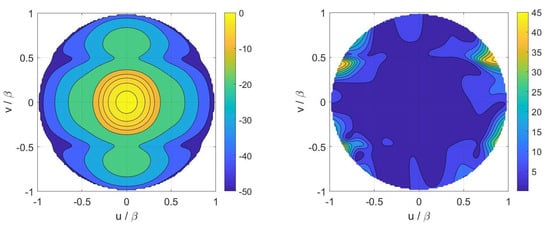

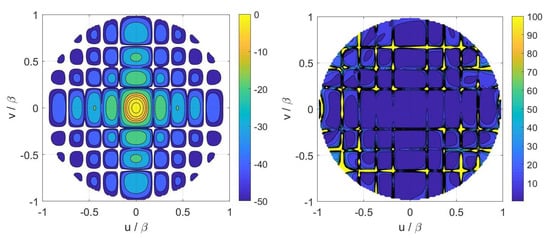

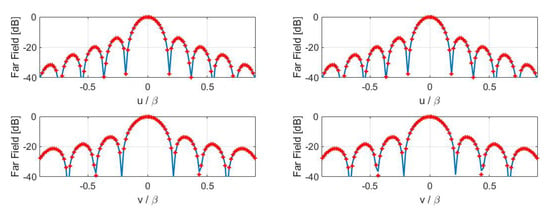

Finally, inversions for case A have been performed by considering the numerical field radiated by a horn antenna having a physical aperture size equal to . In particular, Figure 5 displays the contour plot of the reference Far Field Pattern (FFP) in the plane along with the percentage errors achieved in the Shannon number case while Figure 6 shows those achieved in the mutual information and Fisher information cases. Furthermore, Figure 7 and Figure 8 report cuts, along the spectral u and v axes, of the retrieved FFPs for the three metrics superimposed to the numerical reference. The cuts have been reported for due to the limitations of the aperture model. As it can be seen, satisfactory results are obtained in all the considered cases. Furthermore, the performance is very similar notwithstanding the different distribution of the samples. This should not be surprising since this behavior is related to the analytical properties of the involved fields.

Figure 5.

Case A—(Left) contour plot of the reference FFP. (Right) percentage error of the FFP retrieved in the Shannon number case.

Figure 6.

Case A—(Left) percentage error of the FFP (Far Field Pattern) retrieved in the mutual information case. (Right) percentage error of the FFP retrieved in the Fisher information case.

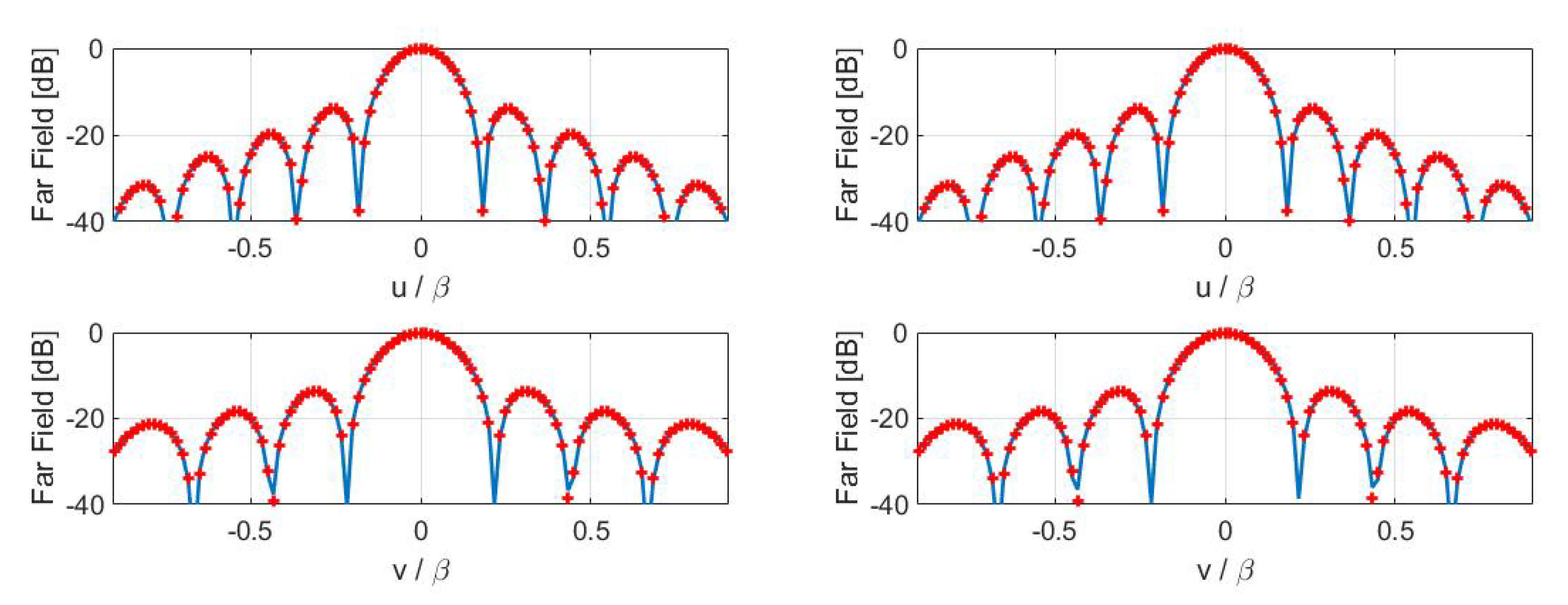

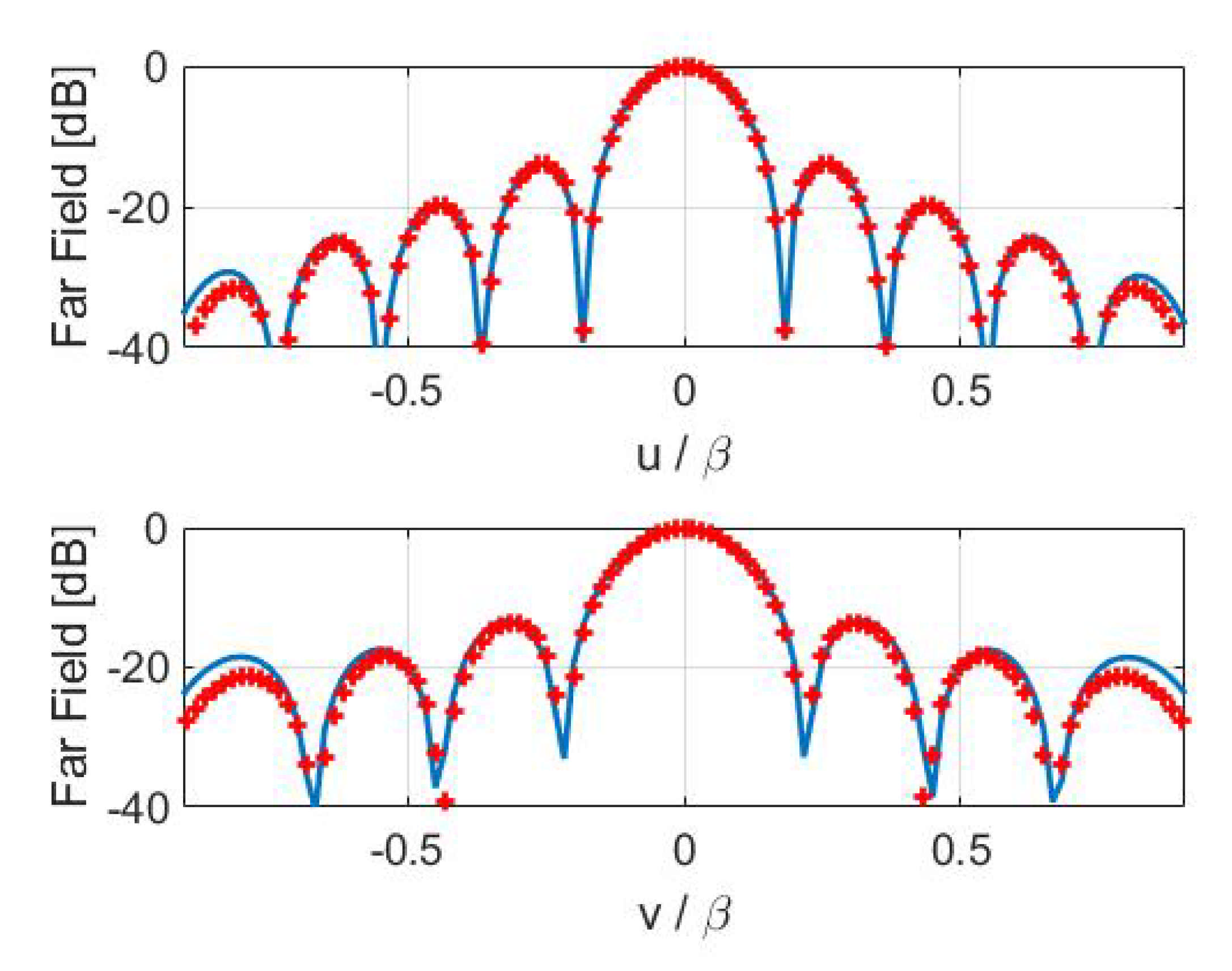

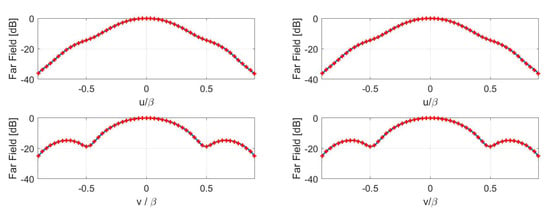

Figure 7.

Case A—Cuts, along the u and v planes, of the reference (red pluses) and retrieved (blue solid line) FFP. (Left) Shannon number. (Right) mutual information.

Figure 8.

Case A—Cuts, along the u and v planes, of the reference (red pluses) and retrieved (blue solid line) FFP. Fisher information.

4.2. Array

In case B, a sized aperture working at 2.4 GHz with an aperture field represented with visible PSWFs is considered. The domain S is sized and located at .

In addition, for this case, the numerically generated data have been corrupted with noise with a SNR of and the value of has been fixed consequently.

Again, we have set and the ratio has been fixed equal to according to the number of PSWFs needed to represent the aperture field along x and y. In Figure 9, the choice of N for the three cases is reported. As before, and are quite regular, while shows small oscillations. For all the three metrics, the adopted value for has been 27. In other words, by increasing beyond 27, none of , , significantly increases thus meaning that the maximum amount of collectable information is reached with . The corresponding distributions of the measurement locations for the three variants are reported in Figure 10.

Figure 9.

Case B. curves. The curve is reported in a log scale—all the curves are normalized to their respective maxima. Blue solid line: Shannon number. Black dashed line: Mutual information. Red dash-dotted line: Fisher information. (Left) full curves. (Right) zoom around the knees.

Figure 10.

Case B—Optimized sampling points. Blue circles: Shannon number. Black dots: Mutual information. Red pluses: Fisher information.

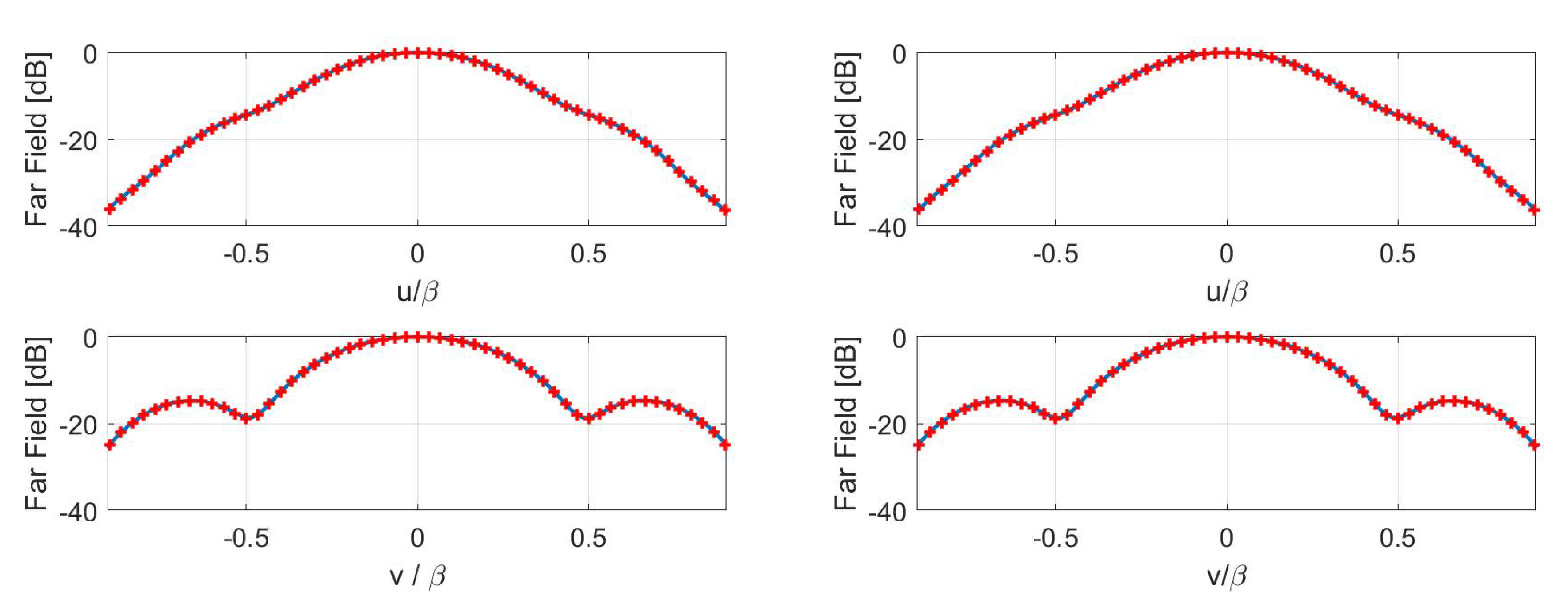

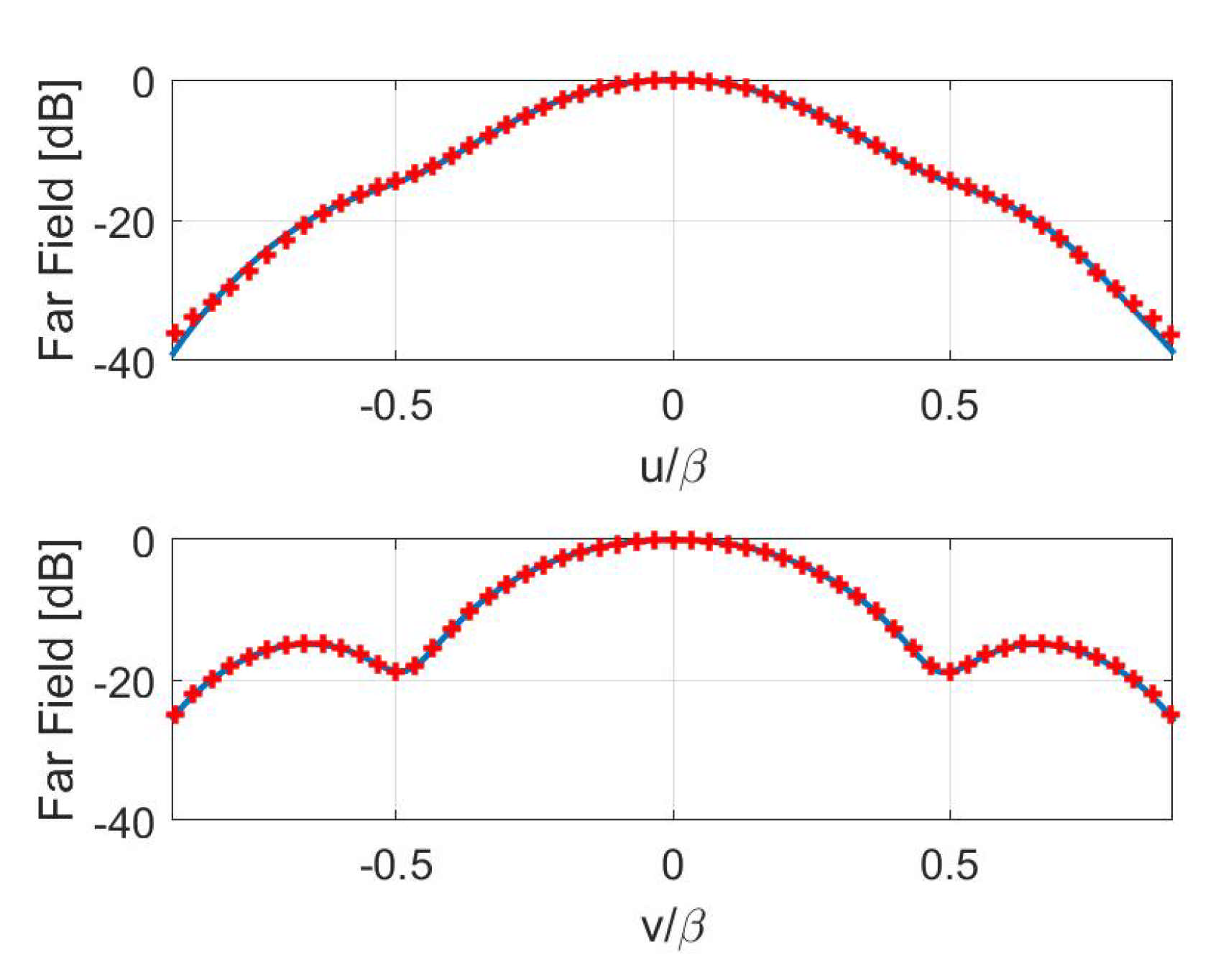

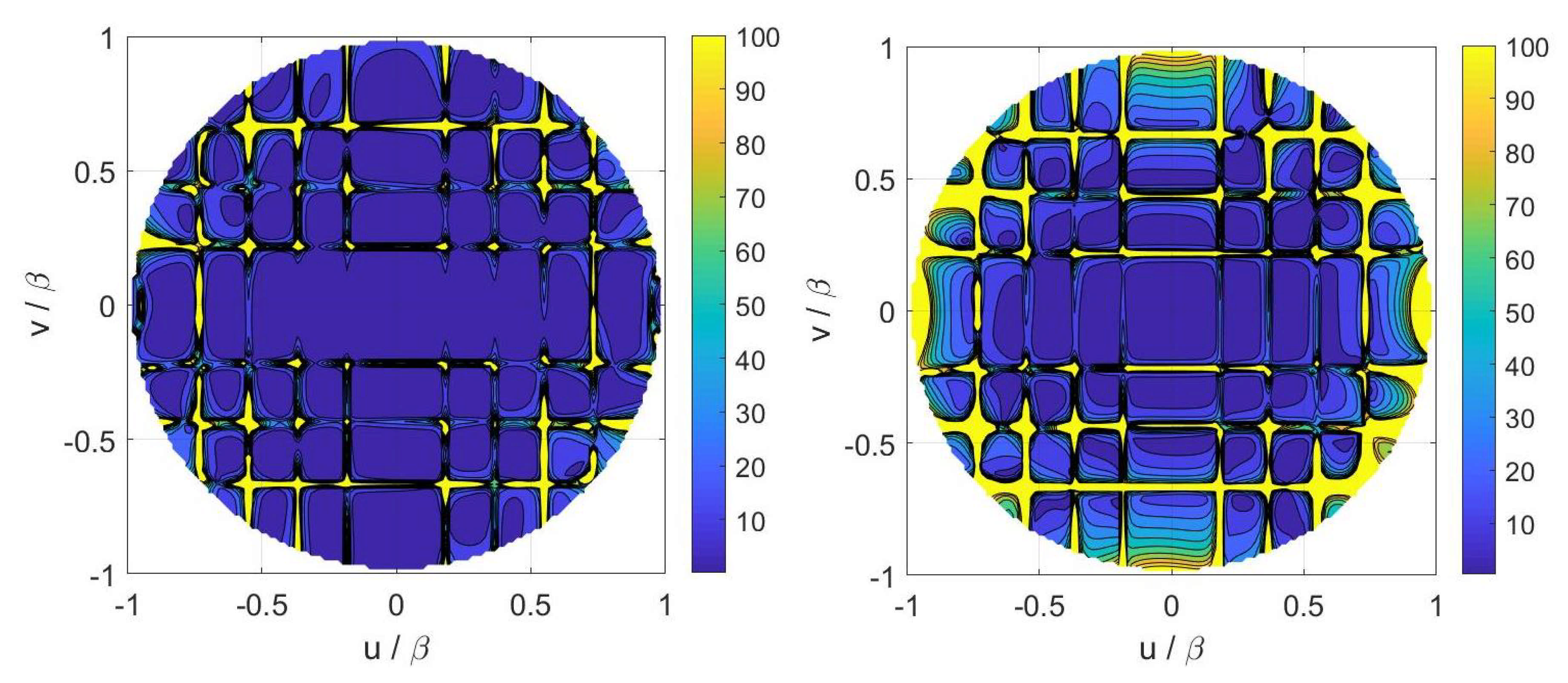

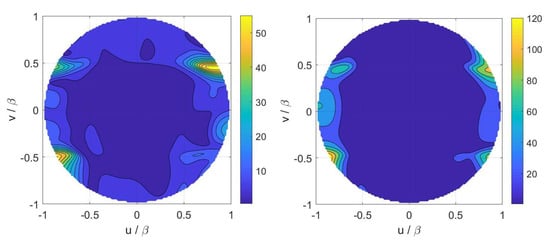

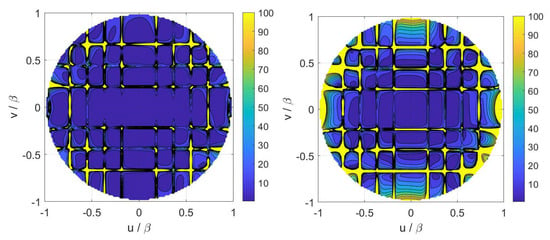

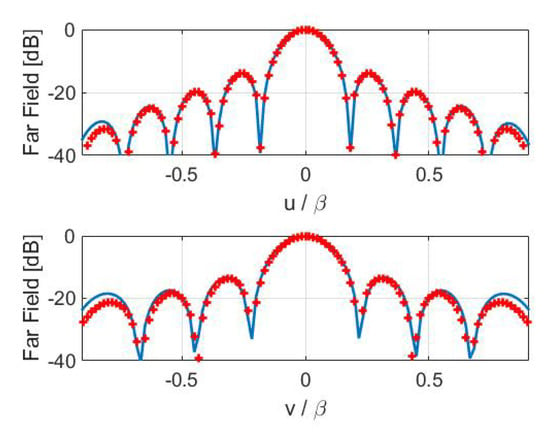

Concerning the inversions for case B, a broadside array of elements with half-wavelength spacing has been simulated. The percentage reconstruction errors for the three approaches are shown in Figure 11, Figure 12, Figure 13 and Figure 14 as for case A. The results confirm that all the metrics have a satisfactory and comparable performance.

Figure 11.

Case B—(Left) contour plot of the reference FFP. (Right) percentage error of the FFP retrieved in the Shannon number case.

Figure 12.

Case B—(Left) Percentage error of the FFP retrieved in the mutual information case. (Right) percentage error of the FFP retrieved in the Fisher information case.

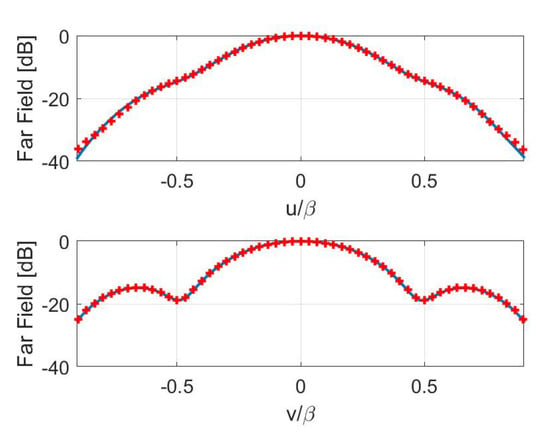

Figure 13.

Case B—Cuts, along the u and v planes, of the reference (red pluses) and retrieved (blue solid line) FFP. (Left) Shannon number. (Right) mutual information.

Figure 14.

Case B—Cuts, along the u and v planes, of the reference (red pluses) and retrieved (blue solid line) FFP. Fisher information.

5. Conclusions and Future Developments

The SVO technique, when applied to a NFFF transformation problem, has the following features:

- It formulates the NFFF transformation problem as a linear inverse one;

- It uses an effective representation of the aperture field of the AUT (PSWFs);

- It determines the “optimal” near-field samples by maximizing the information acquired by the samples;

- The information measure adopted so far has been based on the Shannon number.

The challenge faced in the present paper was:

- To analyze the performance of SVO when different information metrics are considered.

We have therefore reconsidered the SVO approach for the characterization of antennas from NF data under the different perspectives provided by different quality metrics. In particular, besides the Shannon number, we dealt with the quality metrics provided by mutual information and Fisher information, and numerically compared the performance of the three SVO implementations.

In all the worked-out cases, the number of exploited NF samples was the same and the achieved performance was essentially comparable, although the optimized samples exhibited different distributions. This should not be a surprise due to the analytical properties of the radiated NF.

We explicitly mention that, in the authors’ experience, the similar performance achieved by Shannon number, mutual information, and Fisher information does not depend on the particular test case under consideration, but occurs uniformly over a wide range of parameters of the measurement configuration. We also underline that the SVO approach and the analysis herein contained apply to either individual radiating elements or more complex antenna systems and that SVO has been recently applied to tomographic problems in [35].

Future developments of the present investigation include generalizing the approach to phaseless NFFF transformations [14,28] and considering the effect of correlated statistics of unknown and noise.

Author Contributions

All the authors have equally contributed to the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Severcan, M. Restoration of images of finite extent objects by a singular value decomposition technique. Appl. Opt. 1982, 21, 1073–1076.

- Kao, C.M.; Pan, X.; La Riviere, P.J.; Anastasio, M.A. Fourier-based optimal recovery method for anti-aliasing interpolation. Opt. Eng. 1999, 38, 2041–2044.

- Gori, F.; Guattari, G. Shannon number and degrees of freedom of an image. Opt. Commun. 1973, 7, 163–165.

- Stern, A.; Javidi, B. Shannon number and information capacity of three-dimensional integral imaging. J. Opt. Soc. Am. A 2004, 21, 1602–1612.

- Frieden, B.R. Evaluation, design and extrapolation methods for optical signals, based on use of the prolate functions. In Progress in Optics; Wolf, E., Ed.; North-Holland: Amsterdam, The Netherlands, 1971; Volume 9, pp. 311–407.

- Luttrell, S.P. A new method of sample optimisation. Opt. Acta 1985, 32, 255–257. [Google Scholar] [CrossRef]

- Luttrell, S.P. The use of transinformation in the design of data sampling schemes for inverse problems. Inverse Probl. 1985, 1, 199–218. [Google Scholar] [CrossRef]

- Luttrell, S.P. Prior knowledge and object reconstruction using the best linear estimate technique. Opt. Acta 1985, 32, 703–716. [Google Scholar] [CrossRef]

- Blacknell, D.; Oliver, C.J. Information content of coherent images. J. Phys. D Appl. Phys. 1993, 26, 1364–1370. [Google Scholar] [CrossRef]

- Neifeld, M.A. Information, resolution, and space–bandwidth product. Opt. Lett. 1993, 23, 1477–1479. [Google Scholar] [CrossRef] [PubMed]

- Piestun, R.; Miller, D.A.B. Electromagnetic degrees of freedom of an optical system. J. Opt. Soc. Am. A 2000, 17, 892–902. [Google Scholar] [CrossRef]

- Miller, D.A.B. Waves, modes, communications and optics. Adv. Opt. Photon. 2019, 11, 679–825. [Google Scholar] [CrossRef]

- Motka, L.; Stoklasa, B.; D’Angelo, M.; Facchi, P.; Garuccio, A.; Hradil, Z.; Pascazio, S.; Pepe, F.V.; Teo, Y.S.; Řeháček, J.; et al. Optical resolution from Fisher information. Europ. Phys. J. Plus 2016, 131, 1–13. [Google Scholar] [CrossRef]

- Capozzoli, A.; Curcio, C.; Liseno, A. NUFFT-accelerated plane-polar (also phaseless) near-field/far-field transformation. Progr. Electromagn. Res. M 2012, 27, 59–73.

- Capozzoli, A.; Curcio, C.; Liseno, A. Multi-frequency planar near-field scanning by means of SVD optimization. IEEE Antennas Prop. Mag. 2011, 53, 212–221.

- Capozzoli, A.; Curcio, C.; D’Elia, G.; Liseno, A.; Vinetti, P.; Ameya, M.; Hirose, M.; Kurokawa, S.; Komiyama, K. Dielectric field probes for very-near-field and compact-near-field antenna characterization. IEEE Antennas Prop. Mag. 2009, 51, 118–125.

- Capozzoli, A.; Curcio, C.; Liseno, A. Experimental field reconstruction of incoherent sources. Progr. Electromagn. Res. B 2013, 47, 219–239.

- Capozzoli, A.; Curcio, C.; Liseno, A.; Vinetti, P. Field sampling and field reconstruction: A new perspective. Radio Sci. 2010, 45, 31.

- Capozzoli, A.; Curcio, C.; Liseno, A. SVO optimality in near-field antenna characterization. In Proceedings of the 2019 IEEE International Conference on Microwaves, Antennas, Communications and Electronic Systems (COMCAS), Tel-Aviv, Israel, 4–6 October 2019; pp. 1–4.

- Qureshi, Z.H.; Ng, T.S.; Goodwin, G.C. Optimum experimental design for identification of distributed parameter systems. Int. J. Control 1980, 31, 21–29.

- Kammer, D.C. Sensor placement for on-orbit modal identification and correlation of large space structures. J. Guid. Control. Dyn. 1991, 14, 251–259.

- Poston, W.L.; Tolson, R.H. Maximizing the determinant of the information matrix with the effective independence method. J. Guid. Control. Dyn. 1992, 15, 1513–1514.

- Capozzoli, A.; Celentano, L.; Curcio, C.; Liseno, A.; Savarese, S. Optimized trajectory tracking of a class of uncertain systems applied to optimized raster scanning in near-field measurements. IEEE Access 2018, 6, 8666–8681.

- Nordebo, S.; Bayford, R.; Bengtsson, B.; Fhager, A.; Gustafsson, M.; Hashemzadeh, P.; Nilsson, B.; Rylander, T.; Sjöden, T. Fisher information analysis and preconditioning in electrical impedance tomography. J. Phys.: Conf. Ser. 2010, 224, 1–4.

- De Micheli, E.; Viano, G.A. Fredholm integral equations of the first kind and topological information theory. Integr. Equ. Oper. Theory 2012, 73, 553–571.

- Scalas, E.; Viano, G.A. Resolving power and information theory in signal recovery. J. Opt. Soc. Am. A 1993, 10, 991–996.

- Nordebo, S.; Gustafsson, M. On the design of optimal measurements for antenna near-field imaging problems. AIP Conf. Proc. 2006, 834, 234–251.

- Capozzoli, A.; Curcio, C.; D’Elia, G.; Liseno, A. Phaseless antenna characterization by effective aperture field and data representations. IEEE Trans. Antennas Prop. 2009, 57, 215–230.

- Capozzoli, A.; Curcio, C.; Liseno, A. Regularization of residual ill-conditioning in planar near-field measurements. In Proceedings of the 2016 10th European Conference on Antennas and Propagation (EuCAP), Davos, Switzerland, 10–15 April 2016; pp. 1–4.

- Gustafsson, M.; Nordebo, S. Cramér–Rao lower bounds for inverse scattering problems of multilayer structures. Inverse Probl. 2006, 22, 1359–1380.

- Bertero, M. Linear inverse and ill-posed problems. Adv. Electron. Electron Phys. 1989, 75, 1–120.

- Xiong, Y.; Jing, Y.; Chen, T. Abnormality detection based on the Kullback–Leibler divergence for generalized Gaussian data. Control Engineer. Pract. 2019, 85, 257–270.

- Kay, S.M. Fundamentals of Statistical Signal Processing: Estimation Theory; Prentice Hall: Upper Saddle River, NJ, USA, 1993.

- Nordebo, S.; Gustafsson, M.; Khrennikov, A.; Nilsson, B.; Toft, J. Fisher information for inverse problems and trace class operators. J. Math. Phys. 2012, 53, 1–11.

- Capozzoli, A.; Curcio, C.; Liseno, A. Singular Value Optimization in inverse electromagnetic scattering. IEEE Antennas Wirel. Prop. Lett. 2017, 16, 1094–1097.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).