Abstract

Recent advances in the field of neural rehabilitation, facilitated by technological innovation and improved neurophysiological knowledge of impaired motor control, have opened up new research directions. Such advances increase the relevance of existing interventions and allow novel methodologies and technological synergies. New approaches attempt to partially overcome the long-term disability caused by spinal cord injury, using either invasive bridging technologies or noninvasive human–machine interfaces. Research on muscular dystrophies benefits from electromyography and novel sensors that shed light on the underlying neuromotor mechanisms in people with Duchenne muscular dystrophy. Novel wearable robotic devices are being tailored to specific patient populations, such as individuals with traumatic brain injury, stroke, or amputation. In addition, robot-assisted rehabilitation may enhance motor learning and generate movement repetitions by decoding the brain activity of patients during therapy. This is further facilitated by artificial intelligence algorithms coupled with faster electronics. The practical impact of integrating such technologies with neural rehabilitation treatment can be substantial. They could empower nontechnically trained individuals, namely family members and professional carers, to alter the programming of neural rehabilitation robotic setups, to get actively involved, and to intervene promptly at the point of care. This narrative review considers existing and emerging neural rehabilitation technologies from the perspective of replacing or restoring functions, enhancing or improving natural neural output, and promoting or recruiting dormant neuroplasticity. Finally, we discuss future directions for neural rehabilitation research, diagnosis, and treatment based on the discussed technologies and their major roadblocks. This future may eventually become possible through the technological evolution and convergence of mutually beneficial technologies into hybrid solutions.

1. Introduction

Neural rehabilitation aims to restore, to the extent possible, the functionality of impaired neurological circuits or to complement the remaining functionality. Its goal is to enhance patient independence and quality of life by exploiting neural plasticity [1,2]. The predominant focus of the field lies on the restoration of sensorimotor control and functions. Plasticity-based rehabilitation rests on the hypothesis that central nervous system (CNS) and peripheral nervous system (PNS) circuits can be retrained after a lesion in order to facilitate effective rehabilitation [3]. The main neural rehabilitation research approaches can be described as bottom-up procedures: by acting on the affected limbs, one aims to influence the CNS. However, the exact afferent mechanisms of neuroplasticity behind this approach are still unknown [4]. Neural prosthetic grasping hands and legs, support exoskeletons, and body-weight support/robotic treadmill systems all share the bottom-up approach, in which plasticity is driven by the device and by rehabilitation practice. Recently, an emerging research trend has been exploring top-down approaches as a new paradigm for exploiting neuroplasticity by first studying and modulating the state of the brain [4]. Rehabilitation is then driven by the resulting neuroplastic changes [4]. Brain–computer interfaces and virtual reality variants fall in this category, in which neural plasticity is recruited to produce lasting rehabilitation effects. Additionally, current practice focuses on repetitive and intensive training, whereas other principles of motor learning (such as transfer of learning to daily activities, active engagement, and problem solving) are not yet fully explored [5]. Due to its repetitive, intensive, and task-specific nature, robotic rehabilitation is a strong candidate for integrating the abovementioned hypotheses for retraining the CNS [4,5].

The idea of robotic devices for rehabilitation dates back to the beginning of the previous century [1], and the field is still expanding rapidly [6]. Not all relevant technologies, however, have evolved at the same pace. Compared to wearable exoskeletons, the field of neuroprosthetics has experienced significant, longstanding technological advances that have so far led to broader clinical and market applications [7,8,9]. Such devices can facilitate neuroplasticity via targeted and repetitive exercises for the lower [2] and upper limbs [10]. These technologies display an array of advantages. Robotic rehabilitation can reduce the burden on therapists by automating tedious and labor-intensive therapy [2] and by adapting to the specific needs of the targeted individuals [1]. Additionally, it can offer a multisensory rehabilitation experience [11] when combined with other technologies such as virtual and augmented reality (VR/AR) and gaming [12] or haptics [13]. In this way, it can provide additional sensory feedback to facilitate neuroplasticity and become more intuitive for individuals with cognitive deficits [13]. Robotic technologies have demonstrated a clear potential for rehabilitation and daily use. However, the results of robotic rehabilitation as a standalone intervention are limited in terms of clinical and functional daily life outcomes [10,14,15]. This may be attributed to problems with robotic control interfaces [7,10], weak or unexplored synergies between robotics and other emerging technologies, or a poor understanding of human motor control impairment [1,2]. We expand upon these advantages and disadvantages in the relevant subsection of each technology.

A better understanding of the neurophysiological specifics that underlie impaired motor control is therefore particularly important and should also drive technological rehabilitation developments to increase their clinical impact [1]. In Section 2, we discuss the application of robotic neurorehabilitation to a number of indicative impairments. These key conditions limit human motor control and present researchers with a broad range of open-ended problems that illustrate the aims and current clinical challenges of robotic neurorehabilitation. They include stroke (acquired CNS neuron loss of vascular cause), traumatic brain and spinal cord injury (acquired CNS injuries), and amputation (acquired PNS injury and loss of tissue) (see Section 2.1, Section 2.2, Section 2.3 and Section 2.4), as well as neuromuscular disorders such as Duchenne muscular dystrophy (DMD, see Section 2.5). Additionally, we discuss applications in mental disorders, as they may impair motor control through lack of motivation (see Section 2.6). We would like to point out that this is by no means a classification of neurological diseases; rather, it is an attempt to model indicative impairments according to the specific challenges they present to the field of robotic rehabilitation [16,17,18]. As such, while other conditions could be included, most of their characteristics can be modeled by a combination of the selected impairments.

Rehabilitation robotics is a multidisciplinary field that combines numerous technologies [6,19,20], which we discuss in Section 3. Electrodes directly link the impaired CNS and PNS with robotic control interfaces (see Section 3.1.1). Control interfaces such as digital–neural interfaces, electromyography (EMG), and brain–computer interfaces (BCIs) enable patients with impaired motor control to communicate with assistive robotic devices (see Section 3.1.2 and Section 3.1.3). Such devices can be exoskeletons or neuroprostheses, employed either for rehabilitation or daily use (see Section 3.2). VR/AR can create immersive environments for patients undergoing rehabilitation and expand the functionality of rehabilitation robotics beyond the physical world (see Section 3.3). Lastly, artificial intelligence (AI) algorithms can bring all these technologies together and enable the meaningful integration of control interfaces and robotic devices by decoding human motor intention and enabling safe, synergistic human–robot interaction (see Section 3.4).

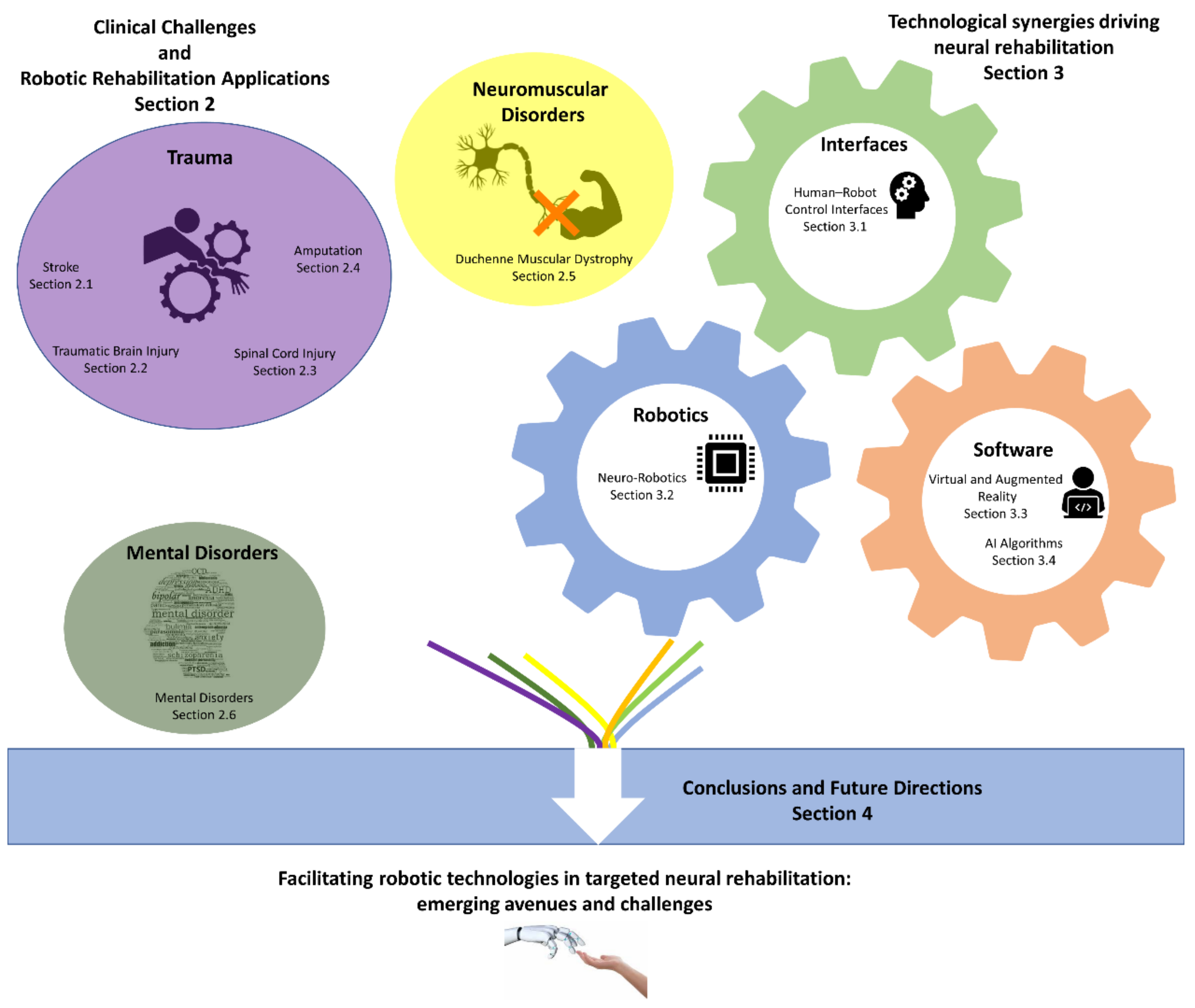

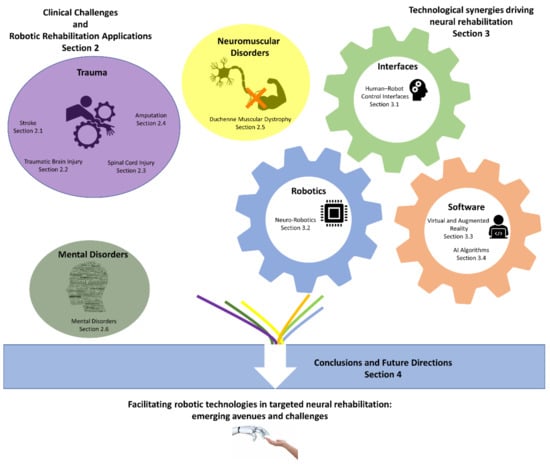

As our knowledge and understanding of impaired human motor control improve [1,2], robotics is receiving considerable attention and funding as a rehabilitation and neuromodulation modality [7]. Additionally, several new technologies are emerging with the potential to assist in the field of neural rehabilitation [7]. Given these facts and trends, it is time to discuss how such recent advances could lead to a convergence of technological and medical insights. This discussion aims to create new research avenues for targeted robotic neural rehabilitation related to a multitude of impairments (see Section 4). Figure 1 illustrates the structure of this article.

Figure 1.

Illustrated schematic overview of the contents of this article and their connections.

In our research, we applied a narrative review approach (see Section 5), which offers a more comprehensive way than a systematic review to organize and analyze the existing literature in the field of robotic neurorehabilitation. To that end, a large number of pivotal articles in peer-reviewed scientific journals were selected, which helped to identify key technologies in neurorehabilitation. In this narrative review, we provide an overview of the published literature on robotic rehabilitation combined with neuroplasticity principles and of a number of key impairments that can benefit from neurorehabilitation. Our aim is to highlight recent advances in robotic rehabilitation technology and insights into impaired motor control, and to offer an integrative view of how such new knowledge from diverse fields can be combined to benefit robotic neurorehabilitation. As a second step, we consider a new generation of robotic rehabilitation technologies that could be implemented on a larger scale and result in better, faster, and less expensive clinical and functional rehabilitation outcomes.

2. Clinical Challenges and Robotic Rehabilitation Applications

2.1. Stroke

Stroke refers to the interruption of blood supply to, or drainage from, the brain, or to the loss of continuity of a brain blood vessel wall with extravasation of blood, either of which leads to brain tissue damage [21]. In 2017, it led to the death of 6.2 million people worldwide [21], and the projected ageing of the population is expected to raise these numbers even further [22]. Stroke survivors often suffer from impaired motor control of their limbs [23]. Despite traditional rehabilitation efforts that try to exploit the neuroplasticity of the brain to fully or partially restore motor control [23], stroke remains the leading cause of chronic disability in the US [23].

Robotics provide a way to deliver effective rehabilitation, either as a standalone modality or in combination with traditional rehabilitation [7,15,24]. This is mainly due to their ability to deliver repetitive training, adjust its intensity, provide a standardized training environment, and reduce the physical burden on physiotherapists [1,2]. However, the use of robotics for stroke rehabilitation in clinical practice is currently rather limited, probably due to their high cost and complex implementation [15]. Additionally, their clinical effectiveness is still unclear [7,14,23], and as a standalone therapy they yield relatively modest results [25]. In contrast, robotic rehabilitation combined with other therapies (functional electrical stimulation (FES), transcranial magnetic stimulation (TMS), transcranial direct current stimulation (tDCS), VR/AR, and Botox injection) shows promising results [24].
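The ability of robots to adjust training intensity, noted above, can be illustrated with a simple performance-based adaptation rule in the spirit of assist-as-needed control. The Python sketch below is a hypothetical example, not a controller from the cited studies: the class name `AssistController`, the target success rate of 70%, and the step size of 0.1 are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AssistController:
    """Hypothetical performance-based (assist-as-needed) adaptation rule."""

    assistance: float = 0.8       # fraction of the movement supplied by the robot (0..1)
    step: float = 0.1             # change applied after each block of trials (assumed)
    target_success: float = 0.7   # assumed success rate that keeps the task challenging
    min_assist: float = 0.0
    max_assist: float = 1.0

    def update(self, successes: int, trials: int) -> float:
        """Adjust assistance from the success rate of the last block of trials."""
        if trials <= 0:
            raise ValueError("trials must be positive")
        rate = successes / trials
        if rate > self.target_success:
            self.assistance -= self.step   # patient coping well: reduce help
        elif rate < self.target_success:
            self.assistance += self.step   # patient struggling: increase help
        # Clamp to the allowed range and round away floating-point noise.
        clamped = min(self.max_assist, max(self.min_assist, self.assistance))
        self.assistance = round(clamped, 6)
        return self.assistance


ctrl = AssistController()
print(ctrl.update(successes=9, trials=10))  # 0.7
print(ctrl.update(successes=3, trials=10))  # 0.8
```

Clinical systems typically adapt assistance from richer measures, such as tracking error or interaction force; the success-rate rule here only shows the closed-loop principle of raising or lowering support as performance changes.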

Rehabilitation robots together with conventional therapy in the clinic can deliver intensive training with beneficial effects, especially early after stroke [1]. Novel developments in sensors, materials, actuation, and artificial intelligence algorithms [1,7] are expected to address their current limitations. Additionally, the development of tailored human–robot interfaces should be an integral priority for further research as it currently presents one of the main limitations for further clinical integration [7].

2.2. Traumatic Brain Injury

Sensorimotor, cognitive, and psychosocial function impairments, either temporary or permanent, are common long-term outcomes of acute insults to the brain caused by external mechanical forces. This condition and its sequelae are covered by the term traumatic brain injury (TBI) [26]. Long-term or lifetime disability and the need for neurorehabilitation due to moderate or severe TBI affect almost 10 million people worldwide annually [27]. Mild TBI, characterized by an altered level of consciousness and possibly brief loss of consciousness or post-traumatic memory loss, usually results in only minor or mild cognitive and functional disorders in the long run [28,29]. Moderate or severe TBI, on the other hand, usually involves widespread brain damage, diffuse axonal injury, and secondary physiological/metabolic alterations, and often results in severe disability in the form of sensorimotor deficits, altered states of consciousness, and neurobehavioral and affective disorders [26,30,31]. TBI patients show abnormalities in their quantitative electroencephalography (EEG) profiles that vary with the severity of their injury [32]. Neurorehabilitation interventions based on quantitative EEG-driven neurofeedback have been considered for treating mild TBI symptoms and improving quality of life and cognitive function [33,34,35]. Cognitive functions, namely attention, inhibition, and memory, can also be the target of robotic neurorehabilitation. Versatile affective robotics have been employed to improve cognitive impairments in TBI patients, especially children [36]. Furthermore, social robots are also useful for driving engagement and motivation in TBI patients during sensorimotor rehabilitation protocols [37].
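To make the idea of quantitative EEG-driven neurofeedback more concrete, the sketch below computes the ratio of theta (4–8 Hz) to beta (13–30 Hz) power in one EEG epoch and returns a reward flag when the ratio falls below a threshold. The band edges, the choice of the theta/beta ratio as the trained feature, and the threshold value are illustrative assumptions, not the protocols of the cited studies; the power estimate is a plain FFT periodogram.

```python
import numpy as np


def band_power(epoch: np.ndarray, fs: float, low: float, high: float) -> float:
    """Summed periodogram power of `epoch` in the band [low, high) Hz.

    Uses an unnormalized FFT periodogram; the scale factor cancels in band ratios.
    """
    freqs = np.fft.rfftfreq(len(epoch), d=1.0 / fs)
    power = np.abs(np.fft.rfft(epoch)) ** 2
    mask = (freqs >= low) & (freqs < high)
    return float(power[mask].sum())


def theta_beta_feedback(epoch: np.ndarray, fs: float, threshold: float = 2.0):
    """Return (theta/beta ratio, reward flag); reward when the ratio is below threshold.

    Band edges and threshold are illustrative assumptions, not a clinical protocol.
    """
    ratio = band_power(epoch, fs, 4.0, 8.0) / band_power(epoch, fs, 13.0, 30.0)
    return ratio, bool(ratio < threshold)


fs = 256
t = np.arange(2 * fs) / fs                     # one 2 s epoch
epoch = 3 * np.sin(2 * np.pi * 6 * t) + np.sin(2 * np.pi * 20 * t)
ratio, reward = theta_beta_feedback(epoch, fs)
print(round(ratio, 3), reward)                 # 9.0 False
```

In a feedback loop, this computation would run on successive epochs, and the reward flag would drive a visual, auditory, or robotic cue to the patient.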

Rehabilitation for TBI aims to improve quality of life and address the specific disorders of TBI populations. Robotics have seen a particular rise in interest for treating sensorimotor deficits, with the aim of enhancing compensatory and recovery-associated neural plasticity mechanisms [16]. Robotic technologies for TBI rehabilitation range from treadmills and exoskeletons to robotic orthoses and hybrid systems. Demonstrating adaptive neural plasticity in addition to functional improvement should be considered an important part of establishing the added value of robotic technologies to neurorehabilitation as tools to promote neural recovery [38]. Moreover, robot-assisted neurological assessment of motor skills may prove sensitive enough to reveal occult visuomotor and proprioceptive deficits that are otherwise undetectable in a traditional neurological examination, and thereby effectively guide rehabilitation interventions [39]. Robotic assistance, especially with wearable devices such as exoskeletons, offers further advantages, including reproducible rehabilitation training and increased support during training, particularly for moderate and severe sensorimotor deficits [40]. The feasibility of a hybrid approach has also been demonstrated: initial reports of using both a passive exoskeleton and FES to actuate a paretic arm and train reaching movements show high satisfaction scores [41]. Finally, integrating VR into neural rehabilitation protocols that use robotic technologies has demonstrated added value for rehabilitation after TBI [42]. VR improves the sense of embodiment and has been tested in the form of CAVE systems (specialized room-wide projection of virtual environments) for improving balance and gait after TBI [43]. Such systems have also been tested for motor skills and affective states in moderate TBI [44], as well as for enhancing neurobehavioral function during Lokomat robotic treadmill training [45]. In conclusion, a variety of assistive, robotic, and complementary neural technologies have been tested with promising results in various TBI populations. Robot-assisted neurorehabilitation for TBI seems to be at a more advanced stage than for other neurological disorders. What may currently be lacking for their scalability and wide implementation is the standardization of neurorehabilitation protocols and interventions.

2.3. Spinal Cord Injury

Motor vehicle accidents and falls account for almost 8 out of 10 incidents of spinal cord injury (SCI). This condition predominantly affects young male adults, although the average age at injury has reportedly increased in recent years to 42 years in the US [46]. Depending on the level of injury and the extent of neurological damage, life expectancy ranges from approximately 9 to 35 years for patients injured at the age of 40, and from 20 to 53 years for patients injured at the age of 20. This results in many years of disability with a decreased overall health status [47,48], an increased burden on patients' families and social environment [49], and greatly increased private and public health care costs and living expenses [46,50].

Rehabilitation of SCI victims remains a significant challenge; it aims to restore independence in activities of daily living (ADL) and walking [51]. Still, poor neurological outcomes are common, with almost one-third of injuries resulting in permanent complete paraplegia or tetraplegia [46]. Robotics are now part of the mainstay of rehabilitation interventions for SCI victims [52] in the form of robot-assisted gait training, body-weight support treadmill systems, and exoskeletons [53,54,55], especially for patients with incomplete paralysis. Anthropomorphic robotics have also been used to study sensorimotor network function and to demonstrate BCI feasibility for SCI patients. Positive neurological outcome and motivation were shown to play a role in robotic control via a BCI channel [56].

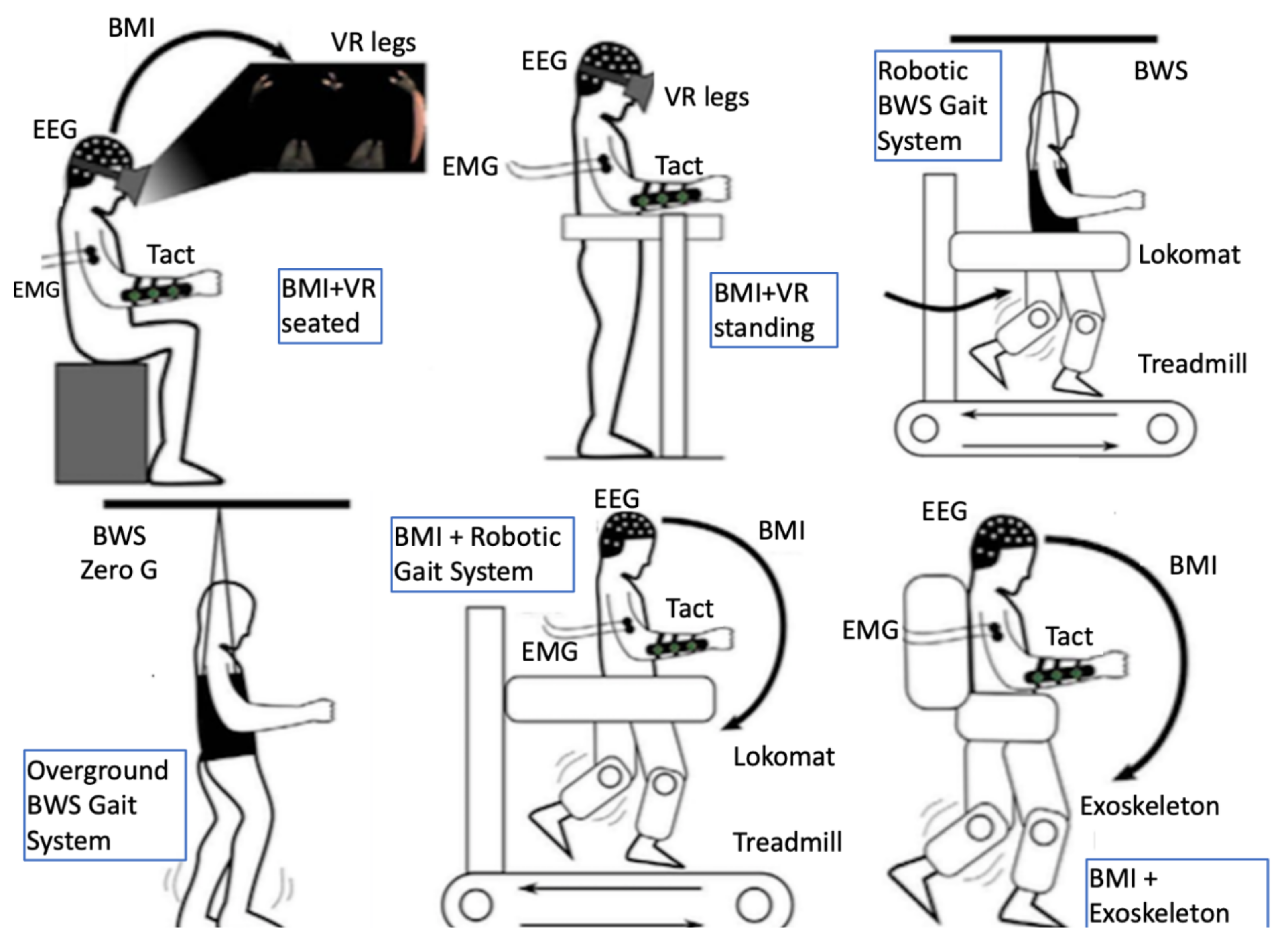

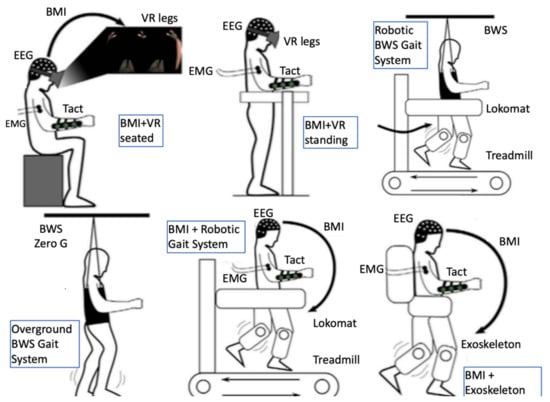

It has been demonstrated that adaptive plasticity in the sensorimotor networks of the brain and spinal cord can be promoted even in chronic stages of the condition [57]. Recently, neuroplasticity was demonstrated even in complete injuries: functional fibers crossing the injury level can be recruited into plastic changes that may lead to neurological improvement through intensive multimodal interventions [58]. The sensorimotor network is affected dynamically following an SCI [59], and research targets for promoting adaptive plasticity therein span different domains (for an overview, see Fakhoury et al. [57]), including molecular and regenerative medicine [60,61], brain and spinal cord stimulation [62,63,64], and multimodal immersive man–machine interfaces [58,65]. The latter approach involves most of the facilitating robotics-related technologies presented later in this paper, such as BCIs, VR/AR, exoskeletons, and EMG-based assistive orthoses (Figure 2). This multimodal approach combines the advantages of maximizing immersiveness and minimizing invasiveness, and it is currently arguably closer to providing a solution for the neural rehabilitation of SCI victims [66] than other approaches aiming for a definitive cure.

Figure 2.

Multiple immersive man–machine interfaces and a combination of facilitating technologies have been demonstrated to have a synergistic effect in promoting adaptive neuroplasticity in chronic complete spinal cord injury (figure modified from Donati et al. 2016 [58]). BMI, brain–machine interface; BWS, body weight support; EEG, electroencephalography; EMG, electromyography; Tact, tactile feedback; VR, virtual reality.

Another key goal within reach of technological advances in this field is the unobtrusiveness of robotic devices aimed at upper limb rehabilitation and ADL assistance, which is what the category of “soft robotics” targets [67]. Body–machine interfaces that use neurophysiological signals such as EEG and EMG as input and FES as output to control muscle activity are also under consideration as assistive technologies for both lower limb [66] and upper limb rehabilitation [68] after SCI. This approach extends the concept of “soft robotics” and “exosuits” by reciprocally incorporating the patient’s body into the control scheme and the assistive technology into the body schema.

2.4. Amputation

Nearly 2.1 million people were living with amputation in the United States in 2019, which is 0.63% of the population, and that number is estimated to double by 2050 [69]. Worldwide estimates are difficult to obtain, largely due to the variability in the causes of amputation and underreporting in less-developed countries [70]. Prosthetics and bionics are usually employed to assist people with amputation [7]. To secure optimal prosthetic benefit for traumatic amputees, prompt prosthetic fitting and proper rehabilitation should be ensured, in addition to post-traumatic counselling [71].

Important progress has been made over the past several years in creating prosthetic technologies that aim to restore function to those who suffer from the partial or full loss of their limbs [70]. Advances that allow more amputees to lead independent lifestyles include improvements in sources of power and electronic controls, socket fabrication and fitting techniques, components, and suspension systems [72]. Rehabilitation for upper limb amputees has also benefited from technological advances such as myoelectric and proportionally controlled devices and elbow joints. For lower limb amputees, advances now in use include ankle rotators, electronically controlled hydraulic knees, energy-storing feet, and shock absorbers [72].
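Proportional myoelectric control, mentioned above, can be sketched as follows: the EMG signal is rectified and low-pass filtered into an envelope, which is then mapped linearly between a resting level and a maximum voluntary contraction (MVC) level onto a velocity command. The function names, the 3 Hz cutoff, and the linear mapping are assumptions for illustration; commercial prostheses use their own, often proprietary, processing.

```python
import numpy as np


def emg_envelope(emg: np.ndarray, fs: float, cutoff_hz: float = 3.0) -> np.ndarray:
    """Full-wave rectify, then smooth with a first-order (exponential) low-pass filter."""
    alpha = 1.0 - np.exp(-2.0 * np.pi * cutoff_hz / fs)   # smoothing coefficient
    envelope = np.empty(len(emg))
    state = 0.0
    for i, x in enumerate(np.abs(emg)):                    # rectification
        state += alpha * (x - state)                       # one-pole low-pass update
        envelope[i] = state
    return envelope


def proportional_command(envelope, rest_level, mvc_level, max_velocity=1.0):
    """Map the envelope linearly from [rest, MVC] to [0, max_velocity], clipping outside."""
    activation = (np.asarray(envelope) - rest_level) / (mvc_level - rest_level)
    return max_velocity * np.clip(activation, 0.0, 1.0)


# Usage: calibrate rest_level and mvc_level per user, then stream envelope values.
cmd = proportional_command([0.0, 0.05, 0.55, 2.0], rest_level=0.05, mvc_level=1.05)
print(cmd)
```

The clipping keeps the command at zero below the resting level, which acts as a simple dead zone against unintended motion, and saturates it at the MVC level.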

Prosthetic types should be selected based on a patient’s individual needs and preferences. There are indications that body-powered prostheses have benefits in durability, frequency of adjustment, maintenance, training time, and feedback, although their control can still be improved. Myoelectric prostheses are better established for light-intensity work, and they can alleviate phantom-limb pain and improve cosmesis [73].

However, many challenges remain in understanding and addressing the barriers to patient adoption of technology, such as the complexity of neural changes after amputation and the still enigmatic fundamentals of motor control in amputees [70]. Understanding how these critical factors interact with device designs and emerging technology could have a great impact on the functional outcomes of patients with limb amputation [72].

2.5. Duchenne Muscular Dystrophy (DMD)

DMD is an X-linked progressive neuromuscular disease that results in muscle fiber degeneration, motor deficiencies [74], and shortened life expectancy [75]. DMD is the most common form of muscular dystrophy [76], with an incidence of 1 in 4000 male births [77]. The population of people with DMD is expected to grow due to technological advances that have significantly increased their life expectancy [78]. However, their independence-related quality of life remains poor [77].

Robotic exoskeletons have the potential to increase the quality of life of people with DMD by supporting the upper limb and thereby improving their independence [79]. Such exoskeletons may help prevent disuse of the limb and tissue degeneration [80]. Additionally, individuals with DMD need robotic devices for daily assistance and for a significant amount of time [81]. In recent years, robotic devices supporting the arm [82], the trunk [83,84], and the hand [85,86] of people with DMD have been developed and tested with promising results. Novel sensors [84,85] have been integrated with novel robotic designs [83,86] to achieve robust interfacing between the user and the robotic device.

Studies on the use of robotic exoskeletons in DMD are currently few and are limited by small numbers of participants [82,87]. More extensive longitudinal studies could give further insight into how DMD affects motor control in different individuals, given their high functional heterogeneity [88]. Furthermore, novel control interfaces customized to the needs of individuals with DMD need to be developed to achieve robust and intuitive interfacing with assistive devices [74,87]. A complete and multidisciplinary rehabilitation approach may create a favorable environment for robotic rehabilitation in DMD [89,90].

2.6. Mental Disorders

Immersive affective applications based on robotics and related technologies have long been considered in the study and treatment of psychiatric disorders [42,91]. The immersiveness of affective applications has been shown to influence motivation as well as the efficacy of neurotherapy [92,93]. Computational modelling of affect already spans two decades [94,95], and a variety of humanoid or anthropomorphic robots have been tested in the treatment of cognitive or developmental disorders, among others [91]. Nonetheless, affective and social robots still show limitations in scope, engagement, and validation, and they are not yet universally applied in mental healthcare [96,97]. One approach to improving the affective impact of robotics is repurposing neurotechnologies in the form of serious gaming [98]. An example of neurotechnology repurposed for affective study is a BCI with a 3D graphic environment implemented in the Unity engine [99]. It uses real-time functional magnetic resonance imaging (fMRI)-based neurofeedback to study emotion regulation. Exploring such synergistic effects and combinations could help develop affective robotics that play a definite role in the multimodal treatment of psychiatric disorders [100].

3. Technological Synergies Driving Neural Rehabilitation

3.1. Human–Robot Control Interfaces

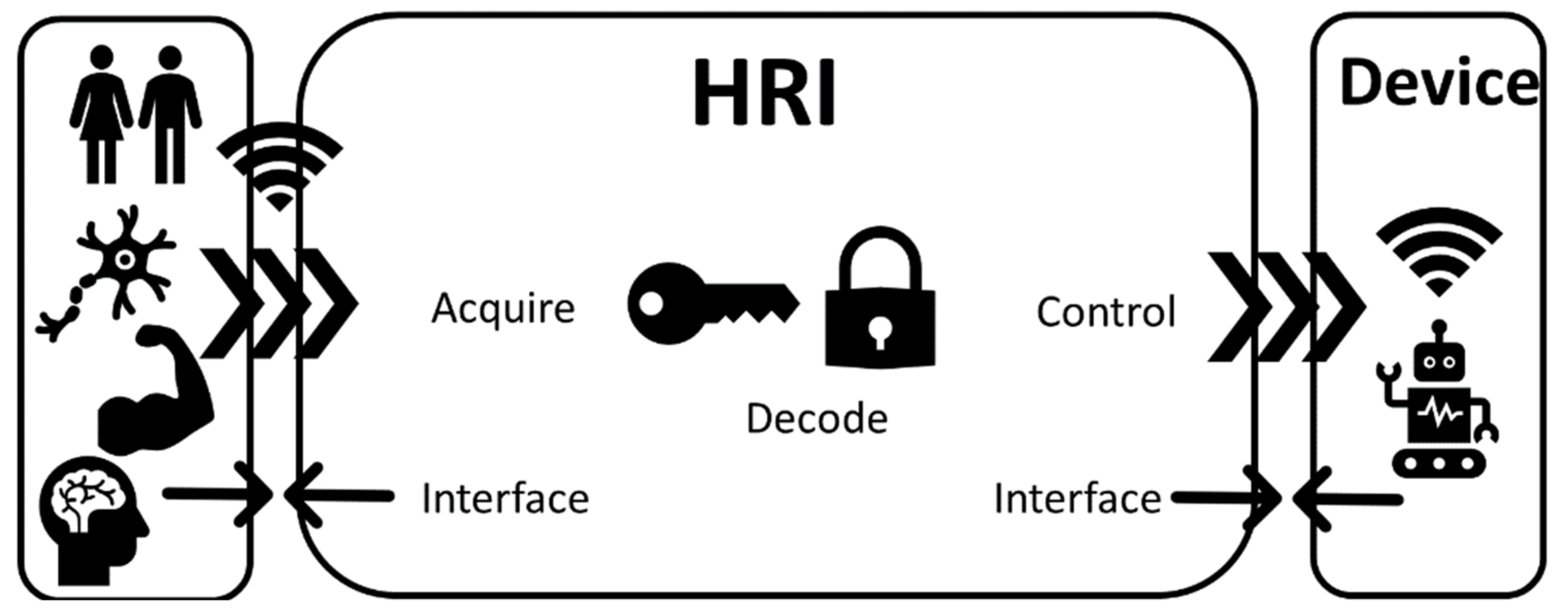

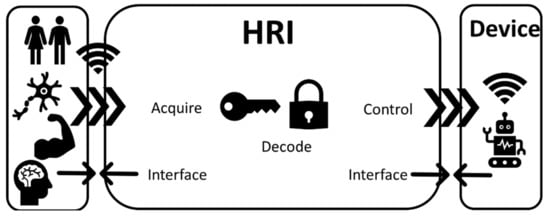

Human–robot control interfaces (invasive and noninvasive) are technologies that acquire and decode the intention of a human user and communicate it to a device via control commands (Figure 3). Such a device can be a wearable exoskeleton or a prosthetic/bionic device [7]. The technologies covered in this section interface either directly (digital–neural interfaces) or indirectly (EMG interfaces) with the PNS, or with the CNS (BCIs) [101,102]. They use a physical interface via electrodes, which, depending on their invasiveness, can be classified as either implantable (applied directly to the brain, muscle, or nerve) or surface (applied on the skin) devices [102].

Figure 3.

Human–robot interfaces (HRIs) interface the human (brain, muscles, and nerves) with a device by acquiring biological signals, decoding them, and translating them into control commands for various assistive, rehabilitation, or prosthetic devices.
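The three stages in Figure 3 (acquisition, decoding, and translation into control commands) can be expressed as a small Python pipeline. The threshold decoder, the `hand` device name, and the velocity values are hypothetical placeholders; a real interface would substitute a trained decoder and the device's own command protocol.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Command:
    device: str      # hypothetical device identifier
    velocity: float  # normalized velocity command (hypothetical units)


def decode_threshold(samples: Sequence[float], threshold: float = 0.5) -> str:
    """Hypothetical decoder: report 'close' when mean absolute amplitude exceeds threshold."""
    mav = sum(abs(s) for s in samples) / len(samples)
    return "close" if mav > threshold else "rest"


COMMANDS = {"close": Command("hand", 0.5), "rest": Command("hand", 0.0)}


def translate_intention(intention: str) -> Command:
    """Map a decoded intention label onto a device command."""
    return COMMANDS[intention]


def run_interface(
    acquire: Callable[[], Sequence[float]],
    decode: Callable[[Sequence[float]], str],
    translate: Callable[[str], Command],
) -> Command:
    samples = acquire()          # 1. acquire a window of biosignal samples
    intention = decode(samples)  # 2. decode the user's intention
    return translate(intention)  # 3. translate it into a control command


print(run_interface(lambda: [0.9, -0.8, 0.7], decode_threshold, translate_intention))
# Command(device='hand', velocity=0.5)
```

Keeping the stages as interchangeable functions mirrors the modularity of the figure: the same acquisition and translation steps could be reused with an EMG, peripheral nerve, or EEG decoder.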

3.1.1. Digital–Neural Interfaces

Digital–neural interfaces are connections that enable two-way information exchange between the nervous system and an external device (Figure 3). Such interactions can occur at various levels, including the peripheral nerves, the spinal cord, and the brain. In many instances, some of the fundamental biophysical and biological implementation challenges are shared across these levels. Furthermore, such interfaces can be either invasive, such as implantable microelectrode arrays [103], or noninvasive, as in the cases of EEG [104] and wireless interfaces. Invasive interfacing with the nervous system for rehabilitation purposes can also be achieved with deep brain sensing and stimulation. Robotic physical assistance has been converging with deep brain stimulation (DBS), leading to novel forms of neural engineering technology, although this field does not precisely fit the scope of neural rehabilitation [105].

Neural sensing and control interfaces, first used in the 1960s, were technologically primitive by modern standards and were mostly focused on prosthetics. Muscles were activated as a group, essentially reducing multiple degrees of freedom (DOF) to a single movement (e.g., grasping). In the following decades, several key technological advances relevant to neural rehabilitation took place. Fueled by rapid development in digital electronics, microcontrollers, signal processing, and control and machine learning algorithms, robotics engineering advanced and synergistically converged with prosthetics engineering, leading to ever more sophisticated artificial limbs. Implantable wireless electronic devices capable of transmitting signals directly to and from the PNS subsequently appeared, avoiding the need to maintain an open skin wound. This raised the prospect of mixed-signal assistive electronics that help bypass irreparable trauma to the nervous system by acting as a signal amplifier, repeater, and filter.

Interfacing with the PNS via neural electrodes is considered one of the most promising ways to control sophisticated neuroprosthetics [102]. For direct physical interfacing with the nerves, there are two main types of electrodes, namely extraneural (placed around the nerve) and intraneural (inserted into the nerve) [101,102]. Extraneural electrodes include epineurial, helicoidal, book, and interfascicular electrodes, which are mainly used for stimulating peripheral nerves, and cuff electrodes, which are capable of both nerve stimulation and recording of neural signals [101,102]. Intraneural electrodes include intrafascicular electrodes, penetrating microelectrodes, and regenerative electrodes, which are used for both stimulation and recording [101,102].
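The electrode taxonomy above can be captured in a small data structure that makes the distinction between stimulation-only and bidirectional electrodes explicit. This is a simplification under stated assumptions: electrode types described as “mainly used for stimulating” are encoded here as stimulation-only, and the type names are taken from the text rather than from any standard nomenclature.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EXTRANEURAL = "around the nerve"
    INTRANEURAL = "inside the nerve"


@dataclass(frozen=True)
class ElectrodeType:
    name: str
    placement: Placement
    stimulation: bool
    recording: bool


# Simplification: types described as mainly used for stimulation are encoded as stimulation-only.
PNS_ELECTRODES = [
    ElectrodeType("epineurial", Placement.EXTRANEURAL, True, False),
    ElectrodeType("helicoidal", Placement.EXTRANEURAL, True, False),
    ElectrodeType("book", Placement.EXTRANEURAL, True, False),
    ElectrodeType("interfascicular", Placement.EXTRANEURAL, True, False),
    ElectrodeType("cuff", Placement.EXTRANEURAL, True, True),
    ElectrodeType("intrafascicular", Placement.INTRANEURAL, True, True),
    ElectrodeType("penetrating microelectrode", Placement.INTRANEURAL, True, True),
    ElectrodeType("regenerative", Placement.INTRANEURAL, True, True),
]


def bidirectional(electrodes, placement=None):
    """Names of electrode types usable for both stimulation and recording."""
    return [
        e.name
        for e in electrodes
        if e.stimulation and e.recording and (placement is None or e.placement is placement)
    ]


print(bidirectional(PNS_ELECTRODES, Placement.EXTRANEURAL))  # ['cuff']
```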

At the time of writing, there is a technological trend toward wireless and less invasive implantable electrodes, as well as toward further shrinking electrode contact features from the micron to the submicron scale (e.g., carbon nanotubes). Neural cuff electrodes with embedded electronics, which can handle wireless power and data transfer and have adequate computing power for basic signal processing, are, in our opinion, one of the most promising sets of converging technologies. They could provide selective, low-impedance signal recording and stimulation without requiring an open skin wound for wire passage.
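One example of the basic on-implant signal processing mentioned above is threshold-based spike detection, which reduces a raw neural stream to a list of event times before wireless transmission. The sketch below uses a common median-based noise estimate (σ ≈ median(|x|)/0.6745) and a refractory window; the threshold multiplier `k` and the 1 ms refractory period are assumptions, and this is not claimed to be the processing used in any specific cuff electrode.

```python
import numpy as np


def detect_spikes(x: np.ndarray, fs: float, k: float = 4.0, refractory_ms: float = 1.0) -> list:
    """Return sample indices of threshold crossings in a neural recording.

    Noise level is estimated as median(|x|) / 0.6745, which is robust to the
    spikes themselves; the threshold is k times that estimate.
    """
    sigma = np.median(np.abs(x)) / 0.6745
    threshold = k * sigma
    refractory = int(refractory_ms * 1e-3 * fs)    # samples to skip after a detection
    spikes = []
    last = -refractory - 1
    for i in np.flatnonzero(np.abs(x) > threshold):
        if i - last > refractory:                 # ignore crossings inside the refractory window
            spikes.append(int(i))
            last = i
    return spikes
```

Transmitting only the detected event indices instead of every raw sample is one way such embedded processing could reduce the data rate that the wireless link has to carry.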

Despite the aforementioned technological advances, numerous challenges remain for the application and widespread adoption of digital–neural interfaces. Frequently, significant compromises have to be made regarding accuracy, response time, and the number of controllable degrees of freedom of movement [102]. Issues with powering neural implants, as well as with wireless data transmission to and from the implants, limit optimal control of external devices [102]. These control issues are further compounded by the limited selectivity and resolution that existing hardware can currently achieve [102]. Sensing and stimulation electrodes are often a weak link in the signal chain; thus, research and development in the past two decades have increasingly focused on improving electrodes and sensors [106]. Additionally, the surgical procedures required to implant neural electrodes, as well as the physical properties of the electrode materials, may injure neural tissue or lead to implant rejection due to biocompatibility issues [102]. Last but not least, because implanted digital–neural interfaces are novel and have only recently reached clinical application, their long-term effectiveness, stability, and reliability remain unclear, owing to issues related to electrode longevity [102].

3.1.2. Electromyography

To elicit motion, the human nervous system recruits motor units (MUs), each consisting of a motor neuron and the muscle fibers it innervates [107]. The electrical activity of MUs can be detected using EMG [108]. An EMG signal is composed of the superposition of action potentials produced by multiple MUs when movement is elicited [109]. Myoelectric interfaces using electrodes can be considered an established technology, as they were proposed in the 1940s and have been implemented since the 1960s [110]. EMG is routinely used as a tool to indirectly study the organization and function of nervous system motor control [109] in both healthy and impaired individuals [74,111], as well as a means to acquire data to control assistive robotic devices such as orthoses and prostheses (Figure 3) [112,113,114].
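The statement that an EMG signal is a superposition of motor unit action potentials (MUAPs) can be illustrated with a toy forward model: each MU contributes its discharge train convolved with its own MUAP waveform, and the contributions are summed. The biphasic waveform (the first derivative of a Gaussian), its duration, and the optional Gaussian noise are simplifying assumptions rather than a physiological model.

```python
import numpy as np


def muap(fs: float, duration_ms: float = 10.0, width_ms: float = 1.0) -> np.ndarray:
    """Hypothetical biphasic MUAP: first derivative of a Gaussian (arbitrary units)."""
    t_ms = np.arange(-duration_ms / 2, duration_ms / 2, 1000.0 / fs)
    u = t_ms / width_ms
    return -u * np.exp(-u ** 2 / 2)


def simulate_emg(firing_samples, amplitudes, fs, n_samples, noise_sd=0.0, seed=0):
    """Superpose MUAP trains: each MU's discharge impulses convolved with its scaled MUAP."""
    rng = np.random.default_rng(seed)
    emg = np.zeros(n_samples)
    kernel = muap(fs)
    for samples, amp in zip(firing_samples, amplitudes):
        train = np.zeros(n_samples)
        train[np.asarray(samples, dtype=int)] = 1.0     # one impulse per discharge
        emg += np.convolve(train, amp * kernel, mode="same")
    return emg + rng.normal(0.0, noise_sd, n_samples)   # additive recording noise


# Two MUs at 2 kHz: MU 1 fires at samples 100 and 600, MU 2 (twice as large) at 300.
signal = simulate_emg([[100, 600], [300]], [1.0, 2.0], fs=2000, n_samples=1000)
```

Because this model is linear, EMG decomposition can be viewed as the corresponding inverse problem: recovering the individual MU discharge times from the summed signal.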

EMG measures motor unit activity either noninvasively, from the surface of the overlying skin [109], or invasively, using needle electrodes and implanted sensors [115]. For surface measurements, low-density EMG has traditionally been used, in which two electrodes are placed close together over the muscle belly [116]. More recently, multichannel and high-density EMG have been developed, in which a grid of electrodes is placed over the muscle(s) of interest. These methods address common shortcomings of conventional low-density EMG, such as the need for accurate sensor placement [117], and enable EMG decomposition [118]. By decomposing EMG signals, motor unit action potentials can be reconstructed in vivo without resorting to invasive techniques [119]. Lately, implantable EMG (iEMG) systems have been introduced in prosthetic control research [120,121,122] because they overcome some limitations of surface EMG (sEMG): insertion into the targeted muscle mitigates the effects of cross talk and of changes in limb position [122]. However, iEMG has so far seen only moderate clinical implementation [123], possibly because of the invasive nature of the sensors. Their chronic use is also impeded by their small pick-up area and the limited number of MUs they measure [123]. Nevertheless, iEMG is currently being explored for a broad range of robotic rehabilitation applications in clinical scenarios such as SCI, stroke, and amputation [119,124,125,126], even though sEMG still performs better for some applications [122]. The development of these measurement methods, combined with a variety of EMG decoding algorithms [124], has made EMG one of the most common control interfaces for assistive robotics [7] in cases where the residual muscle structure is intact. Additionally, in people with degenerated muscle tissue, EMG has shown promising results for interfacing with assistive devices [85]. 
Even in cases of the complete absence of residual muscle, EMG in combination with targeted muscle and sensory reinnervation (TMSR) and decomposition enabled interfacing with prosthetics for upper limb amputees [125].
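Two of the processing steps implied above can be sketched briefly: the bipolar (single-differential) derivation used in low-density sEMG, and the extraction of simple time-domain features that many classical EMG decoders pass to a classifier. The sketch assumes a two-electrode recording with 50 Hz interference common to both electrodes. The feature set (mean absolute value, root mean square, waveform length, and zero crossings) is one common choice, not necessarily the one used in the cited studies, and HD-EMG decomposition requires far more elaborate algorithms than shown here.

```python
import numpy as np


def bipolar(ch_a, ch_b):
    """Single-differential derivation: components common to both electrodes cancel."""
    return np.asarray(ch_a, dtype=float) - np.asarray(ch_b, dtype=float)


def td_features(x, zc_threshold=0.0):
    """Classical time-domain sEMG features for one analysis window."""
    x = np.asarray(x, dtype=float)
    d = np.diff(x)
    return {
        "MAV": float(np.mean(np.abs(x))),         # mean absolute value
        "RMS": float(np.sqrt(np.mean(x ** 2))),   # root mean square
        "WL": float(np.sum(np.abs(d))),           # waveform length
        # zero crossings: sign changes whose amplitude step exceeds the threshold
        "ZC": int(np.sum((x[:-1] * x[1:] < 0) & (np.abs(d) >= zc_threshold))),
    }


# Hypothetical recording: muscle activity that differs between the electrodes
# (simplified here as present at one electrode only) and 50 Hz mains
# interference picked up identically by both.
fs = 2000
t = np.arange(0.0, 0.2, 1.0 / fs)
mains = 0.5 * np.sin(2 * np.pi * 50 * t)
activity = np.random.default_rng(1).normal(0.0, 0.2, t.size)
emg = bipolar(activity + mains, mains)
print(np.allclose(emg, activity))             # True
print(td_features([1.0, -1.0, 1.0, -1.0]))    # {'MAV': 1.0, 'RMS': 1.0, 'WL': 6.0, 'ZC': 3}
```

In practice the features would be computed over sliding windows (for example, 100 to 250 ms) of each channel and concatenated into one feature vector per window.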

Despite the variety of options EMG offers for wearable robotics control, its practical implementation is limited by multiple factors. Wearable robotics or prosthetics for daily use must be able to perform unrestricted dynamic motions [126]. Currently, the many cables and large amplifier boxes, especially for high-density EMG, restrict movement, induce movement artefacts, and may cause user discomfort [126]. However, recent breakthroughs have produced more portable amplification circuitry that addresses these limitations [126]. Additionally, EMG signal quality depends heavily on electrode placement, movement and cross-talk artefacts, electromagnetic noise, and changes in skin condition [7,127]. This affects human–robot control interfaces, often resulting in unreliable and unpredictable control [128] that deteriorates further outside controlled lab conditions and can lead users to reject their prostheses [129]. The development of safe, long-term implantable electrodes could address these issues and improve signal quality for EMG-based human–robot interfacing [128,130]. Last but not least, the robustness of machine learning algorithms for EMG is currently limited by the need for retraining [128], significant setup time [131], and the inability of models trained in one spatiotemporal setting to generalize to others [132]. Recently, a new approach using neuromuscular biomechanical modelling for EMG-based human–robot interfacing showed significant advantages over traditional machine learning approaches [131,133].
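Neuromuscular biomechanical models typically begin by converting EMG into a normalized muscle excitation and then into muscle activation. The sketch below shows only that first stage, under stated assumptions: a linear envelope (rectification plus moving-average smoothing), normalization to an assumed maximal voluntary contraction (MVC) value, and a nonlinear excitation-to-activation mapping a = (exp(A·u) - 1)/(exp(A) - 1) with a shape factor A < 0, a form used in several EMG-driven musculoskeletal models. The window length, shape factor, and MVC value are assumptions; a complete model would add musculotendon dynamics and joint geometry to obtain joint torques, and the specific models in [131,133] may differ.

```python
import numpy as np


def linear_envelope(emg, fs, window_s=0.1):
    """Full-wave rectification followed by moving-average smoothing."""
    rectified = np.abs(np.asarray(emg, dtype=float))
    n = max(1, int(window_s * fs))
    return np.convolve(rectified, np.ones(n) / n, mode="same")


def excitation(emg, fs, mvc_peak):
    """Envelope normalized to the peak envelope of a maximal voluntary contraction."""
    return np.clip(linear_envelope(emg, fs) / mvc_peak, 0.0, 1.0)


def activation(u, shape_a=-2.0):
    """Nonlinear excitation-to-activation mapping a = (exp(A*u) - 1) / (exp(A) - 1).

    With A < 0 the curve is concave, so activation exceeds excitation
    at intermediate levels; u = 0 maps to 0 and u = 1 maps to 1.
    """
    if shape_a == 0.0:
        raise ValueError("shape_a must be non-zero")
    u = np.asarray(u, dtype=float)
    return (np.exp(shape_a * u) - 1.0) / (np.exp(shape_a) - 1.0)


# Hypothetical 2-s contraction whose EMG amplitude ramps up linearly
fs = 1000
t = np.arange(0.0, 2.0, 1.0 / fs)
emg = np.random.default_rng(0).normal(0.0, 1.0, t.size) * t / t[-1]
a = activation(excitation(emg, fs, mvc_peak=1.0))
print(activation([0.0, 0.5, 1.0]))  # approx. [0.0, 0.731, 1.0]
```

Because such a model is anchored in physiology rather than in a purely statistical mapping, it is one plausible reason these approaches need less retraining, though the cited studies should be consulted for the actual evidence.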

3.1.3. Brain–Computer Interfaces

BCIs are computer-based systems that interface with the brain to acquire, decode, and translate the user’s brain activity into control commands for various devices [134]. A complete BCI framework (Figure 3) includes not only the sensors (to acquire brain activity) but also the software (to decode brain activity [135]) and the hardware (to process brain activity and control a device) [136]. BCIs offer a communication channel that can be useful when the CNS is impaired by disease or trauma [134]. Depending on the nature of the CNS impairment, BCIs offer a broad set of applications [137], ranging from communication and environmental control to enhancing neuroplasticity and assisting robotic rehabilitation [104,138,139].
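The acquire, decode, and translate chain can be illustrated with a minimal, NumPy-only sketch on synthetic data. It assumes single-channel, 1-s EEG epochs sampled at 250 Hz, in which a 10 Hz (mu) rhythm is attenuated during motor imagery. Log band power in the mu and beta bands serves as the features, a two-class linear discriminant analysis (LDA) serves as the decoder, and the predicted class is translated into a hypothetical "rest" or "move" device command. Practical BCIs use multichannel recordings, spatial filtering, artefact handling, and per-user calibration, none of which is shown.

```python
import numpy as np

FS = 250  # EEG sampling rate in Hz (assumed)


def band_power(epoch, lo_hz, hi_hz, fs=FS):
    """Mean FFT power of a single-channel epoch within [lo_hz, hi_hz]."""
    spectrum = np.abs(np.fft.rfft(epoch)) ** 2
    freqs = np.fft.rfftfreq(epoch.size, d=1.0 / fs)
    return spectrum[(freqs >= lo_hz) & (freqs <= hi_hz)].mean()


def extract_features(epochs):
    """Decode step, part 1: log band power in the mu (8-12 Hz) and beta (18-26 Hz) bands."""
    return np.array([[np.log(band_power(e, 8, 12)), np.log(band_power(e, 18, 26))]
                     for e in epochs])


def fit_lda(x, y):
    """Decode step, part 2: two-class LDA with a pooled covariance matrix."""
    mu0, mu1 = x[y == 0].mean(axis=0), x[y == 1].mean(axis=0)
    pooled = (np.cov(x[y == 0], rowvar=False) + np.cov(x[y == 1], rowvar=False)) / 2
    w = np.linalg.solve(pooled, mu1 - mu0)
    b = -w @ (mu0 + mu1) / 2
    return w, b


def simulate_epochs(n, mu_amplitude, rng, fs=FS):
    """Acquire step (simulated): 1-s epochs with a 10 Hz rhythm plus white noise."""
    t = np.arange(fs) / fs
    return np.array([mu_amplitude * np.sin(2 * np.pi * 10 * t + rng.uniform(0, 2 * np.pi))
                     + rng.normal(0.0, 1.0, fs) for _ in range(n)])


rng = np.random.default_rng(0)
# Class 0: rest (strong mu rhythm); class 1: motor imagery (mu desynchronization)
x_train = extract_features(np.vstack([simulate_epochs(40, 2.0, rng), simulate_epochs(40, 0.5, rng)]))
y_train = np.repeat([0, 1], 40)
w, b = fit_lda(x_train, y_train)

x_test = extract_features(np.vstack([simulate_epochs(20, 2.0, rng), simulate_epochs(20, 0.5, rng)]))
y_test = np.repeat([0, 1], 20)
predicted = (x_test @ w + b > 0).astype(int)
commands = np.where(predicted == 1, "move", "rest")   # translate step
print("test accuracy:", np.mean(predicted == y_test))
```

The synthetic classes here are deliberately well separated; with real EEG, the lengthy calibration and limited cross-session generalizability discussed later in this section are the main practical obstacles.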

The latter is an exciting clinical application of BCIs, which investigates their use in the neurorehabilitation or ADL assistance of people with CNS-impairing trauma or disease, or with limb amputation [7,104,138,140]. BCIs are often combined with exoskeletons to assist robotic rehabilitation [141] or used as a means of providing feedback and monitoring recovery [142,143]. Additionally, BCIs can act as neuromodulation techniques that exploit the dormant neuroplasticity of the CNS to promote functional recovery [144] during traditional rehabilitation. BCIs may also be used to enhance neuroplasticity in multimodal combinations with functional electrical stimulation (FES) [145], brain stimulation [146], virtual reality [147], and assistive robotics for motor relearning [145,146]. BCIs show broad potential, as they can address a range of impairments, including stroke [148], SCI [138], and muscular dystrophies [149], while also adapting to the variability within each condition (e.g., stroke severity). This may increase rehabilitation efficiency (through custom-made training regimens and multiple training modes [148]) and reduce rehabilitation costs (through home rehabilitation and a lower burden on rehabilitation physicians). Therefore, BCIs can become a realistic option for ADL assistance or rehabilitation once their benefits outweigh the associated risks and costs [146].

However, it is still unclear whether the therapeutic effects of BCIs stem from their standalone application or from their combination with other therapies [148]. Additionally, no significant improvement over traditional therapy has been shown [148], and the generalizability and retention of BCI rehabilitation effects remain doubtful [138]. These questions could be resolved by more studies that include affected rather than healthy subjects, with equal focus on the upper and lower limbs [148], and by multidisciplinary protocol designs that assess cost-effectiveness and impact on quality of life [134]. Improvements in sensor technologies [138] (see Section 3.1) aim to solve many implementation problems of BCIs, such as complex user interfaces [138] and the time-consuming application of gel that hinders their clinical use [138], as well as to reduce electrical artefacts from adjacent devices [138]. BCI intention-decoding algorithms (software) are limited by very lengthy calibration processes and questionable generalizability across conditions and people [135,150]. However, the recent rapid development of deep learning, with impressive results [151], is expected to further improve BCI decoding [135] and its robotic rehabilitation applications [152].

3.2. Neuro-Robotics

3.2.1. Exoskeletons

Exoskeletons are devices that interface with the human body (Figure 3) to assist the recovery of functions such as walking that have been compromised by sensory and cognitive deficits. Repetitive training with such technological aids helps the nervous system create alternative neural pathways to replace damaged ones [153].

Technological Challenges of Exoskeletons

Stroke patients who receive electromechanical gait training in addition to physiotherapy are more likely to achieve independent walking [154]. Remaining roadblocks to commercially successful lower limb (LL) exoskeletons include human–machine interface compliance, the optimization of control algorithms, and smooth coordination with the physiology of the human body at low metabolic energy expenditure [155].

The actuation system of an exoskeleton is a determining factor, since it affects the design and defines portability, performance, and effectiveness [156]. Four main types of actuators are used in modern exoskeletons: electric motors (the predominant type), pneumatic, hydraulic, and elastic actuators. LL exoskeletons can be further categorized as assistive or rehabilitation devices. Assistive exoskeletons help users perform ADL that they can no longer perform due to various impairments. Exoskeletons of this type are frequently controlled with predefined trajectories that are triggered by the patient’s movement intention, and they require high-precision control. Most assistive exoskeletons are overground devices driven by DC motors, such as Indego [157], eLEGS, AUSTIN, ReWalk, and HAL [156]. The MindWalker exoskeleton [158] combines DC motors with series elastic actuators.
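The control pattern described above, a predefined trajectory that starts once movement intention is detected, can be sketched for a single joint. In this hypothetical example, an intention signal (which in practice might come from trunk tilt, crutch sensors, or EMG) triggers a minimum-jerk reference trajectory, and a proportional–derivative (PD) controller drives a simplified joint model toward it. The joint inertia, damping, gains, threshold, and target angle are illustrative assumptions, not parameters of any device named above.

```python
import numpy as np


def min_jerk(t, t0, duration, q0, qf):
    """Minimum-jerk reference position and velocity, starting at time t0."""
    s = np.clip((t - t0) / duration, 0.0, 1.0)
    pos = q0 + (qf - q0) * (10 * s**3 - 15 * s**4 + 6 * s**5)
    vel = (qf - q0) / duration * (30 * s**2 - 60 * s**3 + 30 * s**4)
    return pos, vel


def run_joint(intent, dt=0.001, threshold=0.5, q_goal=0.5, move_time=1.0,
              inertia=0.05, damping=0.1, kp=20.0, kd=2.0):
    """Single joint tracking a predefined trajectory that is triggered when the
    intention signal first exceeds the threshold (all parameters hypothetical)."""
    q, qd, t_trigger = 0.0, 0.0, None
    angles = np.empty(len(intent))
    for i, value in enumerate(intent):
        t = i * dt
        if t_trigger is None and value > threshold:
            t_trigger = t                                  # intention detected
        if t_trigger is None:
            q_ref, qd_ref = 0.0, 0.0                       # hold initial posture
        else:
            q_ref, qd_ref = min_jerk(t, t_trigger, move_time, 0.0, q_goal)
        torque = kp * (q_ref - q) + kd * (qd_ref - qd)     # PD tracking law
        qdd = (torque - damping * qd) / inertia            # simplified joint dynamics
        qd += qdd * dt                                     # semi-implicit Euler step
        q += qd * dt
        angles[i] = q
    return angles, t_trigger


# Intention signal: absent for 0.5 s, then present (e.g., a thresholded sensor output)
intent = np.r_[np.zeros(500), np.ones(2500)]
angles, t_trigger = run_joint(intent)
print(f"trigger at {t_trigger:.3f} s, final angle {angles[-1]:.3f} rad")
```

The PD law is the simplest choice for this illustration; real devices add safety limits, gait-phase logic, and their own, often proprietary, controllers.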

Early upper limb (UL) rehabilitation robots were of the end-effector type: simpler in design, with only one point of attachment to the user’s limb [153]. The InMotionArm of MIT-Manus [159] is attached to the patient’s forearm and is used with robotic therapy games that encourage and synchronize therapeutic tasks, a method widely used in UL rehabilitation systems. The RATULS trial found that robot-assisted training using the MIT-Manus had clinical effectiveness comparable to that of repetitive UL functional task therapy and usual care [159]. Although end-effector devices for neurorehabilitation are less costly, they are often limited in their ability to mimic individual joints, measure joint torques, and drive joint-specific rehabilitation. Wearable robotic exoskeletons with an anthropomorphic design address these limitations. Such devices are worn by the patient and attached at multiple locations, permitting a much larger range of movements and the ability to focus on specific joint movements [160].

Wearable exoskeletons can be active, with one or more actuators that actively augment the user, or passive, in which case they use no external power source but can store energy and release it when required. Examples of passive exoskeletons include the Armeo Spring, which provides variable upper limb gravity assistance [161]; the GENTLE/s, an elbow orthosis setup suspended from the ceiling by cables; and the L-Exos [162], which has a passive forearm DOF. Active exoskeletons aim to assist people whose impairments require more assistance than passive devices can provide, owing to their severity or to the need for active neurorehabilitation (hence the focus of this work).

Examples of Exoskeletons

Representative examples of active UL wearable exoskeletons include the NeReBot, a cable-driven exoskeleton actuated by three motors that maneuver the user’s arm [153]; the Armeo Power, which supports the rehabilitation of multiple DOFs of the arm; and the Exorn, a portable exoskeleton developed to support all DOFs of the arm, including two at the shoulder and four at the glenohumeral joint [153]. The UL part of the full-body Recupera-Reha [163] exoskeletal system is a recent dual-arm robotic setup designed for stroke rehabilitation. For the distal part of the UL, the SymbiHand finger exoskeleton was designed to provide daily support to patients with DMD [85,86]. Finally, the soft arm exosuit designed for elbow rehabilitation in [164] and the SaeboGlove, a lightweight solution for assisting finger and thumb extension, highlight a new and promising evolutionary trend toward soft exosuits.

LL assistance-as-needed rehabilitation exoskeletons aim to help users regain functional abilities through repetitive exercise with progressively reduced assistance. Their control is therefore partially predefined, with online modifications so that assistance is provided only when necessary, based on patient feedback. Rehabilitation exoskeletons are traditionally stationary and fixed to a treadmill. The Lokomat [165] is an example of a medical exoskeleton for gait rehabilitation that uses electric motor actuators. One of its main advantages is that it can support even severely affected patients during training and relieves therapists of the strenuous physical work of manually assisted training. Other similar stationary exoskeletons are ALEX, with DC motors, and PAM, with pneumatic actuators [156]. However, the distinction between overground/mobile systems intended for assistance and treadmill systems intended for rehabilitation has recently become blurred, as there are also mobile rehabilitation exoskeletons, such as the Wearable Walking Helper and Honda:SMA with DC motors; MIRAD, H2, and HAL with electric motor actuators; and LOPES and XoR with pneumatic actuators [156]. In fact, overground systems can provide strong proprioceptive feedback to induce neural plasticity and have been used in combination with treadmill systems in novel rehabilitation protocols [58].
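One widely studied way to realize assistance as needed is trial-to-trial adaptation with a forgetting factor: assistance decays automatically unless tracking errors call for more. The sketch below assumes a spring-like corrective force whose gain is updated after every trial. The forgetting factor, learning rate, gain limit, units, and error values are hypothetical, and the devices named above implement their own strategies.

```python
import numpy as np


def update_assistance(gain, tracking_error, forgetting=0.9, learning=500.0, max_gain=1000.0):
    """Trial-to-trial assistance-as-needed update (gain in N/m, error in m).

    The forgetting factor (< 1) withdraws assistance on every trial; the error
    term adds assistance back when the patient deviates from the target path.
    """
    new_gain = forgetting * gain + learning * abs(tracking_error)
    return float(np.clip(new_gain, 0.0, max_gain))


def assistive_force(gain, target_pos, actual_pos):
    """Spring-like corrective force (N) toward the target trajectory."""
    return gain * (target_pos - actual_pos)


gain = 200.0  # initial assistance gain in N/m (hypothetical)
# Hypothetical per-trial RMS tracking errors (m), shrinking as the patient improves
for err in [0.05, 0.03, 0.01, 0.0, 0.0]:
    gain = update_assistance(gain, err)
    print(f"error {err:.2f} m -> gain {gain:.1f} N/m, "
          f"force at 1 cm deviation {assistive_force(gain, 0.01, 0.0):.2f} N")
```

The ratio between the learning rate and the forgetting factor sets how quickly support is withdrawn; tuning it per patient is one place where the online modifications mentioned above would come in.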

3.2.2. Neuroprosthetics

A prosthesis (from the Greek for “addition” or “attachment”) is a device or system that replaces a missing body part and supplements its function [166]. Thus, a neuroprosthetic is a device (Figure 3) that interacts with the nervous system and supplements or restores functions in the user’s body [167].

Technological Challenges of Neuroprosthetics

The purpose of neurally controlled prosthetics is to transfer control intent from the central nervous system to drive the prosthetic devices of users with immobilized body parts [168]. Such control generally requires high levels of concentration and long training periods from the patient, often resulting in high prosthesis rejection rates [129]. Robust control faces two main challenges: (1) developing neural interfaces that last for long periods, and (2) achieving skillful control of the prosthetic device, comparable to natural movement [168]. State-of-the-art hand prostheses are actuated by advanced motors, allowing fine motor skills to be restored through a direct connection to muscular signals [169]. In the long run, the goal is a quantum leap in neurally controllable degrees of autonomy, which should allow users to perform tasks of daily living without effort [168]. Key considerations for control and feedback are the location of the interface (with the CNS or the PNS) and its invasiveness, ranging from noninvasive interfaces to highly invasive ones requiring surgical implantation [170]. Surgical procedures such as TMSR [171,172,173] and osseointegration [174] have greatly improved PNS signal decoding and, consequently, prosthetic control, as well as the donning/doffing and stability of the prosthetic fixture. In TMSR, motor and sensory nerves of a severed limb are surgically rerouted to reinnervate regions of large intact muscles (the pectoralis for the upper extremity and the hamstring for the lower extremity [171,172,173]). Osseointegration is a surgical procedure in which a load-bearing implant is directly integrated with the residual bone of the amputee to improve prosthetic attachment and sensory feedback [174].

Regarding control, the key technologies for interfacing prosthetics with an amputee are EMG, neural, and BCI interfaces (interfacing directly or indirectly with the PNS/CNS), and body-powered and impedance/admittance control (interfacing with the residual/unimpaired anatomy of the user) [7,102,168]. The three key ways of interfacing with the PNS/CNS are described in Section 3.1 and are very popular for UL prosthetic control [7]. Impedance control regulates the dynamic relationship between position and force and is mainly applied in LL prosthetics, where the multi-joint mechanical impedance is controlled. To control a prosthesis, it is equally important that the user has proper feedforward and feedback signals. This can be achieved through body-powered control methods, which use the user’s remaining anatomy to mechanically control a prosthetic limb [7]. Body-powered control can be complemented or combined with other approaches, such as cineplasty, muscle bulging, myoelectricity, and myo-acoustics, to create hybrid control interfaces for UL prosthetics. Additionally, FES-based neuroprostheses (in which the impaired existing limb is considered the prosthesis) can stimulate muscles or nerves [175,176] to elicit movement in the impaired limb, enabling UL function restoration [177] and LL gait training [178]. FES can be used on its own [177,178] or in combination with robotic exoskeletons [179].
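Impedance control of a powered knee is often scheduled by gait phase: each phase has its own stiffness k, damping b, and equilibrium angle θ_eq, and the joint torque follows τ = -k(θ - θ_eq) - b·θ̇. A finite state machine, one of the decoding approaches outlined in the next paragraph, switches between phases using a measured variable such as ground load. The three phases, load thresholds, and parameter values in this sketch are hypothetical and chosen only for readability; real controllers are tuned per user and typically use more phases and sensors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Impedance:
    stiffness: float    # k in N*m/rad
    damping: float      # b in N*m*s/rad
    equilibrium: float  # theta_eq in rad (knee flexion)


# Hypothetical per-phase parameters for a powered knee (not taken from any device)
PHASES = {
    "stance": Impedance(stiffness=150.0, damping=3.0, equilibrium=0.10),
    "pre_swing": Impedance(stiffness=60.0, damping=1.0, equilibrium=0.60),
    "swing": Impedance(stiffness=20.0, damping=0.5, equilibrium=0.30),
}


def next_phase(phase, load_n):
    """Finite state machine: the measured ground load plus the current phase
    determine the next phase (thresholds in newtons are illustrative)."""
    if phase == "stance" and load_n < 200.0:
        return "pre_swing"   # limb unloading before toe-off
    if phase == "pre_swing" and load_n < 20.0:
        return "swing"       # foot off the ground
    if phase == "swing" and load_n > 50.0:
        return "stance"      # heel strike
    return phase


def knee_torque(phase, theta, theta_dot):
    """Impedance law: tau = -k * (theta - theta_eq) - b * theta_dot."""
    p = PHASES[phase]
    return -p.stiffness * (theta - p.equilibrium) - p.damping * theta_dot


# Hypothetical sensor samples: (ground load N, knee angle rad, knee velocity rad/s)
phase = "stance"
for load, theta, theta_dot in [(700.0, 0.15, 0.0), (150.0, 0.20, 0.5),
                               (5.0, 0.50, 2.0), (600.0, 0.10, -1.0)]:
    phase = next_phase(phase, load)
    print(f"{phase:9s} torque {knee_torque(phase, theta, theta_dot):6.2f} N*m")
```

Because the joint behaves like a phase-dependent spring and damper rather than following a fixed trajectory, the user can still influence the motion, which is one reason impedance control suits LL prosthetics.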

The most common decoding algorithms for neuroprosthetic control can be categorized as (1) independent models, (2) dynamic models, (3) reinforcement learning, and (4) classifiers. The first category includes bang-bang control, which is activated when a measured variable reaches a specific limit and is used, for example, in the delivery of cortical electrical stimulation [180] and the mapping of stimulus thresholds in high-electrode-count implanted neurostimulators [181]. A finite state machine uses the measurement of a system variable, together with the modelled system’s present state, to trigger an action and a state transition [182]; it is used for periodic functions such as gait. A population vector algorithm is based on the fact that individual neurons have preferred directions [183], and it can be applied to the cortical representation of arm motor control, encoding the lengthening or shortening of specific muscles. The second category includes the Kalman filter, a recursive optimal estimator mostly used to extract signals from noisy measurements [184], variants of which have been proposed for closed-loop neuroprosthetic control to capture features of the neuroprosthetic task, and point process filters, in which the activity of individual neurons is modelled as a point process [184]. Reinforcement learning may also be suitable for neuroprosthetic control in real-life scenarios, where the task and associated trajectory vary and task completion may be the only reinforcement signal available [185]. Finally, the last category includes artificial neural networks, data-driven methods arranged in layers of neurons or nodes that can be used to control a myoelectric prosthetic hand [186], and support vector machines, supervised machine learning methods that can perform regression or classification.
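As an example of the dynamic-model category, the sketch below implements a Kalman filter that decodes a one-dimensional hand velocity from the firing rates of a simulated neural population. It assumes a first-order autoregressive model for velocity, linearly tuned neurons with independent Gaussian noise, and known noise levels; in practice, decoders estimate these model matrices from training data and usually track multidimensional position and velocity. A memoryless least-squares decoder is included for comparison.

```python
import numpy as np


def simulate(n_steps=200, n_neurons=20, a=0.95, q=0.05, r=1.0, seed=0):
    """Hypothetical data: AR(1) hand velocity and linearly tuned firing rates."""
    rng = np.random.default_rng(seed)
    h = np.linspace(-1.0, 1.0, n_neurons)          # tuning weight of each neuron
    x = np.zeros(n_steps)
    for t in range(1, n_steps):
        x[t] = a * x[t - 1] + rng.normal(0.0, np.sqrt(q))
    z = np.outer(x, h) + rng.normal(0.0, np.sqrt(r), size=(n_steps, n_neurons))
    return x, z, h


def kalman_decode(z, h, a=0.95, q=0.05, r=1.0):
    """Scalar-state Kalman filter for x_t = a*x_(t-1) + w_t and z_t = h*x_t + v_t."""
    n_steps, n_neurons = z.shape
    obs_noise = r * np.eye(n_neurons)
    x_est, p = 0.0, q / (1.0 - a ** 2)              # start from the stationary prior
    estimates = np.empty(n_steps)
    for t in range(n_steps):
        x_pred, p_pred = a * x_est, a * a * p + q   # predict with the movement model
        s = p_pred * np.outer(h, h) + obs_noise     # innovation covariance
        k = p_pred * np.linalg.solve(s, h)          # Kalman gain (s is symmetric)
        x_est = x_pred + k @ (z[t] - h * x_pred)    # correct with new firing rates
        p = (1.0 - k @ h) * p_pred
        estimates[t] = x_est
    return estimates


def rmse(estimate, truth):
    return float(np.sqrt(np.mean((estimate - truth) ** 2)))


x, z, h = simulate()
x_kf = kalman_decode(z, h)
x_ls = z @ h / (h @ h)   # memoryless least-squares decoding of each time step
print(f"RMSE Kalman: {rmse(x_kf, x):.3f}, least squares: {rmse(x_ls, x):.3f}")
```

Because the filter combines each new observation with a prediction from the movement model, it typically tracks the underlying velocity more accurately than decoding each time step independently.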

Examples of Neuroprosthetics

For UL amputees, existing commercial prosthetic hands offer either single-DOF actuator designs that open and close the fingers and thumb, such as Ottobock’s Sensorhand Speed and products from Motion Control Inc. and RSL Steeper Inc., or multiple-DOF actuator designs with articulated fingers, such as the Touch Bionics i-LIMB and the BeBionic hand [9]. The Ottobock Michelangelo hand combines fully articulated and single-DOF hand designs [9]. Several intrinsically actuated prosthetic hands (in which actuation, transmission, and control elements are embedded in the prosthesis) provide more actuated DOFs, various grasps, and control mechanisms; examples include the Fluidhands, the DLR hands, the Cyberhand, and the Smarthand [9]. The need to transmit sensory feedback from the prosthesis [187] led to the development of the Modular Prosthetic Limb (MPL), with 26 articulated and 17 controllable DOFs and bidirectional capability, and of the DEKA arm, which provides powered movement complemented by surgical procedures (such as TMSR) for sophisticated control over multiple joints [8]. Other successful examples of prostheses that provide sensory feedback to enhance motor control include Revolutionizing Prosthetics, HAPTIX, the Cyberhand, and NEBIAS [169]. Lastly, additive manufacturing technologies such as rapid prototyping (3D printing) are becoming an integral part of UL prosthesis fabrication [188], with commercial outcomes such as the Robohand and the Andrianesis’ Hand [189].

For LL amputees, neuroprosthetics assist with movement and balance [8]. While passive devices offer only basic functionality, semi-active prostheses can adapt their behavior by controlling magnetorheological systems (Rheo knee, Ossur) or valves (C-Leg, Ottobock) based on information from the gait cycle [190]. Active or powered prostheses are actuated by motors and provide greater performance and functionality. Various research groups are developing powered knee, ankle, or leg prostheses whose kinematics resemble able-bodied movement more closely than those of passive and semi-active systems [191]. LL prostheses that control both the knee and ankle joints include the Vanderbilt Prosthetic Leg by the Center for Intelligent Mechatronics, the OSL by the Neurobionics Lab, and the AMPRO by the Advanced Mechanical Bipedal Experimental Robotics Lab [191]. Knee prostheses with impedance control have been developed by the Biomechatronics Group at the Massachusetts Institute of Technology and by Delft University of Technology [190]. Commercialized bionic ankles include the MIT Powered Ankle, the first commercialized powered ankle prosthesis, marketed by Ottobock and previously by BionX; a design from Arizona State University, commercialized by SpringActive; one from the Mechanics Research Group (Vrije Universiteit Brussel), commercialized by Axiles Bionics; and one from the Biomechatronics Lab (Stanford University), commercialized by Humotech [191]. Several companies are fabricating 3D-printed LL prosthetic designs, such as the bionic leg prostheses by BionX Medical Technologies, the Exo-Prosthetic leg, and the Andiamo leg [192].

3.3. Virtual and Augmented Reality

VR is an artificial simulation or reproduction of a real-life environment using immersive projections of virtual objects [193]. AR, in turn, projects layers of computer-generated graphics into real-world space, while mixed reality (MR) allows physical interaction between virtual and real elements [194]. These virtual reality environment (VRE) technologies have opened new possibilities for effective neural rehabilitation through accurate feedback and presentation [195]. However, although they have existed in applied research for approximately two decades, they have yet to be fully integrated into mainstream neural rehabilitation [196,197,198]. A primary objective of neural recovery is for patients with motor disabilities to reacquire the ability to perform functional activities. Repetition, encouragement, inspiration, and task-driven practice can promote successful recovery [199].

Contemporary literature on motor control indicates that task-oriented biofeedback therapy may help improve the performance of functional tasks [144,200,201]. Task-oriented biofeedback can be described as the use of instruments to engage subtle physiological processes while integrating task-dependent physical therapy and cognitive stimuli [202]. VR/AR environments can significantly support task-oriented biofeedback and robot-assisted rehabilitation by providing visual, auditory, and physical interaction in an immersive manner. The key underlying hypothesis is that skills and functional gains acquired in virtual environments transfer to the real world, which has been demonstrated for advanced motor skill acquisition in healthy, motivated individuals [203]. A critical point in applying this concept to the rehabilitation of motor-impaired individuals is ecological validity. Until recently, VRE applications in neural rehabilitation were limited to proof-of-concept, novelty, and immersion-improvement studies, and whether they provide added value in generalized real-world settings remains unanswered [197].

Advances in technology and affordability, along with the growing popularity of these systems, now allow their efficacy and ecological validity to be studied critically [198,204]. In this context, the novelty and immersion of VREs have been shown to promote motivation, excitement, and task engagement, leading to greater efficacy of virtual rehabilitation regimens compared with traditional forms of rehabilitation [198]. Furthermore, the physiological mechanisms of presence and immersion (discussed below) add value to motor function gains well beyond the benefits of increased motivation [204].

It should be noted that multimodal, synergistic effects of VR with other novel neural rehabilitation technologies have recently been shown in patients with motor impairment due to SCI [58], and that the neural plasticity effects, while demonstrated, are still under investigation [65]. Task-oriented biofeedback using VR/AR systems in conjunction with robot-assisted rehabilitation can improve patients’ recovery. For example, L-EXOS is an exoskeleton that covers the full range of human arm movement by integrating a wearable structure with an anthropomorphic workspace offering five DOFs. L-EXOS uses VR for task-oriented therapy in the form of task-oriented exercise missions [162]. Additionally, Tageldeen et al. designed a VR-based serious game for arm rehabilitation using a wearable robotic exoskeleton; the game aims to improve the patient’s motivation for repetition, which is critical in therapy [205]. Comani et al. integrated high-resolution EEG (HR-EEG) recordings, a passive robotic device, and VR for stroke recovery. The robotic device works with five task-driven VR training applications and is synchronized with an HR-EEG system. This setup enabled them to acquire EEG signals during the execution of specific training tasks and to quantify task-related changes in brain activation patterns during the recovery of motor function [206].

Furthermore, VR/AR systems without attached robotic devices have also been shown to offer significant gains in neural rehabilitation [202]. For example, in a VR system designed by Eng et al., the patient is seated at a table facing a monitor that displays virtual arms in the same orientation as their own. The goal of the exercise is to maximize a point score by hitting, catching, and grasping virtual balls. During therapy sessions, the movement of the patient’s real arm is mapped onto the virtual arm to encourage the patient to treat the virtual arm as part of their own body [207]. Sucar et al. proposed a VR-based gesture therapy for motor rehabilitation, in which the patient is challenged, within a safe virtual world, to perform everyday tasks in the form of brief, intense games [199]. AR-based systems, on the other hand, can provide help during task-oriented rehabilitation therapy. For instance, YouMove is an AR system that uses an AR mirror to reflect the patient’s movement and provide feedback and guidance; it trains the patient through a series of stages that gradually reduce the system’s guidance and feedback [208]. Another noteworthy AR-based motor therapy system is the spatial AR system for hand and arm movement designed by Hondori et al. [209]. The device monitors the subject’s hand and creates an audio–visual interface for rehabilitation activities involving wrist, elbow, and shoulder gestures. It measures the length, speed, and smoothness of movements locally and can send images and data to the clinic in real time for further evaluation.
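Measures like those reported by the Hondori et al. system can be computed from tracked hand positions. The sketch below computes path length, mean speed, and the log dimensionless jerk (LDLJ), one commonly used smoothness measure in which higher (less negative) values indicate smoother movement. The choice of metric, the sampling rate, and the synthetic reach (a minimum-jerk movement, with and without a small superimposed tremor) are assumptions; the cited system may compute smoothness differently.

```python
import numpy as np


def movement_metrics(pos, fs):
    """Path length, mean speed, and log dimensionless jerk (LDLJ) of a hand path.

    pos: array of shape (n_samples, n_dims) in metres; fs: sampling rate in Hz.
    """
    dt = 1.0 / fs
    vel = np.gradient(pos, dt, axis=0)
    acc = np.gradient(vel, dt, axis=0)
    jerk = np.gradient(acc, dt, axis=0)
    speed = np.linalg.norm(vel, axis=1)
    duration = (len(pos) - 1) * dt
    length = float(np.sum(np.linalg.norm(np.diff(pos, axis=0), axis=1)))
    # dimensionless jerk = duration^3 / peak_speed^2 * integral of |jerk|^2 dt
    dlj = duration ** 3 / speed.max() ** 2 * np.sum(jerk ** 2) * dt
    return {"length_m": length,
            "mean_speed_m_s": length / duration,
            "ldlj": float(-np.log(dlj))}   # higher (less negative) = smoother


# Hypothetical 30 cm reach in 1 s sampled at 100 Hz, with and without a 2 mm, 8 Hz tremor
fs = 100
t = np.linspace(0.0, 1.0, fs + 1)
progress = 10 * t**3 - 15 * t**4 + 6 * t**5        # minimum-jerk profile
smooth = np.column_stack([0.3 * progress, np.zeros_like(t)])
tremor = smooth + np.column_stack([np.zeros_like(t), 0.002 * np.sin(2 * np.pi * 8 * t)])
print(movement_metrics(smooth, fs))
print(movement_metrics(tremor, fs))
```

Normalizing by duration and peak speed makes the jerk measure independent of movement size and speed, so sessions with different reach targets can be compared.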

In conclusion, VR/AR systems for neurorehabilitation contribute significantly to the therapy process through the feedback they provide to the patient. Additionally, task-oriented training and the gamification of therapy provide the motivation patients need to perform repetitive tasks. This convergence of gamification and task-oriented training is one of the core components of VR/AR systems, making them a promising modality for neurorehabilitation and home-based therapy. However, certain challenges in VR/AR-based rehabilitation therapies still need to be overcome. Clinical VR research remains theoretically ambiguous and immature regarding the concepts of immersion and presence, which are often mistakenly used interchangeably [210]. Immersion can be achieved by delivering an inclusive, extensive, surrounding, and vivid illusion of reality to the end user [211]. Presence, on the other hand, refers to the sense of being within a simulated environment [212,213]. The sense of presence is boosted by the vividness of the simulation, which leads to immersion, and by the interactivity between the user and the environment [213]. In addition, reducing motion sickness and discomfort should improve interactivity [214]. This can be achieved by optimizing image processing to increase frame rates and minimize system latency [215].

3.4. AI Algorithms

3.4.1. AI Algorithms for Human–Robot Interaction

Human–robot interaction (HRI) is a diverse research and development field that combines artificial intelligence, robotics, and the social sciences to enable interaction and communication between humans and robots. It has a wide range of applications, including industrial robots, medical robots, social robots, automated driving, and search and rescue [216,217]. In this paper, the focus of HRI is on medical robots and, more specifically, on those employed in neural rehabilitation.

Neurological disorders are one of the leading causes of disability and death worldwide, representing a severe public health problem [218,219]. Robot-aided neural therapy systems help address this issue by helping patients recover more quickly and safely. Interaction between a patient and a robot is not trivial, and a wide range of issues need to be addressed, such as safety, learning by demonstration, imitation learning, cognition and reasoning, perception, and sensation [220]. Most of these issues are typically handled by AI algorithms and systems that improve the overall interaction and patient experience. Because robots typically perceive their environment through multiple modalities, they usually produce abundant multimodal data (e.g., visual, audio, infrared, and ultrasound), which serve as input to AI algorithms for object classification, prediction, and task planning [221]. Bayesian models, hidden Markov models, and genetic algorithms are examples of widely used AI algorithms that routinely perform such tasks. Recently, machine learning and, more specifically, deep learning (DL) have shown promise for significant performance gains; this paper therefore focuses on DL.

An essential task for most robotic systems is object classification (OC), in which the robot classifies features to perceive its environment. Convolutional neural networks (CNNs) are currently considered among the state-of-the-art AI models for OC [222]. Because of their hierarchical architecture, CNNs can capture both low- and high-level features in visual data. A promising deep learning model is PointNet, which operates directly on point clouds, an important type of geometric data structure. It provides a unified architecture for applications ranging from object classification and part segmentation to scene semantic parsing, all of which are applicable in robotic systems [223]. One of the most widely used state-of-the-art CNN models for OC is the “You Only Look Once” (YOLO) model, which takes a different approach from most CNN models [224]. The entire image is fed to the CNN, which divides it into a grid of regions and predicts bounding boxes and class probabilities for each region. YOLO requires just a single forward pass through the network to generate predictions; hence, it “only looks once” at the input image. Non-maximum suppression then ensures that each object is detected only once, and the model outputs the recognized objects along with their bounding boxes.
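The step that ensures each object is detected only once is commonly implemented with greedy non-maximum suppression (NMS) based on intersection over union (IoU). This generic version is an illustration, not YOLO’s own code: YOLO implementations typically apply NMS per class with their own thresholds, and the boxes, scores, and object names below are hypothetical.

```python
import numpy as np


def iou(box, boxes):
    """Intersection over union of one box against many; boxes are (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Repeatedly keep the highest-scoring box and drop boxes that overlap it."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]          # indices by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        overlaps = iou(boxes[best], boxes[order[1:]])
        order = order[1:][overlaps < iou_threshold]
    return keep


# Hypothetical detections: two overlapping boxes on one cup, one box on a spoon
boxes = [[10, 10, 50, 50], [12, 12, 52, 52], [100, 100, 130, 140]]
scores = np.array([0.90, 0.75, 0.80])
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The IoU threshold trades off suppressing duplicates against merging genuinely distinct but adjacent objects, such as two cups side by side.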

Another important task for robotic systems that interact with humans is natural language understanding (NLU), in which a human operator commands the robot through natural language. One state-of-the-art artificial neural network (ANN) architecture for NLU is the recurrent neural network (RNN), which is based on the idea that decisions are influenced by past experience. In other words, an RNN maintains a hidden state that carries information from previous time steps, so each output depends on both the current input and what came before. A state-of-the-art model for robotic NLU is Mbot [225]. Mbot uses two RNNs with long short-term memory (LSTM) cells: one performs action detection and the other performs slot filling. The action detection network identifies the action associated with a natural language command, while the slot filling network assigns a label to every word and identifies slots such as object, destination, source, sentence, and person.
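To make the role of the hidden state concrete, here is a minimal NumPy sketch of an LSTM tagger of the kind used for slot filling: an LSTM cell reads one word vector at a time, and a softmax layer assigns each word a probability distribution over slot labels. It is an illustration, not Mbot's implementation: the weights are random and untrained, and the word vectors, dimensions, and label set (`O`, `object`, `destination`) are hypothetical.

```python
import numpy as np

SLOT_LABELS = ["O", "object", "destination"]  # hypothetical label set

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class LSTMTagger:
    """Untrained LSTM cell followed by a per-word softmax over slot labels."""

    def __init__(self, input_dim, hidden_dim, n_labels, seed=0):
        rng = np.random.default_rng(seed)
        self.hidden_dim = hidden_dim
        # Stacked weights for the input, forget and output gates and the candidate cell state
        self.W = rng.normal(0.0, 0.3, (4 * hidden_dim, input_dim + hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        self.W_out = rng.normal(0.0, 0.3, (n_labels, hidden_dim))
        self.b_out = np.zeros(n_labels)

    def step(self, x, h, c):
        H = self.hidden_dim
        z = self.W @ np.concatenate([x, h]) + self.b
        i = sigmoid(z[:H])            # input gate: how much new information to write
        f = sigmoid(z[H:2 * H])       # forget gate: how much old memory to keep
        o = sigmoid(z[2 * H:3 * H])   # output gate: how much memory to expose
        g = np.tanh(z[3 * H:])        # candidate values for the cell state
        c = f * c + i * g             # updated cell state (long-term memory)
        h = o * np.tanh(c)            # updated hidden state, fed into the next step
        return h, c

    def tag(self, word_vectors):
        h = np.zeros(self.hidden_dim)
        c = np.zeros(self.hidden_dim)
        rows = []
        for x in word_vectors:
            h, c = self.step(x, h, c)
            logits = self.W_out @ h + self.b_out
            e = np.exp(logits - logits.max())   # numerically stable softmax
            rows.append(e / e.sum())
        return np.array(rows)

# Hypothetical 8-dimensional word vectors
rng = np.random.default_rng(1)
vocab = {w: rng.normal(size=8) for w in ["bring", "the", "cup", "to", "kitchen", "a"]}
tagger = LSTMTagger(input_dim=8, hidden_dim=16, n_labels=len(SLOT_LABELS))
sentence = ["bring", "the", "cup", "to", "the", "kitchen"]
probs = tagger.tag([vocab[w] for w in sentence])
print([SLOT_LABELS[k] for k in probs.argmax(axis=1)])  # arbitrary, since weights are untrained
```

Because the hidden state is threaded through `step`, the distribution assigned to "kitchen" changes if an earlier word changes (e.g., "the cup" versus "a cup"); training the weights on labeled commands is what would make those distributions meaningful.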

Another key component of almost all robotic systems is action planning. In machine learning (ML), one of the most promising approaches to action planning is reinforcement learning (RL). RL refers to goal-oriented algorithms that learn how to attain a complex objective (goal) or maximize a particular quantity over multiple steps; for instance, RL agents can learn to maximize the points won in a game over several moves. Much like a pet trained with treats and scolding, these algorithms are penalized when they make wrong choices and rewarded when they make correct ones (hence the concept of reinforcement). A modern class of ANNs that leverage RL is the deep Q-network (DQN), which builds on Q-learning [226]. In Q-learning, an agent (here, a robot) learns an action-value function that estimates the expected cumulative reward of taking a given action in a given state; its policy then selects the action with the highest estimated value. Google DeepMind has developed an approach building on the ideas behind DQN that learns end-to-end policies directly from raw pixels and solves more than 20 tasks in simulated physical environments, such as pendulum swing-up, cartpole swing-up, balancing, and object grasping [227].
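The core Q-learning update can be illustrated with a small tabular example. DQNs replace the table with a neural network that approximates Q, so this is a simplification rather than a DQN, and it is not DeepMind's method. The corridor environment, its reward, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are hypothetical. Each step applies Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') - Q(s, a)].

```python
import numpy as np

def q_learning_chain(n_states=6, episodes=300, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    States 0..n_states-1; action 0 moves left (blocked at state 0), action 1 moves right.
    Reaching the last state yields reward 1 and ends the episode."""
    rng = np.random.default_rng(seed)
    q = np.zeros((n_states, 2))
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy exploration; ties are broken at random
            if rng.random() < epsilon or q[s, 0] == q[s, 1]:
                a = int(rng.integers(2))
            else:
                a = int(np.argmax(q[s]))
            s_next = max(s - 1, 0) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # The terminal state has no future value to bootstrap from
            target = r if s_next == goal else r + gamma * np.max(q[s_next])
            q[s, a] += alpha * (target - q[s, a])   # move estimate toward the target
            s = s_next
    return q

q = q_learning_chain()
print(np.argmax(q[:-1], axis=1))  # greedy action per non-terminal state: [1 1 1 1 1]
```

After training, the greedy policy moves right in every state, and the learned values decay by a factor of `gamma` per step away from the goal, which is how a discounted reward signal propagates backward through a sequence of decisions.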

In summary, robot perception, command processing, and action planning are key components of rehabilitation robotics and HRI, and all three are enhanced by DL models. However, DL models require substantial computational resources and powerful hardware to run efficiently, which makes them less suitable for mobile robotics and wearable applications. Quantum ML is an emerging and promising field that could help reduce the computational cost of classical ML [228]. Quantum algorithms have been shown, under certain theoretical assumptions, to dramatically reduce the computational complexity of some methods, such as principal component analysis (PCA): for data of dimension $d$, classical PCA scales as $O(d^2)$, whereas quantum PCA scales as $O((\log d)^2)$ [229]. Quantum ML could therefore allow DL/ML algorithms to leverage even more data and tackle even more complex computations.
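To give a sense of scale, the classical $O(d^2)$ and quantum $O((\log d)^2)$ bounds can be compared for two hypothetical data dimensions, taking base-2 logarithms and ignoring the constant factors that big-O notation hides (so this illustrates growth rates only, not actual run times):

$$
\begin{aligned}
d = 2^{10} = 1024: &\quad d^{2} = 1\,048\,576, &\quad (\log_2 d)^{2} = 10^{2} = 100,\\
d = 2^{20} \approx 1.05 \times 10^{6}: &\quad d^{2} = 2^{40} \approx 1.10 \times 10^{12}, &\quad (\log_2 d)^{2} = 20^{2} = 400.
\end{aligned}
$$

Going from $d = 2^{10}$ to $d = 2^{20}$ multiplies $d^2$ by about a million but $(\log_2 d)^2$ only by four.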

Another concern regarding AI algorithms is the safety risk arising from uncertainty in the robot's actions under particular circumstances. So far, very few exoskeletons have secured Food and Drug Administration (FDA) approval for in-home use, so it is important to design rehabilitation robotic devices to tight, demonstrable safety standards that increase consumer trust. Soft robotics is an emerging field that could help address these safety concerns because of the compliant materials used to fabricate its mechanical components [230]; it has been highlighted as an emerging research area by the US National Science Foundation [231].

3.4.2. AI Algorithms for Neural Signal Processing

With the growing complexity and scale of neural recordings, neural signal processing has been gaining significance in neurorehabilitation. Its aim is to analyze neural signals in order to extract useful information about how the brain processes information and transmits it through neuronal ensembles. Reading out neural signals is essential for delivering neurofeedback to the brain and for driving the computer devices that support communication through brain–machine interfaces (BMIs) in neural engineering. Assessing neural feedback, monitoring and decoding brain activity, and controlling prosthetics are key components of BMIs used in neural rehabilitation. BMIs involved in robot-aided neurorehabilitation therefore stand to benefit most from increasingly accurate neural signal processing, given the central role of neurofeedback in neural therapies [232].

Neural signal processing follows an algorithmic framework that is often divided into three stages: signal capture, signal preprocessing, and main processing [232]. In signal capture, information is acquired through either invasive or noninvasive approaches. Invasive modalities include electrocorticography (ECoG) [230], microelectrodes, and multiple electrode arrays [233,234]. Noninvasive techniques are loosely divided into contact and remote methods: EEG is a contact-based technique, whereas magnetoencephalography (MEG) and functional magnetic resonance imaging (fMRI) are remote methods [235]. During preprocessing, the signal is digitized, denoised, and passed to a signal processor. During main processing, an essential algorithmic step is spike detection. A popular approach to spike detection uses the wavelet transform (WT), although advances in ML have also produced promising results with CNNs [236,237]. After spike detection, features are extracted, mainly using PCA [238] or local Fisher discriminant analysis (LFDA) [239]. The final step is feature clustering. Many algorithms can be used for this step; common choices include approaches based on k-nearest neighbors (k-NN) [238] and the expectation-maximization (EM) algorithm [240].
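The main-processing steps (spike detection, feature extraction, clustering) can be sketched end to end on synthetic data. This is a minimal NumPy illustration under stated assumptions, not the pipeline of any cited study: the recording, the two spike templates, and all function names and parameters (threshold factor `k`, `refractory`, window lengths `pre`/`post`) are hypothetical. A simple amplitude threshold with a median-based noise estimate stands in for the WT- or CNN-based detectors, PCA provides the features, and k-means (swapped in here for simplicity) performs the clustering.

```python
import numpy as np

def make_synthetic_recording(n_samples=20000, noise_sd=0.5, seed=0):
    """Hypothetical single-channel signal: Gaussian noise plus two made-up spike shapes."""
    rng = np.random.default_rng(seed)
    t = np.arange(32)
    template_a = -8.0 * np.exp(-((t - 10) ** 2) / 8.0)               # narrow, large
    template_b = (-5.0 * np.exp(-((t - 10) ** 2) / 30.0)
                  + 2.0 * np.exp(-((t - 20) ** 2) / 20.0))            # broad, biphasic
    signal = rng.normal(0.0, noise_sd, n_samples)
    onsets = np.arange(100, n_samples - 100, 200)                      # one spike every 200 samples
    labels = rng.integers(0, 2, len(onsets))                           # ground-truth unit identity
    for start, lab in zip(onsets, labels):
        signal[start:start + 32] += template_a if lab == 0 else template_b
    return signal, onsets, labels

def detect_spikes(signal, k=5.0, refractory=30, pre=8, post=16):
    """Negative threshold crossing, then alignment on the trough; returns peaks and waveforms."""
    sigma = np.median(np.abs(signal)) / 0.6745    # for Gaussian noise, median(|x|) ~ 0.6745 sigma
    crossings = np.flatnonzero(signal < -k * sigma)
    peaks, last = [], -refractory
    for i in crossings:
        if i - last < refractory:
            continue                              # still inside the previously detected spike
        last = i
        peak = i + int(np.argmin(signal[i:i + refractory]))
        if peak - pre >= 0 and peak + post <= len(signal):
            peaks.append(peak)
    peaks = np.array(peaks, dtype=int)
    waveforms = np.stack([signal[p - pre:p + post] for p in peaks])
    return peaks, waveforms

def pca_project(waveforms, n_components=2):
    """Project waveforms onto their leading principal components via SVD."""
    centered = waveforms - waveforms.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

def kmeans(x, k=2, n_iter=100):
    """Lloyd's k-means with deterministic farthest-point initialization."""
    centers = [x[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(x - c, axis=1) for c in centers], axis=0)
        centers.append(x[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = np.argmin(np.linalg.norm(x[:, None, :] - centers[None], axis=2), axis=1)
        new_centers = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

signal, true_onsets, true_labels = make_synthetic_recording()
peaks, waveforms = detect_spikes(signal)
features = pca_project(waveforms)
unit_labels = kmeans(features, k=2)
```

Cluster indices are arbitrary, so agreement with the ground truth has to be checked up to a relabeling. Replacing `kmeans` with a Gaussian mixture fitted by EM, or PCA with LFDA, would change only the corresponding function.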

This AI-based algorithmic framework aims to boost the accuracy of signal processors, which are integral to neural biofeedback-based rehabilitation and assistive device control. For instance, a CNN developed for feature extraction and spike detection in EEG recordings of patients with epilepsy outperformed all other tested models, achieving an area under the ROC curve (AUC) of 0.947, compared with 0.912 for a support vector machine with a Gaussian kernel [237]. In addition, Kilicarslan et al. combined LFDA with a Gaussian mixture model to decode a user's intention and control a lower-body exoskeleton; when tested on a paraplegic patient, their decoding method achieved ~98% offline accuracy with short on-site training (~38 s) [239]. Furthermore, HEXOES is a soft hand exoskeleton that assists post-stroke patients in ADL by using EMG signals from the left hand to coordinate movement of the right hand. An artificial neural network was trained to decode these signals and extract the movement combinations of the left hand in real time, achieving a low validation error of 8.3 ± 3.4% [241].
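The AUC values reported above have a convenient probabilistic reading: the AUC equals the probability that a randomly chosen positive example (for instance, an EEG segment containing a spike) receives a higher score than a randomly chosen negative one, with ties counted as one half. A minimal sketch of that pairwise definition, using made-up scores (it is not the evaluation code of [237]):

```python
import numpy as np

def roc_auc(pos_scores, neg_scores):
    """AUC as P(score of random positive > score of random negative), ties counting 1/2."""
    pos = np.asarray(pos_scores, dtype=float)[:, None]   # column: one row per positive
    neg = np.asarray(neg_scores, dtype=float)[None, :]   # row: one column per negative
    wins = (pos > neg).sum() + 0.5 * (pos == neg).sum()  # compare every positive/negative pair
    return wins / (pos.size * neg.size)

# Hypothetical classifier outputs for three spike and three non-spike segments
print(roc_auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2]))  # 8/9, about 0.889
```

Here 8 of the 9 positive/negative pairs are ranked correctly. For large datasets, a rank-based formula or a library routine such as scikit-learn's `roc_auc_score` avoids the quadratic number of pairwise comparisons used in this sketch.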

Overall, real-time neural signal decoding is a difficult engineering challenge for all BMIs involved in neural rehabilitation, and it must be highly accurate to improve BMI effectiveness and usability. However, most of these algorithms can be applied successfully to only a limited number of neural signal inputs, in some cases to only one type. An algorithmic framework capable of processing all types of neural signals in parallel is therefore needed. Neural parallel processing is an emerging and promising field that could significantly accelerate neural signal processing tasks. In neural parallel processing, advanced algorithms, such as exponential-component–power-component (EC-PC) spike detection and binary pursuit spike sorting, perform spike detection, feature extraction, and clustering on all signals in parallel [242]. Because of the large amount of data and heavy computation involved, graphics processing units (GPUs) are used for massively parallel data processing [243].
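The idea of processing all channels in parallel can be illustrated with a data-parallel formulation, in which the same operations are applied to an entire channels × samples array at once instead of looping over channels. The NumPy sketch below runs on the CPU; array libraries with a NumPy-compatible interface (for example, CuPy) can execute similar array code on GPUs, although that is not shown here. The detector is a simple threshold-crossing stand-in, not EC-PC detection or binary pursuit sorting, and the recording, spike template, and parameters are hypothetical.

```python
import numpy as np

def make_multichannel(spike_counts, n_samples=5000, noise_sd=0.2, seed=1):
    """Hypothetical recording: channel ch contains spike_counts[ch] evenly spaced spikes."""
    rng = np.random.default_rng(seed)
    data = rng.normal(0.0, noise_sd, (len(spike_counts), n_samples))
    template = np.array([-3.0, -6.0, -3.0])            # made-up three-sample spike
    for ch, count in enumerate(spike_counts):
        for start in np.linspace(100, n_samples - 100, count).astype(int):
            data[ch, start:start + 3] += template
    return data

def detect_onsets_all_channels(data, k=5.0):
    """Boolean (channels x samples) mask marking the first sample of each threshold crossing."""
    # Per-channel median-based noise estimate, computed for all channels in one call
    sigma = np.median(np.abs(data), axis=1, keepdims=True) / 0.6745
    below = data < -k * sigma                           # per-channel thresholds broadcast over samples
    onsets = below.copy()
    onsets[:, 1:] &= ~below[:, :-1]                     # keep a sample only if its predecessor was above
    return onsets

data = make_multichannel([5, 10, 15, 20])
counts = detect_onsets_all_channels(data).sum(axis=1).tolist()
print(counts)  # spikes detected per channel: [5, 10, 15, 20]
```

Because no operation depends on a specific channel index, adding channels only enlarges the arrays; this is the property that makes such workloads a good fit for massively parallel hardware.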

4. Conclusions and Future Directions

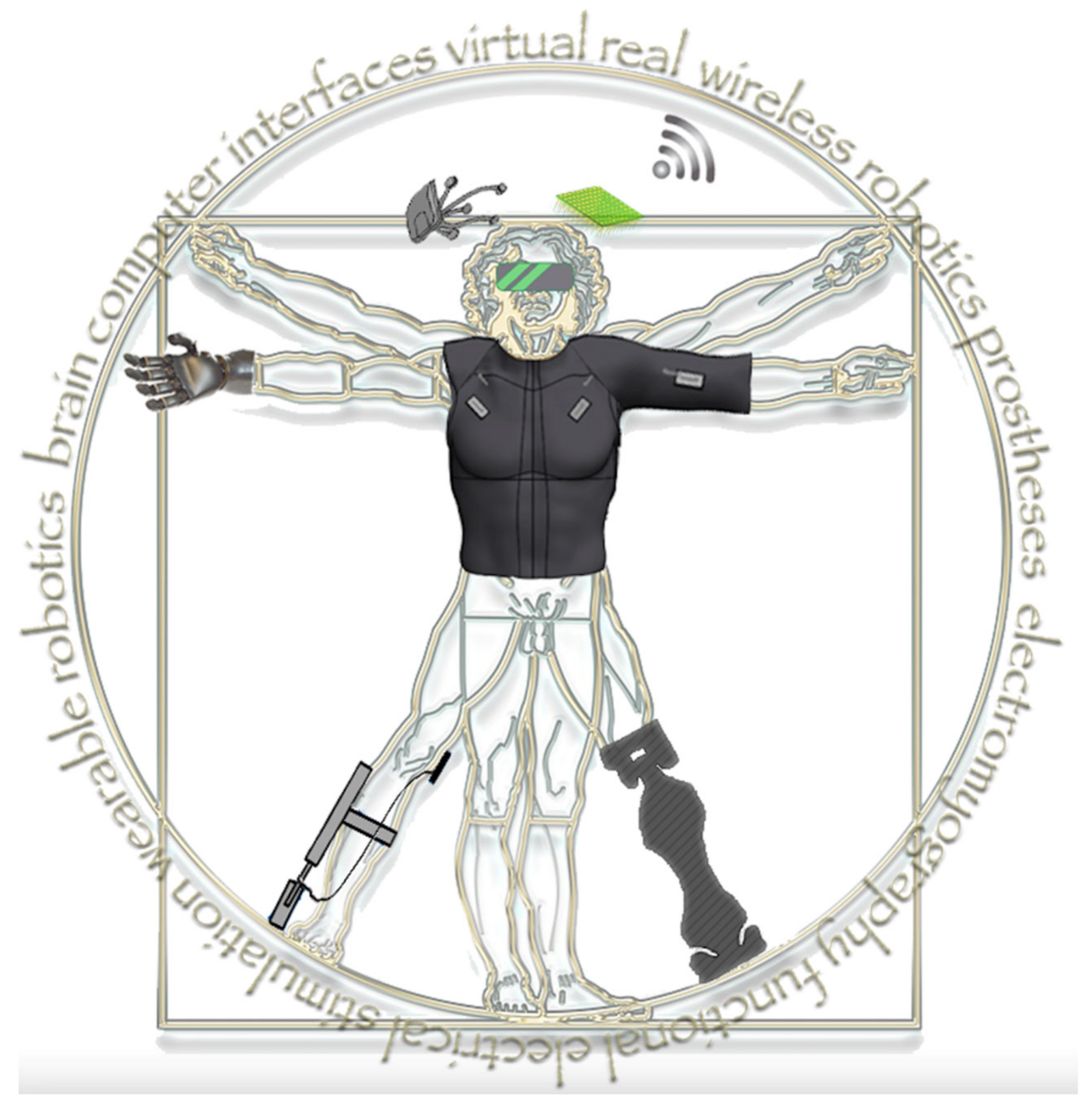

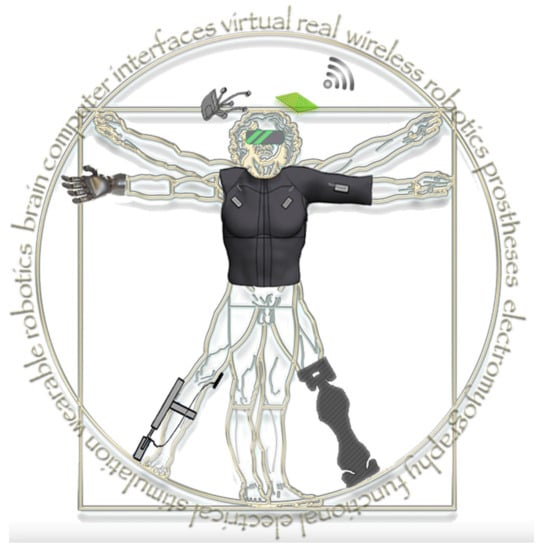

In this article, we have reviewed key robotic technologies that facilitate neural rehabilitation, discussed roadblocks to their development and application, and presented current and emerging directions in the field (see Table 1). These technologies have different origins, but they address shared clinical challenges and generate synergistic added value in neural rehabilitation. Their continuous evolution and convergence, together with occasional inventions that have a particularly strong impact on the field, are likely to produce an amalgam of neural rehabilitation assistive technologies whose utility increasingly exceeds the sum of its parts in terms of applications and outcomes (Figure 4).

Table 1.

Summary of all the technologies discussed in this review, with their area of application, readiness level, and major roadblocks. We also highlight existing interactions between these technologies and propose potentially beneficial new ones.

Figure 4.

Convergence of key technologies will synergistically enable complex applications of neural rehabilitation and improve outcomes of patients with disabilities.

During this ongoing transformation of the field, certain technologies stand out for the relative advantages they offer. Through a natural paradigm shift, neural interfaces can now be regarded as connections that allow information to be exchanged reciprocally between robotics and the human nervous system. In this respect, BCIs contribute decades of experience in recording signals from the central nervous system (CNS), interpreting its motor and sensory outputs, and modulating its function. Retraining CNS circuits and promoting adaptive neural plasticity have been recognized as key principles of neural pathway repair by the US National Institute of Neurological Disorders and Stroke [244].

Direct digital–neural interfaces and peripheral neurophysiological recordings, such as EMG, can provide high accuracy, resolution, and quality of neuronal signal detection, as well as the ability to exert command control. Exoskeletons, prostheses, wearable and soft robotics, rapid prototyping technologies, and electrical stimulation each offer distinct ways to overcome neurological impairments and bridge lesions, depending on their applications and indications. These man–machine interfaces and robots can deliver effective rehabilitation to, e.g., stroke survivors, either as a standalone modality or in combination with traditional rehabilitation [7,14,15,24]. This is mainly because they can deliver repetitive training, adjust its intensity, provide a standardized training environment, and reduce the physical burden on physiotherapists [1,2].

As the scientific understanding of human motor control advances in tandem with modern electronics and computational power, more disruptive therapeutic interventions have become possible. For example, using robot-driven locomotor perturbations to manipulate an individual's motor control strategy for therapeutic purposes is a radical and particularly promising new approach [245,246]. Such approaches also offer novel insights into human motor control and adaptation that should be taken into account when designing protocols for robot-assisted gait rehabilitation [247]. Advances in wireless transmission and battery technology further improve the networking and autonomy of these devices. Finally, our understanding of embodiment has led to the integration of virtual, augmented, and mixed reality techniques to improve how the aforementioned technologies and devices are incorporated into the perceived body schema. Modification of the body and CNS schema, together with body area networks, can help overcome disability. Examples include the mirror box and rubber hand illusions, two intriguing cross-modal illusions that rely on multisensory integration; both are used in clinical neurorehabilitation and have also been used to improve neuroprosthetics [248]. Furthermore, VREs offer a unique mode of multisensory integration, taking advantage of increased immersion and presence to deliver neural rehabilitation regimens [197].

Furthermore, fundamental lower-level technological improvements, such as advances in software and hardware speed, accuracy, computational power, power autonomy, and the capacity to learn from data (machine learning), can reasonably be expected to keep contributing incrementally to neural rehabilitation assistive technologies at an ever-increasing pace. To achieve further synergies, a multidisciplinary approach is essential: traditional research in physics and engineering must now work alongside chemical, biological, and medical science to develop new applications and acquire new capabilities [249]. A notable example is that multiple immersive man–machine interfaces have been shown to synergistically promote dormant neural plasticity and functional recovery even in chronic conditions previously considered irreversible, such as chronic complete SCI [58,65].

Over the past four decades, system-on-chip (SoC) technology has revolutionized the integration of analogue, digital, and radio frequency (RF) electronics on a single monolithic piece of silicon. This technology has been merging with biologically targeted MEMS, creating new possibilities in the design of biochips, electrodes for neural interfacing, low-power implantable systems, and energy harvesting solutions. Beyond the obvious benefit of enabling less invasive and more effective electrodes, this technological convergence may also improve on-chip power transmission coils, so that implantable systems can be powered noninvasively from outside the body alongside a wireless data link.