Towards Motor-Based Early Detection of Autism Red Flags: Enabling Technology and Exploratory Study Protocol

Abstract

1. Introduction

2. State of the Art

3. The MoVEAS System for Motion Capture

3.1. Reference Scenario

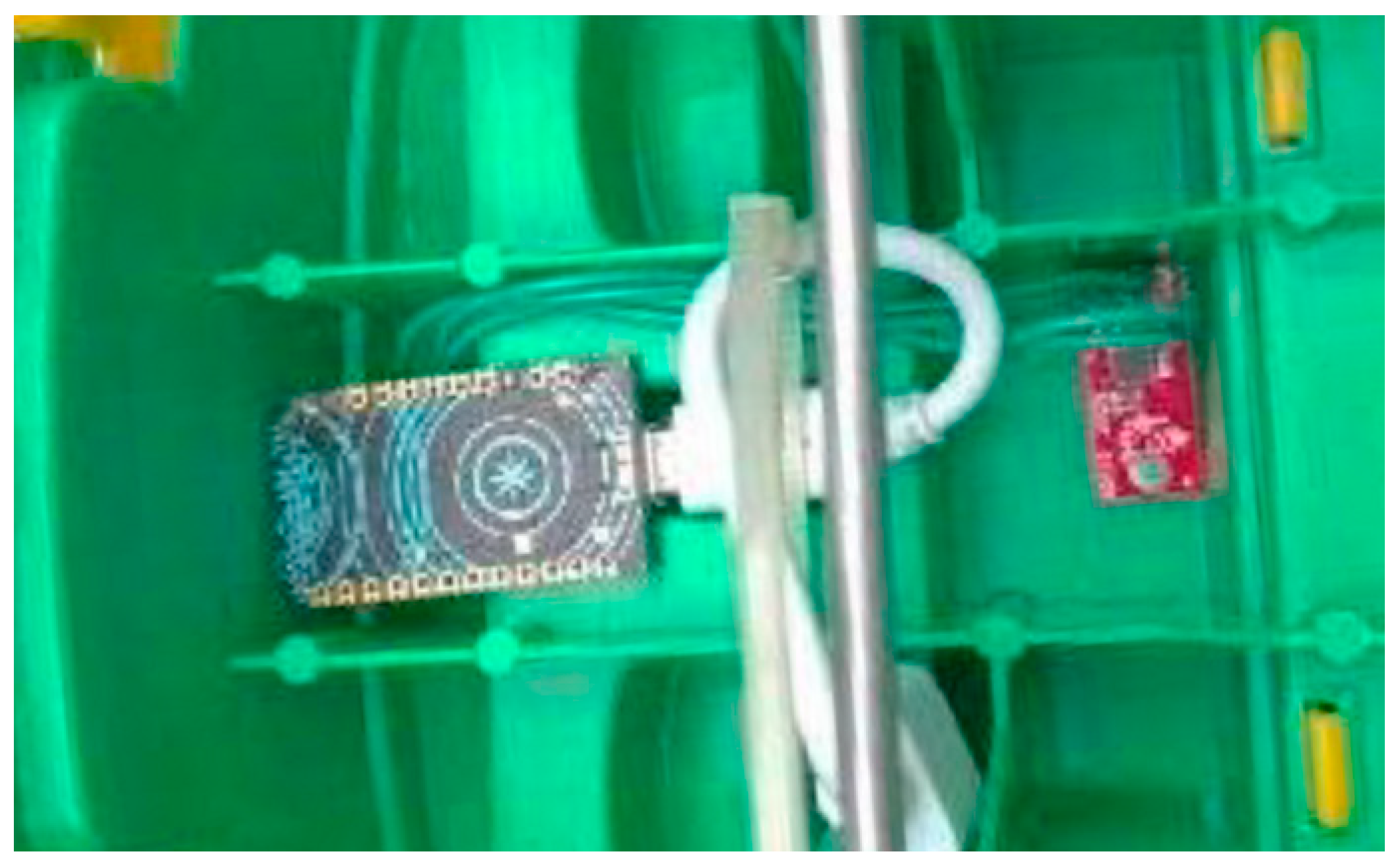

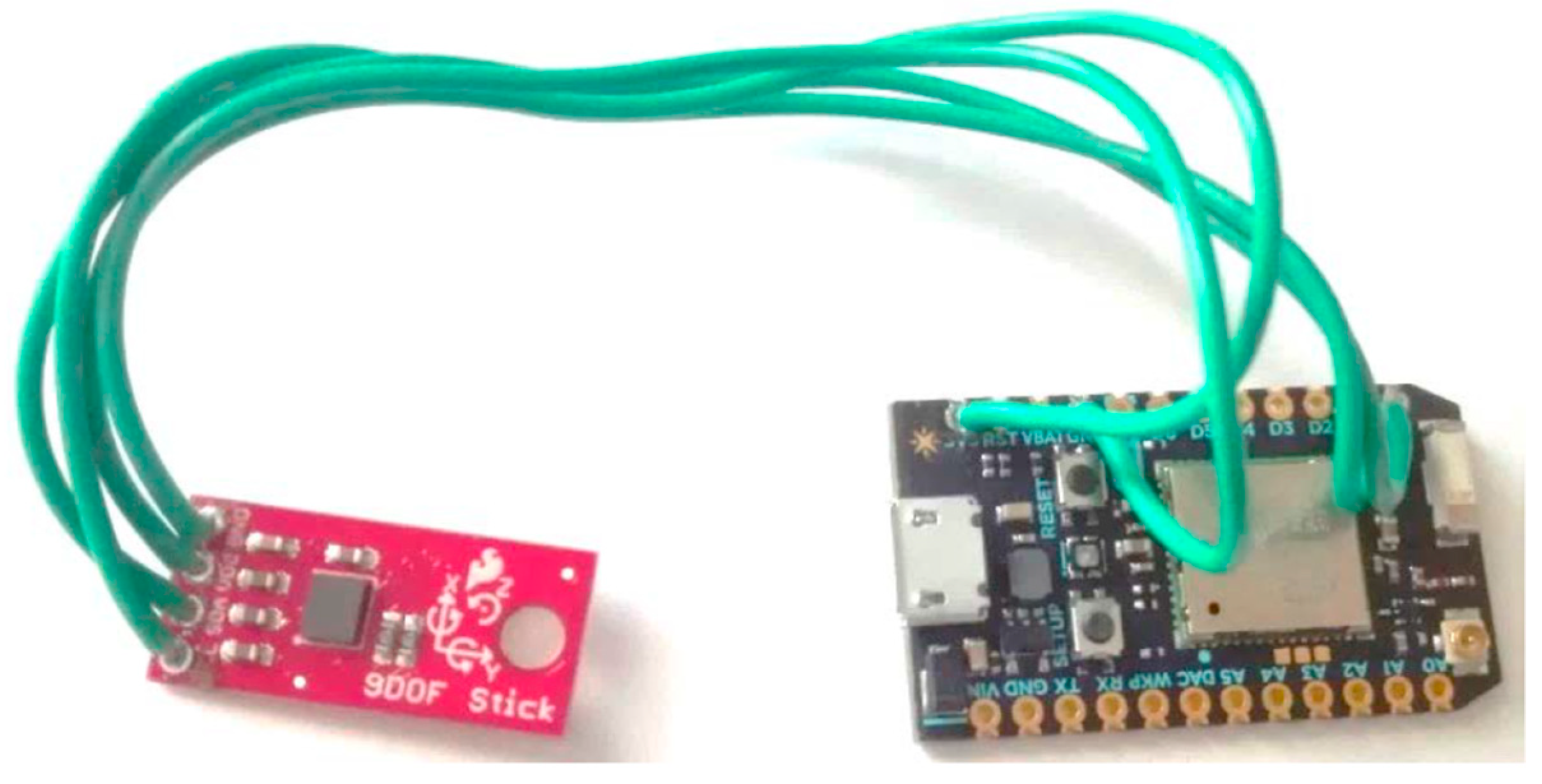

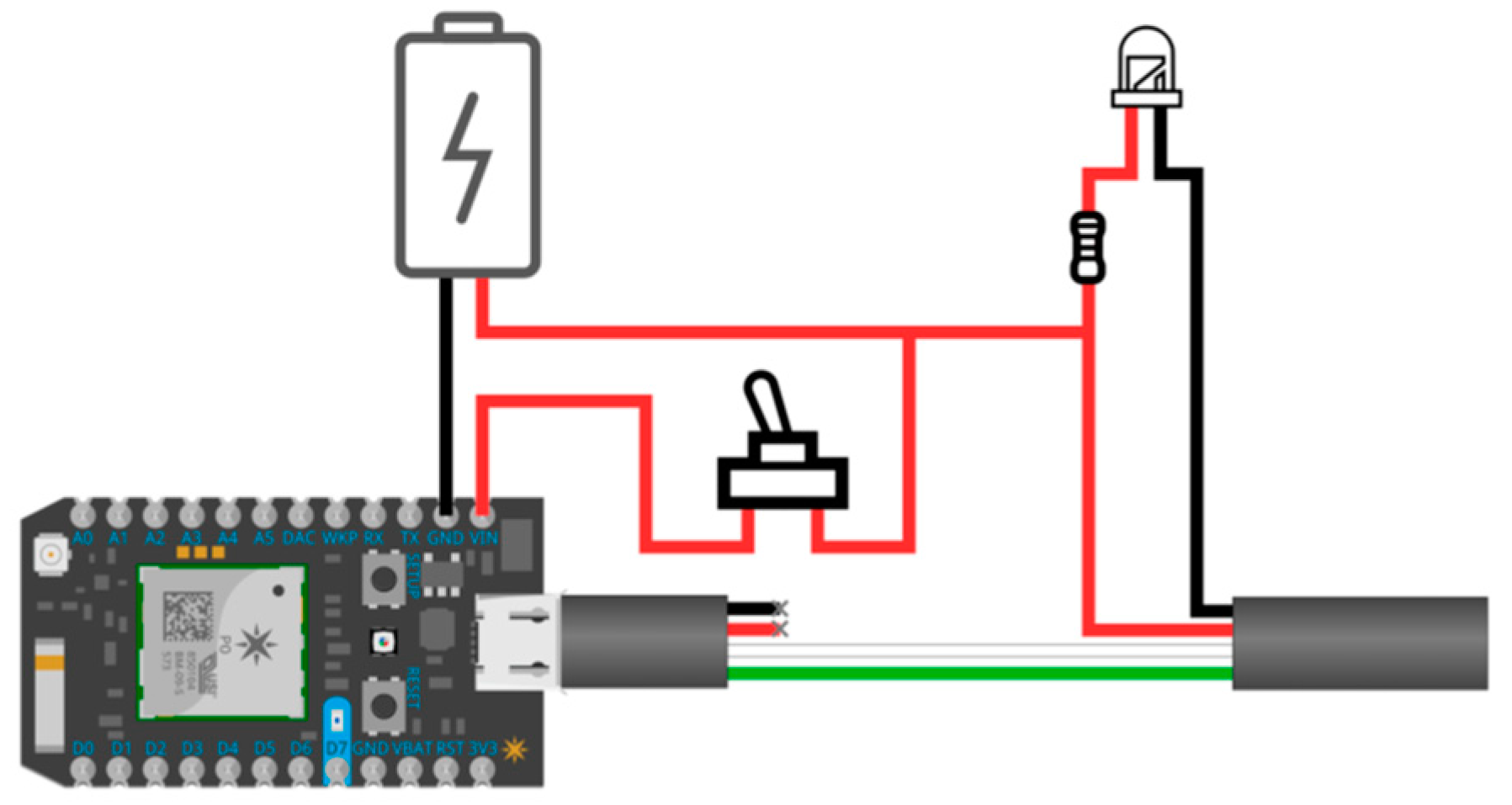

3.2. Smart Toy Prototype Overview

3.2.1. Smart Toys

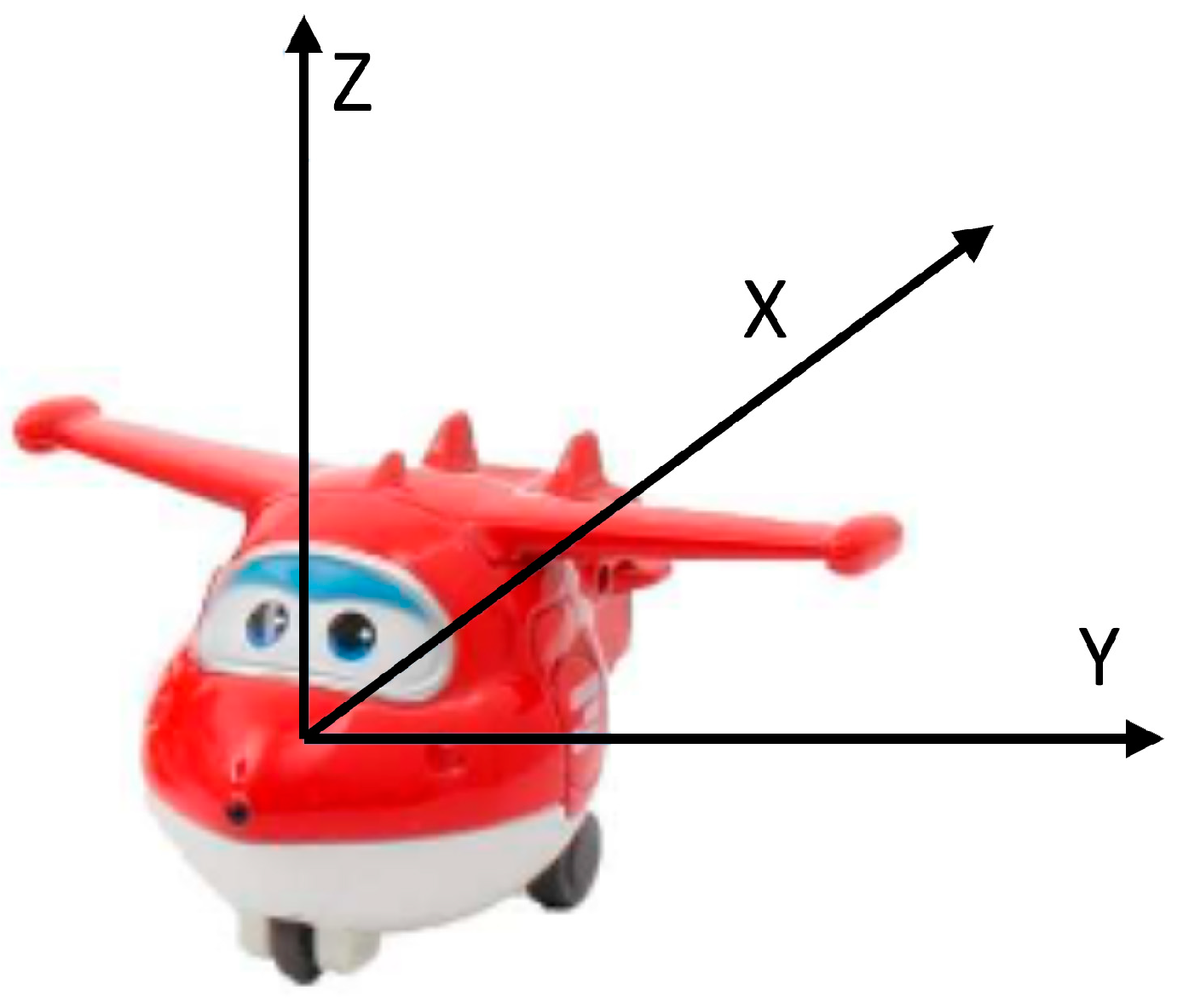

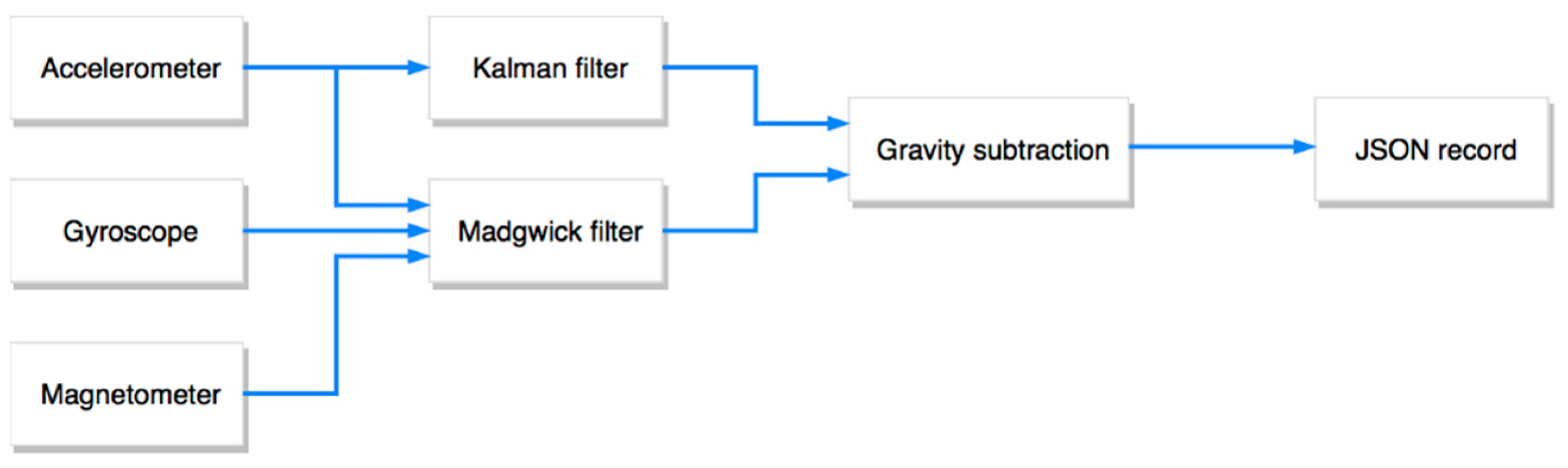

3.2.2. Activity Recognition Component

3.2.3. Backend and Data Storage

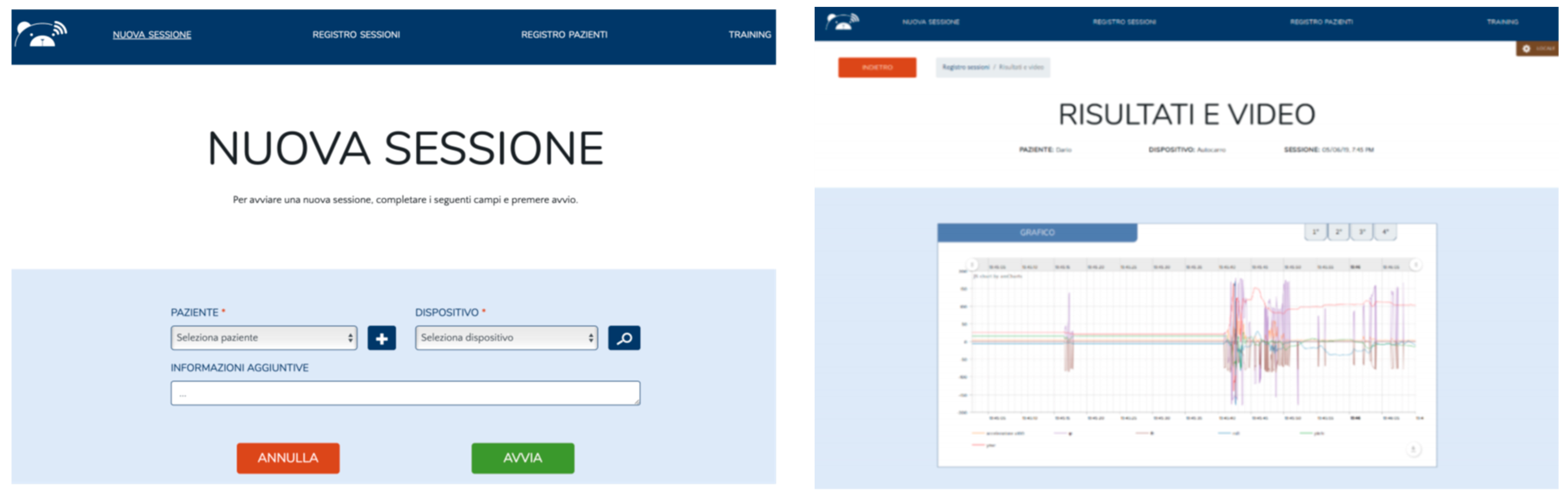

3.2.4. MoVEAS User Interface

4. Technical Validation

4.1. Dataset Collection

- 120 patterns with the toy moving forward;

- 120 patterns of the toy moving backward;

- 250 patterns of simulated flight;

- 250 patterns with the toy still;

- 250 patterns of the toy carried while walking;

- 250 patterns of the toy thrown (against a pillow).
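The class sizes listed above are unbalanced: the two linear-motion classes have fewer than half the patterns of the others. As a minimal sketch, the snippet below tallies the dataset and derives inverse-frequency class weights, one common way to compensate for such imbalance during training. The short class names and the weighting scheme are assumptions made for illustration; the source does not state that class weighting was used.

```python
from collections import OrderedDict

# Pattern counts per activity class, copied from the list above.
# The short class names are hypothetical labels chosen for this sketch.
CLASS_COUNTS = OrderedDict([
    ("forward", 120),
    ("backward", 120),
    ("flight", 250),
    ("still", 250),
    ("carried", 250),
    ("thrown", 250),
])


def class_weights(counts):
    """Inverse-frequency weights: total / (n_classes * count)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {name: total / (n_classes * n) for name, n in counts.items()}


total_patterns = sum(CLASS_COUNTS.values())
weights = class_weights(CLASS_COUNTS)

for name, n in CLASS_COUNTS.items():
    share = 100.0 * n / total_patterns
    print(f"{name:9s} {n:4d} patterns ({share:5.2f}%), weight {weights[name]:.3f}")
```

With these weights, an error on a rare class contributes more to the loss than an error on a frequent one, so that the smaller classes are not neglected.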

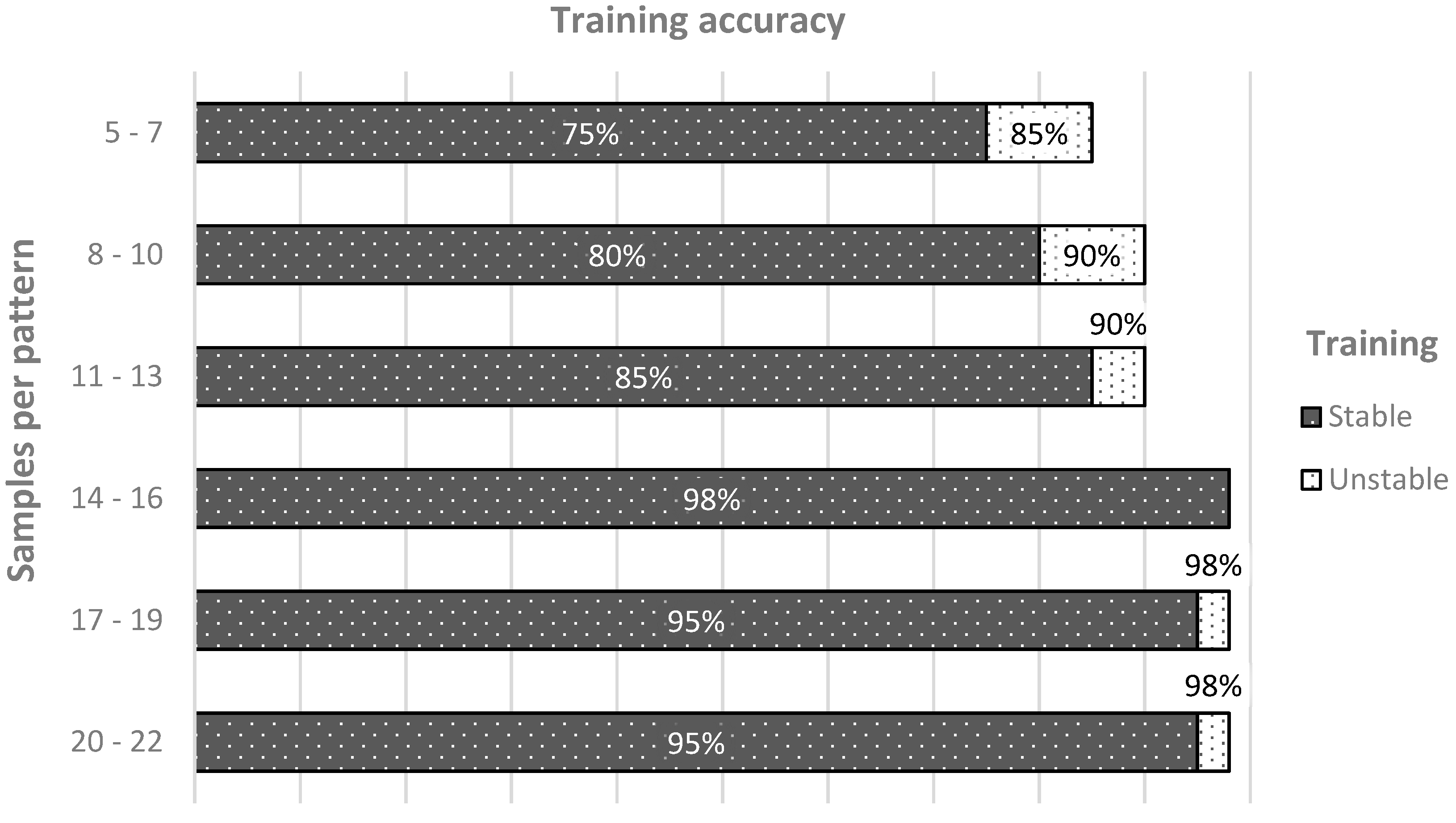

4.2. Neural Network Models Training and Validation

- with up to 7 filters and a kernel size of up to 5, the network classified correctly only after long and unstable training;

- from 7 filters upwards, the training curve was stable, and overfitting appeared only from 15 filters;

- kernel sizes from 9 upwards tended to overfit the network; because the patterns were initially made of 22 samples, the resulting sliding window was too big;

- with more than 20 filters, overfitting could be mitigated only with very small kernel sizes, but in that case the network was hard to train and prone to underfitting.
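To make the remark about kernel size and the 22-sample patterns concrete, the following sketch computes how much of a pattern each kernel covers, the output length of an unpadded 1D convolution, and the number of trainable parameters of one convolutional layer. The pattern length of 22 comes from the text; the number of input channels (6) and the absence of padding are assumptions made for illustration, not details confirmed by the source.

```python
def conv1d_output_length(n_samples, kernel_size, stride=1):
    """Output length of an unpadded ('valid') 1D convolution."""
    if kernel_size > n_samples:
        raise ValueError("kernel is larger than the input pattern")
    return (n_samples - kernel_size) // stride + 1


def conv1d_param_count(n_filters, kernel_size, n_channels):
    """Trainable weights of one Conv1D layer, plus one bias per filter."""
    return n_filters * (kernel_size * n_channels + 1)


PATTERN_LENGTH = 22  # samples per pattern, as stated in the text
N_CHANNELS = 6       # ASSUMPTION: e.g. 3-axis accelerometer + 3-axis gyroscope

for kernel_size in (3, 5, 7, 9):
    coverage = kernel_size / PATTERN_LENGTH
    out_len = conv1d_output_length(PATTERN_LENGTH, kernel_size)
    params = conv1d_param_count(7, kernel_size, N_CHANNELS)
    print(f"kernel {kernel_size}: covers {coverage:.0%} of a pattern, "
          f"output length {out_len}, {params} parameters with 7 filters")
```

Under these assumptions, a kernel of size 9 spans about 41% of a pattern in a single window, which is consistent with the observation that large kernels were too coarse for such short patterns.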

- with up to 5 units, the network was able neither to learn the training samples nor to generalize;

- with up to 10 units, the validation accuracy reached 90%, but the network overfit when training continued;

- with up to 12 units, the network was hard to train, whereas with 14 units it trained smoothly and the validation accuracy rose to 93.5%;

- from 16 units upwards, stricter regularization was needed, but the dropout layers made the training stable and avoided overfitting. In the end, the best number of units turned out to be 20.
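Several of the observations above describe a validation accuracy that peaks and then degrades as training continues. The source reports dropout as the countermeasure; a complementary and widely used technique is early stopping, sketched below in plain Python. The function name, the `patience` parameter and the accuracy values are hypothetical and purely illustrative; they are not measurements from the study.

```python
def early_stopping_epoch(val_accuracy, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for per-epoch validation accuracies.

    Training stops once `patience` consecutive epochs have passed without
    an improvement larger than `min_delta` over the best accuracy so far.
    """
    best_acc = float("-inf")
    best_epoch = -1
    for epoch, acc in enumerate(val_accuracy):
        if acc > best_acc + min_delta:
            best_acc, best_epoch = acc, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    # No stop was triggered: training ran to the last epoch.
    return best_epoch, len(val_accuracy) - 1


# Made-up validation curve that peaks and then declines (overfitting).
history = [0.70, 0.82, 0.90, 0.89, 0.88, 0.87, 0.86]
best, stopped = early_stopping_epoch(history, patience=3)
print(f"best epoch: {best}, training stopped at epoch: {stopped}")
```

In practice, the weights saved at the best epoch would be restored after stopping, so that the final model corresponds to the peak of the validation curve.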

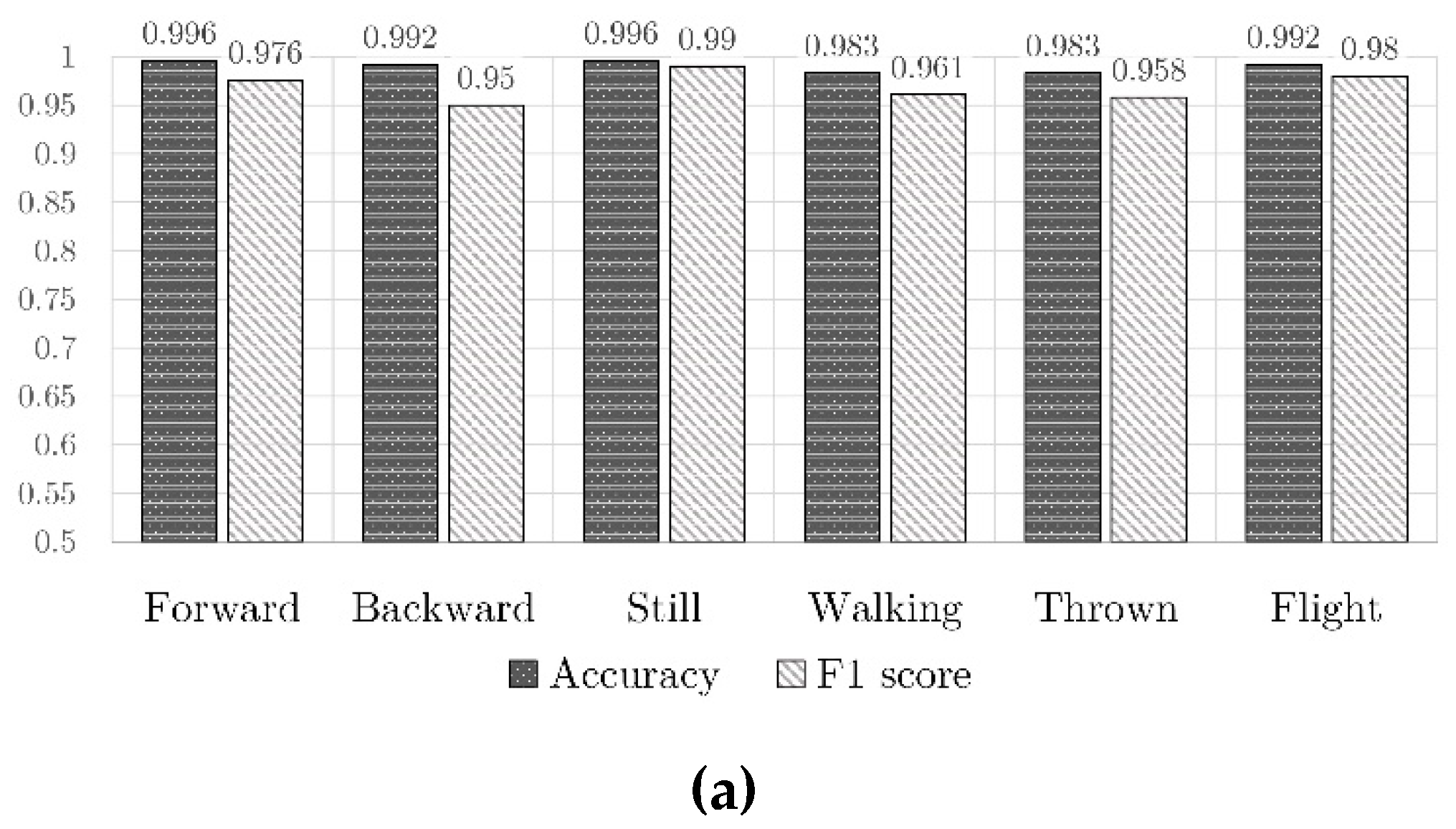

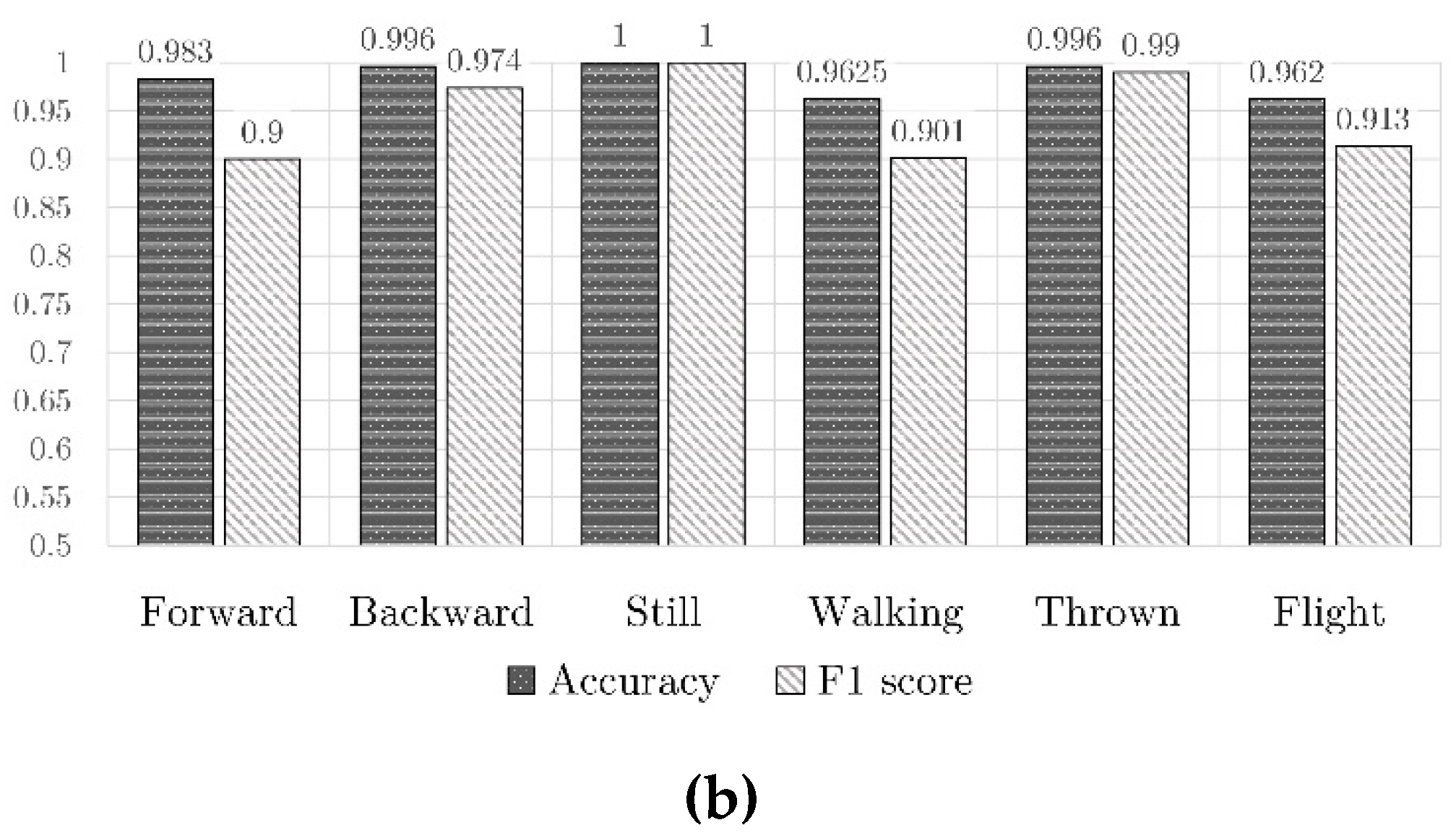

4.3. Results of Classification

5. Study Protocol

5.1. Participants

5.2. Measures

5.3. Procedures

5.4. Data and Statistical Analysis

- (a) compare ASD vs. TD groups regarding a part of the clinical standardized protocols (SPM-P; RBS-R; CBCL; CARS);

- (b) compare ASD vs. TD groups regarding object manipulation as captured by MoVEAS;

- (c) correlate, in ASD and TD groups, MoVEAS data with qualitative data;

- (d) correlate, in the ASD group, the scores of clinical standardized protocols with the data of the object manipulation captured through MoVEAS.
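The correlational aims listed above can be illustrated with a rank-based coefficient, which suits small samples and ordinal clinical scores. The sketch below implements Spearman's rank correlation in plain Python. The choice of Spearman's coefficient is an assumption, since the source does not name the statistic to be used, and the numbers in the usage example are invented for illustration only.

```python
def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if x or y is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least 2 values")
    return pearson(average_ranks(x), average_ranks(y))


# Invented values: a clinical scale score and a hypothetical motion feature
# (e.g. number of recognized 'thrown' events) for five children.
scale_scores = [12, 18, 25, 31, 40]
motion_feature = [2, 3, 3, 6, 9]
print(f"Spearman rho = {spearman(scale_scores, motion_feature):.3f}")
```

A significance test and a correction for multiple comparisons would still be needed before drawing conclusions from such coefficients.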

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Filters | Kernel size 3 | Kernel size 5 | Kernel size 7 | Kernel size 9 |
|---|---|---|---|---|
| 3 | 90.00% | 92.57% | 95.00% | 98.25% |
| 5 | 94.50% | 95.50% | 98.50% | 99.00% |
| 7 | 98.80% | 99.00% | 99.87% | 99.90% |
| 9 | 97.93% | 99.00% | 99.65% | 99.87% |
| 11 | 98.06% | 98.52% | 99.59% | 99.83% |
| 13 | 96.94% | 99.53% | 99.91% | 99.84% |
| 15 | 99.20% | 99.88% | 99.90% | 99.90% |
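As a small sketch of how the grid above can be read programmatically, the snippet below stores the reported accuracies by number of filters and kernel size and looks up the highest cell. The variable and function names are hypothetical. The table does not say which data split the accuracies refer to, so the highest cell is not necessarily the configuration the authors selected.

```python
# Accuracy (%) indexed by number of filters; columns follow KERNEL_SIZES.
KERNEL_SIZES = (3, 5, 7, 9)
ACCURACY = {
    3: (90.00, 92.57, 95.00, 98.25),
    5: (94.50, 95.50, 98.50, 99.00),
    7: (98.80, 99.00, 99.87, 99.90),
    9: (97.93, 99.00, 99.65, 99.87),
    11: (98.06, 98.52, 99.59, 99.83),
    13: (96.94, 99.53, 99.91, 99.84),
    15: (99.20, 99.88, 99.90, 99.90),
}


def best_configuration(table, kernel_sizes):
    """Return (filters, kernel_size, accuracy) of the highest-accuracy cell."""
    acc, filters, kernel = max(
        (acc, filters, kernel)
        for filters, row in table.items()
        for kernel, acc in zip(kernel_sizes, row)
    )
    return filters, kernel, acc


filters, kernel, acc = best_configuration(ACCURACY, KERNEL_SIZES)
print(f"highest accuracy: {acc:.2f}% with {filters} filters, kernel size {kernel}")
```

Several cells lie within 0.05 percentage points of the maximum, so stability of training and risk of overfitting remain relevant criteria when choosing among them.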

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bondioli, M.; Chessa, S.; Narzisi, A.; Pelagatti, S.; Zoncheddu, M. Towards Motor-Based Early Detection of Autism Red Flags: Enabling Technology and Exploratory Study Protocol. Sensors 2021, 21, 1971. https://doi.org/10.3390/s21061971
