A Robot Object Recognition Method Based on Scene Text Reading in Home Environments

Abstract

1. Introduction

- (1)

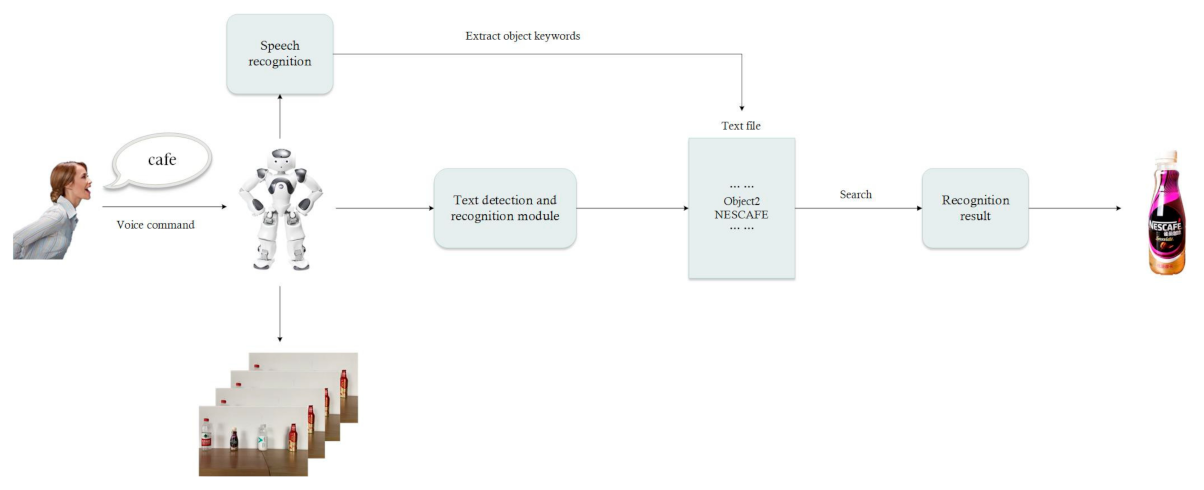

- This paper proposes an object recognition approach based on scene text reading. With this approach, robots can recognize arbitrary-shape and arbitrary-category objects. In addition, this approach speeds up robot mimicking of human recognition behavior.

- (2)

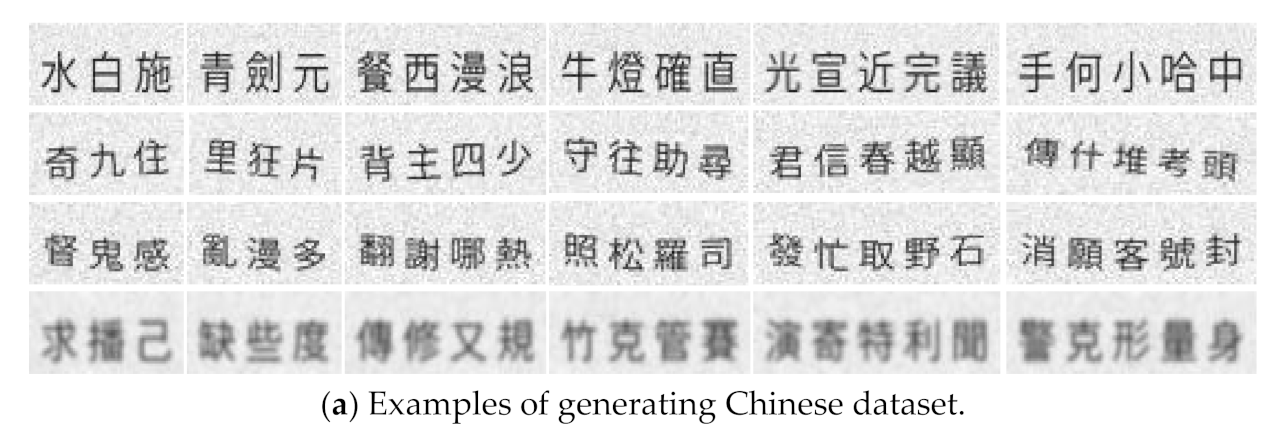

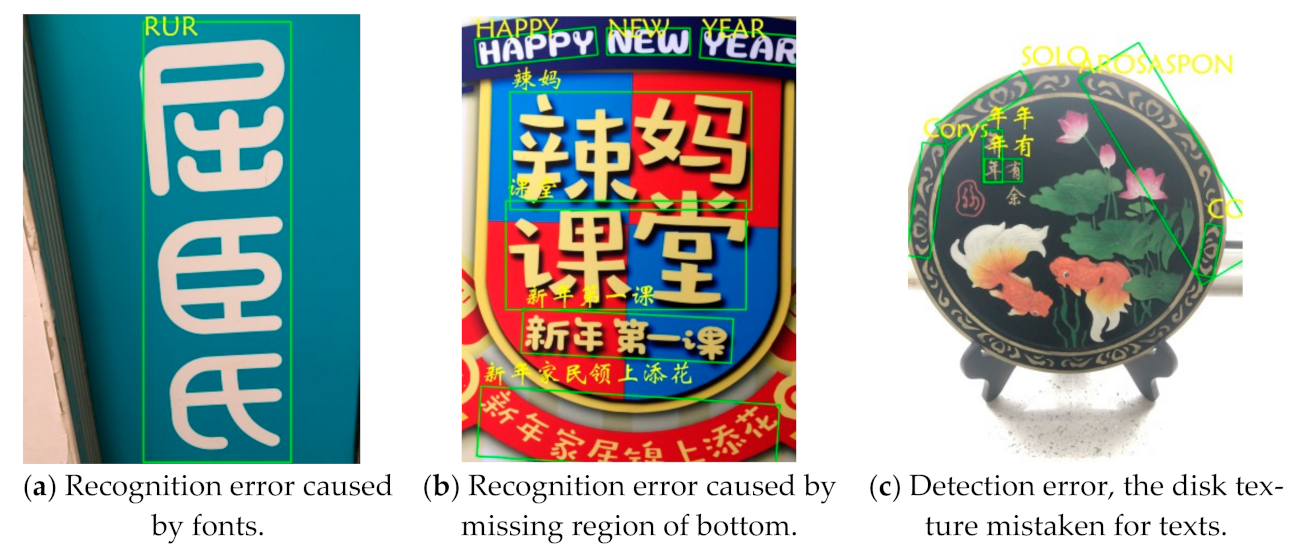

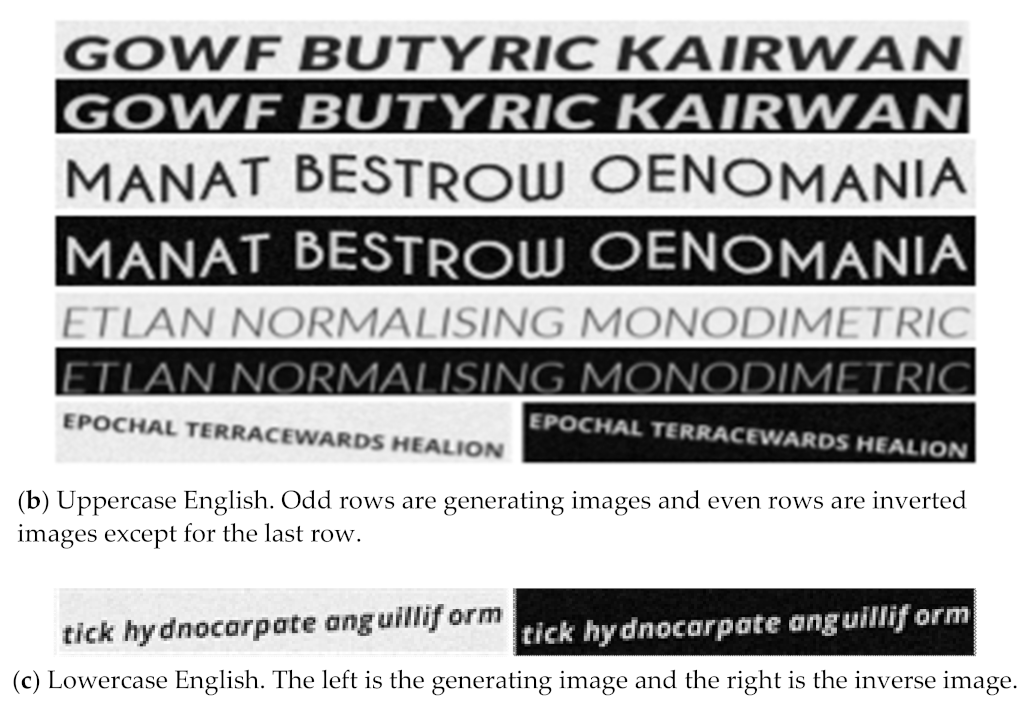

- To improve the recognition accuracy of models from reference [9], we generate a new dataset and its inverse. The generated dataset contains 102,000 images with labeled documents, while the inverse dataset inverts the pixel value of generated images without changing the labels. After training on these datasets, the recognition accuracy of the model is improved by 1.26%.

- (3)

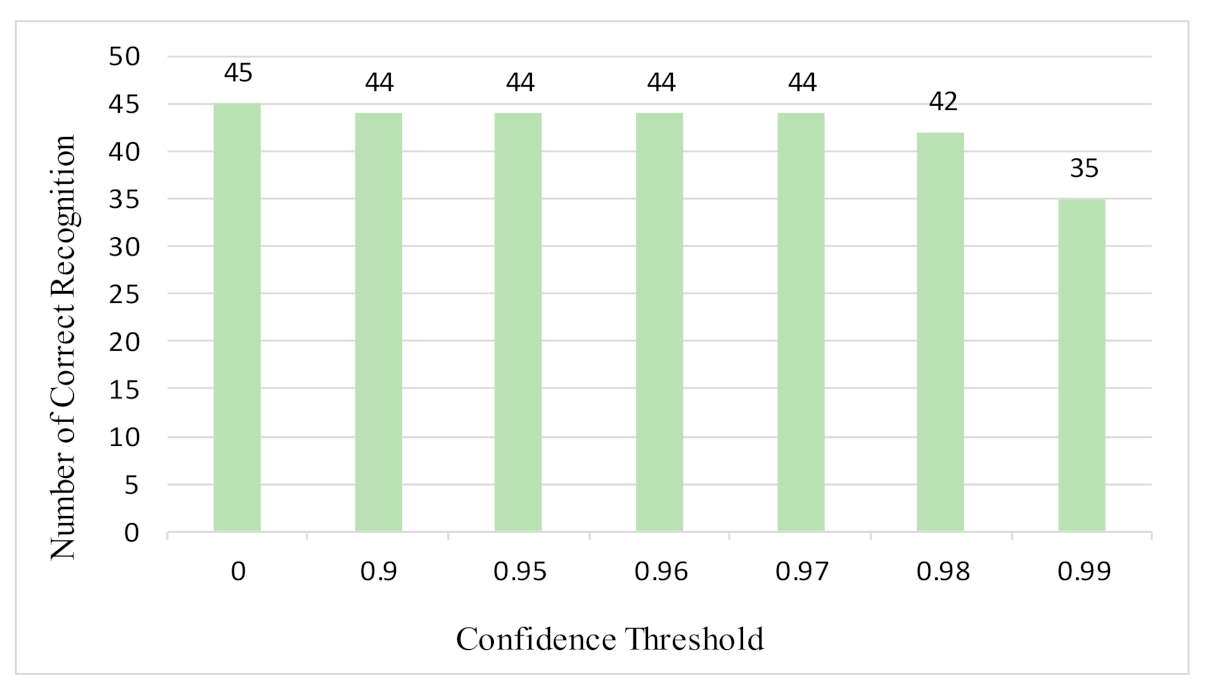

- Experiments are carried out on relations between confidence thresholds of text boxes and recognition effect. A higher confidence threshold results in more accurate recognition. However, useful information may be missed. By statistics of test samples, the confidence threshold is set at 0.97, which is a good balance that indicates that the key information is reserved and the recognition accuracy is high with few wrong words.

2. Related Works

3. Methodology

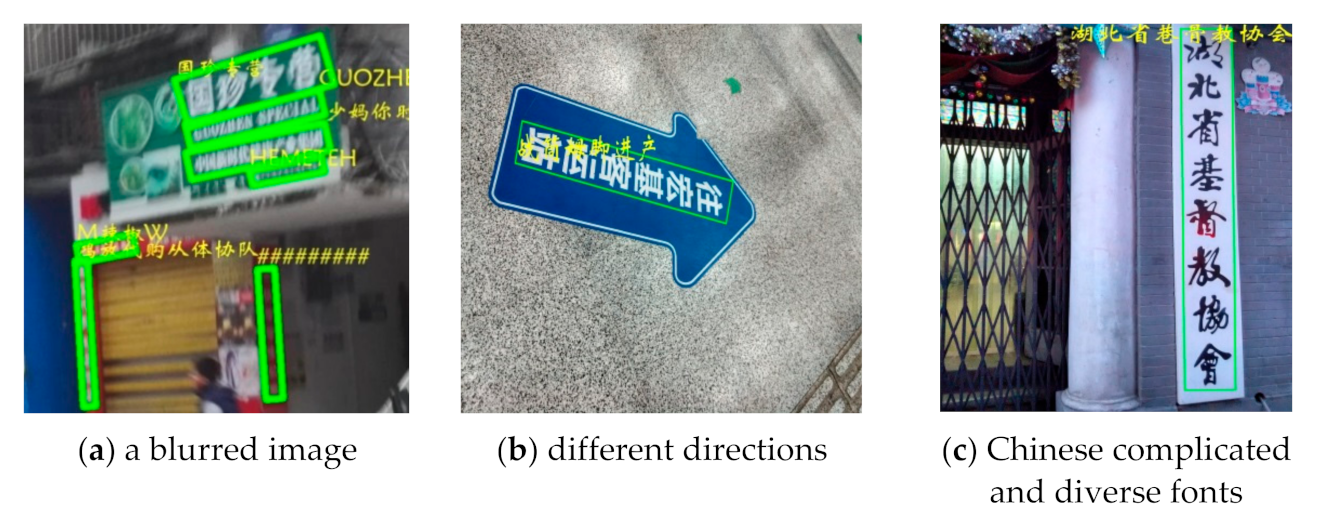

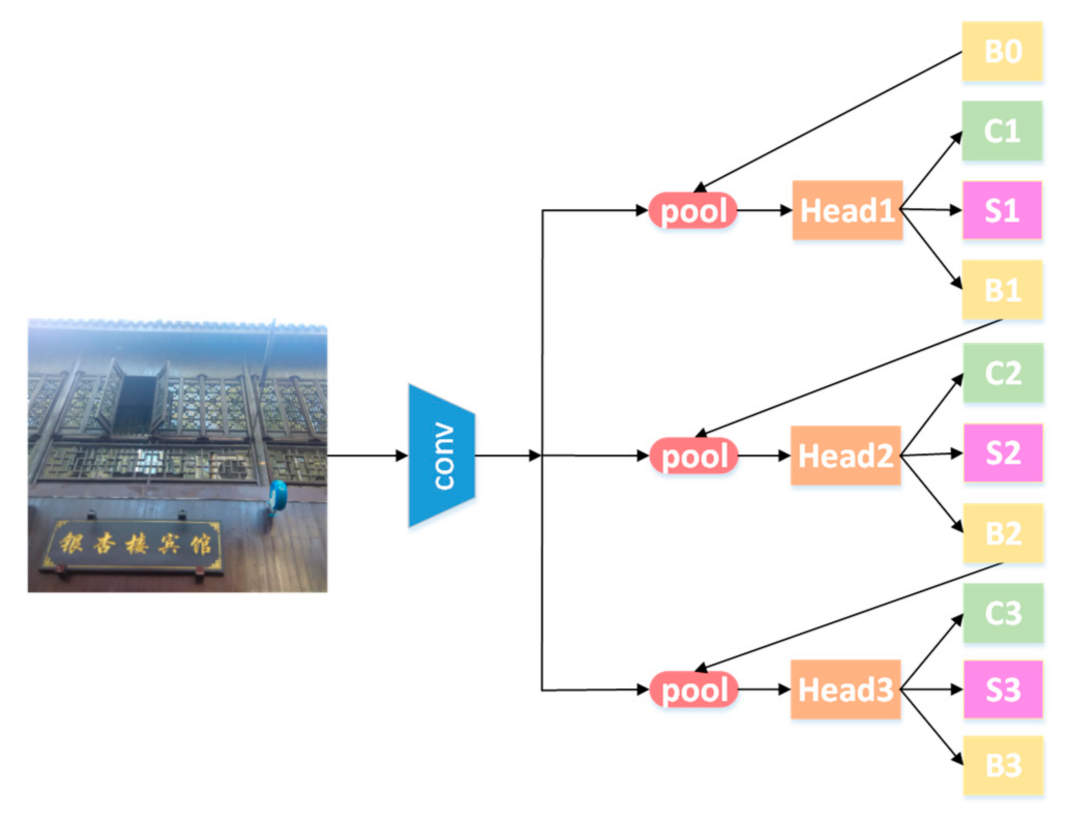

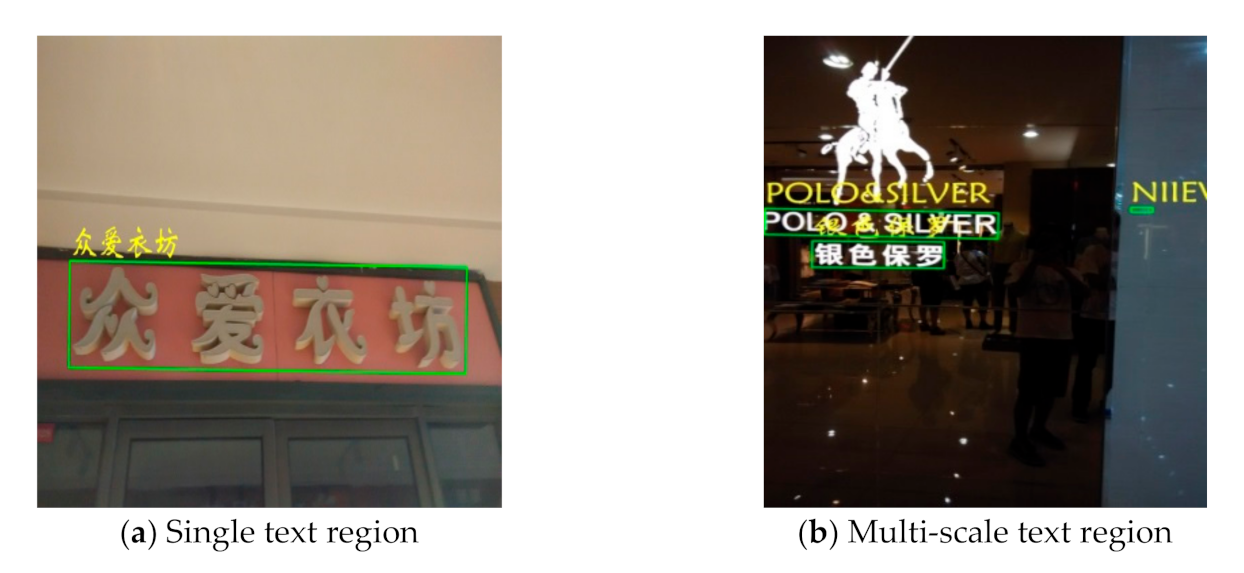

3.1. Text Detection

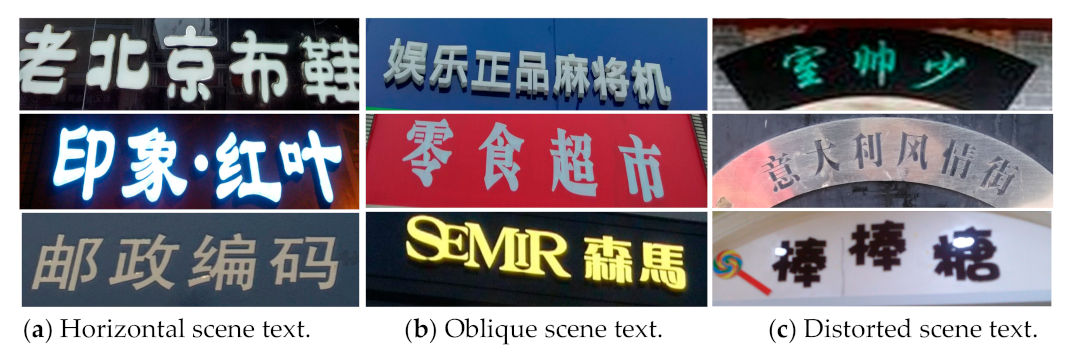

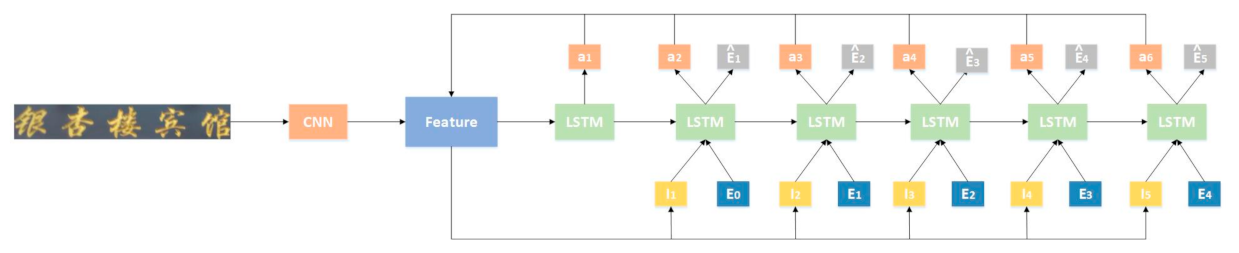

3.2. Arbitrary-Shaped Scene Text Recognition

4. Experiments on Detection and Recognition Models

4.1. Dataset

4.2. Parameter Settings

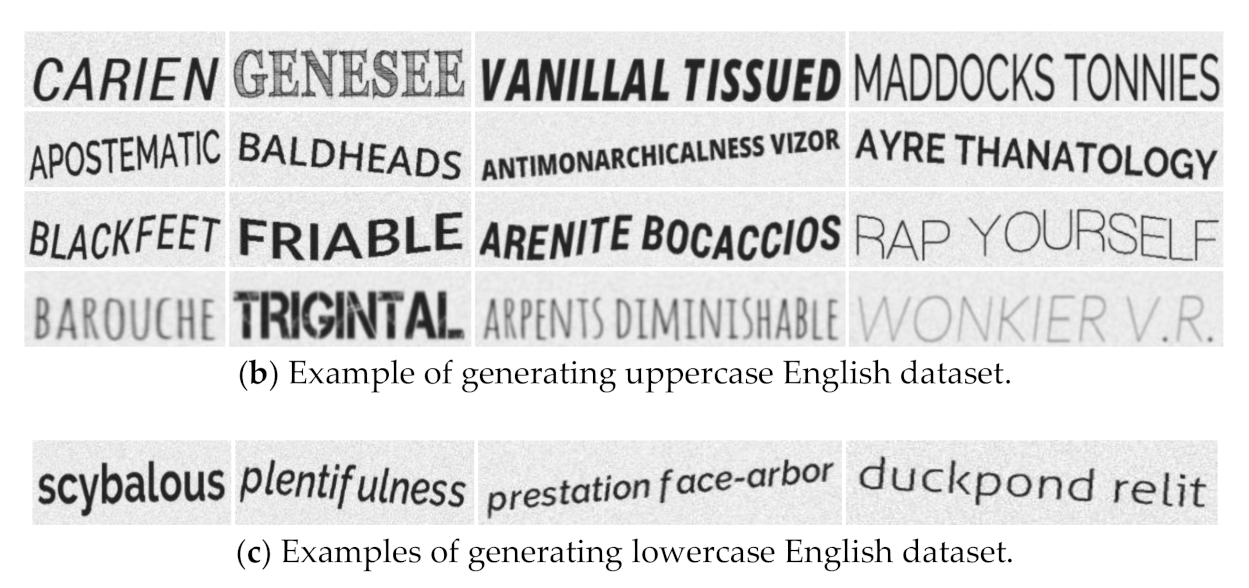

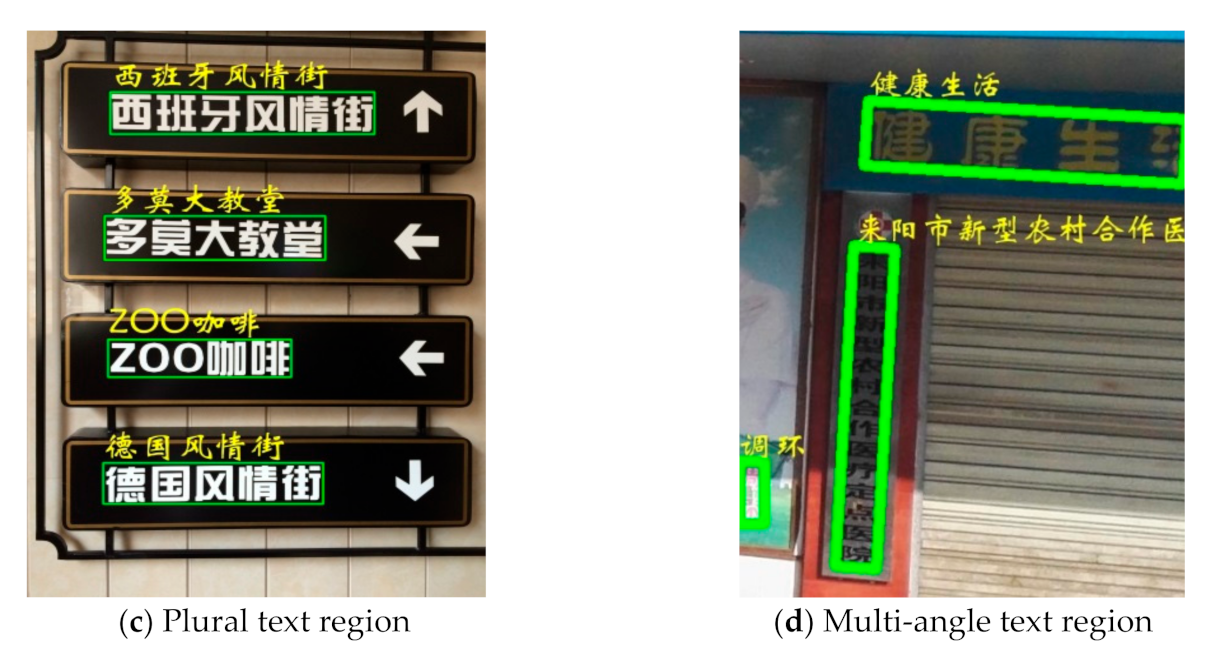

4.3. Text Detection Results

4.4. Text Recognition Result

4.5. Inverse Experiment

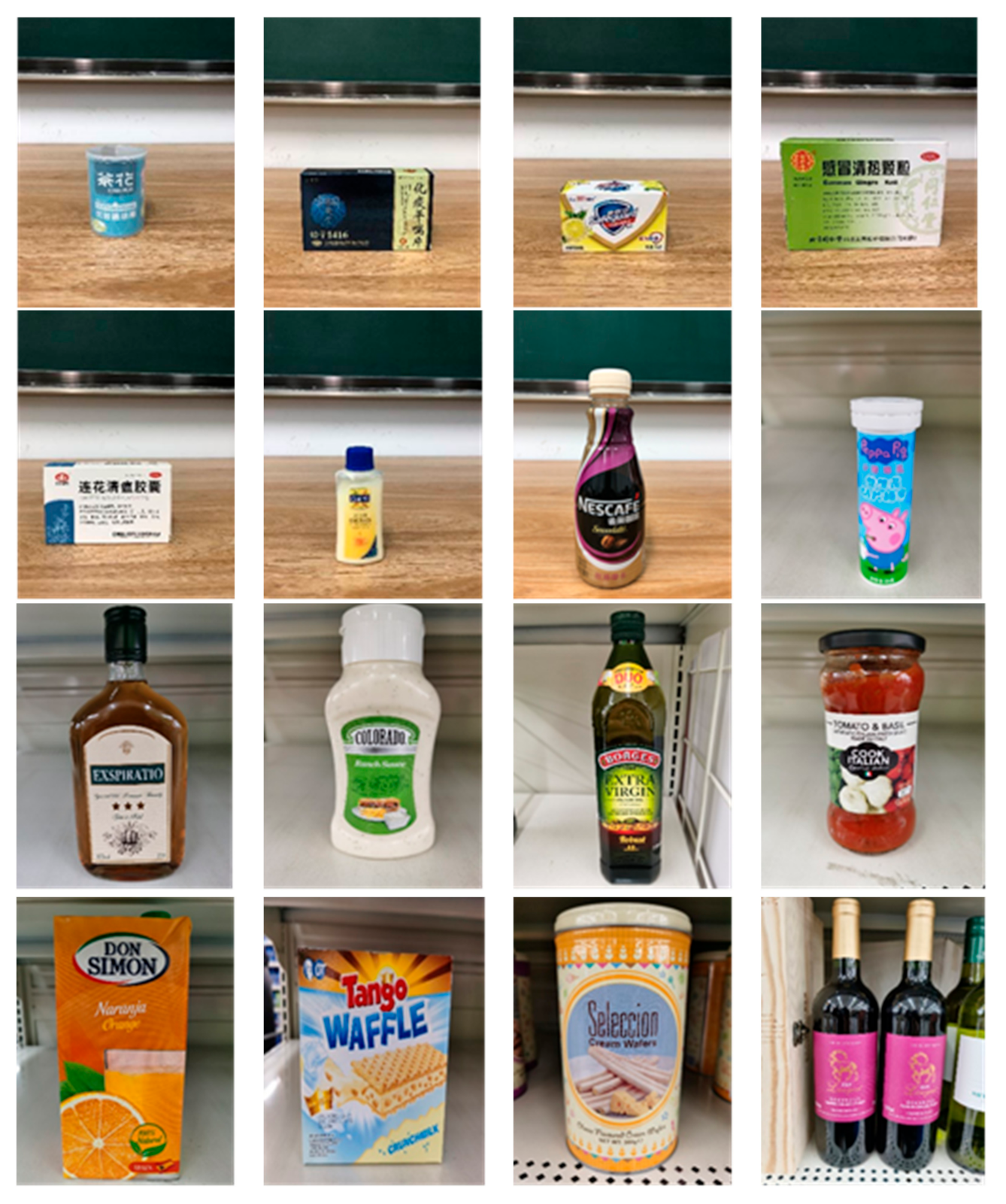

5. Robot Object Recognition Experiments

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.
2. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.
3. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
4. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.
5. Uijlings, J.R.R.; Van De Sande, K.E.A.; Gevers, T.; Smeulders, A.W.M. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.
6. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.
7. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
8. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV 2016); Springer Science and Business Media LLC: New York, NY, USA, 2016; pp. 21–37.
9. Zhang, J.; Wang, W.; Huang, D.; Liu, Q.; Wang, Y. A feasible framework for arbitrary-shaped scene text recognition. arXiv 2019, arXiv:1912.04561.
10. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.
11. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.
12. Rudi, A.; Carratino, L.; Rosasco, L. Falkon: An optimal large scale kernel method. arXiv 2017, arXiv:1705.10958.
13. Maiettini, E.; Pasquale, G.; Rosasco, L.; Natale, L. On-line object detection: A robotics challenge. Auton. Robot. 2019, 44, 739–757.
14. Maiettini, E.; Pasquale, G.; Tikhanoff, V.; Rosasco, L.; Natale, L. A weakly supervised strategy for learning object detection on a humanoid robot. In Proceedings of the 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids), Munich, Germany, 2–4 December 2020; pp. 194–201.
15. Maiettini, E.; Pasquale, G.; Rosasco, L.; Natale, L. Speeding-up object detection training for robotics with FALKON. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 5770–5776.
16. Ceola, F.; Maiettini, E.; Pasquale, G.; Rosasco, L.; Natale, L. Fast region proposal learning for object detection for robotics. arXiv 2020, arXiv:2011.12790.
17. Maiettini, E.; Camoriano, R.; Pasquale, G.; Tikhanoff, V.; Rosasco, L.; Natale, L. Data-efficient weakly-supervised learning for on-line object detection under domain shift in robotics. arXiv 2020, arXiv:2012.14345.
18. Browatzki, B.; Tikhanoff, V.; Metta, G.; Bülthoff, H.H.; Wallraven, C. Active object recognition on a humanoid robot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 2021–2028.
19. Alam, M.; Vidyaratne, L.; Wash, T.; Iftekharuddin, K.M. Deep SRN for robust object recognition: A case study with NAO humanoid robot. In Proceedings of the SoutheastCon 2016, Norfolk, VA, USA, 30 March–3 April 2016; pp. 1–7.
20. Yoshimoto, Y.; Tamukoh, H. Object recognition system using deep learning with depth images for service robots. In Proceedings of the 2018 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Okinawa, Japan, 27–30 November 2018; pp. 436–441.
21. Chen, X.; Guhl, J. Industrial Robot Control with Object Recognition based on Deep Learning. Procedia CIRP 2018, 76, 149–154.
22. Fu, M.; Sun, S.; Ni, K.; Hou, X. Mobile robot object recognition in the internet of things based on fog computing. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Lanzhou, China, 18–21 November 2019; pp. 1838–1842.
23. Takaki, S.; Tana, J.K.; Ishikawa, S. A human care system by a mobile robot employing cooperative objects recognition. In Proceedings of the TENCON 2017—2017 IEEE Region 10 Conference, Penang, Malaysia, 5–8 November 2017; pp. 1148–1152.
24. Cartucho, J.; Ventura, R.; Veloso, M. Robust object recognition through symbiotic deep learning in mobile robots. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2336–2341.
25. Kasaei, S.H.; Oliveira, M.; Lim, G.H.; Lopes, L.S.; Tomé, A.M. Towards lifelong assistive robotics: A tight coupling between object perception and manipulation. Neurocomputing 2018, 291, 151–166.
26. Eriksen, C.; Nicolai, A.; Smart, W. Learning object classifiers with limited human supervision on a physical robot. In Proceedings of the 2018 Second IEEE International Conference on Robotic Computing (IRC), Laguna Hills, CA, USA, 31 January–2 February 2018; pp. 282–287.
27. Venkatesh, S.G.; Upadrashta, R.; Kolathaya, S.; Amrutur, B. Teaching robots novel objects by pointing at them. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 1101–1106.
28. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.
29. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.
30. Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhutdinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. Int. Conf. Mach. Learn. 2015, 2048–2057.
31. Chng, C.K.; Liu, Y.; Sun, Y.; Ng, C.C.; Luo, C.; Ni, Z.; Fang, C.; Zhang, S.; Han, J.; Ding, E.; et al. ICDAR2019 Robust Reading Challenge on Arbitrary-Shaped Text—RRC-ArT. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1571–1576.
32. Sun, Y.; Karatzas, D.; Chan, C.S.; Jin, L.; Ni, Z.; Chng, C.-K.; Liu, Y.; Luo, C.; Ng, C.C.; Han, J.; et al. ICDAR 2019 Competition on Large-Scale Street View Text with Partial Labeling—RRC-LSVT. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1557–1562.
33. Zhang, R.; Yang, M.; Bai, X.; Shi, B.; Karatzas, D.; Lu, S.; Jawahar, C.V.; Zhou, Y.; Jiang, Q.; Song, Q.; et al. ICDAR 2019 robust reading challenge on reading Chinese text on signboard. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1577–1581.
34. Shi, B.; Yao, C.; Liao, M.; Yang, M.; Xu, P.; Cui, L.; Belongie, S.; Lu, S.; Bai, X. ICDAR2017 Competition on Reading Chinese Text in the Wild (RCTW-17). In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1429–1434.
35. Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. EAST: An Efficient and Accurate Scene Text Detector. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2642–2651.
36. Liao, M.; Zhu, Z.; Shi, B.; Xia, G.-S.; Bai, X. Rotation-sensitive regression for oriented scene text detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5909–5918.
37. Zhang, C.; Liang, B.; Huang, Z.; En, M.; Han, J.; Ding, E.; Ding, X. Look More Than Once: An Accurate Detector for Text of Arbitrary Shapes. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 10544–10553.
38. Zhu, Y.; Du, J. TextMountain: Accurate scene text detection via instance segmentation. Pattern Recognit. 2021, 110, 107336.
39. Yang, Q.; Cheng, M.; Zhou, W.; Chen, Y.; Qiu, M.; Lin, W. IncepText: A new inception-text module with deformable PSROI pooling for multi-oriented scene text detection. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1071–1077.
40. Xue, C.; Lu, S.; Zhan, F. Accurate scene text detection through border semantics awareness and bootstrapping. In Proceedings of the European Conference on Computer Vision (ECCV 2018); Springer Science and Business Media LLC: New York, NY, USA, 2018; pp. 370–387.
41. Sun, Y.; Liu, J.; Liu, W.; Han, J.; Ding, E.; Liu, J. Chinese Street View Text: Large-scale Chinese text reading with partially supervised learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 9085–9094.

| Word Count | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Chinese | | | | | | | | | | | |
| standard | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 10,000 |
| oblique | 5000 | 5000 | 5000 | 5000 | 5000 | 5000 | 5000 | 5000 | 5000 | 5000 | 50,000 |
| distorted | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 10,000 |
| obscure | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 1000 |
| English (uppercase) | | | | | | | | | | | |
| standard | 1000 | 1000 | 1000 | 1000 | 1000 | | | | | | 5000 |
| oblique | 2000 | 2000 | 2000 | 2000 | 2000 | | | | | | 10,000 |
| distorted | 1000 | 1000 | 1000 | 1000 | 1000 | | | | | | 5000 |
| obscure | 200 | 200 | 200 | 200 | 200 | | | | | | 1000 |
| English (lowercase) | | | | | | | | | | | |
| oblique | 2000 | 2000 | 2000 | 2000 | 2000 | | | | | | 10,000 |
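As a quick arithmetic check, the per-style counts in the dataset table above can be summed to confirm the stated size of 102,000 generated images. The sketch below is only an illustration: the dictionary layout and the names `GENERATED` and `group_totals` are hypothetical, and it assumes (as the table rows suggest) ten word-count bins for Chinese and five for English.

```python
# Each entry: style -> (images per word-count bin, number of word-count bins).
# Values are copied from the dataset table; the layout itself is illustrative.
GENERATED = {
    "Chinese": {
        "standard": (1000, 10),
        "oblique": (5000, 10),
        "distorted": (1000, 10),
        "obscure": (100, 10),
    },
    "English (uppercase)": {
        "standard": (1000, 5),
        "oblique": (2000, 5),
        "distorted": (1000, 5),
        "obscure": (200, 5),
    },
    "English (lowercase)": {
        "oblique": (2000, 5),
    },
}


def group_totals(spec):
    """Sum images per language group: per-bin count times number of bins."""
    return {
        group: sum(per_bin * bins for per_bin, bins in styles.values())
        for group, styles in spec.items()
    }


totals = group_totals(GENERATED)
grand_total = sum(totals.values())
print(totals)
print(grand_total)
```

The three group totals add up to the 102,000 images stated in the contributions.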

| Methods | Precision % | Recall % | F1-Measure % |
|---|---|---|---|
| EAST [35] | 59.7 | 47.8 | 53.1 |
| RRD [36] | 72.4 | 45.3 | 55.1 |
| LOMO [37] | 80.0 | 50.8 | 62.3 |
| TextMountain [38] | 80.8 | 55.2 | 65.6 |
| IncepText [39] | 78.5 | 56.9 | 66.0 |
| Border (DenseNet) [40] | 78.2 | 58.5 | 67.1 |
| End2End-PSL-MS [41] | 81.7 | 57.8 | 67.7 |
| Ours | 74.2 | 63.0 | 68.1 |
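The F1-measure column in the detection table is the harmonic mean of precision and recall. The following minimal sketch (the function name `f1_measure` is ours, not from the paper) reproduces the value reported for the proposed method from its precision and recall. Rows quoted from other papers may not reproduce exactly from the rounded precision and recall shown here.

```python
def f1_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in the same units, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall of the "Ours" row in the detection table.
ours_f1 = f1_measure(74.2, 63.0)
print(f"{ours_f1:.1f}")  # 68.1
```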

| Methods | Average_dist | Normalized % |
|---|---|---|
| End2End [41] | 27.5 | 72.9 |
| Attention OCR [9] | 26.3 | 74.2 |
| End2End-PSL [41] | 26.2 | 73.5 |
| Ours | 25.6 | 74.9 |
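The two recognition metrics in the table above are edit-distance based. The sketch below shows one common way such metrics are computed, assuming the usual ICDAR 2019 convention: Average_dist is the mean Levenshtein distance between prediction and ground truth, and Normalized is one minus the mean of each distance divided by the longer string's length. This definition is an assumption on our part, not something the text confirms, and the names `edit_distance` and `evaluate` are hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling one-row DP table."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def evaluate(pairs):
    """Return (average edit distance, normalized score in %) over (pred, gt) pairs."""
    if not pairs:
        raise ValueError("need at least one (prediction, ground truth) pair")
    dists = [edit_distance(pred, gt) for pred, gt in pairs]
    average_dist = sum(dists) / len(dists)
    # Assumed normalization: divide each distance by the longer string's length.
    ratios = [d / max(len(pred), len(gt), 1) for d, (pred, gt) in zip(dists, pairs)]
    normalized = 100.0 * (1.0 - sum(ratios) / len(ratios))
    return average_dist, normalized


# Toy example: one exact match and one single-character error.
avg, norm = evaluate([("BORGES", "BORGES"), ("BOROES", "BORGES")])
print(avg, round(norm, 2))
```

Under this definition a lower Average_dist and a higher Normalized score are both better, which matches the ordering of the rows in the table.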

| Methods | Normalized % |
|---|---|
| Baseline | 76.65 |
| Ours (generating) | 76.68 |
| Ours (inverse) | 77.91 |
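The inverse dataset behind the "Ours (inverse)" row is described as inverting the pixel values of the generated images while leaving the labels unchanged. A minimal sketch of that operation follows, assuming 8-bit pixel values stored in a flat `bytes` buffer. The names `invert_pixels` and `make_inverse_dataset` are hypothetical, and the authors' actual pipeline is not specified in the text.

```python
def invert_pixels(pixels: bytes) -> bytes:
    """Invert 8-bit pixel values: 0 becomes 255, 255 becomes 0."""
    return bytes(255 - value for value in pixels)


def make_inverse_dataset(samples):
    """Map (pixels, label) pairs to (inverted pixels, same label) pairs."""
    return [(invert_pixels(pixels), label) for pixels, label in samples]


# Toy sample: three pixel values and an illustrative label.
samples = [(bytes([0, 128, 255]), "DEXCON")]
inverse = make_inverse_dataset(samples)
print(list(inverse[0][0]), inverse[0][1])  # [255, 127, 0] DEXCON
```

Inverting twice returns the original image, so the inverse set adds exactly one new view per generated sample. With an image library, the same step is typically a single call or array expression on a `uint8` image.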

| Image | Recognition Result |
|---|---|
|  | WAFLE/Tango/CRUNCHMILK/New/REGIPE/OT/012704/Jakarta/INDONESIA/PRIMA/Content/Wafer/ABADI/Barat/Milk/Product/2020/Contentl Contenu: 20x89/ULTRA/Halanta Barat 11850-INDONESIA |

| Image | Confidence ≥ 0 | Confidence ≥ 0.9 | Confidence ≥ 0.97 | Confidence ≥ 0.98 |
|---|---|---|---|---|
|  | 德新康 DEXCON 德新康牌7596酒精消毒瓶 适用于完整皮肤的消费 净含量:500mL sonsloe 山东德新康医疗科技有限公 Sonsloe x.c 1bsbbM ygulondbsloia | 德新康 DEXCON 德新康牌7596酒精消毒瓶 适用于完整皮肤的消费 净含量:500mL sonsloe 山东德新康医疗科技有限公 | 德新康 DEXCON 德新康牌7596酒精消毒瓶 | 德新康 DEXCON |
|  | BORGES DUO Robust EXCLUSIVE MEDITERRANEAN EXTRA CAP DRESSING DRESSING COOKING VIRGIN Cold OLIVE SINCE OIL 750n Extraction MASTERS BOROES INTENSE 1896 PATENEDINOUE INTENSITY INTENSE DRESSING | BORGES DUO Robust EXCLUSIVE MEDITERRANEAN EXTRA CAP DRESSING DRESSING COOKING VIRGIN Cold OLIVE SINCE OIL 750n Extraction MASTERS BOROES | BORGES DUO Robust EXCLUSIVE MEDITERRANEAN EXTRA CAP DRESSING DRESSING COOKING VIRGIN | BORGES DUO Robust EXCLUSIVE MEDITERRANEAN EXTRA CAP |
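The table above shows how raising the confidence threshold trims the recognized text. A minimal sketch of that filtering step is given below, assuming each recognized text box carries a confidence score. The `TextBox` type, the function `filter_by_confidence`, and the individual scores are all hypothetical. The scores were chosen only so that the example mirrors the first table row and are not measured values.

```python
from typing import List, NamedTuple


class TextBox(NamedTuple):
    text: str
    confidence: float


def filter_by_confidence(boxes: List[TextBox], threshold: float = 0.97) -> List[str]:
    """Keep the text of boxes whose confidence is at least the threshold."""
    return [box.text for box in boxes if box.confidence >= threshold]


# Illustrative scores only (not from the paper).
boxes = [
    TextBox("德新康", 0.99),
    TextBox("DEXCON", 0.99),
    TextBox("德新康牌7596酒精消毒瓶", 0.975),
    TextBox("净含量:500mL", 0.93),
    TextBox("1bsbbM", 0.41),
]

kept_097 = filter_by_confidence(boxes, 0.97)
kept_098 = filter_by_confidence(boxes, 0.98)
print(kept_097)
print(kept_098)
```

With these toy scores, the 0.97 threshold keeps the product name while dropping the garbled string, whereas 0.98 also drops the product name. This is the trade-off that motivates the 0.97 setting.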

| Text detection result |  |  |  |  |
|---|---|---|---|---|
| Text recognition result | 丝滑摩卡 Smoollatté 雀巢咖啡 NESCAFE | 甘源 椒盐味花生 坚果与籽类食品 | TOMATO SAUCE PASTA ITALIAN | EXSPIRATIO Biandy |

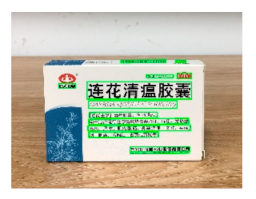

| Image 1 | Image 2 | Description |

|---|---|---|

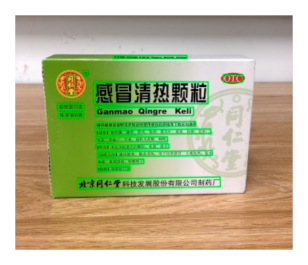

|  | Different Medicine. |

| 连花清盒胶襄 | 感冒清热颗粒 | |

|  | Different Liquid: Left is a drink and right is alcohol. |

| 秋林·格瓦斯 | 德新康牌7596酒精消毒瓶 | |

|  | Hair care: left is conditioner and right is shampoo. |

| 吕臻参换活御时生机护发乳 | 吕臻参换活御时生机洗发水 | |

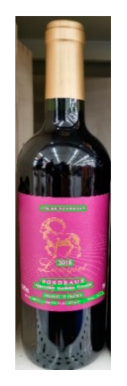

|  | Different brand Wine: left is BORDEAUX and right is CABERNET. |

| BORDEAUX | CABERNET |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, S.; Xu, H.; Li, Q.; Zhang, F.; Hou, K. A Robot Object Recognition Method Based on Scene Text Reading in Home Environments. Sensors 2021, 21, 1919. https://doi.org/10.3390/s21051919
