Design and Implementation of a Real-Time Multi-Beam Sonar System Based on FPGA and DSP

Abstract

1. Introduction

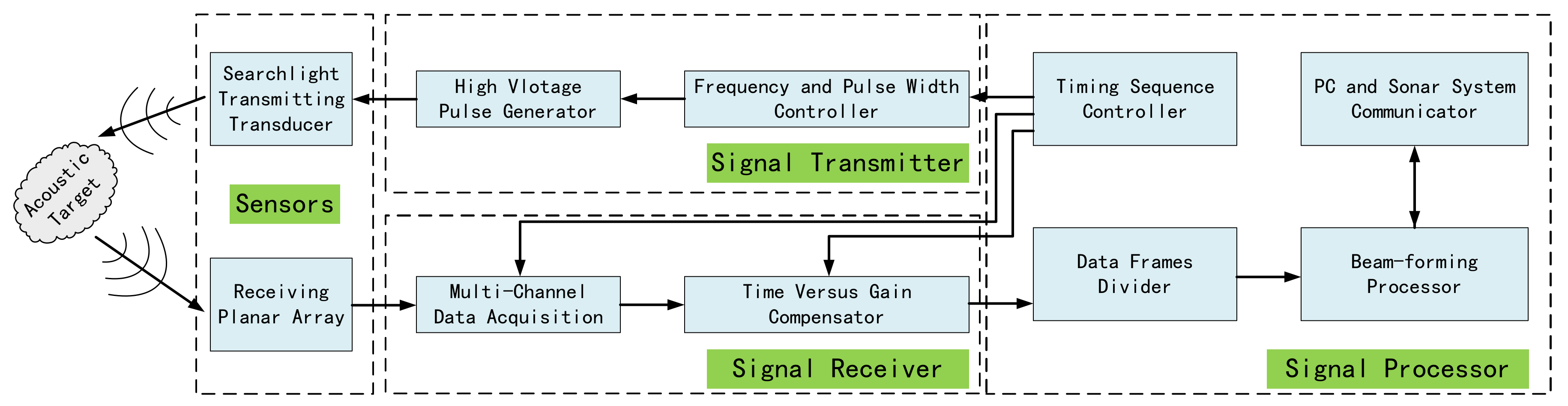

2. Operating Principle of Multibeam Sonars

2.1. The Positioning Method of Multibeam Sonars

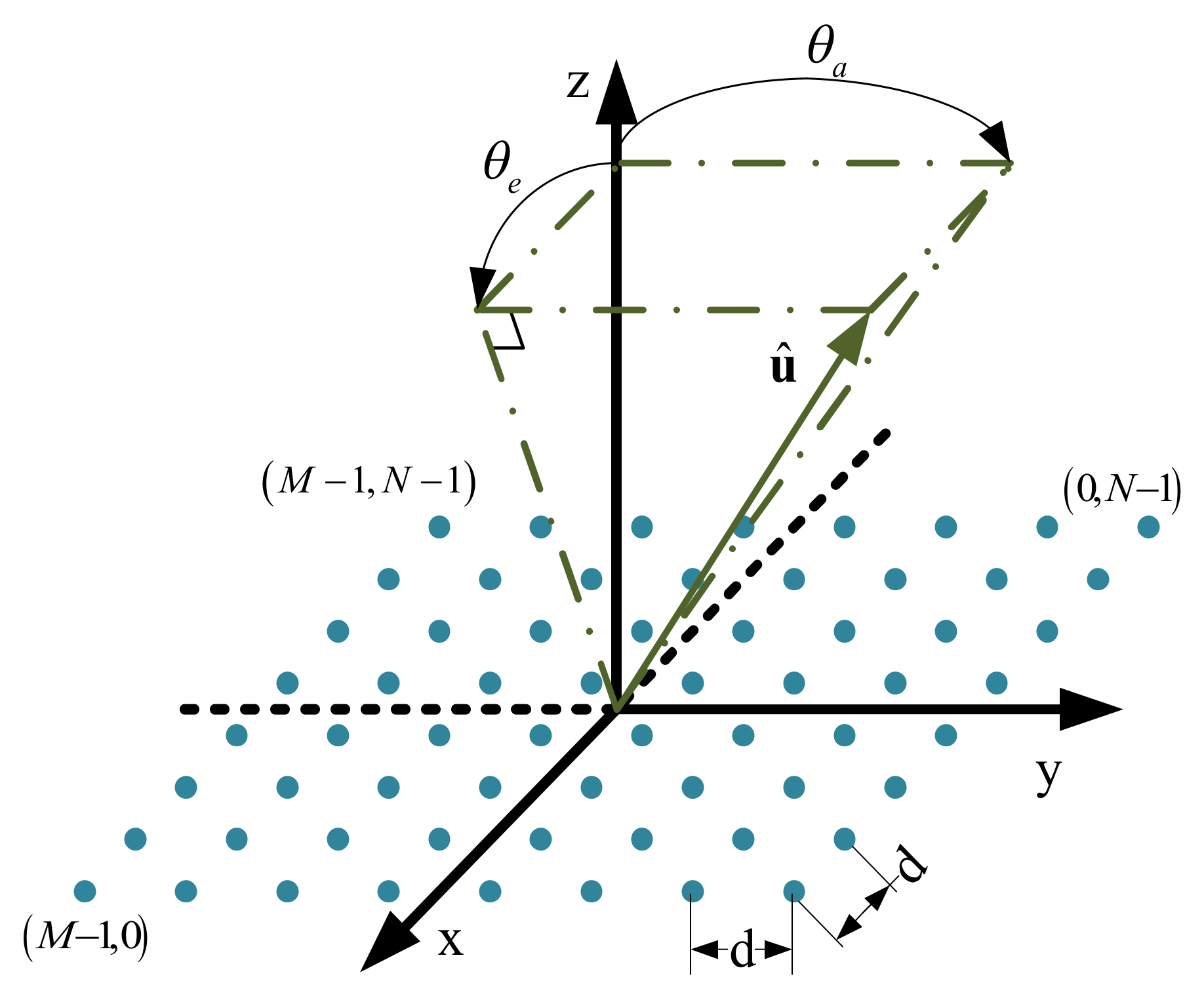

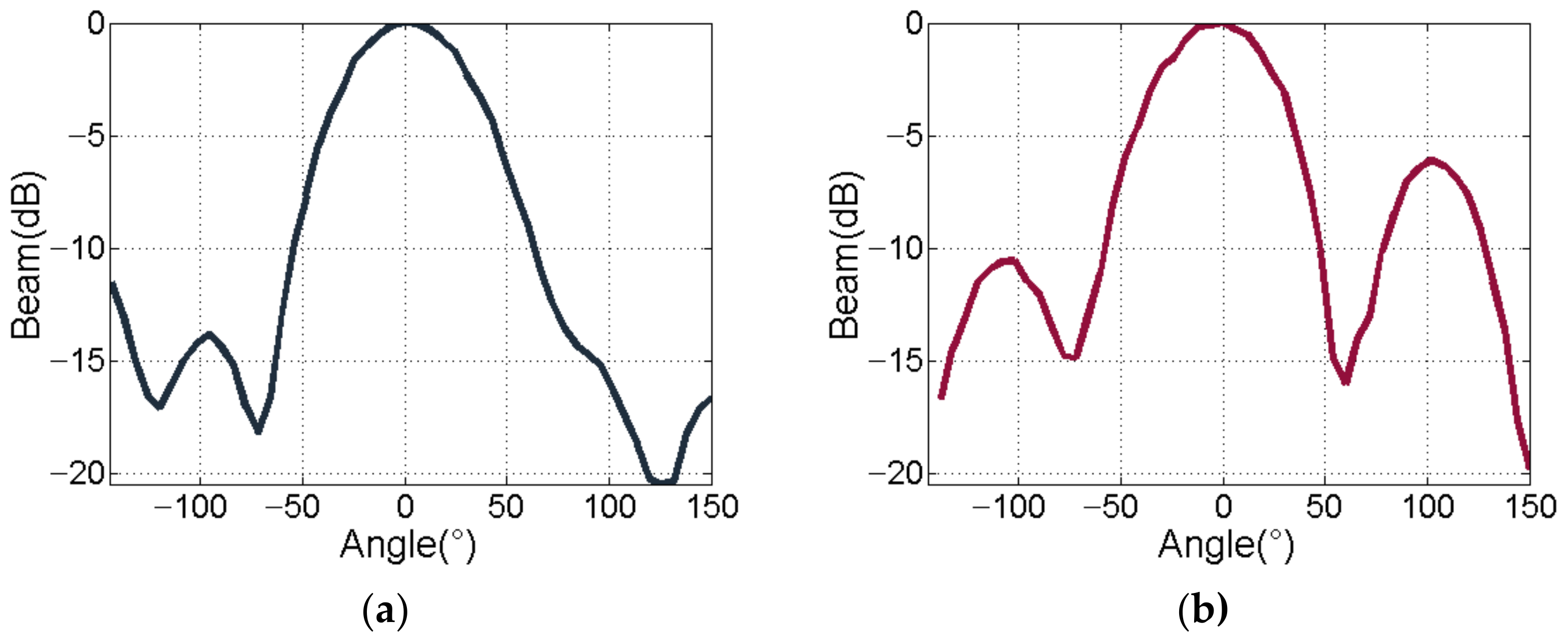

2.2. Study on Beamforming Method for Planar Arrays

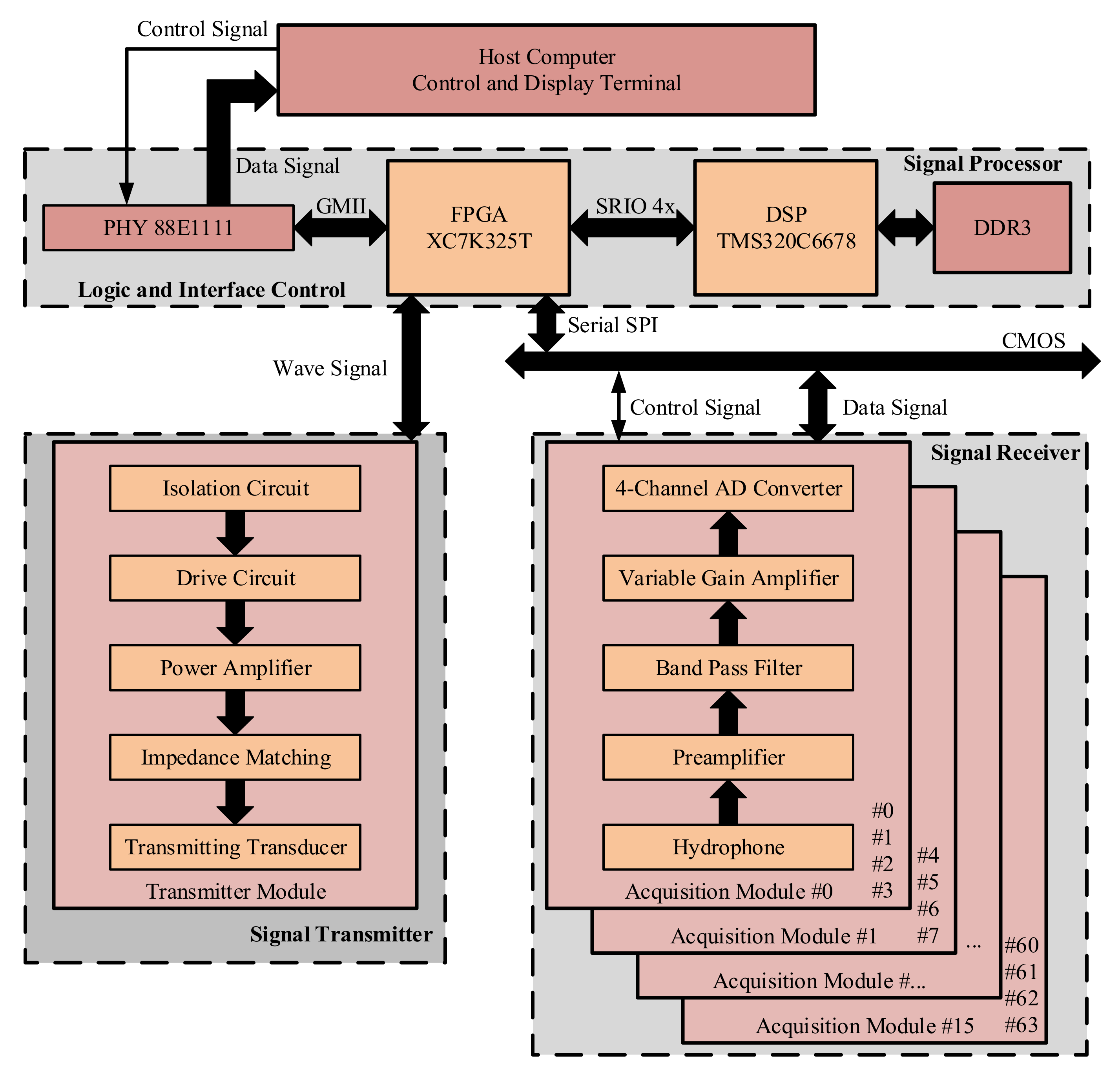

3. Hardware Design

3.1. Overall Hardware Design

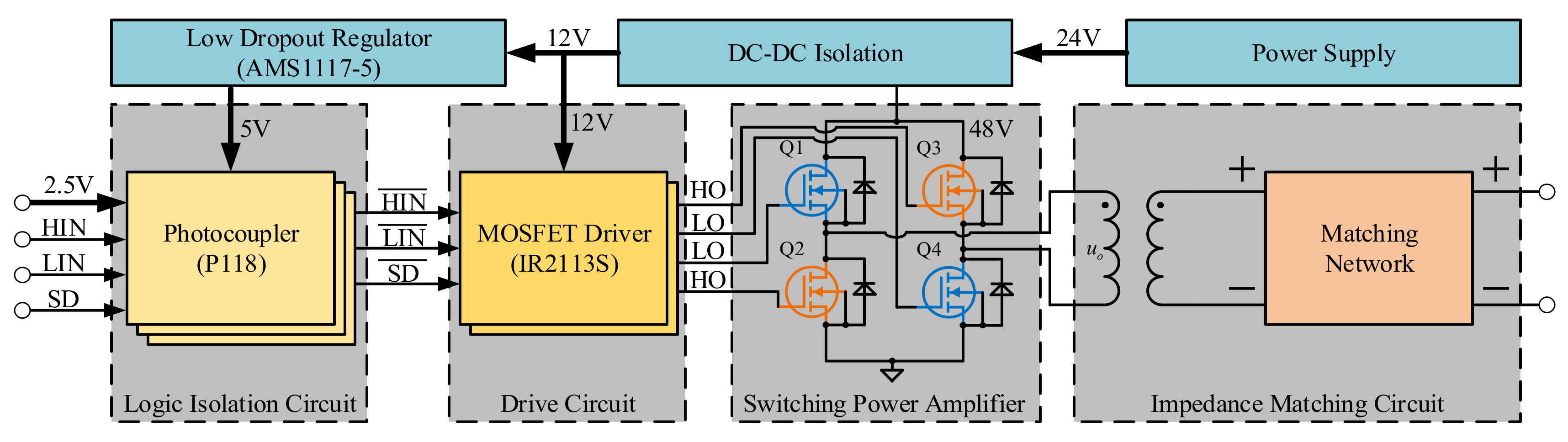

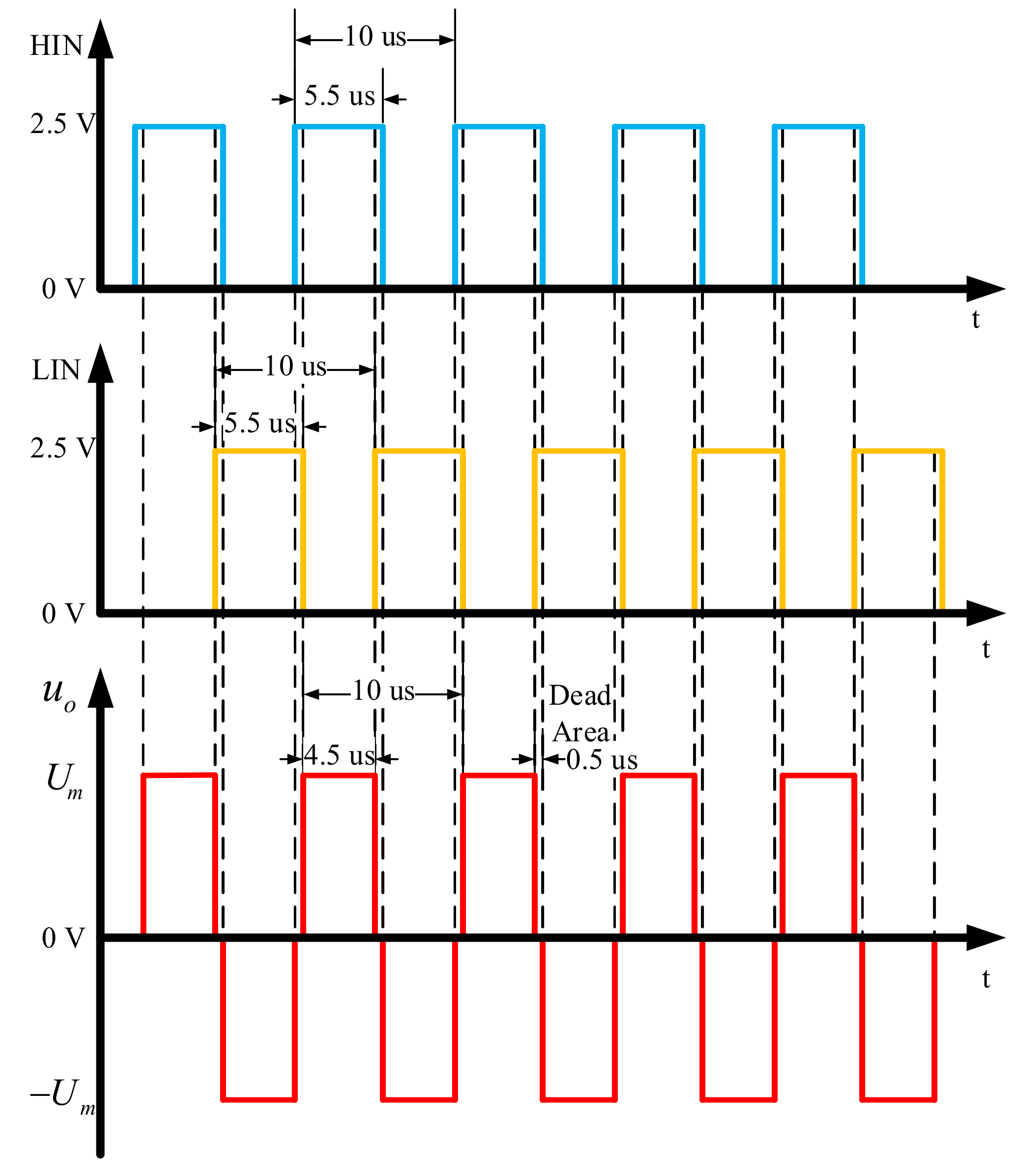

3.2. Design of High-Voltage Pulse Transmitting Module

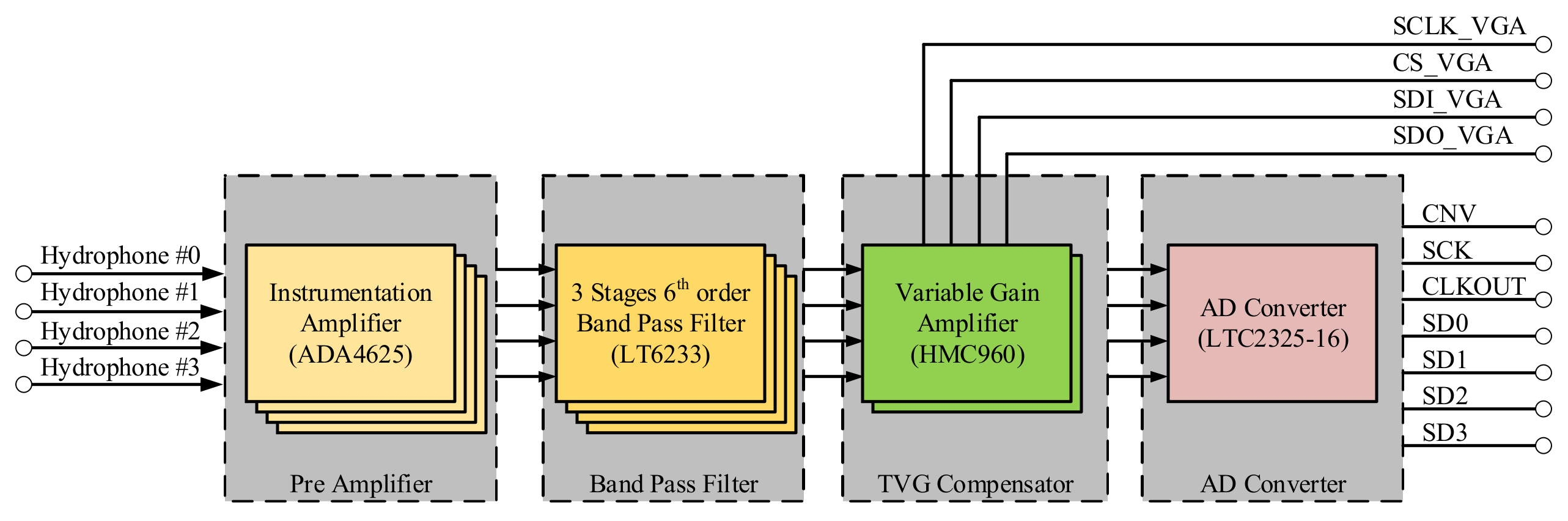

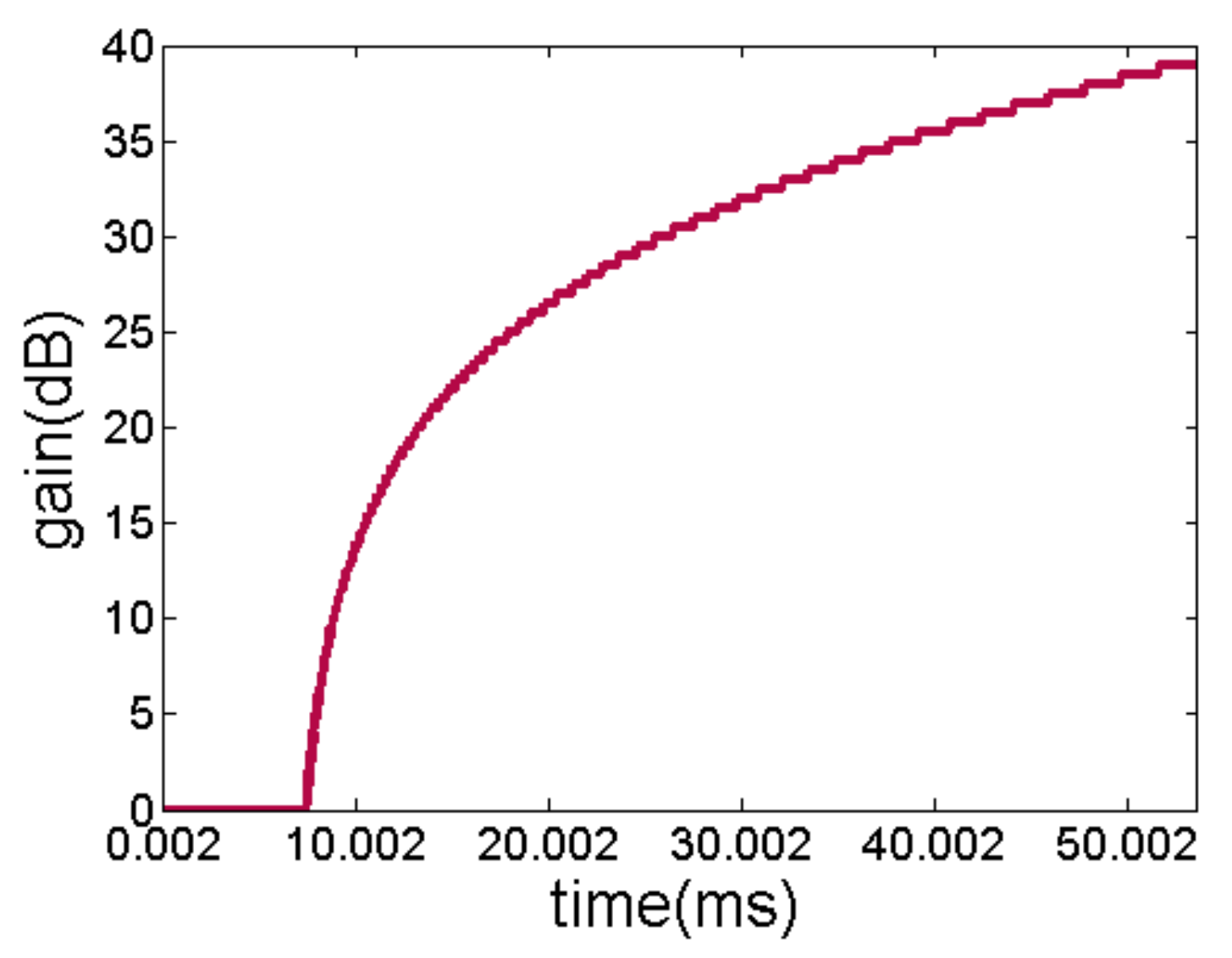

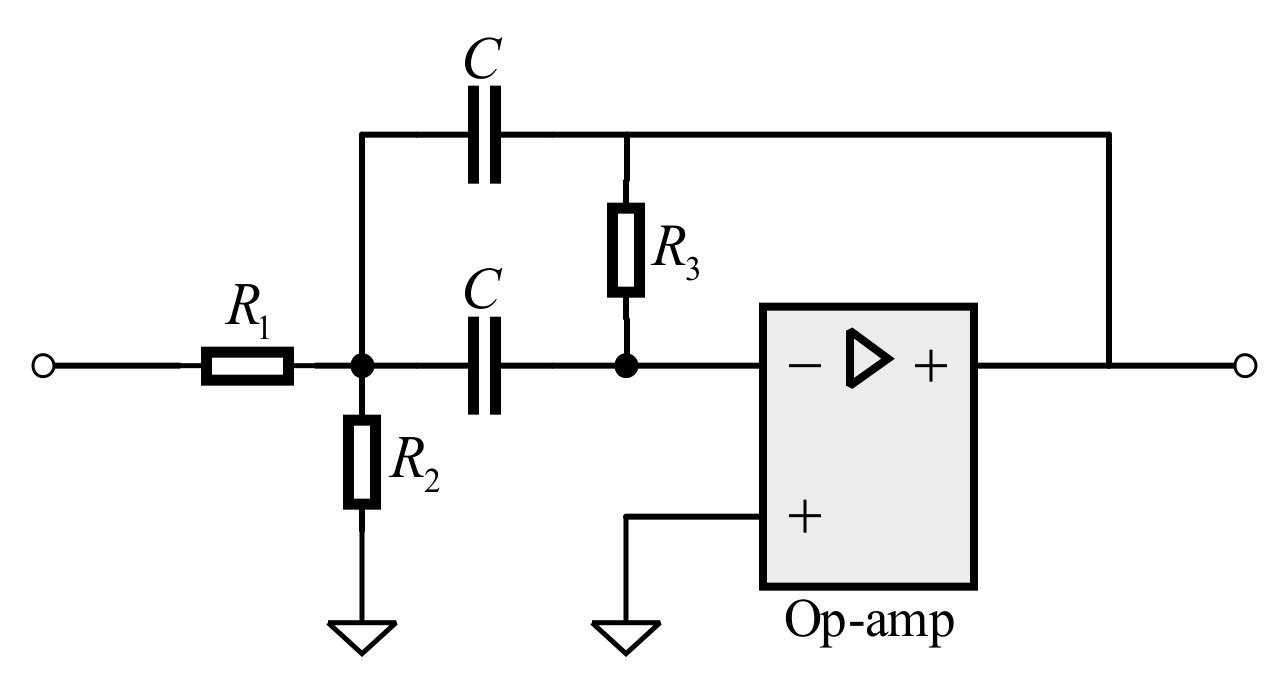

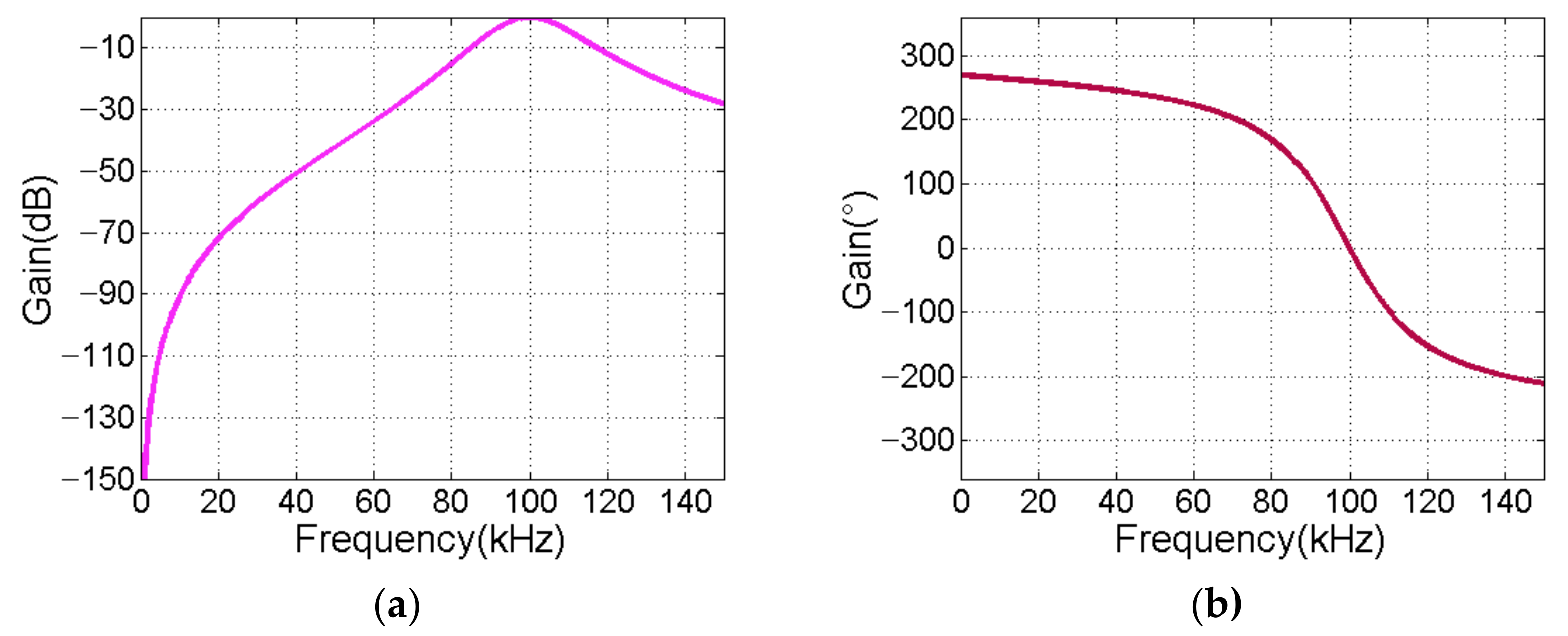

3.3. Design of Multi-Channel Data Acquisition Module

4. Software Design

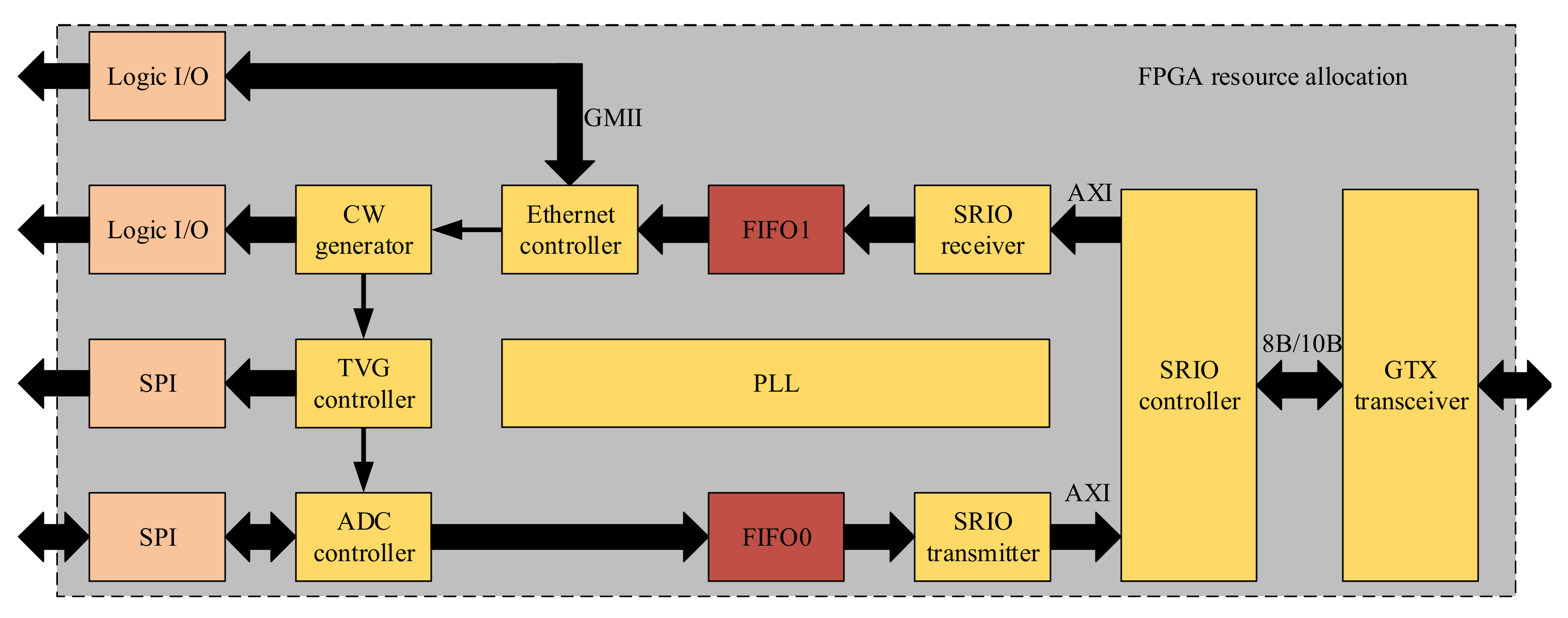

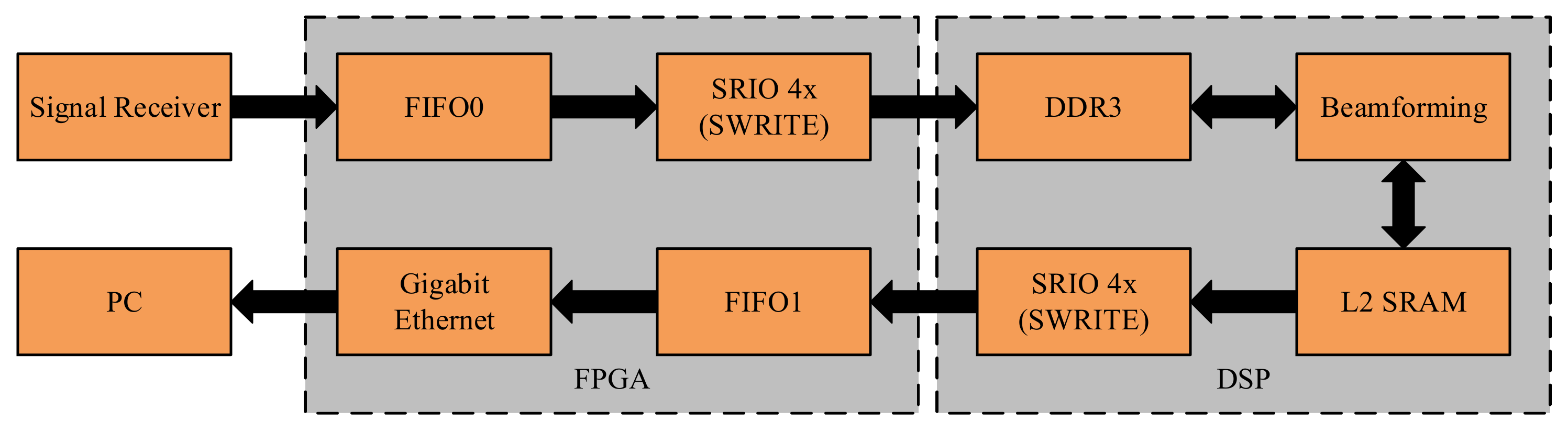

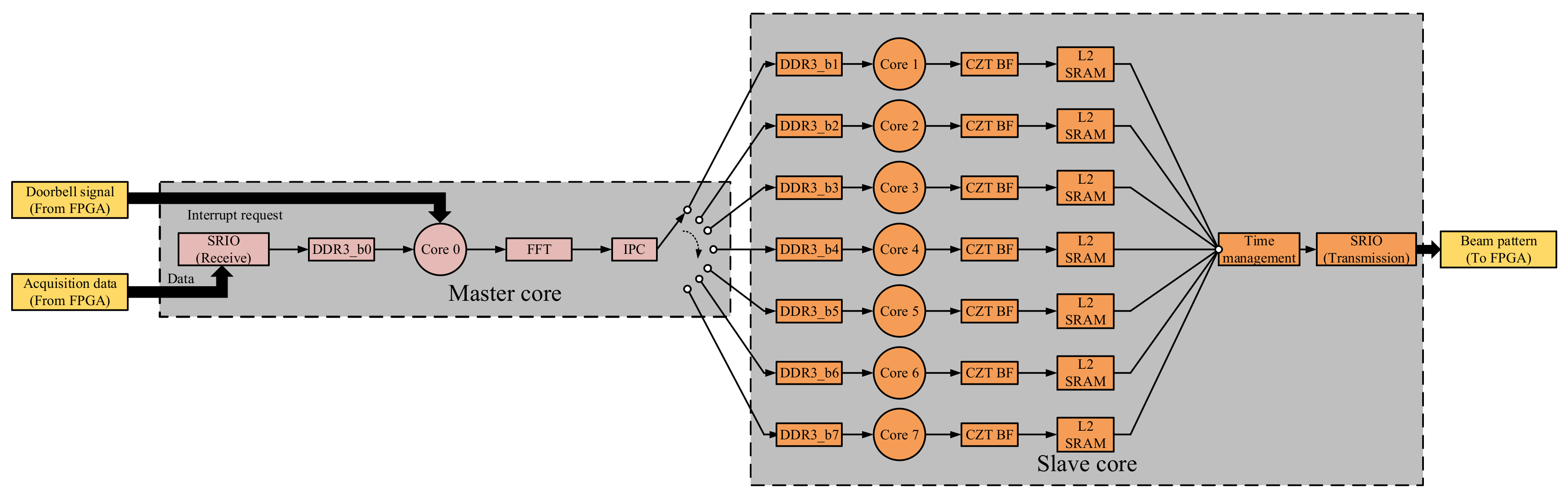

4.1. Overall Software Design

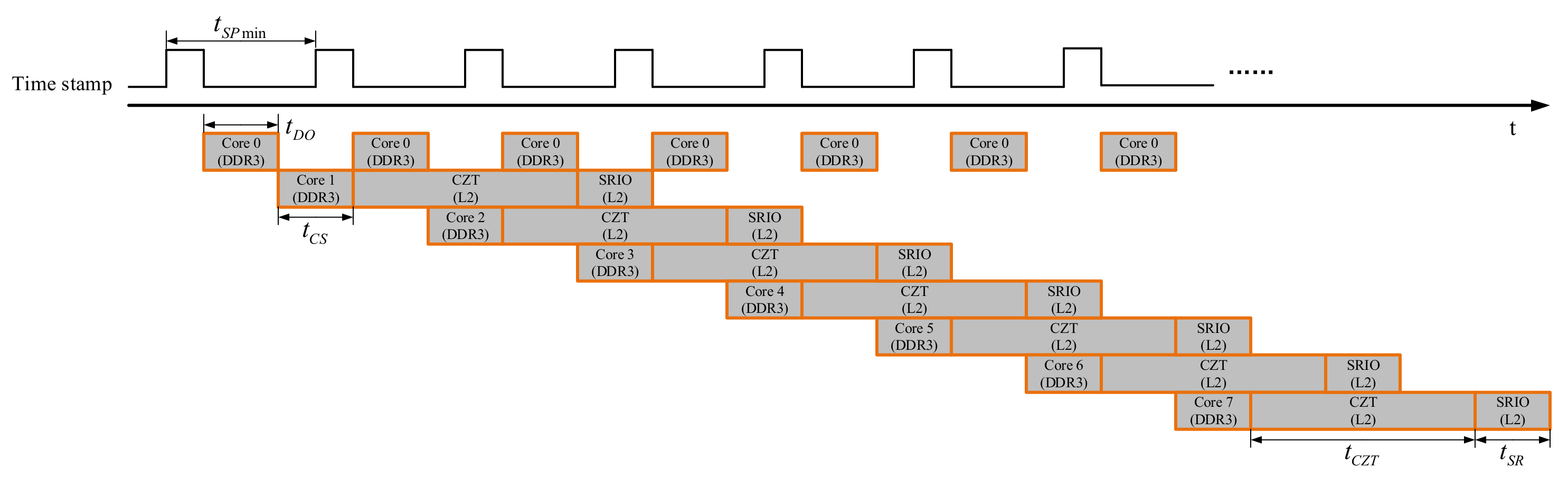

4.2. Analysis and Planning of Data Flow

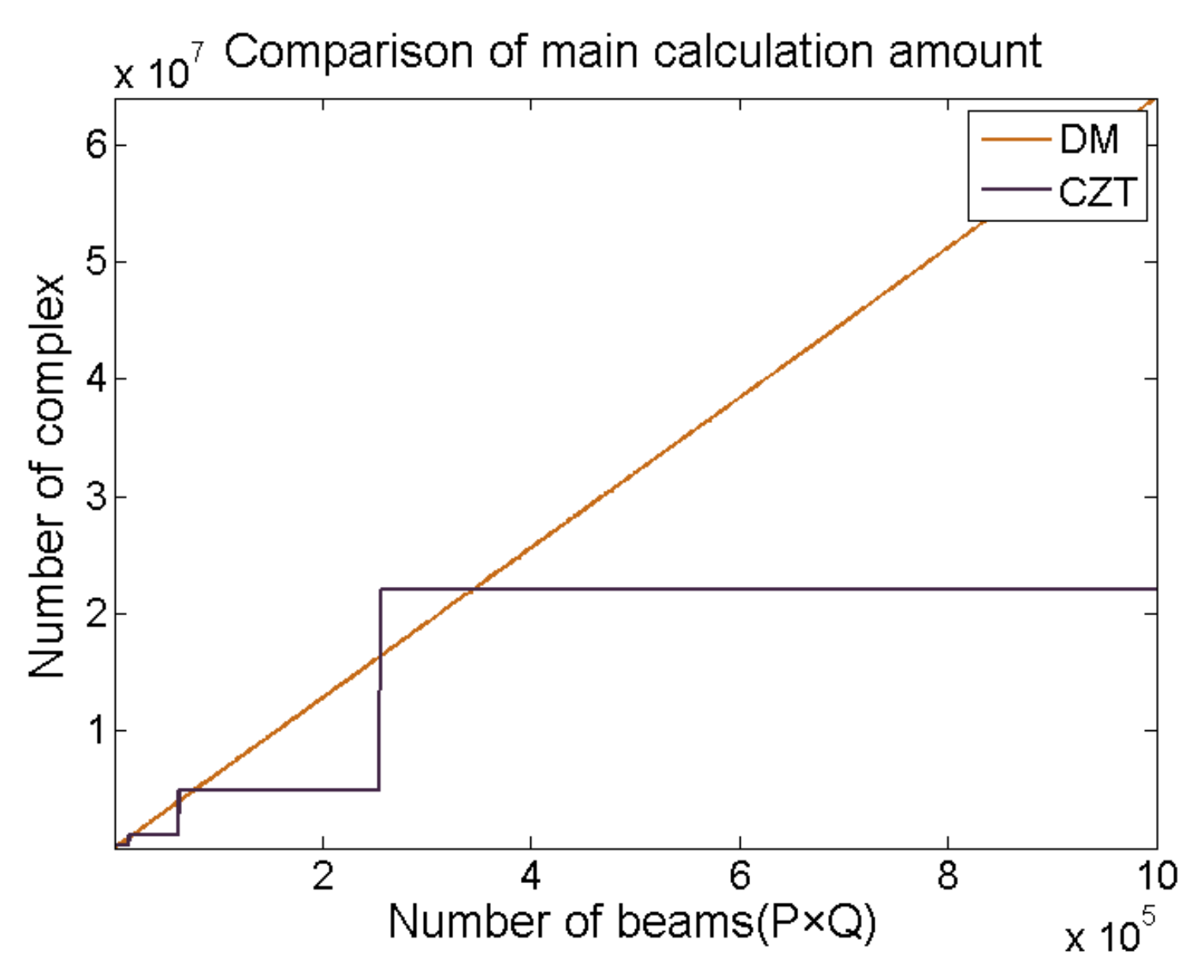

4.3. Design of 2-D CZT Beamforming Based on Multicore DSP
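
The following Python sketch illustrates how chirp-z transform (CZT) beamforming works in principle. It is not the authors' multicore DSP implementation. It assumes narrowband phase-shift beamforming of a uniform rectangular array with element spacing d, and beams placed on a uniform grid of direction cosines u_k = u0 + k·du and v_k = v0 + k·dv. Under those assumptions, the beam sum along each array axis is exactly a chirp-z transform, so a 2-D beam fan can be computed as separable CZTs: first along the rows, then along the columns. The function names (`fft`, `czt`, `beamform_2d`), the radix-2 FFT, and the 8 × 8 half-wavelength test case are illustrative choices, not taken from the paper.

```python
import cmath
import math


def fft(x, inverse=False):
    """Recursive radix-2 FFT; len(x) must be a power of two.
    The inverse transform is returned unnormalized (caller divides by n)."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def czt(x, theta0, phi, num_out):
    """Chirp-z transform X_k = sum_m x_m exp(-j (theta0 + phi*k) m), k = 0..num_out-1,
    computed with Bluestein's identity m*k = (m^2 + k^2 - (k-m)^2) / 2 and FFT convolution."""
    n = len(x)
    size = 1
    while size < n + num_out - 1:  # circular convolution must not wrap onto needed outputs
        size *= 2
    # Pre-chirped input, zero-padded to the FFT size.
    y = [x[m] * cmath.exp(-1j * (theta0 * m + phi * m * m / 2)) for m in range(n)]
    y += [0j] * (size - n)
    # Chirp filter h_l = exp(+j phi l^2 / 2) for l = -(n-1) .. num_out-1 (negative l wrapped).
    h = [0j] * size
    for l in range(num_out):
        h[l] = cmath.exp(1j * phi * l * l / 2)
    for l in range(1, n):
        h[size - l] = cmath.exp(1j * phi * l * l / 2)
    conv = fft([a * b for a, b in zip(fft(y), fft(h))], inverse=True)
    # Post-chirp and normalize the inverse FFT.
    return [cmath.exp(-1j * phi * k * k / 2) * conv[k] / size for k in range(num_out)]


def beamform_2d(snapshot, d_over_lambda, u0, du, num_u, v0, dv, num_v):
    """Narrowband beams of a uniform rectangular array on a grid of direction
    cosines u_k = u0 + k*du, v_k = v0 + k*dv, via separable CZTs (rows, then columns)."""
    kappa = 2 * math.pi * d_over_lambda
    rows = [czt(row, kappa * v0, kappa * dv, num_v) for row in snapshot]  # Nx x num_v
    beams = [[0j] * num_v for _ in range(num_u)]
    for kv in range(num_v):
        column = [rows[ix][kv] for ix in range(len(snapshot))]
        for ku, value in enumerate(czt(column, kappa * u0, kappa * du, num_u)):
            beams[ku][kv] = value
    return beams


if __name__ == "__main__":
    # Hypothetical 8 x 8 array, half-wavelength spacing, plane wave from (u, v) = (0.25, -0.125).
    kappa = math.pi  # 2*pi*d/lambda with d = lambda/2
    snap = [[cmath.exp(1j * kappa * (ix * 0.25 + iy * -0.125)) for iy in range(8)]
            for ix in range(8)]
    beams = beamform_2d(snap, 0.5, -0.5, 0.125, 9, -0.5, 0.125, 9)
    mags = [[abs(b) for b in row] for row in beams]
    peak = max((mags[i][j], i, j) for i in range(9) for j in range(9))
    print(peak)  # peak beam index (6, 3), i.e. u = 0.25, v = -0.125, magnitude close to 64
```

Forming each beam by direct summation costs O(N·K) operations per axis for N elements and K beams. The Bluestein form replaces this with FFTs of length at least N + K − 1, which is the main reason CZT beamforming is attractive when a large beam fan has to be formed in real time.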

5. Experiment and Analysis

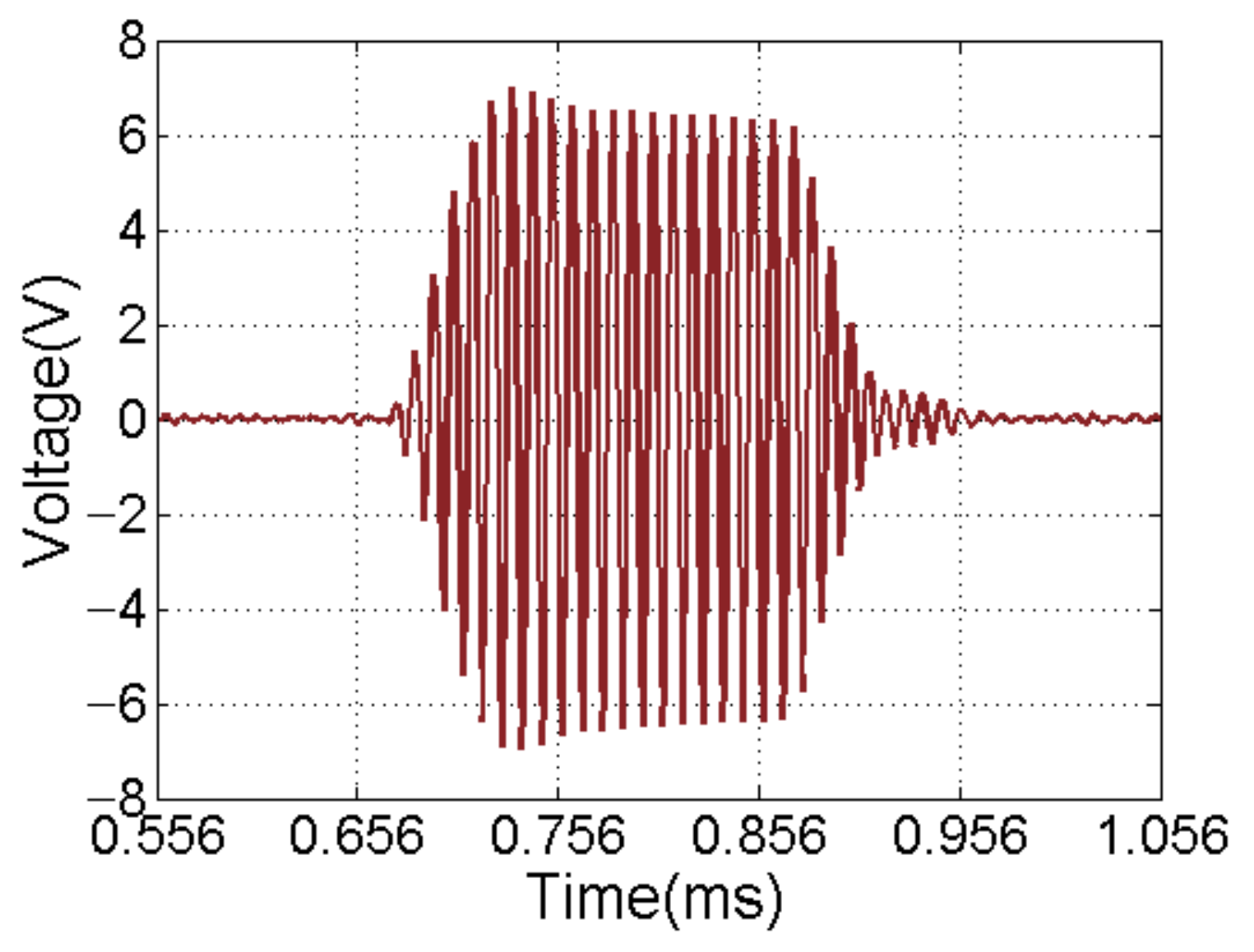

5.1. Measurement of Transmitting Sound Source Level and Directivity

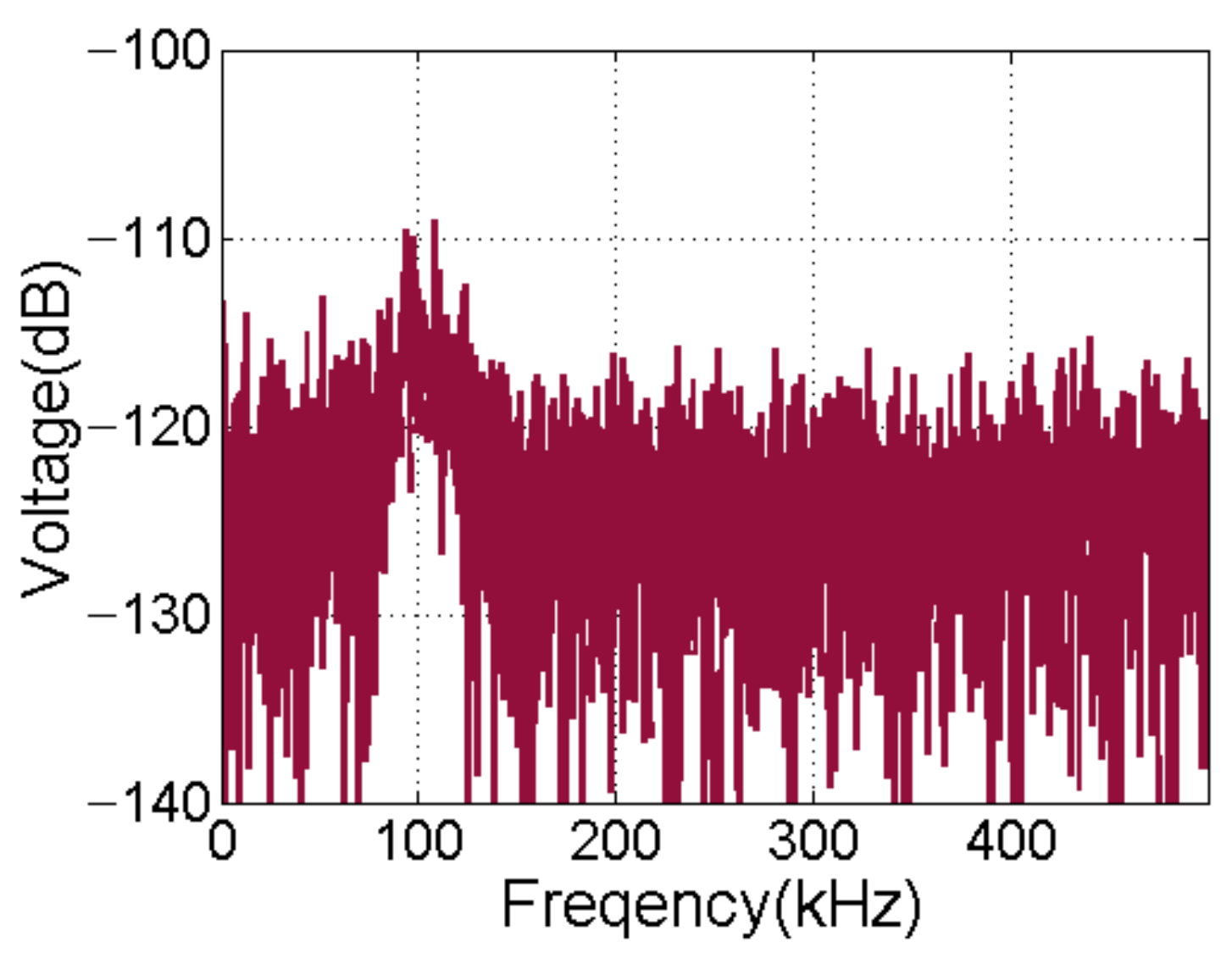

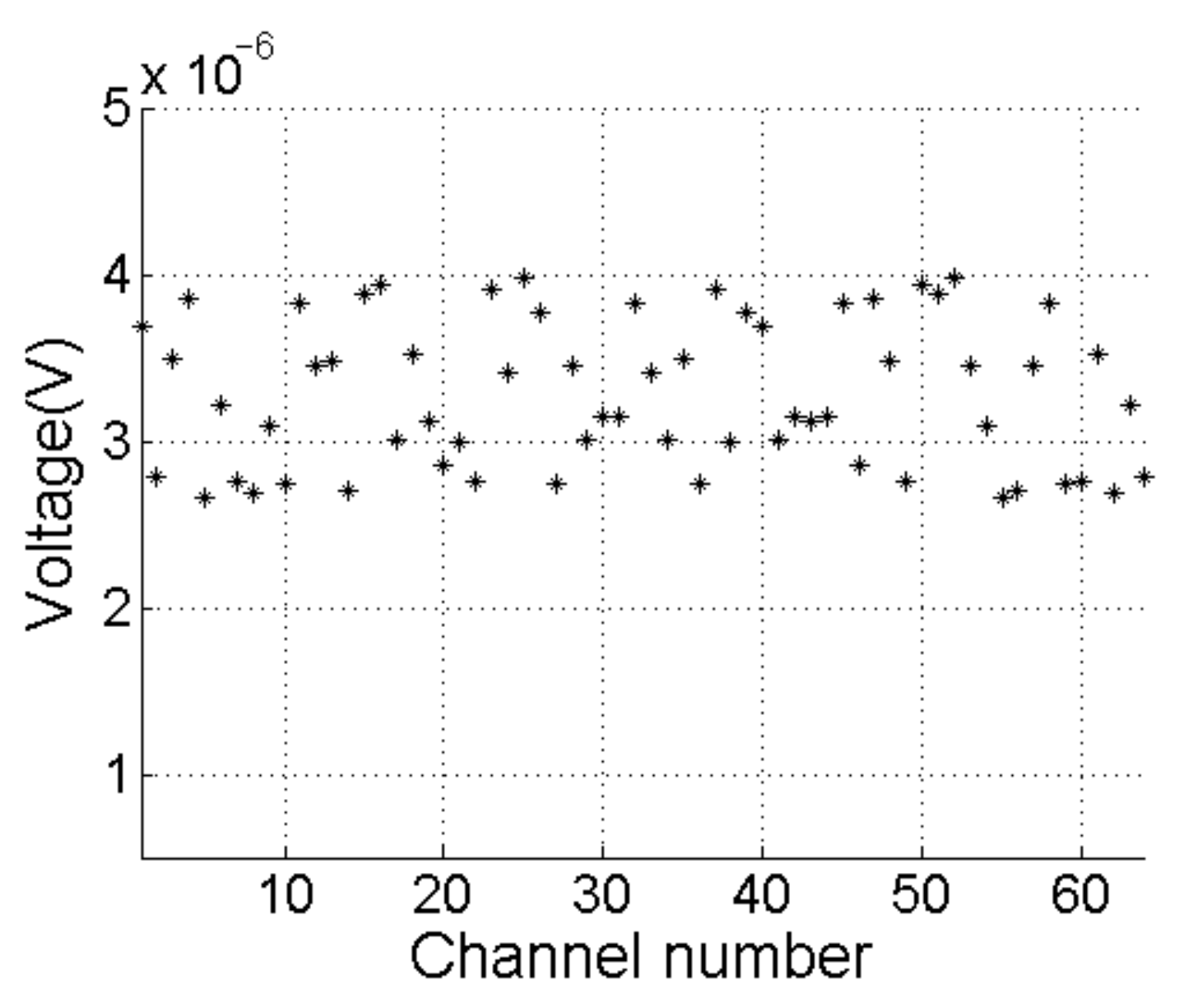

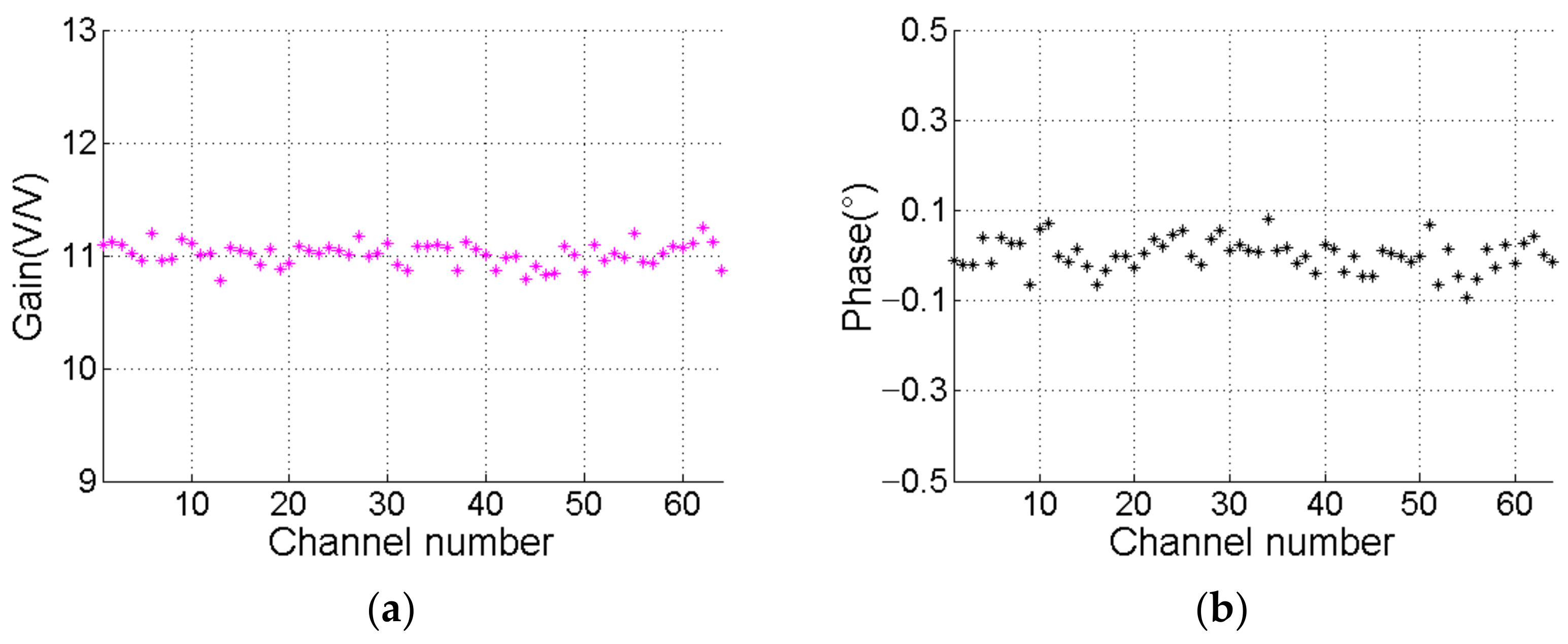

5.2. Measurement of Receiving Background Noise and Consistency

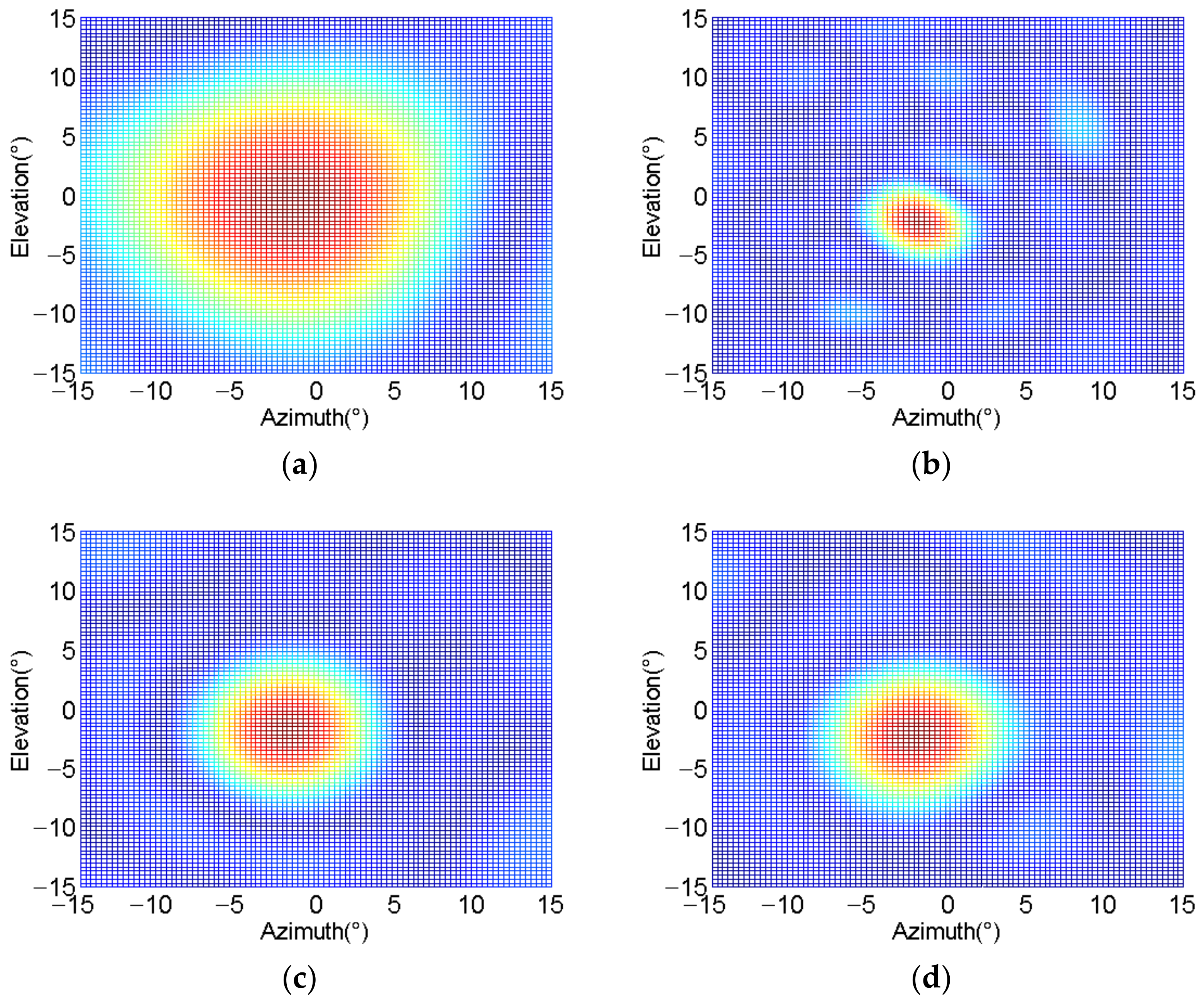

5.3. Underwater Positioning Experiment

5.4. Discussion on Scalability of Multibeam Sonar

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| 512 μs | 190.45 μs | 6.32 μs | 2994.26 μs | 9.2 μs |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tian, H.; Guo, S.; Zhao, P.; Gong, M.; Shen, C. Design and Implementation of a Real-Time Multi-Beam Sonar System Based on FPGA and DSP. Sensors 2021, 21, 1425. https://doi.org/10.3390/s21041425