Abstract

The low resolution of thermal images is a limiting factor when fusing them with data from higher-resolution sensors. In the field of remote sensing, different families of algorithms have been designed to fuse panchromatic images with multispectral images from satellite platforms, in a process known as pansharpening. Attempts have been made to transfer these pansharpening algorithms to thermal images acquired by satellite sensors. Our work analyses the potential of these algorithms when applied to thermal images from unmanned aerial vehicles (UAVs). We present a quantitative comparison of satellite pansharpening methods applied to the fusion of high-resolution images with thermal images obtained from UAVs, so that the method offering the best quantitative results can be chosen. This analysis, which allows an objective selection of the method to use with this type of image, has not been carried out until now. The selected algorithm is used here to fuse images from UAV-mounted thermal sensors with images from other sensors for heritage documentation, but it has applications in many other fields.

1. Introduction

The use of thermal cameras with sensors sensitive to the long-wave thermal infrared part of the electromagnetic spectrum (9–14 micrometres) is becoming increasingly widespread. However, unlike other kinds of sensors such as visible spectrum RGB cameras, even the most advanced commercial thermal sensors, which are usually sensitive to wavelengths between 2.5 and 15 µm, do not exceed one megapixel. This is due to technical limitations: the smaller the microbolometers (the elements that react to incoming thermal infrared radiation), the lower the signal-to-noise ratio [1]. It can reasonably be assumed that the resolution of thermal sensors will not match that of other sensors (visible and near-infrared) in the short and medium term [2].

Our work studies the quality of the results obtained when the resolution of thermal images is increased by fusing them with images from another sensor. This is particularly relevant because thermal images are commonly acquired simultaneously with visible spectrum images. Visual inspection of the study area at the time the thermal data are acquired is essential, since objects in thermal images lack contrast, which makes it difficult to identify the subject of interest. For this reason, almost every thermal sensor is combined with a visible spectrum camera to ensure that the correct frame is captured.

Since the 1970s, a variety of algorithms have been developed in remote sensing to improve the resolution of images from low-resolution sensors using information from higher-resolution images. These procedures are known as pansharpening, a name that reflects their original purpose: enhancing low-resolution multispectral images with the panchromatic images acquired by sensors mounted on the same satellite [3].

Although pansharpening procedures are widely known, the first approaches to merging thermal and RGB images to enhance the resolution of the original thermal image involved applying the intensity-hue-saturation (IHS) pansharpening algorithm [4,5]. Other authors subsequently conducted research combining information from high-resolution visible spectrum images with thermal images obtained from terrestrial sensors [6,7,8].

The industry’s strategies to enhance thermal imaging include the development by the thermal camera maker FLIR of the Ultramax© technology, which combines numerous shots (16 shots per second), each slightly different from the others due to the inevitable movements and vibrations during capture. This solution achieves a twofold improvement in resolution [9].

Another manufacturer, InfraTec, devised a hardware solution based on a fast-rotating wheel that allows four images to be taken in rotation and then fused into the final image [9].

Other approaches include Deep Learning techniques applied to this problem, introducing RGB images as part of the established network architecture [10]. The limitation of these approaches is that they require a prior training phase, and the extrapolation of this training may not be adequate in all situations.

In the field of enhancement and super-resolution of thermal images from satellite sensors, there are alternatives to pansharpening algorithms. Processes known as downscaling of land surface temperature (DLST) aim to obtain high-resolution thermal images from satellite data [11,12].

Apart from hardware solutions, we consider pansharpening algorithms applied to thermal imaging to be the best method to improve image resolution where simultaneous visible spectrum imaging is available.

New pansharpening algorithms, known as hyperpansharpening, are currently available for fusing several high-resolution images with multi- and hyperspectral images [13,14,15,16,17]. These algorithms are not studied in this analysis, as our aim is to relate our results to previous research on improving the resolution of thermal images with pansharpening algorithms [4,5,18,19].

The main aim of our study is to analyse the quality of various pansharpening methods applied to thermal images, based on the composition of a pseudo-multispectral (PS-MS) image from the raw thermal image. When fused with other, much higher-resolution images using pansharpening methods, these PS-MS images provide enhanced thermal images with a higher resolution than the original. This is the first quantitative analysis of pansharpening applied to UAV thermal images, and it provides a far more objective criterion for selecting the method to be used when processing this type of image.

In our work we have studied over ten pansharpening algorithms used in satellite image pansharpening, from the two main families, in order to determine their possibilities, performance and results when applied to thermal images. We apply our study to UAVs, where the resolution and close-range geometry of these devices substantially modify the results, and where images from a range of sensors need to be fused. This research confirms the performance of pansharpening algorithms and assesses the final products by means of numerical image quality indices. Prior research on thermal image pansharpening did not measure performance with comparable numerical parameters; as its findings were based merely on visual observation, the quality of further processes and analyses using these enhanced images could not be ensured.

The rest of this manuscript is organized as follows. Section 2 introduces the pansharpening algorithms tested, the quality metrics, the sample data and the testing methodology, which form the basis of the proposed quantitative assessment method. Section 3 presents the quantitative quality results obtained for the selected algorithms. Section 4 discusses these results and their implications, and Section 5 concludes the work.

2. Materials and Methods

Multispectral images are composed of spectral bands that represent different parts of the electromagnetic spectrum. The typical bands in these images correspond to “colours” from the visible spectrum: red, green, and blue. Other common bands in multispectral imaging cover separate parts of the infrared spectrum, such as near-infrared (NIR) or short-wavelength infrared (SWIR). The long-wave infrared (LWIR) part of the spectrum corresponds to thermal imaging. Other bands commonly found in multispectral imaging come from the ultraviolet spectrum.

In summary, we can define a multispectral image as the compound of multiple images (usually between 3 and 15) corresponding to different parts of the spectrum or “colours”.

Thermal images are usually processed using various masks or colour charts to form a false colour image. This aids the visual analysis and makes it easier for users to interpret. The colour chart most commonly used in these images shows lower temperatures in cold colours such as blue and violet, and higher temperatures in colours like yellow, orange and red. Although this is merely an artificial representation of the value of the raw grayscale image, it helps us form our pseudo-multispectral image (PS-MS).

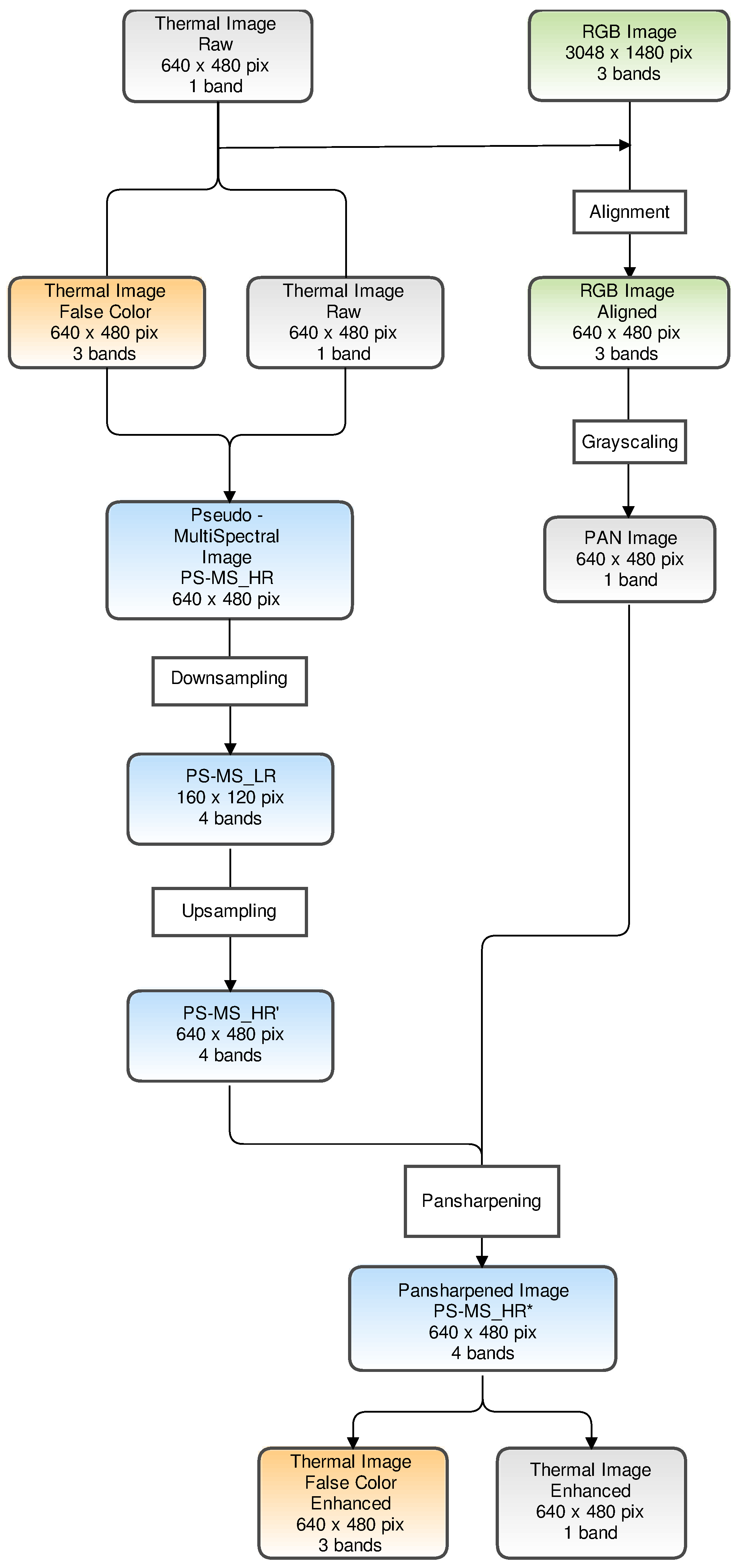

Our PS-MS image is composed of four bands: three bands (red, green and blue) from the false colour image and the band corresponding to the original thermal image in grayscale. To clarify our assessment methodology, Figure 1 shows the workflow we followed, from the raw thermal image to the pansharpened final products.

Figure 1.

Proposed workflow for the pansharpening assessment methodology of thermal and RGB images with pseudo-multispectral image composition, and the down- and upsampling resolution steps.
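The PS-MS composition described above can be sketched as follows, assuming the raw thermal frame is a NumPy array. The palette in the hypothetical `false_colour` function is a simple linear blue-to-red ramp chosen only for illustration; the colour chart actually used to render the thermal images is not specified here and would differ.

```python
import numpy as np

def false_colour(thermal: np.ndarray) -> np.ndarray:
    """Map a single-band thermal image to an (H, W, 3) false colour image.

    Hypothetical palette: cold -> blue, intermediate -> green, hot -> red.
    """
    t = thermal.astype(float)
    span = t.max() - t.min()
    # Normalise to [0, 1]; a constant image maps to 0 (all blue).
    norm = (t - t.min()) / span if span > 0 else np.zeros_like(t)
    red = norm
    green = 1.0 - np.abs(2.0 * norm - 1.0)
    blue = 1.0 - norm
    return np.dstack([red, green, blue]) * 255.0

def compose_ps_ms(thermal: np.ndarray) -> np.ndarray:
    """Stack three false colour bands plus the raw grayscale band (4 bands)."""
    return np.dstack([false_colour(thermal), thermal.astype(float)])
```

The fourth band keeps the raw values untouched, which is what later allows the grayscale band to be evaluated independently of the colour mask.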

To verify the performance of the various pansharpening algorithms, we started by simulating a low-resolution PS-MS image (PS-MS_LR), as if it had been captured by a lower-resolution sensor (160 × 120 pixels). This is done by applying a Gaussian pyramid algorithm, with ratio = 4 and = 4/3 (downsampling) [20].
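A minimal sketch of the downsampling step, assuming the PS-MS image is an (H, W, B) NumPy array and that a factor of 4 is reached with two halving pyramid levels. The 5-tap binomial kernel is an assumption (a common Gaussian approximation for pyramids); the exact filter parameters of the paper are not reproduced here.

```python
import numpy as np

# Assumed 5-tap binomial kernel approximating a Gaussian.
KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def blur(band: np.ndarray) -> np.ndarray:
    """Separable Gaussian-like smoothing with reflected borders."""
    h, w = band.shape
    padded = np.pad(band, 2, mode="reflect")
    # Horizontal pass: shape (h + 4, w).
    horiz = sum(KERNEL[k] * padded[:, k:k + w] for k in range(5))
    # Vertical pass: shape (h, w).
    return sum(KERNEL[k] * horiz[k:k + h, :] for k in range(5))

def pyr_down(band: np.ndarray) -> np.ndarray:
    """One pyramid level: smooth, then keep every second row and column."""
    return blur(band)[::2, ::2]

def downsample(image: np.ndarray, ratio: int = 4) -> np.ndarray:
    """Reduce an (H, W, B) image by `ratio` (a power of two), band by band."""
    if ratio < 1 or ratio & (ratio - 1):
        raise ValueError("ratio must be a power of two")
    out = image.astype(float)
    for _ in range(ratio.bit_length() - 1):
        out = np.stack([pyr_down(out[..., b]) for b in range(out.shape[-1])], axis=-1)
    return out
```

Applied to a 640 × 480 four-band PS-MS array of shape (480, 640, 4), this yields a (120, 160, 4) PS-MS_LR array. Smoothing before decimation limits aliasing, which plain subsampling would introduce.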

The visible spectrum RGB images must have approximately the same field of view as the raw thermal image. The alignment step consists of identifying common points in both images, calculating an affine transformation from them, and then applying it. Most popular image alignment algorithms are feature-based and rely on keypoint detectors and local invariant descriptors [21]. In this work, we implemented an ORB-based alignment algorithm [22,23] to calculate the parameters that define the affine transformation.
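The affine-estimation part of the alignment can be sketched as a least-squares fit from already-matched point pairs. This is a sketch under the assumption that correspondences are given: in practice they would come from ORB keypoint matching (in OpenCV, for example, `cv2.ORB_create` with a Hamming-distance matcher, and `cv2.estimateAffine2D` to add RANSAC outlier rejection). The function names below are hypothetical and perform no outlier rejection.

```python
import numpy as np

def estimate_affine(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares 2x3 affine matrix M such that dst ≈ M @ [x, y, 1]^T."""
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or src.shape[0] < 3:
        raise ValueError("need at least three matched (x, y) point pairs")
    ones = np.ones((src.shape[0], 1))
    design = np.hstack([src, ones])            # (N, 3) homogeneous coordinates
    # Solve design @ M.T ≈ dst in the least-squares sense.
    m_t, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return m_t.T                               # (2, 3)

def apply_affine(m: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Transform (N, 2) points with a 2x3 affine matrix."""
    return pts @ m[:, :2].T + m[:, 2]
```

Six unknowns need at least three non-collinear pairs; using many ORB matches over-determines the system, so the least-squares fit averages out small localisation errors.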

The thermal and visible spectrum images are now aligned. The next step is to combine the three RGB bands into a single grayscale band (grayscaling step). This is the panchromatic (PAN) image required by every pansharpening algorithm [16]. The PAN image simulates the image that a single sensor with a spectral range from blue to red (400–700 nm) would capture. As we are not using a high-resolution multispectral image, we do not analyse hyperpansharpening algorithms.
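The grayscaling step can be sketched as a weighted sum of the RGB bands. The weights are an assumption (ITU-R BT.601 luma coefficients); the text does not state which weights were used, and a plain band average would be an equally plausible choice.

```python
import numpy as np

# Assumption: ITU-R BT.601 luma weights; they sum to 1, so intensities are preserved.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_pan(rgb: np.ndarray) -> np.ndarray:
    """Collapse an aligned (H, W, 3) RGB image into a single-band PAN image."""
    return rgb.astype(float) @ LUMA_WEIGHTS
```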

The PAN image in our work has a resolution of 640 × 480 pixels (the original was 3048 × 1480 pixels). This will help us in later steps, as our aim is to analyse the pansharpening of the simulated low-resolution pseudo-multispectral image (160 × 120 pixels) and compare the final product with the original pseudo-multispectral image, with a resolution of 640 × 480 pixels.

Before applying any of the pansharpening algorithms analysed, the low-resolution image must be converted to the resolution of the panchromatic image, since the sizes of the low-resolution pseudo-multispectral image (PS-MS_LR) and the panchromatic image must match. This is achieved by nearest-neighbour upsampling, which yields the PS-MS_HR’ image (upsampling step).
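Nearest-neighbour upsampling by an integer factor simply replicates each low-resolution pixel into a block. A minimal sketch, assuming an (H, W, B) NumPy array and the factor of 4 used in this work:

```python
import numpy as np

def upsample_nearest(image: np.ndarray, ratio: int = 4) -> np.ndarray:
    """Nearest-neighbour upsampling: each pixel becomes a ratio x ratio block."""
    return np.repeat(np.repeat(image, ratio, axis=0), ratio, axis=1)
```

A (120, 160, 4) PS-MS_LR array becomes a (480, 640, 4) PS-MS_HR’ array. No new detail is created, so any sharpness in the final product must come from the PAN image.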

We can now apply all the selected pansharpening algorithms to obtain the enhanced resolution image PS-MS_HR*, formed by four bands: three RGB false colour bands and one thermal band (Figure 1). For further analysis, we split the final pansharpened image PS-MS_HR* into two images: one false colour and one thermal image.

2.1. Pansharpening Algorithms

Pansharpening algorithms belong to the image fusion branch of computer imaging, and their purpose is to enhance low-resolution images using images from another sensor with a higher resolution. It should be noted that both images must show the same object and have the same field of view. Two well-defined families of pansharpening algorithms are described in the scientific literature, mainly differentiated by whether their approach to the problem is spatial or spectral.

Algorithms known as COMPONENT SUBSTITUTION (CS) transform the low-resolution (LR) image into another colour space in which spatial and spectral information are separated. The spatial component is then replaced by the information from the high-resolution (HR) image, and the process ends with the inverse colour space transformation. CS algorithms are global, as they act uniformly over the entire image [24].
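The CS principle can be sketched with the additive, fast-IHS-style formulation generalised to B bands, in which the forward transform, substitution and inverse transform collapse into a single detail-injection step. This is a sketch under stated assumptions (intensity taken as the plain band average, PAN matched to it in mean and standard deviation); it is not the toolbox implementation by Vivone et al. [37], and other CS methods such as PCA, GS, BDSD or PRACS compute the intensity component and injection gains differently.

```python
import numpy as np

def fihs_fusion(ms_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Additive component substitution in the style of fast IHS.

    ms_up: (H, W, B) upsampled low-resolution image (e.g. PS-MS_HR').
    pan:   (H, W) panchromatic image of the same size.
    """
    ms_up = ms_up.astype(float)
    intensity = ms_up.mean(axis=2)             # assumed intensity component
    pan = pan.astype(float)
    pan_std = pan.std()
    # Match PAN mean and standard deviation to the intensity component.
    scale = intensity.std() / pan_std if pan_std > 0 else 0.0
    pan_matched = (pan - pan.mean()) * scale + intensity.mean()
    # Substituting intensity by PAN is equivalent to adding (PAN - I) to every band.
    return ms_up + (pan_matched - intensity)[..., None]
```

If the PAN image carries no information beyond the intensity component, the fused image equals the input, which is a quick sanity check for any CS implementation.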

MULTIRESOLUTION ANALYSIS (MRA) methods use linear space-invariant digital filtering of the HR image to extract the spatial details to be added to the LR bands [25].

MRA-based techniques essentially split the spatial information of the LR bands and the HR image into a series of bandpass spatial frequency channels. The high-frequency channels of the HR image are then inserted into the corresponding channels of the interpolated LR bands [25].
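The MRA principle can be sketched with its two simplest members, HPF (additive injection) and SFIM (multiplicative modulation), using a single 5 × 5 box low-pass filter, the size given for HPF in the list of algorithms. This is an illustrative sketch with hypothetical function names: toolbox implementations may also equalise the PAN image to each band, and the MTF-GLP variants replace the box filter with MTF-matched Gaussian filters within a pyramid.

```python
import numpy as np

def box_filter(band: np.ndarray, size: int = 5) -> np.ndarray:
    """Mean filter of odd size x size with reflected borders (low-pass)."""
    r = size // 2
    h, w = band.shape
    padded = np.pad(band.astype(float), r, mode="reflect")
    total = sum(padded[i:i + h, j:j + w] for i in range(size) for j in range(size))
    return total / size ** 2

def hpf_fusion(ms_up: np.ndarray, pan: np.ndarray, size: int = 5) -> np.ndarray:
    """HPF: add the high-frequency PAN details, PAN - lowpass(PAN), to every band."""
    pan = pan.astype(float)
    details = pan - box_filter(pan, size)
    return ms_up.astype(float) + details[..., None]

def sfim_fusion(ms_up: np.ndarray, pan: np.ndarray, size: int = 5,
                eps: float = 1e-6) -> np.ndarray:
    """SFIM: modulate every band by the ratio PAN / lowpass(PAN)."""
    pan = pan.astype(float)
    ratio = pan / (box_filter(pan, size) + eps)   # eps avoids division by zero
    return ms_up.astype(float) * ratio[..., None]
```

Unlike the CS sketch, only the high-frequency part of the PAN image reaches the output, which is why MRA methods tend to preserve the spectral content of the LR bands.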

Our work focuses on the following algorithms from among all the pansharpening methods:

- IHS: Fast Intensity-Hue-Saturation (FIHS) image fusion [26].

- PCA: Principal Component Analysis [3].

- BDSD: Band-Dependent Spatial-Detail with local parameter estimation [27].

- GS: Gram Schmidt (Mode 1) [28].

- PRACS: Partial Replacement Adaptive Component Substitution [29].

- HPF: High-Pass Filtering with 5 × 5 box filter for 1:4 fusion [3].

- SFIM: Smoothing Filter-based Intensity Modulation [30,31].

- INDUSION: Decimated Wavelet Transform (DWT) using an additive injection model [32].

- MTF-GLP: Generalized Laplacian Pyramid (GLP) [33] with Modulation Transfer Function (MTF) matched filter [34] with unitary injection model.

- MTF-GLP-HPM: GLP with MTF-matched filter [34] and multiplicative injection model [35].

- MTF-GLP-HPM-PP: GLP with MTF-matched filter [34], multiplicative injection model and post-processing [36].

- MTF-GLP-ECB: MTF-GLP with Enhanced Context-Based model (ECB) algorithm [34].

Algorithms IHS, PCA, GS, BDSD, and PRACS belong to the CS category, and we selected HPF, SFIM, INDUSION and the different MTF variations from the group of MRA algorithms. All these algorithms have been computed using a MATLAB library distributed by Vivone et al. [37].

After establishing the scope of our study, we then define the characteristics to be met by the final products to ensure an adequate quantitative assessment. These properties are defined by Wald’s protocol [38].

2.2. Wald’s Protocol

Before proceeding, the images resulting from the pansharpening methods must be evaluated in terms of quantitative quality indices, as a visual inspection of the result is insufficient to determine their suitability.

The research community accepts Wald’s protocol [38,39] as establishing, where possible, the essential properties of the products of image fusion algorithms. As expressed by Aiazzi et al. [25], these properties are as follows:

Property 1.

Consistency: any fused image Â, once degraded to its original resolution, should be as identical as possible to the original image A.

Property 2.

Synthesis: any image Â fused by means of a high-resolution (HR) image should be as identical as possible to the ideal image that the corresponding sensor, if it existed, would observe at the resolution of the HR image.

Property 3.

The multispectral vector of images Â fused by means of a high-resolution (HR) image should be as identical as possible to the multispectral vector of the ideal images that the corresponding sensor, if it existed, would observe at the spatial resolution of the HR image.

As the original image is available in our research, we can assess compliance with Properties 2 and 3 of Wald’s protocol.

The quality of the final products of fusion imaging must then be assured. Visual checking may be necessary, but an objective numerical comparison is compulsory. Various image fusion quality indices have been proposed to assess the quality of the fusion image procedures.

2.3. Quality Metrics

Image fusion quality indices measure spatial and spectral distortion using different statistical expressions. Each index examines a particular aspect: some focus on the quality of the spatial reconstruction, whereas others are designed to evaluate the spectral variation.

Some terms must be defined in order to explain the indices involved. Let us define the high-resolution pseudo-multispectral image PS-MS_HR as $X \in \mathbb{R}^{B \times P}$, with $B$ bands and $P$ pixels: $X = [X_1, \ldots, X_B]^{T} = [\mathbf{x}_1, \ldots, \mathbf{x}_P]$, where $X_i \in \mathbb{R}^{P}$ is the $i$th band and $\mathbf{x}_j \in \mathbb{R}^{B}$ is the feature vector of the $j$th pixel. $\hat{X}$ is the image resulting from the pansharpening method (PS-MS_HR*). All the indices have been computed using the SEWAR Python package [40].

2.3.1. Root Mean Squared Error (RMSE)

The root mean squared error (RMSE) between the two images quantifies the deviation introduced by the pansharpening process [41]:

$$\mathrm{RMSE}(X, \hat{X}) = \sqrt{\frac{\lVert X - \hat{X} \rVert_F^{2}}{B\,P}}$$

RMSE expresses both the spectral and spatial distortion of the enhanced image. The optimal value of RMSE is zero.

RMSE can be misleading: images that are clearly different to a human observer may have an identical RMSE. Although RMSE may not be the most specific statistic for expressing quality, it complements more complex indices such as SAM and ERGAS in building an overall picture [42].
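A minimal NumPy sketch of the RMSE definition, assuming reference and fused images are arrays of the same shape (for example (H, W, B), so that B · P is the total number of values). The usage lines illustrate the caveat above: two very different distortions yield the same RMSE.

```python
import numpy as np

def rmse(ref: np.ndarray, fused: np.ndarray) -> float:
    """RMSE over all bands and pixels: sqrt(||X - X_hat||_F^2 / (B * P))."""
    diff = ref.astype(float) - fused.astype(float)
    return float(np.sqrt(np.mean(diff ** 2)))

# Same RMSE, very different images:
ref = np.zeros((10, 10))
offset = ref + 3.0          # every pixel off by 3
spike = ref.copy()
spike[0, 0] = 30.0          # a single pixel off by 30
# rmse(ref, offset) and rmse(ref, spike) are both 3.0
```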

2.3.2. Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS)

A more advanced image quality index than RMSE was proposed by Ranchin and Wald [39]. ERGAS is a global statistic expressing the quality of the enhanced resolution image. ERGAS measures the transition between spatial and spectral information [43].

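$$\mathrm{ERGAS}(X, \hat{X}) = 100\, d \sqrt{\frac{1}{B} \sum_{i=1}^{B} \left( \frac{\mathrm{RMSE}(X_i, \hat{X}_i)}{\mu_i} \right)^{2}}$$

where $d$ is the resolution ratio between the LR image and the HR image (the two differ by a factor of 4 in this work) and $\mu_i$ is the mean of the $i$th band of $X$. ERGAS is the band-wise normalized root-mean-squared error multiplied by the GSD ratio in order to take the difficulty of the fusion problem into account [44]. The optimal value of ERGAS is 0.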
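A minimal sketch of ERGAS for (H, W, B) arrays. The default `d = 0.25` is an assumption (the HR-to-LR pixel-size ratio for a factor of 4); conventions for this term differ between implementations, so the value used by any given library should be checked before comparing numbers.

```python
import numpy as np

def ergas(ref: np.ndarray, fused: np.ndarray, d: float = 0.25) -> float:
    """ERGAS of (H, W, B) images; d is an assumed HR/LR pixel-size ratio."""
    ref = ref.astype(float)
    fused = fused.astype(float)
    band_rmse = np.sqrt(np.mean((ref - fused) ** 2, axis=(0, 1)))  # RMSE(X_i, X_hat_i)
    band_mean = ref.mean(axis=(0, 1))                               # mu_i
    return float(100.0 * d * np.sqrt(np.mean((band_rmse / band_mean) ** 2)))
```

Normalising each band's RMSE by its mean makes bands with different radiometric ranges comparable before they are averaged.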

2.3.3. Spectral Angle Mapper (SAM)

Another quality index, this time focused on spectral information, is the Spectral Angle Mapper (SAM) [44]. SAM measures spectral distortion as the angle formed by the spectral vectors of the two images at each pixel:

$$\mathrm{SAM}(\mathbf{x}_j, \hat{\mathbf{x}}_j) = \arccos\left( \frac{\langle \mathbf{x}_j, \hat{\mathbf{x}}_j \rangle}{\lVert \mathbf{x}_j \rVert_2 \, \lVert \hat{\mathbf{x}}_j \rVert_2} \right)$$

This equation determines the similarity between two spectra by calculating the angle between them, treating them as vectors in a space whose dimensionality equals the number of bands [45]. The optimal value of SAM is zero. Here we express SAM as the average over all pixels in the image, in radians.
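A minimal sketch of the pixel-averaged SAM in radians, assuming (H, W, B) arrays. The cosine is clipped to [−1, 1] to guard against rounding; pixels where either vector is zero give a cosine of 0, i.e. an angle of π/2.

```python
import numpy as np

def sam(ref: np.ndarray, fused: np.ndarray, eps: float = 1e-12) -> float:
    """Mean spectral angle (radians) between per-pixel spectral vectors."""
    x = ref.reshape(-1, ref.shape[-1]).astype(float)     # (P, B)
    y = fused.reshape(-1, fused.shape[-1]).astype(float)
    dot = np.sum(x * y, axis=1)
    norms = np.linalg.norm(x, axis=1) * np.linalg.norm(y, axis=1)
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))
```

Because only the angle matters, SAM ignores a uniform scaling of a pixel's spectrum: it captures changes in spectral shape, not brightness.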

2.3.4. Peak Signal to Noise Ratio (PSNR)

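PSNR describes the quality of the spatial reconstruction in the final images [44] and is defined by the ratio between the maximum power of the signal and the power of the residual errors:

$$\mathrm{PSNR}(X, \hat{X}) = \frac{1}{B} \sum_{i=1}^{B} 10 \log_{10}\left( \frac{\max(X_i)^{2}}{\lVert X_i - \hat{X}_i \rVert_2^{2} / P} \right)$$

where $\max(X_i)$ is the maximum pixel value in the $i$th band of the PS-MS_HR image.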

A higher PSNR value implies a greater quality of the spatial reconstruction in the final image. If the images are identical, PSNR is equal to infinity.
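A minimal sketch of the band-wise PSNR averaged over bands, using the maximum of each reference band as the peak, as in the definition above. Library implementations (including SEWAR [40]) may differ in details such as the peak value; identical images give infinity.

```python
import numpy as np

def psnr(ref: np.ndarray, fused: np.ndarray) -> float:
    """Band-wise PSNR in dB, averaged over bands; peak = max of each reference band."""
    ref = ref.astype(float)
    fused = fused.astype(float)
    mse = np.mean((ref - fused) ** 2, axis=(0, 1))
    peak = ref.max(axis=(0, 1))
    with np.errstate(divide="ignore"):
        per_band = 10.0 * np.log10(peak ** 2 / mse)   # inf where mse == 0
    return float(np.mean(per_band))
```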

2.3.5. Universal Quality Index (UQI)

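UQI estimates the distortion as a combination of three factors: loss of correlation, luminance distortion and contrast distortion [46], as shown in the following equation:

$$Q(x, y) = \frac{4\,\sigma_{xy}\,\mu_x\,\mu_y}{(\sigma_x^{2} + \sigma_y^{2})(\mu_x^{2} + \mu_y^{2})} = \frac{\sigma_{xy}}{\sigma_x \sigma_y} \cdot \frac{2\mu_x \mu_y}{\mu_x^{2} + \mu_y^{2}} \cdot \frac{2\sigma_x \sigma_y}{\sigma_x^{2} + \sigma_y^{2}}$$

where $\mu_x$ and $\mu_y$ are the means of the reference image $x$ and the fused image $y$, $\sigma_x^{2}$ and $\sigma_y^{2}$ are their variances, and $\sigma_{xy}$ is their covariance.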

UQI takes values in the interval [−1, 1], where 1 is the optimal value.
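A minimal sketch of UQI computed globally on each band and averaged over bands. Library implementations commonly compute UQI over sliding windows and average the local values, so this global version is a simplification; it is undefined when both images are constant with zero mean (zero denominator).

```python
import numpy as np

def uqi_band(x: np.ndarray, y: np.ndarray) -> float:
    """Global UQI of two single-band images (no sliding window)."""
    x = x.astype(float).ravel()
    y = y.astype(float).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()                  # population variances
    cov = np.mean((x - mx) * (y - my))         # population covariance
    return float(4.0 * cov * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))

def uqi(ref: np.ndarray, fused: np.ndarray) -> float:
    """Average the band-wise UQI over the last axis of (H, W, B) images."""
    return float(np.mean([uqi_band(ref[..., b], fused[..., b])
                          for b in range(ref.shape[-1])]))
```

Adding a constant offset leaves correlation and contrast untouched but lowers the luminance factor, so the index drops below 1.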

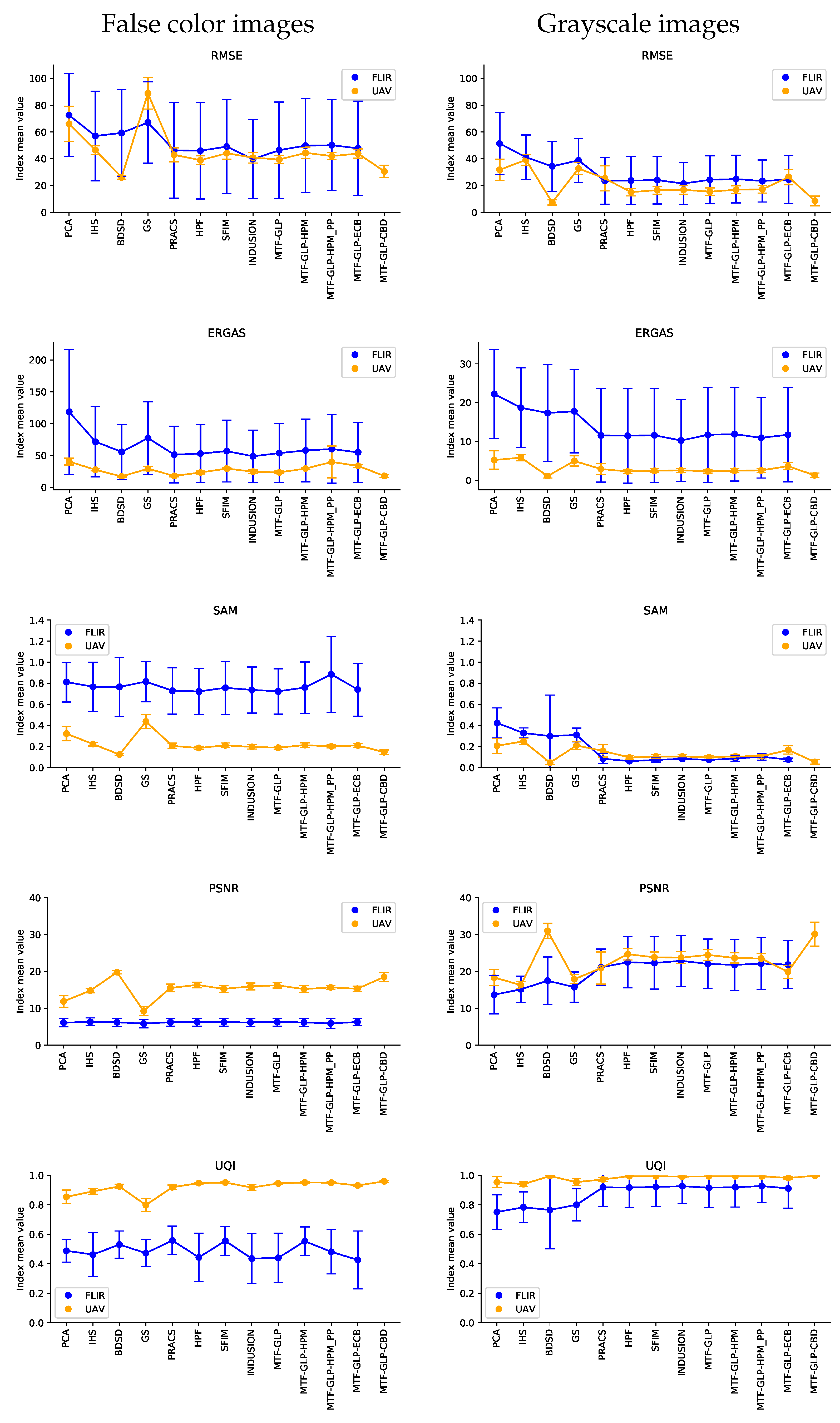

For a more detailed analysis, the quality indices have been computed separately for the false colour image and for the grayscale image corresponding to the fourth band of the PS-MS_HR and PS-MS_HR* images. This allows us to assess the quality of the transformation independently of the colour mask applied.

2.4. Datasets

Two different image datasets were built to test the performance of the pansharpening algorithms on thermal images. We started with the FLIR ADAS dataset to evaluate the thermal quantification. This dataset is provided by the thermal sensor manufacturer FLIR and can be regarded as a reference collection. For that reason, we captured a second dataset, Illescas UAV, to evaluate and contrast the results of the first one, this time focusing specifically on UAVs.

2.4.1. FLIR ADAS Dataset

The FLIR Thermal Starter Dataset [47] was originally designed to supply thermal and RGB images for training and validating object detection neural networks. As it provides simultaneously acquired thermal and RGB images, it is well suited to applying pansharpening methods.

The dataset was acquired with an RGB camera and a thermal camera mounted on a car. It contains 14,452 annotated thermal images: 10,228 images sampled from short videos and 4224 images from a continuous 144 s video. All videos were taken on streets and highways in Santa Barbara, CA, USA, under generally clear-sky conditions during both day and night.

Thermal images were acquired with a FLIR Tau2 (13 mm f/1.0, 45-degree horizontal field of view (HFOV) and a vertical field of view (VFOV) of 37 degrees). RGB images were acquired with a FLIR BlackFly at 1280 × 512 pixels (4–8 mm f/1.4–16 megapixel lens with the field of view (FOV) set to match Tau2). The cameras were 48 ± 2 mm apart in a single enclosure.

As both sensors were mounted on the same structure with different lenses and resolutions, prior alignment is essential [48]. Image alignment (also known as image registration) is the technique of warping one image (or sometimes both) so that the features in the two images line up and both images show the same field. We resolved this by calculating an affine transformation from clearly distinguishable common points in both images. As a result, both images are aligned in preparation for the pansharpening analysis.

Once the performance of the algorithms was confirmed, we obtained our own dataset with the requirements needed for our application, with a focus on aerial surveying.

2.4.2. Illescas UAV Dataset

This second dataset comprises images taken from an unmanned aerial vehicle over an industrial building located in the town of Illescas (Toledo, Spain) on 13 August 2019 (40°8′41″ N, 3°49′12″ W).

The aerial vehicle was equipped with two sensors: a 4K RGB CMOS sensor with a resolution of 3840 × 2160 pixels, and an uncooled VOx microbolometer radiometric thermal infrared sensor with a pixel size of 17 micrometres. The thermal images have 640 × 512 pixels, a spectral band of 7.5 to 13.5 micrometres, and a temperature sensitivity of 50 mK.

As with the FLIR ADAS dataset, an affine transformation must be computed to ensure both images are aligned before further analysis.

3. Results

Table 1, Table 2, Table 3 and Table 4 summarize the quality indices described in Section 2.3, calculated for the FLIR ADAS and Illescas UAV datasets. As stated above, these indices have been computed independently for false colour images and raw grayscale images, to distinguish the real performance from the influence of the false colour table. Bold values indicate the best value in each column.

Table 1.

Quality indices for the False Colour Thermal Pansharpened images of the FLIR ADAS dataset for each pansharpening algorithm tested.

Table 2.

Quality indices for the False Colour Thermal Pansharpened images of the Illescas UAV dataset for each pansharpening algorithm tested.

Table 3.

Quality indices for the Grayscale Thermal Pansharpened images of the FLIR ADAS dataset for each pansharpening algorithm tested.

Table 4.

Quality indices for the Grayscale Thermal Pansharpened images of the Illescas UAV dataset for each pansharpening algorithm tested.

We selected a sample of 12 images from each dataset, processed them with the complete proposed workflow, and computed all the quality indices for the resulting products. The tabulated values are the means over each sample, with their dispersion expressed as the standard deviation.
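The aggregation of per-image index values into the reported mean and dispersion can be sketched as follows. Figure 2 refers to sample standard deviations, so `ddof=1` is assumed; the function name is hypothetical and the values in the test are placeholders, not results from this study.

```python
import numpy as np

def summarize(scores: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Mean and sample standard deviation (ddof=1) of per-image index values."""
    return {name: (float(np.mean(v)), float(np.std(v, ddof=1)))
            for name, v in scores.items()}
```

With 12 images per dataset, the difference between `ddof=1` and `ddof=0` is a factor of about 1.04, small but worth stating when comparing dispersions with other work.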

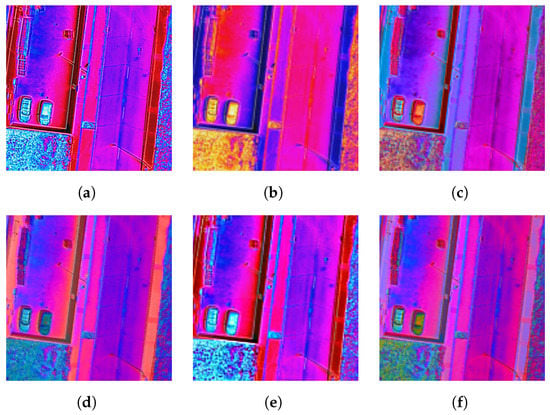

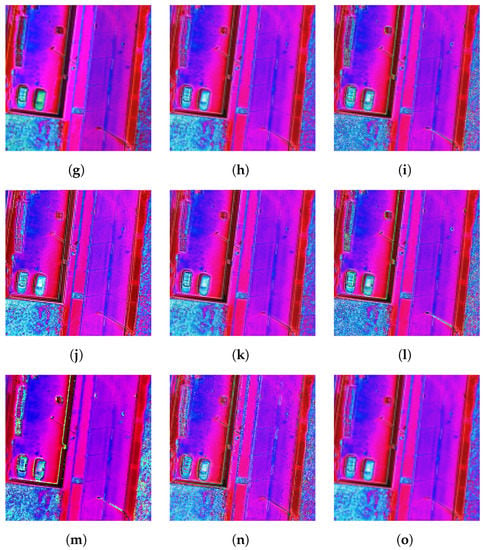

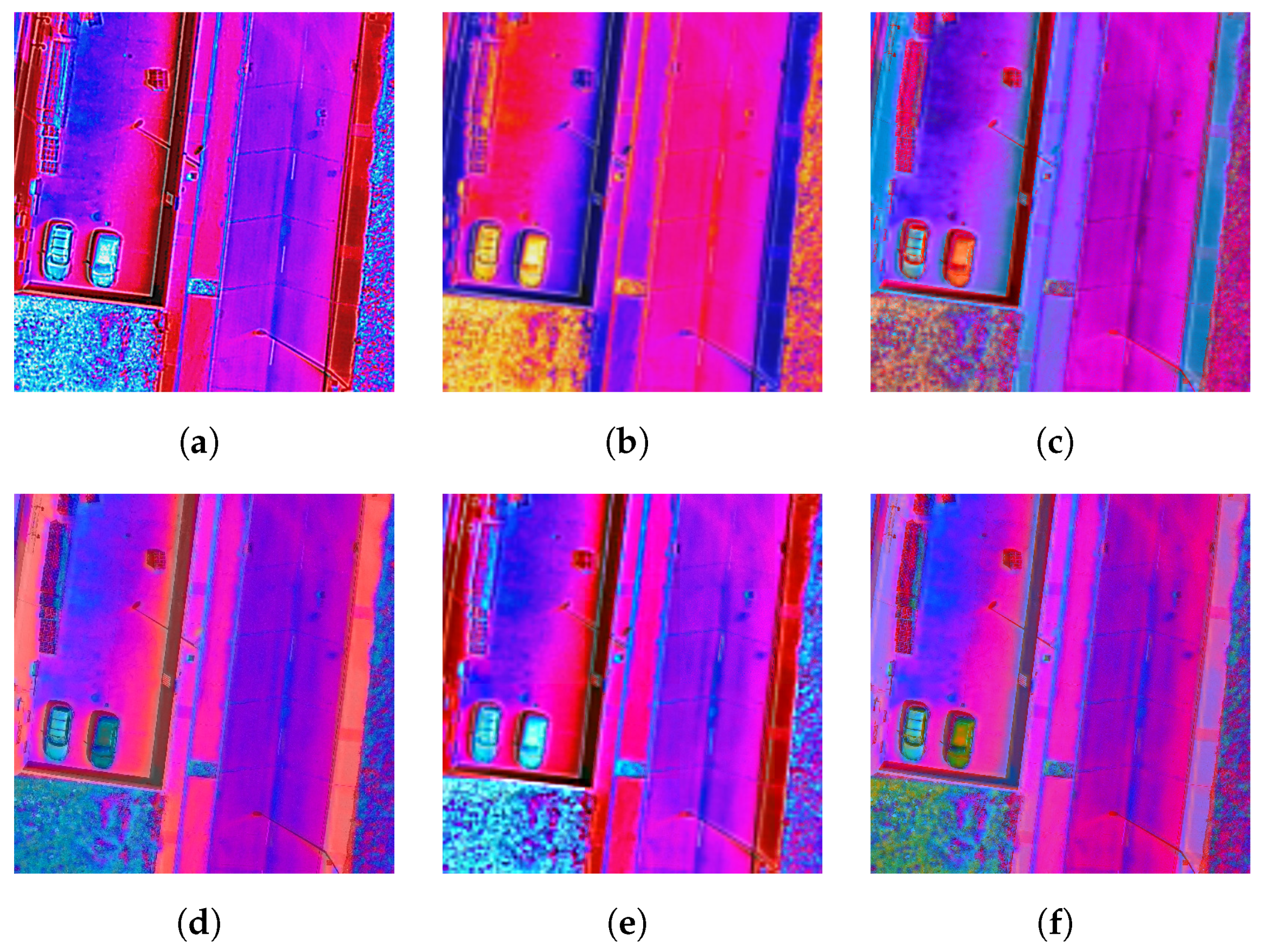

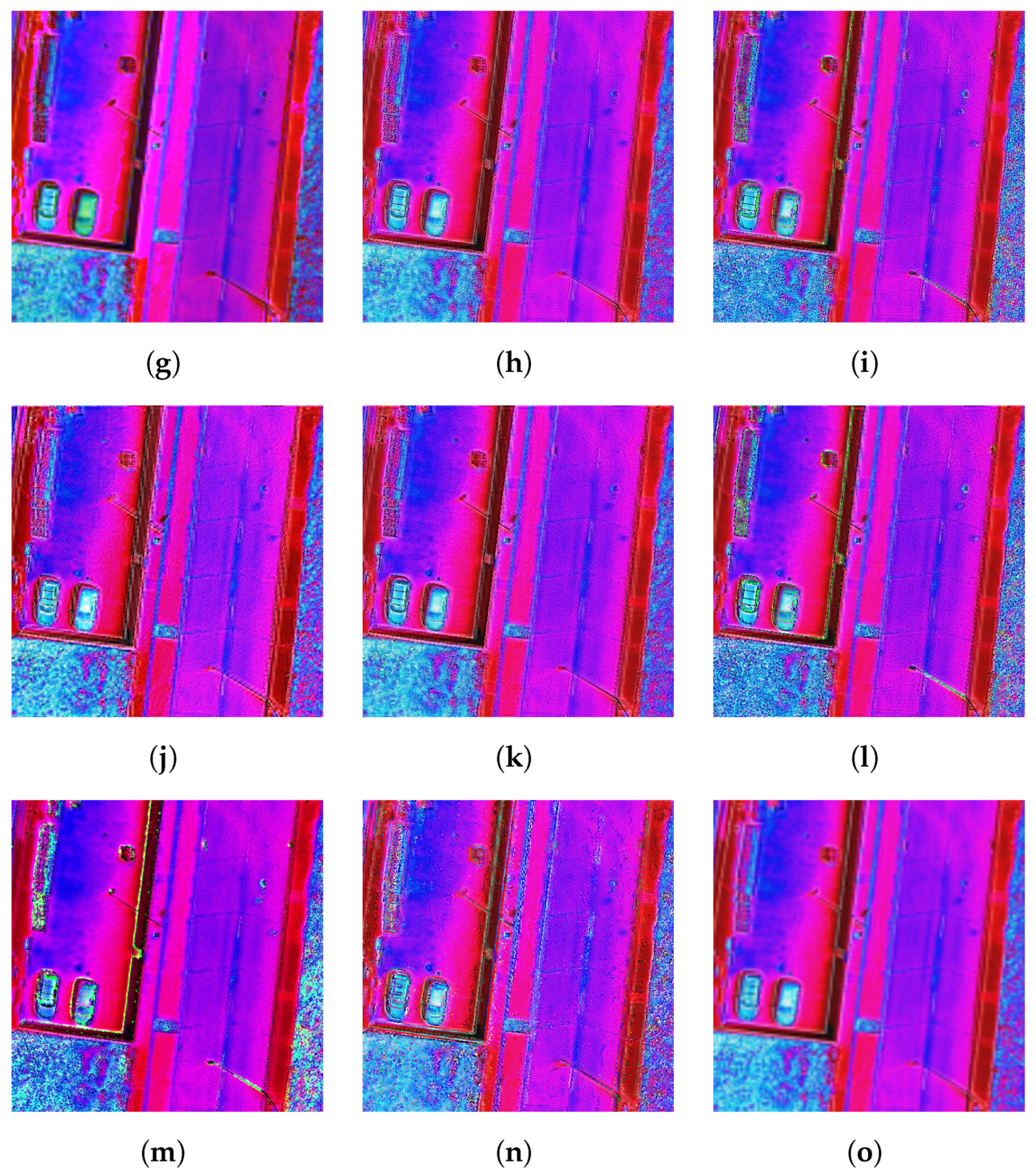

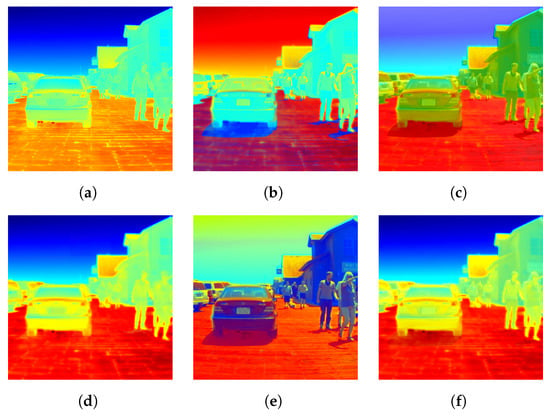

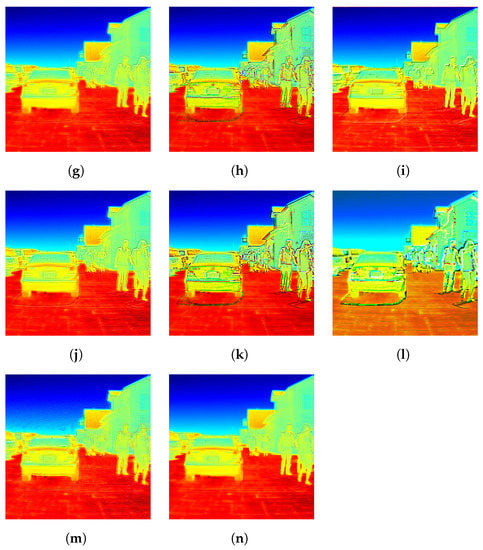

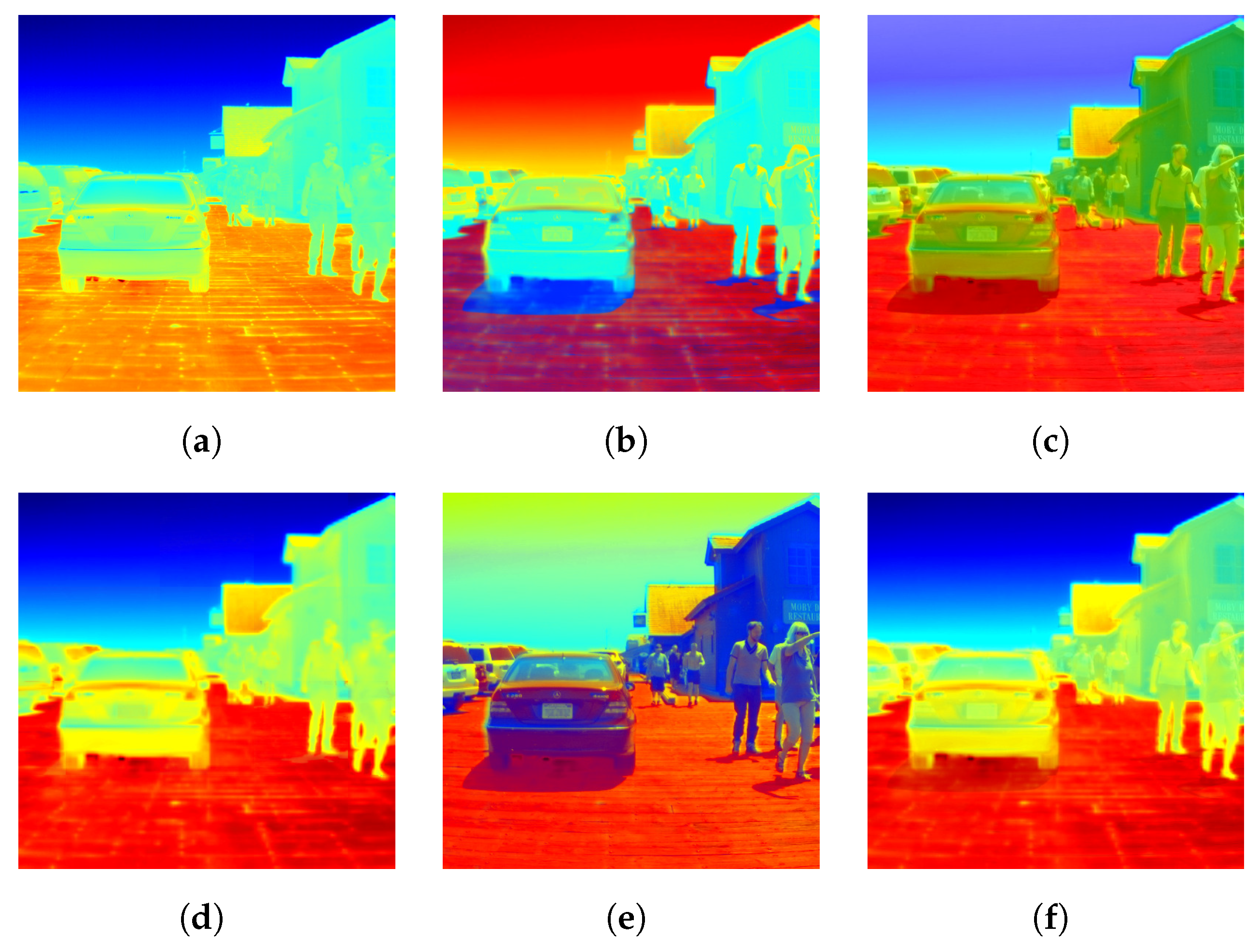

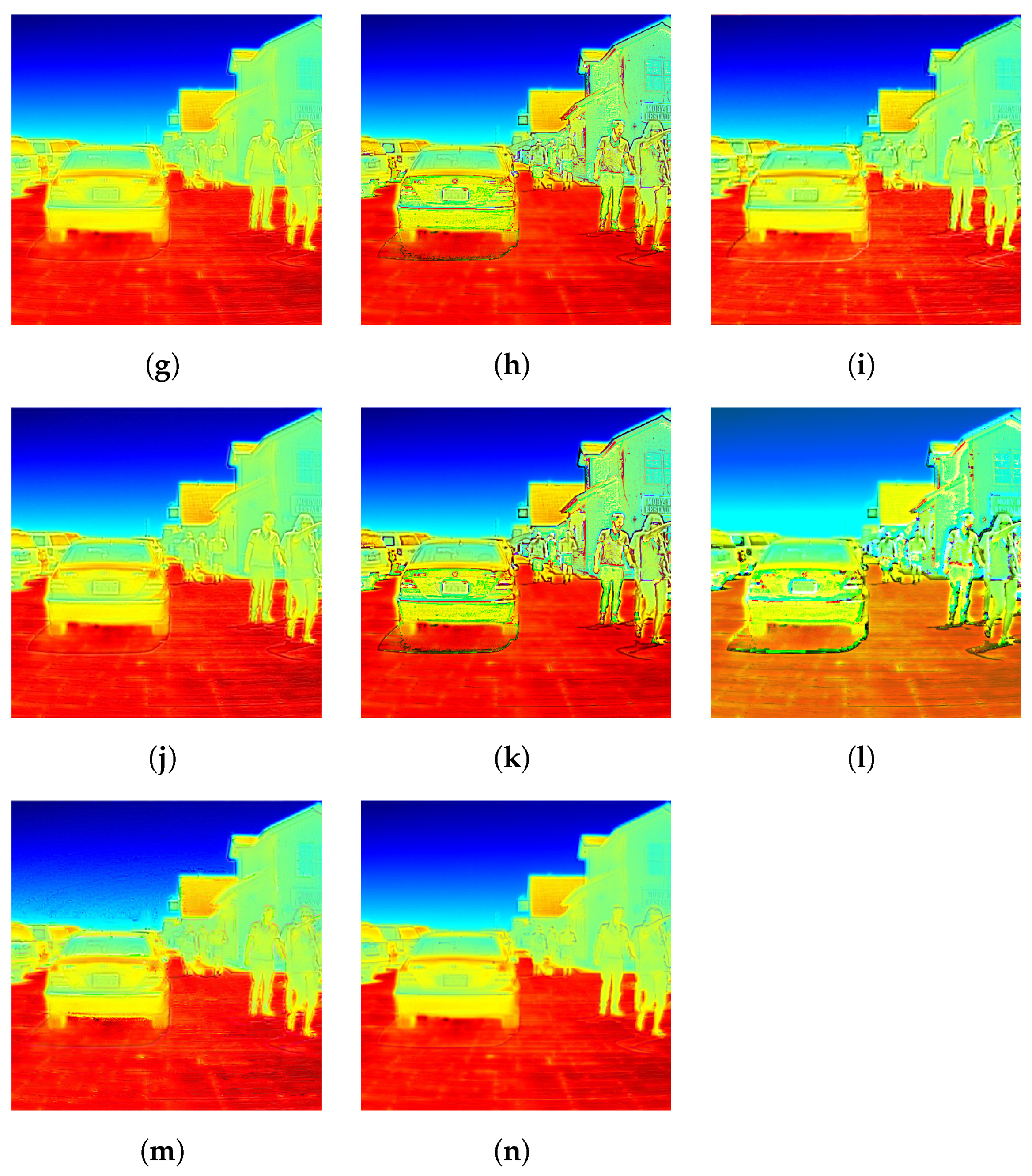

Figure A1, Figure A2, Figure A3 and Figure A4 in Appendix A show, for a sample image from each dataset, the original image, the upsampled image, and the pansharpened images from every algorithm studied. These figures confirm that visual analysis is insufficient to validate the final quality of the image fusion process.

Figure 2 contains a graphic representation of the values of the various indices to aid the interpretation of the results.

Figure 2.

Graphic representation of the computed quality indices, grouped into false colour and grayscale. Dots represent the quality index value and vertical lines show the sample standard deviation. Plots in the ERGAS row are not directly comparable because of their different y-axis scales.

4. Discussion

The FLIR ADAS and Illescas UAV datasets were processed and analysed, with the following results:

- The results for the false colour and grayscale images are quantitatively different. Grayscale images perform better than false colour images, which supports our decision to evaluate the false colour and grayscale image fusion products separately. The RMSE values obtained for the grayscale images are similar to or even lower than those reported in other research in the same field (RMSE ≈ 31) [9]. The final grayscale image should therefore be used in subsequent processes, even when the same or a different false colour table needs to be applied again.

- Apart from certain specific values, the two families of algorithms perform similarly, and minor differences in the way individual algorithms process the data lead to better results. One instance of this is the BDSD algorithm, which performs better than the rest of the CS family. Figure 2 also reveals homogeneous values across the MRA family for all the indices.

- In general, MRA algorithms perform better than CS methods on thermal images, with the exception of the CS algorithm BDSD in the Illescas UAV dataset (Table 4), which achieves the best values in almost all the quality indices (RMSE = 7.400, ERGAS = 1.084, SAM = 0.048, PSNR = 31.014, UQI = 0.995). Haselwimmer et al. [5] suggest the IHS algorithm to fuse thermal and RGB images; our work shows that IHS is not the best choice. Among the CS methods, the BDSD algorithm achieves the best results.

- Radiometrically speaking, there is no single best choice. ERGAS and SAM indices appear similar in both cases, although the algorithms from the MRA family perform slightly better. This agrees with the general behaviour described for these algorithms in the literature [49]. The values obtained in the SAM index (SAM < 1) are even better than those from other works on multi- and hyperspectral data fusion (SAM > 1) [17].

- Spatial reconstruction is better with MRA methods: their PSNR values are higher in both datasets, denoting a greater geometric quality of the spatial details. Again, the BDSD algorithm is the best in terms of spatial reconstruction.

- Regarding the datasets, the UAV dataset obtains better results in all indices, possibly due to the nature of the FLIR ADAS dataset. The variable distances to the objects in that dataset may explain the poorer performance of the pansharpening algorithms, and may also account for the higher dispersion values across the whole FLIR dataset. This could be addressed by decomposing the images into subzones with homogeneous distances and analysing their influence.

- Our work allows the use of thermal sensors with a lower resolution than other types of sensors used simultaneously in the same project, since this method enhances the resolution of the thermal images and homogenises their resolution. One limitation is that it depends on the resolution ratio between visible and thermal spectrum images. Here, a ratio of more than four may lead to unexpected artifacts and to the failure of processes [50].

- Although the results may vary depending on the false colour representation of the thermal information adopted, the validation by the grayscale band highlights the interest of further developments to adjust the parameters of the algorithms to adapt them specifically to infrared thermal images.

5. Conclusions

The use of certain pansharpening algorithms applied to thermal images has been tested in previous research. This work contains a complete review of a number of algorithms, and provides an in-depth study of thermal imaging pansharpening, with a numerical assessment.

We have validated the potential of pansharpening algorithms to enhance the resolution of thermal images with the help of higher-resolution visible spectrum RGB images. Algorithms from the two main pansharpening families have been tested on different datasets, and the quality of the results has been verified. This quantitative analysis allows us to make a critical comparison.

Our focus on UAV imaging suggests a primary application, as UAV platforms typically carry thermal and visible spectrum sensors with quite different resolutions. Aerial platforms fitted with these sensors are already very useful in key areas such as volcanism, the detection of temperature changes as a possible parameter for forecasting future events, and the inspection of industrial electromechanical elements, where they can be key to preventing system malfunctions. A more accurate estimate of the quality of thermal image pansharpening algorithms will make it easier to develop more reliable automatic remote sensing systems.

Author Contributions

All authors have made significant contributions to this manuscript. Conceptualization, J.R., J.F.P. and S.L.-C.M.; methodology, J.R.; software, J.R.; validation, J.R.; formal analysis, J.R., J.F.P. and S.L.-C.M.; investigation, J.R.; field observations: J.R., J.A.d.M. and S.L.-C.M.; resources, J.R., J.F.P. and S.L.-C.M.; data curation, J.R. and S.L.-C.M.; writing–original draft preparation, J.R.; writing–review and editing, J.R., J.F.P., J.A.d.M. and S.L.-C.M.; supervision, J.F.P., J.A.d.M. and S.L.-C.M. All authors have read and agreed to the published version of the manuscript.

Funding

The work of J.F.P., S.L.-C.M. and J.A.d.M. has been partially supported by the Spanish Ministerio de Ciencia, Innovación y Universidades research project DEEP-MAPS (RTI2018-093874-B-100), the MINECO research project AQUARISK (CGL2017-86241-R), the CAM research project LABPA-CM (H2019/HUM-5692), and also by the collaboration agreement ‘Fusión de información con sensores hiper y multiespectrales para el uso en agricultura de precisión’ (Information fusion with hyperspectral and multispectral sensors for use in precision agriculture) of Premio Arce Foundation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We want to thank Vivone et al. [37] for making the MATLAB pansharpening toolbox available to the research community, and the staff of the GESyP Research Group for their dedicated work and support.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

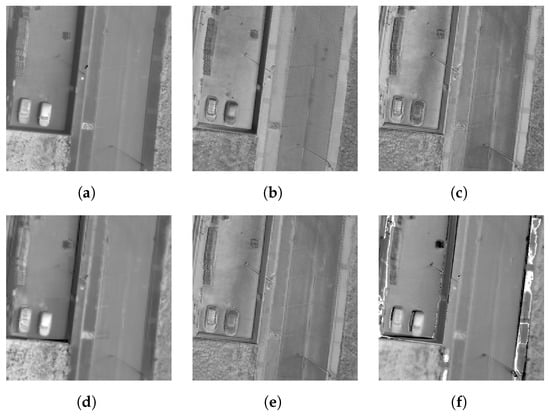

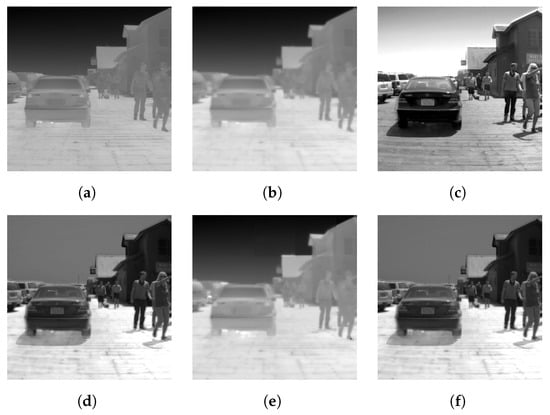

Figure A1.

UAV Illescas dataset RGB: (a) Reference image; (b) Low-resolution upsampled image; (c) PCA; (d) IHS; (e) BDSD; (f) GS; (g) PRACS; (h) HPF; (i) SFIM; (j) INDUSION; (k) MTF-GLP; (l) MTF-GLP-HPM; (m) MTF-GLP-HPM-PP; (n) MTF-GLP-ECB; (o) MTF-GLP-CBD.

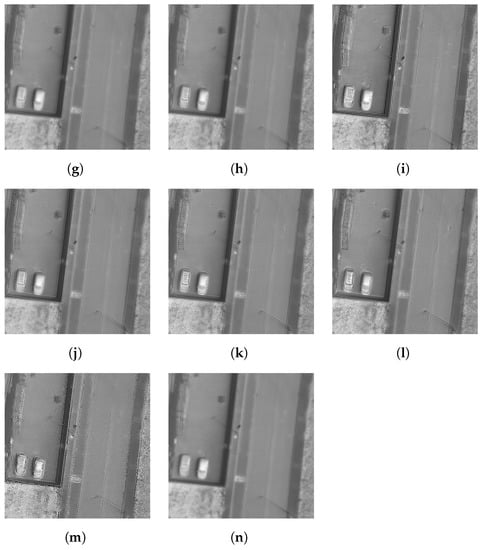

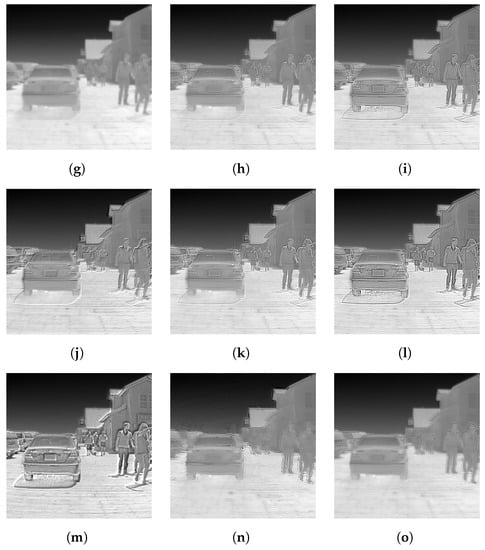

Figure A2.

UAV Illescas dataset grayscale: (a) Reference image; (b) PCA; (c) IHS; (d) BDSD; (e) GS; (f) PRACS; (g) HPF; (h) SFIM; (i) INDUSION; (j) MTF-GLP; (k) MTF-GLP-HPM; (l) MTF-GLP-HPM-PP; (m) MTF-GLP-ECB; (n) MTF-GLP-CBD.

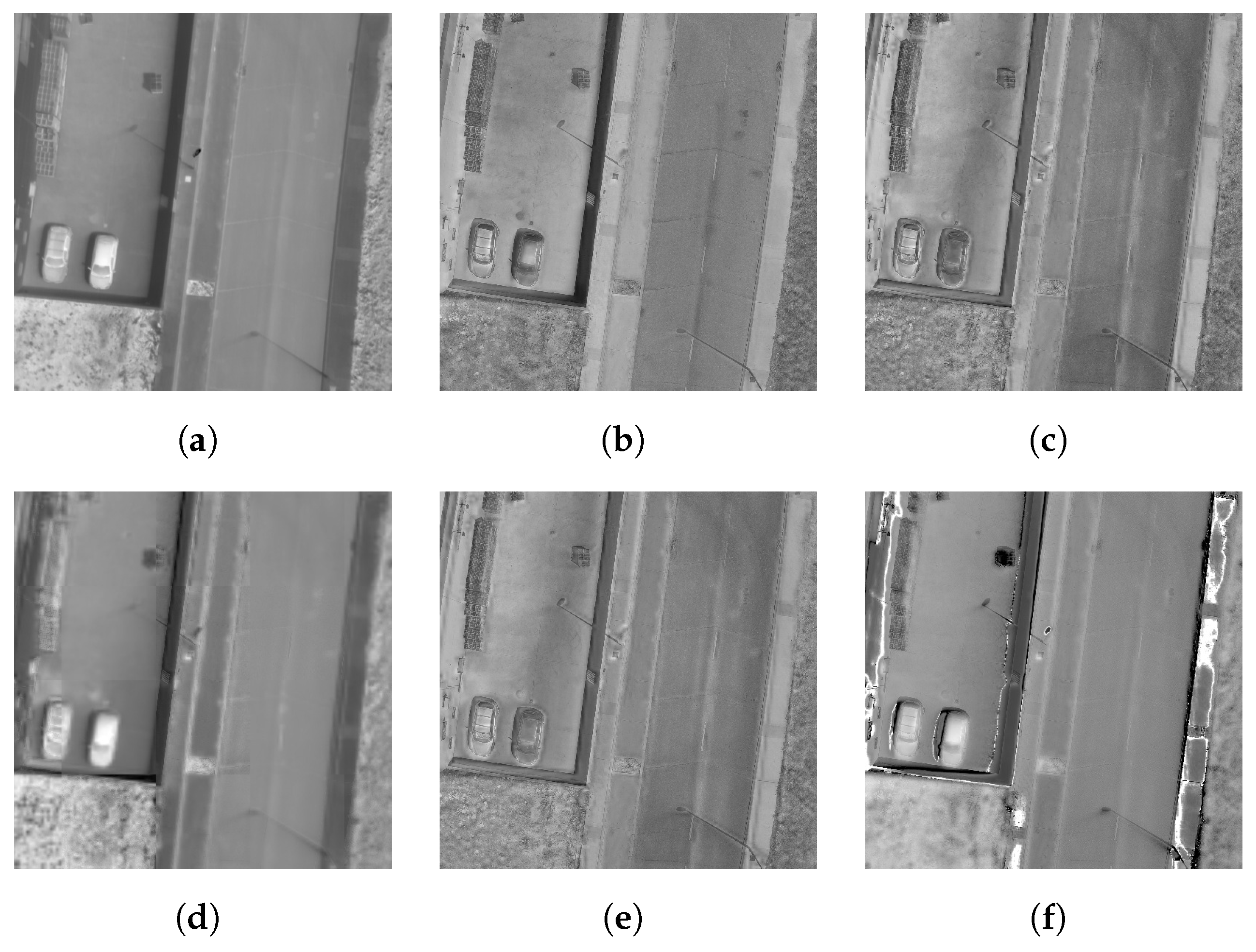

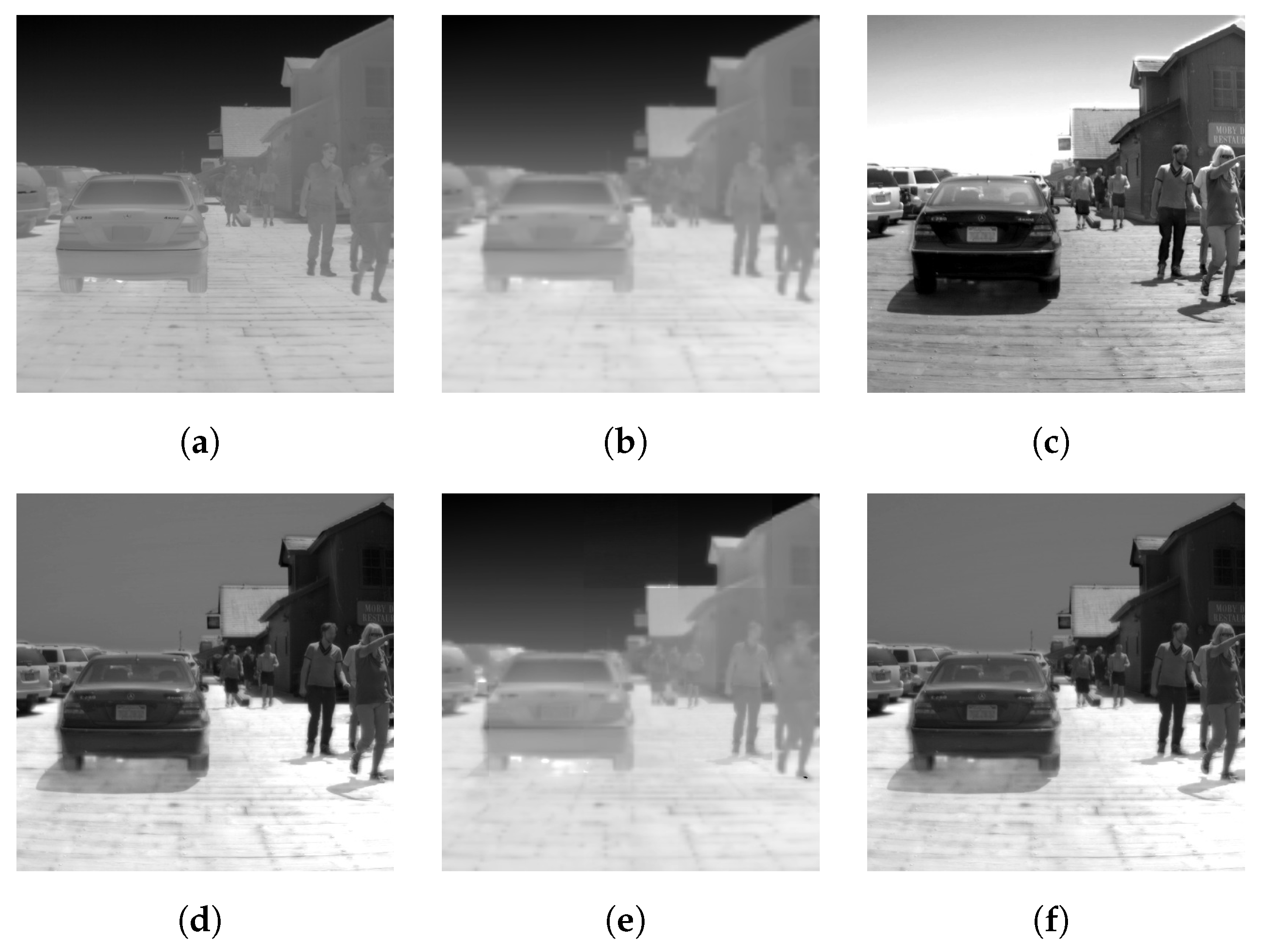

Figure A3.

FLIR ADAS dataset [47] grayscale: (a) Reference image; (b) Low-resolution upsampled image; (c) PCA; (d) IHS; (e) BDSD; (f) GS; (g) PRACS; (h) HPF; (i) SFIM; (j) INDUSION; (k) MTF-GLP; (l) MTF-GLP-HPM; (m) MTF-GLP-HPM-PP; (n) MTF-GLP-ECB; (o) MTF-GLP-CBD.

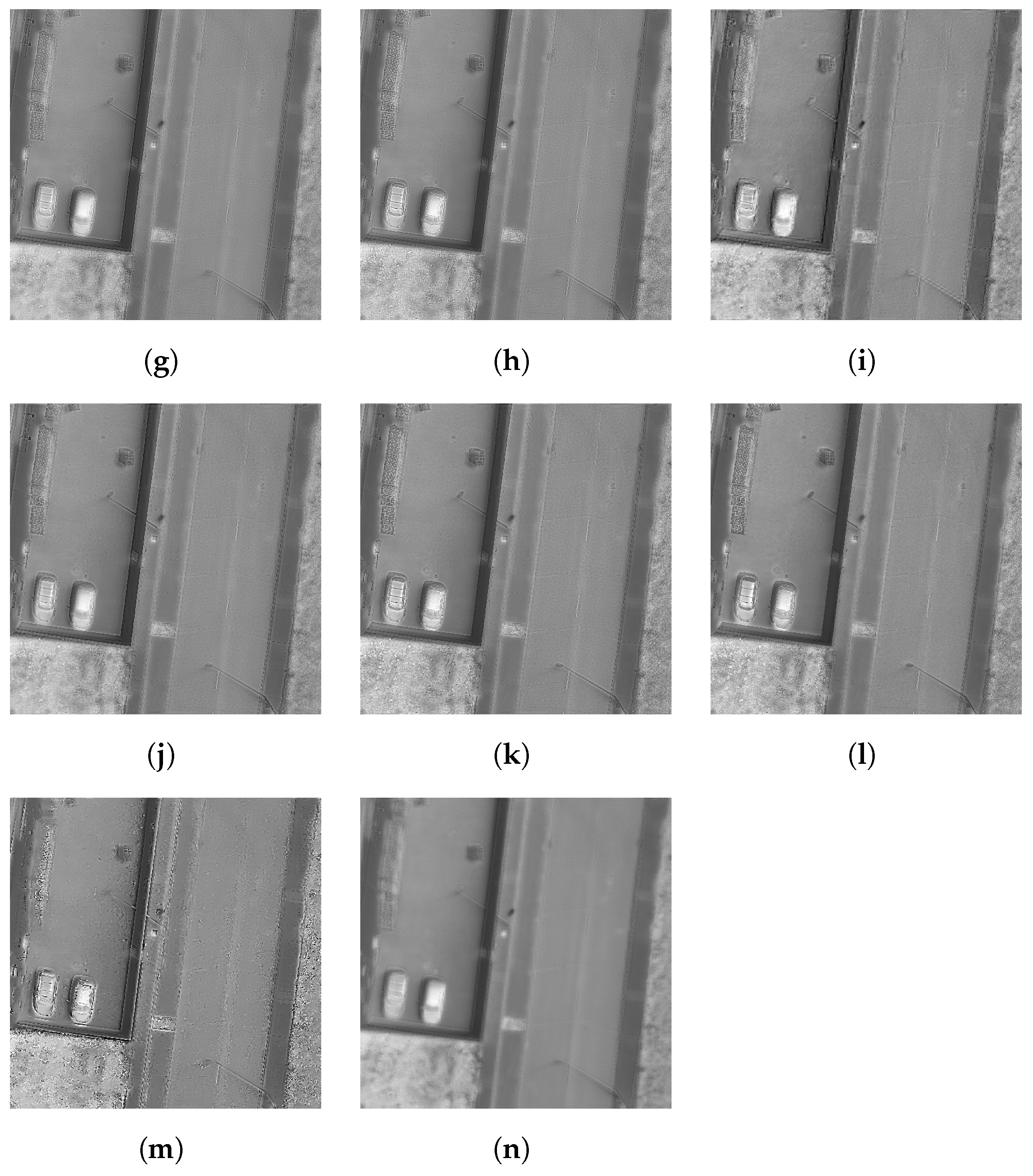

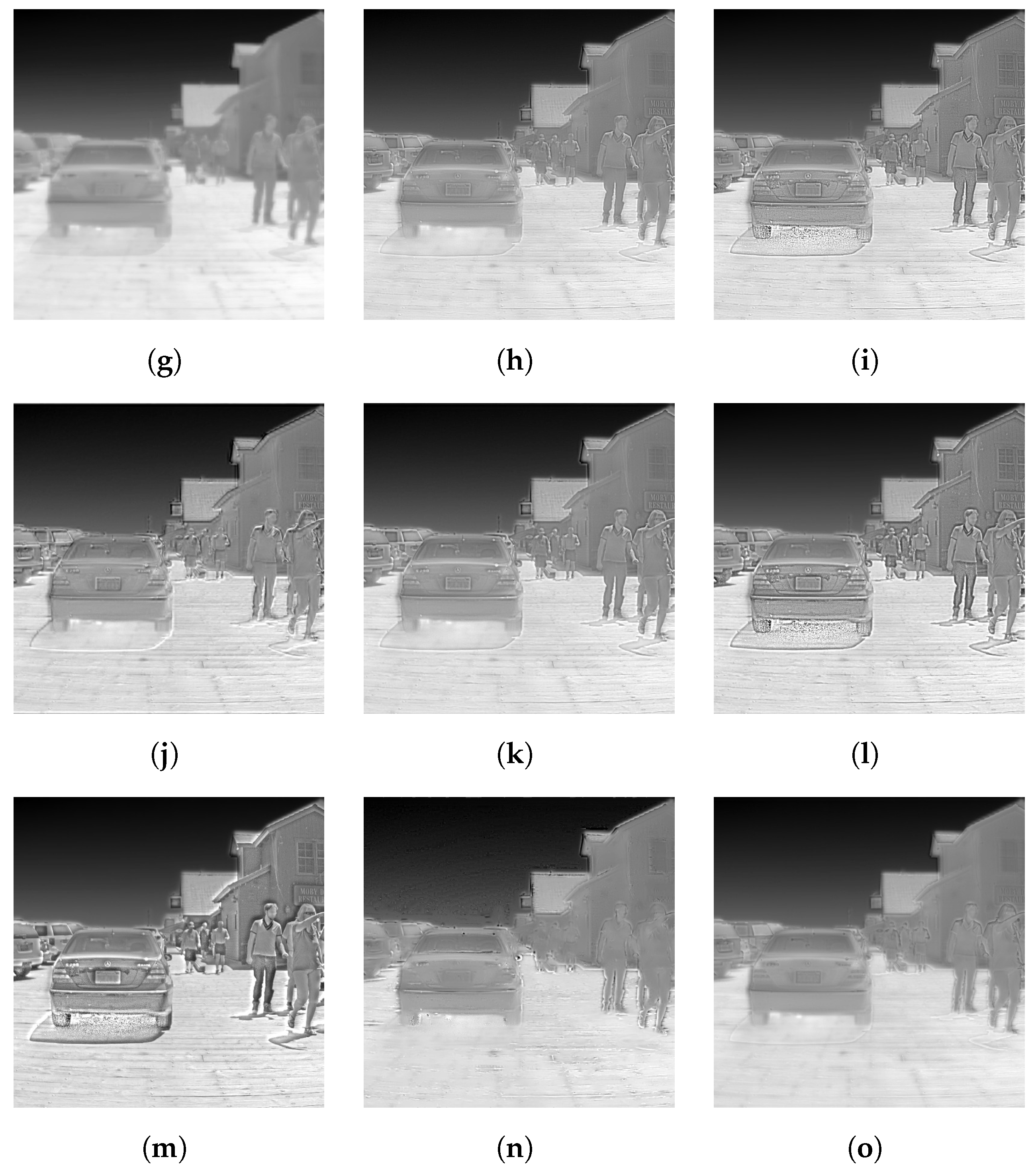

Figure A4.

FLIR ADAS dataset [47] RGB: (a) Reference image; (b) PCA; (c) IHS; (d) BDSD; (e) GS; (f) PRACS; (g) HPF; (h) SFIM; (i) INDUSION; (j) MTF-GLP; (k) MTF-GLP-HPM; (l) MTF-GLP-HPM-PP; (m) MTF-GLP-ECB; (n) MTF-GLP-CBD.

References

1. Kohin, M.; Butler, N.R. Performance limits of uncooled VOx microbolometer focal plane arrays. In Proceedings of the Infrared Technology and Applications XXX, Orlando, FL, USA, 12–16 April 2004; Volume 5406, p. 447.

2. Yue, L.; Shen, H.; Li, J.; Yuan, Q.; Zhang, H.; Zhang, L. Image super-resolution: The techniques, applications, and future. Signal Process. 2016, 128, 389–408.

3. Chavez, P.S.; Sides, S.C.; Anderson, J.A. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991.

4. Lagüela, S.; Armesto, J.; Arias, P.; Herráez, J. Automation of thermographic 3D modelling through image fusion and image matching techniques. Autom. Constr. 2012, 27, 24–31.

5. Kuenzer, C.; Dech, S. Thermal remote sensing: Sensors, Methods, Applications. In Remote Sensing and Digital Image Processing; Kuenzer, C., Dech, S., Eds.; Springer: Dordrecht, The Netherlands, 2013; Volume 17, pp. 287–313.

6. Chen, X.; Zhai, G.; Wang, J.; Hu, C.; Chen, Y. Color guided thermal image super resolution. In Proceedings of the VCIP 2016, 30th Anniversary of Visual Communication and Image Processing, Chengdu, China, 27–30 November 2016; pp. 1–4.

7. Poblete, T.; Ortega-Farías, S.; Ryu, D. Automatic Coregistration Algorithm to Remove Canopy Shaded Pixels in UAV-Borne Thermal Images to Improve the Estimation of Crop Water Stress Index of a Drip-Irrigated Cabernet Sauvignon Vineyard. Sensors 2018, 18, 397.

8. Turner, D.; Lucieer, A.; Malenovský, Z.; King, D.H.; Robinson, S.A. Spatial co-registration of ultra-high resolution visible, multispectral and thermal images acquired with a micro-UAV over Antarctic moss beds. Remote Sens. 2014, 6, 4003–4024.

9. Mandanici, E.; Tavasci, L.; Corsini, F.; Gandolfi, S. A multi-image super-resolution algorithm applied to thermal imagery. Appl. Geomat. 2019, 11, 215–228.

10. Almasri, F.; Debeir, O. Multimodal Sensor Fusion in Single Thermal Image Super-Resolution. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2018; Volume 11367, pp. 418–433.

11. Zhan, W.; Chen, Y.; Zhou, J.; Wang, J.; Liu, W.; Voogt, J.; Zhu, X.; Quan, J.; Li, J. Disaggregation of remotely sensed land surface temperature: Literature survey, taxonomy, issues, and caveats. Remote Sens. Environ. 2013, 131, 119–139.

12. Pu, R. Assessing scaling effect in downscaling land surface temperature in a heterogenous urban environment. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102256.

13. Eismann, M.T.; Hardie, R.C. Hyperspectral resolution enhancement using high-resolution multispectral imagery with arbitrary response functions. IEEE Trans. Geosci. Remote Sens. 2005, 43, 455–465.

14. Kwan, C.; Budavari, B.; Bovik, A.C.; Marchisio, G. Blind Quality Assessment of Fused WorldView-3 Images by Using the Combinations of Pansharpening and Hypersharpening Paradigms. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1835–1839.

15. Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral Pansharpening: A Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

16. Selva, M.; Aiazzi, B.; Butera, F.; Chiarantini, L.; Baronti, S. Hyper-sharpening: A first approach on SIM-GA data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3008–3024.

17. Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and multispectral data fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

18. Jung, H.S.; Park, S.W. Multi-sensor fusion of Landsat 8 thermal infrared (TIR) and panchromatic (PAN) images. Sensors 2014, 14, 24425–24440.

19. Liao, W.; Huang, X.; Van Coillie, F.; Thoonen, G.; Pizurica, A.; Scheunders, P.; Philips, W. Two-stage fusion of thermal hyperspectral and visible RGB image by PCA and guided filter. In Proceedings of the Workshop on Hyperspectral Image and Signal Processing, Evolution in Remote Sensing, Tokyo, Japan, 2–5 June 2015; Volume 2015, pp. 1–4.

20. Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. Sentinel-2 image fusion using a deep residual network. Remote Sens. 2018, 10, 1290.

21. Wu, D.; Zhou, M.Y.; Sun, W.B.; Bai, X.W.; Li, D.J.; Zhang, Y.Y. Image Alignment Software Development Based on OpenCV. In Proceedings of the 2015 4th International Conference on Energy and Environmental Protection (ICEEP 2015), Shenzhen, China, 3–4 June 2015.

22. Adel, E.; Elmogy, M.; Elbakry, H. Image Stitching System Based on ORB Feature-Based Technique and Compensation Blending. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 55–62.

23. Lei, Y.; Yu, Z.; Gong, Y. An Improved ORB Algorithm of Extracting and Matching Features. Int. J. Signal Process. Image Process. Pattern Recognit. 2015, 8, 117–126.

24. Chang, N.B.; Bai, K. Multisensor Data Fusion and Machine Learning for Environmental Remote Sensing; CRC Press: Boca Raton, FL, USA, 2018.

25. Chen, C.; Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. Twenty-Five Years of Pansharpening. In Signal and Image Processing for Remote Sensing, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2012; pp. 533–548.

26. Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

27. Garzelli, A.; Nencini, F.; Capobianco, L. Optimal MMSE pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 228–236.

28. Laben, C.; Brower, B. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6011875A, 4 January 2000.

29. Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution-based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

30. Liu, J.G. Smoothing Filter-based Intensity Modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472.

31. Wald, L.; Ranchin, T. Liu ‘Smoothing filter-based intensity modulation: A spectral preserve image fusion technique for improving spatial details’. Int. J. Remote Sens. 2002, 23, 593–597.

32. Khan, M.M.; Chanussot, J.; Condat, L.; Montanvert, A. Indusion: Fusion of multispectral and panchromatic images using the induction scaling technique. IEEE Geosci. Remote Sens. Lett. 2008, 5, 98–102.

33. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.

34. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

35. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. An MTF-based spectral distortion minimizing model for pan-sharpening of very high resolution multispectral images of urban areas. In Proceedings of the 2nd GRSS/ISPRS Joint Workshop on Remote Sensing and Data Fusion over Urban Areas, URBAN 2003, Berlin, Germany, 22–23 May 2003; pp. 90–94.

36. Lee, J.; Lee, C. Fast and efficient panchromatic sharpening. IEEE Trans. Geosci. Remote Sens. 2010, 48, 155–163.

37. Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

38. Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

39. Ranchin, T.; Wald, L. Fusion of high spatial and spectral resolution images: The ARSIS concept and its implementation. Photogramm. Eng. Remote Sens. 2000, 66, 49–61.

40. Sewar 0.4.4 Python Package. Available online: https://pypi.org/project/sewar/ (accessed on 26 September 2020).

41. Vijayaraj, V.; O’Hara, C.; Younan, N. Quality analysis of pansharpened images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, 2004. IGARSS ’04, Anchorage, AK, USA, 20–24 September 2004; Volume 1, pp. 85–88.

42. Pohl, C.; van Genderen, J. Remote Sensing Image Fusion; CRC Press, Taylor & Francis: Boca Raton, FL, USA, 2016.

43. Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the Third Conference Fusion of Earth Data: Merging Point Measurements, Raster Maps and Remotely Sensed Images, Sophia Antipolis, France, 26–28 January 2000; pp. 99–103.

44. Yokoya, N. Texture-guided multisensor superresolution for remotely sensed images. Remote Sens. 2017, 9, 316.

45. Bayarri, V.; Sebastián, M.A.; Ripoll, S. Hyperspectral imaging techniques for the study, conservation and management of rock art. Appl. Sci. 2019, 9, 5011.

46. Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

47. FLIR Thermal Dataset for Algorithm Training. Available online: https://www.flir.com/oem/adas/dataset/ (accessed on 19 October 2020).

48. Lagüela, S.; González-Jorge, H.; Armesto, J.; Arias, P. Calibration and verification of thermographic cameras for geometric measurements. Infrared Phys. Technol. 2011, 54, 92–99.

49. Aiazzi, B.; Baronti, S.; Lotti, F.; Selva, M. A comparison between global and context-adaptive pansharpening of multispectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 302–306.

50. Dumitrescu, D.; Boiangiu, C.A. A Study of Image Upsampling and Downsampling Filters. Computers 2019, 8, 30.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).