GLAGC: Adaptive Dual-Gamma Function for Image Illumination Perception and Correction in the Wavelet Domain

Abstract

1. Introduction

2. Related Works

- Improved gamma correction: Several adaptive methods have been derived for parameter adjustment, such as adaptive gamma correction based on the cumulative histogram (AGCCH) [15], improved adaptive gamma correction for the contrast enhancement of brightness-distorted images [16], adaptive gamma correction with weighting distribution (AGCWD) [17], and a 2-D adaptive gamma correction method [18] that uses a space-variant luminance map built from the spatial information of the image but may produce excessive contrast enhancement. Moreover, few of these methods consider both local and global enhancement, and overenhancement sometimes appears in parts of the image.
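As an illustration of the histogram-driven gamma adaptation behind methods such as AGCWD [17], the sketch below builds a per-level gamma from a weighted cumulative distribution: the histogram is reshaped as pdf_w(l) = pdf_max · ((pdf(l) − pdf_min)/(pdf_max − pdf_min))^α, normalized into cdf_w, and each level l is mapped to l_max · (l/l_max)^(1 − cdf_w(l)). It assumes an 8-bit single-channel input (for example, the V channel); the function name and the α = 0.5 default are assumptions, not values taken from this paper.

```python
import numpy as np


def agcwd(v: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Sketch of adaptive gamma correction with weighting distribution (AGCWD).

    v: uint8 single-channel image (e.g. the V channel of HSV).
    alpha: weighting exponent (assumed default; tune per application).
    """
    levels = np.arange(256, dtype=np.float64)
    hist = np.bincount(v.ravel(), minlength=256).astype(np.float64)
    pdf = hist / hist.sum()
    pdf_min, pdf_max = pdf.min(), pdf.max()

    # Weighted pdf: flattens dominant bins so they do not monopolize the cdf.
    if pdf_max > pdf_min:
        pdf_w = pdf_max * ((pdf - pdf_min) / (pdf_max - pdf_min)) ** alpha
    else:  # perfectly flat histogram
        pdf_w = np.ones_like(pdf)
    cdf_w = np.cumsum(pdf_w) / pdf_w.sum()

    # Per-level gamma = 1 - cdf_w(l); the exponent is <= 1, so no level gets darker.
    lut = 255.0 * (levels / 255.0) ** (1.0 - cdf_w)
    return np.clip(np.round(lut), 0, 255).astype(np.uint8)[v]
```

Because the exponent shrinks as the cumulative distribution grows, the curve lifts dark levels strongly while leaving bright levels close to their original values, which is also why purely histogram-driven gammas can overenhance some regions.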

- Retinex-based models: Fu et al. [19] proposed a simultaneous reflectance and illumination estimation (SIRE) method that preserves more image details when estimating the reflectance. Wang et al. [20] built an image prior model on Retinex theory and estimated its parameters with a hierarchical Bayesian model, achieving good results. Cheng [21] proposed a nonconvex variational Retinex model that improves brightness while maintaining the texture and naturalness of the image. These Retinex-based models can achieve pleasing reflectance separation through iteration; however, they are time-consuming, which may limit their practical application. Guo et al. [2] proposed low-light image enhancement via illumination map estimation (LIME), but oversaturation usually occurs in parts of the image.
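The Retinex decomposition behind these models treats the observed image as the product of reflectance and illumination, S = R · L. As a minimal sketch of that idea, assuming a float RGB image in [0, 1], the code below uses LIME's initial illumination estimate (the per-pixel maximum over the color channels), gamma-adjusts it, and divides it out. It deliberately omits LIME's structure-aware refinement of the illumination map, so it is a simplified illustration rather than the method of [2]; the function name and the `gamma` and `eps` defaults are assumptions.

```python
import numpy as np


def lime_initial_enhance(img: np.ndarray, gamma: float = 0.8, eps: float = 1e-3) -> np.ndarray:
    """Retinex-style enhancement from LIME's *initial* illumination map only.

    img: float RGB image in [0, 1], shape (H, W, 3).
    gamma, eps: assumed defaults. LIME's structure-aware refinement is omitted.
    """
    # Initial illumination estimate: per-pixel maximum over the color channels.
    illum = np.maximum(img.max(axis=2), eps)
    # Retinex model S = R * L: gamma-adjust the illumination, then divide it out.
    illum = illum ** gamma
    return np.clip(img / illum[..., None], 0.0, 1.0)
```

Every channel of a pixel is divided by the same value, so channel ratios (and hence hue) are kept; the brightening comes entirely from the illumination map.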

- Wavelet-transform-based approaches: Using the wavelet transform, a nonlinear enhancement function was designed based on the local dispersion of the wavelet coefficients [21]. Zotin [22] combined the MSR algorithm with the wavelet transform and achieved more efficient correction. A dual-tree complex wavelet transform method for low-light image enhancement was proposed in [23]. However, using only the low-frequency subband for illumination enhancement is unreasonable: according to our experiments, image edges appear jagged after the transformation.
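To make the subband terminology concrete, a minimal sketch of a one-level orthonormal 2-D Haar DWT follows. The Haar wavelet is an assumption (this section does not name the wavelet used), and so is the LH/HL/HH labeling, whose conventions vary between libraries. The LL subband is a quarter-size approximation of the image, while the three detail subbands carry horizontal, vertical, and diagonal edge information.

```python
import numpy as np


def haar_dwt2(x: np.ndarray):
    """One-level orthonormal 2-D Haar DWT; x needs even height and width."""
    x = np.asarray(x, dtype=np.float64)
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right
    ll = (a + b + c + d) / 2  # low-pass in both directions (approximation)
    lh = (a + b - c - d) / 2  # high-pass vertically: horizontal edges
    hl = (a - b + c - d) / 2  # high-pass horizontally: vertical edges
    hh = (a - b - c + d) / 2  # high-pass in both directions: diagonal detail
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh) -> np.ndarray:
    """Exact inverse of haar_dwt2."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out
```

Here `ll / 2` equals the mean of each 2 × 2 block, so LL is a smoothed, downsampled copy of the image. The transform is exactly invertible, so any change in the reconstruction comes only from how the subbands are modified.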

3. Proposed Method: GLAGC

3.1. Algorithm Scheme

3.2. Luminance Extraction in the Wavelet Domain

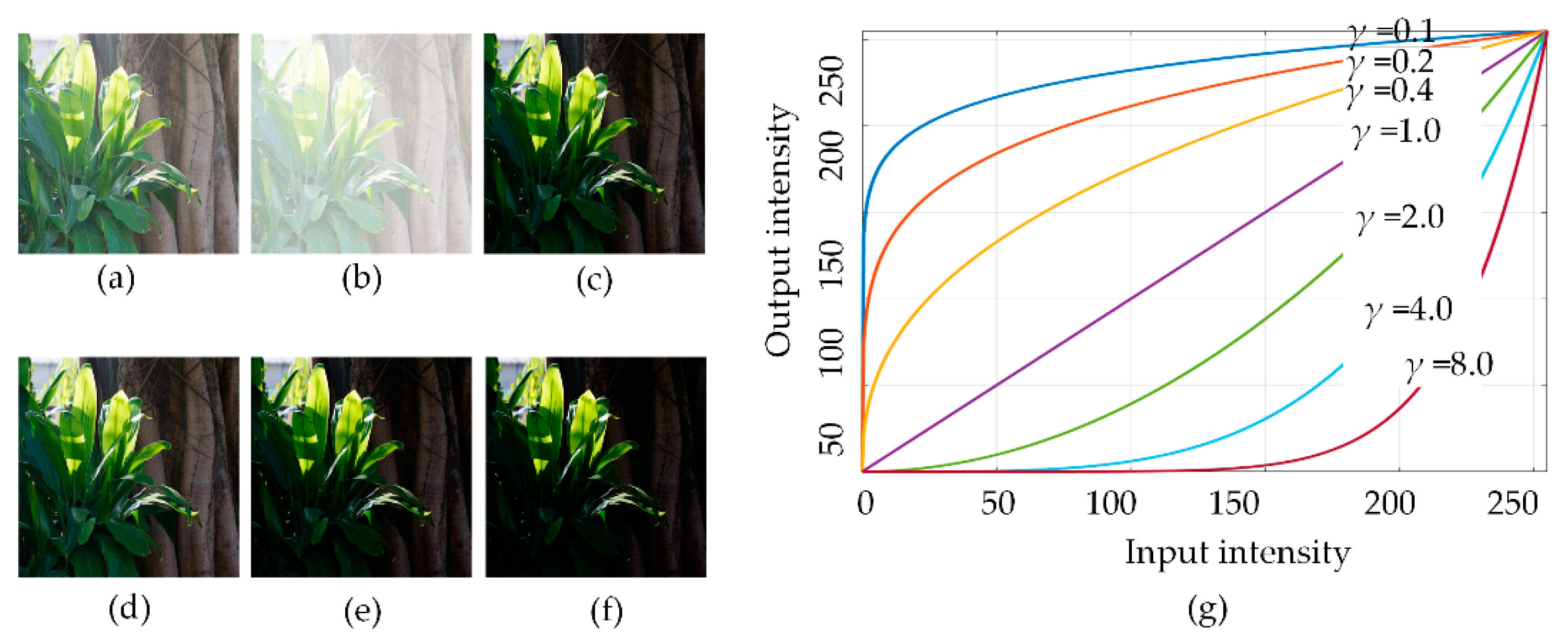

3.3. Local Spatial Adaptive Gamma Correction (LSAGC)

- (1) The frequency components of the illumination extracted by the MSR algorithm are contained in the frequency components of the LL subband, which means that the illumination of the image can be extracted from the LL subband alone.

- (2) The amplitude of SLDF(x, y) attenuates faster as the frequency increases. This property helps preserve image details from the perspective of local illumination characteristics.

- (3) For common image sizes, SLDF illumination extraction takes much less time than the MSR algorithm, and this advantage grows as the image size increases.
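Properties (1) and (3) are stated relative to MSR illumination extraction. For reference, a minimal sketch of MSR-style illumination estimation: the log of the Gaussian-blurred image is averaged over several scales, and the MSR output (reflectance) is the log image minus that estimate. The scales (15, 80, 250), the equal weights, the log(V + 1) convention, and the edge-replicating separable blur are assumptions; SLDF itself is not defined in this section and is not reproduced here.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge replication (kernel truncated at 3 sigma)."""
    r = max(1, int(3 * sigma))
    t = np.arange(-r, r + 1, dtype=np.float64)
    k = np.exp(-t**2 / (2.0 * sigma**2))
    k /= k.sum()
    p = np.pad(img, r, mode="edge")
    # Filter rows, then columns; "valid" trims the padding back off.
    rows = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def msr_illumination(v, sigmas=(15, 80, 250), weights=None):
    """Multi-scale illumination estimate in the log domain: sum_k w_k * log(F_k * V + 1)."""
    v = np.asarray(v, dtype=np.float64)
    if weights is None:
        weights = [1.0 / len(sigmas)] * len(sigmas)  # equal weights (assumed)
    return sum(w * np.log1p(gaussian_blur(v, s)) for w, s in zip(weights, sigmas))


def msr_reflectance(v, sigmas=(15, 80, 250), weights=None):
    """MSR output: log(V + 1) minus the illumination estimate (weights sum to 1)."""
    return np.log1p(np.asarray(v, dtype=np.float64)) - msr_illumination(v, sigmas, weights)
```

With direct convolution the cost grows with both the pixel count and the kernel radius, so large MSR scales dominate the running time on big images; a filter applied to the quarter-size LL subband processes a quarter as many pixels.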

3.4. Global Statistics Adaptive Gamma Correction (GSAGC)

Global Statistical Luminance Feature (GSLF)

3.5. Smoothness Preservation

3.6. Color Restoration

| Algorithm 1: Adaptive dual-gamma function for image illumination perception and correction in the wavelet domain (GLAGC) |
|---|
| Input: original image S(x, y) |
| Output: enhanced image O(x, y) |
| Step (1): Convert to HSV space to obtain the V component |
| Step (2): Convert the image to the logarithmic domain, v = log(V + 1) |
| Step (3): Fast illuminance extraction in the LL subband by the wavelet transform |
| Step (4): Illuminance feature extraction: |
| Spatial luminance distribution feature (SLDF) |
| Global statistical luminance feature (GSLF) |
| Step (5): Adaptive dual-gamma correction γ(Θ[χ,σ]) for the LL subband |
| γ(Θχ) (obtained from the SLDF) |
| γ(Θσ) (obtained from the GSLF and gamma training) |
| Step (6): Smoothness preservation L(Θγ) for the high-frequency coefficients |
| Step (7): Inverse wavelet and inverse logarithmic transforms |
| Step (8): Color restoration |
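The flow of Algorithm 1 can be outlined as a pipeline skeleton. This is a minimal sketch assuming a one-level Haar wavelet, an RGB input in [0, 255] with even height and width, and color restoration by scaling RGB with V′/V (which leaves HSV hue and saturation unchanged). The SLDF/GSLF features, the gamma training, and the smoothness-preservation operator are not specified in this section, so steps (4) to (6) are taken as caller-supplied functions; `glagc_like_pipeline`, `correct_ll`, `smooth_detail`, and `fixed_gamma` are hypothetical names, and `fixed_gamma` is a plain fixed-exponent stand-in, not the adaptive dual gamma γ(Θ[χ,σ]).

```python
import numpy as np

LL_MAX = 2 * np.log(256.0)  # largest LL value for 8-bit input under orthonormal Haar


def _haar(x):
    a, b, c, d = x[0::2, 0::2], x[0::2, 1::2], x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2, (a + b - c - d) / 2,
            (a - b + c - d) / 2, (a - b - c + d) / 2)


def _ihaar(ll, lh, hl, hh):
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out


def fixed_gamma(ll, g=0.7):
    """Hypothetical stand-in for step (5): one fixed gamma on the normalized LL subband."""
    return LL_MAX * (ll / LL_MAX) ** g


def glagc_like_pipeline(rgb, correct_ll, smooth_detail=lambda d: d):
    """Skeleton of Algorithm 1 with pluggable correction steps (hypothetical API)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    v_chan = rgb.max(axis=2)                          # Step 1: HSV value V = max(R, G, B)
    v = np.log1p(v_chan)                              # Step 2: v = log(V + 1)
    ll, lh, hl, hh = _haar(v)                         # Step 3: one-level DWT (Haar assumed)
    ll = correct_ll(ll)                               # Steps 4-5: features + LL correction
    lh, hl, hh = [smooth_detail(d) for d in (lh, hl, hh)]  # Step 6 stand-in
    v_new = np.clip(np.expm1(_ihaar(ll, lh, hl, hh)), 0.0, 255.0)  # Step 7
    # Step 8 (assumed): scale RGB by V'/V so hue and saturation are unchanged.
    ratio = v_new / np.maximum(v_chan, 1e-6)
    return np.clip(rgb * ratio[..., None], 0.0, 255.0)
```

With `correct_ll=lambda ll: ll` the pipeline reproduces its input, which makes it easy to check that any brightening comes only from the LL correction.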

4. Experiments

- (1) The computational cost of the algorithm;

- (2) The information entropy, which quantifies the information richness of the enhanced image;

- (3) The absolute mean brightness error (AMBE) [27], which evaluates illuminance retention and is defined as follows:
$$\mathrm{AMBE} = \lvert x_m - y_m \rvert$$
where $x_m$ and $y_m$ represent the mean values of the input image and output image, respectively;

- (4) The lightness order error (LOE), which evaluates the naturalness of the enhanced image [26]:
$$\mathrm{LOE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n} RD(i,j), \qquad RD(i,j) = \sum_{x=1}^{m}\sum_{y=1}^{n} U\big(L(i,j), L(x,y)\big) \oplus U\big(L_e(i,j), L_e(x,y)\big)$$
where $m \times n$ is the image size, $RD(i,j)$ is the relative order difference of pixel $(i,j)$, $U(p,q)$ equals 1 if $p \ge q$ and 0 otherwise, $\oplus$ is the exclusive or (XOR) operator, and $L(x,y)$ and $L_e(x,y)$ are the original image and enhanced image, respectively. The smaller the LOE value, the better the naturalness of the original image is preserved.
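The three image-quality metrics can be sketched as follows, assuming single-channel 8-bit inputs (for color images, [26] computes LOE on the lightness, the per-pixel maximum over RGB). LOE is computed here by brute force and needs O(N²) memory for N pixels, so a hypothetical `step` parameter subsamples both images first; the function names and that parameter are assumptions.

```python
import numpy as np


def entropy(img) -> float:
    """Shannon entropy (bits) of the 256-bin gray-level histogram; assumes 8-bit values."""
    hist = np.bincount(np.asarray(img, dtype=np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def ambe(x, y) -> float:
    """Absolute mean brightness error |x_m - y_m|."""
    return float(abs(np.mean(x, dtype=np.float64) - np.mean(y, dtype=np.float64)))


def loe(l, le, step: int = 1) -> float:
    """Lightness order error between original l and enhanced le (brute force)."""
    l = np.asarray(l, dtype=np.float64)[::step, ::step].ravel()
    le = np.asarray(le, dtype=np.float64)[::step, ::step].ravel()
    u = l[:, None] >= l[None, :]      # U(L(i,j), L(x,y)) for every pixel pair
    ue = le[:, None] >= le[None, :]   # U(Le(i,j), Le(x,y))
    rd = np.logical_xor(u, ue).sum(axis=1)  # RD for each pixel
    return float(rd.mean())           # average over the m*n pixels
```

Any strictly increasing tone curve leaves every pairwise order unchanged and therefore gives an LOE of 0, whereas an order-reversing mapping gives the maximum of N − 1.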

4.1. LSAGC Tests

4.2. GSAGC Tests

4.3. Naturalness Preservation

4.4. Comparative Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Srinivas, K.; Bhandari, A.K. Low light image enhancement with adaptive sigmoid transfer function. IET Image Process. 2020, 14, 668–678.

- Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- Fan, C.-N.; Zhang, F.-Y. Homomorphic filtering based illumination normalization method for face recognition. Pattern Recognit. Lett. 2011, 32, 1468–1479.

- Land, E.H.; McCann, J.J. Lightness and the Retinex theory. J. Opt. Soc. Amer. 1971, 61, 1–11.

- Land, E.H. The Retinex theory of color vision. Sci. Amer. 1977, 237, 108–128.

- Jobson, D.J.; Rahman, Z.; Woodell, G.A. Properties and performance of the center/surround Retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Rahman, Z.; Jobson, D.J.; Woodell, G.A. Multi-scale Retinex for color image enhancement. Proc. ICIP 1996, 3, 1003–1006.

- Yue, H.; Yang, J.; Sun, X.; Wu, F.; Hou, C. Contrast enhancement based on intrinsic image decomposition. IEEE Trans. Image Process. 2017, 26, 3981–3994.

- Celik, T.; Tjahjadi, T. Image Resolution Enhancement Using Dual-Tree Complex Wavelet Transform. IEEE Geosci. Remote Sens. Lett. 2010, 7.

- Easley, G.R.; Labate, D.; Colonna, F. Shearlet-Based Total Variation Diffusion for Denoising. IEEE Trans. Image Process. 2009, 18, 260–268.

- Zuiderveld, K. Contrast limited adaptive histogram equalization. In Graphics Gems IV; Academic Press: San Diego, CA, USA, 1994; pp. 474–485.

- Kim, Y.-T. Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 1997, 43, 1–8.

- Clement, J.C.; Parbukunmar, M.; Basker, A. Color image enhancement in compressed DCT domain. ICGST GVIP J. 2010, 10, 31–38.

- Grigoryan, A.M.; Jenkinson, J.; Agaian, S.S. Quaternion Fourier transform based alpha-rooting method for color image measurement and enhancement. Signal Process. 2015, 109, 269–289.

- Huang, Z.; Zhang, T.; Li, Q.; Fang, H. Adaptive gamma correction based on cumulative histogram for enhancing near-infrared images. Infrared Phys. Technol. 2016, 79, 205–215.

- Cao, G.; Huang, L.; Tian, H.; Huang, X.; Wang, Y.; Zhi, R. Contrast enhancement of brightness-distorted images by improved adaptive gamma correction. Comput. Electr. Eng. 2018, 66, 569–582.

- Huang, S.C.; Cheng, F.C.; Chiu, Y.S. Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process. 2013, 22, 1032–1041.

- Lee, S.; Kwon, H.; Han, H.; Lee, G.; Kang, B. A space-variant luminance map based color image enhancement. IEEE Trans. Consum. Electron. 2010, 56, 2636–2643.

- Fu, X.; Zeng, D.; Huang, Y.; Zhang, X.P.; Ding, X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2782–2790.

- Wang, L.; Xiao, L.; Liu, H.; Wei, Z. Variational Bayesian Method for Retinex. IEEE Trans. Image Process. 2014, 23, 3381–3396.

- Loza, A.; Bull, D.; Achim, A.M. Automatic contrast enhancement of low-light images based on local statistics of wavelet coefficients. Proc. ICIP 2010, 3553–3556.

- Zotin, A. Fast Algorithm of Image Enhancement based on Multi-Scale Retinex. Procedia Comput. Sci. 2018, 131, 6–14.

- Jung, C.; Yang, Q.; Sun, T.; Fu, Q.; Song, H. Low light image enhancement with dual-tree complex wavelet transform. J. Vis. Commun. Image Represent. 2017, 42, 28–36.

- Ye, Z.; Mohamadian, H.; Ye, Y. Information Measures for Biometric Identification Via 2D Discrete Wavelet Transform. In Proceedings of the 2007 IEEE International Conference on Automation Science and Engineering, Scottsdale, AZ, USA, 22–25 September 2007.

- Hou, X.; Zhang, L. Saliency Detection: A Spectral Residual Approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- Rajavel, P. Image Dependent Brightness Preserving Histogram Equalization. IEEE Trans. Consum. Electron. 2010, 56, 756–763.

| Images | Metric | LIME | Lee’s Method | AGCWD | SIRE | GLAGC |
|---|---|---|---|---|---|---|
| Urban | Entropy | 7.59 | 7.62 | 7.67 | 7.72 | 7.78 |
| | LOE | 204.36 | 171.13 | 39.70 | 29.05 | 118.32 |
| | AMBE | 59.62 | 19.50 | 34.80 | 9.82 | 37.92 |
| Baby | Entropy | 7.09 | 7.77 | 7.67 | 7.83 | 7.76 |
| | LOE | 333.85 | 100.08 | 176.69 | 120.13 | 111.95 |
| | AMBE | 49.18 | 3.83 | 25.78 | 15.78 | 14.98 |
| Street | Entropy | 7.57 | 7.68 | 7.57 | 7.67 | 7.82 |
| | LOE | 282.43 | 93.54 | 89.56 | 141.58 | 173.6 |
| | AMBE | 56.03 | 15.48 | 24.46 | 18.29 | 39.15 |
| Building | Entropy | 7.54 | 7.11 | 7.50 | 7.42 | 7.35 |
| | LOE | 191.90 | 162.11 | 30.19 | 147.51 | 177.09 |
| | AMBE | 49.29 | 41.17 | 43.97 | 41.98 | 64.93 |
| Goddess | Entropy | 7.49 | 7.38 | 7.79 | 7.70 | 7.47 |
| | LOE | 199.21 | 283.96 | 43.77 | 192.93 | 105.01 |
| | AMBE | 72.12 | 19.35 | 44.42 | 34.17 | 43.87 |
| Landscape | Entropy | 7.83 | 7.64 | 7.78 | 7.46 | 7.82 |
| | LOE | 84.73 | 152.60 | 59.83 | 172.58 | 85.30 |
| | AMBE | 14.41 | 18.60 | 16.32 | 33.83 | 9.33 |
| Average | Entropy | 7.52 | 7.53 | 7.66 | 7.63 | 7.67 |
| | LOE | 204.36 | 138.24 | 66.04 | 127.58 | 115.88 |
| | AMBE | 50.111 | 19.66 | 31.62 | 27.31 | 35.03 |

| Lee’s Method | LIME | AGCWD | SIRE | Ours (GLAGC) |
|---|---|---|---|---|
| 0.067 | 0.21 | 0.136 | 8.51 | 0.095 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yu, W.; Yao, H.; Li, D.; Li, G.; Shi, H. GLAGC: Adaptive Dual-Gamma Function for Image Illumination Perception and Correction in the Wavelet Domain. Sensors 2021, 21, 845. https://doi.org/10.3390/s21030845