Arduino-Based Myoelectric Control: Towards Longitudinal Study of Prosthesis Use

Abstract

1. Introduction

2. Methods

2.1. System Features

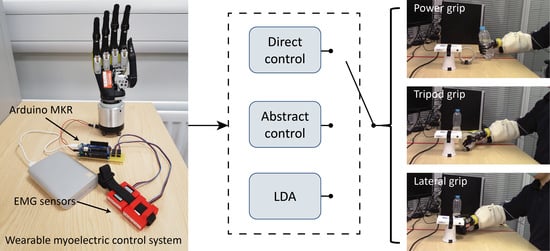

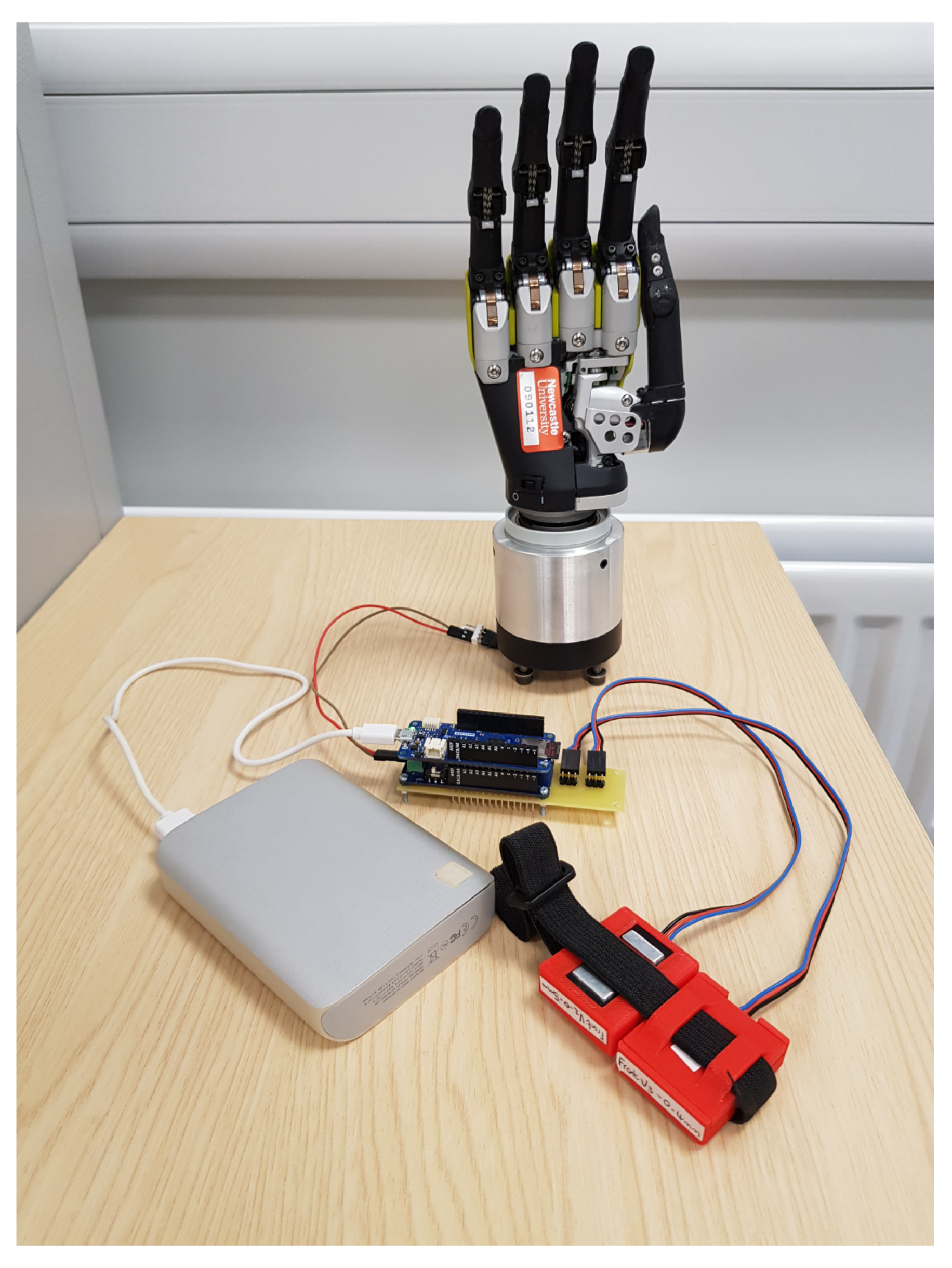

2.1.1. System Overview

2.1.2. Signal Recording and Pre-Processing

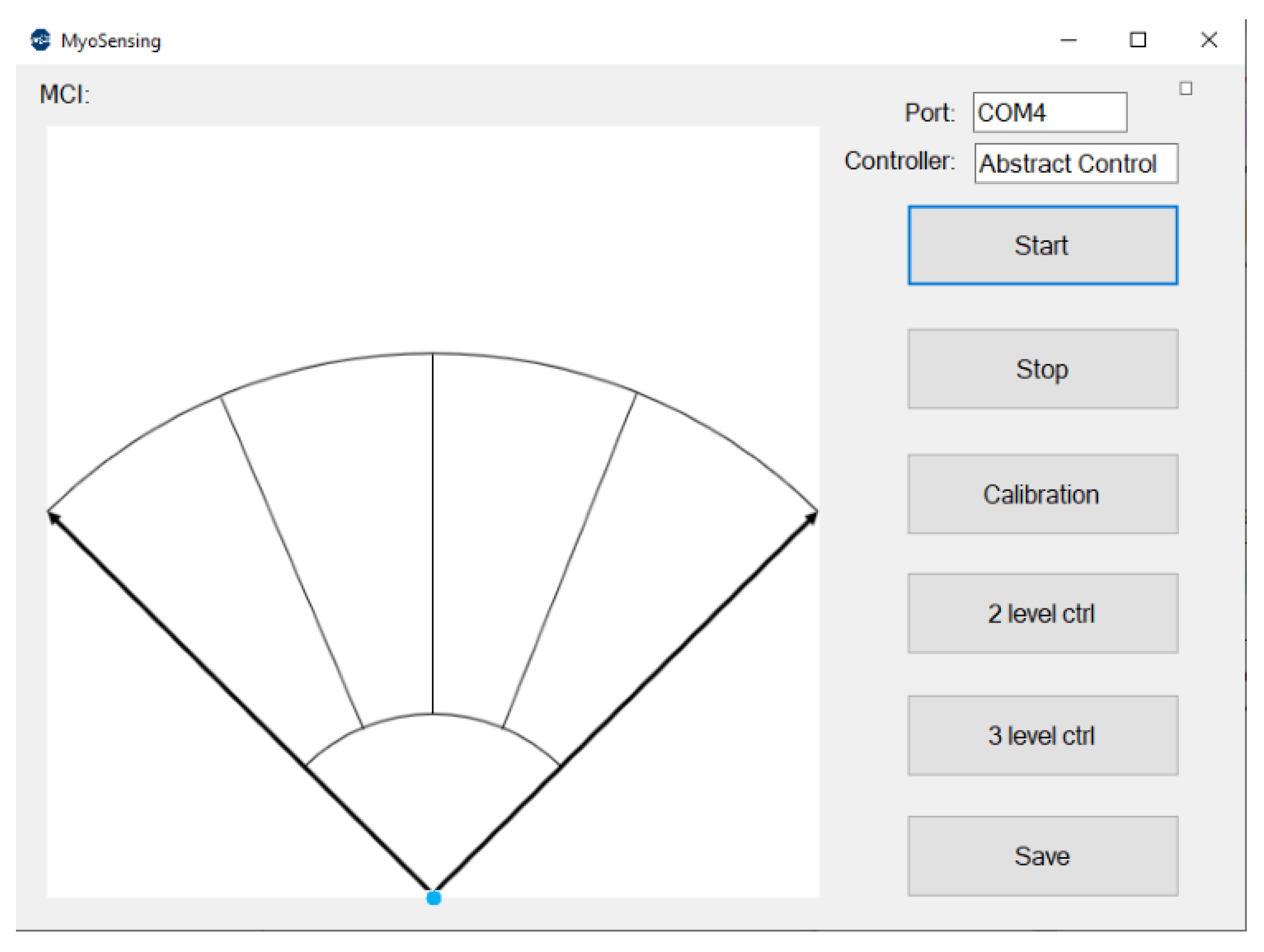

2.1.3. User-Friendly Control Interface

2.2. Controller Modules

2.2.1. Direct Controller

2.2.2. LDA Classifier

2.2.3. Abstract Controller

2.3. System Evaluation

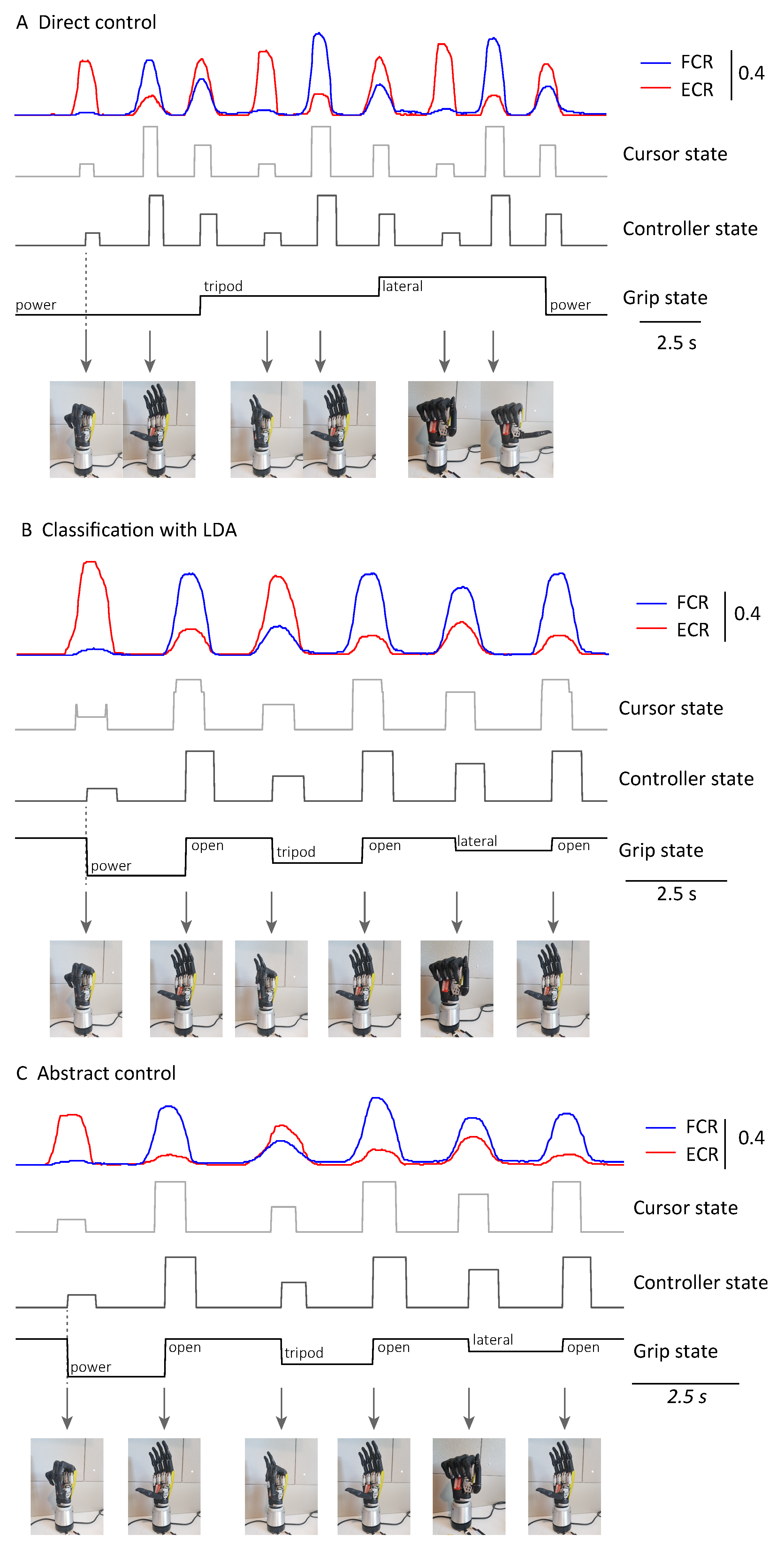

3. Results

3.1. Control Signal Analysis

3.2. Pick-and-Place Experiment

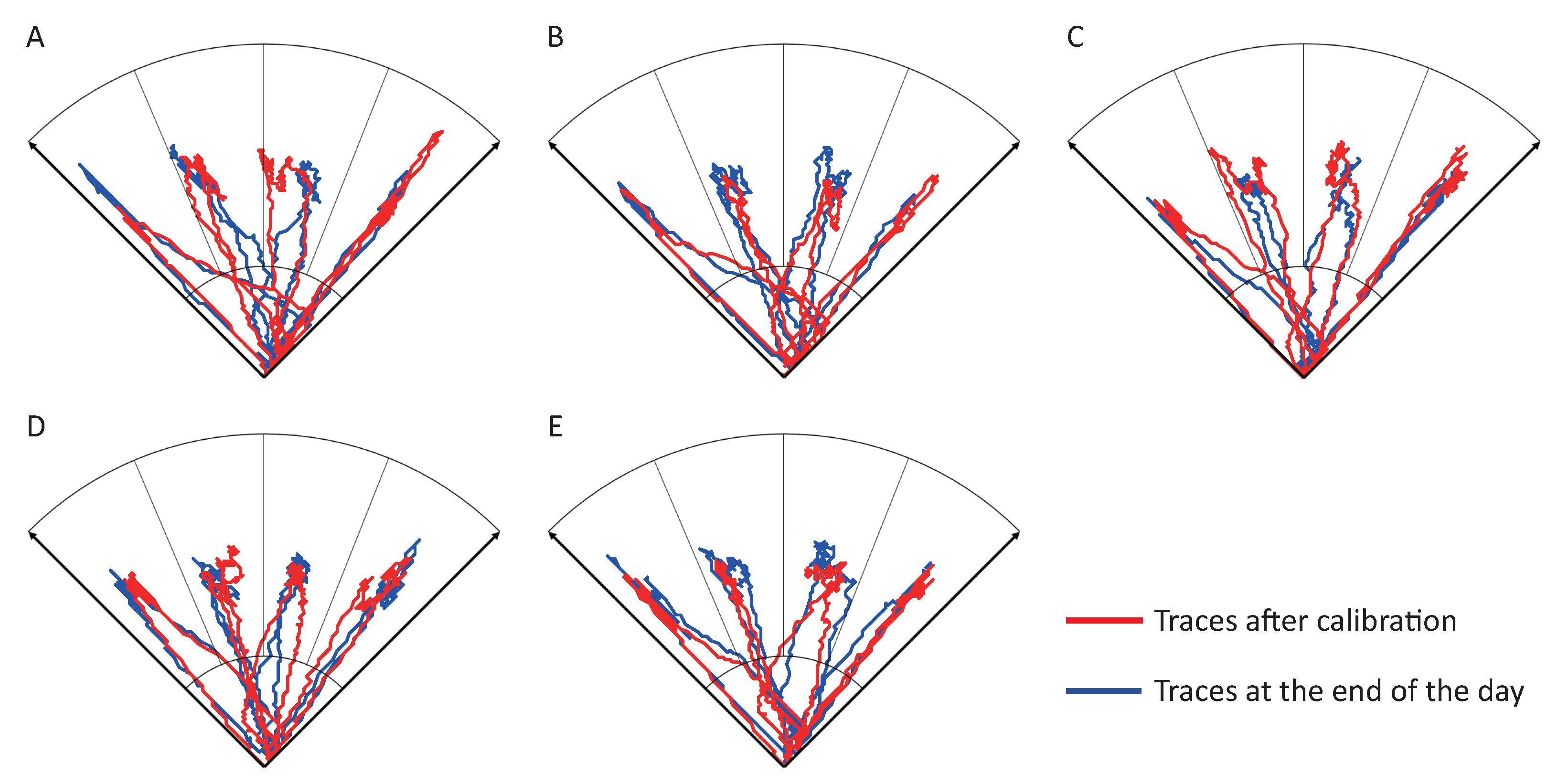

3.3. System Performance in a Day-Long Study

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, H.; Dyson, M.; Nazarpour, K. Arduino-Based Myoelectric Control: Towards Longitudinal Study of Prosthesis Use. Sensors 2021, 21, 763. https://doi.org/10.3390/s21030763