A Robust Lane Detection Model Using Vertical Spatial Features and Contextual Driving Information

Abstract

1. Introduction

- We propose a robust lane detection model that uses vertical spatial features and contextual driving information and works well for unclear or occluded lanes in complex driving scenes, such as crowded scenes and poor lighting conditions.

- We design an information exchange block and a feature merging block so that the proposed model uses contextual information effectively and relies only on vertical spatial features, combined with lane line distribution features, to robustly detect unclear and occluded lane lines.

- We compare the proposed model extensively with other state-of-the-art lane detection models on the CULane and TuSimple lane detection datasets. The experimental results show that the proposed model detects lane lines more robustly and precisely than the others in complex driving scenes.

2. Related Work

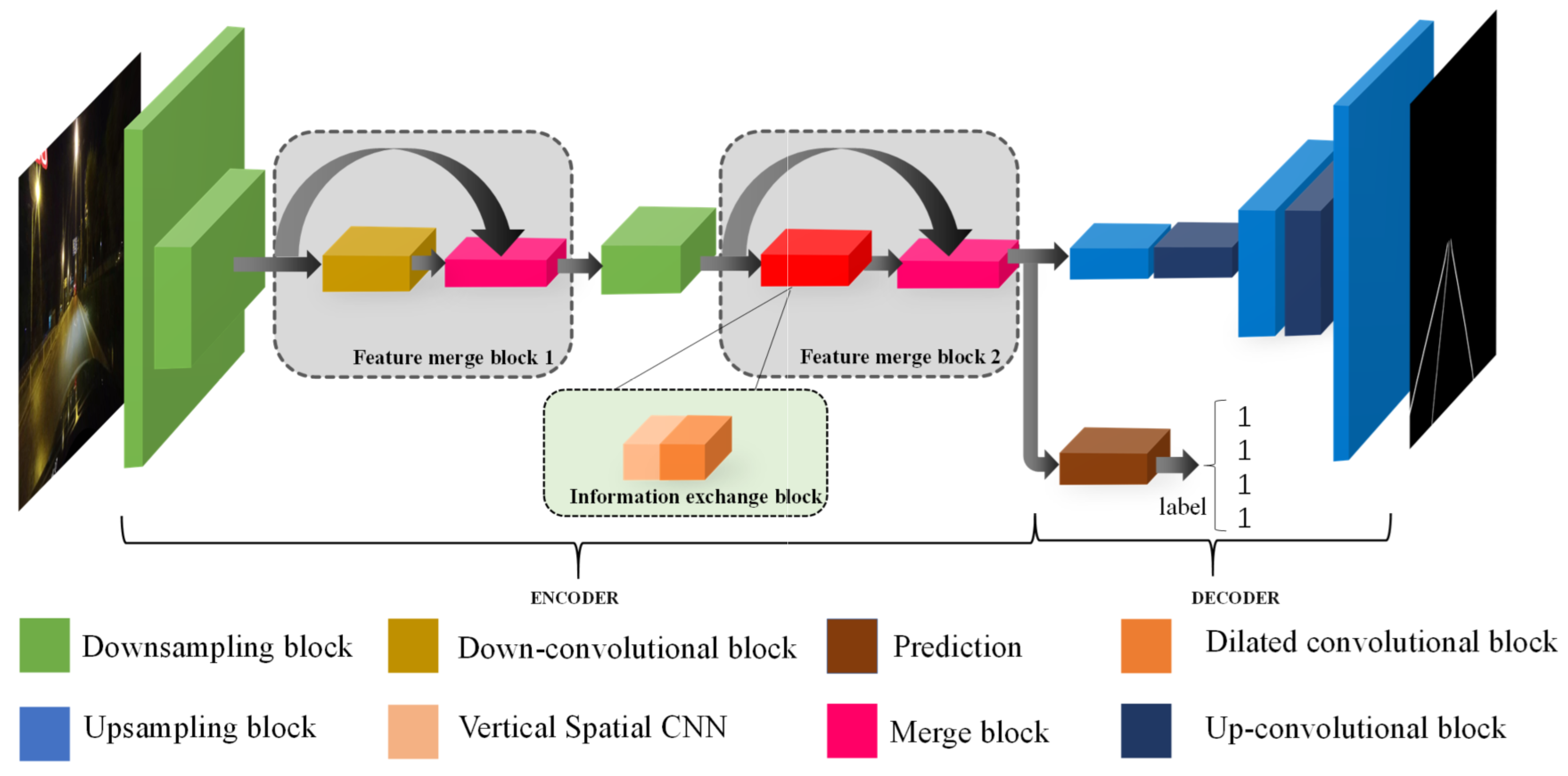

3. Network Architecture

3.1. Encoder

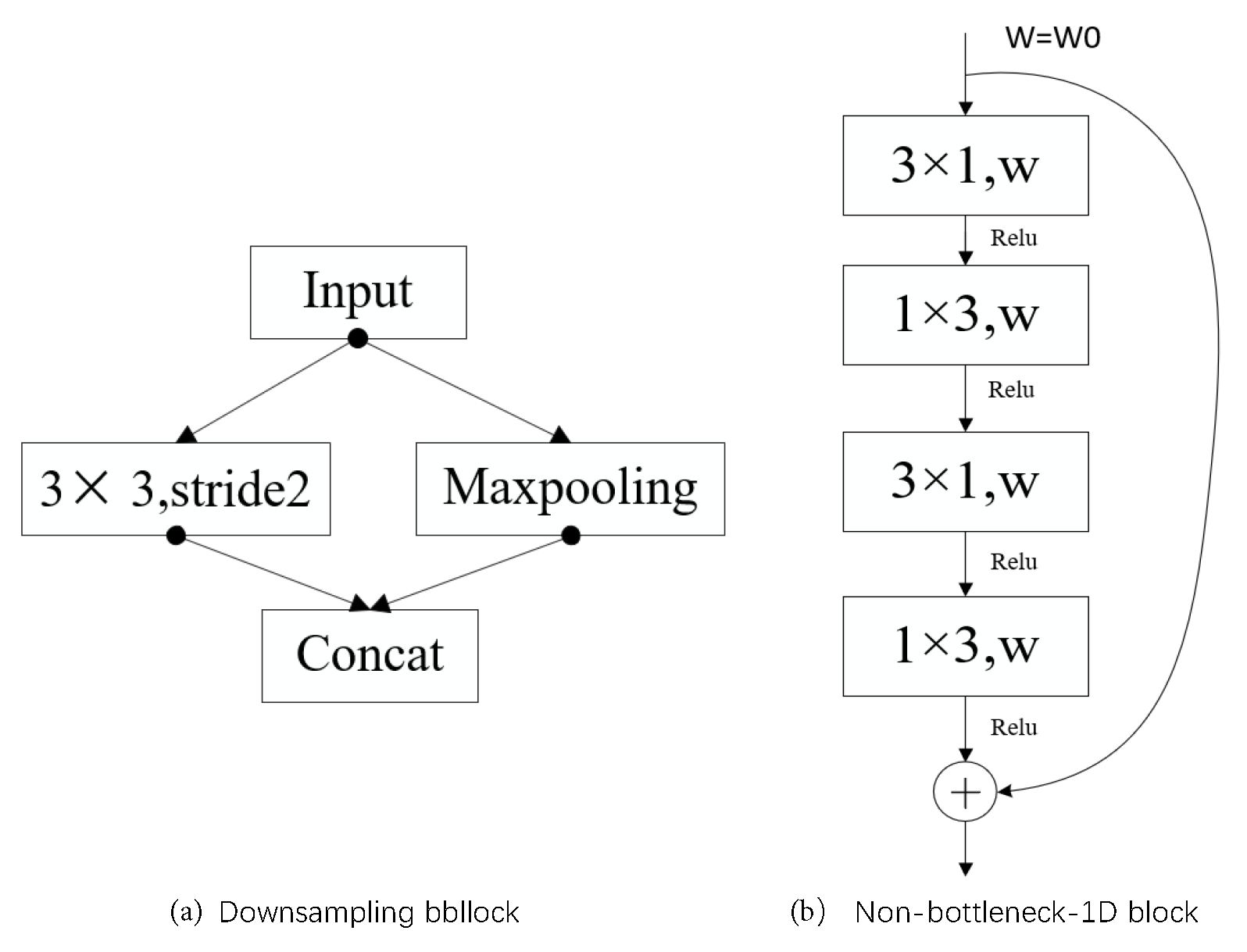

3.1.1. Downsampling and Non-Bottleneck-1D Blocks

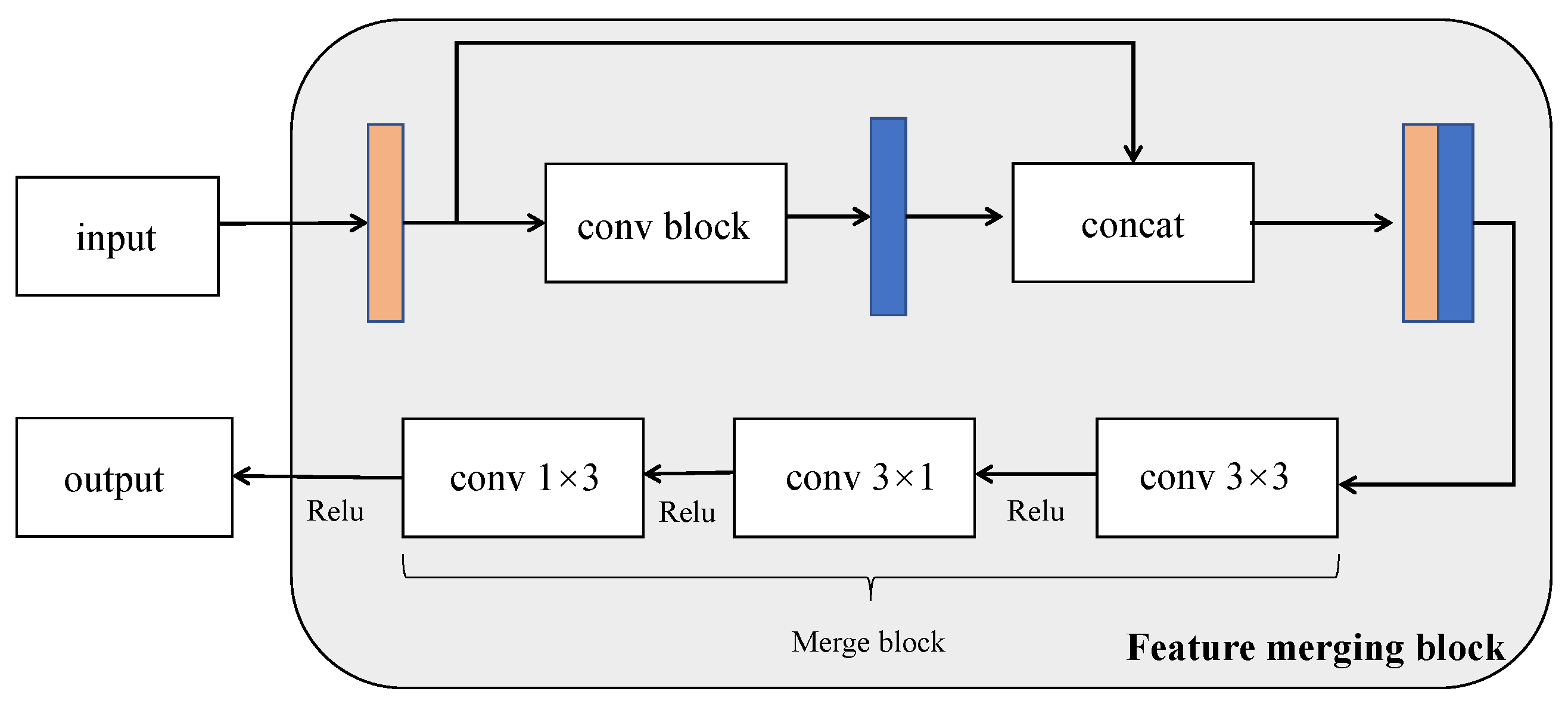

3.1.2. Feature Merging Block

3.1.3. Information Exchange Block

3.2. Prediction of the Probability Map

3.3. Prediction of the Existing Lanes

3.4. Loss Function

4. Experiments

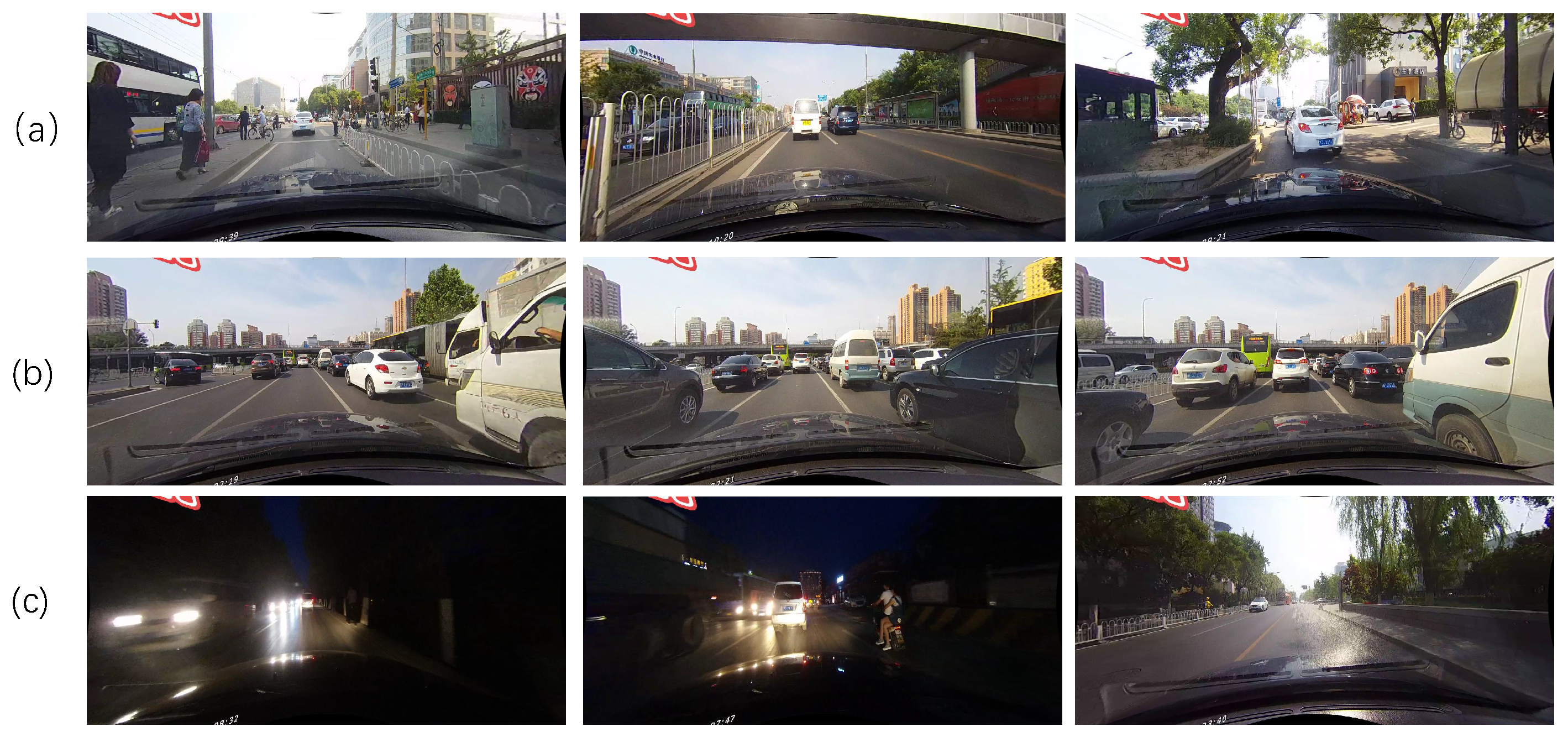

4.1. Dataset

4.1.1. TuSimple Lane Detection Benchmark Dataset

4.1.2. CULane Dataset

4.2. Evaluation Metric

4.2.1. TuSimple Lane Detection Benchmark Dataset

4.2.2. CULane Dataset

4.3. Ablation Study

4.3.1. Comparison with Similar Models

4.3.2. Different Dilated Convolution Rates

4.3.3. Method Used for Spatial Convolution

4.4. Qualitative and Quantitative Comparisons

4.4.1. Qualitative Evaluation

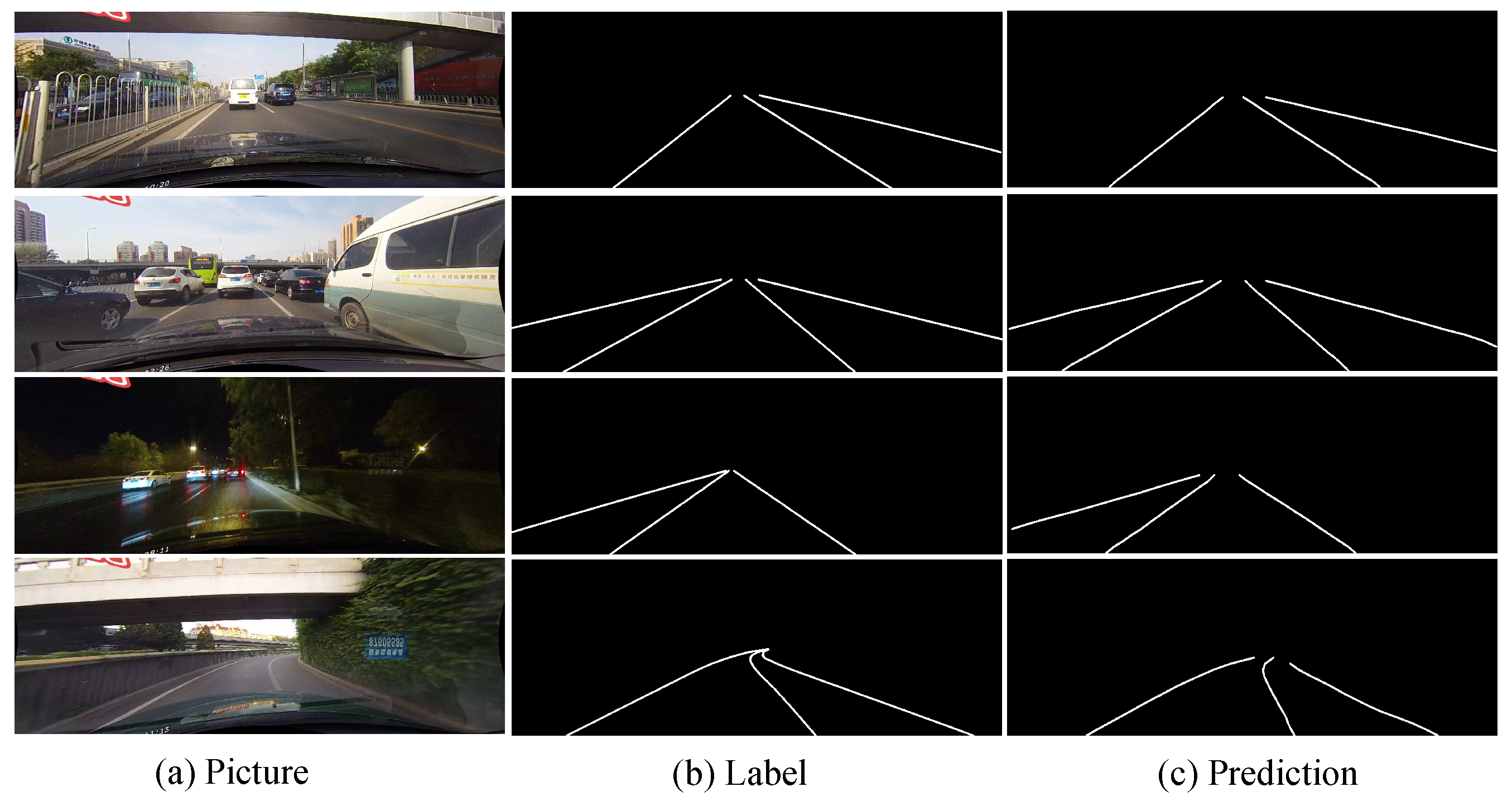

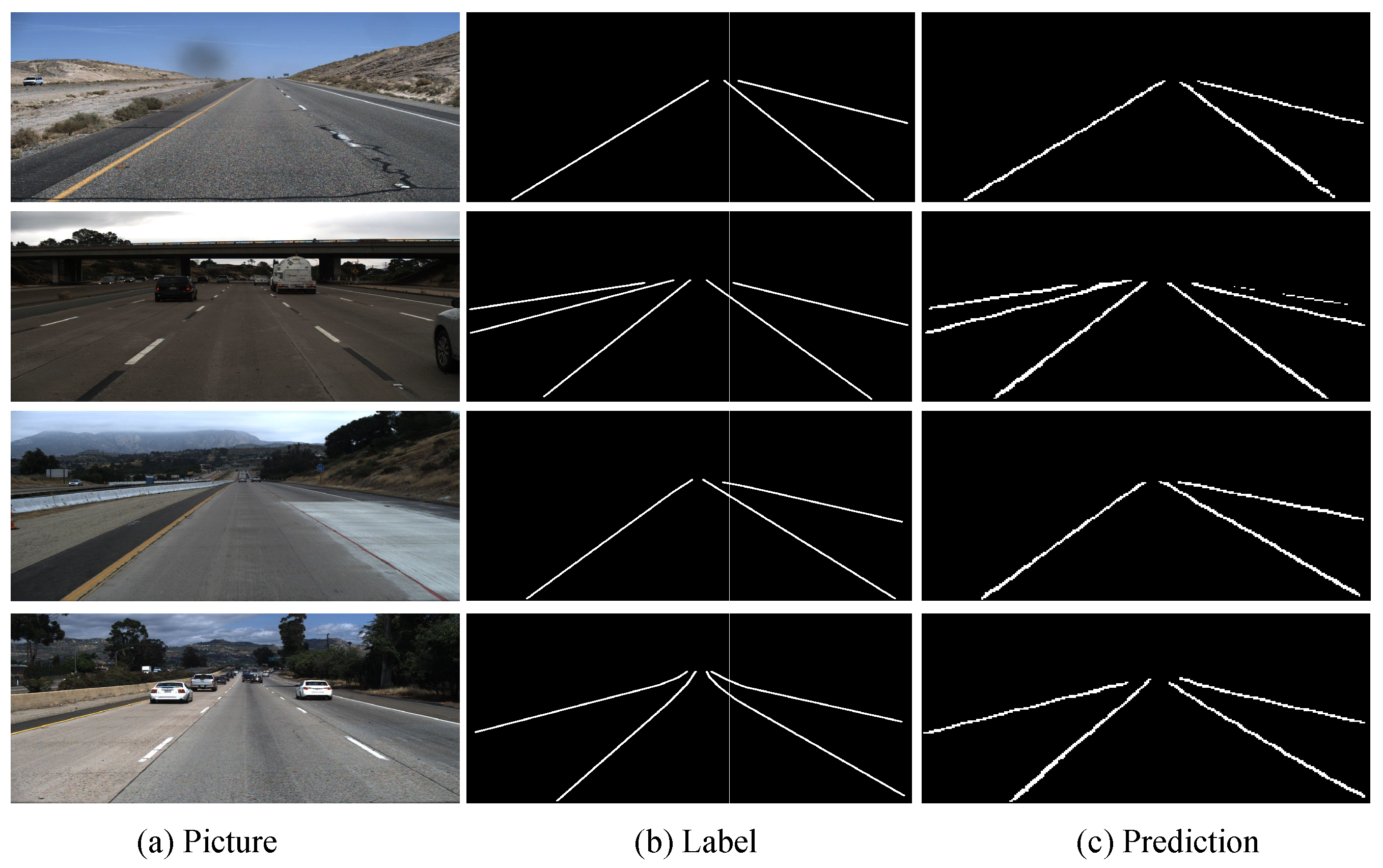

- TuSimple lane detection benchmark dataset: As shown in Figure 7, our model accurately detects the lane lines on the TuSimple dataset. In the first row of Figure 7, the lane marking is damaged, yet our model still detects it clearly. This result indicates that the proposed model uses context information effectively to detect lane lines robustly when they are unclear. In the third row of Figure 7, the proposed model is not disturbed by the white line on the wall and still detects the lanes in the scene stably, which also shows that our network is robust in complex driving scenarios. In the last row of Figure 7, our model accurately detects the lanes in a simple driving scenario.

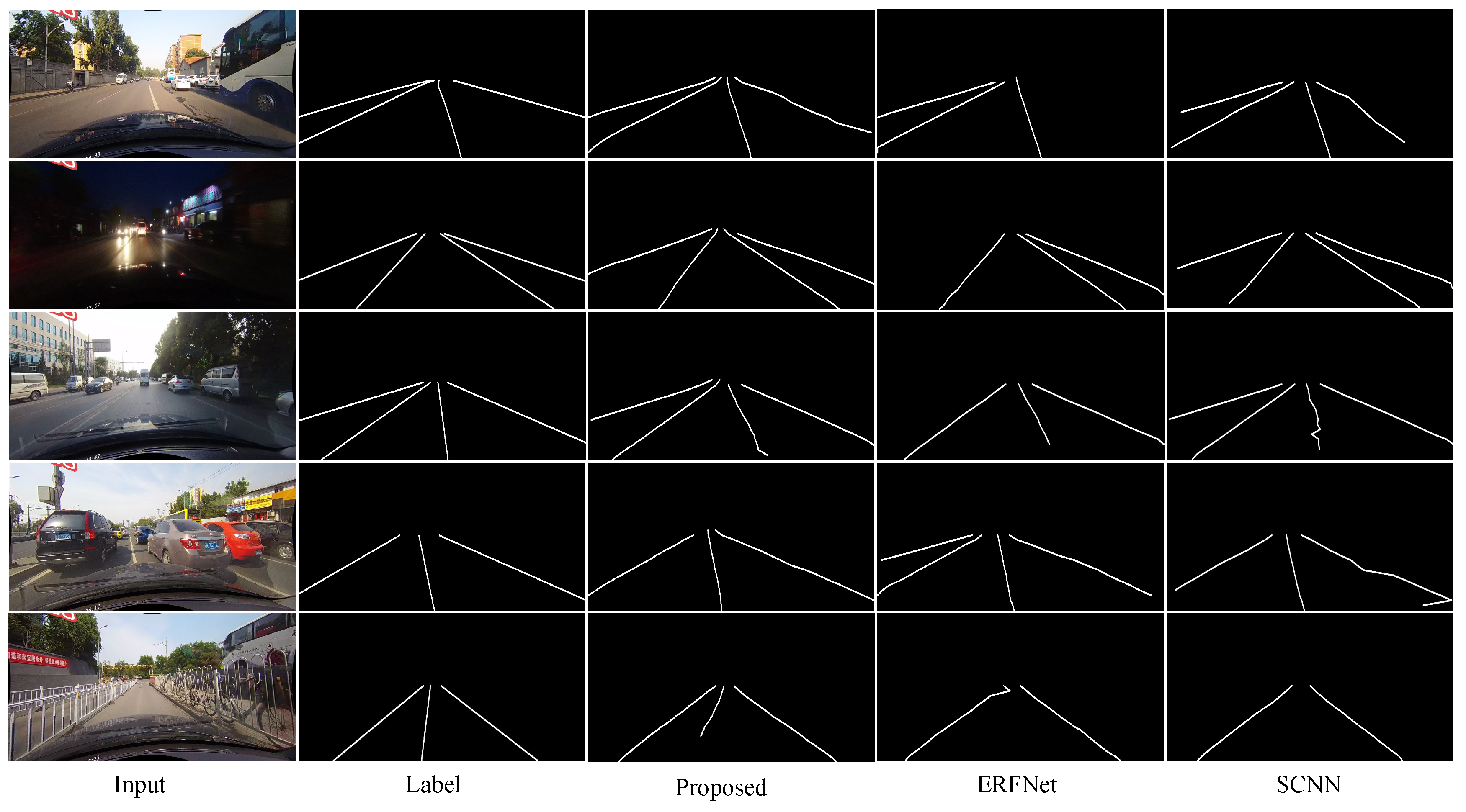

- CULane dataset: Figure 8 compares the lane detection results obtained by different models on the CULane dataset. Comparing the outputs of ERFNet and the basic SCNN, the lines detected by ERFNet are of better quality. However, because ERFNet lacks an understanding of the driving environment, it detects fewer or more lines than SCNN (the first four rows in Figure 8). Compared with both, our proposed network combines the advantages of the two. Our model also precisely detects some unclear lanes (the second and last rows in Figure 8) and occluded lanes (the first and fourth rows in Figure 8), whereas the other models fail on these cases to varying degrees. The use of vertical spatial features and contextual driving information makes our proposed model more robust in complex driving scenes with occlusions and unclear lane lines. Our method shows a good understanding of traffic driving scenes, and the quality of its detected lines is higher than that of the other methods (the last row of Figure 8).

4.4.2. Quantitative Evaluation

- TuSimple lane detection benchmark dataset: The evaluation scores in Table 7 show that our model performs lane detection effectively in normal scenarios. However, its FP value is the highest, which indicates that our model is the most likely to report a lane where the labeled map contains none. For example, in the second row of Figure 7, the rightmost lane does not appear in the labeled map, but our network detects it. The proportion of challenging driving scenes in the TuSimple dataset is small, but the proposed model remains robust on it. In particular, it performs better in complex driving scenarios with unclear or occluded lane lines.

- CULane dataset: In Table 8, we compare different models on the CULane dataset, reporting the F1-measure for each scenario. Our model is superior to the other models in most driving scenarios. In normal scenes, its F1-measure reaches 91.1, against 90.7 for the best competing model. The proposed model obtains the best lane detection results in the complex driving scenes of no line, shadow, arrow and dazzle light. Moreover, in the crowded and night scenes, our model is very close to the best model (69.8 against 70.0, and 66.2 against 66.3). Our proposed model achieves excellent performance in complex driving scenarios that include unclear and occluded lane lines, which shows that adding vertical spatial features and effective context information makes the proposed model more robust in detecting lane lines. The additional contextual information obtained by the feature merging block and the vertical spatial features extracted by the information exchange block are fully utilized and give the proposed model a strong ability to understand complex driving environments. Furthermore, the total F1 (0.5) score of our proposed model is the highest in Table 8. Recently, Liang et al. proposed LineNet [26], which achieves a total F1-measure of 73.1%, slightly higher than ours. However, they have not yet published per-scenario results or source code, so we cannot make more in-depth qualitative and quantitative comparisons with LineNet.
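
The F1-measure reported in Table 8 can be sketched as follows. The snippet assumes the usual CULane convention, in which a predicted lane counts as a true positive when its IoU with a ground-truth lane exceeds a threshold (0.3 or 0.5, matching the F1 (0.3) and F1 (0.5) scores reported in this paper). The function names, the greedy one-to-one matching, and the toy IoU values are illustrative assumptions and not the benchmark's official evaluation code.

```python
def count_matches(iou, n_gt, threshold):
    """Greedily match predictions (rows of `iou`) to ground-truth lanes (columns).

    Assumption of this sketch: greedy matching by descending IoU; an
    evaluation toolkit may use an optimal assignment instead.
    Returns (tp, fp, fn).
    """
    pairs = sorted(
        ((score, p, g) for p, row in enumerate(iou) for g, score in enumerate(row)),
        reverse=True,
    )
    used_pred, used_gt = set(), set()
    for score, p, g in pairs:
        if score <= threshold:
            break  # every remaining pair is below the threshold too
        if p in used_pred or g in used_gt:
            continue  # each lane may be matched only once
        used_pred.add(p)
        used_gt.add(g)
    tp = len(used_pred)
    return tp, len(iou) - tp, n_gt - tp


def f1_measure(tp, fp, fn):
    """F1 = 2 * precision * recall / (precision + recall)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy example: 3 predicted lanes, 2 ground-truth lanes (hypothetical IoU values).
toy_iou = [
    [0.62, 0.05],
    [0.10, 0.41],
    [0.02, 0.03],
]
for thr in (0.3, 0.5):
    tp, fp, fn = count_matches(toy_iou, n_gt=2, threshold=thr)
    print(f"threshold={thr}: TP={tp} FP={fp} FN={fn} F1={f1_measure(tp, fp, fn):.2f}")
```

In this toy case the stricter threshold of 0.5 rejects the second match, so the same predictions score lower under F1 (0.5) than under F1 (0.3), which is the pattern visible in the ablation tables.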

5. Discussion

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, D.; Zhao, D.; Zhang, Q.; Chen, Y. Reinforcement learning and deep learning based lateral control for autonomous driving [application notes]. IEEE Comp. Intell. Mag. 2019, 14, 83–98.

2. Deng, T.; Yan, H.; Qin, L.; Ngo, T.; Manjunath, B. How do drivers allocate their potential attention? Driving fixation prediction via convolutional neural networks. IEEE Trans. Intell. Transp. Syst. 2020, 21, 2146–2154.

3. Bertozzi, M.; Broggi, A. GOLD: A parallel real-time stereo vision system for generic obstacle and lane detection. IEEE Trans. Image Process. 2002, 7, 62–81.

4. Hillel, A.B.; Lerner, R.; Levi, D.; Raz, G. Recent progress in road and lane detection: A survey. Mach. Vis. Appl. 2014, 25, 727–745.

5. Kuang, P.; Zhu, Q.; Chen, X. A Road Lane Recognition Algorithm Based on Color Features in AGV Vision Systems. In Proceedings of the International Conference on Communications, Circuits and Systems, Guilin, China, 25–28 June 2006; Volume 1, pp. 475–479.

6. Wang, J.; Zhang, Y.; Chen, X.; Shi, X. A quick scan and lane recognition algorithm based on positional distribution and edge features. In Proceedings of the International Conference on Image Processing and Pattern Recognition in Industrial Engineering, Xi’an, China, 12–15 July 2010; Volume 7820.

7. Tan, H.; Zhou, Y.; Zhu, Y.; Yao, D.; Li, K. A novel curve lane detection based on Improved River Flow and RANSA. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 133–138.

8. Niu, J.; Lu, J.; Xu, M.; Lv, P.; Zhao, X. Robust Lane Detection Using Two-stage Feature Extraction with Curve Fitting. Pattern Recognit. 2016, 59, 225–233.

9. Chen, P.; Lo, S.; Hang, H.; Chan, S.; Lin, J. Efficient Road Lane Marking Detection with Deep Learning. In Proceedings of the IEEE International Conference on Digital Signal Processing, Shanghai, China, 19–21 November 2018; pp. 1–5.

10. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

11. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 26 July 2017; pp. 6230–6239.

12. Wang, M.; Liu, B.; Foroosh, H. Factorized convolutional neural networks. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 29 October 2017; pp. 545–553.

13. Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial cnn for traffic scene understanding. arXiv 2017, arXiv:1712.06080.

14. Liu, W.; Yan, F.; Tang, K.; Zhang, J.; Deng, T. Lane detection in complex scenes based on end-to-end neural network. arXiv 2020, arXiv:2010.13422.

15. Beyeler, M.; Mirus, F.; Verl, A. Vision-based robust road lane detection in urban environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 4920–4925.

16. Kamble, A.; Potadar, S. Lane Departure Warning System for Advanced Drivers Assistance. In Proceedings of the Second International Conference on Intelligent Computing and Control Systems, Madurai, India, 14–15 June 2018; pp. 1775–1778.

17. Wennan, Z.; Qiang, C.; Hong, W. Lane Detection in Some Complex Conditions. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 117–122.

18. Yim, Y.U.; Oh, S.Y. Three-feature based automatic lane detection algorithm (TFALDA) for autonomous driving. IEEE Trans. Intell. Transp. Syst. 2003, 4, 219–225.

19. Wang, Y.; Teoh, E.K.; Shen, D. Lane detection and tracking using B-Snake. Image Vis. Comput. 2004, 22, 269–280.

20. Xing, Y.; Lv, C.; Chen, L.; Wang, H.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Advances in vision-based lane detection: Algorithms, integration, assessment, and perspectives on ACP-based parallel vision. IEEE/CAA J. Autom. Sin. 2018, 5, 645–661.

21. Hou, Y.; Ma, Z.; Liu, C.; Loy, C.C. Learning lightweight lane detection cnns by self attention distillation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 22 April 2019; pp. 1013–1021.

22. Ghafoorian, M.; Nugteren, C.; Baka, N.; Booij, O.; Hofmann, M. El-gan: Embedding loss driven generative adversarial networks for lane detection. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

23. Wang, Z.; Ren, W.; Qiu, Q. Lanenet: Real-time lane detection networks for autonomous driving. arXiv 2018, arXiv:1807.01726.

24. Neven, D.; De Brabandere, B.; Georgoulis, S.; Proesmans, M.; Van Gool, L. Towards End-To-End Lane Detection: An Instance Segmentation Approach. In Proceedings of the IEEE Intelligent Vehicles Symposium, Changshu, China, 26–30 June 2018; pp. 286–291.

25. Ko, Y.; Jun, J.; Ko, D.; Jeon, M. Key Points Estimation and Point Instance Segmentation Approach for Lane Detection. arXiv 2020, arXiv:2002.06604.

26. Liang, D.; Guo, Y.C.; Zhang, S.K.; Mu, T.J.; Huang, X. Lane Detection: A Survey with New Results. J. Comput. Sci. Technol. 2020, 35, 493–505.

27. Li, J.; Mei, X.; Prokhorov, D.; Tao, D. Deep Neural Network for Structural Prediction and Lane Detection in Traffic Scene. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 690–703.

28. Yang, W.; Zhang, X.; Lei, Q.; Shen, D.; Huang, Y. Lane Position Detection Based on Long Short-Term Memory (LSTM). Sensors 2020, 20, 3115.

29. Zou, Q.; Jiang, H.; Dai, Q.; Yue, Y.; Chen, L.; Wang, Q. Robust Lane Detection From Continuous Driving Scenes Using Deep Neural Networks. IEEE Trans. Veh. Technol. 2020, 69, 41–54.

30. Zhang, J.; Deng, T.; Yan, F.; Liu, W. Lane Detection Model Based on Spatio-Temporal Network with Double ConvGRUs. arXiv 2020, arXiv:2008.03922.

31. Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. Erfnet: Efficient residual factorized convnet for real-time semantic segmentation. IEEE Trans. Intell. Transp. Syst. 2017, 19, 263–272.

32. Luo, W.; Li, Y.; Urtasun, R.; Zemel, R. Understanding the effective receptive field in deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4898–4906.

33. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

34. Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. Enet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

36. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

37. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

| Layer | Block Name | Channel |
|---|---|---|
| 1 | Downsampling block | 16 |
| 2 | Downsampling block | 64 |
| 3 | Down-convolution block | 64 |
| 4 | Merge block | 128 → 64 |
| 5 | Downsampling block | 64 → 128 |
| 6 | Vertical spatial convolution | 64 → 128 |
| 7 | Dilated convolution block | 128 |
| 8 | Merge block | 256 → 128 |

| Layer | Block Name | Type | Channel |
|---|---|---|---|
| 9 | Upsampling | Transposed convolution | 128 → 64 |
| 10 | Up-convolution block | Non-bottleneck-1D block | 64 |
| 11 | Upsampling | Transposed convolution | 64 → 16 |
| 12 | Up-convolution block | Non-bottleneck-1D block | 16 |
| 13 | Upsampling | Transposed convolution | 16 → 3 |

| Scenario | Normal | Crowded | Night | No Line | Shadow | Arrow | Dazzle Light | Curve | Crossroad |
|---|---|---|---|---|---|---|---|---|---|
| Proportion | 27.7% | 23.4% | 20.3% | 11.7% | 2.7% | 2.6% | 1.4% | 1.2% | 9.0% |

| Network Model | ERFNet | SCNN | ERFNet + SCNN | Proposed |
|---|---|---|---|---|
| F1 (0.3) | 80.4 | 80.9 | 79.8 | 80.6 |
| F1 (0.5) | 71.8 | 71.6 | 71.0 | 71.9 |

| Dilation Rates | [2, 4, 8, 16] | [1, 1, 1, 1] | [1, 2, 1, 4] |
|---|---|---|---|
| F1 (0.3) | 80.5 | 80.4 | 80.6 |
| F1 (0.5) | 71.4 | 71.5 | 71.9 |
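
To give a rough sense of how the three dilation settings above differ, the following sketch computes the one-dimensional receptive field of a stack of stride-1 convolutions using the standard formula RF = 1 + Σ (k − 1)·d. It is a simplification: it assumes one dilated 3-tap convolution per block and ignores the undilated convolutions and downsampling in the actual encoder, so only the relative sizes are meaningful. The function name `receptive_field` is hypothetical.

```python
def receptive_field(dilations, kernel_size=3):
    """1-D receptive field of stacked stride-1 convolutions.

    Each layer with dilation d widens the field by (kernel_size - 1) * d.
    Assumption of this sketch: one dilated convolution per entry in `dilations`.
    """
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


for rates in ([2, 4, 8, 16], [1, 1, 1, 1], [1, 2, 1, 4]):
    print(rates, "->", receptive_field(rates))
# [2, 4, 8, 16] -> 61
# [1, 1, 1, 1] -> 9
# [1, 2, 1, 4] -> 17
```

Under these assumptions the [1, 2, 1, 4] setting sits between the two extremes, which is consistent with it being the best-scoring configuration in the table, although the table alone does not establish why.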

| Form | Spatial Convolution | Horizontal Spatial Convolution | Vertical Spatial Convolution |
|---|---|---|---|
| F1 (0.3) | 78.9 | 79.8 | 80.6 |
| F1 (0.5) | 70.5 | 68.7 | 71.9 |
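
As an illustration of what a vertical spatial pass looks like, here is a minimal single-channel sketch in the style of the SCNN message passing of Pan et al. [13]: each row receives a convolved, rectified message from the row above (downward pass) or below (upward pass). This is an assumption about the general technique, not the exact block used in this paper; the helper names, the single channel, and the kernel values are all hypothetical.

```python
def conv1d_same(row, kernel):
    """Zero-padded 1-D cross-correlation that keeps the row length (odd kernel)."""
    half = len(kernel) // 2
    out = []
    for x in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = x + k - half
            if 0 <= j < len(row):
                acc += w * row[j]
        out.append(acc)
    return out


def vertical_pass(feature, kernel, downward=True):
    """Propagate information row by row along the vertical axis.

    feature: list of rows (H x W), single channel for simplicity.
    Each row is updated in place-order, so a message can travel
    across the whole height of the feature map in one pass.
    """
    rows = [list(r) for r in feature]
    if downward:
        order, src = range(1, len(rows)), -1           # row i reads row i - 1
    else:
        order, src = range(len(rows) - 2, -1, -1), 1   # row i reads row i + 1
    for i in order:
        message = conv1d_same(rows[i + src], kernel)
        rows[i] = [v + max(m, 0.0) for v, m in zip(rows[i], message)]  # ReLU on message
    return rows


# A single activation in the top row spreads down through the map.
feature = [[0.0, 1.0, 0.0],
           [0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0]]
for row in vertical_pass(feature, kernel=[0.5, 1.0, 0.5]):
    print(row)
```

Because the update is sequential, evidence from a clearly visible part of a lane in one row can reach rows where the marking is occluded or faded, which is the intuition behind restricting the pass to the vertical direction for roughly vertical lane lines.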

| Module | FP | FN | Accuracy |
|---|---|---|---|
| ResNet-18 | 0.0948 | 0.0822 | 92.69% |
| ResNet-34 | 0.0918 | 0.0796 | 92.84% |
| ENet | 0.0886 | 0.0734 | 93.02% |
| Proposed | 0.1875 | 0.0467 | 96.2% |
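
The FP, FN and Accuracy columns above can be read with the help of the following sketch. It assumes the commonly stated TuSimple definitions: accuracy is the share of ground-truth lane points that the prediction hits within a pixel threshold, FP is the fraction of predicted lanes that match no ground-truth lane, and FN is the fraction of ground-truth lanes that no prediction matches. The function names, the use of `None` for absent points, and the 20-pixel default are assumptions of this sketch rather than the benchmark's official script.

```python
def lane_accuracy(pred_xs, gt_xs, pixel_thresh=20.0):
    """Share of annotated ground-truth points matched by the prediction.

    pred_xs and gt_xs hold one x-coordinate per sampled image row;
    None marks a row where the lane is absent (an assumption of this sketch).
    """
    annotated = [(p, g) for p, g in zip(pred_xs, gt_xs) if g is not None]
    if not annotated:
        return 0.0
    correct = sum(
        1 for p, g in annotated if p is not None and abs(p - g) < pixel_thresh
    )
    return correct / len(annotated)


def fp_fn_rates(n_pred, n_wrong_pred, n_gt, n_missed_gt):
    """FP = unmatched predicted lanes / predicted lanes; FN = missed GT lanes / GT lanes."""
    fp = n_wrong_pred / n_pred if n_pred else 0.0
    fn = n_missed_gt / n_gt if n_gt else 0.0
    return fp, fn


# Hypothetical lane: three annotated points, two of them hit within 20 px.
gt = [100, 200, 300, None]
pred = [105, 230, 295, 400]
print(round(lane_accuracy(pred, gt), 3))  # 0.667

# Hypothetical image: 5 lanes predicted, 4 labeled, one prediction has no label.
print(fp_fn_rates(n_pred=5, n_wrong_pred=1, n_gt=4, n_missed_gt=0))  # (0.2, 0.0)
```

The second example mirrors the situation described for Figure 7: a real but unlabeled lane that the model detects raises FP without affecting FN or point accuracy, which is one way a model can combine the highest FP with the highest accuracy.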

| Category | SCNN | R-101-SAD | R-34-SAD | ENet-SAD | Proposed |
|---|---|---|---|---|---|
| Normal | 90.6 | 90.7 | 89.9 | 90.1 | 91.1 |
| Crowded | 69.7 | 70.0 | 68.5 | 68.8 | 69.8 |
| Night | 66.1 | 66.3 | 64.6 | 66.0 | 66.2 |
| No line | 43.4 | 43.5 | 42.2 | 41.6 | 44.6 |
| Shadow | 66.9 | 67.0 | 67.7 | 65.9 | 68.1 |
| Arrow | 84.1 | 84.4 | 83.8 | 84.0 | 86.4 |
| Dazzle light | 58.5 | 59.9 | 59.9 | 60.2 | 61.5 |
| Curve | 64.4 | 65.7 | 66.0 | 65.7 | 63.9 |
| Crossroad (FP count) | 1990 | 2052 | 1960 | 1998 | 2678 |
| Total | 71.6 | 71.8 | 70.7 | 70.8 | 71.9 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, W.; Yan, F.; Zhang, J.; Deng, T. A Robust Lane Detection Model Using Vertical Spatial Features and Contextual Driving Information. Sensors 2021, 21, 708. https://doi.org/10.3390/s21030708
