Learning Region-Based Attention Network for Traffic Sign Recognition

Abstract

1. Introduction

- (1)

- (2)

- Based on the attention mechanism, we designed two types of attention modules and a new traffic sign classification network, PFANet, which copes with the complex factors of ice and snow environments. PFANet achieves a state-of-the-art accuracy of 93.570% on the ITSRB, and we verified its robustness on a public dataset.

2. Related Works

2.1. Classification of Traffic Signs

2.2. Attention Mechanism and Applications in TSR

3. Materials and Methods

3.1. Benchmark

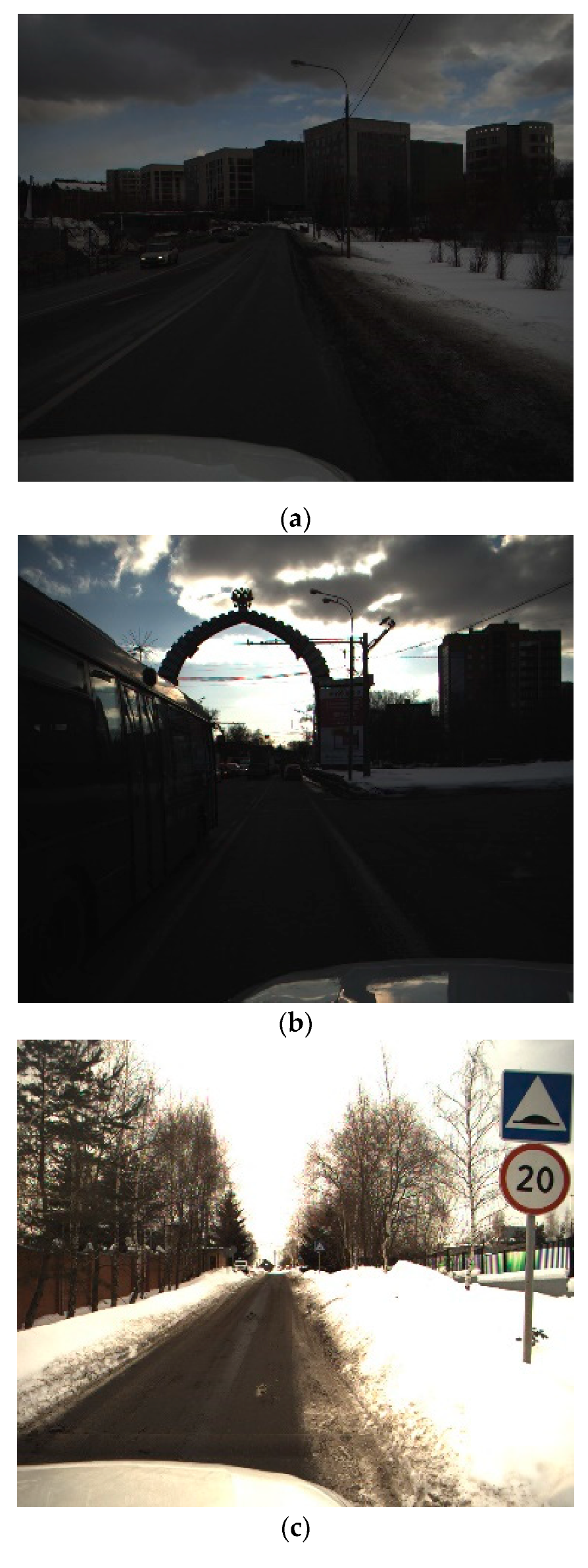

3.1.1. Data Collection

3.1.2. ITSDB

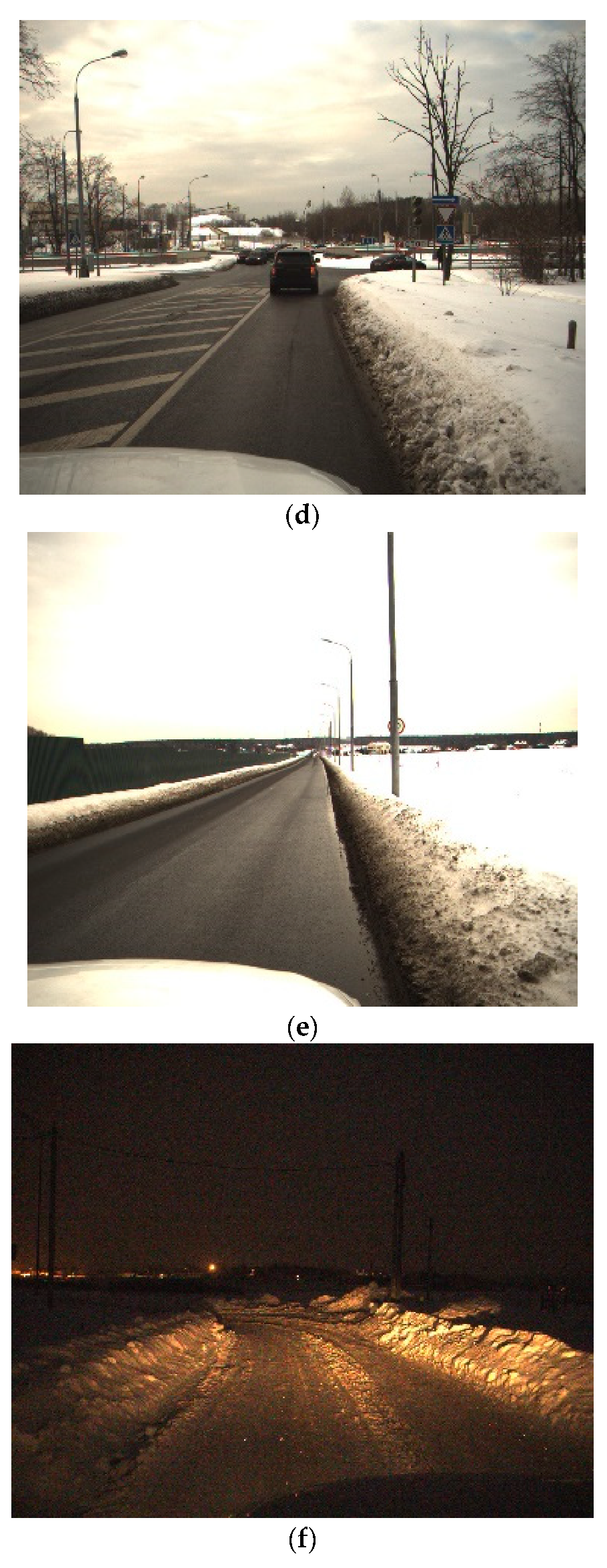

3.1.3. ITSRB

3.2. Method

3.2.1. Attention Module

3.2.2. Parallel Fusion Attention Network

4. Results

4.1. Evaluation Metrics

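- IoU is the ratio of the intersection to the union of the predicted bounding box and the true bounding box. With $B_p$ the predicted box and $B_{gt}$ the true box, the mathematical form is:

  $$\mathrm{IoU} = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$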

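- Precision is defined as the ratio of correctly detected items to all detected items. Let True denote a correct classification and Positive denote a detection, so that TP and FP count the true positives and false positives. The mathematical form is:

  $$\mathrm{Precision} = \frac{TP}{TP + FP}$$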

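- Recall is defined as the ratio of correctly detected items to all items that should be detected. With TP the number of true positives and FN the number of items that should have been detected but were missed, the mathematical form is:

  $$\mathrm{Recall} = \frac{TP}{TP + FN}$$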

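- Average Precision (AP) is defined as the precision averaged over recall values from 0 to 1.00 in steps of 0.01, averaged again over ten IoU (Intersection over Union) thresholds from 0.5 to 0.95 in steps of 0.05. With $p_t(r)$ the precision at recall $r$ and IoU threshold $t$, the mathematical form is:

  $$\mathrm{AP} = \frac{1}{10} \sum_{t \in \{0.5, 0.55, \ldots, 0.95\}} \frac{1}{101} \sum_{r \in \{0, 0.01, \ldots, 1\}} p_t(r)$$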

- APS, APM, and APL are defined in the same way as AP, but each accounts only for objects of one fixed size category, Small (S), Medium (M), or Large (L), instead of all detected objects. The mathematical form is:

  $$\mathrm{AP}_X = \mathrm{AP} \text{ evaluated only on objects of size category } X, \quad X \in \{S, M, L\}$$

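- AP50 and AP75 are defined in the same way as AP, but each uses a single IoU threshold, 0.5 or 0.75, instead of averaging over the thresholds from 0.5 to 0.95. With $p_t(r)$ the precision at recall $r$ and IoU threshold $t$, the mathematical form is:

  $$\mathrm{AP}_{50} = \frac{1}{101} \sum_{r \in \{0, 0.01, \ldots, 1\}} p_{0.5}(r), \qquad \mathrm{AP}_{75} = \frac{1}{101} \sum_{r \in \{0, 0.01, \ldots, 1\}} p_{0.75}(r)$$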

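- Accuracy is defined as the percentage of correctly predicted samples in the whole sample set. With $N_{correct}$ the number of correctly predicted samples and $N_{total}$ the size of the sample set, the mathematical form is:

  $$\mathrm{Accuracy} = \frac{N_{correct}}{N_{total}} \times 100\%$$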
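The metric definitions above can be sketched in a few lines of Python. This is a minimal illustration, not the evaluation code used for the experiments: the function names, the `(x1, y1, x2, y2)` box layout, and the shape of the input to `average_precision` are assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2) (assumed layout)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision(tp, fp):
    """Correct detections divided by all detections."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Correct detections divided by all items that should be detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def accuracy(predicted, actual):
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def average_precision(precision_by_threshold):
    """Mean over IoU thresholds of the mean precision over sampled recall values.

    precision_by_threshold holds one list per IoU threshold; each list contains
    the precision sampled at recall 0, 0.01, ..., 1.00 (hypothetical input format).
    """
    per_threshold = [sum(p) / len(p) for p in precision_by_threshold]
    return sum(per_threshold) / len(per_threshold)
```

Passing only the precision list for the 0.5 or 0.75 threshold to `average_precision` gives the AP50 or AP75 variant, and filtering detections by size category before counting gives APS, APM, and APL.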

4.2. Detection

4.2.1. Experiment Description

4.2.2. Performance Comparison

4.3. Classification

4.3.1. Experiment Description

4.3.2. Performance Comparison

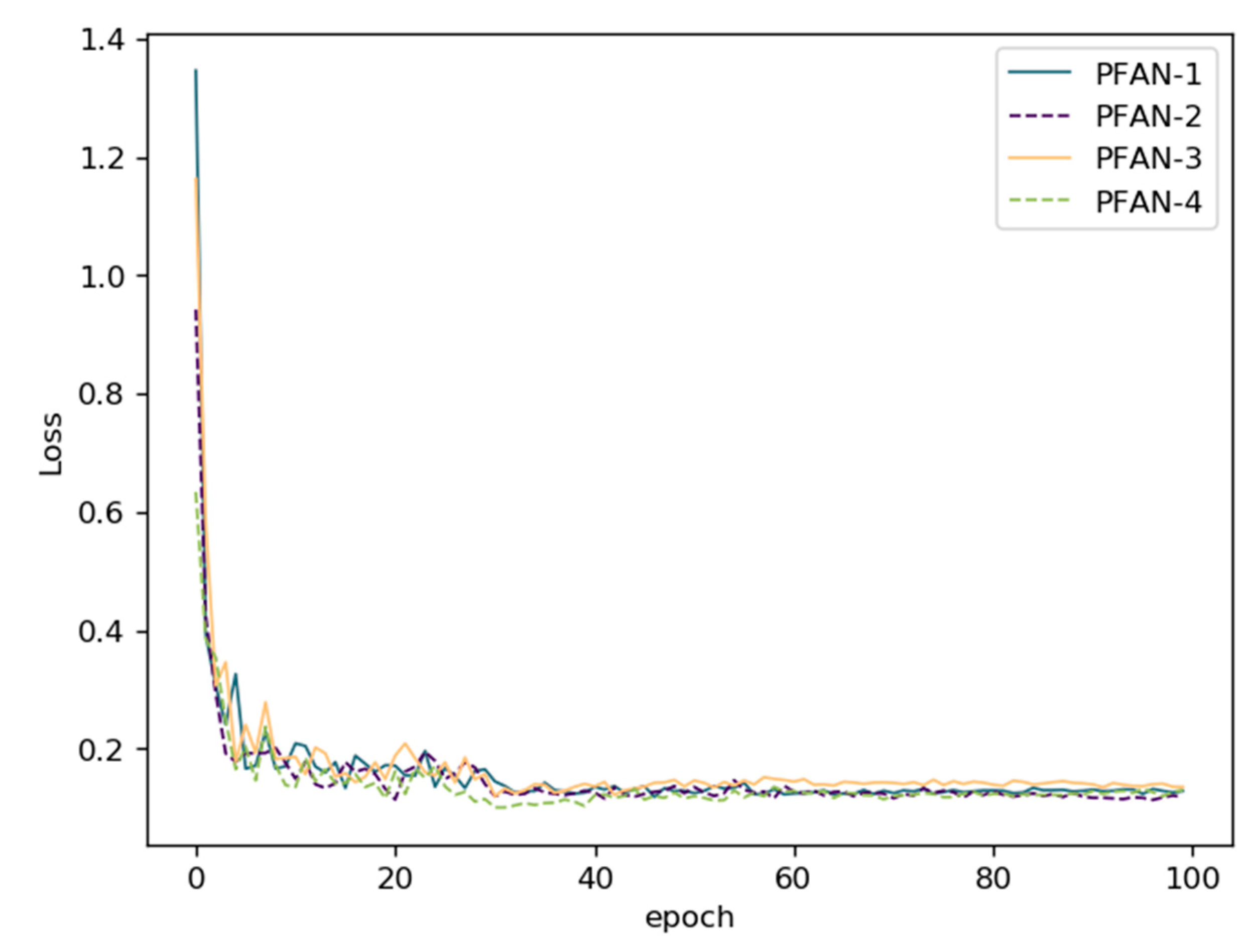

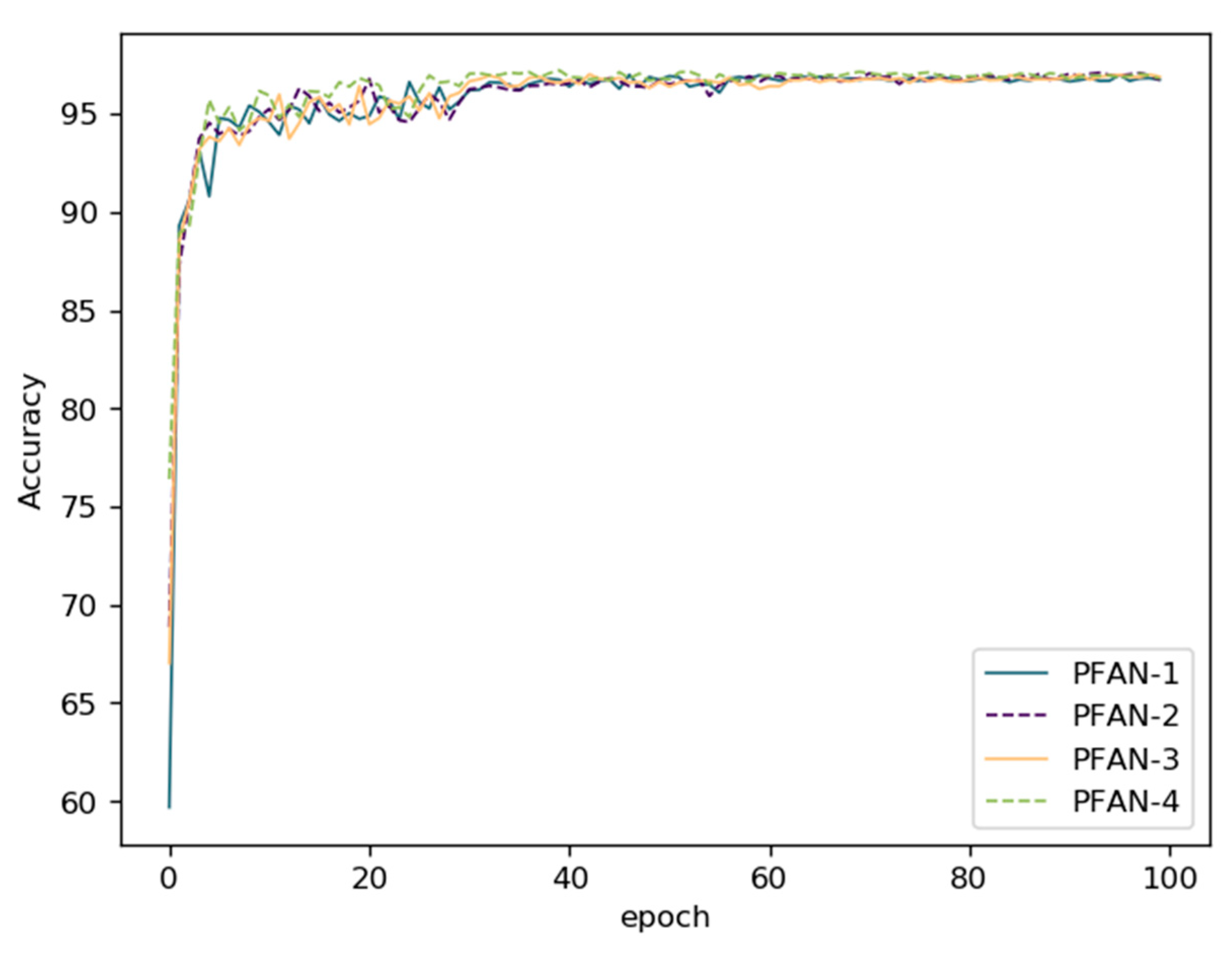

4.4. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. The German traffic sign recognition benchmark: A multi-class classification competition. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011.

2. Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. Man vs. computer: Benchmarking machine learning algorithms for traffic sign recognition. Neural Netw. 2012, 32, 323–332.

3. Mathias, M.; Timofte, R.; Benenson, R.; Van Gool, L. Traffic Sign Recognition—How far are we from the solution? In Proceedings of the International Joint Conference on Neural Networks (IJCNN 2013), Dallas, TX, USA, 4–9 August 2013.

4. Larsson, F.; Felsberg, M. Using Fourier Descriptors and Spatial Models for Traffic Sign Recognition. In Proceedings of the 17th Scandinavian Conference on Image Analysis, Ystad, Sweden, 23–25 May 2011; pp. 238–249.

5. Zhu, Z.; Liang, D.; Zhang, S.; Huang, X.; Li, B.; Hu, S. Traffic-sign detection and classification in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

6. Han, Y.; Virupakshappa, K.; Oruklu, E. Robust traffic sign recognition with feature extraction and k-NN classification methods. In Proceedings of the 2015 IEEE International Conference on Electro/Information Technology (EIT), Dekalb, IL, USA, 21–23 May 2015; pp. 484–488.

7. Zaklouta, F.; Stanciulescu, B.; Hamdoun, O. Traffic sign classification using K-d trees and Random Forests. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 2151–2155.

8. Maldonado-Bascon, S.; Lafuente-Arroyo, S.; Gil-Jimenez, P.; Gomez-Moreno, H.; Lopez-Ferreras, F. Road-Sign Detection and Recognition Based on Support Vector Machines. IEEE Trans. Intell. Transp. Syst. 2007, 8, 264–278.

9. Fleyeh, H.; Dougherty, M. Traffic sign classification using invariant features and Support Vector Machines. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 530–535.

10. Ciresan, D.; Meier, U.; Masci, J.; Schmidhuber, J. A committee of neural networks for traffic sign classification. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 1918–1921.

11. Sermanet, P.; LeCun, Y. Traffic sign recognition with multi-scale Convolutional Networks. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 2809–2813.

12. Ciresan, D.; Meier, U.; Schmidhuber, J. Multi-column deep neural networks for image classification. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012.

13. Wong, A.; Shafiee, M.J.; Jules, M.S. MicronNet: A Highly Compact Deep Convolutional Neural Network Architecture for Real-Time Embedded Traffic Sign Classification. IEEE Access 2018, 6, 59803–59810.

14. Li, J.; Wang, Z. Real-Time Traffic Sign Recognition Based on Efficient CNNs in the Wild. IEEE Trans. Intell. Transp. Syst. 2019, 20, 975–984.

15. Pavlov, A.L.; Karpyshev, P.A.; Ovchinnikov, G.V.; Oseledets, I.V.; Tsetserukou, D. IceVisionSet: Lossless video dataset collected on Russian winter roads with traffic sign annotations. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

16. Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-resolution representations for labeling pixels and regions. arXiv 2019, arXiv:1904.04514.

17. Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra R-CNN: Towards balanced learning for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019.

18. Huval, B.; Wang, T.; Tandon, S.; Kiske, J.; Song, W.; Pazhayampallil, J.; Andriluka, M.; Rajpurkar, P.; Migimatsu, T.; Cheng-Yue, R.; et al. An empirical evaluation of deep learning on highway driving. arXiv 2015, arXiv:1504.01716.

19. Hou, Y.-L.; Hao, X.; Chen, H. A cognitively motivated method for classification of occluded traffic signs. IEEE Trans. Syst. Man Cybern. Syst. 2016, 47, 255–262.

20. Khan, J.A.; Yeo, D.; Shin, H. New dark area sensitive tone mapping for deep learning based traffic sign recognition. Sensors 2018, 18, 3776.

21. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

22. Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.S.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015.

23. Luong, M.-T.; Pham, H.; Manning, C.D. Effective approaches to attention-based neural machine translation. arXiv 2015, arXiv:1508.04025.

24. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

25. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

26. Arcos-García, A.; Álvarez-García, J.A.; Soria-Morillo, L.M. Deep neural network for traffic sign recognition systems: An analysis of spatial transformers and stochastic optimisation methods. Neural Netw. 2018, 99, 158–165.

27. Uittenbogaard, R.; Sebastian, C.; Vijverberg, J.; Boom, B.; De With, P.H. Conditional Transfer with Dense Residual Attention: Synthesizing traffic signs from street-view imagery. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018.

28. Zhang, J.; Hui, L.; Lu, J.; Zhu, Y. Attention-based Neural Network for Traffic Sign Detection. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018.

29. Chung, J.H.; Kim, D.W.; Kang, T.K.; Lim, M.T. Traffic Sign Recognition in Harsh Environment Using Attention Based Convolutional Pooling Neural Network. Neural Process. Lett. 2020, 51, 2551–2573.

30. Qiu, Z.; Qiu, K.; Fu, J.; Fu, D. Learning Recurrent Structure-Guided Attention Network for Multi-person Pose Estimation. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

32. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

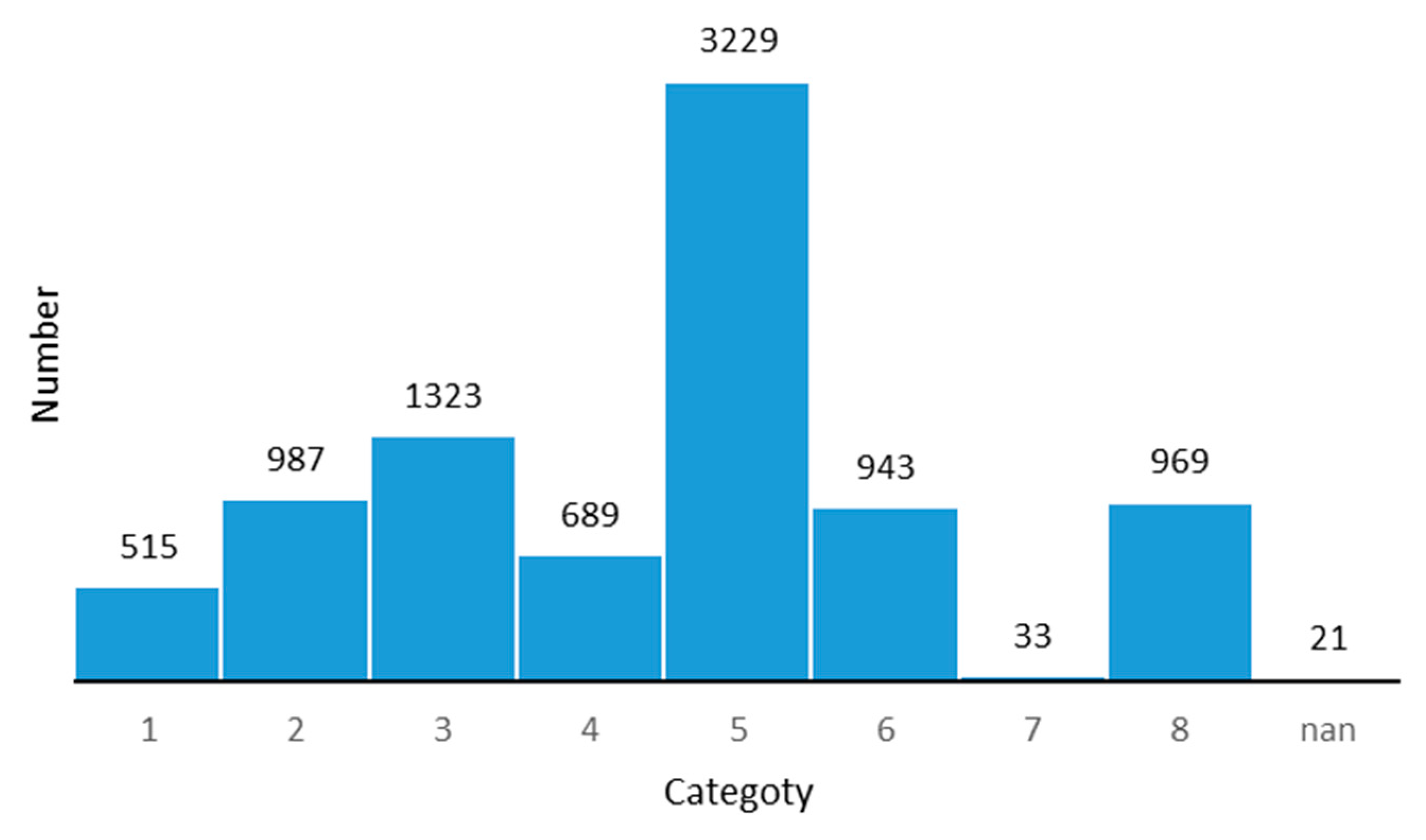

In the diagram, separate symbols denote scaled matrix multiplication and the element-wise sum. Wk, Wv, and Wq are 1 × 1 Convs with zero padding and a stride of 1. Tem denotes the output of the scaled dot operation. (b) PFAN-B block: a further symbol denotes the down-sample operation, implemented by 2 × 2 MaxPooling.
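The operations named in this caption (the Wq, Wk, and Wv projections, a scaled matrix multiplication producing Tem, and an element-wise sum) can be illustrated with a generic self-attention sketch. This is not the authors' PFAN-A or PFAN-B block. It assumes that the feature map is flattened to N positions with C channels, that each 1 × 1 Conv with stride 1 acts as a per-position matrix multiplication, that the scale factor is the square root of the key dimension, and that no softmax follows (the caption does not mention one). All function names are hypothetical.

```python
import math


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def attention_block(x, w_q, w_k, w_v):
    """Generic scaled dot-product attention with a residual sum.

    x is an N x C matrix: N spatial positions (a flattened H*W feature map),
    C channels. w_q and w_k are C x D matrices and w_v is C x C; each stands
    in for a 1 x 1 Conv with stride 1, which mixes channels at every position.
    """
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    v = matmul(x, w_v)
    # Scaled matrix multiplication; dividing by sqrt(D) is an assumed scaling.
    scale = math.sqrt(len(w_k[0]))
    tem = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    attended = matmul(tem, v)
    # Element-wise sum of the attended features with the input.
    return [[a + b for a, b in zip(row_a, row_x)] for row_a, row_x in zip(attended, x)]
```

With identity weights and a 2 × 2 identity input, each position attends only to itself, so the output is the input plus the input scaled by 1/√2.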

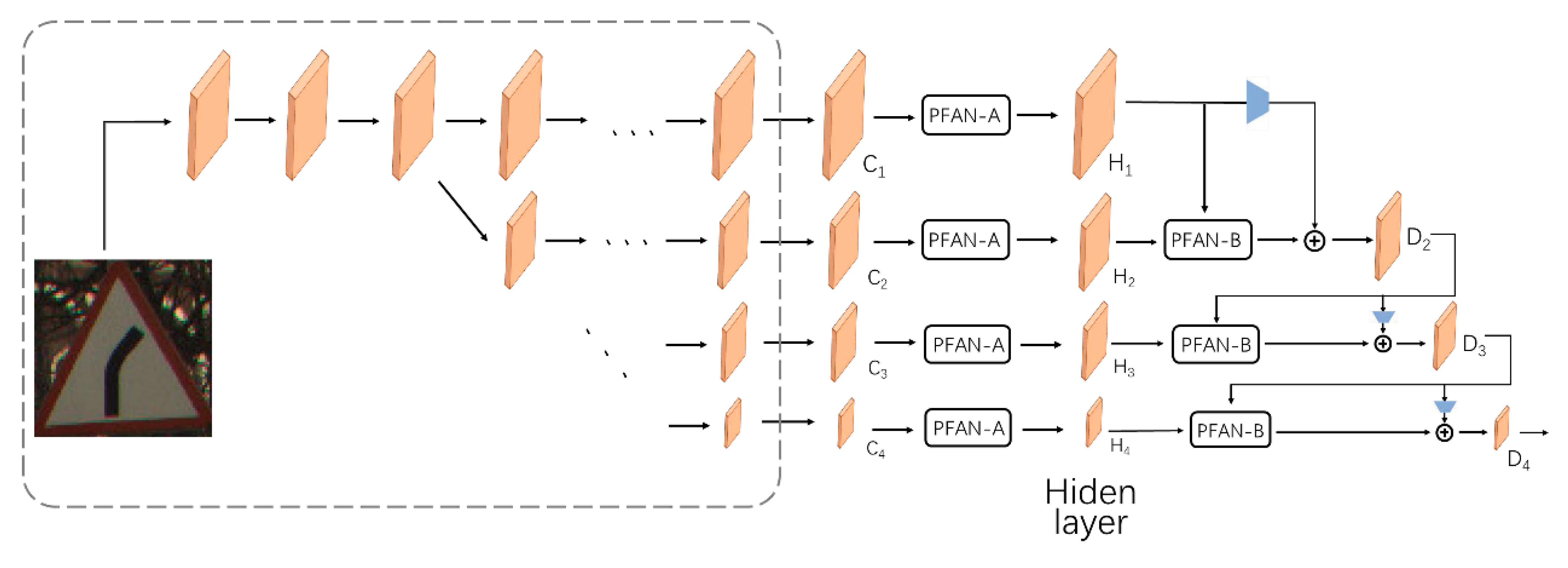

In the diagram, separate symbols denote down-sampling, implemented by 2 × 2 MaxPooling, and the element-wise sum. In the dashed rectangle, the horizontal arrow represents the bottleneck or the basic block operation, and the diagonally downward arrow indicates down-sampling implemented by 2 × 2 MaxPooling. Arrows outside the rectangle represent only the data flow direction.
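The two fusion operations in this caption, down-sampling by 2 × 2 MaxPooling and the element-wise sum, can be sketched on single-channel feature maps as follows. This is an illustration only, not the PFANet implementation. A stride of 2 and even height and width are assumptions, and `fuse` is a hypothetical helper that merges a higher-resolution branch into a lower-resolution one.

```python
def max_pool_2x2(fmap):
    """2 x 2 max pooling with stride 2 (assumed) on an H x W grid, H and W even."""
    h, w = len(fmap), len(fmap[0])
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, w, 2)
        ]
        for i in range(0, h, 2)
    ]


def elementwise_sum(a, b):
    """Add two feature maps of identical shape position by position."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def fuse(high_res, low_res):
    """Down-sample the high-resolution map, then add it to the low-resolution map."""
    return elementwise_sum(max_pool_2x2(high_res), low_res)
```

For example, a 4 × 4 map is pooled to 2 × 2 before it is summed with a 2 × 2 map from the parallel branch, so both operands share the same spatial size.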

| Size Category | Size | ITSDB (Ours) | GTSDB [2] |
|---|---|---|---|
| Small | | 28,487 (65.8%) | 8778 (16.9%) |
| Medium | | 11,916 (27.5%) | 40,091 (77.3%) |
| Large | | 2887 (6.7%) | 2970 (5.7%) |

| Method | Backbone | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|
| Faster-RCNN [32] | Resnet-50 | 46.5 | 66.6 | 53.8 | 33.1 | 71.5 | 89.3 |
| HRNetv2p-w18 [16] | - | 29.8 | 40.3 | 33.6 | 11.5 | 65.0 | 87.7 |
| Libra-RCNN [17] | Resnet-50 | 47.0 | 64.4 | 54.8 | 34.0 | 70.7 | 90.4 |

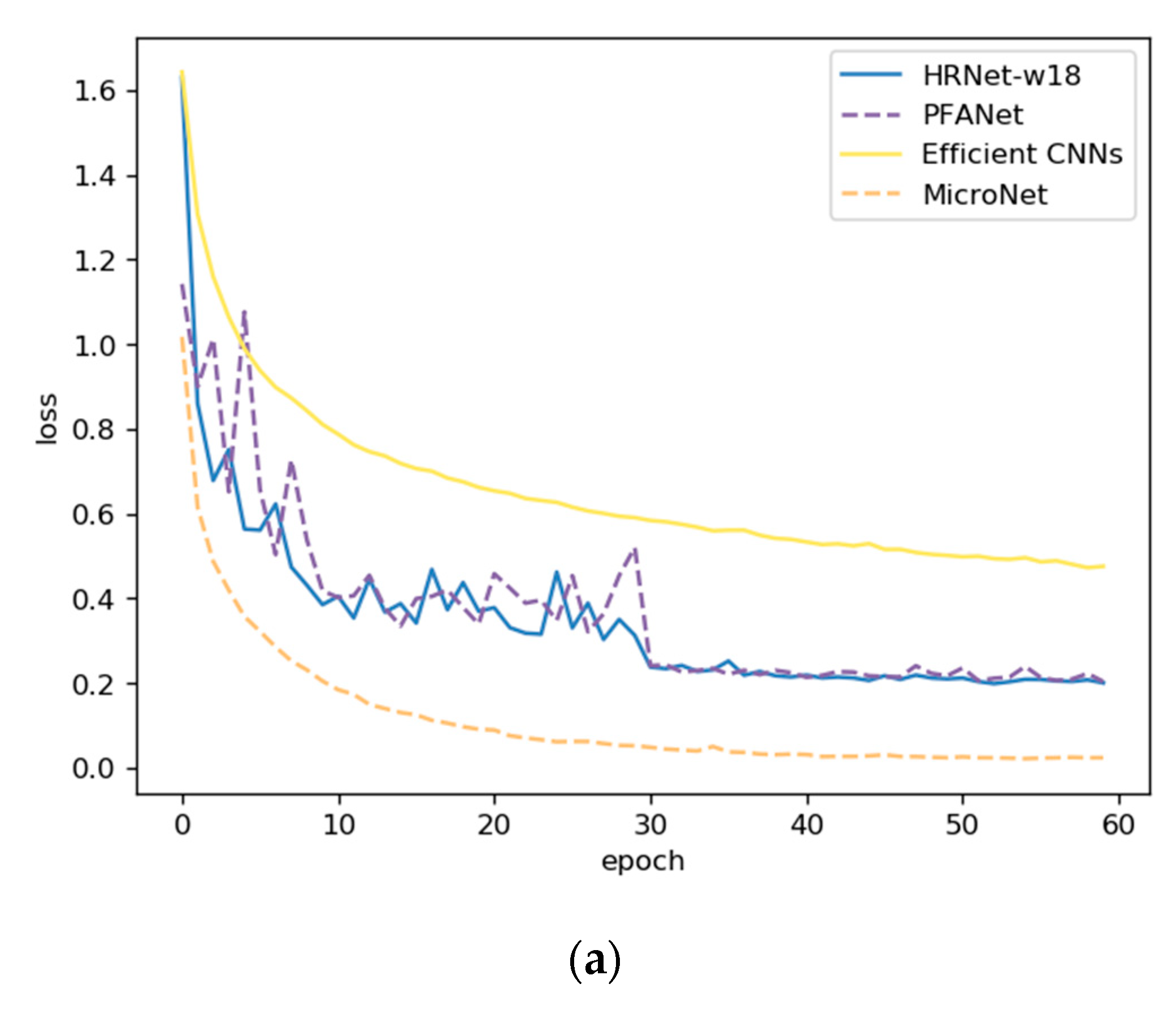

| Method | #Params. | Data Augmentation | Input Size | Epoch | Accuracy (%) |
|---|---|---|---|---|---|
| HRNet-w18 [16] | 19.3 M | × | 224 × 224 × 3 | 60 | 93.42 |
| EfficientNet [14] | 0.95 M | × | 48 × 48 × 3 | 60 | 84.04 |
| MicronNet [13] | 0.51 M | × | 48 × 48 × 3 | 60 | 83.72 |
| PFANet (ours) | 27.6 M | × | 224 × 224 × 3 | 60 | 93.57 |

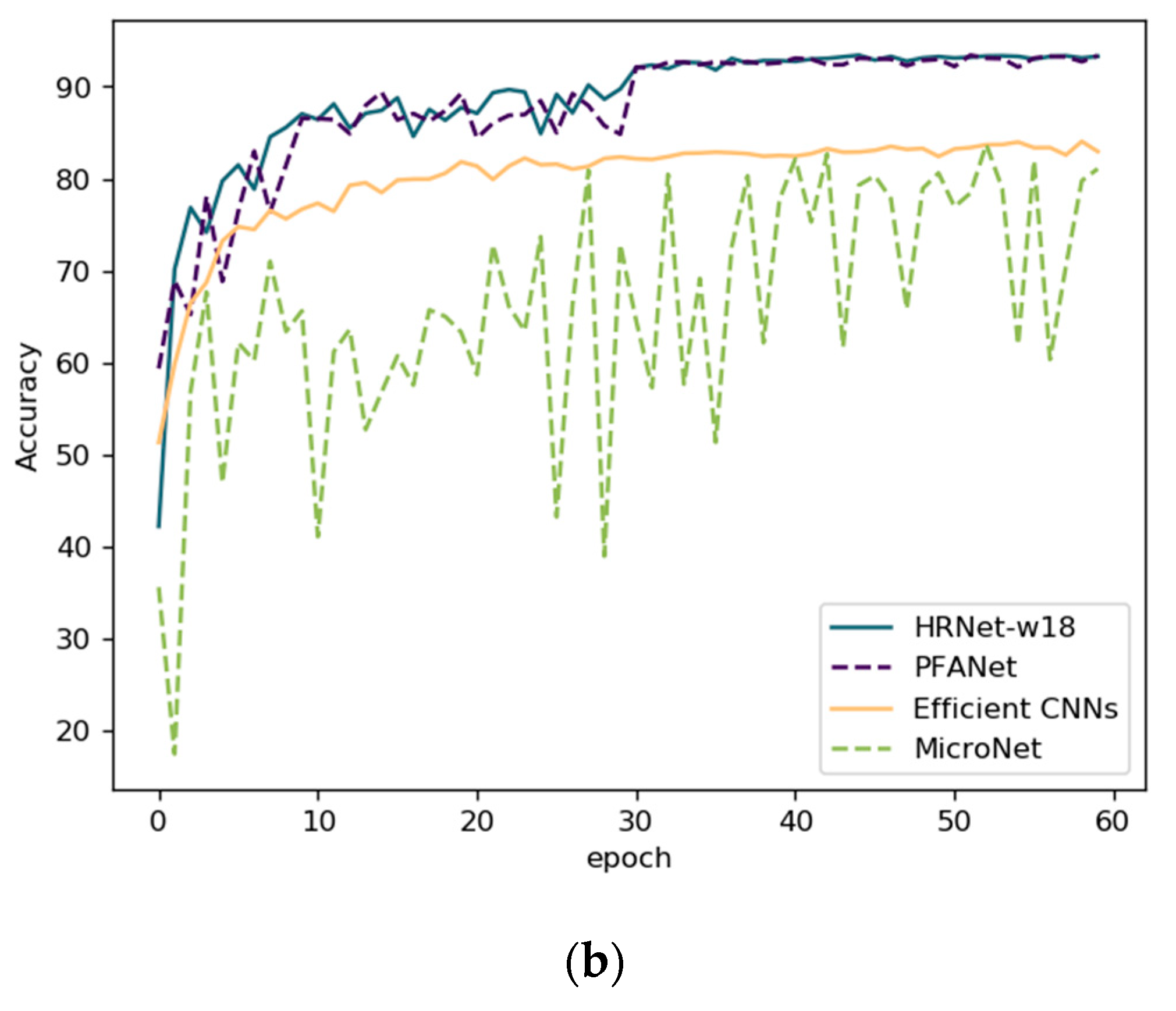

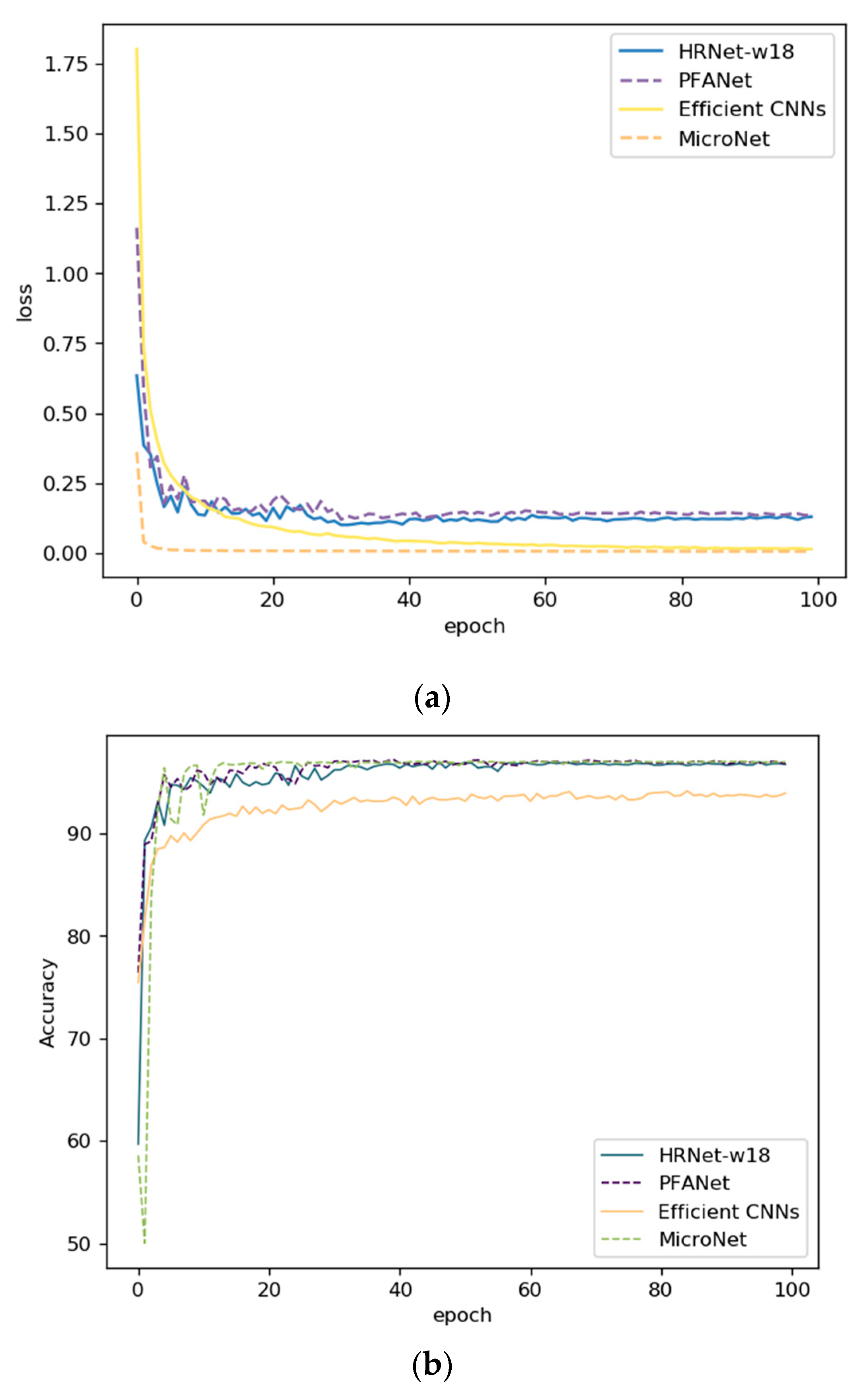

| Method | #Params. | Data Augmentation | Input Size | Epoch | Accuracy (%) |
|---|---|---|---|---|---|
| Sermanet * [11] | × | √ | 32 × 32 × 3 | × | 99.17 |
| IDSIA * [10] | × | √ | 48 × 48 × 3 | × | 99.46 |
| HRNet-w18 [16] | 19.3 M | × | 224 × 224 × 3 | 100 | 96.80 |
| EfficientNet [14] | 0.95 M | × | 48 × 48 × 3 | 100 | 94.14 |
| MicronNet [13] | 0.51 M | × | 48 × 48 × 3 | 100 | 97.02 |
| PFANet (ours) | 27.6 M | × | 224 × 224 × 3 | 100 | 97.21 |

| Method | PFAN-A | PFAN-B | #Params. | GFLOPs | Accuracy (%) |
|---|---|---|---|---|---|
| HRNet [16] | × | × | 21.3 M | 3.99 | 96.80 |
| PFAN-2 | √ | × | 23.5 M | 4.56 | 97.09 |
| PFAN-3 | × | √ | 23.5 M | 4.42 | 97.02 |
| PFANet (ours) | √ | √ | 27.7 M | 4.99 | 97.21 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, K.; Zhan, Y.; Fu, D. Learning Region-Based Attention Network for Traffic Sign Recognition. Sensors 2021, 21, 686. https://doi.org/10.3390/s21030686
