A Semi-Supervised Transfer Learning with Dynamic Associate Domain Adaptation for Human Activity Recognition Using WiFi Signals †

Abstract

1. Introduction

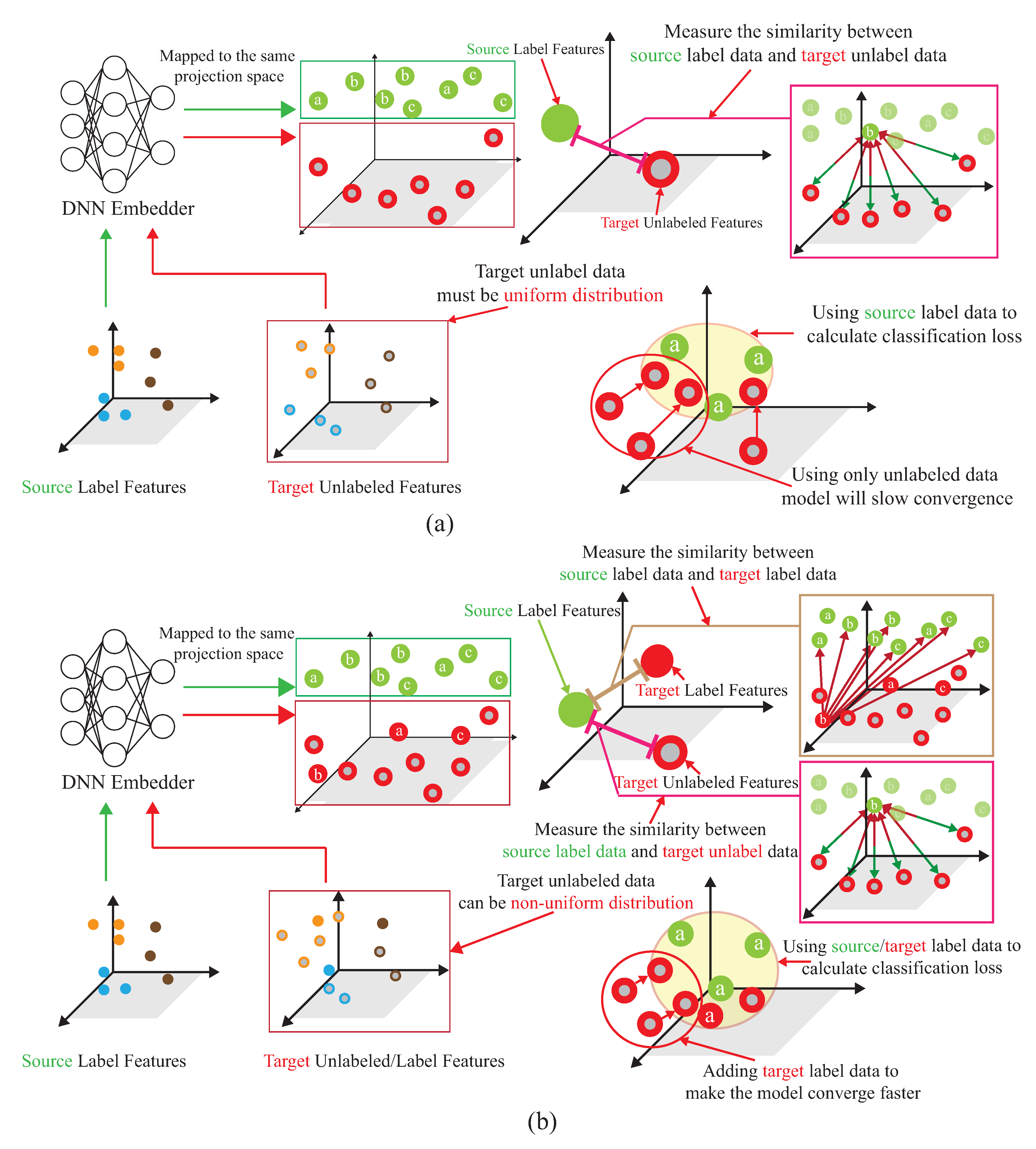

- An existing semi-supervised learning scheme, associate domain adaptation (ADA), was developed in [20], but it requires the target domain to be an entirely unlabeled dataset. ADA maps the data of the source domain and the target domain into the same space through their association similarity, with all data in the target domain unlabeled. To support a dynamically adjustable ratio of labeled to unlabeled data in the target domain, we modify the existing ADA algorithm into dynamic associate domain adaptation (DADA) and design a new semi-supervised transfer learning scheme with DADA capability for HAR. The ratio is adjusted according to the state of the target environment: to improve the recognition accuracy, the ratio of labeled data may be increased when the target domain is a new, unfavorable environment.

- The traditional ADA [20] requires the data of the target domain to be balanced. Our proposed DADA removes this limitation, so data imbalance is allowed to occur in the target environment. Our experimental results show that the average accuracy of DADA is 4.17% higher than that of ADA when the data are imbalanced, but only 1.08% higher when they are balanced.

- To increase the recognition accuracy, an attention-based DenseNet model (AD) is designed as our new training network by combining the existing DenseNet model with the ECA-Net (efficient channel attention network) model. To reduce the data size, a bottleneck structure is adopted at the end of each denseblock, before entering the next layer; it halves the data size and compresses the number of features. Because these operations may lose a lot of hidden information, we incorporate the ECA structure to strengthen the important channels of the previous layers and carry them into the next denseblock of DenseNet. Our experimental results show that the accuracy of AD as our training network is 4.13% higher than that of the existing HAR-MN-EF scheme [19].

2. Background

2.1. Related Works

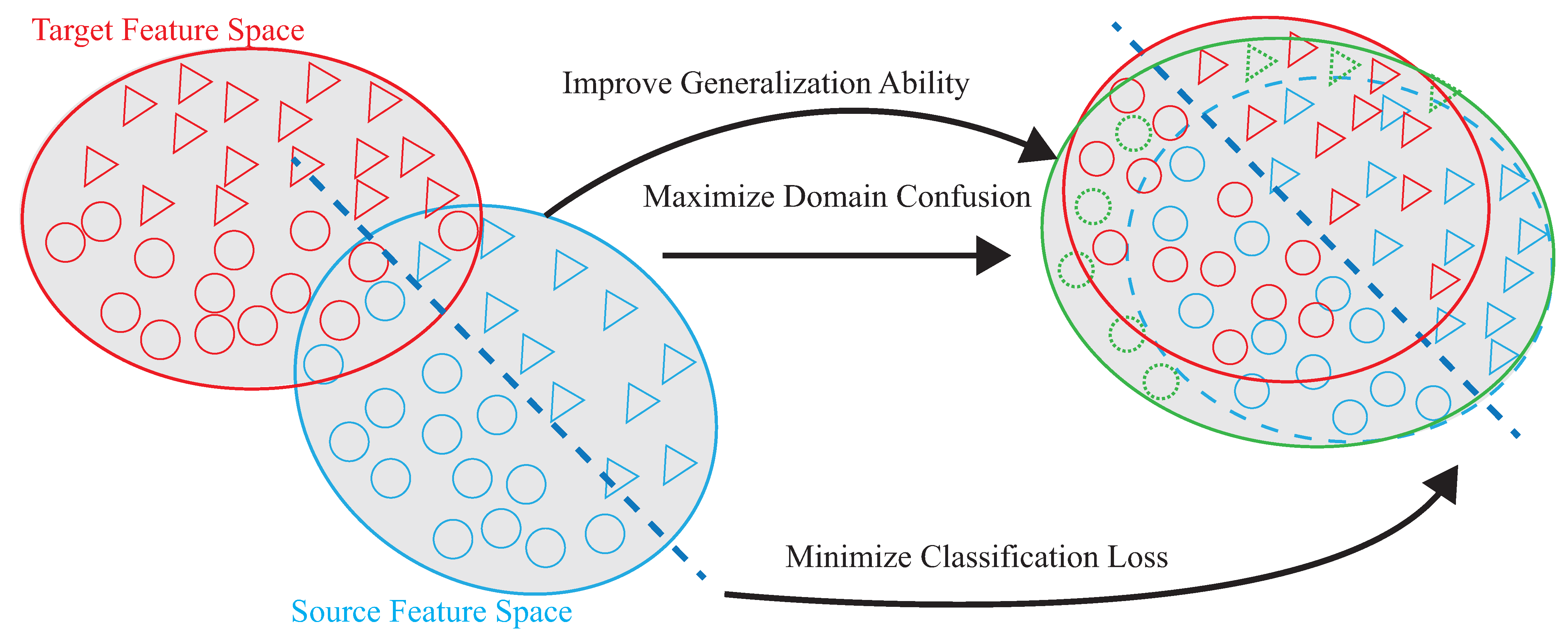

2.2. Motivation

3. Preliminaries

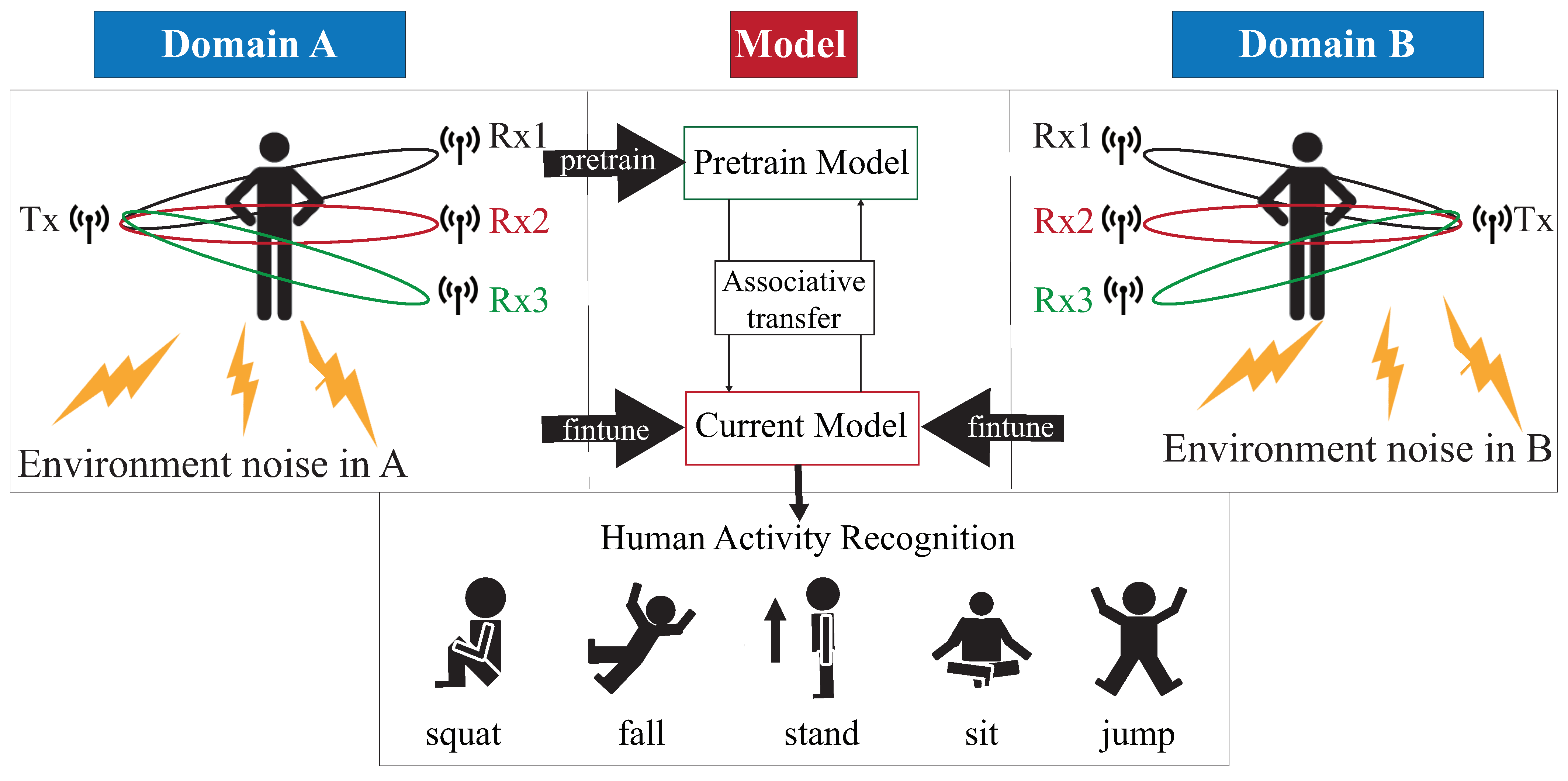

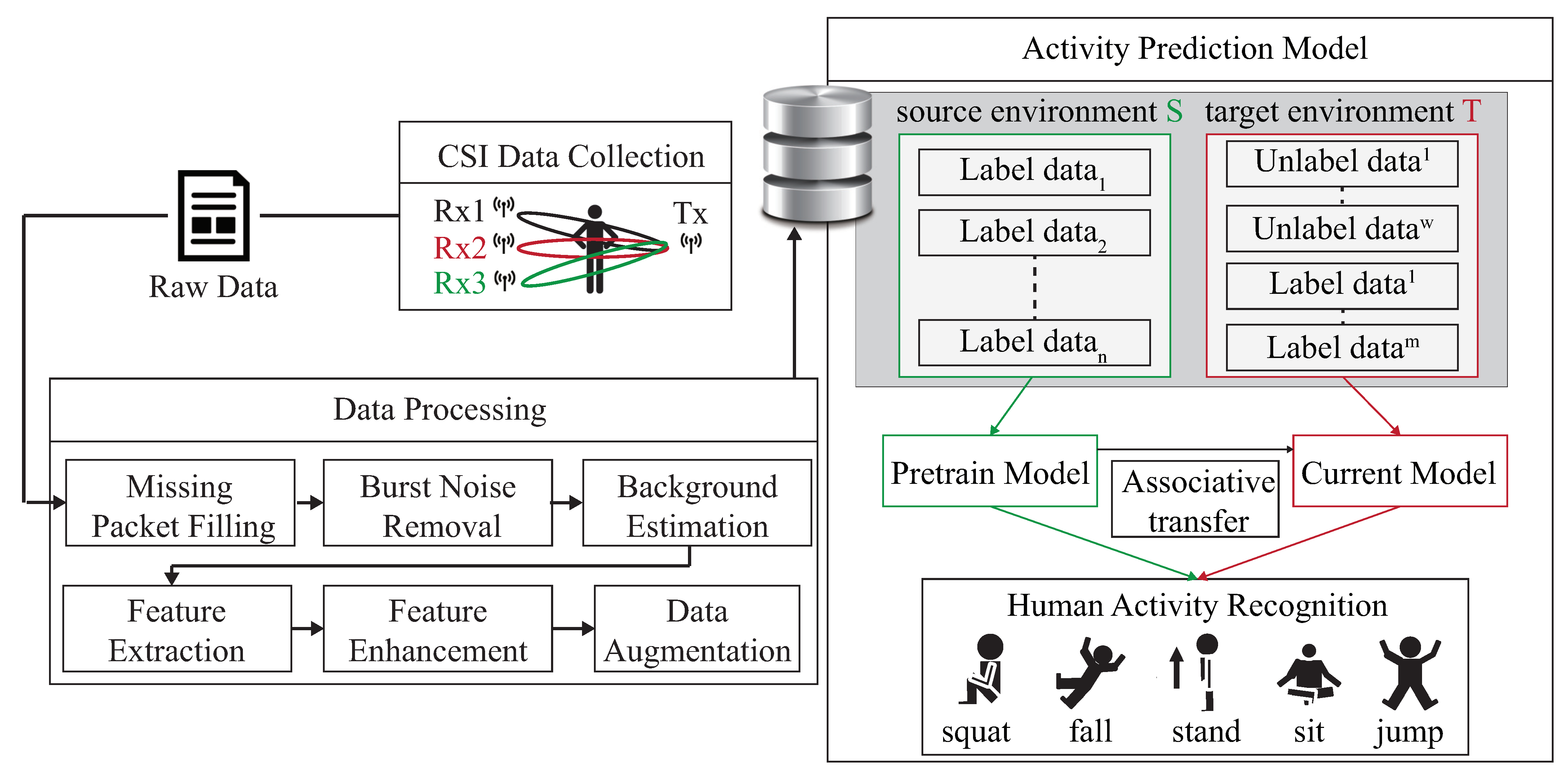

3.1. System Model

3.2. Problem Formulation

3.3. Basic Idea

4. A Semi-Supervised Transfer Learning with Dynamic Associate Domain Adaptation for HAR

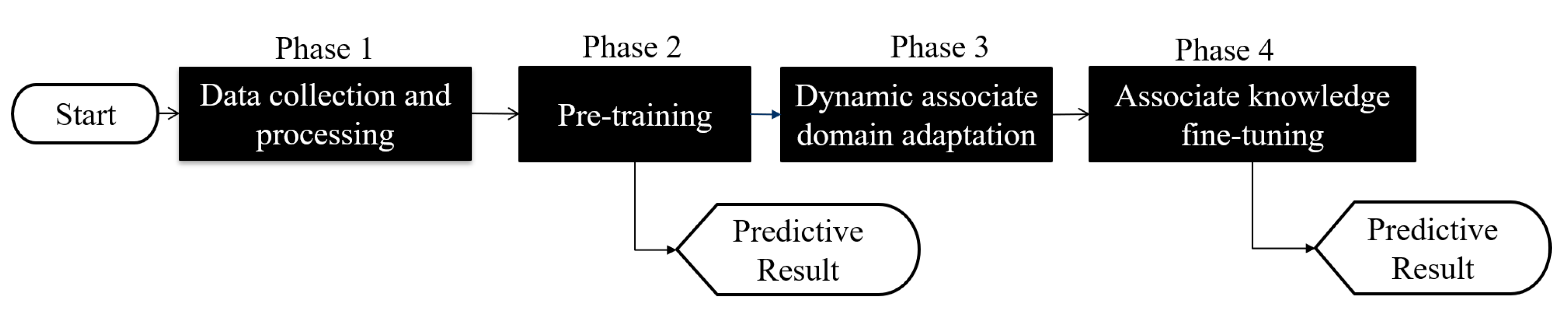

- (1) Data collection and processing phase: This phase collects CSI data while mitigating the effects of the environment and of hardware defects. Its main work is to discard redundant information, retain and enhance the activity-related characteristics, and reduce irrelevant information.

- (2) Pre-training phase: This phase builds an attention-based DenseNet (AD) as our training network. In AD, DenseNet is adopted as the backbone network, and the ECA structure is added to retain the important training information. The activity classifier is pre-trained in this phase through feature reuse and the attention mechanism, in preparation for the transfer training.

- (3)

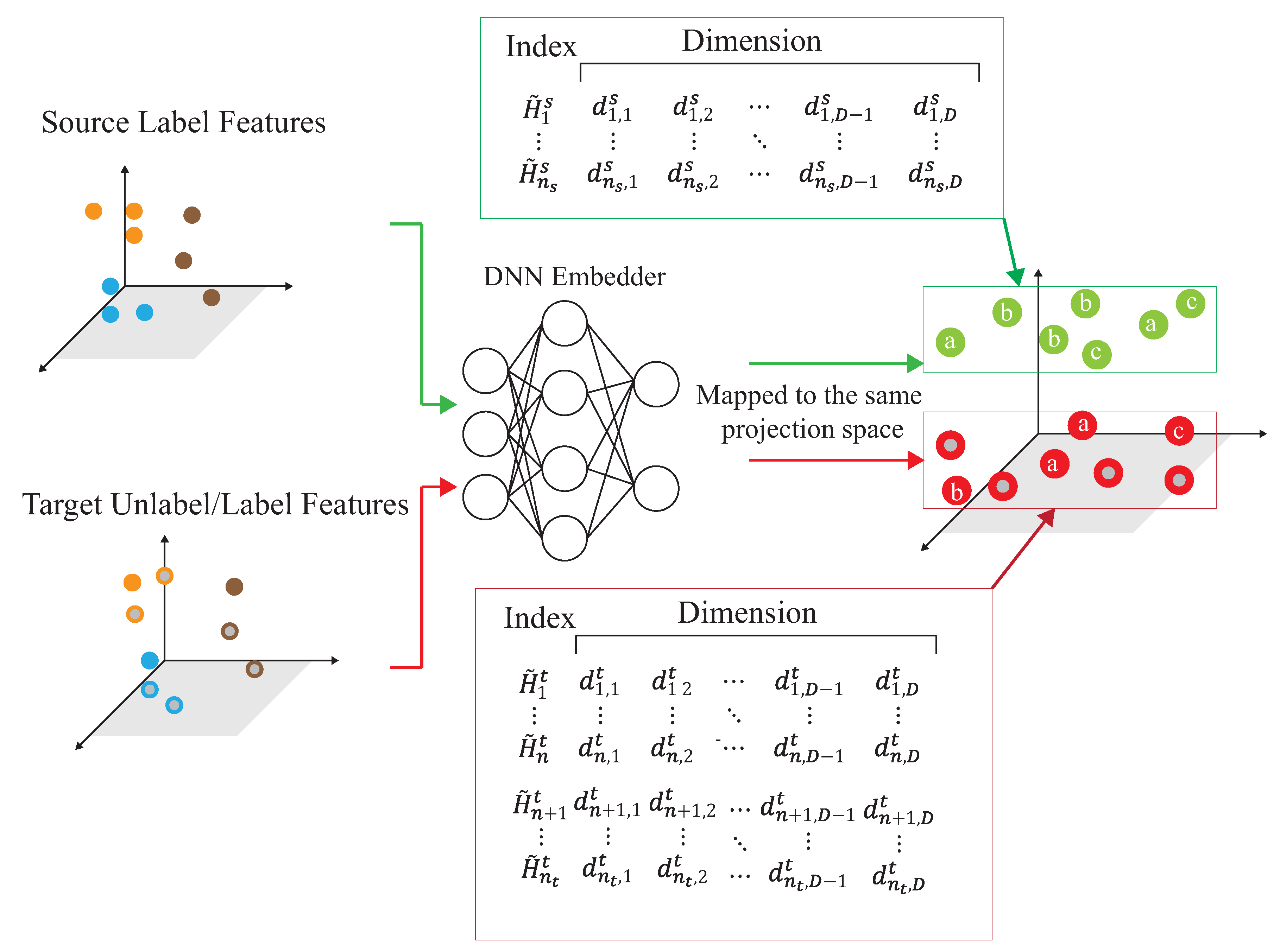

- Dynamic associate domain adaptation phase: This phase aims to project the features of two different domains into the same space through DNN embedding by using a dynamic associate domain adaptation algorithm (DADA). The dynamic associate domain adaptation is to improve previous work, associate domain adaptation, to further consider the data imbalance problem. In addition, our dynamic associate domain adaptation can dynamically adjust the ratio of labeled dataset/unlabeled dataset.

- (4) Associate knowledge fine-tuning phase: Because image-based HAR has the characteristic of domain invariance, the weights of the shallow layers learned previously from the source domain are frozen and kept unchanged as common features, and the knowledge of the deep layers is transferred to the new target domain for fine-tuning.

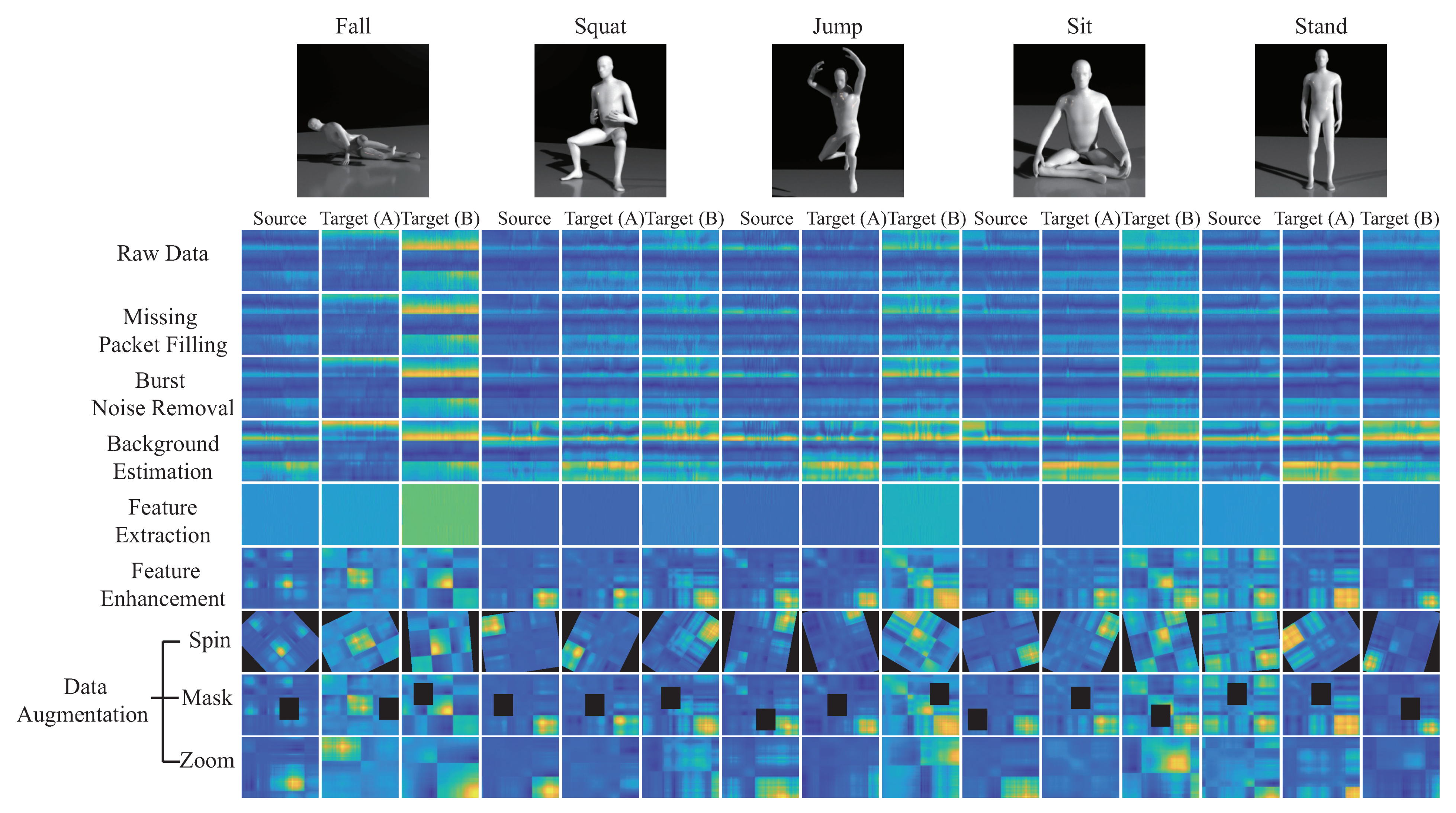

4.1. Data Collection and Processing Phase

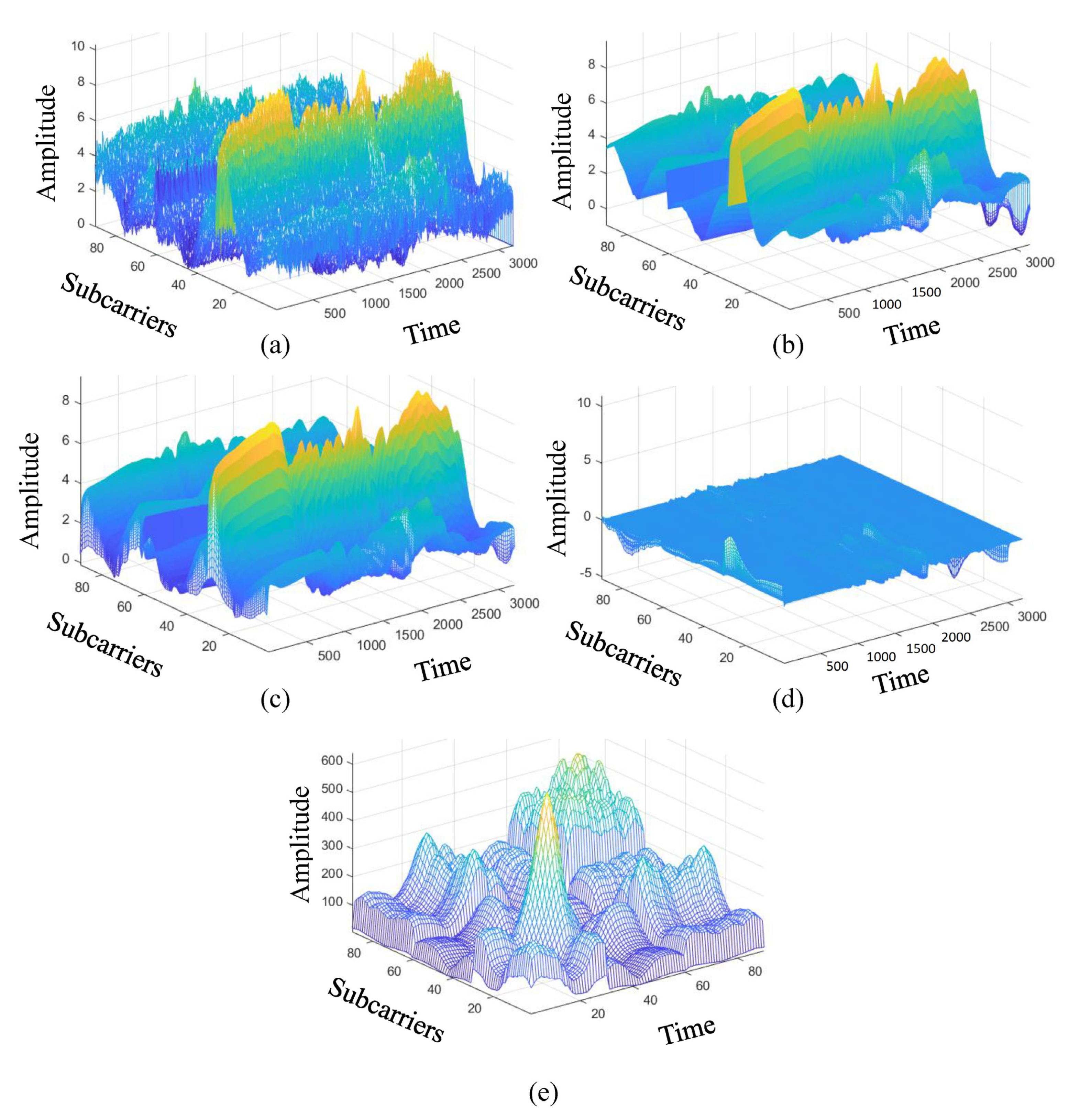

- S1. Missing packet filling: To handle the packet loss problem, a timer is set at the RX, and the timer starts after each packet is received. To maintain the continuity of the signal, linear interpolation is used to repair the lost packets: each lost packet is estimated from the previous received packet and the next received packet by a simple linear interpolation function. The output of this step is the repaired CSI matrix.

- S2. Burst noise removal: To remove the burst noise caused by the environment and the hardware equipment, we apply the wavelet transform denoising algorithm [28] to the repaired CSI matrix. A six-level discrete wavelet transform with a symlet wavelet basis is used for the decomposition, and the denoised CSI packet sequence is reconstructed through the inverse transform. The output of this step is the denoised CSI matrix.

- S3. Background estimation: The denoised CSI matrix still contains background information that is not related to human activities, and these useless features may reduce the quality of the trained model. The CSI vector at time i is regarded as the sum of a static CSI vector, which represents the useless background information, and a dynamic CSI vector, which represents the useful, activity-related features of the human motion. The main work is to estimate the dynamic CSI vector generated by the human activities, so the static CSI vector is first estimated by adopting the exponentially weighted moving average (EWMA) algorithm [29] with a forgetting factor. Each new estimated point is recursively calculated from the previous observations and attenuated by the forgetting factor. Consequently, the static CSI matrix is obtained.

- S4. Once the static CSI matrix has been found, the dynamic CSI matrix is obtained by subtracting the static CSI matrix from the denoised CSI matrix.

- S5. Feature enhancement: The width of the dynamic CSI matrix is determined by the 1 kHz sampling rate and the sampling duration. We adopt a feature enhancement algorithm similar to [19] to obtain the correlation matrix, which enhances the correlation between the signals on the subcarriers; this correlation is more important than the time-dimension data. The correlation matrix between the signals on all subcarriers eliminates the time-dimension information and leaves the characteristics of the correlation between the subcarriers. The correlation matrix is also smaller than the dynamic CSI matrix, which reduces the complexity of the trained model.

- S6. Data augmentation: To enhance the robustness of model training, data augmentation is used to enlarge the training dataset. In this work, the correlation matrix is augmented by the spin, mask, and zoom methods.
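The interpolation, background-estimation, and subtraction steps (S1, S3, and S4) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the use of `None` to mark a lost packet, the value `beta=0.9`, and the convention that the forgetting factor weights the previous estimate are all hypothetical choices.

```python
from typing import List, Optional

Packet = List[float]


def fill_missing(packets: List[Optional[Packet]]) -> List[Packet]:
    """Repair lost packets (marked None) by linear interpolation between
    the nearest received packets before and after the gap."""
    known = [i for i, p in enumerate(packets) if p is not None]
    if not known:
        raise ValueError("at least one received packet is required")
    repaired = []
    for i, p in enumerate(packets):
        if p is not None:
            repaired.append(list(p))
            continue
        prev = max((j for j in known if j < i), default=None)
        nxt = min((j for j in known if j > i), default=None)
        if prev is None:      # gap at the start: copy the next packet
            repaired.append(list(packets[nxt]))
        elif nxt is None:     # gap at the end: copy the previous packet
            repaired.append(list(packets[prev]))
        else:                 # interior gap: weight by distance in time
            w = (i - prev) / (nxt - prev)
            repaired.append([(1 - w) * a + w * b
                             for a, b in zip(packets[prev], packets[nxt])])
    return repaired


def ewma_static(csi: List[Packet], beta: float = 0.9) -> List[Packet]:
    """Estimate the static background recursively (assumed EWMA form):
    s_i = beta * s_{i-1} + (1 - beta) * h_i."""
    static = [list(csi[0])]
    for row in csi[1:]:
        prev = static[-1]
        static.append([beta * s + (1 - beta) * h for s, h in zip(prev, row)])
    return static


def dynamic_csi(csi: List[Packet], static: List[Packet]) -> List[Packet]:
    """Dynamic part = observed CSI minus the estimated static background."""
    return [[h - s for h, s in zip(h_row, s_row)]
            for h_row, s_row in zip(csi, static)]
```

A constant input yields an all-zero dynamic matrix, which matches the intent of the step: only activity-induced changes should survive the subtraction.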

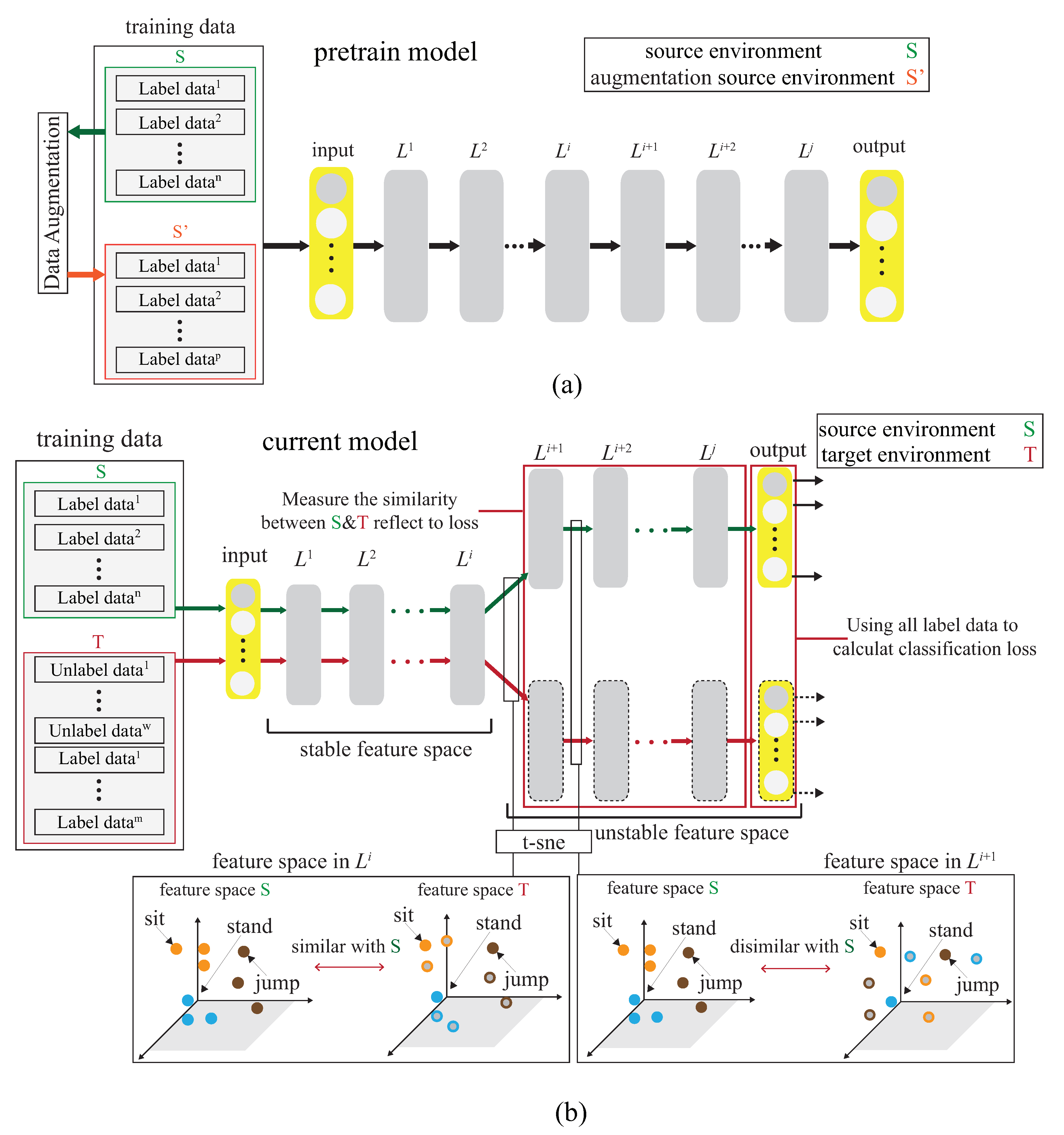

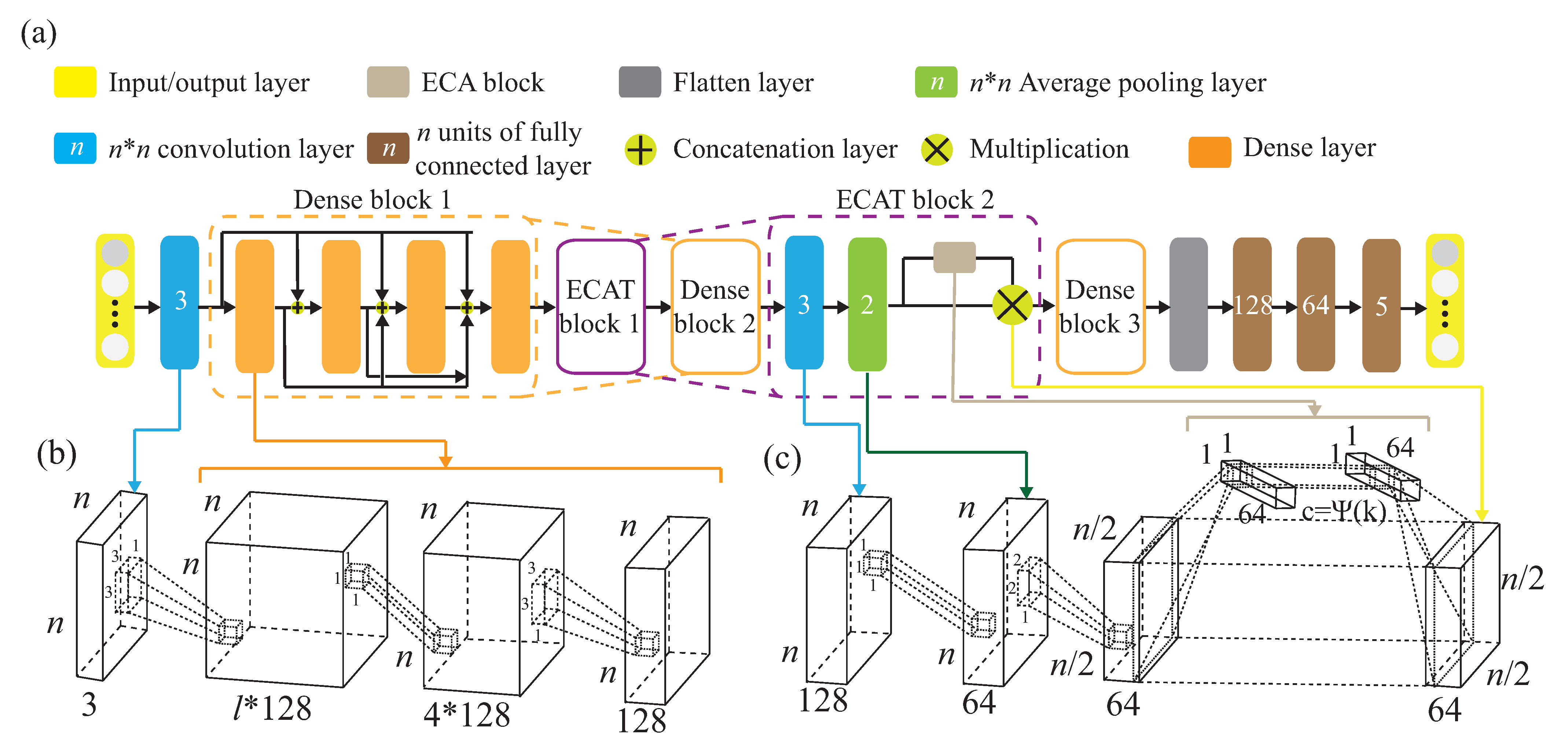
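The feature enhancement step (S5) replaces a time-by-subcarrier matrix with a subcarrier-by-subcarrier correlation matrix. The sketch below assumes a Pearson correlation between subcarrier columns; the exact definition used in [19] may differ, and the function name is hypothetical.

```python
import math
from typing import List


def correlation_matrix(csi: List[List[float]]) -> List[List[float]]:
    """Map a T x M matrix (T packets, M subcarriers) to an M x M matrix of
    Pearson correlations between subcarrier columns (assumed definition)."""
    t, m = len(csi), len(csi[0])
    cols = [[row[j] for row in csi] for j in range(m)]
    means = [sum(c) / t for c in cols]
    centered = [[v - mu for v in c] for c, mu in zip(cols, means)]
    norms = [math.sqrt(sum(v * v for v in c)) for c in centered]
    corr = [[0.0] * m for _ in range(m)]
    for a in range(m):
        for b in range(m):
            denom = norms[a] * norms[b]
            dot = sum(x * y for x, y in zip(centered[a], centered[b]))
            # A constant column has zero variance; report zero correlation.
            corr[a][b] = dot / denom if denom > 0 else 0.0
    return corr
```

The output size depends only on the number of subcarriers, not on the number of packets, which is why the time dimension disappears and the input to the network becomes smaller.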

4.2. Pre-Training Phase

- S1. The basic training network of this work is a deep DenseNet model [30], which is constructed from a number of denseblocks. Dense connections [30] are used, where each layer is connected with all of the previous layers in the channel dimension, so a denseblock directly concatenates the feature maps from different layers. In a denseblock, the outputs of all of the previous layers are concatenated as the input of the next layer, which applies a non-linear transformation function to them. Each convolutional layer produces different feature maps, and each feature map obtained is called a channel. Assuming that each layer in the denseblock uses k convolution kernels, each layer produces k output feature maps, and k is called the growth rate. The number of output channels of the denseblock is therefore the number of input channels plus k for every layer in the block. The denseblock also utilizes the bottleneck architecture to reduce the calculation cost.

- S2. To increase the recognition accuracy, an attention-based DenseNet (AD) model is designed as our new training network by modifying the existing DenseNet model with the ECA-Net (efficient channel attention network) model, as follows. The features are extracted through the dense connection mechanism. Two adjacent denseblocks are connected by the ECAT architecture, a connection layer based on the channel attention mechanism of the ECA architecture [31]. Because the number of input channels of a denseblock is determined by the number of output channels of the preceding denseblock, not reducing the channel dimension in the connection layer would lead to too many parameters and inefficient calculation. We therefore add the channel attention mechanism to strengthen the correlation between feature channels and improve the training accuracy. The attention step keeps the feature-map size unchanged so that the features are not destroyed, whereas the connection layer reduces the number of channels according to a compression factor and halves the size of the feature map. The non-linear transformation of the transition layer [30] is reused in the connection layer, and the ECA network is embedded in it as a substructure to learn the feature weights and achieve better training results. Through global average pooling, the feature map is flattened into one value per channel. A convolution across channels then models the relationship between each channel and its neighboring channels, where the kernel size is related to the channel dimension $C$: the larger the number of channels, the more neighboring channels interact. Following [31], this relationship is an approximate exponential mapping, so that, given the channel dimension $C$, the adaptive kernel size, i.e., the number of neighboring channels, is the odd number nearest to $\log_2(C)/\gamma + b/\gamma$, where $b$ and $\gamma$ are set to 1 and 2. With ECAT, the channel and feature sizes are adjusted, the channel dimension is reduced, and the weights of the important channels are increased through the channel attention mechanism. The channel weight is computed by an adaptive non-linear transformation composed of global average pooling and convolution, and the weighted output feature is obtained by multiplying this weight with the output of the connection layer. After the denseblock structure with the ECAT mechanism has been repeated twice, a max-pooling operation is applied to the feature to extract the maximum values, and the result is flattened. Finally, the flattened feature passes through an f-layer fully connected network to obtain the final feature d, which is used for the final activity prediction through a fully connected layer with a softmax activation function and trainable parameters.

- S3. After obtaining the final activity prediction, the loss function is calculated through the cross-entropy between the actual label and the activity prediction obtained in the previous step. The loss is computed over all training data in the source domain and all m classification categories: for the i-th data item and the j-th category, it compares the true label, which indicates whether the item belongs to that category, with the predicted probability that it does.
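The channel bookkeeping and the channel attention in steps S1 and S2 can be made concrete with a short sketch. It assumes the standard DenseNet channel arithmetic from [30] and the adaptive kernel-size rule and sigmoid gating from [31]; the function names, the zero padding at the channel borders, and the example sizes are illustrative assumptions rather than values from this work.

```python
import math
from typing import List


def dense_block_out_channels(c_in: int, growth_rate: int, num_layers: int) -> int:
    """Each layer adds `growth_rate` feature maps to the concatenation."""
    return c_in + growth_rate * num_layers


def transition_out_channels(c_in: int, compression: float = 0.5) -> int:
    """The connection layer keeps floor(compression * c_in) channels."""
    return int(math.floor(compression * c_in))


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size of [31]: odd number near log2(C)/gamma + b/gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_weights(channel_means: List[float], kernel: List[float]) -> List[float]:
    """Channel weights from globally pooled features: a 1-D convolution over
    neighboring channels (zero padded here) followed by a sigmoid."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(channel_means) + [0.0] * pad
    weights = []
    for c in range(len(channel_means)):
        z = sum(w * padded[c + j] for j, w in enumerate(kernel))
        weights.append(1.0 / (1.0 + math.exp(-z)))
    return weights


def reweight(channel_values: List[float], weights: List[float]) -> List[float]:
    """Scale each channel by its attention weight."""
    return [v * w for v, w in zip(channel_values, weights)]
```

For example, under these assumptions a block with 64 input channels, growth rate 32, and 6 layers outputs 256 channels, a compression factor of 0.5 reduces them to 128, and the attention kernel for 128 channels covers 5 neighboring channels.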

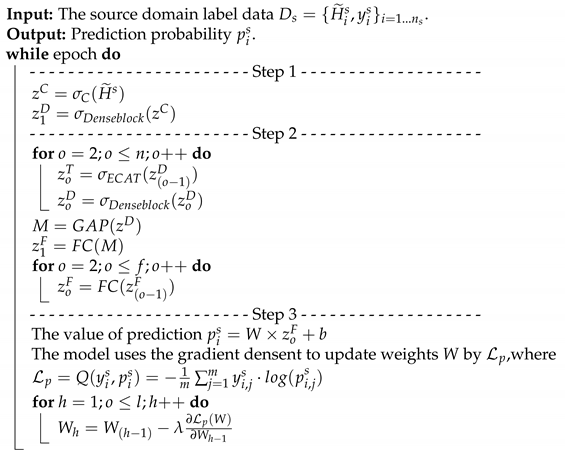

| Algorithm 1: The pre-training phase. |


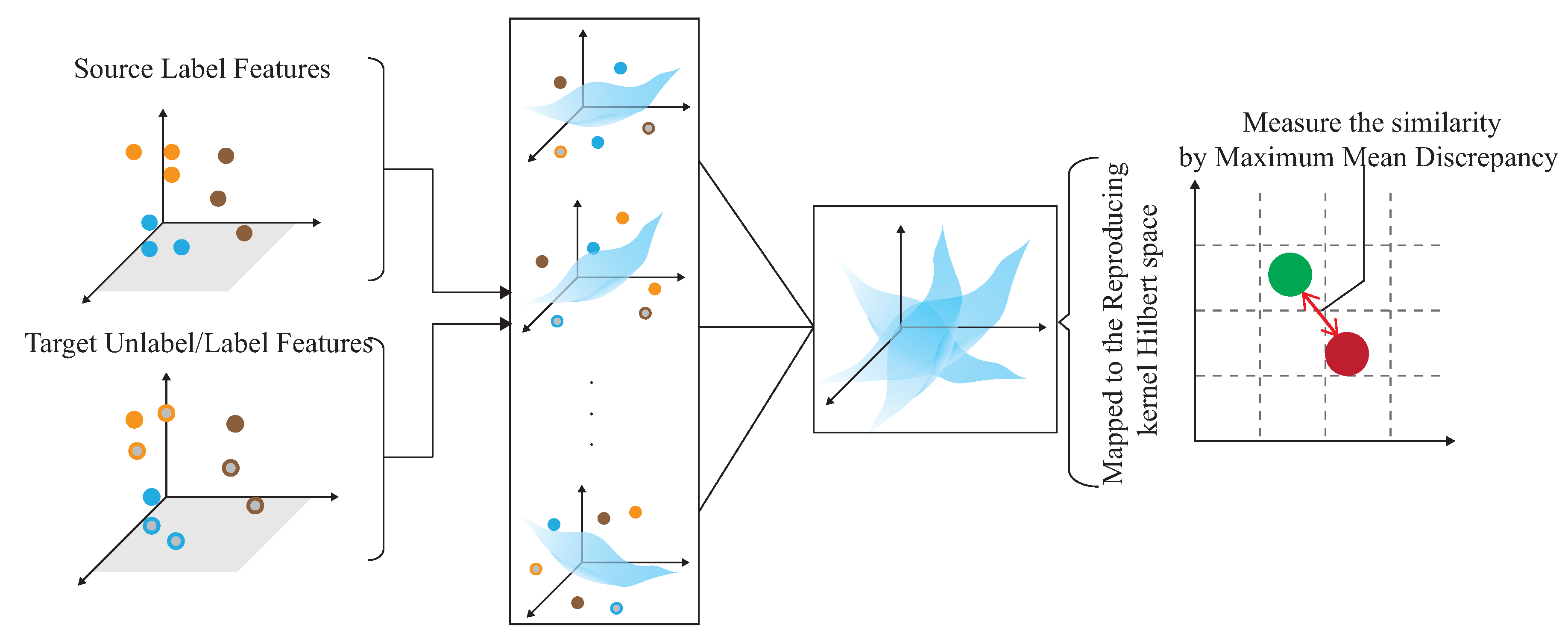

4.3. Dynamic Associate Domain Adaptation Phase

- S1.

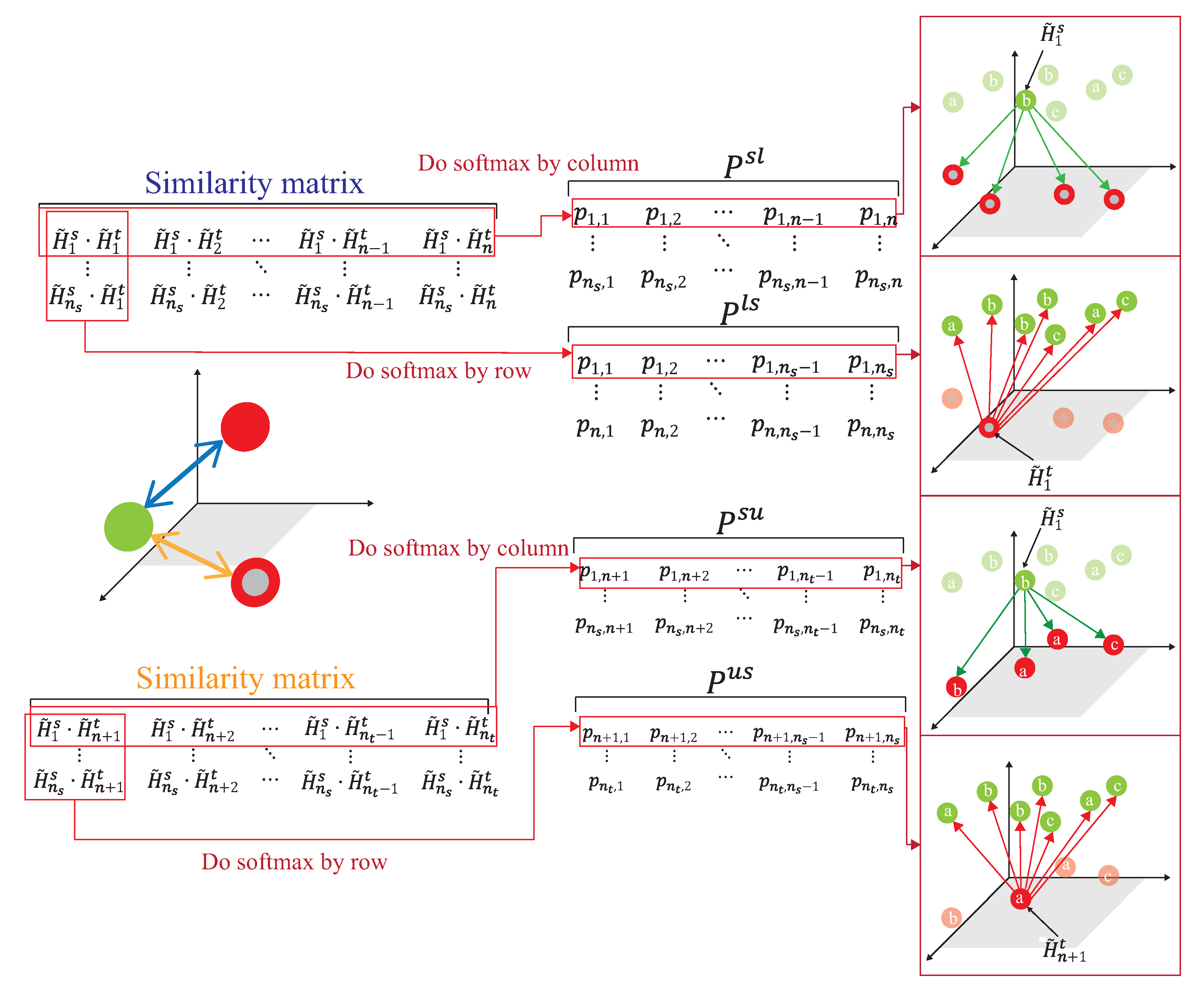

- The source domain and target domain are mapped into the same feature space. Let , , , then dot product is used to calculate the similarity of the source domain and the target domain. The similarity of the domain features is calculated by a similarity matrix between the source domain and the target domain, and , where is the similarity matrix of the unlabeled data of the source domain and the target domain, and is the similarity matrix of the labeled data of the source domain and the target domain.

- S2.

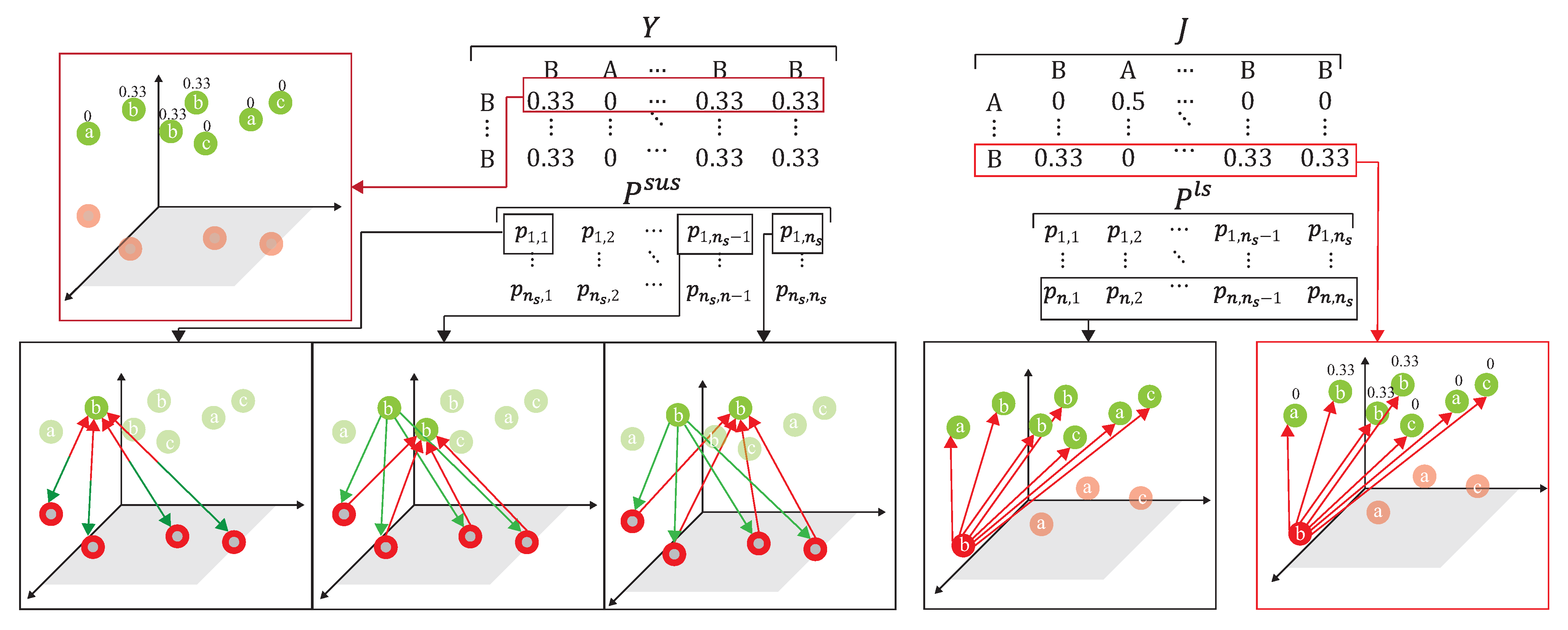

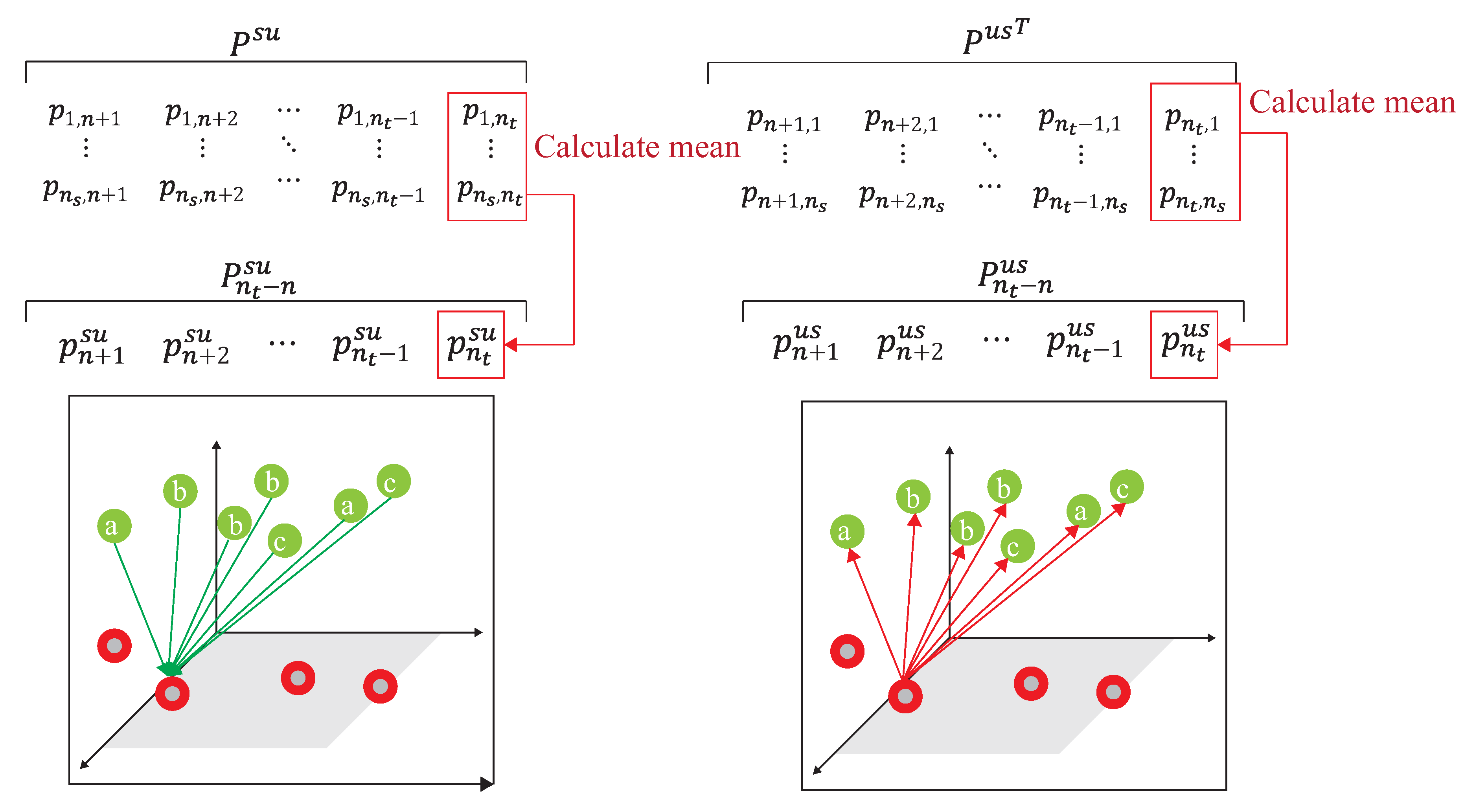

- After obtaining the similarity matrix, , a conversion probability, , of the source domain followed by [20] iswhere is the conversion probability of the similarity matrix by applying the softmax function for the column of , and the first row of is expressed as the probability of similarity of data to all unlabeled data in the target domain. For more consideration of DADA, we further calculate as follows. The i-th row of is expressed as the similarity probability of the source domain data to that of all labeled data of the target domain, where . Similarly, the conversion probability from [20] of the target domain of is,where is the conversion probability of the similarity matrix by applied the softmax function to the row of , the first row of is expressed as the similarity probability of the data to all data of the source domain. For more consideration of DADA, we also further calculate as follows. The i-th row of is expressed as the similarity probability of the labeled data of the target domain to that of the source domain, where Following [20], the subsequent calculation of the associated similarity for unlabeled data in the target domain can be expressed as:where [20] is the round-trip probability of similarity matrix , starting from and ending at . Assuming that the label mapped back to is unchanged relative to , the label distribution of [20] is expressed as:The i-th column of [20] can be expressed as the probability of similarity between and other source domain data, and the cross-entropy with the round-trip probability can be expressed as:where is the difference degree function [20] quantified by cross-entropy, which is mainly mapped to the unlabeled data of the target domain through the label data of the source domain, and then is mapped back to the source domain and distributed with the label data of the source domain, to compare the degree of difference to quantify the distance between the two domains. 
However, this round-trip mapping cannot directly reflect the difference degree, so we further modify the ADA by dynamically utilizing a different ratio of labeled data of the target domain to map back to the source domain to obtain the difference degree. Since both parties have labels, the new defined cross-entropy calculation, , is performed through the conversion probability of and the distribution probability of the label data of the target domain mapped to the source domain,The i-th row of is the probability of similarity between and all labeled data of the source domain, where . Assuming that the label mapped to relative to is unchanged, the label distribution of can be expressed as,The divergence between the two domains is,where is the loss of the divergence of the two domains. Two different mapping functions are referenced to illustrate the distance degrees.

- S3. Because the difference loss of the two domains tends to associate only the simple, easily associated data in the unlabeled target domain, a visit loss is needed, following [20]. The visit loss sums the columns of the source-to-target conversion probability and takes the cross-entropy of the result with a reference distribution. This calculation requires the data distribution to be balanced [20], which is unreasonable because the number of unlabeled data is unknown before training. To tolerate data imbalance and remove this limitation, our DADA scheme replaces the traditional visit loss [20] with a new synchronization loss, in which two column-sum vectors of conversion probabilities are computed so that both are kept in the same distribution. This loss still discourages associating only the simple, easily associated data in the unlabeled target domain, even when the data distribution is imbalanced. Finally, the combined loss is obtained from the divergence loss and the newly constructed synchronization loss, weighted by a hyper-parameter.
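The association losses in steps S1 to S3 can be sketched in plain Python. The walker (round-trip) loss and the visit loss below follow the definitions of [20] as understood here; `labeled_association_loss` is only an assumed form of the extra DADA term for labeled target data, and the synchronization loss is not reproduced because its exact formula is not recoverable from this text. All function names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def softmax(row: Sequence[float]) -> List[float]:
    m = max(row)
    e = [math.exp(v - m) for v in row]
    s = sum(e)
    return [v / s for v in e]


def similarity(a: Matrix, b: Matrix) -> Matrix:
    """Dot-product similarity between every row of a and every row of b."""
    return [[sum(x * y for x, y in zip(u, v)) for v in b] for u in a]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(r, c)) for c in zip(*b)] for r in a]


def cross_entropy(target: Matrix, pred: Matrix, eps: float = 1e-8) -> float:
    total = sum(t * math.log(p + eps)
                for tr, pr in zip(target, pred) for t, p in zip(tr, pr))
    return -total / len(target)


def same_class_distribution(labels_a, labels_b) -> Matrix:
    """Row i is uniform over the items of b that share the label of a_i."""
    rows = []
    for la in labels_a:
        hits = [1.0 if lb == la else 0.0 for lb in labels_b]
        n = sum(hits)
        rows.append([h / n if n else 0.0 for h in hits])
    return rows


def walker_loss(src: Matrix, src_labels, tgt_unlabeled: Matrix) -> float:
    """Round trip source -> unlabeled target -> source, as in [20]."""
    m = similarity(src, tgt_unlabeled)
    p_st = [softmax(r) for r in m]
    p_ts = [softmax(list(c)) for c in zip(*m)]
    p_sts = matmul(p_st, p_ts)
    return cross_entropy(same_class_distribution(src_labels, src_labels), p_sts)


def visit_loss(src: Matrix, tgt_unlabeled: Matrix) -> float:
    """Cross-entropy between a uniform distribution and the visit probability."""
    p_st = [softmax(r) for r in similarity(src, tgt_unlabeled)]
    visit = [sum(col) / len(src) for col in zip(*p_st)]
    n_t = len(tgt_unlabeled)
    return cross_entropy([[1.0 / n_t] * n_t], [visit])


def labeled_association_loss(src, src_labels, tgt, tgt_labels) -> float:
    """Assumed DADA term: labeled target -> source, compared with labels."""
    p_ts = [softmax(r) for r in similarity(tgt, src)]
    return cross_entropy(same_class_distribution(tgt_labels, src_labels), p_ts)
```

When the two domains are well aligned, the round trip returns to the same class and the walker loss approaches zero; when all source items associate with a single target item, the visit loss grows, which is the behavior the visit term is meant to penalize.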

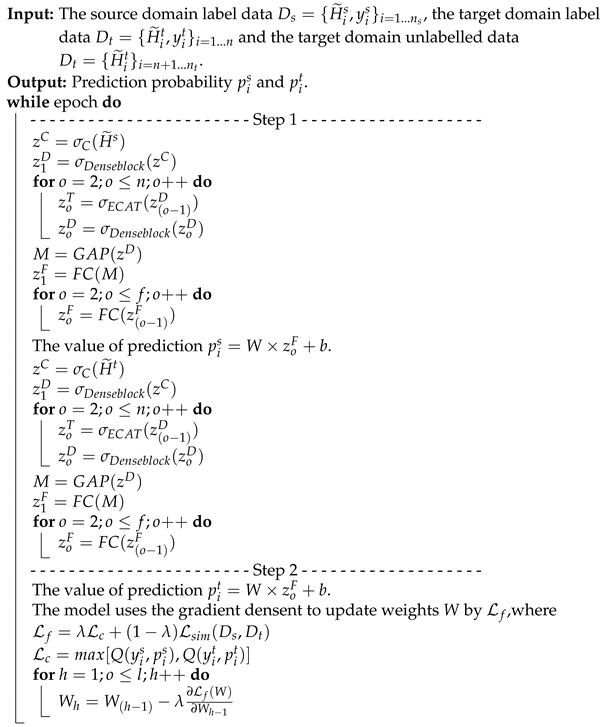

| Algorithm 2: The dynamic associate domain adaptation phase. |

Step 1. Map the data into the same space. Calculate the similarity matrices by dot product. Calculate the conversion probability matrices of the similarity matrices by the softmax function SM.

Step 2. Calculate the first cross-entropy term from the round-trip probability and the label distribution. Calculate the second cross-entropy term from the conversion probability and the label distribution.

Step 3. Combine the two cross-entropy terms as the divergence loss. Calculate the synchronization loss. Combine the divergence loss and the synchronization loss as the combined loss.

4.4. Associate Knowledge Fine-Tuning Phase

- S1. The labeled data of the source domain and both the labeled and unlabeled data of the target domain are trained simultaneously. To preserve the features learned in the pre-training phase, the stable layers are frozen. The max-pooling operation is applied to the output feature before the flattening layer, and the result is flattened. The flattened features of the data from the source domain and the target domain then pass through the k-layer fully connected network, and the similarity between the two domains is calculated for the feature of each layer, where l is the current layer. The similarity values of the k-layer features are accumulated as part of the loss.

- S2. The final feature is used for the final activity prediction through the fully connected layer with a softmax activation function and trainable parameters, which gives the predicted values for the source-domain data and the target-domain data. The total loss contains two classification losses, which are used to solve the classification of the source domain and the target domain, respectively. They are cross-entropies over the labeled data of the source domain and the n labeled data of the target domain and over the m classification categories, comparing the true category of the i-th labeled data item with its predicted probability of belonging to the j-th category. The total loss also contains the similarity between the two domains in the fully connected layers. The final goal is to minimize the combined function of the classification losses and the similarity term, weighted by the hyper-parameter of the hybrid objective function.
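The hybrid objective described in step S2 can be illustrated as follows. The exact formula is not recoverable from this text, so the sketch assumes the simplest reading: the two classification cross-entropies are added and the accumulated domain-similarity term is weighted by a hyper-parameter `lam`. The function names and the default `lam=0.5` are assumptions made for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def cross_entropy(one_hot: Matrix, probs: Matrix, eps: float = 1e-8) -> float:
    """Mean categorical cross-entropy over the labeled items."""
    total = sum(t * math.log(p + eps)
                for tr, pr in zip(one_hot, probs) for t, p in zip(tr, pr))
    return -total / len(one_hot)


def total_loss(src_true: Matrix, src_pred: Matrix,
               tgt_true: Matrix, tgt_pred: Matrix,
               similarity_loss: float, lam: float = 0.5) -> float:
    """Assumed hybrid objective: L_source + L_target + lam * L_similarity."""
    l_source = cross_entropy(src_true, src_pred)   # labeled source data
    l_target = cross_entropy(tgt_true, tgt_pred)   # labeled target data only
    return l_source + l_target + lam * similarity_loss
```

Only the labeled part of the target data enters the target classification term; the unlabeled target data influence training through the similarity term alone.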

| Algorithm 3: The associate knowledge fine-tuning phase. |


5. Experimental Result

5.1. Experimental Setup

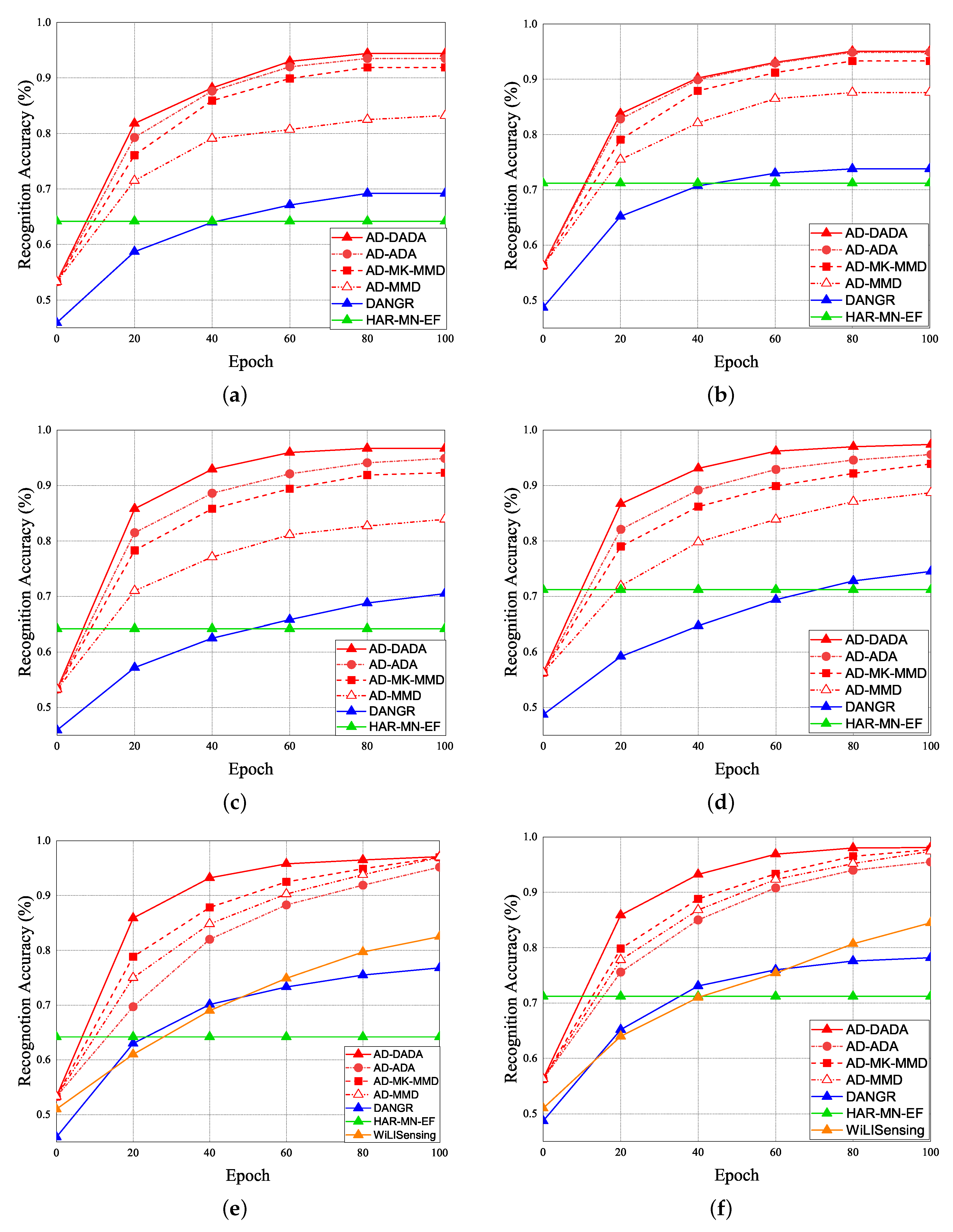

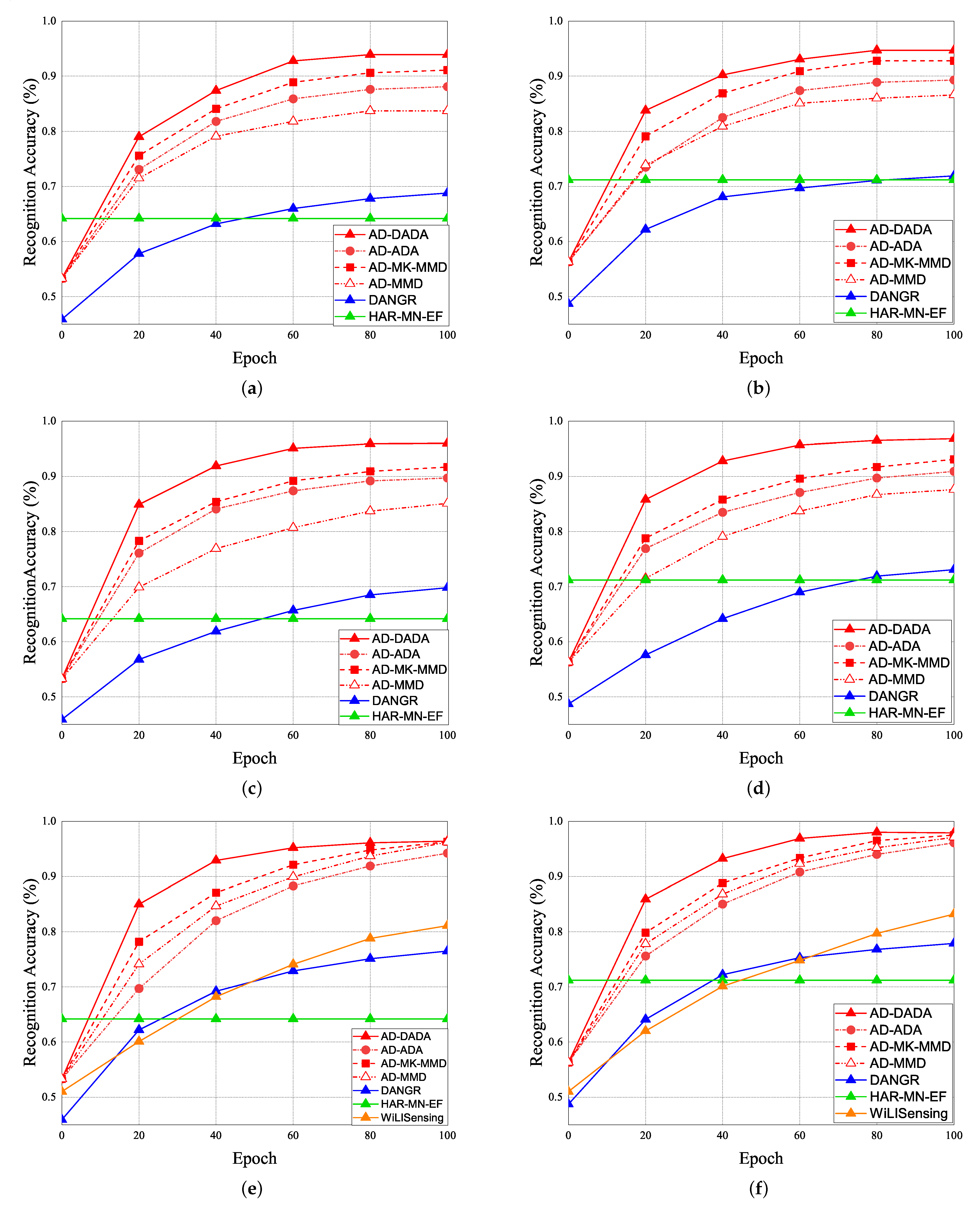

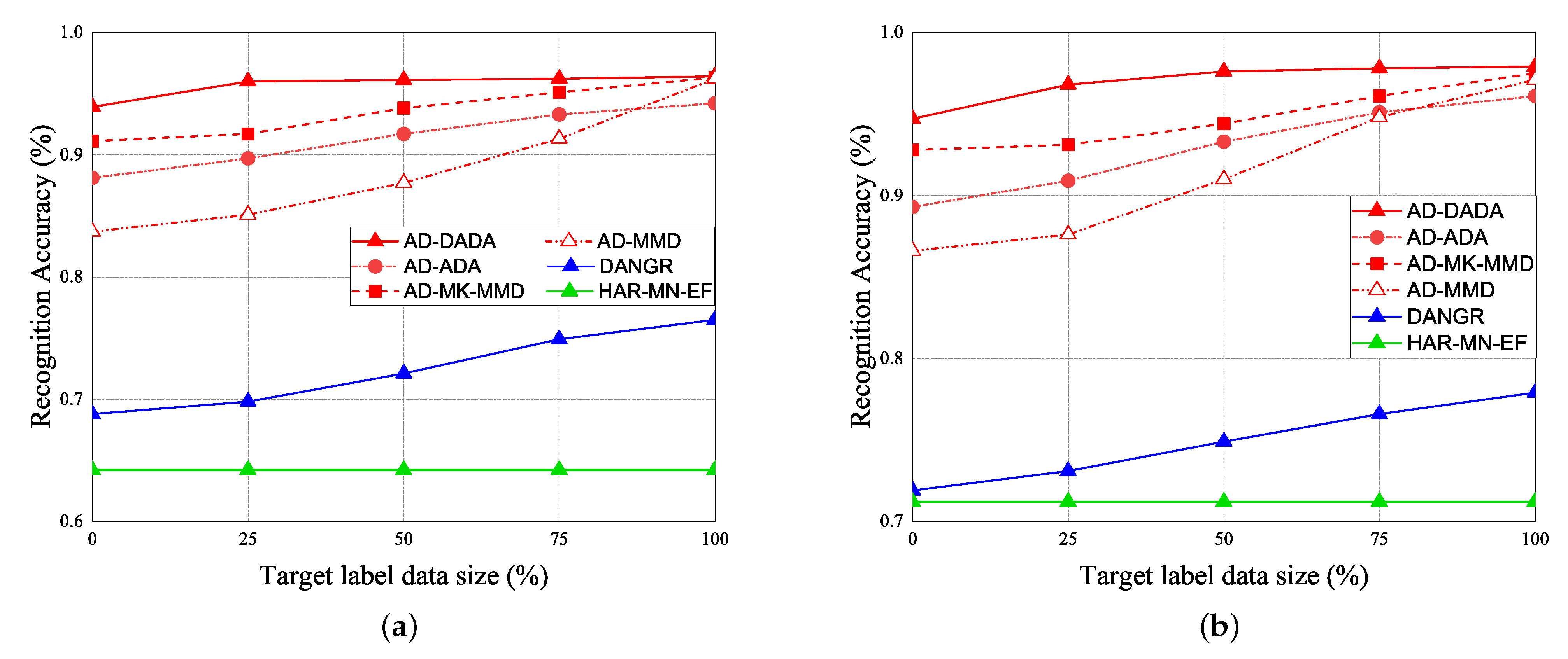

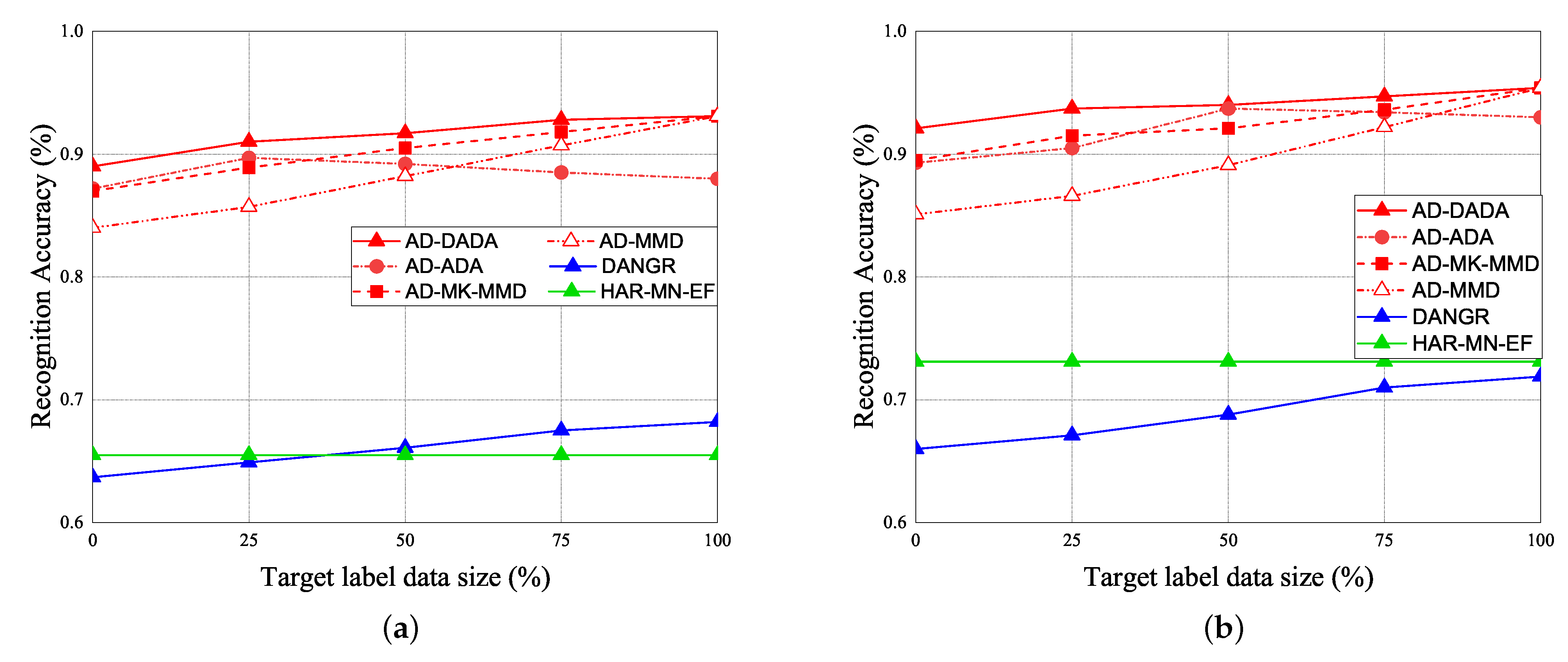

5.2. Performance Analysis

- (1) Pre-training accuracy (PTA) is the accuracy of predicting the correct HAR pattern, out of five kinds of HAR patterns, in the source environment by a model pre-trained on that source domain.

- (2) Recognition accuracy (RA) is the accuracy of predicting the correct HAR pattern, out of five kinds of HAR patterns, in a new target environment that is quite different from the source environment, given a model pre-trained on the source domain.

- (3) Time cost (TC) is the total time cost, which is the sum of the processing time, pre-training time, and fine-tuning time.
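As a hedged illustration of how an accuracy figure can be read off a row-normalized confusion matrix, the sketch below averages the diagonal entries. The values are those of the first confusion matrix reported in this article; treating the mean of the per-class rates as the overall accuracy assumes that the five classes have equally many test samples, which is an assumption made here.

```python
from typing import List


def mean_per_class_accuracy(confusion: List[List[float]]) -> float:
    """Average of the diagonal of a row-normalized confusion matrix."""
    n = len(confusion)
    return sum(confusion[i][i] for i in range(n)) / n


# Rows are true classes, columns are predicted classes
# (order: jump, stand, sit, squat, fall).
confusion = [
    [1.0,   0.0,  0.0,  0.0,   0.0],
    [0.0,   0.97, 0.01, 0.02,  0.0],
    [0.0,   0.0,  0.99, 0.0,   0.01],
    [0.0,   0.01, 0.02, 0.965, 0.005],
    [0.005, 0.0,  0.0,  0.0,   0.995],
]

print(round(mean_per_class_accuracy(confusion), 3))  # 0.984
```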

5.2.1. Pre-Training Accuracy (PTA)

5.2.2. Recognition Accuracy (RA)

5.2.3. Time Cost (TC)

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, Y.S.; Li, C.Y.; Juang, T.Y. Dynamic Associate Domain Adaptation for Human Activity Recognition Using WiFi Signals. In Proceedings of the IEEE Wireless Communications and Networking Conference (IEEE WCNC 2022), Austin, TX, USA, 10–13 April 2022; pp. 1–6.

- Gupta, A.; Gupta, K.; Gupta, K.; Gupta, K. A Survey on Human Activity Recognition and Classification. In Proceedings of the International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 28–30 July 2020; pp. 0915–0919.

- Kwon, S.M.; Yang, S.; Liu, J.; Yang, X.; Saleh, W.; Patel, S. Demo: Hands-Free Human Activity Recognition Using Millimeter-Wave Sensors. In Proceedings of the IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Newark, NJ, USA, 11–14 November 2019; pp. 1–2.

- Challa, N.S.R.; Kesari, P.; Ammana, S.R.; Katukojwala, S.; Achanta, D.S. Design and Implementation of Bluetooth-Beacon Based Indoor Positioning System. In Proceedings of the IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), Bangalore, India, 15–16 November 2019; pp. 1–4.

- Sanam, T.F.; Godrich, H. FuseLoc: A CCA Based Information Fusion for Indoor Localization Using CSI Phase and Amplitude of Wifi Signals. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 7565–7569.

- Wang, F.; Feng, J.; Zhao, Y.; Zhang, X.; Zhang, S.; Han, J. Joint Activity Recognition and Indoor Localization With WiFi Fingerprints. IEEE Access 2019, 7, 80058–80068.

- Sigg, S.; Braunschweig, T.; Shi, S.; Buesching, F.; Yusheng, J.; Lars, W. Leveraging RF-channel fluctuation for activity recognition: Active and passive systems, continuous and RSSI-based signal features. In Proceedings of the International Conference on Advances in Mobile Computing & Multimedia, Vienna, Austria, 2–4 December 2013; pp. 43–52.

- Orphomma, S.; Swangmuang, N. Exploiting the wireless RF fading for human activity recognition. In Proceedings of the International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Krabi, Thailand, 15–17 May 2013; pp. 1–5.

- Zhang, W.; Zhou, S.; Peng, D.; Yang, L.; Li, F.; Yin, H. Understanding and Modeling of WiFi Signal-Based Indoor Privacy Protection. IEEE Internet Things J. 2021, 8, 2000–2010.

- Wang, Y.; Wu, K.; Ni, L.M. Wifall: Device-Free Fall Detection by Wireless Networks. IEEE Trans. Mob. Comput. 2016, 16, 581–594.

- Huang, M.; Liu, J.; Gu, Y.; Zhang, Y.; Ren, F.; Wang, X. Your WiFi Knows You Fall: A Channel Data-Driven Device-Free Fall Sensing System. In Proceedings of the IEEE International Conference on Communications (ICC), Nanjing, China, 23–25 November 2019; pp. 1–6.

- Gu, Y.; Zhang, X.; Li, C.; Ren, F.; Li, J.; Liu, Z. Your WiFi Knows How You Behave: Leveraging WiFi Channel Data for Behavior Analysis. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6.

- Zhong, S.; Huang, Y.; Ruby, R.; Wang, L.; Qiu, Y.; Wu, K. Wi-fire: Device-free fire detection using WiFi networks. In Proceedings of the IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–6.

- Li, H.; He, X.; Chen, X.; Fang, Y.; Fang, Q. Wi-Motion: A Robust Human Activity Recognition Using WiFi Signals. IEEE Access 2019, 7, 153287–153299.

- Wang, F.; Gong, W.; Liu, J. On Spatial Diversity in WiFi-Based Human Activity Recognition: A Deep Learning-Based Approach. IEEE Internet Things J. 2019, 6, 2035–2047.

- Wang, Z. A Survey on Behavior Recognition Using WiFi Channel State Information. IEEE Commun. Mag. 2017, 55, 98–104.

- Vasisht, D.; Kumar, S.; Katabi, D. Decimeter-Level Localization with a Single WiFi Access Point. In Proceedings of Networked Systems Design and Implementation (NSDI 16), Santa Clara, CA, USA, 16–18 May 2016; pp. 165–178.

- Wang, F.; Gong, W.; Liu, J.; Wu, K. Channel Selective Activity Recognition with WiFi: A Deep Learning Approach Exploring Wideband Information. IEEE Trans. Netw. Sci. Eng. 2020, 7, 181–192.

- Shi, Z.; Zhang, J.A.; Xu, R.; Cheng, Q.; Pearce, A. Towards Environment-Independent Human Activity Recognition using Deep Learning and Enhanced CSI. In Proceedings of the IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020; pp. 1–6.

- Haeusser, P.; Frerix, T.; Mordvintsev, A.; Cremers, D. Associative Domain Adaptation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2784–2792.

- Zou, H.; Zhou, Y.; Yang, J.; Jiang, H.; Xie, L.; Spanos, C.J. DeepSense: Device-Free Human Activity Recognition via Autoencoder Long-Term Recurrent Convolutional Network. In Proceedings of the IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Shi, Z.; Zhang, J.A.; Xu, R.; Cheng, Q. Deep Learning Networks for Human Activity Recognition with CSI Correlation Feature Extraction. In Proceedings of the IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Li, Y.; Jiang, T.; Ding, X.; Wang, Y. Location-Free CSI Based Activity Recognition With Angle Difference of Arrival. In Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC), Seoul, Korea, 25–28 May 2020; pp. 1–6.

- Ding, X.; Jiang, T.; Li, Y.; Xue, W.; Zhong, Y. Device-Free Location-Independent Human Activity Recognition using Transfer Learning Based on CNN. In Proceedings of the IEEE International Conference on Communications Workshops (ICC Workshops), Dublin, Ireland, 7–11 June 2020; pp. 1–6.

- Han, Z.; Guo, L.; Lu, Z.; Wen, X.; Zheng, W. Deep Adaptation Networks Based Gesture Recognition using Commodity WiFi. In Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC), Seoul, Korea, 25–28 May 2020; pp. 1–7.

- Arshad, S.; Feng, C.; Yu, R.; Liu, Y. Leveraging Transfer Learning in Multiple Human Activity Recognition Using WiFi Signal. In Proceedings of the IEEE International Symposium on A World of Wireless, Mobile and Multimedia Networks (WoWMoM), Washington, DC, USA, 10–12 June 2019; pp. 1–10.

- Peng, X.B.; Kanazawa, A.; Toyer, S.; Abbeel, P.; Sergey, L. Tool Release: Gathering 802.11n Traces with Channel State Information. In Association for Computing Machinery; ACM: New York, NY, USA, 2011; p. 53.

- Pragada, S.; Sivaswamy, J. Image Denoising Using Matched Biorthogonal Wavelets. In Proceedings of the Sixth Indian Conference on Computer Vision, Graphics and Image Processing, Bhubaneswar, India, 16–19 December 2008.

- Hunter, J.S. The Exponentially Weighted Moving Average. J. Qual. Technol. 1986, 18, 203–210.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

- Borgwardt, K.M.; Gretton, A.; Rasch, M.J.; Kriegel, H.P. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 2006, 22, 49–57.

| Environment | Source | Target (A) | Target (B) |
|---|---|---|---|
| Sampling frequency | 1000 Hz | | |
| Transmit antenna | 1 antenna | | |
| Receiving antenna | 3 antennas | | |
| Sampling time | 3 s | | |
| Subcarriers per link | 30 subcarriers | | |
| Source dataset | 4000 | 1500 | |
| Target dataset | 1500 | 500 | |
| Data expansion factor | 1.5 | | |
| Weight adjustment | 0.5 | | |
| Compression factor | 0.5 | | |
| Weight adjustment | 0.5 | | |
| Learning rate | 0.001 | | |
| Drop out | 0.5 | | |

| | Jump | Stand | Sit | Squat | Fall |
|---|---|---|---|---|---|
| Jump | 1 | 0 | 0 | 0 | 0 |
| Stand | 0 | 0.97 | 0.01 | 0.02 | 0 |
| Sit | 0 | 0 | 0.99 | 0 | 0.01 |
| Squat | 0 | 0.01 | 0.02 | 0.965 | 0.005 |
| Fall | 0.005 | 0 | 0 | 0 | 0.995 |

| | Jump | Stand | Sit | Squat | Fall |
|---|---|---|---|---|---|
| Jump | 0.995 | 0 | 0 | 0 | 0.005 |
| Stand | 0.005 | 0.92 | 0.04 | 0.03 | 0.005 |
| Sit | 0.005 | 0.01 | 0.98 | 0.005 | 0 |
| Squat | 0 | 0.085 | 0.02 | 0.885 | 0.01 |
| Fall | 0.005 | 0.005 | 0 | 0 | 0.99 |

| Model | Scheme | Target (A), 0% | Target (A), 25% | Target (B), 0% | Target (B), 25% |
|---|---|---|---|---|---|
| AD | DADA | 88.7% | 93.1% | 89.9% | 95.2% |
| AD | ADA | 87.8% | 90.4% | 87.9% | 91.9% |
| AD | MK-MMD | 85.3% | 88.4% | 86.2% | 89.9% |
| AD | MMD | 76.9% | 80.1% | 80.8% | 84.0% |

| Model | Scheme | Target (A), 0% | Target (A), 25% | Target (B), 0% | Target (B), 25% |
|---|---|---|---|---|---|
| AD | DADA | 94.4% | 96.7% | 95.1% | 97.4% |
| AD | ADA | 93.5% | 94.9% | 94.8% | 95.6% |
| AD | MK-MMD | 91.9% | 92.3% | 93.3% | 93.9% |
| AD | MMD | 83.2% | 83.9% | 87.6% | 88.7% |

| Model | Domain | Scheme | Ratio 1:1:1:1:1, 0% | 25% | 50% | 75% | 100% | Ratio 1:1:0.5:1:0.5, 0% | 25% | 50% | 75% | 100% | Ratio 1:1:1:1:0.1, 0% | 25% | 50% | 75% | 100% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AD | target (A) | DADA | 94.4% | 96.7% | 96.8% | 96.9% | 97.1% | 93.9% | 96.0% | 96.1% | 96.2% | 96.4% | 89.5% | 91.2% | 91.7% | 92.8% | 93.1% |
| AD | target (A) | ADA | 93.5% | 94.9% | 95.5% | 95.4% | 95.2% | 88.1% | 89.7% | 91.7% | 93.3% | 94.2% | 87.2% | 89.7% | 89.2% | 88.5% | 88.3% |
| AD | target (A) | MK-MMD | 91.9% | 92.3% | 93.8% | 95.9% | 96.9% | 91.1% | 91.7% | 93.8% | 95.1% | 96.3% | 87.0% | 88.9% | 90.5% | 91.8% | 93.1% |
| AD | target (A) | MMD | 83.2% | 83.9% | 85.1% | 89.3% | 97.0% | 83.7% | 85.1% | 87.7% | 91.3% | 96.2% | 84.9% | 85.7% | 88.2% | 90.7% | 93.0% |
| AD | target (B) | DADA | 95.1% | 97.4% | 97.6% | 97.8% | 98.1% | 94.7% | 96.8% | 97.6% | 97.8% | 97.9% | 92.1% | 93.7% | 94.0% | 94.7% | 95.4% |
| AD | target (B) | ADA | 94.9% | 95.6% | 96.3% | 95.9% | 95.5% | 89.3% | 90.9% | 93.3% | 95.1% | 96.1% | 89.3% | 90.5% | 93.7% | 93.4% | 92.9% |
| AD | target (B) | MK-MMD | 93.3% | 93.9% | 94.4% | 96.1% | 97.5% | 92.8% | 93.1% | 94.4% | 96.1% | 97.5% | 89.4% | 91.5% | 92.1% | 93.6% | 95.4% |
| AD | target (B) | MMD | 87.6% | 88.7% | 91.0% | 94.8% | 97.4% | 86.6% | 87.6% | 91.0% | 94.8% | 97.1% | 85.1% | 86.6% | 89.1% | 92.2% | 95.4% |

| Scheme | Processing Time (s) | Pre-Train Time per Epoch (s) | Fine-Tuning Time per Epoch (s) | Total Time per Epoch (s) |
|---|---|---|---|---|
| AD-DADA | 97 | 162 | 102 | 264 |
| AD-ADA | 97 | 162 | 97 | 259 |
| AD-MK-MMD | 97 | 162 | 147 | 309 |
| AD-MMD | 97 | 162 | 111 | 273 |
| DANGR | 41 | 101 | 84 | 185 |
| WiLlSensing | 59 | 84 | 69 | 153 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.-S.; Chang, Y.-C.; Li, C.-Y. A Semi-Supervised Transfer Learning with Dynamic Associate Domain Adaptation for Human Activity Recognition Using WiFi Signals. Sensors 2021, 21, 8475. https://doi.org/10.3390/s21248475
