Experimental Validation of LiDAR Sensors Used in Vehicular Applications by Using a Mobile Platform for Distance and Speed Measurements

Abstract

1. Introduction

1.1. Distance Measurement Methods

- Methods using the principles of optics;

- Non-optical methods (sonar, capacitive, inductive).

- Passive methods—in which the detection system does not illuminate the target object; the illumination comes from ambient light or from the target object itself;

- Active methods—in which the detection system also emits light radiation; depending on the method used, it may be a combination of monochromatic, continuous, pulse-like, coherent or polarized radiation.

- Interferometry—this method uses the wave aspect of light radiation and the fact that these waves can interfere with each other;

- Geometric methods—one example of this type of method is geometric triangulation, which is based on spatial relationships between the source, the target object and the detector sensor;

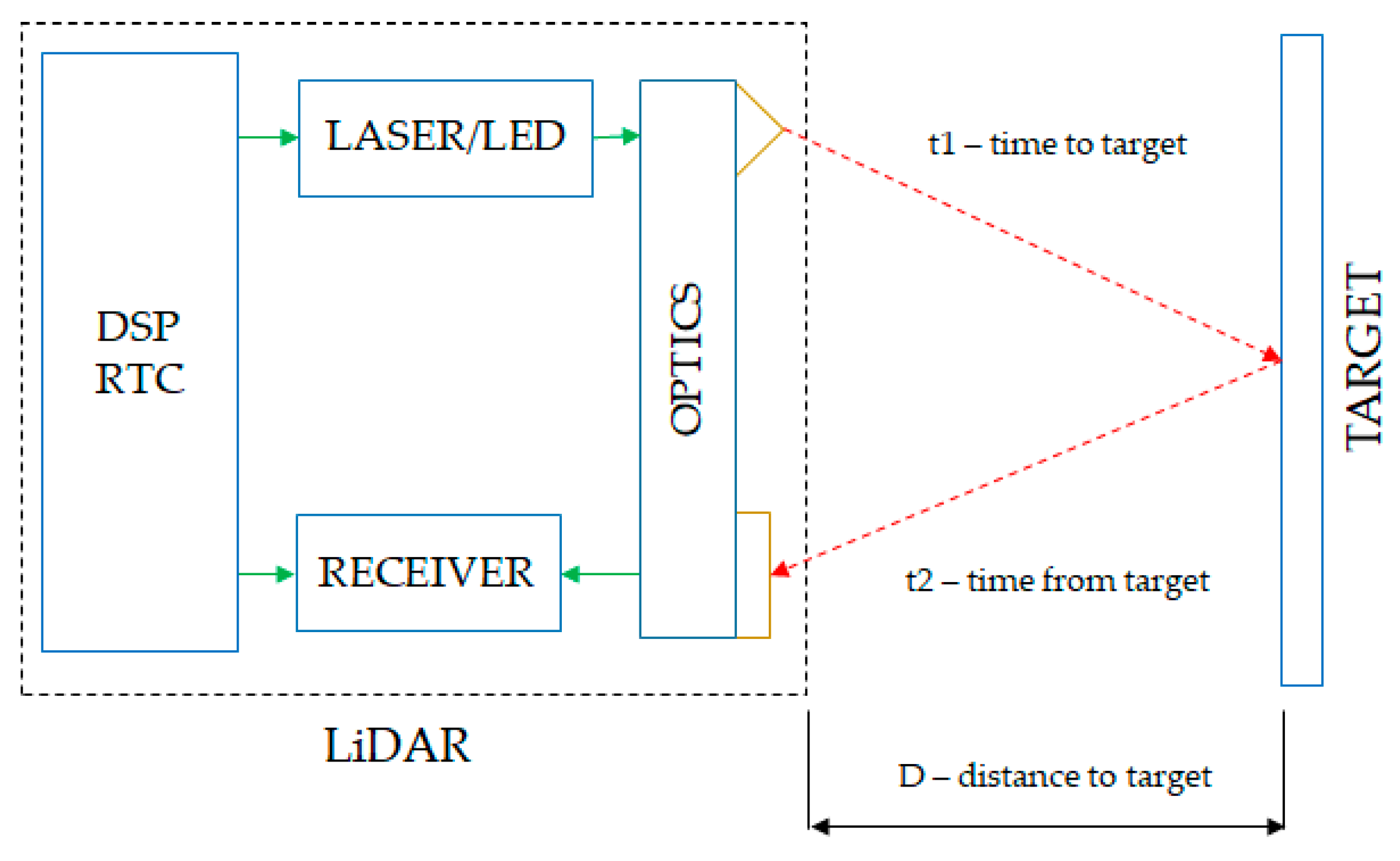

- Methods based on time measurement—these methods rely on the fact that the speed of light is finite and constant within a given propagation medium. The distance is obtained by measuring the time elapsed from the emission of the light pulse until the reflected radiation is received by the sensor. Figure 1 shows the general principle of operation of this method.
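The time-measurement principle in the last item can be written as d = v·t/2, where t is the measured emission-to-reception time and v is the speed of light in the propagation medium. The following is a minimal sketch under that assumption; the function name and the `refractive_index` parameter are illustrative and do not come from the paper.

```python
# Speed of light in vacuum (exact, by SI definition).
SPEED_OF_LIGHT_VACUUM_M_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float, refractive_index: float = 1.0) -> float:
    """Estimate the target distance from a time-of-flight measurement.

    round_trip_time_s: time from pulse emission to reception (seconds).
    refractive_index:  hypothetical parameter modelling the medium;
                       1.0 approximates vacuum or air.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    if refractive_index < 1.0:
        raise ValueError("refractive index must be at least 1.0")
    # Light travels more slowly in a denser medium.
    speed_in_medium = SPEED_OF_LIGHT_VACUUM_M_S / refractive_index
    # The pulse covers the sensor-to-target distance twice (out and back).
    return speed_in_medium * round_trip_time_s / 2.0


# Example: the round-trip time that corresponds to a 5 m target.
example_time_s = 2 * 5.0 / SPEED_OF_LIGHT_VACUUM_M_S
example_distance_m = tof_distance_m(example_time_s)
```

At the distances tested here (up to 5 m) the round-trip time is in the tens of nanoseconds, which is why the timing electronics dominate the achievable resolution.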

1.2. LiDAR Sensors Operation Principle

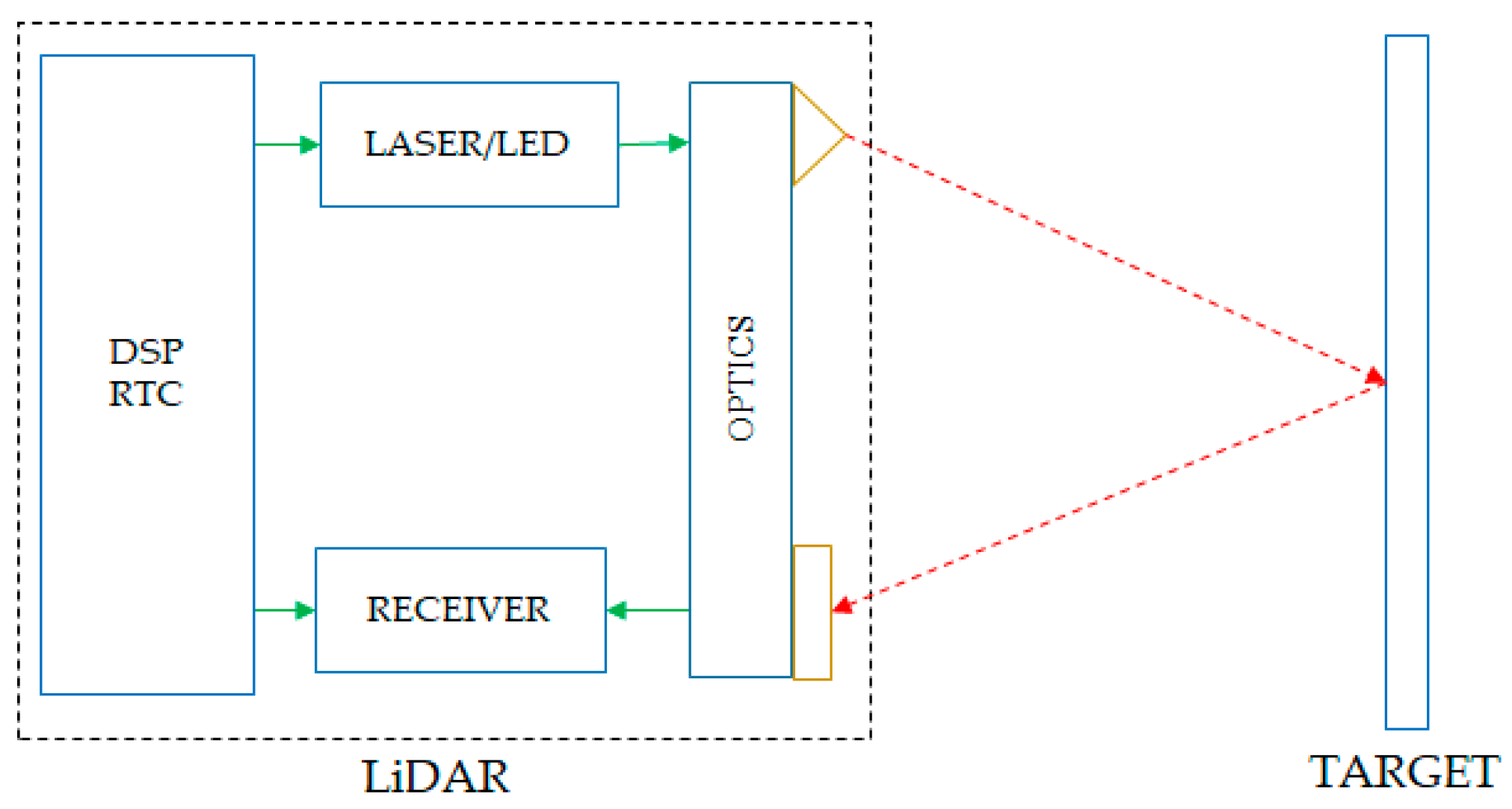

- Lighting unit—this illuminates the scanned object with a pulse of light generated by a LASER or LED;

- Optical system—a lens accumulates reflected light and projects it onto the detector sensor;

- Image sensor—the main component of the system; a large majority of image sensors are composed of semiconductor materials (photodiode, CCD, MOS);

- Control electronics—synchronizes the emitter and the receiver so that the measurements are correct;

- User interface—responsible for reporting the measurements over an external interface such as USB, CAN or Ethernet connection.

- Simplicity—LiDAR is compact and the lighting unit is placed next to the lens, thus reducing the size of the system;

- Efficient algorithm—distance information is extracted directly from the measurement of the flight time;

- Speed—such sensors are able to measure distances to objects in a certain area in a single light pulse sweep.

The disadvantages of LiDAR sensors are as follows:

- Background light—can interfere with the normal functioning of the sensor, generating false results.

- Interference—if multiple cameras are used at the same time, they can interfere with each other, with both generating erroneous results.

1.3. LiDAR Sensor Vehicle Safety Application

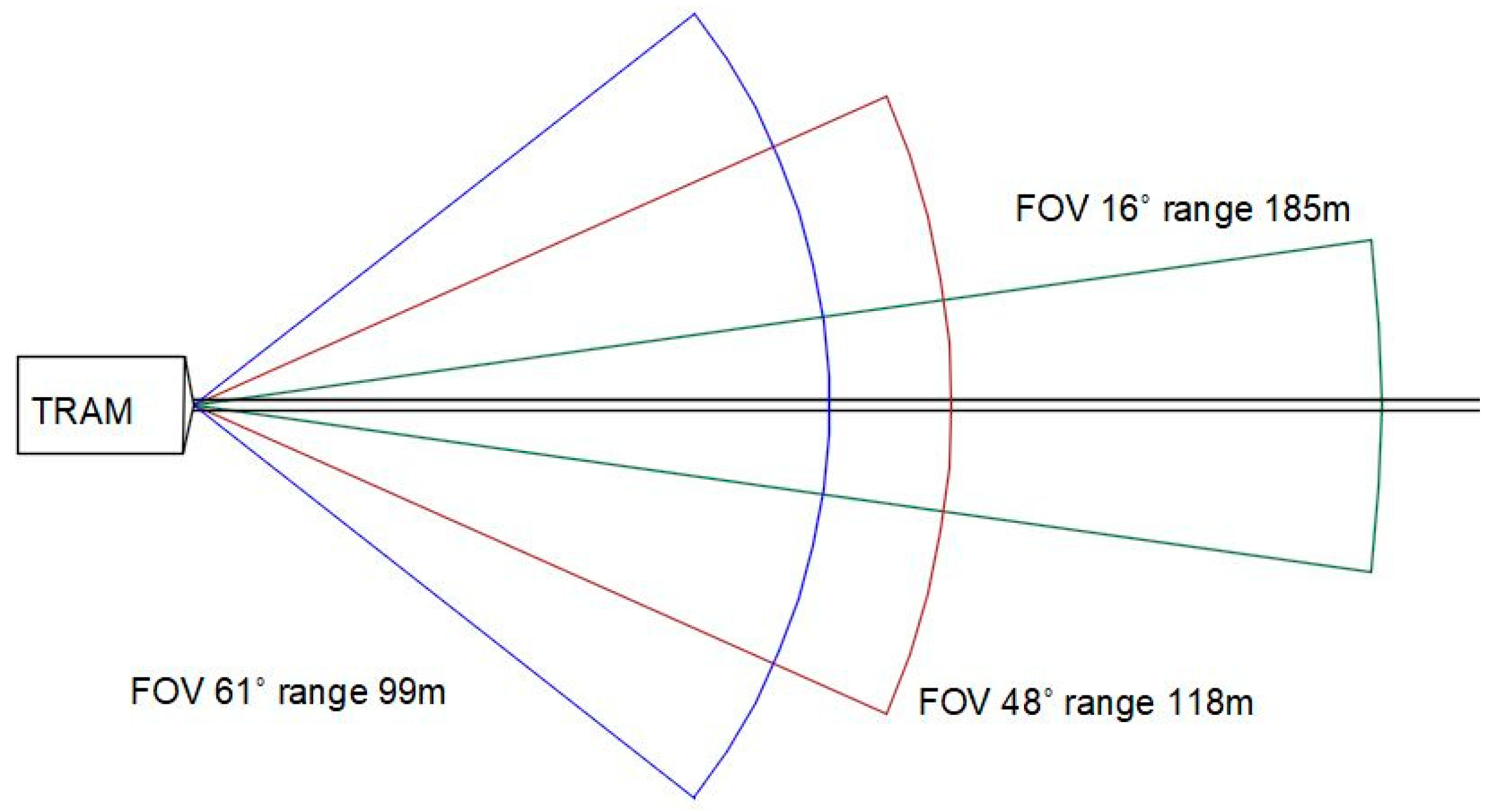

- Short-range sensors are dedicated to virtual machinery;

- Medium-range sensors have been developed for small robots and automated tools;

- Long-range sensors are used for safety tools and perimeter alarms;

- Wide-range sensors have been built for safety tools for use in automated machinery;

- 360° sensors are used for automated vehicle driving.

2. Materials and Methods

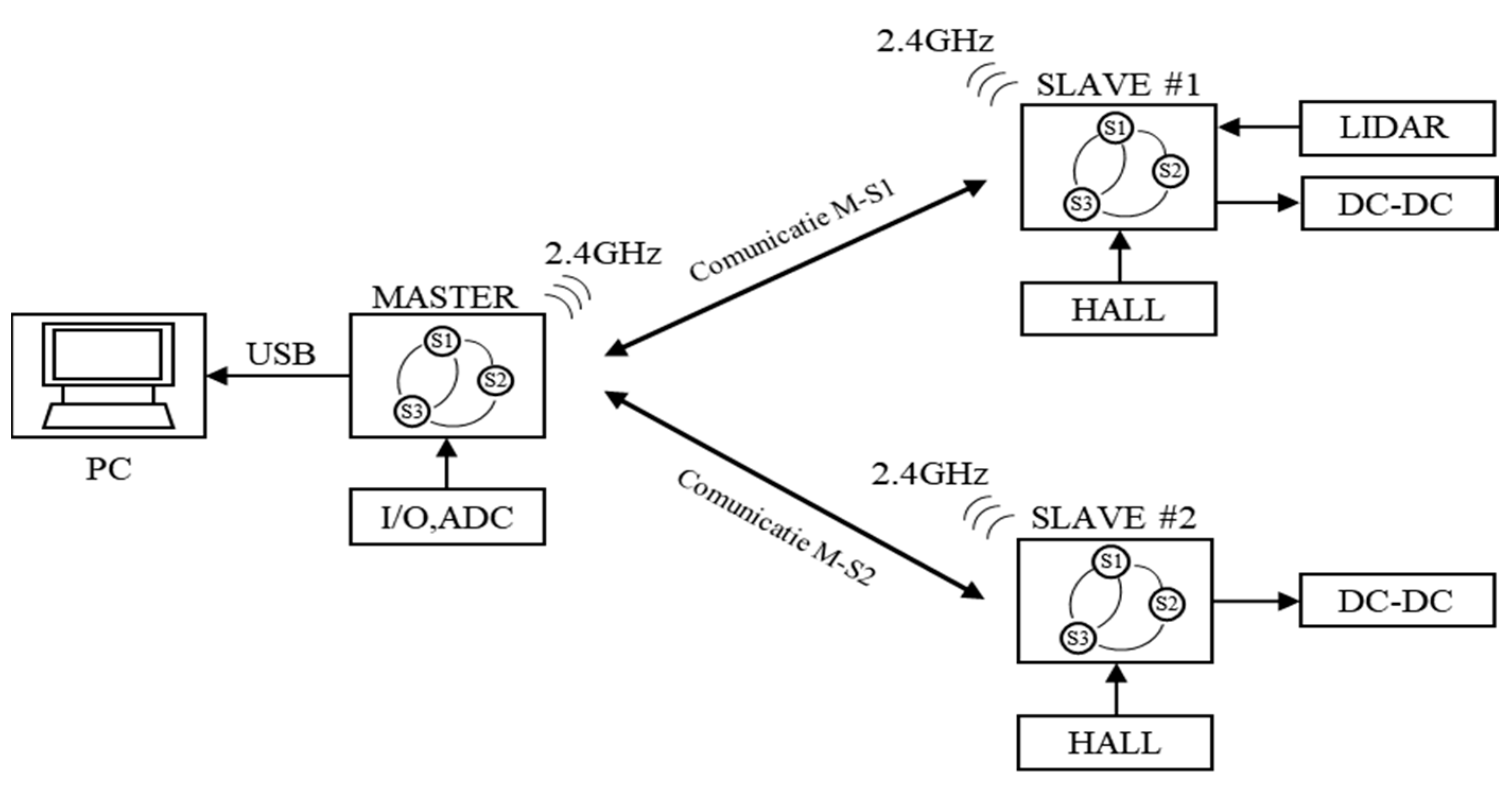

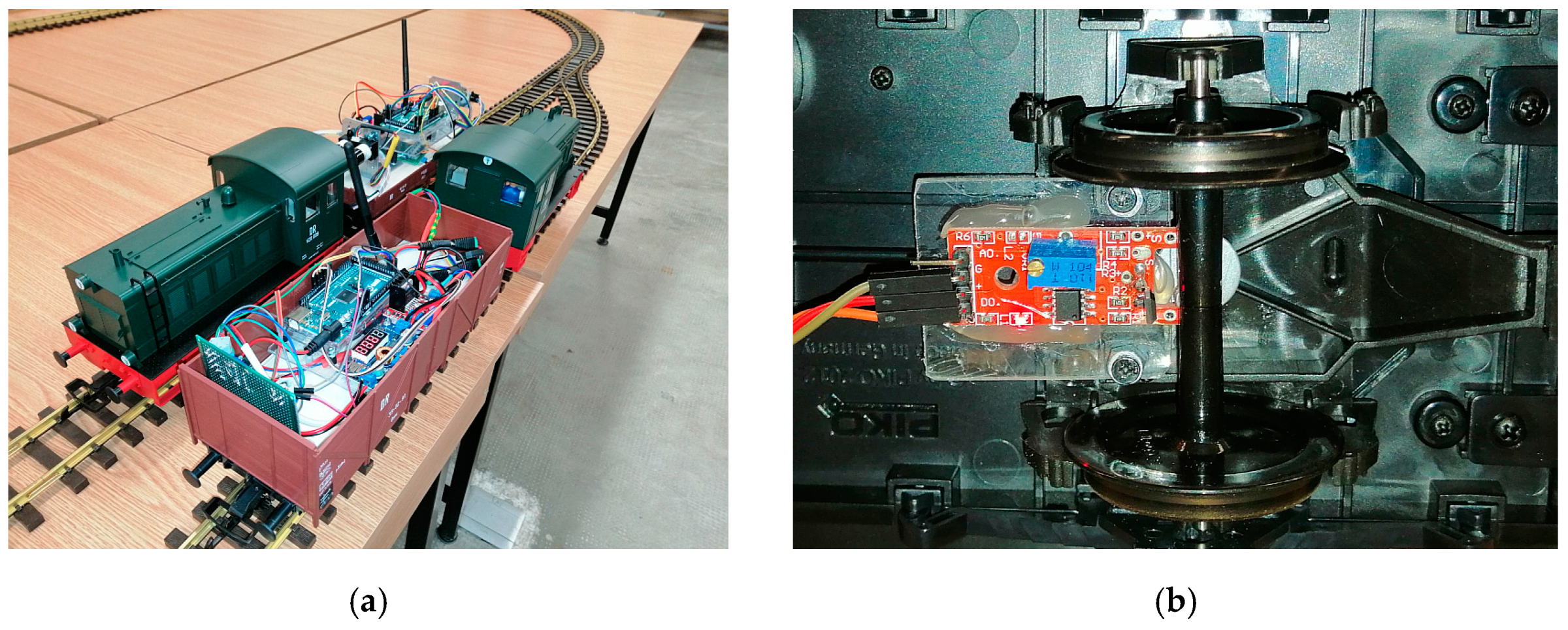

2.1. Test Platform Hardware

2.1.1. Master Control Unit for Remote Control and PC Interface

2.1.2. Train Unit with Distance Sensor

2.1.3. Train Unit without Distance Sensor

2.2. Test Platform Software

2.2.1. Communication Software

2.2.2. Master Controller Software

- SETUP state—in which all the libraries, variables and constants of the program were defined; this was also where the hardware was configured;

- LOOP state—in which the actual functions of the program were implemented.

- Functions responsible for network monitoring, packet reading and updating;

- Functions responsible for unit control.

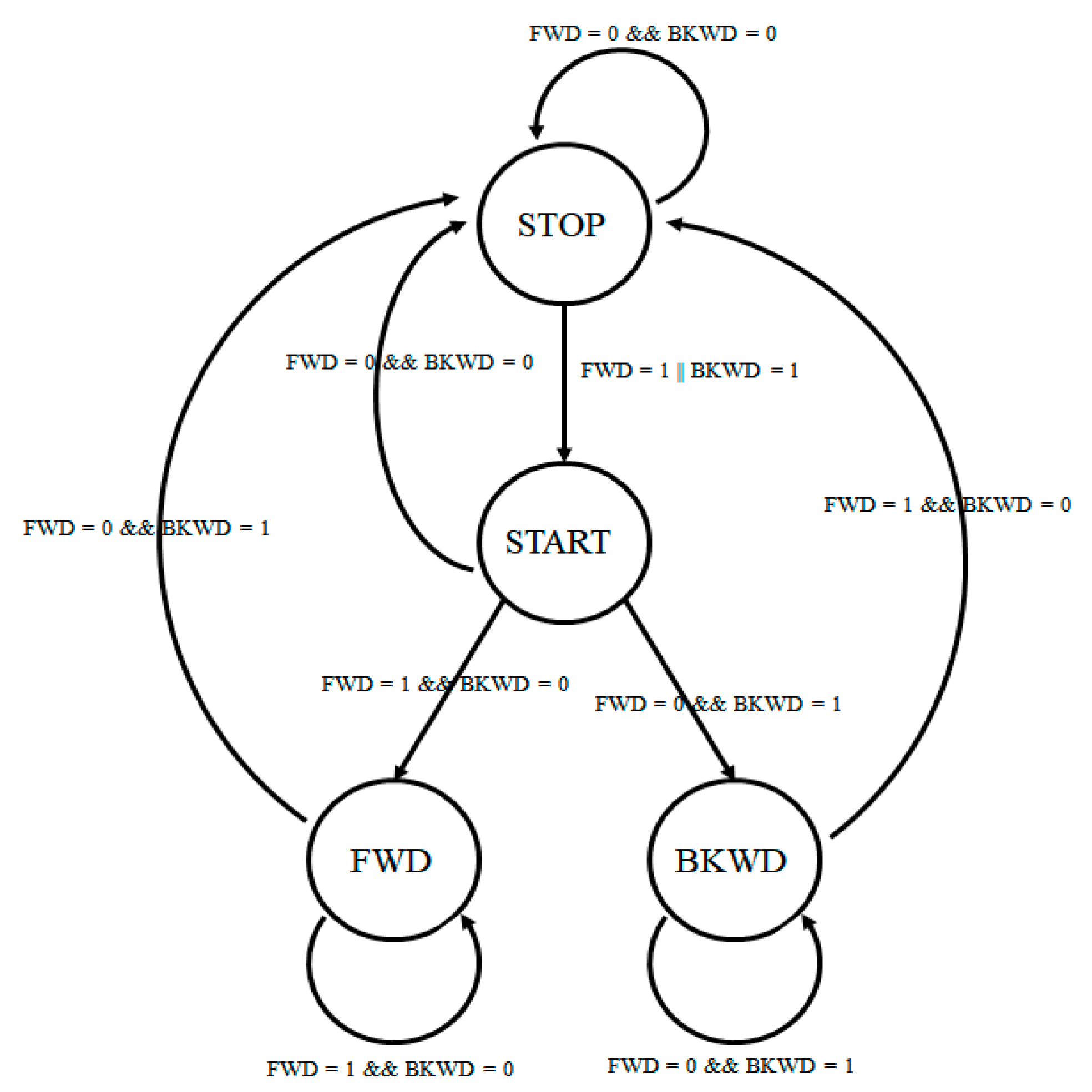

2.2.3. Train Control Software

2.2.4. PC Software

3. Results

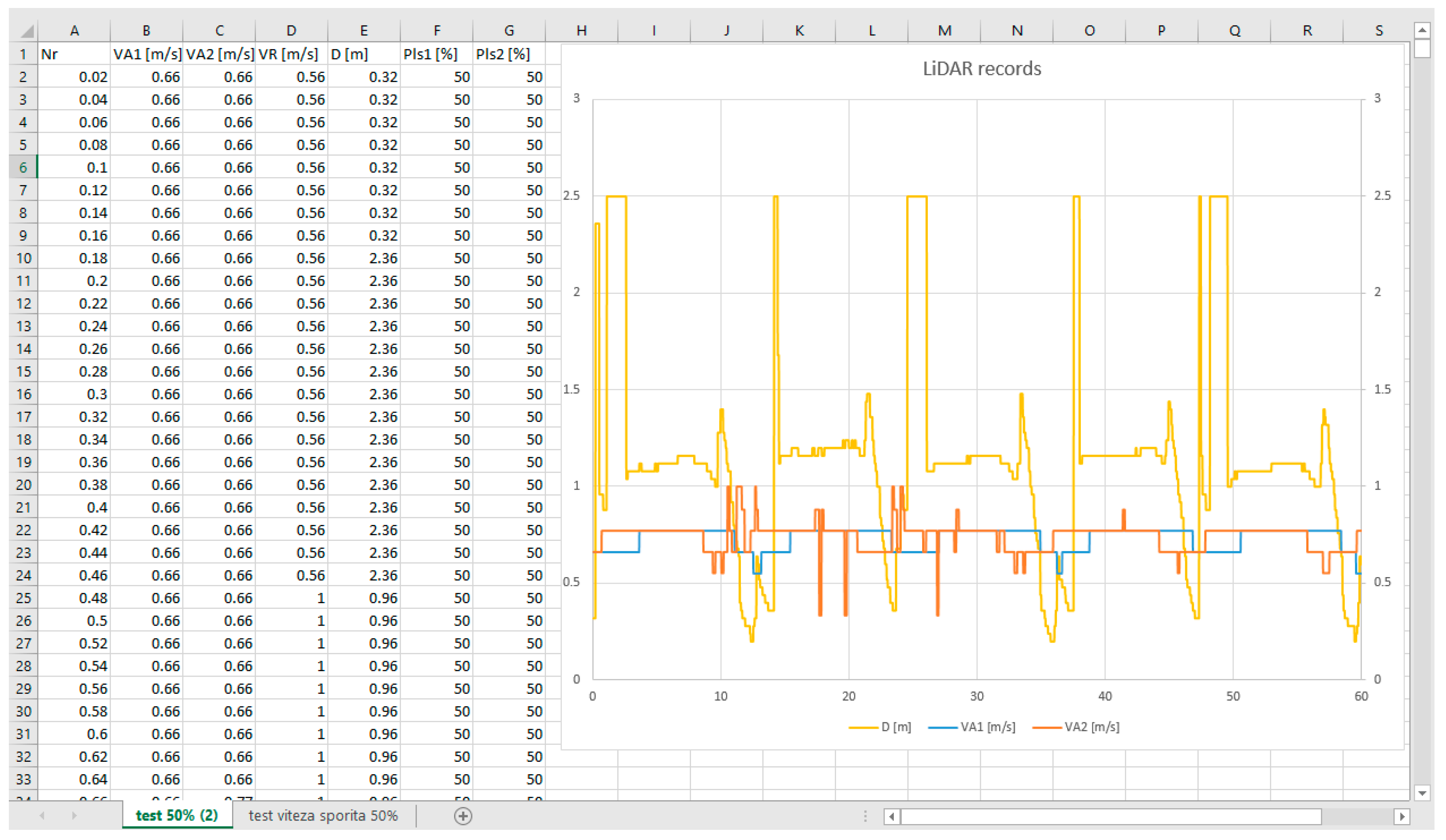

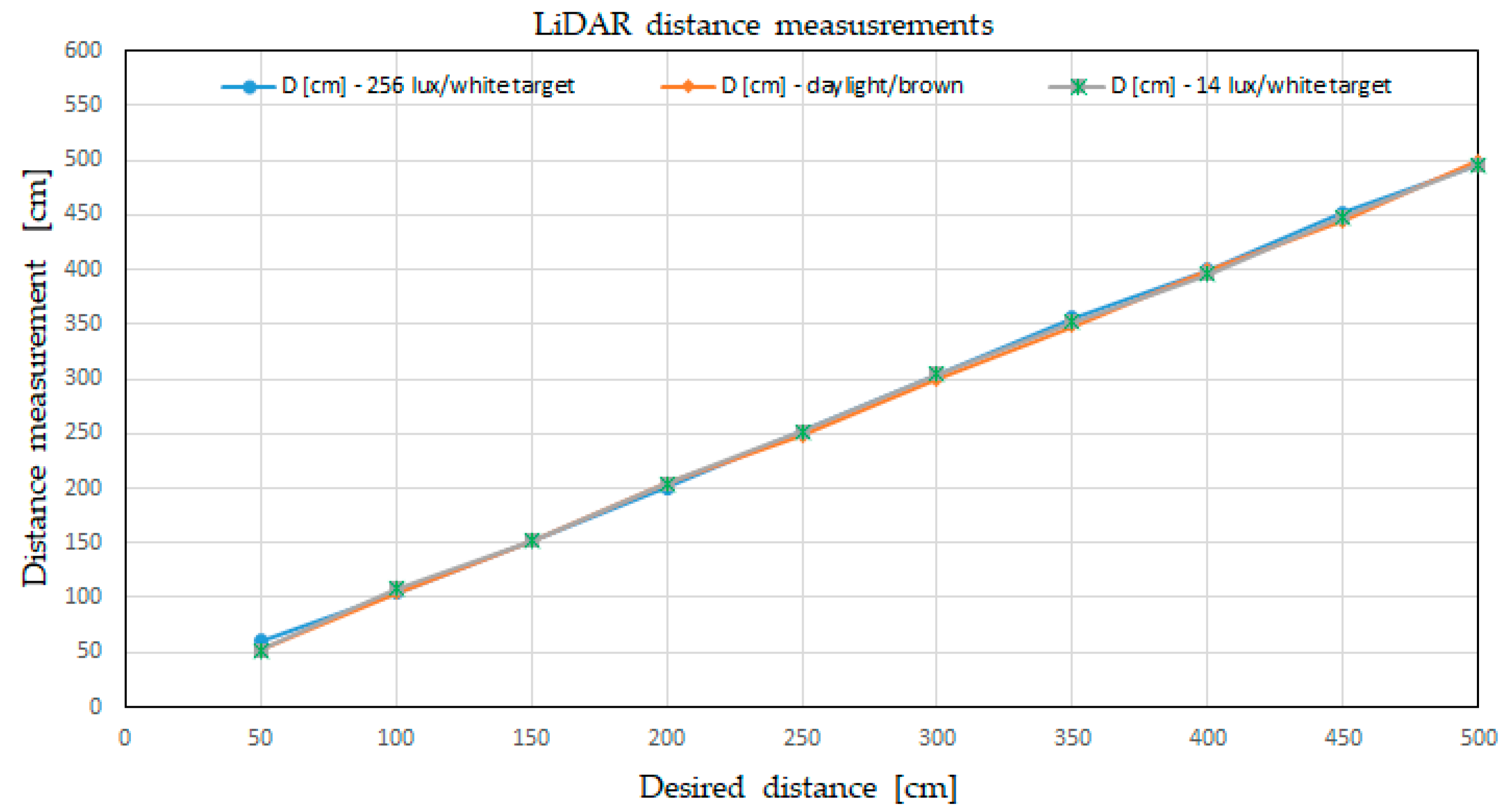

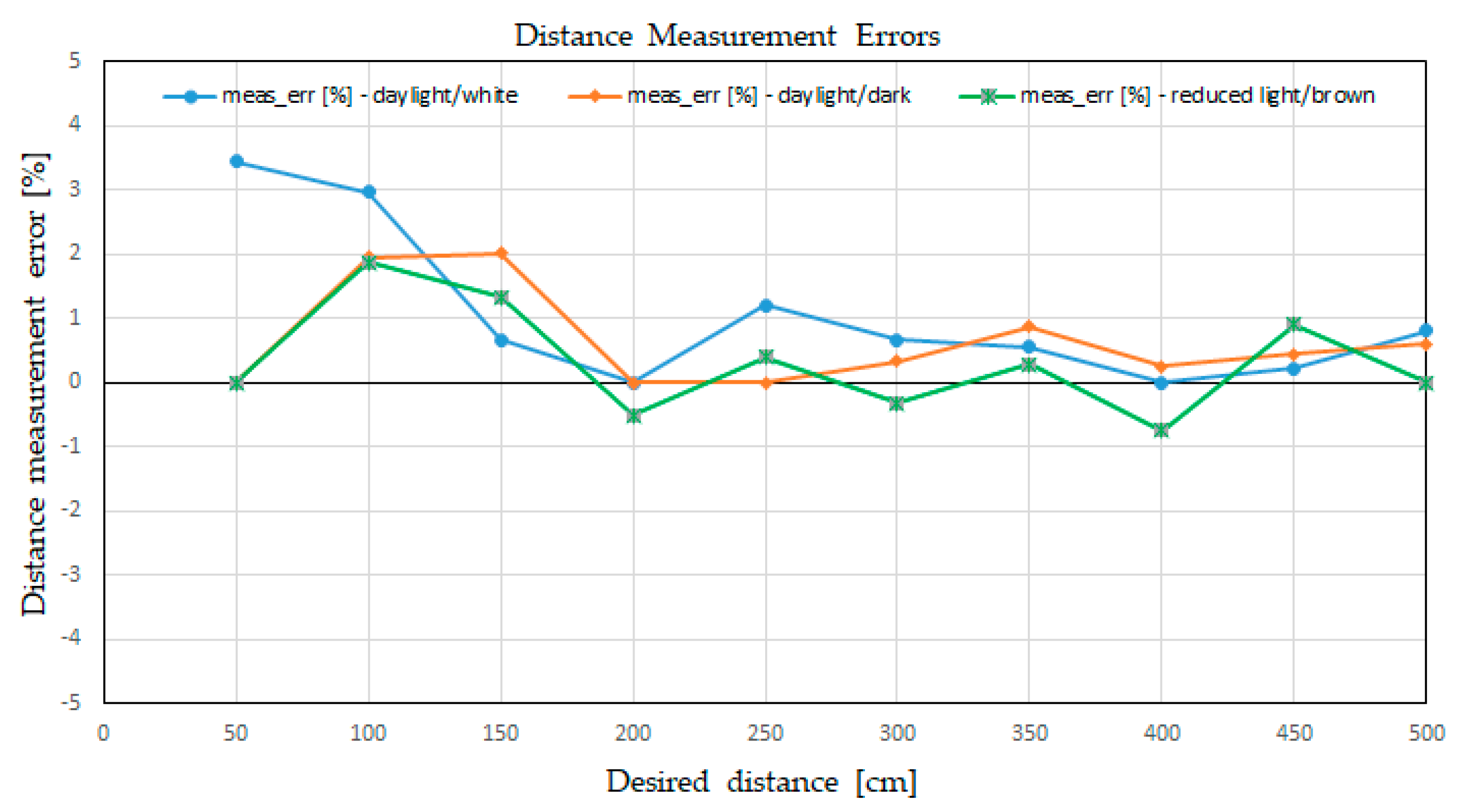

3.1. Distance Measurement

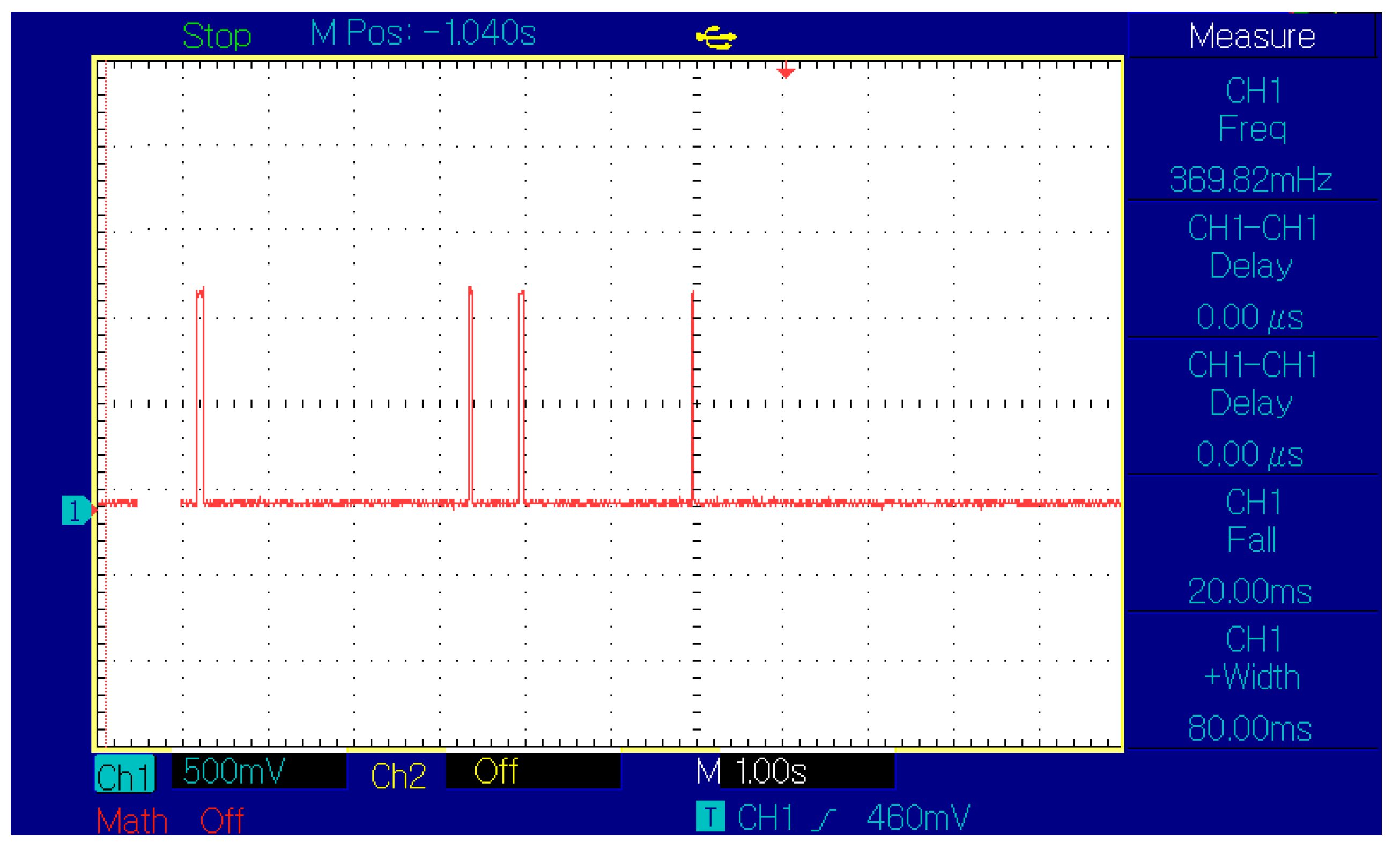

3.2. Speed Measurement

3.2.1. Speed Measurement Using the on-Board Speed Sensor

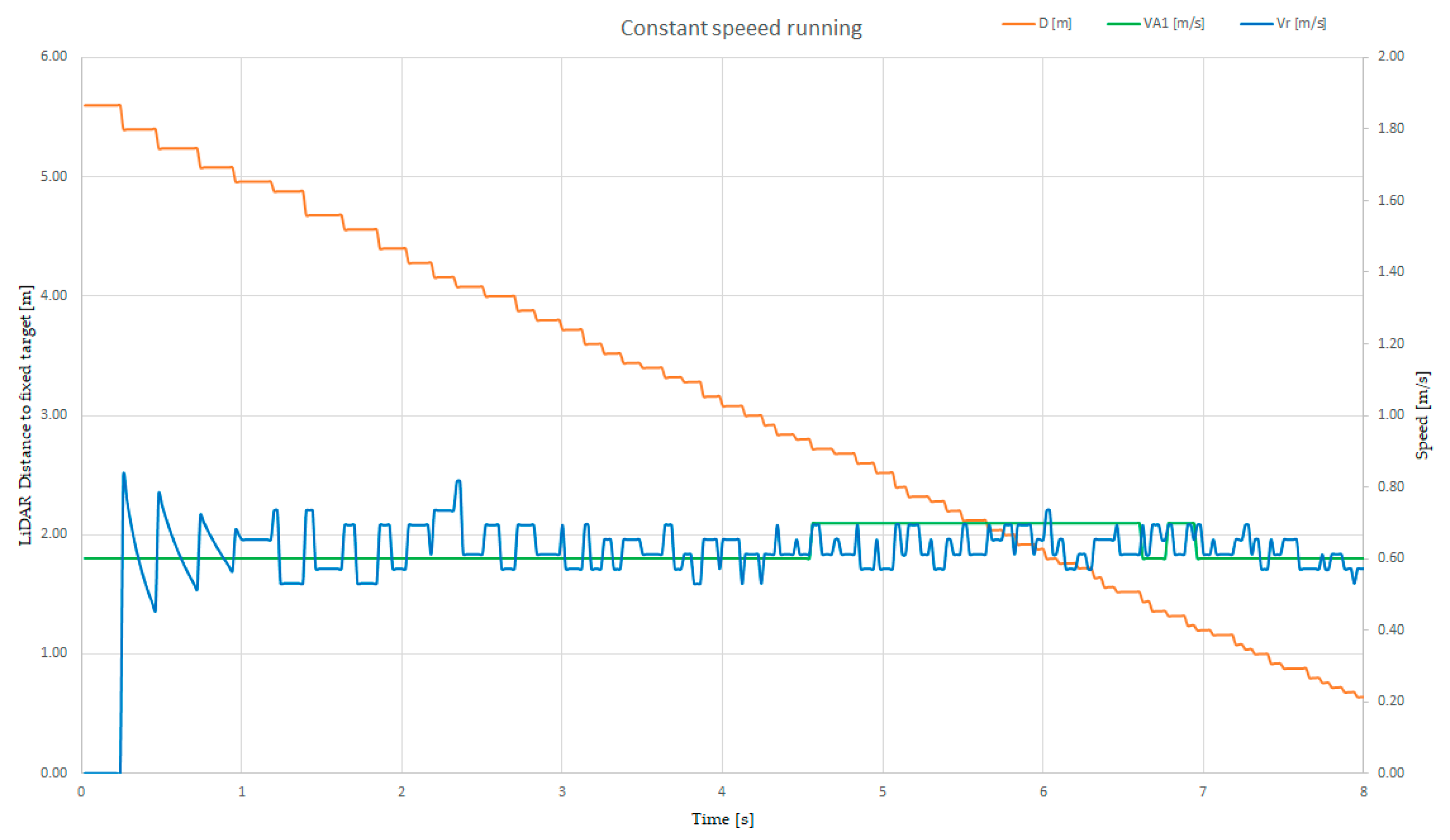

3.2.2. Speed Measurement Using the Reported Distance

3.3. Relative Speed Measurement

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Type of Distance Measurement Method | | | |
|---|---|---|---|
| Optical methods | Passive | Geometrical | - |
| Optical methods | Active | Geometrical | - |
| Optical methods | Active | Time-of-flight | Direct methods |
| Optical methods | Active | Time-of-flight | Indirect methods |
| Optical methods | Active | Interferometry | - |
| Non-optical methods | - | - | - |

| Level of Autonomy | Example of Applications | LiDAR Adoption |
|---|---|---|
| Level 1 | Automatic Emergency Braking (AEB), Adaptive Cruise Control (ACC), Lane Keep Assist (LKA) | Little or no LiDAR |
| Level 2 | Parking Assist (PA), Traffic Jam Assist (TJA) | Some will use LiDAR |
| Level 3 | Highway pilot | Most will use LiDAR |
| Level 4 | Automated Urban Mobility | LiDAR is necessary |
| Level 5 | Full Automation | LiDAR is necessary |

| Pin 4 | Pin 5 | State |
|---|---|---|
| 0 | 0 | Stop |
| 0 | 1 | Forward |
| 1 | 0 | Backward |
| 1 | 1 | Stop |
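The pin-to-state table above is a two-bit truth table, so it can be expressed as a lookup. The sketch below assumes only the four combinations listed; the names `DRIVE_STATES` and `decode_drive_state` are hypothetical and do not reflect the authors' firmware.

```python
# Truth table copied from the Pin 4 / Pin 5 table; keys are (pin4, pin5).
DRIVE_STATES = {
    (0, 0): "Stop",
    (0, 1): "Forward",
    (1, 0): "Backward",
    (1, 1): "Stop",
}


def decode_drive_state(pin4: int, pin5: int) -> str:
    """Return the drive state for a pair of logic levels (0 or 1)."""
    try:
        return DRIVE_STATES[(pin4, pin5)]
    except KeyError:
        raise ValueError("pin levels must be 0 or 1") from None


# Example: walk through every combination in table order.
all_states = [decode_drive_state(p4, p5) for p4 in (0, 1) for p5 in (0, 1)]
```

Both equal-level combinations map to Stop, so the drive moves only when the two pins disagree.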

| Desired | (cm) | 50 | 100 | 150 | 200 | 250 | 300 | 350 | 400 | 450 | 500 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dmas | (cm) | 58 | 101 | 151 | 200 | 249 | 302 | 354 | 400 | 451 | 492 |
| DLiDAR | (cm) | 60 | 104 | 152 | 200 | 252 | 304 | 356 | 400 | 452 | 496 |
| LiDAR Error | (%) | 3.44 | 2.97 | 0.66 | 0 | 1.2 | 0.67 | 0.56 | 0 | 0.22 | 0.81 |

| Desired | (cm) | 50 | 100 | 150 | 200 | 250 | 300 | 350 | 400 | 450 | 500 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dmas | (cm) | 52 | 102 | 149 | 204 | 248 | 299 | 345 | 399 | 442 | 497 |
| DLiDAR | (cm) | 52 | 104 | 152 | 204 | 248 | 300 | 348 | 400 | 444 | 500 |
| LiDAR Error | (%) | 0 | 1.96 | 2.01 | 0 | 0 | 0.34 | 0.87 | 0.25 | 0.45 | 0.6 |

| Desired | (cm) | 50 | 100 | 150 | 200 | 250 | 300 | 350 | 400 | 450 | 500 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dmas | (cm) | 52 | 106 | 150 | 205 | 251 | 305 | 351 | 399 | 444 | 496 |
| DLiDAR | (cm) | 52 | 108 | 152 | 204 | 252 | 304 | 352 | 396 | 448 | 496 |
| LiDAR Error | (%) | 0 | 1.88 | 1.33 | −0.5 | 0.39 | −0.32 | 0.28 | −0.75 | 0.9 | 0 |
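The distance tables do not state how the LiDAR error row is computed. The tabulated values are consistent with a relative error of the LiDAR reading against the Dmas value, (DLiDAR − Dmas) / Dmas × 100, reported as a magnitude in the first two tables and with its sign in the third. The sketch below works under that inferred assumption; the function name and the `signed` flag are illustrative.

```python
def lidar_error_percent(d_lidar_cm: float, d_ref_cm: float, signed: bool = False) -> float:
    """Relative error of a LiDAR reading against a reference distance, in percent.

    Assumed formula (inferred from the tabulated values, not stated in the text):
        (d_lidar - d_ref) / d_ref * 100
    With signed=False the magnitude is returned.
    """
    if d_ref_cm <= 0:
        raise ValueError("reference distance must be positive")
    error = (d_lidar_cm - d_ref_cm) / d_ref_cm * 100.0
    return error if signed else abs(error)


# Example: a few (DLiDAR, Dmas) pairs taken from the tables above.
pairs_cm = [(60, 58), (104, 101), (396, 399)]
errors = [lidar_error_percent(lidar, ref, signed=True) for lidar, ref in pairs_cm]
```

Because the LiDAR readings in the tables are all even numbers of centimetres, a fixed offset of a centimetre or two weighs most heavily in percentage terms at the shortest distances.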

| Average speed from mobile platform, vs | (m/s) | 0.99 | 0.88 | 0.66 | 0.22 |
|---|---|---|---|---|---|
| Timing distance, d | (cm) | 400 | 400 | 400 | 400 |
| Timing t1 | (s) | 4.08 | 4.58 | 6.06 | 15.04 |
| Timing t2 | (s) | 4.18 | 4.62 | 6.05 | 14.72 |
| Timing t3 | (s) | 4.12 | 4.63 | 6.17 | 16.62 |
| Average time, tav | (s) | 4.1264 | 4.61 | 6.093 | 15.46 |
| Speed from average time, va | (m/s) | 0.969 | 0.867 | 0.656 | 0.258 |
| Speed error | (%) | 2.12 | 1.47 | 0.61 | 17.6 |
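The rows of the speed table can be reproduced approximately from the three timings per run: the average time gives a reference speed over the 400 cm timing distance, which is then compared with the speed reported by the mobile platform. The sketch below assumes the error is taken relative to the platform-reported speed, which matches the tabulated values to within rounding; the function names are illustrative.

```python
from statistics import mean


def timed_speed_m_s(distance_cm: float, timings_s: list[float]) -> float:
    """Speed obtained from a fixed distance and the mean of repeated timings."""
    if not timings_s:
        raise ValueError("at least one timing is required")
    return (distance_cm / 100.0) / mean(timings_s)


def speed_error_percent(v_platform_m_s: float, v_timed_m_s: float) -> float:
    """Magnitude of the deviation, relative to the platform-reported speed (assumed)."""
    if v_platform_m_s <= 0:
        raise ValueError("platform speed must be positive")
    return abs(v_platform_m_s - v_timed_m_s) / v_platform_m_s * 100.0


# Example: second column of the table (platform speed 0.88 m/s).
v_timed = timed_speed_m_s(400, [4.58, 4.62, 4.63])
error = speed_error_percent(0.88, v_timed)
```

The lowest-speed run has by far the largest spread between its three timings (14.72 s to 16.62 s), which goes some way towards explaining why its speed error is much larger than for the other runs.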

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vasile, I.; Tudor, E.; Sburlan, I.-C.; Gheți, M.-A.; Popa, G. Experimental Validation of LiDAR Sensors Used in Vehicular Applications by Using a Mobile Platform for Distance and Speed Measurements. Sensors 2021, 21, 8147. https://doi.org/10.3390/s21238147
