Robust Data Association Using Fusion of Data-Driven and Engineered Features for Real-Time Pedestrian Tracking in Thermal Images

Abstract

1. Introduction

- The number of objects within the sensor's field of view (FOV) may be unknown, and these objects may be in different states.

- Objects enter and leave the sensor FOV, so reliable object management and object identity management are required.

- Because the object detector is not perfect, it is susceptible to two kinds of errors: missed detections (due to environmental conditions, object properties, or occlusions) and false detections, or clutter (detections that are not caused by an object). Both types of error can lead to disastrous outcomes if they are not handled correctly.

- Origin uncertainty: There is no prior knowledge of how the new measurements relate to previous sensor data; and

- Motion uncertainty: Objects can follow multiple motion patterns, which may change between consecutive frames.
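Challenges of this kind are commonly handled by first gating out implausible track-detection pairs and then solving a global assignment problem, for example with the Hungarian method [13]. The sketch below is a minimal illustration, not the paper's exact procedure. It assumes a precomputed cost matrix (lower means more likely the same pedestrian, for instance 1 minus an association score), a hypothetical `gate` threshold, and SciPy's `linear_sum_assignment` solver. Unmatched tracks correspond to missed detections or pedestrians leaving the FOV; unmatched detections correspond to new pedestrians or clutter.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def associate(cost, gate):
    """Gated global assignment of detections to tracks (illustrative sketch).

    cost: (num_tracks, num_detections) array, lower = more likely the same object.
    gate: hypothetical threshold; pairs with cost above it are never matched.
    Returns (matches, unmatched_tracks, unmatched_detections).
    """
    cost = np.asarray(cost, dtype=float)
    num_tracks, num_dets = cost.shape
    # Gated-out pairs get a large finite cost so the solver always has a solution;
    # any such pair the solver still picks is discarded below.
    padded = np.where(cost <= gate, cost, gate + 1e6)
    rows, cols = linear_sum_assignment(padded)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= gate]
    matched_tracks = {t for t, _ in matches}
    matched_dets = {d for _, d in matches}
    # Unmatched tracks: missed detection, or the object left the FOV.
    unmatched_tracks = [t for t in range(num_tracks) if t not in matched_tracks]
    # Unmatched detections: a new object entering the FOV, or clutter.
    unmatched_dets = [d for d in range(num_dets) if d not in matched_dets]
    return matches, unmatched_tracks, unmatched_dets


matches, lost, new = associate([[0.1, 0.9, 5.0], [0.8, 0.2, 5.0]], gate=1.0)
print(matches, lost, new)  # [(0, 0), (1, 1)] [] [2]
```

Replacing gated pairs with a large finite cost, rather than infinity, keeps the solver feasible when a track has no admissible detection. The final filter then turns such forced pairings back into unmatched tracks and detections.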

- We designed a family of five Siamese convolutional neural networks (CNNs) whose outputs are combined into a data-driven, appearance-based association score that remains usable under partial occlusion. All five networks share a similar base architecture, whose design is also a contribution of this paper.

- We propose a uniform local binary pattern (LBP) descriptor computed from edge orientations. This engineered feature is used to compute a similarity score between measurements and tracks, and the resulting score is included in the data association score to improve adaptability to unknown scenarios.

- We created a dataset for training CNNs that implement an appearance-based data association function for tracking pedestrians in thermal images. The dataset is publicly available.
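The first two contributions describe an association score that combines the outputs of five Siamese networks with an engineered LBP similarity. The exact fusion rule is not given in this section. The following is a minimal sketch assuming that each network returns a similarity in [0, 1] for every track-detection pair, that the ensemble is averaged, and that a hypothetical `weight` mixes the data-driven and engineered parts linearly. The function name and the weighting scheme are illustrative assumptions.

```python
import numpy as np


def fused_association_score(siamese_scores, engineered_score, weight=0.5):
    """Fuse ensemble appearance scores with an engineered score (illustrative sketch).

    siamese_scores: (num_nets, num_tracks, num_detections) similarities in [0, 1],
        one slice per Siamese network (the paper uses five networks).
    engineered_score: (num_tracks, num_detections) similarities in [0, 1],
        for example an LBP histogram similarity.
    weight: hypothetical share of the data-driven part in the fused score.
    """
    siamese_scores = np.asarray(siamese_scores, dtype=float)
    engineered_score = np.asarray(engineered_score, dtype=float)
    if siamese_scores.shape[1:] != engineered_score.shape:
        raise ValueError("both inputs must cover the same track/detection pairs")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    # Assumed fusion rule: plain average over the network ensemble.
    data_driven = siamese_scores.mean(axis=0)
    # A convex combination keeps the fused score in [0, 1].
    return weight * data_driven + (1.0 - weight) * engineered_score


siamese = np.full((5, 1, 2), [0.9, 0.1])  # five networks, one track, two detections
engineered = np.array([[0.7, 0.3]])
print(fused_association_score(siamese, engineered))  # [[0.8 0.2]]
```

Because the fused score stays in [0, 1], it can be turned into an assignment cost as 1 minus the score.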

2. Related Work

2.1. Feature Engineering-Based Tracking Methods

2.2. Data-Driven Tracking Methods

3. Proposed Solution

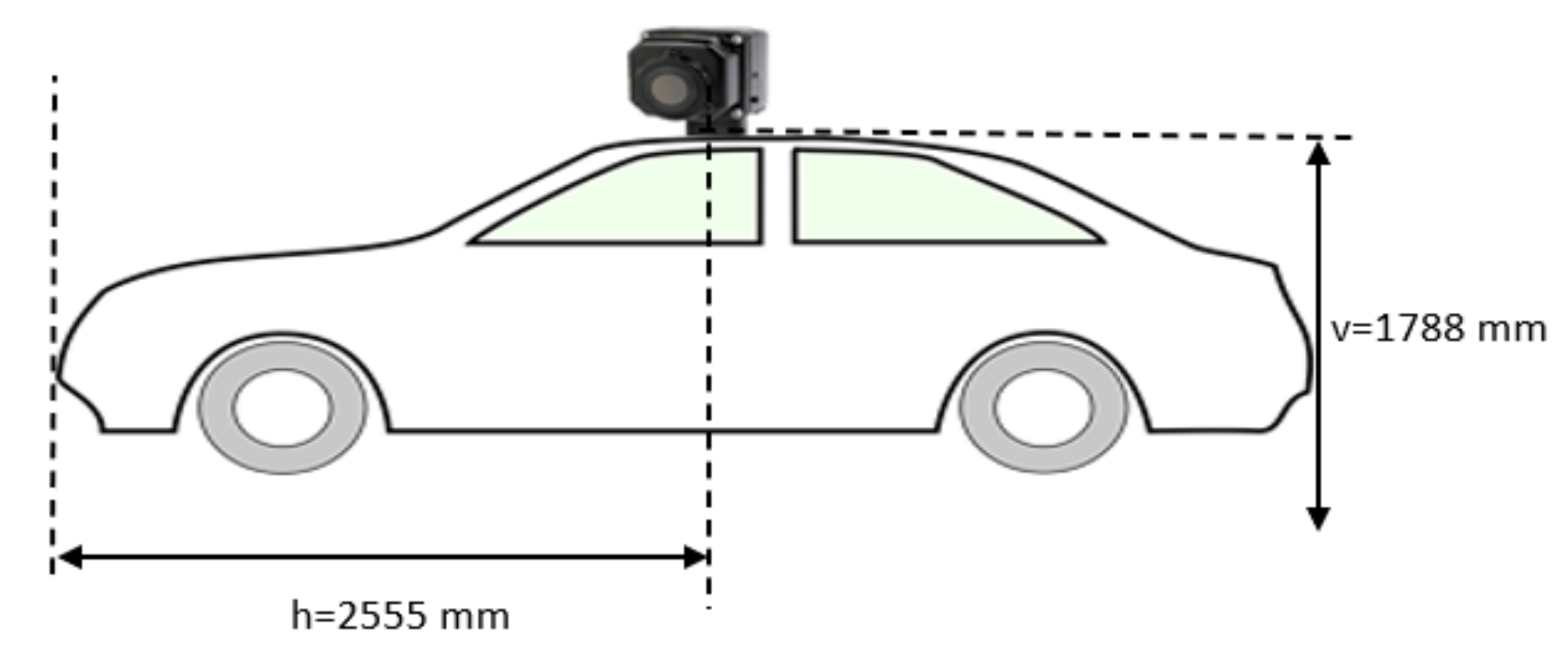

3.1. Camera Setup

3.2. Proposed Approach

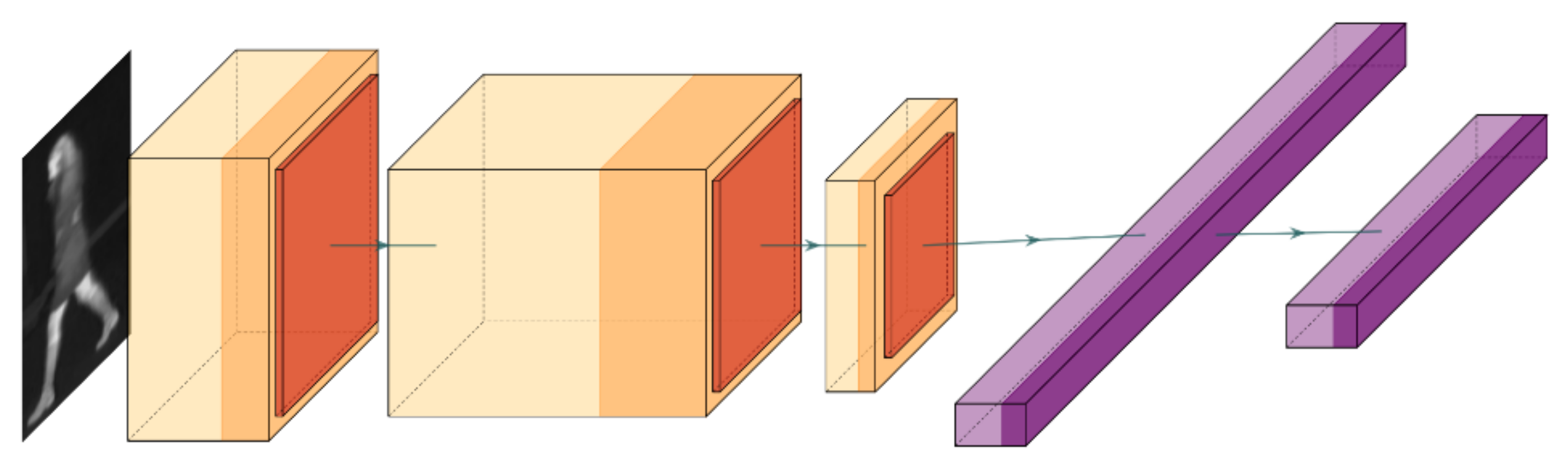

3.2.1. Data-Driven Score

3.2.2. Feature Engineered Score
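The engineered feature is a uniform LBP descriptor obtained from edge orientations. The exact construction is not given in this section, so the following is a minimal sketch under explicit assumptions. Edge orientations come from central-difference gradients (`np.gradient`). Each 8-neighbour bit is set when the neighbour's orientation is greater than or equal to the centre's, which is a simplification that ignores angular wrap-around. Codes are mapped to the 59-bin non-rotation-invariant uniform scheme, and two histograms are compared by histogram intersection. All function names are hypothetical.

```python
import numpy as np

# Offsets of the 8 neighbours, visited in circular order around the centre pixel.
_NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def _transitions(code):
    """Number of 0/1 changes when walking the 8-bit pattern circularly."""
    bits = [(code >> i) & 1 for i in range(8)]
    return sum(bits[i] != bits[(i + 1) % 8] for i in range(8))


# 58 uniform patterns (at most 2 transitions) get their own bin;
# all non-uniform patterns share the last bin, giving 59 bins in total.
_UNIFORM = [c for c in range(256) if _transitions(c) <= 2]
_LUT = np.full(256, len(_UNIFORM), dtype=np.int64)
for _bin, _code in enumerate(_UNIFORM):
    _LUT[_code] = _bin
NUM_BINS = len(_UNIFORM) + 1


def orientation_lbp_histogram(patch):
    """Normalised uniform-LBP histogram computed on the gradient-orientation map.

    patch: 2-D thermal image crop of at least 3 x 3 pixels.
    """
    patch = np.asarray(patch, dtype=float)
    gy, gx = np.gradient(patch)
    theta = np.arctan2(gy, gx)  # edge orientation in (-pi, pi]
    centre = theta[1:-1, 1:-1]
    h, w = centre.shape
    codes = np.zeros((h, w), dtype=np.int64)
    for bit, (dy, dx) in enumerate(_NEIGHBOURS):
        neighbour = theta[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        codes |= (neighbour >= centre).astype(np.int64) << bit
    hist = np.bincount(_LUT[codes].ravel(), minlength=NUM_BINS).astype(float)
    return hist / hist.sum()


def histogram_intersection(h1, h2):
    """Similarity in [0, 1] between two normalised histograms."""
    return float(np.minimum(h1, h2).sum())


rng = np.random.default_rng(0)
track_crop, det_crop = rng.random((24, 12)), rng.random((24, 12))
similarity = histogram_intersection(
    orientation_lbp_histogram(track_crop), orientation_lbp_histogram(det_crop)
)
```

For intensity images, scikit-image's `local_binary_pattern` with `method="nri_uniform"` offers a comparable 59-code uniform mapping. The hand-written loop is kept here so that the comparison can run on an orientation map instead.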

3.2.3. Data Association Score and Tracking

3.2.4. Pedestrian Dataset from Thermal Images

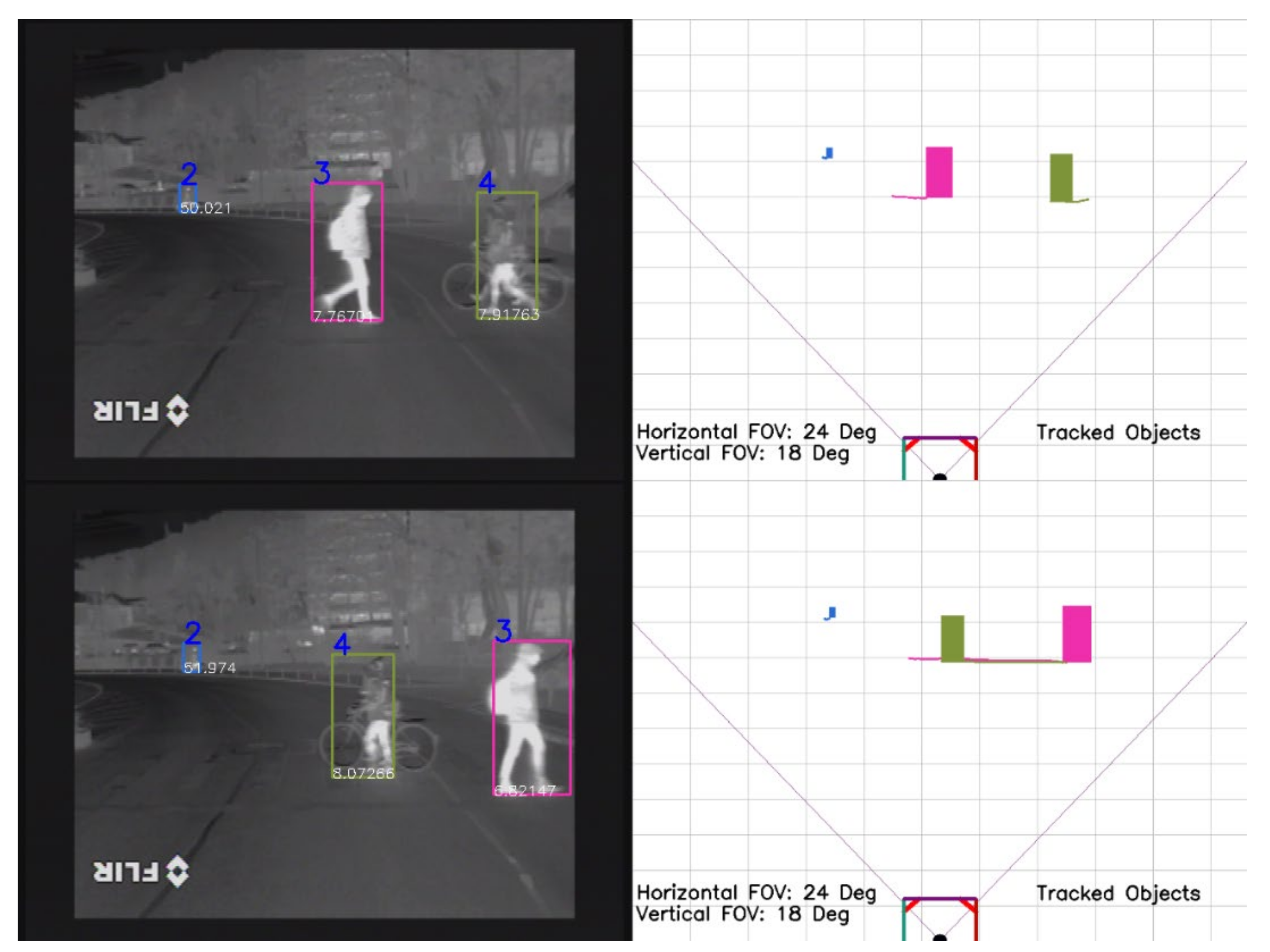

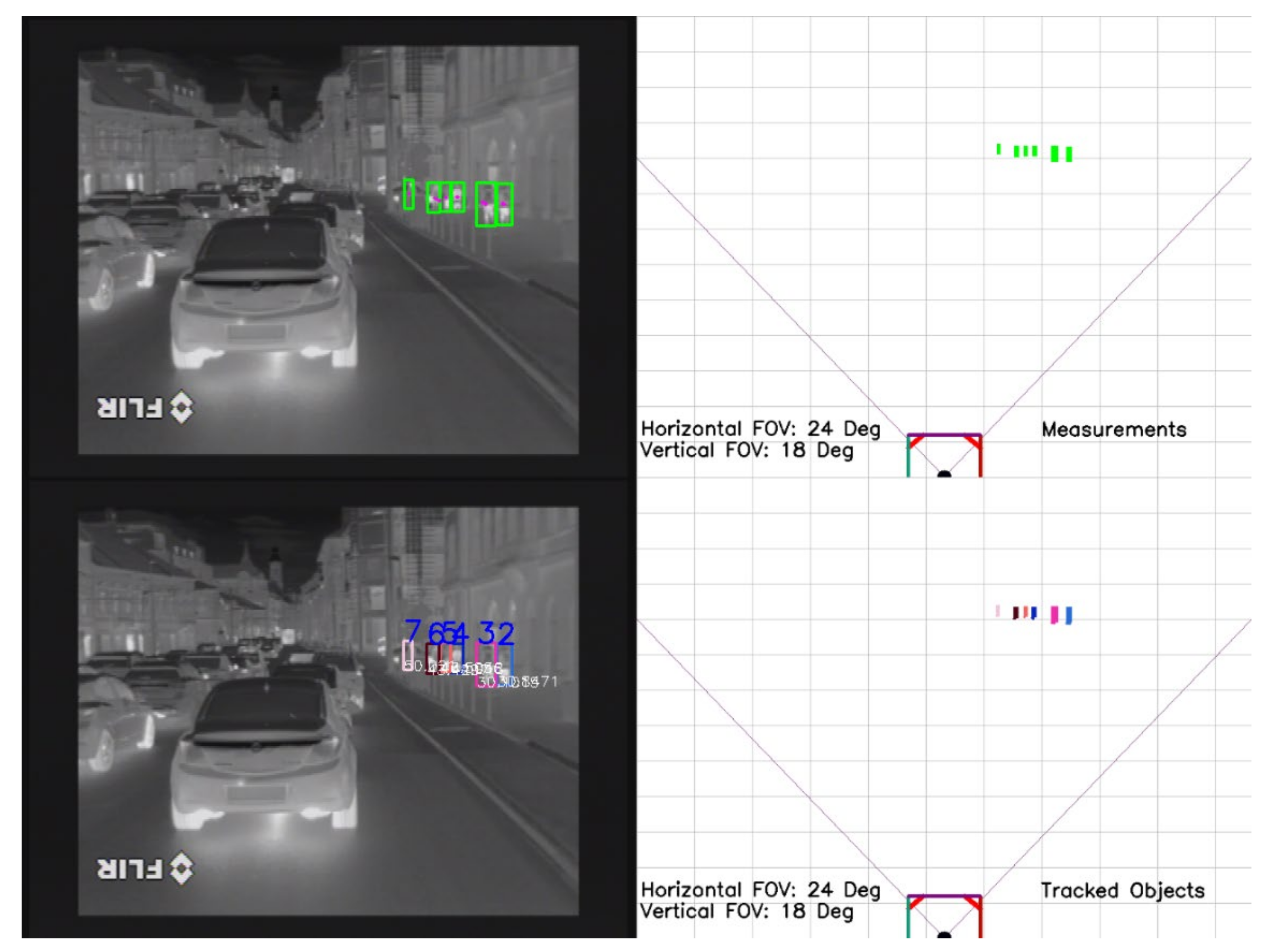

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Berg, A.; Ahlberg, J.; Felsberg, M. A thermal Object Tracking benchmark. In Proceedings of the 2015 12th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Karlsruhe, Germany, 25–28 August 2015; pp. 1–6.

2. Muresan, M.P.; Nedevschi, S. Multi-Object Tracking of 3D Cuboids Using Aggregated Features. In Proceedings of the 2019 IEEE 15th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 5–7 September 2019; pp. 11–18.

3. Grudzinski, M.; Marchewka, L.; Pajor, M.; Zietek, R. Stereovision Tracking System for Monitoring Loader Crane Tip Position. IEEE Access 2020, 8, 223346–223358.

4. Tang, S.; Andriluka, M.; Andres, B.; Schiele, B. Multiple people tracking by lifted multicut and person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3539–3548.

5. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

6. Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, P.R.; Zajc, L.C.; Vojír, T.; Bhat, G.; Lukezic, A.; Eldesokey, A.; et al. The Sixth Visual Object Tracking vot2018 Challenge Results. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 3–53.

7. Lee, B.; Erdenee, E.; Jin, S.; Nam, M.Y.; Jung, Y.G.; Rhee, P.K. Multi-Class Multi-Object Tracking Using Changing Point Detection. In Computer Vision—ECCV Workshops; Hua, G., Jégou, H., Eds.; Springer: Cham, Switzerland, 2016; pp. 68–83.

8. Liu, X.; Fujimura, K. Pedestrian detection using stereo night vision. IEEE Trans. Veh. Technol. 2004, 53, 1657–1665.

9. Mureșan, M.P.; Giosan, I.; Nedevschi, S. Stabilization and Validation of 3D Object Position Using Multimodal Sensor Fusion and Semantic Segmentation. Sensors 2020, 20, 1110.

10. Kim, D.; Kwon, D. Pedestrian detection and tracking in thermal images using shape features. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyang, Korea, 28–30 October 2015; pp. 22–25.

11. Gündüz, G.; Acarman, T. A lightweight online multiple object vehicle tracking method. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 427–432.

12. Karunasekera, H.; Wang, H.; Zhang, H. Multiple Object Tracking With Attention to Appearance, Structure, Motion and Size. IEEE Access 2019, 7, 104423–104434.

13. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

14. Choi, W. Near-Online Multi-target Tracking with Aggregated Local Flow Descriptor. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3029–3037.

15. Bertozzi, M.; Broggi, A.; Fascioli, A.; Graf, T.; Meinecke, M.M. IR pedestrian detection for advanced driver assistance systems. IEEE Trans. Veh. Technol. 2004, 53, 1666–1678.

16. Munder, S.; Schnorr, C.; Gavrila, D. Pedestrian Detection and Tracking Using a Mixture of View-Based Shape–Texture Models. IEEE Trans. Intell. Transp. Syst. 2008, 9, 333–343.

17. Kallhammer, J.E.; Eriksson, D.; Granlund, G.; Felsberg, M.; Moe, A.; Johansson, B.; Wiklund, J.; Forssen, P.E. Near Zone Pedestrian Detection using a Low-Resolution FIR Sensor. In Proceedings of the 2007 IEEE Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007; pp. 339–345.

18. Kwak, J.-Y.; Ko, B.C.; Nam, J.Y. Pedestrian Tracking Using Online Boosted Random Ferns Learning in Far-Infrared Imagery for Safe Driving at Night. IEEE Trans. Intell. Transp. Syst. 2017, 18, 69–81.

19. Yu, X.; Yu, Q.; Shang, Y.; Zhang, H. Dense structural learning for infrared object tracking at 200+ Frames per Second. Pattern Recognit. Lett. 2017, 100, 152–159.

20. Zhu, G.; Porikli, F.; Li, H. Beyond local search: Tracking objects everywhere with instance-specific proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 943–951.

21. Brehar, R.D.; Muresan, M.P.; Marita, T.; Vancea, C.-C.; Negru, M.; Nedevschi, S. Pedestrian Street-Cross Action Recognition in Monocular Far Infrared Sequences. IEEE Access 2021, 9, 74302–74324.

22. Nam, H.; Baek, M.; Han, B. Modeling and propagating cnns in a tree structure for visual tracking. arXiv 2016, arXiv:1608.07242.

23. Alahari, K.; Berg, A.; Hager, G.; Ahlberg, J.; Kristan, M.; Matas, J.; Leonardis, A.; Cehovin, L.; Fernandez, G.; Vojir, T.; et al. The thermal infrared visual object tracking vot-tir2015 challenge results. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 639–651.

24. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

25. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Computer Vision—ECCV 2016 Workshops; Lecture Notes in Computer Science; Hua, G., Jégou, H., Eds.; Springer: Cham, Switzerland, 2016; Volume 9914, pp. 850–865.

26. Liu, Q.; Yuan, D.; He, Z. Thermal infrared object tracking via Siamese convolutional neural networks. In Proceedings of the 2017 International Conference on Security, Pattern Analysis, and Cybernetics (SPAC), Shenzhen, China, 7–13 December 2017; pp. 1–6.

27. Zhang, X.; Chen, R.; Liu, G.; Li, X.; Luo, S.; Fan, X. Thermal Infrared Tracking using Multi-stages Deep Features Fusion. In Proceedings of the 2020 Chinese Control And Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 1883–1888.

28. Guo, Q.; Feng, W.; Zhou, C.; Huang, R.; Wan, L.; Wang, S. Learning Dynamic Siamese Network for Visual Object Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1781–1789.

29. Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. SPM-Tracker: Series-Parallel Matching for Real-Time Visual Object Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3643–3652.

30. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

31. Zhang, L.; Gonzalez-Garcia, A.; Weijer, J.; Danelljan, M.; Khan, F.S. Synthetic Data Generation for End-to-End Thermal Infrared Tracking. IEEE Trans. Image Process. 2019, 28, 1837–1850.

32. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter-based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5000–5008.

33. Liu, Q.; Li, X.; He, Z.; Fan, N.; Yuan, D.; Wang, H. Learning Deep Multi-Level Similarity for Thermal Infrared Object Tracking. IEEE Trans. Multimedia 2020, 23, 2114–2126.

34. Lahdenoja, O.; Poikonen, J.; Laiho, M. Towards Understanding the Formation of Uniform Local Binary Patterns. ISRN Mach. Vis. 2013, 2013, 1–20.

35. Lee, F.-F.; Chen, F.; Liu, J. Infrared Thermal Imaging System on a Mobile Phone. Sensors 2015, 15, 10166–10179.

36. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

37. FLIR. Flir Thermal Dataset for Algorithm Training. Available online: https://www.flir.com/oem/adas/adas-dataset-form/ (accessed on 29 November 2021).

38. Liu, Q.; He, Z.; Li, X.; Zheng, Y. PTB-TIR: A Thermal Infrared Pedestrian Tracking Benchmark. IEEE Trans. Multimedia 2019, 22, 666–675.

39. Song, Y.; Ma, C.; Wu, X.; Gong, L.; Bao, L.; Zuo, W.; Shen, C.; Lau, R.W.H.; Yang, M.-H. VITAL: VIsual tracking via adversarial learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8990–8999.

40. Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M.H. Target-aware deep tracking. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1369–1378.

41. Liu, Q.; Lu, X.; He, Z.; Zhang, C.; Chen, W.-S. Deep convolutional neural networks for thermal infrared object tracking. Knowl.-Based Syst. 2017, 134, 189–198.

42. Qi, Y.; Zhang, S.; Qin, L.; Yao, H.; Huang, Q.; Lim, J.; Yang, M.-H. Hedged deep tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4303–4311.

43. Ma, C.; Huang, J.-B.; Yang, X.; Yang, M.-H. Hierarchical convolutional features for visual tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3074–3082.

| Method | Feature-Engineered | Data-Driven | Whole Detection | Part-Based |
|---|---|---|---|---|
| Online Tracker [18] | x | x | | |
| Tracker [21] | x | x | | |
| SiamFC [25] | x | x | | |
| MLSSNet [33] | x | x | | |
| Proposed Solution | x | x | x | x |

| Attribute | Value |
|---|---|
| Video Sequences Used | 160 |
| Total Extracted Image Samples | 26,153 |
| Pedestrian Instances | 207 |
| Camera Position | Vehicle-mounted |
| Pixel Depth | 8 bpp |
| Original Image Resolution | 640 × 480 pixels |
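Siamese networks are trained on pairs of crops labelled as showing the same pedestrian or different pedestrians. The dataset records pedestrian instances, so balanced training pairs can be drawn from it. The following is a minimal sketch, assuming that each extracted sample carries a pedestrian instance identifier; the `(pedestrian_id, crop_ref)` sample format and the function name are hypothetical.

```python
import random
from collections import defaultdict


def sample_pairs(samples, num_pairs, seed=0):
    """Draw alternating positive/negative pairs for Siamese training (sketch).

    samples: iterable of (pedestrian_id, crop_ref) tuples; crop_ref is any handle
        to an image crop, such as a file path (hypothetical format).
    Returns a list of (crop_a, crop_b, label); label 1 = same pedestrian, 0 = different.
    """
    rng = random.Random(seed)
    crops_by_id = defaultdict(list)
    for pid, crop in samples:
        crops_by_id[pid].append(crop)
    ids = list(crops_by_id)
    ids_with_pairs = [pid for pid in ids if len(crops_by_id[pid]) >= 2]
    if len(ids) < 2 or not ids_with_pairs:
        raise ValueError("need two or more pedestrians and one with two or more crops")
    pairs = []
    for i in range(num_pairs):
        if i % 2 == 0:
            # Positive pair: two different crops of the same pedestrian.
            pid = rng.choice(ids_with_pairs)
            a, b = rng.sample(crops_by_id[pid], 2)
            pairs.append((a, b, 1))
        else:
            # Negative pair: one crop each from two different pedestrians.
            pid_a, pid_b = rng.sample(ids, 2)
            pairs.append((rng.choice(crops_by_id[pid_a]), rng.choice(crops_by_id[pid_b]), 0))
    return pairs
```

Splitting training and validation data by pedestrian ID, rather than by crop, keeps the same person out of both sets.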

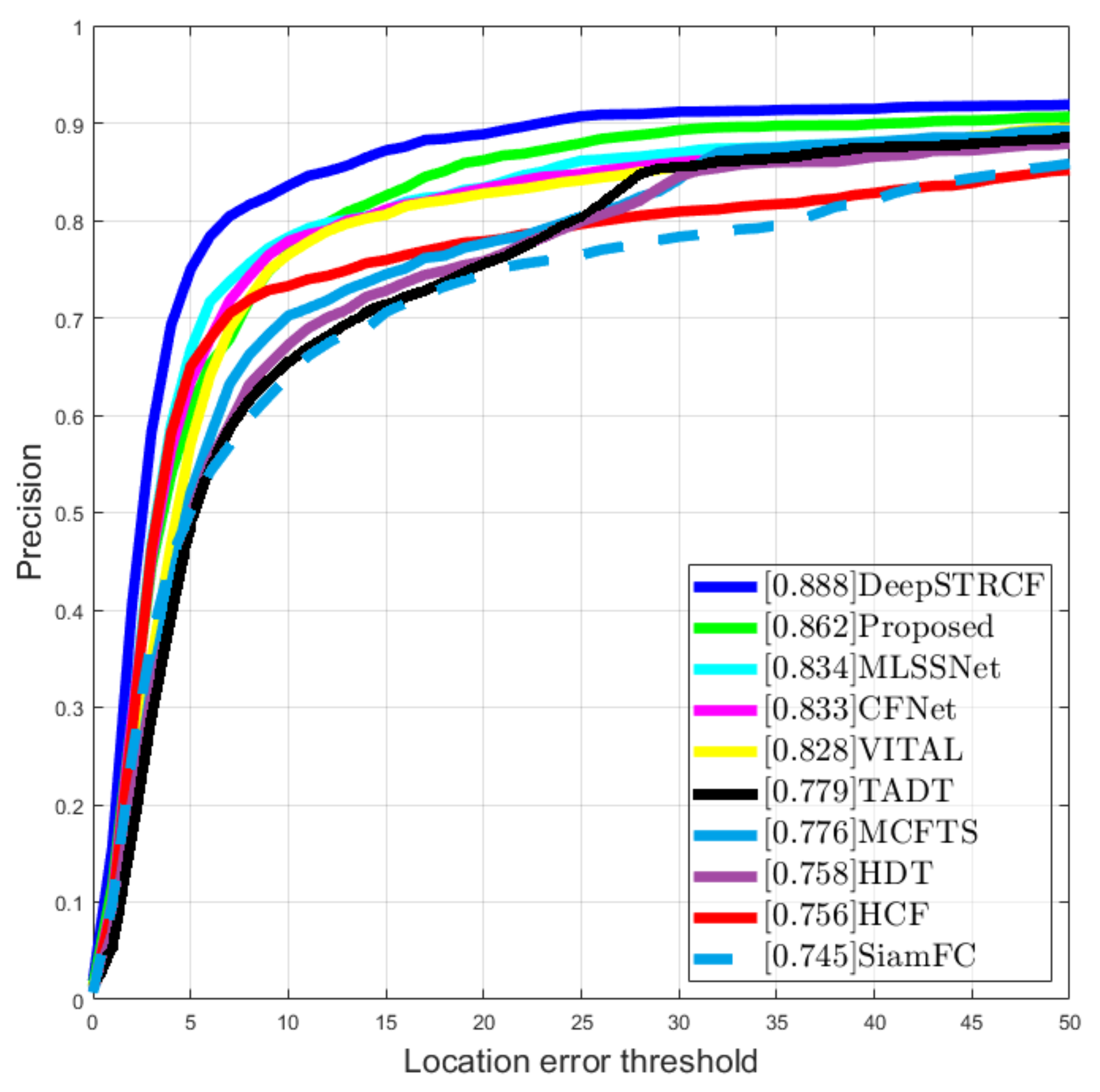

| Method | Tracking Precision Score |
|---|---|
| DeepSTRCF [23] | 88.8% |
| Proposed | 86.2% |
| MLSSNet [33] | 83.4% |
| CFNet [32] | 83.3% |
| VITAL [39] | 82.8% |
| TADT [40] | 77.9% |
| MCFTS [41] | 77.6% |
| HDT [42] | 75.8% |
| HCF [43] | 75.6% |
| SiamFC [25] | 74.5% |
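In OTB-style evaluations such as those used with the PTB-TIR benchmark [38], the precision score is commonly the fraction of frames in which the distance between the predicted and ground-truth box centres stays within a threshold, typically 20 pixels. This section does not state the threshold behind the table, so the 20-pixel default below is an assumption. The sketch assumes one `(x, y, w, h)` box per frame.

```python
import numpy as np


def precision_score(pred_boxes, gt_boxes, threshold=20.0):
    """Fraction of frames whose centre location error is at most `threshold` pixels.

    Boxes are (x, y, w, h) rows, one per frame; the 20-pixel default is a common
    convention, assumed here rather than taken from the paper.
    """
    pred = np.asarray(pred_boxes, dtype=float)
    gt = np.asarray(gt_boxes, dtype=float)
    pred_centres = pred[:, :2] + pred[:, 2:4] / 2.0
    gt_centres = gt[:, :2] + gt[:, 2:4] / 2.0
    # Euclidean centre location error for every frame.
    errors = np.linalg.norm(pred_centres - gt_centres, axis=1)
    return float(np.mean(errors <= threshold))
```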

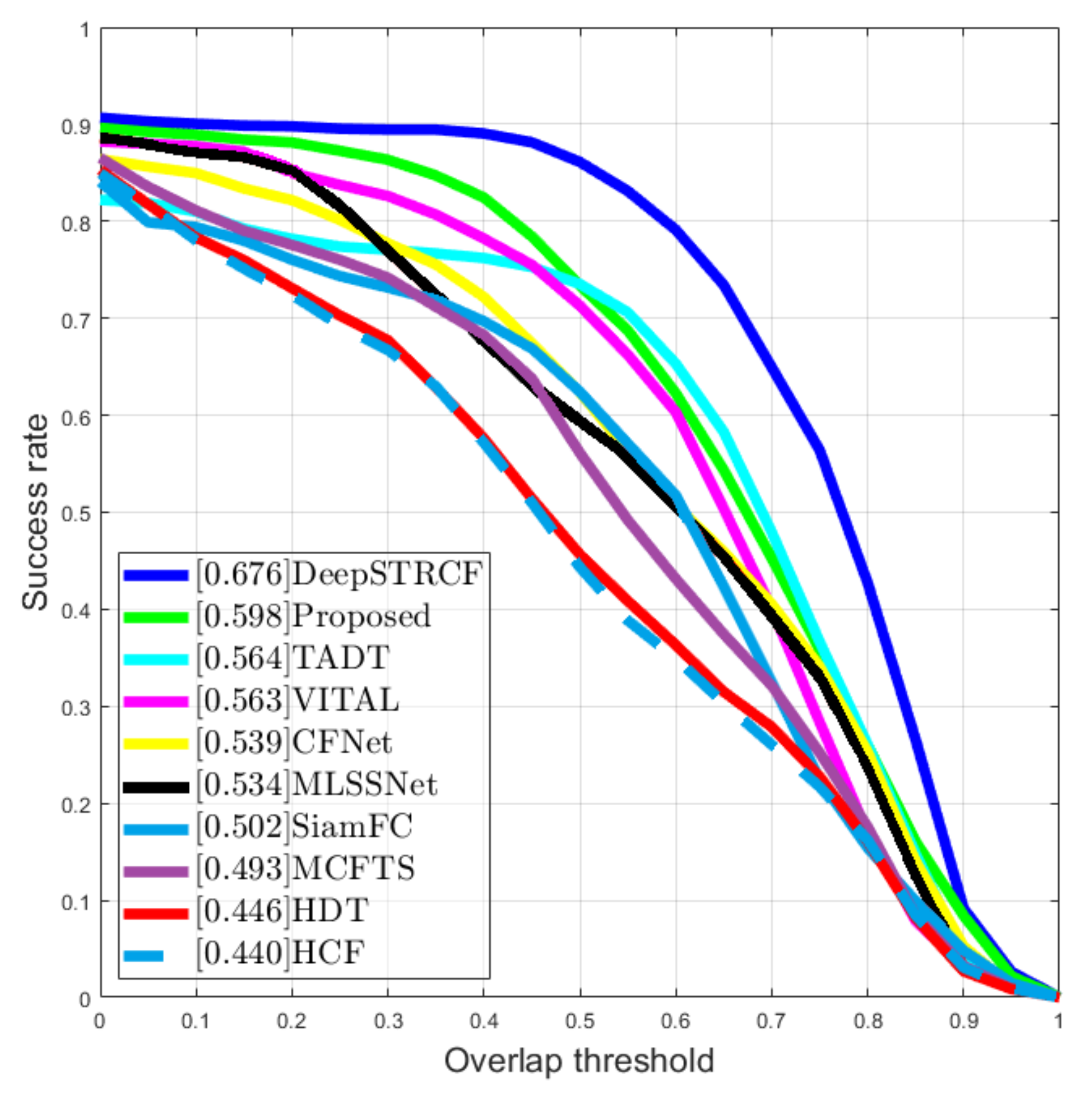

| Method | Tracking Success Score |
|---|---|
| DeepSTRCF [23] | 67.6% |
| Proposed | 59.8% |
| TADT [40] | 56.4% |
| VITAL [39] | 56.3% |
| CFNet [32] | 53.9% |
| MLSSNet [33] | 53.4% |
| SiamFC [25] | 50.2% |
| MCFTS [41] | 49.3% |
| HDT [42] | 44.6% |
| HCF [43] | 44% |
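In OTB-style toolkits, the success score is usually the area under the success plot. The plot gives, for each overlap threshold between 0 and 1, the fraction of frames whose intersection over union (IoU) with the ground-truth box exceeds that threshold. The following is a minimal sketch, assuming `(x, y, w, h)` boxes and 21 evenly spaced thresholds. The threshold grid used for the table is not stated in this section.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_score(pred_boxes, gt_boxes, num_thresholds=21):
    """Area under the success plot: mean success rate over IoU thresholds in [0, 1]."""
    overlaps = np.array([iou(p, g) for p, g in zip(pred_boxes, gt_boxes)])
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    # Success rate at each threshold: share of frames with overlap above it.
    success = [(overlaps > t).mean() for t in thresholds]
    return float(np.mean(success))
```

With a strict "greater than" test, even a perfect tracker fails the threshold of exactly 1.0. Its score is therefore 20/21 rather than 1 on this grid, which is the convention this sketch follows.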

| Method | MOTA | MOTP | IDSW |
|---|---|---|---|
| Proposed | 86.14% | 88.63% | 134 |
| Base Solution | 81.36% | 83.17% | 143 |
| TADT | 80.3% | 81.7% | 121 |
| MLSSNet | 79.8% | 82.3% | 269 |
| SiamFC | 76.4% | 82.1% | 343 |
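MOTA and MOTP are the CLEAR MOT metrics. MOTA combines missed targets (FN), false positives (FP) and identity switches (IDSW, the last column above) into a single figure:

MOTA = 1 − Σ_t (FN_t + FP_t + IDSW_t) / Σ_t GT_t

Here GT_t is the number of ground-truth objects in frame t. MOTA can become negative when errors outnumber ground-truth objects. This section does not state which MOTP variant (distance-based or overlap-based) was used, so the minimal sketch below covers MOTA only and assumes per-frame error counts are already available.

```python
from typing import Sequence


def mota(fn: Sequence[int], fp: Sequence[int], idsw: Sequence[int], gt: Sequence[int]) -> float:
    """CLEAR MOT accuracy from per-frame counts of misses, false positives,
    identity switches and ground-truth objects."""
    total_gt = sum(gt)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    return 1.0 - (sum(fn) + sum(fp) + sum(idsw)) / total_gt


print(mota(fn=[1, 0], fp=[0, 1], idsw=[0, 1], gt=[10, 10]))  # 0.85
```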

| Method | Average Precision | Average Accuracy | Average Recall |
|---|---|---|---|
| Object Detector | 75.98% | 66.47% | 98.67% |
| Base Solution | 80.01% | 76.4% | 95.8% |
| Base with only Data-Driven Score | 86.15% | 79.22% | 93.21% |
| Base with only Engineered Score | 83.25% | 78.43% | 93.71% |
| Base with all Fused Scores (proposed) | 88.61% | 80.02% | 94.8% |
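Assuming the usual definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN), the table is consistent with the tracker suppressing false detections at a small cost in missed ones. Precision rises from 75.98% for the raw detector to 88.61% with all fused scores, while recall drops from 98.67% to 94.8%. The following is a minimal sketch of the two ratios; the counts in the usage line are illustrative, not the paper's. The definition of Average Accuracy is not given in this section, so the sketch omits it.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


print(precision_recall(tp=90, fp=10, fn=5))
```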

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muresan, M.P.; Nedevschi, S.; Danescu, R. Robust Data Association Using Fusion of Data-Driven and Engineered Features for Real-Time Pedestrian Tracking in Thermal Images. Sensors 2021, 21, 8005. https://doi.org/10.3390/s21238005
