CyberEye: New Eye-Tracking Interfaces for Assessment and Modulation of Cognitive Functions beyond the Brain

Abstract

1. Introduction to Eye-Tracking Interfaces

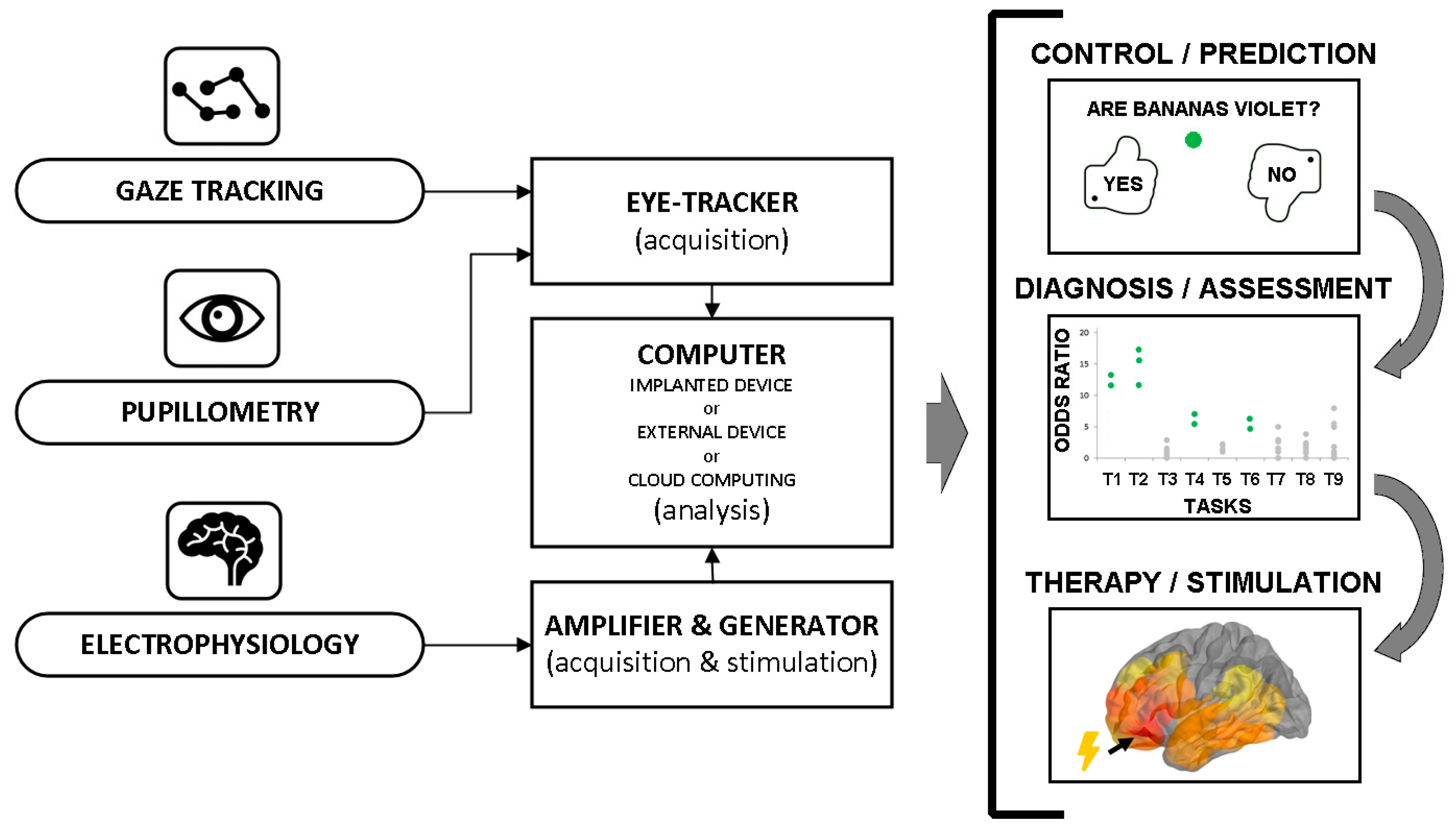

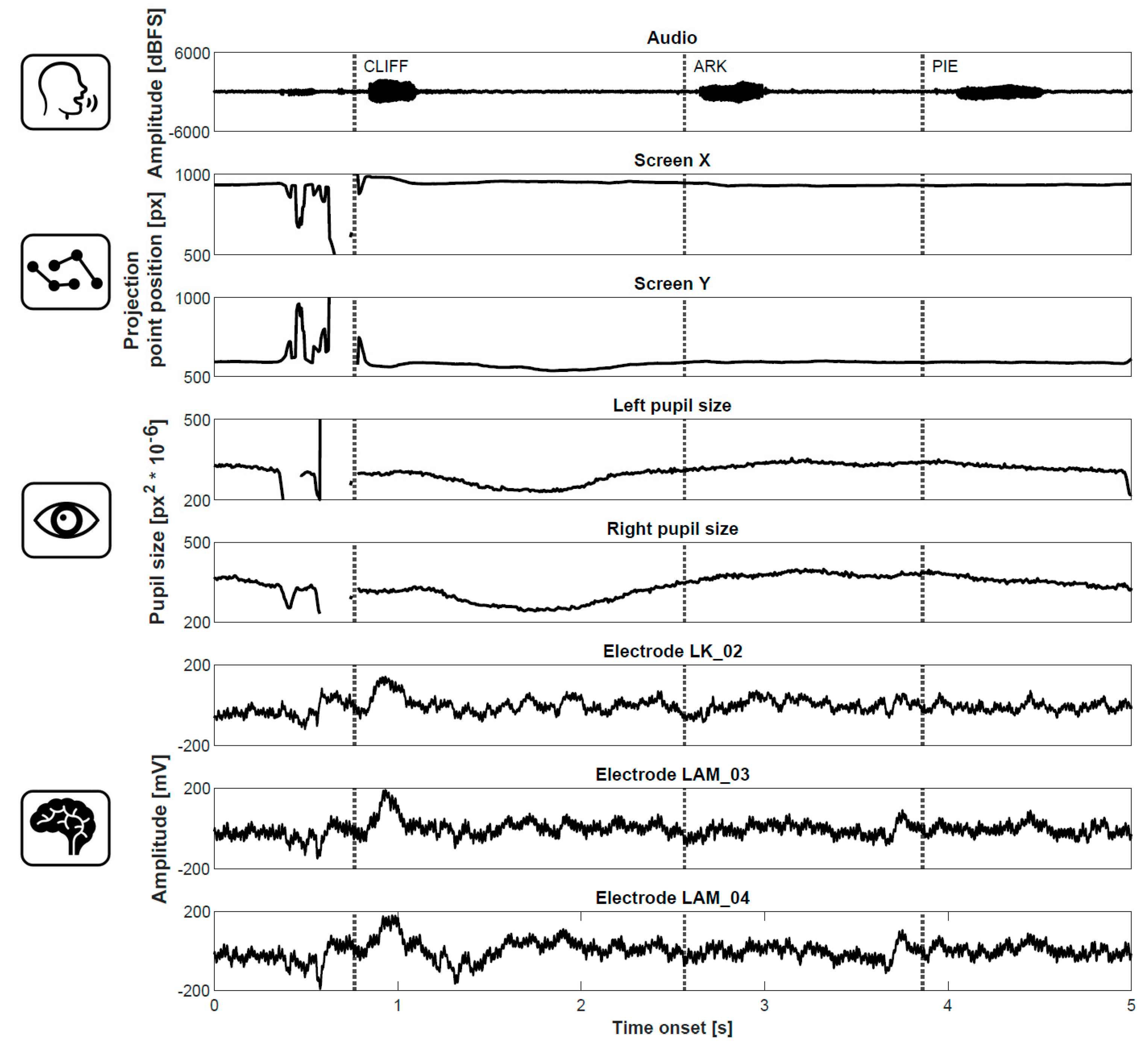

2. Eye-Tracking BCIs for Probing Memory and Cognitive Functions

3. CyberEye—Definition and Future Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lech, M.; Czyżewski, A.; Kucewicz, M.T. CyberEye: New Eye-Tracking Interfaces for Assessment and Modulation of Cognitive Functions beyond the Brain. Sensors 2021, 21, 7605. https://doi.org/10.3390/s21227605