Drain Structural Defect Detection and Mapping Using AI-Enabled Reconfigurable Robot Raptor and IoRT Framework

Abstract

1. Introduction

2. Literature Survey

3. Methodology

4. Defect Detection and Mapping with IoRT Framework

4.1. Physical Layer

4.1.1. System Architecture

4.1.2. Locomotion Module

4.1.3. Control Unit

4.1.4. Power Distribution Module

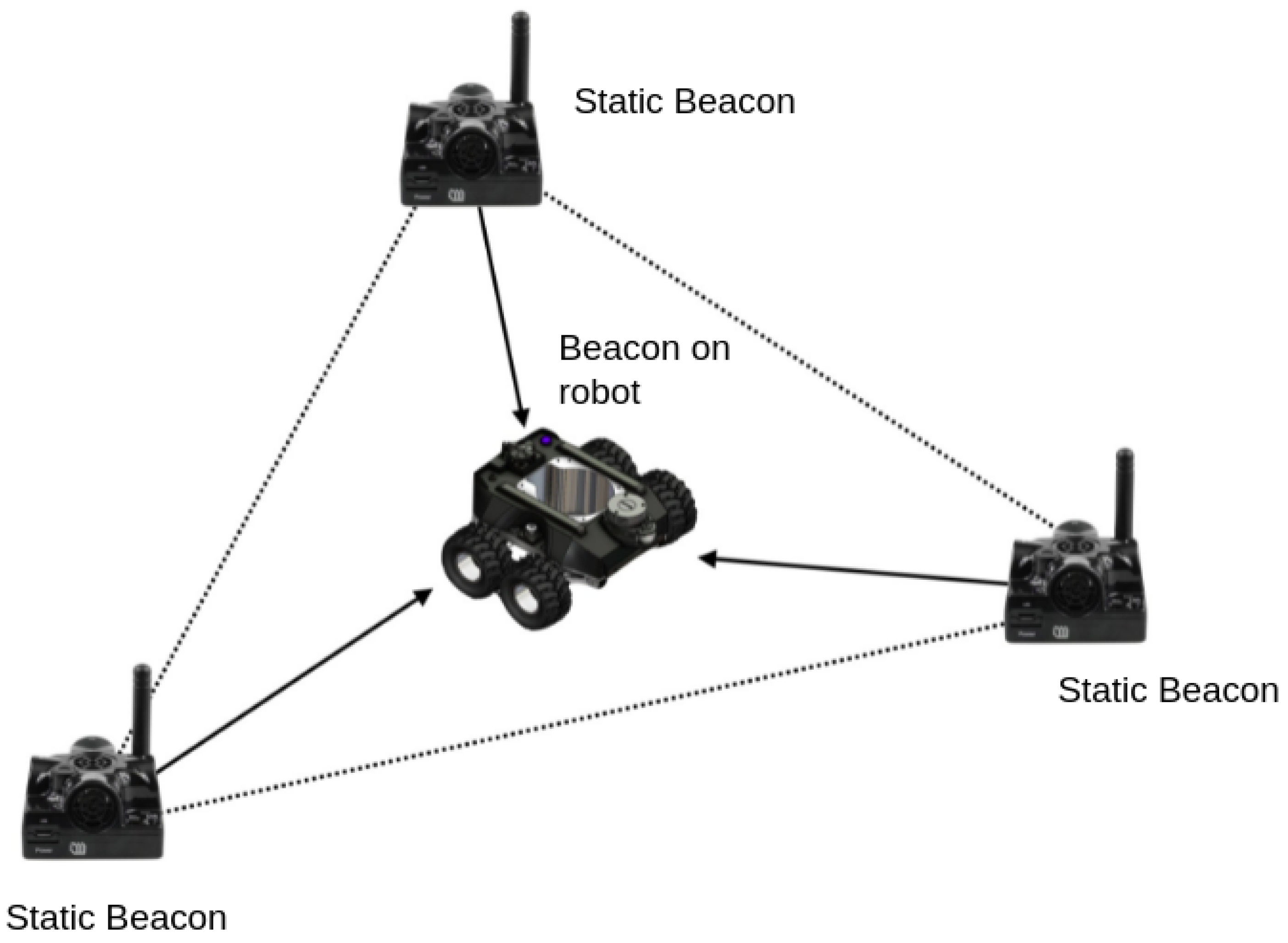

4.1.5. Localization Module

4.1.6. Collision Detection and Navigation Module

4.1.7. Reconfigurable Module

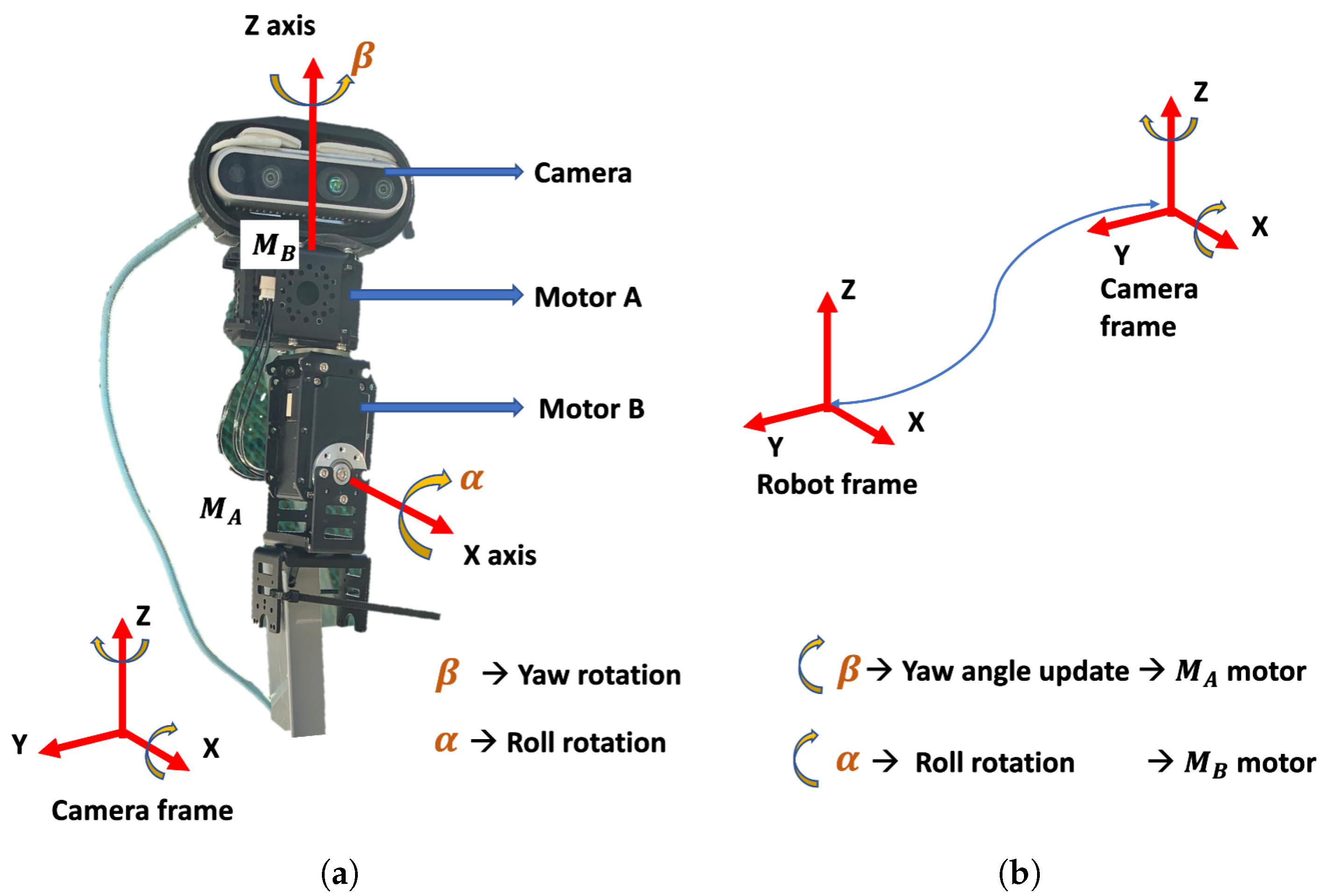

4.1.8. Vision System with Pan-Tilt Mechanism

4.2. Network Layer

4.3. Processing Layer

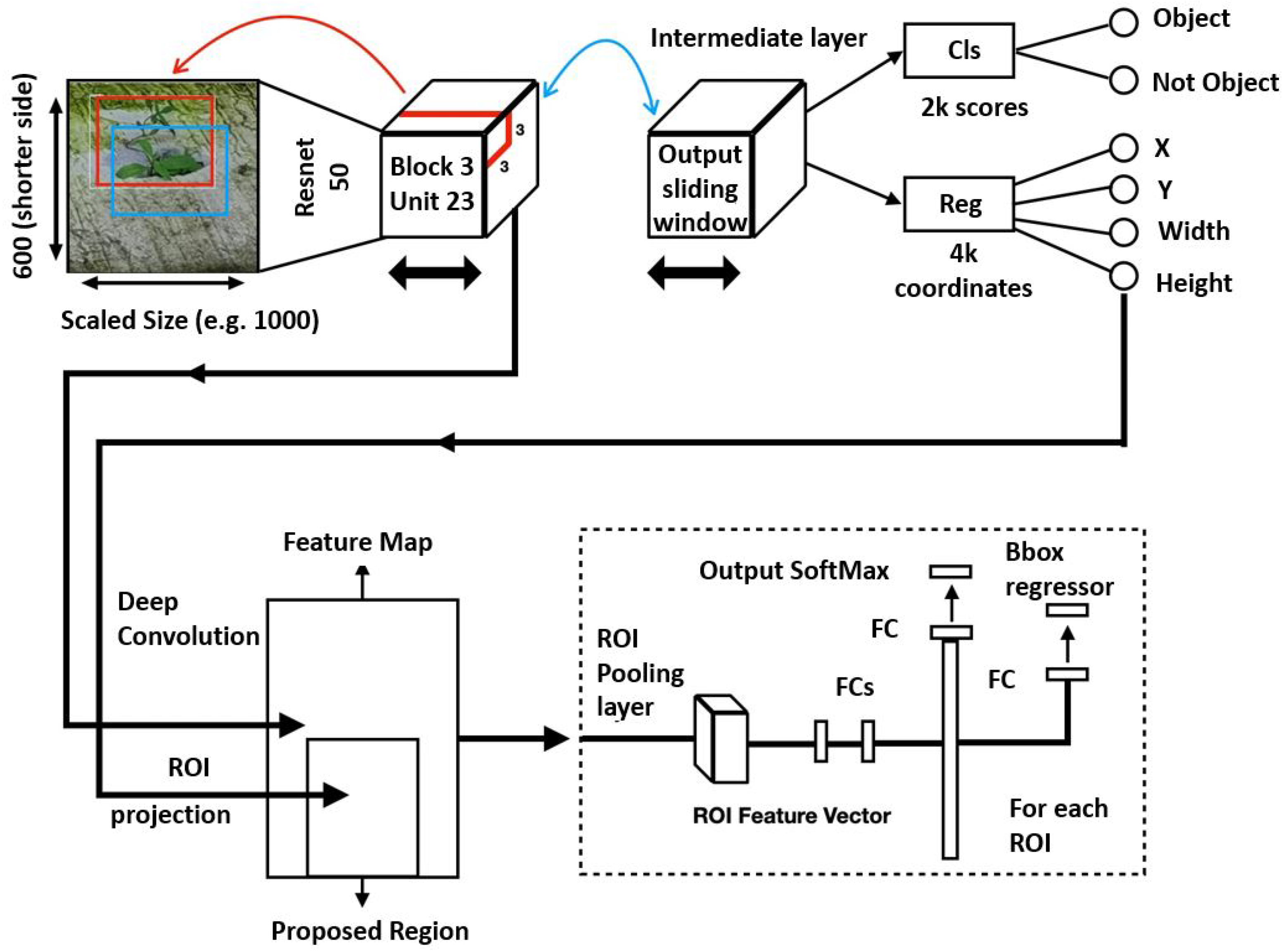

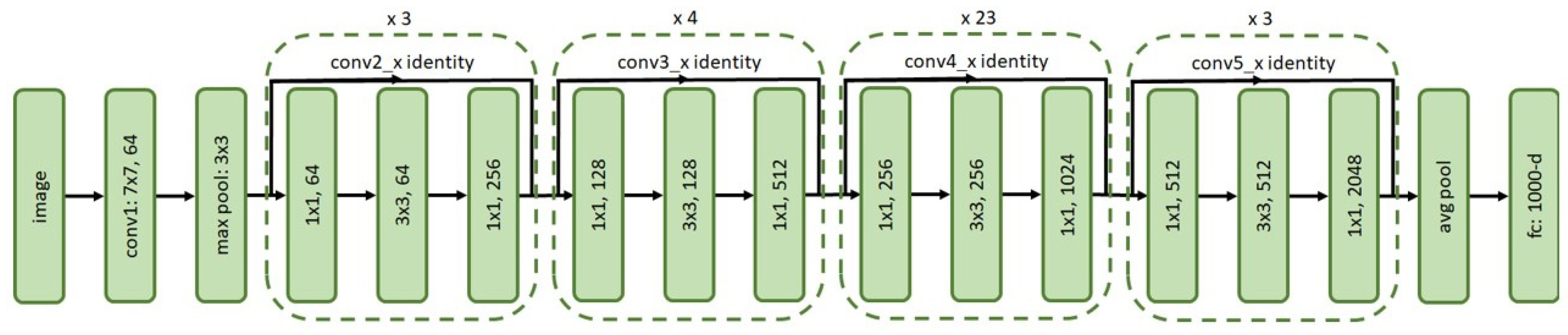

Deep Learning-Based Defect Detection

4.4. Application Layer

5. Experimental Setup and Results

5.1. Dataset Preparation and Training

5.1.1. Training Hardware and Software Details

5.1.2. Parameter Configuration

5.2. Offline Test

5.3. Real-Time Field Trial

5.3.1. Maneuverability Test

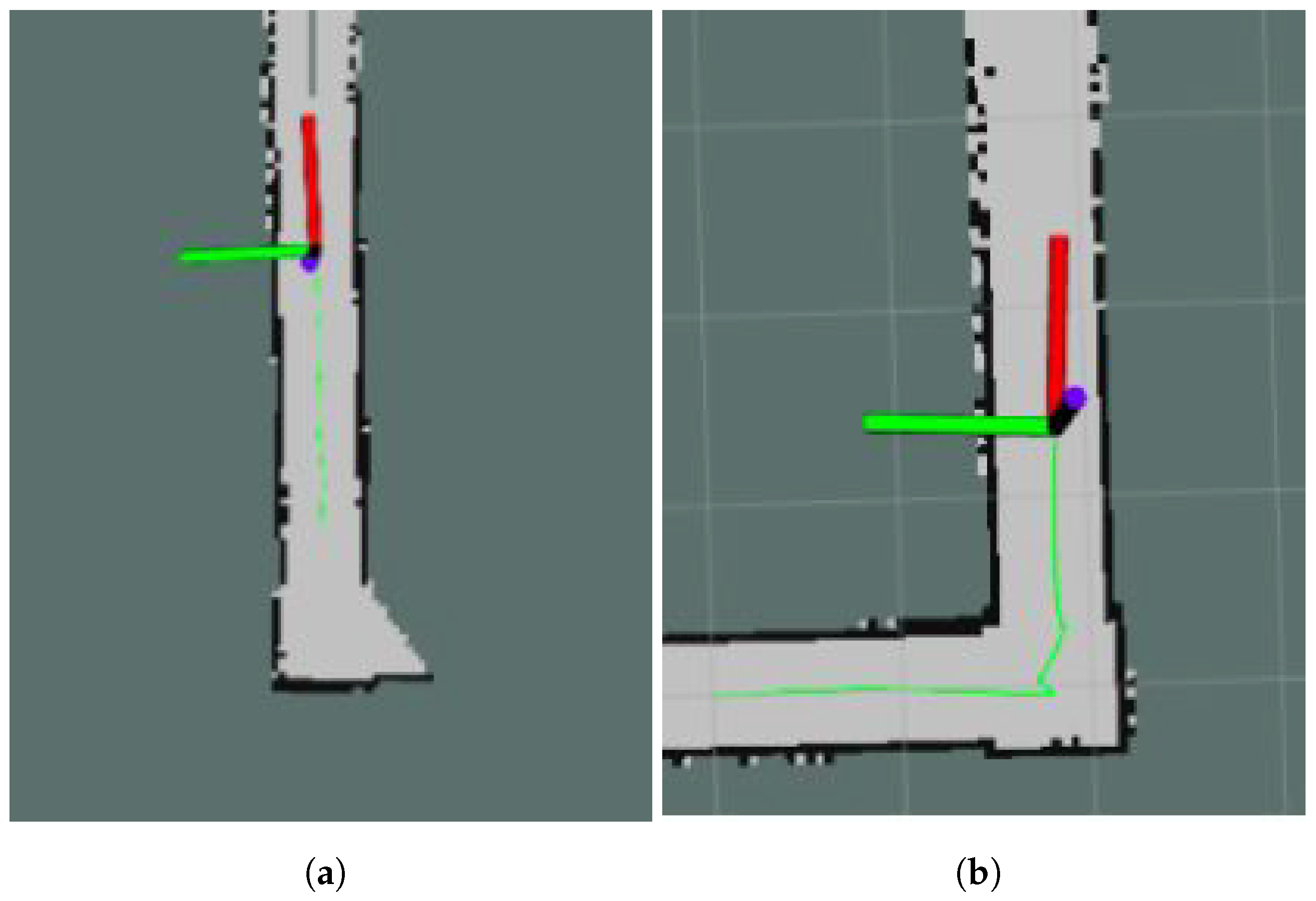

5.3.2. Drain Mapping Algorithm Evaluation

5.4. Real-Time Defect Detection and Mapping

Defect Mapping on SLAM Map

5.5. Comparison with Existing Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Abro, G.E.M.; Jabeen, B.; Ajodhia, K.K.; Rauf, A.; Noman, A. Designing Smart Sewerbot for the Identification of Sewer Defects and Blockages. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 615–619.

2. Crawler Camera System Market Size Report, 2020–2027. Market Analysis Report. 2020. Available online: https://www.grandviewresearch.com/industry-analysis/crawler-camera-system-market (accessed on 1 November 2021).

3. Tennakoon, R.B.; Hoseinnezhad, R.; Tran, H.; Bab-Hadiashar, A. Visual Inspection of Storm-Water Pipe Systems using Deep Convolutional Neural Networks. In ICINCO; Science and Technology Publications: Porto, Portugal, 2018; pp. 145–150.

4. Cheng, J.C.; Wang, M. Automated detection of sewer pipe defects in closed-circuit television images using deep learning techniques. Autom. Constr. 2018, 95, 155–171.

5. Moradi, S.; Zayed, T.; Golkhoo, F. Automated sewer pipeline inspection using computer vision techniques. In Pipelines 2018: Condition Assessment, Construction, and Rehabilitation; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 582–587.

6. Wang, M.; Kumar, S.S.; Cheng, J.C. Automated sewer pipe defect tracking in CCTV videos based on defect detection and metric learning. Autom. Constr. 2021, 121, 103438.

7. Hassan, S.I.; Dang, L.M.; Mehmood, I.; Im, S.; Choi, C.; Kang, J.; Park, Y.S.; Moon, H. Underground sewer pipe condition assessment based on convolutional neural networks. Autom. Constr. 2019, 106, 102849.

8. Dang, L.M.; Kyeong, S.; Li, Y.; Wang, H.; Nguyen, T.N.; Moon, H. Deep learning-based sewer defect classification for highly imbalanced dataset. Comput. Ind. Eng. 2021, 161, 107630.

9. Gomez, F.; Althoefer, K.; Seneviratne, L. Modeling of ultrasound sensor for pipe inspection. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation (Cat. No. 03CH37422), Taipei, Taiwan, 14–19 September 2003; Volume 2, pp. 2555–2560.

10. Turkan, Y.; Hong, J.; Laflamme, S.; Puri, N. Adaptive wavelet neural network for terrestrial laser scanner-based crack detection. Autom. Constr. 2018, 94, 191–202.

11. Yu, T.; Zhu, A.; Chen, Y. Efficient crack detection method for tunnel lining surface cracks based on infrared images. J. Comput. Civ. Eng. 2017, 31, 04016067.

12. Kirkham, R.; Kearney, P.D.; Rogers, K.J.; Mashford, J. PIRAT—A system for quantitative sewer pipe assessment. Int. J. Robot. Res. 2000, 19, 1033–1053.

13. Kuntze, H.; Schmidt, D.; Haffner, H.; Loh, M. KARO—A flexible robot for smart sensor-based sewer inspection. Proc. Int. NoDig 1995, 19, 367–374.

14. Nassiraei, A.A.; Kawamura, Y.; Ahrary, A.; Mikuriya, Y.; Ishii, K. Concept and design of a fully autonomous sewer pipe inspection mobile robot “kantaro”. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 136–143.

15. Kirchner, F.; Hertzberg, J. A prototype study of an autonomous robot platform for sewerage system maintenance. Auton. Robot. 1997, 4, 319–331.

16. Streich, H.; Adria, O. Software approach for the autonomous inspection robot MAKRO. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 4, pp. 3411–3416.

17. Parween, R.; Muthugala, M.A.V.J.; Heredia, M.V.; Elangovan, K.; Elara, M.R. Collision Avoidance and Stability Study of a Self-Reconfigurable Drainage Robot. Sensors 2021, 21, 3744.

18. Karami, E.; Shehata, M.; Smith, A. Image identification using SIFT algorithm: Performance analysis against different image deformations. arXiv 2017, arXiv:1710.02728.

19. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In European Conference on Computer Vision; Springer: Graz, Austria, 2006; pp. 404–417.

20. Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In European Conference on Computer Vision; Springer: Graz, Austria, 2006; pp. 430–443.

21. Goldenshluger, A.; Zeevi, A. The Hough transform estimator. Ann. Stat. 2004, 32, 1908–1932.

22. O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep learning vs. traditional computer vision. In Science and Information Conference; Tokai University: Tokyo, Japan, 2019.

23. Ramalingam, B.; Hayat, A.A.; Elara, M.R.; Félix Gómez, B.; Yi, L.; Pathmakumar, T.; Rayguru, M.M.; Subramanian, S. Deep Learning Based Pavement Inspection Using Self-Reconfigurable Robot. Sensors 2021, 21, 2595.

24. Parween, R.; Hayat, A.A.; Elangovan, K.; Apuroop, K.G.S.; Vega Heredia, M.; Elara, M.R. Design of a Self-Reconfigurable Drain Mapping Robot With Level-Shifting Capability. IEEE Access 2020, 8, 113429–113442.

25. Ramalingam, B.; Elara Mohan, R.; Balakrishnan, S.; Elangovan, K.; Félix Gómez, B.; Pathmakumar, T.; Devarassu, M.; Mohan Rayaguru, M.; Baskar, C. sTetro-Deep Learning Powered Staircase Cleaning and Maintenance Reconfigurable Robot. Sensors 2021, 21, 6279.

26. Pathmakumar, T.; Kalimuthu, M.; Elara, M.R.; Ramalingam, B. An Autonomous Robot-Aided Auditing Scheme for Floor Cleaning. Sensors 2021, 21, 4332.

27. Ramalingam, B.; Tun, T.; Mohan, R.E.; Gómez, B.F.; Cheng, R.; Balakrishnan, S.; Mohan Rayaguru, M.; Hayat, A.A. AI Enabled IoRT Framework for Rodent Activity Monitoring in a False Ceiling Environment. Sensors 2021, 21, 5326.

28. Ramalingam, B.; Vega-Heredia, M.; Mohan, R.E.; Vengadesh, A.; Lakshmanan, A.; Ilyas, M.; James, T.J.Y. Visual Inspection of the Aircraft Surface Using a Teleoperated Reconfigurable Climbing Robot and Enhanced Deep Learning Technique. Int. J. Aerosp. Eng. 2019, 2019, 1–14.

29. Premachandra, C.; Waruna, H.; Premachandra, H.; Parape, C.D. Image based automatic road surface crack detection for achieving smooth driving on deformed roads. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; pp. 4018–4023.

30. Premachandra, C.; Ueda, S.; Suzuki, Y. Road intersection moving object detection by 360-degree view camera. In Proceedings of the 2019 IEEE 16th International Conference on Networking, Sensing and Control (ICNSC), Banff, AB, Canada, 9–11 May 2019; pp. 369–372.

31. Wang, L.; Zhuang, L.; Zhang, Z. Automatic detection of rail surface cracks with a superpixel-based data-driven framework. J. Comput. Civ. Eng. 2019, 33, 04018053.

32. Yin, X.; Chen, Y.; Bouferguene, A.; Zaman, H.; Al-Hussein, M.; Kurach, L. A deep learning-based framework for an automated defect detection system for sewer pipes. Autom. Constr. 2020, 109, 102967.

33. Vermesan, O.; Bahr, R.; Ottella, M.; Serrano, M.; Karlsen, T.; Wahlstrøm, T.; Sand, H.E.; Ashwathnarayan, M.; Gamba, M.T. Internet of robotic things intelligent connectivity and platforms. Front. Robot. AI 2020, 7, 104.

34. TensorFlow 1 Detection Model Zoo. Available online: https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf1_detection_zoo.md (accessed on 18 June 2020).

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

36. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

37. Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

38. Ramalingam, B.; Mohan, R.E.; Pookkuttath, S.; Gómez, B.F.; Sairam Borusu, C.S.C.; Wee Teng, T.; Tamilselvam, Y.K. Remote insects trap monitoring system using deep learning framework and IoT. Sensors 2020, 20, 5280.

39. Kohlbrecher, S.; Von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 1–5 November 2011; pp. 155–160.

40. Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with rao-blackwellized particle filters. IEEE Trans. Robot. 2007, 23, 34–46.

41. Murphy, K.P. Bayesian Map Learning in Dynamic Environments. In NIPS; MIT Press: Denver, CO, USA, 1999; pp. 1015–1021.

42. Kumar, S.S.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Starr, J. Automated defect classification in sewer closed circuit television inspections using deep convolutional neural networks. Autom. Constr. 2018, 91, 273–283.

43. Muthugala, M.; Palanisamy, P.; Samarakoon, S.; Padmanabha, S.G.A.; Elara, M.R.; Terntzer, D.N. Raptor: A Design of a Drain Inspection Robot. Sensors 2021, 21, 5742.

| Description | Specification |
|---|---|
| Platform Weight | 2.45 kg |
| Payload | Up to 1.6 kg |
| Dimensions | 0.390 × 0.350 × 0.200 m |
| Environmental | 3D-printed nylon for prototyping |
| Ground Clearance | 0.098 m (stowed), 0.150 m (unstowed) |
| Maximum Linear Velocity | 0.22 m/s |
| Maximum Angular Velocity | 0.85 rad/s |
| Maximum Gradient | 20–25° |
| Maximum Side Gradient | 18–20° |
| Traversable Terrain | Tested on short grass, concrete, and road surfaces |

| Model Name | First Stage Feature Extractor | Second Stage Feature Extractor |
|---|---|---|
| ResNet50 | block_1, block_2, block_3, block_4a | block_4 |
| ResNet101 | block_1, block_2, block_3, block_4a, block_4b | block_4 |
| Inception-ResNet-v2 | conv2d (1a, 2a, 2b, 3b, 4a), mixed_5b, mixed_6a, block_17, block_35 | conv2d_7b, mixed_7a, block_8 |

| Test | ResNet50 | ResNet101 | Inception-ResNet-v2 | |||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Prec. | Recall | Accuracy | Prec. | Recall | Accuracy | Prec. | Recall | Accuracy | ||||
| Tree root intrusion | 86.21 | 85.92 | 85.89 | 86.19 | 88.45 | 88.21 | 88.01 | 88.42 | 91.34 | 91.03 | 90.82 | 91.27 |
| Plant intrusion | 87.56 | 87.38 | 87.09 | 87.49 | 89.48 | 89.27 | 88.93 | 89.39 | 93.98 | 93.72 | 93.67 | 93.92 |
| Crack | 87.34 | 86.98 | 86.79 | 87.29 | 91.11 | 90.89 | 90.82 | 91.05 | 91.94 | 91.78 | 91.71 | 91.96 |
| Pothole | 86.71 | 86.49 | 86.37 | 86.69 | 90.87 | 90.65 | 90.58 | 90.79 | 92.43 | 92.27 | 92.09 | 92.38 |
| Bughole | 85.97 | 85.63 | 85.59 | 85.95 | 89.33 | 89.02 | 88.94 | 89.29 | 93.82 | 93.61 | 93.55 | 93.73 |
| Deposit | 87.73 | 87.59 | 87.41 | 87.71 | 89.94 | 89.79 | 89.51 | 89.88 | 92.87 | 92.63 | 92.59 | 92.81 |
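To compare the three backbones at a glance, the per-class precision values can be collapsed into a single macro average (an unweighted mean over the six classes). The sketch below assumes that the first value in each model's four-value group is precision, as the "Prec." label suggests; the paper does not state how, or whether, it aggregates these figures, so the function names and the macro-averaging choice are illustrative only.

```python
# Per-class precision (%) for each backbone, transcribed from the offline test table.
# Assumption: the first value in each model's four-value group is precision.
# Class order: tree root intrusion, plant intrusion, crack, pothole, bughole, deposit.
PRECISION = {
    "ResNet50": [86.21, 87.56, 87.34, 86.71, 85.97, 87.73],
    "ResNet101": [88.45, 89.48, 91.11, 90.87, 89.33, 89.94],
    "Inception-ResNet-v2": [91.34, 93.98, 91.94, 92.43, 93.82, 92.87],
}


def macro_average(values):
    """Unweighted mean over classes (every class counts equally)."""
    return sum(values) / len(values)


def rank_models(table):
    """Return (model, macro-averaged precision) pairs, best model first."""
    averages = {name: macro_average(vals) for name, vals in table.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, avg in rank_models(PRECISION):
        print(f"{name}: {avg:.2f}")
```

Run as a script, this ranks Inception-ResNet-v2 (92.73) ahead of ResNet101 (89.86) and ResNet50 (86.92). A macro average is used here because the table reports per-class scores without per-class sample counts, so a sample-weighted average cannot be recovered.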

| Model Name | Inference Time on Local Server |
|---|---|
| Faster RCNN ResNet50 | 95 ms |
| Faster RCNN ResNet101 | 300 ms |
| Faster RCNN Inception-ResNet-v2 | 119 ms |
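The inference times become easier to interpret when converted into throughput and into how far Raptor moves while a single frame is processed. The sketch below assumes that each reported time is a per-image latency, that frames are processed strictly one after another (no batching or pipelining), and that network transfer time is ignored; the 0.22 m/s value is the maximum linear velocity from the specification table. The helper names are hypothetical.

```python
# Per-image latency (ms) on the local server, transcribed from the table above.
# Assumption: each value is the time to process one image.
LATENCY_MS = {
    "Faster RCNN ResNet50": 95,
    "Faster RCNN ResNet101": 300,
    "Faster RCNN Inception-ResNet-v2": 119,
}

# Maximum linear velocity of Raptor (m/s), from the specification table.
MAX_LINEAR_VELOCITY = 0.22


def frames_per_second(latency_ms: float) -> float:
    """Throughput if frames are processed strictly one after another."""
    return 1000.0 / latency_ms


def travel_per_frame_m(velocity_mps: float, latency_ms: float) -> float:
    """Distance (m) the robot covers while one frame is being processed."""
    return velocity_mps * latency_ms / 1000.0


if __name__ == "__main__":
    for model, ms in LATENCY_MS.items():
        fps = frames_per_second(ms)
        gap_cm = 100 * travel_per_frame_m(MAX_LINEAR_VELOCITY, ms)
        print(f"{model}: {fps:.1f} frames/s, {gap_cm:.1f} cm travelled per frame")
```

Under these assumptions, at full speed the ResNet101 model would analyze a new frame only every 6.6 cm of travel, compared with about 2.6 cm for Inception-ResNet-v2 and 2.1 cm for ResNet50.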

| Drain Type | Class | Summer, Day: TP | Summer, Day: FP | Summer, Night: TP | Summer, Night: FP | After Rainfall, Day: TP | After Rainfall, Day: FP | After Rainfall, Night: TP | After Rainfall, Night: FP |
|---|---|---|---|---|---|---|---|---|---|
| Open | Tree root | 86 | 3 | 83 | 7 | 82 | 5 | 79 | 7 |
| Open | Plant | 85 | 4 | 80 | 6 | 82 | 4 | 79 | 7 |
| Open | Crack | 86 | 3 | 83 | 6 | 83 | 4 | 81 | 8 |
| Open | Pothole | 87 | 4 | 84 | 5 | 83 | 5 | 81 | 5 |
| Open | Bughole | 83 | 5 | 80 | 8 | 79 | 4 | 78 | 6 |
| Open | Deposit | 84 | 5 | 80 | 8 | 79 | 4 | 78 | 5 |
| Semi-closed | Tree root | 85 | 4 | 81 | 6 | 83 | 5 | 80 | 7 |
| Semi-closed | Plant | 84 | 5 | 81 | 8 | 82 | 5 | 80 | 7 |
| Semi-closed | Crack | 87 | 3 | 83 | 6 | 84 | 4 | 82 | 6 |
| Semi-closed | Pothole | 82 | 6 | 80 | 8 | 80 | 5 | 79 | 6 |
| Semi-closed | Bughole | 83 | 4 | 80 | 6 | 81 | 5 | 79 | 7 |
| Semi-closed | Deposit | 83 | 4 | 81 | 5 | 81 | 4 | 80 | 7 |
| Closed | Tree root | 84 | 5 | 80 | 8 | 80 | 4 | 79 | 6 |
| Closed | Plant | 83 | 6 | 80 | 7 | 81 | 6 | 80 | 7 |
| Closed | Crack | 85 | 4 | 81 | 7 | 82 | 5 | 79 | 6 |
| Closed | Pothole | 84 | 5 | 81 | 6 | 81 | 4 | 79 | 6 |
| Closed | Bughole | 86 | 4 | 83 | 6 | 83 | 4 | 78 | 7 |
| Closed | Deposit | 82 | 5 | 80 | 7 | 80 | 5 | 78 | 8 |
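Because the field-trial results are reported as true-positive (TP) and false-positive (FP) counts, detection precision for any cell follows directly as TP / (TP + FP). The sketch below applies this to the summer, daytime, open-drain counts; the pooled figure sums TP and FP over all classes before dividing (a micro average). The choice of condition and the helper names are illustrative, not taken from the paper.

```python
from typing import Dict, Tuple

# (TP, FP) per class for open drains in summer, daytime; transcribed from the table above.
OPEN_SUMMER_DAY: Dict[str, Tuple[int, int]] = {
    "Tree root": (86, 3),
    "Plant": (85, 4),
    "Crack": (86, 3),
    "Pothole": (87, 4),
    "Bughole": (83, 5),
    "Deposit": (84, 5),
}


def precision(tp: int, fp: int) -> float:
    """Fraction of reported detections that were correct: TP / (TP + FP)."""
    if tp + fp == 0:
        raise ValueError("precision is undefined when no detections are reported")
    return tp / (tp + fp)


def pooled_precision(counts: Dict[str, Tuple[int, int]]) -> float:
    """Micro-averaged precision: sum TP and FP over all classes, then divide."""
    total_tp = sum(tp for tp, _ in counts.values())
    total_fp = sum(fp for _, fp in counts.values())
    return precision(total_tp, total_fp)


if __name__ == "__main__":
    for name, (tp, fp) in OPEN_SUMMER_DAY.items():
        print(f"{name}: {100 * precision(tp, fp):.1f}%")
    print(f"Pooled: {100 * pooled_precision(OPEN_SUMMER_DAY):.1f}%")
```

For this condition the pooled precision is 511 / 535, about 95.5%. Recall cannot be computed the same way, since it also requires false-negative counts, which the table does not report.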

| Case Studies | Inspection Type | Algorithm | Classes | Precision (%) |
|---|---|---|---|---|
| Tennakoon et al. [3] | Offline CCTV | ResNet-TL | 5 | 85.00 |
| Kumar et al. [42] | Offline CCTV | 4-layer CNN | 3 | 87.7 |
| Moradi et al. [5] | Offline CCTV | 5-layer CNN | 1 | 78.002 |
| Cheng et al. [4] | Offline CCTV | Modified ZF | 4 | 83.0 |
| Proposed framework | Real-time with Raptor | Faster RCNN Inception-ResNet-v2 | 6 | 92.67 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Palanisamy, P.; Mohan, R.E.; Semwal, A.; Jun Melivin, L.M.; Félix Gómez, B.; Balakrishnan, S.; Elangovan, K.; Ramalingam, B.; Terntzer, D.N. Drain Structural Defect Detection and Mapping Using AI-Enabled Reconfigurable Robot Raptor and IoRT Framework. Sensors 2021, 21, 7287. https://doi.org/10.3390/s21217287
