Joint Constraints Based Dynamic Calibration of IMU Position on Lower Limbs in IMU-MoCap

Abstract

1. Introduction

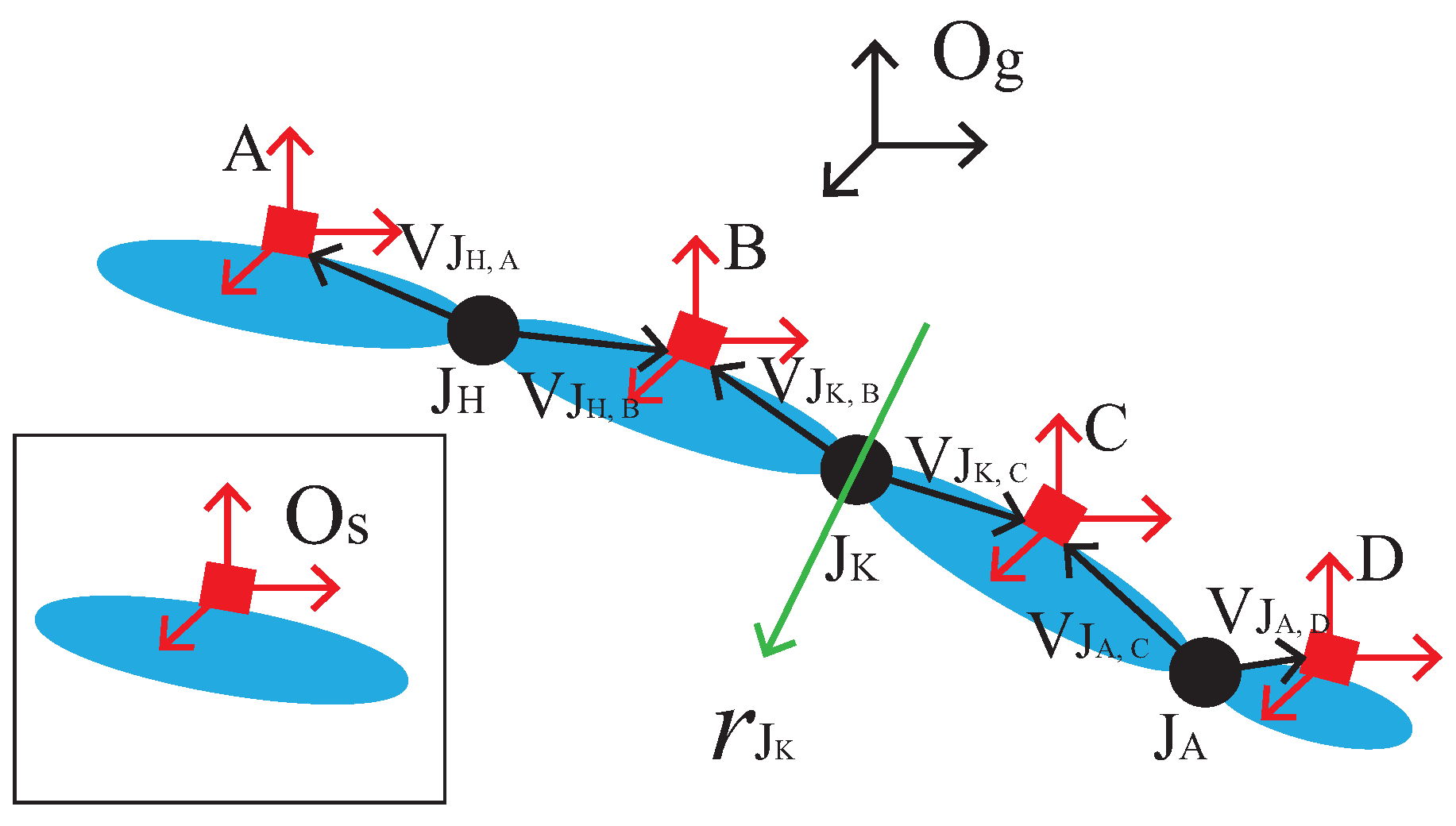

Problem Statement

2. IMU Position Calibration Principle

3. Calibration Algorithm Design

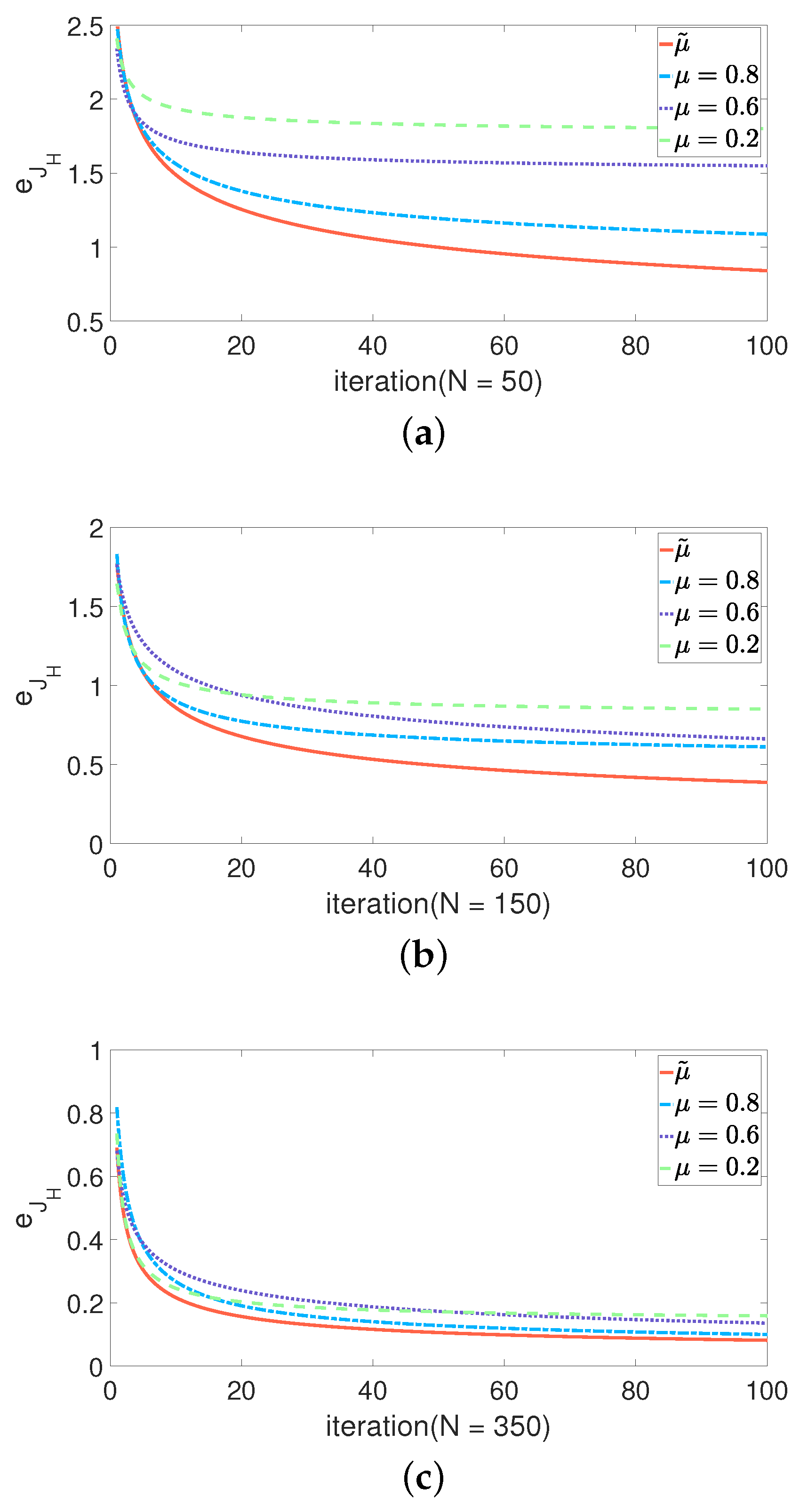

3.1. Gauss–Newton Method for IMUs Position Calibration

3.2. Dynamic Weight Particle Swarm Optimization for IMUs Position Calibration

3.3. Grey Wolf Optimizer for IMUs Position Calibration

4. Calculation of Human Lower Limbs Joint Angles

4.1. Establish the Coordinate System Attached to a Limb

4.2. Joint Angles Calculation

4.3. Single IMU Attitude Fusion

5. Experimental Analysis

5.1. Measurement Equipment

5.2. Data Analysis

5.3. Results and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Algorithm Type | Average (s) | SD |
|---|---|---|
| DWPSO | 1076.1 | 2.01 |
| GWO | 576.3 | 3.76 |
| GN | 1556.4 | 2.98 |

| Subject 1 DWPSO | Subject 1 GWO | Subject 1 GN | Subject 2 DWPSO | Subject 2 GWO | Subject 2 GN | Subject 3 DWPSO | Subject 3 GWO | Subject 3 GN |
|---|---|---|---|---|---|---|---|---|
| 8.65 | 9.09 | 9.36 | 10.63 | 10.97 | 11.05 | 8.17 | 9.65 | 11.90 |
| 3.72 | 6.42 | 7.90 | 5.97 | 7.36 | 12.53 | 3.42 | 7.83 | 9.61 |
| 4.53 | 5.13 | 5.26 | 3.29 | 4.15 | 4.69 | 5.71 | 6.08 | 6.86 |
| 5.77 | 6.86 | 7.45 | 4.35 | 5.61 | 5.99 | 2.06 | 3.41 | 3.76 |
| 1.12 | 3.42 | 5.02 | 6.01 | 7.86 | 10.34 | 4.98 | 6.93 | 9.28 |
| 3.16 | 5.26 | 7.02 | 4.54 | 6.37 | 7.63 | 1.57 | 4.25 | 6.97 |
| 4.03 | 5.69 | 7.36 | 3.81 | 4.08 | 6.62 | 5.43 | 8.62 | 9.45 |
| 5.76 | 6.83 | 7.71 | 3.55 | 5.67 | 9.71 | 3.26 | 4.54 | 4.86 |
| 21.05 | 23.07 | 23.45 | 25.41 | 26.06 | 26.83 | 20.25 | 21.79 | 23.67 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, Q.; Liu, L.; Mei, F.; Yang, C. Joint Constraints Based Dynamic Calibration of IMU Position on Lower Limbs in IMU-MoCap. Sensors 2021, 21, 7161. https://doi.org/10.3390/s21217161
