LiDAR Positioning Algorithm Based on ICP and Artificial Landmarks Assistance

Abstract

1. Introduction

2. Materials and Methods

2.1. Traditional Reflector Positioning Algorithm and Traditional ICP Algorithm

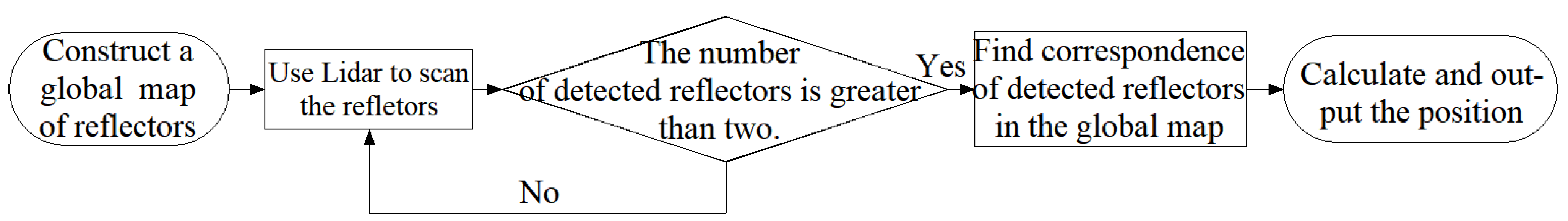

2.1.1. Traditional Reflector Positioning Algorithm

2.1.2. Traditional ICP Algorithm

2.2. ICP Fused with Reflector Positioning

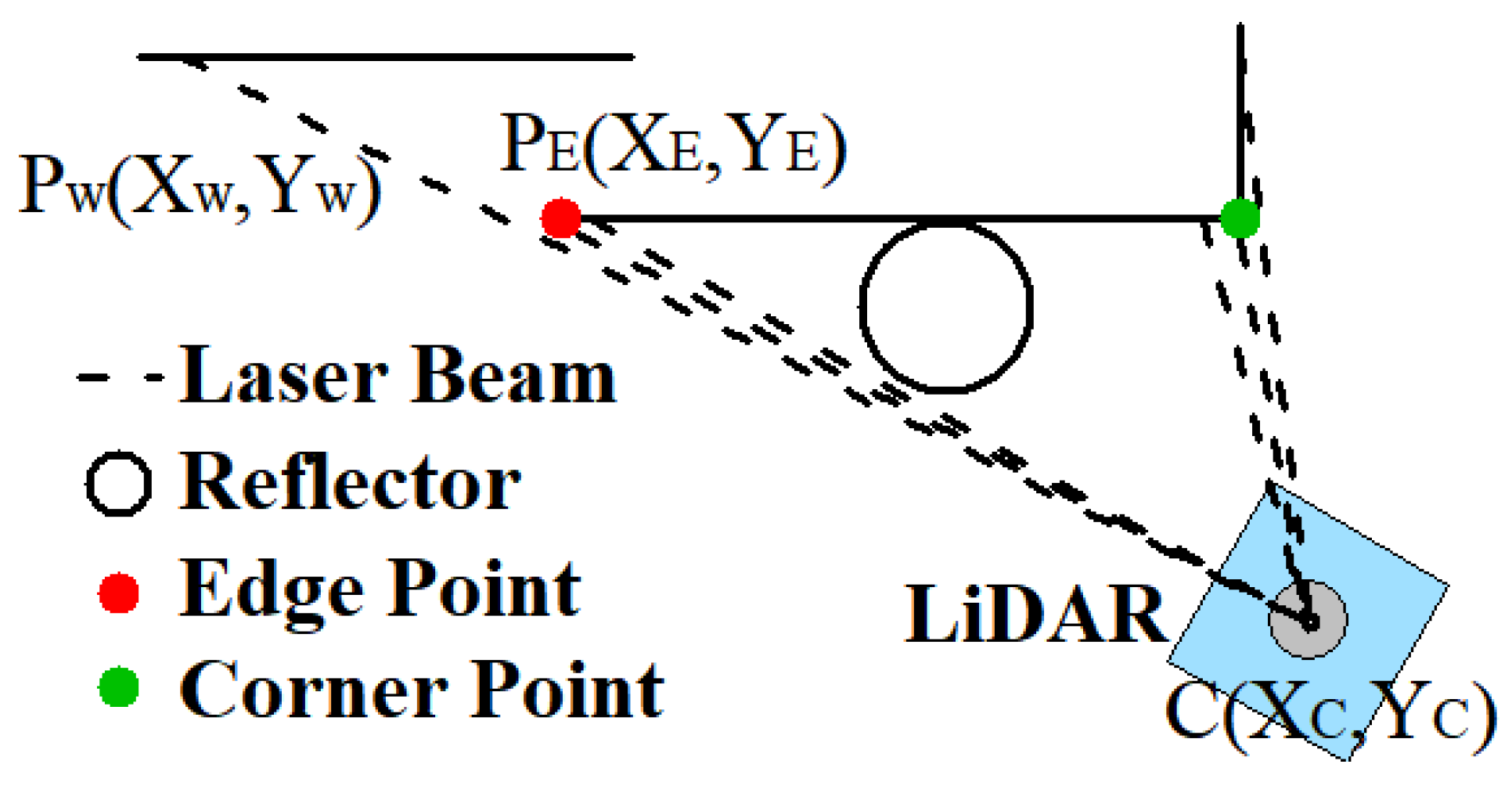

2.2.1. Fitting Reflector Coordinates Based on Least Squares
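This subsection's body is not included here, so the following is only a generic baseline sketch. It assumes the scan points returned by one reflector lie on a circular arc (a cylindrical reflector), which this section does not state. It uses a plain algebraic (Kåsa) least-squares circle fit, not necessarily the authors' formulation, and the function names are hypothetical.

```python
import math


def _solve3(m, v):
    """Solve the 3x3 system m @ p = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    p = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        p[r] = (a[r][3] - sum(a[r][c] * p[c] for c in range(r + 1, 3))) / a[r][r]
    return p


def fit_circle(points):
    """Algebraic (Kasa) least-squares circle fit to (x, y) points.

    Minimises sum((x^2 + y^2 + D*x + E*y + F)^2) over D, E, F and returns
    (cx, cy, r). Points are shifted to their centroid first for better conditioning.
    """
    mx = sum(x for x, _ in points) / len(points)
    my = sum(y for _, y in points) / len(points)
    ata = [[0.0] * 3 for _ in range(3)]  # normal matrix A^T A
    atb = [0.0] * 3                      # right-hand side A^T b
    for x, y in points:
        u, v = x - mx, y - my
        row = (u, v, 1.0)
        rhs = -(u * u + v * v)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = _solve3(ata, atb)
    cu, cv = -d / 2.0, -e / 2.0
    r = math.sqrt(cu * cu + cv * cv - f)
    return cu + mx, cv + my, r
```

Algebraic fits of this kind are known to be biased on short, noisy arcs, so this should be read as a reference point rather than as the method evaluated later.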

2.2.2. Improved Reflector Positioning Method

2.2.3. Optimization of Improved Reflector Positioning Method

2.2.4. Integrate the Pose Transformation into ICP

- Step 1: Obtain consecutive point cloud frames from the LiDAR

- Step 2: Extract the coordinates of reflectors in frame i + 1

- Step 3: If at least three reflectors are detected, construct a triangle set; otherwise, add corner points, edge points, or wall points to the reflector set before constructing the triangle set

- Step 4: Match the triangle sets of frame i and frame i + 1 to obtain the correspondence of reflectors between the two frames

- Step 5: Calculate the initial pose transformation by singular value decomposition (SVD)

- Step 6: Use the initial pose transformation as the starting value of the ICP iteration and run ICP to calculate the final pose transformation

- Step 7: Add the final pose transformation to the previous position of the LiDAR

- Step 8: Return to Step 1 and repeat the above steps for the next frame.
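Steps 5 and 6 can be sketched as follows. This is a minimal illustration under assumptions not stated in the source: 2D points are (x, y) tuples, reflector correspondences are already known from Step 4, nearest neighbours are found by brute force, and ICP is the plain point-to-point variant. `rigid_fit_2d` uses the closed-form 2D least-squares rotation, which for a proper rotation gives the same result as the SVD-based solution named in Step 5. All function names are hypothetical.

```python
import math


def rigid_fit_2d(src, dst):
    """Least-squares 2D rigid transform (theta, tx, ty) mapping paired src points onto dst.

    In 2D this closed form equals the SVD-based solution for a proper rotation.
    """
    n = len(src)
    msx = sum(p[0] for p in src) / n
    msy = sum(p[1] for p in src) / n
    mdx = sum(q[0] for q in dst) / n
    mdy = sum(q[1] for q in dst) / n
    c = s = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - msx, ay - msy, bx - mdx, by - mdy
        c += ax * bx + ay * by  # coefficient of cos(theta) in the objective
        s += ax * by - ay * bx  # coefficient of sin(theta) in the objective
    theta = math.atan2(s, c)
    ct, st = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the destination centroid
    return theta, mdx - (ct * msx - st * msy), mdy - (st * msx + ct * msy)


def apply_pose(pose, p):
    """Rotate point p by theta, then translate by (tx, ty)."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    return c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty


def compose(a, b):
    """Pose equivalent to applying b first and then a."""
    x, y = apply_pose(a, (b[1], b[2]))
    return a[0] + b[0], x, y


def icp(src, dst, init, iters=30, tol=1e-10):
    """Point-to-point ICP starting from the initial pose `init` (Step 6)."""
    pose = init
    for _ in range(iters):
        moved = [apply_pose(pose, p) for p in src]
        # Brute-force nearest neighbour in dst for every moved source point
        nearest = [min(dst, key=lambda q: (q[0] - m[0]) ** 2 + (q[1] - m[1]) ** 2)
                   for m in moved]
        step = rigid_fit_2d(moved, nearest)
        pose = compose(step, pose)
        if abs(step[0]) < tol and math.hypot(step[1], step[2]) < tol:
            break
    return pose


if __name__ == "__main__":
    true_pose = (0.1, 0.3, -0.2)
    scan = [(1.0, 0.0), (1.5, 0.2), (2.0, 0.0), (2.0, 0.6), (1.2, 1.1),
            (0.4, 1.5), (-0.3, 1.0), (-0.8, 0.2), (-0.5, -0.9), (0.6, -1.2)]
    prev_scan = [apply_pose(true_pose, p) for p in scan]
    refl = [0, 4, 8]  # hypothetical indices of matched reflectors
    init = rigid_fit_2d([scan[k] for k in refl], [prev_scan[k] for k in refl])
    print(icp(scan, prev_scan, init))
```

Seeding ICP with the reflector-based estimate keeps the initial nearest-neighbour pairs close to correct, which is the motivation Step 6 gives for providing an initial value.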

| Algorithm 1. Improved least-squares calculation of reflector coordinates |
|---|
| Input: PCL, the original point clouds obtained from the LiDAR |
| Output: P_LiDAR, the position of the LiDAR |
| 1: Initialize: i ← 1, n ← 0, P_LiDAR[0] ← 0 |
| 2: while true do |
| 3: Detect the reflectors in PCL[i], calculate their coordinates, and construct the reflector set R; n ← number of reflectors |
| 4: if n ≥ 1 then |
| 5: if n > 2 then |
| 6: Construct the triangle set Tri |
| 7: if n ≤ 2 then |
| 8: Add edge points, corner points, or wall points to R |
| 9: Construct the triangle set Tri |
| 10: Compare the current set Tri with the previous set Tri′ and find the correspondence of reflectors |
| 11: Calculate the initial transformation (rotation matrix and translation vector) |
| 12: else |
| 13: Initialize the rotation matrix and translation vector without reflector information |
| 14: end if |
| 15: Provide the initial rotation matrix and translation vector as the starting values of the ICP iteration |
| 16: Use the ICP algorithm to calculate the final rotation matrix and translation vector |
| 17: Output the position of the LiDAR: P_LiDAR[i] ← P_LiDAR[i − 1] + (final rotation matrix, final translation vector) |
| 18: i ← i + 1, n ← 0 |
| 19: end while |
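Rows 6 to 10 of Algorithm 1 do not say how two triangle sets are compared. One plausible approach, assumed here and not taken from the source, describes each triangle by its sorted side lengths (invariant under rotation and translation), orders its vertices by the length of the opposite side, and lets every congruent triangle pair vote for vertex correspondences. The tolerance `tol` and the function names are hypothetical.

```python
import itertools
import math


def triangle_set(refl):
    """All triangles over reflector indices as (sorted side lengths, vertices).

    Vertices are ordered by the length of their opposite side, shortest first,
    so congruent triangles list corresponding vertices in the same order.
    """
    tris = []
    for ids in itertools.combinations(range(len(refl)), 3):
        opp = []
        for k in range(3):
            a, b = [ids[j] for j in range(3) if j != k]
            opp.append((math.dist(refl[a], refl[b]), ids[k]))
        opp.sort()
        tris.append((tuple(d for d, _ in opp), tuple(v for _, v in opp)))
    return tris


def match_reflectors(prev, curr, tol=0.02):
    """Map current reflector index -> previous reflector index via congruent triangles."""
    votes = {}
    prev_tris = triangle_set(prev)
    for sides_c, verts_c in triangle_set(curr):
        for sides_p, verts_p in prev_tris:
            if all(abs(a - b) < tol for a, b in zip(sides_c, sides_p)):
                for vc, vp in zip(verts_c, verts_p):
                    votes[(vc, vp)] = votes.get((vc, vp), 0) + 1
    # Greedily accept the most-voted pairs, keeping the mapping one-to-one
    best = {}
    for (vc, vp), _ in sorted(votes.items(), key=lambda kv: -kv[1]):
        if vc not in best and vp not in best.values():
            best[vc] = vp
    return best
```

This descriptor becomes ambiguous for isosceles triangles or regular reflector layouts that produce repeated congruent triangles, so a practical system would need extra checks in those cases.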

3. Results

3.1. Platform Construction and Data Collection

3.2. Experiment on Fitting the Reflector Coordinates

3.3. Experiment with the Proposed Positioning Algorithm

4. Discussion

4.1. Performance of Fitting the Reflector Coordinates

4.2. Performance of the Proposed Positioning Algorithm

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Frame_id | X (m) | Y (m) | Angle (°) |
|---|---|---|---|
| 1 | −0.647 | 0.224 | 356.46 |
| 2 | −0.647 | 0.225 | 356.46 |
| 3 | −0.646 | 0.225 | 356.46 |
| 4 | −0.646 | 0.228 | 356.49 |
| 5 | −0.648 | 0.224 | 356.46 |
| 6 | −0.648 | 0.226 | 356.47 |
| 7 | −0.648 | 0.227 | 356.48 |
| 8 | −0.646 | 0.222 | 356.44 |
| 9 | −0.647 | 0.224 | 356.45 |
| 10 | −0.646 | 0.223 | 356.45 |
| 11 | −0.649 | 0.225 | 356.45 |
| 12 | −0.648 | 0.225 | 356.46 |
| 13 | −0.647 | 0.224 | 356.48 |
| 14 | −0.647 | 0.224 | 356.51 |
| 15 | −0.645 | 0.224 | 356.51 |
| … | … | … | … |
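Assuming these frames were recorded with the LiDAR held still (the table caption is not included here), the spread of the listed poses gives a rough measure of repeatability. The sketch below computes the mean, population standard deviation, and peak-to-peak range of each column over the 15 listed frames only; the rows elided with "…" are not included, and the names `frames` and `spread` are illustrative.

```python
import statistics

# (x [m], y [m], angle [deg]) for frames 1-15 as listed in the table above
frames = [
    (-0.647, 0.224, 356.46), (-0.647, 0.225, 356.46), (-0.646, 0.225, 356.46),
    (-0.646, 0.228, 356.49), (-0.648, 0.224, 356.46), (-0.648, 0.226, 356.47),
    (-0.648, 0.227, 356.48), (-0.646, 0.222, 356.44), (-0.647, 0.224, 356.45),
    (-0.646, 0.223, 356.45), (-0.649, 0.225, 356.45), (-0.648, 0.225, 356.46),
    (-0.647, 0.224, 356.48), (-0.647, 0.224, 356.51), (-0.645, 0.224, 356.51),
]


def spread(values):
    """Return (mean, population standard deviation, peak-to-peak range)."""
    return statistics.fmean(values), statistics.pstdev(values), max(values) - min(values)


for name, column in zip(("X (m)", "Y (m)", "Angle (deg)"), zip(*frames)):
    mean, std, rng = spread(column)
    print(f"{name}: mean {mean:.4f}, std {std:.4f}, range {rng:.4f}")
```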

| | NAV350 | Proposed Method | Least Squares |
|---|---|---|---|
| Average length of the 1st line (m) | 1.0365 | 1.0367 | 1.0275 |
| Average length of the 2nd line (m) | 1.5428 | 1.5450 | 1.5235 |
| Average length of the 3rd line (m) | 1.8167 | 1.8170 | 1.7898 |
| Average error of the 1st line (m) | / | 0.0002 | −0.0090 |
| Average error of the 2nd line (m) | / | 0.0022 | −0.0193 |
| Average error of the 3rd line (m) | / | 0.0003 | −0.0269 |
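The error rows appear to be each method's average length minus the NAV350 average length, with NAV350 serving as the reference. A short check of that arithmetic follows. The list names are illustrative, and the values are transcribed from the length rows.

```python
# Average line lengths (m) transcribed from the table above
nav350 = [1.0365, 1.5428, 1.8167]  # treated as the reference
proposed = [1.0367, 1.5450, 1.8170]
least_squares = [1.0275, 1.5235, 1.7898]


def signed_errors(measured, reference):
    """Measured minus reference, rounded to the table's four decimal places."""
    return [round(m - r, 4) for m, r in zip(measured, reference)]


print(signed_errors(proposed, nav350))       # [0.0002, 0.0022, 0.0003]
print(signed_errors(least_squares, nav350))  # [-0.009, -0.0193, -0.0269]
```

The least-squares errors reproduce the table's −0.0090, −0.0193, and −0.0269 m. The proposed method's errors stay below 0.003 m for all three lines.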

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, Q.; Kan, Y.; Tao, X.; Hu, Y. LiDAR Positioning Algorithm Based on ICP and Artificial Landmarks Assistance. Sensors 2021, 21, 7141. https://doi.org/10.3390/s21217141
