1. Introduction

With the rapid development of digital cameras, computer vision systems are increasingly applied in various fields, such as visual navigation for robotics, and visual odometry algorithms have improved greatly. Direct methods, such as LSD-SLAM (Large-Scale Direct Monocular SLAM) [1], DSO (Direct Sparse Odometry) [2], LDSO (Direct Sparse Odometry with Loop Closure) [3], Stereo DSO [4], and SO-DSO (Scale Optimized Direct Sparse Odometry) [5], have been proposed. These methods assume that the same scene point has the same pixel value in different frames. However, for auto-exposure cameras, which adapt to different lighting environments, the observed pixel value of the same scene point may change over the course of a sequence. This degrades the precision of direct visual odometry algorithms.

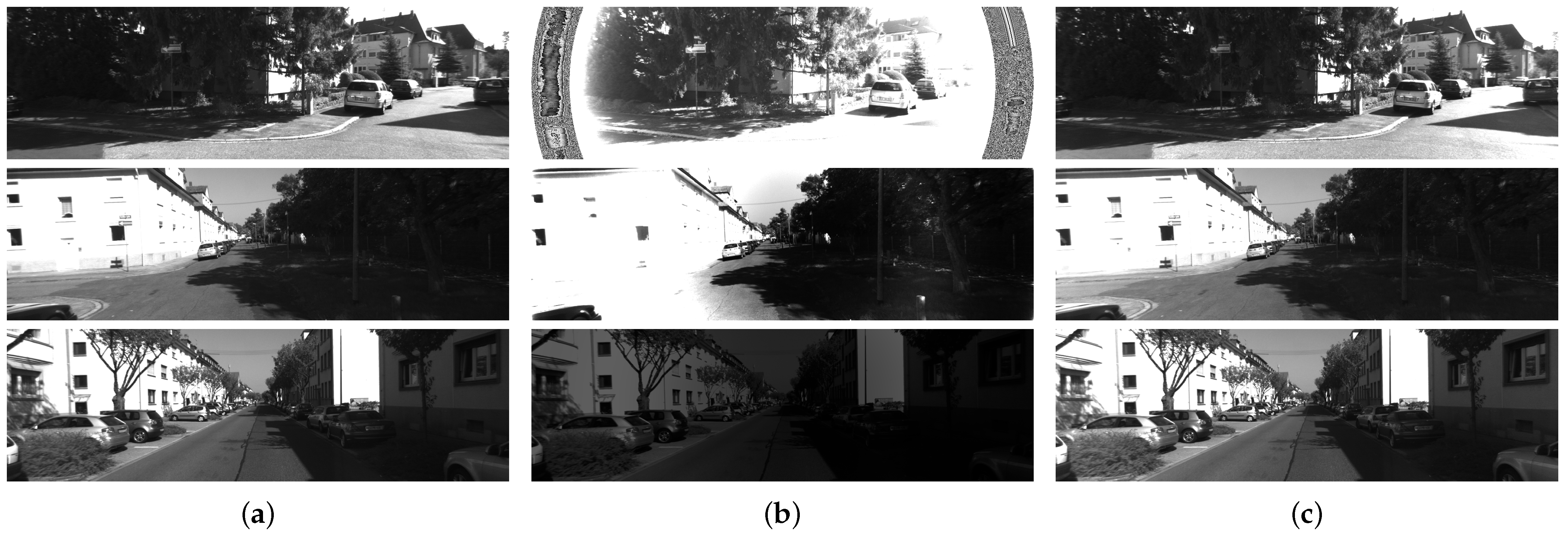

Worse yet, there is an irradiance fall-off phenomenon called vignetting: the irradiance decreases toward the periphery of the image compared with the image center. This means that, even if the exposure time is fixed, the pixel value of a scene point changes when the camera moves and the projected location of the point in the image changes.

Prior photometric camera calibration has been shown to improve the performance of DSO. Therefore, it is necessary to align the brightness of the same scene point across frames, and for this purpose the vignetting effect must be estimated. Furthermore, the relationship between the irradiance captured by the camera and the output image brightness is often nonlinear, which makes the estimation more complex. The camera response function (CRF) describes the relationship between the irradiance and the image brightness.

There are also other color correction factors, such as white balance correction for multi-camera systems. Most visual odometry systems process only grayscale images or one channel of color images. Thus, we only discuss grayscale images in this work.

In some cases, the response function and vignetting function are estimated beforehand. However, to estimate the response function in this way, the camera and the scene objects must remain static, and the environment light source must be fixed. In fact, if the exposure time and other parameters change during recording, it is more desirable to estimate the response function from the captured images [6]. For the vignetting function, a plane of uniform radiance is usually used as a reference target [7], and a function relating pixel brightness to image location is established. However, pre-calibration is sometimes not possible, for example when the exposure time cannot be set manually. In addition, changes in aperture or focus affect the vignetting, so a function calibrated under fixed settings may not suit different operating conditions.

There are also many calibration methods that work on captured images without special patterns. In some multi-view systems and image-stitching tasks, the overlapping area provides many correspondences, so we can analyze how the brightness of one object point changes across views or images. A. Litvinov et al. [8] propose a linear least-squares solution for the response and vignetting functions, using correspondences obtained from panorama stitching. In a visual odometry system, however, the camera usually moves forward quickly, and drastic changes in perspective degrade the performance of image-stitching methods. S. J. Kim et al. [9] propose a method to estimate the radiometric response function of the camera and the exposure difference between frames with feature tracking. M. Grundmann et al. [10] assume that the camera response function changes with scene content and exposure and propose a self-calibration model for a time-varying mixture of responses. These methods assume that the motion along the track is short and that no vignetting is present. To improve the performance of monocular direct visual odometry, P. Bergmann et al. [11] propose an energy function that estimates the response and vignetting jointly, using correspondences obtained from a KLT tracker. In direct visual odometry with stereo cameras [4], the exposure differences between neighboring frames are estimated without considering vignetting effects.

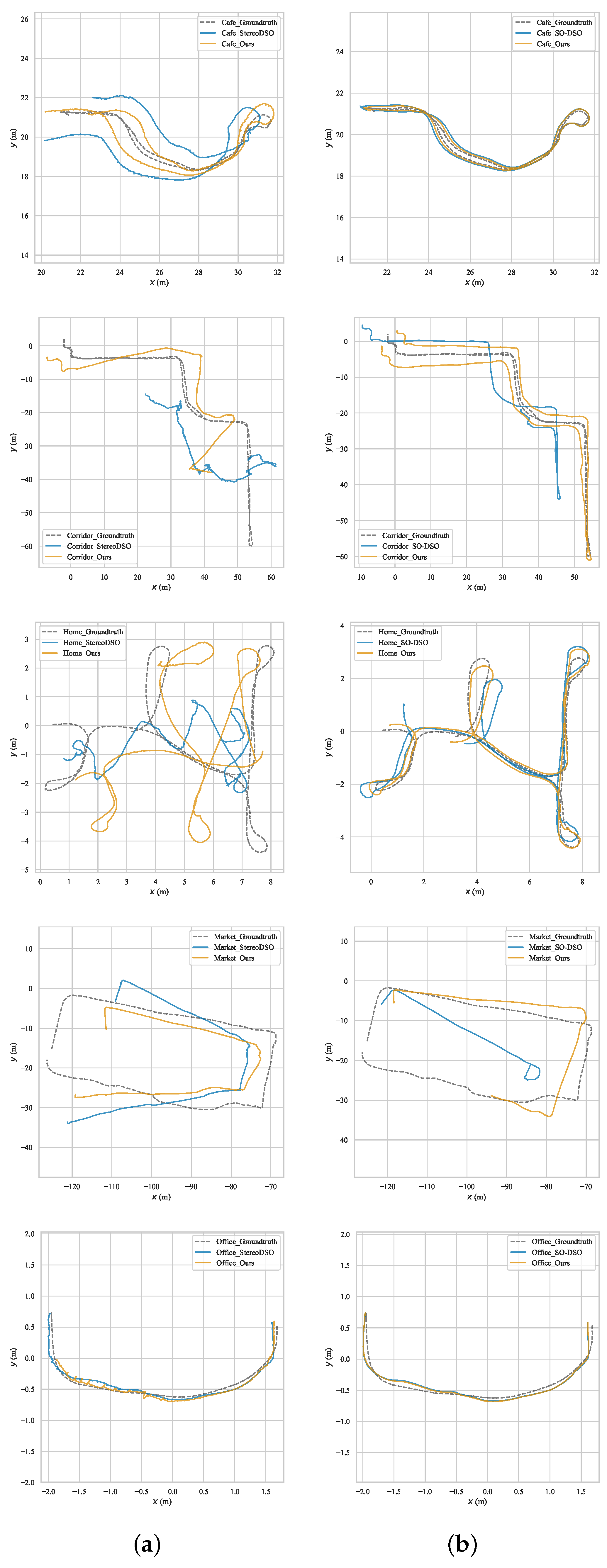

In this paper, we propose a new calibration method that estimates the vignetting function and the exposure time ratios between frames from a stereo camera used in direct visual odometry. Feature point tracking and stereo matching provide the correspondences for solving the energy function. The method is suitable for cameras with a gamma-like response function. During visual odometry processing, we correct the captured images with the inverse vignetting function and the exposure time ratios. We tested our algorithm on open datasets and on image sequences captured by a stereo camera.

2. Method

2.1. Photometric Model

In this paper, we assume that every object in the scene has a Lambertian surface. The radiance of a Lambertian surface toward a camera is the same regardless of the camera's viewing angle. Although some objects may not follow Lambertian reflectance, the solution of the energy equation remains robust as long as we obtain enough point pairs on Lambertian surfaces. Let $L$ be the radiance of a point in the scene and $t$ the exposure time of the camera; in the ideal situation, the irradiance of the point at the sensor is $tL$. However, the vignetting effect appears in most camera systems. We use $V(\mathbf{x})$ to describe the vignetting effect of the camera, where $\mathbf{x}$ is the location of the point on the image sensor. The real irradiance of the point at the sensor is then $t\,V(\mathbf{x})\,L$.

To adapt the image taken by a camera to a color space standard, a digital camera normally uses a nonlinear response function to transform the irradiance on the sensor into pixel brightness values. In practice, the response function depends on the camera device and is designed to generate visually preferable images. The function $f$ describes the CRF. For an 8-bit image, which is commonly used in image processing, the range of $f$ is $[0, 255]$. Here, we normalize the range to $[0, 1]$ for convenience. The brightness value of a scene point in the image captured by the camera can be written as

$$I(\mathbf{x}) = f\left(t\,V(\mathbf{x})\,L\right).$$

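For the $i$-th tracked scene point, observed at location $\mathbf{x}_i^k$ in the $k$-th frame with exposure time $t_k$, the value in the image can be written as

$$I_k(\mathbf{x}_i^k) = f\left(t_k\,V(\mathbf{x}_i^k)\,L_i\right).$$

Here, $L_i$ is a fixed value for the object corresponding to the pixel $\mathbf{x}_i^k$, based on the Lambertian reflection assumption. We then get

$$f^{-1}\left(I_k(\mathbf{x}_i^k)\right) = t_k\,V(\mathbf{x}_i^k)\,L_i.$$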

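A natural choice is an energy equation of the following form:

$$E = \sum_{i\in P}\sum_{k=1}^{n}\left(f^{-1}\left(I_k(\mathbf{x}_i^k)\right) - t_k\,V(\mathbf{x}_i^k)\,L_i\right)^2.$$

Here, $n$ is the total number of frames used and $P$ is the set of tracked points. As $L_i$ is unknown, we eliminate it by comparing two observations of the same point in frames $j$ and $k$, which changes the energy equation to

$$E = \sum_{i\in P}\sum_{j=1}^{n}\sum_{k=j+1}^{n}\left(V(\mathbf{x}_i^j)\,f^{-1}\left(I_k(\mathbf{x}_i^k)\right) - \frac{t_k}{t_j}\,V(\mathbf{x}_i^k)\,f^{-1}\left(I_j(\mathbf{x}_i^j)\right)\right)^2.$$

Since only the ratios $t_k/t_j$ appear, we cannot recover the real exposure times, only the ratios of exposure times between frames.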
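As a minimal sketch (not the authors' implementation), the $L_i$-free residual $V(\mathbf{x}_i^j)\,f^{-1}(I_k) - \frac{t_k}{t_j}\,V(\mathbf{x}_i^k)\,f^{-1}(I_j)$, obtained by eliminating $L_i$ between two observations of the same point, can be evaluated directly. It assumes a gamma-curve CRF $f(l) = l^{\gamma}$ and known vignetting values at each observation; the helper names `pair_residual` and `energy` and the dictionary layout are hypothetical.

```python
def pair_residual(i_j, i_k, v_j, v_k, t_j, t_k, gamma):
    """Residual V(x_j) f^-1(I_k) - (t_k / t_j) V(x_k) f^-1(I_j) for one point
    seen in frames j and k, with the assumed CRF f(l) = l**gamma."""
    irr_j = i_j ** (1.0 / gamma)  # f^-1(I_j) = t_j * V(x_j) * L
    irr_k = i_k ** (1.0 / gamma)  # f^-1(I_k) = t_k * V(x_k) * L
    return v_j * irr_k - (t_k / t_j) * v_k * irr_j


def energy(observations, exposures, gamma):
    """Sum of squared residuals over all frame pairs (j < k) of every point.

    observations: list with one dict per tracked point, mapping
                  frame index -> (brightness, vignetting value at that pixel).
    exposures:    dict mapping frame index -> exposure time t_k.
    """
    total = 0.0
    for obs in observations:
        frames = sorted(obs)
        for a, j in enumerate(frames):
            for k in frames[a + 1:]:
                (i_j, v_j), (i_k, v_k) = obs[j], obs[k]
                r = pair_residual(i_j, i_k, v_j, v_k, exposures[j], exposures[k], gamma)
                total += r * r
    return total
```

Because every term depends on $t_k/t_j$ only, multiplying all exposure times by the same constant leaves `energy` unchanged, which is why only exposure ratios can be recovered.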

2.2. Vignetting Function Model

In our method, a polynomial $G(r)$ is used to approximate the vignetting correction function, where $r$ is the normalized distance from the image point to the optical center of the image. If the optical center is not known, the image center is used instead. In most SLAM datasets and stereo camera systems, the optical center is already required for odometry-related processing, so we can treat its location as known information.
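A minimal sketch of the radius computation and of a polynomial correction function follows. The normalization (dividing by the distance from the center to the farthest image corner, so that $r \in [0, 1]$), the pixel-center convention for the image center, and the coefficient list `coeffs` are assumptions; the text does not state the polynomial's degree or how the radius is normalized.

```python
import math


def normalized_radius(u, v, width, height, center=None):
    """Distance of pixel (u, v) to the optical center, divided by the distance
    from that center to the farthest image corner, so that r lies in [0, 1].
    If the optical center is unknown (center=None), the image center is used."""
    if center is None:
        center = ((width - 1) / 2.0, (height - 1) / 2.0)
    cx, cy = center
    corners = [(0, 0), (width - 1, 0), (0, height - 1), (width - 1, height - 1)]
    r_max = max(math.hypot(x - cx, y - cy) for x, y in corners)
    return math.hypot(u - cx, v - cy) / r_max


def correction(r, coeffs):
    """Hypothetical polynomial vignetting correction G(r) = sum_m coeffs[m] * r**m."""
    return sum(a * r ** m for m, a in enumerate(coeffs))
```

Once the coefficients are known, multiplying a pixel's brightness by `correction(normalized_radius(u, v, w, h), coeffs)` compensates the radial fall-off at that pixel.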

2.3. Response Function Model

Grossberg and Nayar [12] introduced a diverse database of real-world camera response functions (DoRF). They combine basis functions derived from DoRF to create a low-parameter Empirical Model of Response (EMoR):

$$f(l) = f_0(l) + \sum_{n=1}^{N} c_n\,h_n(l).$$

Here, $f_0$ is the mean response function, and $c_n$ is the coefficient of each basis function $h_n$. Because many camera manufacturers design the response to be a gamma curve, Grossberg and Nayar also included a few gamma curves, chosen from a range of $\gamma$ values, in the database.

Then, we consider a camera whose CRF has the form

$$f(l) = l^{\gamma},$$

where $l$ is the normalized irradiance, $l \in [0, 1]$, and $\gamma > 0$. We then have $f(0) = 0$, $f(1) = 1$, and $f$ is a monotonically increasing function.

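We assume that there is an ideal solution with a response function $f(l) = l^{\gamma}$, a vignetting function $V(\mathbf{x})$, and an exposure time $t_k$ for the $k$-th frame. With this solution, we get

$$I_k(\mathbf{x}_i^k) = \left(t_k\,V(\mathbf{x}_i^k)\,L_i\right)^{\gamma}.$$

Then, for another $\gamma'$, a given constant different from $\gamma$, we can construct another solution:

$$f'(l) = l^{\gamma'},\qquad V'(\mathbf{x}) = V(\mathbf{x})^{\gamma/\gamma'},\qquad t_k' = t_k^{\gamma/\gamma'},\qquad L_i' = L_i^{\gamma/\gamma'}.$$

With this solution, we also get

$$I_k(\mathbf{x}_i^k) = \left(t_k'\,V'(\mathbf{x}_i^k)\,L_i'\right)^{\gamma'} = \left(t_k\,V(\mathbf{x}_i^k)\,L_i\right)^{\gamma}.$$

Thus, without an extra assumption, the equation has infinitely many solutions.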
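The ambiguity can be checked numerically. The short sketch below, with made-up values and hypothetical helper names, evaluates the pixel value for a solution $(\gamma, V, t_k, L_i)$ and for its transformed counterpart, in which $V$, $t_k$, and $L_i$ are raised to the power $\gamma/\gamma'$ and the CRF exponent becomes $\gamma'$.

```python
def brightness(gamma, v, t, radiance):
    """Pixel value I = (t * V * L) ** gamma under a gamma-curve CRF."""
    return (t * v * radiance) ** gamma


def transformed(gamma, gamma_new, v, t, radiance):
    """Map (gamma, V, t, L) to (gamma', V**s, t**s, L**s) with s = gamma / gamma'."""
    s = gamma / gamma_new
    return gamma_new, v ** s, t ** s, radiance ** s


# Two made-up solutions and their transformed counterparts.
for gamma, gamma_new, v, t, radiance in [(2.2, 1.0, 0.8, 0.5, 0.6), (0.45, 1.7, 0.95, 0.9, 0.3)]:
    original = brightness(gamma, v, t, radiance)
    alternative = brightness(*transformed(gamma, gamma_new, v, t, radiance))
    print(f"{original:.12f} {alternative:.12f}")
```

On each line, the two printed values agree to floating-point precision, so pixel values alone cannot distinguish the two solutions.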

We also tried several other kinds of functions to model the response function, such as polynomials, a power function (gamma curve) with a constant term, and the functions in Reference [12]. We tested these models on different datasets, and all of them gave unstable results.

2.4. Assumptions

Usually, the left and right cameras in a stereo camera system have the same light-sensitive components and the same type of lens. Some stereo camera systems have hardware synchronization that makes the exposure time settings of the cameras exactly the same. Even without hardware synchronization, the same auto-exposure algorithm and highly overlapping fields of view make the exposure times of the left and right cameras almost the same in most conditions. In our method, the response functions of the left and right cameras are assumed to be the same. Although each lens differs slightly, we still assume that the vignetting effects are the same.

Gamma correction is a widely used nonlinear operation for encoding and decoding luminance or tristimulus values in video or still image systems, compensating for the tone reproduction curve of displays [13]. Image sensors used in industrial applications usually have a gamma correction parameter in their settings. Some cameras for image processing have a linear CRF, which corresponds to a gamma curve with $\gamma = 1$. Thus, in our method, we assume that the CRF is described by a gamma curve. In the previous section, we showed that, if there is an ideal solution for the energy equation, then for any $\gamma'$ we can obtain another solution. In particular, we set the CRF to $f(l) = l$, i.e., $\gamma' = 1$. Although we cannot recover the real solution, we can use this special solution to map the pixels in different frames that correspond to the same object to the same brightness value.
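Substituting $\gamma' = 1$ into the transformed solution $V' = V^{\gamma/\gamma'}$, $t_k' = t_k^{\gamma/\gamma'}$, $L_i' = L_i^{\gamma/\gamma'}$ makes this argument concrete. The short derivation assumes the gamma-curve CRF and the Lambertian model of Section 2.1:

$$f'(l) = l,\qquad t_k' = t_k^{\gamma},\qquad V'(\mathbf{x}) = V(\mathbf{x})^{\gamma},\qquad L_i' = L_i^{\gamma},$$

$$\frac{I_k(\mathbf{x}_i^k)}{t_k'\,V'(\mathbf{x}_i^k)} = \frac{\left(t_k\,V(\mathbf{x}_i^k)\,L_i\right)^{\gamma}}{t_k^{\gamma}\,V(\mathbf{x}_i^k)^{\gamma}} = L_i^{\gamma}.$$

The right-hand side depends only on the scene point $i$, not on the frame $k$, so dividing each pixel by the estimated $t_k'$ and $V'$ maps every observation of the same point to one value, even though the true $\gamma$ remains unknown.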

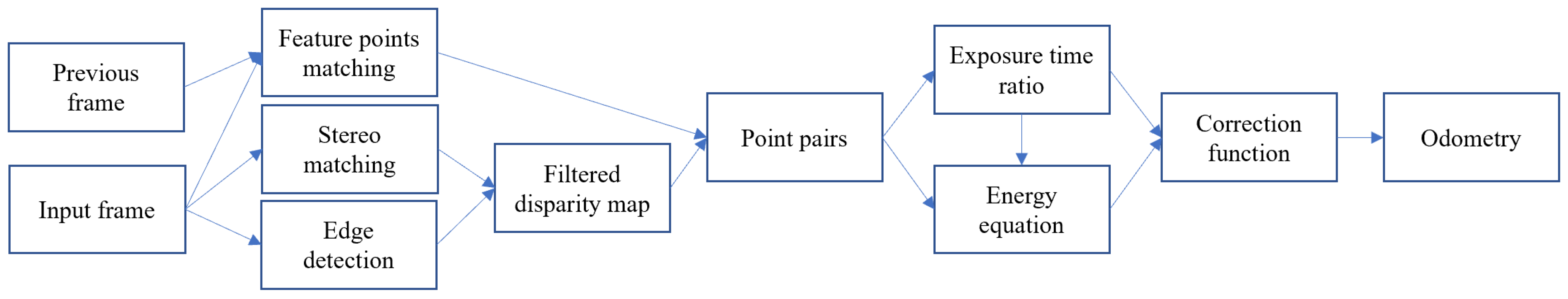

Figure 1 shows the flow chart of the whole process.

2.5. Stereo Matching

Stereo matching algorithms have developed greatly in recent years. We use stereo matching between image pairs in the initialization step of the algorithm to obtain a quick estimate of the vignetting function. Here, we focus on computational cost; the accuracy of each point and the density of the disparity image matter less because we do not use the disparity to calculate depth. We choose semi-global matching [14] for stereo matching. It is a mature algorithm that can be implemented on a GPU. The intrinsic and extrinsic parameters of the stereo camera are required before stereo matching; they are usually provided in a visual odometry system, so it is reasonable to assume that they are known. During stereo matching, each point gets a cost value that measures the confidence of the match, and pixels with high confidence should usually be chosen. In stereo matching, edge points and corner points often get higher confidence values. However, the disparity is a sub-pixel value and the brightness changes sharply in edge areas, so it is difficult to obtain exact brightness correspondences there. Because the applied method is dense matching, we still obtain enough correspondences without selecting points in edge areas. To remove pixels in edge areas from the data used for estimation, the Canny detector [15] is applied to extract edges. Then, we apply a blur kernel to the edge image; the pixel value of the blurred image indicates how close the pixel is to an edge (the larger the value, the nearer the edge). Finally, we select pixels that have high confidence and lie far from edges to obtain correspondences.
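A minimal sketch of this pixel-selection rule in plain Python follows. Assumptions: the binary edge map (1 on edges, 0 elsewhere) has already been computed, for example by the Canny detector; a box filter stands in for the unspecified blur kernel; the confidence map is oriented so that larger means more reliable (a matching cost, where lower is better, would need to be converted first); `select_pixels`, `min_conf`, `max_edge`, and `radius` are hypothetical names and parameters.

```python
def box_blur(img, radius):
    """Mean filter over a (2*radius+1)^2 window, clipped at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            vals = [img[yy][xx] for yy in ys for xx in xs]
            out[y][x] = sum(vals) / len(vals)
    return out


def select_pixels(edges, confidence, min_conf, max_edge, radius=2):
    """Return (x, y) of pixels whose confidence is at least min_conf and whose
    blurred edge response is at most max_edge, i.e. pixels away from edges."""
    near_edge = box_blur(edges, radius)  # larger value = closer to an edge
    return [(x, y)
            for y, row in enumerate(confidence)
            for x, c in enumerate(row)
            if c >= min_conf and near_edge[y][x] <= max_edge]
```

With `max_edge=0.0`, a pixel is kept only if no edge pixel falls inside its blur window; raising `max_edge` or lowering `radius` keeps more pixels closer to edges.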

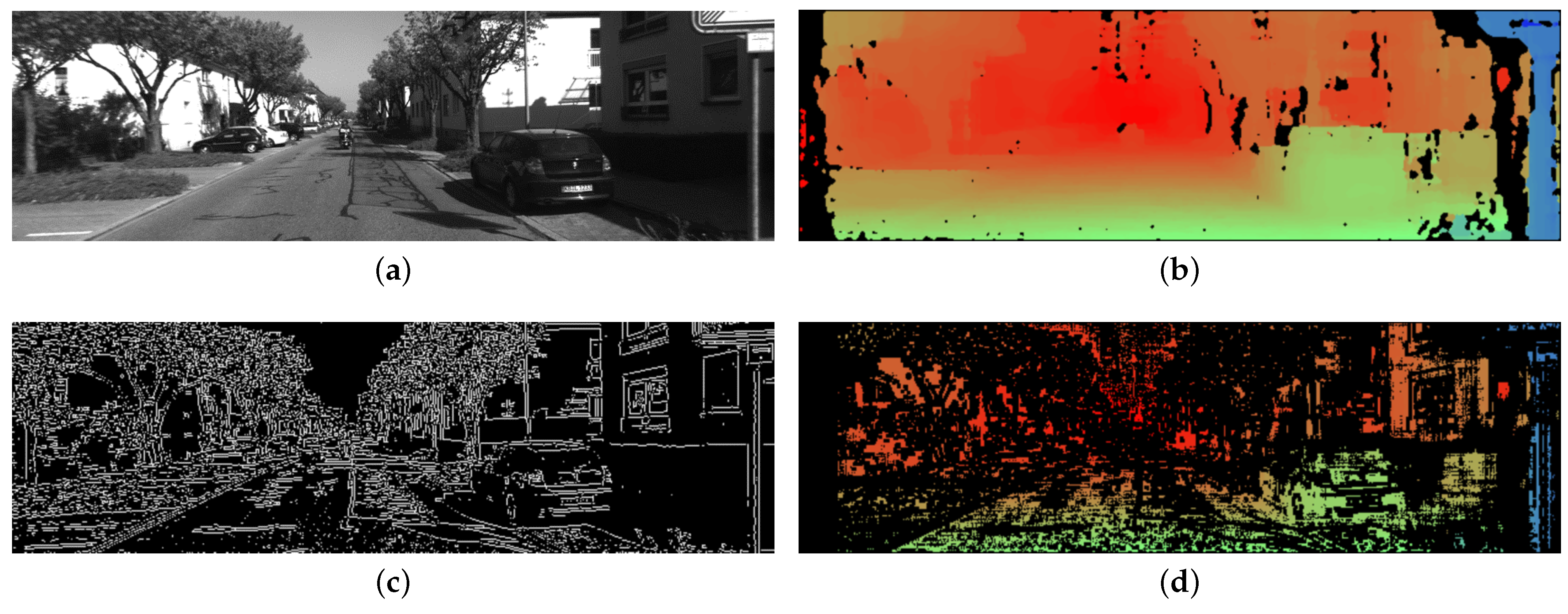

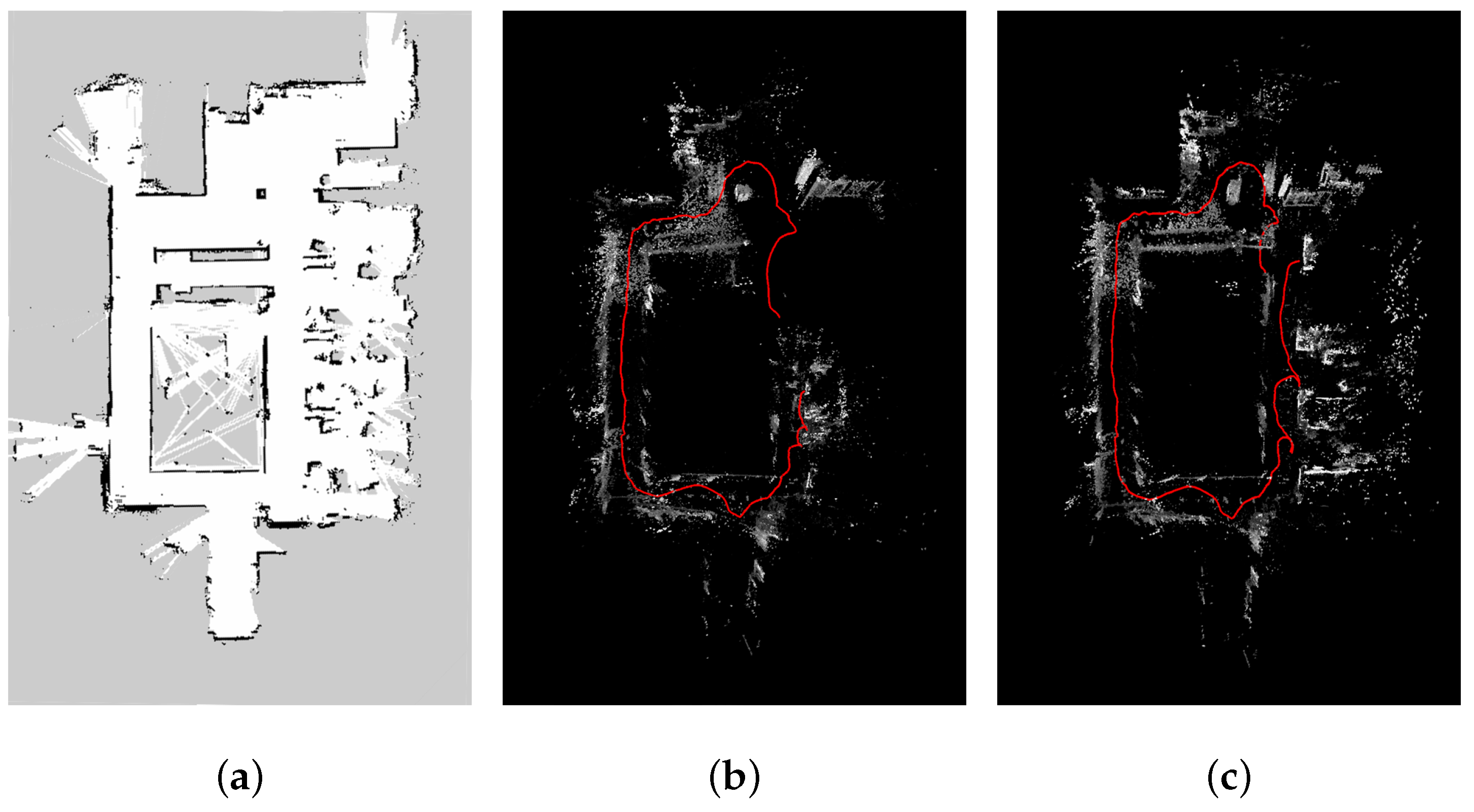

Figure 2 shows a sample of a filtered disparity image.

In the algorithm, we downscale the input images to speed up stereo matching, and the resulting disparity map is then interpolated back to its original size. We also provide a GPU-accelerated version of the stereo matching program to ensure real-time processing.
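The text does not specify the interpolation used to restore the disparity map; the sketch below assumes nearest-neighbour upsampling by an integer factor. One detail holds for any interpolation: disparities are horizontal offsets in pixels, so after upsampling by a factor $s$ their values must also be multiplied by $s$. Marking invalid pixels with negative values is a hypothetical convention.

```python
def upsample_disparity(disp, scale):
    """Nearest-neighbour upsampling of a disparity map by an integer factor.

    Valid disparities (>= 0) are multiplied by `scale` because they are
    horizontal offsets in pixels; negative values are treated as an
    "invalid" marker and copied unchanged."""
    out = []
    for row in disp:
        big_row = [(d * scale if d >= 0 else d) for d in row for _ in range(scale)]
        out.extend(list(big_row) for _ in range(scale))
    return out
```

A bilinear variant would follow the same rule: interpolate the valid disparities, then multiply them by the scale factor.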

2.6. Feature Points Mapping

We use ORB features [17] for feature point mapping between frames. ORB features are robust to rotation and illumination changes, so they suit our purpose. We compute the feature point mapping between the previous frame and the current frame, and also between the left and right frames of the same frame pair. As we assume that the left and right frames have the same exposure time, the brightness difference of the same point in the two frames is caused by vignetting. Because of the baseline between the two cameras, a point has different projected coordinates in the left and right cameras, so its radius to the optical center differs between them. Therefore, the two frames of one frame pair give more stable information for vignetting estimation than two frames with different exposure times.

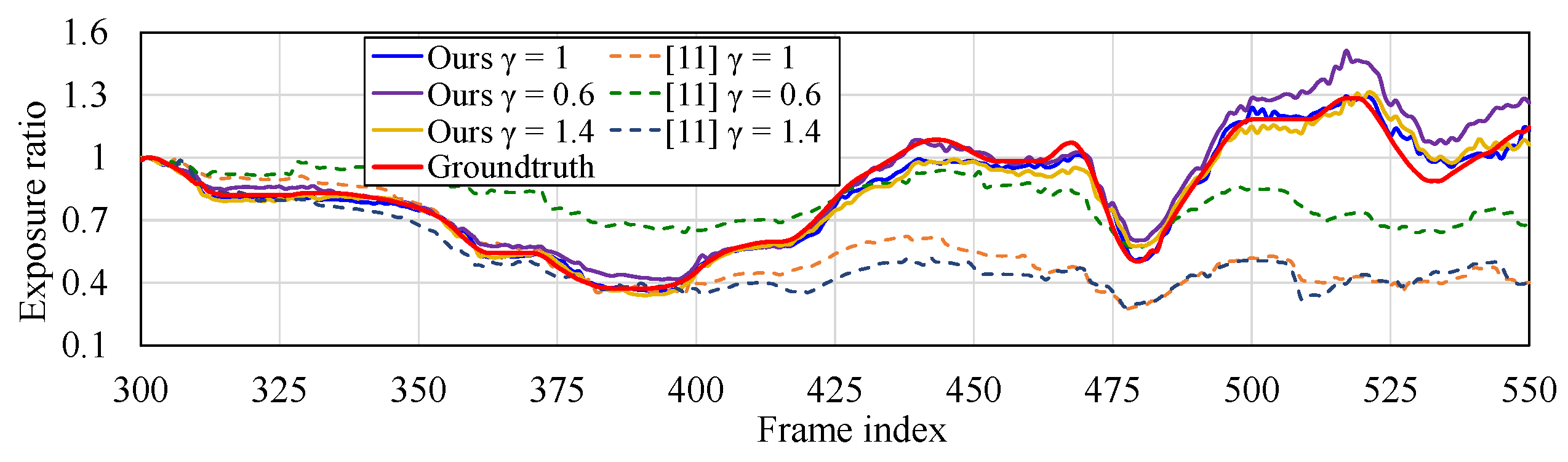

To estimate the exposure time ratio between two frames, a natural approach is to use matched feature points and compute the ratio of their pixel brightness. However, since the camera usually keeps moving during visual odometry, the location of a point in the frame changes over time, and vignetting affects the pixel value in each frame. If matched point pairs with different radii are used without an inverse vignetting function, the precision is degraded. In the early stage of estimation, the vignetting function is unknown, which makes this problem challenging for monocular camera algorithms. In a stereo camera system, we can match the previous left frame to the current frame and also match the previous right frame to the current left frame, which yields many more feature point pairs. We set a threshold on the radius difference between the two points in a pair: only pairs whose points have almost the same radius are used in the estimation. In addition, a weight coefficient inversely proportional to the radius is used to reduce the vignetting effect.
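A minimal sketch of this radius-gated, radius-weighted estimate, assuming a linear CRF ($f(l) = l$) as adopted in our method. The text does not specify how the weighted pairs are combined; here a weighted mean of per-pair brightness ratios is used, and `exposure_ratio`, `max_dr`, `eps`, and the weight $1/(\bar{r} + \epsilon)$ are hypothetical choices.

```python
def exposure_ratio(pairs, max_dr=0.02, eps=1e-3):
    """Estimate t_cur / t_prev from matched pairs (i_prev, r_prev, i_cur, r_cur).

    Only pairs whose normalized radii differ by at most max_dr are used, so the
    vignetting factor roughly cancels in each brightness ratio. Each ratio is
    weighted by 1 / (mean radius + eps), giving central points more influence.
    """
    num = den = 0.0
    for i_prev, r_prev, i_cur, r_cur in pairs:
        if abs(r_prev - r_cur) > max_dr or i_prev <= 0.0:
            continue
        w = 1.0 / (0.5 * (r_prev + r_cur) + eps)
        num += w * (i_cur / i_prev)
        den += w
    if den == 0.0:
        raise ValueError("no usable point pairs")
    return num / den
```

The `eps` term only prevents division by zero for points at the optical center; the radius gate does most of the work of suppressing vignetting.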

After estimating the exposure time ratio, we use a threshold to decide whether to use the previous frame pair to obtain more point pairs for the vignetting function. If the exposure time ratio is very close to 1, we assume that the camera did not change its exposure setting between the two frames, and the feature point pairs between the previous and current frame pairs are used to obtain more information for vignetting estimation. The brightness values of pixels neighboring the point pairs are also used to increase precision. We stop this step when enough points have been selected to compute the vignetting function. Subsequent exposure time estimation is the same as in the original direct visual odometry. In this paper, we apply our method to Stereo DSO and SO-DSO.

2.7. Energy Equation

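We assume that the CRF is $f(l) = l$, so the energy equation simplifies to

$$E = \sum_{i\in P}\sum_{j=1}^{n}\sum_{k=j+1}^{n}\left(V(\mathbf{x}_i^j)\,I_k(\mathbf{x}_i^k) - \frac{t_k}{t_j}\,V(\mathbf{x}_i^k)\,I_j(\mathbf{x}_i^j)\right)^2.$$

The ratio $t_k/t_j$ is calculated from the point pairs with similar radii to the optical center before solving the equation. Then, writing the vignetting correction function as $G = 1/V$ evaluated at the normalized radius, we change the energy equation to

$$E = \sum_{i\in P}\sum_{j=1}^{n}\sum_{k=j+1}^{n}\left(G(r_i^k)\,I_k(\mathbf{x}_i^k) - \frac{t_k}{t_j}\,G(r_i^j)\,I_j(\mathbf{x}_i^j)\right)^2,$$

where $r_i^k$ is the normalized radius of $\mathbf{x}_i^k$. Here, $G$ is the vignetting correction function, which is the unknown in the equation. We use singular value decomposition (SVD) to solve this least-squares problem.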
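The least-squares step can be sketched in plain Python. Two assumptions differ from the text and are stated here: the correction polynomial is taken as $G(r) = 1 + \sum_{m=1}^{M} a_m r^m$, and, instead of the SVD used by the authors, the scale ambiguity of the homogeneous system is removed by fixing $a_0 = 1$ and solving the normal equations with Gaussian elimination. Each pair contributes the residual $G(r_k)\,I_k - \rho\,G(r_j)\,I_j$ with a known $\rho = t_k/t_j$ (so $\rho = 1$ for left-right pairs of the same frame pair). `fit_correction` is a hypothetical name.

```python
def fit_correction(pairs, degree=2):
    """Fit G(r) = 1 + sum_{m=1..degree} a_m * r**m by linear least squares.

    pairs: iterable of (i_j, r_j, i_k, r_k, rho), where i_* are brightness
    values, r_* normalized radii, and rho = t_k / t_j is already known.
    Each pair contributes the residual G(r_k) * i_k - rho * G(r_j) * i_j,
    which assumes a linear CRF f(l) = l. Returns [a_0, a_1, ..., a_degree]
    with a_0 fixed to 1.
    """
    rows, rhs = [], []
    for i_j, r_j, i_k, r_k, rho in pairs:
        # Coefficients of a_1..a_degree in the residual.
        rows.append([i_k * r_k ** m - rho * i_j * r_j ** m for m in range(1, degree + 1)])
        # The a_0 = 1 term is known, so it moves to the right-hand side.
        rhs.append(-(i_k - rho * i_j))
    n = degree
    # Normal equations (A^T A) a = A^T b.
    ata = [[sum(row[p] * row[q] for row in rows) for q in range(n)] for p in range(n)]
    atb = [sum(row[p] * b for row, b in zip(rows, rhs)) for p in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        piv = max(range(col, n), key=lambda k: abs(ata[k][col]))
        ata[col], ata[piv] = ata[piv], ata[col]
        atb[col], atb[piv] = atb[piv], atb[col]
        for k in range(col + 1, n):
            factor = ata[k][col] / ata[col][col]
            for c in range(col, n):
                ata[k][c] -= factor * ata[col][c]
            atb[k] -= factor * atb[col]
    # Back substitution.
    a = [0.0] * n
    for k in range(n - 1, -1, -1):
        a[k] = (atb[k] - sum(ata[k][c] * a[c] for c in range(k + 1, n))) / ata[k][k]
    return [1.0] + a
```

An SVD-based solver would instead take the right singular vector of the full design matrix (including the $a_0$ column) for the smallest singular value and rescale it so that $a_0 = 1$. In the noise-free case both approaches give the same coefficients; with noisy data they can differ slightly because they normalize the scale differently.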