Smart Search System of Autonomous Flight UAVs for Disaster Rescue

Abstract

1. Introduction

- Autonomous UAV Search: the smart search system enables UAVs to autonomously locate and approach distressed people without the help of the ground control system (GCS). When a UAV takes off, its first predicted position is inaccurate and can be very far from the survivor’s actual position. As the drone flies, it accumulates RSSI (Received Signal Strength Indicator) and ToA (Time of Arrival) data from the survivor’s signal and gradually adjusts its flight direction towards the survivor, which yields an increasingly accurate location estimate.

- Quick and Smart Tracking Algorithm: we present a smart search system based on a genetic algorithm that detects changes in the signal strength between the distressed person and the drones. The proposed system is customized to the disaster site environment to improve tracking accuracy. Specifically, combining RSSI and ToA data in consideration of the flight environment makes it possible to filter out noise effectively and obtain a more accurate distance estimate.

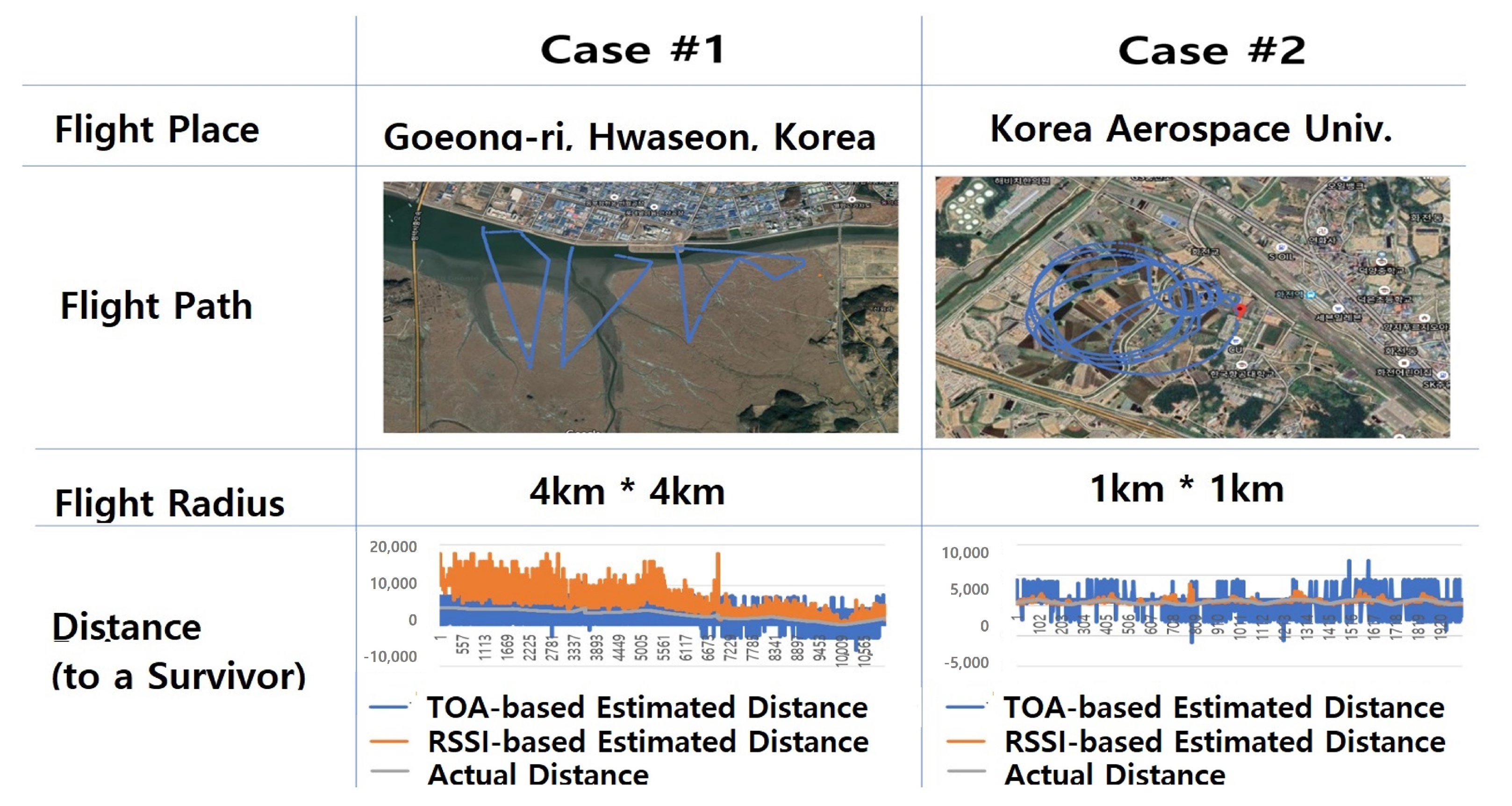

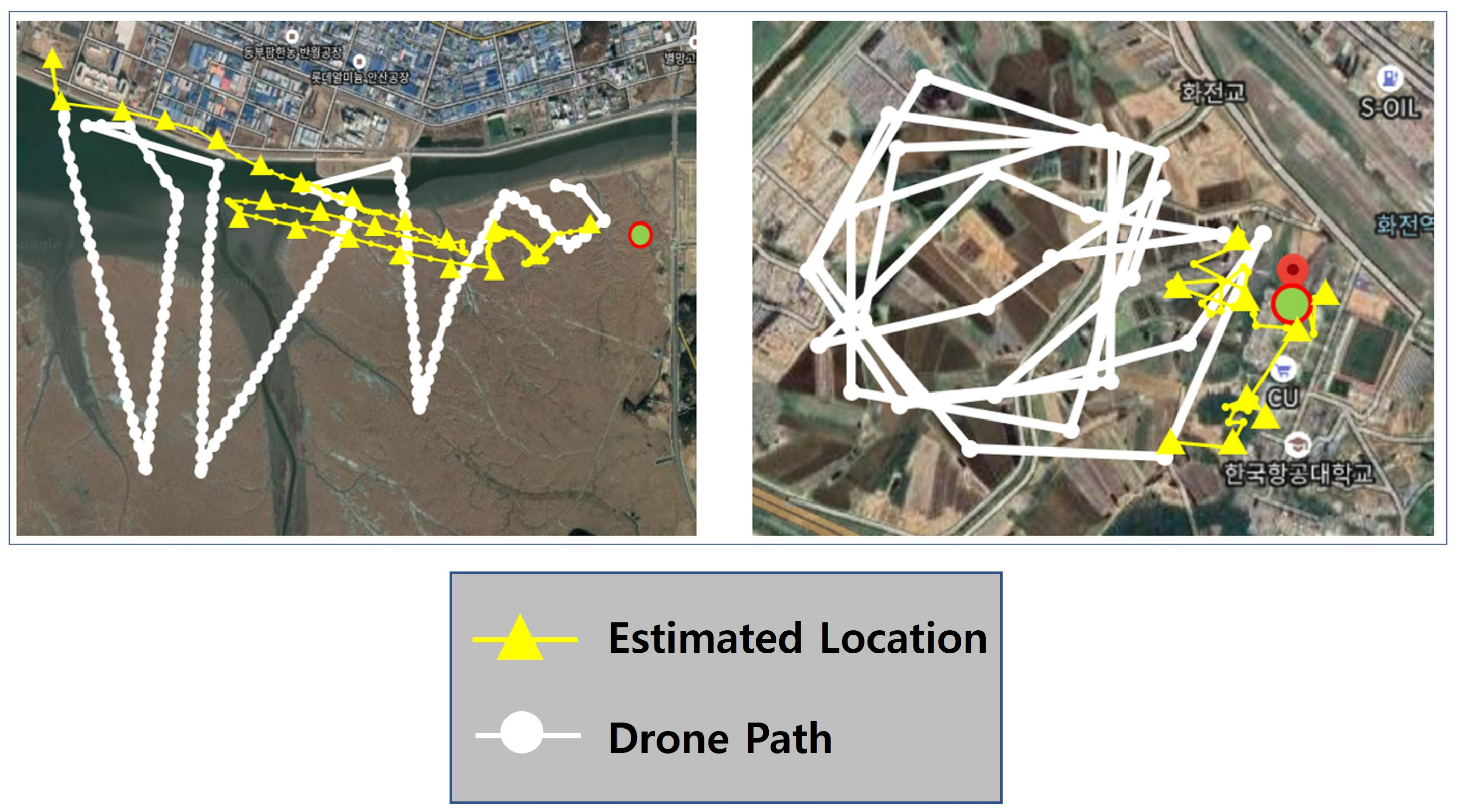

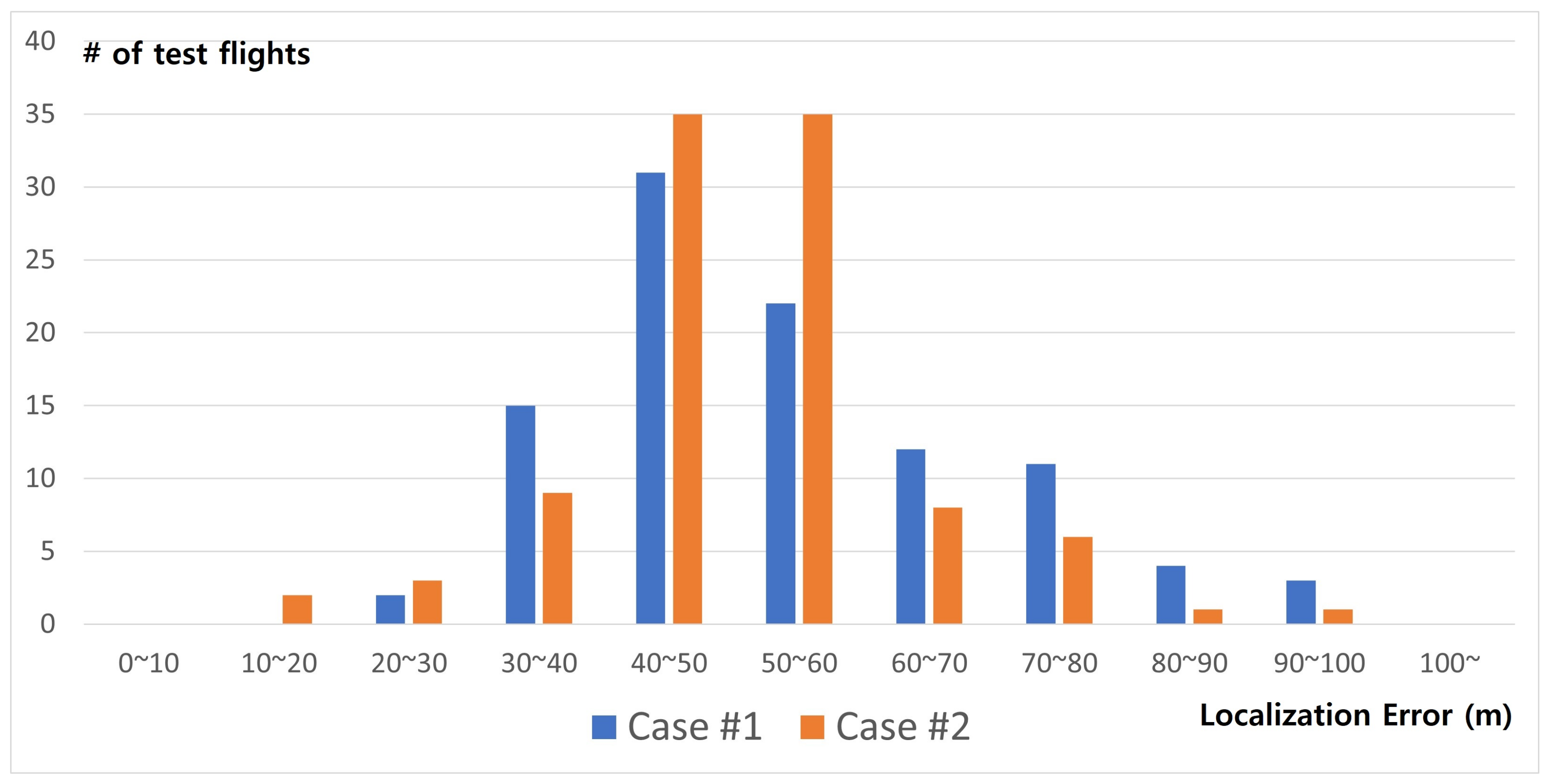

- Real-World Case Studies: we performed flight search tests in two real-world test cases to verify the performance of the proposed survivor location tracking system. We operated fixed-wing drones over sites of about 4 km × 4 km and 1 km × 1 km to search for a survivor, and compared the estimated position of the survivor with the actual position.

2. Related Works

2.1. Autonomous Path Planning

2.2. Localization Algorithm

3. Proposed Smart Search System

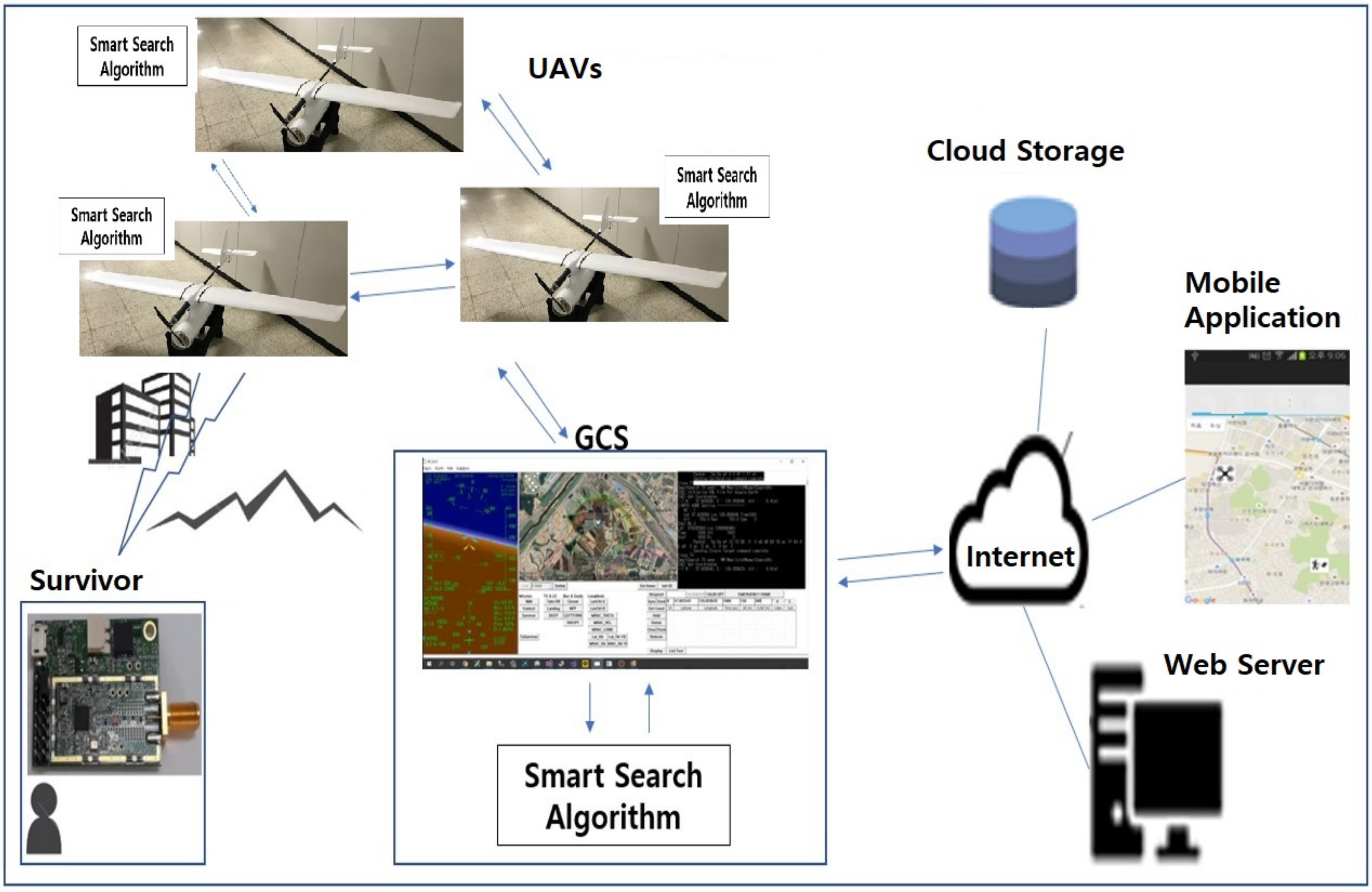

3.1. Overall Architecture of the UAV-Based Smart Search System

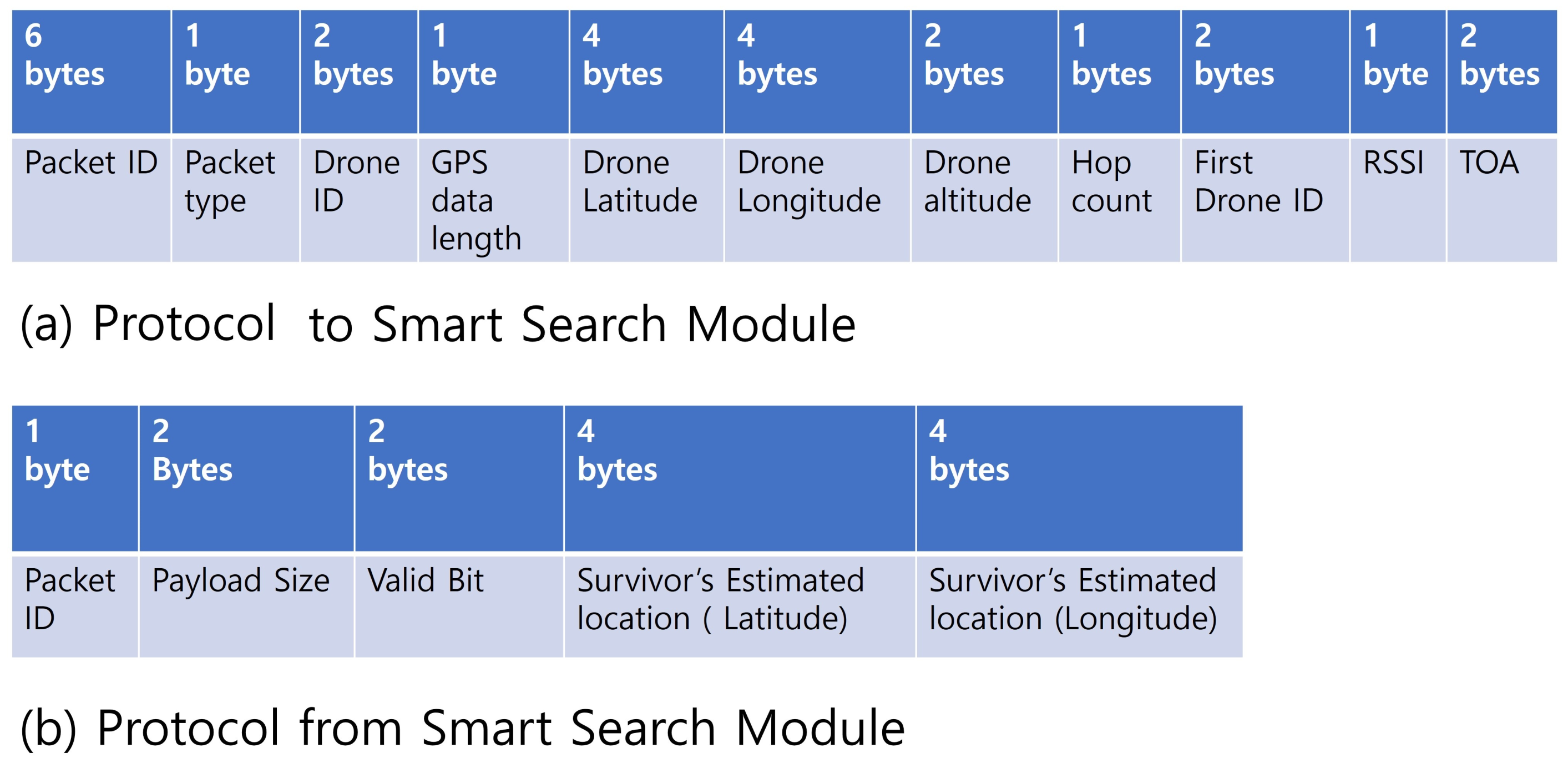

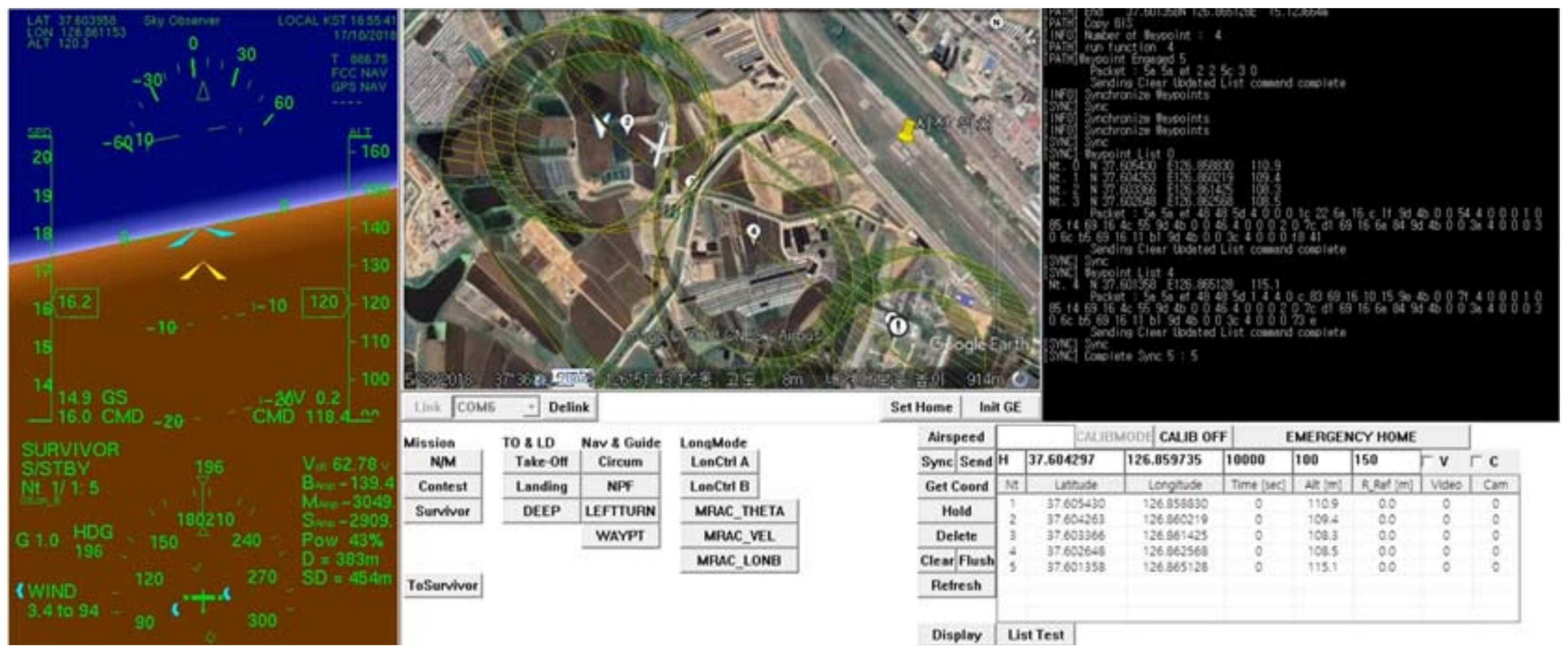

3.2. Communication among UAVs and Ground Control System

1. Each drone takes off and flies in a random direction until it catches a signal from a survivor. We call this stage a random flight.

2. Once a radio signal from the survivor’s radio module is detected, the UAV shares the information with other UAVs and the GCS over the mesh network using the OLSR routing protocol.

3. Each agent in the UAVs and/or the GCS launches a Smart Search module and passes it the shared data about the survivor.

4. The Smart Search module estimates the survivor’s location, if possible. If the obtained data are insufficient for localization, it returns the packet tagged as invalid.

5. If the Smart Search module returns valid location information for a survivor, the GCS and UAVs switch to the search-mission stage and generate an obstacle-avoiding path to the estimated location.

6. The UAVs autonomously fly through the waypoints towards the estimated location, avoiding obstacles.

7. As a UAV gets closer to the survivor, it obtains more accurate signal information. It re-sends this information to the Smart Search module, which updates the survivor’s location (then go to Step 3).
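The procedure above can be read as a small two-stage state machine. The following Python sketch is only an illustration under stated assumptions: the names `Stage`, `Estimate`, and `next_stage` are hypothetical and do not come from the paper, and the behaviour when a signal is lost during a search mission is not described in the text, so the sketch simply keeps the current stage.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional, Tuple


class Stage(Enum):
    RANDOM_FLIGHT = auto()   # Step 1: no survivor signal yet
    SEARCH_MISSION = auto()  # Steps 5-7: flying towards an estimated location


@dataclass
class Estimate:
    """Result returned by the Smart Search module (hypothetical structure)."""
    valid: bool                                   # False models the "invalid" tag of Step 4
    position: Optional[Tuple[float, float]] = None


def next_stage(stage: Stage, signal_detected: bool,
               estimate: Optional[Estimate]) -> Stage:
    """Return the stage a UAV is in after one pass through the steps."""
    if not signal_detected:
        return stage  # nothing new to share; keep the current behaviour (assumption)
    if estimate is not None and estimate.valid:
        return Stage.SEARCH_MISSION  # Step 5: valid location, switch to the mission
    return stage  # Step 4: not enough data yet; wait for more beacons


stage = Stage.RANDOM_FLIGHT
stage = next_stage(stage, signal_detected=True, estimate=Estimate(valid=False))
stage = next_stage(stage, signal_detected=True,
                   estimate=Estimate(valid=True, position=(100.0, 50.0)))
```

Each later call with a valid estimate leaves the UAV in the search mission, which mirrors the loop from Step 7 back to Step 3.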

3.3. Smart Search Algorithm

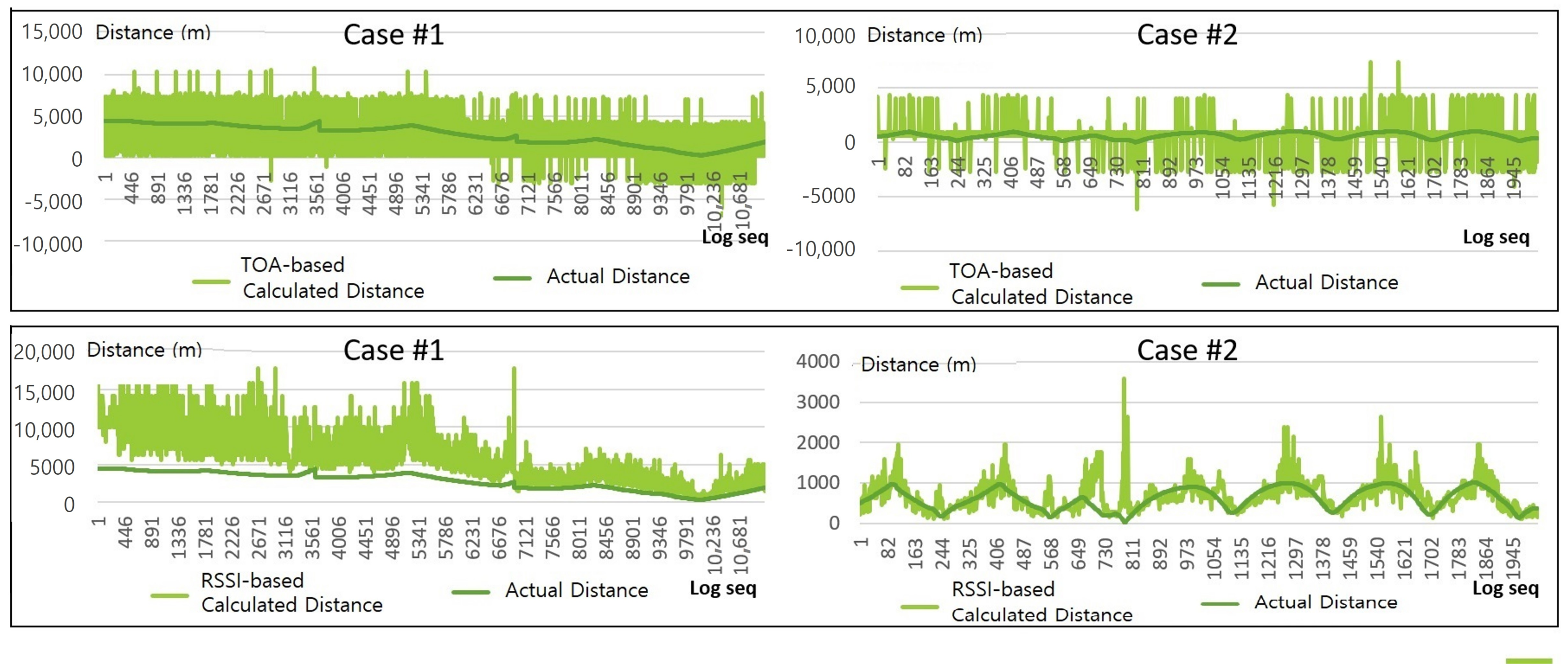

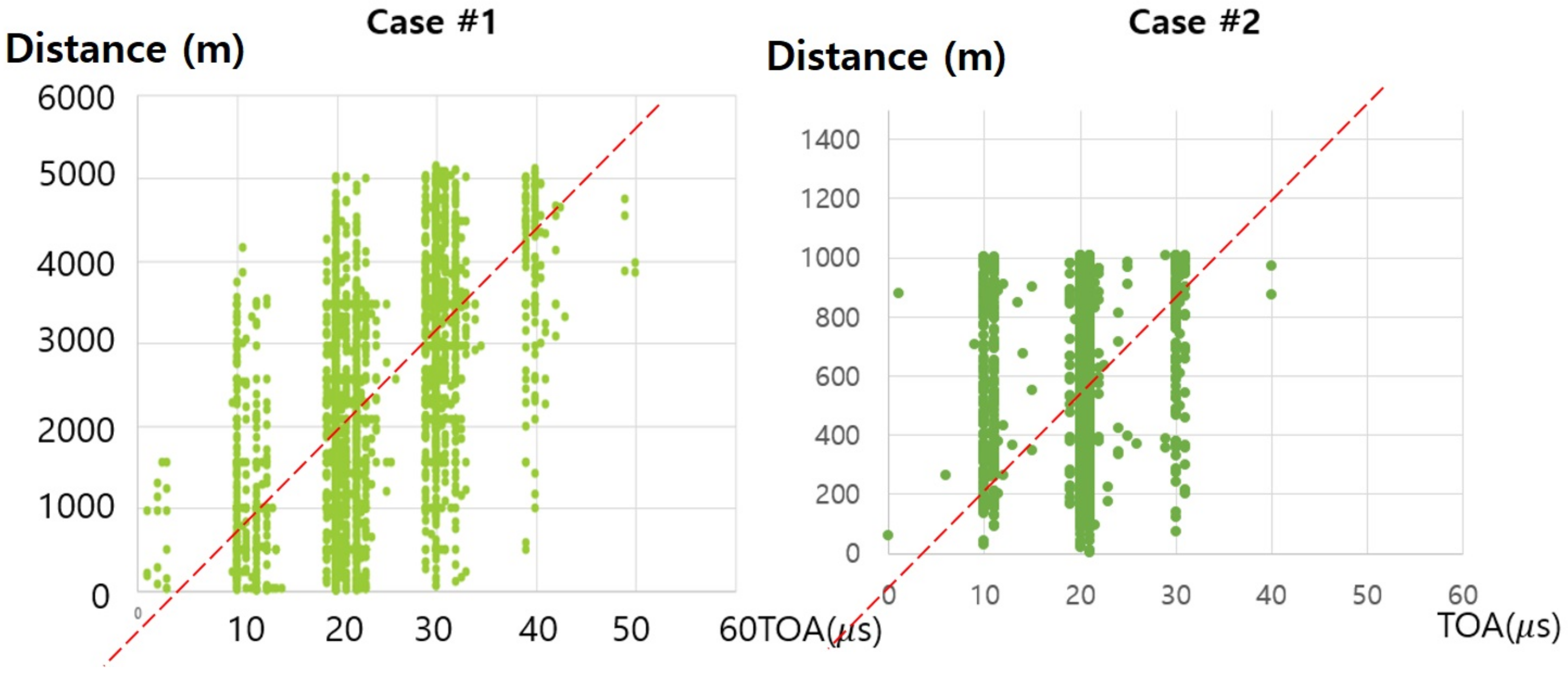

- This paper considers ToA as well as RSSI to estimate the distance to a survivor because the target area is much larger than in previous work. RSSI is more susceptible to noise over long distances, so we consider ToA along with RSSI. Depending on how far the UAVs are from the survivor, we dynamically adapt the relative weighting of ToA and RSSI. Specifically, we estimate the position of the survivor by combining the RSSI and ToA information of radio signals, which keep changing in real time as the drones move. A detailed discussion is presented in Section 4.2.

- We re-designed a genetic-based algorithm by re-defining the mutation and crossover procedures. We also developed a new way to select individuals in the genetic algorithm, as explained in Section 3.3.2.

- The proposed algorithm enables a UAV to fuse localization data obtained from other UAVs: data from multiple UAVs are considered together to form a set of potential locations of the survivor.

- The input values required to execute this algorithm are the drone’s latitude, longitude, and altitude, because we are dealing with a 3D zone in this paper.

- The proposed genetic-based Smart Search module is verified in, and fine-tuned to, its flight environments.
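As a rough illustration of distance-dependent weighting of RSSI and ToA, the sketch below converts each measurement to a distance and blends the two. Everything specific in it is an assumption rather than the paper's method: the log-distance path-loss model, the reference power `rssi_at_1m_dbm`, the exponent `path_loss_exponent`, the one-way time-of-flight input, and the weighting rule `toa_weight` with its `crossover_m` parameter are all hypothetical choices made for the example.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def rssi_to_distance(rssi_dbm, rssi_at_1m_dbm=-40.0, path_loss_exponent=2.0):
    """Log-distance path-loss model: d = 10 ** ((P_1m - RSSI) / (10 * n))."""
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


def toa_to_distance(time_of_flight_s):
    """Distance covered by a radio signal during a one-way time of flight."""
    return SPEED_OF_LIGHT * time_of_flight_s


def toa_weight(distance_hint_m, crossover_m=500.0):
    """Assumed rule: trust ToA more as the distance grows (0 near, towards 1 far)."""
    return distance_hint_m / (distance_hint_m + crossover_m)


def fuse_distance(rssi_dbm, time_of_flight_s):
    """Blend the two distance estimates with a distance-dependent weight."""
    d_rssi = rssi_to_distance(rssi_dbm)
    d_toa = toa_to_distance(time_of_flight_s)
    w = toa_weight(d_toa)
    return w * d_toa + (1.0 - w) * d_rssi


# Example: a weak signal and a time of flight of 5 microseconds (about 1.5 km).
fused = fuse_distance(rssi_dbm=-98.0, time_of_flight_s=5e-6)
```

Because the result is a convex combination, the fused value always lies between the RSSI-based and ToA-based distances; in practice the weighting rule would have to be calibrated against flight data.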

3.3.1. Distance Estimation

3.3.2. Genetic-Based Localization Algorithm

Algorithm 1. Genetic-Based Localization Pseudo Code.

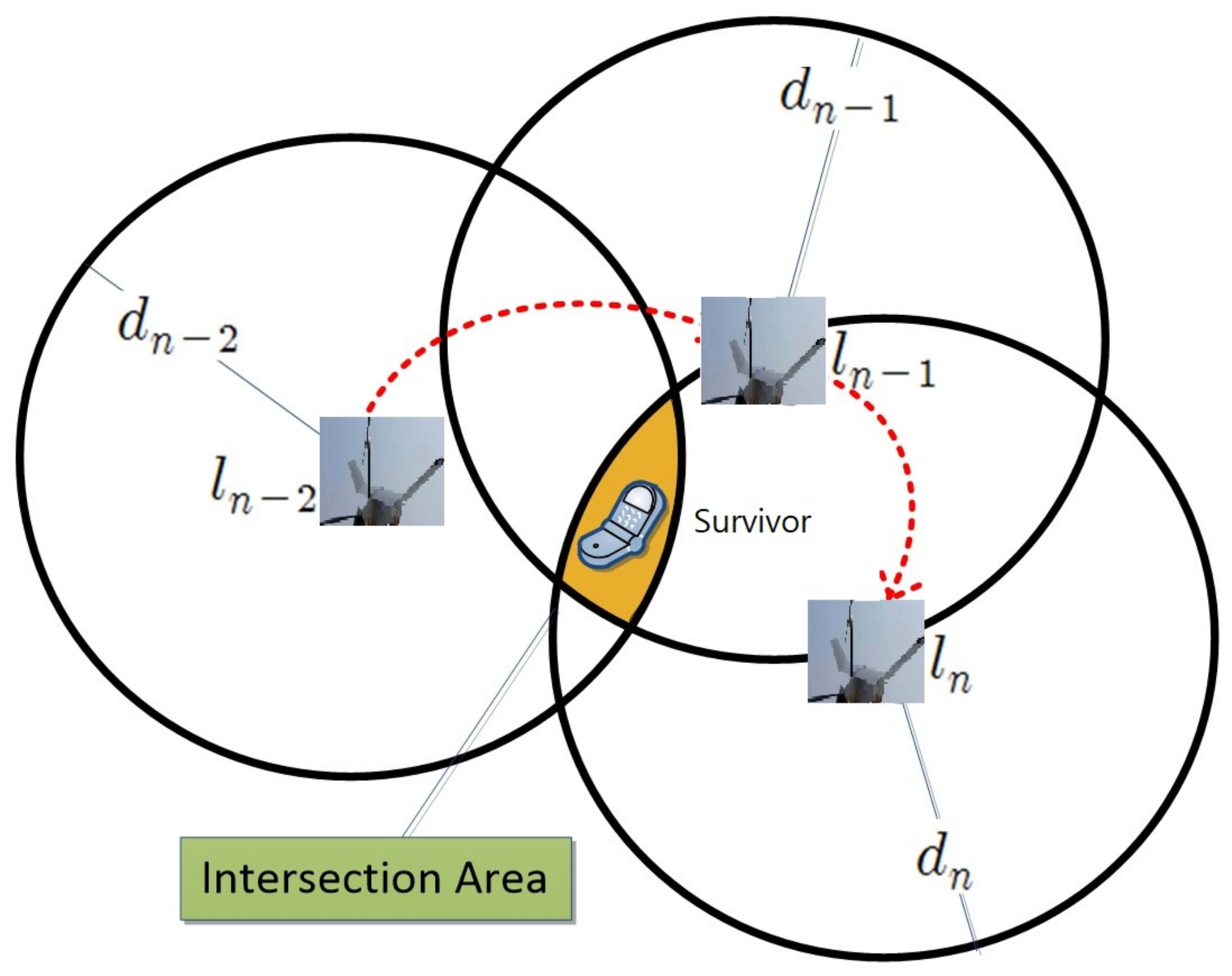

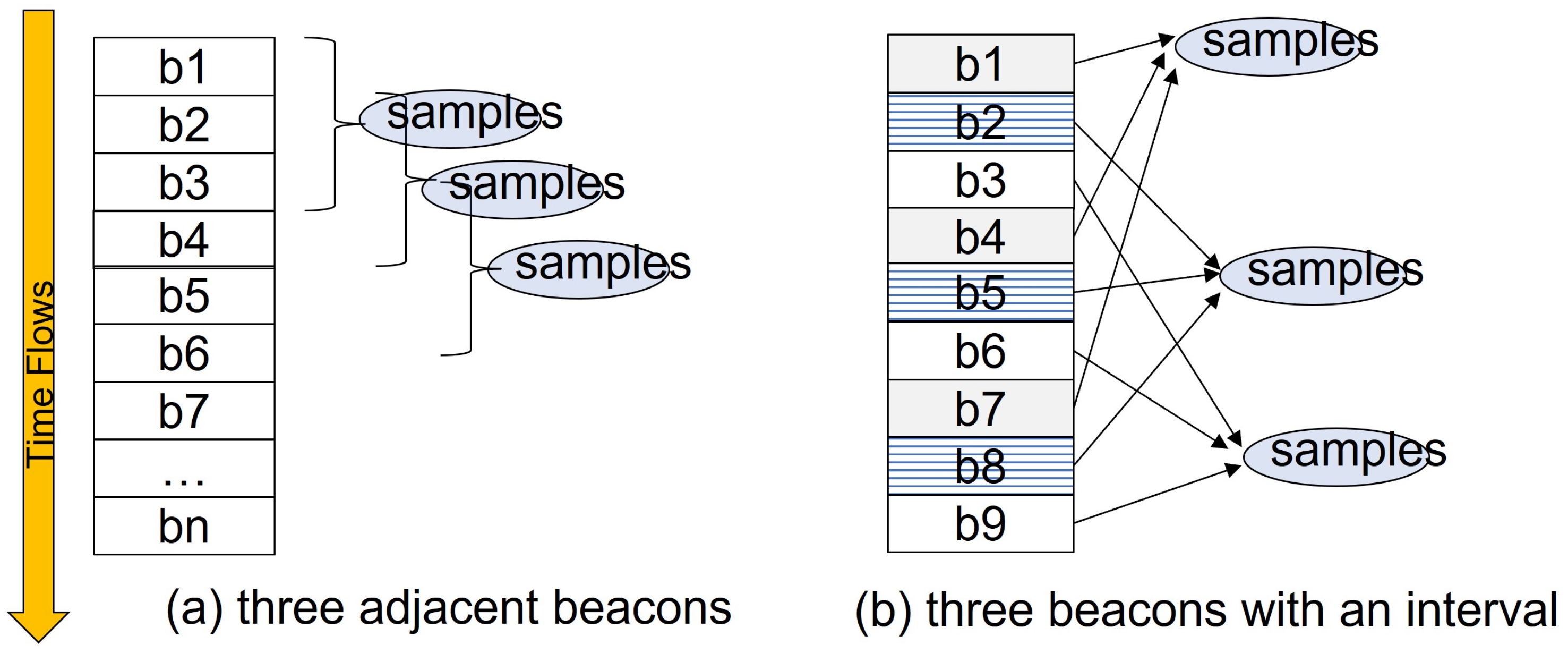

- Collect Beacons(): The Smart Search module in a UAV gathers its own beacons as well as beacons from other UAVs. There are two different ways to select three of these collected beacons. If the positions of the circles they form are close to each other, the intersection area might be too large and the estimated positions could not be narrowed down. Thus, the proposed genetic-based algorithm uses the second method to select beacons, so that the selected circles keep a proper distance from each other to form an intersection area.

- Obtain Samples(): The Smart Search module draws three circles, each corresponding to one of the three beacons selected in the previous step. As shown in Figure 5, the three circles might create an intersection area rather than a single exact point because of errors in the distances calculated from RSSI and ToA. Therefore, the location should be estimated based on the intersection area, not a point of intersection. The algorithm then randomly extracts a certain number of sample points from the intersection area and stores them as the sample set. Note that if the intersection area is much too large or too small, we skip the genetic process and wait for the next beacons.

- Form Population(): The algorithm now selects a set of chromosomes c from the sample set using a fitness function. The fitness value of each chromosome is calculated from the distance between the chromosome and the drone and the radius of the corresponding circle. In this work, we design the fitness function so that the closer the distance between the drone and the chromosome is to the distance estimated from the RSSI and ToA signals, the higher the fitness. The selected chromosomes form the population of a generation.

- Crossover() and Mutation(): The population then undergoes inter-chromosome crossover and mutation, each applied with a certain probability, to constitute a generation. In the crossover equations of Algorithm 1, two selected chromosomes are combined using a random value in the range [0, 1]. Mutation is then applied to the two offspring generated by crossover, using random numbers in the range [0, minimum radius of the three circles]. These randomized crossover and mutation operations are performed to avoid local optima.

- Termination(): The offspring produced by the above crossover and mutation operations are added to the population, and the two worst-fitted chromosomes are removed from it. This process corresponds to one generation. If the best fitness value in the population is greater than a threshold, or the number of generations exceeds the maximum generation threshold, we terminate the process. Otherwise, we repeat the above process.
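The five stages above can be sketched in Python as follows. This is a minimal planar (x, y in metres) illustration, not the authors' implementation: the exact fitness, crossover, and mutation formulas are not reproduced in this text, so the sketch assumes an inverse-error fitness, an arithmetic (blend) crossover, and an additive mutation offset drawn from [0, minimum radius]. The function names, the parent-selection rule, `tol`, and `fit_threshold` are hypothetical; the default population size, probabilities, and generation limit follow the parameter table of the paper.

```python
import math
import random


def fitness(c, beacons):
    """Higher when the distance from c to each UAV matches the estimated radius."""
    err = sum(abs(math.dist(c, pos) - r) for pos, r in beacons)
    return 1.0 / (1.0 + err)  # assumed form of the fitness function


def sample_intersection(beacons, n, rng, tol=1.1, max_tries=200_000):
    """Rejection-sample up to n points inside all circles (radii inflated by tol)."""
    lo_x = max(p[0] - r * tol for p, r in beacons)
    hi_x = min(p[0] + r * tol for p, r in beacons)
    lo_y = max(p[1] - r * tol for p, r in beacons)
    hi_y = min(p[1] + r * tol for p, r in beacons)
    if lo_x > hi_x or lo_y > hi_y:
        return []  # the circles cannot overlap
    samples = []
    for _ in range(max_tries):
        c = (rng.uniform(lo_x, hi_x), rng.uniform(lo_y, hi_y))
        if all(math.dist(c, p) <= r * tol for p, r in beacons):
            samples.append(c)
            if len(samples) == n:
                break
    return samples


def localize(beacons, pop_size=300, p_cross=0.9, p_mut=0.1,
             max_gen=300, fit_threshold=0.95, seed=0):
    """Return an estimated (x, y) position, or None if there are too few samples."""
    rng = random.Random(seed)
    population = sample_intersection(beacons, pop_size, rng)
    if len(population) < 4:
        return None  # intersection too small: wait for the next beacons
    r_min = min(r for _, r in beacons)

    def score(c):
        return fitness(c, beacons)

    for _ in range(max_gen):
        population.sort(key=score, reverse=True)
        if score(population[0]) > fit_threshold:
            break
        # Assumed selection rule: two distinct parents from the fitter half.
        p1, p2 = rng.sample(population[: len(population) // 2], 2)
        o1, o2 = p1, p2
        if rng.random() < p_cross:
            a = rng.random()  # blend factor in [0, 1)
            o1 = (a * p1[0] + (1 - a) * p2[0], a * p1[1] + (1 - a) * p2[1])
            o2 = ((1 - a) * p1[0] + a * p2[0], (1 - a) * p1[1] + a * p2[1])
        offspring = []
        for o in (o1, o2):
            if rng.random() < p_mut:
                o = (o[0] + rng.uniform(0, r_min), o[1] + rng.uniform(0, r_min))
            offspring.append(o)
        # Add the offspring, then drop the two worst-fitted chromosomes.
        population = sorted(population + offspring, key=score, reverse=True)[:-2]
    return max(population, key=score)


# Three beacons: (UAV position in metres, estimated distance to the survivor).
truth = (100.0, 50.0)
uavs = [(0.0, 0.0), (300.0, 0.0), (0.0, 300.0)]
beacons = [(p, math.dist(p, truth)) for p in uavs]
estimate = localize(beacons)
```

With noise-free radii, as in this usage example, the estimate should land close to `truth`; with real RSSI and ToA errors the intersection area grows and the result depends on how the fitness threshold and beacon selection are tuned.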

3.4. Overall Procedure of Operations

4. Case Studies

4.1. Test Environment

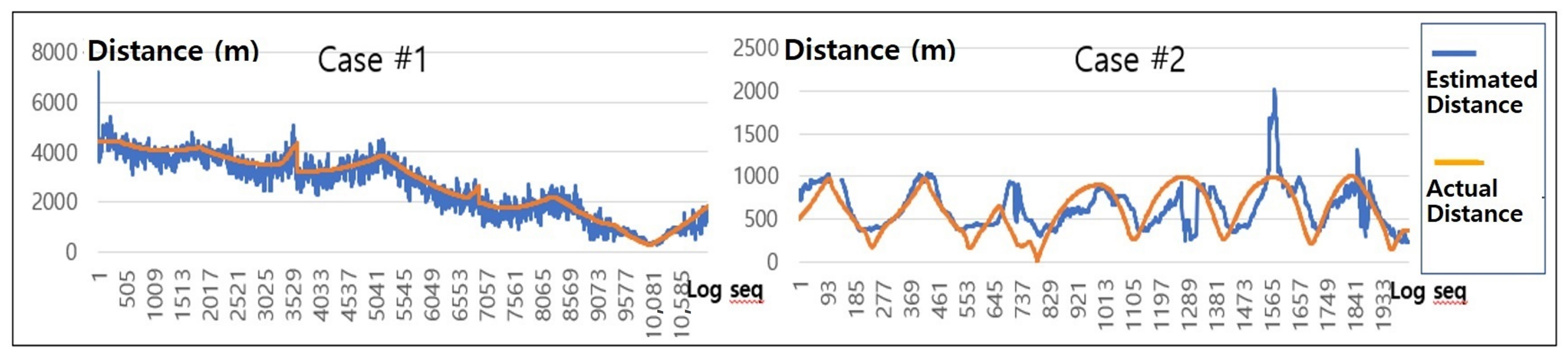

4.2. Results: Distance Estimation

4.3. Results: Survivor Tracking

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| GCS | Ground Control System |
| RSSI | Received Signal Strength Indicator |
| VIN | Value Iteration Network |
| DQN | Deep Q Networks |
| NLOS | Non Line-of-Sight |
| GA | Genetic Algorithm |
| ToA | Time of Arrival |
| CSMA | Carrier Sense Multiple Access |
| OLSR | Optimized Link State Routing |


| Parameter/Measure | Type 1 | Type 2 |
|---|---|---|
| RF | Access-L | |
| Distance (km) | 4.6 | |
| PHY data rate (kbps) | 9.6 | |
| TX power (dBm) | 14 (25 mW) | |
| RX LNA gain (dB) | 0 | |
| Antenna | dipole 3.0 dBi | |
| # of retransmissions | 1 | 4 |
| # of bytes per packet | 20 | 20 |
| Success rate (%) | 90 | 98 |
| Estimated MAC throughput (kbps) | 4 | 5 |
| RSSI @RX (dBm) | −98 | −98 |

| Notations | Definitions |
|---|---|
| | location of UAV |
| | the distance from a survivor to the UAV calculated |
| | beacon composed of |
| | randomly extracted survivor’s locations out of intersection area |
| c | chromosome corresponding to a survivor’s potential location |
| | distance between the chromosome and a UAV’s location |

| Parameters | Values |
|---|---|
| Population size | 300 |
| Crossover probability | 0.9 |
| Mutation probability | 0.1 |
| Threshold of MaxGeneration | 300 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oh, D.; Han, J. Smart Search System of Autonomous Flight UAVs for Disaster Rescue. Sensors 2021, 21, 6810. https://doi.org/10.3390/s21206810
