Abstract

To capture scientific evidence in elderly care, we proposed a user-defined facial expression sensing service in our previous study. Because the time-series data of feature values grow rapidly as the measurement time increases, it can be difficult to find points of interest, especially when detecting changes in the facial expressions of elderly people, many of whom show only micro facial expressions due to facial wrinkles and aging. The purpose of this paper is to implement a method to efficiently find points of interest (PoI) from the facial feature time-series data of the elderly. The proposed method incorporates the concept of changing point detection into the analysis of feature values, to automatically detect large fluctuations or changes in the trend of feature values and thereby detect the moments when the subject’s facial expression changed significantly. Our key idea is to introduce the novel concept of a composite feature value to achieve higher accuracy and to apply change-point detection to it as well as to single feature values. Furthermore, the PoI finding results from the facial feature time-series data of young volunteers and the elderly are analyzed and evaluated. The experiments show that the proposed method can capture the moments of large facial movements even for people with micro facial expressions and can obtain information that serves as a clue to investigate their response to care.

1. Introduction

Japan is facing a super-aged society [1]. The number of elderly people who need care is increasing, which leads to a chronic lack of care resources. Under this circumstance, the Japanese Government has declared the practice of scientific long-term care as a national policy [2,3]. It aims at optimal use of care resources by corroborating the effect of care with scientific evidence. To achieve scientific long-term care, it is essential to evaluate the effect of care objectively and quantitatively. As an objective metric to quantify the care effect, we focus on facial expressions [4,5,6]. Facial expressions give useful hints for interpreting the emotions of a person under care. As a practical example, there is a systematic methodology called facial expression analysis [7]. To analyze one’s face and emotions automatically, emerging image processing technologies can be used. In particular, in recent years, owing to powerful machine learning technologies, various companies have provided cognitive application programming interfaces (APIs) for faces [8,9], which automatically recognize and analyze faces within a given picture. These APIs produce values of typical emotions such as happiness and sadness, which would be helpful for the evaluation of the care effect. In actual medical and care scenes, however, subtle changes of a face that may not be covered by the existing APIs have to be detected. This is because the facial expressions of elderly people under care tend to be weakened due to functional and/or cognitive impairment. Moreover, the emotion analysis conducted by the existing APIs is a black box [10]. Therefore, it is difficult for caregivers to understand why and how the value of the emotion is measured. With a user-defined facial expression sensing service, analysis of subtle facial changes and explainable emotion analysis become possible, and the user’s expertise in emotion analysis can also be improved.

The main contribution of this paper is to implement a method to efficiently find points of interest from the facial feature time-series data of the elderly. In the existing approach with the facial expression sensing service, the time-series data of feature values grow greatly as the measurement time increases, so a method to efficiently find points of interest in facial expression changes is strongly needed, especially for evaluating elderly people who show only micro facial expressions due to facial wrinkles and aging. The obtained data can be analyzed from two perspectives: the macro perspective of how the subject’s emotions changed throughout the care and the micro perspective of when the subject’s emotions changed during care. From the macro perspective, it is possible to consider how well the care satisfied the subject and what care is suitable for the subject; from the micro perspective, it is possible to infer what kinds of conversations or contents the subject responded to positively. This kind of analysis is useful for evaluating the effectiveness of care and for providing optimal care according to the preferences of each subject. However, since the facial expression sensing service measures feature values on a second-by-second basis, the time-series data obtained can be enormous, and it may be difficult to find a specific Point of Interest (PoI) [11,12,13], especially when analyzing them from a micro perspective.

The previous version of this paper was published as a conference paper [14]. The most significant change made in this version is the addition of a method for extracting the points of interest from the facial feature time-series data more efficiently. In the proposed method, we regard the moments when the subject’s expression changed significantly as PoIs. To find them, we adopt the concept of Changing Point Detection [15,16] and automatically detect changing points, which indicate the moments at which the feature values fluctuated significantly or the trend of the values changed, and thereby find the moments at which the facial expressions of the subject changed significantly. This makes it possible to easily find PoIs from the time-series data and to provide data that contribute to the analysis from the micro perspective described earlier. Based on the proposed method, test data for evaluation created from the subject’s face and the data measured from the video of subjects receiving care are analyzed using Change Finder (i.e., a changing point detection method [17]). Moreover, the effect of the changing point detection method is discussed by comparing its results with the actual behavior. In addition, by creating a composite feature value [18], which is a combination of multiple feature values rather than a single feature value, and applying changing point detection to it as well, we attempted to obtain information that is closer to the fluctuations of actual facial expressions.

The remainder of this paper is organized as follows. Section 2 describes the preliminaries and the previous study on developing “Face Emotion Tracker (FET)” with a cognitive API. Section 3 introduces the related work on Changing Point Detection and Change Finder from recent years. Section 4 provides a complete description of the proposed method. The preliminary experiment and the actual experiment are presented in Section 5 and Section 6, respectively, followed by conclusions in Section 7.

2. Preliminary and Previous Study

As described in Section 1, to evaluate the effect of care quantitatively and objectively is important for scientific long-term care. However, the evaluation methods of care tend to depend on a subjective scale, such as observations and questionnaires. Therefore, it is difficult to use the obtained data as scientific evidence. Moreover, these assessment methods place a heavy burden on care evaluators, care recipients, or both. In addition, since scientific care is premised on using large-scale data, efficient data collection is required. In the past, to measure care effects objectively, an experiment that measured the changes in the facial expressions of people undergoing care was conducted [19]. In this experiment, facial features such as the height of eyebrows and the opening of eyes were measured from images based on the method called “facial expression analysis” of P. Ekman et al. [7] and so on. However, they measured the features from the recorded video manually. Moreover, in the report, they concluded that “… Currently, we have not generalized this study successfully because of the limited number of cases. … To establish an objective evaluation method, we have to collect and analyze both the data of the objective people and the caregiver more”. From the above, to practice scientific long-term care, it is important to develop a computer-assisted service that enables us to automatically collect data that contribute to the quantitative assessment of care.

With the rapid technological growth of cloud computing, various tasks that were previously executed on the edge side can now be processed by cloud services [20]. Against this background, various services utilizing artificial intelligence, which has become very popular in recent years, are currently available as cloud services. One actual example of a cloud service using artificial intelligence is cognitive services. Cognitive services extract useful information from visual [21], sound [22], linguistic [23], and knowledge data [24] by analyzing them and enable computers to recognize these types of information. Using cognitive services, cognitive functions that were previously difficult for computers can be performed. Many public cloud services provide these kinds of services as “cognitive API” [25]. For example, cognitive APIs for vision can extract various information from an input image [26,27,28]. Cognitive APIs can automatically quantify emotions, which scientific long-term care might utilize.

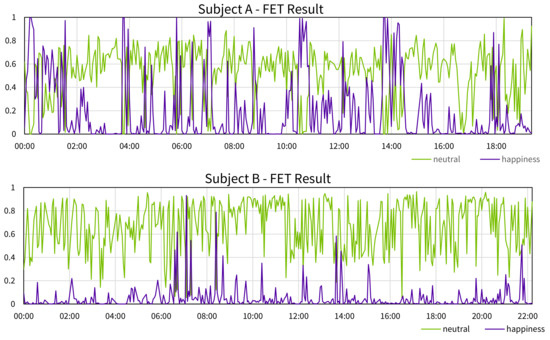

In the past, our research group developed a real-time emotion analysis service “Face Emotion Tracker (FET)” using a cognitive API and attempted a quantitative evaluation of care [29]. In this previous study, care using a virtual agent [30] was conducted for 5 elderly people living in an elderly facility. During care, FET captures pictures of a subject and sends them to a cognitive API (Microsoft Face API [31]) to analyze the emotion. A part of the result of the FET experiment is shown in Figure 1 as time-series graphs (some outliers have been eliminated). FET outputs 8 kinds of emotions (“anger”, “contempt”, “disgust”, “fear”, “happiness”, “neutral”, “sadness”, “surprise”) as probabilities from 0 to 1; here, we pay attention to the values of “neutral” and “happiness”. Subject A was relatively expressive, and the values of “neutral” and “happiness” appear alternately during care. Subject B was 99 years old, and the change in facial expression was not so apparent because of the decline of facial muscles. As a result, in contrast to subject A, only “neutral” was observed at almost all timings. However, the accompanying person who observed the subjects judged that subject B felt better and better as the care progressed. Therefore, there was a gap between the obtained results and the actual state of the subject. Thus, the reliability of the result of emotion analysis with a cognitive API may be degraded depending on the characteristics of the subject.

Figure 1.

A part of the result of the FET experiment.

When observing the facial expressions of care recipients, we often encounter people whose facial expressions are weak relative to their actual emotions. The factors involved include aging, dementia [32], the sequelae of stroke [33], Amyotrophic Lateral Sclerosis (ALS) [34], Parkinson’s disease [35], and so on. Hence, some measures are needed. In addition, the cognitive API that FET used is built with machine learning technology, so the process by which a result is output from an input cannot be followed. As a result, we may face difficulty when using the obtained data as evidence for the effect of care. To capture scientific evidence in elderly care, we regard facial expressions as an objective indicator of the effects of care, which led us to propose a user-defined facial expression sensing service in the previous study [14]. The facial expression sensing service extracts the coordinates of characteristic parts of the face (i.e., feature points) such as eyebrows, eyes, and mouth from the face image. Then, the service measures the length of the segment connecting two feature points as a feature value and records it as time-series data. By tracking the change of these values, the degree of facial expression change caused by facial movement can be measured. As a result, even when the person shows only a subtle facial expression change, the service will be able to measure and record it rather than overlook it. The aim of this paper is to contribute to explicable emotion analysis and quantitative evaluation of care by examining the degree of facial expression change as clear numerical values and inferring the emotional changes associated with the movement of facial expression.
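As an illustration of how a feature value is obtained from two feature points, the following minimal sketch computes the Euclidean distance between two landmark coordinates for each frame. The landmark names (`mouth_left`, `mouth_right`) and the frame layout are hypothetical and chosen only for this example; they are not the identifiers used by the service.

```python
import math


def feature_series(frames, point_a, point_b):
    """Return one feature value per frame: the length of the segment
    connecting two feature points (Euclidean distance)."""
    return [math.dist(frame[point_a], frame[point_b]) for frame in frames]


# Hypothetical landmark coordinates (in pixels) for three consecutive frames.
frames = [
    {"mouth_left": (100.0, 200.0), "mouth_right": (160.0, 200.0)},
    {"mouth_left": (98.0, 199.0), "mouth_right": (164.0, 199.0)},
    {"mouth_left": (95.0, 198.0), "mouth_right": (170.0, 198.0)},
]
mouth_width = feature_series(frames, "mouth_left", "mouth_right")
```

Recording such a list at each sampling time gives the one-dimensional time-series data that the later analysis works on.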

3. Related Work

Since the Facial Expression Sensing Service measures feature values on a second-by-second basis, the time-series data obtained can be enormous, and it may be difficult to find PoIs, especially when analyzing from a micro perspective. Changing point detection is a technique for detecting changing points in time-series data. It falls into two categories: offline detection and online detection. Offline detection uses a batch process on data that have been accumulated and finds changing points. In contrast, online detection judges whether each new data point is a changing point as it arrives. An example of an offline detection method is the method based on statistical tests by Guralnik and Srivastava [36]. In this method, changing points are analyzed on the assumption that all the time-series data to be analyzed are available. In this process, a time-series model, such as an autoregressive model or a polynomial regression model, has to be fitted to the data before and after every data point, which requires a large amount of computation. In this study, Change Finder [17,37,38], one of the online change-point detection methods, is adopted. Since the amount of calculation of Change Finder is linear in the number of data points, Change Finder can detect changing points quickly [39].

Change Finder is a changing point detection method characterized by an autoregressive model (AR model) and two phases of learning with smoothing. Change Finder has a mechanism for detecting changes in the model that the time-series data follow and expresses the degree of change as a changing score. The changing score is high when the degree of change is high and low when the degree of change is low. Change Finder has two AR models. The first model learns the original time-series data. The second model learns, as time-series data, the degree of change calculated from the first model. This removes changes caused by small noise. Moreover, by training the AR models with a discounting learning algorithm called the Sequentially Discounting AR model learning (SDAR) algorithm, Change Finder achieves a processing speed fast enough for online processing. Furthermore, whereas AR models require stationarity of data, Change Finder can handle non-stationary data by adopting discounting learning. Change Finder processes the one-dimensional time-series data obtained by Facial Expression Sensing Service as follows:

- Step 1:

- Change Finder trains the first AR model on the input data. In this step, using the SDAR algorithm, Change Finder updates the parameters of the AR model: the mean, covariance, variance, and autoregression coefficients.

Equation (3) is called the Yule–Walker equation. Solving this equation yields the autoregression coefficients, after which the variance is updated.

- Step 2:

- In this step, Change Finder calculates the score from a normal probability density function whose mean and variance result from Step 1.

- Step 3:

- In this step, Change Finder smooths the scores that result from Step 2 to remove noise.

- Step 4:

- Using the smoothed scores that result from Step 3 as new time-series data, Change Finder performs the second round of learning, score calculation, and smoothing, just as in Steps 1 to 3. The scores that result from the second round of Step 3 are the changing scores.
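For reference, Steps 1 to 3 can be written in the commonly used SDAR form as sketched below for one-dimensional data. This follows the standard formulation of Change Finder [17] and uses the logarithmic loss as the score, which is one common choice. The symbols $x_t$ (input at time $t$), $r$ (discounting rate), $k$ (AR order), $\hat{\mu}$, $C_j$, $\hat{\omega}_i$, $\hat{x}_t$, $\hat{\Sigma}$, $T$ (smoothing width), and $y_t$ are assumptions introduced here for illustration.

```latex
\begin{align}
\hat{\mu} &\leftarrow (1-r)\,\hat{\mu} + r\,x_t \\
C_j &\leftarrow (1-r)\,C_j + r\,(x_t-\hat{\mu})(x_{t-j}-\hat{\mu}), \quad j = 0,\dots,k \\
\sum_{i=1}^{k} \omega_i\,C_{j-i} &= C_j, \quad j = 1,\dots,k \qquad (C_{-j} = C_j) \\
\hat{x}_t &= \sum_{i=1}^{k} \hat{\omega}_i\,(x_{t-i}-\hat{\mu}) + \hat{\mu} \\
\hat{\Sigma} &\leftarrow (1-r)\,\hat{\Sigma} + r\,(x_t-\hat{x}_t)^2 \\
p(x_t) &= \frac{1}{\sqrt{2\pi\hat{\Sigma}}}
          \exp\!\left(-\frac{(x_t-\hat{x}_t)^2}{2\hat{\Sigma}}\right),
          \qquad \mathrm{Score}(x_t) = -\log p(x_t) \\
y_t &= \frac{1}{T}\sum_{i=t-T+1}^{t} \mathrm{Score}(x_i)
\end{align}
```

In Step 4, the smoothed series $y_t$ takes the place of $x_t$, and the same updates, scoring, and smoothing are applied once more.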

4. Methodology

4.1. Purpose and Approach

In this study, the analysis focuses on the micro-perspective described in Section 1 and aims to efficiently investigate when the subject reacted during care. For this purpose, we consider extracting PoI, as the moments when large fluctuations are recognized in the time-series data of feature values obtained by Facial Expression Sensing Service.

A simple way of extracting PoIs is to look for points whose feature values exceed a certain threshold. However, this method considers only the absolute values of the feature values. Even if a subject’s expression has changed significantly, for example, when a neutral face becomes a smiling face, such facial changes may be discarded if the change in feature values is small. Moreover, since feature values are based on facial movements, the values are always fluctuating little by little. Therefore, depending on the threshold setting, a large number of points that exceed the threshold may be extracted, and thus the efficiency of analysis will not be improved. Considering these factors, this method cannot be said to capture facial expression changes as they actually occur, and the extracted points will not be very valuable as PoIs. Therefore, in this study, we adopt the change detection algorithm called Change Finder described in Section 3 and attempt to extract PoIs by accurately capturing the changes in the feature values.

The key idea of this study is to introduce the concept of composite feature value and apply change-point detection to it as well as to single feature values. Composite feature value is a scale created from multiple feature values according to a certain purpose of the analysis. In this way, analysis can be performed more efficiently than considering the changes in each feature value. Furthermore, since multiple facial parts move when facial expression changes, the composite feature value that combines multiple feature values will enable the extraction of PoIs with high validity that reflect the actual facial movements. The specific method is as follows.

- Step 1:

- Create a composite feature value by combining multiple feature values.

- Step 2:

- Input the composite feature value into Change Finder and obtain the change score.

- Step 3:

- Set a threshold, and regard a point whose change score exceeds the threshold as a PoI candidate.
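To make Step 2 concrete, the following is a minimal, self-contained sketch of a two-stage change score in the spirit of Change Finder. It is a simplification under stated assumptions, not the library used in the experiments: the AR order is fixed to 1, the score is the negative log-likelihood computed after each update, and the class and function names (`SDAR1`, `change_scores`) as well as the default values of `r` and `w` are hypothetical.

```python
import math
from collections import deque


class SDAR1:
    """Order-1 AR model trained with discounting (SDAR-style) updates."""

    def __init__(self, r):
        self.r = r          # discounting rate, 0 < r < 1
        self.mu = 0.0       # discounted mean
        self.c0 = 1.0       # lag-0 autocovariance
        self.c1 = 0.0       # lag-1 autocovariance
        self.sigma2 = 1.0   # variance of the prediction error
        self.prev = 0.0     # previous observation

    def update(self, x):
        """Learn from x, then return its score (negative log-likelihood)."""
        r = self.r
        self.mu = (1 - r) * self.mu + r * x
        self.c0 = max((1 - r) * self.c0 + r * (x - self.mu) ** 2, 1e-12)
        self.c1 = (1 - r) * self.c1 + r * (x - self.mu) * (self.prev - self.mu)
        omega = self.c1 / self.c0  # Yule-Walker solution for order 1
        x_hat = omega * (self.prev - self.mu) + self.mu
        # The floor 1e-12 is only a numerical guard for constant inputs.
        self.sigma2 = max((1 - r) * self.sigma2 + r * (x - x_hat) ** 2, 1e-12)
        self.prev = x
        return (0.5 * math.log(2 * math.pi * self.sigma2)
                + (x - x_hat) ** 2 / (2 * self.sigma2))


def moving_average(values, width):
    """Trailing moving average over at most `width` recent values."""
    window, out = deque(maxlen=width), []
    for v in values:
        window.append(v)
        out.append(sum(window) / len(window))
    return out


def change_scores(series, r=0.05, w=5):
    """Two rounds of learning, scoring, and smoothing (hypothetical defaults)."""
    first = SDAR1(r)
    stage1 = moving_average([first.update(x) for x in series], w)
    second = SDAR1(r)
    stage2 = [second.update(y) for y in stage1]
    return moving_average(stage2, max(1, round(w / 2.0)))


# A level shift at t = 100 should produce the largest change score nearby.
series = [0.0] * 100 + [5.0] * 100
scores = change_scores(series)
peak = max(range(len(scores)), key=scores.__getitem__)
```

Because each stage touches every data point once, the cost grows linearly with the length of the series, which is the property that makes this family of methods suitable for long recordings.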

4.2. Composite Feature Value

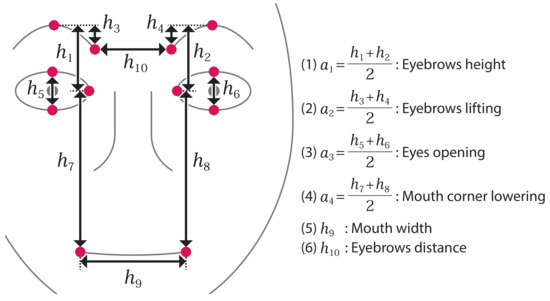

In this study, from the perspective of measuring the effectiveness of care, we define “Smile scale” as a composite feature that indicates how much the subject enjoys the care. In creating Smile scale, facial movements suggesting feelings of enjoyment and happiness are considered. Based on Ekman et al.’s book on facial expression analysis [7] and an attempt to observe the features of faces showing various emotions, taken with the cooperation of volunteers [40], the features of facial expressions showing happiness are summarized as follows:

- Eyebrows will be lowered in whole;

- Eyes narrowed;

- Mouth corner will be pulled back and raised;

- Cheeks will be raised.

From this description, this study focuses on 4 of the 6 feature values used in the experiments of the preliminary study (see Figure 2) as the Smile scale: “Mouth width”, “Eyebrow height”, “Eyes opening”, and “Mouth corner lowering”. Of these four features, compared with the features of facial expressions listed above, the three other than “Mouth width” indicate more happiness when their values are smaller. Moreover, the absolute amount of variation differs for each feature value. For example, the movement around the mouth should be larger than the movement of the eyes opening and closing. Therefore, it is necessary to assign certain weights to each feature.

Figure 2.

Feature values for the experiment.

To determine the weights, we first measured the feature values of neutral and smiling faces, using the same face images of the author as in the preliminary experiment. Next, we randomly selected 10 data in each state and calculated the average of the feature values and the differences between the two states. The samples of the face images used in the calculations are shown in Figure 3 for the neutral state and Figure 4 for the smiling state. The results are shown in Table 1. The “Ratio of difference” shows the ratio of the “Difference” of each feature value to that of “Mouth width”. The results show that the absolute amount of change in “Eyebrow height” and “Eyes opening” is small, about half of that of “Mouth width” and “Mouth corner lowering”. Therefore, Smile scale X is determined as follows.

Figure 3.

Samples of neutral face images to consider smile scale.

Figure 4.

Samples of smiling face images to consider smile scale.

Table 1.

Average of 10 feature values for the neutral and the smiling faces of the author.

Considering that the degree of facial movement varies among individuals, we avoided using the “Ratio of difference” in Table 1 directly as a coefficient. Note that standardized single feature values were used to calculate X. Specifically, for an original feature value $x$ with mean $\mu$ and standard deviation $\sigma$, the standardized value $z$ is calculated by the following formula and is used as the feature value:

$$z = \frac{x - \mu}{\sigma}$$
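The standardization and the combination into a composite value can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the population standard deviation is used, and the `weights` argument is a placeholder rather than the coefficients of Smile scale. The signs follow the text: “Mouth width” contributes positively, whereas the other three features contribute negatively because smaller values indicate more happiness.

```python
from statistics import mean, pstdev


def standardize(values):
    """Convert raw feature values to z-scores (assumes non-constant input)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def smile_scale(mouth_width, eyebrow_height, eyes_opening,
                mouth_corner_lowering, weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted combination of standardized features.

    `weights` is a hypothetical placeholder, not the published coefficients.
    """
    w_mw, w_eb, w_eo, w_mc = weights
    z_mw = standardize(mouth_width)
    z_eb = standardize(eyebrow_height)
    z_eo = standardize(eyes_opening)
    z_mc = standardize(mouth_corner_lowering)
    return [
        w_mw * a - w_eb * b - w_eo * c - w_mc * d
        for a, b, c, d in zip(z_mw, z_eb, z_eo, z_mc)
    ]


# Toy data: the mouth widens while the other three features decrease.
x = smile_scale([1.0, 2.0, 3.0], [3.0, 2.0, 1.0],
                [3.0, 2.0, 1.0], [3.0, 2.0, 1.0])
```

Standardizing each feature per subject before combining them keeps a feature with a large raw range from dominating the composite value.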

4.3. Experiment Detail

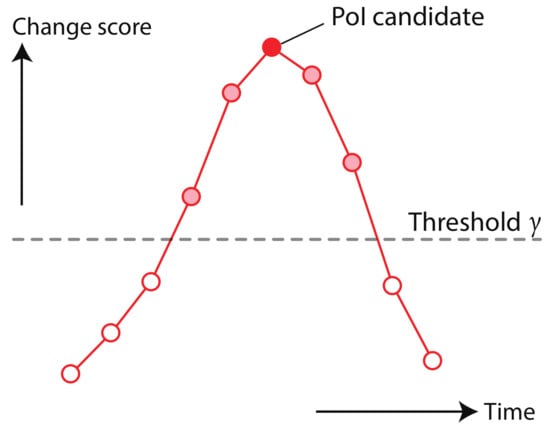

We attempted to extract PoIs for single feature values and the composite feature value Smile scale, using Change Finder on the test data created from the face of the author and on data obtained from facial images of elderly people receiving care. In this experiment, we use the Change Finder library for Python [41]. The library takes the following parameters: order of the AR model, discounting rate r, and width of smoothing size w. These parameters are used directly for the first AR model training. In the second AR model training, the width of the smoothing size will be round(w/2.0). The same parameter values are used in each experiment. Among the change scores calculated by Change Finder, as shown in Figure 5, we define as a PoI candidate the point t with the highest score within the interval between the score exceeding a certain threshold and falling below it again. By examining the appearance of the face around PoI candidates, we can confirm whether an obtained PoI candidate is valid as a PoI. In this series of experiments, we determine the threshold by using the mean and standard deviation of the change score S.

Figure 5.

Definition of PoI candidate.
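The definition of a PoI candidate can be sketched as follows: within each run of consecutive points whose score exceeds the threshold, only the point with the highest score is kept. The function name and the multiplier `c` applied to the standard deviation are hypothetical; the text states only that the threshold is derived from the mean and standard deviation of the change score.

```python
from statistics import mean, pstdev


def poi_candidates(scores, c=2.0):
    """Return (threshold, indices of the peak of each above-threshold run)."""
    threshold = mean(scores) + c * pstdev(scores)  # c is an assumed multiplier
    candidates, run = [], []
    for t, s in enumerate(scores):
        if s > threshold:
            run.append(t)  # still inside an above-threshold interval
        elif run:
            candidates.append(max(run, key=lambda i: scores[i]))
            run = []
    if run:  # the series ended while still above the threshold
        candidates.append(max(run, key=lambda i: scores[i]))
    return threshold, candidates


# Toy change scores with two excursions above the threshold.
scores = [0.0] * 5 + [3.0, 5.0, 4.0] + [0.0] * 5 + [4.0, 6.0] + [0.0] * 5
threshold, candidates = poi_candidates(scores, c=1.0)
```

Keeping one point per excursion is what reduces the number of moments an analyst has to inspect, compared with listing every point above the threshold.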

5. Preliminary Experiment

5.1. Outline

As a preliminary experiment, we conducted a PoI extraction experiment using the feature value data obtained from the face images of the author of this paper. The test data were created from a video in which the author repeated neutral and smiling expressions several times. The video was taken by using the built-in camera of a laptop computer while the author was looking at the screen. We measured the feature values at intervals of 1 s and removed obvious outliers. Missing data were filled with previous data. Then, Change Finder was applied to the single features and the Smile scale of these data to calculate the change score and extract PoI candidates.

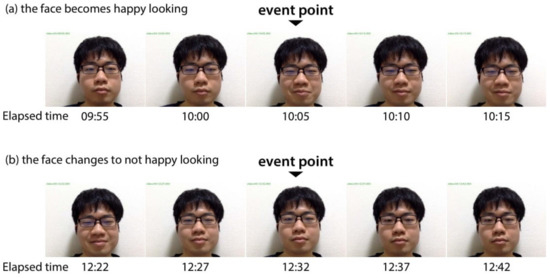

In this experiment, two ratios were first calculated to estimate how much more efficient the facial expression analysis becomes: the ratio of the number of PoIs to n and the ratio of the number of data exceeding the threshold to n, where n is the number of whole data. The smaller these values are, the fewer the data to be checked, and the more efficient the analysis will be. Next, to confirm the validity of the obtained PoI candidates, they were compared with the timing of facial expression changes recorded by eye observation. First, we looked for the timing, as shown in Figure 6, when the face (a) becomes happy looking and (b) changes to not happy looking by visual inspection, and recorded each such timing as an “event point”. In this experiment, event points were recorded only when obvious facial movement was seen. For example, a gradual return from a smiling face to a neutral face over a long period, about 30 s to 1 min, was not recorded because it was difficult to determine where to place an event point. Next, we picked the PoI candidates whose timing matched an event point, defined each of them as a correct PoI, and defined their number as the number of correct. In this experiment, a PoI candidate within 10 s before or after an event point was considered to match it. Note that the number of correct is counted only once for each event point. Then, for the number of PoIs, the number of correct, and the number of event points, the following ratios were checked.

Figure 6.

Example of event points: (a) the face becomes happy looking, (b) the face changes to not happy looking. (“Elapsed time” corresponds to the change score graphs in Figure 7).

- Recall: Ratio of event points that could be captured (number of correct / number of event points);

- Precision: Ratio of valid PoI candidates (number of correct / number of PoIs).

The higher the Recall, the fewer changes in facial expression are missed. Moreover, the higher the Precision, the more accurate the extraction of PoIs is, with less noise. The data at the beginning of the change score are not useful because the training of the SDAR algorithm has just started. Therefore, the data in the first 30 s were excluded from the calculation of the threshold and of each ratio.
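The matching rule and the two ratios can be sketched as follows. The function name and inputs are hypothetical; times are in seconds, both lists are assumed to be non-empty, and the number of correct is counted once per event point, as described above. If two event points lie within the tolerance of the same PoI candidate, this simple version counts both.

```python
def recall_precision(poi_times, event_times, tolerance=10):
    """Return (number of correct, Recall, Precision).

    An event point is captured when at least one PoI candidate lies
    within `tolerance` seconds before or after it.
    """
    correct = sum(
        1
        for event in event_times
        if any(abs(poi - event) <= tolerance for poi in poi_times)
    )
    recall = correct / len(event_times)
    precision = correct / len(poi_times)
    return correct, recall, precision


# Toy example: two of the three event points have a PoI candidate nearby.
correct, recall, precision = recall_precision(
    poi_times=[12, 15, 100, 300],
    event_times=[10, 105, 200],
)
```

In this toy case, the candidates at 12 s and 15 s both fall near the event point at 10 s but add only one to the number of correct, which lowers Precision without raising Recall.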

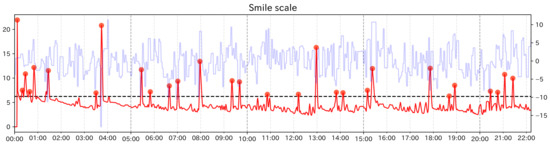

5.2. Results

Table 2 shows the calculation results of each ratio. Figure 7 shows the change score for Smile scale. The red line is the change score, the light blue line is the raw value of each feature value, and the black dashed line is the threshold. Red dots indicate PoI candidates. Among them, dots with double circles indicate correct PoIs. The colored vertical lines indicate the event points, where yellow lines indicate when the subject’s face became happy looking and light blue dashed lines indicate when the subject’s face became not happy looking. The left axis indicates the value of the change score, the right axis indicates the feature value, and the horizontal axis shows the elapsed time. The ratio of the number of PoIs to n was less than 0.02 in all cases, and the ratio of the number of data exceeding the threshold to n was about 0.1 at the highest. In other words, our method was able to narrow down the data to be searched to about one-tenth or less. When Change Finder was applied to the Smile scale, the number of correct was the highest and Recall was also the highest. Looking at the graphs, PoI candidates near the event points at around 2 min, 3 min, and 15 min 30 s were obtained only from the Smile scale and were not obtained for any single feature value. In addition, for the Smile scale, the number of PoI candidates not around event points was the lowest, so the Precision was the highest. From these results, it can be said that PoI candidates of the composite feature value are more likely to catch important moments than PoI candidates of single feature values.

Table 2.

Various parameters and ratios measured (test data: number of whole data n = 1114, number of event points = 12).

Figure 7.

The graph showing the change score for Smile scale (test data).

In the graphs of each feature value, there are PoI candidates between 6 and 9 min, where there are no event points. Although the author does not smile at these points, subtle facial movements such as widening the eyes and holding the mouth were observed. Change Finder seems to have reacted to these facial movements. As long as the data are based on detailed facial movement tracking, this kind of noise will occur to some degree. However, when using Smile scale, the subtle variation in each feature value is somewhat suppressed. Therefore, it is considered that Change Finder extracts fewer unimportant points than when using single feature values, and as a result, the Precision is increased.

As a comparison with the method of this experiment, PoI candidates were extracted and each ratio was calculated using the raw values of the Smile scale. As in the case in which the change score was used, the timing with the highest value within each interval exceeding a certain threshold was considered a PoI candidate. We set several threshold values using the mean and standard deviation of all raw Smile scale values. The results are shown in Table 3. With the low threshold, the number of PoIs and the number of data exceeding the threshold, relative to n, were much higher than those using the change score. Moreover, many PoI candidates were detected, and although Recall was high, Precision was low. With the high threshold, the number of correct decreased and the Precision was low because small changes were ignored. With the remaining threshold, although Recall was relatively high, many PoI candidates were detected in the areas where the Smile scale value wiggled, and the Precision was lower than that using the change score. From this, it can be said that using the change score is more efficient and accurate than analyzing the raw feature data while changing the threshold value.

Table 3.

Various parameters and ratios of the experiment measured using the raw Smile scale value (test data: number of whole data n = 1144, number of event points = 12).

From this result, it was confirmed that applying Change Finder to feature value data and using composite features are effective for the test data. In the next section, we will conduct the same analysis using actual images of elderly people during care and verify whether the analysis method of this preliminary experiment can be applied to general data.

6. Actual Experiment

6.1. Overview

In this experiment, we measure feature values using images of five subjects during care obtained in the experiment of FET [29] and attempt to extract PoIs from them using Change Finder, as in the preliminary experiment. Then, we examine whether the analysis can be made more efficient and whether the obtained PoI candidates are valid. We divided the subjects into subject group 1, consisting of expressive subjects (subjects A, C, and D), and subject group 2, consisting of subjects with poor facial expression (subjects B and E), and discussed each group. Since the face images of each subject were taken at intervals of about 3 s, the obtained feature values had similar intervals. To match the experimental conditions of the preliminary experiment, we filled the feature value data with their previous data in advance so that the data are placed at intervals of 1 s. Moreover, we removed obvious outliers. As in the preliminary experiment, we excluded the data in the first 30 s from the calculation of the threshold and from each ratio.
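Filling with previous data to obtain 1 s intervals can be sketched as follows. The function name and the `(second, value)` sample layout are hypothetical; seconds before the first sample are left as `None` in this sketch.

```python
def forward_fill(samples, duration):
    """Resample (second, value) pairs, sorted by time, onto a 1 s grid.

    Each second takes the most recent measured value at or before it.
    """
    filled, last, i = [], None, 0
    for t in range(duration):
        # Advance to the latest sample taken at or before second t.
        while i < len(samples) and samples[i][0] <= t:
            last = samples[i][1]
            i += 1
        filled.append(last)
    return filled


# Measurements taken about every 3 s, padded to one value per second.
samples = [(0, 1.0), (3, 2.0), (6, 3.0)]
per_second = forward_fill(samples, duration=8)
```

Because roughly two of every three values are copies, the padded series contains many more points than were measured, which should be kept in mind when interpreting ratios that divide by the total number of data.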

6.2. Results

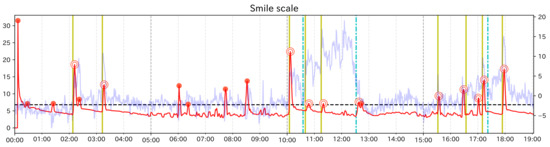

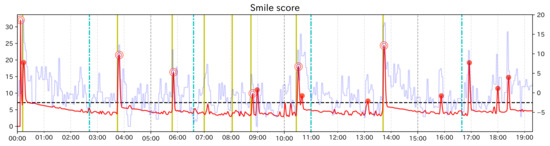

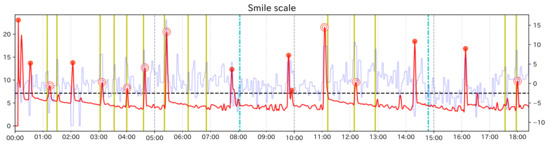

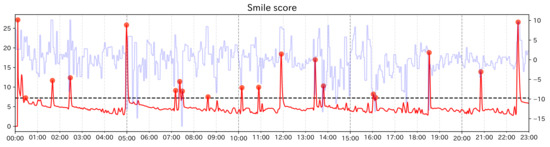

Here, we explain the results of the analysis for subject group 1. For subject group 1, since we could examine facial expression changes visually, we recorded event points and calculated Recall and Precision in addition to calculating the change score of Change Finder, as in the preliminary experiment. Table 4, Table 5 and Table 6 show the calculation results of each ratio for each subject. Figure 8, Figure 9 and Figure 10 are the graphs showing the change scores of the Smile scale. The legends of each graph are the same as those of the preliminary experiment. For each subject, the ratio of the number of data exceeding the threshold to n was about 0.08 at the highest for any of the feature values. Considering that the data to be investigated can be reduced to about 8% or less of the total data, it can be said that the analysis was efficient. In this experiment, the original data were heavily padded, so the ratio of the number of PoIs to n is not very informative, but the values were all less than 0.02.

Table 4.

Various parameters and ratios measured (Subject A: number of whole data n = 1129, number of event points = 11).

Table 5.

Various parameters and ratios measured (Subject C: number of whole data n = 1075, number of event points = 16).

Table 6.

Various parameters and ratios measured (Subject D: number of whole data n = 769, number of event points = 14).

Figure 8.

Graph of the change score for Smile scale (Subject A).

Figure 9.

Graph of the change score for Smile scale (Subject C).

Figure 10.

Graph of the change score for Smile scale (Subject D).

For subjects C and D, the highest values of Recall and Precision were obtained when using the Smile scale. Moreover, for both subjects, there were event points that could be detected only when using the Smile scale. From these results, it can be said that using the composite feature value was useful for subjects C and D, as in the preliminary experiment. For both subjects, Recall was 0.5, which means that half of the event points were detected as PoI candidates. The graph of the Smile scale shows that although the value rises and falls around the event points, not all of them were extracted as PoI candidates. In other words, Change Finder does not simply react to every local change in the feature value. However, considering that the data to be investigated were greatly narrowed down and that the extracted PoI candidates corresponded to the event points to some extent, it can be said that, although some event points were missed, Change Finder was able to efficiently pick out appropriate timings as PoIs from the constantly changing feature data.

As for subject A, compared to the Smile scale, “Mouth width” has higher values in both Recall and Precision, and “Mouth corner lowering” has a higher value in Recall. Looking at the graph of the Smile scale, in contrast to the large changes in feature values around 4 min, 10 min 30 s, and 14 min, the change score did not react to the small value changes between 6 min 30 s and 7 min, and the corresponding event points were overlooked. On the other hand, they were detected when using “Mouth width”. Although single feature values are more likely than composite feature values to catch unimportant points as PoI candidates, they can be considered effective in complementing the results of the composite feature value, depending on the characteristics of the input data.
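As a rough illustration of how Recall and Precision relate event points to PoI candidates, the following sketch matches the two sets of timestamps. The matching rule (a candidate counts as a hit if it lies within `tolerance_s` seconds of an event point), the function name, and all timestamps are assumptions made for this example; they are not the criteria or data used in the experiment.

```python
def recall_precision(event_points, poi_candidates, tolerance_s=5):
    """Compute Recall and Precision for timestamps given in seconds.

    Assumption: an event point is 'detected' if some PoI candidate lies
    within tolerance_s seconds of it, and a candidate is 'valid' if it
    lies within tolerance_s seconds of some event point.
    """
    detected = [e for e in event_points
                if any(abs(e - p) <= tolerance_s for p in poi_candidates)]
    valid = [p for p in poi_candidates
             if any(abs(p - e) <= tolerance_s for e in event_points)]
    recall = len(detected) / len(event_points) if event_points else 0.0
    precision = len(valid) / len(poi_candidates) if poi_candidates else 0.0
    return recall, precision


# Hypothetical timestamps (seconds from the start of care).
events = [240, 390, 630, 840]
candidates = [238, 632, 700]
recall, precision = recall_precision(events, candidates)
print(recall, precision)
```

Here two of the four event points have a nearby candidate, so Recall is 0.5, and two of the three candidates are near an event point, so Precision is about 0.67.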

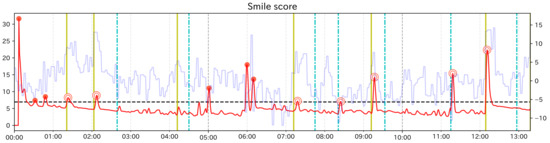

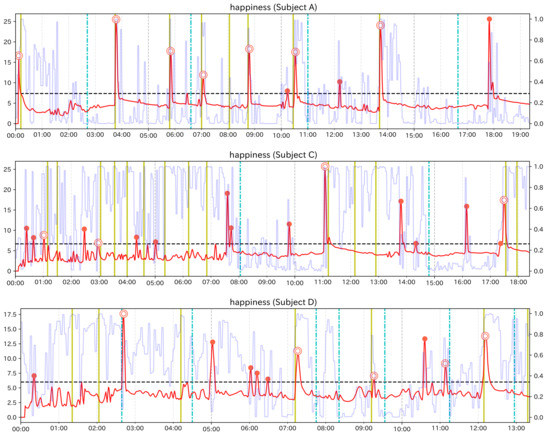

Next, we describe the results of the analysis for subject group 2. For subject group 2, it was difficult to find event points visually, so we only calculated the change score of Change Finder and discussed it while confirming the facial expressions around the detected PoI candidates. The calculation results of the two ratios are shown in Table 7 and Table 8 for each subject. Figure 11 and Figure 12 are the graphs showing the change scores of the Smile scale. The legends of the graphs are the same as those of the preliminary experiment and the experiment of subject group 1. For each subject, the ratio of the data to be investigated was always less than 0.1. As in the case of subject group 1, it can be said that this method was able to narrow down the data significantly.

Subject B had hardened facial muscles and no obvious change in facial expression compared to the other subjects. Although each feature value continued to fluctuate, it was not possible to clearly identify when the values rose or fell or when the trend of the time series changed. Around the PoI candidates obtained from single feature values, we could confirm the facial movements corresponding to each feature value, but there were no clear signs from which emotional changes could be inferred. Examining the faces near the PoI candidates obtained from the Smile scale, we mainly observed mouth movements such as closing and opening the mouth. While no single feature showed a particular tendency of variation, the Smile scale might be strongly influenced by “Mouth width” and “Mouth corner lowering”, which have high coefficients in the Smile scale, and this may have made Change Finder sensitive to these fluctuations.

Subject E showed symptoms of euphoria and generally appeared to be happy from the beginning to the end of the care. When we examined the faces near the PoI candidates extracted from the Smile scale, we were able to capture the moments when the expression moved significantly. From the first half to the middle part of the care (before 14 min), scenes with large smiles could be seen. In the latter part of the care, there was a PoI candidate at 18 min 30 s, and from this time, facial movements including smiling were rarely seen until about 22 min 30 s. According to the care record, the subject was listening to her favorite music for about 4 min from 18 min 20 s, and it can be assumed that her facial expression temporarily faded as a result of listening to the music intently.
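To illustrate why features with high coefficients can dominate a composite feature value, the following sketch assumes that the composite is a weighted sum of single feature values. The linear form, the feature names, and the coefficients are all hypothetical and are not the actual definition of the Smile scale.

```python
def composite_feature(features, coefficients):
    """Weighted sum of single feature values (assumed linear form)."""
    return sum(coefficients[name] * value
               for name, value in features.items()
               if name in coefficients)


# Hypothetical coefficients: two mouth features weigh far more than the eye feature.
coefficients = {
    "mouth_width": 0.6,
    "mouth_corner_lowering": -0.5,
    "eye_opening": 0.1,
}

baseline = {"mouth_width": 0.5, "mouth_corner_lowering": 0.2, "eye_opening": 0.4}
wider_mouth = dict(baseline, mouth_width=0.6)   # mouth width changes by 0.1
wider_eyes = dict(baseline, eye_opening=0.5)    # eye opening changes by 0.1

base = composite_feature(baseline, coefficients)
delta_mouth = composite_feature(wider_mouth, coefficients) - base
delta_eye = composite_feature(wider_eyes, coefficients) - base
print(delta_mouth, delta_eye)
```

With these illustrative coefficients, the same 0.1 change moves the composite value about six times more when it occurs in the mouth width than when it occurs in the eye opening, so a change detector applied to the composite would mostly respond to mouth movements.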

Table 7.

Various parameters and ratios measured (Subject B: number of whole data n = 1303).

Table 8.

Various parameters and ratios measured (Subject E: number of whole data n = 1351).

Figure 11.

Graph of the change score for the Smile scale (Subject B).

Figure 12.

Graph of the change score for the Smile scale (Subject E).

6.3. Discussion

For subject group 1, we confirmed that the extracted PoI candidates matched the visually confirmed event points (i.e., the timing of changes in facial expression) to some extent. Although accuracy varied depending on the data used and some event points were overlooked, this method seems to be useful in that it can narrow down a huge amount of time-series data and provide clues for finding PoIs efficiently. For all three subjects of subject group 1, a close examination of the graph of the change score revealed small increases in the score around some event points that did not exceed the threshold. If the threshold is lowered, such points will be detected, but the number of PoI candidates will also increase, and so will the cost of examining them for appropriate PoIs. Therefore, users will need to determine the threshold by considering their own needs, the time and cost they can spend on analysis, and so on.

Regarding the composite feature value, the results for the Smile scale of subject group 1 show that failures to detect event points are more noticeable when the face changes to a not-happy look than when it becomes happy looking. For example, in the case of Subject D’s Smile scale, event points at which the face became happy looking were detected in 5 out of 7 cases (there is one happy-looking event point at the right edge of the graph), while event points at which the face became not happy looking were detected in only 2 out of 6 cases. The Smile scale was created to estimate how happy subjects feel. However, since the facial movements corresponding to emotions such as happiness, disgust, and surprise are distinct for each emotion, it would be necessary to create a composite feature value for each emotion, especially if we want to capture the “unpleasant look”.

For subject group 2, we could not successfully extract PoI candidates that showed a large change in facial expression from those whose facial movements were small. However, we could see some facial movements, such as mouth movements, around the PoI candidates. Thus, even though it is difficult to estimate emotions, it may be possible to estimate whether the interaction succeeded in holding the subject’s interest by investigating the “magnitude of response to external stimulus”. On the other hand, for the subject who seemed to be euphoric, the moments when she showed a big smile or became serious were successfully captured as PoI candidates.

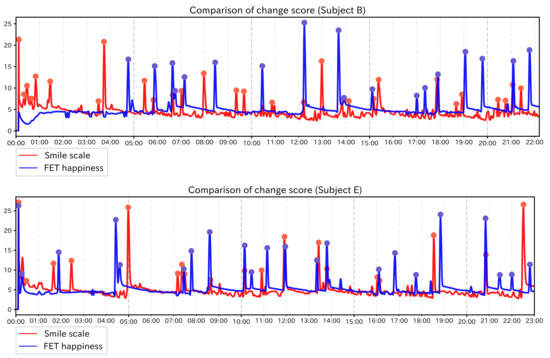

So far, we have conducted experiments based on the images of the subjects during care obtained in the FET experiment. In this section, we attempt to extract PoI candidates using the original measurement results of the FET experiment and compare the results with those obtained using the feature values from the Facial Expression Sensing Service. FET can quantify various kinds of emotions (see Section 2). In this experiment, we focus on “happiness” from the viewpoint of estimating the effect of care. As in the previous experiments, we interpolated the time-series data of the “happiness” value to 1-s intervals and extracted PoI candidates using Change Finder with the settings described in Section 6.1. We calculated the two ratios for each subject. The results are shown in Table 9. For subject group 1, we also calculated Recall and Precision. The results are shown in Table 10. Moreover, the graphs of the results for the subjects in each subject group are shown in Figure 13 and Figure 14. The right vertical axis of each graph shows the value of “happiness”. Otherwise, the legends are the same as in the previous experiments.

Table 9.

Values of and from change score using FET “happiness” data.

Table 10.

Recall and precision from change score using FET “happiness” data of Subject Group 1.

Figure 13.

Graphs of the change score for FET’s “happiness” value (Subject group 1).

Figure 14.

Graphs of the change score for FET’s “happiness” value (Subject group 2).

In this experiment, the ratio of the data to be investigated was lower than 0.1 for all subjects, which means that we were able to narrow the FET data down to the important portions significantly, as we did for the data from the Facial Expression Sensing Service. In subject group 1, for subjects A and D, Recall and Precision were higher than those obtained with the feature values. For subject C, the result was not good. If the data tend to stay at high or low values, as in the case of subject C, subtle changes will be lost and PoIs will not be detected well. For subject group 2, the change score closely followed the changes in the value of “happiness”. The value of “happiness” is almost always around 0 or 1, and it tends to change sharply at certain moments. Therefore, such instantaneous changes are mainly detected as PoI candidates, and the detailed changes in facial expressions at other moments are lost. Moreover, we compared the change scores and PoI candidates for each subject in subject group 2 with those obtained using the Smile scale. The results are shown in Figure 15. From these graphs, it can be seen that there are some gaps in the timing of the PoI candidates between the two cases. The gaps are especially noticeable for subject B. This suggests that, by using the data from the Facial Expression Sensing Service, it is possible to perform analyses different from those using FET data. From these results, we conclude that the analysis based on the method of this experiment can be applied to FET data for expressive people. For people with poor facial expression, using the data of the Facial Expression Sensing Service will enable care analysis based on subtle facial changes that cannot be captured by FET.
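One simple way to quantify the timing gaps between two sets of PoI candidates is to measure, for each candidate from one data source, the distance to the nearest candidate from the other. The following is a minimal sketch under that assumption; the function name and all timestamps are hypothetical and do not correspond to the measured results.

```python
def nearest_gaps(pois_a, pois_b):
    """For each time in pois_a, return (time, gap to the nearest time in pois_b) in seconds."""
    if not pois_b:
        return []
    return [(p, min(abs(p - q) for q in pois_b)) for p in pois_a]


# Hypothetical PoI candidate times (seconds) from two data sources.
smile_scale_pois = [120, 400, 1110]
happiness_pois = [118, 650]

gaps = nearest_gaps(smile_scale_pois, happiness_pois)
print(gaps)
```

A small gap (such as 2 s for the first candidate) suggests that both data sources reacted to the same moment, while large gaps indicate moments that only one of the two sources flagged.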

Figure 15.

Comparison of change score between Smile scale and FET “happiness” value (Subject group 2).

7. Conclusions

In order to capture scientific evidence in elderly care, this paper focused on changes in the facial expressions of the elderly. The main outcomes are as follows: (1) We proposed applying change-point detection using Change Finder to the feature value data obtained from the Facial Expression Sensing Service in order to find PoIs efficiently. (2) We conducted experiments to analyze and evaluate the performance of the proposed method on the facial expressions of both young men and elderly people. Through the experiments, it was found that the proposed method can not only efficiently find appropriate PoIs from a huge amount of time-series data but also extract the moments when the face moved remarkably. Meanwhile, to handle special cases with incomplete raw data as fully as possible, the proposed method requires further improvement and verification. As future work, more facial data from elderly people will be analyzed and evaluated by integrating the proposed method with nursing care knowledge, in order to realize explainable scientific care for elderly people.

Author Contributions

Writing—original draft preparation, K.H.; writing—review and editing, S.C. and S.S.; supervision, M.N.; validation, S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was approved by the Research Ethics Committee of Kobe University Graduate School of System Informatics (No. R01-02).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Acknowledgments

This research was partially supported by JSPS KAKENHI Grant Numbers JP19H01138, JP18H03242, JP18H03342, JP19H04154, JP19K02973, JP20K11059, JP20H04014, JP20H05706 and Tateishi Science and Technology Foundation (C) (No.2207004).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Okamura, H. Mainstreaming Gender and Aging in the SDGs; At a Side Event to the High Level Political Forum; Ambassador and Deputy Representative of Japan to the United Nations: New York, NY, USA, 2016; Volume 13.

- For Japan’s Economic Revitalization (No.26), H. Future Investment Strategy (2017). Available online: http://www.kantei.go.jp/jp/singi/keizaisaisei/dai26/siryou.pdf (accessed on 15 September 2021).

- Tsutsui, T.; Muramatsu, N. Care-needs certification in the long-term care insurance system of Japan. J. Am. Geriatr. Soc. 2005, 53, 522–527.

- Ekman, P. Facial expressions. Handb. Cogn. Emot. 1999, 16, e320.

- Ekman, P.; Keltner, D. Universal facial expressions of emotion. In Nonverbal Communication: Where Nature Meets Culture; Segerstrale, U.P., Molnar, P., Eds.; Psychology Press: Hove, UK, 1997; Volume 27, p. 46.

- Dimberg, U.; Thunberg, M.; Elmehed, K. Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 2000, 11, 86–89.

- Ekman, P.; Friesen, W.V. Unmasking the Face; Spectrum-Prentice Hall: Englewood Cliffs, NJ, USA, 1975.

- Khan, S.; Akram, A.; Usman, N. Real Time Automatic Attendance System for Face Recognition Using Face API and OpenCV. Wirel. Pers. Commun. 2020, 113, 469–480.

- Kim, H.; Kim, T.; Kim, P. Interest Recommendation System Based on Dwell Time Calculation Utilizing Azure Face API. In Proceedings of the 2018 International Conference on Computer, Information and Telecommunication Systems (CITS), Colmar, France, 11–13 July 2018; pp. 1–5.

- Duclos, J.; Dorard, G.; Berthoz, S.; Curt, F.; Faucher, S.; Falissard, B.; Godart, N. Expressed emotion in anorexia nervosa: What is inside the “black box”? Compr. Psychiatry 2014, 55, 71–79.

- Liu, B.; Fu, Y.; Yao, Z.; Xiong, H. Learning geographical preferences for point-of-interest recommendation. In Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Chicago, IL, USA, 11–14 August 2013; pp. 1043–1051.

- Yuan, Q.; Cong, G.; Ma, Z.; Sun, A.; Thalmann, N.M. Time-aware point-of-interest recommendation. In Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval, Dublin, Ireland, 28 July–1 August 2013; pp. 363–372.

- Gao, H.; Tang, J.; Hu, X.; Liu, H. Content-aware point of interest recommendation on location-based social networks. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

- Hirayama, K.; Saiki, S.; Nakamura, M.; Yasuda, K. Capturing User-defined Facial Features for Scientific Evidence of Elderly Care. In Proceedings of the 2020 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Austin, TX, USA, 23–27 March 2020; pp. 1–6.

- Lee, B.; Erdenee, E.; Jin, S.; Nam, M.Y.; Jung, Y.G.; Rhee, P.K. Multi-class multi-object tracking using changing point detection. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 68–83.

- Miranda, V.; Zhao, L. Topological Data Analysis for Time Series Changing Point Detection. In Proceedings of the International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery; Springer: Berlin/Heidelberg, Germany, 2019; pp. 194–203.

- Saranya, R.; Rajeshkumar, S. A Survey on Anomaly Detection for Discovering Emerging Topics. Int. J. Comput. Sci. Mob. Comput. (IJCSMC) 2014, 3, 895–902.

- Li, R.K.; Tu, Y.M.; Yang, T.H. Composite feature and variational design concepts in a feature-based design system. Int. J. Prod. Res. 1993, 31, 1521–1540.

- Sato, K.; Sugino, K.; Hayashi, T. Development of an objective evaluation method for the nursing-care education based on facial expression analysis. Med. Health Sci. Res. Bull. Tsukuba Int. Univ. 2010, 1, 163–170.

- Moniz, A.; Gordon, M.; Bergum, I.; Chang, M.; Grant, G. Introducing Cognitive Services. In Beginning Azure Cognitive Services; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–17.

- Kasaei, S.; Sock, J.; Lopes, L.S.; Tomé, A.M.; Kim, T.K. Perceiving, learning, and recognizing 3d objects: An approach to cognitive service robots. In Proceedings of the AAAI Conference on Artificial Intelligence, Hilton, New Orleans Riverside, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Mertes, C.; Yang, J.; Hammerschmidt, J.; Hermann, T. Challenges for Smart Environments in Bathroom Contexts-Video and Sound Example; Bielefeld University: Bielefeld, Germany, 2016.

- Ogiela, U.; Ogiela, L. Linguistic techniques for cryptographic data sharing algorithms. Concurr. Comput. Pract. Exp. 2018, 30, e4275.

- Chen, M.; Herrera, F.; Hwang, K. Cognitive computing: Architecture, technologies and intelligent applications. IEEE Access 2018, 6, 19774–19783.

- Chen, S.; Saiki, S.; Nakamura, M. Proposal of home context recognition method using feature values of cognitive API. In Proceedings of the 2019 20th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Toyama, Japan, 8–11 July 2019; pp. 533–538.

- Chen, S.; Saiki, S.; Nakamura, M. Toward Affordable and Practical Home Context Recognition: Framework and Implementation with Image-based Cognitive API. Int. J. Netw. Distrib. Comput. 2019, 8, 16–24.

- Chen, S.; Saiki, S.; Nakamura, M. Integrating Multiple Models Using Image-as-Documents Approach for Recognizing Fine-Grained Home Contexts. Sensors 2020, 20, 666.

- Chen, S.; Saiki, S.; Nakamura, M. Toward flexible and efficient home context sensing: Capability evaluation and verification of image-based cognitive APIs. Sensors 2020, 20, 1442.

- Sako, A.; Saiki, S.; Nakamura, M.; Yasuda, K. Developing Face Emotion Tracker for Quantitative Evaluation of Care Effects. In Proceedings of the Digital Human Modeling 2018 (DHM 2018), Held as Part of HCI International 2018, Las Vegas, NV, USA, 15–20 July 2018; Volume LNCS 10917, pp. 513–526.

- Tokunaga, S.; Tamamizu, K.; Saiki, S.; Nakamura, M.; Yasuda, K. VirtualCareGiver: Personalized Smart Elderly Care. Int. J. Softw. Innov. (IJSI) 2016, 5, 30–43.

- Azure, M. Face API-Facial Recognition Software | Microsoft Azure. Available online: https://azure.microsoft.com/en-us/services/cognitive-services/face/ (accessed on 30 August 2021).

- Asgarian, A.; Zhao, S.; Ashraf, A.B.; Browne, M.E.; Prkachin, K.M.; Mihailidis, A.; Hadjistavropoulos, T.; Taati, B. Limitations and Biases in Facial Landmark Detection: An Empirical Study on Older Adults with Dementia. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 15 June 2019; pp. 28–36.

- Batista, A.X.; Bazán, P.R.; Conforto, A.B.; Martin, M.d.G.M.; Simon, S.; Hampstead, B.; Figueiredo, E.G.; Miotto, E.C. Effects of mnemonic strategy training on brain activity and cognitive functioning of left-hemisphere ischemic stroke patients. Neural Plast. 2019, 2019, 1–16.

- Bandini, A.; Green, J.R.; Taati, B.; Orlandi, S.; Zinman, L.; Yunusova, Y. Automatic detection of amyotrophic lateral sclerosis (ALS) from video-based analysis of facial movements: Speech and non-speech tasks. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 150–157.

- Bandini, A.; Orlandi, S.; Escalante, H.J.; Giovannelli, F.; Cincotta, M.; Reyes-Garcia, C.A.; Vanni, P.; Zaccara, G.; Manfredi, C. Analysis of facial expressions in parkinson’s disease through video-based automatic methods. J. Neurosci. Methods 2017, 281, 7–20.

- Guralnik, V.; Srivastava, J. Event detection from time series data. In Proceedings of the Fifth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 15–18 August 1999; pp. 33–42.

- Yamanishi, K.; Takeuchi, J. A Unifying Framework for Detecting Outliers and Change Points from Non-Stationary Time Series Data. In Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Edmonton, AB, Canada, 23–26 July 2002; pp. 676–681.

- Yamanishi, K.; Takeuchi, J. Discovering Outlier Filtering Rules from Unlabeled Data: Combining a Supervised Learner with an Unsupervised Learner. In Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 26–29 August 2001; pp. 389–394.

- Yamanishi, K. Anomaly Detection with Data Mining; Kyoritsu Shuppan Co., Ltd.: Tokyo, Japan, 2009.

- Ohta, T.; Tamura, M.; Arita, M.; Kiso, N.; Saeki, Y. Facial expression analysis: Comparison with results of Paul Ekman. J. Nurs. Shiga Univ. Med Sci. 2005, 3, 20–24.

- GitHub-Shunsukeaihara/Changefinder. Available online: https://github.com/shunsukeaihara/changefinder (accessed on 15 September 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).