Detection of Road-Surface Anomalies Using a Smartphone Camera and Accelerometer

Abstract

1. Introduction

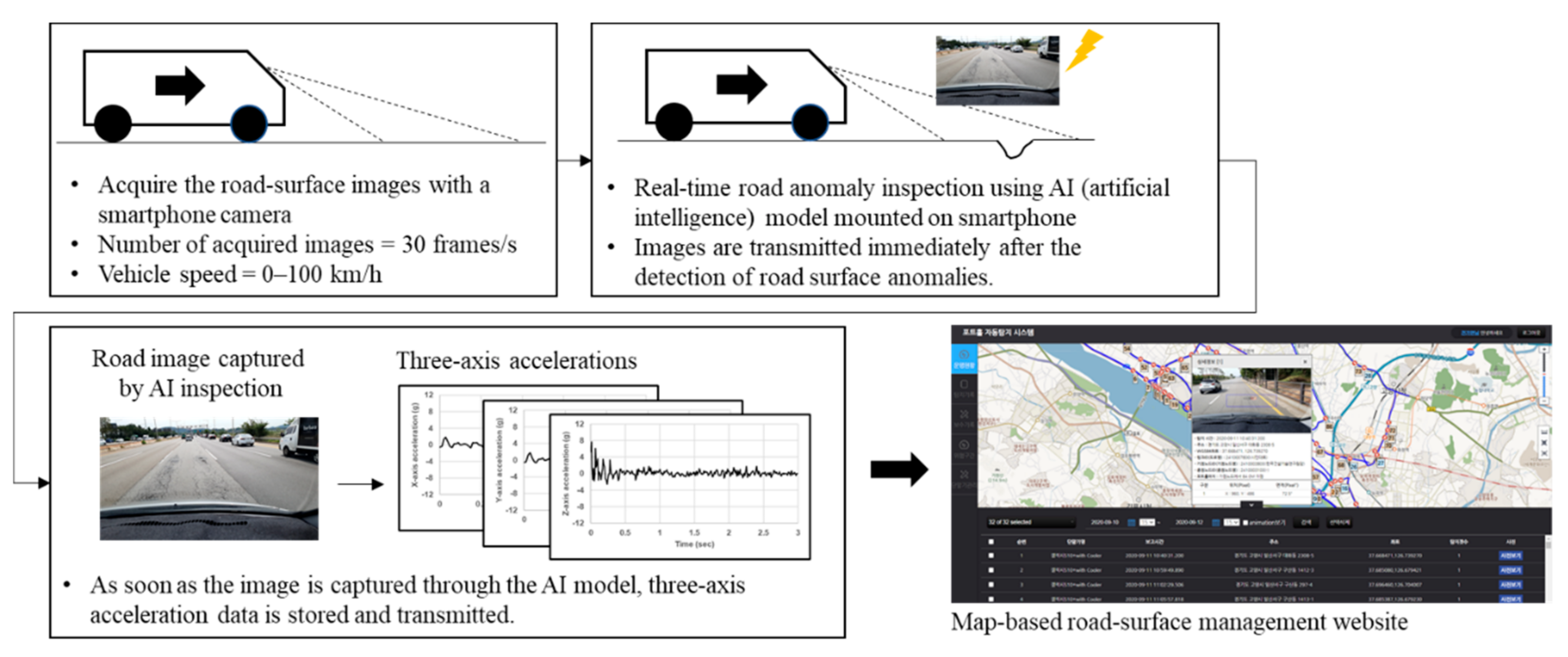

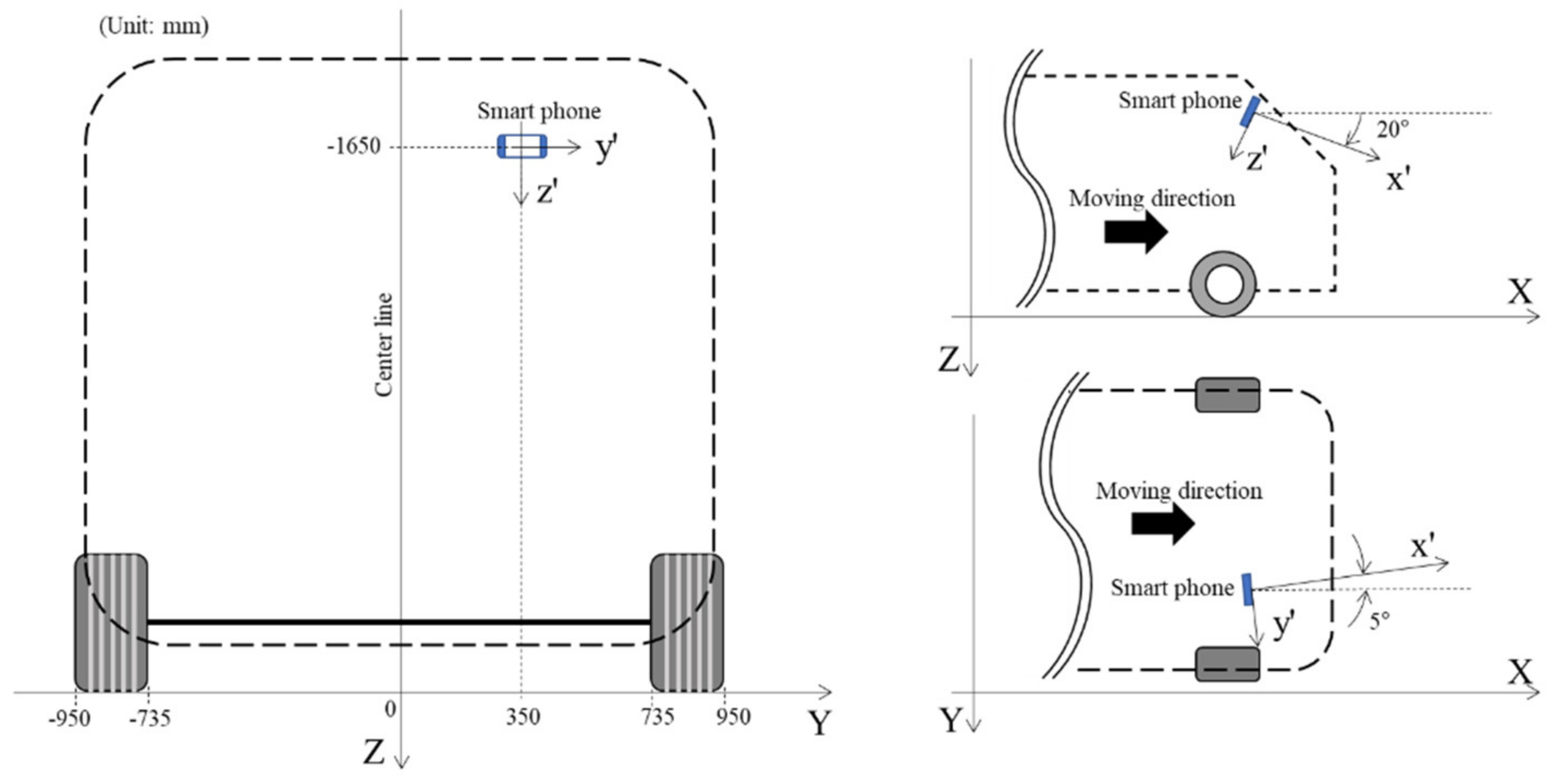

2. Collection of Road-Surface Anomaly Information Using a Smartphone Camera and Accelerometer

2.1. Overall Flow of Data Acquisition

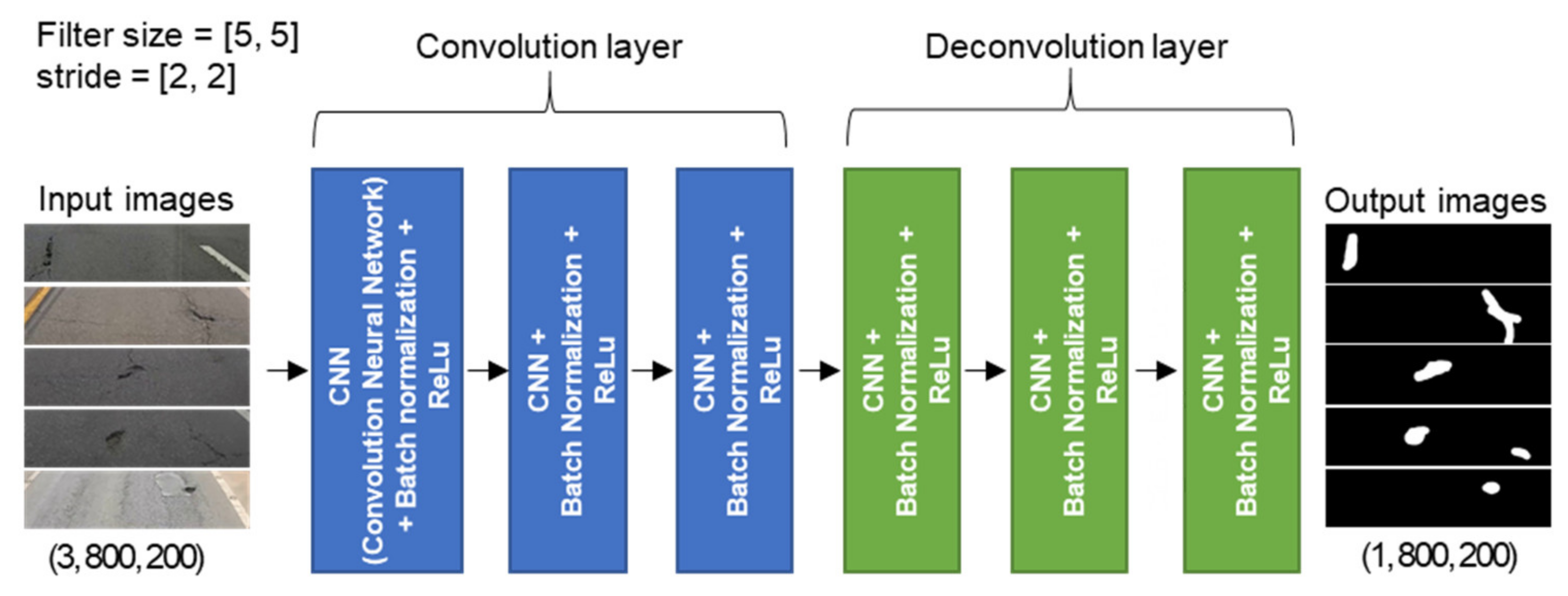

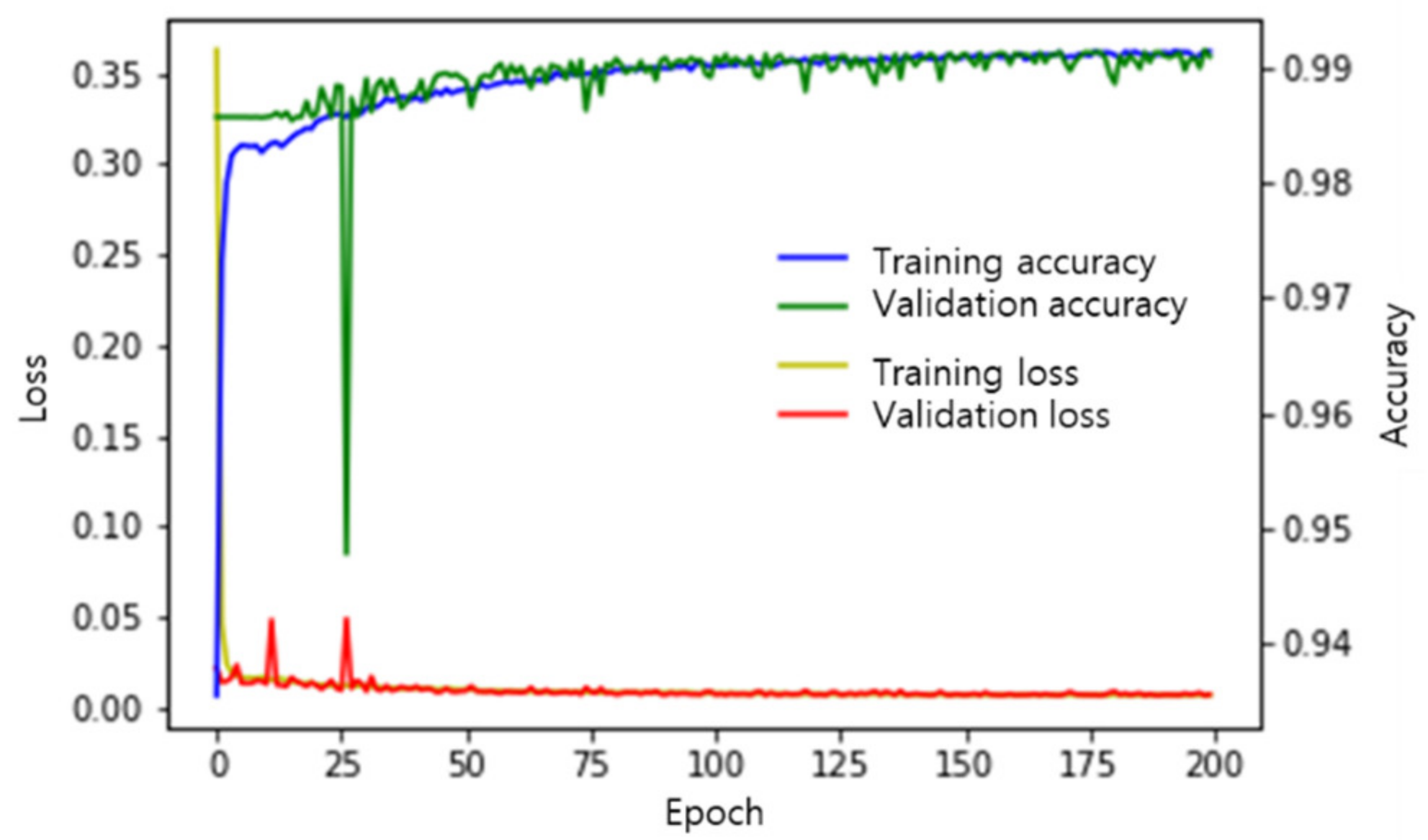

2.2. FCN-Based Road-Surface Anomaly Detection Model

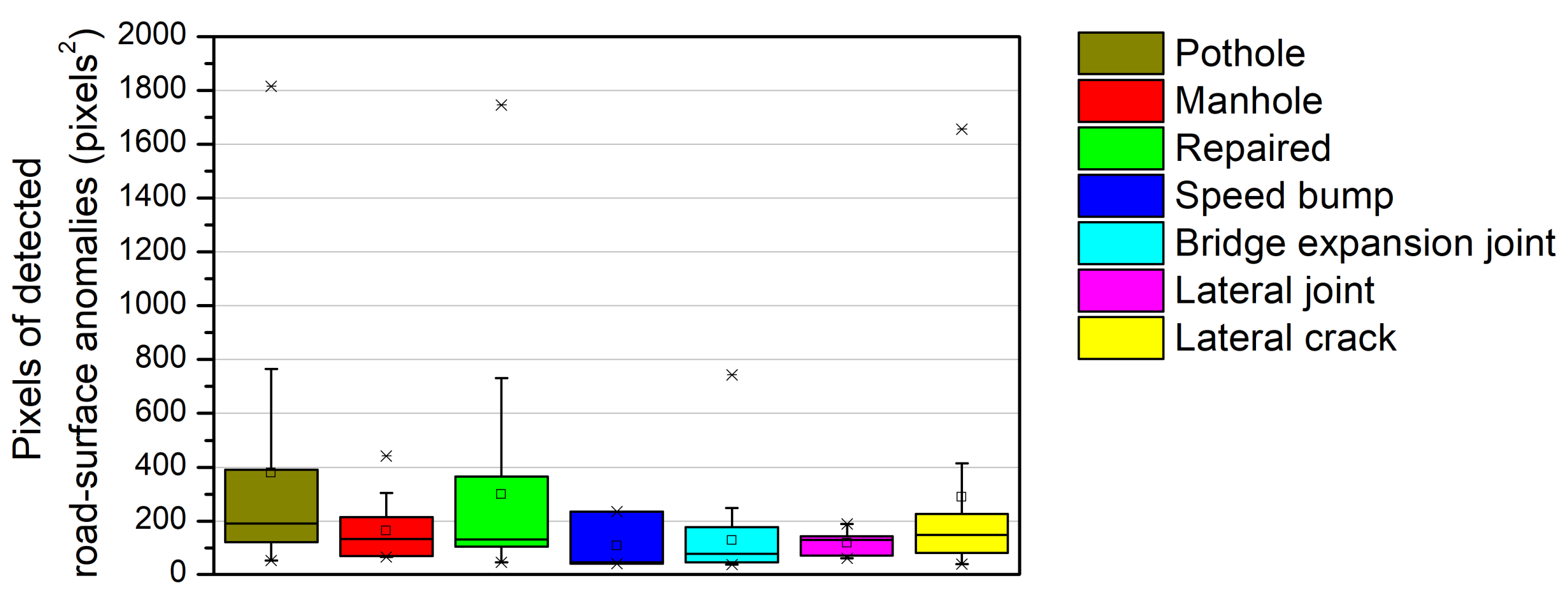

2.3. Configuration of the Collected Data

3. Preprocessing of Acquired Acceleration Data

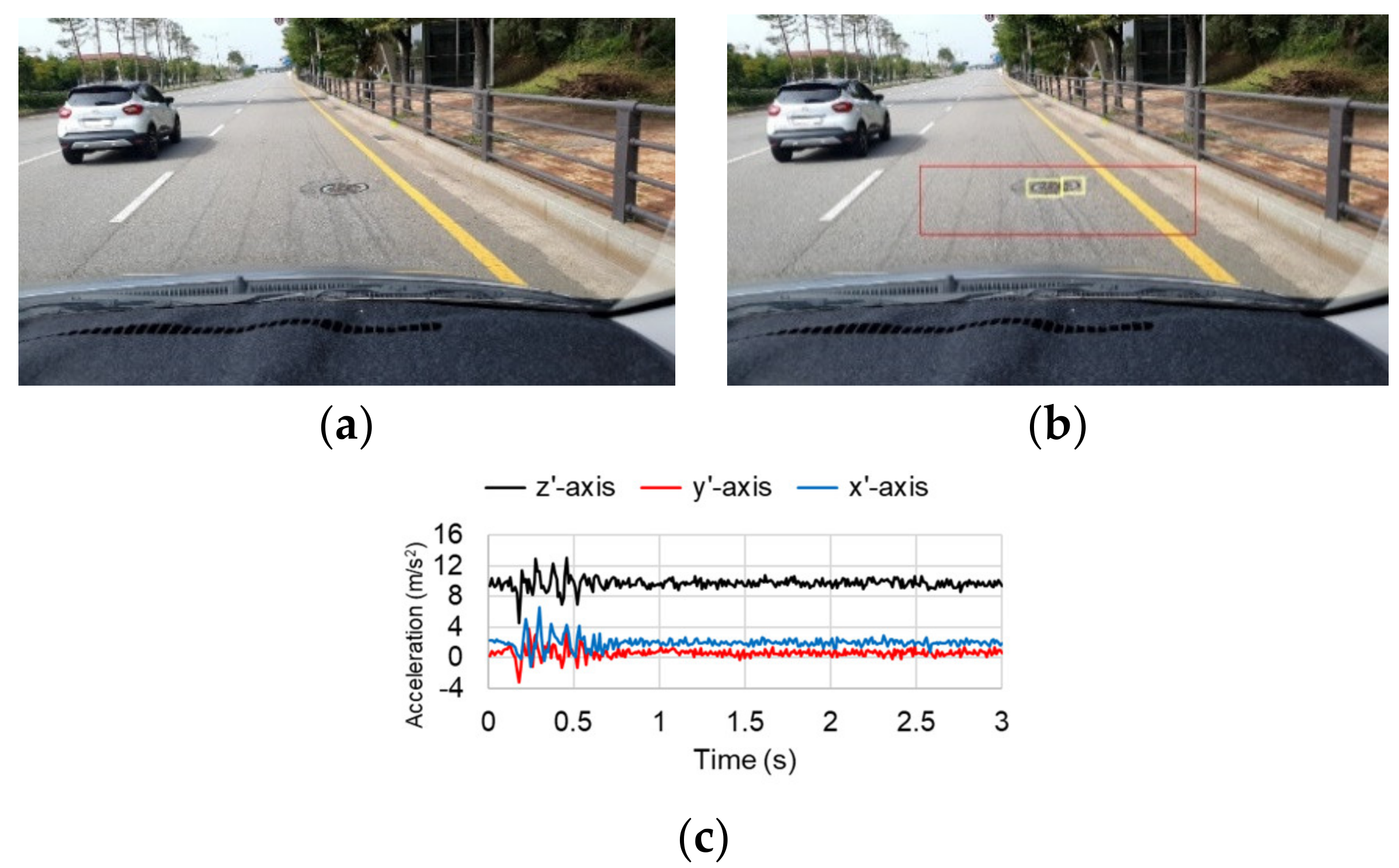

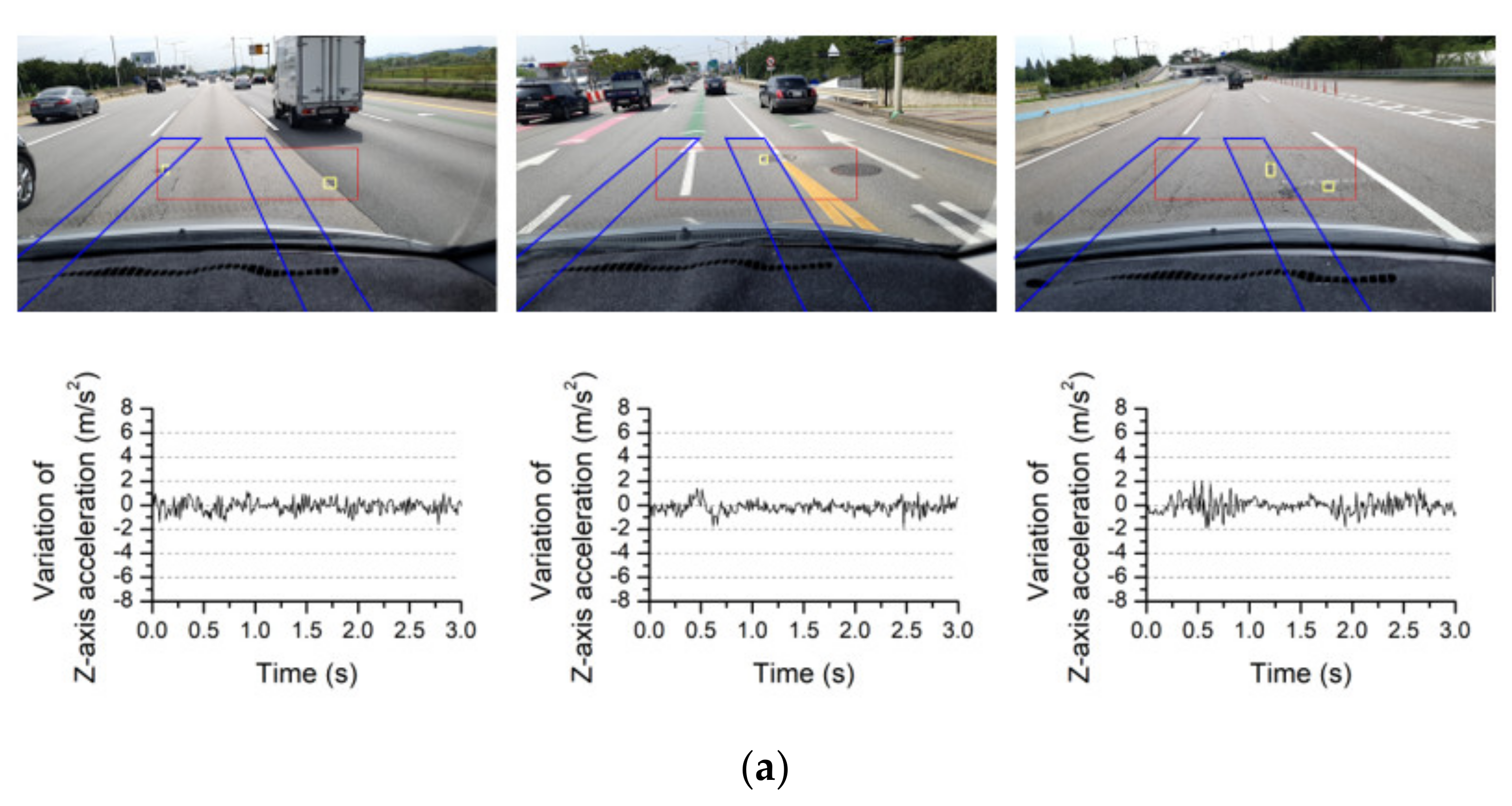

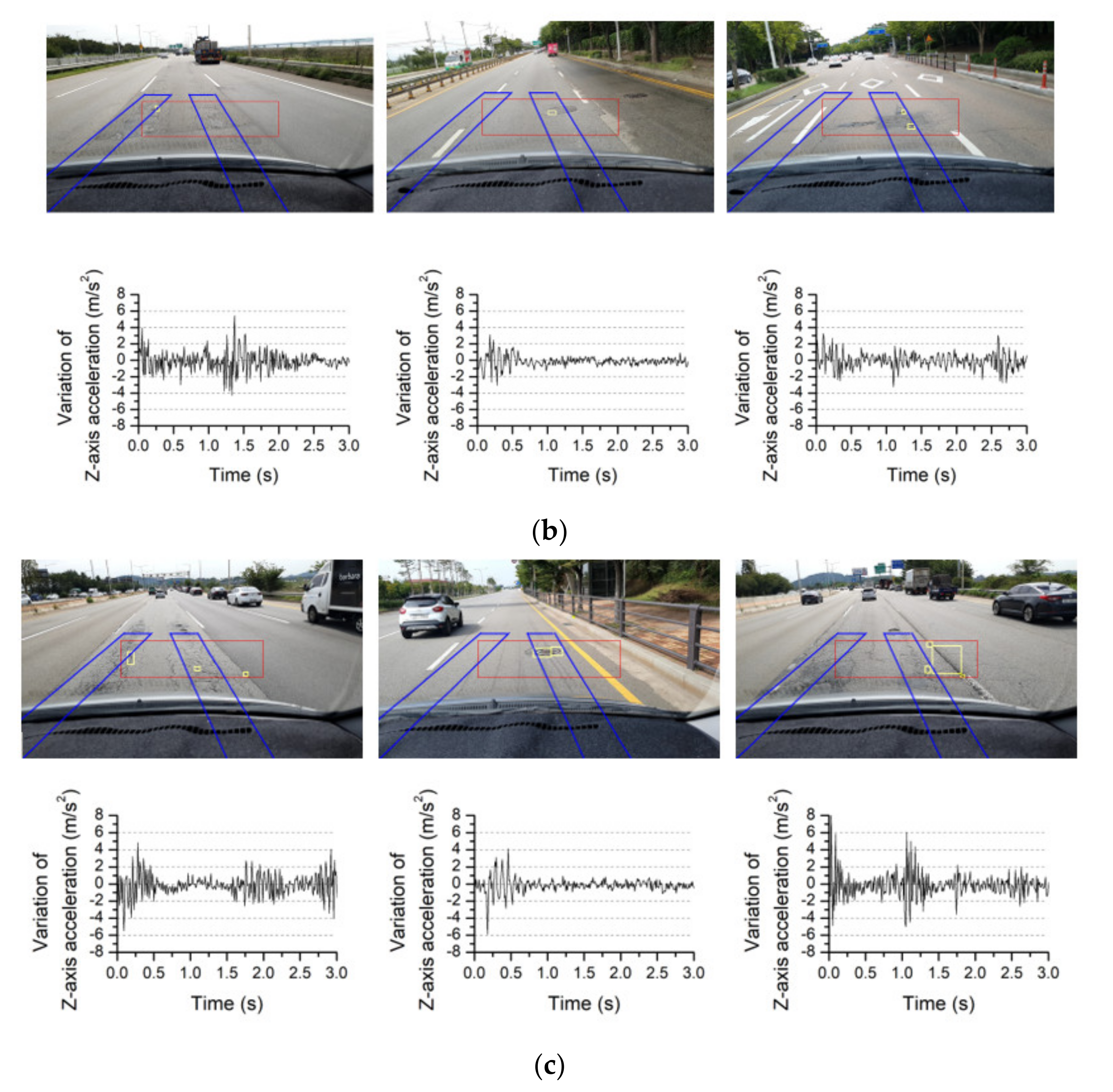

3.1. Typical Images and Collected Acceleration Data

3.2. Conversion of Acceleration Measurements into the Global Coordinate System

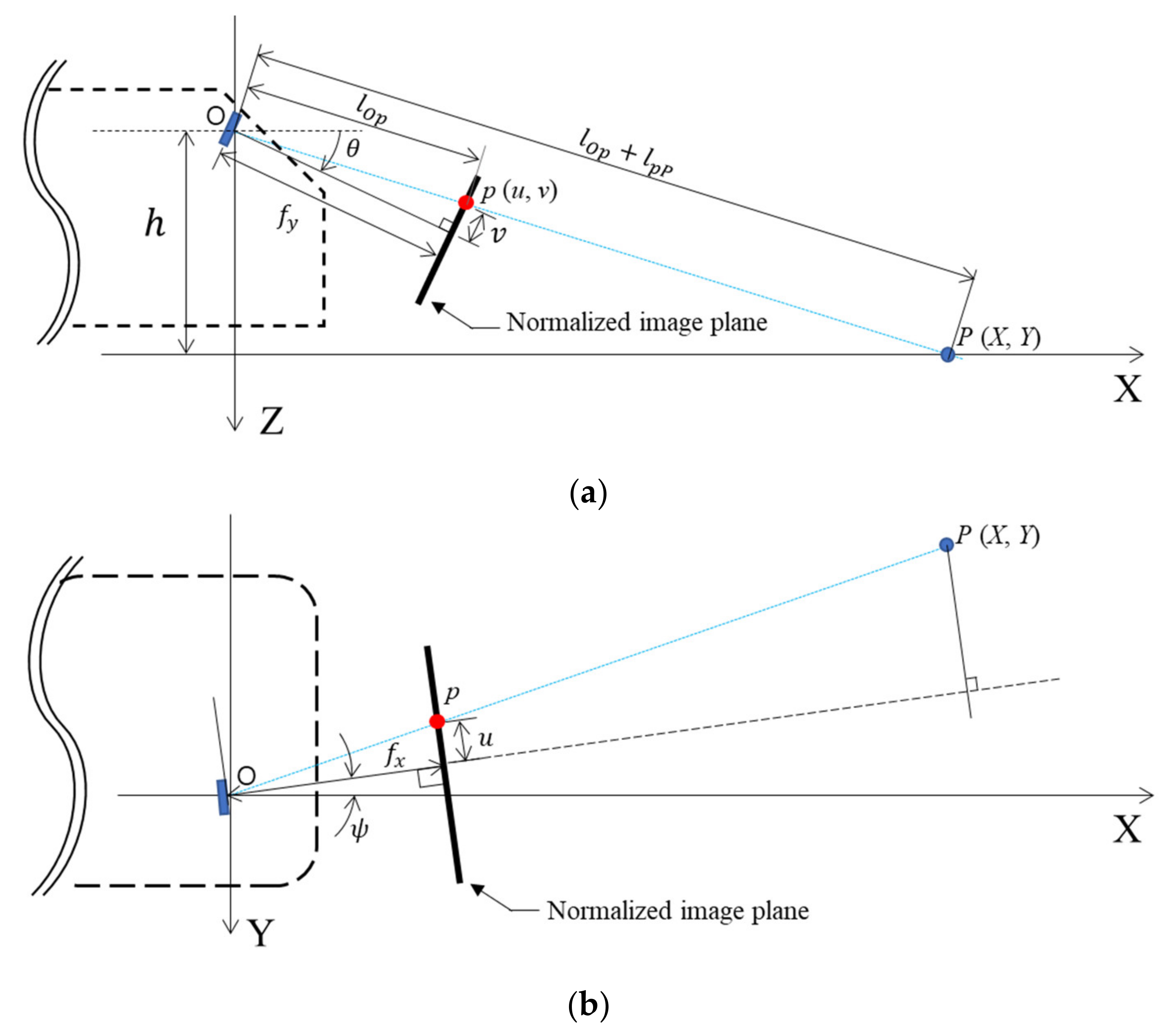

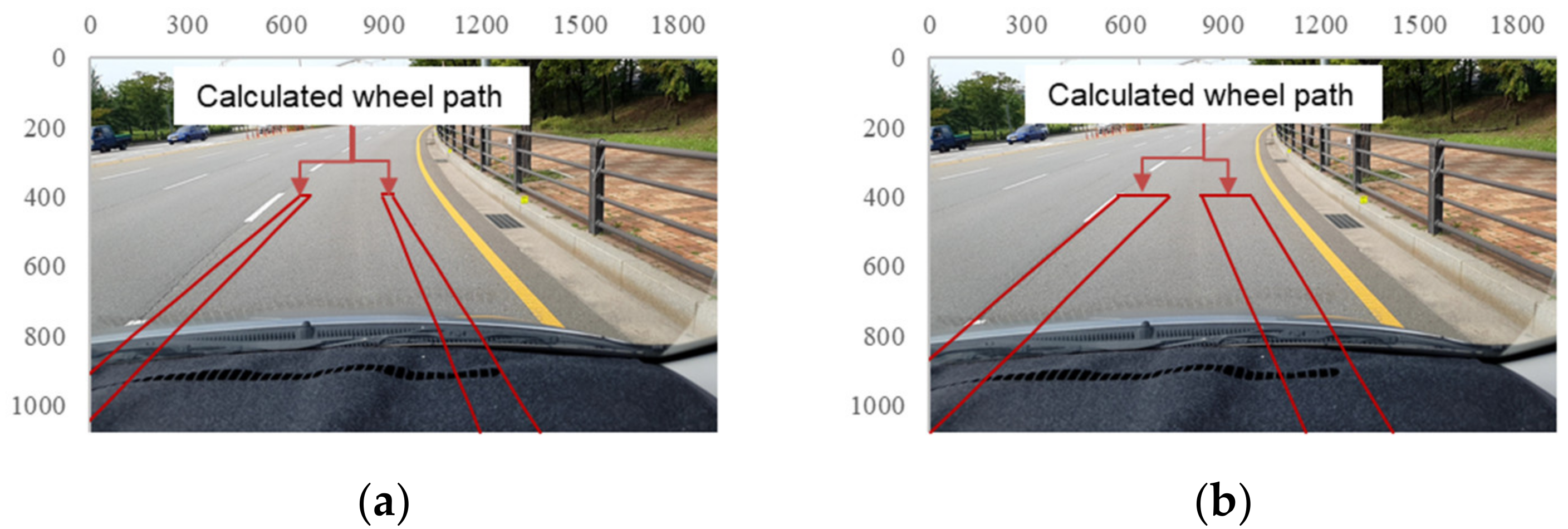

3.3. Estimation of the Vehicle Wheel Paths

4. Acceleration Data Acquisition Results and Analysis

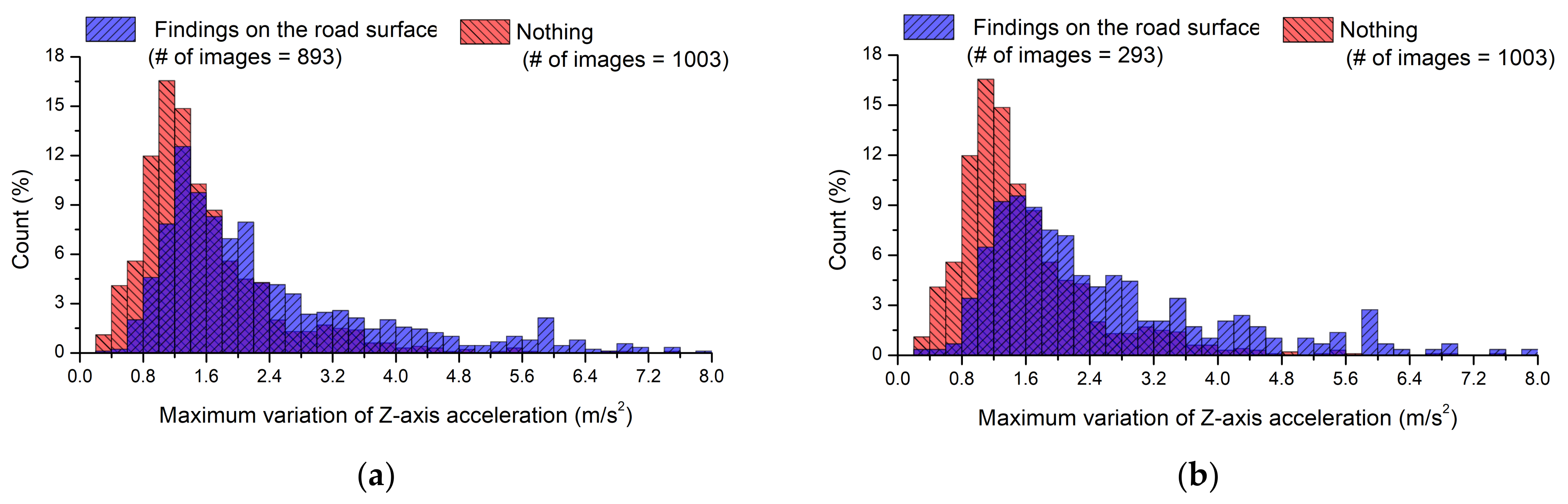

4.1. Histogram of the Maximum Variation of Z-Axis Acceleration According to the Time Needed to Acquire Acceleration Data

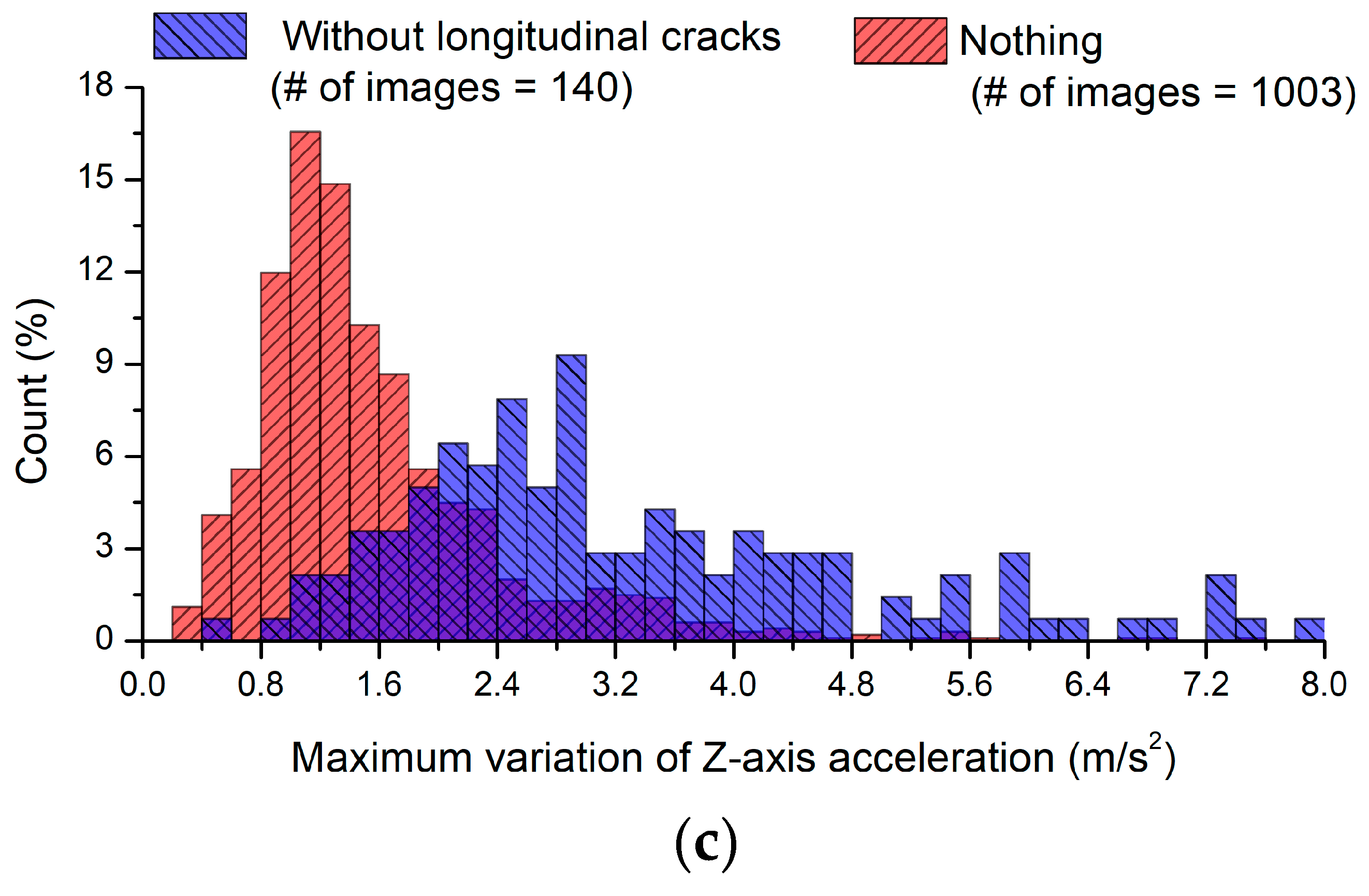

4.2. Analysis of the Histogram for the Variation of Z-Axis Acceleration

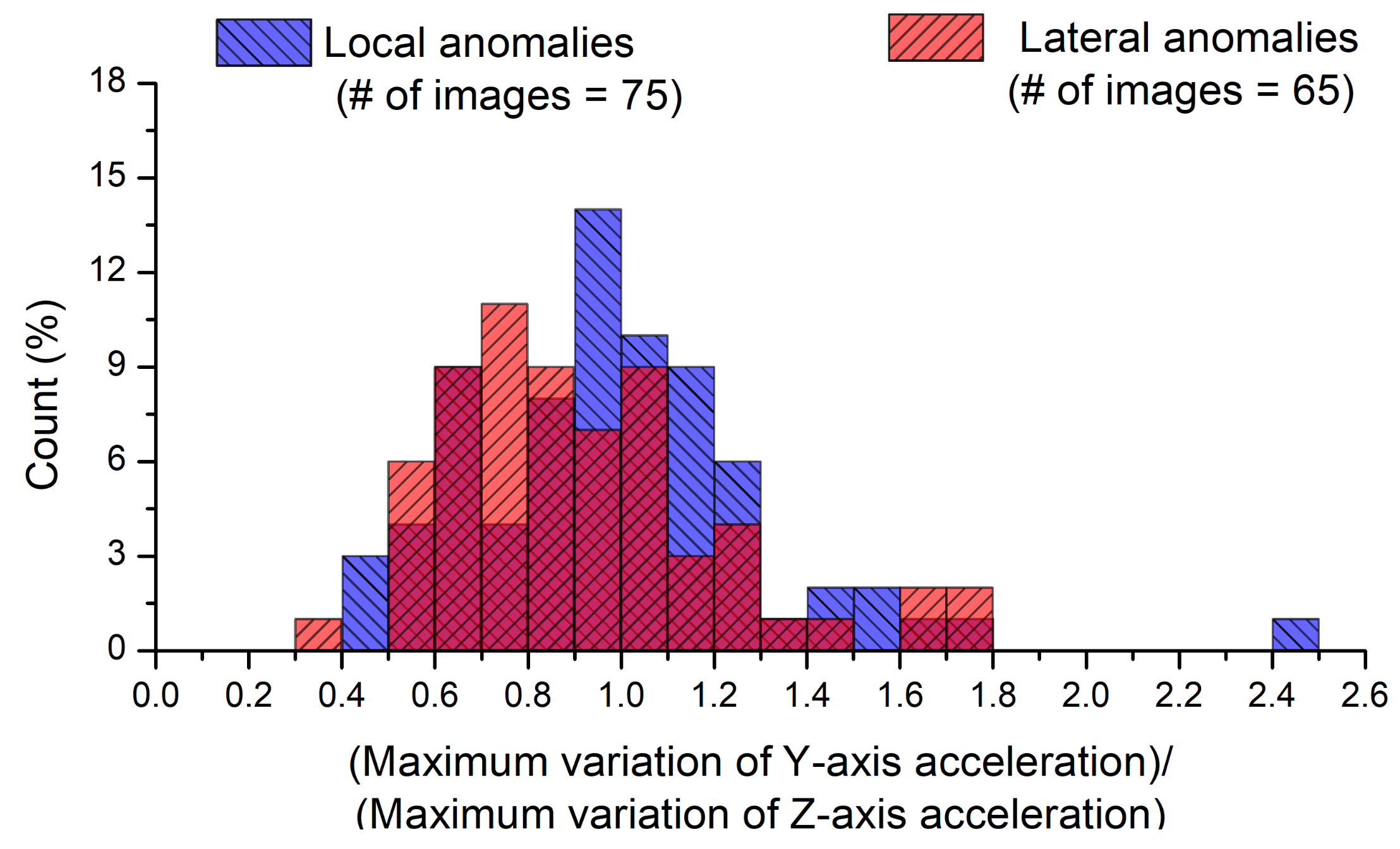

4.3. Histogram Analysis for the Ratio of Y- to Z-Axis Accelerations

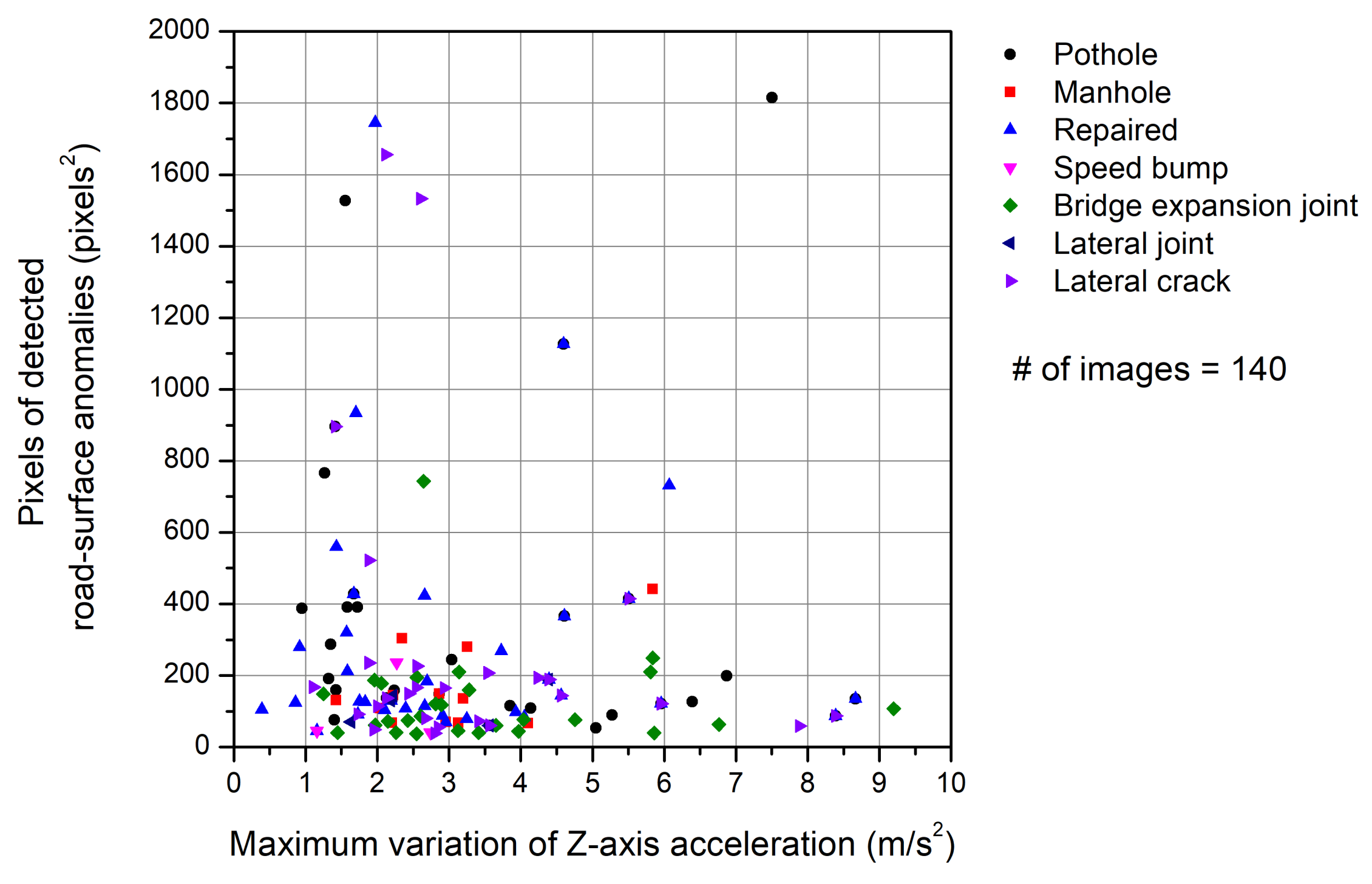

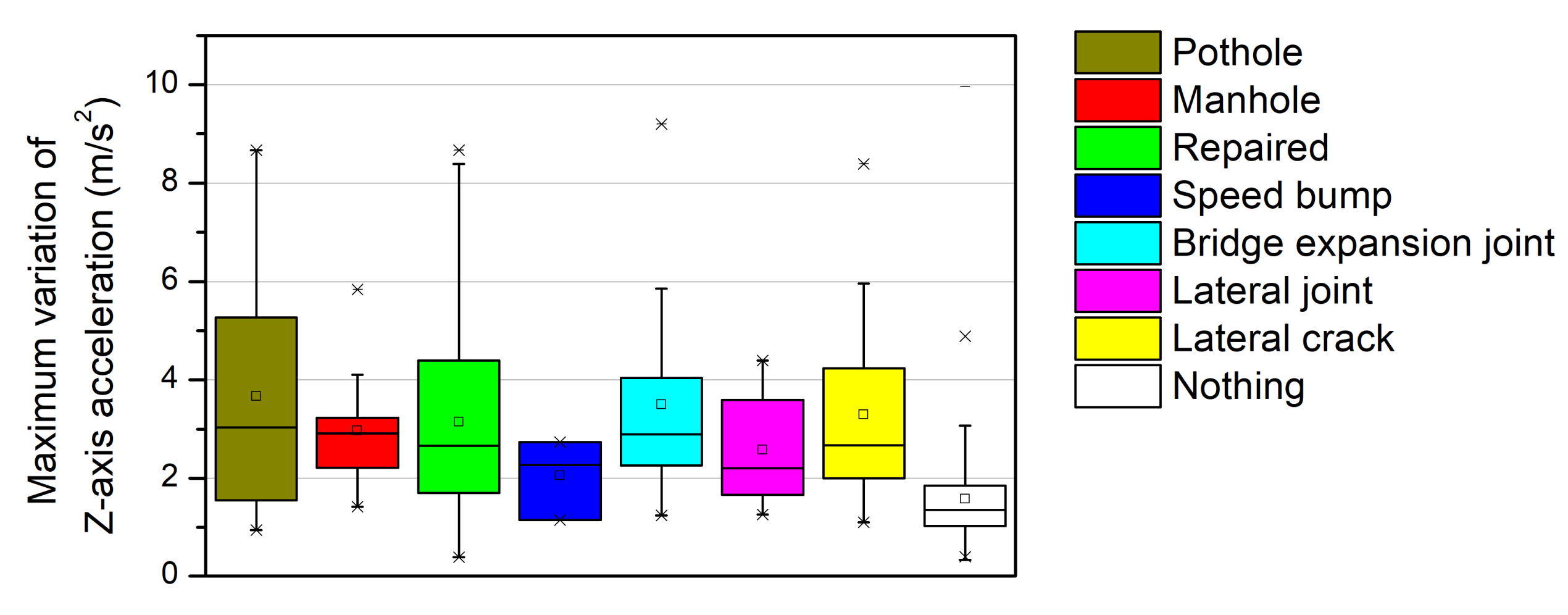

5. Image and Acceleration Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


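| Category | Group | Anomaly type | Count | Group subtotal |
|---|---|---|---|---|
| Findings on the road surface | Local anomalies | Pothole | 50 | 241 |
| Findings on the road surface | Local anomalies | Manhole | 60 | |
| Findings on the road surface | Local anomalies | Repaired | 131 | |
| Findings on the road surface | Continuous anomalies (lateral) | Speed bump | 9 | 195 |
| Findings on the road surface | Continuous anomalies (lateral) | Bridge expansion joint | 44 | |
| Findings on the road surface | Continuous anomalies (lateral) | Lateral joint | 42 | |
| Findings on the road surface | Continuous anomalies (lateral) | Lateral crack | 100 | |
| Findings on the road surface | Continuous anomalies (longitudinal) | Longitudinal joint | 113 | 457 |
| Findings on the road surface | Continuous anomalies (longitudinal) | Longitudinal crack | 344 | |
| Nothing detected on the road surface | | | 1003 | |
| Total | | | 1896 | |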
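
The group subtotals and the grand total in the table above can be cross-checked against the per-type counts. The following is a minimal Python sketch of that check. The counts are copied from the table; the dictionary layout, the variable names, and the assignment of anomaly types to groups (inferred from the column order) are assumptions made for illustration.

```python
# Per-type counts copied from the table. The grouping of types under each
# heading is an assumption inferred from the column order of the table.
counts = {
    "Local anomalies": {"Pothole": 50, "Manhole": 60, "Repaired": 131},
    "Lateral anomalies": {
        "Speed bump": 9,
        "Bridge expansion joint": 44,
        "Lateral joint": 42,
        "Lateral crack": 100,
    },
    "Longitudinal anomalies": {
        "Longitudinal joint": 113,
        "Longitudinal crack": 344,
    },
}

# Subtotals and totals as reported in the table.
reported_subtotals = {
    "Local anomalies": 241,
    "Lateral anomalies": 195,
    "Longitudinal anomalies": 457,
}
nothing_detected = 1003
reported_total = 1896

# Recompute each group subtotal from its per-type counts.
group_sums = {group: sum(types.values()) for group, types in counts.items()}

# The grand total is all findings plus the "nothing detected" samples.
computed_total = sum(group_sums.values()) + nothing_detected

for group, value in group_sums.items():
    status = "matches" if value == reported_subtotals[group] else "differs"
    print(f"{group}: {value} ({status})")

total_status = "matches" if computed_total == reported_total else "differs"
print(f"Total: {computed_total} ({total_status})")
```

Under the assumed grouping, every recomputed subtotal and the grand total agree with the reported values, which supports reading the flattened header rows as a three-level hierarchy.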

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, T.; Chun, C.; Ryu, S.-K. Detection of Road-Surface Anomalies Using a Smartphone Camera and Accelerometer. Sensors 2021, 21, 561. https://doi.org/10.3390/s21020561
