Design of Decision Tree Structure with Improved BPNN Nodes for High-Accuracy Locomotion Mode Recognition Using a Single IMU

Abstract

1. Introduction

2. Proposed Methods

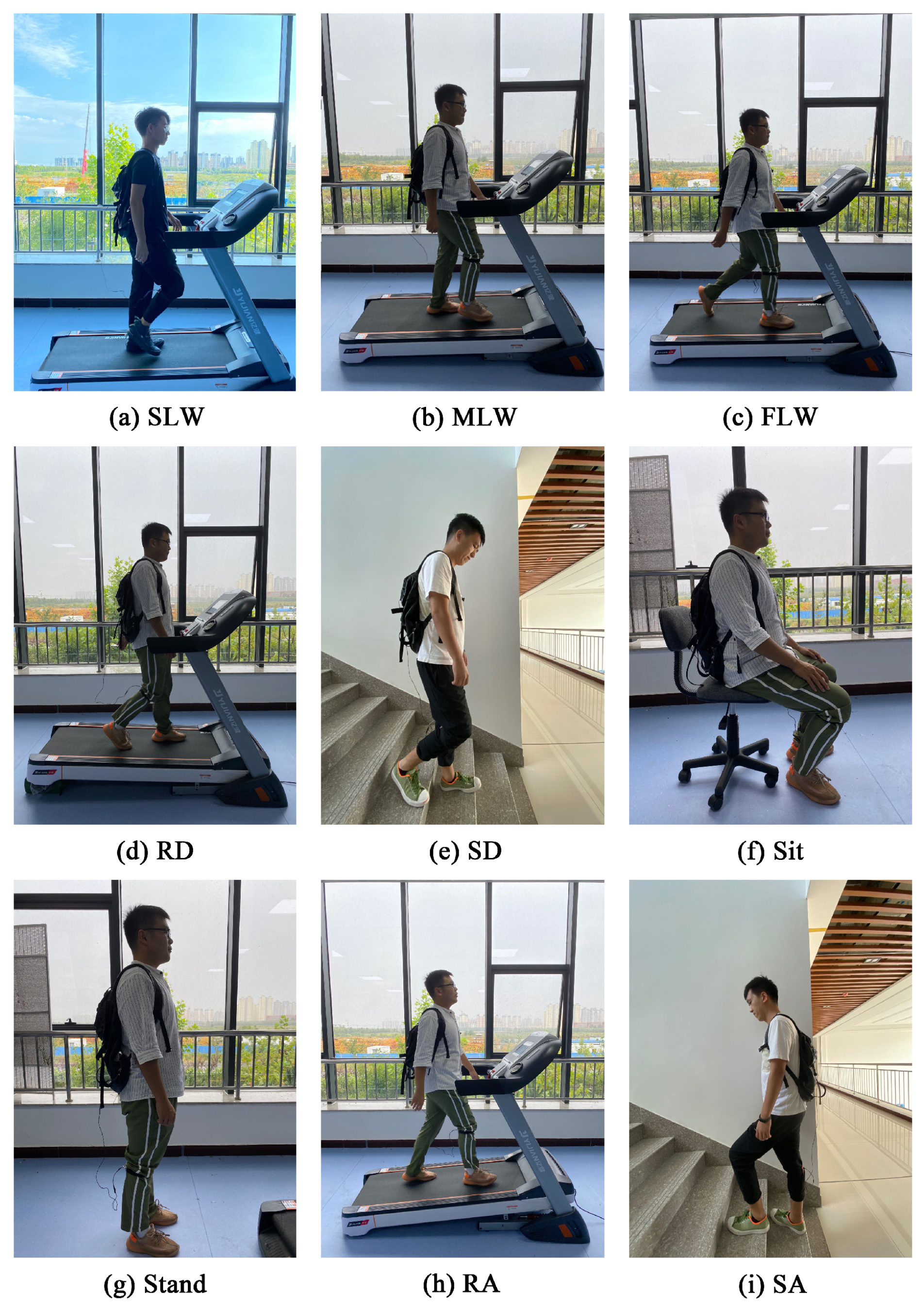

2.1. Definition of Locomotion Modes

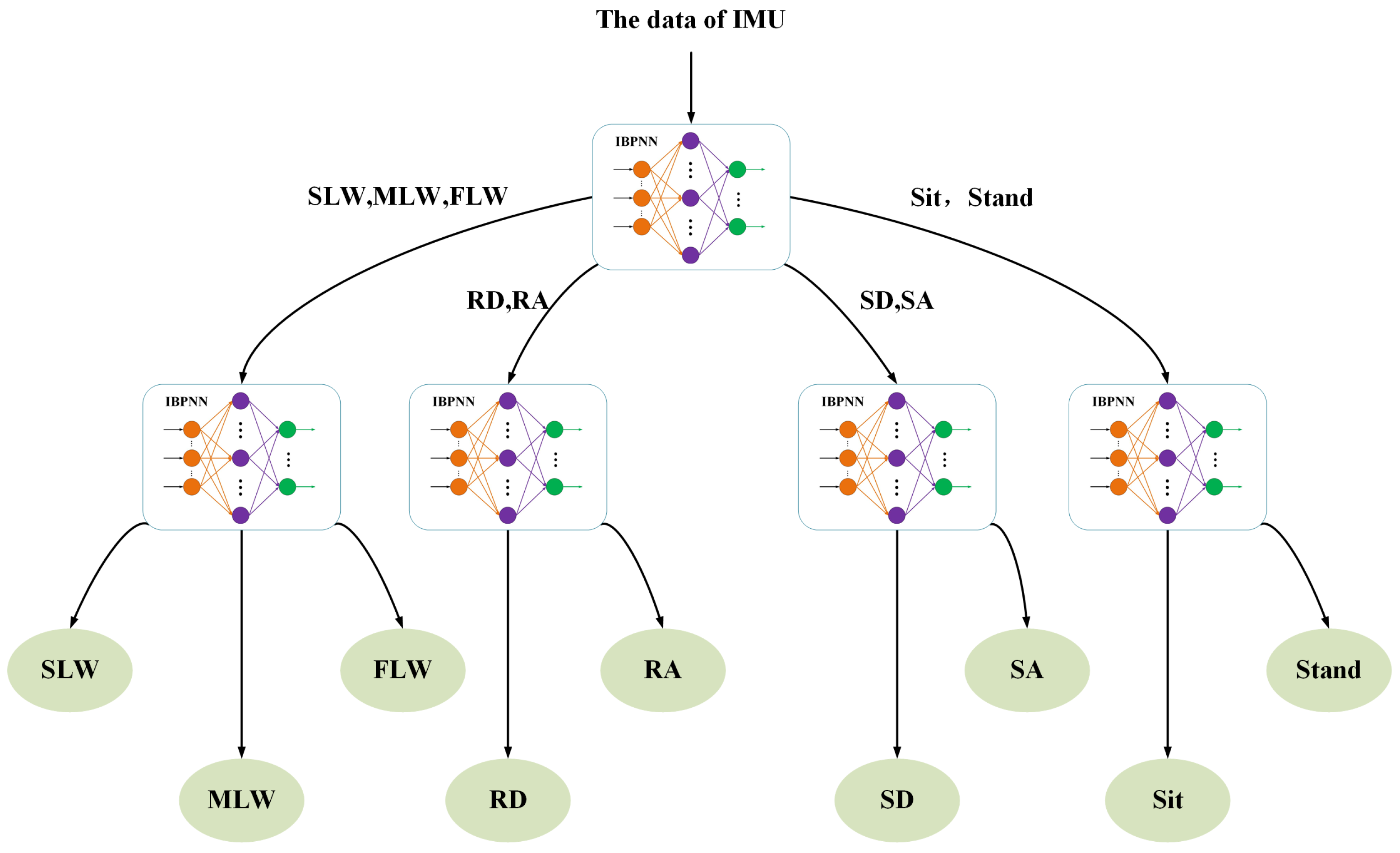

2.2. Locomotion Mode Data-Based DTS Design

2.3. IBPNN for DTS Judgment Node

2.4. ABC for Optimizing Initial Parameters

- Initialize the bee colony. For a D-dimension vector to be optimized, N feasible solutions are generated randomly, where N is the number of collecting and following bees. Each feasible solution is drawn between the minimum and maximum of the D-dimension vector.

- Calculate the fitness function. The fitness function is used to evaluate the quality of nectar sources based on the error function of the IBPNN.

- Conduct a local search at the optimal nectar source locations. The bees replace a nectar source with a new location according to a greedy criterion, which ensures that the evolution process does not regress.

- Each following bee chooses a collecting bee to follow according to the nectar source information it receives, with a probability proportional to fitness.

- If a better nectar source is not found after the collecting bees have finished a finite limit of iterations (i.e., the local search), the first step is repeated. This continues until the total number of iterations (i.e., the global search) is completed, which yields the optimal D-dimension vector.
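The five steps above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation. The function name `abc_minimize`, its parameters, the fitness mapping `1 / (1 + error)`, and the neighbour-based perturbation are assumptions taken from the commonly used basic ABC formulation. The placeholder `error_fn` stands in for the IBPNN error function and is assumed to be non-negative.

```python
import random


def abc_minimize(error_fn, lower, upper, n_sources=10, limit=20,
                 max_iter=100, seed=0):
    """Minimise a non-negative error_fn over a box with a basic ABC loop."""
    rng = random.Random(seed)
    dim = len(lower)

    def random_source():
        # Step 1: draw each component between its minimum and maximum.
        return [lower[j] + rng.random() * (upper[j] - lower[j])
                for j in range(dim)]

    def fitness(x):
        # Step 2: lower error gives higher fitness (assumed mapping).
        return 1.0 / (1.0 + error_fn(x))

    sources = [random_source() for _ in range(n_sources)]
    fits = [fitness(x) for x in sources]
    trials = [0] * n_sources
    best_idx = max(range(n_sources), key=fits.__getitem__)
    best, best_fit = list(sources[best_idx]), fits[best_idx]

    def local_search(i):
        # Step 3: perturb one coordinate relative to a random neighbour,
        # then keep the candidate only if it is fitter (greedy selection).
        k = rng.choice([s for s in range(n_sources) if s != i])
        j = rng.randrange(dim)
        cand = list(sources[i])
        cand[j] += rng.uniform(-1.0, 1.0) * (sources[i][j] - sources[k][j])
        cand[j] = min(max(cand[j], lower[j]), upper[j])
        f = fitness(cand)
        if f > fits[i]:
            sources[i], fits[i], trials[i] = cand, f, 0
        else:
            trials[i] += 1

    for _ in range(max_iter):
        # Collecting bees: one local search per nectar source.
        for i in range(n_sources):
            local_search(i)
        # Step 4: following bees pick sources with probability
        # proportional to fitness.
        for i in rng.choices(range(n_sources), weights=fits, k=n_sources):
            local_search(i)
        # Remember the best source seen so far.
        for i in range(n_sources):
            if fits[i] > best_fit:
                best, best_fit = list(sources[i]), fits[i]
        # Step 5: re-initialise sources that did not improve within `limit`.
        for i in range(n_sources):
            if trials[i] > limit:
                sources[i] = random_source()
                fits[i] = fitness(sources[i])
                trials[i] = 0

    return best, error_fn(best)
```

In the setting described here, the D-dimension vector would hold the initial parameters of an IBPNN and `error_fn` would train or evaluate the network for a given vector. How the parameters are packed into the vector is not specified in this section, so it is left to the caller.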

3. Experiments

3.1. Experimental Protocol

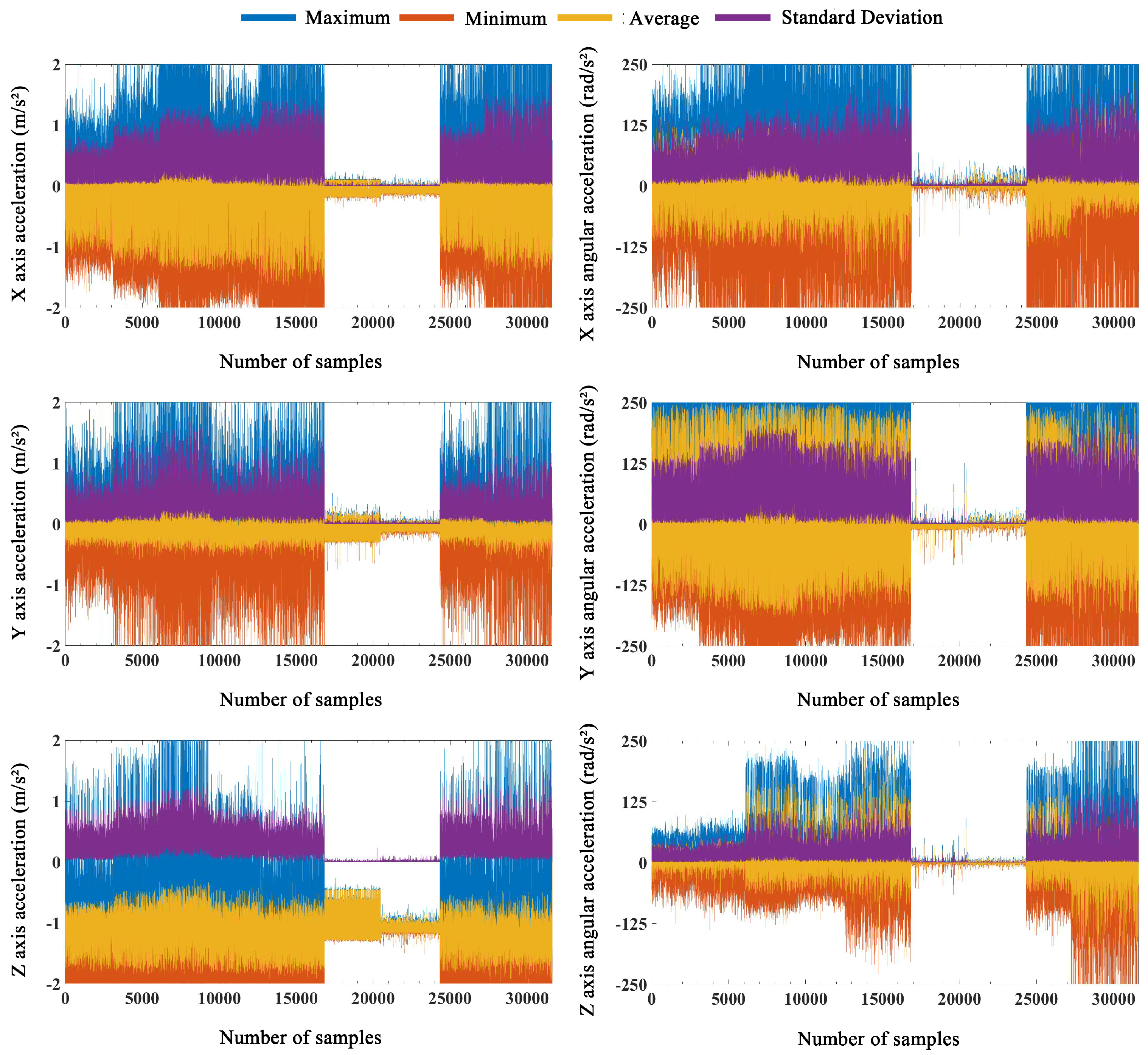

3.2. Data Preprocessing

3.3. Classification Model

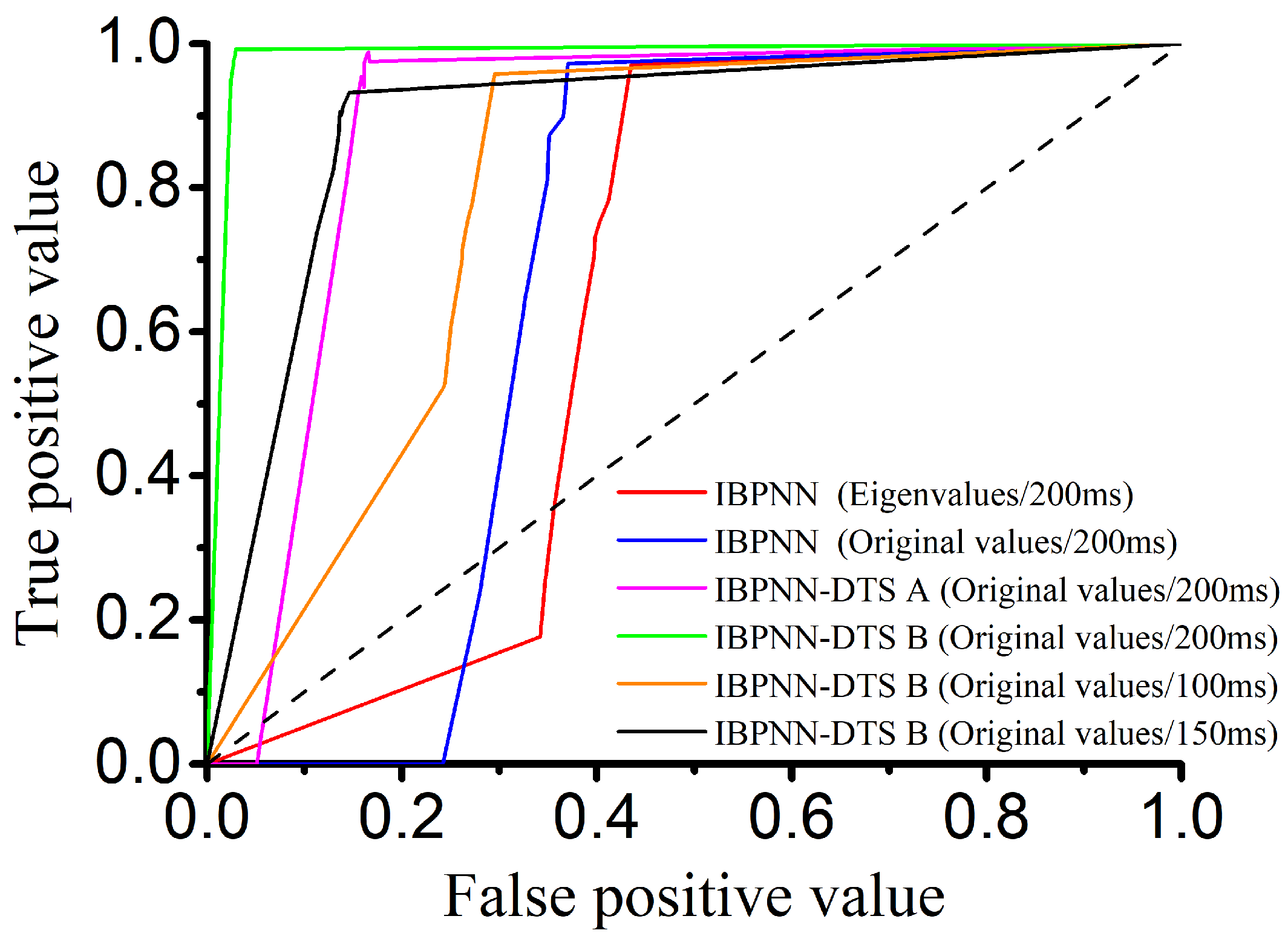

- IBPNN: Here, a single IBPNN is employed to identify all locomotion modes simultaneously. The input to the model is the original values and eigenvalues from the 200-ms time window, and the output is the nine locomotion modes. This type of model, which identifies all modes at once, has been applied in most locomotion mode recognition studies and has achieved good results [2,34,36].

- IBPNN-DTS A: Here, DTS A is employed with five IBPNNs as nodes to identify the nine locomotion modes hierarchically (Figure 1). The input to the model is the original values in the 200-ms time window, and the five IBPNNs can be trained simultaneously based on these original values. The first node divides the nine locomotion modes into four categories (a: SLW, MLW, FLW; b: RD, RA; c: SD, SA; d: sit, stand), and the other four nodes divide these four categories into the nine specific locomotion modes.

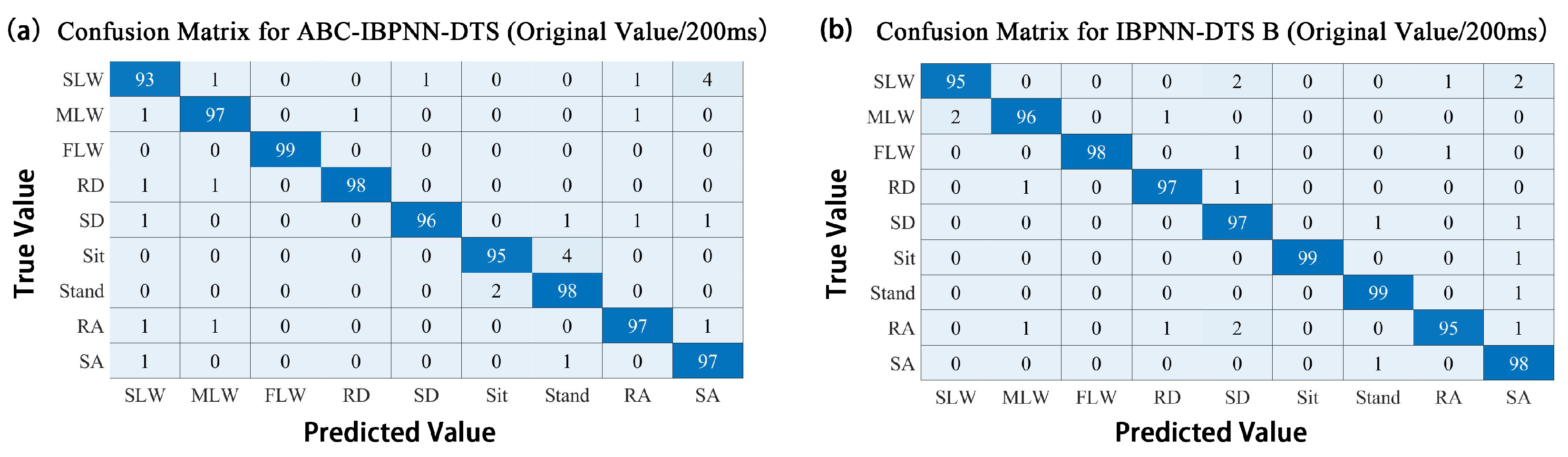

- IBPNN-DTS B: Here, DTS B is used with five IBPNNs as nodes to identify the nine locomotion modes hierarchically (Figure 2). The input to the model is the original values in the 100-ms, 150-ms, and 200-ms time windows, and the five IBPNNs can be trained simultaneously based on the original values, as in DTS A. The first node divides the nine locomotion modes into two categories (a: sit, stand; b: others). The second node distinguishes the sit and stand modes, and the third node divides class b into three categories (c: SD; d: SA; e: others). The fourth node divides class e into three categories (f: RA; g: RD; h: others), and the final node distinguishes SLW, MLW, and FLW within class h.

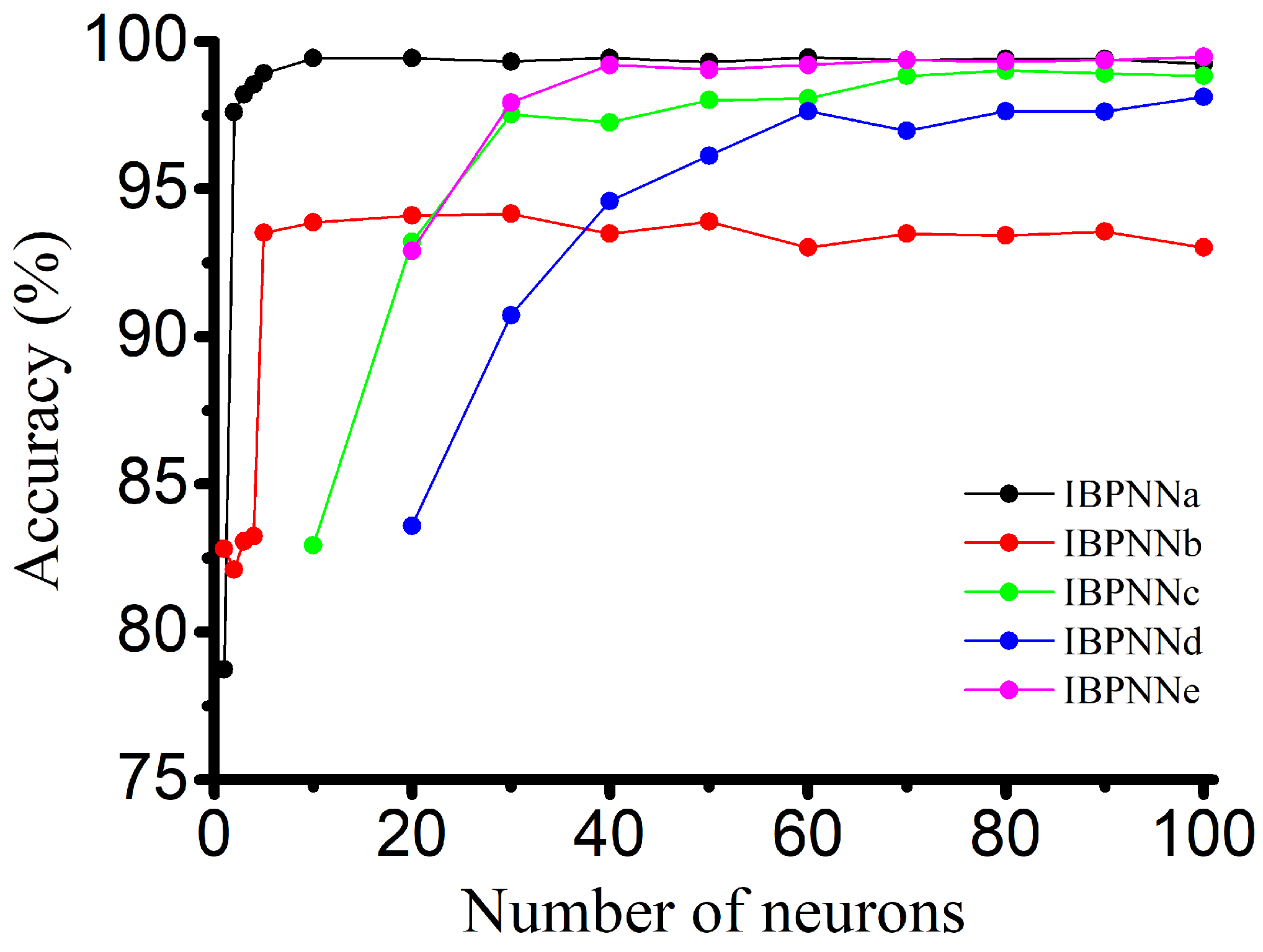
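The hierarchical routing of DTS B can be illustrated with a small lookup table that sends each window from one node classifier to the next. This is only a sketch: the node names follow the IBPNNa to IBPNNe labels used in the node table of this paper, while the branch labels (`"sit_or_stand"`, `"others"`), the `classify` function, and the idea of passing each node a plain callable are hypothetical choices made for illustration. The per-node time windows (100 ms, 150 ms, 200 ms) are not modeled.

```python
# Each node maps the label predicted by its classifier either to the next
# node (a key of DTS_B) or to a final locomotion mode (any other string).
DTS_B = {
    "IBPNNa": {"sit_or_stand": "IBPNNb", "others": "IBPNNc"},
    "IBPNNb": {"sit": "Sit", "stand": "Stand"},
    "IBPNNc": {"SD": "SD", "SA": "SA", "others": "IBPNNd"},
    "IBPNNd": {"RA": "RA", "RD": "RD", "others": "IBPNNe"},
    "IBPNNe": {"SLW": "SLW", "MLW": "MLW", "FLW": "FLW"},
}


def classify(window, classifiers, tree=DTS_B, root="IBPNNa"):
    """Route one IMU window through the tree until a final mode is reached.

    `classifiers` maps each node name to a callable that takes the window
    and returns one of that node's branch labels.
    """
    node = root
    while node in tree:
        label = classifiers[node](window)
        node = tree[node][label]
    return node
```

With trained networks, each callable would wrap the forward pass of one IBPNN and an arg-max over its outputs. A walking window reaches the final node only after the first, third, and fourth nodes have all answered "others", whereas a sitting window is resolved after two nodes.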

3.4. Structure and Parameter Optimization

4. Results and Discussion

4.1. Input to Classification Model

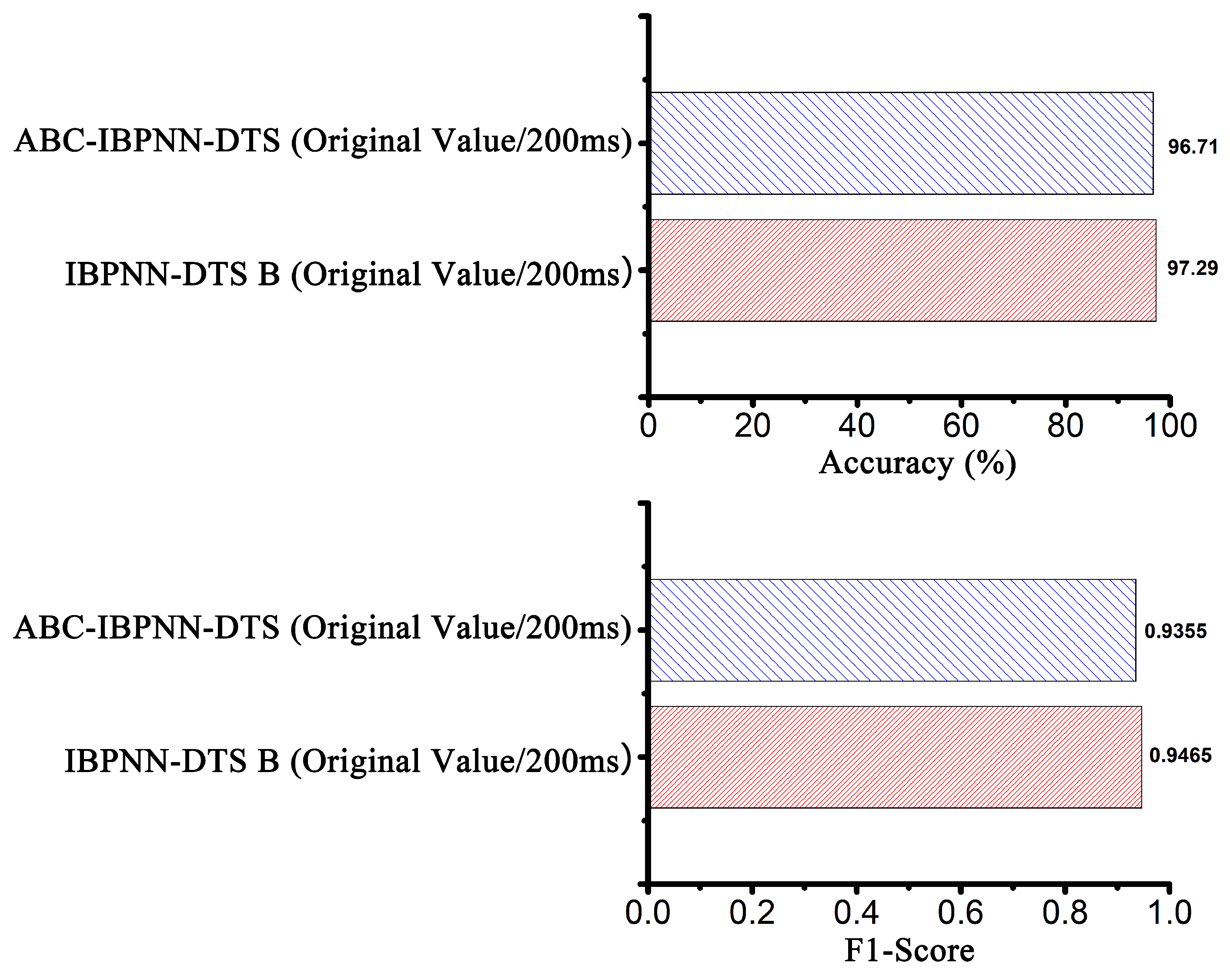

4.2. Comparison of Classification Models

4.3. Time Window Selection

4.4. Structure and Parameter Optimization Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liu, L.; Yang, P.; Liu, Z.J.; Song, Y.M. Human Motion Intent Recognition Based on Kernel Principal Component Analysis and Relevance Vector Machine. Robot 2017, 39, 661–669.

- Yu, Y.P.; Sun, L.N.; Zhang, F.F.; Zhang, J.F. sEMG Pattern Recognition Based on Multi Feature Fusion of Wavelet Transform. Chin. J. Sens. Actuators 2016, 29, 512–518.

- Wu, J.F.; Sun, S.Q. Research on Classification Algorithm of Reduced Support Vector Machine for Low Limb Movement Recognition. China Mech. Eng. 2011, 4, 433–438.

- Tong, L.N.; Hou, Z.G.; Peng, L.; Wang, W.Q.; Chen, Y.X.; Tan, M. Multi-channel sEMG Time Series Analysis Based Human Motion Recognition Method. Acta Autom. Sin. 2014, 40, 810–821.

- Kim, D.H.; Cho, C.Y.; Ryu, J. Real-Time Locomotion Mode Recognition Employing Correlation Feature Analysis Using EMG Pattern. ETRI J. 2014, 36, 99–105.

- Young, A.J.; Simon, A.M.; Hargrove, L.J. A training method for locomotion mode prediction using powered lower limb prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 671–677.

- Young, A.J.; Simon, A.M.; Fey, N.P.; Hargrove, L.J. Intent recognition in a powered lower limb prosthesis using time history information. Ann. Biomed. Eng. 2014, 42, 631–641.

- Liu, Z.J.; Lin, W.; Geng, Y.L.; Yang, P. Intent pattern recognition of lower-limb motion based on mechanical sensors. IEEE/CAA J. Autom. Sin. 2017, 4, 651–660.

- Su, B.Y.; Wang, J.; Liu, S.Q.; Sheng, M.; Jiang, J.; Xiang, K. A CNN-based method for intent recognition using inertial measurement units and intelligent lower limb prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1032–1042.

- Liu, M.; Zhang, F.; Huang, H. An adaptive classification strategy for reliable locomotion mode recognition. Sensors 2017, 17, 2020.

- Huang, H.; Zhang, F.; Hargrove, L.J.; Dou, Z.; Rogers, D.R.; Englehart, K.B. Continuous locomotion-mode identification for prosthetic legs based on neuromuscular-mechanical fusion. IEEE Trans. Biomed. Eng. 2011, 58, 2867–2875.

- Liu, L.; Yang, P.; Liu, Z. Lower limb locomotion modes recognition based on multiple-source information and general regression neural network. Robot 2015, 37, 310–317.

- Liu, M.; Ding, W.; Huang, H. Development of an environment-aware locomotion mode recognition system for powered lower limb prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 434–443.

- Du, L.; Zhang, F.; Liu, M.; Huang, H. Toward design of an environment-aware adaptive locomotion-mode-recognition system. IEEE Trans. Biomed. Eng. 2012, 59, 2716–2725.

- Khademi, G.; Simon, D. Toward Minimal-Sensing Locomotion Mode Recognition for a Powered Knee-Ankle Prosthesis. IEEE Trans. Biomed. Eng. 2020, 1, 99.

- Martinez-Hernandez, U.; Mahmood, I.; Dehghani-Sanij, A.A. Probabilistic Locomotion Mode Recognition with Wearable Sensors. In Converging Clinical and Engineering Research on Neurorehabilitation II; Springer: Cham, Switzerland, 2017; Volume 15, pp. 1037–1042.

- Feng, Y.G.; Chen, W.W.; Wang, Q.N. A strain gauge based locomotion mode recognition method using convolutional neural network. Adv. Robot. 2019, 33, 254–263.

- Zhao, L.N.; Liu, Z.J.; Gou, B.; Yang, P. Gait Pre-recognition of active Lower Limb Prosthesis Based on Hidden Markov Model. Robot 2014, 36, 337–341.

- Su, B.Y.; Wang, J.; Liu, S.Q.; Sheng, M.; Xiang, K. An Improved Motion Intent Recognition Method for Intelligent Lower Limb Prosthesis Driven by Inertial Motion Capture Data. Acta Autom. Sin. 2020, 46, 1517–1530.

- Liu, S.Q. Motion intent Recognition Of Intelligent Lower Limb Prosthetics Based On LSTM Deep Learning Model. J. Hefei Univ. (Compr. Ed.) 2019, 36, 96–104.

- Sheng, M.; Liu, S.Q.; Su, B.Y. Motion intent recognition of intelligent lower limb prosthesis based on GMM-HMM. Chin. J. Sci. Instrum. 2019, 40, 169–178.

- Zheng, E.; Wang, Q. Noncontact capacitive sensing-based locomotion transition recognition for amputees with robotic transtibial prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 161–170.

- Ali, R.; Atallah, L.; Lo, B.; Yang, G. Detection and Analysis of Transitional Activity in Manifold Space. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 119–128.

- Parri, A.; Yuan, K.B.; Marconi, D.; Yan, T.F.; Crea, S.; Munih, M.; Lova, R.M.; Vitiello, N.; Wang, Q.N. Real-Time Hybrid Locomotion Mode Recognition for Lower Limb Wearable Robots. IEEE/ASME Trans. Mechatron. 2017, 22, 2480–2491.

- Young, A.; Hargrove, L. A classification method for user-independent intent recognition for transfemoral amputees using powered lower limb prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 217–225.

- Stolyarov, R.; Burnett, G.; Herr, H. Translational motion tracking of leg joints for enhanced prediction of walking tasks. IEEE Trans. Biomed. Eng. 2018, 65, 763–769.

- Bartlett, H.L.; Goldfarb, M. A phase variable approach for IMU-based locomotion activity recognition. IEEE Trans. Biomed. Eng. 2018, 65, 1330–1338.

- Gao, F.; Liu, G.; Liang, F.; Liao, W. IMU-Based locomotion mode identification for transtibial prostheses, orthoses, and exoskeletons. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1334–1343.

- Min, S.; Lee, B.; Yoon, S. Deep learning in bioinformatics. Brief. Bioinform. 2017, 18, 851–869.

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

- Xue, Y.; Hu, Z.H.; Jing, Y.K.; Wu, H.Y.; Li, X.Y.; Wang, J.M.; Seybert, A.; Xie, X.Q.; Lv, Q.Z. Efficacy Assessment of Ticagrelor Versus Clopidogrel in Chinese Patients with Acute Coronary Syndrome Undergoing Percutaneous Coronary Intervention by Data Mining and Machine-Learning Decision Tree Approaches. J. Clin. Pharm. Ther. 2020, 45, 1076–1086.

- Billah, Q.M.; Rahman, L.; Adan, J.; Kamal, A.H.M.M.; Islam, M.K.; Shahnaz, C.; Subhana, A. Design of Intent Recognition System in a Prosthetic Leg for Automatic Switching of Locomotion Modes. In Proceedings of the TENCON 2019—2019 IEEE Region 10 Conference (TENCON), Kerala, India, 17–20 October 2019; pp. 1638–1642.

- Chen, B.; Wang, X.; Huang, Y.; Wei, K.; Wang, Q. A foot-wearable interface for locomotion mode recognition based on discrete contact force distribution. Mechatronics 2015, 32, 12–21.

- Xu, D.F.; Wang, Q.N. BP Neural Network Based On-board Training for Real-time Locomotion Mode Recognition in Robotic Transtibial Prostheses. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macao, China, 3–8 November 2019; pp. 8152–8157.

- Mai, J.; Chen, W.; Zhang, S.; Xu, D.; Wang, Q. Performance analysis of hardware acceleration for locomotion mode recognition in robotic prosthetic control. In Proceedings of the IEEE International Conference on Cyborg and Bionic Systems, Shenzhen, China, 25–27 October 2018; pp. 607–611.

- Gong, C.; Xu, D.; Zhou, Z.; Vitiello, N.; Wang, Q. BPNN-based real-time locomotion mode recognition for an active pelvis orthosis with different assistive strategies. Int. J. Hum. Robot. 2020, 17, 1–18.

- Song, X.Y.; Zhao, M.; Xing, S.Y. A multi-strategy fusion artificial bee colony algorithm with small population. Expert Syst. Appl. 2020, 142, 112921.

- Chen, C.S.; Huang, J.F.; Huang, N.C.; Chen, K.S. MS Location Estimation Based on the Artificial Bee Colony Algorithm. Sensors 2020, 20, 5597.

- Gao, H.; Li, H.L.; Liu, Y.; Lu, H.M.; Kim, H.; Pun, C.M. High-quality-guided artificial bee colony algorithm for designing loudspeaker. Neural Comput. Appl. 2020, 32, 4473–4480.

- Merkle, D.; Middendorf, M.; Schmeck, H. Ant colony optimization for resource-constrained project scheduling. IEEE Trans. Evol. Comput. 2002, 6, 333–346.

- Kannan, S.; Slochanal, S.M.R.; Subbaraj, P.; Padhy, N.P. Application of particle swarm optimization technique and its variants to generation expansion planning problem. Electr. Power Syst. Res. 2004, 70, 203–210.

- Lee, J.T.; Bartlett, H.L.; Goldfarb, M. Design of a Semipowered Stance-Control Swing-Assist Transfemoral Prosthesis. IEEE/ASME Trans. Mechatron. 2020, 25, 175–184.

| Locomotion Modes | Velocity | Platform |
|---|---|---|
| SLW | 3 km/h | Treadmill |
| MLW | 4.2 km/h | Treadmill |
| FLW | 6 km/h | Treadmill |
| RD | 4.2 km/h | 9° ramp on treadmill |
| SD | Slow | Stairs |
| Sit | Slow | Chair |
| Stand | Slow | Chair |
| RA | 4.2 km/h | 9° ramp on treadmill |
| SA | Slow | Stairs |

| IBPNN | Function | Number of Neurons |
|---|---|---|
| IBPNNa | Distinguish between (sit, stand) and (others) | 10 |
| IBPNNb | Distinguish between sit and stand | 30 |
| IBPNNc | Distinguish between SD, SA, and (the others) | 30 |
| IBPNNd | Distinguish between RD, RA, and (the others) | 60 |
| IBPNNe | Distinguish between SLW, MLW, and FLW | 40 |

| Reference | Sensors | Feature Extraction | Classifier | Number of Locomotion Modes | Accuracy |
|---|---|---|---|---|---|
| Young et al. 2014 [6] | 3 IMUs and 1 pressure sensor | Manual | LDA | 5 | 93.9% |
| Young et al. 2014 [7] | 1 IMU and 1 axial load cell, etc. | Manual | Dynamic Bayesian Network | 5 | 94.7% |
| Liu et al. 2017 [8] | 1 IMU and 2 pressure sensors | Manual | HMM | 5 | 95.8% |
| Feng et al. 2019 [17] | 1 strain gauge | Automatic | CNN | 3/5 | 92.53%/89.11% |
| Gao et al. 2020 [28] | 1 IMU | Manual | Terrain Geometry | 5 | 98.5% |
| Our Method | 1 IMU | Automatic | IBPNN-DTS B / ABC-IBPNN-DTS | 9 | 97.29% / 96.71% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Han, Y.; Liu, C.; Yan, L.; Ren, L. Design of Decision Tree Structure with Improved BPNN Nodes for High-Accuracy Locomotion Mode Recognition Using a Single IMU. Sensors 2021, 21, 526. https://doi.org/10.3390/s21020526