Using Artificial Intelligence to Achieve Auxiliary Training of Table Tennis Based on Inertial Perception Data

Abstract

1. Introduction

- Section 2 introduces our crucial preparation work.

- Section 3 presents the two critical processes of human table tennis action recognition and human table tennis action evaluation in detail.

- Section 4 provides the results to verify the performance of the method.

- Section 5 summarizes the significant developments and their impact and provides ideas for future work.

2. Preparation Work

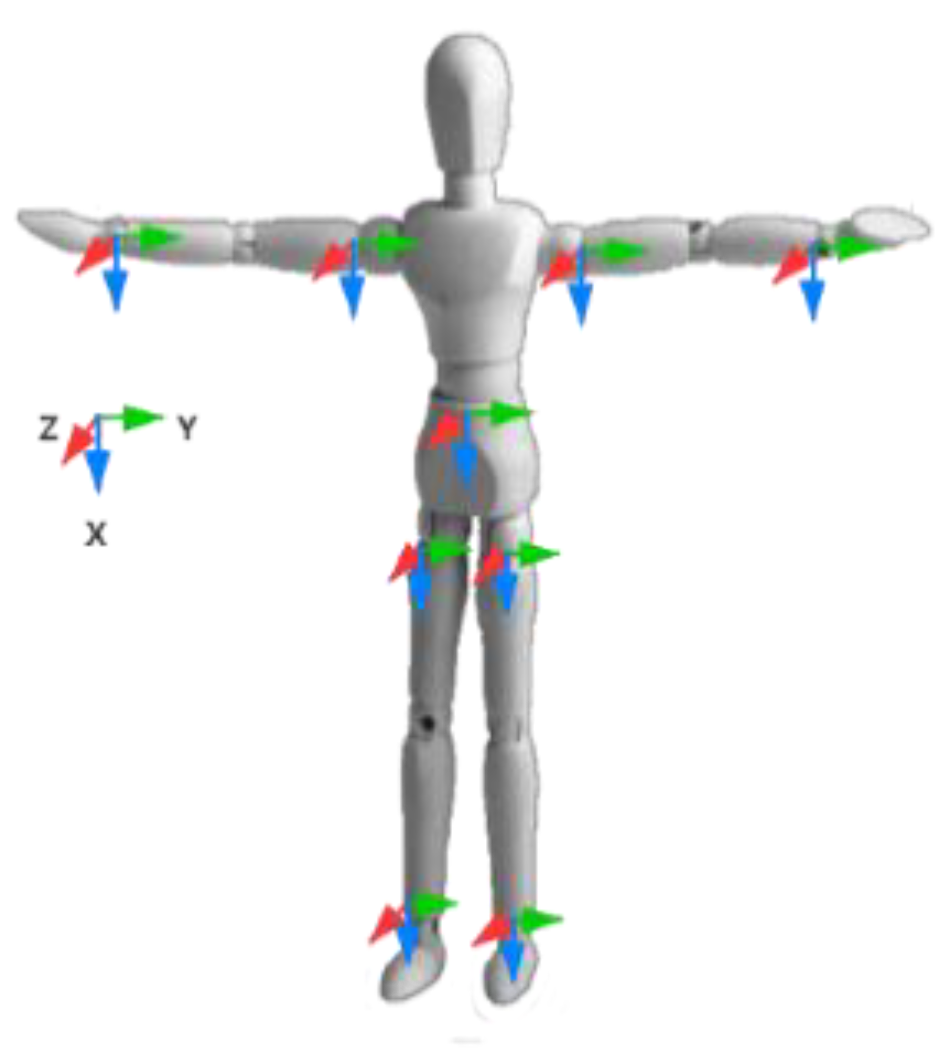

2.1. Coordinate System Definition and Inertial Sensors

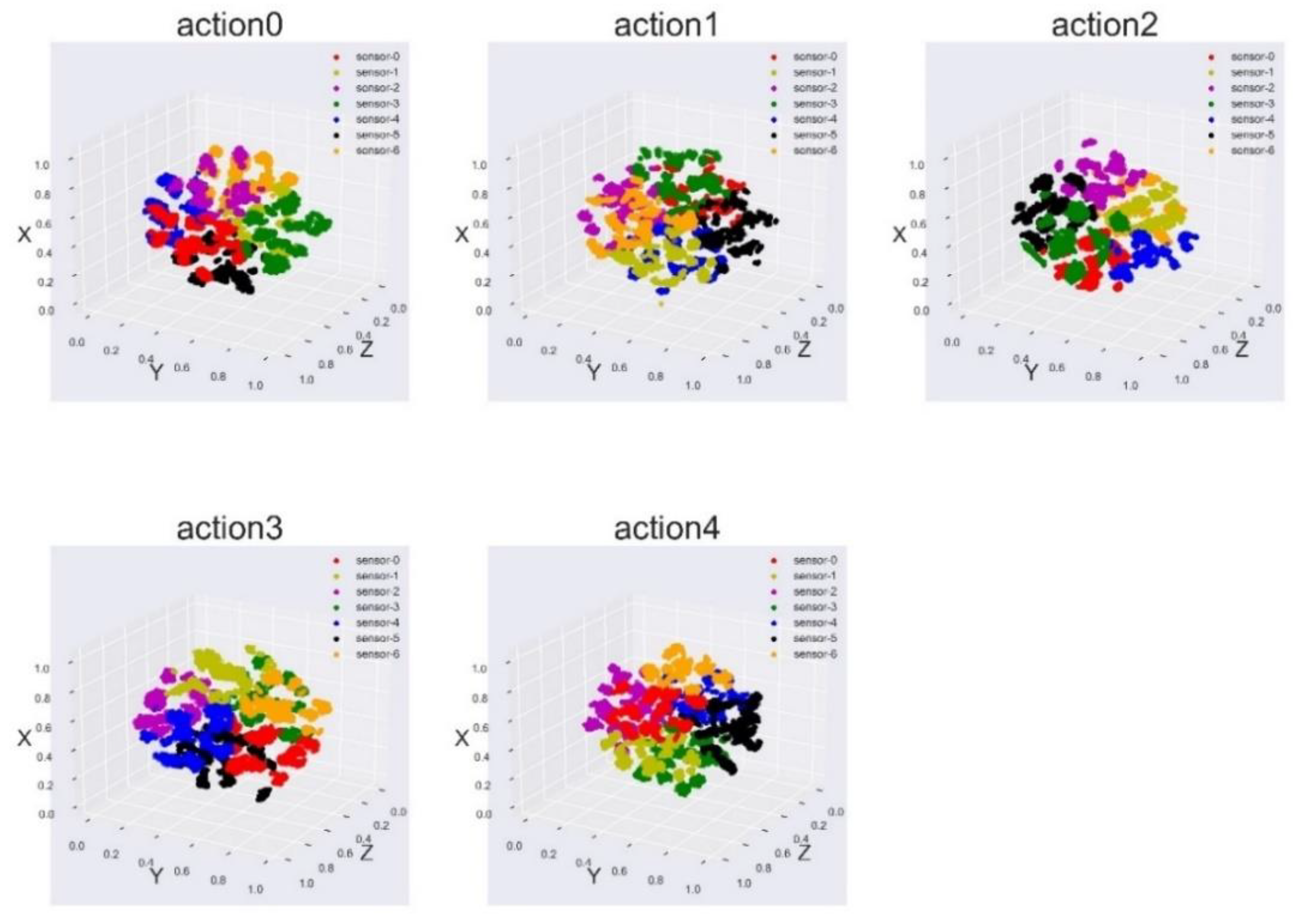

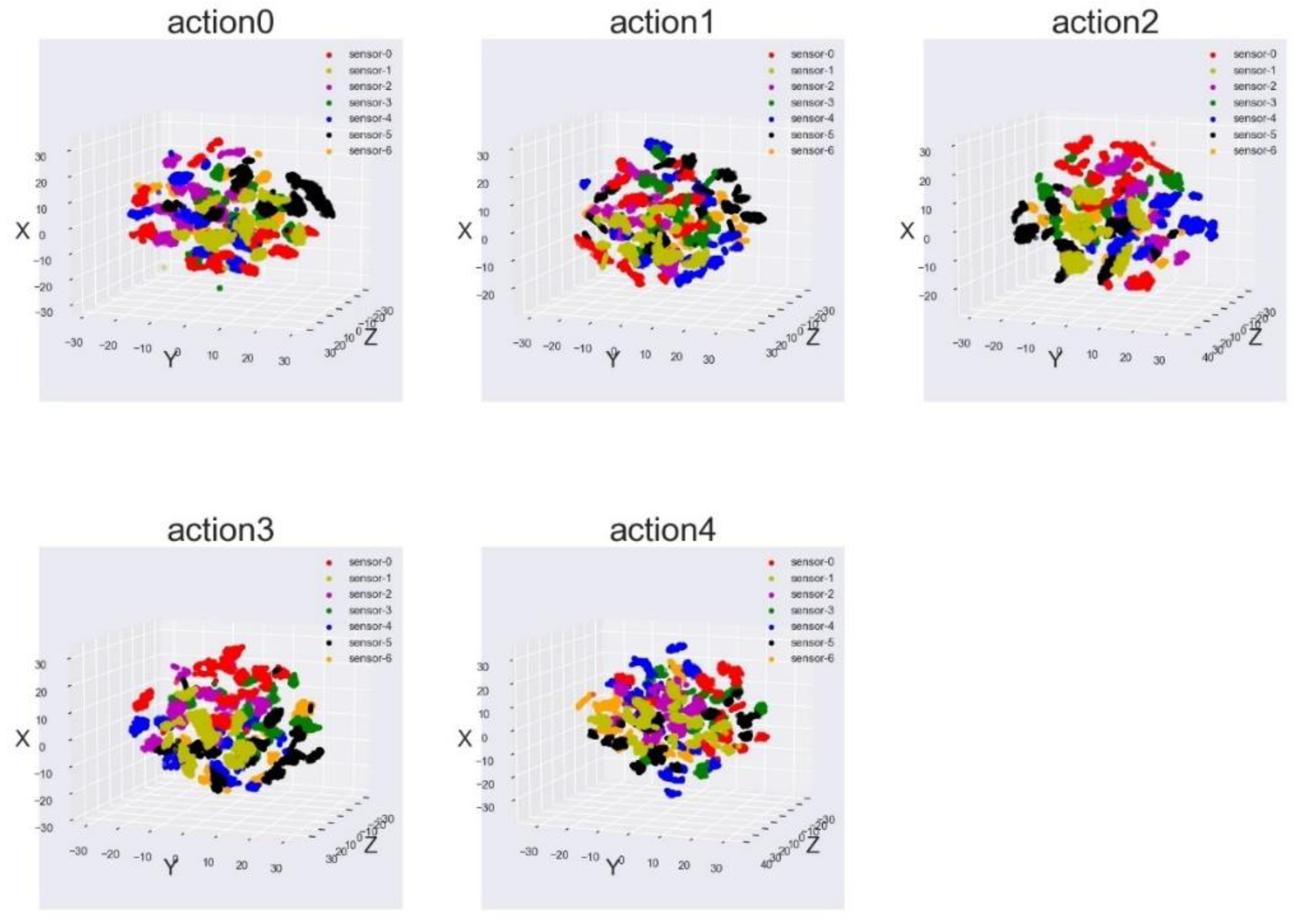

2.2. Human Body Action Representation Based on Inertial Data

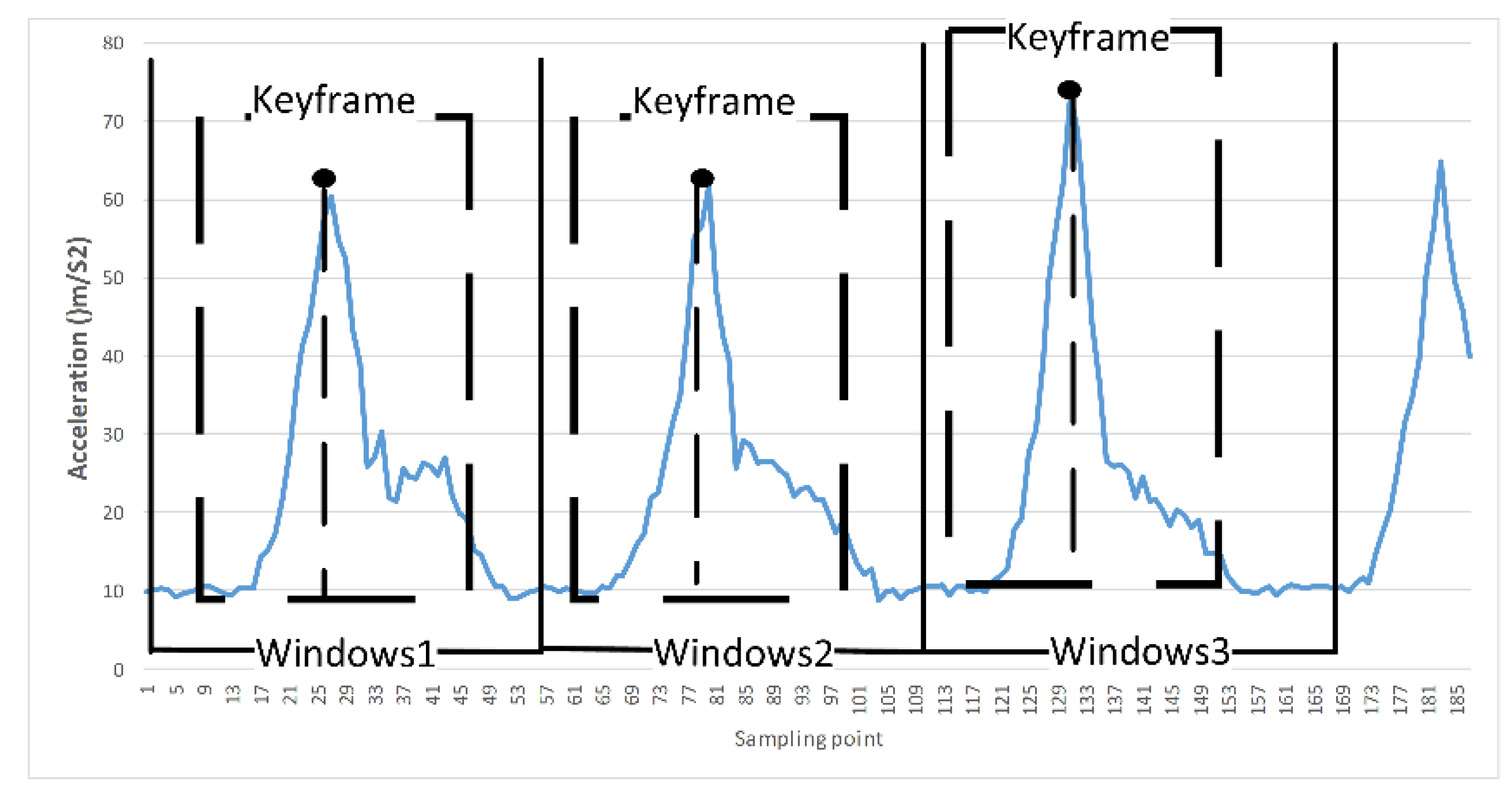

2.3. Wearable Inertial Sensing Data Window Segmentation Point Detection and Key Frame Extraction

3. Implementation of Key Models and Methods

3.1. Multi-Dimensional Feature Fusion Convolutional Neural Network

- (1) A 1 × 1 convolution kernel is introduced to organize information across channels and reduce the dimensionality of the input channels, which improves the expressive ability of the network. Because the 1 × 1 kernel operates across channels, features that are highly correlated but lie in different channels at the same spatial location are connected together. The 1 × 1 kernel also requires little computation, and it adds a further layer of features and nonlinear transformation.

- (2) To avoid an excessive number of convolution maps after cascading, we adjusted the 1 × 1 convolution to the 3 × 3 convolution in the improved Inception network and reduced the dimensionality of the output feature map.

- (3) Global average pooling (AdaptiveAvgPool) is used in place of the fully connected layer. The fully connected layer holds most of the parameters in a convolutional neural network, which makes it the part of the network most prone to overfitting and the most time-consuming. Global average pooling has three advantages. First, it associates each category more directly with the feature maps of the last convolutional layer. Second, it reduces the number of parameters: the global average pooling layer has no parameters, which prevents overfitting in this layer. Third, it integrates global spatial information. For the convenience of classification, we still keep a fully connected layer as the last layer of the network; however, after global pooling, the amount of data entering this layer is greatly reduced.

- (4) We combined the advantages of several network structure designs and methods, which reduces network complexity and the number of weight parameters. The expectation is that recognition speed improves while the recognition performance is maintained.

3.2. Human Table Tennis Action Assessment Method

3.2.1. Overall Assessment of Human Table Tennis Actions

3.2.2. Fine-Grained Evaluation of Human Table Tennis Actions

4. Experiment and Result Analysis

4.1. Data

4.2. Multi-Dimensional Feature Fusion Convolutional Neural Network Experimental Results

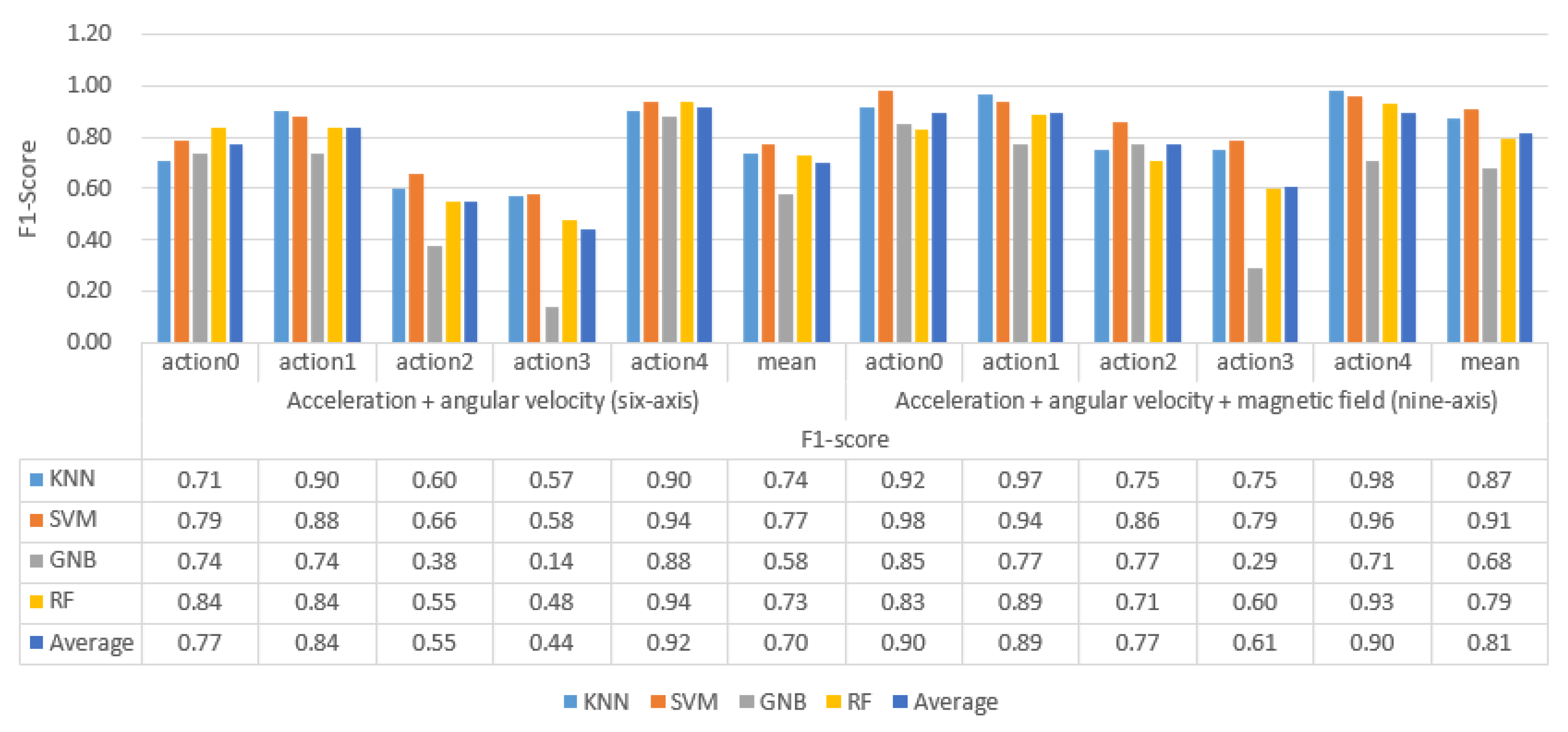

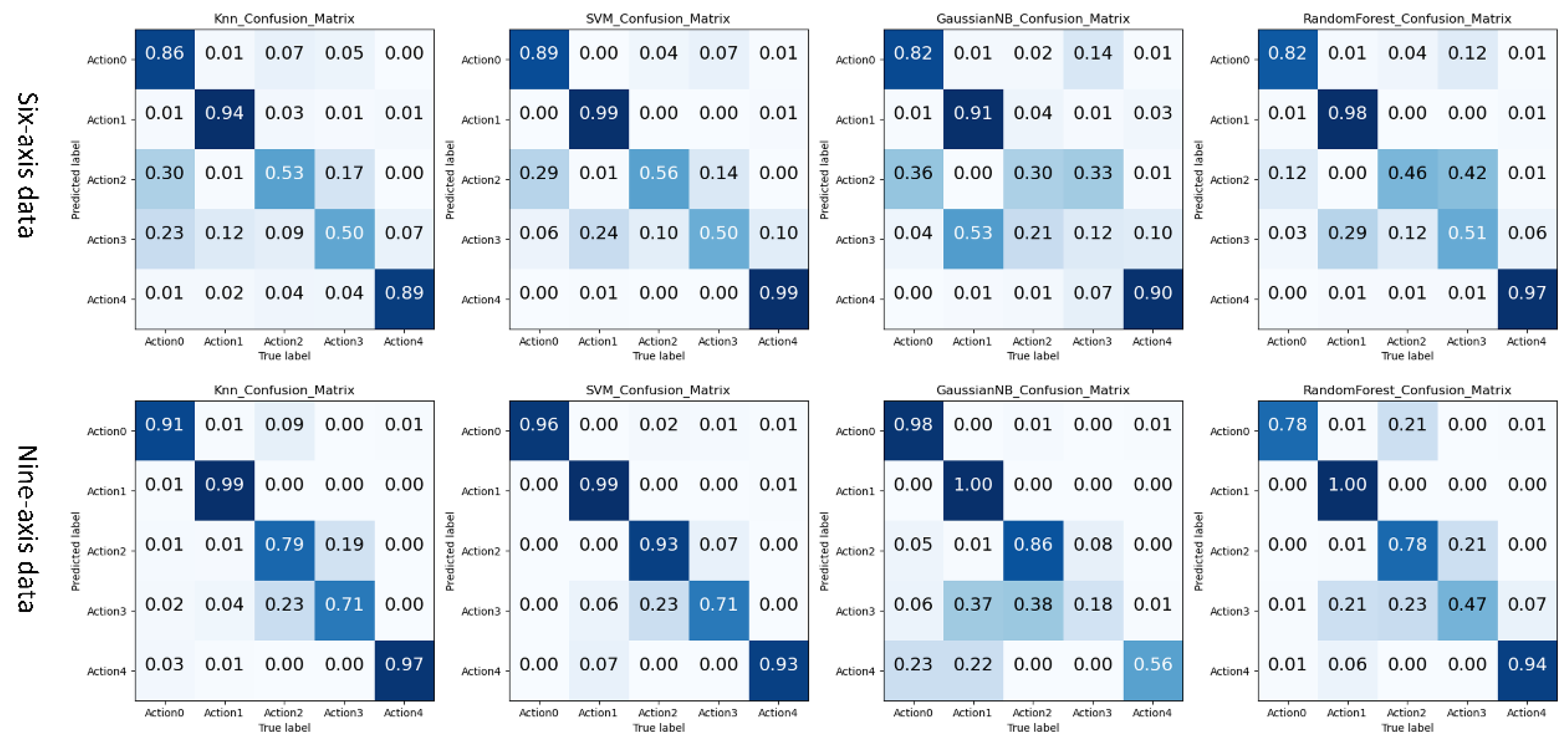

4.2.1. Traditional Machine Learning Action Recognition Experiment and Result Analysis Based on Artificial Features

- (1) Recognition results of the classifiers on the professional test set:

  - i. The machine learning methods based on time-domain and frequency-domain features achieve some classification ability, but the results are not ideal.

  - ii. The average recognition accuracy on 9-axis inertial data is 0.11 higher than on 6-axis inertial data. More valuable features can be obtained from 9-axis inertial data.

  - iii. The four classifiers differ in classification performance on the table tennis action inertial data set, ranked from best to worst as SVM > KNN > RF > GaussianNB.

  - iv. The classification results for the five actions follow a consistent pattern: action 1 and action 4 are easier to identify, action 0 can still be identified, and action 2 and action 3 are confused with each other. This pattern is most evident on the 6-axis test data.

- (2) Recognition results of the classifiers on the non-professional test set:

  - i. Almost all classifiers lose their classification ability on the 6-axis and 9-axis non-professional test data sets.

  - ii. The manually extracted time-domain and frequency-domain features depend strongly on the data set and generalize poorly.
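To make the notion of hand-crafted time-domain and frequency-domain features concrete, the following sketch computes a few common statistics per sensor axis and concatenates them into one feature vector. The specific features, the 100 Hz sampling rate and the function names are assumptions for illustration; the feature set actually used in the experiments is not listed in this section.

```python
import cmath
import math
from typing import Dict, List


def time_features(window: List[float]) -> Dict[str, float]:
    """Mean, standard deviation, root mean square and range of one axis."""
    n = len(window)
    mean = sum(window) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in window) / n)
    rms = math.sqrt(sum(v * v for v in window) / n)
    return {"mean": mean, "std": std, "rms": rms,
            "range": max(window) - min(window)}


def dominant_frequency(window: List[float], sample_rate_hz: float) -> float:
    """Frequency in Hz of the strongest non-DC bin of a naive O(n^2) DFT."""
    n = len(window)
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                    for i, v in enumerate(window))
        if abs(coeff) > best_magnitude:
            best_bin, best_magnitude = k, abs(coeff)
    return best_bin * sample_rate_hz / n


def feature_vector(axes: List[List[float]], sample_rate_hz: float) -> List[float]:
    """Concatenate the per-axis features of one action window."""
    vector: List[float] = []
    for axis in axes:
        vector.extend(time_features(axis).values())
        vector.append(dominant_frequency(axis, sample_rate_hz))
    return vector


RATE_HZ = 100.0  # assumed sampling rate, not taken from the paper
# Synthetic axis: a 5 Hz sine wave lasting one second.
axis = [math.sin(2 * math.pi * 5 * i / RATE_HZ) for i in range(100)]

six_axis = feature_vector([axis] * 6, RATE_HZ)
nine_axis = feature_vector([axis] * 9, RATE_HZ)
print(len(six_axis), len(nine_axis))  # 5 features per axis: 30 and 45
print(dominant_frequency(axis, RATE_HZ))
```

A 9-axis window simply yields a longer vector than a 6-axis one, so a classifier such as SVM or KNN sees more descriptors per action. Because each descriptor is a fixed summary statistic, the vector reflects the recording conditions of the training data closely, which is consistent with the poor generalization noted in (2).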

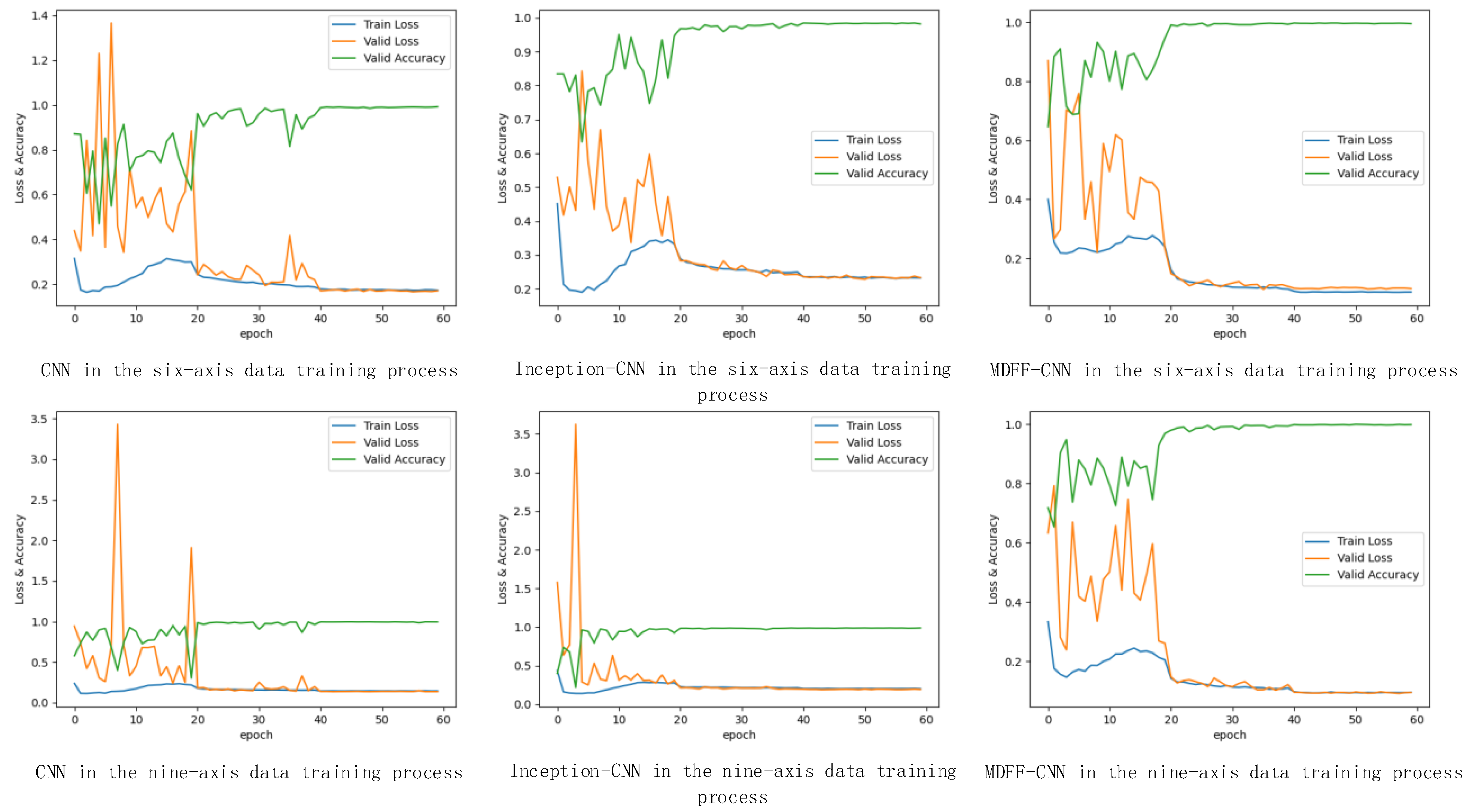

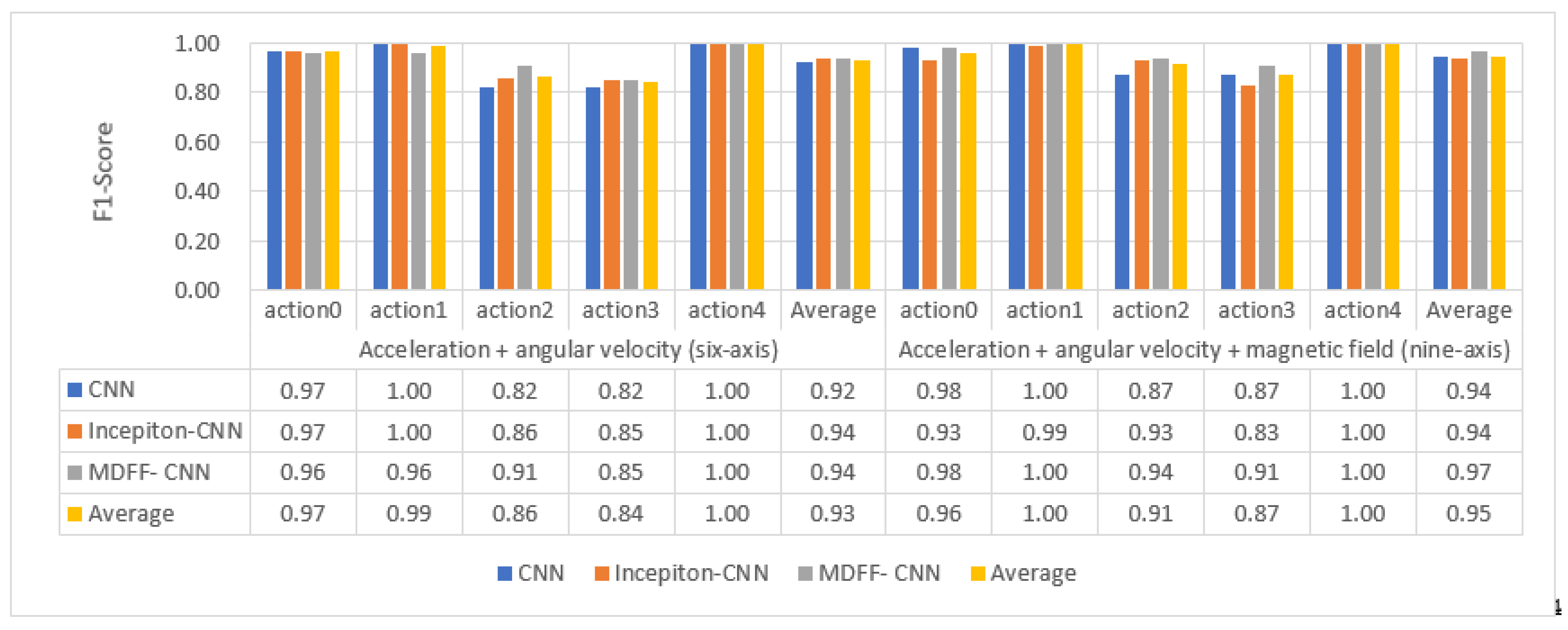

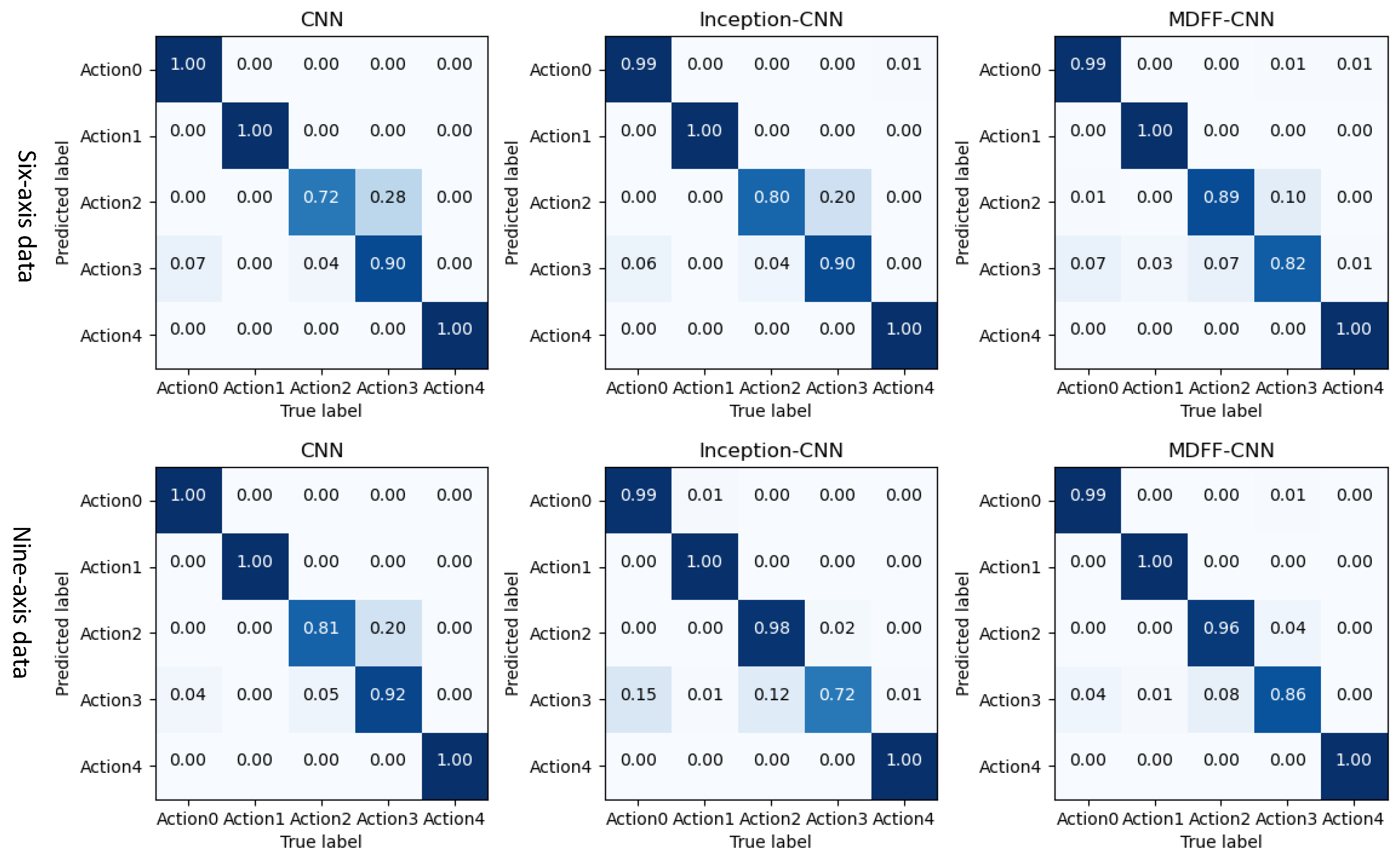

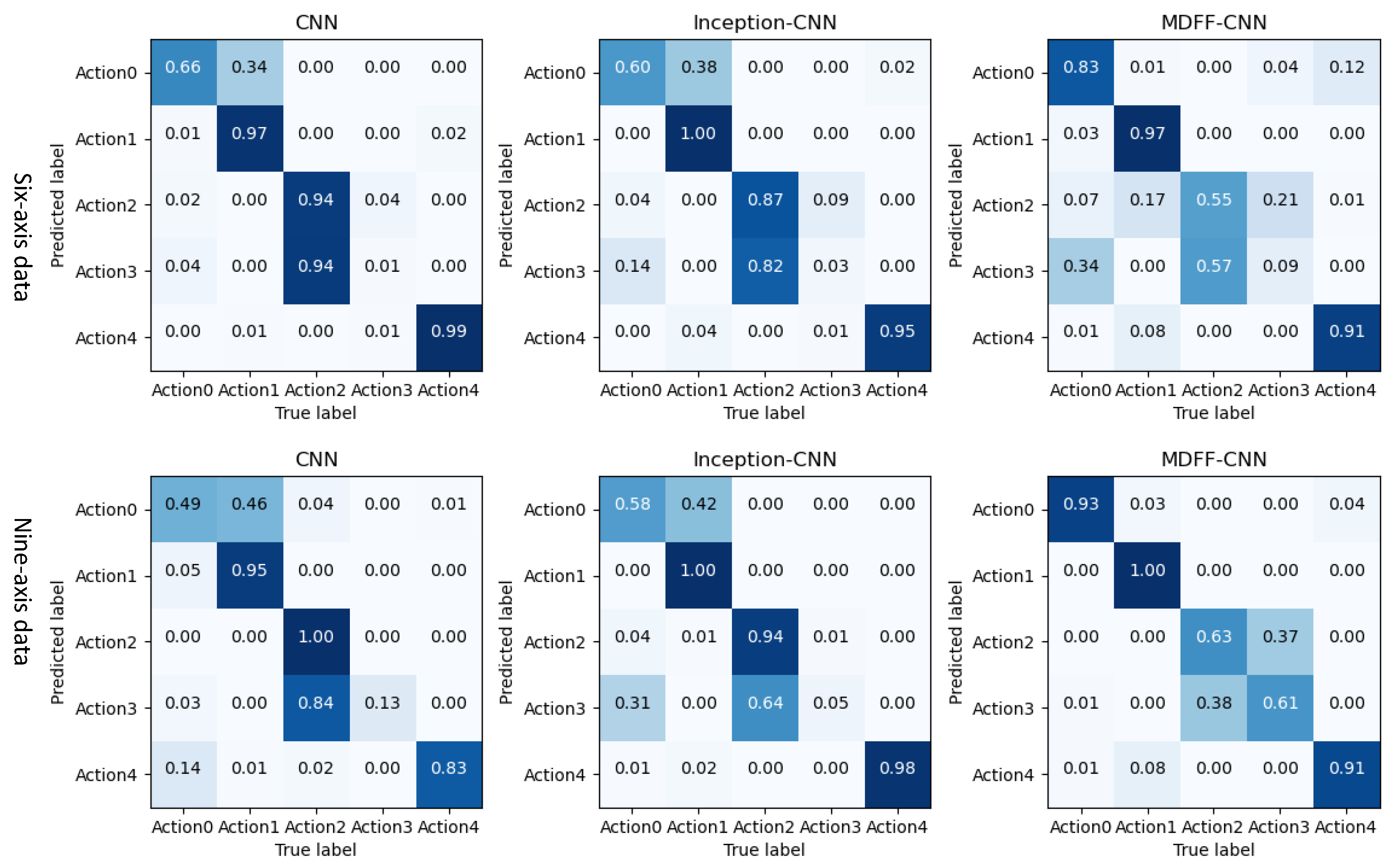

4.2.2. Action Recognition Experiment and Result Analysis Based on Convolutional Neural Network

- (1) Recognition results of the three convolutional neural networks on the professional test set:

  - i. All three convolutional neural network models recognize the actions well: the average F1 score is above 0.9, which is a satisfactory recognition result.

  - ii. The average recognition rate on 9-axis inertial data is 0.02 higher than on 6-axis inertial data.

  - iii. The recognition performance for action 2 and action 3 is lower than for the other three actions.

  - iv. On the test set, all three convolutional neural networks outperform the machine learning classification methods based on manually extracted features.

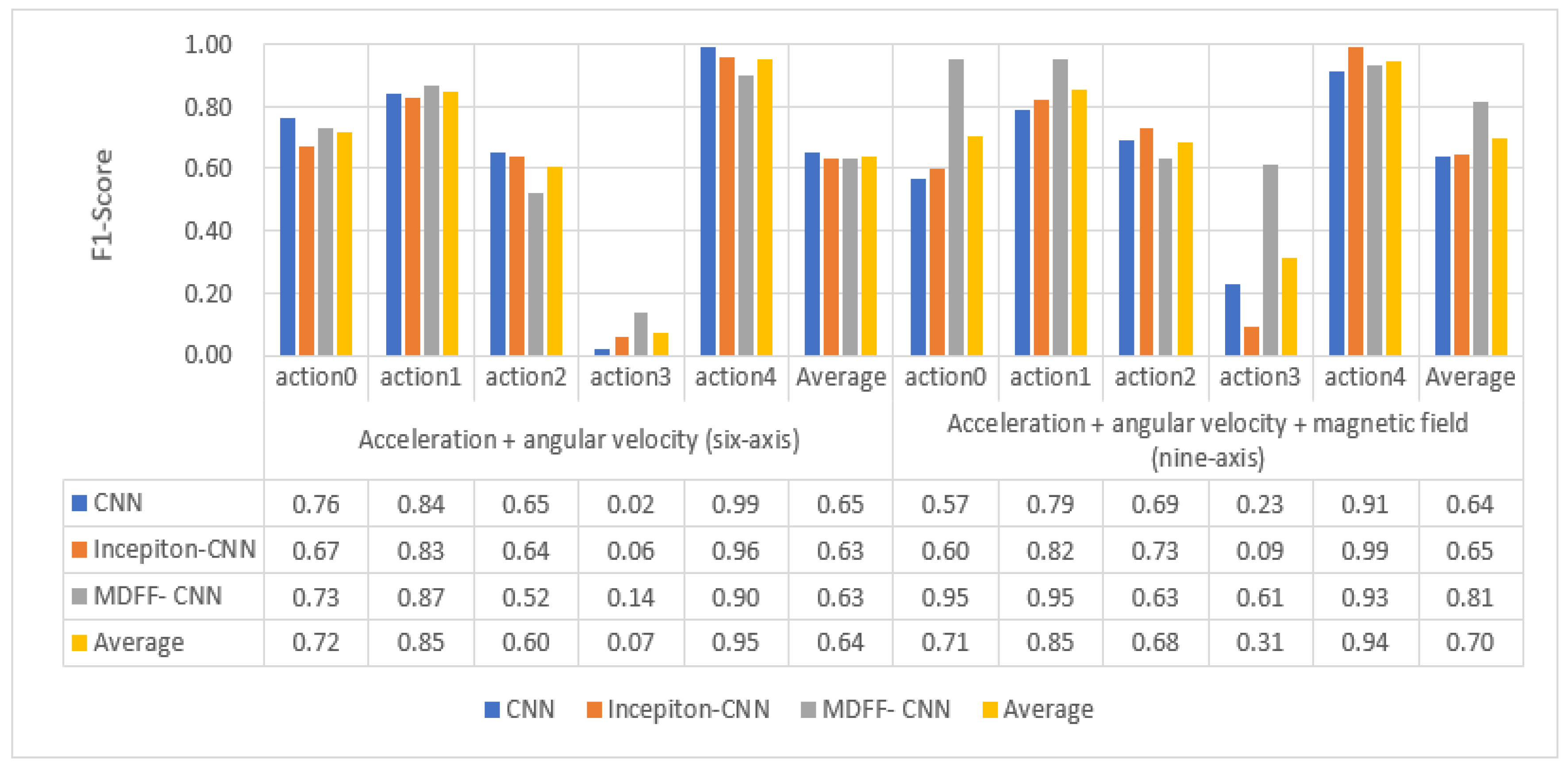

- (2) Recognition results of the three convolutional neural networks on the non-professional test set
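The average F1 score quoted above can be computed from a confusion matrix as the unweighted mean of the per-class F1 scores (macro averaging is an assumption here; the averaging scheme is not stated in this section). The confusion matrix below is invented purely to illustrate the calculation, with some confusion between action 2 and action 3; it does not reproduce any experimental result.

```python
from typing import List


def per_class_f1(confusion: List[List[int]]) -> List[float]:
    """F1 per class; confusion[i][j] counts true class i predicted as j."""
    n = len(confusion)
    scores = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                          # missed samples of class k
        fp = sum(confusion[i][k] for i in range(n)) - tp     # others predicted as k
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


def macro_f1(confusion: List[List[int]]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    scores = per_class_f1(confusion)
    return sum(scores) / len(scores)


# Invented counts for 5 actions with 200 test samples each (illustration only).
confusion = [
    [200, 0, 0, 0, 0],
    [0, 200, 0, 0, 0],
    [0, 0, 180, 20, 0],
    [0, 0, 20, 180, 0],
    [0, 0, 0, 0, 200],
]

print([round(score, 2) for score in per_class_f1(confusion)])
print(round(macro_f1(confusion), 2))
```

In this made-up example the two confused classes each score 0.9 while the others score 1.0, so the macro average stays high even though two actions are noticeably harder to separate. Reporting per-class scores alongside the average makes that kind of pattern visible.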

4.3. Human Table Tennis Action Assessment Results

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| | Visual Action Recognition | Acoustic Action Recognition | Inertial Action Recognition |
|---|---|---|---|
| Flexibility | General | General | High |
| Accuracy | Extremely high | Low | High |
| Sampling frequency | Extremely high | Low | High |
| Movable range | Small | General | Big |
| Computational efficiency | General | General | High |
| Multi-target capture | General | General | High |
| Cost | High | Low | Low |
| Calibration time | Long | General | Short |
| Environmental constraints | Strong light, occlusion | Temperature, air | Magnetic interference |

| Serial Number | Gender | Age | Height (cm) | Weight (kg) |
|---|---|---|---|---|
| Person 0 | Man | 24 | 175 | 70 |
| Person 1 | Woman | 24 | 158 | 47 |
| Person 2 | Man | 21 | 172 | 72 |
| Person 3 | Woman | 22 | 168 | 53 |
| Person 4 | Woman | 22 | 163 | 65 |
| Person 5 | Woman | 21 | 160 | 50 |
| Person 6 | Woman | 22 | 155 | 60 |
| Person 7 | Man | 27 | 165 | 68 |
| Person 8 | Man | 27 | 175 | 79 |
| Person 9 | Man | 23 | 176 | 72 |
| Person 10 | Man | 22 | 185 | 75 |
| Person 11 | Woman | 28 | 163 | 55 |

| Action Name | Number of Actions | Number of Data Frames |
|---|---|---|
| Forehand Stroke | 200 | 12,549 |
| Fast Push | 200 | 12,619 |
| Speedo | 200 | 12,449 |
| Loop | 200 | 11,899 |
| Backhand Push | 200 | 13,287 |
| Sum | 1000 | 62,803 |

| Action | Training Set | Validation Set | Professional (Major) Test Set | Non-Professional (Amateur) Test Set |
|---|---|---|---|---|
| Action 0 | 1411 | 389 | 200 | 180 |
| Action 1 | 1402 | 398 | 200 | 180 |
| Action 2 | 1384 | 416 | 200 | 180 |
| Action 3 | 1398 | 402 | 200 | 180 |
| Action 4 | 1405 | 395 | 200 | 180 |
| Sum | 7000 | 2000 | 1000 | 900 |
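As a quick arithmetic check on the split table above, the per-action counts can be summed and the split proportions derived. The sketch only reuses the numbers from the table; the variable names are hypothetical, and treating the training, validation and professional test columns as one pool is an assumption made for the proportion calculation.

```python
# Rows: (training, validation, professional test, non-professional test)
counts = {
    "Action 0": (1411, 389, 200, 180),
    "Action 1": (1402, 398, 200, 180),
    "Action 2": (1384, 416, 200, 180),
    "Action 3": (1398, 402, 200, 180),
    "Action 4": (1405, 395, 200, 180),
}

# Column totals should match the "Sum" row of the table.
column_sums = [sum(row[i] for row in counts.values()) for i in range(4)]

# Samples per action across the training, validation and professional test columns.
per_action_professional = {name: sum(row[:3]) for name, row in counts.items()}

# Share of each split within those three columns.
pool = sum(column_sums[:3])
shares = [total / pool for total in column_sums[:3]]

print(column_sums)
print(per_action_professional)
print([round(share, 2) for share in shares])
```

The totals reproduce the "Sum" row, every action contributes 2000 samples to the first three columns, and those columns split 70% / 20% / 10%. The 900 non-professional samples form a separate test set and are not part of that ratio.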

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yanan, P.; Jilong, Y.; Heng, Z. Using Artificial Intelligence to Achieve Auxiliary Training of Table Tennis Based on Inertial Perception Data. Sensors 2021, 21, 6685. https://doi.org/10.3390/s21196685
