An Instance Segmentation Model for Strawberry Diseases Based on Mask R-CNN

Abstract

1. Introduction

Contribution

1. We introduce a new dataset to advance research on instance segmentation systems for predicting strawberry diseases.

2. We propose an optimized model based on the Mask R-CNN architecture that performs instance segmentation for seven categories of strawberry diseases.

3. We investigate a range of augmentation techniques to determine which are most suitable for our new dataset.

2. Related Work

2.1. Classical vs. Deep Learning-Based Approaches

2.2. The Problem of Detection

2.2.1. Classification Approaches

2.2.2. Detection Approaches

2.2.3. Segmentation Approaches

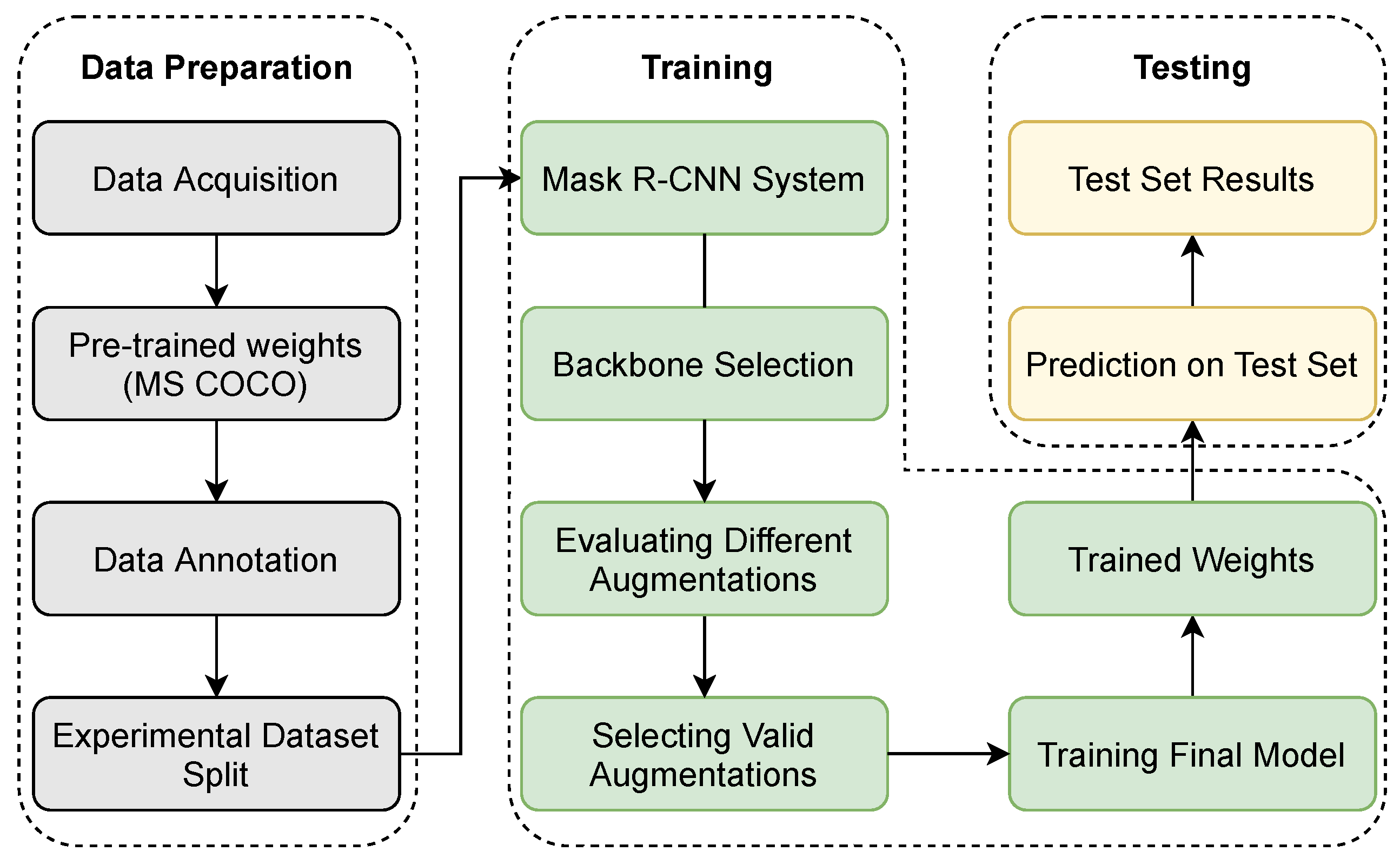

3. Materials and Methods

3.1. Dataset

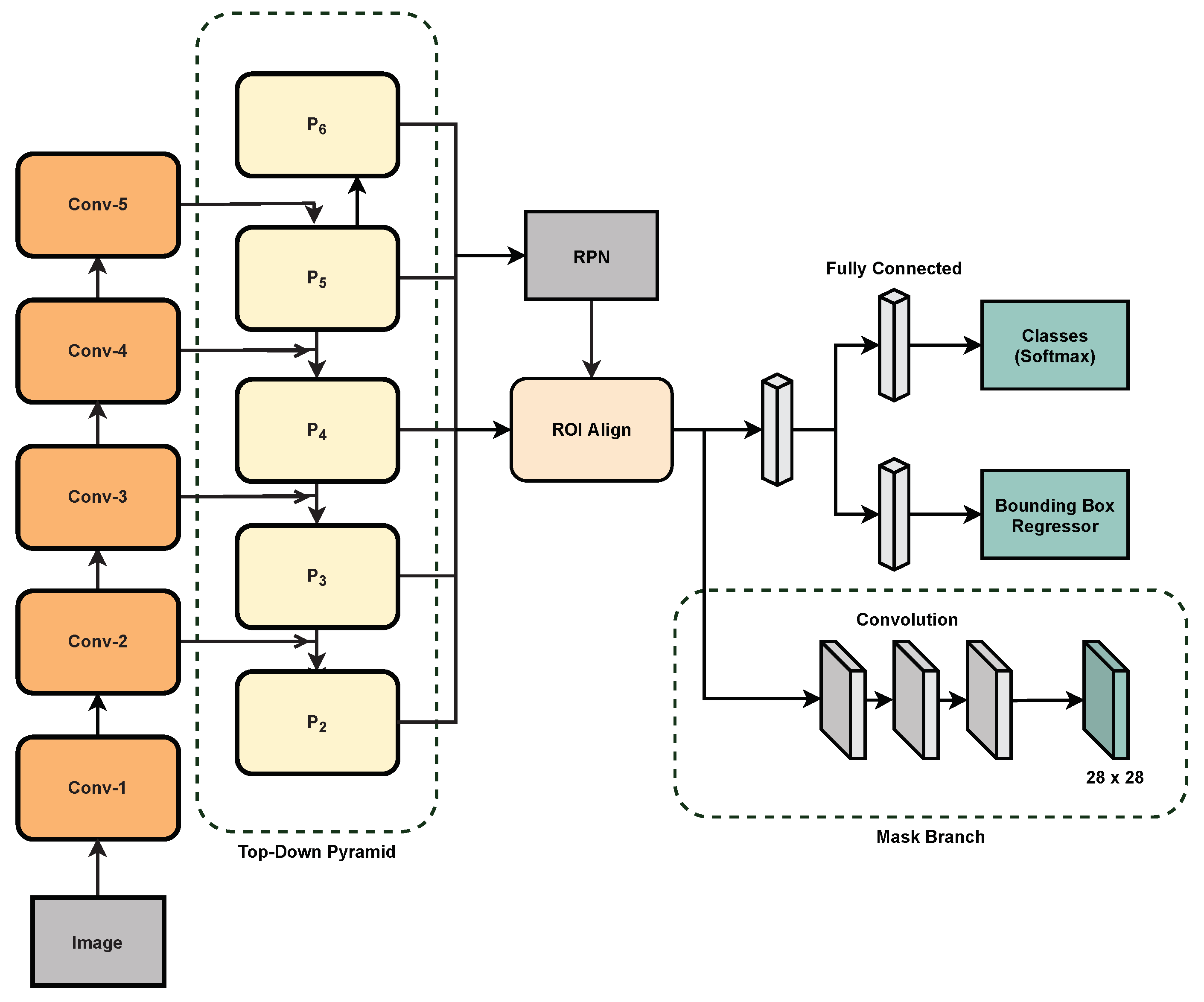

3.2. Mask R-CNN Architecture

3.3. Evaluation Metrics

3.4. Multi-Task Loss

4. Experimental Results and Discussion

4.1. Implementation Details

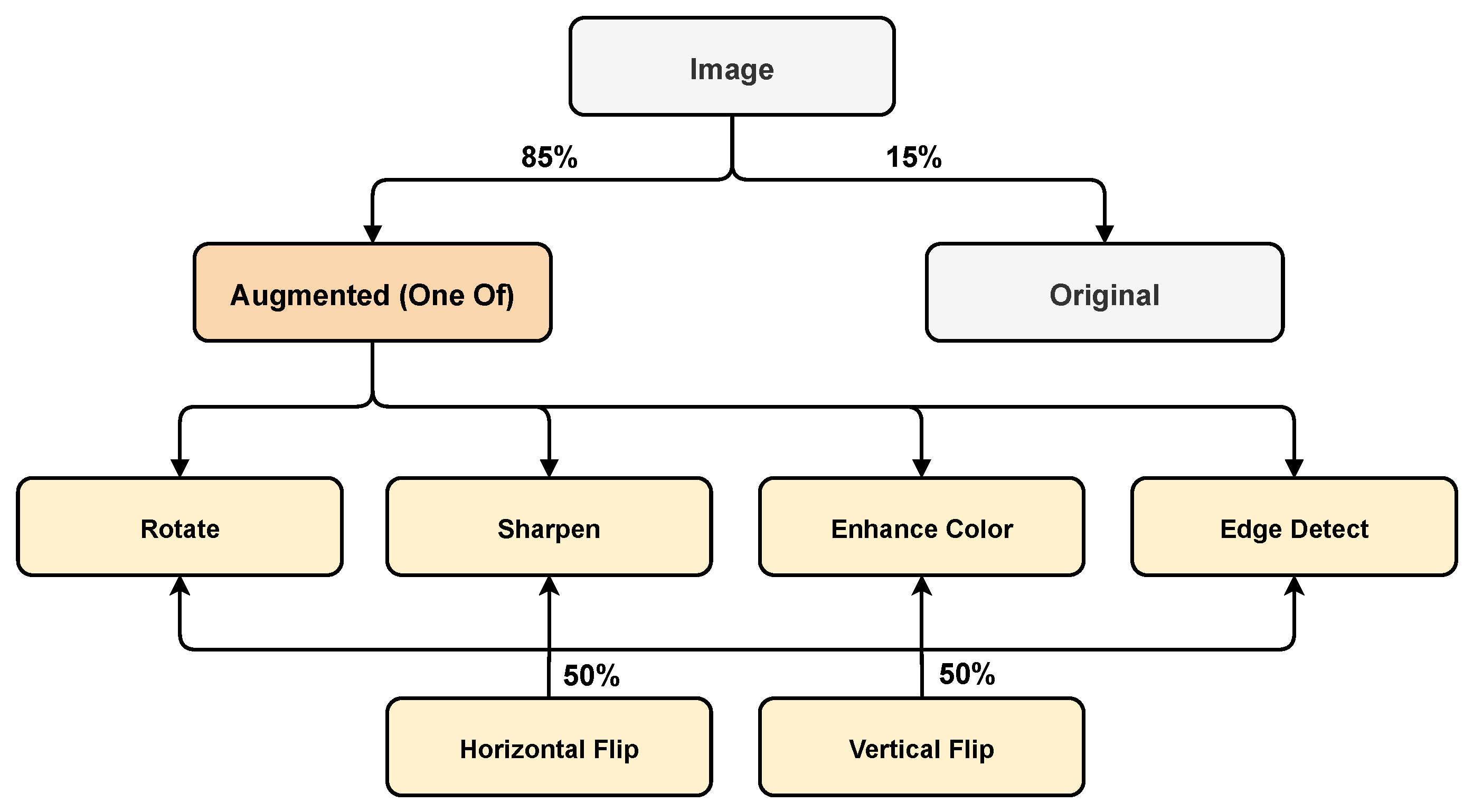

4.2. Augmentation Graph

4.3. Selection of Best Performers

4.4. Results on the Improved Dataset

4.5. Analysis of Model Predictions

4.6. Disease Severity Level Analysis

4.7. Comparison with Relevant Literature

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Fuentes, A.F.; Yoon, S.; Lee, J.; Park, D.S. High-performance deep neural network-based tomato plant diseases and pests diagnosis system with refinement filter bank. Front. Plant Sci. 2018, 9, 1162.

2. Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318.

3. Liu, J.; Wang, X. Plant diseases and pests detection based on deep learning: A review. Plant Methods 2021, 17, 22.

4. Kim, B.; Han, Y.K.; Park, J.H.; Lee, J. Improved Vision-Based Detection of Strawberry Diseases Using a Deep Neural Network. Front. Plant Sci. 2021, 11, 2040.

5. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

6. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

7. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

8. Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

9. Fergus, R.; Ranzato, M.; Salakhutdinov, R.; Taylor, G.; Yu, K. Deep learning methods for vision. In Proceedings of the CVPR 2012 Tutorial, Providence, RI, USA, 16–21 June 2012.

10. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

11. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005.

12. Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

13. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

14. Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

15. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

16. O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep learning vs. traditional computer vision. In Proceedings of the Science and Information Conference, Las Vegas, NV, USA, 25–26 April 2019.

17. Russakovsky, O.; Lin, Y.; Yu, K.; Fei-Fei, L. Object-centric spatial pooling for image classification. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012.

18. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012.

19. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

20. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

21. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

22. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

23. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

24. Fang, T.; Chen, P.; Zhang, J.; Wang, B. Crop leaf disease grade identification based on an improved convolutional neural network. J. Electron. Imaging 2020, 29, 013004.

25. Fuentes, A.; Lee, J.; Lee, Y.; Yoon, S.; Park, D.S. Anomaly Detection of Plant Diseases and Insects using Convolutional Neural Networks. In Proceedings of the International Society for Ecological Modelling Global Conference, Ramada Plaza, Jeju, Korea, 17–21 September 2017.

26. Hasan, M.J.; Mahbub, S.; Alom, M.S.; Nasim, M.A. Rice Disease Identification and Classification by Integrating Support Vector Machine With Deep Convolutional Neural Network. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), East West University, Dhaka, Bangladesh, 3–5 May 2019.

27. Yalcin, H.; Razavi, S. Plant classification using convolutional neural networks. In Proceedings of the 2016 Fifth International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Tianjin, China, 18–20 July 2016.

28. DeChant, C.; Wiesner-Hanks, T.; Chen, S.; Stewart, E.L.; Yosinski, J.; Gore, M.A.; Nelson, R.J.; Lipson, H. Automated identification of northern leaf blight-infected maize plants from field imagery using deep learning. Phytopathology 2017, 107, 1426–1432.

29. Liu, B.; Zhang, Y.; He, D.; Li, Y. Identification of apple leaf diseases based on deep convolutional neural networks. Symmetry 2018, 10, 11.

30. Barbedo, J.G.A. Impact of dataset size and variety on the effectiveness of deep learning and transfer learning for plant disease classification. Comput. Electron. Agric. 2018, 153, 46–53.

31. Ramcharan, A.; Baranowski, K.; McCloskey, P.; Ahmed, B.; Legg, J.; Hughes, D.P. Deep learning for image-based cassava disease detection. Front. Plant Sci. 2017, 8, 1852.

32. Kawasaki, Y.; Uga, H.; Kagiwada, S.; Iyatomi, H. Basic study of automated diagnosis of viral plant diseases using convolutional neural networks. In Proceedings of the International Symposium on Visual Computing, Las Vegas, NV, USA, 14–16 December 2015.

33. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

35. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 3–7 December 2015.

36. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

37. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

38. Qiao, S.; Chen, L.C.; Yuille, A. DetectoRS: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

39. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

40. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

41. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

42. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030.

43. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

44. Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 2017, 17, 2022.

45. Fuentes, A.; Yoon, S.; Park, D.S. Deep learning-based phenotyping system with glocal description of plant anomalies and symptoms. Front. Plant Sci. 2019, 10, 1321.

46. Ozguven, M.M.; Adem, K. Automatic detection and classification of leaf spot disease in sugar beet using deep learning algorithms. Phys. A Stat. Mech. Appl. 2019, 535, 122537.

47. Nie, X.; Wang, L.; Ding, H.; Xu, M. Strawberry verticillium wilt detection network based on multi-task learning and attention. IEEE Access 2019, 7, 170003–170011.

48. Ramcharan, A.; McCloskey, P.; Baranowski, K.; Mbilinyi, N.; Mrisho, L.; Ndalahwa, M.; Legg, J.; Hughes, D.P. A mobile-based deep learning model for cassava disease diagnosis. Front. Plant Sci. 2019, 10, 272.

49. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

50. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

51. Chen, X.; Girshick, R.; He, K.; Dollár, P. TensorMask: A foundation for dense object segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019.

52. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019.

53. Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857.

54. Stewart, E.L.; Wiesner-Hanks, T.; Kaczmar, N.; DeChant, C.; Wu, H.; Lipson, H.; Nelson, R.J.; Gore, M.A. Quantitative phenotyping of Northern Leaf Blight in UAV images using deep learning. Remote Sens. 2019, 11, 2209.

55. Wang, Q.; Qi, F.; Sun, M.; Qu, J.; Xue, J. Identification of tomato disease types and detection of infected areas based on deep convolutional neural networks and object detection techniques. Comput. Intell. Neurosci. 2019, 2019, 9142753.

56. Khan, A.; Ilyas, T.; Umraiz, M.; Mannan, Z.I.; Kim, H. CED-Net: Crops and weeds segmentation for smart farming using a small cascaded encoder-decoder architecture. Electronics 2020, 9, 1602.

57. Ilyas, T.; Umraiz, M.; Khan, A.; Kim, H. DAM: Hierarchical Adaptive Feature Selection Using Convolution Encoder Decoder Network for Strawberry Segmentation. Front. Plant Sci. 2021, 12, 189.

58. Lin, K.; Gong, L.; Huang, Y.; Liu, C.; Pan, J. Deep learning-based segmentation and quantification of cucumber powdery mildew using convolutional neural network. Front. Plant Sci. 2019, 10, 155.

59. Wang, Z.; Zhang, S. Segmentation of Corn Leaf Disease Based on Fully Convolution Neural Network. Acad. J. Comput. Inf. Sci. 2018, 1, 9–18.

60. Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow. 2017. Available online: https://github.com/matterport/Mask_RCNN (accessed on 18 March 2021).

61. Peres, N.A.; Rondon, S.I.; Price, J.F.; Cantliffe, D.J. Angular leaf spot: A bacterial disease in strawberries in Florida. EDIS 2005, 2005, 199.

62. Mertely, J.C.; Peres, N.A. Anthracnose fruit rot of strawberry. EDIS 2012, 2012, 207.

63. Burlakoti, R.R.; Zandstra, J.; Jackson, K. Evaluation of epidemics and weather-based fungicide application programmes in controlling anthracnose fruit rot of day-neutral strawberry in outdoor field and protected cultivation systems. Can. J. Plant Pathol. 2014, 36, 64–72.

64. Tanović, B.; Delibašić, G.; Milivojević, J.; Nikolić, M. Characterization of Botrytis cinerea isolates from small fruits and grapevine in Serbia. Arch. Biol. Sci. 2009, 61, 419–429.

65. Salami, P.; Ahmadi, H.; Keyhani, A.; Sarsaifee, M. Strawberry post-harvest energy losses in Iran. Researcher 2010, 2, 67–73.

66. Mertely, J.C.; Peres, N.A. Botrytis fruit rot or gray mold of strawberry. EDIS 2018, 2018, 230.

67. Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006.

68. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

69. Ouyang, C.; Li, D.; Wang, J.; Wang, S.; Han, Y. The research of the strawberry disease identification based on image processing and pattern recognition. In Proceedings of the International Conference on Computer and Computing Technologies in Agriculture, Zhangjiajie, China, 19–21 October 2012.

| Authors | Network Architecture | Disease Category | Pre-Training Dataset | Fine-Tuning Dataset | No. of Classes | Accuracy (%) |
|---|---|---|---|---|---|---|
| Liu et al. [29] | AlexNet | Apple | ImageNet | Field Collected | 4 | 97.62 |
| Fang et al. [24] | ResNet50 | Leaf | - | PlantVillage | 27 | 95.61 |
| Hasan et al. [26] | InceptionV3+SVM | Rice | ImageNet | Field Collected, Online | 9 | 97.5 |
| DeChant et al. [28] | Custom CNNs | Maize | - | Field Collected | 2 | 96.7 |
| Barbedo [30] | GoogLeNet | 12 plant species | ImageNet | - | 12 | 87 |
| Ramcharan et al. [31] | InceptionV3 | Cassava | ImageNet | Field Collected | 5 | - |
| Kawasaki et al. [32] | Modified LeNet | Cucumber | - | Laboratory Collected | 3 | 94.9 |

| Authors | Network Architecture | Disease Category | Pre-Training Dataset | Fine-Tuning Dataset | No. of Classes | Accuracy (%) |
|---|---|---|---|---|---|---|
| Nie et al. [47] | Faster R-CNN+Attention | Strawberry | ImageNet | Field Collected | 4 | 78.05 |
| Kim et al. [4] | Cascaded Faster R-CNN | Strawberry | PlantCLEF | Field Collected | 7 | 91.62 |
| Ramcharan et al. [48] | SSD | Cassava | MS-COCO | Field Collected | 3 | - |
| Ozguven et al. [46] | Modified Faster R-CNN | Sugar beet | - | Field Collected | 4 | 95.48 |
| Fuentes et al. [1] | Faster R-CNN+Filterbank | Tomato | ImageNet | Field Collected | 10 | 96.25 |
| Fuentes et al. [45] | FPN + LSTM | Tomato | ImageNet | Field Collected | 10 | 92.5 |

| Authors | Network Architecture | Disease Category | Pre-Training Dataset | Fine-Tuning Dataset | No. of Classes | Accuracy (%) |
|---|---|---|---|---|---|---|
| Stewart et al. [54] | Mask R-CNN | Northern Leaf Blight | MS-COCO | Field Collected | 1 | 96 |
| Lin et al. [58] | Modified U-Net | Cucumber Powdery Mildew | - | Laboratory Collected | 1 | 96.08 |
| Wang et al. [59] | FCN | Maize Leaf Disease | - | Field Collected | 6 | 96.26 |

| Category of Disease | Images for Training | Images for Validation | Images for Testing |
|---|---|---|---|
| Angular Leafspot | 245 | 43 | 147 |
| Anthracnose Fruit Rot | 52 | 12 | 33 |
| Blossom Blight | 117 | 29 | 62 |
| Gray Mold | 255 | 77 | 145 |
| Leaf Spot | 382 | 71 | 162 |
| Powdery Mildew Fruit | 80 | 12 | 43 |
| Powdery Mildew Leaf | 319 | 63 | 151 |
| Total | 1450 | 307 | 743 |
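The counts in the dataset table above imply both the split proportions and a noticeable class imbalance. The following is a minimal sketch that recomputes them; the dictionary layout and the function names are illustrative assumptions, and only the counts themselves come from the table.

```python
# Per-class image counts copied from the dataset table: (train, val, test).
COUNTS = {
    "Angular Leafspot": (245, 43, 147),
    "Anthracnose Fruit Rot": (52, 12, 33),
    "Blossom Blight": (117, 29, 62),
    "Gray Mold": (255, 77, 145),
    "Leaf Spot": (382, 71, 162),
    "Powdery Mildew Fruit": (80, 12, 43),
    "Powdery Mildew Leaf": (319, 63, 151),
}


def split_totals(counts):
    """Sum each split (train, val, test) over all classes."""
    return tuple(sum(c[i] for c in counts.values()) for i in range(3))


def split_fractions(counts):
    """Fraction of all images that falls into each split."""
    totals = split_totals(counts)
    grand_total = sum(totals)
    return tuple(t / grand_total for t in totals)


def imbalance_ratio(counts):
    """Largest training class divided by the smallest training class."""
    train = [c[0] for c in counts.values()]
    return max(train) / min(train)


if __name__ == "__main__":
    print("totals:", split_totals(COUNTS))
    print("fractions:", [round(f, 4) for f in split_fractions(COUNTS)])
    print("train imbalance:", round(imbalance_ratio(COUNTS), 2))
```

The recomputed totals agree with the table's last row (1450, 307, 743), which is roughly a 58/12/30 split of 2500 images. The largest training class (Leaf Spot) has about seven times as many images as the smallest (Anthracnose Fruit Rot).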

| Network | mAP (%) |
|---|---|
| ResNet50 | 72.06 |
| ResNet101 | 71.69 |

| Augmentation | Specifications | mAP (%) |
|---|---|---|
| Baseline | - | 71.69 |
| Change Color Temperature | (7000, 12000) | 68.92 |
| Dropout | p = (0, 0.2) | 71.74 |
| Edge Detect | alpha = (0.0, 1.0) | 72.37 |
| Enhance Color | - | 72.02 |
| Filter Edge Enhance | - | 68.34 |
| Gamma Contrast | (0.5, 2.0) | 71.90 |
| Gaussian Blur | sigma = (0.0, 2.0) | 68.70 |
| Histogram Equalization (All Channels) | - | 71.02 |
| Multiply | (0.4, 1.4) | 70.58 |
| Multiply and Add to Brightness | mul = (0.5, 1.5), add = (−30, 30) | 72.26 |
| Multiply Hue and Saturation | (0.3, 1.3), per_channel = True | 73.79 |
| Perspective Transform | scale = (0.01, 0.15) | 68.90 |
| Rotate | (−45, 45) | 72.91 |
| Rotate + Edge Detect | copied from individual application | 73.63 |
| Rotate + Enhance Color + Sharpen | copied from individual application | 75.88 |
| Sharpen | alpha = (0.0, 1.0), lightness = (0.75, 2.0) | 70.72 |
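One way to read the augmentation table above is as a gain or loss relative to the baseline mAP. The sketch below ranks the entries by that difference; the helper names are hypothetical and the values are copied from the table, so this illustrates the arithmetic only and is not the authors' selection procedure.

```python
# mAP (%) values copied from the augmentation table.
BASELINE_MAP = 71.69
AUGMENTATION_MAP = {
    "Change Color Temperature": 68.92,
    "Dropout": 71.74,
    "Edge Detect": 72.37,
    "Enhance Color": 72.02,
    "Filter Edge Enhance": 68.34,
    "Gamma Contrast": 71.90,
    "Gaussian Blur": 68.70,
    "Histogram Equalization (All Channels)": 71.02,
    "Multiply": 70.58,
    "Multiply and Add to Brightness": 72.26,
    "Multiply Hue and Saturation": 73.79,
    "Perspective Transform": 68.90,
    "Rotate": 72.91,
    "Rotate + Edge Detect": 73.63,
    "Rotate + Enhance Color + Sharpen": 75.88,
    "Sharpen": 70.72,
}


def gains(results, baseline):
    """Return (name, mAP delta) pairs, best improvement first."""
    deltas = [(name, round(value - baseline, 2)) for name, value in results.items()]
    return sorted(deltas, key=lambda pair: pair[1], reverse=True)


def helpful(results, baseline):
    """Names of augmentations whose mAP exceeds the baseline."""
    return [name for name, delta in gains(results, baseline) if delta > 0]


if __name__ == "__main__":
    for name, delta in gains(AUGMENTATION_MAP, BASELINE_MAP):
        print(f"{delta:+.2f}  {name}")
```

Ranked this way, nine of the sixteen entries improve on the baseline. The combination Rotate + Enhance Color + Sharpen gains the most (+4.19 points), even though Sharpen applied alone falls below the baseline.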

| Network | Augmentation | Improved Training Strategy | mAP (%) |
|---|---|---|---|
| ResNet50 | √ | | 79.84 |
| ResNet50 | √ | √ | 81.37 |
| ResNet101 | √ | | 80.24 |
| ResNet101 | √ | √ | 82.43 |

| Class | AP for ResNet50 (%) | AP for ResNet101 (%) |
|---|---|---|
| Angular Leafspot | 79.93 | 81.16 |
| Anthracnose Fruit Rot | 71.46 | 63.63 |
| Blossom Blight | 87.90 | 82.25 |
| Gray Mold | 92.29 | 93.90 |
| Leaf Spot | 71.93 | 73.33 |
| Powdery Mildew Fruit | 68.02 | 70.91 |
| Powdery Mildew Leaf | 85.66 | 89.87 |

| Network | Level | Infection Status | mAP IOU 0.50 (%) | mAP IOU 0.50:0.95 (%) |
|---|---|---|---|---|
| Mask R-CNN | Level 1 | Low-Mid | 86.10 | 64.76 |
| Mask R-CNN | Level 2 | High | 81.02 | 58.10 |

| Network | Backbone | mAP IOU 0.50 (%) | mAP IOU 0.50:0.95 (%) |
|---|---|---|---|
| Mask R-CNN | ResNet50 | 81.37 | 55.21 |
| Mask R-CNN | ResNet101 | 82.43 | 59.94 |
| YOLACT | ResNet50 | 79.71 | 55.19 |
| YOLACT | ResNet101 | 79.39 | 55.81 |
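The two mAP columns above differ only in the IoU threshold used to decide whether a predicted mask matches a ground-truth mask. The sketch below shows the mask IoU computation and the threshold grid, assuming that "0.50:0.95" follows the COCO convention of averaging over thresholds from 0.50 to 0.95 in steps of 0.05. The toy masks and function names are made up for illustration, and the full AP computation (score ranking, precision-recall integration) is omitted.

```python
def mask_iou(mask_a, mask_b):
    """Intersection over union of two binary masks (equal-shaped nested lists of 0/1)."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            if pixel_a and pixel_b:
                intersection += 1
            if pixel_a or pixel_b:
                union += 1
    return intersection / union if union else 0.0


def coco_thresholds():
    """IoU thresholds 0.50, 0.55, ..., 0.95 (assumed COCO-style grid)."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


def matches_at(iou, thresholds):
    """For each threshold, whether a prediction with this IoU counts as a match."""
    return [iou >= t for t in thresholds]


# Toy example: the prediction covers 4 of the 6 ground-truth pixels.
PREDICTED = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
GROUND_TRUTH = [[0, 1, 1, 1],
                [0, 1, 1, 1],
                [0, 0, 0, 0]]

if __name__ == "__main__":
    iou = mask_iou(PREDICTED, GROUND_TRUTH)
    print("IoU:", round(iou, 3))
    print("matches:", matches_at(iou, coco_thresholds()))
```

In this toy case the IoU is 4/6, so the prediction counts as correct at thresholds 0.50 through 0.65 and as a miss from 0.70 upward. This is why the 0.50:0.95 column is consistently lower than the 0.50 column.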

| Authors | Network Architecture | Pre-Training Dataset | Fine-Tuning Dataset | No. of Classes | Accuracy (%) | Approach |
|---|---|---|---|---|---|---|
| Ouyang et al. [69] | SVM | - | Field Collected | 3 | - | Traditional Segmentation |
| Nie et al. [47] | Faster R-CNN+Attention | ImageNet | Field Collected | 4 | 78.05 | Object Detection |
| Kim et al. [4] | Cascaded Faster R-CNN | PlantCLEF | Field Collected, Online | 7 | 91.62 | Object Detection |
| This Work | Mask R-CNN | MS-COCO | Field Collected, Online | 7 | 82.43 | Fine-grained Instance Segmentation |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Afzaal, U.; Bhattarai, B.; Pandeya, Y.R.; Lee, J. An Instance Segmentation Model for Strawberry Diseases Based on Mask R-CNN. Sensors 2021, 21, 6565. https://doi.org/10.3390/s21196565
