Identification of Distributed Denial of Services Anomalies by Using Combination of Entropy and Sequential Probabilities Ratio Test Methods

Abstract

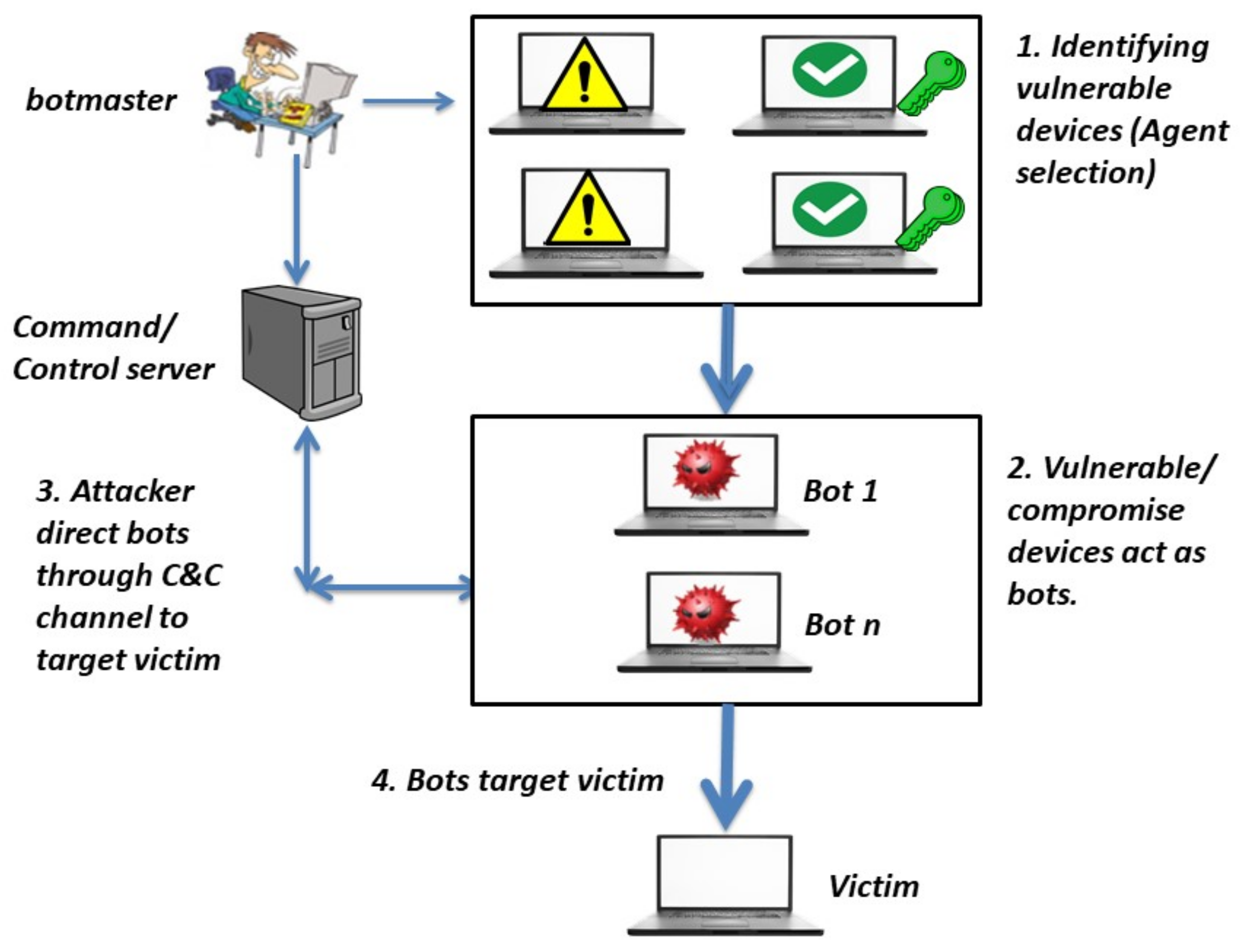

1. Introduction

2. Related Works

2.1. Statistical-Based Detection Techniques

2.2. Data Mining-Based Detection Techniques

2.3. Machine Learning-Based Detection Techniques

2.4. Deep Learning-Based Detection Techniques

2.5. Combination-Based Detection Techniques

3. Methodology

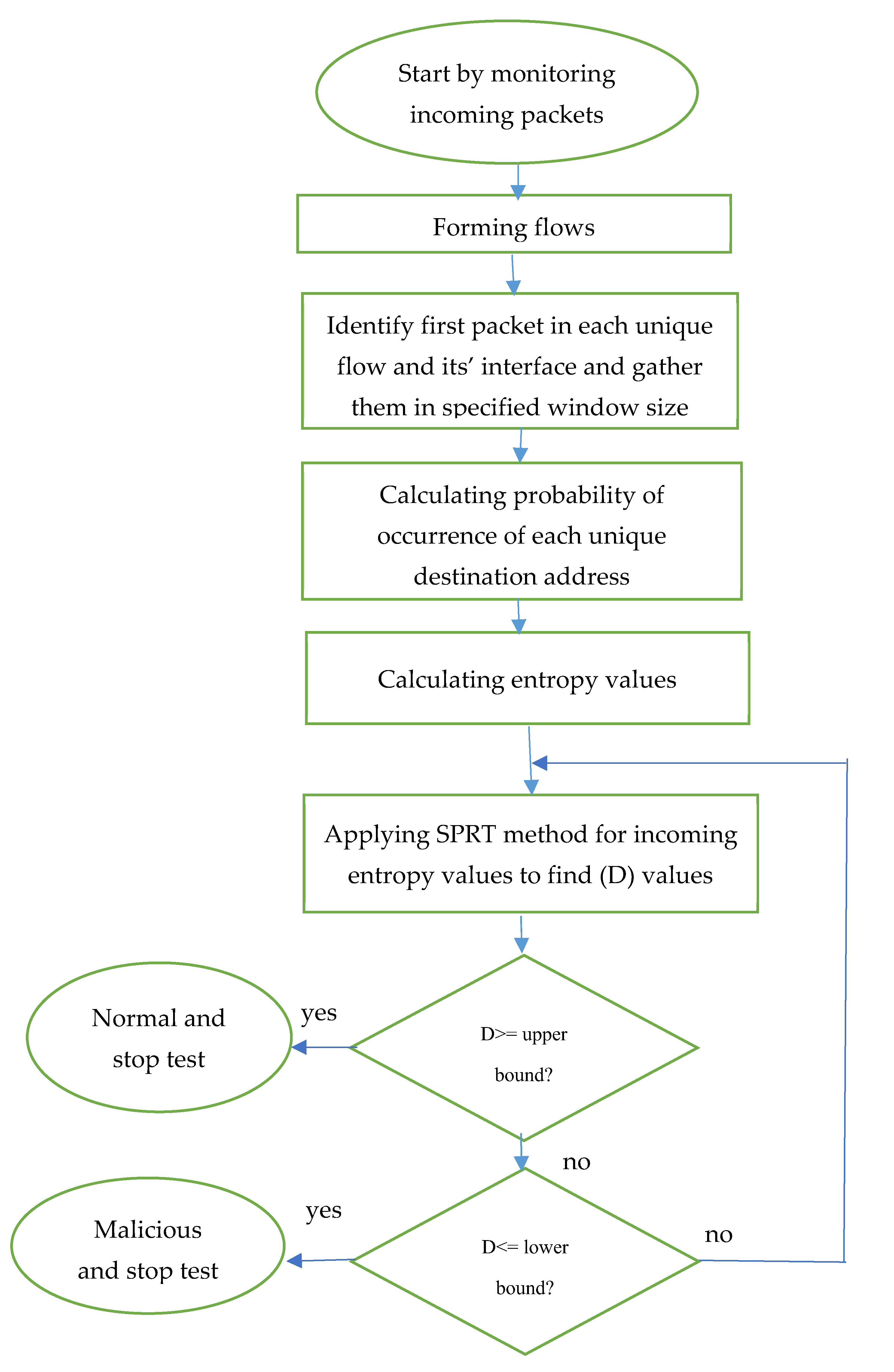

3.1. Flowchart of ESPRT

3.2. First Phase (Entropy)
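The entropy phase is built on Shannon entropy, H = -Σ p_i log2(p_i), where p_i is the relative frequency of the i-th distinct value in a window of traffic. The sketch below is a minimal illustration, not the authors' implementation. It assumes three things: entropy is computed over a single packet-header field (for example, source IP address), the logarithm is base 2, and windows are consecutive and non-overlapping with a fixed packet count. The function names `shannon_entropy` and `windowed_entropy` are hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Iterable, List, Sequence

# Illustrative sketch only: the header field, log base and windowing scheme
# are assumptions, not the method as specified in the paper.


def shannon_entropy(values: Iterable[Hashable]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of `values`.

    H = -sum(p_i * log2(p_i)), where p_i is the relative frequency of the i-th
    distinct value. An empty input is treated as having zero entropy.
    """
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


def windowed_entropy(values: Sequence[Hashable], window_size: int) -> List[float]:
    """Entropy of each consecutive, non-overlapping window of `window_size` items.

    A trailing partial window (fewer than `window_size` items) is discarded.
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    return [
        shannon_entropy(values[start:start + window_size])
        for start in range(0, len(values) - window_size + 1, window_size)
    ]
```

For example, four packets from four different (hypothetical) addresses, `windowed_entropy(["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"], 4)`, give `[2.0]`. Four packets from a single address give `[0.0]`. An entropy-based detector looks for this kind of shift away from the entropy levels seen in normal traffic.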

3.3. Second Phase (SPRT)

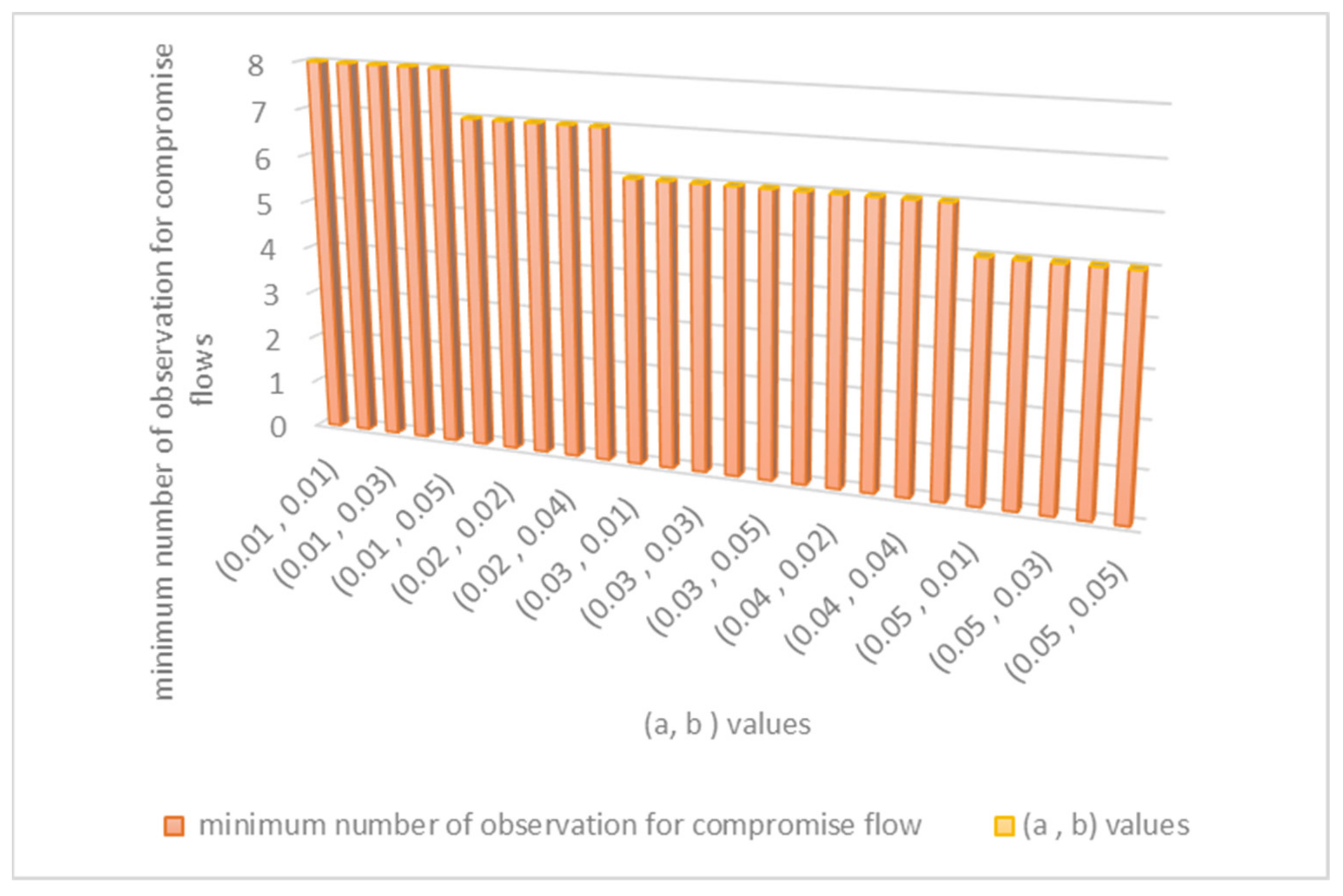
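Wald's sequential probability ratio test (SPRT) accumulates the log-likelihood ratio of incoming observations and stops as soon as the sum crosses one of two boundaries. Crossing the upper boundary log((1 - β)/α) accepts H1; crossing the lower boundary log(β/(1 - α)) accepts H0. Here α and β are the tolerated false-alarm and miss probabilities. The sketch below applies the test to a stream of per-window values. It assumes a Gaussian model with a known normal-traffic mean `mu0`, an attack mean `mu1` and a common standard deviation `sigma`, and it resets the ratio after each decision. The model, the parameter names and the reset policy are all assumptions, not the paper's formulation, and `sprt_gaussian` is a hypothetical name.

```python
import math
from typing import Iterable, List, Tuple

# Illustrative sketch only: the Gaussian observation model and the
# reset-after-decision policy are assumptions, not the paper's formulation.


def sprt_gaussian(
    observations: Iterable[float],
    mu0: float,
    mu1: float,
    sigma: float,
    alpha: float = 0.01,
    beta: float = 0.01,
) -> List[Tuple[int, str]]:
    """Wald SPRT between H0: x ~ N(mu0, sigma^2) and H1: x ~ N(mu1, sigma^2).

    Returns (index, decision) pairs, where decision is "H0" or "H1". The
    cumulative log-likelihood ratio is reset to zero after each decision so
    that testing continues on the rest of the stream.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    if not (0 < alpha < 1 and 0 < beta < 1):
        raise ValueError("alpha and beta must lie in (0, 1)")
    upper = math.log((1 - beta) / alpha)  # accept H1 when the LLR reaches this
    lower = math.log(beta / (1 - alpha))  # accept H0 when the LLR falls to this
    llr = 0.0
    decisions: List[Tuple[int, str]] = []
    for i, x in enumerate(observations):
        # log f1(x) - log f0(x) for two Gaussians with equal variance
        llr += ((x - mu0) ** 2 - (x - mu1) ** 2) / (2 * sigma ** 2)
        if llr >= upper:
            decisions.append((i, "H1"))
            llr = 0.0
        elif llr <= lower:
            decisions.append((i, "H0"))
            llr = 0.0
    return decisions
```

Take `mu0 = 3.0`, `mu1 = 1.0`, `sigma = 0.5` and the default α = β = 0.01. Each value of 1.8 then adds about 1.6 to the ratio, so three consecutive values cross the upper boundary (about 4.6) at the third one: `sprt_gaussian([1.8, 1.8, 1.8], 3.0, 1.0, 0.5)` returns `[(2, "H1")]`. In this formulation the decision boundaries come from α and β rather than being set directly on the observed values.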

4. Results

4.1. Confusion Matrix

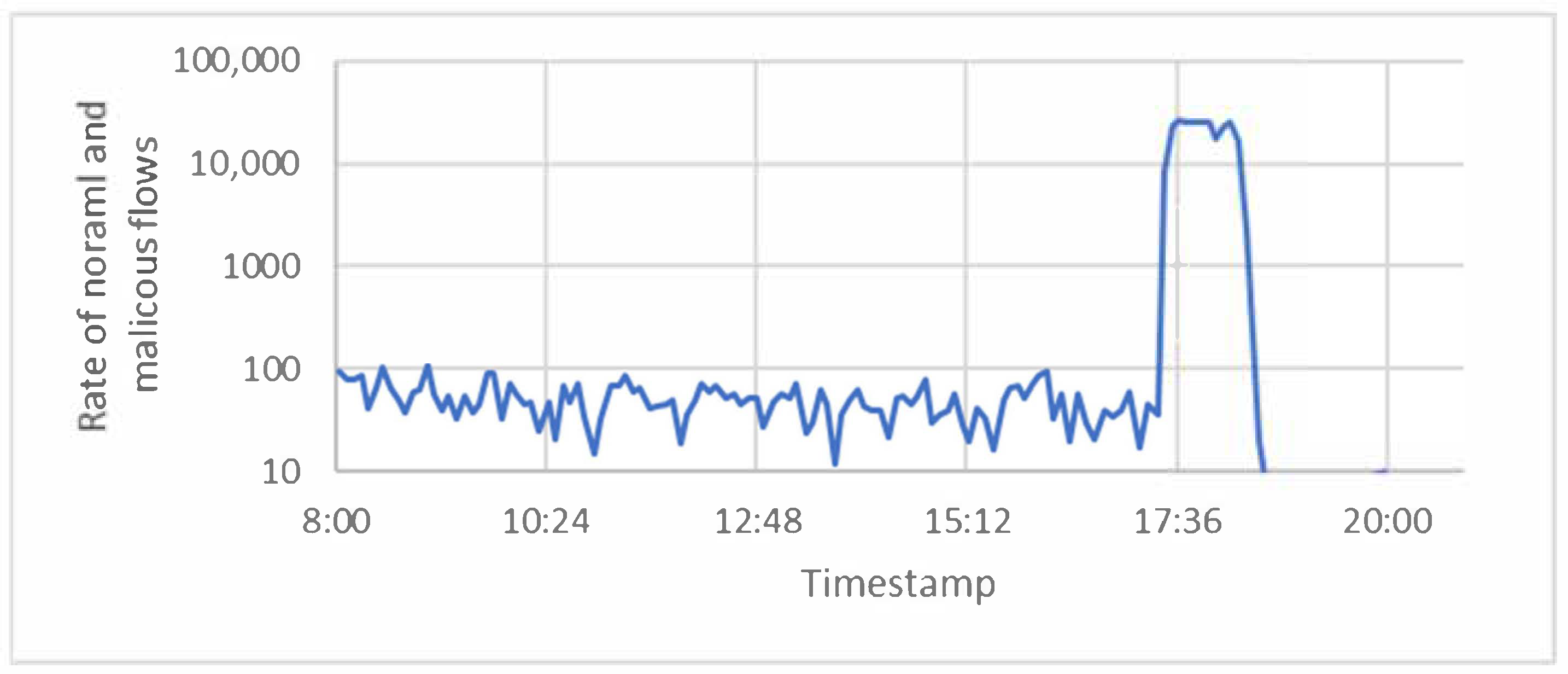

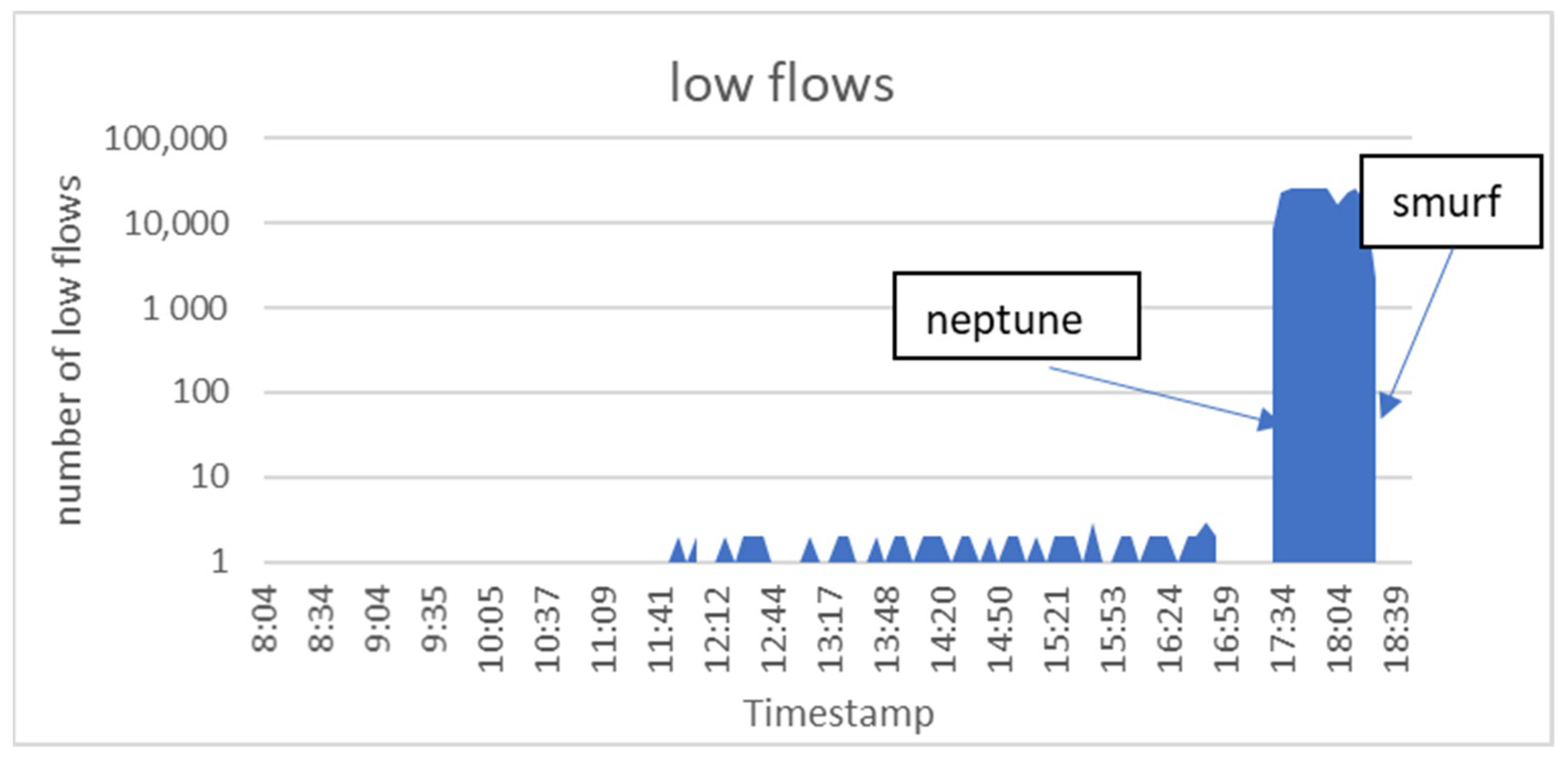
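The result tables report accuracy, F-score, detection rate (DR or TPR), FPR and FNR. All of these follow from the four confusion-matrix counts through their standard definitions, and the sketch below shows that arithmetic. The `ConfusionMatrix` class and `metrics` function are hypothetical names. Returning 0.0 when a denominator is zero is an arbitrary convention chosen for the example and may differ from the paper's handling.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts of classified instances (e.g. traffic windows); hypothetical container."""
    tp: int  # attack instances classified as attack
    tn: int  # normal instances classified as normal
    fp: int  # normal instances classified as attack (false alarms)
    fn: int  # attack instances classified as normal (misses)


def _ratio(num: float, den: float) -> float:
    """Safe division: returns 0.0 when the denominator is zero (an arbitrary convention)."""
    return num / den if den else 0.0


def metrics(cm: ConfusionMatrix) -> Dict[str, float]:
    """Standard detection metrics derived from a binary confusion matrix."""
    total = cm.tp + cm.tn + cm.fp + cm.fn
    tpr = _ratio(cm.tp, cm.tp + cm.fn)  # detection rate (DR), also called recall
    precision = _ratio(cm.tp, cm.tp + cm.fp)
    return {
        "accuracy": _ratio(cm.tp + cm.tn, total),
        "tpr": tpr,
        "fpr": _ratio(cm.fp, cm.fp + cm.tn),  # false alarms among normal instances
        "fnr": _ratio(cm.fn, cm.fn + cm.tp),  # misses among attack instances (1 - TPR)
        "precision": precision,
        "f1": _ratio(2 * precision * tpr, precision + tpr),  # harmonic mean of precision and TPR
    }
```

For instance, tp = 90, tn = 80, fp = 20 and fn = 10 give accuracy 0.85, TPR 0.9, FPR 0.2, FNR 0.1 and F1 = 180/210 ≈ 0.857.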

4.2. DARPA (Friday_1998 Dataset)

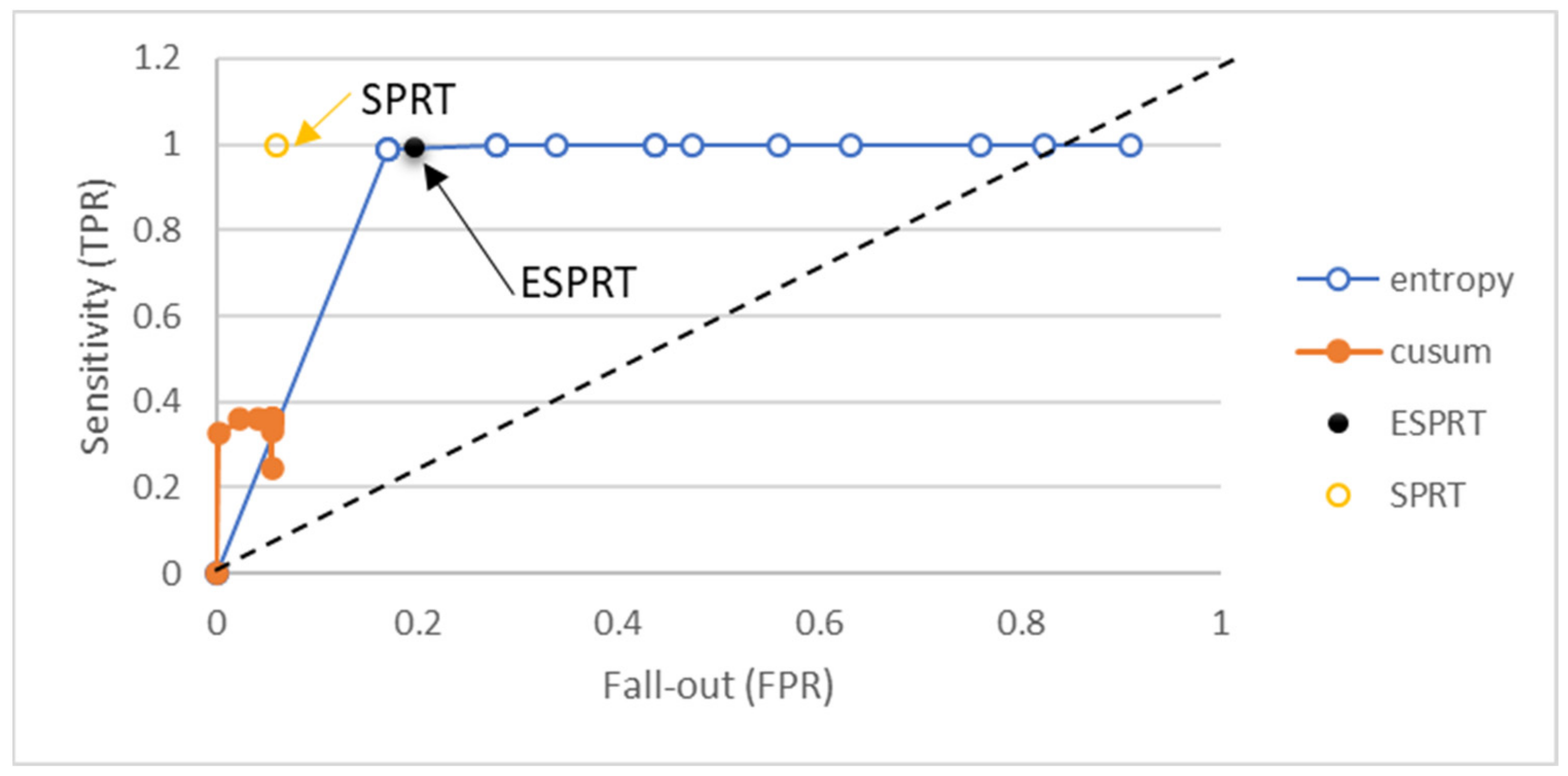

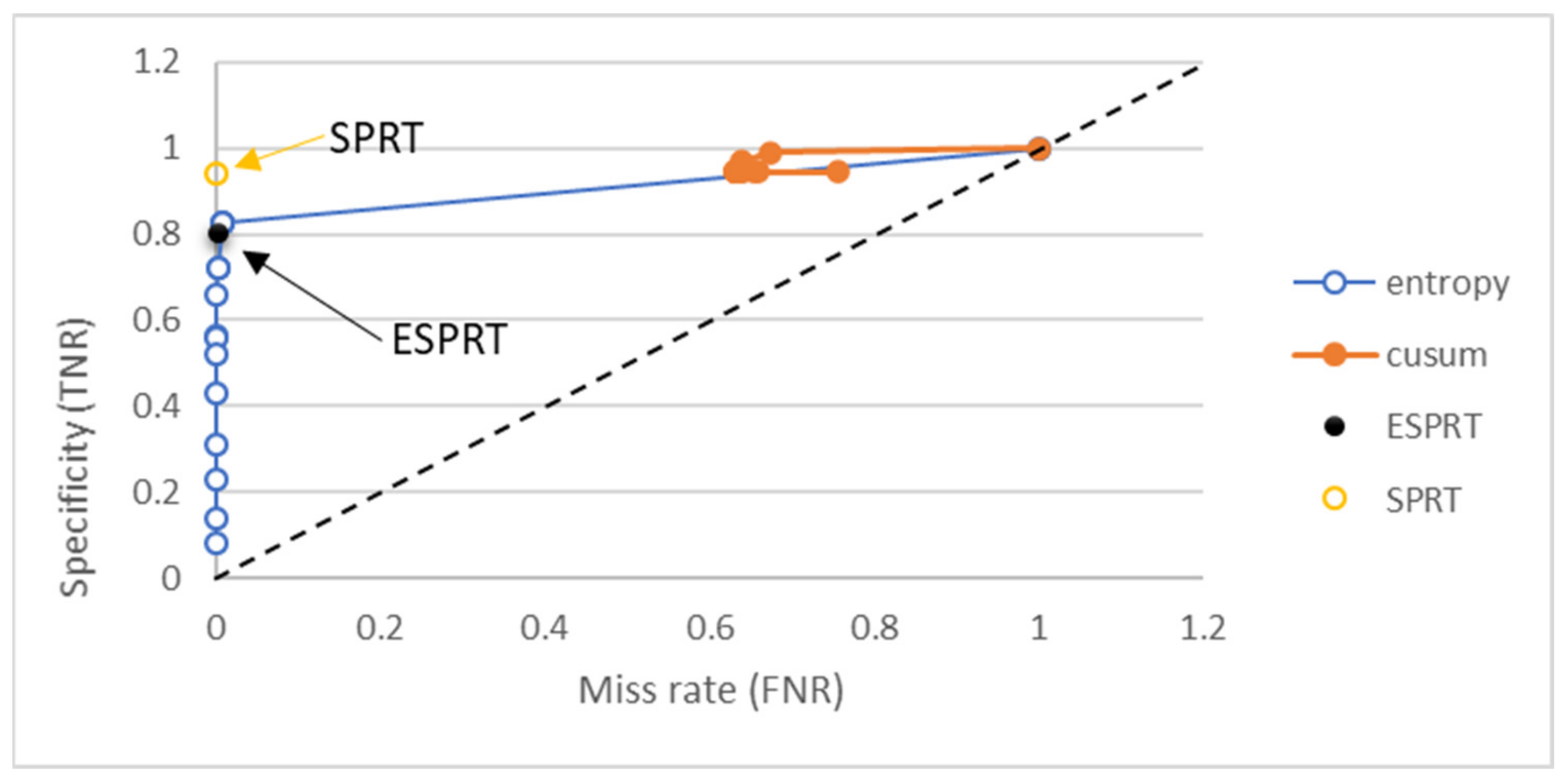

4.3. Results and Comparison Based on the Friday_1998 Dataset

4.4. Results and Comparison Based on DARPA 2000 Dataset

4.5. Results and Comparison Based on (CIC-DDoS2019) Dataset

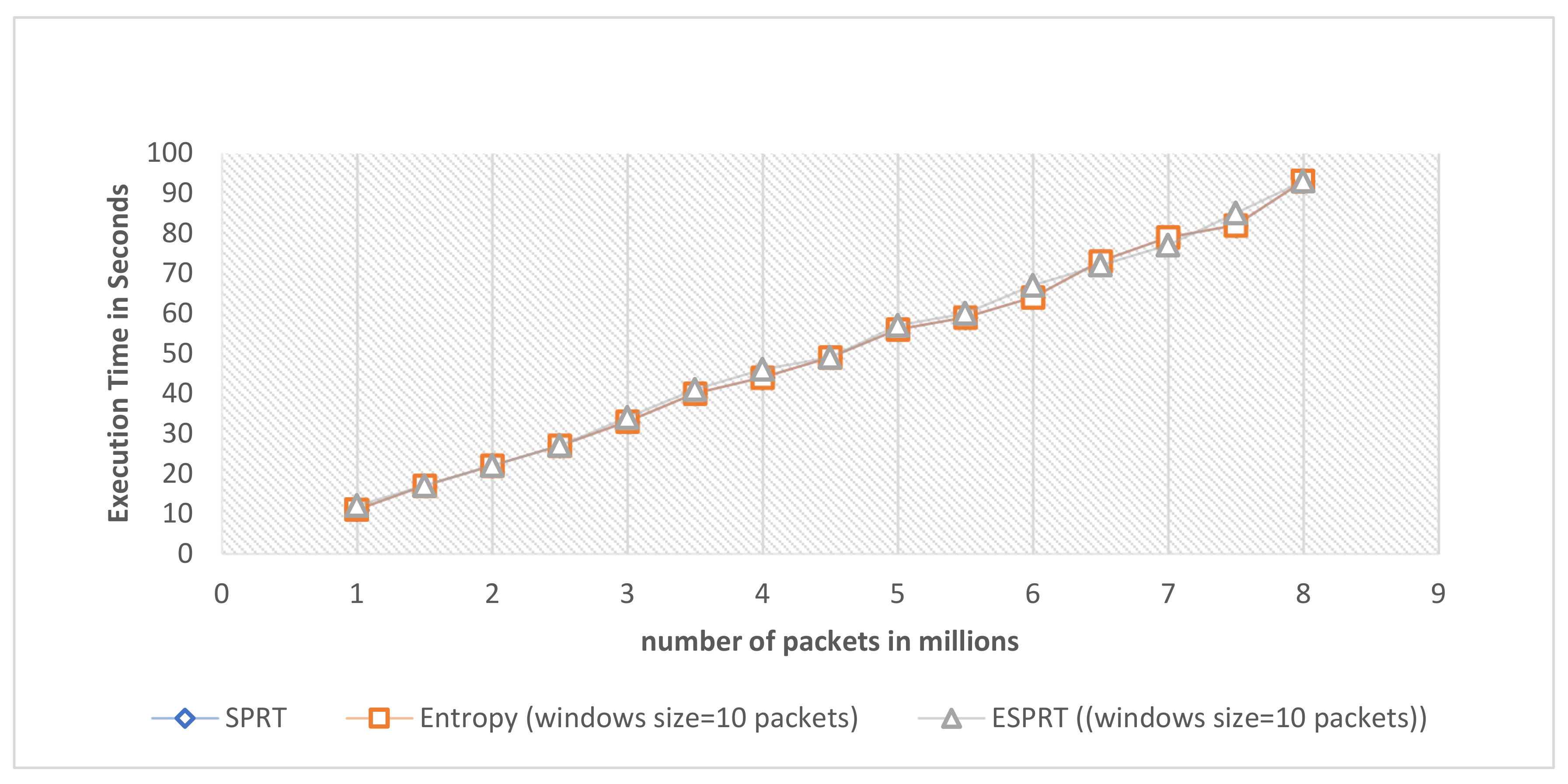

4.6. Scalability of ESPRT

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Alarqan, M.A.; Zaaba, Z.F.; Almomani, A. Detection Mechanisms of DDoS Attack in Cloud Computing Environment: A Survey. In International Conference on Advances in Cyber Security; Springer Nature: Penang, Malaysia, 2020.

2. Jaafar, A.G.; Ismail, S.A.; Abdullah, M.S.; Kama, N.; Azmi, A.; Yusop, O.M. Recent Analysis of Forged Request Headers Constituted by HTTP DDoS. Sensors 2020, 20, 3820.

3. Bhatia, S. Ensemble-Based Model for DDoS Attack Detection and Flash Event Separation. In Proceedings of the Future Technologies Conference, San Francisco, CA, USA, 6–7 December 2016.

4. Bhuyan, M.H.; Bhattacharyya, D.; Kalita, J. An empirical evaluation of information metrics for low-rate and high-rate DDoS attack detection. Pattern Recognit. Lett. 2015, 51, 1–7.

5. Gupta, B.B.; Badve, O.P. Taxonomy of DoS and DDoS attacks and desirable defense mechanism in a Cloud computing environment. Nat. Comput. Appl. Forum 2017, 28, 3655–3682.

6. Nooribakhsh, M.; Mollamotalebi, M. A Review on Statistical Approaches for Anomaly Detection in DDoS Attacks. Inf. Secur. J. A Glob. Perspect. 2020, 29, 118–133.

7. Zargar, S.T.; Joshi, J.; Tipper, D. A Survey of Defense Mechanisms against Distributed Denial of Service (DDoS) Flooding Attacks. IEEE Commun. Surv. Tutor. 2013, 15, 2046–2069.

8. Innab, N.; Alamri, A. The Impact of DDoS on E-Commerce. In Proceedings of the IEEE 21st Saudi Computer Society National Computer Conference (NCC), Riyadh, Saudi Arabia, 25–26 April 2018.

9. McKeay, M.; Ragan, S.; Tuttle, C.; Goedde, A.; LaSeur, L. Gaming—You Can’t Solo Security. Available online: https://www.akamai.com/content/dam/site/en/documents/state-of-the-internet/soti-security-gaming-you-cant-solo-security-report-2020.pdf (accessed on 4 July 2021).

10. Gulisano, V.; Callau-Zori, M.; Fu, Z.; Jiménez-Peris, R.; Papatriantafilou, M.; Patiño-Martínez, M. STONE: A streaming DDoS defense framework. Elsevier Expert Syst. Appl. 2015, 42, 9620–9633.

11. Fortunati, S.; Gini, F.; Greco, M.S.; Farina, A.; Graziano, A.; Giompapa, S. An Improvement of the State-of-the-Art Covariance-based Methods for Statistical Anomaly Detection Algorithms. Signal. Image Video Process. 2016, 10, 687–694.

12. Mousavi, S.M.; St-Hilaire, M. Early Detection of DDoS Attacks against SDN Controllers. In Proceedings of the 2015 International Conference on Computing, Networking and Communications, Communications and Information Security, Anaheim, CA, USA, 16–19 February 2015; pp. 77–81.

13. Koay, A.; Chen, A.; Welch, I.; Seah, W.K.G. A New Multi Classifier System Using Entropy-Based Features in DDoS Attack Detection. In Proceedings of the 2018 International Conference on Information Networking (ICOIN), Chiang Mai, Thailand, 10–12 January 2018; pp. 162–167.

14. Ma, X.; Chen, Y. DDoS Detection Method Based on Chaos Analysis of Network Traffic Entropy. IEEE Commun. Lett. 2014, 18, 114–117.

15. Hoque, N.; Bhattacharyya, D.K.; Kalita, J.K. FFSc: A novel measure for low-rate and high-rate DDoS attack detection using multivariate data analysis. Secur. Commun. Netw. 2016, 9, 2032–2041.

16. Meng, L.; Guo, X. A Detection Method for DDoS Attack against SDN Controller. Adv. Eng. Res. 2017, 146, 292–296.

17. Bista, S.; Chitrakar, R. DDoS Attack Detection Using Heuristics Clustering Algorithm and Naïve Bayes Classification. J. Inf. Secur. 2018, 9, 33–44.

18. Polat, H.; Polat, O.; Cetin, A. Detecting DDoS Attacks in Software-Defined Networks through Feature Selection Methods and Machine Learning Models. Sustainability 2020, 12, 1035.

19. Maranhão, J.P.A.; da Costa, J.P.C.L.; Freitas, E.P.d.; Javidi, E.; Júnior, R.T.d.S. Error-Robust Distributed Denial of Service Attack Detection Based on an Average Common Feature Extraction Technique. Sensors 2020, 20, 5845.

20. Taheri, R.; Javidan, R.; Shojafar, M.; Vinod, P.; Conti, M. Can machine learning model with static features be fooled: An adversarial machine learning approach. Clust. Comput. 2020, 23, 3233–3253.

21. Taheri, R.; Javidan, R.; Pooranian, Z. Adversarial android malware detection for mobile multimedia applications in IoT environments. Multimed. Tools Appl. 2021, 80, 16713–16729.

22. Nazih, W.; Hifny, Y.; Elkilani, W.S.; Dhahri, H.; Abdelkader, T. Countering DDoS Attacks in SIP Based VoIP Networks Using Recurrent Neural Networks. Sensors 2020, 20, 5875.

23. Wang, L.; Liu, Y. A DDoS Attack Detection Method Based on Information Entropy and Deep Learning in SDN. In Proceedings of the IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC 2020), Chongqing, China, 12–14 June 2020; pp. 1–5.

24. Daneshgadeh, S.; Kemmerich, T.; Ahmed, T.; Baykal, N. An Empirical Investigation of DDoS and Flash Event Detection Using Shannon Entropy, KOAD and SVM Combined. In Proceedings of the 2019 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 18–21 February 2019.

25. Özçelik, İ.; Brooks, R.R. Cusum—Entropy: An Efficient Method for DDoS Attack Detection. In Proceedings of the 2016 4th International Istanbul Smart Grid Congress and Fair (ICSG), Istanbul, Turkey, 20–21 April 2016; pp. 1–5.

26. Dong, P.; Du, X.; Zhang, H.; Xu, T. A Detection Method for a Novel DDoS Attack against SDN Controllers by Vast New Low-Traffic Flows. In Proceedings of the IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016.

27. Massachusetts Institute of Technology Lincoln Laboratory. Available online: https://archive.ll.mit.edu/ideval/index.html (accessed on 2 July 2021).

28. Ali, B.H.; Jalal, A.A.; Al-Obaydy, W.N.I. Data loss prevention by using MRSH-v2 algorithm. Int. J. Electr. Comput. Eng. 2020, 10, 3615–3622.

29. Hoque, N.; Kashyap, H.; Bhattacharyya, D. Real-time DDoS attack detection using FPGA. Comput. Commun. 2017, 110, 48.

30. Chonka, A.; Singh, J.; Zhou, W. Chaos theory based detection against network mimicking DDoS attacks. IEEE Commun. Lett. 2009, 13, 717–719.

31. Sarmila, K.; Kavin, G. A Clustering Algorithm for Detecting DDoS Attacks in Networks. Int. J. Recent Eng. Sci. 2014, 1, 24–30.

32. Cepheli, Ö.; Büyükçorak, S.; Kurt, G.K. Hybrid Intrusion Detection System for DDoS Attacks. Hindawi Publ. Corp. J. Electr. Comput. Eng. 2016, 2016, 1075648.

33. Sharafaldin, I.; Lashkari, A.H.; Hakak, S.; Ghorbani, A.A. Developing Realistic Distributed Denial of Service (DDoS) Attack Dataset and Taxonomy. In Proceedings of the IEEE 53rd International Carnahan Conference on Security Technology, Chennai, India, 1–3 October 2019.

| Window Size | Accuracy of ESPRT Detection | Accuracy of Entropy Detection (Thr = 0.2) | Accuracy of Entropy Detection (Thr = 0.5) | Accuracy of Entropy Detection (Thr = 1) | Accuracy of Entropy Detection (Thr = 1.31) | Accuracy of Entropy Detection (Thr = 1.5) |
|---|---|---|---|---|---|---|
| 5 | 0.987 | 0.986 | 0.986 | 0.980 | 0.979 | 0.972 |
| 10 | 0.990 | 0.986 | 0.989 | 0.984 | 0.980 | 0.978 |
| 25 | 0.988 | 0.991 | 0.992 | 0.989 | 0.986 | 0.984 |
| 50 | 0.995 | 0.987 | 0.994 | 0.992 | 0.990 | 0.989 |
| 75 | 0.995 | 0.986 | 0.994 | 0.993 | 0.991 | 0.989 |
| 100 | 0.993 | 0.984 | 0.994 | 0.993 | 0.992 | 0.990 |

| Window Size | F-Score of ESPRT Detection | F-Score of Entropy Detection (Thr = 0.2) | F-Score of Entropy Detection (Thr = 0.5) | F-Score of Entropy Detection (Thr = 1) | F-Score of Entropy Detection (Thr = 1.31) | F-Score of Entropy Detection (Thr = 1.5) |
|---|---|---|---|---|---|---|
| 5 | 0.993 | 0.993 | 0.993 | 0.990 | 0.989 | 0.986 |
| 10 | 0.994 | 0.993 | 0.994 | 0.991 | 0.990 | 0.989 |
| 25 | 0.994 | 0.995 | 0.996 | 0.994 | 0.993 | 0.991 |
| 50 | 0.997 | 0.993 | 0.997 | 0.996 | 0.994 | 0.994 |
| 75 | 0.997 | 0.993 | 0.997 | 0.996 | 0.995 | 0.994 |
| 100 | 0.996 | 0.992 | 0.996 | 0.996 | 0.995 | 0.994 |

| Method | Accuracy | DR or TPR | FPR | FNR | Window Size | Thr |
|---|---|---|---|---|---|---|
| ESPRT | 89.6% to 93.2% (average = 90.5%) | 0.914 to 0.957 (average = 0.929) | 0.154 to 0.270 (average = 0.207) | 0.042 to 0.078 (average = 0.069) | 5 to 120 packets | Not required |
| Real-time DDoS attack [29] | 22% to 100% (average = 90.3%) | NA to 1 (only the maximum value is reported) | 0 to NA | 0.18 to NA | Not required | 0.1 to 0.9 (accuracy is 22% at Thr = 0.3, 70% at Thr = 0.4, and 100% at Thr = 0.5 or higher) |
| Chaos theory [30] | NA | 0.88 to 0.94 (average = 0.907) | 0.05 to 0.45 (average = 0.233) | NA | Not required | 0.1 to 0.9 |
| HCA with Labelling [17,31] | 41% to 95% (average = 72.42%) | 0.048 to 0.383 (average = 0.166) | 0.167 to 0.523 (average = 0.237) | NA | 10 to 120 packets | Not required |
| HCA with Naïve Bayes Classification [17] | 58% to 100% (average = 86.73%) | 0.560 to 1 (average = 0.591) | 0 to 0.352 (average = 0.119) | NA | 10 to 120 packets | Not required |
| H-IDS [32] | NA | 0.921 | 0.18 | NA | Not required | Not required |

| Dataset | Accuracy | F1-Score | DR or TPR | FPR | FNR |
|---|---|---|---|---|---|
| Sample#1 (SAT-03-11-2018_0137) | 0.997 | 0.998 | 0.997 | 0 | 0.002 |
| Sample#2 (SAT-03-11-2018_010) | 0.997 | 0.998 | 0.997 | 0 | 0 |
| Sample#3 (SAT-03-11-2018_030) | 0.975 | 0.987 | 0.975 | 0.287 | 0.024 |
| Sample#4 (SAT-03-11-2018_070) | 0.974 | 0.997 | 0.994 | 0.320 | 0.005 |
| Sample#5 (SAT-03-11-2018_0110) | 0.993 | 0.996 | 0.993 | 0.1 | 0.006 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ali, B.H.; Sulaiman, N.; Al-Haddad, S.A.R.; Atan, R.; Hassan, S.L.M.; Alghrairi, M. Identification of Distributed Denial of Services Anomalies by Using Combination of Entropy and Sequential Probabilities Ratio Test Methods. Sensors 2021, 21, 6453. https://doi.org/10.3390/s21196453
