Detection of Error-Related Potentials in Stroke Patients from EEG Using an Artificial Neural Network

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Data Recording

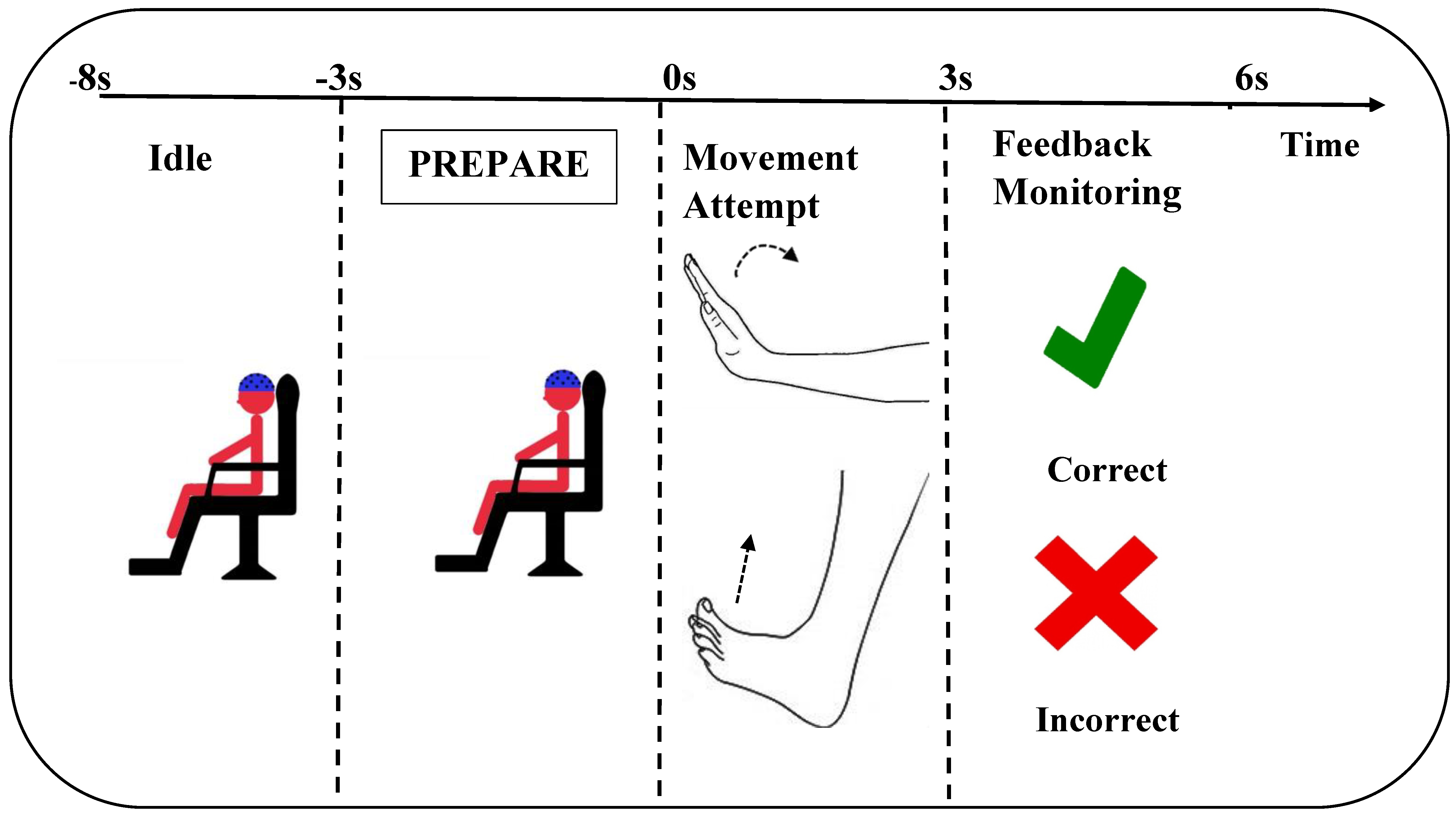

2.3. Experimental Details

2.4. Signal Processing

2.4.1. Pre-Processing

2.4.2. Classification

2.5. Statistics

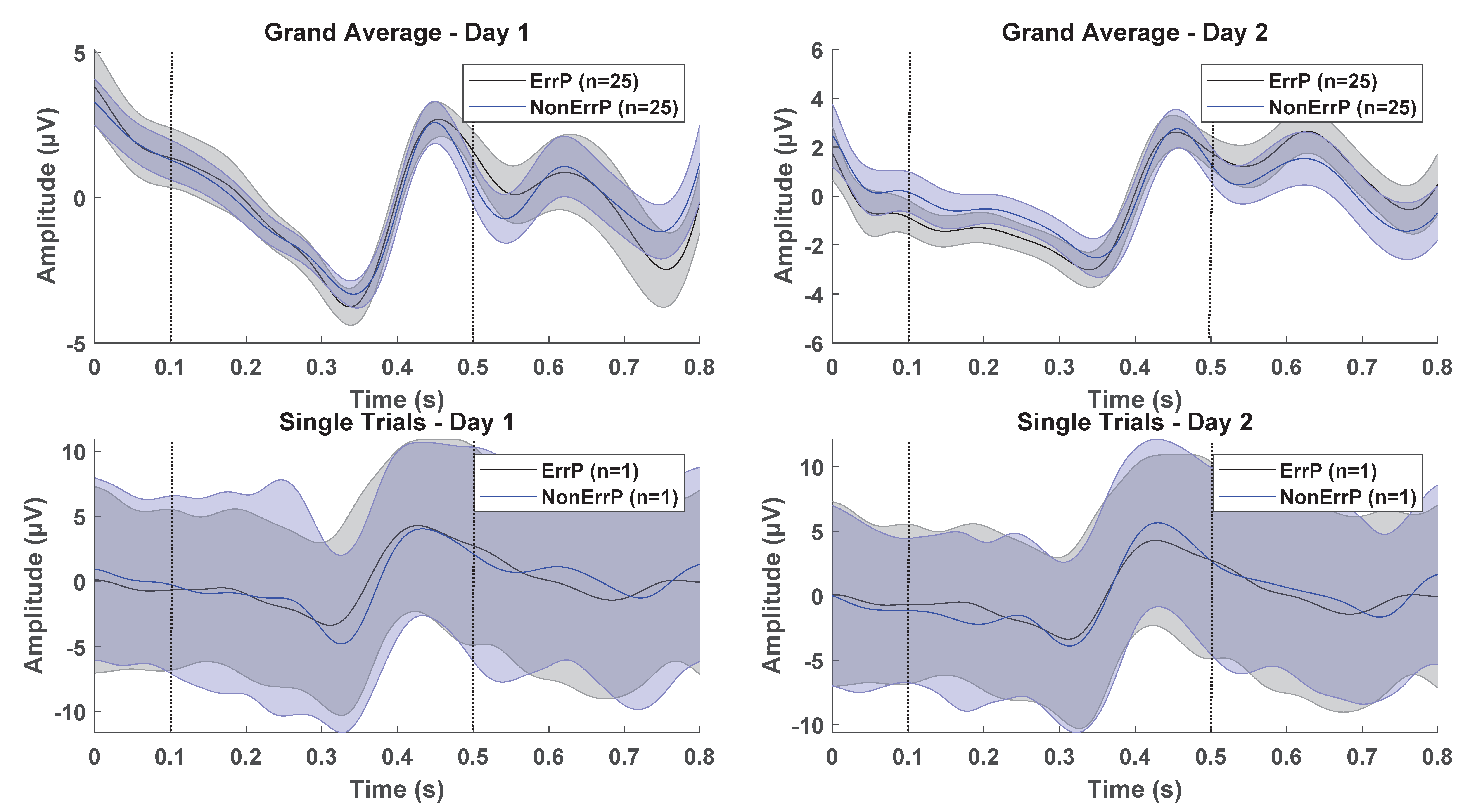

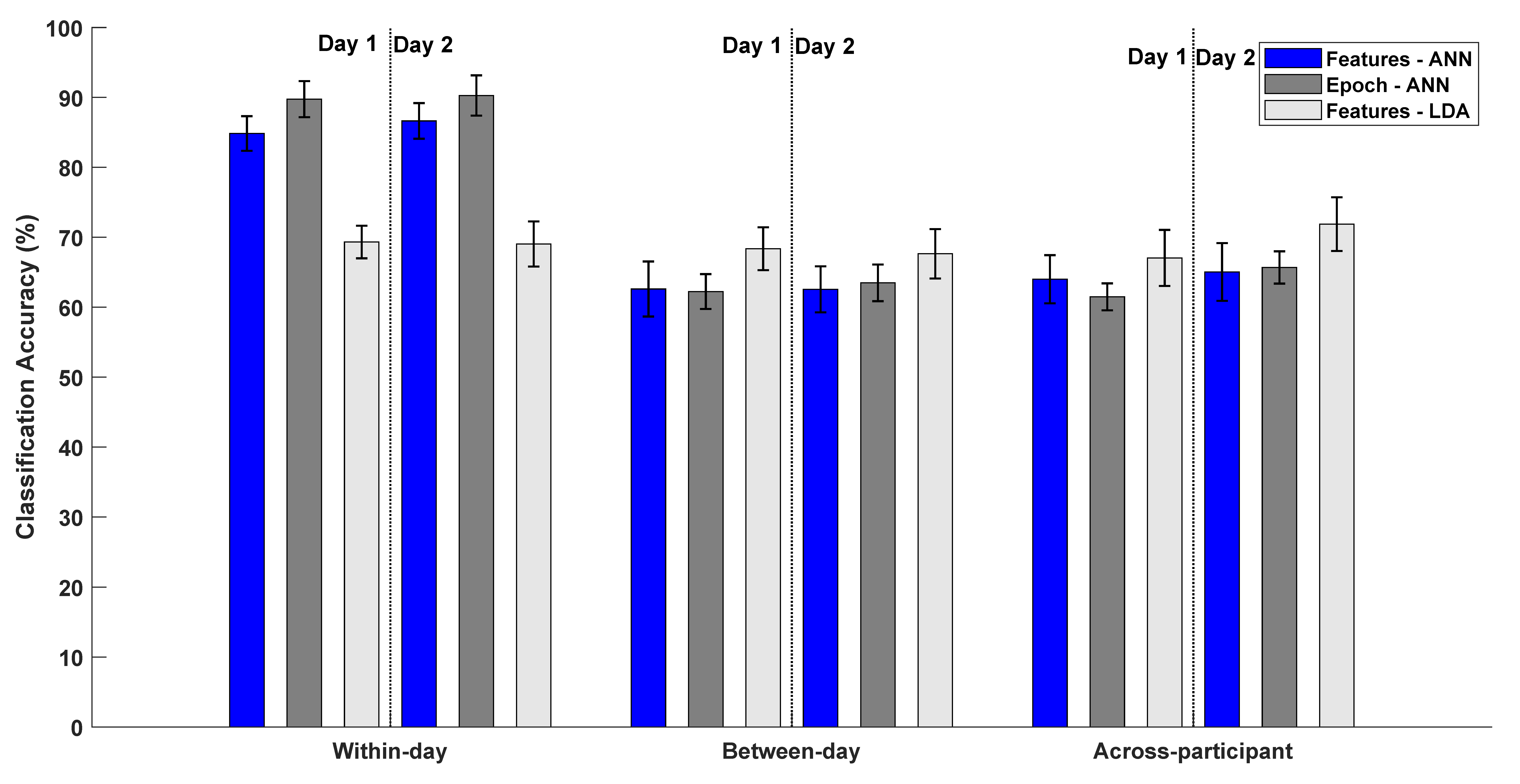

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


Participant characteristics.

| Subject ID | Gender | Age (Years) | Affected Side | Type of Stroke | Time Since Injury (Days) | Brunnstrom Stage |
|---|---|---|---|---|---|---|
| 1 | M | 48 | Right | Haemorrhage | 91 | II |
| 2 | M | 55 | Right | Ischemic | 172 | V |
| 3 | M | 41 | Left | Ischemic | 70 | III |
| 4 | M | 50 | Left | Haemorrhage | 90 | III |
| 5 | M | 57 | Right | Haemorrhage | 52 | V |
| 6 | M | 52 | Right | Ischemic | 188 | V |
| 7 | M | 24 | Left | Haemorrhage | 180 | IV |
| 8 | F | 32 | Left | Ischemic | 25 | II |
| 9 | F | 26 | Left | Haemorrhage | 20 | I |
| 10 | M | 60 | Right | Ischemic | 87 | IV |
| 11 | M | 54 | Left | Ischemic | 220 | VII |
| 12 | M | 46 | Left | Ischemic | 42 | III |
| 13 | M | 58 | Right | Ischemic | 84 | III |
| 14 | M | 37 | Right | Haemorrhage | 36 | II |
| 15 | M | 42 | Left | Haemorrhage | 118 | V |
| 16 | M | 24 | Left | Haemorrhage | 45 | IV |
| 17 | F | 26 | Right | Ischemic | 12 | I |
| 18 | M | 62 | Right | Haemorrhage | 118 | III |
| 19 | M | 30 | Right | Ischemic | 60 | III |
| 20 | F | 53 | Left | Ischemic | 93 | IV |
| 21 | F | 38 | Right | Haemorrhage | 45 | VI |
| 22 | F | 28 | Left | Ischemic | 27 | V |
| 23 | M | 45 | Left | Ischemic | 90 | IV |
| 24 | M | 35 | Left | Haemorrhage | 17 | II |
| 25 | M | 45 | Right | Haemorrhage | 280 | VI |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Usama, N.; Niazi, I.K.; Dremstrup, K.; Jochumsen, M. Detection of Error-Related Potentials in Stroke Patients from EEG Using an Artificial Neural Network. Sensors 2021, 21, 6274. https://doi.org/10.3390/s21186274
