A Novel Automate Python Edge-to-Edge: From Automated Generation on Cloud to User Application Deployment on Edge of Deep Neural Networks for Low Power IoT Systems FPGA-Based Acceleration

Abstract

1. Introduction

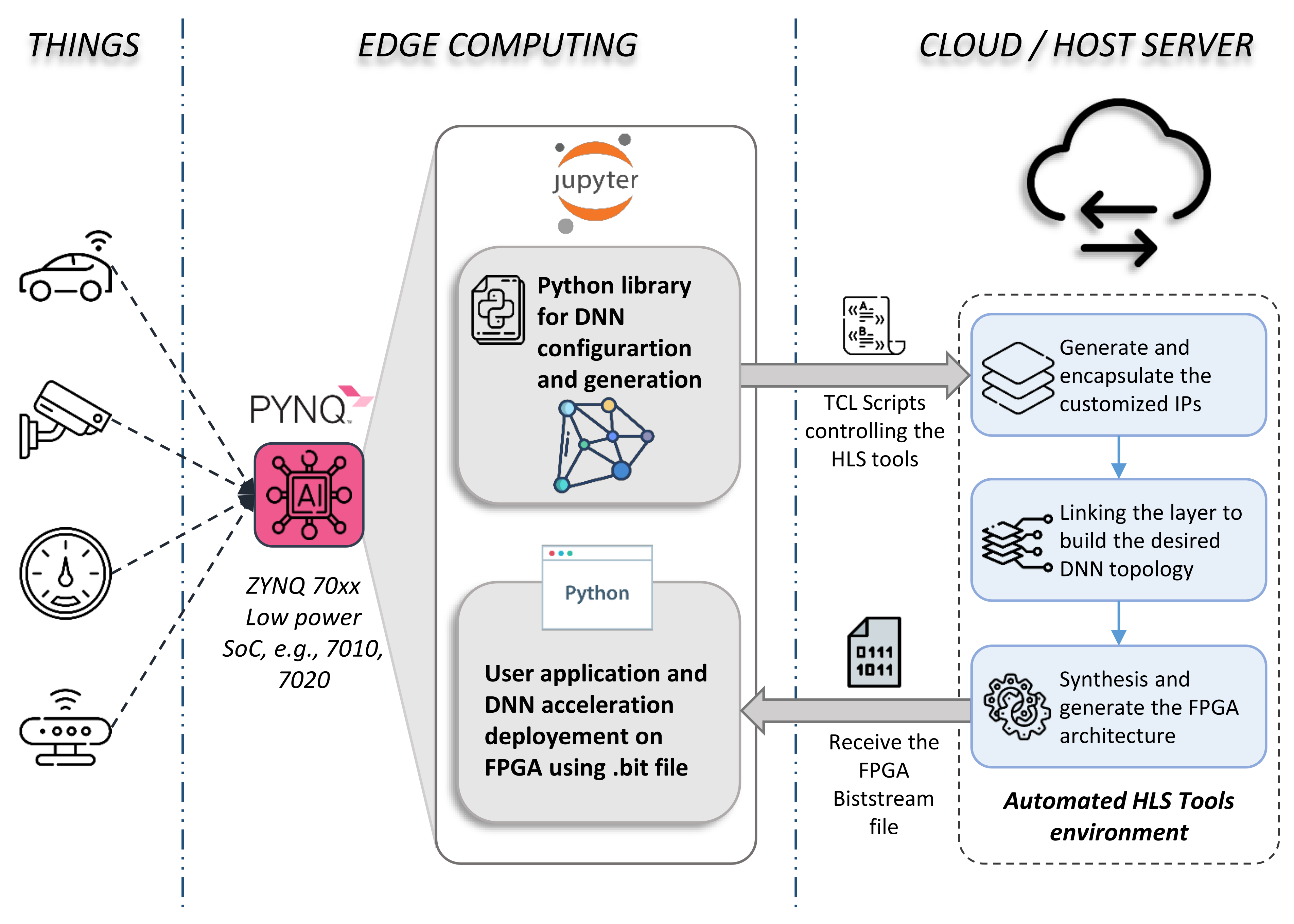

- A Python interface allows the user to customize the DNN implementation at the Edge based on the target platform’s limited hardware resources and the desired performance.

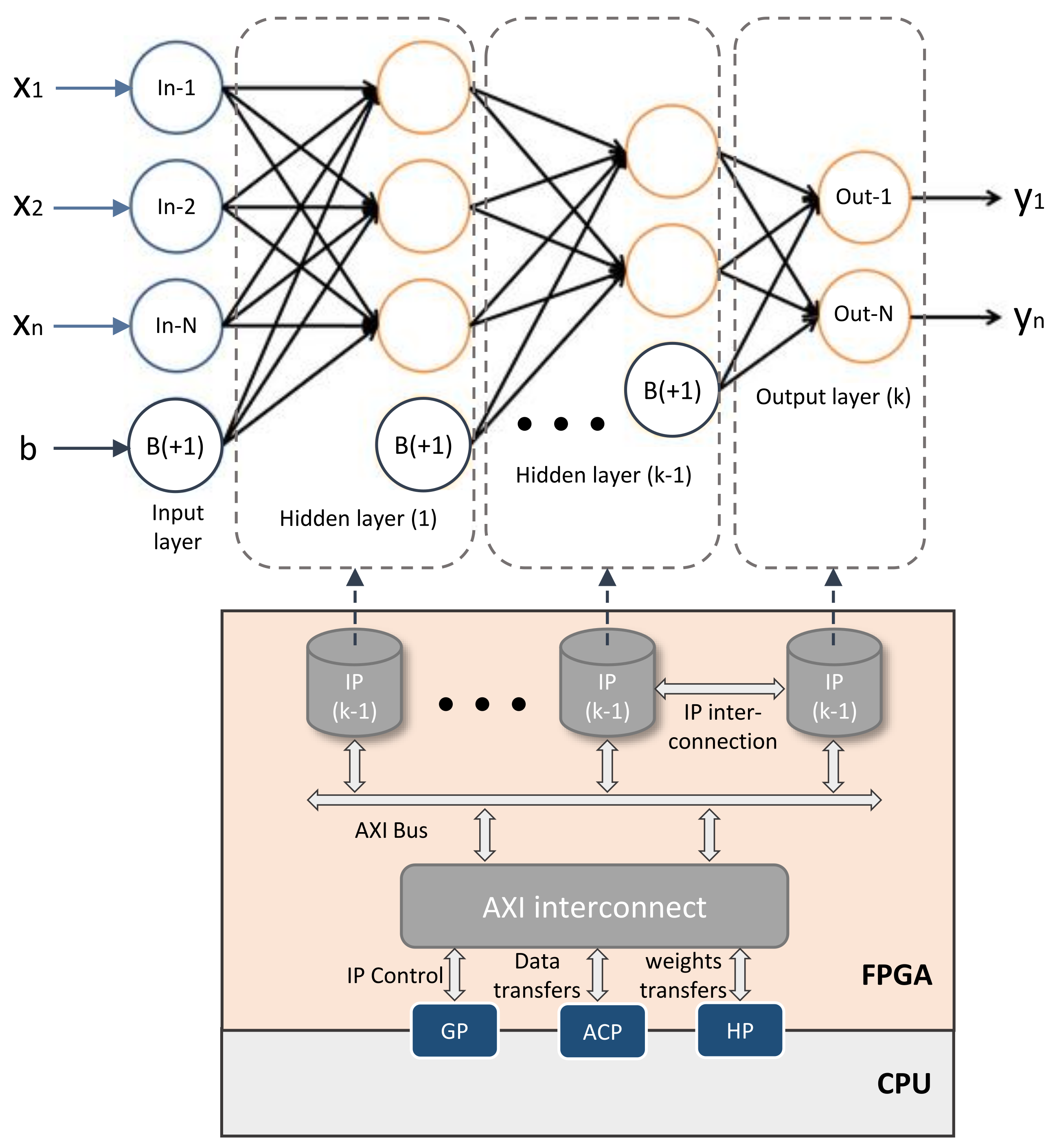

- The optimization techniques and the interface protocols of each IP are balanced to meet design-entry requirements and FPGA restrictions. To this end, the customization process starts from a C++ template with several default input parameters that optimize and encapsulate the IP layer to be generated. The generated IPs are then stacked to build the desired DNN topology.

- Once the customization is complete, the framework generates a TCL file that drives the HLS development tools pre-installed on the host server (or on a commercial cloud such as AWS or Google Cloud).

- The IP hardware (bitstream file) and the cloud-generated software library can then be deployed to the Edge directly from the user application. The generated DNN can also be uploaded to an online platform (e.g., GitHub or the AWS Marketplace) to be shared with other users.

- Thanks to our new design flow, the user can easily customize, optimize, generate, and use DNN models on the Edge without having to master hardware development tools, which almost all state-of-the-art works require. To our knowledge, this is the first automated environment that develops and deploys DNN architectures based on end-to-end FPGA acceleration.

2. Related Works

3. General Overview of the Proposed Framework

4. Framework at the Edge: DNN Configuration and TCL Generation

4.1. IP Layer C/C++ Base Template

Listing 1. Pseudo-code for the C/C++ template used for a DNN layer.

```
// Multiply the bias value by the weight of the j-th neuron
for j = 1 to N_Output
    B[j] = Wb[j] * b
end
// Multiply and accumulate the layer weights for all neurons (X[j] starts at 0)
for i = 1 to N_Input
    for j = 1 to N_Output
        X[j] = X[j] + x[i] * W[i][j]
    end
end
// Add the weighted bias to the weighted inputs and produce the output
// neurons via the activation function
for j = 1 to N_Output
    y[j] = f(X[j] + B[j])   // activation function of each neuron at the k-th layer
end
```
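As a software sanity check of the dataflow in Listing 1, the following minimal Python sketch implements the same three loops for one fully connected layer. It assumes that W is indexed as W[i][j] (from input i to output neuron j), that a single bias value b is weighted per neuron by Wb[j], and that the activation is passed in as a function. The names `dense_layer` and `sigmoid` are illustrative only; this is not the framework's HLS template.

```python
import math
from typing import Callable, List, Sequence


def dense_layer(
    x: Sequence[float],
    W: Sequence[Sequence[float]],  # W[i][j]: weight from input i to neuron j
    Wb: Sequence[float],           # Wb[j]: bias weight of neuron j
    b: float,                      # shared bias value
    f: Callable[[float], float],   # activation function
) -> List[float]:
    n_input, n_output = len(x), len(Wb)
    # First loop: weight the bias for each output neuron
    B = [Wb[j] * b for j in range(n_output)]
    # Second loop nest: multiply and accumulate the weighted inputs
    X = [0.0] * n_output
    for i in range(n_input):
        for j in range(n_output):
            X[j] += x[i] * W[i][j]
    # Third loop: add the weighted bias and apply the activation function
    return [f(X[j] + B[j]) for j in range(n_output)]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))
```

For example, `dense_layer([1.0, 2.0], [[0.5, -1.0], [0.25, 0.0]], [1.0, 2.0], 1.0, lambda v: max(0.0, v))` returns `[2.0, 1.0]`. In hardware, each of these loops is a candidate for the pipelining and unrolling options exposed by the Python library.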

4.2. Python Library: DNN Customization and TCL Script Generation

Listing 2. Python framework to automate the configuration and generation of the DNN model for a specific FPGA.

```python
from DNN_framework_lib.layers import Inputlayer, Hiddenlayer, Outputlayer
from DNN_framework_lib.models import Sequential

# Configuration: layer size, loop optimizations, and AXI interface of each port
input_layer = Inputlayer(
    size=input_size,
    loop1="unrolling",
    loop3="pipeline_and_unrolling",
    input_layer="AXI-MM",
    output_layer="AXI-Stream",
)
hidden_layers = [
    Hiddenlayer(
        size=layer2_size,
        loop3="pipeline_and_unrolling",
        input_layer="AXI-Stream",
        output_layer="AXI-MM",
    ),
    # ...
    Hiddenlayer(
        size=layerN_size,
        loop1="unrolling",
    ),
]
output_layer = Outputlayer(
    size=output_size,
)

# Stack the layers for the target board and compile the model
model = Sequential(board_name, ...)
model.add(input_layer)
for layer in hidden_layers:
    model.add(layer)
model.add(output_layer)
model.compile()
```
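The heart of the TCL generation step is turning the per-layer options of Listing 2 into HLS directives. The sketch below shows one possible mapping, assuming Vivado HLS directive commands (`set_directive_pipeline`, `set_directive_unroll`, `set_directive_interface`) and assuming that AXI-MM maps to the `m_axi` interface mode and AXI-Stream to `axis`. The `LayerConfig` class, the loop labels, and the port names `in_data`/`out_data` are hypothetical; the framework's actual TCL output is not shown in the text.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Assumed mapping from the interface names of Listing 2 to HLS interface modes
INTERFACE_MODES = {"AXI-MM": "m_axi", "AXI-Stream": "axis"}


@dataclass
class LayerConfig:
    name: str                    # hypothetical HLS top-function name of the layer
    size: int                    # would be passed to the C++ template; unused here
    loops: Dict[str, str]        # loop label -> optimization, e.g. {"loop1": "unrolling"}
    input_layer: Optional[str] = None
    output_layer: Optional[str] = None


def directives_for(layer: LayerConfig) -> List[str]:
    lines = []
    for label, opt in sorted(layer.loops.items()):
        location = f'"{layer.name}/{label}"'
        if "pipeline" in opt:
            lines.append(f"set_directive_pipeline {location}")
        if "unrolling" in opt:
            lines.append(f"set_directive_unroll {location}")
    # Hypothetical port names for the layer's input and output data
    for port, proto in (("in_data", layer.input_layer), ("out_data", layer.output_layer)):
        if proto is not None:
            mode = INTERFACE_MODES[proto]
            lines.append(f'set_directive_interface -mode {mode} "{layer.name}" {port}')
    return lines
```

Emitting both a pipeline and an unroll directive on the same loop for `"pipeline_and_unrolling"` is itself an assumption; an implementation might instead pipeline an outer loop and unroll the inner one.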

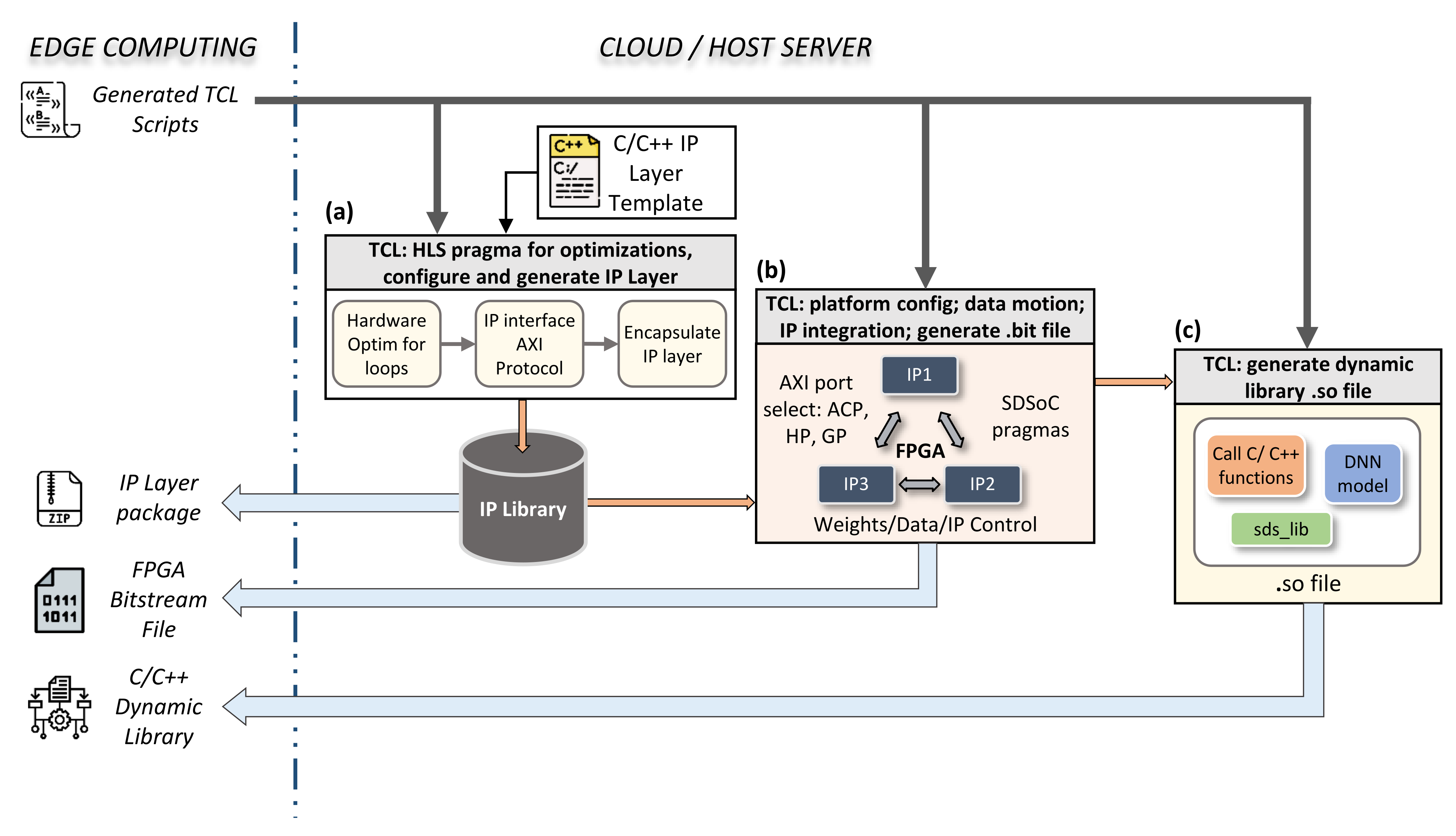

5. Framework on the Cloud: Automate HLS Tools for Generating FPGA Architecture

5.1. Auto IP Layer Generation

Listing 3. TCL script that automates HLS tools on the Cloud/Host server.

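The contents of Listing 3 are not reproduced here. As a hedged illustration of what such a script typically contains, the sketch below assembles a Vivado HLS project script that synthesizes one layer and exports it to the IP catalog. The part number (a Zynq-7020 device of the kind used on some PYNQ boards), the 10 ns clock, the file names, and the `N_INPUT`/`N_OUTPUT` macro names are assumptions for illustration only.

```python
from typing import List


def hls_project_tcl(
    top: str,
    source: str,
    n_input: int,
    n_output: int,
    directives: List[str],
    part: str = "xc7z020clg400-1",  # assumed Zynq-7020 part; adjust per board
    clock_ns: float = 10.0,
) -> str:
    # Hypothetical macros that size the C++ layer template at compile time
    cflags = f"-DN_INPUT={n_input} -DN_OUTPUT={n_output}"
    lines = [
        f"open_project -reset {top}_prj",
        f"set_top {top}",
        f'add_files {source} -cflags "{cflags}"',
        'open_solution -reset "solution1"',
        f"set_part {{{part}}}",
        f"create_clock -period {clock_ns} -name default",
        *directives,                         # per-layer optimization directives
        "csynth_design",                     # C-to-RTL synthesis
        "export_design -format ip_catalog",  # package the layer as a reusable IP
        "exit",
    ]
    return "\n".join(lines) + "\n"
```

The resulting file could be run in batch mode with `vivado_hls -f <script>.tcl` (or `vitis_hls -f <script>.tcl` on newer tool versions), once per layer IP.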

5.2. Auto IP Layer Integration and Software Control

5.3. Auto Dynamic Library and FPGA Binary Generation

6. Framework to/from Cloud to Edge: Communication between the Cloud and the Edge Applications

Listing 4. Pseudo-code of the function that generates the bitstream (.bit) and shared library (.so) files.

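Listing 4 is likewise not reproduced. A minimal sketch of the same idea, assuming the block design, implementation, and `write_bitstream` steps are already scripted in a Vivado TCL file and the software driver is a single C++ source, chains a Vivado batch run with a shared-library compile. The file names and library name are hypothetical; by default the function only returns the commands (dry run) so they can be inspected before execution.

```python
import subprocess
from pathlib import Path
from typing import List


def build_commands(bd_tcl: str, driver_src: str, lib_name: str, cxx: str = "g++") -> List[List[str]]:
    return [
        # Hardware: the TCL script is assumed to build the block design, run
        # synthesis and implementation, and call write_bitstream (.bit file).
        ["vivado", "-mode", "batch", "-source", bd_tcl],
        # Software: compile the driver into a shared library for the Edge application.
        [cxx, "-shared", "-fPIC", "-O2", "-o", f"lib{lib_name}.so", driver_src],
    ]


def generate_artifacts(
    workdir: Path,
    bd_tcl: str,
    driver_src: str,
    lib_name: str,
    cxx: str = "g++",
    dry_run: bool = True,
) -> List[List[str]]:
    cmds = build_commands(bd_tcl, driver_src, lib_name, cxx)
    if not dry_run:
        for cmd in cmds:
            # check=True stops the flow at the first failing tool invocation
            subprocess.run(cmd, cwd=workdir, check=True)
    return cmds
```

For a Zynq-7000 target the shared library must run on the ARM Cortex-A9, so `cxx` would typically be a cross-compiler such as `arm-linux-gnueabihf-g++`, or the compile step would run on the board itself; the text does not state which approach the framework uses.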

Listing 5. Pseudo-code of the server runtime main loop.

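The server's main loop is not reproduced either. One plausible structure, sketched here under the assumption of a simple in-process job queue, is a loop that blocks on incoming requests, runs the generation flow, records the result or the error, and exits on a sentinel value. `Job`, `build`, and `results` are hypothetical names; a real server would receive requests over the network rather than from a `queue.Queue`.

```python
import queue
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Job:
    job_id: int
    model_config: Dict  # configuration sent by the Edge (hypothetical format)


def server_main_loop(
    jobs: "queue.Queue",
    build: Callable[[Dict], Dict[str, str]],
    results: Dict[int, Dict[str, str]],
) -> None:
    while True:
        job = jobs.get()  # block until a request arrives
        if job is None:   # sentinel: shut the server down
            jobs.task_done()
            break
        try:
            # e.g. run HLS, integration, and bitstream/library generation
            results[job.job_id] = build(job.model_config)
        except Exception as exc:
            # report the failure to the client instead of stopping the server
            results[job.job_id] = {"error": str(exc)}
        finally:
            jobs.task_done()
```

Catching every exception per job keeps one faulty configuration from taking down a shared build server.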

Listing 6. Pseudo-code of our Python API for model creation.

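As a stand-in for the model-creation API, the hypothetical `ModelSpec` class below records layers on the Edge side, checks that each layer's input interface matches the previous layer's output interface (an assumption about what a consistent design requires), and serializes the description to JSON for transmission to the Cloud. It is an illustration, not the framework's API.

```python
import json
from typing import Any, Dict, List, Optional


class ModelSpec:
    """Hypothetical Edge-side model description sent to the Cloud."""

    def __init__(self, board_name: str) -> None:
        self.board_name = board_name
        self.layers: List[Dict[str, Any]] = []

    def add(self, size: int, input_if: Optional[str] = None,
            output_if: Optional[str] = None, **loops: str) -> "ModelSpec":
        # Reject a layer whose input protocol differs from the previous output
        if self.layers and input_if is not None:
            prev_out = self.layers[-1]["output_if"]
            if prev_out is not None and prev_out != input_if:
                raise ValueError(f"interface mismatch: {prev_out} -> {input_if}")
        self.layers.append(
            {"size": size, "input_if": input_if, "output_if": output_if, "loops": loops}
        )
        return self  # allow chained calls

    def topology(self) -> str:
        return "-".join(str(layer["size"]) for layer in self.layers)

    def to_json(self) -> str:
        return json.dumps({"board": self.board_name, "layers": self.layers}, sort_keys=True)
```

For instance, chaining `.add(784, "AXI-MM", "AXI-Stream", loop1="unrolling").add(100, "AXI-Stream", "AXI-MM").add(50).add(10)` yields the topology string `784-100-50-10`.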

Listing 7. Pseudo-code showing the adaptation and use of the model.

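On the Edge, the model is used through the cloud-generated software library. The sketch below assumes the library exports a C function `dnn_predict(const float *in, float *out)`, a hypothetical symbol, and wraps it with `ctypes`. The `EdgeClassifier` class scales 8-bit pixels to [0, 1] (assumed preprocessing for MNIST-style input) and returns the index of the highest output score. Any callable can serve as the backend, so the same code can be checked against a software reference.

```python
import ctypes
from typing import Callable, List, Sequence

Backend = Callable[[Sequence[float]], Sequence[float]]


def ctypes_backend(lib_path: str, n_in: int, n_out: int) -> Backend:
    """Wrap a shared library exporting the hypothetical symbol dnn_predict."""
    lib = ctypes.CDLL(lib_path)
    fn = lib.dnn_predict
    fn.argtypes = [ctypes.POINTER(ctypes.c_float), ctypes.POINTER(ctypes.c_float)]
    fn.restype = None

    def run(x: Sequence[float]) -> List[float]:
        if len(x) != n_in:
            raise ValueError(f"expected {n_in} inputs, got {len(x)}")
        in_buf = (ctypes.c_float * n_in)(*x)
        out_buf = (ctypes.c_float * n_out)()
        fn(in_buf, out_buf)  # the driver is assumed to fill out_buf with n_out scores
        return list(out_buf)

    return run


class EdgeClassifier:
    def __init__(self, backend: Backend) -> None:
        self.backend = backend

    def predict(self, pixels: Sequence[int]) -> int:
        x = [p / 255.0 for p in pixels]  # assumed preprocessing: 8-bit pixels to [0, 1]
        scores = self.backend(x)
        return max(range(len(scores)), key=lambda k: scores[k])  # arg-max class
```

On a PYNQ board the bitstream would typically be loaded first, for example with `pynq.Overlay("<design>.bit")`, before calling `EdgeClassifier(ctypes_backend("lib<name>.so", 784, 10))`.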

7. Experimental Results and Discussion

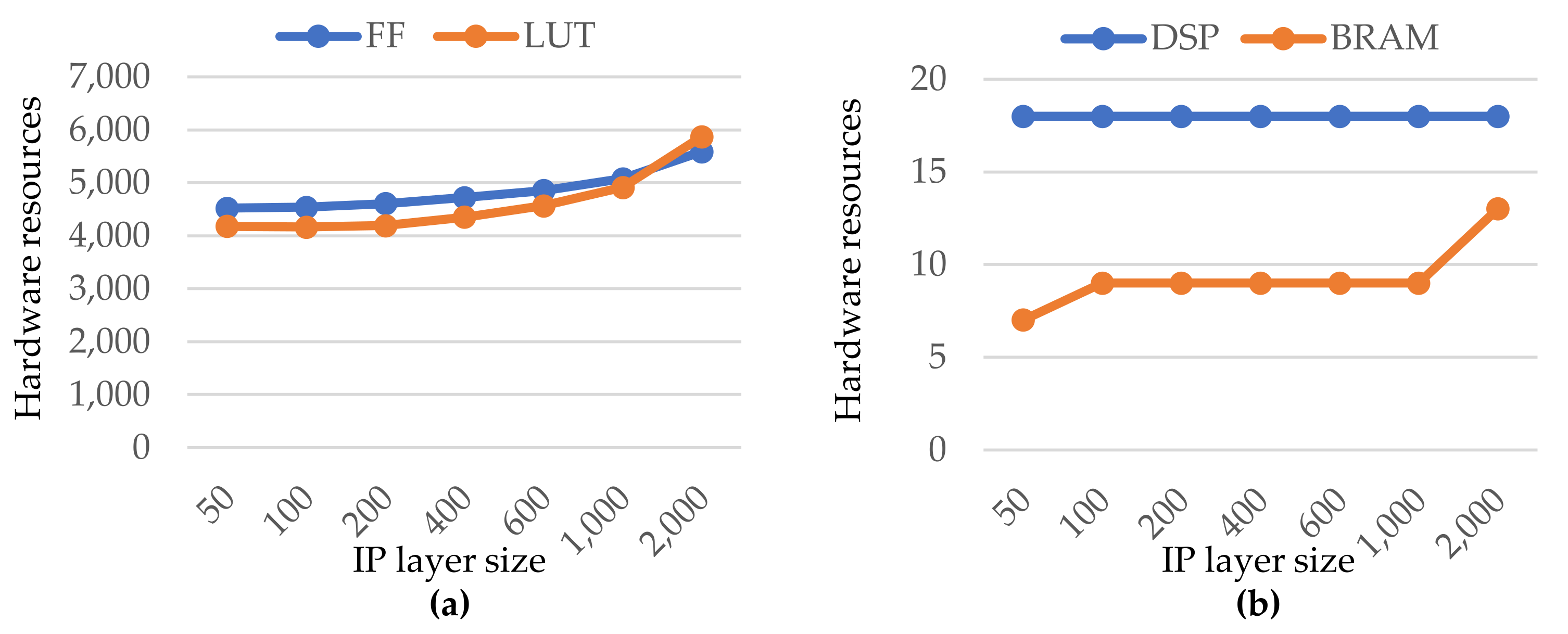

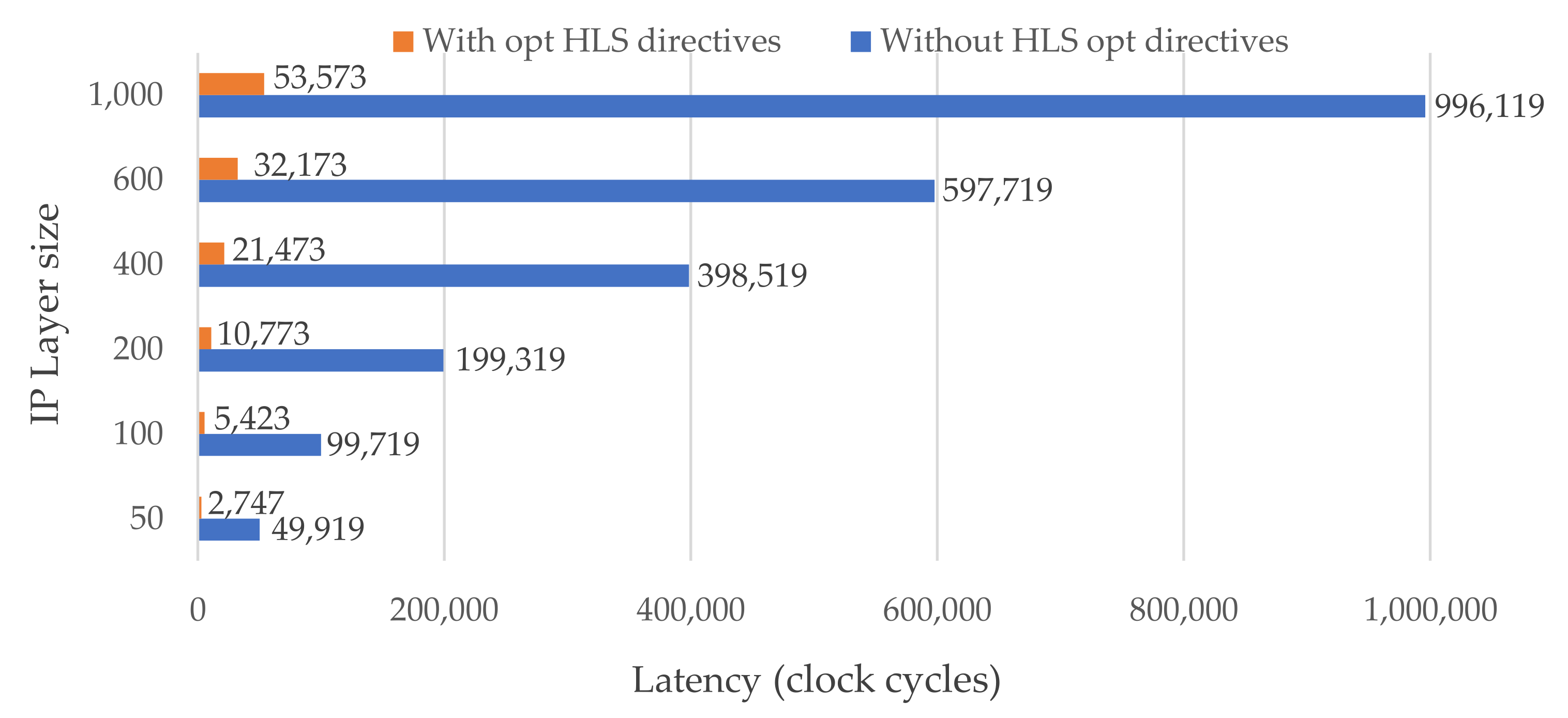

7.1. Synthesis and Implementation Results

7.2. State-of-the-Art Comparison and Discussion

7.3. Enhancement of Design Flow for IoT Applications-Based Edge Computing

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| AXI | Advanced eXtensible Interface |
| CPU | Central Processing Unit |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| FF | Flip-Flop |
| FPGA | Field Programmable Gate Array |
| FPS | Frames Per Second |
| HLS | High-Level Synthesis |
| IoT | Internet of Things |
| IP | Intellectual Property |
| LUT | Look-Up Table |
| Mps | Million parameters per second (DNN parameters) |
| RTL | Register Transfer Level |
| SoC | System-on-Chip |
| TCL | Tool Command Language |
| USD | US Dollars |

References

1. Balakrishnan, T.; Chui, M.; Hall, B.; Henke, N. The state of AI in 2020. Available online: https://www.mckinsey.com/business-functions/mckinsey-analytics/our-insights/global-survey-the-state-of-ai-in-2020 (accessed on 18 August 2021).

2. Dahlqvist, F.; Patel, M.; Rajko, A.; Shulman, J. Growing Opportunities in the Internet of Things. Available online: https://www.mckinsey.com/industries/private-equity-and-principal-investors/our-insights/growing-opportunities-in-the-internet-of-things (accessed on 18 August 2021).

3. Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metab. Clin. Exp. 2017, 69, S36–S40.

4. Li, B.H.; Hou, B.C.; Yu, W.T.; Lu, X.B.; Yang, C.W. Applications of artificial intelligence in intelligent manufacturing: A review. Front. Inf. Technol. Electron. Eng. 2017, 18, 86–96.

5. Capra, M.; Peloso, R.; Masera, G.; Roch, M.R.; Martina, M. Edge computing: A survey on the hardware requirements in the Internet of Things world. Future Internet 2019, 11, 100.

6. Wang, F.; Zhang, M.; Wang, X.; Ma, X.; Liu, J. Deep Learning for Edge Computing Applications: A State-of-the-Art Survey. IEEE Access 2020, 8, 58322–58336.

7. Lammie, C.; Olsen, A.; Carrick, T.; Rahimi Azghadi, M. Low-Power and High-Speed Deep FPGA Inference Engines for Weed Classification at the Edge. IEEE Access 2019, 7, 51171–51184.

8. Hao, C.; Chen, D. Deep neural network model and FPGA accelerator co-design: Opportunities and challenges. In Proceedings of the 2018 14th IEEE International Conference on Solid-State and Integrated Circuit Technology (ICSICT), Qingdao, China, 31 October–3 November 2018; pp. 1–4.

9. Guo, K.; Han, S.; Yao, S.; Wang, Y.; Xie, Y.; Yang, H. Software-Hardware Codesign for Efficient Neural Network Acceleration. IEEE Micro 2017, 37, 18–25.

10. Quenon, A.; Ramos Gomes Da Silva, V. Towards higher-level synthesis and co-design with python. In Proceedings of the Workshop on Languages, Tools, and Techniques for Accelerator Design (LATTE ’21); ACM: New York, NY, USA, 2021.

11. Belabed, T.; Coutinho, M.G.F.; Fernandes, M.A.C.; Sakuyama, C.V.; Souani, C. User Driven FPGA-Based Design Automated Framework of Deep Neural Networks for Low-Power Low-Cost Edge Computing. IEEE Access 2021, 9, 89162–89180.

12. Nurvitadhi, E.; Sheffield, D.; Sim, J.; Mishra, A.; Venkatesh, G.; Marr, D. Accelerating binarized neural networks: Comparison of FPGA, CPU, GPU, and ASIC. In Proceedings of the 2016 International Conference on Field-Programmable Technology (FPT), Xi’an, China, 7–9 December 2016; pp. 77–84.

13. Nurvitadhi, E.; Sim, J.; Sheffield, D.; Mishra, A.; Krishnan, S.; Marr, D. Accelerating recurrent neural networks in analytics servers: Comparison of FPGA, CPU, GPU, and ASIC. In Proceedings of the 2016 26th International Conference on Field Programmable Logic and Applications (FPL), Lausanne, Switzerland, 29 August–2 September 2016; pp. 1–4.

14. Nurvitadhi, E.; Subhaschandra, S.; Boudoukh, G.; Venkatesh, G.; Sim, J.; Marr, D.; Huang, R.; Ong Gee Hock, J.; Liew, Y.T.; Srivatsan, K.; et al. Can FPGAs beat GPUs in accelerating next-generation deep neural networks? In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays—FPGA ’17, Monterey, CA, USA, 22–24 February 2017; ACM Press: New York, NY, USA, 2017; pp. 5–14.

15. Venieris, S.I.; Bouganis, C.S. fpgaConvNet: A framework for mapping convolutional neural networks on FPGAs. In Proceedings of the 2016 IEEE 24th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Washington, DC, USA, 1–3 May 2016; pp. 40–47.

16. Wang, Y.; Xu, J.; Han, Y.; Li, H.; Li, X. DeepBurning: Automatic generation of FPGA-based learning accelerators for the Neural Network family. In Proceedings of the 53rd Annual Design Automation Conference, Austin, TX, USA, 5–9 June 2016; ACM: New York, NY, USA, 2016; pp. 1–6.

17. Elnawawy, M.; Farhan, A.; Nabulsi, A.A.; Al-Ali, A.; Sagahyroon, A. Role of FPGA in internet of things applications. In Proceedings of the 2019 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Ajman, United Arab Emirates, 10–12 December 2019; pp. 1–6.

18. Chen, Y.; Zheng, B.; Zhang, Z.; Wang, Q.; Shen, C.; Zhang, Q. Deep Learning on Mobile and Embedded Devices: State-of-the-art, Challenges, and Future Directions. ACM Comput. Surv. 2020, 53, 1–37.

19. Wang, C.; Gong, L.; Yu, Q.; Li, X.; Xie, Y.; Zhou, X. DLAU: A Scalable Deep Learning Accelerator Unit on FPGA. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2016, 36, 1.

20. Maria, J.; Amaro, J.; Falcao, G.; Alexandre, L.A. Stacked Autoencoders Using Low-Power Accelerated Architectures for Object Recognition in Autonomous Systems. Neural Process. Lett. 2016, 43, 445–458.

21. Coutinho, M.G.F.; Torquato, M.F.; Fernandes, M.A.C. Deep Neural Network Hardware Implementation Based on Stacked Sparse Autoencoder. IEEE Access 2019, 7, 40674–40694.

22. Mouselinos, S.; Leon, V.; Xydis, S.; Soudris, D.; Pekmestzi, K. TF2FPGA: A framework for projecting and accelerating tensorflow CNNs on FPGA platforms. In Proceedings of the 2019 8th International Conference on Modern Circuits and Systems Technologies (MOCAST), Thessaloniki, Greece, 13–15 May 2019; pp. 1–4.

23. Mousouliotis, P.G.; Petrou, L.P. CNN-Grinder: From Algorithmic to High-Level Synthesis descriptions of CNNs for Low-end-low-cost FPGA SoCs. Microprocess. Microsyst. 2020, 73, 102990.

24. Rivera-Acosta, M.; Ortega-Cisneros, S.; Rivera, J. Automatic Tool for Fast Generation of Custom Convolutional Neural Networks Accelerators for FPGA. Electronics 2019, 8, 641.

25. Mazouz, A.; Bridges, C.P. Automated offline design-space exploration and online design reconfiguration for CNNs. In Proceedings of the 2020 IEEE Conference on Evolving and Adaptive Intelligent Systems (EAIS), Bari, Italy, 27–29 May 2020; pp. 1–9.

26. Xilinx. PYNQ PYTHON PRODUCTIVITY: Development Boards. Available online: http://www.pynq.io/board.html (accessed on 18 August 2021).

27. Xilinx. PYNQ Libraries. Available online: https://pynq.readthedocs.io/en/v2.6.1/pynq_libraries.html (accessed on 18 August 2021).

28. Xilinx. Vivado AXI Reference Guide, v4.0; Technical Report; Xilinx, Inc.: San Jose, CA, USA, 2017.

29. Arm. Introduction to AMBA AXI4; Technical Report 0101; Arm Limited: Cambridge, UK, 2020.

30. Kung, H.T.; Leiserson, C.E. Systolic arrays (for VLSI). In Sparse Matrix Proceedings; Duff, I.S., Stewart, G.W., Eds.; Society for Industrial & Applied Mathematics: Philadelphia, PA, USA, 1978; pp. 256–282.

31. Crockett, L.H.; Elliot, R.A.; Enderwitz, M.A.; Stewart, R.W. The Zynq Book: Embedded Processing with the ARM® Cortex®-A9 on the Xilinx® Zynq®-7000 All Programmable SoC; Strathclyde Academic Media: Glasgow, Scotland, UK, 2014; p. 460.

32. Xilinx. SDSoC Environment User Guide; Technical Report; Xilinx, Inc.: San Jose, CA, USA, 2019.

33. LeCun, Y.; Cortes, C.; Burges, C.J. THE MNIST DATABASE of Handwritten Digits. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 18 August 2021).

34. Xilinx. PYNQ: Python Productivity. Available online: http://www.pynq.io/ (accessed on 18 August 2021).

35. Garola, A.R.; Manduchi, G.; Gottardo, M.; Cavazzana, R.; Recchia, M.; Taliercio, C.; Luchetta, A. A Zynq-Based Flexible ADC Architecture Combining Real-Time Data Streaming and Transient Recording. IEEE Trans. Nucl. Sci. 2021, 68, 245–249.

36. Kowalczyk, M.; Ciarach, P.; Przewlocka-Rus, D.; Szolc, H.; Kryjak, T. Real-Time FPGA Implementation of Parallel Connected Component Labelling for a 4K Video Stream. J. Signal Process. Syst. 2021, 93, 481–498.

37. Krishnamoorthy, R.; Krishnan, K.; Chokkalingam, B.; Padmanaban, S.; Leonowicz, Z.; Holm-Nielsen, J.B.; Mitolo, M. Systematic Approach for State-of-the-Art Architectures and System-on-Chip Selection for Heterogeneous IoT Applications. IEEE Access 2021, 9, 25594–25622.

38. Yvanoff-Frenchin, C.; Ramos, V.; Belabed, T.; Valderrama, C. Edge Computing Robot Interface for Automatic Elderly Mental Health Care Based on Voice. Electronics 2020, 9, 419.

39. Farhat, W.; Sghaier, S.; Faiedh, H.; Souani, C. Design of efficient embedded system for road sign recognition. J. Ambient Intell. Humaniz. Comput. 2019, 10, 491–507.

40. Digikey. Available online: https://www.digikey.com/ (accessed on 18 August 2021).

41. Xilinx. PYNQ: Overlay Design Methodology. Available online: https://pynq.readthedocs.io/en/latest/overlay_design_methodology.html (accessed on 18 August 2021).

42. Hassan, N.; Gillani, S.; Ahmed, E.; Yaqoob, I.; Imran, M. The Role of Edge Computing in Internet of Things. IEEE Commun. Mag. 2018, 56, 110–115.

| Topology | Throughput, CPU-Only (FPS) | Throughput, CPU + FPGA (FPS) | Speed-Up | Accuracy (%) | Power (W) |
|---|---|---|---|---|---|
| 784-32-32-10 | 60 | 3636 | 60.6× | 96.2 | 0.266 |
| 784-100-50-10 | 19 | 1160 | 61.05× | 99.2 | 0.380 |
| 784-100-50-20-10 | 19 | 1153 | 60.68× | 99.2 | 0.430 |
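The speed-up column appears to be the ratio of CPU + FPGA throughput to CPU-only throughput. The short check below recomputes it from the throughput values in the table.

```python
# Throughput in FPS as (CPU-only, CPU + FPGA), copied from the table above
throughput = {
    "784-32-32-10": (60, 3636),
    "784-100-50-10": (19, 1160),
    "784-100-50-20-10": (19, 1153),
}


def speed_up(cpu_fps: float, fpga_fps: float) -> float:
    """Ratio of accelerated to CPU-only throughput."""
    return fpga_fps / cpu_fps


for topology, (cpu_fps, fpga_fps) in throughput.items():
    print(f"{topology}: {speed_up(cpu_fps, fpga_fps):.2f}x")
# 784-32-32-10: 60.60x
# 784-100-50-10: 61.05x
# 784-100-50-20-10: 60.68x
```

The nearly constant speed-up across topologies is consistent with both the CPU-only and the accelerated throughput scaling similarly with network size.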

| Work | Topology | Chip | Data (Bits, Type) | Parameters (×10³) | Accuracy (%) | Throughput (FPS) | Throughput (Mps) | Power (W) | Power/Throughput (mW/Mps) |
|---|---|---|---|---|---|---|---|---|---|
| [21] | 784-100-50-10 | Virtex 6 | 12, fixed | 84.05 | 93.3 | 1250 | 105.062 | 0.3 | 2.855 |
| [25] | 1-2-4 | Zynq 7100 | 16, fixed | 41.71 | 98.6 | 526 | 21.934 | 0.578 | 26.35 |
| [24] | LeNet-5 | Cyclone IV | 32, float | 60 | - | 925 | 55.5 | - | - |
| Ours, #1 | 784-100-50-10 | Zynq 7020 | 32, float | 84.05 | 99.2 | 19 | 1.59 | 0.478 | 300.6 |
| Ours, #2 | 784-100-50-10 | Zynq 7020 | 32, float | 84.05 | 99.2 | 118 | 9.917 | 0.49 | 49.4 |
| Ours, #3 | 784-100-50-10 | Zynq 7020 | 32, float | 84.05 | 99.2 | 1160 | 97.498 | 0.38 | 3.89 |
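The derived columns of this comparison appear to follow from the others: throughput in Mps equals FPS multiplied by the parameter count (the listed values appear to be in thousands), divided by 10⁶, and power per throughput is the power in mW divided by the throughput in Mps. The sketch below recomputes both from the table's FPS, parameter, and power values. The results agree with the published figures to within about 1%; the small last-digit differences suggest rounding or truncation of intermediate values.

```python
import math

# (throughput in FPS, parameters in thousands, power in W), copied from the table above
rows = {
    "[21]": (1250, 84.05, 0.3),
    "[25]": (526, 41.71, 0.578),
    "Ours, #1": (19, 84.05, 0.478),
    "Ours, #2": (118, 84.05, 0.49),
    "Ours, #3": (1160, 84.05, 0.38),
}


def mps(fps: float, k_params: float) -> float:
    """Million parameters per second: frames/s x parameters per frame / 1e6."""
    return fps * k_params * 1e3 / 1e6


def mw_per_mps(power_w: float, throughput_mps: float) -> float:
    """Power efficiency in mW per Mps (lower is better)."""
    return power_w * 1e3 / throughput_mps


for work, (fps, k_params, power_w) in rows.items():
    t = mps(fps, k_params)
    print(f"{work}: {t:.3f} Mps, {mw_per_mps(power_w, t):.2f} mW/Mps")
```

Normalizing by Mps rather than FPS lets networks of different sizes be compared on the same energy-efficiency scale.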

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Belabed, T.; Ramos Gomes da Silva, V.; Quenon, A.; Valderamma, C.; Souani, C. A Novel Automate Python Edge-to-Edge: From Automated Generation on Cloud to User Application Deployment on Edge of Deep Neural Networks for Low Power IoT Systems FPGA-Based Acceleration. Sensors 2021, 21, 6050. https://doi.org/10.3390/s21186050
