Abstract

The data produced by the sensors of IoT devices are becoming keystones for organizations to conduct critical decision-making processes. However, delivering information to these processes in real time poses two challenges for organizations: the first is achieving a constant dataflow from the IoT to the cloud, and the second is enabling decision-making processes to retrieve data from dataflows in real time. This paper presents a cloud-based Web of Things method for creating digital twins of IoT devices (named sentinels). The novelty of the proposed approach is that sentinels create an abstract window for decision-making processes to: (a) find data (e.g., properties, events, and data from sensors of IoT devices) or (b) invoke functions (e.g., actions and tasks) from physical devices (PD), as well as from virtual devices (VD). In this approach, the applications and services of decision-making processes deal with sentinels instead of managing complex details associated with the PDs, VDs, and cloud computing infrastructures. A prototype based on the proposed method was implemented to conduct a case study based on a blockchain system for verifying contract violation in sensors used in product transportation logistics. The evaluation showed the effectiveness of sentinels in enabling organizations to attain data from IoT sensors and the dataflows used by decision-making processes to convert these data into useful information.

Keywords:

digital twins; IoT data; microservices; cloud computing; Web of Things; virtual containers

1. Introduction

IoT devices are becoming a key element in decision-making processes [1,2,3]. These devices are quite common in multiple infrastructures, such as Industry 4.0 [4], the healthcare domain [5], and supply chains [6], to name a few. The data produced by these devices follow a lifecycle from the sensors to the edge [7], to the fog [4], and to the cloud [8]. In this lifecycle, data are acquired (mainly at the edge [9]), prepared and analyzed (typically at the fog or the cloud [10]), and finally converted into information for human consumption in decision-making processes (mainly at the cloud [8] through end-user devices). In these types of infrastructures (any combination of edge, fog, or cloud), virtual containers (VCs) are key to deploying services on each infrastructure [11,12,13]. These services provide dataflows from the IoT to the cloud that produce different types of data and information, which prove to be key for organizations to conduct critical decision-making processes [14,15,16].

However, extracting data and information from these dataflows and delivering them to decision-making processes in real time poses a major challenge in two directions: the first is verifying that a constant dataflow from the IoT to the cloud is maintained, and the second is enabling decision-making processes to retrieve, in real time, data and information from different points of the dataflows. These data acquisition tasks are not straightforward because of the heterogeneity of the components participating in a dataflow (applications, types of sensors, data formats, and infrastructures [17], to name a few). A mechanism is therefore desirable not only to acquire data and information from dataflows, but also to invoke actions and tasks on the dataflow components, which could facilitate decision-making analysis.

We propose to create digital twins of the IoT data acquirers (hardware, physical machine, virtual container, application, or microservice) by using Web of Things (WoT) cards (www.w3.org/WoT accessed on 5 August 2021), so that decision-making processes can retrieve, in real time, data and information or invoke actions or tasks. A digital twin is an abstract representation commonly used in Industry 4.0 for IoT device monitoring [18]; that is, a virtual replica of objects or processes that simulates the behavior of its real counterparts. WoT is an initiative for representing and managing definitions of IoT artifacts (devices, components, applications, etc.), which suggests using a set of well-accepted protocols from the Semantic Web so that any IoT artifact from the physical world becomes available on the World Wide Web by creating a net of WoT definitions [19].

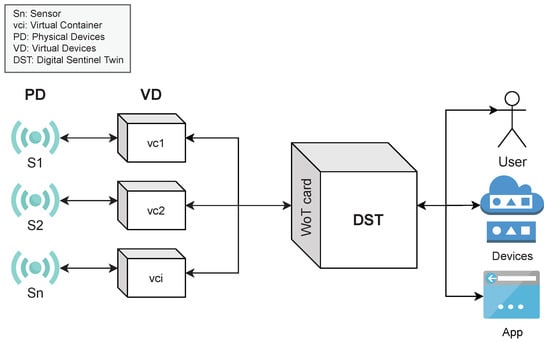

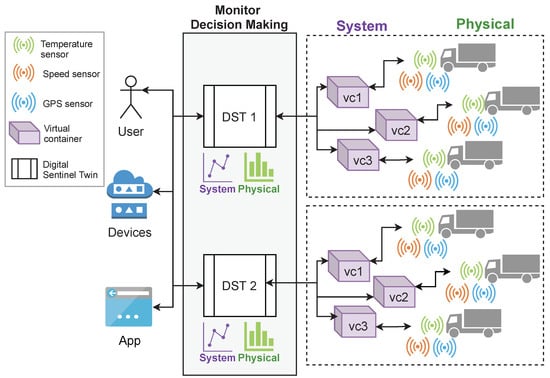

In this paper, we present the design, implementation, and evaluation of a cloud-based WoT method for creating digital sentinel twins (DSTs) of IoT devices. A DST creates an abstract window for decision-making processes to attain information and data, such as properties, events, and data produced by sensors, and to invoke actions or tasks from IoT devices. An IoT device is a physical device (PD) with sensors and tasks that can be accessed directly or through a virtual device (VD). A VD is an application or microservice encapsulated into a virtual container for acquiring, extracting, processing, monitoring, and analyzing data from PDs. Figure 1 shows an example of the process used by a DST to create a window for consumption by decision-making processes (by either a human, application, or VD). As can be seen, in this approach, the applications or services used in decision-making processes deal with DSTs instead of managing the complex details associated with the PDs, VDs, or cloud computing infrastructures.

Figure 1.

Conceptual view of a DST.

We implemented a prototype based on this method to perform case studies supported by GPS, temperature, and speed sensors. Additionally, using a blockchain system, the compliance of contracts to which these sensors are subject in the transportation logistics of products is continuously verified. The evaluation revealed the effectiveness of the DSTs for organizations to attain data and information about both IoT devices and the whole processes converting IoT data into useful information required in decision-making processes.

The contributions of this work are:

- The design, implementation, and evaluation of a cloud-based WoT method for creating digital sentinel twins of IoT devices.

- The definition of the digital sentinel twin concept as a means for accessing data and information, and for invoking tasks from IoT devices.

The rest of the paper is organized as follows: Section 2 describes the state of the art of works related to the topics of the proposed method; Section 3 describes the design and construction of a method to create DSTs for interacting with IoT devices; Section 4 describes the implementation of a prototype for the creation of DSTs; Section 5 presents the results of the prototype in two phases of experiments; Section 6 discusses the obtained results. Finally, Section 7 presents conclusions and future work.

2. Related Work

In the literature, there are some works about digital twins that are relevant to our approach; these are described next.

In the context of digital twins, there are different works focused on their use for simulation, monitoring, risk prevention, etc., for IoT devices; some examples are [20,21,22]. In [20], Assad et al. proposed a web-based digital twin (WDT) architecture with the purpose of improving the sustainability of industrial cyber-physical systems. In [21], Bevilacqua et al. proposed a digital twin reference model for risk prediction and prevention. The difference between our work and these two proposals is that we establish the use of virtual containers in a middle layer to access, acquire, extract, transform, etc., the information of the IoT devices; in this way, through a DST, we are able to represent both the physical (the IoT artifact) and virtual (software applications accessing the IoT artifact) device. In [22], Gao et al. proposed a method of simulation and modeling in real time for the production line of digital twins. The effectiveness of the proposed method was verified by taking an assembly line as an example.

In the context of digital twins using virtual containers for the acquisition of information from IoT devices, the proposals [23,24,25] are interesting. In [23], Alaasam et al. proposed a study on live stateful stream processing migration of digital twins. The authors emphasized the importance of two factors that influence the construction of stateful stream processing in systems as complex as digital twins: the stateful virtualization infrastructure and the stateful data. In [24], Tingyu et al. proposed a methodology of container virtualization based on simulation as a service (CVSimaaS); the authors use virtual containers to implement a digital twin system, obtaining a lower consumption of resources with high efficiency. Like our proposal, these two works include the concept of virtual containers together with digital twins for IoT devices. However, these two proposals do not add a standardized representation to the digital twin, whereas in our proposal we follow the WoT guidelines for the creation of DSTs as universally accessible entities. In [25], Borodulin et al. proposed a model for simulation and prediction of industrial processes using digital twins in digital twin-as-a-service (DTaaS), which is a way to implement an orchestration of a set of independent services and provide scalability for simulation.

In the context of virtual container modeling, two proposals stand out [26,27]. In [26], Paraiso et al. presented an approach to model-driven management of Docker containers, which enables verification of the virtual container system architecture at design time. In [27], Piraghaj et al. proposed a simulation architecture called ContainerCloudSim, which was used to evaluate resource management techniques in virtual containers from cloud environments. Unlike these proposals, whose focus is only on virtual container modeling, our proposal additionally models the environment of the IoT devices, adding WoT recommendations for representing them, which produces a flexible DST for consuming the data of virtual containers and IoT devices. In [28], Medel et al. proposed a performance model for Kubernetes-based deployment using Docker containers. Such a model can be used as a basis to support resource management and application design.

In the context of the use of virtual containers for the monitoring, simulation, and orchestration of IoT devices, there are two proposals [29,30]. In [29], Alam et al. proposed a modular and scalable architecture for IoT based on lightweight virtualization. The modularity provided, combined with the orchestration provided by Docker, simplifies management and enables distributed deployments, creating a highly dynamic system. In [30], Muralidharan et al. proposed a distributed monitoring system based on virtual containers for IoT applications for the management of a smart city environment. They achieved low-latency, reliable, and secure communication across large-scale deployments of IoT devices, with a focus on horizontal interoperability between various IoT applications. Neither work uses the digital twin concept, unlike our work (DST), which allows us to create a reflection with the properties and characteristics of the IoT device.

Muralidharan et al., in [31], proposed a semantic digital twin model for interacting with IoT devices. The authors used virtual containers to mimic IoT devices. This is the most similar approach to our proposal. However, they only focus on modeling the physical devices (PDs) and not the virtual devices (VDs). Instead, through the DST, we can represent both the physical and virtual devices.

3. On the Building of Digital Sentinel Twins for IoT Devices

A digital sentinel twin (DST) is a software object produced from a data structure that is created from data of physical devices (PDs) or virtual devices (VDs) interacting with surrounding elements to accomplish some task involving sensors.

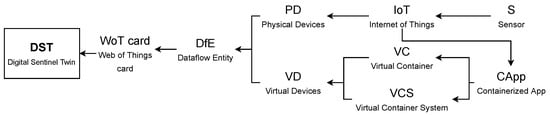

The conceptualization of a DST is illustrated in Figure 2, which is composed of the concepts described next.

Figure 2.

Conceptualization of a DST.

A PD represents an IoT device interacting with sensors. A VD represents the software components required for creating a dataflow from an IoT device to a decision-making process. This means that a VD comprises components such as virtual containers (VCs) or a virtual container system. A VC is a mechanism for the logical encapsulation of software applications that creates the environment-independent applications required to create a dataflow. A virtual container system represents a set of VCs built as a single solution (service) to perform a task in the dataflow. A containerized application is in charge of interacting with IoT devices, and it is encapsulated into either a VC or a virtual container system.

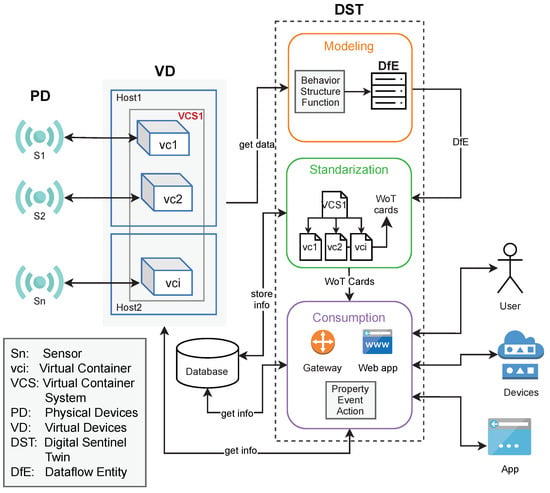

Thus, a DST is a versatile object for interacting in an easy manner with the complex and detailed structure of PDs or VDs. This is due to the flexibility of the WoT card, which fulfills the recommendations of the W3C (www.w3.org accessed on 5 August 2021). This information comes from a dataflow entity (DfE), which captures information about each internal component (any virtual container, virtual container system, or containerized application), as well as the relationships of these components with the . The DfE is basically a data structure including information about the structure, behavior, and function of PDs or VDs. The structure, behavior, and function are used to model the dataflow from the IoT device to the decision-making processes (as it captures these features of all entities participating in such a dataflow). The context of generation and usage of a DST is illustrated in Figure 3.

Figure 3.

Context of a DST.

We considered an additional layer for standardizing the representation of a DfE by using WoT guidelines; this produces a WoT card. That is, a WoT card represents the information of the DfE through standardized concepts about virtual containers. These concepts come from an ontology based on the ISO norm ISO/IEC JTC 1/SC 38 (www.iso.org/committee/601355.html accessed on 5 August 2021). By following these WoT standards, a WoT card can represent a PD or VD in a well-defined manner and with a unique identity, without any further adaptation.

We propose a three-phase method to create a DST for a dataflow from IoT devices to decision-making processes. Figure 3 also shows the conceptual view of the phases of the method: modeling (phase 1), where the data of the modeled object are acquired and its elements modeled; standardization (phase 2), where these elements are depicted in WoT cards; and consumption (phase 3), where the WoT cards are ready to be used. Next, each phase is described in more detail.

3.1. Phase 1: On the Usage of Functional Modeling for Building DfE

A or can be modeled as a process to achieve a goal. The functional modeling [32,33] is quite suitable for creating a representation of its structure, behavior, and function. This modeling has been used, over the past years, for successfully representing processes in multiple scenarios [34,35,36].

In the proposed method, all the dataflow participants are modeled as objects composed of low-level parts; each object has an objective, and its components contribute together to achieving that objective by performing tasks such as acquiring, manufacturing, preparing, or analyzing data produced by IoT sensors. Functional modeling is quite suitable for the IoT context, where it is important to model not only the IoT devices but also the dataflow participants, in order to describe the properties, events, and actions performed from the IoT devices to the decision-making processes (either at the fog or cloud). This approach also allowed us to model all the participants in the production of these dataflows (any virtual container, virtual container system, or containerized application), which, in fact, behave as chained processes. This model is captured in a DfE, which describes the behavior (properties and events), function (tasks), and structure (interconnections) of all participants in the dataflow.

As a preparation step of this method, we assume the existence of a (see in Figure 3) executing a transformation of data (task); independently of the number of internal in a dataflow, these are modeled as one . Let us consider the simpler case, where one is decomposed into its function, structure, and behavior, and stored in a . This decomposition is represented by means of , making the as a ready for consumption. For the case of a , occurs the same process by each individual , integrating individual functions as the overall function of the .

The objective of this phase is to obtain the three main modeling elements of a :

- structure, where the components of the and its relationships are specified;

- behavior, where the values of the attributes of components are specified, according to the function of the ;

- function, where the main goal of the and the tasks required to achieve it are specified.

This phase starts by receiving the configuration file of a , in YML or YAML format. From this file all the data required to represent the are acquired.

Next, the main elements are described following a decomposition approach.

3.1.1. Function

The function is the goal description of the modeled object. If the input file describes a virtual container system, the function is modeled as a composition of the functions of its internal virtual containers. The function refers to the task executed (transformation) on the dataflow. There are six base functions:

- source, the capability to act as an infinite reservoir of data;

- transport, the capability to transfer data from one point to another, including from one medium to another;

- barrier, the capability to prevent the transfer of data from one point to another, including from one medium to another;

- storage, the capability to accumulate data;

- balance, the capability to provide a balance between the total rates of incoming and outgoing dataflows;

- sink, the capability to act as an infinite drain of data.

Specialized functions can be derived from these base functions, such as produce-data, acquire-data, integrate-data, consume, to mention a few. All the functions may be connected to each other into flow paths or flow structures forming software structures.
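The six base functions and the derivation of specialized functions can be sketched in Python as follows. This is only an illustrative sketch: the enumeration mirrors the list above, but the `SPECIALIZED` mapping (which base function each specialized function derives from) and the helper `base_of` are hypothetical and not taken from the paper.

```python
from enum import Enum


class BaseFunction(Enum):
    """The six base functions a dataflow participant can perform."""
    SOURCE = "source"        # acts as an infinite reservoir of data
    TRANSPORT = "transport"  # transfers data between points or media
    BARRIER = "barrier"      # prevents data transfer between points or media
    STORAGE = "storage"      # accumulates data
    BALANCE = "balance"      # balances incoming and outgoing dataflow rates
    SINK = "sink"            # acts as an infinite drain of data


# Hypothetical derivation of specialized functions from base functions;
# the paper names the specialized functions but not their parents.
SPECIALIZED = {
    "produce-data": BaseFunction.SOURCE,
    "acquire-data": BaseFunction.TRANSPORT,
    "integrate-data": BaseFunction.BALANCE,
    "consume": BaseFunction.SINK,
}


def base_of(function_name: str) -> BaseFunction:
    """Resolve a base or specialized function name to its base function."""
    if function_name in SPECIALIZED:
        return SPECIALIZED[function_name]
    return BaseFunction(function_name)  # raises ValueError if unknown
```

Keeping the base functions in a closed enumeration makes it easy to validate the function declared for each component before it is stored in the model.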

Thus, each has at least one application () performing some transformation (); defined as follows.

The is the key element for representing the function of a .

A containerized application () represents one or a set of applications , , encapsulated into a .

3.1.2. Structure

The internal structure of a is commonly organized as software structures (e.g., patterns, pipelines, parallel schema, dataflow, etc.). The model of the must reflect this kind of organization. Thus, the structure of the is defined as a logical directed acyclic graph , where nodes (N) represent the components () that compose the , while the interconnections between nodes () are established by edges (E), which are defined as follows.

The is the key element for representing the structure of a .
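A minimal Python sketch of such a structure is given below, assuming that the components and their interconnections are available as a list of (source, target) pairs. The function `build_structure` and the component names are hypothetical; the standard-library `graphlib` module (Python 3.9 or later) is used only to check that the graph is acyclic and to obtain a processing order.

```python
from graphlib import CycleError, TopologicalSorter


def build_structure(edges):
    """Build the node set and a topological order from (source, target) edges.

    Raises ValueError if the interconnections contain a cycle, since the
    structure is expected to be a directed acyclic graph.
    """
    predecessors = {}
    for source, target in edges:
        predecessors.setdefault(source, set())
        predecessors.setdefault(target, set()).add(source)
    try:
        order = list(TopologicalSorter(predecessors).static_order())
    except CycleError as exc:
        raise ValueError("structure is not acyclic") from exc
    return set(predecessors), order


# Hypothetical three-stage dataflow: acquire -> prepare -> analyze.
edges = [("acquire", "prepare"), ("prepare", "analyze")]
nodes, order = build_structure(edges)
```

For this chain, `order` lists each component after the components it depends on, which is the order in which the dataflow traverses them.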

3.1.3. Behavior

The behavior of the is established by assigning values to its properties, that is, by associating the function of the with the infrastructure (H) defined in the configuration file. The are deployed on , being I the whole infrastructure (e.g., a cloud). The consumption of a set of resources (R) of the specified infrastructure (processor—CPU, memory—MEM, file system—FS, and network—NET) is denoted as per each , which are observed by a set of metrics (M).

H, R, and M follow a hierarchy of elements defined as:

Equation (12) specifies that each physical computer h (where a runs) has a subset of physical resources r. Likewise, Equation (13) specifies that each physical resource r has a denoting the performance of r for , and a metric m observes that for performance analysis.

Each resource r produces several values in the continuous numerical space. Thus, a huge set of values is generated per resource r. These values are used for computing utilization factors (UF), which inform about the performance status of a resource r. Although the resources produce a lot of values and data, we are interested in the UF values that could initiate a risk situation. Then, according to the ISO 31000 standard for risk management (www.iso.org/iso-31000-risk-management.html accessed on 5 August 2021) [37], the UF values are discretized into three scales: low, medium, and high. The thresholds between these scales indicate the level of performance (_lvl) of each resource r, as Equation (14) indicates.

The UF of the CPU at an instant of time t is defined by Equation (15) in terms of the total processing capacity of the physical computer, given by the sum of the capacities of its cores, and the CPU usage at the current time.

The UF of the file system at an instant of time t is calculated by Equation (16) from the number of partitions available on the physical computer, the total capacity of each partition, and the consumption of each partition at a given moment. In this way, the multiple storage partitions associated with a studied object are considered in Equation (16).

The UF of memory is calculated by Equation (17) from the total memory of the physical computer and the memory consumption at time t.

The UF of the network is calculated by Equation (18) from the total capacity of the network in bytes, the number of bytes transmitted, and the number of bytes received.

The set is the key element for representing the behavior.
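To make the behavior model more concrete, the following Python sketch computes utilization factors for the four resources and discretizes them into levels. It rests on several assumptions that are not taken from Equations (14)-(18): each utilization factor is expressed as the percentage of consumed over total capacity, the file-system factor aggregates all partitions, the network factor adds transmitted and received bytes, and the 50% and 80% thresholds are placeholders. All function names are hypothetical.

```python
def uf_cpu(core_capacities, cpu_usage):
    """CPU utilization: current usage over the summed capacity of all cores."""
    return 100.0 * cpu_usage / sum(core_capacities)


def uf_fs(partitions):
    """File-system utilization over (capacity, used) pairs, one per partition."""
    total = sum(capacity for capacity, _ in partitions)
    used = sum(consumed for _, consumed in partitions)
    return 100.0 * used / total


def uf_mem(total_memory, used_memory):
    """Memory utilization: consumed memory over total memory."""
    return 100.0 * used_memory / total_memory


def uf_net(capacity_bytes, tx_bytes, rx_bytes):
    """Network utilization: transmitted plus received bytes over capacity."""
    return 100.0 * (tx_bytes + rx_bytes) / capacity_bytes


def level(uf, medium_from=50.0, high_from=80.0):
    """Discretize a utilization factor; the thresholds are assumed values."""
    if uf >= high_from:
        return "high"
    if uf >= medium_from:
        return "medium"
    return "low"


# Hypothetical readings for one physical computer.
levels = {
    "CPU_lvl": level(uf_cpu([2.0, 2.0], 1.0)),
    "FS_lvl": level(uf_fs([(100, 50), (100, 30)])),
    "MEM_lvl": level(uf_mem(8, 7)),
    "NET_lvl": level(uf_net(1000, 300, 300)),
}
```

With these readings, the CPU and file system fall in the low level, the network in the medium level, and memory in the high level.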

As a result of this phase, a DfE is obtained, composed of the three elements described above (structure, behavior, and function). The second phase of the method operates on this data structure.

3.2. Phase 2: Standardized Access to DST by Means of WoT

At this point, a DfE provides a representation of the necessary data of the modeled object. However, we require a helpful representation to interact with it; such an interaction may be machine to machine or human to machine. To achieve this flexibility, this representation is based on the Web of Things (WoT) principles [38]. This standardized representation of a DfE is named WoT card. In addition to the information captured by the DfE, metadata of the modeled object are also added to the WoT card. These metadata are IP addresses, volumes, ports, namespaces, etc. A WoT card is defined as Equation (20) shows.

In the case of a , such elements are defined recursively to capture data about structures and transformations used and performed by the whole and its components, respectively.

According to the WoT recommendations, the generation of WoT cards must be based on ontologies. In this sense, we defined and created an ontology named VC Docker FU Ontology (available at github.com/adaptivez/VirtualContainerOntology accessed on 5 August 2021), which can be adapted to the context of any WoT card in several scenarios. The VC Docker FU Ontology is used as a reference throughout the generation of WoT cards during the representation of DfEs. This ontology derives from two other ontologies: it extends the VC Docker Ontology (github.com/langens-jonathan/docker-vocab/blob/master/docker.md#config accessed on 5 August 2021), which in turn extends the VC ISO Ontology. The latter was created from scratch according to the norm ISO/IEC JTC 1/SC 38 (www.iso.org/committee/601355.html accessed on 5 August 2021); it defines all the concepts and constraints of the norm in an abstract manner. The VC Docker Ontology, in its original version, already defines concepts and constraints of virtual containers in the Docker environment; it was adapted in line with the VC ISO Ontology, and some additional concepts and restrictions were included to comply with the ISO norm. The VC Docker FU Ontology adds concepts about the behavior related to infrastructure resources (CPU, MEM, FS, and NET), such as levels of utilization and properties of such values, and about the function of virtual containers, such as base functions and tasks.

Technically, a WoT card is based on an abstract class named Thing, which is the base object for modeling in the WoT approach. It is based on the representation structure of the Thing Description (TD). The Thing Description is the base model for describing any IoT Thing in the W3C Web of Things approach; it describes the metadata and interfaces of Things (www.w3.org/TR/wot-thing-description accessed on 5 August 2021). Thus, a WoT card is composed of three elements: (i) metadata (of the Thing), which contains interactions (how it can be used); (ii) vocabulary, which contains the concept definitions used in the Thing Description structure, useful for interactions; and (iii) URIs, which are useful to identify resources in the Thing Description; these are Internet links denoting relationships between the Thing and other resources on the WoT.

The WoT card was designed so that an external user can interact with it by asking about properties, actions, and events. Properties contain information about the modeled object, such as its behavior, structure (nodes and edges of the DAG), and metadata. Actions refer to the functions of the modeled object, including the tasks executed by its components. Events refer to alerts on behavior changes, such as those defined by the utilization levels (CPU_lvl, MEM_lvl, FS_lvl, NET_lvl).

Then, a WoT card is represented as a file following the format and structure of JSON-LD (JavaScript Object Notation for Linked Data, www.w3.org/TR/json-ld11 accessed on 5 August 2021). Listing 1 illustrates a portion of an example WoT card.

| Listing 1. Thing Description (TD) structure following the JSON-LD format. |

| { |

| "@context": "https://www.w3.org/2019/wot/td/v1", |

| "id": "996ba6e...aec5f14", |

| "@type": "Thing", |

| "td:title": { "@value": "..." }, |

| "td:description": { "@value": "..." }, |

| "properties": { |

| "ctv:metadata": { data{} }, |

| "ctv:structure": { data{} } |

| }, |

| "actions": { "ctv:functions": {input{}, output{}} }, |

| "events": { "ctv:behavior": {} } |

| } |
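As a complement to Listing 1, the following Python sketch assembles a dictionary with the same top-level keys and serializes it as JSON. The keys come from Listing 1, whereas the function `build_wot_card` and all example values (identifier, title, metadata, structure, functions, and behavior) are hypothetical placeholders, since the listing elides the actual contents.

```python
import json


def build_wot_card(thing_id, title, description,
                   metadata, structure, functions, behavior):
    """Assemble a WoT card with the top-level layout shown in Listing 1."""
    return {
        "@context": "https://www.w3.org/2019/wot/td/v1",
        "id": thing_id,
        "@type": "Thing",
        "td:title": {"@value": title},
        "td:description": {"@value": description},
        "properties": {
            "ctv:metadata": metadata,
            "ctv:structure": structure,
        },
        "actions": {"ctv:functions": functions},
        "events": {"ctv:behavior": behavior},
    }


# All values below are illustrative placeholders, not data from the paper.
card = build_wot_card(
    thing_id="urn:example:container:123456789",
    title="example-container",
    description="Container acquiring temperature data",
    metadata={"platform": "Docker", "ports": [8080]},
    structure={"nodes": ["acquire", "prepare"],
               "edges": [["acquire", "prepare"]]},
    functions={"sum": {"input": {"a": "number", "b": "number"},
                       "output": {"result": "number"}}},
    behavior={"CPU_lvl": "low", "MEM_lvl": "low",
              "FS_lvl": "low", "NET_lvl": "low"},
)
serialized = json.dumps(card, indent=2)
```

Serializing through `json.dumps` guarantees that the stored card is syntactically valid JSON, which is a precondition for treating it as JSON-LD.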

3.3. Phase 3: Consumption

After the WoT card has been generated and its data stored, it is ready for consumption by means of a DST. For the DST to be accessible and consumed, it must become an intermediary between the modeled object and the consumer. This is possible by using a RESTful system, which can process requests with the most common HTTP methods: GET, POST, PUT, and DELETE. In this way, any artifact making REST-type requests can consume the DST. The consumption can be on properties, actions, or events, which are defined as follows.

Each element of the WoT card is identified, in a unique and universal manner, by means of a Uniform Resource Identifier (URI), so that it can be recognized by other physical or abstract entities (e.g., applications, devices, human requests, etc.).

For the consumption of properties (21), it is necessary to give the URI of the DST and the specific property to access. Similarly, for the consumption of events (22), the URI of the DST and the event to be accessed must be given. For invoking actions (23), it is necessary to give the URI of the DST, the action to be performed, and the input required for that action as a parameter. In the three types of consumption, a JSON object is obtained as a response, indicating a value if a property or event was requested, or a value or resultant flag if an action was invoked. Next, examples of the consumption of the property “platform” and the function “sum” are given.

- Request (property):

- https://www.example.com/wotmodel/containers/123456789/platform

- Response:

- { "platform": "Docker" }

- Request (function):

- https://www.example.com/wotmodel/containers/123456789/sum/2/3

- Response:

- { "result": "5" }
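The request URIs in the examples above follow a simple path pattern, which can be sketched in Python as follows. The base path and the identifier 123456789 come from the examples; the helper names `property_uri` and `action_uri`, and the assumption that action inputs are appended as path segments (as in the “sum” example), are illustrative.

```python
from urllib.parse import quote

# Base path taken from the example requests; www.example.com is a placeholder.
BASE = "https://www.example.com/wotmodel/containers"


def property_uri(container_id, name):
    """URI for reading one property of the identified container."""
    return f"{BASE}/{quote(container_id)}/{quote(name)}"


def action_uri(container_id, action, *args):
    """URI for invoking an action whose inputs are passed as path segments."""
    segments = [quote(container_id), quote(action)]
    segments.extend(quote(str(arg)) for arg in args)
    return f"{BASE}/" + "/".join(segments)


platform_request = property_uri("123456789", "platform")
sum_request = action_uri("123456789", "sum", 2, 3)
```

Percent-encoding each segment with `quote` keeps the URI valid even when a property or action name contains spaces or other reserved characters.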

4. DST Prototype

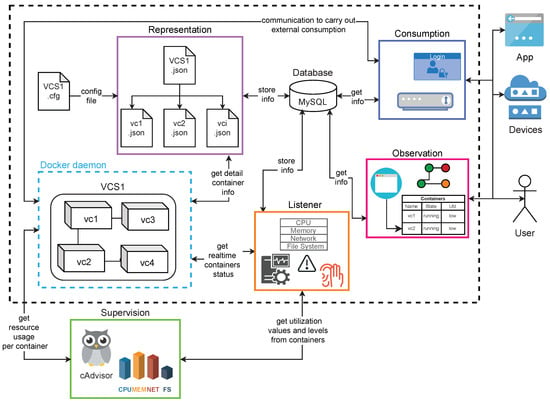

This section describes the implementation of a prototype for building DSTs based on the proposed method. The components of this prototype and their interactions are depicted in Figure 4. The components were implemented as microservices (encapsulated into virtual containers) coded in Python 3.0, except for the observation component, which was implemented in JavaScript and PHP because of the nature of observation tasks. Next, each component is described.

Figure 4.

Components of DST prototype.

The prototype was deployed on the Docker platform, but may be created from another platform, such as LXC (linuxcontainers.org/lxc accessed on 5 August 2021), Hyper-V (docs.microsoft.com/en-us/virtualization/hyper-v-on-windows accessed on 5 August 2021), or rkt (https://www.openshift.com/learn/topics/rkt accessed on 5 August 2021), where a can be represented by a YML or YAML file.

4.1. Representation

In this service, the configuration file (YML) of the modeled object is parsed to build the DfE, capturing the structure, behavior, function, and metadata of the participants in a dataflow from an IoT device to the decision-making process. After the creation of the DfE, the WoT cards are generated and their corresponding URIs defined. In this way, a decision-making process can consume the WoT card information (properties, events, and actions). The URIs must follow a defined namespace, as Expression (24) shows:

The WoT cards along with the are stored in a MySQL database.

4.2. Listener

This service monitors the state (behavior) of a given (any of , , or ). It is in charge of storing and keeping updated, in real-time, all the captured information by requesting status information from the Docker daemon and registering, in the database, each event producing a change on the . It also keeps a communication with the supervision service to reflect any change on utilization levels, which are also stored in the database.

4.3. Supervision

This service supervises the and performs the acquisition of metrics through an external agent, called cAdvisor. This is an API that provides information about the metrics of the and the physical computers on which it runs. When acquiring the values of the metrics, it calculates the behavior values of (utilization levels of resources used by ). It also responds to requests from the Listener, which is monitoring the and returns values of utilization levels of resources (high, medium, low) about CPU, MEM, FS, or NET, as well as the timestamp when values were captured.

4.4. Observation

This service offers options for observing the (structure, behavior, and function). It is a web application with intuitive interface designed for human consumption. Three tasks can be performed: (1) Discovering of , for searching the s or a specific by using its properties (name, description, type, creator, owner, etc.); (2) Monitoring , to know easily the behavior of the resources used by a by means of warning color signs (red for critical, yellow for intermediate, and green for normal) and its utilization level values; (3) Observing risk levels, to know the risk of failure of the applications by means of a graph denoting virtual containers in nodes and its relationships in edges.

4.5. Consumption

This service acts as a gateway, and is in charge of attending and processing requests from external users (human users, software applications, virtual containers, etc.) trying to consume or interact with the given . This is performed by using an API REST for GET, POST, PUT, and DELETE requests. Three types of consumption are considered: properties, events, and actions depending on the desired consumption/invocation. For properties and events, this service queries the of the , then gets the corresponding data from the database to send it to the requester. For actions, the service queries the of the , then establishes a connection to the corresponding , which executes the action and returns the result to the requester. All responses are into a JSON file. This is illustrated by invoking the clustering task with the parameters k and a dataset named .

- Preparation:

- URI = https://www.example.com/wotmodel/containers/123456789/kmeans

- input = {"k":2,"data":[{"col1":1,"col2":0,"col3":2},{"col1":2,"col2":1,"col3":1},{"col1":0,"col2":0,"col3":2}]}

- Request:

- request.post(URI,input)

- Response:

- {"result":{"cluster1":[{"col1":1,"col2":0,"col3":2},{"col1":0,"col2":0,"col3":2}],"cluster2":[{"col1":2,"col2":1,"col3":1}]}}
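The invocation above can be sketched with the Python standard library as follows. The URI and the input data are those of the example; the helper `build_action_request` is hypothetical, and the sketch assumes that the action input is sent as a JSON body of a POST request. Because www.example.com is a placeholder host, the request is only prepared and the sending step is left commented out.

```python
import json
import urllib.request

URI = "https://www.example.com/wotmodel/containers/123456789/kmeans"
payload = {
    "k": 2,
    "data": [
        {"col1": 1, "col2": 0, "col3": 2},
        {"col1": 2, "col2": 1, "col3": 1},
        {"col1": 0, "col2": 0, "col3": 2},
    ],
}


def build_action_request(uri, body):
    """Prepare (but do not send) a JSON POST request that invokes an action."""
    return urllib.request.Request(
        uri,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_action_request(URI, payload)

# Sending is omitted because the host is a placeholder:
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
```

Against a running deployment, `result` would hold the parsed JSON response, such as the cluster assignment shown above.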

5. Results

The evaluation of the prototype for DST creation was conducted in two phases of experiments. In the first phase, the prototype was evaluated in a controlled manner to measure the response and service times in the construction of the DSTs and in their consumption. In the second phase, a case study is presented based on the creation of DSTs for a platform for continuous verification of contracts using a blockchain network.

Table 1 shows the infrastructure used by the created for both cases of study.

Table 1.

IT Infrastructure used in the experiments.

5.1. Metrics

The performance of the prototype was evaluated by capturing the following metrics.

- Service time (): The time required by a microservice () to complete a given task.

- Response time (): The time observed by an end-user or a decision-making process to complete a given task. When an end-user builds a , this time covers attaining the data, creating the representation, and storing it in the database. When an end-user sends a request to the prototype, it covers the time spent by to process the request plus the time spent delivering the results to the end-user.

5.2. Controlled Evaluation

To conduct the evaluation of the prototype, a containerized application () was deployed on the previously described infrastructure, with one instance of the running on one virtual container . This extracts data from real traces produced by ECG medical devices (IoT devices for acquiring electrocardiogram (ECG) signals) [11] and builds workloads at a given rate, following a synthetic distribution. An input parameter defines the amount of data to be included in the workload.

By using the , several experiments were carried out by varying the number of and IoT data sources (ECG sensors), as well as the timing at which the captures the behavior of the ; the latter is called the slot.

We captured the and metrics for each experiment. Each experiment was performed 31 times (a sample size chosen according to the Central Limit Theorem [39]) to obtain the median value of both and .
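The measurement procedure can be sketched as follows: a task is repeated 31 times and the median elapsed time is reported. This is only an illustration under stated assumptions; `measure_median` and `dummy_task` are hypothetical names, and timing a local Python callable stands in for timing a real microservice or an end-to-end request.

```python
import statistics
import time


def measure_median(task, repetitions=31):
    """Run `task` `repetitions` times; return (median, samples) in seconds."""
    samples = []
    for _ in range(repetitions):
        start = time.perf_counter()
        task()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples), samples


def dummy_task():
    # Placeholder workload standing in for a microservice call.
    sum(range(10_000))


median_s, samples = measure_median(dummy_task)
print(f"median over {len(samples)} runs: {median_s:.6f} s")
```

Timing the call inside the service would give a service time, whereas timing it from the client side, including the network round trip, would give a response time.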

Different combinations of virtual containers () and s () were tested. These combinations were defined in the form , where W is the desired number of virtual containers () in the combination and Z is the desired number of s. That means 1 (of Z) watches W virtual containers. For example, Expression (25) means 1 watching 5 virtual containers, which results in a total . Expression (26) means 5 watching 5 virtual containers, which results in a total .

These combinations were also executed with the slot parameter set to 1, 10, and 100 s. Each combination of these parameters produces a median value of and , which are evaluated to show the behavior of the costs. The total ECG extraction time was 10 min.

Analysis of Results

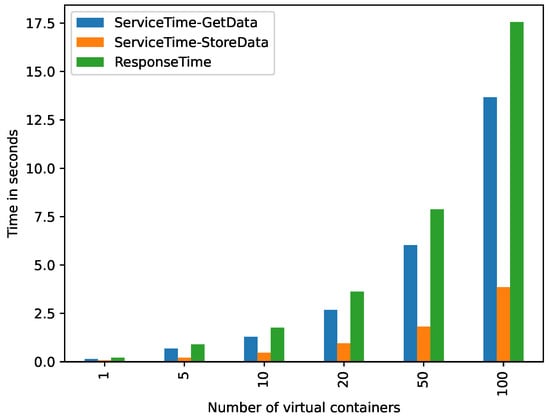

Figure 5 shows, on the vertical axis, the and of two key operations related to the building of a (the GetData and StoreData tasks) for the different numbers of virtual containers evaluated in these experiments. This experiment only shows the and observed by either end-users or a decision-making application. As can be seen, the prototype can build in just seconds for multiple (17.5 s for creating for 100 applications, each connected to an IoT data source). This time is spent by the prototype only once, which makes it affordable for many environments. Moreover, as expected, the GetData task (parsing YML files and creating the ) was the most significant task in the building of a , whereas the StoreData task (indexing the in a database) did not contribute significantly to the building .

Figure 5.

Service and response times produced by the tasks GetData and StoreData.

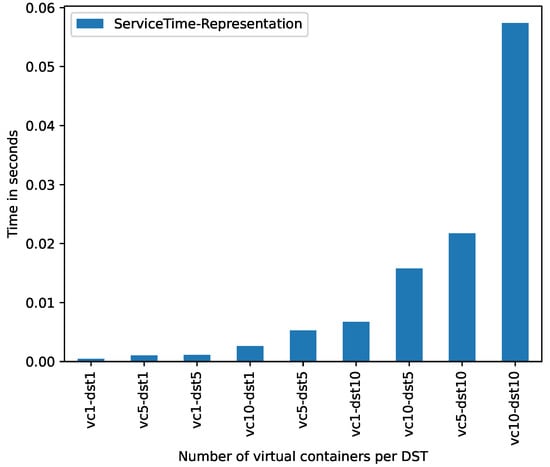

Figure 6 shows, on the vertical axis, the (for the task) spent on building the according to the sequences of s and virtual containers evaluated in these experiments (horizontal axis). As expected, the greater the number of s, the greater the spent by the prototype to create the representation of these .

Figure 6.

Service time produced by representation component.

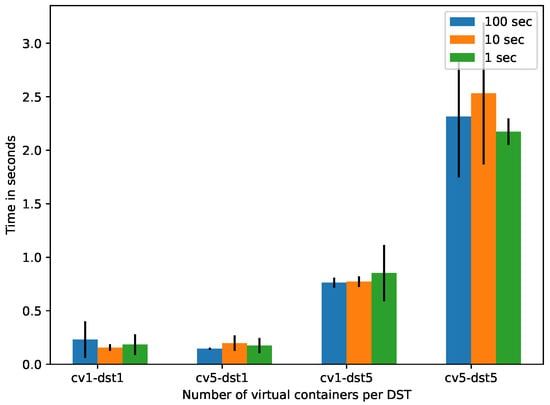

Figure 7 shows, on the vertical axis, the spent by the to retrieve information about and to the end-user (in this case a client application) per different sequences of and virtual containers (horizontal axis) for different time . It can be observed that increasing the number of s per also increases the number of requests performed by the per slot, which in turn increases . The obtained is acceptable for soft real-time operation [40].

Figure 7.

Response time in DST consumption.

5.3. A Case Study: Blockchain Network for Continuous Contract Verification

The previous evaluation showed the time costs of creating DSTs that allow decision-making processes to attain IoT data (through a simple REST API) without dealing with the underlying IoT and cloud technologies, just by invoking tasks on DSTs.

We also conducted a case study to show the flexibility of in a dataflow composed of an end-user (human, device, or application), , virtual containers (), and IoT devices with sensors attached (). This dataflow was emulated from a real trace of the transportation logistics of a food supply chain, which is used by a implementing a blockchain service that verifies contract violations by monitoring the GPS, temperature, and speed sensors of a set of transportation trucks [41].

Figure 8 shows the conceptual representation of this case study. As can be seen, two were created for two (including three virtual containers). The deliver information about (the system) and (physical devices) to end-users or applications (decision-making processes).

Figure 8.

Conceptual representation of the scenario for the case study.

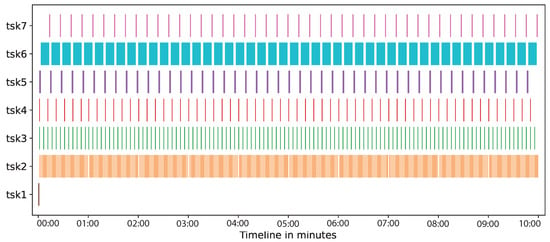

Figure 9 shows, on the horizontal axis, a timeline of the tasks performed by the participants in the dataflow (vertical axis) for verifying contract violations: Build (tsk1), data acquisition of temperature (tsk2), data acquisition of speed (tsk3), data acquisition of GPS (tsk4), send request (tsk5), get data (tsk6), and deliver request (tsk7). The timeline for this case study was 10 min. In tsk1, the prototype builds two . Then, data were acquired from the IoT sensors (tsk2, tsk3, and tsk4) by the virtual containers and stored on the blockchain network. Additionally, every 10 s during the timeline, the virtual containers verified, registered, and reported contract violations on the blockchain network: first the consumer sends a request to (tsk5), then the blockchain is queried by the corresponding virtual container (tsk6), and finally the responds to the consumer (tsk7). As can be seen, the impact of the creation (tsk1) and of communications (tsk5 and tsk7) is not significant in comparison with the time spent getting data from the blockchain network (tsk6) and transferring data from the sensors to the blockchain network (tsk2, tsk3, and tsk4). Figure 9 also shows that can capture the data produced by both s (tsk6) and s (tsk2, tsk3, and tsk4).

Figure 9.

Time of tasks in the case study.

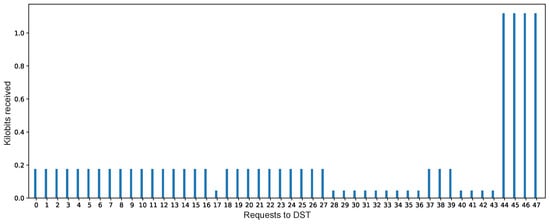

We observed that the DSTs were able to inform end-users, on demand and in real time, about contract violations. Of the 47 requests made to the DSTs, only 3 reported contract violations.
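The request cycle of tsk5 to tsk7 can be illustrated with a small in-memory sketch. Everything named here is hypothetical (`Ledger`, `ContainerStub`, `SentinelStub`, the record fields, and the sample readings); a plain Python list stands in for the blockchain network, and no real contract rules from the case study are reproduced.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """In-memory stand-in for the blockchain network (assumption)."""
    records: list = field(default_factory=list)

    def append(self, record):
        self.records.append(record)

    def violations(self):
        return [r for r in self.records if r["violation"]]


@dataclass
class ContainerStub:
    """Stand-in for a virtual container that reads the ledger (tsk6)."""
    ledger: Ledger

    def get_data(self):
        return self.ledger.violations()


@dataclass
class SentinelStub:
    """Stand-in for a sentinel that answers consumer requests."""
    container: ContainerStub

    def handle_request(self):
        found = self.container.get_data()   # tsk6: query the ledger
        return {"violations": found,        # tsk7: respond to the consumer
                "count": len(found)}


ledger = Ledger()
# Hypothetical sensor records registered by the containers (tsk2 to tsk4).
ledger.append({"sensor": "temperature", "value": 4.0, "violation": False})
ledger.append({"sensor": "speed", "value": 120.0, "violation": True})
ledger.append({"sensor": "gps", "value": (19.4, -99.1), "violation": False})

sentinel = SentinelStub(ContainerStub(ledger))
response = sentinel.handle_request()        # tsk5: consumer sends a request
print(response["count"])
```

The point of the indirection is that the consumer only ever talks to the sentinel; the container and the ledger behind it could be swapped without changing the consumer's code.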

The s can also deliver to the end-user, on demand and in real time, the data rate produced and received by s. Therefore, end-users can learn the behavior of the s at decision-making time by analyzing these data. In this case study, the prototype showed regular data production from the sensors, with some decreases and increases in the data rate. This could indicate a potential bottleneck in the reception of data or inconsistent data production from the sensors of the IoT devices. Figure 10 shows the amount of data received in the 47 user requests to the s.

Figure 10.

Kilobits received in the requests.

The averages of the resources r consumed by the prototype in the case study (processor, CPU; memory, MEM; file system, FS; and network, NET) are shown in Table 2. To obtain them, the consumption of these resources was first measured before and during the case study; this was carried out 32 times (). Then, the differences between the initial () and final () values were computed and added. Finally, the average of the differences was obtained, as shown in Equation (27).
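Since the procedure is described in words, one way to write the average it yields is given below. The notation is assumed for illustration and is not necessarily that of Equation (27): $n = 32$ is the number of measurements, and $r^{\mathrm{ini}}_{i}$ and $r^{\mathrm{fin}}_{i}$ denote the initial and final values of resource $r$ in the $i$-th measurement.

```latex
\bar{r} = \frac{1}{n} \sum_{i=1}^{n} \left( r^{\mathrm{fin}}_{i} - r^{\mathrm{ini}}_{i} \right),
\qquad r \in \{\mathrm{CPU}, \mathrm{MEM}, \mathrm{FS}, \mathrm{NET}\}, \quad n = 32
```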

Table 2.

Average values of resource consumption.

It is important to note that the blockchain network is not for the exclusive use of this prototype; it can also be consumed by external applications. This (blockchain network) can be replaced by another (e.g., a data analytics system). In that case, the must deliver the data produced by the new without deep changes, only by rebuilding the of the .

6. Discussion

In this paper, we demonstrated the viability of the proposed method by applying the implemented prototype in two scenarios. The first one is a controlled evaluation that extracts data from traces produced by ECG medical devices. This scenario showed the response and service time performance during the building and consumption of DSTs. The second scenario demonstrated the flexibility of DSTs to attain information (verification of contract violations on a blockchain network) in real time from a transportation logistics dataflow.

The obtained prototype was tested in distinct scenarios during intermediate and partial experiments before obtaining the results reported in this paper. In all these experiments, the prototype showed good performance in several tasks, such as discovering s, monitoring , and supervising through the created s. Several interactions were performed on these s, which were accessed by other s, human requests, and software applications.

According to the results of the controlled evaluation (Section 5.2), increasing the number of s per exponentially increases the response time for both the building and the consumption of s. Building 1 with 5 (5-1) takes an average response time of 0.90 s (see Figure 5). Consuming the with the same configuration (5-1) takes an average response time of 0.52 s (see Figure 7).

The case study (Section 5.3) supports the results achieved in the controlled evaluation. In this case, the average response time during the building of the s (sequence 3-s2) was 1.2 s (see Figure 9). For the consumption of the , the average response time was 13 s (see Figure 9). However, it is important to note that 10 of these 13 s correspond to the communication to and from the blockchain network for obtaining data. Thus, we can conclude that the real response time for the consumption is 3 s.

In all the experiments with the prototype, interacting with the created DSTs was easy because complex requests were not necessary. The benefits of using the created DSTs are as follows:

- Standardized interaction. Since a WoT card is based on W3C guidelines, a DST can be consumed by distinct users (humans, devices, or applications);

- Easy consumption. Through a DST, users can: (a) access data, properties, and events; and (b) invoke tasks and functions, both directly on target devices (PDs or VDs);

- Flexible access. A DST can be exploited by external users by means of RESTful requests from locations other than that of the environment;

- Decision-making aid. DSTs can be used as a means in decision-making tasks (discovering, classification, monitoring, supervising, and migration, to mention a few);

- Generation of DSTs. Building DSTs is quite simple and transparent if a well-structured configuration file (YML or YAML) is given;

- Minimal required resources. The execution of DSTs requires minimal infrastructure resources (CPU, MEM, FS, and NET).

7. Conclusions

This paper presented a cloud-based WoT method for creating digital twins of IoT devices, named digital sentinel twins (DST). A DST is an object that abstracts physical or virtual devices so that they can be operated by consuming their properties and events or by invoking their functions. This object has the advantage that, by investing minimal time and resources, an external user (human, software application, or virtual device) can access all the data and functions of those devices. That is useful for interacting with IoT devices in several scenarios.

The method comprises three phases: (a) Modeling, where the data of the or are acquired and the device is modeled with these elements, generating a dataflow entity (DfE); (b) Standardization, where the elements of the model are expressed in a standardized representation named WoT card, which follows the guidelines of the Web of Things to make its elements universally accessible by means of URIs; and (c) Consumption, where the advantage of the generated WoT card is that it can be consumed in external scenarios by distinct users (human, software applications, or virtual devices) in different ways.
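As an illustration of the Standardization and Consumption phases, the sketch below turns a small device model into a card whose properties, events, and actions are each addressable by a URI. It is a sketch under assumptions, not the prototype's WoT card format: the function `build_card`, the field names, the URI layout, and the sample device model are all hypothetical, and the base URI reuses the placeholder host from the consumption example.

```python
import json

BASE = "https://www.example.com/wotmodel/containers"  # placeholder host


def build_card(device_id, model):
    """Map each modeled element to a URI under the device's base path."""
    root = f"{BASE}/{device_id}"
    card = {"id": device_id, "name": model["name"], "links": {}}
    for kind in ("properties", "events", "actions"):
        card["links"][kind] = {
            item: f"{root}/{kind}/{item}" for item in model.get(kind, [])
        }
    return card


# Hypothetical device model, as the Modeling phase might produce it.
model = {
    "name": "truck-sensor",
    "properties": ["temperature", "speed"],
    "events": ["contract_violation"],
    "actions": ["kmeans"],
}

card = build_card("123456789", model)
print(json.dumps(card, indent=2))
```

A consumer that receives such a card needs no knowledge of the device itself: it reads the URI of the element it wants and issues an ordinary HTTP request against it.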

Based on the proposed method, a functional prototype was implemented. This prototype was tested by creating DSTs in several experiments considering distinct scenarios of use (discovering and monitoring of and applications, supervising , etc.). By means of the created DSTs, it was possible to consume data and invoke functions of virtual and physical devices. In this paper, two experiments were reported to demonstrate the viability of the proposed method for creating flexible and useful DSTs. The first experiment showed the time spent creating and consuming DSTs. The second one demonstrated the use of DSTs in a scenario of a blockchain network for verifying contract violations on sensors used in product transportation logistics.

A DST creates an abstract window for decision-making processes to attain information and data from virtual and physical devices. It acts as a useful mechanism for interacting with those devices in several scenarios. Its creation is not expensive in terms of time and computational resources, and it provides access to the data and functions of the target devices. These characteristics may be obtained without managing complex details associated with virtual and physical devices and cloud computing infrastructures.

Despite the benefits of the proposed method, it is important to mention some limitations of the proposed work:

- DSTs can only be created if a well-structured configuration file is given, in YML or YAML format;

- A DST can only be consumed through RESTful requests;

- When target devices (PDs or VDs) and DSTs reside in the same infrastructure, the response time of the performed tasks increases exponentially.

As further work, the inclusion of security aspects into the DSTs is considered; this will enable their manageability and control while maintaining their flexibility of use.

Author Contributions

Conceptualization, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; methodology, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; software, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; validation, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; formal analysis, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; investigation, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; resources, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; data curation, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; writing—original draft preparation, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; writing—review and editing, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; visualization, I.L.-A., J.L.G.-C., M.H.-T., C.M.-R., J.L.M.-R. and R.M.; funding acquisition, J.L.G.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the project Num. 41756 “Plataforma tecnológica para la gestión, aseguramiento, intercambio y preservación de grandes volúmenes de datos en salud y construcción de un repositorio nacional de servicios de análisis de datos de salud” by FORDECYT-PRONACES, Conacyt (México).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Piccialli, F.; Casolla, G.; Cuomo, S.; Giampaolo, F.; Di Cola, V.S. Decision making in IoT environment through unsupervised learning. IEEE Intell. Syst. 2019, 35, 27–35.

- Troussas, C.; Krouska, A.; Sgouropoulou, C. Improving Learner-Computer Interaction through Intelligent Learning Material Delivery Using Instructional Design Modeling. Entropy 2021, 23, 668.

- Wang, Y.; Li, P.F.; Tian, Y.; Ren, J.J.; Li, J.S. A Shared Decision-Making System for Diabetes Medication Choice Utilizing Electronic Health Record Data. IEEE J. Biomed. Health Inform. 2017, 21, 1280–1287.

- Peralta, G.; Iglesias-Urkia, M.; Barcelo, M.; Gomez, R.; Moran, A.; Bilbao, J. Fog computing based efficient IoT scheme for the Industry 4.0. In Proceedings of the 2017 IEEE International Workshop of Electronics, Control, Measurement, Signals and Their Application to Mechatronics (ECMSM), Donostia, Spain, 24–26 May 2017; pp. 1–6.

- Al-Hamadi, H.; Chen, I.R. Trust-Based Decision Making for Health IoT Systems. IEEE Internet Things J. 2017, 4, 1408–1419.

- Manavalan, E.; Jayakrishna, K. A review of Internet of Things (IoT) embedded sustainable supply chain for industry 4.0 requirements. Comput. Ind. Eng. 2019, 127, 925–953.

- Morabito, R.; Cozzolino, V.; Ding, A.Y.; Beijar, N.; Ott, J. Consolidate IoT Edge Computing with Lightweight Virtualization. IEEE Netw. 2018, 32, 102–111.

- Truong, H.L.; Dustdar, S. Principles for Engineering IoT Cloud Systems. IEEE Cloud Comput. 2015, 2, 68–76.

- Du, Y.; Huang, K. Fast Analog Transmission for High-Mobility Wireless Data Acquisition in Edge Learning. IEEE Wirel. Commun. Lett. 2019, 8, 468–471.

- Tadakamalla, U.; Menascé, D. FogQN: An Analytic Model for Fog/Cloud Computing. In Proceedings of the 2018 IEEE/ACM International Conference on Utility and Cloud Computing Companion (UCC Companion), Zurich, Switzerland, 17–20 December 2018; pp. 307–313.

- Sánchez-Gallegos, D.D.; Galaviz-Mosqueda, A.; Gonzalez-Compean, J.L.; Villarreal-Reyes, S.; Perez-Ramos, A.E.; Carrizales-Espinoza, D.; Carretero, J. On the Continuous Processing of Health Data in Edge-Fog-Cloud Computing by Using Micro/Nanoservice Composition. IEEE Access 2020, 8, 120255–120281.

- Carrizales-Espinoza, D.; Sánchez-Gallegos, D.D.; Gonzalez-Compean, J.L.; Carretero, J. A Federated Content Distribution System to Build Health Data Synchronization Services. In Proceedings of the 2021 29th Euromicro International Conference on Parallel, Distributed and Network-Based Processing (PDP), Valladolid, Spain, 10–12 March 2021; pp. 1–8.

- Yang, H.; Kim, Y. Design and Implementation of Fast Fault Detection in Cloud Infrastructure for Containerized IoT Services. Sensors 2020, 20, 4592.

- Sánchez-Gallegos, D.D.; Carrizales-Espinoza, D.; Reyes-Anastacio, H.G.; Gonzalez-Compean, J.; Carretero, J.; Morales-Sandoval, M.; Galaviz-Mosqueda, A. From the edge to the cloud: A continuous delivery and preparation model for processing big IoT data. Simul. Model. Pract. Theory 2020, 105, 102136.

- Carrizales, D.; Sánchez-Gallegos, D.D.; Reyes, H.; González-Compeán, J.; Morales-Sandoval, M.; Carretero, J.; Galaviz-Mosqueda, A. A data preparation approach for cloud storage based on containerized parallel patterns. In International Conference on Internet and Distributed Computing Systems; Springer: Berlin/Heidelberg, Germany, 2019; pp. 478–490.

- Saiáns-Vázquez, J.V.; Ordóñez-Morales, E.F.; López-Nores, M.; Blanco-Fernández, Y.; Bravo-Torres, J.F.; Pazos-Arias, J.J.; Gil-Solla, A.; Ramos-Cabrer, M. Intersection Intelligence: Supporting Urban Platooning with Virtual Traffic Lights over Virtualized Intersection-Based Routing. Sensors 2018, 18, 4054.

- Al-Tarawneh, M.A. Mobility-Aware Container Migration in Cloudlet-Enabled IoT Systems using Integrated Muticriteria Decision Making. Environments 2020, 11, 694–701.

- Martínez-Gutiérrez, A.; Díez-González, J.; Ferrero-Guillén, R.; Verde, P.; Álvarez, R.; Perez, H. Digital Twin for Automatic Transportation in Industry 4.0. Sensors 2021, 21, 3344.

- Ostermaier, B.; Schlup, F.; Römer, K. WebPlug: A framework for the Web of Things. In Proceedings of the 2010 8th IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), Mannheim, Germany, 29 March–2 April 2010; pp. 690–695.

- Assad, F.; Konstantinov, S.; Ahmad, M.; Rushforth, E.; Harrison, R. Utilising Web-based Digital Twin to Promote Assembly Line Sustainability. In Proceedings of the 2021 4th IEEE International Conference on Industrial Cyber-Physical Systems (ICPS), Victoria, BC, Canada, 10–12 May 2021.

- Bevilacqua, M.; Bottani, E.; Ciarapica, F.E.; Costantino, F.; Di Donato, L.; Ferraro, A.; Mazzuto, G.; Monteriù, A.; Nardini, G.; Ortenzi, M.; et al. Digital Twin Reference Model Development to Prevent Operators’ Risk in Process Plants. Sustainability 2020, 12, 1088.

- Gao, Y.; Lv, H.; Hou, Y.; Liu, J.; Xu, W. Real-time Modeling and Simulation Method of Digital Twin Production Line. In Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 24–26 May 2019; pp. 1639–1642.

- Alaasam, A.B.; Radchenko, G.; Tchernykh, A.; Compeán, J.G. Analytic Study of Containerizing Stateful Stream Processing as Microservice to Support Digital Twins in Fog Computing. Program. Comput. Softw. 2020, 46, 511–525.

- Lin, T.Y.; Shi, G.; Yang, C.; Zhang, Y.; Wang, J.; Jia, Z.; Guo, L.; Xiao, Y.; Wei, Z.; Lan, S. Efficient container virtualization-based digital twin simulation of smart industrial systems. J. Clean. Prod. 2021, 281, 124443.

- Borodulin, K.; Radchenko, G.; Shestakov, A.; Sokolinsky, L.; Tchernykh, A.; Prodan, R. Towards Digital Twins Cloud Platform: Microservices and Computational Workflows to Rule a Smart Factory. In Proceedings of the 10th International Conference on Utility and Cloud Computing, Austin, TX, USA, 5–8 December 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 209–210.

- Paraiso, F.; Challita, S.; Al-Dhuraibi, Y.; Merle, P. Model-Driven Management of Docker Containers. In Proceedings of the 2016 IEEE 9th International Conference on Cloud Computing (CLOUD), San Francisco, CA, USA, 27 June–2 July 2016; pp. 718–725.

- Piraghaj, S.F.; Dastjerdi, A.V.; Calheiros, R.N.; Buyya, R. ContainerCloudSim: An environment for modeling and simulation of containers in cloud data centers. Softw. Pract. Exp. 2017, 47, 505–521.

- Medel, V.; Rana, O.; Bañares, J.A.; Arronategui, U. Modelling Performance & Resource Management in Kubernetes. In Proceedings of the 9th International Conference on Utility and Cloud Computing; Association for Computing Machinery: New York, NY, USA, 2016; pp. 257–262.

- Alam, M.; Rufino, J.; Ferreira, J.; Ahmed, S.H.; Shah, N.; Chen, Y. Orchestration of Microservices for IoT Using Docker and Edge Computing. IEEE Commun. Mag. 2018, 56, 118–123.

- Muralidharan, S.; Song, G.; Ko, H. Monitoring and managing iot applications in smart cities using kubernetes. Cloud Comput. 2019, 11, 1–6.

- Muralidharan, S.; Yoo, B.; Ko, H. Designing a Semantic Digital Twin model for IoT. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 4–6 January 2020; pp. 1–2.

- Chittaro, L.; Guida, G.; Tasso, C.; Toppano, E. Functional and teleological knowledge in the multimodeling approach for reasoning about physical systems: A case study in diagnosis. IEEE Trans. Syst. Man Cybern. 1993, 23, 1718–1751.

- Lind, M.; Zhang, X. Functional modelling for fault diagnosis and its application for NPP. Nucl. Eng. Technol. 2014, 46, 753–772.

- Chandrasekaran, B.; Josephson, J.R. Function in device representation. Eng. Comput. 2000, 16, 162–177.

- Yildirim, U.; Campean, F.; Williams, H. Function modeling using the system state flow diagram. AI EDAM 2017, 31, 413–435.

- Umeda, Y.; Takeda, H.; Tomiyama, T.; Yoshikawa, H. Function, behaviour, and structure. Appl. Artif. Intell. Eng. V 1990, 1, 177–193.

- Luko, S. Risk Management Principles and Guidelines. Qual. Eng. 2013, 25, 451–454.

- Guinard, D.D.; Trifa, V.M. Building the Web of Things: With Examples in Node.js and Raspberry Pi; Simon and Schuster: New York, NY, USA, 2016.

- Rosenblatt, M. A central limit theorem and a strong mixing condition. Proc. Natl. Acad. Sci. USA 1956, 42, 43.

- Buttazzo, G.; Lipari, G.; Abeni, L.; Caccamo, M. Soft Real-Time Systems; Springer: Berlin/Heidelberg, Germany, 2005.

- Martinez-Rendon, C.; Camarmas-Alonso, D.; Carretero, J.; Gonzalez-Compean, J.L. On the continuous contract verification using blockchain and real-time data. In Cluster Computing; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–23.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).