Abstract

Advances in the manufacturing industry have led to modern approaches such as Industry 4.0, Cyber-Physical Systems, Smart Manufacturing (SM) and Digital Twins. The traditional manufacturing architecture, which consisted of hierarchical layers, has evolved into a hierarchy-free network in which all the areas of a manufacturing enterprise are interconnected. The field devices on the shop floor generate large amounts of data that can be useful for maintenance planning. Prognostics and Health Management (PHM) approaches use these data to support fault detection and Remaining Useful Life (RUL) estimation. Although a significant amount of research focuses on tool wear prediction and Condition-Based Monitoring (CBM), comparatively little attention has been given to the multiple facets of PHM. This paper reviews PHM approaches and current research trends, and proposes a three-phased interoperable framework to implement Smart Prognostics and Health Management (SPHM). The uniqueness of SPHM lies in its framework, which makes it applicable to any manufacturing operation across the industry. The framework consists of three phases: Phase 1 consists of the shopfloor setup and data acquisition steps, Phase 2 describes steps to prepare and analyze the data, and Phase 3 consists of modeling, predictions and deployment. The first two phases of SPHM are addressed in detail, and an overview is provided for the third phase, which is part of ongoing research. As a use-case, the first two phases of the SPHM framework are applied to data from a milling machine operation.

1. Introduction

The modern manufacturing era has enabled the collection of large amounts of data from factories and production plants. Data from all levels of an enterprise can be analyzed using Machine Learning (ML) and Deep Learning (DL) techniques. Interdisciplinary approaches such as Industry 4.0 (I4.0), Cyber-Physical Systems (CPS), Cloud-Based Manufacturing and Smart Manufacturing (SM) allow the real-time monitoring of operations in manufacturing facilities. These approaches greatly benefit maintenance operations by reducing downtime and thereby cutting costs. Monte-Carlo estimations suggest that annual maintenance costs in the United States amount to approximately USD 222 billion [1], and recalls due to faulty goods cost more than USD 7 billion each year [2]. One of the reasons for these relatively high costs is that manufacturing organizations prefer corrective or preventive maintenance over predictive maintenance. Prognostics and Health Management (PHM) is an interdisciplinary area of engineering that deals with monitoring system health, detecting failures, diagnosing the causes of failures and making a prognosis of component- and system-level failures using metrics such as Remaining Useful Life (RUL). PHM technologies are being widely incorporated into modern manufacturing approaches because they make in situ evaluation of the system possible.

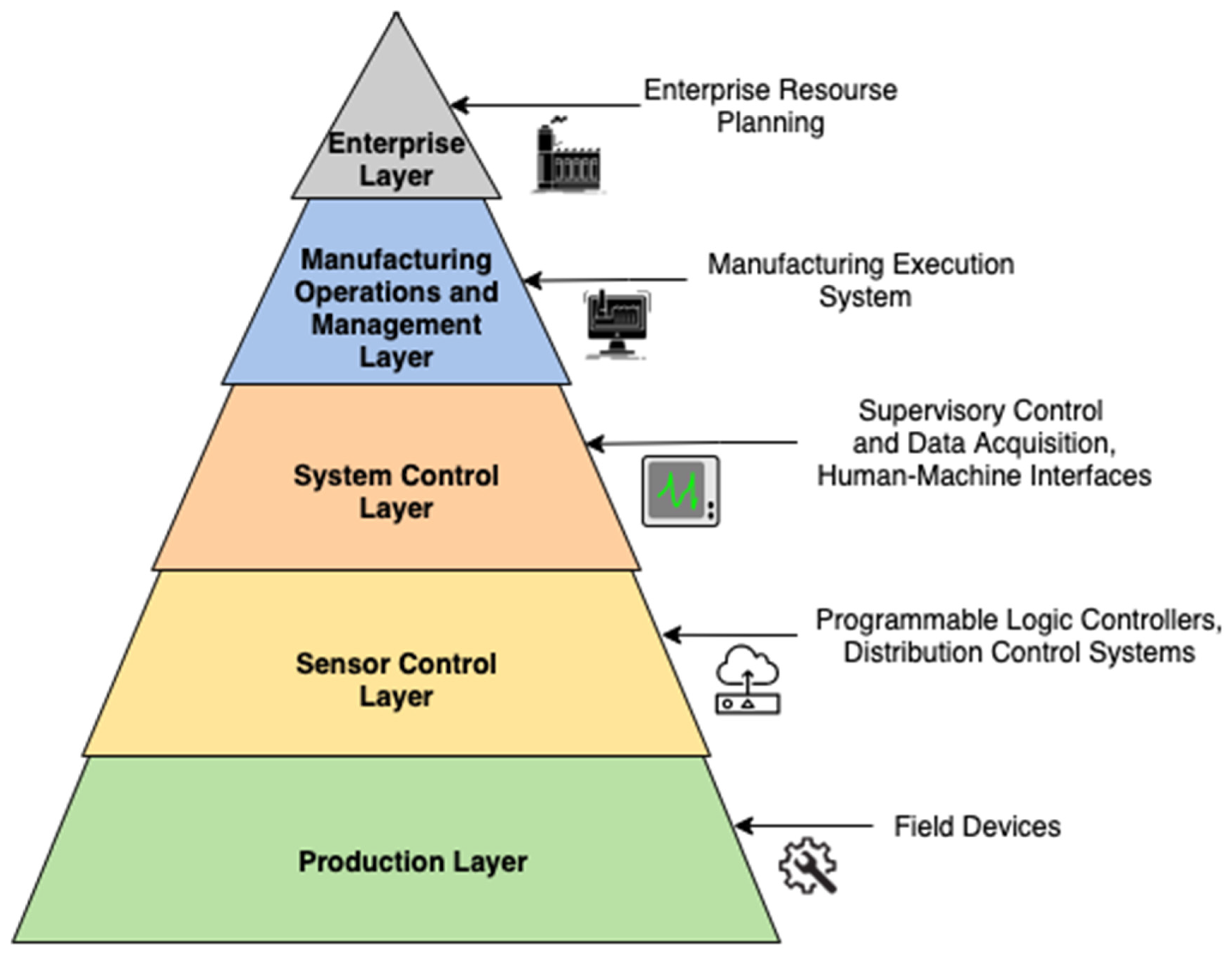

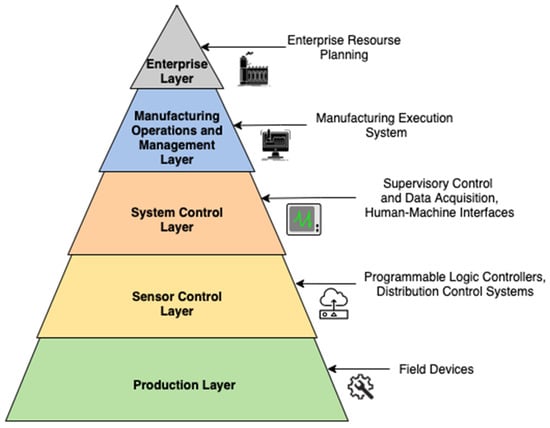

A Smart Manufacturing (SM) paradigm consists of interoperable layers that are capable of vertical as well as horizontal integration [3]. Figure 1 shows the different layers according to the ISA-95 Automation Pyramid. The physical layer of SM consists of the field devices, which comprise sensors and actuation equipment. To monitor and analyze the devices on the physical layer, data from all the individual components need to be incorporated into a single stream, which provides context for the entire operation. Beyond providing context, properly formatted data allow faster deployment of Artificial Intelligence (AI) and ML algorithms, and quicker implementation of ML- and DL-based condition monitoring techniques allows early detection of potential failures. The data come from various sources and involve multiple parameters, resulting in complex formats and sometimes redundancies. Challenges in effectively implementing PHM techniques for predictive maintenance include the availability of data, the preparation of data and the selection of appropriate ML and DL methods for modeling. Appropriate steps therefore need to be taken to ensure that manufacturing data can be acquired and preprocessed for PHM.

Figure 1.

Top-down approach to manufacturing system according to ISA-95 Automation Pyramid.

To address these requirements, this paper proposes a Smart Prognostics and Health Management (SPHM) framework in SM. To that end, the rest of the paper is structured as follows: Section 2 provides a review of existing approaches to maintenance and state-of-the-art PHM methods. Section 3 identifies the research gap based on existing work. Section 4 proposes an interoperable framework to implement SPHM in SM. Section 5 applies the first two phases of the proposed framework to data obtained from a milling operation. Section 6 discusses the results. Finally, Section 7 provides the conclusion and future work.

2. Review of Key Concepts and Trends

2.1. Overview of Maintenance Strategies

In recent years, maintenance engineering has emerged as one of the most important areas in manufacturing organizational planning, rather than being associated purely with production operations [4]. Manufacturers are adopting proactive approaches to maintenance not only as a cost-cutting measure, but also as a competitive strategy [5]. Manufacturing enterprises implement maintenance strategies based on production requirements, the complexity of machinery and equipment, and the costs involved. Broadly speaking, maintenance strategies follow one of three main approaches:

- Unplanned or reactive maintenance: machinery is typically allowed to break down, after which it is analyzed and repaired.

- Planned or preventive maintenance: the system is assessed at regular time intervals to determine whether any repair or replacement is necessary. It is important to note that the health of the system is not taken into consideration in establishing the time intervals.

- Predictive maintenance: a data-driven approach in which parameters concerning the health of the system are used to monitor the condition of the equipment and to determine the RUL.

Approaches to maintenance have evolved over the years with the advent of data analytics and advanced ML/DL techniques. Continuous monitoring of shop floor operations using the Internet of Things (IoT), smart sensors and smart devices allows organizations to make cost-saving decisions quickly, instead of relying on long, drawn-out analyses that have proven very costly. Enterprises are evolving their maintenance strategies around data-driven approaches, and the value of predictive maintenance is being demonstrated by its results.

2.2. Multi-Faceted Approach to PHM

Condition-Based Monitoring (CBM) techniques and Remaining Useful Life prediction are important features of predictive maintenance strategies that lead to PHM methods. However, it would be inaccurate to state that CBM and RUL define PHM methodologies, since PHM methods are multi-faceted. PHM methods consist of data acquisition and preprocessing, degradation detection, diagnostics, prognostics and the timely development of maintenance policies for decision making [6,7]. A brief overview of PHM's many facets is provided as follows:

- Data acquisition and preprocessing: For any predictive maintenance problem to be solved, the availability of data is of utmost importance. IoT devices and smart sensors are typically used to acquire data in manufacturing settings. The data are recorded and evaluated in real time, since certain anomalies may be detected at an early stage by maintenance engineers or control systems. Collecting such data is extremely important, as they provide vital information that helps to understand the relationships between the heterogeneous components of the system. Once the data are collected, they are analyzed and preprocessed to ensure that the crucial information that helps in failure detection is obtained.

- Degradation detection: Identifying that a component is degrading or that it is bound to fail is the next step once the data have been collected and prepared. Anomalies and failures can be detected using sensor readings and by other specified criteria, such as surface roughness, temperature, size of tools/equipment, etc.

- Diagnostics: Once a determination is made that a failure is occurring, understanding the cause of the failure is the next step. Failure types can be categorized to evaluate the extent of the failure, helping in finding its root causes. Operating conditions of individual components can be analyzed along with their interactions to help diagnose the cause of failures.

- Prognostics: With the ability to detect failures using diagnostic mechanisms, predictive methods are used to forecast system health and avoid potential failures. Model-based prognostics use Physics-of-Failure (PoF) methods to assess wear and predict failure. However, such approaches are limited, as even minor changes to the operations can result in poor predictive power. Data-driven approaches using ML and DL techniques are becoming more common for prognostics. By combining data-driven methods with crucial information from physics-based methods, highly accurate predictions can be made about systems.

- Maintenance decisions: Based on results from the predictive methods developed, manufacturing enterprises can determine the policies to follow for maintenance planning, helping to reduce downtime, increase yield and reduce losses.

Enumerating the many facets of PHM shows that predictive maintenance is an aggregation of methods from data engineering, reliability and quality engineering, material sciences, DL, ML and organizational decision making. While PHM methods in manufacturing face several challenges, a fundamental one is the need for an interoperable approach that allows PHM to be implemented across different industries.

2.3. Challenges in Implementing PHM in the Industry

While there is significant research being conducted on the different areas of PHM, there are some challenges as well. Researchers from the National Institute of Standards and Technology (NIST) outline some of the most significant challenges in PHM that can be categorized as follows [8].

2.3.1. In Prognostics

- Insufficient failure data or excessive failure data may skew prediction of RUL

- Inadequate standards to assess prognostic models

- Lack of precise real-time assessment of RUL

- Uncertainty in determining accuracy and performance of prognostic models.

2.3.2. In Diagnostics

- Expertise required in diagnosis of failures

- Limitations due to lack of training and of formal guidelines for validating diagnostic methods

- Difficulty in diagnosis due to outliers, noise in signal data and operating environment.

2.3.3. In Manufacturing

- Ability to effectively assess electronic components

- Integration of sensors and field devices with PHM standards

- Inconsistencies in data, data formats, and interoperability of data in manufacturing facilities

- Inadequate correspondence between production planning and control units and maintenance departments

- High level of complexity and heterogeneity in manufacturing systems.

2.3.4. In Enterprises

- Proactive involvement required towards maintenance to view PHM as a cost-saving approach and not a cost-inducing one

- Enterprises with legacy machines and equipment tend to go with one of the traditional approaches to maintenance, even though PHM methods are more effective

- Securing funding for PHM projects.

2.3.5. In Human Factors

- User friendly interfaces and applications

- Collection of expert knowledge

- Improvement in outlook towards implementing changes to existing mechanisms.

Most of the research being conducted is aimed at monitoring system health and RUL in PHM, while the other areas of PHM are not given as much importance. Our aim is to address some of the difficulties faced throughout all the phases of PHM. Since prognostics is one of the most significant areas in PHM, we look at some of the modeling approaches.

2.4. Overview of Prognostics Modeling Approaches

There are three main prognostics approaches to PHM modeling: physics-based models, data-driven models and hybrid models that are a combination of physics-based and data-driven models [9]. While all three approaches are used in industry, the application of prognostics modeling also faces several challenges [10]:

- Lack of readily available data in a standardized format

- Insufficient failure data due to imbalance in data classes

- Lack of physics-based parameters in the data.

Industrial data from manufacturing systems are also often complex and require a great deal of preparation to be suitable for modeling. For these reasons, effort is required to prepare and preprocess the data for prognostics modeling. Data-driven and hybrid models are often preferred over physics-based models given the flexibility of the analytical techniques that can be used. A synopsis of physics-based, data-driven and hybrid models based on [9,10,11,12] is outlined in Table 1.

Table 1.

Advantages and disadvantages of prognostics modeling approaches.

In the last two decades, great strides have been made in improving physics-based prognostic approaches. Several of these approaches are reviewed and applied to rotating machinery by Cubillo et al. [13]. Pecht et al. [14] have tested PoF methods for monitoring the health of electronic components. The RUL of lithium-ion batteries has been predicted by physics-based models in [15]. Several publications also address prognostics modeling based on evolutionary methods derived from bio-inspired [16,17] and neuro-inspired algorithms [18,19]. However, based on current research and industry trends, we limit our focus to data-driven approaches.

2.5. Current Trends in PHM Research

Advanced algorithms and optimization techniques are at the forefront of problem solving in PHM, and a review of the current state of research is necessary to understand these topics. ML and DL have become the modeling techniques of choice in studies that take data-driven approaches. While there is an abundance of analytical techniques available, there are few publicly available datasets for PHM research. Most datasets are limited to those released by academic institutions and government organizations. As a result, certain datasets have become benchmarks for testing prognostics models, as seen in [10,20]. Datasets used in PHM research often come from PHM data challenges, and their modeling objectives can be grouped into four main tasks: prognosis, fault diagnosis, fault detection and health assessment. An in-depth discussion of these datasets can be found in the review conducted by Jia et al. [21]. PHM can also be approached from the perspective of manufacturing paradigms, which are designed with the objectives of maintenance and PHM policies in mind. We now shift our focus to ML, DL, Health Index and manufacturing approaches, which are at the frontiers of PHM research.

2.5.1. Applications of Machine Learning in PHM

ML models have applications in a wide range of PHM areas. Although most of the research focuses on CBM and RUL prediction, some papers address fault detection as well. An extensive study on the use of Support Vector Machines (SVM) in RUL prediction was conducted by Huang et al. [22]. The authors investigate how SVM performs in real-time condition monitoring as well as in future RUL predictions. Mathew et al. [23] compare several supervised ML algorithms, such as Decision Trees, Random Forest, k-Nearest Neighbors (kNN) and regression, for estimating the remaining lifecycles of aircraft turbofan engines, using the Root Mean Square Error (RMSE) metric. The random forest model was found to perform best in this setting. Researchers in [24] compare the performance of neural networks, Support Vector Regression (SVR) and Gaussian regression on acoustic emission data from slow-speed bearings. Techniques such as Least Absolute Shrinkage and Selection Operator (LASSO) Regression, Multi-Layer Perceptron (MLP), SVR and Gradient-Boosted Trees (GBT) are tested on data collected from Unmanned Aerial Vehicles (UAV) in [25]. In this case, non-linear techniques were preferred over linear models, with the best performance achieved by GBT. In fault detection, SVR outperforms multiple regression on a milling machine dataset, especially when more sensor data are used [26]. Lei et al. [27] reviewed Machine Learning techniques used in intelligent fault detection and outlined their challenges. It is important to note that several studies use semi-supervised ML methods for fault detection of manufacturing equipment, as seen in [28,29,30], but a discussion of these topics is beyond the scope of this paper.
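Since several of the comparisons above rank models by RMSE, the metric is worth stating explicitly: for $n$ observed RUL values $y_i$ and predictions $\hat{y}_i$, $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2}$. The following minimal Python sketch ranks two candidate models this way. The RUL values and the model names are hypothetical and are not taken from [23].

```python
import math


def rmse(y_true, y_pred):
    """Root Mean Square Error between observed and predicted RUL values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical observed RUL (in cycles) for five engines.
observed = [112, 98, 69, 82, 91]

# Hypothetical predictions from two candidate models.
predictions = {
    "model_a": [110, 95, 75, 80, 90],
    "model_b": [120, 90, 60, 85, 100],
}

scores = {name: rmse(observed, pred) for name, pred in predictions.items()}
best = min(scores, key=scores.get)  # lower RMSE is better
print(best, {name: round(s, 2) for name, s in scores.items()})
```

Because RMSE squares the errors, it penalizes a few large misses more heavily than many small ones. This is why `model_b`, with errors of up to 9 cycles, scores worse than `model_a`.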

2.5.2. Applications of Deep Learning in PHM

Deep Learning (DL) methods have emerged as frontrunners in RUL assessment, largely due to their deep architectures and the ability to tune their optimization parameters. Recurrent Neural Networks (RNNs) are popular DL methods in PHM due to their wide range of applicability. Malhi et al. [31] focus on preprocessing signals using wavelet transformation and apply an RNN to investigate its effects on performance. Heimes [32] uses an RNN with an Extended Kalman Filter (EKF), backpropagation and Differential Evolution (DE). Palau et al. [33] implement a Weibull Time-To-Event (WTTE) method with an RNN to predict time-to-failure and demonstrate how it affects real-time distributed collaborative prognostics. Gugulothu et al. [34] developed a novel method using embedded time series measurements that does not rely on any prior knowledge about machine degradation. Recently, probabilistic generative modeling using Deep Belief Networks (DBN) has been used for feature extraction and RUL estimation. The authors of [35] argue that feature extraction from SM and I4.0 manufacturing data can be troublesome because it requires extensive prior knowledge, and they deploy a Restricted Boltzmann Machine (RBM)-based DBN to estimate RUL. Another interesting study by Zhao et al. [36] uses DBN to extract features, supplemented by a Relevance Vector Machine (RVM), to predict the RUL of battery systems. A multi-objective DBN ensemble using evolutionary algorithms was employed by [37] for RUL prediction of turbofan engines. Methods such as RBM have also been implemented with regularization to generate features that are correlated with fault detection criteria [38]. Convolutional Neural Networks (CNNs) have also been used for machine health monitoring with one-dimensional data in [39,40,41,42] and for feature extraction and automated feature learning with two-dimensional data in [43,44,45,46,47,48].
A comprehensive review of Deep Learning methods in PHM, such as Autoencoders, RNNs, RBM and DBN, was conducted by Khan et al. [49].

2.5.3. Health Index Construction

Another important area in health monitoring and management is the construction of a Health Index (HI) from input data parameters and the use of the HI in fault detection and prognostics. Liu et al. [50] construct HIs using Principal Component Analysis (PCA) and apply similarity matching with distance measures to estimate the RUL of a factory slotter. In lifecycle prediction of battery systems, Liu et al. [51] develop a novel technique to extract an HI while preserving important degradation information. A comparison of RUL predictions with and without an explicit HI on data from induction motors shows that HI-based RUL prediction is preferable [52].
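To illustrate PCA-based HI construction, the sketch below takes the first principal component of z-scored condition features as a one-dimensional HI and rescales it to [0, 1]. This is a simplified construction that rests on several assumptions. The method in [50] may differ, and its similarity-matching RUL step is omitted. The synthetic vibration and temperature trends are also hypothetical, as is the rule that orients the HI so that it increases over the record.

```python
import numpy as np


def pca_health_index(features):
    """HI = first principal component of z-scored features, rescaled to [0, 1].

    features: array of shape (n_samples, n_features), with n_features >= 2.
    """
    X = np.asarray(features, dtype=float)
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0                  # leave constant features unscaled
    Z = (X - mu) / sigma                     # z-score each feature (column)
    cov = np.cov(Z, rowvar=False)            # feature-by-feature covariance
    _, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    pc1 = eigvecs[:, -1]                     # direction of largest variance
    hi = Z @ pc1                             # project each observation onto PC1
    if hi[-1] < hi[0]:                       # eigenvector sign is arbitrary;
        hi = -hi                             # assume degradation grows over time
    span = hi.max() - hi.min()
    return (hi - hi.min()) / span if span > 0 else np.zeros_like(hi)


# Hypothetical run-to-failure record: two features drifting upward with noise.
rng = np.random.default_rng(0)
t = np.arange(50)
vibration_rms = 0.5 + 0.02 * t + rng.normal(0.0, 0.02, t.size)
temperature = 40.0 + 0.3 * t + rng.normal(0.0, 0.3, t.size)

hi = pca_health_index(np.column_stack([vibration_rms, temperature]))
```

The features are z-scored first so that temperature, which has the larger numeric range, does not dominate the principal component simply because of its units.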

2.5.4. PHM Using Manufacturing Paradigms

Over the last few decades, manufacturing environments have been revolutionized from a multi-objective viewpoint. Costs and yield are no longer the sole objectives; product customization, sustainability, modularity on the shopfloor and servitization are equally important. This multi-objective approach to manufacturing has allowed PHM to be built in while designing new systems. Approaches such as mass customization, reconfigurable manufacturing, service-oriented manufacturing and sustainable manufacturing allow PHM goals to be incorporated within the manufacturing system's setup [53].

Mass customization is a multi-dimensional approach to manufacturing that deals with product design, manufacturing processes and the manufacturing supply chain [54]. This approach presents a shift in the traditional manufacturing objective, changing it from a high production volume with a low variation in products to a high variety of products with a lower production volume. This of course presents its own challenges to PHM due to the sheer number of customizations required in production processes. To tackle this, maintenance policies are integrated based on condition monitoring of systems and order volumes by Jin and Ni [55]. Decisions can also be made from a cost-based perspective by including all the maintenance and production costs in the objective [56].

Reconfigurable manufacturing systems (RMS) are modular in design, allowing their structure to be changed to adjust to inherent changes or shifts in market demand [57]. The maintenance policies of an RMS depend on its structure: parallel, series, series-parallel, etc. [58]. A preventive maintenance-based RMS was developed by Zhou et al. [59]. Koren et al. [60] incorporated the objectives of reduce, reuse, recycle, recover, redesign and remanufacture into RMS to improve the response time for managing system health.

Service-oriented manufacturing offers a Product Service System (PSS), allowing products and services to be selected based on customer needs [61]. PHM services can be offered depending on the manufacturer's needs, enabling a highly customized approach to PHM. To maximize the prognostic and diagnostic capabilities of Original Equipment Manufacturers (OEMs), Ning et al. [62] developed a cloud-based approach.

3. Research Gap and Proposal

Many studies have focused on implementing ML and DL algorithms to identify the RUL of machinery and equipment. While most publications aim to test the predictive power of data-driven models, very few enumerate all the steps required to implement PHM methods in manufacturing. Traini et al. [63] developed a framework to address predictive maintenance in milling based on a generalized methodology. Yaguo et al. reviewed the stages in CBM, from data acquisition to RUL estimation, for different PHM datasets. Mohanraj et al. [64] reviewed the steps in condition monitoring from the perspective of a milling operation. A framework for PHM in manufacturing with cost–benefit analysis was developed by Shin et al. [65], and a use-case with battery data was evaluated. While these publications provide noteworthy steps for implementing prognostics models in industry, no framework addresses modern PHM approaches to SM with an in-depth treatment of all the steps involved. To address this research need, we introduce Smart Prognostics and Health Management (SPHM) and propose an interoperable framework for SPHM in SM.

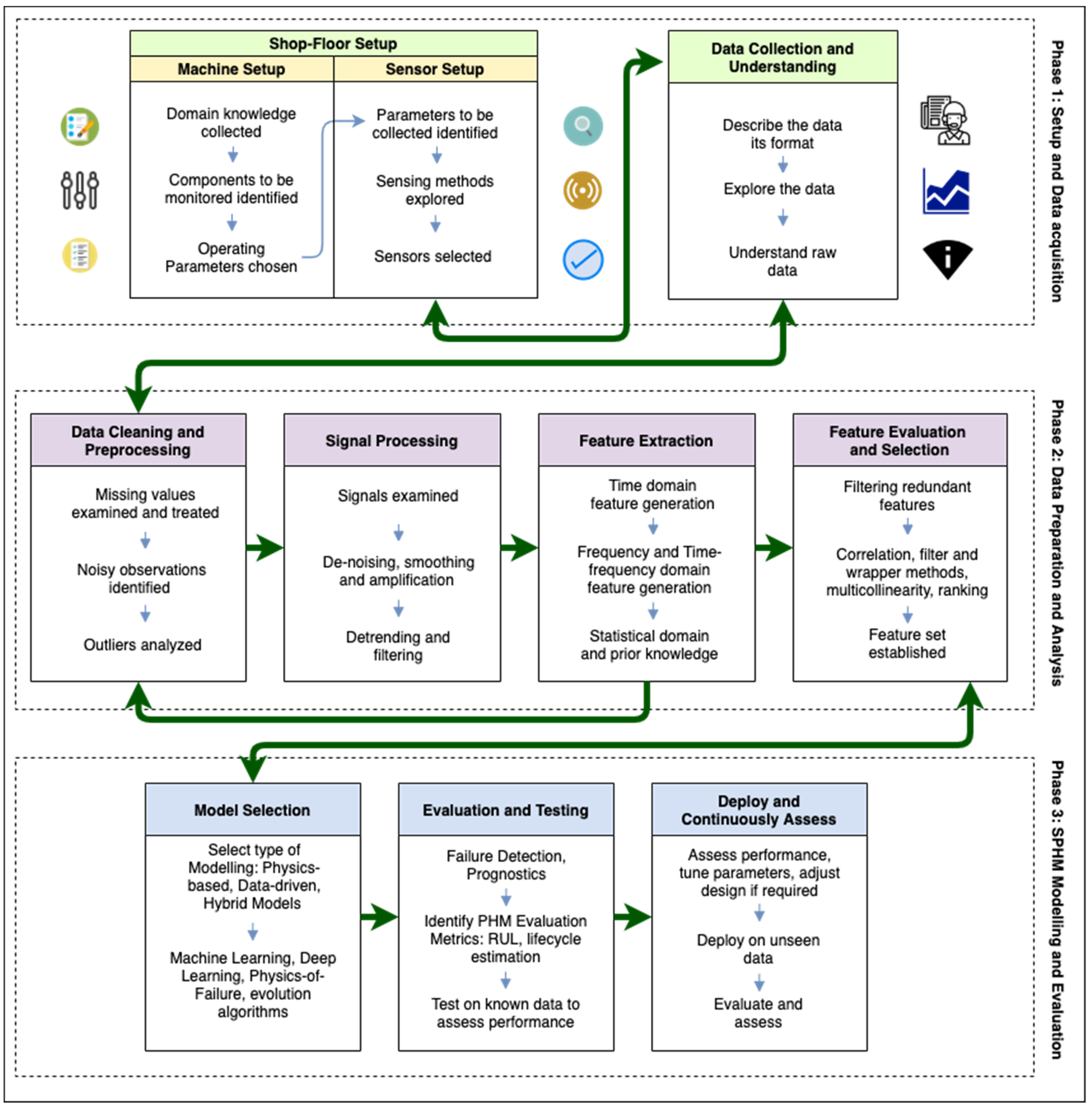

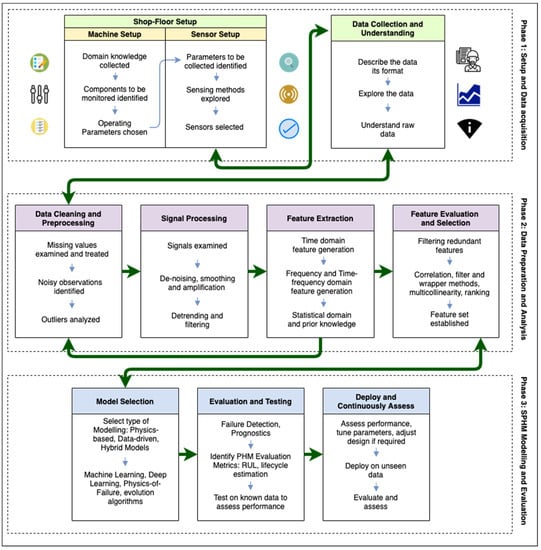

4. An Interoperable Framework for SPHM in SM

Current approaches to PHM are tailor-made for individual systems and components on the shopfloor. Predictive maintenance methodologies, although applicable to heterogeneous machinery, are generic in their approach and do not specify the particulars of data acquisition, modeling and applications. SPHM is a concept that addresses the many facets of PHM in Smart Manufacturing in an interoperable manner. SPHM's uniqueness lies in its interoperable framework, which addresses all the specifics of PHM in a phased approach using Industry 4.0 and SM standards. The structure of the framework is loosely based on the Cross-Industry Standard Process for Data Mining (CRISP-DM) [66], an industry standard for applying data mining (DM) and ML with business objectives in mind. The framework consists of three interconnected phases, each of which addresses some of the challenges discussed previously. The proposed SPHM framework for SM with all its phases is shown in Figure 2. The first phase covers the setup and data acquisition on the shopfloor. The second phase covers data preparation and the analysis of the collected parameters, including signal processing. The third phase covers the modeling approaches to SPHM and their evaluation.

Figure 2.

An interoperable framework for Smart Prognostics and Health Management (SPHM) in Smart Manufacturing (SM).

This paper provides an in-depth description of the first two phases of the SPHM framework, and an overview of the methods in the third phase. A detailed discussion and implementation of modeling techniques are part of ongoing research.

4.1. Phase 1: Setup and Data Acquisition Phase

4.1.1. Shopfloor Setup

The first phase in the framework involves identifying the machinery or equipment to be assessed, using knowledge from the maintenance and production departments. Prior domain knowledge from engineers and technicians helps to identify which components are crucial to the operation; in manufacturing operations, these are often tool tips or bearings. Once the components have been identified, the next step is to collect information about the operating parameters and environmental conditions. Interacting factors also need to be considered in this step. A detailed report with all data about the operating parameters is prepared, and the relevance of the parameters is discussed with engineers. Setting up the equipment and sensors is the next part of this step. Most machinery already comes with preinstalled sensors; for example, Computer Numerical Control (CNC) machines often have sensors that capture electric current, vibration, acoustic emission, spindle, torque, etc. The information from these sensors is vital for analyzing the health of the machine. If additional sensors are required, or if the identified equipment consists of legacy machinery, sensors will have to be retrofitted. Some sensors may be installed for human-factor purposes, essentially aimed at operator safety. These sensors may have no bearing on prognostics or fault detection, so they can be excluded from selection based on existing knowledge. Using information from the operating parameters and domain knowledge, a detailed step-by-step guide to set up and run the machine is produced. Once a strategy is in place to conduct the experiment under standard operating conditions, the next step is to identify an appropriate data collection methodology.
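The sensor-selection step can be recorded as a simple inventory in which each sensor is tagged with the purpose it serves. Safety-only sensors can then be excluded before data collection begins. The Python sketch below is hypothetical: the sensor names, the `purpose` tag and the `phm_relevant` helper are illustrative assumptions, not part of any CNC vendor's interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str          # hypothetical tag used in the data logger
    measurement: str   # physical quantity the sensor captures
    purpose: str       # assumed tag: "process" or "safety"
    retrofitted: bool  # True if added to a legacy machine


# Hypothetical inventory agreed with maintenance and production engineers.
INVENTORY = [
    Sensor("spindle_current", "electric current", "process", False),
    Sensor("table_vibration", "vibration", "process", False),
    Sensor("spindle_ae", "acoustic emission", "process", True),
    Sensor("door_interlock", "door state", "safety", False),
    Sensor("light_curtain", "operator presence", "safety", False),
]


def phm_relevant(sensors):
    """Keep sensors that describe the process; drop safety-only sensors."""
    return [s for s in sensors if s.purpose == "process"]


selected = [s.name for s in phm_relevant(INVENTORY)]
retrofit_needed = [s.name for s in INVENTORY if s.retrofitted]
```

Keeping the inventory as data, rather than as ad hoc notes, makes the selection decisions reviewable by the engineers who supplied the domain knowledge.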

4.1.2. Data Collection and Understanding

One of the first and arguably most important considerations in this step is how and where to store the data from operations and devices. Most organizations require the ability to access data securely. A key aspect of interoperability is the ability of the tasks that share data to access them [3]. Cloud-based systems and Big Data platforms provide secure access to data by using cybersecurity mechanisms to prevent misuse of the data. Systems can be set up to directly upload and download data collected from the shopfloor. The collected data need to be analyzed and thoroughly reviewed to ensure that no inconsistencies were introduced during acquisition. The data files should also be recorded in a format that is readable and suitable for information extraction. Preliminary investigation of the data using exploratory analyses such as signal plots, ranges of attribute values and a basic statistical review helps to gain a better understanding of the experiment. Information such as the sampling rate and the frequencies pertaining to signal measurements should be recorded. Any other a priori information that would aid in describing the data should also be included in this step.
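The following is a minimal sketch of the preliminary statistical review. It assumes a single channel stored as a list of floats with a known sampling rate; the 50 Hz test channel and the `summarize_signal` helper are hypothetical. Recording the sampling rate alongside the samples is what allows the duration of the record to be recovered.

```python
import math
import statistics


def summarize_signal(samples, sampling_rate_hz):
    """Basic exploratory statistics for one recorded sensor channel."""
    return {
        "n_samples": len(samples),
        "duration_s": len(samples) / sampling_rate_hz,
        "min": min(samples),
        "max": max(samples),
        "mean": statistics.fmean(samples),
        "std": statistics.stdev(samples),  # sample standard deviation
        "rms": math.sqrt(sum(x * x for x in samples) / len(samples)),
    }


# Hypothetical channel: a 50 Hz sine sampled at 1 kHz for 0.2 s.
fs = 1000
channel = [math.sin(2 * math.pi * 50 * n / fs) for n in range(200)]
summary = summarize_signal(channel, fs)
for key, value in summary.items():
    print(f"{key:>10}: {value:.4f}")
```

For a clean sine, the RMS should be close to $1/\sqrt{2}$ of the peak. A large deviation from such expectations on a known test signal is one quick way to catch acquisition problems before the real data are analyzed.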

4.2. Phase 2: Data Preparation and Analysis

In the data preparation phase, there are a few key steps that need to be followed: data cleaning and preprocessing, signal preprocessing, feature extraction and feature evaluation and selection. These steps are paramount to successful implementation of CBM and predictive maintenance methods as they preserve and extract information from the data that best represents the physical experiment.

4.2.1. Data Cleaning and Preprocessing

The procedure to clean and preprocess the data can be understood best by posing the following four questions: (1) Are there any missing values? (2) Is there any noise in the data? (3) Are there any outliers or skewed measurements? (4) Are the data on the same scale? Data cleaning and preprocessing involve many more techniques depending on the type of data recorded, but the treatment of missing values, noise, outliers or skewed instances and scaling and normalization are some of the most crucial ones to be considered.

Missing values can be problematic in any data. Large numbers of missing values can affect the analysis by introducing a bias, causing skewness. Missing value treatment generally involves either the deletion of the record if permissible or replacement of the missing value by using imputation methods [67,68]. Commonly used imputation techniques are mean, median or mode imputation. In this case, the missing values are replaced by the respective mean, median or mode of all the values of that attribute. Predictive modeling methods such as regression and kNN are also used for imputation. However, it is critical to understand which attributes contain missing values. If it is the dependent variable, the strategy most likely to be used would be the removal of the instances that contain the missing values, since we have no control over the values in that attribute. In the case of independent variables, we may have the freedom to use one of the imputation methods discussed previously.
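The two cases described above can be sketched in Python as follows: records with a missing dependent variable are dropped, and missing independent variables are imputed. The field names (`force`, `temp`, `wear`) and the helper functions are hypothetical, and model-based imputation with regression or kNN is not shown.

```python
import statistics


def drop_missing_target(rows, target):
    """Remove records whose dependent variable is missing (not imputed)."""
    return [row for row in rows if row.get(target) is not None]


def impute(column, strategy="median"):
    """Fill None entries of an independent-variable column with mean, median or mode."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = {
        "mean": statistics.fmean,
        "median": statistics.median,
        "mode": statistics.mode,
    }[strategy](observed)
    return [fill if v is None else v for v in column]


# Hypothetical milling records: cutting force, temperature and tool wear (target).
rows = [
    {"force": 1.2, "temp": 35.0, "wear": 0.10},
    {"force": None, "temp": 36.0, "wear": 0.12},
    {"force": 1.6, "temp": None, "wear": None},   # target missing: dropped
    {"force": 1.4, "temp": 38.0, "wear": 0.15},
]
rows = drop_missing_target(rows, "wear")
forces = impute([row["force"] for row in rows], "median")
temps = impute([row["temp"] for row in rows], "mean")
```

The target is filtered before imputation so that the fill values are computed only from records that will actually be used for modeling.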

Noise in the data is generally caused by measurement errors that corrupt the data. Noise may cause inconsistencies in modeling and analysis and possibly lead to incorrect results. Examples of noise are duplicate records and mismatched data types. The ideal method to address such noise is to remove the affected instances. If there is significant noise in the attributes, those values may be removed individually and replaced using one of the imputation methods. Noise in the data may also be caused by probabilistic randomness. This type of noise, also known as random error, can be very difficult to predict. These values have to be examined and are generally retained, as they can be accounted for as randomness in measurements. The noise discussed here is different from noise in signals, which is addressed in the signal preprocessing step.
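The following sketch removes the two kinds of noise named above: exact duplicate records and values with a mismatched data type. It assumes that records are stored as dictionaries with hypothetical field names. Random measurement error is deliberately left untouched, since the text recommends retaining it.

```python
def remove_corrupt_records(rows, numeric_fields):
    """Drop exact duplicate records and records whose numeric fields are not numbers."""
    seen = set()
    clean = []
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent fingerprint of the record
        if key in seen:
            continue                        # duplicate record
        seen.add(key)
        values = [row.get(field) for field in numeric_fields]
        if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
            clean.append(row)               # keep only records with valid numeric types
    return clean


# Hypothetical vibration log with a duplicated line and a logging fault.
raw = [
    {"t": 0.00, "vib": 0.31},
    {"t": 0.01, "vib": 0.29},
    {"t": 0.01, "vib": 0.29},    # duplicate record
    {"t": 0.02, "vib": "ERR"},   # data-type mismatch
    {"t": 0.03, "vib": 0.33},
]
clean = remove_corrupt_records(raw, ["t", "vib"])
```

Booleans are excluded explicitly because Python treats `bool` as a subclass of `int`, so a stray `True` would otherwise pass as a numeric reading.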

Regarding the third question, outliers can arise for a variety of reasons. Outliers may be genuine observations that exist for a reason. For example, readings taken at the moment a tool breaks can be considered outliers. These readings may be extreme compared to the others, but they are still genuine, since they convey essential information. Outliers may also be due to systematic or measurement errors. These outliers are problematic, since they can be attributed to flaws in the readings and convey no genuine information; the preferred solution is simply to remove them from the data. Differences in measurement criteria may also cause inherent skewness that has to be treated. Sometimes, attributes in their existing format may not convey the necessary information because of some form of skewness. Skewness does not necessarily mean there is something wrong with the data; it could be due to a small sample size or to intrinsic factors. In such cases, a transformation may be necessary. Commonly used transformations are log, power, square root, etc. A transformation may also be necessary when attributes are non-linear and need to be linearized to form a meaningful relationship with the other attributes or the target.
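The text does not prescribe an outlier rule. One common choice, assumed here, is Tukey's fences on the interquartile range (IQR). Consistent with the tool-breakage example, the sketch only flags values for review rather than deleting them. It also includes a log transform for right-skewed, non-negative attributes. The spindle-current readings are hypothetical.

```python
import math
import statistics


def iqr_outlier_flags(values, k=1.5):
    """Flag values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


def log_transform(values):
    """Reduce right skew; log1p keeps zero-valued readings finite."""
    if any(v < 0 for v in values):
        raise ValueError("log transform requires non-negative values")
    return [math.log1p(v) for v in values]


# Hypothetical spindle-current readings (A); the spike coincides with a tool breakage.
current = [5.1, 5.0, 5.2, 4.9, 5.1, 5.0, 12.8, 5.2]
flags = iqr_outlier_flags(current)
to_review = [(i, v) for i, (v, f) in enumerate(zip(current, flags)) if f]
```

A flagged value is a candidate for review, not for automatic deletion. Here the spike is a genuine tool-breakage reading and, following the discussion above, should be kept; only values traced to systematic or measurement errors would be removed.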

Another consideration in this step is scaling or normalizing the data. Normalization or standardization may be required if the recorded data are on varying scales: features with larger numeric ranges can dominate distance-based or gradient-based models regardless of how informative they actually are. The following are some commonly used methods to scale and normalize data:

1. Min–max normalization

Min–max normalization shifts and rescales all data values to the range between 0 and 1. The minimum and maximum values of the feature are recorded, and Equation (1) is applied:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)} \tag{1}$$

2. Mean normalization

Mean normalization centers the data around the mean $\mu$ and divides by the range, as shown in Equation (2):

$$x' = \frac{x - \mu}{\max(x) - \min(x)} \tag{2}$$

3. Unit scaling

For vectors of continuous values, scaling them to unit length preserves their direction but changes their magnitude to 1; see Equation (3):

$$x' = \frac{x}{\lVert x \rVert} \tag{3}$$

4. Standardization

Standardization makes a loose distributional assumption about the data and scales it around the mean. Z-score standardization assumes a normal distribution and uses the mean value ($\mu$) and standard deviation ($\sigma$), as seen in Equation (4):

$$z = \frac{x - \mu}{\sigma} \tag{4}$$
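The four scaling methods can be sketched in Python with NumPy as follows. This is a minimal illustration, not part of the original study: the function names are hypothetical, the standard deviation in Equation (4) is assumed to be the population value (NumPy's default `ddof=0`), and no guard is included for constant features, whose range or standard deviation is zero.

```python
import numpy as np

def min_max(x):
    """Equation (1): rescale values to the range [0, 1]."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())

def mean_normalize(x):
    """Equation (2): subtract the mean and divide by the range."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / (x.max() - x.min())

def unit_length(v):
    """Equation (3): keep the direction of v but give it Euclidean norm 1."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def z_score(x):
    """Equation (4): subtract the mean and divide by the standard deviation."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()          # population std (ddof=0)

x = [2.0, 4.0, 6.0, 8.0]
for f in (min_max, mean_normalize, unit_length, z_score):
    print(f.__name__, np.round(f(x), 3))
```

In practice, the minimum, maximum, mean and standard deviation would be computed on the training data only and reused to transform validation or test data, so that no information leaks from held-out instances.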

The preprocessing steps also apply to any new features that are extracted or generated later. As a result, the data preparation and analysis phase is iterative: its steps go back and forth rather than being performed strictly in sequence.

4.2.2. Signal Preprocessing

The data collected from sensor measurements are, in most cases, in the form of signals. Signal processing is a complex field of study that involves identifying the type of signal and modifying it to improve its quality. The first consideration is the type of signals present in the data. In manufacturing data, we usually observe sound, vibration and power signals. Since these signals are observed across many different machines in the industry, we focus our discussion on preprocessing them. Signal preprocessing involves key tasks such as denoising, amplification and filtering, which we discuss briefly in this section.

Noise reduction, or denoising, is the process of diminishing noise in the signals. Removing noise entirely is not possible, so the goal of this step is to reduce it to an acceptable level. The Signal-to-Noise Ratio (SNR), the ratio of signal power to noise power (commonly expressed in decibels), quantifies how much of a measurement is true signal versus noise. Techniques such as wavelet-transform-based decomposition and median filters [69] reduce the amount of noise while preserving the underlying signal. Signal amplification is another method used in signal processing; it improves the quality of the signal by one of two approaches: boosting its resolution or increasing its SNR. Signals are generally amplified to meet the threshold requirements of the equipment being used.
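A small sketch of SNR measurement and median-filter denoising follows, assuming a synthetic sine wave corrupted by impulsive spikes. Computing SNR this way requires a clean reference signal, which is only available in simulation; the function names, window width and noise model are illustrative assumptions.

```python
import numpy as np

def snr_db(clean, noisy):
    """SNR in decibels: 10*log10(signal power / noise power), where the noise is
    taken to be the difference between the noisy and the clean reference signal."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noisy, dtype=float) - clean
    return 10.0 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))

def median_filter(x, k=5):
    """Replace each sample by the median of the k samples centred on it.
    k must be odd; the ends are padded by repeating the first and last samples."""
    if k % 2 == 0:
        raise ValueError("window width k must be odd")
    x = np.asarray(x, dtype=float)
    xp = np.pad(x, k // 2, mode="edge")
    return np.array([np.median(xp[i:i + k]) for i in range(len(x))])

# Synthetic example: a 5 Hz sine sampled at 1 kHz with 30 random spikes added.
rng = np.random.default_rng(0)
t = np.arange(1000) / 1000.0
clean = np.sin(2 * np.pi * 5 * t)
noisy = clean.copy()
spikes = rng.choice(len(t), size=30, replace=False)
noisy[spikes] += rng.normal(0.0, 3.0, size=30)

denoised = median_filter(noisy, k=5)
print(f"SNR before: {snr_db(clean, noisy):.1f} dB, after: {snr_db(clean, denoised):.1f} dB")
```

A median filter is well suited to isolated spikes like these; broadband noise is usually handled with other techniques, such as the wavelet-based methods mentioned above.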

Filtering is one of the steps in signal conditioning, in which low-pass and high-pass filters attenuate signal content on one side of a specified cut-off frequency. Low-pass filters block high frequencies while allowing low frequencies to pass, and high-pass filters block low frequencies while allowing high frequencies to pass. Low-pass filters are therefore used to remove high-frequency noise, whereas high-pass filters remove unwanted low-frequency content such as offsets and slow fluctuations. The cut-off frequencies are generally chosen depending on the noise observed.
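The effect of a cut-off frequency can be illustrated with an idealized FFT-based filter that zeroes every frequency bin on the unwanted side of the cut-off. This is a simplified sketch, not a model of the analog filters used in the experiment: brick-wall filtering like this can cause ringing on real, non-periodic signals, and in practice a smoother design such as a Butterworth filter is usually preferred. The signal, sampling rate and cut-off below are assumptions chosen for illustration.

```python
import numpy as np

def fft_filter(x, fs, cutoff, kind="low"):
    """Ideal (brick-wall) filter: zero the FFT bins on the wrong side of the cut-off (Hz)."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    if kind == "low":
        spectrum[freqs > cutoff] = 0.0       # block frequencies above the cut-off
    elif kind == "high":
        spectrum[freqs < cutoff] = 0.0       # block frequencies below the cut-off
    else:
        raise ValueError("kind must be 'low' or 'high'")
    return np.fft.irfft(spectrum, n=len(x))

fs = 1000.0                                  # assumed sampling rate in Hz
t = np.arange(1000) / fs
slow = np.sin(2 * np.pi * 10 * t)            # 10 Hz component
fast = 0.5 * np.sin(2 * np.pi * 200 * t)     # 200 Hz component
x = slow + fast

low = fft_filter(x, fs, cutoff=50.0, kind="low")    # keeps the 10 Hz component
high = fft_filter(x, fs, cutoff=50.0, kind="high")  # keeps the 200 Hz component
```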

Signals can also be preprocessed using statistical estimation techniques. Estimation methods such as the Minimum Variance Unbiased Estimator (MVUE), the Cramér–Rao lower bound, Maximum Likelihood Estimation (MLE), Least Squares Estimation (LSE), the Monte-Carlo method, the method of moments and Bayesian estimation, among several others, are discussed in the context of signal processing in [70]. We do not discuss these estimation methods further, as they are beyond the scope of this article.

4.2.3. Feature Extraction

Recorded signal measurements are high-dimensional and consist of readings that cannot be used directly in modeling. This is because the machine operation is non-linear and time-dependent [71]. To interpret the signal readings in the context of the manufacturing process in question, the relevant information must be extracted from the signals as features for the dataset. These features are extracted in the time domain, the frequency domain and the time-frequency domain. Zhang et al. [72] have compiled a list of features that can be extracted in all three domains for machining processes. An overview of these features follows.

Time-Domain Feature Extraction

Several statistical features can be extracted from signals in the time domain, such as the maximum value, mean value, root mean square (RMS), variance and standard deviation. Higher-order statistical features such as skewness and kurtosis are also calculated. These depend on the shape of the amplitude distribution: kurtosis describes the peakedness and tail weight of the distribution, and skewness describes whether it is symmetrical. The peak-to-peak feature is the difference between the extreme values of the amplitude, i.e., between the maximum and minimum values. The crest factor is the ratio of the peak amplitude to the RMS value of the signal. A list of time-domain features and their descriptions is provided in Table 2. We refer readers to [72,73] for in-depth explanations of the features.
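The time-domain statistics described above can be computed with NumPy as in the following sketch. The exact feature list and formulas of Table 2 are not reproduced here, so this set of nine features is an assumption based on the descriptions in the text. Skewness and kurtosis use population moments (kurtosis is not the excess form, so Gaussian data give about 3), and the crest factor uses the peak absolute value divided by the RMS.

```python
import numpy as np

def time_domain_features(x):
    """Nine time-domain statistics of a 1-D signal (assumed feature set)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()                                # population standard deviation
    rms = np.sqrt(np.mean(x ** 2))
    c = x - mean                                 # centred signal for higher moments
    return {
        "max": x.max(),
        "mean": mean,
        "rms": rms,
        "variance": x.var(),
        "std": std,
        "skewness": np.mean(c ** 3) / std ** 3,
        "kurtosis": np.mean(c ** 4) / std ** 4,  # about 3 for Gaussian data
        "peak_to_peak": x.max() - x.min(),
        "crest_factor": np.max(np.abs(x)) / rms,
    }

# Example: five full periods of a unit sine wave sampled 1000 times.
t = np.arange(1000) / 1000.0
features = time_domain_features(np.sin(2 * np.pi * 5 * t))
for name, value in features.items():
    print(f"{name:>13}: {value:.4f}")
```

For a pure sine wave the crest factor is $\sqrt{2}$, so values well above that in a machining signal point to impulsive content such as chipping or impacts.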

Table 2.

Time-domain features for commonly observed sensor signals for machining according to [72].

Frequency-Domain Feature Extraction

Features in the frequency domain are obtained by applying a transform to the signal signatures. The Discrete Fourier Transform (DFT) is a method used in the spectral analysis of signals. The DFT is based on the Fourier Transform; see Equation (5) [74]:

$$X(\omega_k) = \sum_{n=0}^{N-1} x(t_n)\, e^{-j \omega_k t_n}, \quad k = 0, 1, \ldots, N-1 \tag{5}$$

where,

- $x(t_n)$ = input signal amplitude at time $t_n$,

- $t_n = nT$ = $n$-th sampling instant, for integer $n \ge 0$,

- $X(\omega_k)$ = spectrum of $x$ at frequency $\omega_k$,

- $\omega_k = k\Omega$ = $k$-th frequency sample in radians per second, with $\Omega = 2\pi/(NT)$,

- $T$ = sampling interval in seconds,

- $f_s = 1/T$ = sampling rate in samples per second,

- $N$ = total number of samples in the signal.

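The Discrete Time Fourier Transform (DTFT) is the limiting form of the DFT as the number of samples $N$ approaches infinity; see Equation (6):

$$X(\omega) = \sum_{n=-\infty}^{\infty} x(n)\, e^{-j \omega n} \tag{6}$$

where,

- $x(n)$ = signal amplitude at sample $n$,

- $X(\omega)$ = DTFT of $x$ at normalized radian frequency $\omega$.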

The Fast Fourier Transform (FFT) is an efficient algorithm for computing the DFT: it produces the same values while reducing the computational cost from $O(N^2)$ to $O(N \log N)$, which is why it is the method most used in practice to convert sampled signal measurements from the time domain to the frequency domain. The number of samples $N$ is an important parameter at this stage. It is used in calculating the power spectral density of the signals with a periodogram [75], which is simply the squared magnitude of the DFT divided by the number of samples; see Equation (7):

$$P_x(\omega_k) = \frac{1}{N} \left| X(\omega_k) \right|^2, \quad k = 0, 1, \ldots, N-1 \tag{7}$$
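The following sketch checks numerically that a direct evaluation of Equation (5) and NumPy's FFT give the same spectrum, and then computes the periodogram of Equation (7) for a synthetic 50 Hz sine wave. With $\omega_k = 2\pi k/(NT)$ and $t_n = nT$, the exponent becomes $2\pi k n / N$, which is the form implemented here; the sampling rate and test signal are assumptions for illustration.

```python
import numpy as np

def dft(x):
    """Direct O(N^2) evaluation of Equation (5), using omega_k * t_n = 2*pi*k*n/N."""
    x = np.asarray(x, dtype=float)
    N = len(x)
    n = np.arange(N)
    k = n.reshape(-1, 1)                       # column of frequency indices
    return np.exp(-2j * np.pi * k * n / N) @ x

def periodogram(x):
    """Equation (7): squared magnitude of the DFT divided by the number of samples."""
    X = np.fft.fft(x)                          # O(N log N), same values as dft(x)
    return np.abs(X) ** 2 / len(x)

fs = 1000.0                                    # assumed sampling rate in Hz
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 50 * t)

assert np.allclose(dft(x), np.fft.fft(x))      # direct DFT and FFT agree
P = periodogram(x)
freqs = np.fft.fftfreq(len(x), d=1.0 / fs)
peak_hz = freqs[np.argmax(P[: len(x) // 2])]   # search positive frequencies only
print(f"Dominant frequency: {peak_hz:.1f} Hz")
```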

For a more comprehensive treatment of Fourier transforms, the DFT, the DTFT and periodograms, refer to Smith [74,75]. Features such as the maximum, sum, mean, variance, skewness, kurtosis and relative spectral peaks of the power spectra are commonly extracted from signals produced by machining operations. The list of frequency-domain features is shown in Table 3. Zhang et al. [72] and Caesarendra et al. [73] provide more details on these frequency-domain features.
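As an illustration, the seven statistics listed above can be computed from the periodogram within one frequency band. This is a hedged sketch: the band limits are hypothetical, and "relative spectral peak" is assumed here to mean the largest band power divided by the mean band power, which may differ from the definition used in Table 3 and [72].

```python
import numpy as np

def band_power_features(x, fs, band):
    """Seven statistics of the periodogram restricted to band = (f_low, f_high) in Hz.
    The relative spectral peak is ASSUMED to be max / mean within the band."""
    x = np.asarray(x, dtype=float)
    P = np.abs(np.fft.rfft(x)) ** 2 / len(x)       # Equation (7) at non-negative frequencies
    f = np.fft.rfftfreq(len(x), d=1.0 / fs)
    p = P[(f >= band[0]) & (f < band[1])]           # band powers inside the chosen band
    mu, sd = p.mean(), p.std()
    c = p - mu
    return {
        "max": p.max(),
        "sum": p.sum(),
        "mean": mu,
        "variance": p.var(),
        "skewness": np.mean(c ** 3) / sd ** 3,
        "kurtosis": np.mean(c ** 4) / sd ** 4,
        "relative_spectral_peak": p.max() / mu,
    }

fs = 1000.0                                          # assumed sampling rate in Hz
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 50 * t)
feats = band_power_features(x, fs, band=(0.0, 100.0))   # hypothetical band
for name, value in feats.items():
    print(f"{name}: {value:.3g}")
```

Skewness and kurtosis are undefined for a band whose power is perfectly flat (zero standard deviation), so a real pipeline would need to handle that case explicitly.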

Table 3.

Frequency-domain features for commonly observed sensor signals for machining according to [72].

Time-Frequency Domain Features

Like frequency-domain features, time-frequency features are extracted by applying a transform to the signal, but here the transform localizes signal content in both time and frequency. This two-dimensional view of the signal can, in some cases, yield features that are not captured by time-domain or frequency-domain features alone. Methods such as the Short-Time Fourier Transform (STFT), Continuous Wavelet Transform (CWT), Wavelet Packet Transform (WPT) and Hilbert-Huang Transform (HHT) are used to extract features in the time-frequency domain [72,76]. Although much research has been conducted in this field, an elaboration of these methods is beyond the scope of this paper.
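A Short-Time Fourier Transform can be sketched by splitting the signal into overlapping windowed frames and taking the FFT of each frame. In the example below, which assumes a synthetic signal whose frequency switches from 50 Hz to 200 Hz halfway through, the dominant frequency of early and late frames reveals when the change happens, something a single spectrum of the whole signal cannot show. The Hann window, frame length and hop size are illustrative choices, not values from the study.

```python
import numpy as np

def stft_magnitude(x, fs, win_len=256, hop=128):
    """Magnitude STFT with a Hann window. Returns frame centre times (s),
    frequencies (Hz) and |STFT| with shape (n_frames, win_len // 2 + 1)."""
    x = np.asarray(x, dtype=float)
    window = np.hanning(win_len)
    starts = np.arange(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    mag = np.abs(np.fft.rfft(frames, axis=1))
    times = (starts + win_len / 2) / fs
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    return times, freqs, mag

fs = 1000.0                                   # assumed sampling rate in Hz
t = np.arange(2000) / fs
x = np.where(t < 1.0, np.sin(2 * np.pi * 50 * t), np.sin(2 * np.pi * 200 * t))

times, freqs, mag = stft_magnitude(x, fs)
dominant = freqs[np.argmax(mag, axis=1)]      # strongest frequency in each frame
print(f"first frame: {dominant[0]:.1f} Hz, last frame: {dominant[-1]:.1f} Hz")
```

The frame length trades time resolution against frequency resolution: 256 samples at 1 kHz gives bins about 3.9 Hz apart.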

4.2.4. Feature Evaluation and Selection

Feature extraction, or feature generation, from signals results in a high-dimensional space with many features, often more features than recorded instances. This is troublesome because modeling techniques may then overfit the data and produce misleading results. High-dimensional feature spaces invite overfitting because they contain redundant features, and not all features are related to the response (dependent) variable. One solution is to reduce the number of features used in modeling. Feature evaluation and selection methods identify the subset of features that best predicts the response variable. They fall into three main categories: filter methods, wrapper methods and embedded methods.

Filter methods score each feature using a statistic computed over the entire dataset, independently of any model, and select the top-scoring features. The most commonly used filter method is correlation analysis. There are different ways to compute the correlation coefficient; Pearson's, Kendall's and Spearman's coefficients are the most common. In correlation analysis, pairwise correlation coefficients are calculated between the variables and compared, with the aim of eliminating one feature from each highly correlated pair, since both features of such a pair carry much the same information. A concern with this approach is that not all features in the dataset are related to the dependent variable, so a useful feature may be dropped in favor of a less useful one. To circumvent this issue, the correlation of every feature with the response can be calculated and used to decide which feature of a pair to eliminate, namely the one more weakly related to the response. Correlation analysis is effective for feature selection. Cross-validation, in which the data are divided into subsets to validate an analysis or model, is sometimes combined with correlation for feature selection. Other criteria, such as Random Forest feature importance, mutual information (an entropy-based measure of dependence) and analysis of variance (ANOVA), are compared in [77].
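The sketch below contrasts Pearson's and Spearman's coefficients and ranks features by the absolute value of their correlation with a response, which is the basic filter step described above. It is a minimal NumPy illustration with hypothetical function names; Spearman's coefficient is computed as Pearson's coefficient on the ranks, with tied values given their average rank.

```python
import numpy as np

def pearson(a, b):
    """Pearson's linear correlation coefficient."""
    return np.corrcoef(np.asarray(a, float), np.asarray(b, float))[0, 1]

def average_ranks(a):
    """Ranks starting at 1; tied values share the mean of their positions."""
    a = np.asarray(a, float)
    r = np.empty(len(a))
    r[np.argsort(a, kind="mergesort")] = np.arange(1, len(a) + 1)
    for v in np.unique(a):
        tied = a == v
        r[tied] = r[tied].mean()
    return r

def spearman(a, b):
    """Spearman's rank correlation: Pearson's coefficient applied to the ranks."""
    return pearson(average_ranks(a), average_ranks(b))

def rank_features(X, y, names, method=pearson):
    """Sort features by |correlation with the response|, strongest first."""
    scores = {name: abs(method(X[:, j], y)) for j, name in enumerate(names)}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# A monotonic but non-linear relationship: Spearman is 1, Pearson is lower.
x = np.arange(1, 11, dtype=float)
print(f"Pearson: {pearson(x, x ** 3):.3f}, Spearman: {spearman(x, x ** 3):.3f}")
```

Spearman's coefficient is the better choice when a feature's relationship with the response is monotonic but not linear, as is common for wear-related signal features.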

Wrapper methods evaluate subsets of the features by training a model on each subset and using its performance to judge which features matter. These methods generally use search algorithms to explore the feature space. Wrapper methods can be broadly classified into two categories: heuristic search methods and sequential search algorithms [78]. Heuristic methods include the Genetic Algorithm (GA), Variable Neighborhood Search (VNS), Simulated Annealing (SA) and Particle Swarm Optimization (PSO). Sequential search algorithms include Forward Selection, which starts with an empty feature set and adds features one at a time, evaluating each addition with the modeling technique. Backward Selection starts with the entire feature set and eliminates features one at a time depending on model performance. An exhaustive search evaluates every possible subset of features against model performance and chooses the best one.
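Forward Selection can be sketched as a greedy loop around a model. The example below assumes ordinary least-squares regression as the model and mean squared error on a single held-out split as the evaluation criterion; both are illustrative choices (cross-validation would be more robust), and the synthetic data are hypothetical.

```python
import numpy as np

def validation_mse(X_tr, y_tr, X_va, y_va):
    """Fit least squares with an intercept on the training split; return validation MSE."""
    A_tr = np.column_stack([np.ones(len(X_tr)), X_tr])
    A_va = np.column_stack([np.ones(len(X_va)), X_va])
    coef, *_ = np.linalg.lstsq(A_tr, y_tr, rcond=None)
    return np.mean((A_va @ coef - y_va) ** 2)

def forward_selection(X, y, n_train):
    """Start from an empty set; repeatedly add the feature that lowers validation
    MSE the most, stopping when no remaining feature improves it."""
    X_tr, X_va, y_tr, y_va = X[:n_train], X[n_train:], y[:n_train], y[n_train:]
    best = np.mean((y_va - y_tr.mean()) ** 2)     # intercept-only baseline
    selected, remaining = [], list(range(X.shape[1]))
    while remaining:
        scores = {j: validation_mse(X_tr[:, selected + [j]], y_tr,
                                    X_va[:, selected + [j]], y_va) for j in remaining}
        j_best = min(scores, key=scores.get)
        if scores[j_best] >= best:                # no candidate improves the model
            break
        best = scores[j_best]
        selected.append(j_best)
        remaining.remove(j_best)
    return selected, best

# Hypothetical data: only features 0 and 3 influence the response.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + rng.normal(scale=0.1, size=200)
selected, mse = forward_selection(X, y, n_train=150)
print("selected feature indices:", selected)
```

Backward Selection is the mirror image: start from all features and repeatedly drop the one whose removal hurts validation error the least.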

Embedded feature selection methods perform selection as part of training the model itself. Regularization is an important example, in which a penalty on the model coefficients is added to the training objective as a constraint. The penalty shrinks the coefficients of features, thereby reducing each feature's influence on the model; an L1 penalty (LASSO) can shrink coefficients exactly to zero and so removes features outright, whereas an L2 penalty (Ridge) shrinks them without eliminating them. Popular methods include LASSO, Ridge and elastic nets in regression, and the feature importances produced by tree-based methods such as Decision Trees, Random Forest and XGBoost.
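The selecting effect of an L1 penalty can be seen in a small LASSO solver based on cyclic coordinate descent with soft-thresholding, a standard way to fit the LASSO. This is a from-scratch sketch for illustration, not a production implementation; it assumes the objective $(1/2n)\lVert y - Xb \rVert^2 + \lambda \lVert b \rVert_1$, centres the data instead of fitting an intercept, and uses a hypothetical synthetic dataset in which only two of six features matter.

```python
import numpy as np

def soft_threshold(z, lam):
    """Shrink z toward zero by lam; values within [-lam, lam] become exactly zero."""
    return np.sign(z) * max(abs(z) - lam, 0.0)

def lasso_cd(X, y, lam, n_iter=200):
    """LASSO by cyclic coordinate descent, minimising
    (1 / (2n)) * ||y - X b||^2 + lam * ||b||_1.
    X and y are centred first, so no intercept is fitted. Constant columns are not supported."""
    X = np.asarray(X, float)
    y = np.asarray(y, float)
    X = X - X.mean(axis=0)
    y = y - y.mean()
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    r = y.copy()                          # residual y - X b, with b = 0 initially
    for _ in range(n_iter):
        for j in range(p):
            r += X[:, j] * b[j]           # partial residual excluding feature j
            rho = X[:, j] @ r / n
            b[j] = soft_threshold(rho, lam) / col_sq[j]
            r -= X[:, j] * b[j]           # restore the full residual
    return b

rng = np.random.default_rng(7)
X = rng.normal(size=(200, 6))
y = 4.0 * X[:, 0] - 3.0 * X[:, 2] + rng.normal(scale=0.5, size=200)
b = lasso_cd(X, y, lam=0.5)
print(np.round(b, 3))
```

With a sufficiently large penalty the coefficients of the four irrelevant features are set exactly to zero, while the two relevant ones are shrunk toward zero by roughly the penalty value; replacing the L1 term with an L2 term would shrink all six without zeroing any.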

4.3. Phase 3: SPHM Modeling and Evaluation

As mentioned previously, this paper proposes the SPHM framework and describes its first two phases in detail. In this section, we provide a brief overview of the methods that can be used for modeling, evaluation and deployment in the third phase.

The selection of modeling methods depends on the manufacturing operation being considered. The most popular modeling approaches of the last decade have been data-driven, with some novel hybrid models developed as well. Supervised learning, unsupervised learning and DL methods have come to the forefront in CBM and RUL prediction. As presented in Section 2.5, there are many options to choose from for PHM modeling. These include not only regression models for prediction but also classification models for failure detection, which determine whether or not a component will fail.

Evaluation metrics can be chosen based on the type of data-driven or hybrid model. Metrics such as RMSE, Mean Absolute Error (MAE), the coefficient of determination (R-squared), adjusted R-squared and Mallows's Cp are used to evaluate and assess regression models. Classification models are generally evaluated using a confusion matrix, which records the True Positive, True Negative, False Positive and False Negative classifications. From the confusion matrix, metrics such as accuracy, precision, sensitivity, specificity and F1 score are calculated and used to evaluate a classifier. Deep Learning models are evaluated with the same metrics, chosen according to whether the task is regression or classification.
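The regression and classification metrics named above can be computed directly from their definitions, as in this sketch. The function names are hypothetical; adjusted R-squared uses the number of predictors $p$ in $1 - (1 - R^2)(n - 1)/(n - p - 1)$, and the classification metrics assume binary labels with 1 denoting failure. No guard is included for zero denominators, which occur, for example, when a classifier never predicts a failure.

```python
import numpy as np

def regression_metrics(y_true, y_pred, n_predictors):
    """RMSE, MAE, R-squared and adjusted R-squared."""
    y_true = np.asarray(y_true, float)
    y_pred = np.asarray(y_pred, float)
    resid = y_true - y_pred
    n = len(y_true)
    r2 = 1 - np.sum(resid ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    return {
        "RMSE": np.sqrt(np.mean(resid ** 2)),
        "MAE": np.mean(np.abs(resid)),
        "R2": r2,
        "adj_R2": 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1),
    }

def classification_metrics(y_true, y_pred):
    """Binary metrics from the confusion matrix (1 = failure, 0 = healthy)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)              # also called recall
    return {
        "confusion": {"TP": tp, "TN": tn, "FP": fp, "FN": fn},
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "F1": 2 * precision * sensitivity / (precision + sensitivity),
    }

reg = regression_metrics([1, 2, 3, 4], [1, 2, 3, 5], n_predictors=1)
clf = classification_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
print(reg)
print(clf)
```

In a PHM setting, sensitivity (the share of actual failures that are detected) is often weighted more heavily than accuracy, because a missed failure is usually costlier than a false alarm.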

Deploying SPHM models in the field requires the development of applications, either web-based or mobile, so that engineers and technicians can analyze and assess the current state of operations based on real-time data. Digital-Twin-driven modeling uses a digital replica of the shopfloor setup that is synchronized in real time. We consider the development and deployment of such applications a separate research area and propose it as future work.

5. Case Study: Milling Machine Operation

Milling is one of the fundamental operations in manufacturing engineering and is essential in most, if not all, shopfloors. It is an ideal starting point for applying the SPHM framework in manufacturing and shows how even a simple operation can generate complex data. A typical milling machine setup comprises the following components: a spindle, a cutting tool, a base, a workpiece, X-axis and Y-axis traversing mechanisms, and a table on which the workpieces are mounted. The cutting tool removes material from the workpiece as the workpiece is moved along the different axes by the motion of the machine table. Older milling machines are operated manually, with the operator using the mechanisms to move the cutting tool relative to the workpiece, whereas newer machines with Computer Numerical Control (CNC) controllers are equipped with a wide range of sensors and automated tool-changing mechanisms depending on the specifics of the operation. The experimental data considered in this paper were generated at the University of California, Berkeley [79,80].

In this use-case, we apply the first two phases of the proposed SPHM framework: we describe the setup, clean and preprocess the data, preprocess the signals, extract relevant features and select the final set of features.

5.1. Phase 1: Milling Machine Setup and Data Acquisition

5.1.1. Milling Machine and Sensor Setup

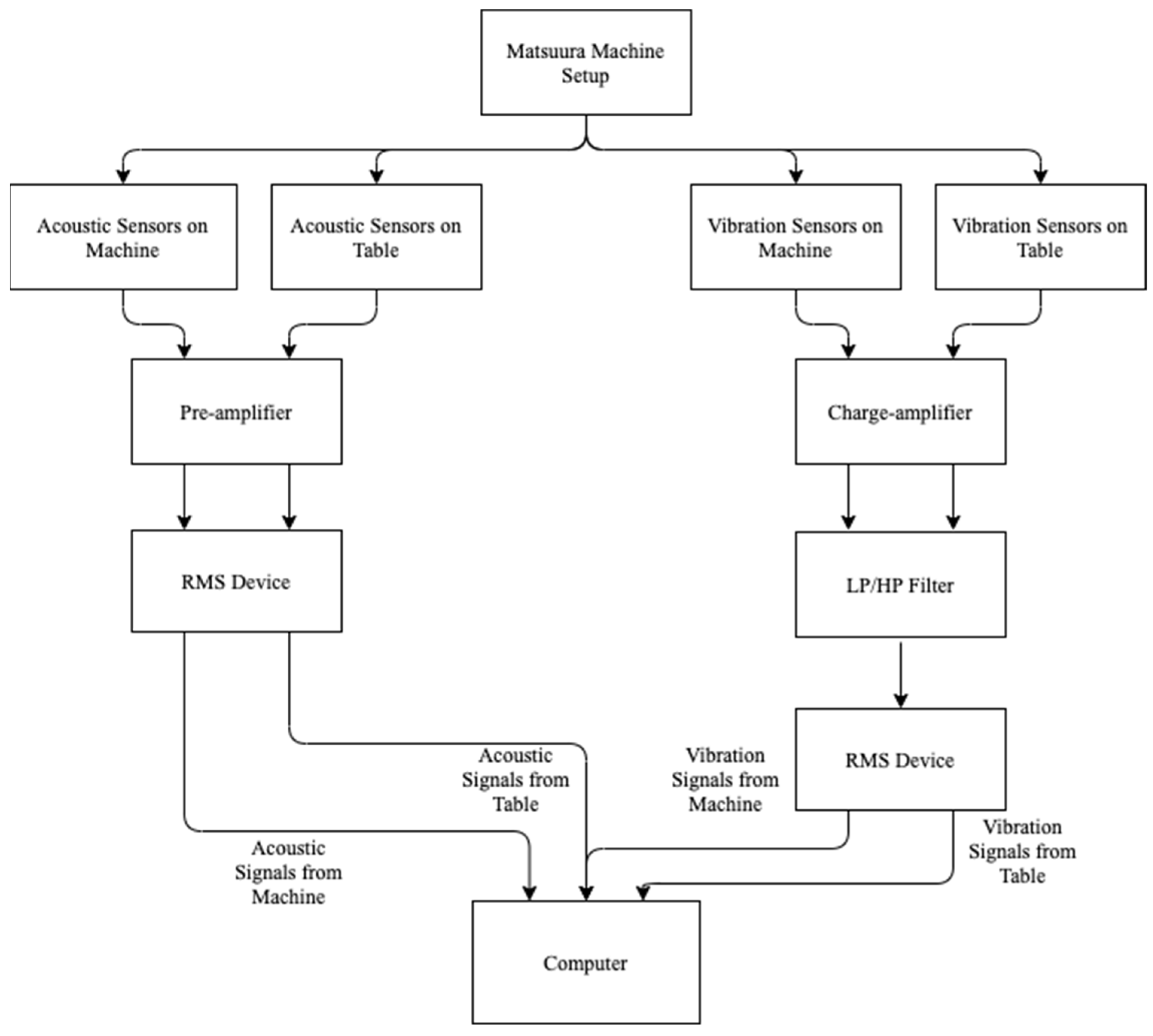

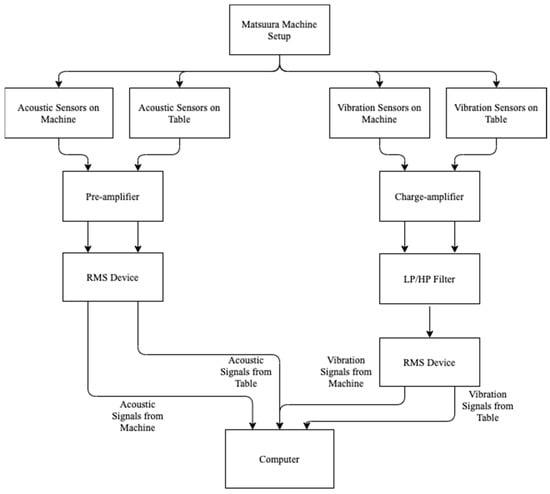

The setup consists of a Matsuura MC-510V machine mounted on a table. There are three sets of sensors: acoustic emission sensors, vibration sensors and current sensors.

The acoustic emission sensor is the WD 925 model by the Physical Acoustic Group, with a frequency range of up to 2 MHz, and is secured to a clamping support. The acoustic emission signals pass through a model 1801 preamplifier built by Dunegan/Endevco, which has a built-in 50 kHz high-pass filter. The signals are amplified further by a DE model 203 A (Dunegan/Endevco) and then pass through a custom-made RMS meter with its time constant set to 8.0 ms. Finally, they are fed through a UMK-SE 11,25 cable made by Phoenix Contact, which is linked to an MIO-16 board made by National Instruments for high-speed data acquisition. A second acoustic emission sensor is mounted on the spindle, and its signals follow the same path through the preamplifier, filter, amplifier and RMS meter to the data acquisition board.

The vibration sensor is an Endevco Model 7201-50 accelerometer with a frequency range of up to 13 kHz. Vibration signals pass through an Endevco model 104 charge amplifier and then through an Itthaco 4302 Dual 24 dB/octave filter (low-pass and high-pass). These signals then pass through a custom-made RMS meter and reach the MIO-16 board via a UMK-SE 11,25 cable. Vibration sensors are mounted on both the table and the milling machine's spindle.

An OMRON K3TB-A1015 current converter feeds signals from one phase of the spindle motor current to the high-speed data acquisition board. Another current sensor, model CTA 213, built by the Flexcore Division of Marlan and Associates, Inc., also feeds signals into the data acquisition board. See Figure 3 for the experimental setup showing the connections of the acoustic and vibration sensors.

Figure 3.

Milling operation setup, adapted from [79].

The experimental parameters were chosen based on manufacturers' standards and industry specifications. Two types of inserts were selected for the cutting tool, KC710 and K420; both are wear-resistant and can function in environments that involve high friction. The workpiece materials were stainless-steel J45 and cast iron. The cutting speed was set to 200 m/min, the Depth of Cut (DOC) to two settings of 1.5 and 0.5 mm, and the feed to two settings of 413 and 206.5 mm/min. Combining the two DOC settings, two feed settings and two materials gives 2 × 2 × 2 = 8 different settings under which the milling machine could operate.
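The count of eight settings follows from a full factorial design over the three two-level factors given above, which can be enumerated as below. The values are taken from the text; the variable names are illustrative, and the cutting speed is omitted because it is fixed at 200 m/min.

```python
from itertools import product

doc_mm = [1.5, 0.5]                       # Depth of Cut settings (mm)
feed_mm_per_min = [413.0, 206.5]          # feed settings (mm/min)
materials = ["cast iron", "stainless-steel J45"]

# Every combination of one DOC, one feed and one material.
settings = list(product(doc_mm, feed_mm_per_min, materials))
for doc, feed, material in settings:
    print(f"DOC {doc} mm, feed {feed} mm/min, {material}")
print(len(settings), "settings")
```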

The experimental data from [79,80] consist of 16 cases with varying DOC, feed and material. Each case was used as an experimental condition and run multiple times. The number of runs per case was determined by evaluating the flank wear on the cutting face of the tool, which was measured at intermittent, non-uniform intervals.

5.1.2. Data Collection and Understanding

The data were recorded as a ‘struct array’ using MATLAB [81]. The dataset consists of 13 features, 6 of which are derived from sensor readings. The description of the dataset can be found in Table 4. There are 16 cases, with the DOC, feed and material kept constant within each case. Each case consists of a varying number of runs that depends on VB, the degree of flank wear. VB measurements were recorded at irregular intervals until significant wear was observed. A closer look at the MATLAB file shows that each value of the sensor features (smcAC, smcDC, vib_table, vib_spindle, AE_table and AE_spindle) is a 9000 × 1 vector, because each amplified and filtered sensor signal is stored for every run as a sequence of 9000 samples, resulting in high-dimensional measurements. The first few rows of the dataset are shown in Table A1 of Appendix A, illustrating the high-dimensional values of the sensor readings. These features need to be preprocessed and transformed before they can be analyzed thoroughly. We also note some missing values and possibly some outliers, which can be troublesome in a data-driven approach. The dataset therefore requires preparation and preprocessing before it is suitable for modeling.

Table 4.

Features of the milling dataset and their description.

5.2. Phase 2: Data Preparation and Analysis

5.2.1. Data Cleaning and Preprocessing

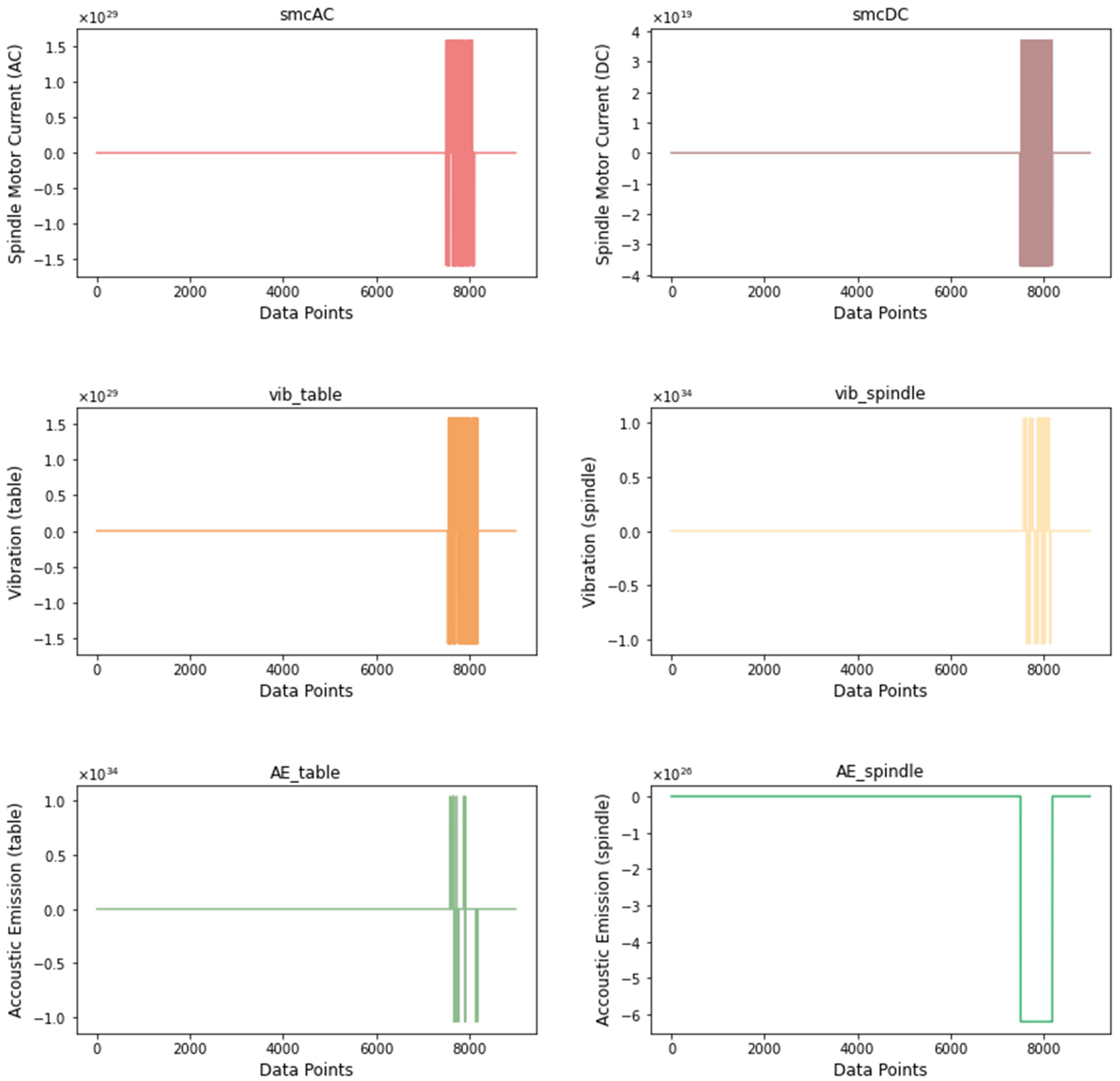

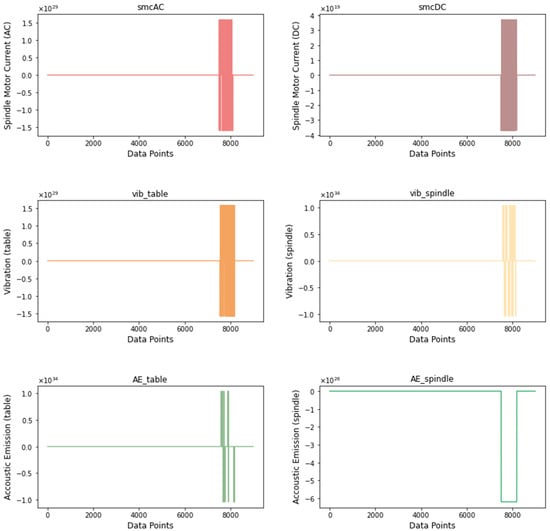

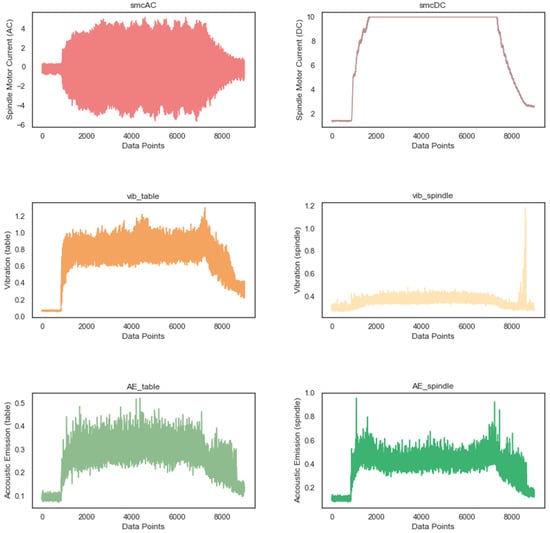

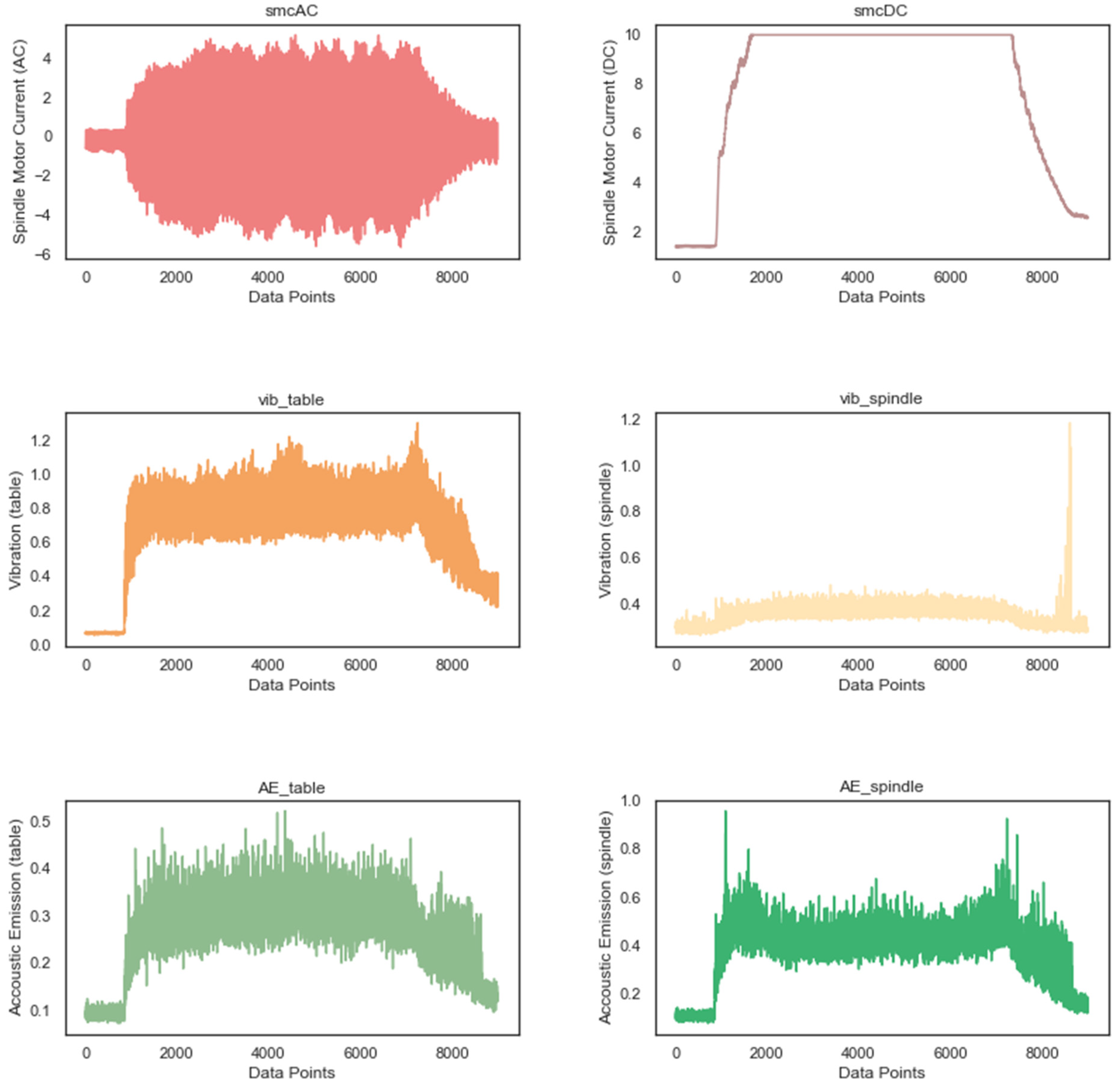

VB is the most important feature in this dataset, since RUL assessment and condition monitoring are performed based on VB values. On closer inspection, the VB column contains missing values, because VB measurements were taken at irregular intervals until the degradation limit was reached. We identified 21 instances with missing VB values. Since VB is crucial to any analysis we wish to conduct, the appropriate strategy here is to delete those instances. After removing them, the dataset is reduced to 146 instances.

The next step is to identify outliers in the data. Figure 4 shows the signal signatures from the six sensors for run 1 of case 2. Compared with signals from other instances (see Figure A1 in Appendix A), those from run 1 of case 2 are of a much higher magnitude. The sensor measurements for this run peak at the following magnitudes: smcAC at $10^{29}$, smcDC at $10^{19}$, vib_table at $10^{29}$, vib_spindle at $10^{34}$, AE_table at $10^{34}$ and AE_spindle at $10^{26}$. The rectangular-shaped peaks observed for this run cannot be attributed to any filtering or operation in the experiment. After comparing this anomalous instance against the others, we conclude that it is an outlier most likely caused by measurement error. All instances in the dataset were scanned for similar abnormalities, and only this one outlier was found. We discard it, leaving a final dataset of 145 instances.

Before moving on to the next step, note that the data have not yet been scaled or normalized, because scaling/normalization is performed after all the features have been generated.
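The cleaning steps described above can be sketched as follows. Missing VB values are dropped directly, as in the study; for outliers, however, the study compared instances visually, whereas this sketch substitutes a hypothetical automated heuristic that flags a run when any sensor's peak magnitude exceeds a chosen factor (here 100) times that sensor's median peak across runs. The array shapes and synthetic data are assumptions; the real dataset has 9000 samples per signal.

```python
import numpy as np

def drop_missing_vb(vb, signals):
    """Keep only runs whose flank-wear (VB) measurement is present."""
    keep = ~np.isnan(vb)
    return vb[keep], signals[keep]

def flag_magnitude_outliers(signals, factor=100.0):
    """Flag runs in which any sensor's peak |value| exceeds `factor` times that
    sensor's median peak across runs. signals: (n_runs, n_sensors, n_samples)."""
    peaks = np.abs(signals).max(axis=2)          # peak magnitude per run and sensor
    typical = np.median(peaks, axis=0)           # typical peak of each sensor
    return (peaks > factor * typical).any(axis=1)

# Hypothetical data: 5 runs, 6 sensors, 100 samples per signal.
rng = np.random.default_rng(1)
signals = rng.uniform(-5.0, 5.0, size=(5, 6, 100))
signals[3, :, 40:60] = 1e29                      # rectangular spike, like the case 2, run 1 outlier
vb = np.array([0.0, np.nan, 0.11, 0.2, np.nan])

vb_clean, sig_clean = drop_missing_vb(vb, signals)
outliers = flag_magnitude_outliers(sig_clean)
vb_final, sig_final = vb_clean[~outliers], sig_clean[~outliers]
print(len(vb), "->", len(vb_clean), "->", len(vb_final))
```

An automated flag like this only shortlists candidates; as in the study, each flagged run should still be inspected before it is discarded, since a genuine event such as tool breakage can also produce extreme values.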

Figure 4.

Signatures from the six sensors showing an outlier for case 2, run 1.

5.2.2. Signal Preprocessing

The signals from the sensors were preprocessed during the experimental setup by Goebel et al. [79,80]. The signals from the acoustic emission and vibration sensors were amplified to the range of ±5 V, according to the equipment threshold. The vib_table and vib_spindle signals were routed through low-pass and high-pass filters, attenuating frequencies outside the cut-offs. The acoustic signals were fed through a high-pass filter to remove unwanted frequencies. The cut-off frequencies were identified from graphical displays on an oscilloscope, with cut-offs of 400 Hz and 1 kHz set for the low-pass and high-pass filters, respectively. An equipment threshold of 8 kHz was set for the acoustic emission sensor, meaning that any frequency above it was assumed not to originate from the machining operation and was filtered out. The RMS meters additionally smoothed the signals.
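The smoothing performed by an RMS meter with a time constant, such as the 8.0 ms meters in the setup, can be approximated in software by an exponentially weighted mean square followed by a square root. This is a hedged sketch: the circuitry of the custom-made meters is not documented here, and the 100 kHz sampling rate and test signal are assumptions.

```python
import numpy as np

def rms_meter(x, fs, tau=8.0e-3):
    """Running RMS with time constant tau (s): an exponentially weighted mean of x**2,
    m[n] = m[n-1] + alpha * (x[n]**2 - m[n-1]), followed by a square root."""
    x = np.asarray(x, dtype=float)
    alpha = 1.0 - np.exp(-1.0 / (tau * fs))      # per-sample smoothing factor
    m = np.empty_like(x)
    acc = 0.0
    for n, v in enumerate(x):
        acc += alpha * (v * v - acc)
        m[n] = acc
    return np.sqrt(m)

fs = 100_000.0                                   # assumed sampling rate in Hz
t = np.arange(10_000) / fs                       # 0.1 s, i.e. 12.5 time constants
x = 2.0 * np.sin(2 * np.pi * 1000 * t)           # 1 kHz tone with amplitude 2
y = rms_meter(x, fs)
print(f"settled RMS: {y[-1]:.3f} (ideal {2 / np.sqrt(2):.3f})")
```

A longer time constant gives a smoother output with less ripple but responds more slowly to genuine changes, such as the onset of tool wear.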

5.2.3. Feature Extraction

In this step, feature extraction methods were applied to generate features in the time and frequency domains. The methods applied have been shown to be suitable for machining operations. Time-domain features were extracted using the feature set prescribed in Table 2, generating 54 features, i.e., 9 new features for each of the 6 signals. Frequency-domain features were generated using the feature set prescribed in Table 3, generating an additional 42 features, i.e., 7 new features for each of the 6 signals. In total, 96 features were generated, a high-dimensional feature set relative to the 145 available instances.

Once the features are extracted, we note that some of the new features are on very different scales, which could skew the modeling. Features based on the Kurtosis of Band Power and the Relative Spectral Peak per Band take values significantly higher than those of features based on the Mean of Band Power and the Variance of Band Power. We therefore apply min–max normalization, shown in Equation (1), which maps every feature to the range [0, 1].

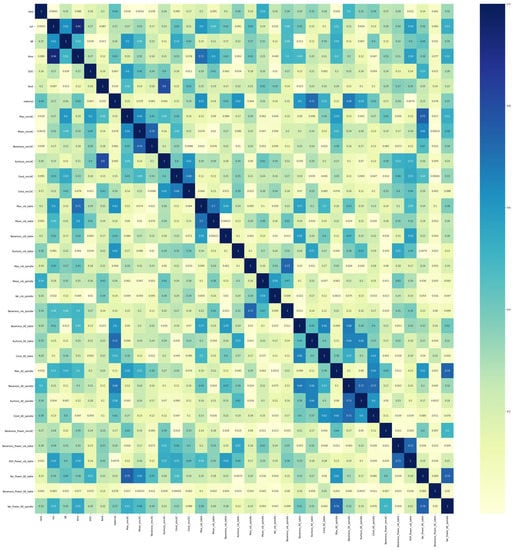

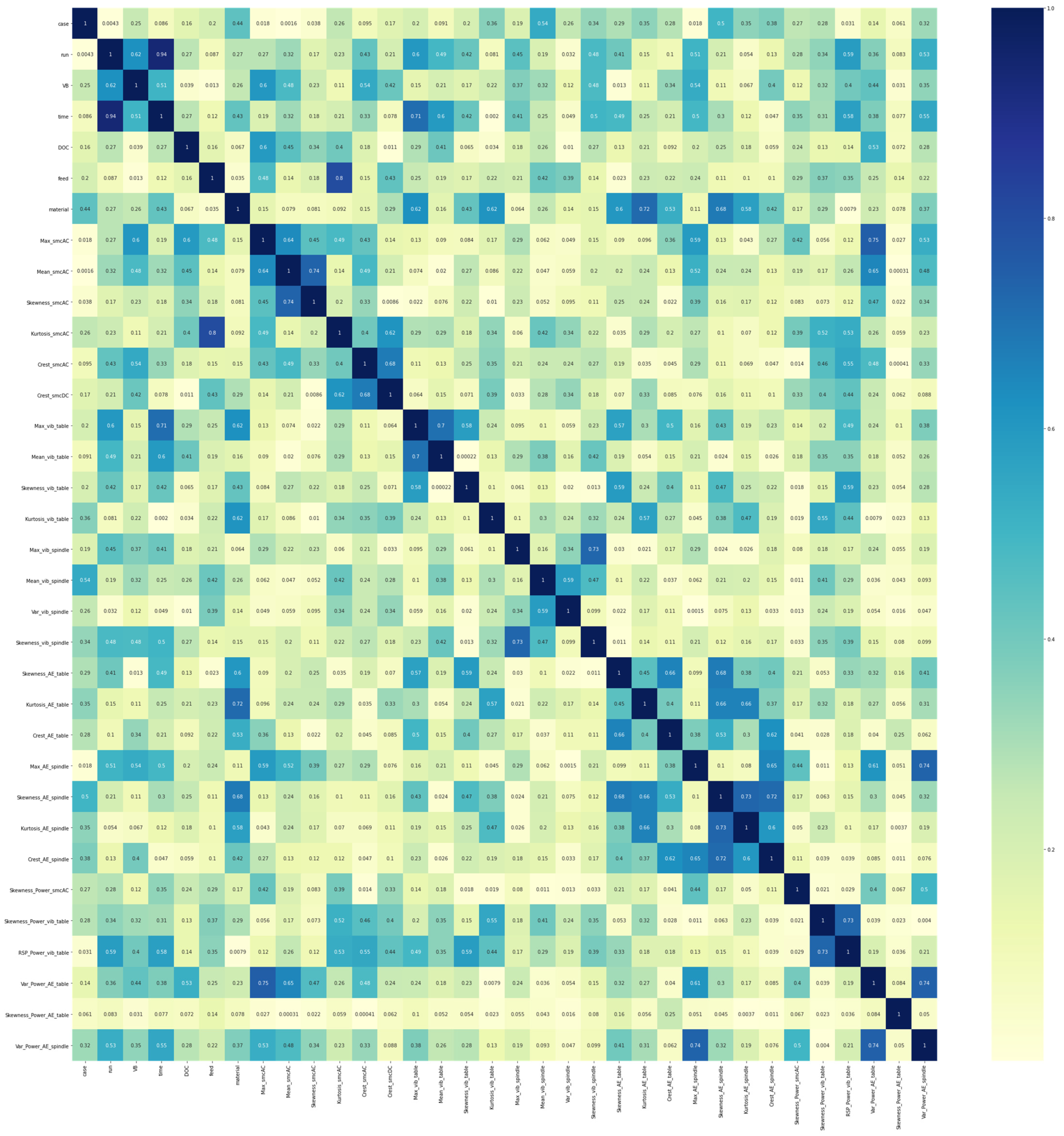

5.2.4. Feature Evaluation and Selection

After feature extraction, the feature set consists of 103 features. Seven of these are parameters of the experiment: case, run, VB, time, DOC, feed and material. The other 96 were extracted as described in the previous step. The data in their current state therefore consist of 145 instances and 103 features. To mitigate the curse of dimensionality in the modeling phase, the features most important for predicting VB and the RUL need to be identified. As a first step, we perform a univariate analysis by calculating the correlation coefficient between each of the 96 newly generated features and the response variable, VB. The 7 experimental parameters are excluded from this screening because they are always retained in the analysis. The correlation coefficients are ranked in descending order, showing which of the newly generated features are related to the response. Next, we calculate the correlation matrix of all 96 features and use the univariate ranking with VB to choose among them. Feature pairs with a Pearson correlation coefficient of 0.75 or more are considered for elimination: of each such pair, the feature with the higher correlation coefficient with VB is retained, and the other is dropped. This method identifies the most important features without requiring the number of features to be specified in advance, which is a research problem in its own right. The final dataset consists of 34 features: 27 extracted from the input signals, 6 experimental parameters and 1 response variable. The correlation matrix for the final set of features is shown in Figure A2 of Appendix A. As the figure shows, features with a pairwise correlation coefficient of 0.75 or more are noted, their ranked correlation coefficients with VB are compared, and features are removed accordingly.
This is a simple yet effective strategy for eliminating redundant features.
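The pairwise elimination procedure can be sketched as a greedy pass over the features ranked by their correlation with VB: a feature is kept only if its correlation with every feature already kept is below the 0.75 threshold. This is one reading of the procedure, not the authors' code. It assumes absolute Pearson coefficients, and when several correlated pairs overlap, processing features in rank order may retain a slightly different set than resolving each pair independently. The feature names and data below are hypothetical.

```python
import numpy as np

def correlation_filter(X, y, names, threshold=0.75):
    """Rank features by |r| with the response, then keep a feature only if its
    |r| with every already-kept feature is below the threshold."""
    X = np.asarray(X, float)
    y = np.asarray(y, float)
    p = X.shape[1]
    r_y = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(p)])
    R = np.abs(np.corrcoef(X, rowvar=False))      # p x p feature correlation matrix
    kept = []
    for j in np.argsort(-r_y):                    # strongest relationship with VB first
        if all(R[j, k] < threshold for k in kept):
            kept.append(j)
    return [names[j] for j in kept]

rng = np.random.default_rng(3)
x1 = rng.normal(size=100)
x2 = x1 + rng.normal(scale=0.05, size=100)        # near-duplicate of x1
x3 = rng.normal(size=100)                         # unrelated to x1
vb = x1.copy()                                    # hypothetical response driven by x1
X = np.column_stack([x1, x2, x3])
print(correlation_filter(X, vb, ["x1", "x2", "x3"]))
```

Here x2 is dropped because it is almost a copy of x1, which is more strongly related to the response, while x3 survives because it is not correlated with x1. Applied to the milling data, the same pass would run over the 96 extracted features with VB as the response.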

6. Results

Applying Phases 1 and 2 of the proposed SPHM framework to the milling machine operation yields a thoroughly prepared dataset for ML and DL purposes. The milling machine experimental setup is reviewed, and the operating parameters are noted. Details of the data acquisition are listed, along with a data dictionary of the attributes and their descriptions. The final dataset is cleaned of missing values and outliers. The signals are preprocessed, and suitable features are extracted based on prior knowledge and proven extraction methods. The final set of features is selected by comparing pairwise correlation coefficients and ranking the correlation coefficients with the response variable, VB. The link between the steps in the first two phases of SPHM and their application to the milling machine case is shown in Table 5. Thus far, the SPHM framework has proven to be an effective methodology in this case for setting up the experiment, acquiring data, preparing and preprocessing the data and signals, extracting features, and evaluating and selecting features.

Table 5.

SPHM Phases implemented on milling data.

7. Conclusions and Future Work

This paper provided an in-depth overview of PHM and maintenance approaches in manufacturing. The different approaches and challenges in PHM were outlined, and current trends in PHM research were reviewed. A unique SPHM framework that is interoperable across all areas of manufacturing was proposed and described in three phases: Phase 1, Setup and Data Acquisition; Phase 2, Data Preparation and Analysis; and Phase 3, SPHM Modeling and Evaluation. Phases 1 and 2 were discussed in detail, with the detailed elaboration of Phase 3 left as future work. In future studies, we intend to focus on advanced Deep Learning methods as part of SPHM Phase 3 and compare their performance with baseline Machine Learning methods such as regression and SVM.

Author Contributions

Conceptualization, A.Z. and S.S.; methodology, S.S.; software, S.S.; data curation, S.S.; writing—original draft preparation, S.S.; writing—review and editing, A.Z.; visualization, S.S.; supervision, A.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this article are publicly available at https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/, Accessed on: 20 July 2021.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Snapshot of the dataset.

| Case | Run | VB | Time | DOC | Feed | Material | smcAC | smcDC | vib_table | vib_spindle | AE_table | AE_spindle |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 0 | 2 | 1.5 | 0.5 | 1 | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim |
| 1 | 2 | NaN | 4 | 1.5 | 0.5 | 1 | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim |
| 1 | 3 | NaN | 6 | 1.5 | 0.5 | 1 | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim |
| 1 | 4 | 0.11 | 7 | 1.5 | 0.5 | 1 | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim |
| 1 | 5 | NaN | 11 | 1.5 | 0.5 | 1 | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim | 9000 × 1 dim |

Figure A1.

Signatures from the six sensor signals for case 1, run 11.

Figure A2.

Correlation matrix of final set of features.

References

- Thomas, D.S.; Weiss, B. Maintenance Costs and Advanced Maintenance Techniques: Survey and Analysis. Int. J. Progn. Health Manag. 2021, 12. Available online: https://papers.phmsociety.org/index.php/ijphm/article/view/2883 (accessed on 6 May 2021). [CrossRef]

- Venkatasubramanian, V. Prognostic and diagnostic monitoring of complex systems for product lifecycle management: Challenges and opportunities. Comput. Chem. Eng. 2005, 29, 1253–1263. [Google Scholar] [CrossRef]

- Zeid, A.; Sundaram, S.; Moghaddam, M.; Kamarthi, S.; Marion, T. Interoperability in Smart Manufacturing: Research Challenges. Machines 2019, 7, 21. Available online: https://www.mdpi.com/2075-1702/7/2/21 (accessed on 11 February 2020). [CrossRef] [Green Version]

- Pintelon, L.M.; Gelders, L.F. Maintenance management decision making. Eur. J. Oper. Res. 1992, 58, 301–317. [Google Scholar] [CrossRef]

- Pinjala, S.K.; Pintelon, L.; Vereecke, A. An empirical investigation on the relationship between business and maintenance strategies. Int. J. Prod. Econ. 2006, 104, 214–229. [Google Scholar] [CrossRef]

- Lee, J.; Wu, F.; Zhao, W.; Ghaffari, M.; Liao, L.; Siegel, D. Prognostics and health management design for rotary machinery systems—Reviews, methodology and applications. Mech. Syst. Signal Process. 2014, 42, 314–334. [Google Scholar] [CrossRef]

- Atamuradov, V.; Medjaher, K.; Dersin, P.; Lamoureux, B.; Zerhouni, N. Prognostics and Health Management for Maintenance Practitioners—Review, Implementation and Tools Evaluation. Int. J. Progn. Health Manag. 2017, 8, 1–31. Available online: https://oatao.univ-toulouse.fr/19521/ (accessed on 10 June 2021).

- Vogl, G.W.; Weiss, B.A.; Helu, M. A review of diagnostic and prognostic capabilities and best practices for manufacturing. J. Intell. Manuf. 2019, 30, 79–95.

- Meng, H.; Li, Y.F. A review on prognostics and health management (PHM) methods of lithium-ion batteries. Renew. Sustain. Energy Rev. 2019, 116, 109405.

- Eker, O.F.; Camci, F.; Jennions, I.K. Major Challenges in Prognostics: Study on Benchmarking Prognostics Datasets. PHM Society. 2012. Available online: http://dspace.lib.cranfield.ac.uk/handle/1826/9994 (accessed on 3 January 2021).

- Elattar, H.M.; Elminir, H.K.; Riad, A.M. Prognostics: A literature review. Complex Intell. Syst. 2016, 2, 125–154. Available online: https://link.springer.com/article/10.1007/s40747-016-0019-3 (accessed on 10 June 2021).

- Sarih, H.; Tchangani, A.P.; Medjaher, K.; Pere, E. Data preparation and preprocessing for broadcast systems monitoring in PHM framework. In Proceedings of the 6th International Conference on Control, Decision and Information Technologies, CoDIT 2019, Paris, France, 23–26 April 2019; pp. 1444–1449.

- Cubillo, A.; Perinpanayagam, S.; Esperon-Miguez, M. A review of physics-based models in prognostics: Application to gears and bearings of rotating machinery. Adv. Mech. Eng. 2016, 8, 1–21. Available online: https://us.sagepub.com/en-us/nam/ (accessed on 17 June 2021).

- Pecht, M.; Jie, G. Physics-of-failure-based prognostics for electronic products. Trans. Inst. Meas. Control 2009, 31, 309–322. Available online: http://tim.sagepub.com (accessed on 17 June 2021).

- Lui, Y.H.; Li, M.; Downey, A.; Shen, S.; Nemani, V.P.; Ye, H.; VanElzen, C.; Jain, G.; Hu, S.; Laflamme, S.; et al. Physics-based prognostics of implantable-grade lithium-ion battery for remaining useful life prediction. J. Power Sources 2021, 485, 229327.

- Bradley, D.; Ortega-Sanchez, C.; Tyrrell, A. Embryonics + immunotronics: A bio-inspired approach to fault tolerance. In Proceedings of the Second NASA/DoD Workshop on Evolvable Hardware, Palo Alto, CA, USA, 15 July 2000; pp. 215–223.

- Dong, H.; Yang, X.; Li, A.; Xie, Z.; Zuo, Y. Bio-inspired PHM model for diagnostics of faults in power transformers using dissolved gas-in-oil data. Sensors 2019, 19, 845. Available online: www.mdpi.com/journal/sensors (accessed on 10 June 2021).

- Soualhi, A.; Razik, H.; Clerc, G.; Doan, D.D. Prognosis of bearing failures using hidden markov models and the adaptive neuro-fuzzy inference system. IEEE Trans. Ind. Electron. 2014, 61, 2864–2874.

- Moghaddam, M.; Chen, Q.; Deshmukh, A.V. A neuro-inspired computational model for adaptive fault diagnosis. Expert Syst. Appl. 2020, 140, 112879.

- Huang, B.; Di, Y.; Jin, C.; Lee, J. Review of data-driven prognostics and health management techniques: Lessions learned from PHM data challenge competitions. Mach. Fail. Prev. Technol. 2017, 2017, 1–17.

- Jia, X.; Huang, B.; Feng, J.; Cai, H.; Lee, J. Review of PHM Data Competitions from 2008 to 2017. Annu. Conf. PHM Soc. 2018, 10. Available online: https://papers.phmsociety.org/index.php/phmconf/article/view/462 (accessed on 11 June 2021).

- Huang, H.Z.; Wang, H.K.; Li, Y.F.; Zhang, L.; Liu, Z. Support vector machine based estimation of remaining useful life: Current research status and future trends. J. Mech. Sci. Technol. 2015, 29, 151–163. Available online: www.springerlink.com/content/1738-494x (accessed on 11 June 2021).

- Mathew, V.; Toby, T.; Singh, V.; Rao, B.M.; Kumar, M.G. Prediction of Remaining Useful Lifetime (RUL) of turbofan engine using machine learning. In Proceedings of the IEEE International Conference on Circuits and Systems, ICCS 2017, Thiruvananthapuram, India, 20–21 December 2017; Volume 2018, pp. 306–311.

- Elforjani, M.; Shanbr, S. Prognosis of Bearing Acoustic Emission Signals Using Supervised Machine Learning. IEEE Trans. Ind. Electron. 2018, 65, 5864–5871.

- Mansouri, S.S.; Karvelis, P.; Georgoulas, G.; Nikolakopoulos, G. Remaining Useful Battery Life Prediction for UAVs based on Machine Learning. IFAC-PapersOnLine 2017, 50, 4727–4732.

- Cho, S.; Asfour, S.; Onar, A.; Kaundinya, N. Tool breakage detection using support vector machine learning in a milling process. Int. J. Mach. Tools Manuf. 2005, 45, 241–249.

- Lei, Y.; Yang, B.; Jiang, X.; Jia, F.; Li, N.; Nandi, A.K. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 2020, 138, 106587.

- Abdelgayed, T.S.; Morsi, W.G.; Sidhu, T.S. Fault detection and classification based on co-training of semisupervised machine learning. IEEE Trans. Ind. Electron. 2017, 65, 1595–1605.

- Wang, X.; Feng, H.; Fan, Y. Fault detection and classification for complex processes using semi-supervised learning algorithm. Chemom. Intell. Lab. Syst. 2015, 149, 24–32.

- Yan, K.; Zhong, C.; Ji, Z.; Huang, J. Semi-supervised learning for early detection and diagnosis of various air handling unit faults. Energy Build. 2018, 181, 75–83.

- Malhi, A.; Yan, R.; Gao, R.X. Prognosis of defect propagation based on recurrent neural networks. IEEE Trans. Instrum. Meas. 2011, 60, 703–711.

- Heimes, F.O. Recurrent neural networks for remaining useful life estimation. In Proceedings of the 2008 International Conference on Prognostics and Health Management, Denver, CO, USA, 6–9 October 2008.

- Palau, A.S.; Bakliwal, K.; Dhada, M.H.; Pearce, T.; Parlikad, A.K. Recurrent Neural Networks for real-time distributed collaborative prognostics. In Proceedings of the 2018 IEEE International Conference on Prognostics and Health Management, ICPHM 2018, Seattle, WA, USA, 11–13 June 2018.

- Gugulothu, N.; TV, V.; Malhotra, P.; Vig, L.; Agarwal, P.; Shroff, G. Predicting Remaining Useful Life using Time Series Embeddings based on Recurrent Neural Networks. arXiv 2017, arXiv:1709.01073. Available online: http://arxiv.org/abs/1709.01073 (accessed on 11 June 2021).

- Deutsch, J.; He, D. Using deep learning-based approach to predict remaining useful life of rotating components. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 11–20.

- Zhao, G.; Zhang, G.; Liu, Y.; Zhang, B.; Hu, C. Lithium-ion battery remaining useful life prediction with Deep Belief Network and Relevance Vector Machine. In Proceedings of the 2017 IEEE International Conference on Prognostics and Health Management, ICPHM 2017, Dallas, TX, USA, 19–21 June 2017; pp. 7–13.

- Zhang, C.; Lim, P.; Qin, A.K.; Tan, K.C. Multiobjective Deep Belief Networks Ensemble for Remaining Useful Life Estimation in Prognostics. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2306–2318.

- Liao, L.; Jin, W.; Pavel, R. Enhanced Restricted Boltzmann Machine with Prognosability Regularization for Prognostics and Health Assessment. IEEE Trans. Ind. Electron. 2016, 63, 7076–7083.

- Sun, W.; Zhao, R.; Yan, R.; Shao, S.; Chen, X. Convolutional Discriminative Feature Learning for Induction Motor Fault Diagnosis. IEEE Trans. Ind. Inform. 2017, 13, 1350–1359.

- Zhang, W.; Peng, G.; Li, C.; Chen, Y.; Zhang, Z. A new deep learning model for fault diagnosis with good anti-noise and domain adaptation ability on raw vibration signals. Sensors 2017, 17, 425. Available online: www.mdpi.com/journal/sensors (accessed on 11 June 2021).

- Abdeljaber, O.; Avci, O.; Kiranyaz, S.; Gabbouj, M.; Inman, D.J. Real-time vibration-based structural damage detection using one-dimensional convolutional neural networks. J. Sound Vib. 2017, 388, 154–170.

- Ince, T.; Kiranyaz, S.; Eren, L.; Askar, M.; Gabbouj, M. Real-Time Motor Fault Detection by 1-D Convolutional Neural Networks. IEEE Trans. Ind. Electron. 2016, 63, 7067–7075.

- Liu, R.; Meng, G.; Yang, B.; Sun, C.; Chen, X. Dislocated Time Series Convolutional Neural Architecture: An Intelligent Fault Diagnosis Approach for Electric Machine. IEEE Trans. Ind. Inform. 2017, 13, 1310–1320.

- Janssens, O.; Slavkovikj, V.; Vervisch, B.; Stockman, K.; Loccufier, M.; Verstockt, S.; Van de Walle, R.; Van Hoecke, S. Convolutional Neural Network Based Fault Detection for Rotating Machinery. J. Sound Vib. 2016, 377, 331–345.

- Babu, G.S.; Zhao, P.; Li, X.L. Deep convolutional neural network based regression approach for estimation of remaining useful life. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2016; Volume 9642, pp. 214–228. Available online: http://www.i2r.a-star.edu.sg (accessed on 11 June 2021).

- Wang, J.; Zhuang, J.; Duan, L.; Cheng, W. A multi-scale convolution neural network for featureless fault diagnosis. In Proceedings of the International Symposium on Flexible Automation, ISFA 2016, Cleveland, OH, USA, 1–3 August 2016; pp. 65–70.

- You, W.; Shen, C.; Guo, X.; Jiang, X.; Shi, J.; Zhu, Z. A hybrid technique based on convolutional neural network and support vector regression for intelligent diagnosis of rotating machinery. Adv. Mech. Eng. 2017, 9, 2017. Available online: https://us.sagepub.com/en-us/nam/ (accessed on 11 June 2021).

- Weimer, D.; Scholz-Reiter, B.; Shpitalni, M. Design of deep convolutional neural network architectures for automated feature extraction in industrial inspection. CIRP Ann. Manuf. Technol. 2016, 65, 417–420.

- Khan, S.; Yairi, T. A review on the application of deep learning in system health management. Mech. Syst. Signal Process. 2018, 107, 241–265.

- Liu, Y.; Hu, X.; Zhang, W. Remaining useful life prediction based on health index similarity. Reliab. Eng. Syst. Saf. 2019, 185, 502–510.

- Liu, D.; Wang, H.; Peng, Y.; Xie, W.; Liao, H. Satellite lithium-ion battery remaining cycle life prediction with novel indirect health indicator extraction. Energies 2013, 6, 3654–3668. Available online: https://www.mdpi.com/journal/energiesArticle (accessed on 11 June 2021).

- Yang, F.; Habibullah, M.S.; Zhang, T.; Xu, Z.; Lim, P.; Nadarajan, S. Health index-based prognostics for remaining useful life predictions in electrical machines. IEEE Trans. Ind. Electron. 2016, 63, 2633–2644.

- Xia, T.; Dong, Y.; Xiao, L.; Du, S.; Pan, E.; Xi, L. Recent advances in prognostics and health management for advanced manufacturing paradigms. Reliab. Eng. Syst. Saf. 2018, 178, 255–268.

- Blecker, T.; Friedrich, G. Guest editorial: Mass customization manufacturing systems. IEEE Trans. Eng. Manag. 2007, 54, 4–11.

- Jin, X.; Ni, J. Joint Production and Preventive Maintenance Strategy for Manufacturing Systems With Stochastic Demand. J. Manuf. Sci. Eng. 2013, 135. Available online: http://asmedigitalcollection.asme.org/manufacturingscience/article-pdf/135/3/031016/6261104/manu_135_3_031016.pdf (accessed on 16 August 2021).

- Fitouhi, M.C.; Nourelfath, M. Integrating noncyclical preventive maintenance scheduling and production planning for multi-state systems. Reliab. Eng. Syst. Saf. 2014, 121, 175–186.

- Koren, Y.; Heisel, U.; Jovane, F.; Moriwaki, T.; Pritschow, G.; Ulsoy, G.; Van Brussel, H. Reconfigurable Manufacturing Systems. CIRP Ann. 1999, 48, 527–540.

- Xia, T.; Xi, L.; Pan, E.; Ni, J. Reconfiguration-oriented opportunistic maintenance policy for reconfigurable manufacturing systems. Reliab. Eng. Syst. Saf. 2017, 166, 87–98.

- Zhou, J.; Djurdjanovic, D.; Ivy, J.; Ni, J. Integrated reconfiguration and age-based preventive maintenance decision making. IIE Trans. 2007, 39, 1085–1102. Available online: https://www.tandfonline.com/doi/abs/10.1080/07408170701291779 (accessed on 16 August 2021).

- Koren, Y.; Gu, X.; Badurdeen, F.; Jawahir, I.S. Sustainable Living Factories for Next Generation Manufacturing. Procedia Manuf. 2018, 21, 26–36.

- Gao, J.; Yao, Y.; Zhu, V.C.Y.; Sun, L.; Lin, L. Service-oriented manufacturing: A new product pattern and manufacturing paradigm. J. Intell. Manuf. 2009, 22, 435–446. Available online: https://link.springer.com/article/10.1007/s10845-009-0301-y (accessed on 16 August 2021).

- Ning, D.; Huang, J.; Shen, J.; Di, D. A cloud based framework of prognostics and health management for manufacturing industry. In Proceedings of the 2016 IEEE International Conference on Prognostics and Health Management (ICPHM), Ottawa, ON, Canada, 20–22 June 2016.

- Traini, E.; Bruno, G.; D’Antonio, G.; Lombardi, F. Machine learning framework for predictive maintenance in milling. IFAC-PapersOnLine 2019, 52, 177–182.