Simultaneous Burr and Cut Interruption Detection during Laser Cutting with Neural Networks

Abstract

1. Introduction

2. Experimental

2.1. Laser System and Cutting Setup

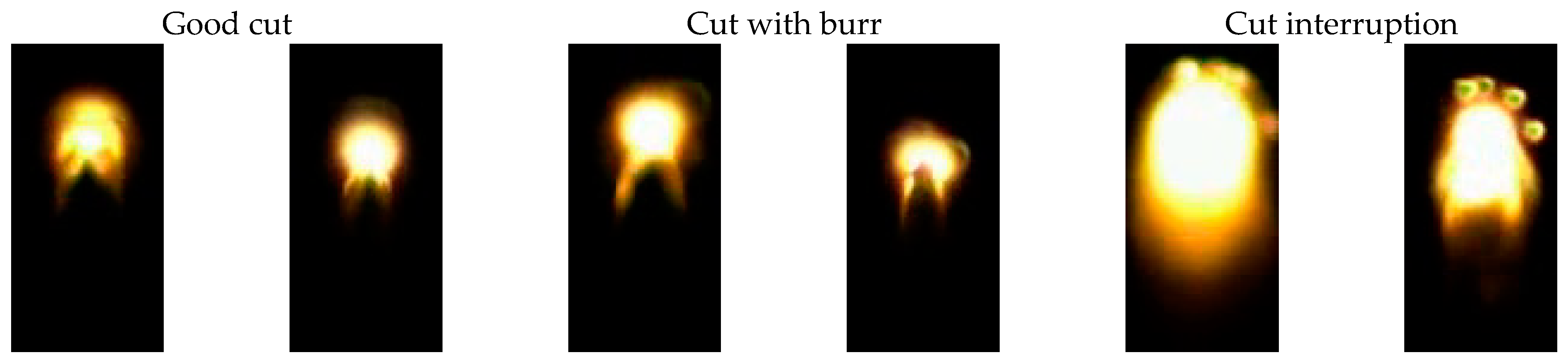

2.2. Laser Cutting

2.3. Camera and Image Acquisition

2.4. Computer Hardware and Neural Network Design

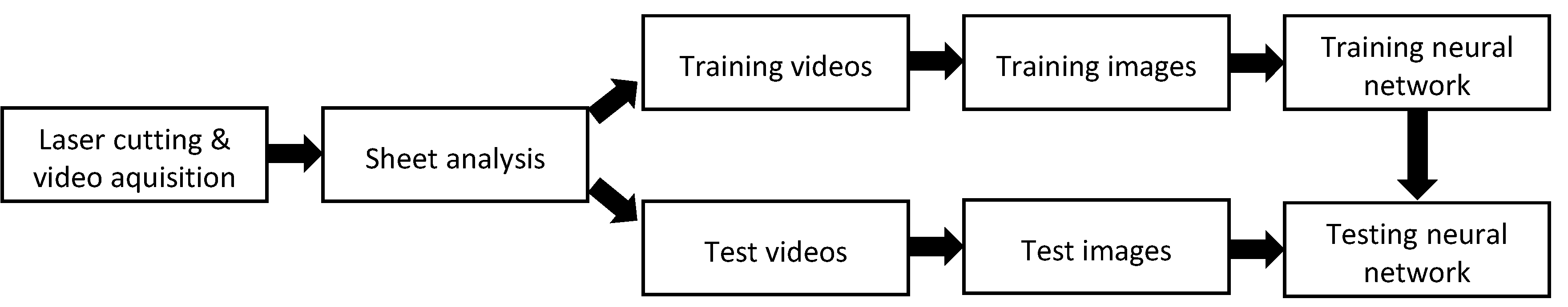

2.5. Methodology

3. Results

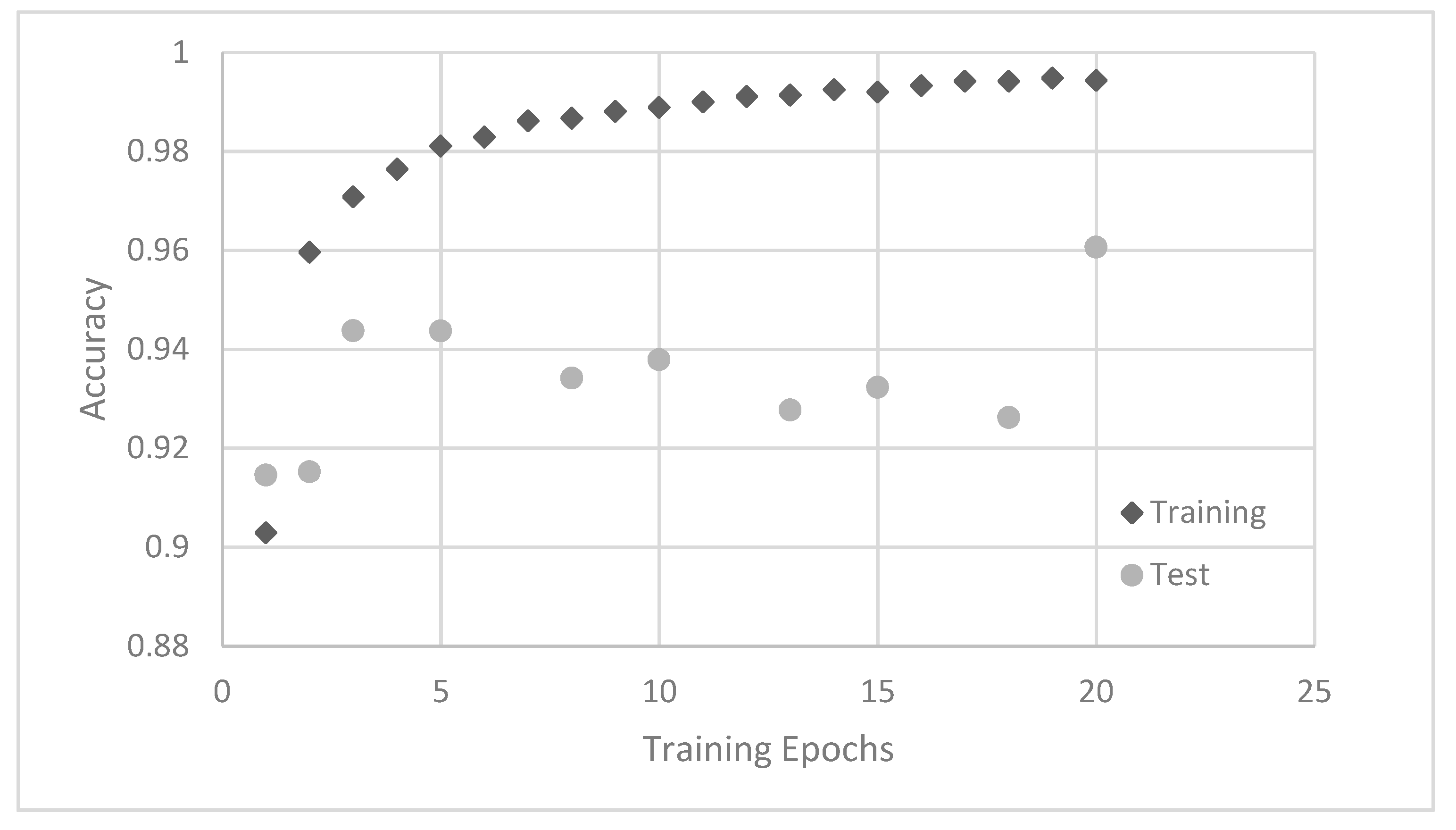

3.1. Training Behaviour

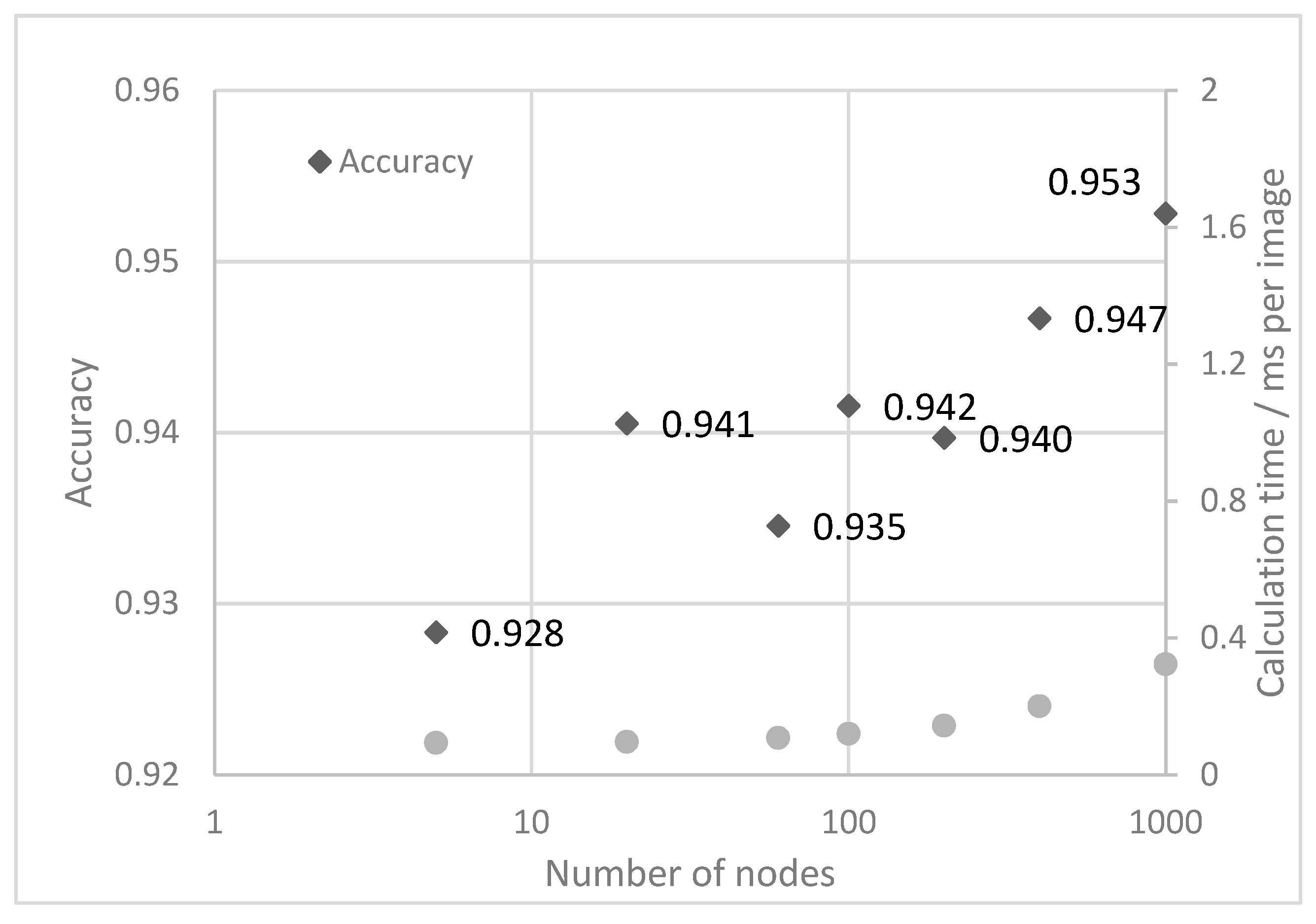

3.2. Basic Neural Network

3.3. Convolutional Neural Network

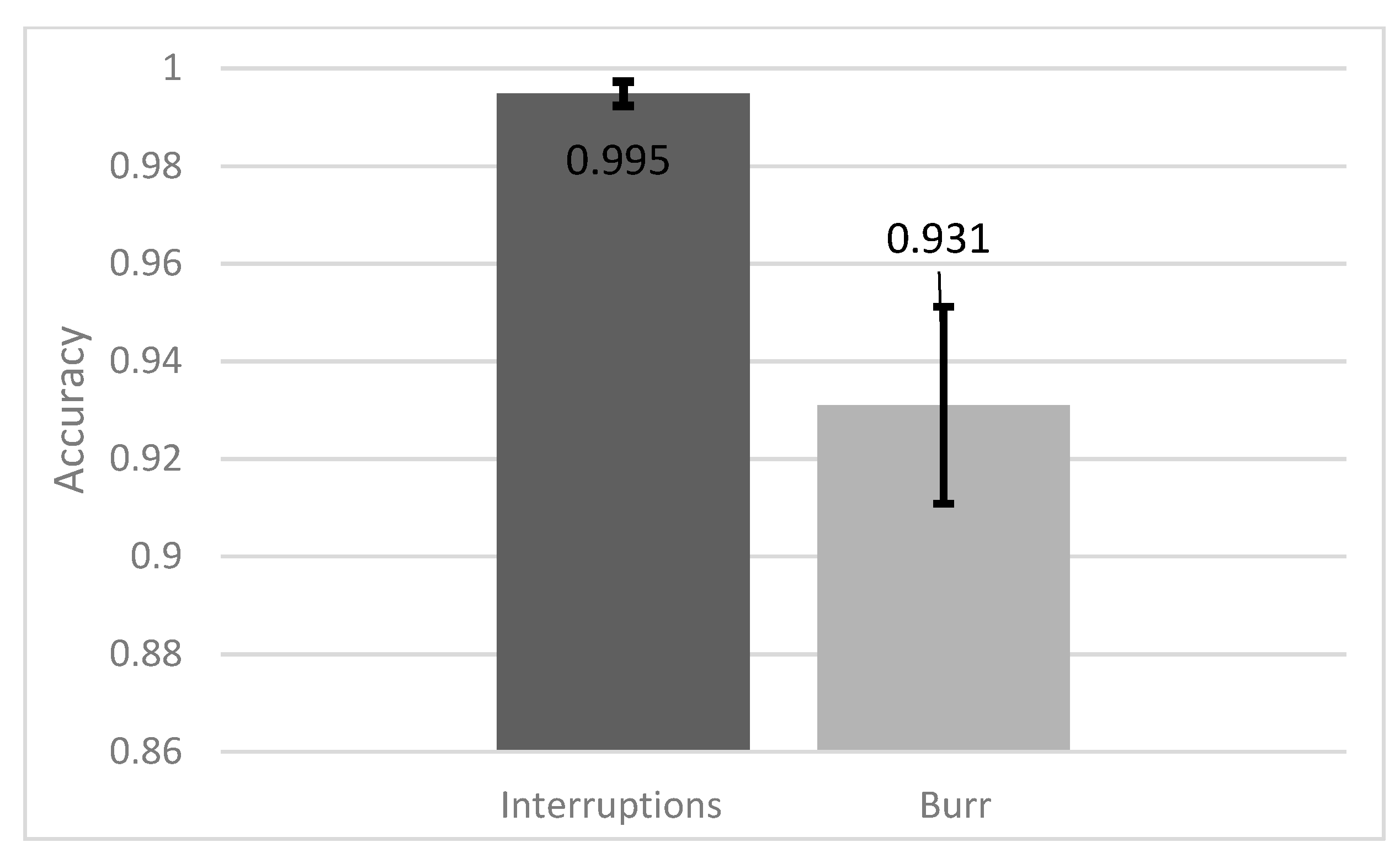

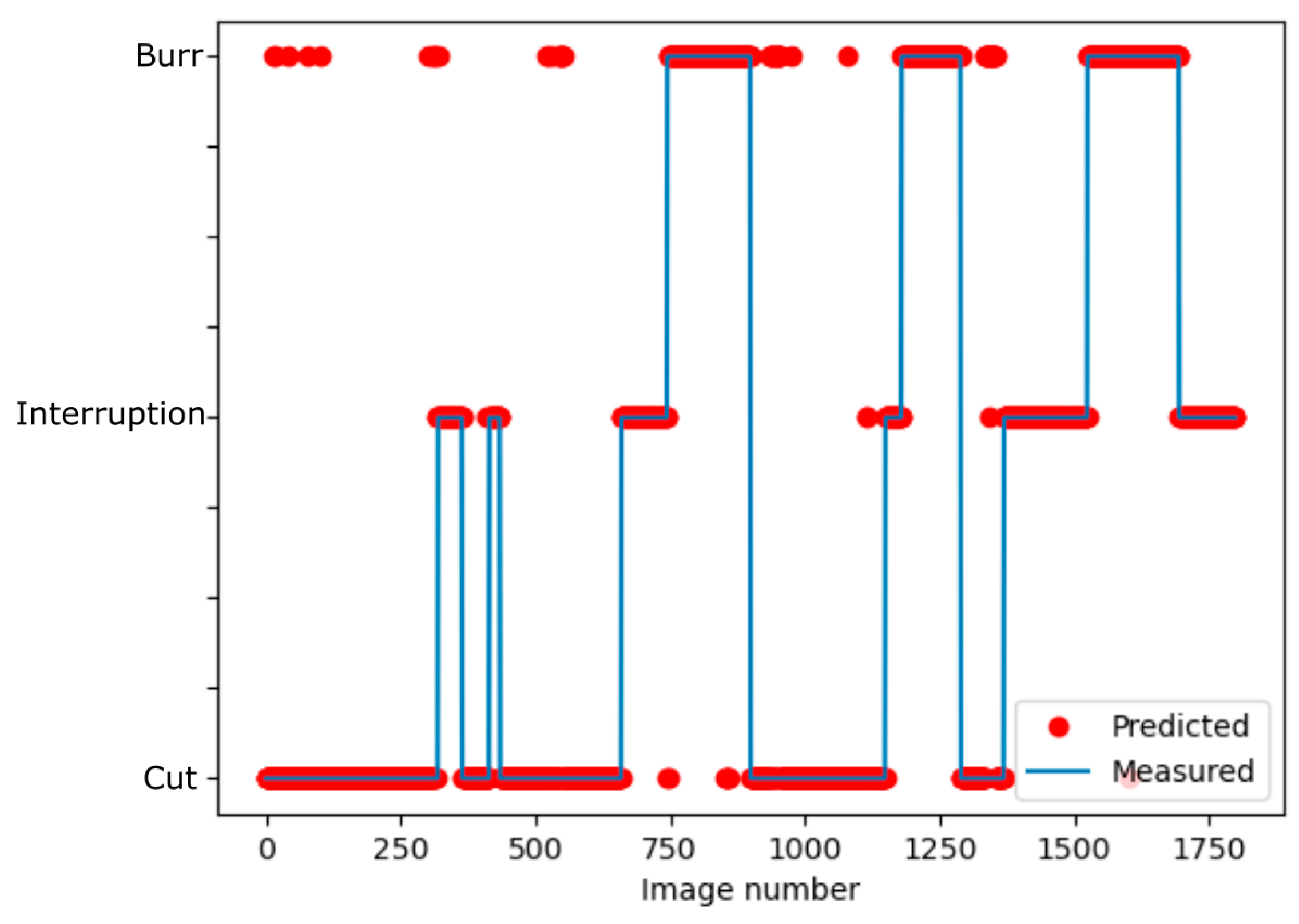

3.4. Comparison between Cut Failures

3.5. Error Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Nr. | Laser Power (W) | Feed Rate (mm/s) | Nozzle Distance (mm) | Focus Position (mm) | Category | Use |
|---|---|---|---|---|---|---|
| 1 | 500 | 600 | 0.5 | −1.25 | Cut | Training |
| 2 | 300 | 400 | 0.5 | −1.25 | Cut | Training |
| 3 | 500 | 500 | 0.5 | −1.25 | Cut | Training |
| 4 | 500 | 300 | 0.5 | −1.25 | Cut | Training |
| 5 | 300 | 600 | 0.5 | −1.25 | Interruption | Test |
| 6 | 200 | 500 | 1.0 | −1.75 | Interruption | Training |
| 7 | 500 | 500 | 0.8 | −1.55 | Burr | Training |
| 8 | 500 | 600 | 0.5 | −1.25 | Cut | Test |
| 9 | 250 | 500 | 0.5 | −1.25 | Interruption | Test |
| 10 | 500 | 400 | 0.5 | −1.25 | Cut | Test |
| 11 | 500 | 600 | 0.5 | −1.25 | Interruption | Training |
| 12 | 500 | 500 | 1.0 | −1.75 | Burr | Training |
| 13 | 500 | 500 | 0.5 | −1.25 | Cut | Test |
| 14 | 500 | 300 | 0.5 | −1.25 | Cut | Training |
| 15 | 500 | 200 | 0.5 | −1.25 | Cut | Test |
| 16 | 500 | 500 | 0.5 | −1.25 | Cut | Training |
| 17 | 500 | 500 | 0.5 | −1.25 | Interruption | Test |
| 18 | 400 | 500 | 0.9 | −1.65 | Burr | Training |
| 19 | 500 | 500 | 0.8 | −1.55 | Burr | Training |
| 20 | 200 | 500 | 1.0 | −1.75 | Interruption | Training |
| 21 | 500 | 300 | 0.5 | −1.45 | Burr | Training |
| 22 | 150 | 500 | 0.5 | −1.25 | Interruption | Test |
| 23 | 500 | 400 | 0.5 | −1.25 | Cut | Training |
| 24 | 500 | 500 | 0.5 | −1.25 | Cut | Test |
| 25 | 500 | 400 | 0.5 | −1.25 | | Training |
| 26 | 400 | 500 | 0.8 | −1.55 | Burr | Training |
| 27 | 500 | 500 | 0.8 | −1.55 | Burr | Test |
| 28 | 150 | 500 | 0.5 | −1.25 | Interruption | Training |
| 29 | 200 | 500 | 1.0 | −1.75 | Interruption | Test |
| 30 | 400 | 500 | 0.9 | −1.65 | Burr | Training |
| 31 | 300 | 600 | 0.5 | −1.25 | Interruption | Training |
| 32 | 500 | 500 | 1.0 | −1.75 | Burr | Training |
| 33 | 500 | 300 | 0.5 | −1.25 | Cut | Test |
| 34 | 400 | 500 | 1.0 | −1.75 | Burr | Test |
| 35 | 500 | 500 | 0.5 | −1.25 | Interruption | Training |
| 36 | 500 | 400 | 0.5 | −1.25 | Cut | Training |
| 37 | 150 | 500 | 0.5 | −1.25 | Interruption | Training |
| 38 | 400 | 500 | 0.5 | −1.45 | Burr | Training |
| 39 | 500 | 600 | 0.5 | −1.25 | Interruption | Training |
| 40 | 400 | 400 | 0.5 | −1.25 | Cut | Training |
| 41 | 500 | 600 | 0.5 | −1.25 | Cut | Training |
| 42 | 500 | 600 | 0.5 | −1.25 | Interruption | Test |
| 43 | 400 | 500 | 0.8 | −1.55 | Burr | Test |
| 44 | 400 | 500 | 0.5 | −1.45 | Burr | Test |
| 45 | 400 | 400 | 0.5 | −1.25 | Cut | Test |
| 46 | 500 | 500 | 0.5 | −1.25 | Cut | Training |
| 47 | 500 | 200 | 0.5 | −1.25 | Cut | Training |
| 48 | 300 | 400 | 0.5 | −1.25 | Interruption | Training |
| 49 | 400 | 500 | 0.5 | −1.45 | Burr | Training |
| 50 | 500 | 400 | 0.5 | −1.25 | Cut | Test |
| 51 | 500 | 500 | 0.8 | −1.55 | Burr | Training |
| 52 | 400 | 500 | 0.9 | −1.65 | Burr | Training |
| 53 | 400 | 500 | 0.9 | −1.65 | Burr | Test |
| 54 | 500 | 300 | 0.5 | −1.45 | Burr | Training |
| 55 | 300 | 400 | 0.5 | −1.25 | Cut | Training |
| 56 | 500 | 300 | 0.5 | −1.45 | Burr | Test |
| 57 | 250 | 500 | 0.5 | −1.25 | Interruption | Training |
| 58 | 300 | 400 | 0.5 | −1.25 | Cut | Training |
| 59 | 300 | 400 | 0.5 | −1.25 | Interruption | Training |
| 60 | 300 | 600 | 0.5 | −1.25 | Interruption | Training |
| 61 | 300 | 400 | 0.5 | −1.25 | Interruption | Test |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Adelmann, B.; Hellmann, R. Simultaneous Burr and Cut Interruption Detection during Laser Cutting with Neural Networks. Sensors 2021, 21, 5831. https://doi.org/10.3390/s21175831