Channel Exchanging for RGB-T Tracking

Abstract

1. Introduction

1.1. Shortcomings of Existing RGB-T Trackers

1.2. Our Innovation

- We propose a novel RGB-T object tracking framework based on channel exchanging. To our knowledge, this is the first time that channel exchanging has been used to fuse RGB and TIR data for RGB-T object tracking. Data fusion based on channel exchanging is more efficient than previous fusion methods.

- To improve the generalization performance and long-term tracking ability of the RGB-T tracker, we use a trained image translation model, for the first time, to generate the TIR dataset LaSOT-TIR from the RGB long-term tracking dataset LaSOT [13]. After training on LaSOT-RGBT, both the generalization performance and the long-term tracking ability of the tracker improve significantly.

- Our proposed method not only achieves the best performance on GTOT and RGBT234 but also outperforms existing methods when evaluated on sequestered video sequences. The advantage is especially prominent in long-term object tracking.
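
As a rough illustration of the channel exchanging idea named in the first contribution, the sketch below swaps feature channels between the two modalities. It assumes the criterion described in the channel exchanging work [4]: a channel whose batch-normalization [12] scaling factor is close to zero is treated as uninformative and replaced by the same-index channel of the other modality. The function name `exchange_channels`, the `threshold` value, and the toy feature maps are hypothetical and are not taken from this paper; a real tracker would operate on tensors rather than nested lists.

```python
def exchange_channels(rgb_feat, tir_feat, rgb_gamma, tir_gamma, threshold=0.01):
    """Exchange low-importance channels between RGB and TIR feature maps.

    rgb_feat, tir_feat: lists of C channels (each channel can be any object,
        e.g. a 2D list of activations), taken after batch normalization.
    rgb_gamma, tir_gamma: C batch-normalization scaling factors per modality.
    threshold: hypothetical cut-off below which a channel counts as
        uninformative (an assumption, not a value from the paper).
    """
    if not (len(rgb_feat) == len(tir_feat) == len(rgb_gamma) == len(tir_gamma)):
        raise ValueError("all inputs must have the same number of channels")

    rgb_out, tir_out = [], []
    for rgb_ch, tir_ch, g_rgb, g_tir in zip(rgb_feat, tir_feat, rgb_gamma, tir_gamma):
        # A small |gamma| means the channel is nearly switched off in its own
        # modality, so it is replaced by the same-index channel of the other one.
        rgb_out.append(tir_ch if abs(g_rgb) < threshold else rgb_ch)
        tir_out.append(rgb_ch if abs(g_tir) < threshold else tir_ch)
    return rgb_out, tir_out


# Toy example: 3 channels, each a 1x2 feature map.
rgb = [[[1, 1]], [[2, 2]], [[3, 3]]]
tir = [[[7, 7]], [[8, 8]], [[9, 9]]]
rgb_fused, tir_fused = exchange_channels(
    rgb, tir, rgb_gamma=[0.5, 0.001, 0.3], tir_gamma=[0.002, 0.4, 0.6]
)
print(rgb_fused)  # channel 1 now comes from the TIR branch
print(tir_fused)  # channel 0 now comes from the RGB branch
```

Because the exchange only re-routes existing channels, a fusion step of this kind adds no learnable parameters, which is one plausible reading of the efficiency claim above.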

2. Related Work

2.1. Single-Modal Tracking

2.2. Modality Fusion Tracking

3. Methods

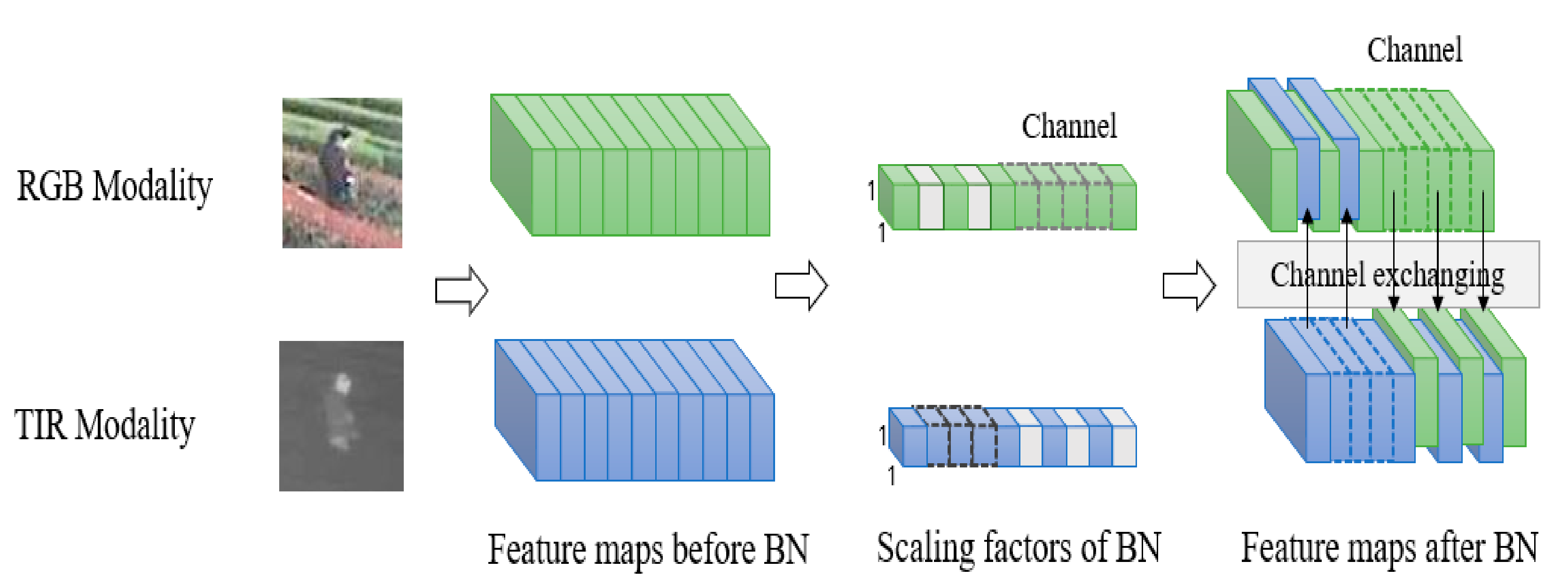

3.1. RGB and TIR Feature Fusion Based on Channel Exchanging

3.2. Network Architecture of the RGB-T Tracker Based on DiMP

3.3. The Training and Optimization of the Target Classification Sub-Network

3.4. Bounding Box Estimation Branch

3.5. Final Loss Function

3.6. LaSOT-RGBT

4. Experiments

4.1. Implementation Details

4.1.1. Backbone Network

4.1.2. Offline Training

4.1.3. Online Tracking

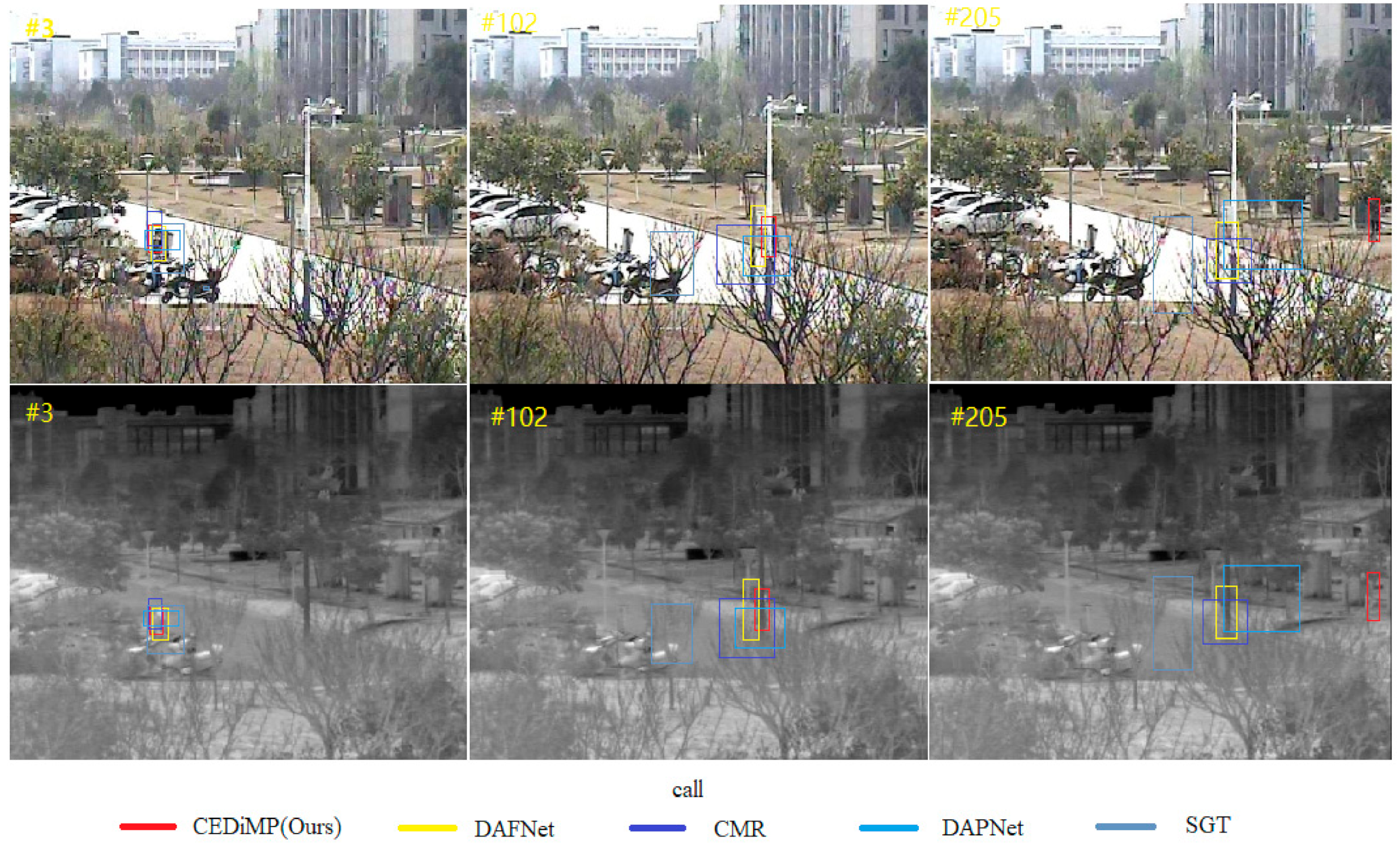

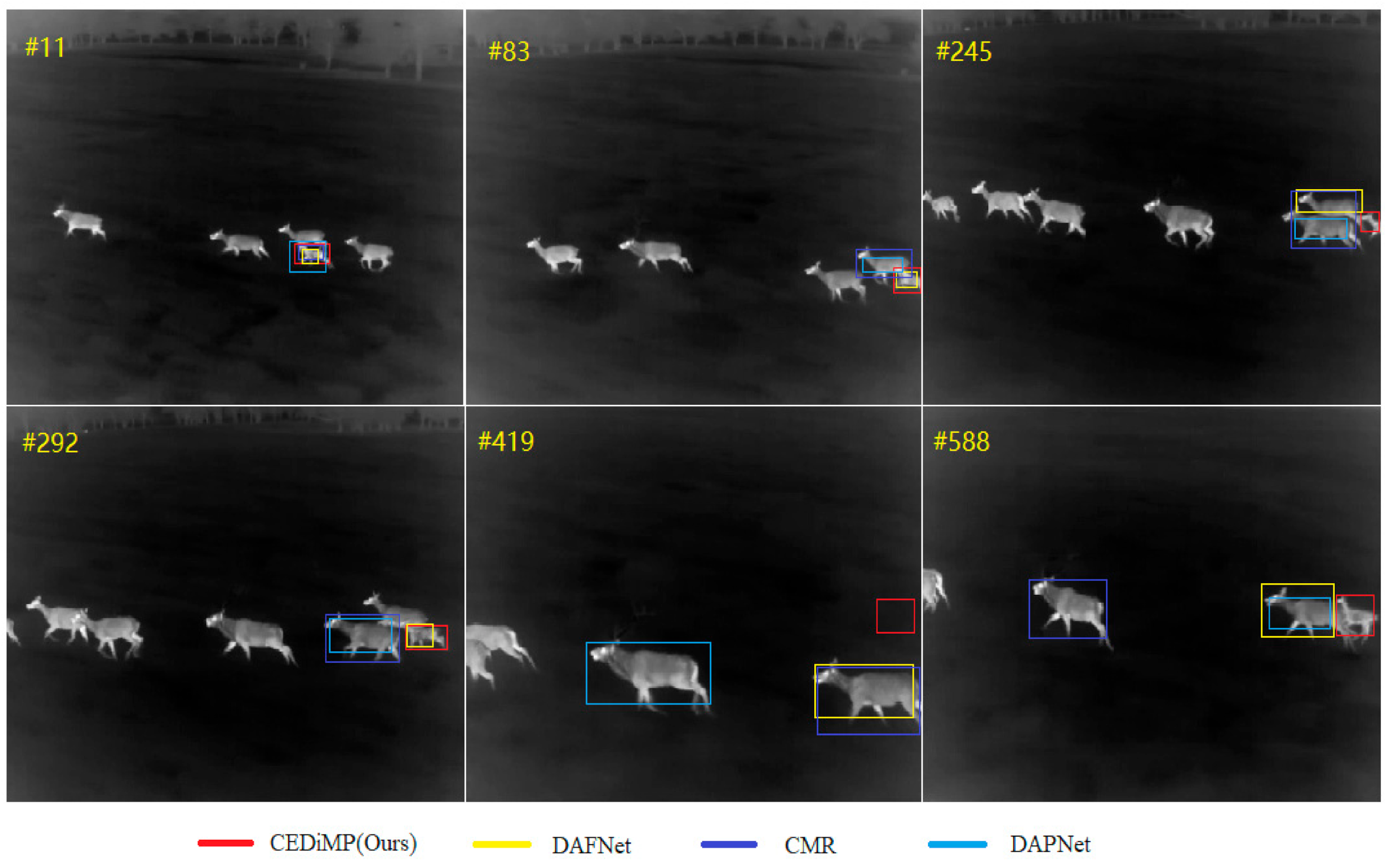

4.2. Comparison to State-of-the-Art Trackers

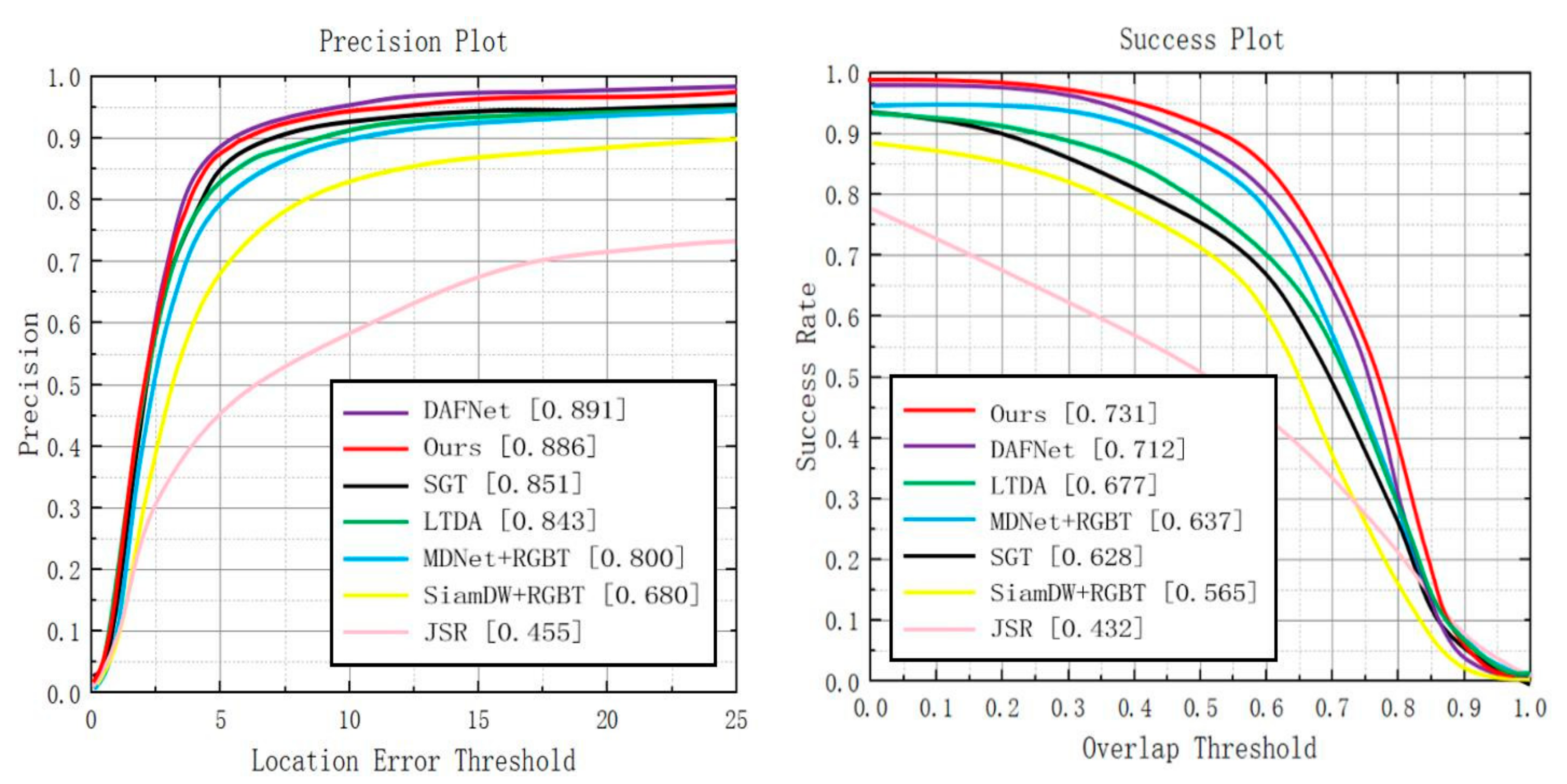

4.2.1. Evaluation on GTOT Dataset

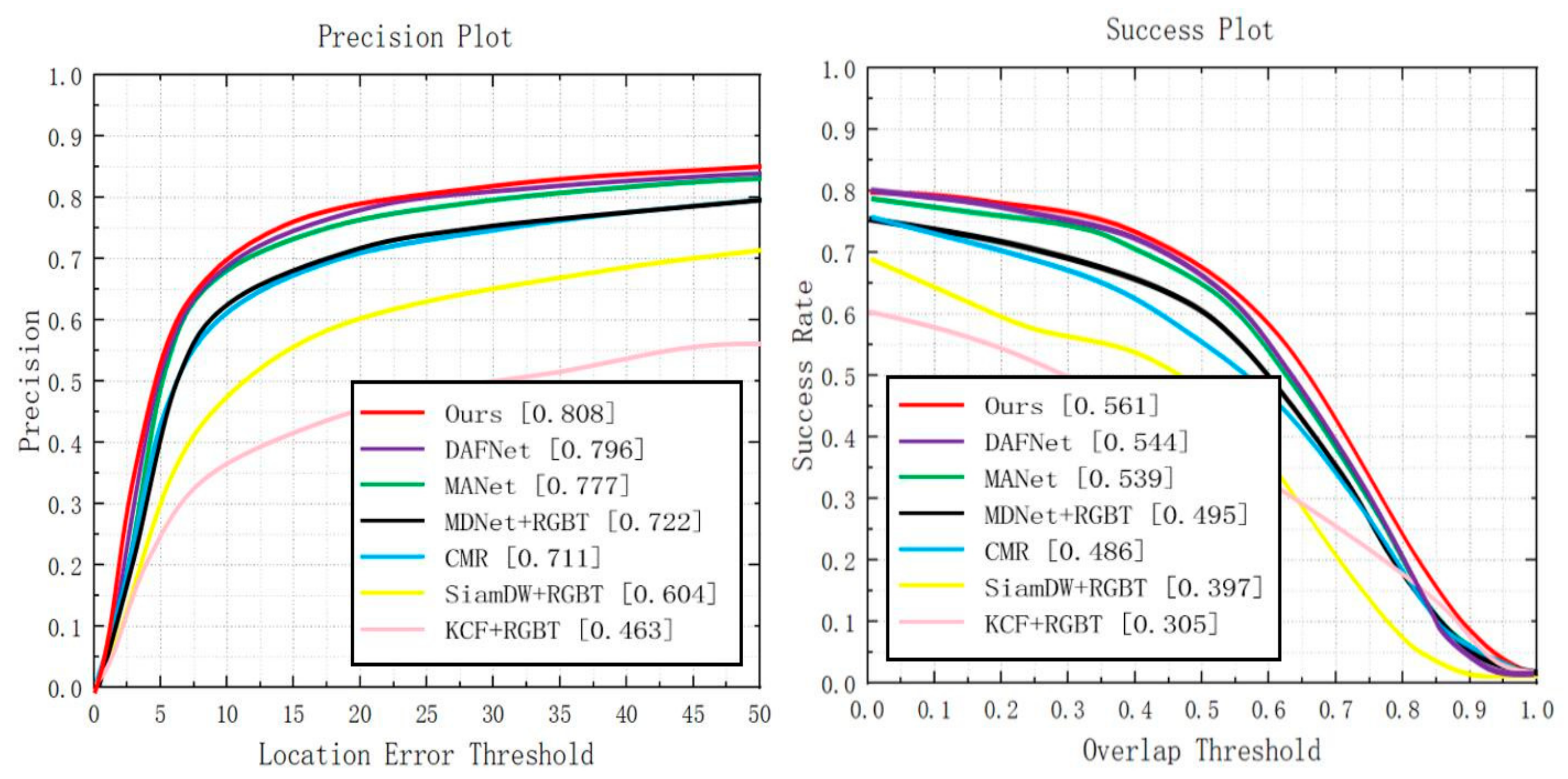

4.2.2. Evaluation on RGBT234 Dataset

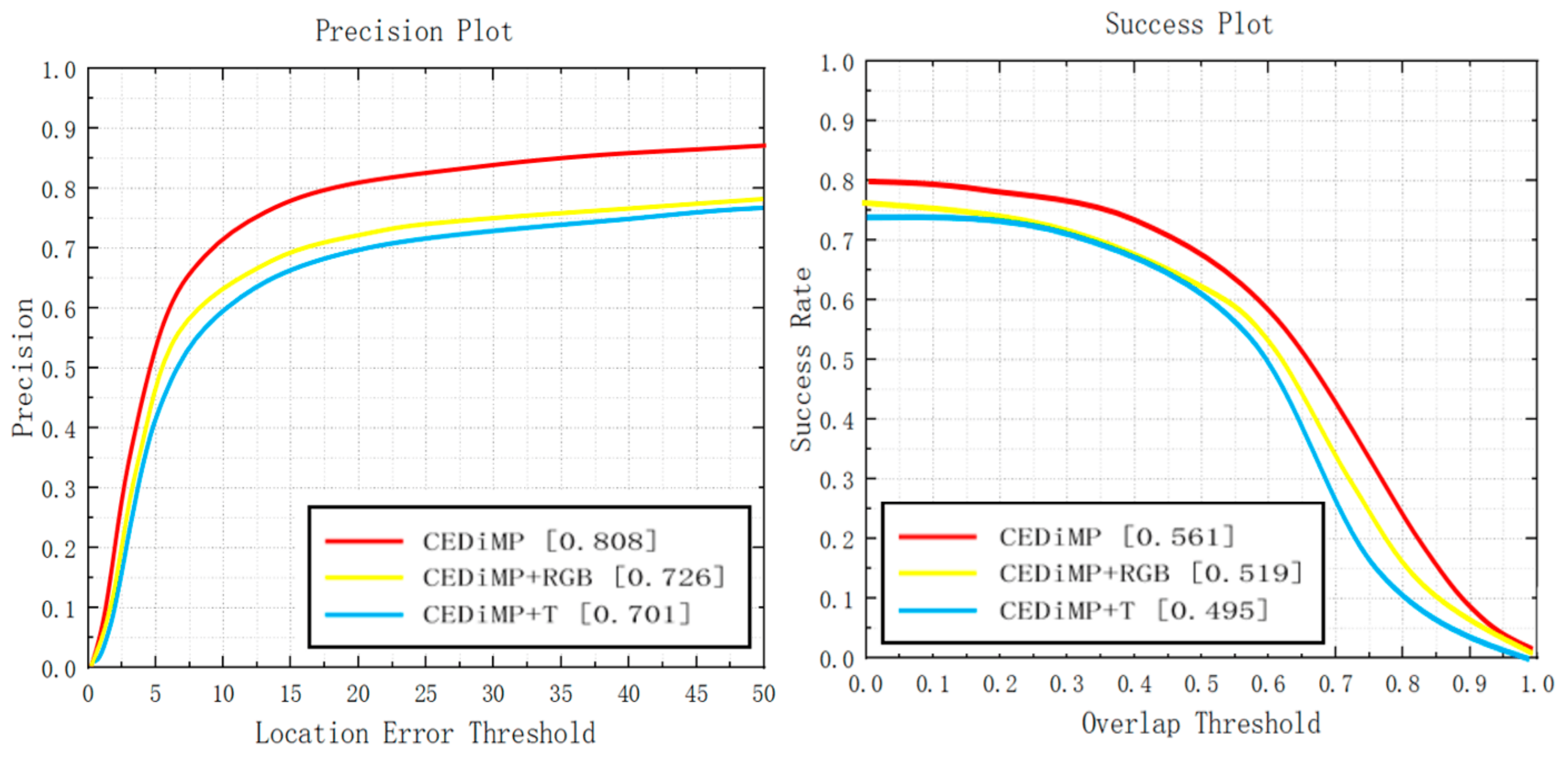

4.3. Ablation Study

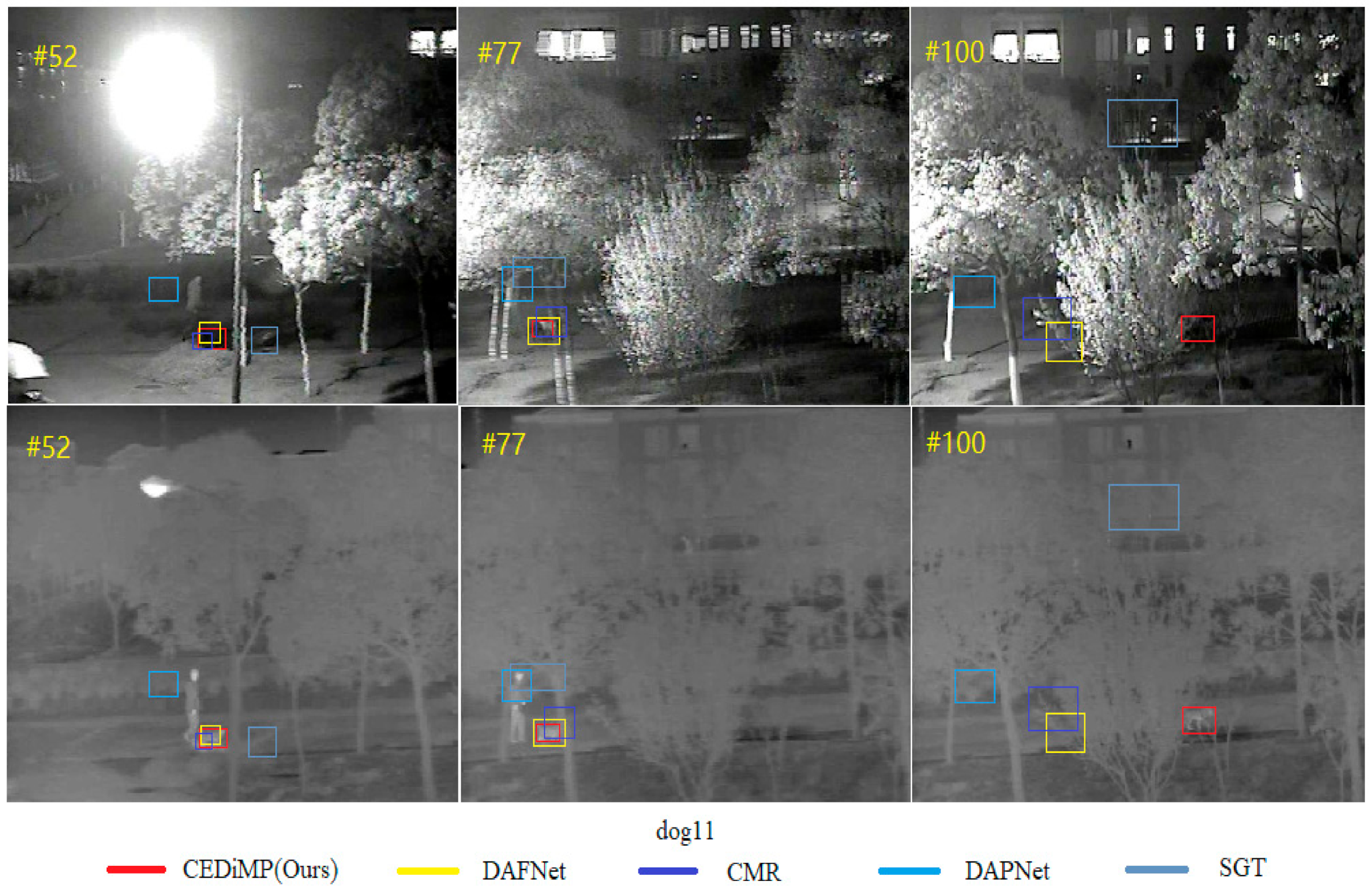

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, X.; Ye, P.; Leung, H.; Gong, K.; Xiao, G. Object Fusion Tracking Based on Visible and Infrared Images: A Comprehensive Review. Inf. Fusion 2020, 63, 166–187.

- Li, C.; Liang, X.; Lu, Y.; Zhao, N.; Tang, J. RGB-T Object Tracking: Benchmark and Baseline. Pattern Recognit. 2019, 96, 106977.

- Li, C.; Cheng, H.; Hu, S.; Liu, X.; Tang, J.; Lin, L. Learning Collaborative Sparse Representation for Grayscale-Thermal Tracking. IEEE Trans. Image Process. 2016, 25, 5743–5756.

- Wang, Y.; Huang, W.; Sun, F.; Xu, T.; Huang, J. Deep Multimodal Fusion by Channel Exchanging. In Proceedings of the Neural Information Processing Systems, Online, 6–12 December 2020.

- Zhang, L.; Danelljan, M.; Gonzalez-Garcia, A.; Weijer, J.; Khan, F.S. Multi-Modal Fusion for End-to-End RGB-T Tracking. In Proceedings of the IEEE International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019.

- Zhang, Z.; Peng, H. Deeper and Wider Siamese Networks for Real-Time Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Li, C.; Lu, A.; Zheng, A.; Tu, Z.; Tang, J. Multi-Adapter RGBT Tracking. In Proceedings of the IEEE International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019.

- Zhang, P.; Zhao, J.; Wang, D.; Lu, H.; Yang, X. Jointly Modeling Motion and Appearance Cues for Robust RGB-T Tracking. IEEE Trans. Image Process. 2021, 30, 3335–3347.

- Kristan, M.; Berg, A.; Zheng, L.; Rout, L.; Zhou, L. The Seventh Visual Object Tracking VOT2019 Challenge Results. In Proceedings of the IEEE International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019.

- Huang, L.; Zhao, X.; Huang, K. GOT-10k: A Large High-Diversity Benchmark for Generic Object Tracking in the Wild. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1562–1577.

- Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning Discriminative Model Prediction for Tracking. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Fan, H.; Ling, H.; Lin, L.; Yang, F.; Liao, C. LaSOT: A High-Quality Benchmark for Large-Scale Single Object Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 8–14 October 2012.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Danelljan, M.; Khan, F.S.; Felsberg, M.; Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Discriminative Scale Space Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1561–1575.

- Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010.

- Nam, H.; Han, B. Learning Multi-Domain Convolutional Neural Networks for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Song, Y.; Chao, M.; Gong, L.; Zhang, J.; Yang, M.H. CREST: Convolutional Residual Learning for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate Tracking by Overlap Maximization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Huang, L.; Zhao, X.; Huang, K. GlobalTrack: A Simple and Strong Baseline for Long-Term Tracking. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Danelljan, M.; Gool, L.V.; Timofte, R. Probabilistic Regression for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016.

- Bo, L.; Yan, J.; Wei, W.; Zheng, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Dong, X.; Shen, J. Triplet Loss in Siamese Network for Object Tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P. Fast Online Object Tracking and Segmentation: A Unifying Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y. SiamFC++: Towards Robust and Accurate Visual Tracking with Target Estimation Guidelines. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese Fully Convolutional Classification and Regression for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Zhang, Z.; Peng, H.; Fu, J. Ocean: Object-aware Anchor-free Tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Yu, X.; Yu, Q.; Shang, Y.; Zhang, H. Dense Structural Learning for Infrared Object Tracking at 200+ Frames per Second. Pattern Recognit. Lett. 2017, 100, 152–159.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; He, Z. The Visual Object Tracking VOT2017 Challenge Results. In Proceedings of the IEEE International Conference on Computer Vision Workshop (ICCVW), Venice, Italy, 22–29 October 2017.

- Kristan, M.; Matas, J.; Leonardis, A.; Vojíř, T.; Pflugfelder, R.; Fernández, G.; Nebehay, G.; Porikli, F. A Novel Performance Evaluation Methodology for Single-Target Trackers. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2137–2155.

- Liu, Q.; Lu, X.; He, Z.; Zhang, C.; Chen, W. Deep Convolutional Neural Networks for Thermal Infrared Object Tracking. Knowl.-Based Syst. 2017, 134, 189–198.

- Liu, Q.; Li, X.; He, Z.; Fan, N.; Liang, Y. Multi-Task Driven Feature Models for Thermal Infrared Tracking. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

- Cvejic, N.; Nikolov, S.G.; Knowles, H.D.; Loza, A.; Canagarajah, C.N. The Effect of Pixel-Level Fusion on Object Tracking in Multi-Sensor Surveillance Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Schnelle, S.R.; Chan, A.L. Enhanced Target Tracking through Infrared-Visible Image Fusion. In Proceedings of the IEEE International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011.

- Li, L.; Li, C.; Tu, Z.; Tang, J. A Fusion Approach to Grayscale-Thermal Tracking with Cross-Modal Sparse Representation. In Proceedings of the 13th Conference on Image and Graphics Technologies and Applications, IGTA 2018, Beijing, China, 8–10 April 2018.

- Li, C.; Sun, X.; Wang, X.; Zhang, L.; Tang, J. Grayscale-Thermal Object Tracking via Multitask Laplacian Sparse Representation. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 673–681.

- Li, C.; Zhu, C.; Yan, H.; Jin, T.; Liang, W. Cross-Modal Ranking with Soft Consistency and Noisy Labels for Robust RGB-T Tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Yi, W.; Blasch, E.; Chen, G.; Li, B.; Ling, H. Multiple Source Data Fusion via Sparse Representation for Robust Visual Tracking. In Proceedings of the IEEE International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011.

- Liu, H.P.; Sun, F.C. Fusion Tracking in Color and Infrared Images Using Joint Sparse Representation. Sci. China Inf. Sci. 2012, 55, 590–599.

- Li, C.; Zhao, N.; Lu, Y.; Zhu, C.; Tang, J. Weighted Sparse Representation Regularized Graph Learning for RGB-T Object Tracking. In Proceedings of the ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1856–1864.

- Li, C.; Zhu, C.; Zhang, J.; Luo, B.; Wu, X.; Tang, J. Learning Local-Global Multi-Graph Descriptors for RGB-T Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 2913–2926.

- Ding, M.; Yao, Y.; Wei, L.; Cao, Y. Visual Tracking Using Locality-Constrained Linear Coding and Saliency Map for Visible Light and Infrared Image Sequences. Signal Process. Image Commun. 2018, 68, 13–25.

- Zhai, S.; Shao, P.; Liang, X.; Wang, X. Fast RGB-T Tracking via Cross-Modal Correlation Filters. Neurocomputing 2019, 334, 172–181.

- Wang, Y.; Li, C.; Tang, J. Learning Soft-Consistent Correlation Filters for RGB-T Object Tracking. In Proceedings of the Pattern Recognition and Computer Vision, Guangzhou, China, 23–26 November 2018.

- Luo, C.; Sun, B.; Yang, K.; Lu, T.; Yeh, W. Thermal Infrared and Visible Sequences Fusion Tracking Based on a Hybrid Tracking Framework with Adaptive Weighting Scheme. Infrared Phys. Technol. 2019, 99, 265–276.

- Yun, X.; Sun, Y.; Yang, X.; Lu, N. Discriminative Fusion Correlation Learning for Visible and Infrared Tracking. Math. Probl. Eng. 2019, 1, 1–11.

- Wang, C.; Xu, C.; Cui, Z.; Zhou, L.; Zhang, T.; Zhang, X. Cross-Modal Pattern-Propagation for RGB-T Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Li, C.; Liu, L.; Lu, A.; Ji, Q.; Tang, J. Challenge-Aware RGBT Tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Zhang, H.; Zhang, L.; Zhuo, L.; Zhang, J. Object Tracking in RGB-T Videos Using Modal-Aware Attention Network and Competitive Learning. Sensors 2020, 20, 393.

- Zhang, X.; Xiao, G.; Ye, P.; Qiao, D.; Peng, S. Object Fusion Tracking Based on Visible and Infrared Images Using Fully Convolutional Siamese Networks. In Proceedings of the 2019 22nd International Conference on Information Fusion, Ottawa, ON, Canada, 2–5 July 2019.

- Zhang, X.; Ye, P.; Liu, J.; Gong, K.; Xiao, G. Decision-Level Visible and Infrared Fusion Tracking via Siamese Networks. In Proceedings of the IEEE International Conference on Information Fusion, Ottawa, ON, Canada, 2–5 July 2019.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Gao, Y.; Li, C.; Zhu, Y.; Tang, J.; Wang, F. Deep Adaptive Fusion Network for High Performance RGBT Tracking. In Proceedings of the IEEE International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019.

- Yang, R.; Zhu, Y.; Wang, X.; Li, C.; Tang, J. Learning Target-Oriented Dual Attention for Robust RGB-T Tracking. In Proceedings of the IEEE International Conference on Image Processing, Taipei, Taiwan, 22–25 September 2019.

- Zhu, Y.; Li, C.; Luo, B.; Tang, J.; Wang, X. Dense Feature Aggregation and Pruning for RGBT Tracking. In Proceedings of the ACM International Conference on Multimedia, New York, NY, USA, 21–25 October 2019; pp. 465–472.

- Liu, Q.; Li, X.; He, Z.; Li, C.; Zheng, F. LSOTB-TIR: A Large-Scale High-Diversity Thermal Infrared Object Tracking Benchmark. In Proceedings of the ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

| RGB-T Tracker Name | EAO on the Public Dataset VOT-RGBT2019 | EAO on the Sequestered Dataset | Final Ranking in the VOT-RGBT2019 Challenge |
|---|---|---|---|
| mfDiMP [5] | 0.3879 | 0.2347 | 1 |
| siamDW_T [6] | 0.3925 | 0.2143 | 2 |
| MANet [7] | 0.3436 | 0.2041 | 3 |
| JMMAC [8] | 0.4826 | 0.2037 | 4 |
| FSRPN [9] | 0.3553 | 0.1873 | 5 |
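
The gap between the public and sequestered EAO columns in the VOT-RGBT2019 table can be made explicit with a little arithmetic. The sketch below copies the EAO values from that table and computes, for each tracker, the relative drop from the public to the sequestered score. The helper name `relative_drop` and the choice of a relative (rather than absolute) difference are assumptions made for illustration, not a metric defined in the paper.

```python
# EAO values copied from the VOT-RGBT2019 table: (public, sequestered).
eao = {
    "mfDiMP": (0.3879, 0.2347),
    "siamDW_T": (0.3925, 0.2143),
    "MANet": (0.3436, 0.2041),
    "JMMAC": (0.4826, 0.2037),
    "FSRPN": (0.3553, 0.1873),
}


def relative_drop(public, sequestered):
    """Fraction of the public EAO that is lost on the sequestered dataset."""
    return (public - sequestered) / public


drops = {name: relative_drop(p, s) for name, (p, s) in eao.items()}

# Print trackers from the smallest to the largest relative drop.
for name, drop in sorted(drops.items(), key=lambda item: item[1]):
    print(f"{name:10s} {drop:.1%}")
```

In these numbers, JMMAC has the highest public EAO but also the largest relative drop on the sequestered data, while mfDiMP has the smallest drop, which matches its first place in the final ranking.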

| Reference | Year | Journal/Conference | Category |
|---|---|---|---|
| [38] | 2007 | IEEE Conference on Computer Vision and Pattern Recognition | Traditional method |
| [39] | 2011 | IEEE International Conference on Information Fusion | Traditional method |
| [40] | 2018 | Conference on Image and Graphics Technologies and Applications | Sparse representation (SR)-based |
| [41] | 2017 | IEEE Transactions on Systems, Man, and Cybernetics: Systems | Sparse representation (SR)-based |
| [42] | 2018 | European Conference on Computer Vision | Sparse representation (SR)-based |
| [43] | 2011 | IEEE International Conference on Information Fusion | Sparse representation (SR)-based |
| [44] | 2012 | Science China Information Sciences | Sparse representation (SR)-based |
| [45] | 2017 | ACM International Conference on Multimedia | Graph-based |
| [46] | 2019 | IEEE Transactions on Circuits and Systems for Video Technology | Graph-based |
| [47] | 2018 | Signal Processing: Image Communication | Graph-based |
| [48] | 2019 | Neurocomputing | Correlation filter (CF)-based |
| [49] | 2018 | Pattern Recognition and Computer Vision | Correlation filter (CF)-based |
| [50] | 2019 | Infrared Physics & Technology | Correlation filter (CF)-based |
| [51] | 2019 | Mathematical Problems in Engineering | Correlation filter (CF)-based |
| [52] | 2020 | IEEE Conference on Computer Vision and Pattern Recognition | Deep learning (DL)-based |
| [53] | 2020 | European Conference on Computer Vision | Deep learning (DL)-based |
| [54] | 2020 | Sensors | Deep learning (DL)-based |
| [55] | 2019 | IEEE International Conference on Image Processing | Deep learning (DL)-based |
| [56] | 2019 | ACM International Conference on Multimedia | Deep learning (DL)-based |

| Attribute | CMR [42] | DAPNet [61] | SGT [45] | DAFNet [59] | CEDiMP (Ours) |
|---|---|---|---|---|---|
| NO | 89.5/61.6 | 90.0/64.4 | 87.7/55.5 | 90.0/63.6 | 88.1/65.9 |
| PO | 77.7/53.5 | 82.1/57.4 | 77.9/51.3 | 85.9/58.8 | 87.1/60.5 |
| HO | 56.3/37.7 | 66.0/45.7 | 59.2/39.4 | 68.6/45.9 | 69.8/46.1 |
| LI | 74.2/49.8 | 77.5/53.0 | 70.5/46.2 | 81.2/54.2 | 82.5/55.1 |
| LR | 68.7/42.0 | 75.0/51.0 | 75.1/47.6 | 81.8/53.8 | 78.8/53.2 |
| TC | 67.5/44.1 | 76.8/54.3 | 76.0/47.0 | 81.1/58.3 | 81.4/55.0 |
| DEF | 66.7/47.2 | 71.7/51.8 | 68.5/47.4 | 74.1/51.5 | 73.1/52.9 |
| FM | 61.3/38.2 | 67.0/44.3 | 67.7/40.2 | 74.0/46.5 | 75.4/48.2 |
| SV | 71.0/49.3 | 78.0/54.2 | 69.2/43.4 | 79.1/54.4 | 78.2/55.8 |
| MB | 60.0/42.7 | 65.3/46.7 | 64.7/43.6 | 70.8/50.0 | 72.1/50.6 |
| CM | 62.9/44.7 | 66.8/47.4 | 66.7/45.2 | 72.3/50.6 | 72.5/52.1 |
| BC | 63.1/39.7 | 71.7/48.4 | 65.8/41.8 | 79.1/49.3 | 80.1/51.2 |
| ALL | 71.1/48.6 | 76.6/53.7 | 72.0/47.2 | 79.6/54.4 | 80.8/56.1 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, L.; Zhu, M.; Ren, H.; Xue, L. Channel Exchanging for RGB-T Tracking. Sensors 2021, 21, 5800. https://doi.org/10.3390/s21175800
