1. Introduction

This paper is an attempt to initiate the construction of an abstract theory of sensor management, in the hope that it will help to provide both a theoretical underpinning for the solution of practical problems and insights for future work. A key component of sensor management is the amount of information a sensor in a given configuration can gain from a measurement, and how that information gain changes with the configuration of the sensor. In this vein, it is interesting to observe how information theoretic surrogates have been used in a range of applications as objective functions for sensor management; see, for instance, [1, 2, 3]. Our aim here is to abstract from various papers, including these, those by Kershaw and Evans [4] on sensor management, and others, the mathematical principles required for this theory. Our approach is set within the mathematical context of differential geometry and we aim to show that geodesics on a particular manifold provide the theoretical best-possible sensor reconfiguration in the same way that the Cramér–Rao lower bound provides a minimum variance for an estimator.

The problem of estimation is expressed in terms of a likelihood; that is, a probability density $p(x|\theta)$, where $x$ denotes the measurement and $\theta$ the parameter to be estimated. It is well known [5] that the Fisher information associated with this likelihood provides a measure of information gained from the measurement. This in turn leads to the Fisher–Rao metric [6, 7] on the parameter space. This Riemannian metric lies at the heart of our discussion.

While the concepts discussed here are, in some sense, generic and can be applied to any sensor system that has the capability to modify its characteristics, for simplicity and to keep in mind a motivating example, we focus on a particular problem: that of localization of a target. Of course, a sensor system is itself just a (more complex) aggregate sensor, but it will be convenient, for the particular problems we will discuss, to assume a discrete collection of disparate (at least in terms of location) sensors that together provide measurements of aspects of the location of the target. This distributed collection of sensors, each drawing measurements that provide partial information about the location of a target, using known likelihoods, defines a particular sensor configuration state. As the individual sensors move, they change their sensing characteristics and thereby the collective Fisher information associated with estimation of target location. As already stated, the Fisher information matrix defines a metric, the Fisher–Rao metric, over the physical space where the target resides, or in more generality a metric over the parameter space in which the estimation process takes place, and this metric is a function of the location of the sensors. This observation permits analysis of the information content of the system, as a function of sensor parameters, in the framework of differential geometry (“information geometry”) [8, 9, 10, 11]. A considerable literature is dedicated to the problem of optimizing the configuration so as to maximize information retrieval [1, 12, 13, 14]. The mathematical machinery of information geometry has led to advances in several signal processing problems, such as blind source separation [15], gravitational wave parameter estimation [16], and dimensionality reduction for image retrieval [17] or shape analysis [18].

In sensor management/adaptivity applications, the performance of the sensor configuration (in terms of some function of the Fisher information) becomes a cost associated with finding the optimal sensor configuration, and tuning the metric by changing the configuration serves to optimize this cost. Literally hundreds of papers, going back to the seminal work of [4] and perhaps beyond, have used the Fisher information as a measure of sensor performance. In this context, parametrized families of Fisher–Rao metrics arise (e.g., [19, 20]). Sensor management then becomes the problem of choosing an optimal metric (based on the value of the estimated parameter), from among a family of such metrics, to permit the acquisition of the maximum amount of information about that parameter.

As we have stated, the motivating example of this paper is that of estimating the location of a target using measurements from mobile sensors (cf. [21, 22]). The information content of the system depends both on the location of the target and on the spatial locations of the sensors, because the covariance of measurements is sensitive to the distances and angles made between the sensors and the target. As the sensors move in space, the associated likelihoods vary, as do the resulting Fisher matrices, which describe the information content of the system for every possible sensor arrangement. It is this interaction between sensors and target that this paper sets out to elucidate from an information geometric viewpoint.

Perhaps the key extra idea in this paper is the observation by Gil-Medrano and Michor that the collection of all Riemannian metrics on a Riemannian manifold itself admits the structure of an infinite-dimensional Riemannian manifold [23, 24]. Of interest to us is only the subset of Riemannian metrics corresponding to Fisher informations of available sensor configurations, and this allows us to restrict attention to a finite-dimensional sub-manifold of the manifold of metrics, called the sensor manifold [12, 13]. In particular, a continuous adjustment of the sensor configuration, say by moving one of the individual sensors, results in a continuous change in the associated Fisher metric and so a movement in the sensor manifold.

Though computationally difficult, the idea of regarding the Fisher–Rao metric as a measure of performance of a given sensor configuration and then understanding variation in sensor configuration in terms of the manifold of such metrics is a powerful concept. It permits questions concerning optimal target trajectories, as discussed here, to minimize information passed to the sensors and, as will be discussed in a subsequent paper, optimal sensor trajectories to maximize information gleaned about the target. In particular, we remark that the metric on the space of Riemannian metrics that appears naturally in a mathematical context in [23, 24] also has a natural interpretation in a statistical context.

Our aim here is to further develop the information geometric view of sensor configuration begun in [12, 13]. While the systems discussed are simple and narrow in focus, already they point to concepts of information collection that appear to be new. Specifically, we set up the target location problem in an information geometric context and show that the optimal (in a manner to be made precise in Section 3) sensor trajectories, in a physical sense, are determined by solving the geodesic equations on the configuration manifold (Section 4). Various properties of geodesics on this space are derived, and the mathematical machinery is demonstrated using concrete physical examples (Section 5).

2. Divergences and Metrics

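Here, we discuss some generalities on statistical divergences and metrics. The two divergences of interest to us are the Kullback–Leibler divergence and the Jensen–Shannon divergence. These are defined by

$$D_{\mathrm{KL}}(p \,\|\, q) = \int p(x) \log \frac{p(x)}{q(x)} \, dx, \qquad D_{\mathrm{JS}}(p \,\|\, q) = \tfrac{1}{2} D_{\mathrm{KL}}\!\left(p \,\Big\|\, \tfrac{p+q}{2}\right) + \tfrac{1}{2} D_{\mathrm{KL}}\!\left(q \,\Big\|\, \tfrac{p+q}{2}\right),$$

where $p$ and $q$ are two probability densities on some underlying measure space.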

In our case, the densities $p$ and $q$ are taken from a single parametrized family $p(\cdot|\theta)$, where $\theta$ is in a manifold $M$. More specifically, the parameter $\theta \in M$ is the location of the target. A justification for the Kullback–Leibler divergence is that, in this context, given a measurement $x$ of a target at $\theta$, the likelihood that the same measurement could have been obtained from a target at $\theta'$ rather than at $\theta$ is given by the log odds expression

$$\log \frac{p(x|\theta)}{p(x|\theta')},$$

and the average over all measurements is, by definition, the Kullback–Leibler divergence [25], $D_{\mathrm{KL}}\big(p(\cdot|\theta) \,\|\, p(\cdot|\theta')\big)$.

Each of the Kullback–Leibler and Jensen–Shannon divergences is regarded as a measure of information difference between the two densities. They are both non-negative and share the property that $D(p \,\|\, q) = 0$ implies that $p = q$ almost everywhere. The Kullback–Leibler divergence is related to mutual information, as is, somewhat more circuitously, the Jensen–Shannon divergence. We refer the reader to Section 17.1 of [5] for a discussion of this connection for the Kullback–Leibler divergence, and to [26] for a brief discussion of the Jensen–Shannon divergence.
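The properties just listed can be checked on a small discrete example. The following is a minimal sketch, assuming finite probability vectors in place of densities and the equal-weight form of the Jensen–Shannon divergence; the function names and the example vectors are illustrative choices, not taken from the paper.

```python
import math

def kl_divergence(p, q):
    """Discrete Kullback-Leibler divergence sum_i p_i log(p_i / q_i), in nats.

    Assumes q_i > 0 wherever p_i > 0; terms with p_i = 0 contribute zero.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def js_divergence(p, q):
    """Equal-weight Jensen-Shannon divergence: average KL to the mean distribution."""
    mean = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, mean) + 0.5 * kl_divergence(q, mean)

# Two illustrative distributions on three outcomes.
p = [0.5, 0.3, 0.2]
q = [0.2, 0.5, 0.3]

kl_pq = kl_divergence(p, q)   # not equal to kl_qp: KL is asymmetric
kl_qp = kl_divergence(q, p)
js_pq = js_divergence(p, q)   # equal to js_qp: JS is symmetric
js_qp = js_divergence(q, p)
```

Both quantities vanish when the two arguments coincide, but only the Jensen–Shannon divergence is symmetric in its arguments, which is the property used later when increments of information are defined.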

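Importantly for us, as $\theta' \to \theta$, the first non-zero term in the series expansion is second order, viz.

$$D_{\mathrm{KL}}\big(p(\cdot|\theta) \,\|\, p(\cdot|\theta')\big) = \tfrac{1}{2} (\theta' - \theta)^{T} g(\theta) \, (\theta' - \theta) + O\big(\|\theta' - \theta\|^{3}\big),$$

where $g(\theta)$ is an $n \times n$ symmetric matrix, and $n$ is the dimension of the manifold $M$.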

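This location-dependent matrix defines a Riemannian metric over $M$, the Fisher information metric [6, 7]. It can also be calculated, under mild conditions, as the expectation of the tensor product of the gradients of the log-likelihood $\ell(\theta) = \log p(x|\theta)$ as

$$g(\theta) = \mathbb{E}_{x \sim p(\cdot|\theta)}\big[\nabla_{\theta} \ell(\theta) \otimes \nabla_{\theta} \ell(\theta)\big].$$

This is an example of a more general structure encapsulated by the following: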

Theorem 1. Given a likelihood and a divergence then there is a unique largest metric d on M such that

The relationship between this metric and information is explored in the next section. A proof of the theorem appears in Appendix A.
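The second-order relationship between the Kullback–Leibler divergence and the Fisher information described in this section can be checked numerically on a one-parameter family. The sketch below assumes a Bernoulli family, chosen only because both quantities have closed forms; the family, the value of the parameter, and the step size are illustrative and do not come from the paper.

```python
import math

def bernoulli_kl(theta, theta_prime):
    """KL divergence between Bernoulli(theta) and Bernoulli(theta_prime), in nats."""
    return (theta * math.log(theta / theta_prime)
            + (1.0 - theta) * math.log((1.0 - theta) / (1.0 - theta_prime)))

def bernoulli_fisher(theta):
    """Fisher information as the expected squared score of the log-likelihood."""
    score_one = 1.0 / theta             # d/dtheta log(theta), outcome x = 1
    score_zero = -1.0 / (1.0 - theta)   # d/dtheta log(1 - theta), outcome x = 0
    return theta * score_one ** 2 + (1.0 - theta) * score_zero ** 2

theta, delta = 0.3, 1e-3
fisher = bernoulli_fisher(theta)

# If KL ~ (1/2) * g * delta^2 for small delta, then 2 * KL / delta^2 should approach g.
second_order_estimate = 2.0 * bernoulli_kl(theta, theta + delta) / delta ** 2
relative_gap = abs(second_order_estimate - fisher) / fisher
```

The remaining gap between the two numbers is a third-order effect and shrinks in proportion to the step size.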

3. The Information in Sensor Measurements

Sensor measurements, as considered in this paper, can be formulated as follows. Suppose we have, in a fixed manifold

M, a collection of

N sensors located at

. For instance, the manifold may be

and location may just mean that in the usual sense in Euclidean space. The measurements from these sensors are used to estimate the location of a target

also in

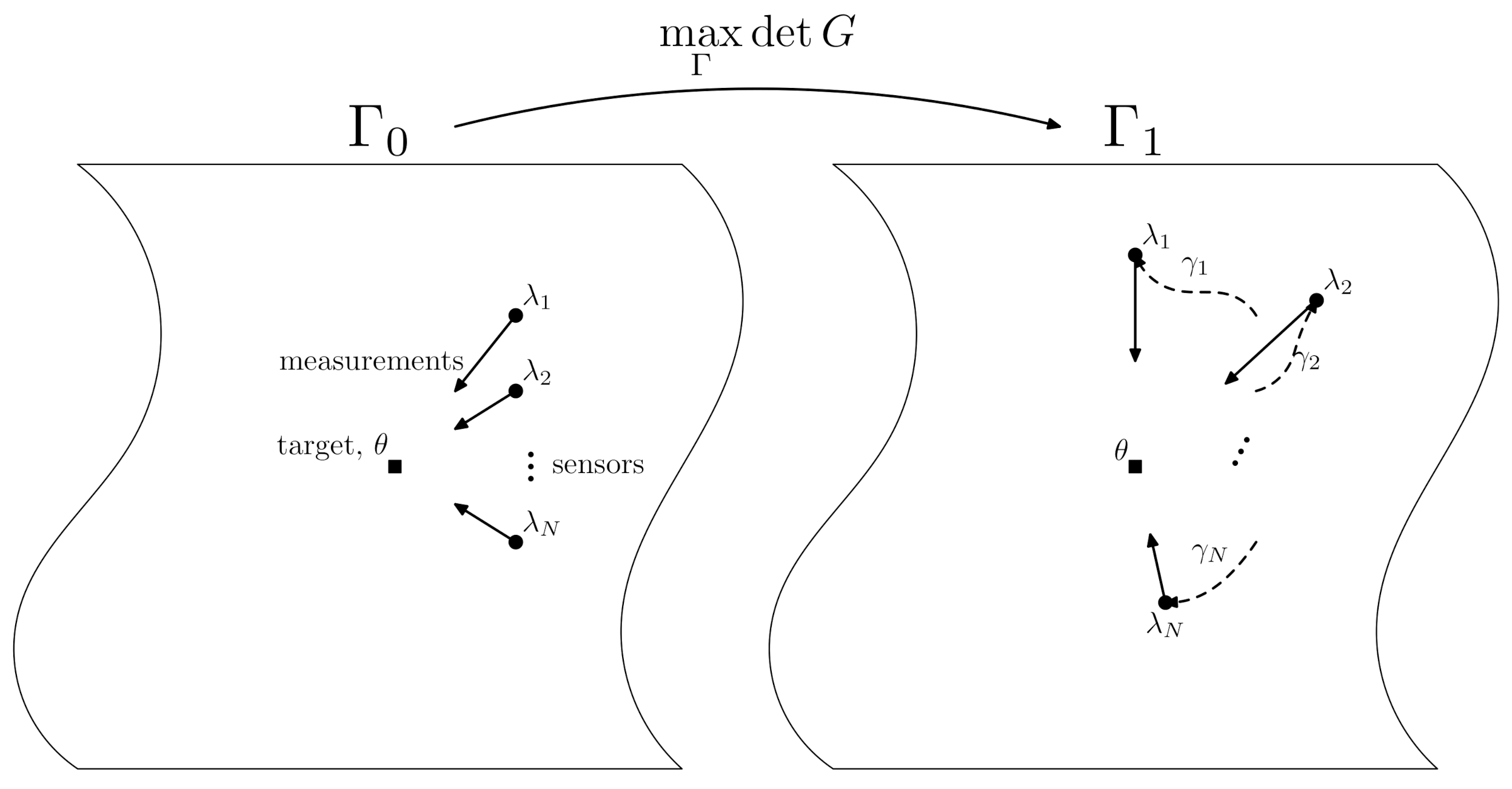

M (see the left of

Figure 1). Each sensor draws measurements

from a distribution with probability density function (PDF)

, where

represents the state of the system we are trying to estimate and

the noise parameters of each individual sensor.

A measurement $x = (x_1, \ldots, x_N)$ is the collected set of individual measurements $x_n$ from each of the sensors, with likelihood

$$p(x|\theta) = \prod_{n=1}^{N} p_n(x_n|\theta), \tag{5}$$

where $p_n$ is the density of the $n$-th sensor. These measurements are assumed independent between sensors and over time when dynamics are included. While this assumption is probably not necessary, it allows one to define the aggregate likelihood (5) as a simple product over the individual likelihoods, which renders the problem computationally more tractable.

Since Fisher information is additive over independent measurements, the Fisher information metric provides a measure of the instantaneous change in information the sensors can obtain about the target. In this paper, we adopt a relatively simplistic view that the continuous collection of measurements as exemplified by, for instance, the Kalman–Bucy filter [27], is a limit of measurements discretized over time. Because sensor measurements depend on the relative locations of the sensors and target, this incremental change depends on the direction the target is moving; the Fisher metric (4) can naturally be expressed in coordinates that represent the sensor locations (see Section 4), but also depends on parameters that represent target location, which may be functions of time in a dynamical situation. Once the Fisher metric (4) has been evaluated, one can proceed to optimize it in an appropriate manner.

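Another divergence will be of interest in our discussions. This is the Jensen–Shannon divergence, defined in terms of the Kullback–Leibler divergence by

$$D_{\mathrm{JS}}(p \,\|\, q) = \tfrac{1}{2} D_{\mathrm{KL}}\!\left(p \,\Big\|\, \tfrac{p+q}{2}\right) + \tfrac{1}{2} D_{\mathrm{KL}}\!\left(q \,\Big\|\, \tfrac{p+q}{2}\right).$$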

3.1. D-Optimality

The information of the sensor system described in Section 3 is a matrix-valued function, and so problems of optimization of ‘total information’ with respect to the sensor parameters are not well defined. We require a definition of ‘optimal’ in an information theoretic context. Several different optimization criteria exist (e.g., [28, 29]), achieved by constructing scalar invariants from the matrix entries of $g$, and maximizing those functions in the usual way.

We adopt the notion of D-optimality in this paper; that is, we consider the maximization of the determinant of (4). Equivalently, D-optimality maximizes the differential Shannon entropy of the system with respect to the sensor parameters [30], and minimizes the volume of the elliptical confidence regions for the sensors' estimate of the location of the target $\theta$ [31].
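To make the D-optimality criterion concrete, the following sketch evaluates the determinant of a Fisher matrix over a family of two-sensor geometries. It assumes a simplified planar bearings-only model in which each sensor contributes a fixed amount of angular information; this is an illustrative stand-in and not the elevated-sensor metric derived in Section 5. The function names, the unit ranges, and the 15-degree search grid are all assumptions made for the example.

```python
import math

def planar_bearing_fisher(target, sensors, angular_information=1.0):
    """2x2 Fisher matrix for the target position from planar bearing measurements.

    Each sensor contributes angular_information * grad(bearing) grad(bearing)^T,
    and contributions add because the measurements are assumed independent.
    """
    gxx = gxy = gyy = 0.0
    for sx, sy in sensors:
        dx, dy = target[0] - sx, target[1] - sy
        r2 = dx * dx + dy * dy
        # Gradient of the bearing atan2(dy, dx) with respect to the target position.
        bx, by = -dy / r2, dx / r2
        gxx += angular_information * bx * bx
        gxy += angular_information * bx * by
        gyy += angular_information * by * by
    return [[gxx, gxy], [gxy, gyy]]

def determinant(g):
    """Determinant of a 2x2 matrix: the D-optimality objective."""
    return g[0][0] * g[1][1] - g[0][1] * g[1][0]

target = (0.0, 0.0)
fixed_sensor = (1.0, 0.0)

# Place the second sensor on the unit circle and score each candidate geometry.
scores = {}
for degrees in range(0, 181, 15):
    angle = math.radians(degrees)
    moving_sensor = (math.cos(angle), math.sin(angle))
    fisher = planar_bearing_fisher(target, [fixed_sensor, moving_sensor])
    scores[degrees] = determinant(fisher)

best_angle = max(scores, key=scores.get)
```

In this planar model the determinant is largest when the two lines of sight meet at a right angle at the target, and it vanishes when the sensors and target are collinear, since two bearings along the same line carry no range information.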

While we have focused so far on the metric as a function of target location $\theta$ on the manifold, it is also clear that sensor parameters are involved. In applying the D-optimality (or any other) criterion to this problem, we take account of the possibility that sensor locations (in the context we have described) and distributions are not fixed. Conventionally, measurements are drawn from sensors with fixed properties, with a view to estimating a parameter $\theta$. Permitting sensors to move throughout $M$ produces an infinite family of sensor configurations, and hence Fisher–Rao metrics (4), parametrized by the locations of the sensors. One aim of this structure is to move sensors to locations that serve to maximize information content, given some prior distribution for a particular $\theta$. This necessitates a tool to measure the difference between information provided by members of a family of Fisher–Rao metrics; this is explored in Section 4. We emphasize that the ideas described here will extend to situations where the sensor manifold is not identified with a copy (or several copies) of the target manifold.

3.2. Geodesics on the Sensor Manifold

We now consider the case where the target is in motion, so that $\theta$ varies along a path $\gamma(t)$. The instantaneous information gain by the sensor(s) at time $t$ is then $g(\gamma'(t), \gamma'(t))$, where $g$ is the Fisher information metric (4). We define the information provided to the sensors about the target by the measurements as the target moves along the curve as follows. Take a

-partition

of the interval

, where

(

). At each point

, a measurement is taken represented by the likelihood

. Over the interval

, the increment in information could be

but this does not make sense for an increment as it is not symmetric. Instead, we should calculate the information gain from the endpoints of the interval to the mean distribution

This is also known as the Jensen–Shannon divergence,

. Based on the assumption that measurements taken over time are all independent, as the target traverses a curve

, the total information

gained measuring at the

-partition,

T is

Letting

go to zero gives us an information functional,

, defined on paths,

,

which is the equivalent of the energy functional in differential geometry (e.g., Chapter 9 of [32]). This has the same extremal paths as

, the arc-length of the path

,

Paths with extremal values of this length are geodesics, and these can be interpreted as the evasive action that can be taken by the target to minimize the amount of information it gives to the sensors.

3.3. Kinematic Conditions on Information Management

While the curves that are extrema of the information functional and of arc-length are the same as sets, a geodesic only minimizes the information functional if traversed at a specific speed, namely,

In differential geometric terms, this is equivalent to requiring the arc-length parameterization of the geodesic to fulfill the energy condition. In order to minimize information about its location, the target should move along a geodesic of $g$ at exactly the speed (10). This quantifies directly the relation between the speed of the target and the ability to identify its location.
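The difference between the two functionals can be seen in a toy computation. The sketch below assumes a flat metric and takes the energy to be the integral of $g(\gamma', \gamma')$ over a unit time interval, which may differ from the information functional above by a constant factor; the two parametrizations and all names are illustrative. Both curves trace the same straight segment, so they have the same length, but only the constant-speed traversal attains the smaller energy.

```python
import math

def length_and_energy(path, metric, steps=1000):
    """Riemann-sum approximations of arc length and energy of a path on [0, 1].

    path(t) returns a point (x, y); metric(point) returns a 2x2 matrix g.
    """
    dt = 1.0 / steps
    length = 0.0
    energy = 0.0
    for i in range(steps):
        p0, p1 = path(i * dt), path((i + 1) * dt)
        midpoint = ((p0[0] + p1[0]) / 2.0, (p0[1] + p1[1]) / 2.0)
        g = metric(midpoint)
        dx, dy = p1[0] - p0[0], p1[1] - p0[1]
        # Squared length of the increment in the metric g, i.e. g(d, d).
        quad = g[0][0] * dx * dx + 2.0 * g[0][1] * dx * dy + g[1][1] * dy * dy
        length += math.sqrt(quad)
        energy += quad / dt
    return length, energy

def flat_metric(_point):
    """Identity metric, used here only to keep the example transparent."""
    return [[1.0, 0.0], [0.0, 1.0]]

def constant_speed(t):
    return (t, 0.0)

def uneven_speed(t):
    # Same segment from (0, 0) to (1, 0), traversed slowly at first and fast at the end.
    return (t * t, 0.0)

length_const, energy_const = length_and_energy(constant_speed, flat_metric)
length_uneven, energy_uneven = length_and_energy(uneven_speed, flat_metric)
```

For this segment the constant-speed energy is 1, while the uneven traversal gives approximately 4/3, even though the two lengths agree.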

While aspects of this speed constraint are still unclear to us, an analogy that may be useful is to regard the target as moving through a “tensorial information fluid” whose flow is given by the information metric. In this setting, moving slower relative to the fluid will result in “pressure” building behind, requiring information (energy) to be expended to maintain the slower speed. Moving faster also requires more information to push through the slower moving fluid. This information is directly available to the sensors, resulting in an improved target position estimate.

In the fluid dynamics analogy, the energy expended in moving through a fluid is proportional to the square of the difference in speed between the fluid and the object. The local energy is proportional to the difference between actual speed and the speed desired by the geodesic; that is, the speed that minimizes the energy functional. Pursuing the fluid dynamics analogy, the density of the fluid mediates the relationship between the energy and the relative speed.

In particular, the scalar curvature, which depends on

G, influences the energy and hence the information flow. We will explore this issue further in a future publication.

4. The Information of Sensor Configurations

A sensor configuration is a set of sensor parameters. The Fisher–Rao metric $g$ can be viewed as a function of the sensor configuration as well as of $\theta$, the location of the target. To calculate the likelihood that a measurement came from one configuration over another requires the likelihood to be evaluated at $\theta$, which is difficult as the value of $\theta$ is not known exactly. Measurements can be used to construct an estimate $\hat{\theta}$. However, the distribution of this estimate is hard to quantify and even harder to calculate. Instead, here, the maximum entropy distribution is used. This is normally distributed with mean $\hat{\theta}$ and covariance $g^{-1}(\hat{\theta})$, the inverse of the Fisher information metric at the estimated target location.

The information gain due to the sensor configuration

is now

because there was no prior information about the location before the sensors were configured compared with the maximum entropy distribution

$p$ after. Note that the uniform distribution, 1, is, in general, an improper prior in the Bayesian sense unless the manifold

M is of finite volume. It may be necessary, therefore, to restrict attention to some (compact) submanifold

for

to be well defined (cf. the discussion below Equation (

12)).

Evaluating this information gain gives

The Fisher information metric

G for this divergence can be calculated from

where

$h$ and $k$ are tangent vectors to the space of metrics. The integral defining (12) may not converge for non-compact $M$, so restriction to a compact submanifold of $M$ is assumed throughout as necessary (cf. Figure 1).

4.1. The Manifold of Riemannian Metrics

The set of all Riemannian metrics over a manifold $M$ can itself be imbued with the structure of an infinite-dimensional Riemannian manifold [23, 24], which we call $\mathcal{M}$. Points of $\mathcal{M}$ are Riemannian metrics on $M$; i.e., each point $g \in \mathcal{M}$ bijectively corresponds to a positive-definite, symmetric $(0,2)$-tensor field on $M$. Under reasonable assumptions, an $L^2$ metric on $\mathcal{M}$ [23, 33] may be defined as:

$$G_g(h, k) = \int_M \operatorname{tr}\big(g^{-1} h \, g^{-1} k\big) \, \operatorname{vol}(g), \tag{13}$$

which should be compared to (12).

It should be noted that the points of the manifold of metrics comprise all of the metrics that can be put on $M$, most of which are irrelevant for our physical sensor management problem. We restrict consideration to a sub-manifold consisting only of those Riemannian metrics that are members of the family of Fisher information matrices (4) corresponding to feasible sensor configurations. This particular sub-manifold is called the ‘sensor’ or ‘configuration’ manifold [12, 13]; the objects $h$ and $k$ are now elements of its finite-dimensional tangent space. The dimension of the sensor manifold is $N \dim M$, since each point of it is uniquely described by the locations of the $N$ sensors, each of which requires $\dim M$ numbers to denote its coordinates. A visual description of these spaces is given in Figure 1. For all cases considered in this paper, the integral defined in (13) is well defined and converges (see, however, the discussion in [21]).

For the purposes of computation, it is convenient to have an expression for the metric tensor components of (13) in some local coordinate system. In particular, in a given coordinate basis over the sensor manifold (not to be confused with the coordinates on $M$; see Section 4), the metric (13) reads

where

$h$ and $k$ are tangent vectors to the sensor manifold, given in coordinates by

From the explicit construction (14), all curvature quantities of the sensor manifold, such as the Riemann tensor and Christoffel symbols, can be computed.

4.2. D-Optimal Configurations

D-optimality in the context of the sensor manifold described above is discussed in this section. Suppose that the sensors are arranged in some arbitrary initial configuration. The sensors now move in anticipation of target behaviour; a prior distribution is adopted to localize a target position $\theta$. The sensors move continuously to a new configuration, determined by maximizing the determinant of $G$; i.e., the new configuration corresponds to the sensor locations for which $\det G$, computed from (14), is maximized. The physical situation is depicted graphically in Figure 2. This process can naturally be extended to the case where real measurement data are used. In particular, as measurements are drawn, a (continuously updated) posterior distribution for $\theta$ becomes available, and this can be used to update the Fisher metric (and hence the metric $G$) to define a sequence of optimal configurations; see Section 5.

4.3. Geodesics for the Configuration Manifold

While D-optimality allows us to determine where the sensors should move given some prior, it provides us with no guidance on which path the sensors should traverse, as they move through the sensor manifold, to reach their new, optimized positions.

A path from one configuration to another is a set of paths, one for each sensor, from its initial location to its final location. Varying the sensor locations is equivalent to varying the metric $g$ and the estimate of the target location $\hat{\theta}$. The information gain along such a path is then

and the extremal paths are again the geodesics of the metric $G$, by analogy with (8). The speed constraint observed earlier in Section 3.3 also applies to the sensor geodesics here, and is given by

Again, this leads to the conclusion that there are kinematic constraints on the rate of change of sensor parameters that lead to the collection of the maximum amount of information. In this case, it is the sensor using information to slow or increase the rate of change of its parameters relative to (17). That information is wasted (like a form of information heat) and results in less information available for localisation of the target and, hence, a poorer estimate of its location.

5. Configuration for Bearings-Only Sensors

To illustrate the mathematical machinery developed in the previous sections, consider first the configuration metric for two bearings-only sensors. The physical space $M$, where the sensors and the target reside, is chosen to be a square in the plane, though the sensors are ‘elevated’ at some height above the plane. Effectively, we assume an uninformative (uniform) prior over this square.

The goal is to estimate a position $\theta$ from bearings-only measurements taken by the sensors, as in previous work [12, 13]. We assume that measurements are drawn from a von Mises distribution,

where $\kappa$ is the concentration parameter, $I_r$ is the $r$th modified Bessel function of the first kind [34], and

is the location of the

$n$-th sensor in Cartesian coordinates. Note that the parameter $\kappa$, being a measure of angular concentration, means that the location error will increase and the signal-to-noise ratio will decrease as the sensors move farther away from the target.

For the choice (18), the Fisher metric (4) can be computed easily, and has components

where

is chosen as our noise parameter in what follows.

The target lives in the plane, thus

but the sensors live in a copy of the plane at height 1, i.e., we take

. This prevents singularities from emerging within (

19).

5.1. Target Geodesics

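A geodesic, $\gamma(t)$, starting at $\gamma(0)$ with initial direction $\dot{\gamma}(0)$ for a manifold with metric $g$ is the solution of the coupled second-order initial value problem for the components $\gamma^k(t)$:

$$\ddot{\gamma}^k + \Gamma^k_{ij} \, \dot{\gamma}^i \dot{\gamma}^j = 0, \tag{20}$$

where $\Gamma^k_{ij}$ are the Christoffel symbols for the metric $g$.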
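Direct integration of the geodesic equation can be sketched as follows. This is a minimal illustration, assuming a two-dimensional manifold, Christoffel symbols obtained by central finite differences of the metric, and a fixed-step fourth-order Runge–Kutta integrator; the paper does not specify its solver, and the hyperbolic half-plane metric used in the example is a standard test case rather than the bearings-only metric. All function names are hypothetical.

```python
import math

def christoffel(metric, point, h=1e-5):
    """Christoffel symbols gamma[k][i][j] of a 2D metric via central differences."""
    g = metric(point)
    det = g[0][0] * g[1][1] - g[0][1] * g[1][0]
    ginv = [[g[1][1] / det, -g[0][1] / det], [-g[1][0] / det, g[0][0] / det]]
    dg = []  # dg[l][i][j] = derivative of g_ij with respect to coordinate l
    for l in range(2):
        plus, minus = list(point), list(point)
        plus[l] += h
        minus[l] -= h
        gp, gm = metric(plus), metric(minus)
        dg.append([[(gp[i][j] - gm[i][j]) / (2.0 * h) for j in range(2)]
                   for i in range(2)])
    return [[[0.5 * sum(ginv[k][l] * (dg[i][l][j] + dg[j][l][i] - dg[l][i][j])
                        for l in range(2))
              for j in range(2)] for i in range(2)] for k in range(2)]

def geodesic_rhs(metric, state):
    """First-order form of the geodesic equation; state = [x, y, vx, vy]."""
    position, velocity = state[:2], state[2:]
    gamma = christoffel(metric, position)
    acceleration = [-sum(gamma[k][i][j] * velocity[i] * velocity[j]
                         for i in range(2) for j in range(2)) for k in range(2)]
    return list(velocity) + acceleration

def integrate_geodesic(metric, start, direction, t_end=1.0, steps=200):
    """Integrate the geodesic from start with initial velocity direction using RK4."""
    dt = t_end / steps
    state = list(start) + list(direction)
    path = [tuple(state[:2])]
    for _ in range(steps):
        k1 = geodesic_rhs(metric, state)
        k2 = geodesic_rhs(metric, [s + 0.5 * dt * k for s, k in zip(state, k1)])
        k3 = geodesic_rhs(metric, [s + 0.5 * dt * k for s, k in zip(state, k2)])
        k4 = geodesic_rhs(metric, [s + dt * k for s, k in zip(state, k3)])
        state = [s + dt * (a + 2.0 * b + 2.0 * c + d) / 6.0
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4)]
        path.append(tuple(state[:2]))
    return path

def hyperbolic_metric(point):
    """Poincare half-plane metric, whose vertical geodesics are y(t) = y0 * exp(t)."""
    y = point[1]
    return [[1.0 / y ** 2, 0.0], [0.0, 1.0 / y ** 2]]

path = integrate_geodesic(hyperbolic_metric, (0.0, 1.0), (0.0, 1.0))
x_end, y_end = path[-1]
```

Any metric supplied as a function returning a 2x2 matrix can be substituted for `hyperbolic_metric`, and varying the initial direction produces a fan of geodesics from a single starting point.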

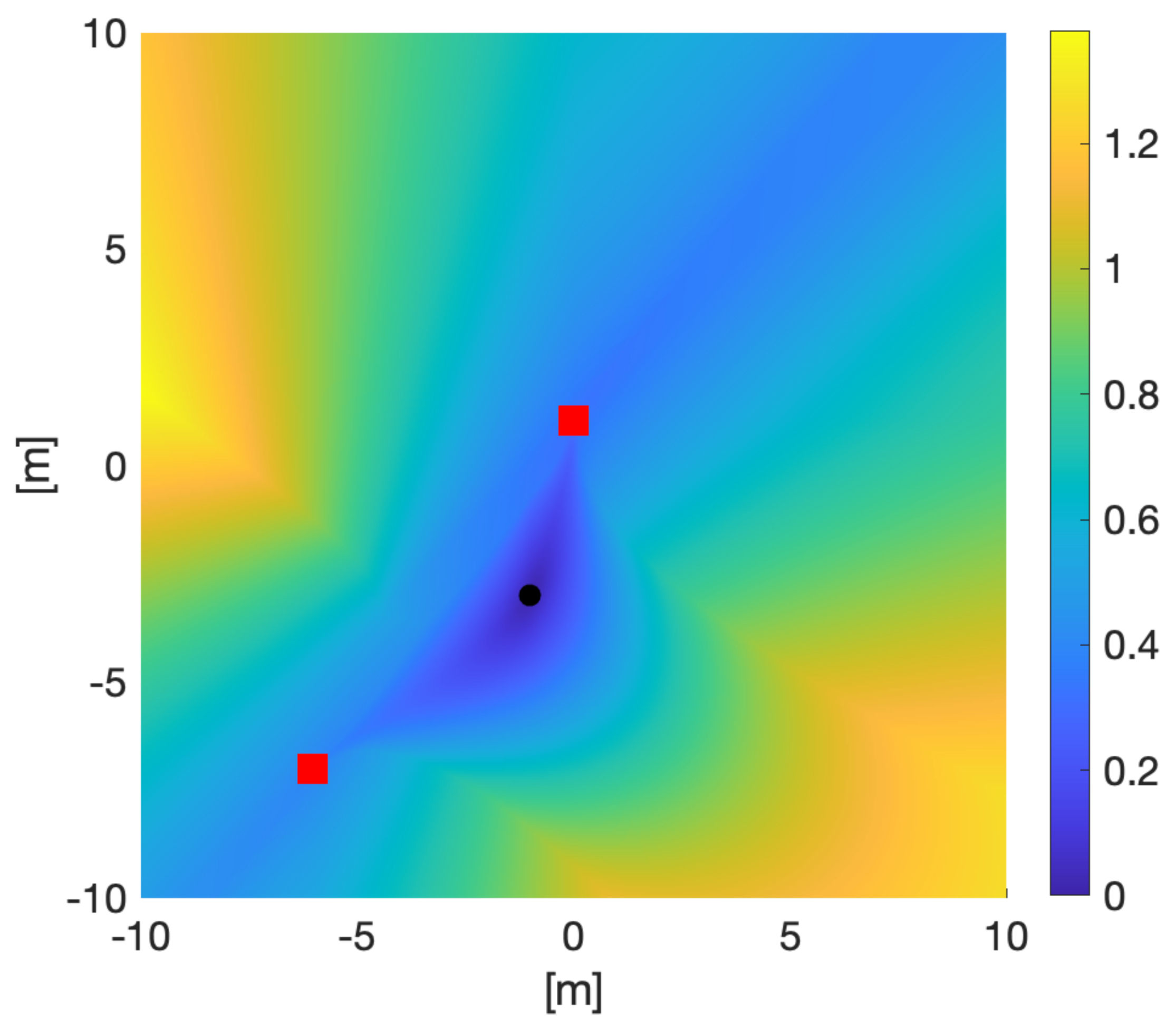

Figure 3 shows directly integrated solutions to the geodesic Equation (20) for a target at

and sensors at

and

(raised at a height 1 above

). The differing paths correspond to the initial direction vector

of the target, varying as

varies from 0 to

radians in steps of

radians.

The solutions of the geodesic equation are generated up to a final parameter value of 5. This means the length of each line corresponds to the information given away by the target. The longest line corresponds to the target forming a right angle with the sensors, which is the optimal arrangement for bearings-only sensors. The shortest geodesics occur when the target moves on the line joining the two sensors. This is usually where a location cannot be determined; this singularity is avoided in this example because the sensors are arranged above the plane inhabited by the target, but it is still the place where the location is most difficult to determine.

An alternative way to numerically compute the geodesics connecting two points on the manifold $M$ is the Fast Marching Method (FMM) [35, 36, 37]. Since the Fisher–Rao information metric is a Riemannian metric on the Riemannian manifold $M$, one can show that the geodesic distance map $u$, the geodesic distance from the initial point $\theta_0$ to a point $\theta$, satisfies the Eikonal equation

$$\sqrt{\nabla u(\theta)^{T} \, g^{-1}(\theta) \, \nabla u(\theta)} = 1, \tag{21}$$

with initial condition $u(\theta_0) = 0$. By using a mesh over the parameter space, the (isotropic or weakly anisotropic) Eikonal Equation (21) can be solved numerically by Fast Marching. The geodesic is then extracted by integrating numerically the ordinary differential equation

where

is the mesh size. The computational complexity of FMM is $O(N \log N)$, where $N$ is the total number of mesh grid points. We perform FMM on a central computational node in this paper. If the computational capability of that node is limited, a parallel version of FMM may be used instead, and we will include it in future work. For Eikonal equations with strong anisotropy, a generalized version of FMM, the Ordered Upwind Method (OUM) [38], is preferred.
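The idea of computing a geodesic distance map on a mesh can be illustrated without a full Fast Marching implementation. The sketch below swaps in Dijkstra's algorithm on an 8-connected grid graph, with edge lengths measured in the metric at the edge midpoint. This is a cruder approximation than FMM, since distances are restricted to grid and diagonal directions, but it shares the same front-propagation structure and the same priority-queue cost. The grid layout, function names, and test metrics are assumptions made for the example.

```python
import heapq
import math

def grid_geodesic_distance(metric, n, spacing, source):
    """Approximate geodesic distances from source on an n-by-n grid.

    metric(point) returns a 2x2 matrix g at the point (x, y); node (i, j)
    sits at (i * spacing, j * spacing). Returns a dict mapping nodes to distances.
    """
    moves = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    distance = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, (i, j) = heapq.heappop(heap)
        if d > distance.get((i, j), math.inf):
            continue  # stale queue entry, a shorter route was already found
        for di, dj in moves:
            ni, nj = i + di, j + dj
            if not (0 <= ni < n and 0 <= nj < n):
                continue
            midpoint = ((i + 0.5 * di) * spacing, (j + 0.5 * dj) * spacing)
            g = metric(midpoint)
            dx, dy = di * spacing, dj * spacing
            # Length of the edge in the metric g: sqrt(g(d, d)).
            step = math.sqrt(g[0][0] * dx * dx + 2.0 * g[0][1] * dx * dy
                             + g[1][1] * dy * dy)
            candidate = d + step
            if candidate < distance.get((ni, nj), math.inf):
                distance[(ni, nj)] = candidate
                heapq.heappush(heap, (candidate, (ni, nj)))
    return distance

def flat_metric(_point):
    return [[1.0, 0.0], [0.0, 1.0]]

def scaled_metric(_point):
    # Four times the flat metric: every length doubles.
    return [[4.0, 0.0], [0.0, 4.0]]

flat_distances = grid_geodesic_distance(flat_metric, 11, 0.1, (0, 0))
scaled_distances = grid_geodesic_distance(scaled_metric, 11, 0.1, (0, 0))
```

A geodesic can then be traced by descending the distance map from any end point back to the source, which mirrors the extraction step described above.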

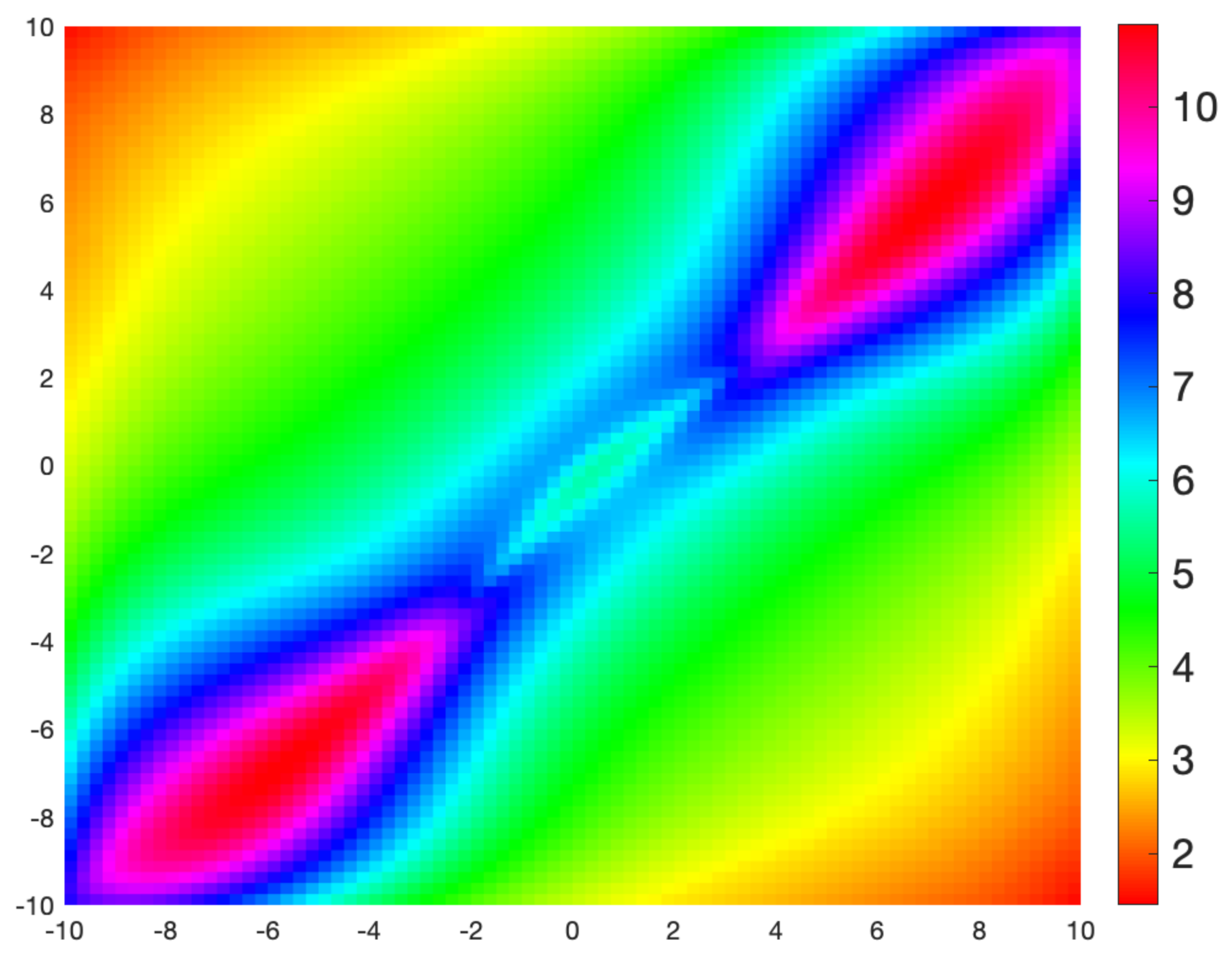

Compare Figure 3 with Figure 4, which uses a Fast Marching algorithm to calculate the geodesic distance from the same point.

Figure 5 shows the speed required along a geodesic travelling in the direction of the vector

. In the case of these bearings-only sensors, the trade-off over speed is between time-on-target and rate of change of relative angle between the sensors and the target. Travelling slowly gives the sensors more time to integrate measurements, although the change in angle is slower; the net result is a more accurate position estimate. Conversely, moving faster than the geodesic speed produces a larger change in angle but leaves less time for measurements, again with a net gain in accuracy. Only at the geodesic speed is the balance reached and the minimum information criterion achieved.

5.2. Configuration Metric Calculations

The coordinates

on

are

. The actual positions of the sensor are raised above

by a distance

. The sensor management problem amounts to, given some initial configuration, identifying a choice of

for which the determinant of (

14) is maximized, and traversing geodesics

in

, starting at the initial locations and ending at the D-optimal locations; see

Figure 2. We assume that the target location is given by an uninformative prior distribution; that is,

for all

.

To make the problem tractable, we consider a simple case where one of the sensor trajectories is fixed; that is, we consider a two-dimensional submanifold of

parameterized by the coordinates

of

only.

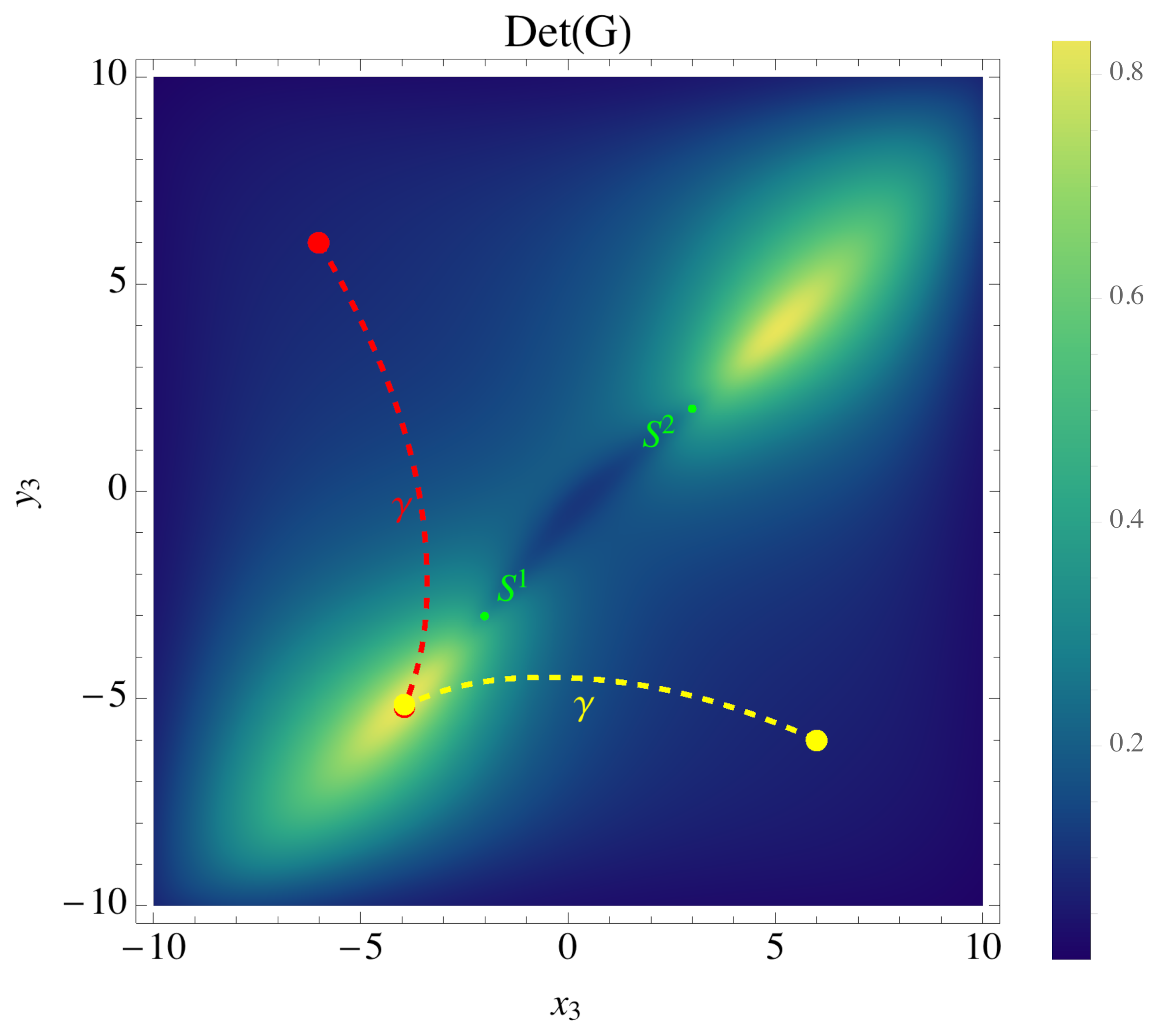

Figure 6 shows a contour plot of

, as a function of

, for the case where

moves from

(yellow dot) to

(black dot). The second sensor

begins at the point

(red dot).

Figure 6 demonstrates that, provided

moves from

to

,

is maximized at the point

, implying that

moving to

is the D-optimal choice. The geodesic path

beginning at

and ending at

is shown by the dashed yellow curve; this is the information-maximizing path of

through

. Similarly for

, the geodesic path

beginning at

and ending at

is shown by the dotted red curve.

5.3. Visualizing Configuration Geodesics

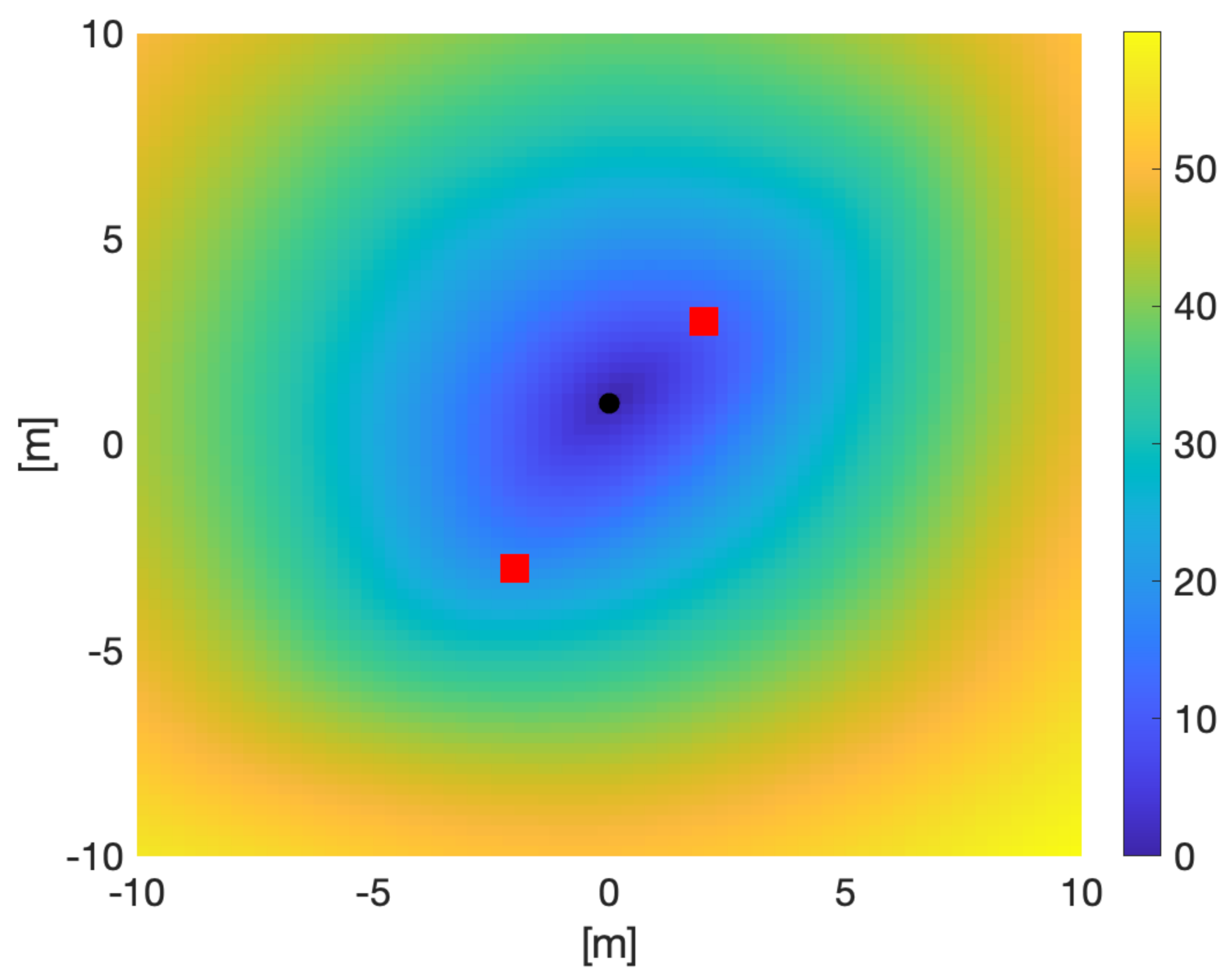

Figure 7 shows solutions to the geodesic equation on

for a sensor starting at

with the other sensor stationary at

and the target at

. The actual positions of the sensor are raised above

by a distance 1. The differing paths correspond to

varying through

in steps of

radians in the target initial direction vector

. The integration of the geodesic equation is carried to

so the length of each line corresponds to the information obtained about the target. It is clear that the longer lines correspond to the sensors forming a right-angle relationship to the target, which is optimal for bearings-only sensors. This should be compared with Figure 8, which uses a Fast Marching algorithm [39, 40] to calculate the geodesic distance from the same point.

Figure 9 shows the speed required along a geodesic travelling through each point in the direction of the vector (1,1).

6. Discussion

We consider the problem of sensor management from an information-geometric standpoint [8, 9]. A physical space houses a target, with an unknown position, and a collection of mobile sensors, each of which takes measurements with the aim of gaining information about target location [12, 13, 14]. The measurement process is parametrized by the relative positions of the sensors. For example, if one considers an array of sensors that take bearings-only measurements to the target (18), the amount of information that can be extracted regarding target location clearly depends on the angles between the sensors. In general, we illustrate that in order to optimize the amount of information the sensors can obtain about the target, the sensors should move to positions which maximize the norm of the volume form (‘D-optimality’) on a particular manifold imbued with a metric (14) which measures the distance (information content difference) between Fisher matrices [30, 31]. We also show that, if the sensors move along geodesics (with respect to (14)) to reach the optimal configuration, the amount of information that they give away to the target is minimized. This paves the way for (future) discussions about game-theoretic scenarios where both the target and the sensors are competitively trying to acquire information about one another from stochastic measurements; see, e.g., [41, 42] for a discussion on such games. Differential games along these lines will be addressed in forthcoming work.

It is important to note that while the measurements from sensors may be discrete and subject to sampling limits, the underlying model of target and sensor parameters is continuous. We have introduced two discretisations of our manifold to calculate approximations to our optimal reconfiguration paths and to enable some helpful visualisations. The explicit solution of the geodesic equation in Figure 3 uses a numerical differential equation solver on a coordinate patch over the sensor manifold. The Fast Marching visualisation of Figure 4 uses a meshgrid over the same coordinate patch. Future work on optimal control of sensor parameters and game theoretic competitions between the information demands of sensors and targets may require more sophisticated discretisations, which are available in the literature [43, 44].

We hope that this work may eventually have realistic applications to signal processing problems involving parameter estimation using sensors. We have demonstrated that there is a theoretical way of choosing sensor positions, velocities, and possibly other parameters in an optimal manner so that the maximum amount of useful data can be harvested from a sequence of measurements taken by the sensors. For example, with sensors that take continuous or discrete measurements, this potentially allows one to design a system that minimizes the expected amount of time taken to localize (with some given precision) the position of a target. If the sensors move along paths that are geodesic with respect to (14), then the target, in some sense, learns the least about its trackers. This allows the sensors to prevent either intentional or unintentional evasive manoeuvres, a unique aspect of information-geometric considerations. Ultimately, these ideas may lead to improvements on search or tracking strategies available in the literature, e.g., [45, 46]. Though we have only considered simple sensor models in this paper, the machinery can, in principle, be adapted to systems of arbitrary complexity. It would certainly be worth testing the theoretical ideas presented in this paper experimentally using various sensor setups.