Non-Invasive Driver Drowsiness Detection System

Abstract

1. Introduction

2. Related Work

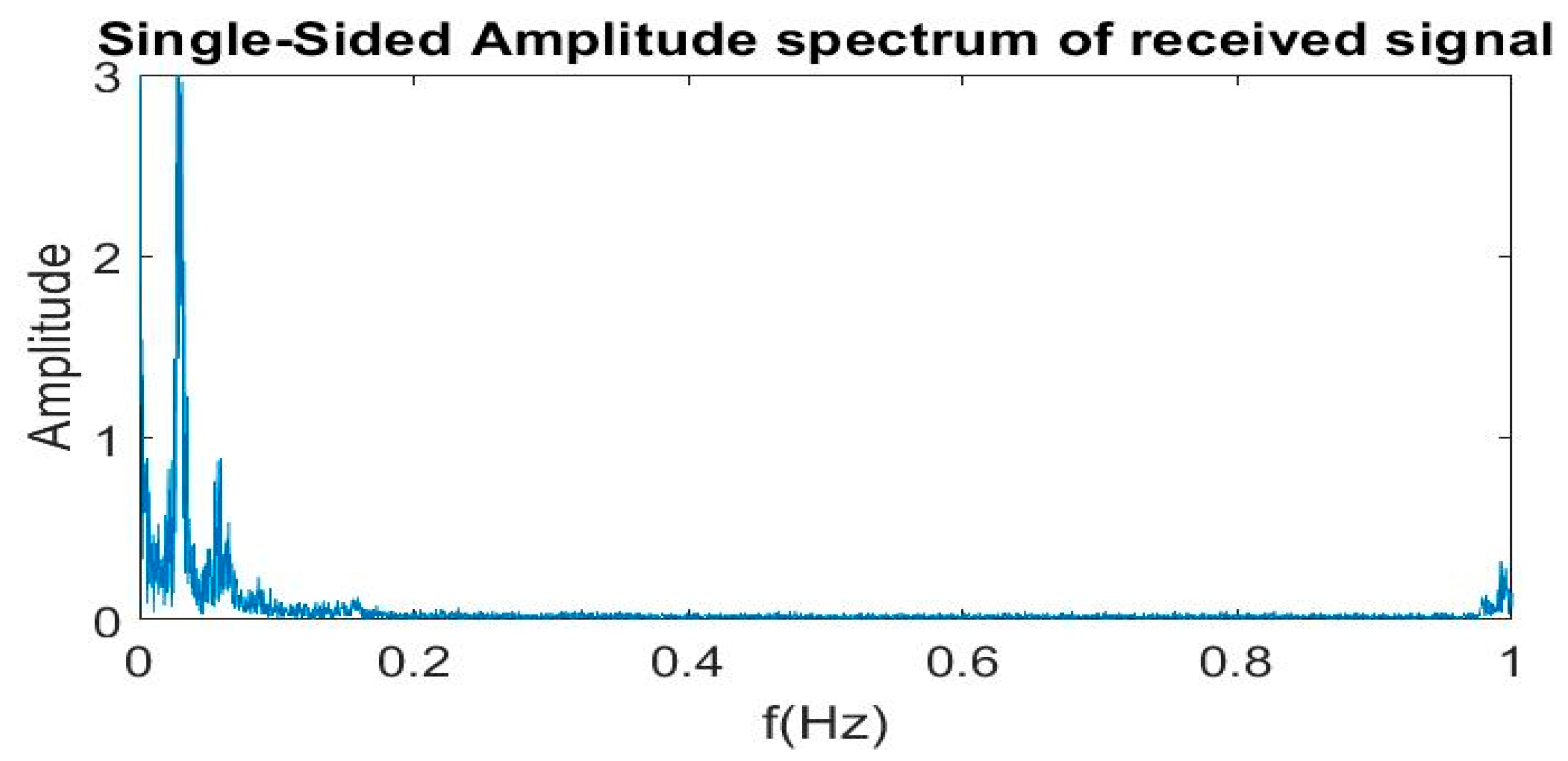

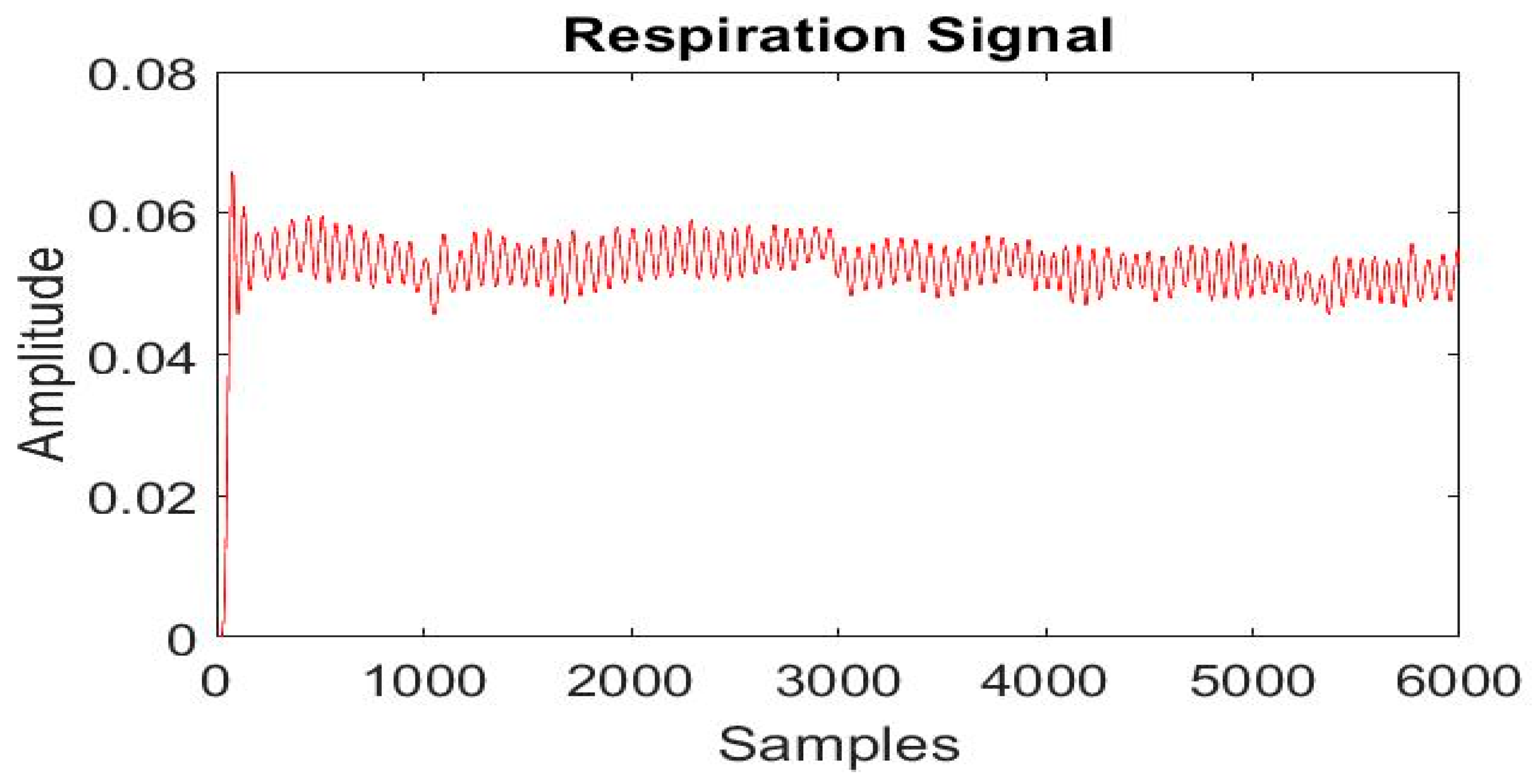

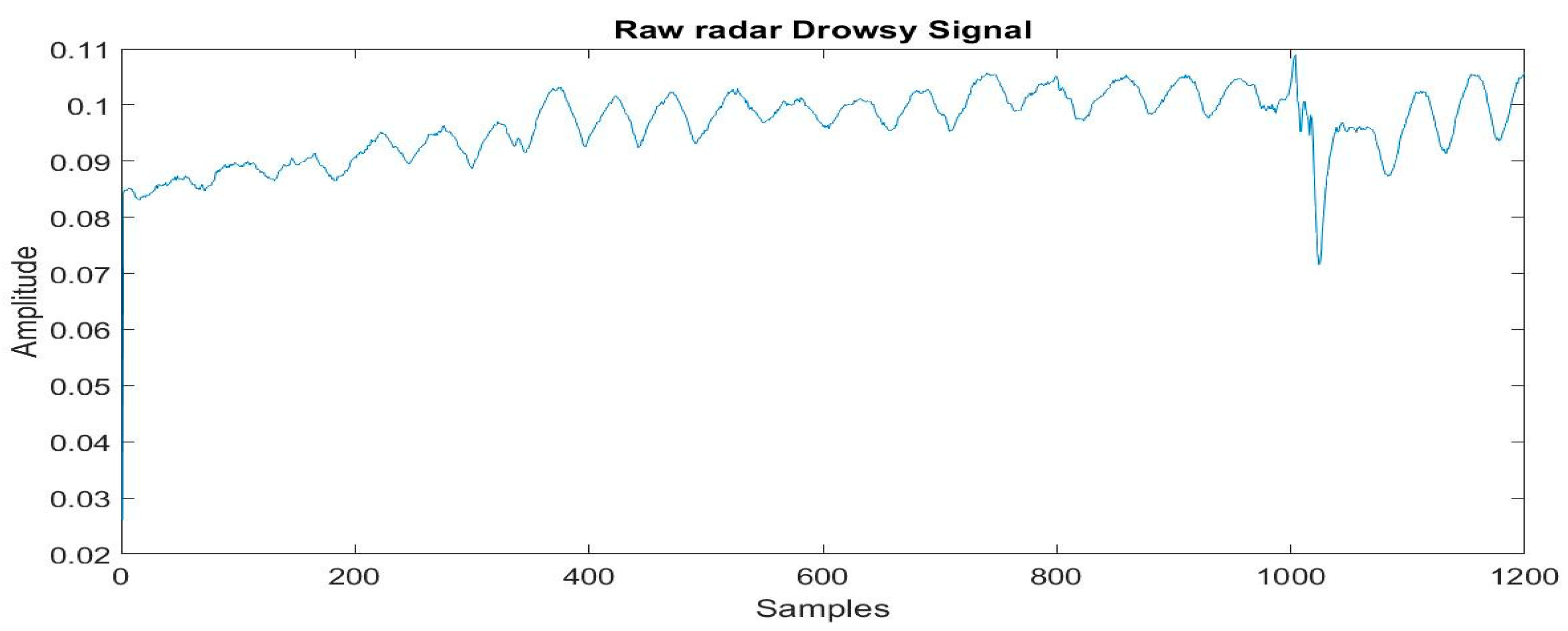

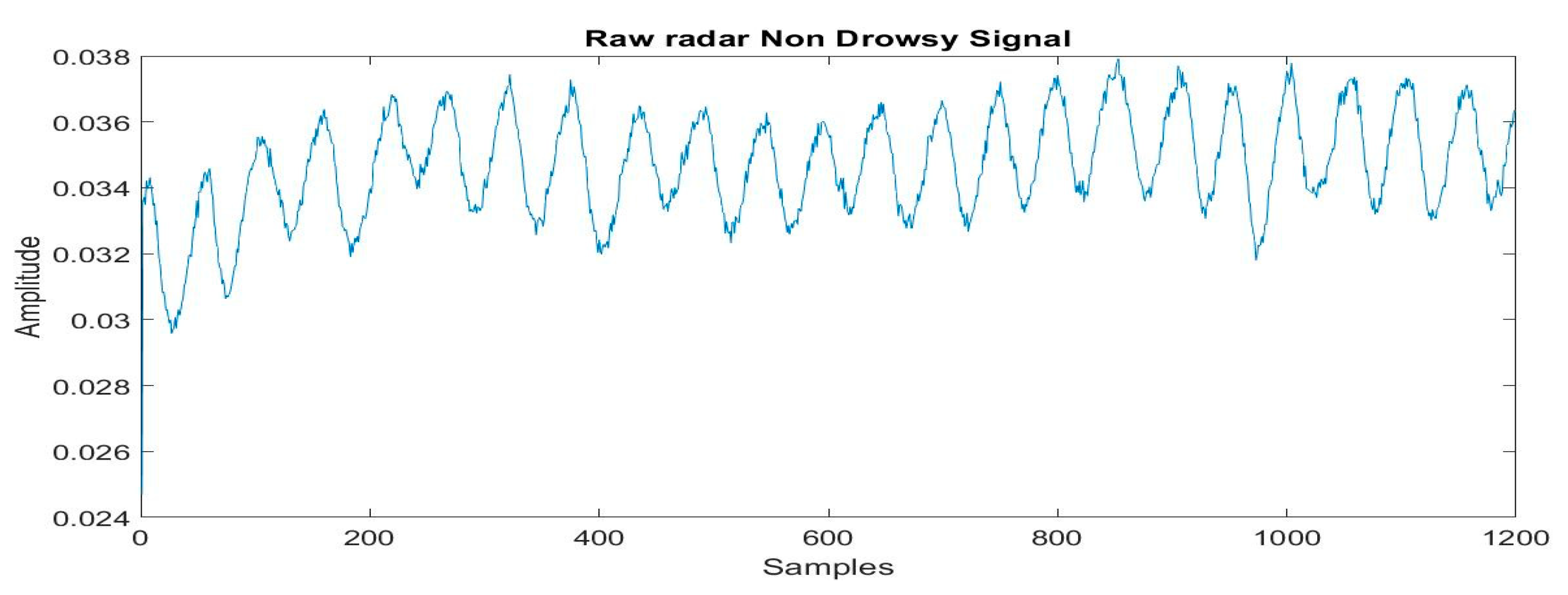

3. Materials and Methods

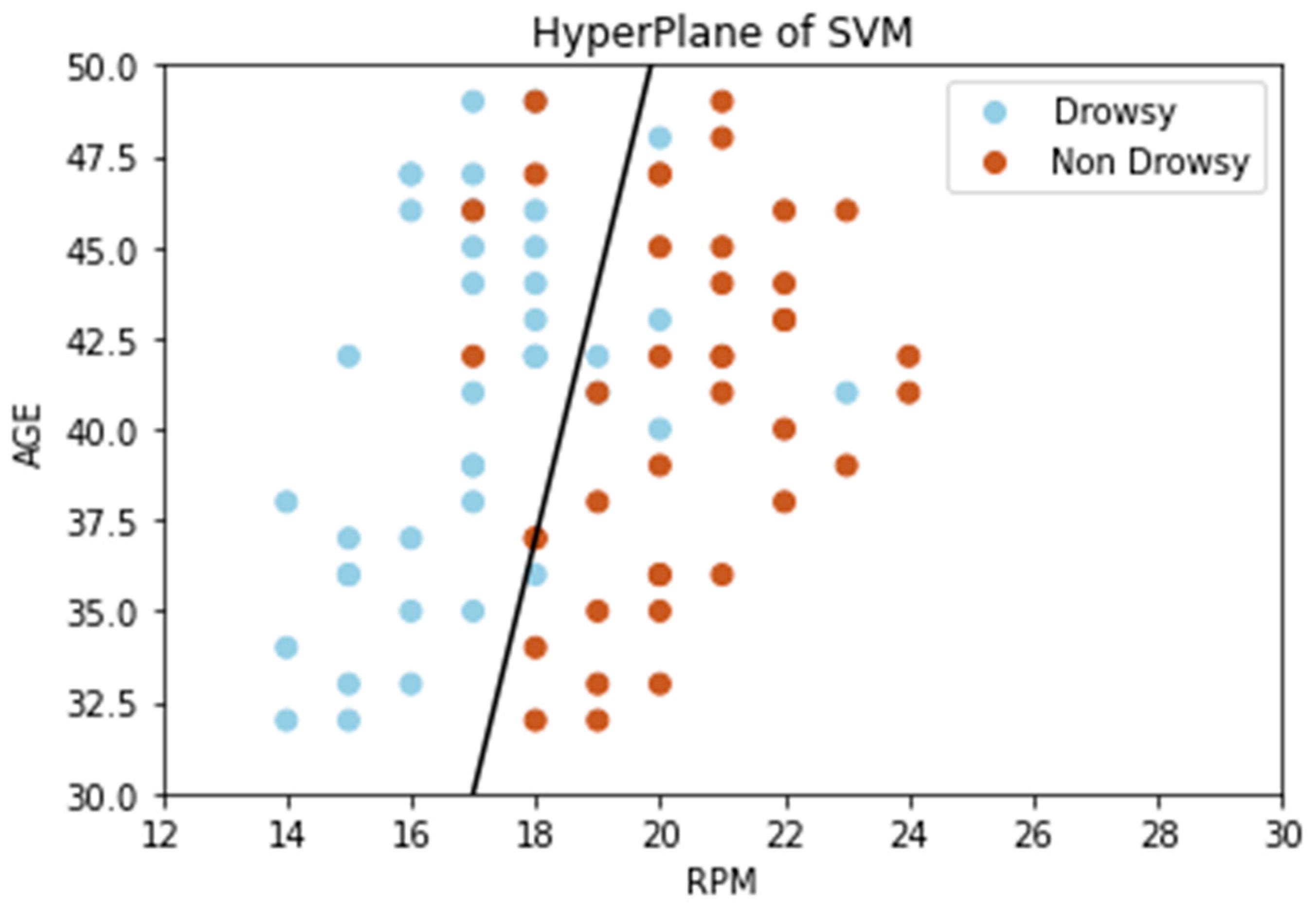

3.1. Support Vector Machine (SVM)

3.2. Decision Tree (DT)

3.3. Extra Tree Classifier (ETC)

3.4. Gradient Boosting Machine (GBM)

3.5. Logistic Regression (LR)

3.6. Multilayer Perceptron (MLP)

4. Results

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Subject | Test Time | Respiration Acquired by Pulse Oximeter | Respiration Acquired by Proposed IR-UWB Method |
|---|---|---|---|
| Subject 1 | 09:57–09:58 | 15 | 15 |
| Subject 1 | 11:03–11:04 | 18 | 18 |
| Subject 2 | 10:05–10:06 | 16 | 16 |
| Subject 2 | 11:09–11:10 | 17 | 17 |
| Subject 3 | 10:11–10:12 | 21 | 21 |
| Subject 3 | 11:17–11:18 | 12 | 12 |

| Subject | Respiration Rate Acquired by Pulse Oximeter | Respiration Rate Acquired from Chest Movement by Proposed Method | Car Speed (km/h) |
|---|---|---|---|
| Subject_1 | 15 | 16 | 20 |
| Subject_1 | 17 | 17 | 40 |
| Subject_2 | 19 | 18 | 20 |
| Subject_2 | 17 | 18 | 40 |
| Subject_3 | 16 | 17 | 60 |
| Subject_3 | 15 | 15 | 45 |
| Subject_4 | 17 | 18 | 20 |
| Subject_4 | 16 | 16 | 30 |
| Subject_5 | 19 | 18 | 60 |
| Subject_5 | 19 | 20 | 50 |
| Subject_6 | 17 | 17 | 50 |
| Subject_6 | 19 | 19 | 20 |
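
As a rough illustration of how closely the two columns agree, the readings from the table above can be compared pairwise. This is a minimal sketch rather than the authors' analysis: the names `READINGS` and `agreement` are hypothetical, and the only inputs are the twelve rows copied from the table.

```python
from statistics import mean

# (pulse oximeter rate, proposed-method rate, car speed in km/h),
# copied row by row from the table above.
READINGS = [
    (15, 16, 20), (17, 17, 40),  # Subject_1
    (19, 18, 20), (17, 18, 40),  # Subject_2
    (16, 17, 60), (15, 15, 45),  # Subject_3
    (17, 18, 20), (16, 16, 30),  # Subject_4
    (19, 18, 60), (19, 20, 50),  # Subject_5
    (17, 17, 50), (19, 19, 20),  # Subject_6
]


def agreement(readings):
    """Summarise the absolute difference between the two measurement methods."""
    diffs = [abs(oximeter - radar) for oximeter, radar, _speed in readings]
    return {
        "mean_abs_diff": mean(diffs),
        "max_abs_diff": max(diffs),
        "exact_matches": sum(d == 0 for d in diffs),
    }


summary = agreement(READINGS)
print(summary)
```

On these twelve rows the two methods never differ by more than one count, and five readings match exactly.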

| Classifier | Values of Parameters Used during Training in This System |
|---|---|
| SVM | Kernel = ‘linear’, c = 1.0, gamma = ‘scale’, degree = 3 |
| DT | Criterion = ‘gini’, splitter = best, maximum depth of tree = none, minimum number of samples = 2, minimum required leaf nodes = 1, random states = none, maximum leaf nodes = none, minimum impurity decrease = 0.0 |
| ETC | Number of estimators/trees = 100, criterion = entropy, minimum number of samples = 2, maximum number of features to consider during classification = auto |
| GBM | Loss = deviance, number of estimators = 100, criterion = friedman_mse, minimum number of samples = 2, minimum samples to be a leaf node = 1, maximum depth = 5 |
| LR | Penalty = L2 regularization (ridge regression), solver = liblinear, maximum iteration = 100 |
| MLP | Hidden layers = 2, neurons = 100 for each layer, epochs = 700, activation = ‘relu’, loss_function = ‘stochastic gradient’, solver = ‘adam’ |
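
The parameter names in the table resemble scikit-learn's, so the settings could plausibly be written as keyword arguments for that library. The sketch below assumes scikit-learn was the toolkit, which the table does not state. It also relies on several mapping assumptions:

- "minimum number of samples" is read as `min_samples_split`.
- "minimum required leaf nodes" is read as `min_samples_leaf`.
- "epochs" is read as `max_iter`.
- `auto` is written as `"sqrt"` and `deviance` as `"log_loss"`, the equivalents in recent scikit-learn releases.
- The L2 penalty for LR is left at the library default.

The names `PARAMS` and `build_classifiers` are hypothetical.

```python
# Keyword names follow scikit-learn; values come from the table above.
# The mapping from table wording to keywords is an assumption (see lead-in).
PARAMS = {
    "SVM": {"kernel": "linear", "C": 1.0, "gamma": "scale", "degree": 3},
    "DT": {
        "criterion": "gini",
        "splitter": "best",
        "max_depth": None,
        "min_samples_split": 2,
        "min_samples_leaf": 1,
        "random_state": None,
        "max_leaf_nodes": None,
        "min_impurity_decrease": 0.0,
    },
    # 'auto' in the table; 'sqrt' is the equivalent for classifiers in recent releases.
    "ETC": {
        "n_estimators": 100,
        "criterion": "entropy",
        "min_samples_split": 2,
        "max_features": "sqrt",
    },
    # 'deviance' in the table; renamed 'log_loss' in recent releases.
    "GBM": {
        "loss": "log_loss",
        "n_estimators": 100,
        "criterion": "friedman_mse",
        "min_samples_split": 2,
        "min_samples_leaf": 1,
        "max_depth": 5,
    },
    # L2 regularization is the library default, so no penalty keyword is passed.
    "LR": {"solver": "liblinear", "max_iter": 100},
    "MLP": {
        "hidden_layer_sizes": (100, 100),
        "max_iter": 700,
        "activation": "relu",
        "solver": "adam",
    },
}


def build_classifiers(params=PARAMS):
    """Instantiate one estimator per table row (requires scikit-learn)."""
    from sklearn.ensemble import ExtraTreesClassifier, GradientBoostingClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC
    from sklearn.tree import DecisionTreeClassifier

    classes = {
        "SVM": SVC,
        "DT": DecisionTreeClassifier,
        "ETC": ExtraTreesClassifier,
        "GBM": GradientBoostingClassifier,
        "LR": LogisticRegression,
        "MLP": MLPClassifier,
    }
    return {name: cls(**params[name]) for name, cls in classes.items()}
```

Calling `build_classifiers()` returns a dictionary of unfitted estimators keyed by the abbreviations used in the table. With a linear kernel, the `gamma` and `degree` settings listed for the SVM have no effect.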

| Classifier | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| SVM | 87% | 0.86 | 0.88 | 0.86 |
| LR | 70% | 0.68 | 0.69 | 0.68 |
| GBM | 62% | 0.59 | 0.59 | 0.59 |
| ETC | 70% | 0.68 | 0.69 | 0.68 |
| DT | 62% | 0.59 | 0.59 | 0.59 |
| MLP | 70% | 0.68 | 0.69 | 0.68 |
| Threshold 18.5 | 87% | 0.73 | 0.75 | 0.73 |
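
For reference, the four metrics in the table above are conventionally defined from the counts of true positives (TP), true negatives (TN), false positives (FP) and false negatives (FN). The table does not say which class is treated as positive or whether per-class values were averaged, so the definitions below are the standard binary forms, not a statement of how the reported values were computed.

$$
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{Recall} &= \frac{TP}{TP + FN}, &
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{aligned}
$$

As a check on the LR row, $2(0.68)(0.69)/(0.68 + 0.69) \approx 0.68$, which matches the tabulated F1 score. Rows where the harmonic mean of the listed precision and recall differs slightly from the listed F1 would be consistent with averaging over classes, though that is only an assumption.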

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Siddiqui, H.U.R.; Saleem, A.A.; Brown, R.; Bademci, B.; Lee, E.; Rustam, F.; Dudley, S. Non-Invasive Driver Drowsiness Detection System. Sensors 2021, 21, 4833. https://doi.org/10.3390/s21144833
