Stream-Based Visually Lossless Data Compression Applying Variable Bit-Length ADPCM Encoding

Abstract

1. Introduction

- We developed a new ADPCM scheme by adding a variable bit-length mechanism to the conventional method. It can control the compressed data size and the image quality dynamically, and the resulting video quality is acceptable for industrial applications.

- We showed that lossless data compression is effective when applied after the proposed variable bit-length ADPCM. Using ASE coding from our previous work, we measured the effect of the lossless data compression experimentally.

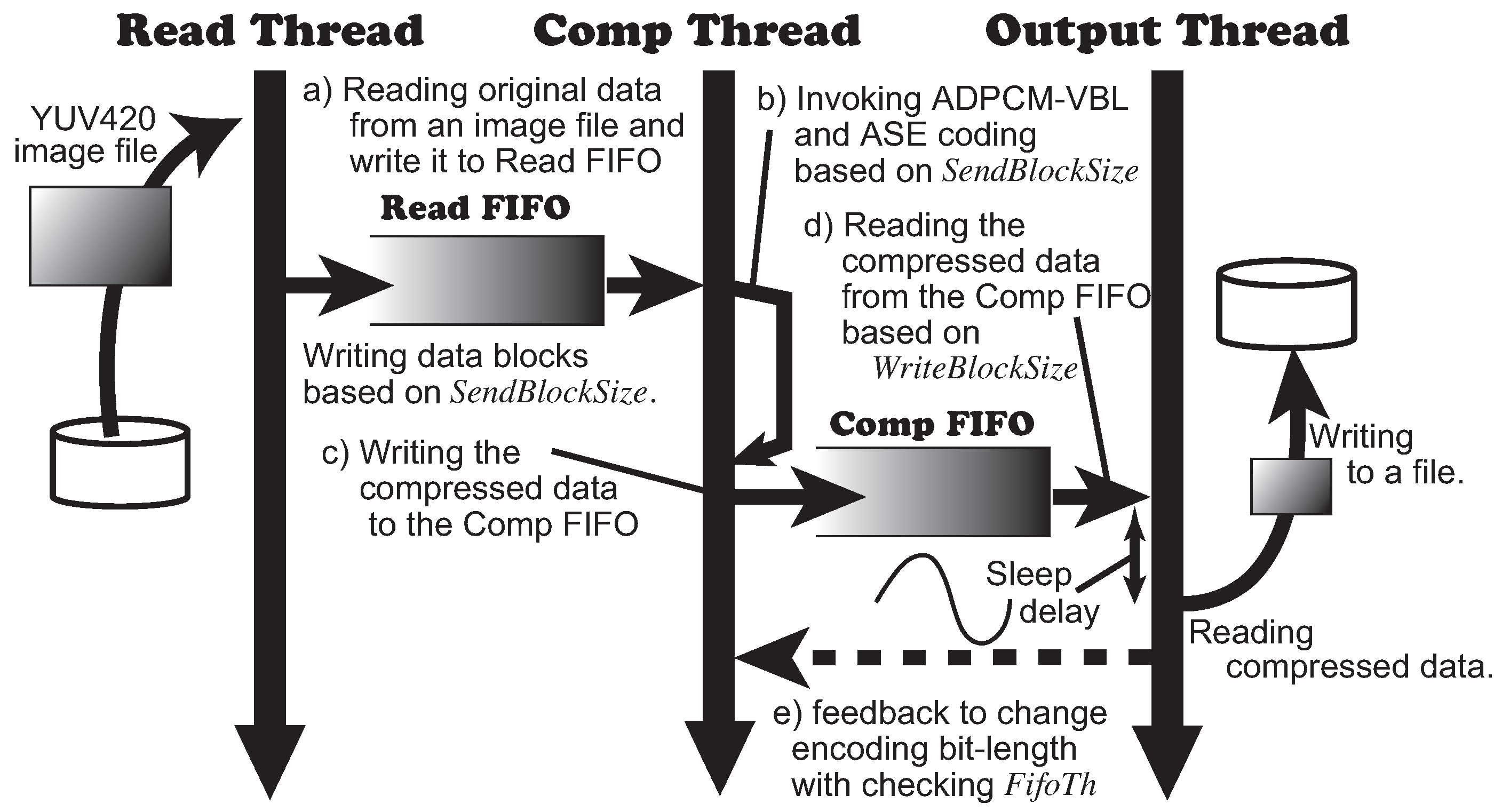

- We proposed a low-latency video transfer system that combines the novel variable bit-length ADPCM with lossless data compression. We employed ASE coding, from our previous work, to process the data in a stream-based manner. The system compresses data without stalling and achieves low latency for video data streams. We validated the system experimentally using software emulation.
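The second contribution rests on a general property: a differential representation of smooth image data tends to be more compressible by a lossless coder than the raw samples. The sketch below illustrates this under loud assumptions. It uses plain (non-adaptive, unquantized) differencing rather than the proposed ADPCM, `zlib` stands in for ASE coding, and the scanline is synthetic; `delta_encode` and `delta_decode` are hypothetical helper names.

```python
import zlib

def delta_encode(row):
    """Replace each 8-bit sample with its difference from the previous one (mod 256)."""
    prev, out = 0, bytearray()
    for value in row:
        out.append((value - prev) & 0xFF)
        prev = value
    return bytes(out)

def delta_decode(deltas):
    """Invert delta_encode by accumulating the differences (mod 256)."""
    prev, out = 0, bytearray()
    for d in deltas:
        prev = (prev + d) & 0xFF
        out.append(prev)
    return bytes(out)

# Synthetic scanline: a triangle-wave gradient standing in for smooth image content.
row = bytes(abs((x % 510) - 255) for x in range(4096))
deltas = delta_encode(row)

raw_size = len(zlib.compress(row))       # lossless coder applied directly (zlib is a stand-in)
delta_size = len(zlib.compress(deltas))  # lossless coder applied after differencing
print(raw_size, delta_size)
```

On this synthetic input the differenced stream compresses to fewer bytes than the raw one. Real video data and ASE coding will behave differently in detail.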

2. Background and Definitions

2.1. Visual Data Compression

2.2. Visual Lossless Compression Methods

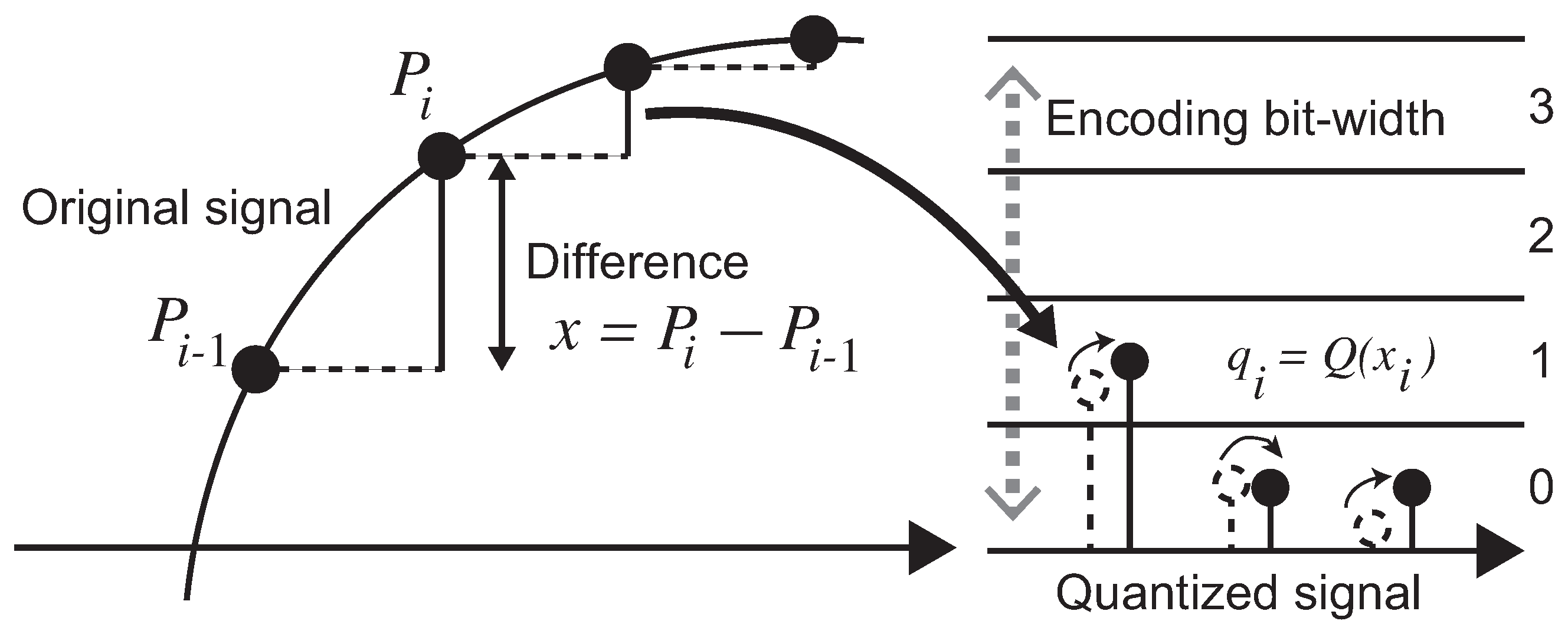

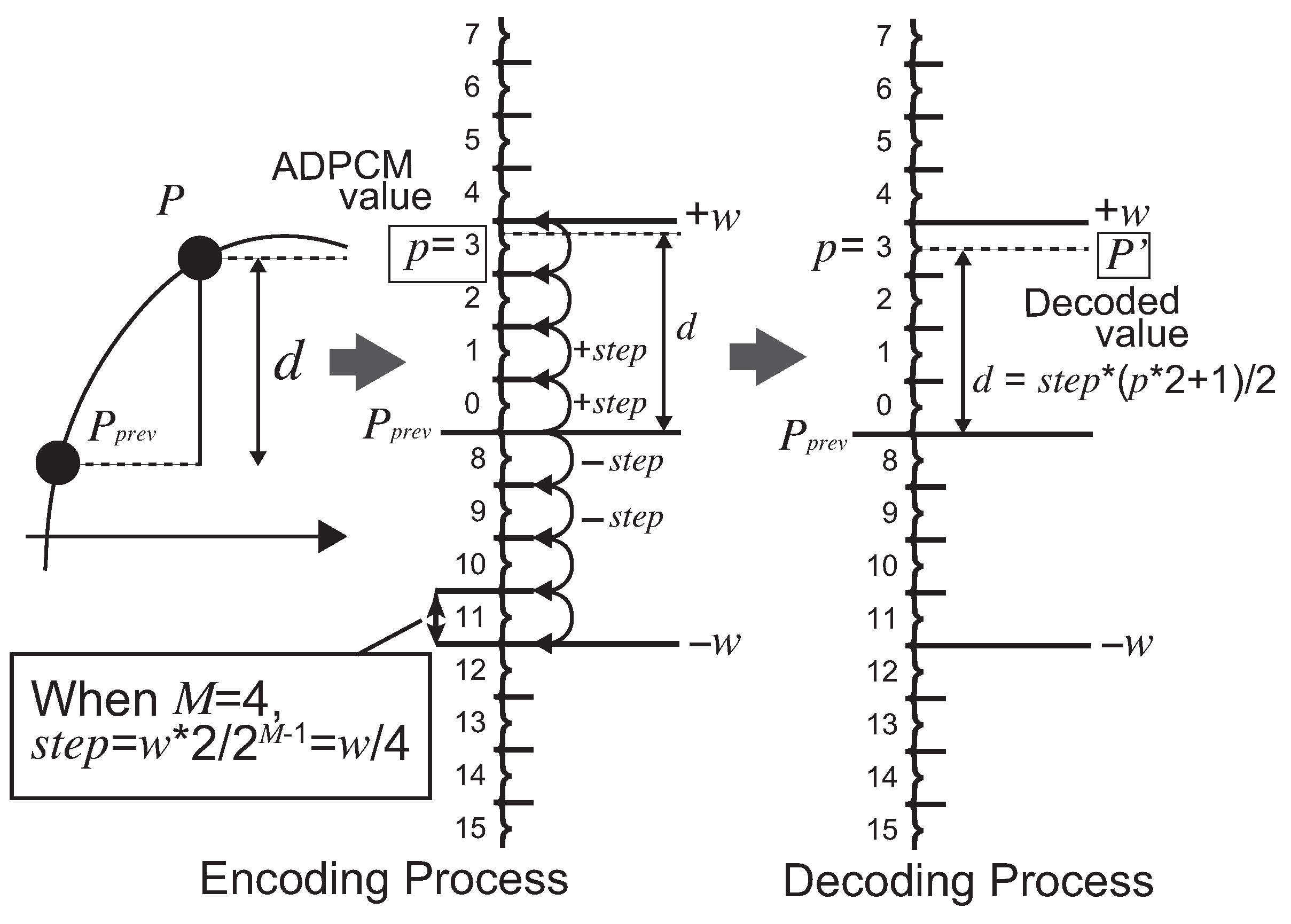

2.3. ADPCM

| Algorithm 1 Encoder of ADPCM [19]. |

| Require: P // a unit of data to be compressed. Ensure: p // an ADPCM value. W_INIT P_INIT if then if then else end if else if then else end if end if |

| Algorithm 2 Decoder of ADPCM [19]. |

| Require: p // an ADPCM value. Ensure: P // a reconstructed unit of data. W_INIT P_INIT if then else end if |
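For orientation, the general idea of ADPCM can be sketched as follows. This is a generic textbook-style formulation and not the exact procedure of Algorithms 1 and 2: the sign-magnitude code layout, the doubling and halving rule for the step width, and the defaults `w_init=4` and `p_init=128` are all assumptions made for illustration. The encoder and decoder share one state update, so the decoder reproduces the encoder's prediction exactly.

```python
def _step(pred, width, code, bits):
    """Shared state update: apply one sign-magnitude code to (pred, width)."""
    max_mag = (1 << (bits - 1)) - 1
    sign, mag = code >> (bits - 1), code & max_mag
    delta = mag * width
    pred = max(0, min(255, pred - delta if sign else pred + delta))
    if mag == max_mag:      # code saturated: the signal moves fast, widen the step
        width = min(width * 2, 64)
    elif mag == 0:          # no movement: narrow the step for finer tracking
        width = max(width // 2, 1)
    return pred, width

def adpcm_encode(samples, bits=4, w_init=4, p_init=128):
    """Encode 8-bit samples into `bits`-bit codes (assumed layout: sign bit, then magnitude)."""
    max_mag = (1 << (bits - 1)) - 1
    pred, width = p_init, w_init
    codes = []
    for s in samples:
        diff = s - pred
        sign = 1 if diff < 0 else 0
        mag = min(abs(diff) // width, max_mag)
        code = (sign << (bits - 1)) | mag
        codes.append(code)
        pred, width = _step(pred, width, code, bits)  # track what the decoder will see
    return codes

def adpcm_decode(codes, bits=4, w_init=4, p_init=128):
    """Rebuild the sample estimates by replaying the same state updates."""
    pred, width = p_init, w_init
    out = []
    for code in codes:
        pred, width = _step(pred, width, code, bits)
        out.append(pred)
    return out

ramp = list(range(100, 120))
decoded = adpcm_decode(adpcm_encode(ramp))
print(decoded)
```

Each 8-bit sample is carried by a 4-bit code here, so the output is lossy: the decoder converges on the ramp after a few samples instead of matching it from the start.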

2.4. Stream-Based Lossless Data Compression

2.5. Discussion

3. Visually Lossless Data Compression Applying Variable Bit-Length ADPCM

3.1. System Modelling

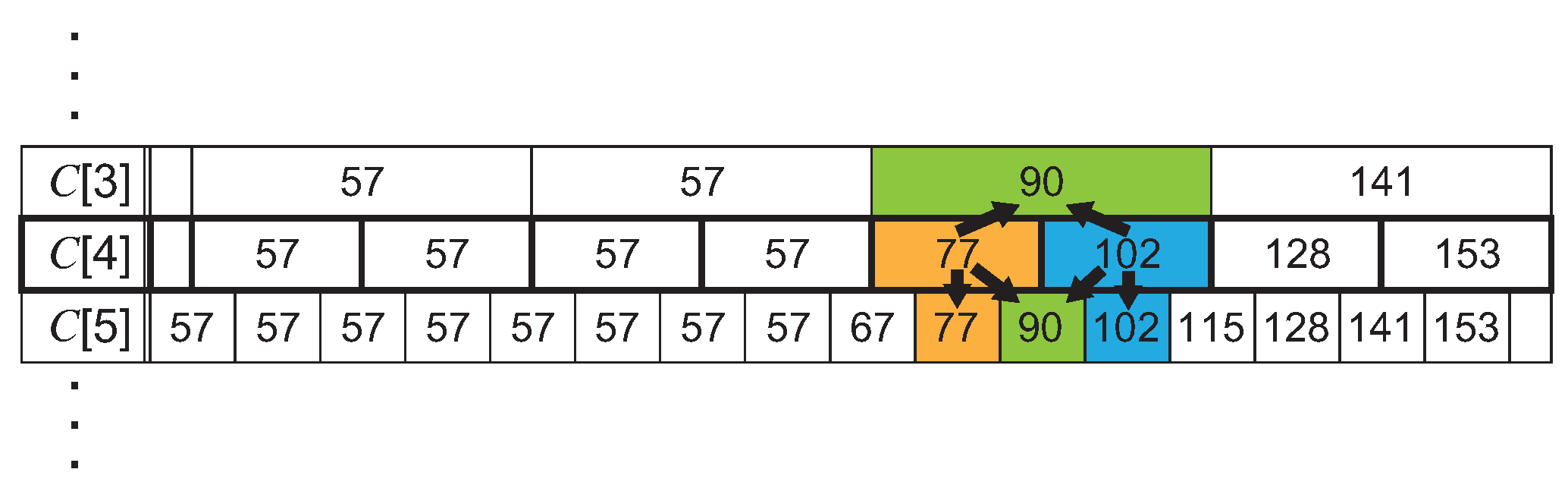

3.2. ADPCM with Variable Bit-Length Control

| Algorithm 3 Initialize the array of the predicted offsets. |

| Require: M // the number of bits for ADPCM encoder. Require: // the array of the initial values for C[N/4]. Ensure: C // the array used for the predicted offset amount. // When M=N/2 for to do end for // When M<N/2 for downto 1 do for to do end for end for // When M>N/2 for to N do while do end while end for |

| Algorithm 4 Initialization for variable bit-length ADPCM. |

| Require: N // N bit Pixel element. Require: C_INIT[ ] // N bit Pixel element. W_INIT P_INIT M_INIT |

| Algorithm 5 Variable bit-length ADPCM encoder. |

| Require: P // N bit Pixel element. Require: M // bits of the current ADPCM value. Require: // if the predicted offset is initialized or not. Require: // a predicted offset to be initialized. Require: // if the is initialized or not. Require: // an original data to be set to . Ensure: p // ADPCM value. if then return end if if then if then else end if end if if then end if if then end if if then if then else end if else if then else end if end if |

| Algorithm 6 Variable bit-length ADPCM decoder. |

| Require: p // ADPCM value. Require: M // bits of the current ADPCM value. Require: // if the predicted offset is initialized or not. Require: // a predicted offset to be initialized. Require: // if the is initialized or not. Require: // an original data to be set to . Ensure: P // N bit Pixel element. if then return end if if then if then else end if end if if then end if if then end if if then else end if |
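The property that both the encoder and the decoder receive the current bit length M as an input can be illustrated with a small stateful sketch. It is not a reconstruction of Algorithms 5 and 6: the class name `VblAdpcm`, the sign-magnitude code layout, the step-width adaptation rule, and the restriction to M ≥ 2 are assumptions, and the re-initialization flags of the original listings are omitted.

```python
class VblAdpcm:
    """Toy ADPCM codec whose code width m may change on every sample (m >= 2 assumed)."""

    def __init__(self, n_bits=8, step_init=4):
        self.max_val = (1 << n_bits) - 1
        self.pred = 1 << (n_bits - 1)   # start the prediction at mid-scale
        self.step = step_init

    def _apply(self, code, m):
        """Update the shared state from one m-bit code and return the new prediction."""
        max_mag = (1 << (m - 1)) - 1
        sign, mag = code >> (m - 1), code & max_mag
        delta = mag * self.step
        nxt = self.pred - delta if sign else self.pred + delta
        self.pred = max(0, min(self.max_val, nxt))
        if mag == max_mag:              # saturated code: widen the step
            self.step = min(self.step * 2, 64)
        elif mag == 0:                  # idle code: narrow the step
            self.step = max(self.step // 2, 1)
        return self.pred

    def encode(self, sample, m):
        """Quantize the prediction error of `sample` into an m-bit code."""
        max_mag = (1 << (m - 1)) - 1
        diff = sample - self.pred
        sign = 1 if diff < 0 else 0
        mag = min(abs(diff) // self.step, max_mag)
        code = (sign << (m - 1)) | mag
        self._apply(code, m)            # mirror the decoder's state
        return code

    def decode(self, code, m):
        """Return the reconstructed sample for an m-bit code."""
        return self._apply(code, m)

samples = [10, 200, 180, 60, 61, 62, 250, 0]
widths = [4, 4, 2, 2, 6, 6, 3, 3]       # hypothetical per-sample bit lengths
enc, dec = VblAdpcm(), VblAdpcm()
recon = [dec.decode(enc.encode(s, m), m) for s, m in zip(samples, widths)]
print(recon)
```

Because the decoder replays the same update with the same M, the two sides stay synchronized as long as M is known to both. How M is chosen and conveyed is left open in this sketch.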

3.3. Application Examples with Variable Bit-Length ADPCM

| Algorithm 7 Producer function. |

| Require: Require: Require: Ensure: Ensure: if then else end if while do if then WIDTH else end if if WIDTH then end if end while if then end if ; |

| Algorithm 8 Consumer function. |

| Require: Require: Require: Ensure: Ensure: if then end if while do if then WIDTH else end if if WIDTH then end if end while |
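The following is not a reconstruction of Algorithms 7 and 8. It is a minimal sketch of one task that any producer and consumer of variable bit-length codes share: packing codes of differing widths into fixed-size words for transfer, and unpacking them again. The names `pack_codes`, `unpack_codes`, and `WORD_BITS` are hypothetical, and the sketch assumes the consumer already knows the width of every code.

```python
WORD_BITS = 8  # assumed transfer word size; unrelated to WIDTH in the listings above

def pack_codes(codes_with_widths):
    """Concatenate (code, width) pairs MSB-first into WORD_BITS-bit words."""
    words, acc, nbits = [], 0, 0
    mask = (1 << WORD_BITS) - 1
    for code, m in codes_with_widths:
        acc = (acc << m) | code
        nbits += m
        while nbits >= WORD_BITS:       # emit every completed word
            nbits -= WORD_BITS
            words.append((acc >> nbits) & mask)
            acc &= (1 << nbits) - 1
    if nbits:                           # flush the tail, zero-padded on the right
        words.append((acc << (WORD_BITS - nbits)) & mask)
    return words

def unpack_codes(words, widths):
    """Recover the codes from packed words, given each code's width in order."""
    it = iter(words)
    acc, nbits, out = 0, 0, []
    for m in widths:
        while nbits < m:                # pull words until m bits are available
            acc = (acc << WORD_BITS) | next(it)
            nbits += WORD_BITS
        nbits -= m
        out.append((acc >> nbits) & ((1 << m) - 1))
        acc &= (1 << nbits) - 1
    return out

pairs = [(5, 3), (1, 2), (9, 4), (0, 2), (3, 2), (17, 5)]
packed = pack_codes(pairs)
restored = unpack_codes(packed, [m for _, m in pairs])
print(packed, restored)
```

The six codes above occupy 18 bits and therefore fit in three 8-bit words, with the last word padded by six zero bits.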

4. Experimental Evaluation

4.1. Experimental Setup

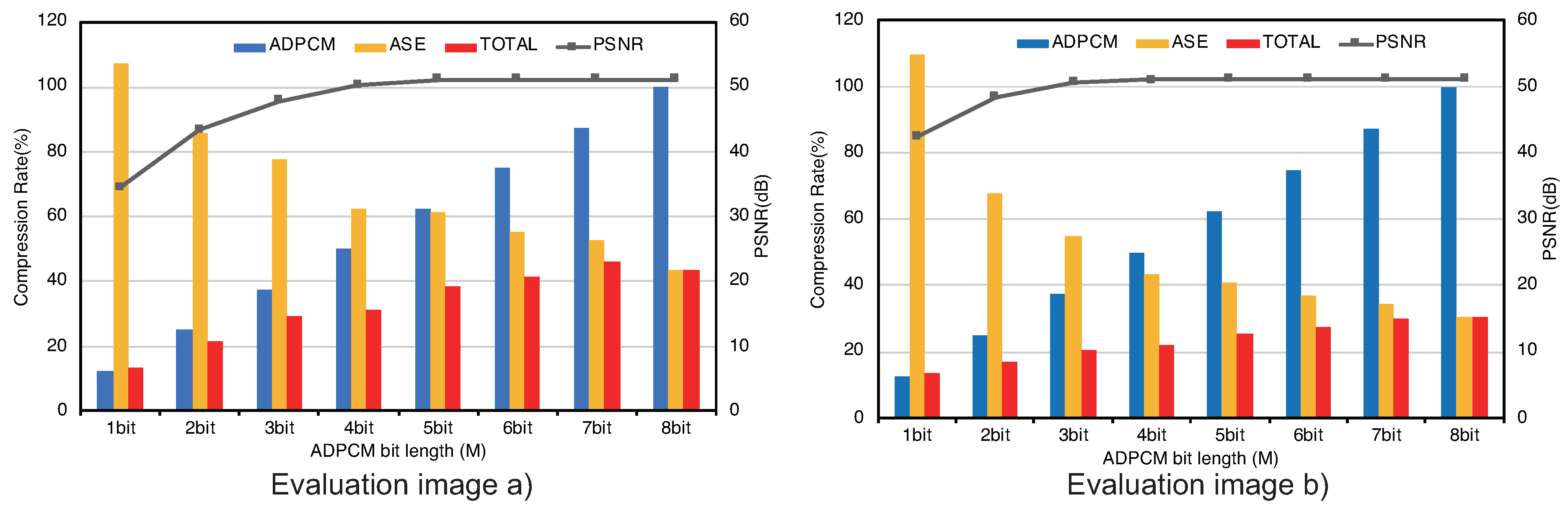

4.2. Evaluation for Variable Bit-Length ADPCM Encoding

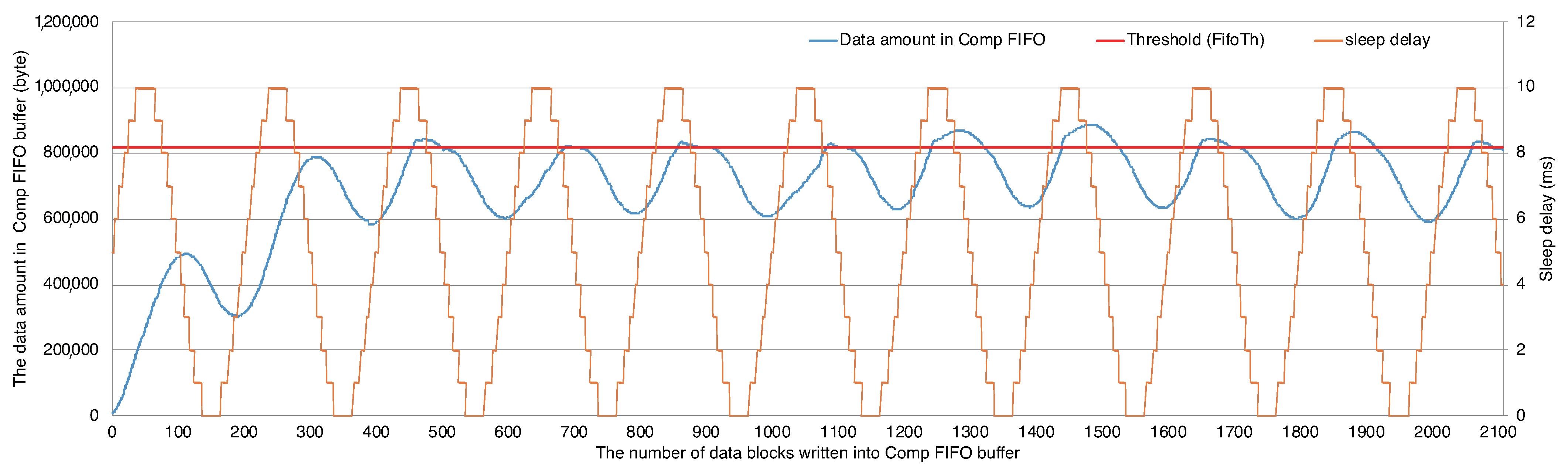

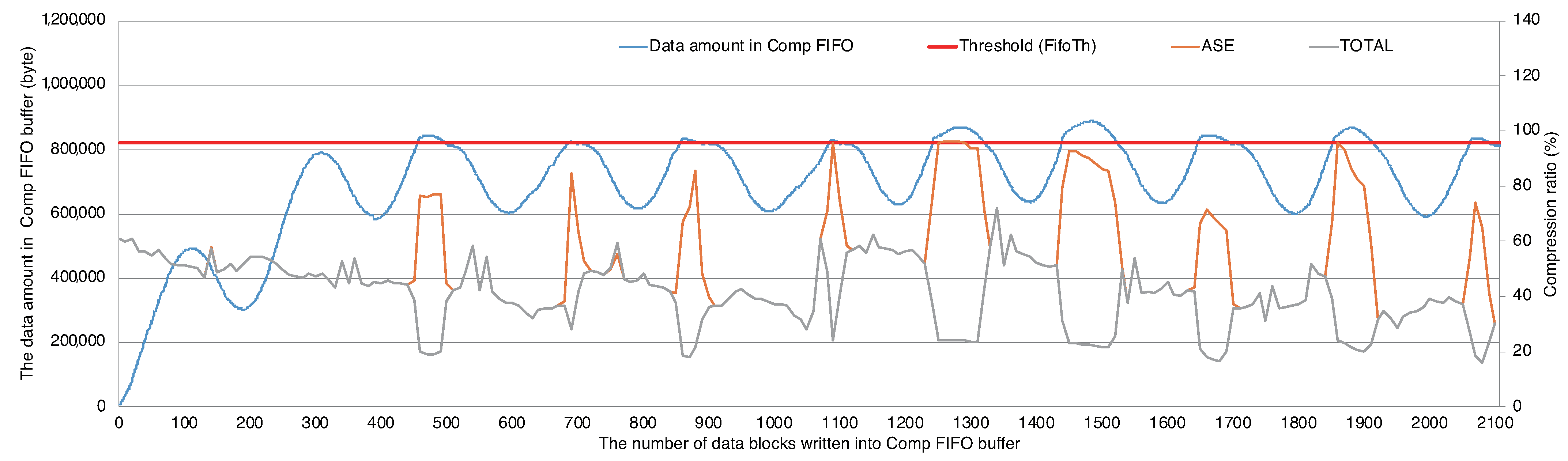

4.3. Evaluation for Video Transfer System with ADPCM-VBL

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Shidik, G.F.; Noersasongko, E.; Nugraha, A.; Andono, P.N.; Jumanto, J.; Kusuma, E.J. A Systematic Review of Intelligence Video Surveillance: Trends, Techniques, Frameworks, and Datasets. IEEE Access 2019, 7, 170457–170473.

- Gautam, A.; Singh, S. Trends in Video Object Tracking in Surveillance: A Survey. In Proceedings of the 2019 Third International conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud), Palladam, India, 12–14 December 2019; pp. 729–733.

- Khurana, R.; Kushwaha, A.K.S. Deep Learning Approaches for Human Activity Recognition in Video Surveillance—A Survey. In Proceedings of the 2018 First International Conference on Secure Cyber Computing and Communication (ICSCCC), Jalandhar, India, 15–17 December 2018; pp. 542–544.

- Olagoke, A.S.; Ibrahim, H.; Teoh, S.S. Literature Survey on Multi-Camera System and Its Application. IEEE Access 2020, 8, 172892–172922.

- Bellavista, P.; Chatzimisios, P.; Foschini, L.; Paradisioti, M.; Scotece, D. A Support Infrastructure for Machine Learning at the Edge in Smart City Surveillance. In Proceedings of the 2019 IEEE Symposium on Computers and Communications (ISCC), Barcelona, Spain, 29 June–3 July 2019; pp. 1189–1194.

- Wang, F.; Zhang, M.; Wang, X.; Ma, X.; Liu, J. Deep Learning for Edge Computing Applications: A State-of-the-Art Survey. IEEE Access 2020, 8, 58322–58336.

- Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128 × 128 120 dB 15 μs Latency Asynchronous Temporal Contrast Vision Sensor. IEEE J. Solid-State Circuits 2008, 43, 566–576.

- Baby, S.A.; Vinod, B.; Chinni, C.; Mitra, K. Dynamic Vision Sensors for Human Activity Recognition. In Proceedings of the 2017 4th IAPR Asian Conference on Pattern Recognition (ACPR), Nanjing, China, 26–29 November 2017; pp. 316–321.

- Sandini, G.; Questa, P.; Scheffer, D.; Diericks, B.; Mannucci, A. A retina-like CMOS sensor and its applications. In Proceedings of the 2000 IEEE Sensor Array and Multichannel Signal Processing Workshop, Cambridge, MA, USA, 17 March 2000; pp. 514–519.

- Tung, Y.S.; Wu, J.L. Architecture design, system implementation, and applications of MPEG-4 systems. In Proceedings of the Workshop and Exhibition on MPEG-4, San Jose, CA, USA, 20–20 June 2001; pp. 37–40.

- Silvestre-Blanes, J.; Almeida, L.; Marau, R.; Pedreiras, P. Online QoS Management for Multimedia Real-Time Transmission in Industrial Networks. IEEE Trans. Ind. Electron. 2011, 58, 1061–1071.

- Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A Survey of Deep Learning-Based Object Detection. IEEE Access 2019, 7, 128837–128868.

- Cengil, E.; Çinar, A.; Güler, Z. A GPU-based convolutional neural network approach for image classification. In Proceedings of the 2017 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 16–17 September 2017; pp. 1–6.

- Reuther, A.; Michaleas, P.; Jones, M.; Gadepally, V.; Samsi, S.; Kepner, J. Survey of Machine Learning Accelerators. In Proceedings of the 2020 IEEE High Performance Extreme Computing Conference (HPEC), Waltham, MA, USA, 22–24 September 2020; pp. 1–12.

- Duan, L.; Sun, W.; Zhang, X.; Wang, S.; Chen, J.; Yin, J.; See, S.; Huang, T.; Kot, A.C.; Gao, W. Fast MPEG-CDVS Encoder with GPU-CPU Hybrid Computing. IEEE Trans. Image Process. 2018, 27, 2201–2216.

- Yin, H.; Jia, H.; Zhou, J.; Gao, Z. Survey on Algorithm and VLSI Architecture for MPEG-Like Video Coder. J. Signal Process. Syst. 2017, 88, 357–410.

- HDMI Licensing Administrator, Inc. Available online: https://www.hdmi.org (accessed on 21 February 2021).

- Frederick, W.; Sandy, M. VESA Display Stream Compression; VESA: Milpitas, CA, USA, 2014.

- Cummiskey, P.; Jayant, N.S.; Flanagan, J.L. Adaptive quantization in differential PCM coding of speech. Bell Syst. Tech. J. 1973, 52, 1105–1118.

- Yamagiwa, S.; Hayakawa, E.; Marumo, K. Stream-Based Lossless Data Compression Applying Adaptive Entropy Coding for Hardware-Based Implementation. Algorithms 2020, 13, 159.

- Hussain, A.; Al-Fayadh, A.; Radi, N. Image compression techniques: A survey in lossless and lossy algorithms. Neurocomputing 2018, 300, 44–69.

- Adams, M.D.; Ward, R. Wavelet transforms in the JPEG-2000 standard. In Proceedings of the 2001 IEEE Pacific Rim Conference on Communications, Computers and Signal Processing, Victoria, BC, Canada, 26–28 August 2001; Volume 1, pp. 160–163.

- Favalli, L.; Mecocci, A.; Moschetti, F. Object tracking for retrieval applications in MPEG-2. IEEE Trans. Circuits Syst. Video Technol. 2000, 10, 427–432.

- Sullivan, G.J.; Ohm, J.; Han, W.; Wiegand, T. Overview of the High Efficiency Video Coding (HEVC) Standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

- Salah, M.; El-Shweky, B.; ElKholy, K.; Helmy, A.; Ismail, Y.; Salah, K. HEVC Implementation for IoT Applications. In Proceedings of the 2018 30th International Conference on Microelectronics (ICM), Sousse, Tunisia, 16–19 December 2018; pp. 295–298.

- Blau, Y.; Michaeli, T. Rethinking lossy compression: The rate-distortion-perception tradeoff. In Proceedings of the 36th International Conference on Machine Learning (PMLR 97), Long Beach, CA, USA, 9–15 June 2019; pp. 675–685.

- Silvestre-Blanes, J. Structural similarity image quality reliability: Determining parameters and window size. Signal Process. 2011, 91, 1012–1020.

- Flynn, D.; Marpe, D.; Naccari, M.; Nguyen, T.; Rosewarne, C.; Sharman, K.; Sole, J.; Xu, J. Overview of the Range Extensions for the HEVC Standard: Tools, Profiles, and Performance. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 4–19.

- Wu, C.H.; Irwin, J.D.; Dai, F.F. Enabling multimedia applications for factory automation. IEEE Trans. Ind. Electron. 2001, 48, 913–919.

- Sector, I.T.S. 40, 32, 24, 16 kbit/s Adaptive Differential Pulse Code Modulation (ADPCM); ITU: Geneva, Switzerland, 1990.

- Sullivan, J.R. A New ADPCM Image Compression Algorithm and the Effect of Fixed-Pattern Sensor Noise. In Proceedings of the OE/LASE’89, Digital Image Processing Applications, Los Angeles, CA, USA, 15–20 January 1989; Volume 1075, pp. 129–139.

- Kumar, A.; Kumaran, R.; Paul, S.; Parmar, R.M. Low complex ADPCM image compression technique. In Proceedings of the Third International Conference on Computational Intelligence and Information Technology (CIIT 2013), Mumbai, India, 18–19 October 2013; pp. 318–321.

- Kumar, A.; Kumaran, R.; Paul, S.; Mehta, S. ADPCM Image Compression Techniques for Remote Sensing Applications. Int. J. Inf. Eng. Electron. Bus. 2015, 7, 26–31.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. LOCO-I: A low complexity, context-based, lossless image compression algorithm. In Proceedings of the Data Compression Conference—DCC’96, Snowbird, UT, USA, 31 March–3 April 1996; pp. 140–149.

- Wu, X.; Memon, N. CALIC-a context based adaptive lossless image codec. In Proceedings of the 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing Conference Proceedings, Atlanta, GA, USA, 9 May 1996; Volume 4, pp. 1890–1893.

- Yamagiwa, S.; Kuwabara, S. Autonomous Parameter Adjustment Method for Lossless Data Compression on Adaptive Stream-Based Entropy Coding. IEEE Access 2020, 8, 186890–186903.

- Ziv, J.; Lempel, A. A universal algorithm for sequential data compression. IEEE Trans. Inf. Theory 1977, 23, 337–343.

- Yamagiwa, S.; Marumo, K.; Kuwabara, S. Exception Handling Method Based on Event from Look-Up Table Applying Stream-Based Lossless Data Compression. Electronics 2021, 10, 240.

- FOURCC, YUV Pixel Formats. Available online: https://www.fourcc.org/yuv.php (accessed on 1 July 2021).

- Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.

- Li, H.; Forchhammer, S. MPEG2 video parameter and no reference PSNR estimation. In Proceedings of the 2009 Picture Coding Symposium, Chicago, IL, USA, 6–8 May 2009; pp. 1–4.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yamagiwa, S.; Ichinomiya, Y. Stream-Based Visually Lossless Data Compression Applying Variable Bit-Length ADPCM Encoding. Sensors 2021, 21, 4602. https://doi.org/10.3390/s21134602
