Abstract

To aid the development of future unmanned naval vessels, this manuscript investigates algorithm options for combining physical (noisy) sensors and computational models to provide additional information about system states, inputs, and parameters emphasizing deterministic options rather than stochastic ones. The computational model is formulated using Pontryagin’s treatment of Hamiltonian systems resulting in optimal and near-optimal results dependent upon the algorithm option chosen. Feedback is proposed to re-initialize the initial values of a reformulated two-point boundary value problem rather than using state feedback to form errors that are corrected by tuned estimators. Four algorithm options are proposed with two optional branches, and all of these are compared to three manifestations of classical estimation methods including linear-quadratic optimal. Over ten-thousand simulations were run to evaluate each proposed method’s vulnerability to variations in plant parameters amidst typically noisy state and rate sensors. The proposed methods achieved 69–72% improved state estimation, 29–33% improved rate improvement, while simultaneously achieving mathematically minimal costs of utilization in guidance, navigation, and control decision criteria. The next stage of research is indicated throughout the manuscript: investigation of the proposed methods’ efficacy amidst unknown wave disturbances.

1. Introduction

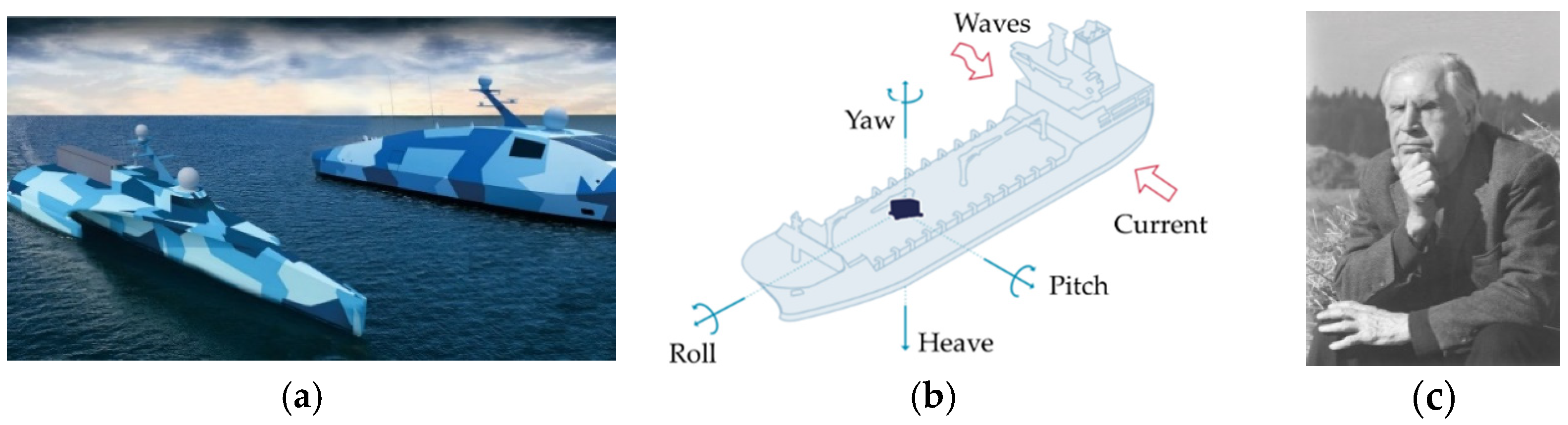

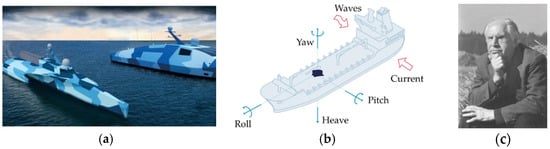

Inertial measurement units provide continuous and accurate estimates of motion states in between sensor measurements. Future unmanned naval vessels depicted in Figure 1a require very accurate motion measurement units including active sensor systems and inertial algorithms when active sensor data is unavailable. State observers are duals of state controllers used for establishing decision criteria to declare accurate positions and rates and several instantiations are studied here when fused with noisy sensors, where theoretical analysis of the variance resulting from noise power is presented and validated in over ten-thousand Monte Carlo simulations.

Figure 1.

Representative motion measurement units for future ships depicted in (a) with measurement bases depicted in (b) are proposed to be augmented by virtual sensoring by minimization of Hamiltonian systems by the principles of Pontryagin depicted in (c). Future unmanned U.S. Navy vessels [3] Medium Unmanned Surface Vessel (MUSV) concept renderings in (a) from shipbuilder Austal USA. Photo Credit: Austal USA. Boat motion monitoring [4] uses measurement bases depicted in (b) whose graphic is from cited reference modified by author. Photo (c) of Lev Pontryagin from the archive of the Steklov Mathematical Institute [2] used with permission (30 June 2021).

The combination of physical sensors and computational models to provide additional information about system states, inputs, and/or parameters, is known as virtual sensoring. Virtual sensoring is becoming more and more popular in many sectors, such as the automotive, aeronautics, aerospatial, railway, machinery, robotics, and human biomechanics sectors. Challenges include the selection of the fusion algorithm and its parameters, the coupling or independence between the fusion algorithm and the multibody formulation, magnitudes to be estimated, the stability and accuracy of the adopted solution, optimization of the computational cost, real-time issues, and implementation on embedded hardware [1].

The proposed methods stem from Pontryagin’s treatment of Hamiltonian systems, rather than utilization of classical or modern optimal estimation and control concepts applied to future naval vessels as depicted in (Figure 1) [2,3,4].

Typical motion reference units conveniently have accuracies on the order of 0.05 (in meters and degree for translation and rotation, respectively, as depicted in Figure 1b for representative naval vessels as depicted in Figure 1a). These figures of merit are aspirational for the virtual sensor that must provide accurate estimates whether active measurements are available to augment the algorithm. Lacking active measurements, the algorithm is merely an inertial navigation unit, while with active measurements, the algorithm becomes an augmented virtual sensor. This manuscript investigates virtual sensoring by evaluating several options for algorithms, resulting estimated magnitudes, accuracy of each solution, optimization of resulting costs of motion, and sensitivity to variations like noise and parameter uncertainty of the translational and rotational motion models investigated (both simplified and high-fidelity). Algorithms are compared using various decision criteria to compare approaches for consideration of usage as motion reference units potentially aided by global navigation systems.

Noting the small size of motion measurement units, simple algorithms are preferred to minimize computational burdens that can increase unit size. Motion estimation and control algorithms to be augmented by sensor measurements are based on well-known mathematical models of translation and rotation from physics, both presented in equations. In 1834, the Royal Society of London published two celebrated papers by William R. Hamilton on Dynamics in the Philosophical Transactions. Ref. [5] The notions were slowly adopted, and not presented relative to other thoughts of the age for nearly seventy years [6], but quickly afterwards, the now-accepted axioms of translational and rotational motion were self-evidently accepted by the turn of the twentieth century [7,8,9,10] as ubiquitous concepts. Half a century later [11,12], standard university textbooks elaborate on the notions to the broad scientific community. Unfortunately, the notions arose in an environment already replete with notions of motion estimation and control based on classical proportional, rate, and integral feedback, so the fuller utilization of the first principals languished until exploitation by Russian mathematician Pontryagin [13]. Pontryagin proposed to utilize the first principles as the basis for treating motion estimation and control as the classical mathematical feedback notions were solidifying in the scientific community. Decades later, the first-principal utilization proposed by Pontryagin are currently rising in prominence as an improvement to classical methods [14]. After establishing performance benchmarks [15] for motion estimation and control of unmanned underwater vehicles, the burgeoning field of deterministic artificial intelligence [16,17] articulates the assertion of the first-principles as “self-awareness statements” with adaption [18,19] or optimal learning [20] used to achieved motion estimation and control commands. The key difference between the usage of first principals presented here follows. Classical methods impose the form of the estimation and control (typically negative feedback with gains) and they have very recently been applied to railway vehicles [21], biomechanical applications [22], and remotely operated undersea vehicles [23], electrical vehicles [24], and even residential heating energy consumption [25] and multiple access channel usage by wireless sensor networks [26]. Deterministic artificial intelligence uses first principals and optimization for all quantities but asserts a desired trajectory. Meanwhile the proposed methods in this manuscript leave the trajectory “free” and calculate an optimal state and rate trajectory for fusion with sensor data and calculates optimal decision criteria for estimation and controls in the same formulation.

This manuscript seeks to use the same notion, assertion of the first principals (via Pontryagin’s formulation of Hamiltonian systems) in the context of inertial motion estimation fused with sensor measurements (that are presumed to be noisy). Noise in sensors is a serious issue elaborated by Oliveiera et al. [27] for background noise of acoustic sensors and by Zhang et al. [28] for accuracy of pulse ranging measurement in underwater multi-path environments. Barker et al. [29] evaluated impacts on doppler radar measurements beneath moving ice. Thomas et al. [30] proposes a unified guidance and control framework for Autonomous Underwater Vehicles (AUVs) based on the task priority control approach, incorporating various behaviors such as path following, terrain following, obstacle avoidance, as well as homing and docking to stationary and moving stations. Zhao et al. [31] very recently pursued the presently ubiquitous pursuit of optimality via stochastic artificial intelligence using particle swarm optimization genetic algorithm, while Anderlini et al. [32] used real-time reinforcement learning. Sensing the ocean environment parallels the current emphasis in motion sensing, e.g., Davidson et al.’s [33] parametric resonance technique for wave sensing and Sirigu et al.’s [34] wave optimization via the stochastic genetic algorithm. Motion control similarly mimics the efforts of motion sensing and ocean environment sensing, e.g., Veremey’s [35] marine vessel tracking control, Volkova et al.’s [36] trajectory prediction using neural networks, and the new guidance algorithm for surface ship path following proposed by Zhang et al. [37]. Virtual sensory will be utilized in this manuscript where noisy state and rate sensors are combined to provide smooth, non-noisy, accurate estimates of state, rate, and acceleration, while no acceleration sensors were utilized. A quadratic cost was formulated for acceleration, since accelerations are directly tied to forces and torques and therefore fuels.

“…condition of the physical world can either be ‘‘directly’’ observed (by a physical sensor) or indirectly derived by fusing data from one or more physical sensors, i.e., applying virtual sensors”.[38]

Thus, the broad context of the field is deeply immersed in a provenance of classical feedback driving a current emphasis on optimization by stochastic methods. Meanwhile this study will iterate options utilizing analytic optimization including evaluation of the impacts of variations and random noise in establishing the efficacy of each proposed approach. Analytical predictions are made of the impacts of applied noise power, and Monte Carlo analysis agrees with the analytical predictions. Developments presented in this manuscript follow the comparative prescription presented in [39], comparing many (eleven) optional approaches permitting the reader to discern their own preferred approach to fusion of sensor data with inertial motion estimation:

- Validation of simple yet optimal inertial motion algorithms for both translation and rotation derived from Pontryagin’s treatment of Hamiltonian systems when fused with sensor data that is assumed to be noisy.

- Validation of high-fidelity optimal (nonlinear, coupled) internal motion algorithms for rotation with translation asserted by logical extension derived from Pontryagin’s treatment of Hamiltonian systems when fused with sensor data that is assumed to be noisy;

- Validation of three approaches for sensor data fused with the proposed motion estimation algorithm (not using classical feedback in a typical control topology): pinv, backslash, and LU inverses derived from Pontryagin’s treatment of Hamiltonian systems when fused with sensor data that is assumed to be noisy;

- Comparison of each proposed fused implementation algorithm to three varieties of classical feedback motion architectures including linear-quadratic optimal tracking regulators, classical proportional plus velocity feedback tuned for performance specification and manually tuned proportional plus integral plus derivative feedback topologies, where these classical methods are utilized as benchmarks for performance comparisons when fused with sensor data that is assumed to be noisy.

- Comparisons are made based on motion state and velocity errors, algorithm parameter estimation errors, and quadratic cost functions, which map to fuel used to create translational and rotational motion.

- Vulnerability to variation is evaluated using ten-thousand Monte Carlo simulations varying state and rate sensor noise power and algorithm plant model variations, where noise power is tailored to the simulation discretization, permitting analytic prediction of the impacts of variations to be compared to the simulations provided.

- Sinusoidal wave action is programmed in the same simulation code to permit future research, and inclusion of such is indicated throughout the manuscript.

Appendix A, Table A1 contains a consolidated list of variables and acronyms in the manuscript.

2. Materials and Methods

Inertial navigation algorithms use physics-based mathematics to make predictions of motion states (position, rate, acceleration, and sometimes jerk). The approach taken here is to utilize the mathematical relationships from physics in a feedforward sense to produce optimal, nonlinear estimates of states that when compared to noisy sensor measurements yield corrected real-time optimal, smooth, and accurate estimates of state, rate, and acceleration. Sensors are modeled as ideal with added Gaussian noise and the smooth estimates will be seen to exhibit none of the noise. The optimization of the estimates will be derived using Pontryagin’s optimization.

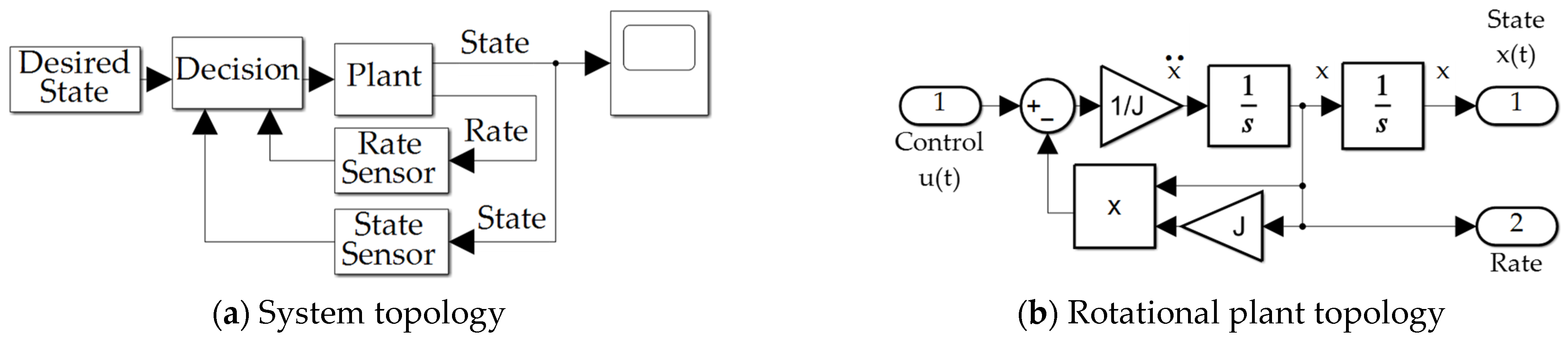

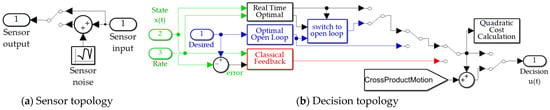

Motion control algorithms to be augmented by sensor measurements are based on well-known mathematical models of translation and rotation from physics, both presented in Equation (1), where both high-fidelity motion models are often simplified to identical double-integrator models where nonlinear coupling cross-products of motion are simplified, linearized, or omitted by assumption. The topologies are provided in Figure 2. Centrifugal acceleration is represented in Equation (1) by . Coriolis acceleration is represented in Equation (1) by . Euler acceleration is represented in Equation (1) by . In this section, double-integrator models are optimized by Pontryagin’s treatment of Hamiltonian systems, where the complete (not simplified, linearized, or omitted) nonlinear cross-products of motion are accounted for using feedback decoupling. Efficacy of feedback decoupling of the full equations of motion is validated by disengaging this feature in a single simulation run to reveal the deleterious effects of the coupled motion when not counteracted by the decoupling approach.

where

Figure 2.

SIMULINK simulation program topologies used to generate the results in Section 3: (a) Overall system topology used to simultaneously produce state and rate estimates integrated with noisy sensors and additionally optimal control calculations; (b) Euler’s moment from Equation (1) elaborated in [5,6,7,8,9,10,11,12] describing rotational motion (notice the nonlinear coupled motion).

- , external force and torque, respectively

- , mass and mass moment of inertia, respectively

- ,angular velocity and acceleration, respectively

- ,position and velocity, and acceleration relative to rotating reference

- and are double integrator plants

- cross-product rotational motion due to rotating reference frame

- cross-product translation motion due to rotating reference frame

- cross-product translation motion due to rotating reference frame

- cross-product translation motion due to rotating reference frame.

2.1. Problem Scaling and Balancing

Consider problems whose solution must simultaneously perform mathematical operations on very large numbers and very small numbers. Such problems are referred to as poorly conditioned. Scaling and balancing problems are one potential mitigation where equations may be transformed to operate with similarly ordered numbers by scaling the variables to nominally reside between zero and unity. Scaling problems by common, well-known values permits single developments to be broadly applied to a wide range of state spaces not initially intended. Consider problems simultaneously involving very large and very small values of time (), mass ()/mass moments of inertia (), and/or length (). Normalizing by a known value permit variable transformation such that newly defined variables are of similar order, e.g., where indicates generic displacement units like . Such scaling permits problem solution with a transformed variable mass and inertia of unity value, initial time of zero and final time of unity, and state and rate variables that range from zero to unity making the developments here broadly applicable to any system of particular parameterization.

2.2. Scaled Problem Formulation

The problem is formulated in terms of standard form described in Equations (2)–(8), where are the decision variables. The endpoint cost is also referred to as the Mayer cost. The running cost is also referred to as the Lagrange cost (usually with the integral). The standard cost function is also referred to as the Bolza cost as the sum of the Mayer cost and Lagrange cost. Endpoint constraints are equations that are selected to be zero when the endpoint is unity.

where

- cost function

- state vector of motion state and rate with initial condition

- and final conditions

- decision vector

- Hamiltonian operator corresponding to system total energy

- adjoint operators, also called co-states (corresponding to each state)

- endpoint costates

- endpoint constraints.

2.3. Hamiltonian System: Minimization

The Hamiltonian in Equation (8) is a function of the state, co-state, and decision criteria (or control) and allows linkage of the running costs with a linear measure of the behavior of the system dynamics . Equation (9) articulates the Hamiltonian of the problem formulation described in Equations (2)–(5). Minimizing the Hamiltonian with respect to the decision criteria vector per Equation (10) leads to conditions that must be true if the cost function is minimized while simultaneously satisfying the constraining dynamics. Equation (11) reveals the optimal decision will be known if the rate adjoint can be discerned.

2.4. Hamiltonian System: Adjoint Gradient Equations

The change of the Hamiltonian with respect to the adjoint maps to the time-evolution of the corresponding state in accordance with Equations (12) and (13).

The rate adjoint was discovered to reveal the optimal decision criteria, and the adjoint equations reveal the rate adjoint is time-parameterized with two unknown constants still to be sought. Together, Equations (11)–(13) form a system of differential equations to be solved with boundary conditions (often referred to as a two-point boundary value problem in mathematics).

2.5. Terminal Transversality of the Enpoint Lagrangian

The endpoint Lagrangian in Equation (14) adjoins the endpoint function endpoint cost and the endpoint constraints functions in Equation (8) and provides a linear measure for endpoint conditions in Equation (7). The endpoint Lagrangian exists at the terminal (final) time alone. The transversality condition in Equation (15) specifies the adjoint at the final time is perpendicular to the cost at the end point. In this problem, the endpoint cost . These Equations (16) and (17) are often useful when seeking a sufficient number of equations to solve the system.

2.6. New Two-Point Boundary Value Problem

For the two-state system, four equations are required with four known conditions to evaluate the equations. In this instance, two Equations (3)–(10) have been formulated for state dynamics, two more Equations (18) and (19) have been formulated for the adjoints, and two more Equations (20) and (21) have been formulated for the adjoint endpoint conditions. Four known conditions, Equations (22)–(25) have also been formulated. Combining Equations (11) and (13) produce Equation (26).

Evaluating Equation (27) with Equation (23) produces the value . Evaluating Equation (28) with Equation (22) produces the value . Evaluating Equation (27) with Equation (25) produces Equation (29), while evaluating Equation (28) with Equation (24) produces Equation (30).

Solving the system of two Equations (29) and (30) produces and . Substituting Equation (26) into Equation (11) with and produces Equation (31), and substitution of and into Equations (27) and (28), respectively, produce Equations (32) and (33) the solution of the trajectory optimization problem.

Equations (31)–(33) constitute the optimal solution for quiescent initial conditions and the state final conditions (zero velocity and unity scaled position). To implement a form of feedback (not classical feedback), consider leaving the initial conditions non-specific in variable-form as described next.

2.7. Real-Time Feedback Update of Boundary Value Problem Optimum Solutions

Classical methods utilize feedback of asserted form for state variable , where the decision criteria (for control or state estimation/observer) and gains are solved to achieve some stated performance criteria. Such methods are used in Section 3 and their results are established as benchmarks for comparison. So-called modern methods utilize optimization problem formulation to eliminate classical gain tuning substituting optimal gain selection but retaining the asserted form of the decision criteria. Such methods are often referred to as “linear-quadratic optimal” estimators or controllers. These estimators are also presented as benchmarks for comparison, where the optimization problem equally weights state errors and estimation accuracy.

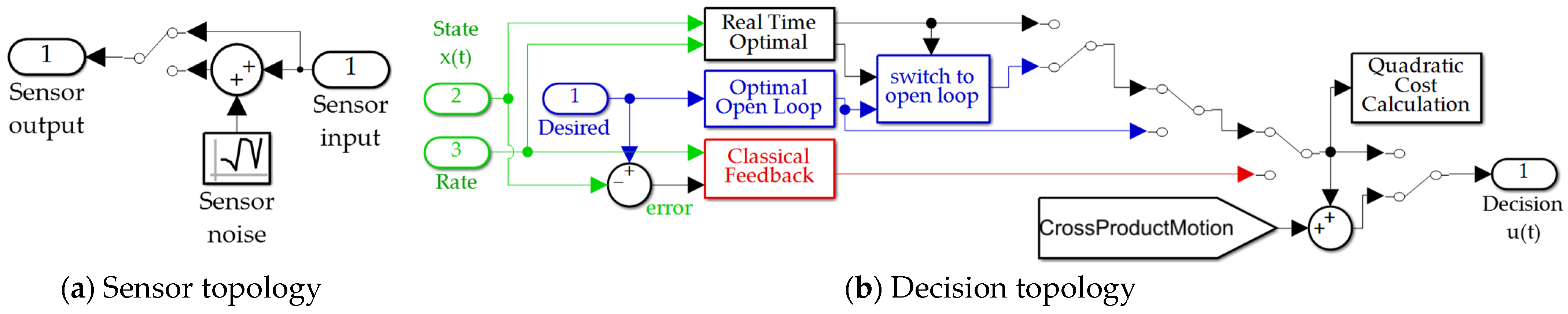

Alternative use of feedback is proposed here (whose simulation is depicted in Figure 3b). Rather than classical feedback topologies asserting utilization of state feedback in formulating the estimator or control’s decision criteria, this section proposes re-labeling the current state feedback as the new initial conditions of the two-point boundary-value problems used to solve for optimal state estimates or control decision criteria in Equations (22) and (23). The solution of (26)–(28) using the initial values of (22) and (23) manifest in values of the integration constants: and . As done in real-time optimal control, the values of the integration constants are left “free” in variable form, and their values are newly established for each discrete instance of state feedback (re-labeled as new initial conditions). This notion is proposed in Proposition 1, whose proof expresses the form of the online calculated integration constants that solve the new optimization problem. The two constants and are utilized in the same decision Equation (31) where the estimates replace the formerly solved values of the boundary value problem resulting in Equation (40).

Figure 3.

Simulink systems for noisy sensors and decision criteria (guidance or control) subsystem: (a) noisy sensor subsystem; (b) decision topology (guidance or control).

Proposition 1.

Feedback may be utilized not in closed form to solve the constrained optimization problem in real time.

Proof of Proposition 1.

Implementing Equations (34)–(37) in matrix form as revealed in Equation (38) permits solution for the unknown constants as a function of time as displayed in Equation (39), and subsequent use of the unknown constants form the new optimal solution from the current position and velocity per Equation (40).

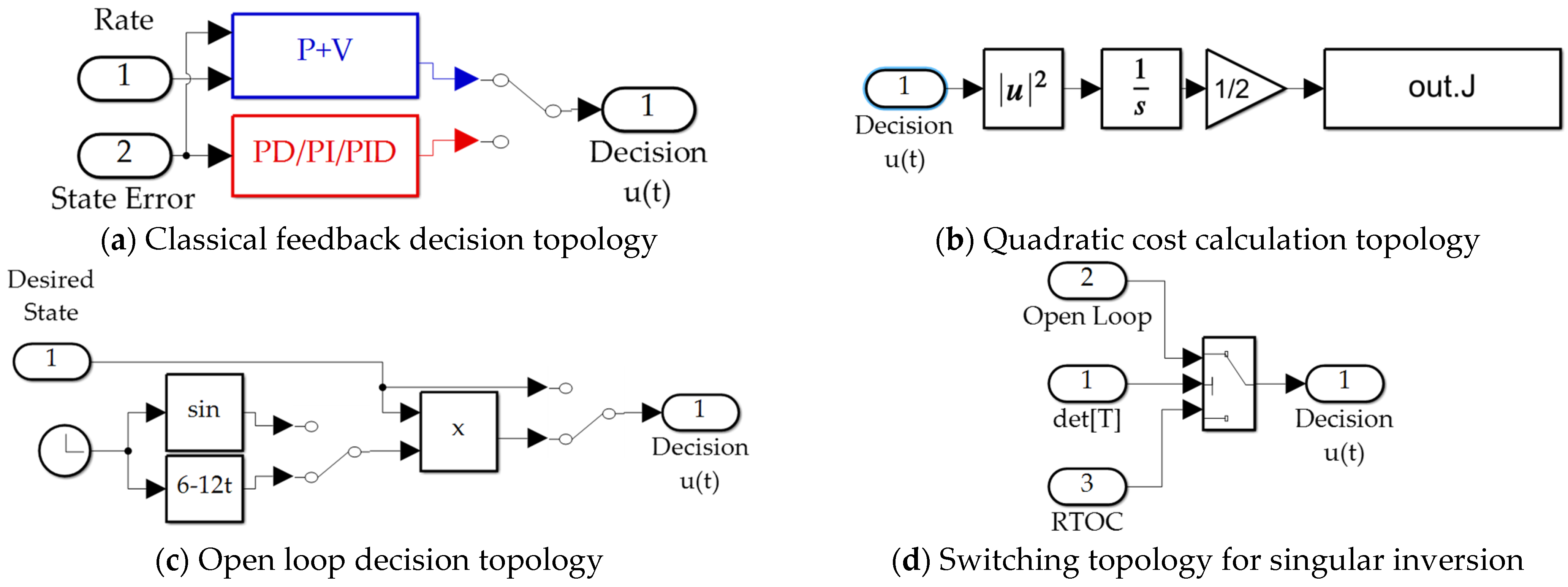

In Section 3, estimation of and becomes singular due to the inversion in Equation (39) as approaching the terminal endpoint, where switching to Equations (31)–(33) is implemented as depicted in Figure 4d to avoid the deleterious effects of singularity when applying Proposition 1. The cases with switching at singular conditions are suffixes with “with switching” in the respective label.

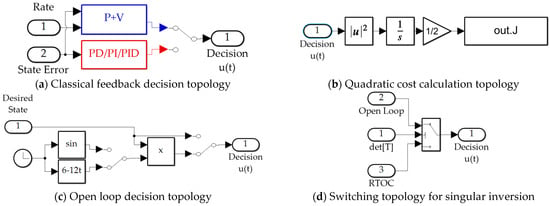

Figure 4.

SIMULINK subsystems: (a) implementation of classical feedback methods; (b) calculation of quadratic cost; (c) open loop decision topologies (sinusoidal and optimal); (d) switching function to disengage use of real-time parameter updates when the matrix in Equation (39) is rank deficient. Notice in subfigure (c) sinusoidal wave input is coded using the identical time-index of the rest of the simulation. The next stages of future research will utilize this identical simulation to investigate efficacy of the proposed virtual sensoring amidst unknown wave actions.

2.8. Feedback Decoupling of Nonlinear, Coupled Motion Due to Cross Products

The real-time feedback update of boundary value problem optimum solutions is often used in the field of real-time optimal control, but a key unaddressed complication remains the nonlinear, coupling cross-products of motion due to rotating reference frames. Here, a feedback decoupling scheme is introduced, allowing the full nonlinear problem to be addressed by the identical scaled problem solution presented, and such is done without simplification, linearization, or reduction by assumption. In proposition 2, feedback decoupling is proposed to augment the optimal solution already derived. The resulting modified decision criteria in Equation (42) is utilized in simulations presented in Section 3 of this manuscript, but a single case omitting Proposition 2 is presented to highlight the efficacy of the approach.

Proposition 2.

The real-time optimal guidance estimation and/or control solution may be extended from the double-integrator to the nonlinear, coupled kinetics by feedback decoupling as implemented in Equation (41).

Proof of Proposition 2.

For nonlinear dynamics of translation or rotation as defined in Equation (1), where the double-integrator is augmented by cross-coupled motion due to rotating reference frames, the same augmentation may be added to the decision criteria in Equation (40) using feedback of the current motion states in accordance with Equation (42). The claim is numerically validated with simulations of “cross-product decoupling” that are nearly indistinguishable from open loop optimal solution, and a single case “without cross-product decoupling” is provided for comparison.

2.9. Analytical Prediction of Impacts of Variations

Assuming Euler discretization (used in the validating simulations) for output y, index i and integration solver timestep h Equation (43) would seem to indicate a linear noise output relationship. Equation (44) indicates the relationship for quiescent initial conditions indicating the results of a style draw. In a Monte Carlo sense (to be simulated) of a very large number n, Equation (45) indicates expectations from theory Equation (46) in simulation for scaled noise entry to the simulation to correctly reflect the noise power of the noisy sensors in the discretized computer simulation. Equation (46) was used to properly enter the sensor noise in the simulation (Figure 2a and Figure 3a).

Assuming this implementation of noise power for a given Euler (ode1) discretization in SIMULINK, 1 − error ellipse may be calculated as Equation (47) for the system in canonical form in accordance with [40] and was implemented in Figure 3a and depicted on “scatter plots” in Section 3’s presentation of results of over ten-thousand Monte Carlo simulations.

2.10. Numerical Simulation in MATLAB/SIMULINK

Validating simulations were performed in MATLAB/SIMULINK Release R2021a with Euler integration solver (ode1) and a fixed time step of 0.01 s, whose results are presented in Section 3, while this subsection displays the SIMULINK models permitting the reader to duplicate the results presented here. Sensor noise was added per Section 2.8. The classical feedback subsystem is displayed in Figure 4a. The optimal open loop subsystem implements Equation (31), and is elaborated in Figure 4b,c. The real time optimal subsystem implements Equations (42) and (31) augmented by feedback decoupling as in Equation (42). The “switch to open loop” subsystem switches when the matrix inverted in Equation (39) is singular indicated by a zero valued determinant and is elaborated in Figure 4d. The quadratic cost calculation computes Equation (3) and is elaborated in Figure 4b, while the cross-product motion feedback implements the cross product of Equation (42). The P + V subsystem and PD/PI/PID subsystems depicted in Figure 4a implement classical methods not re-derived here, but whose computer code is presented in Appendix B, Algorithms A1 and A2.

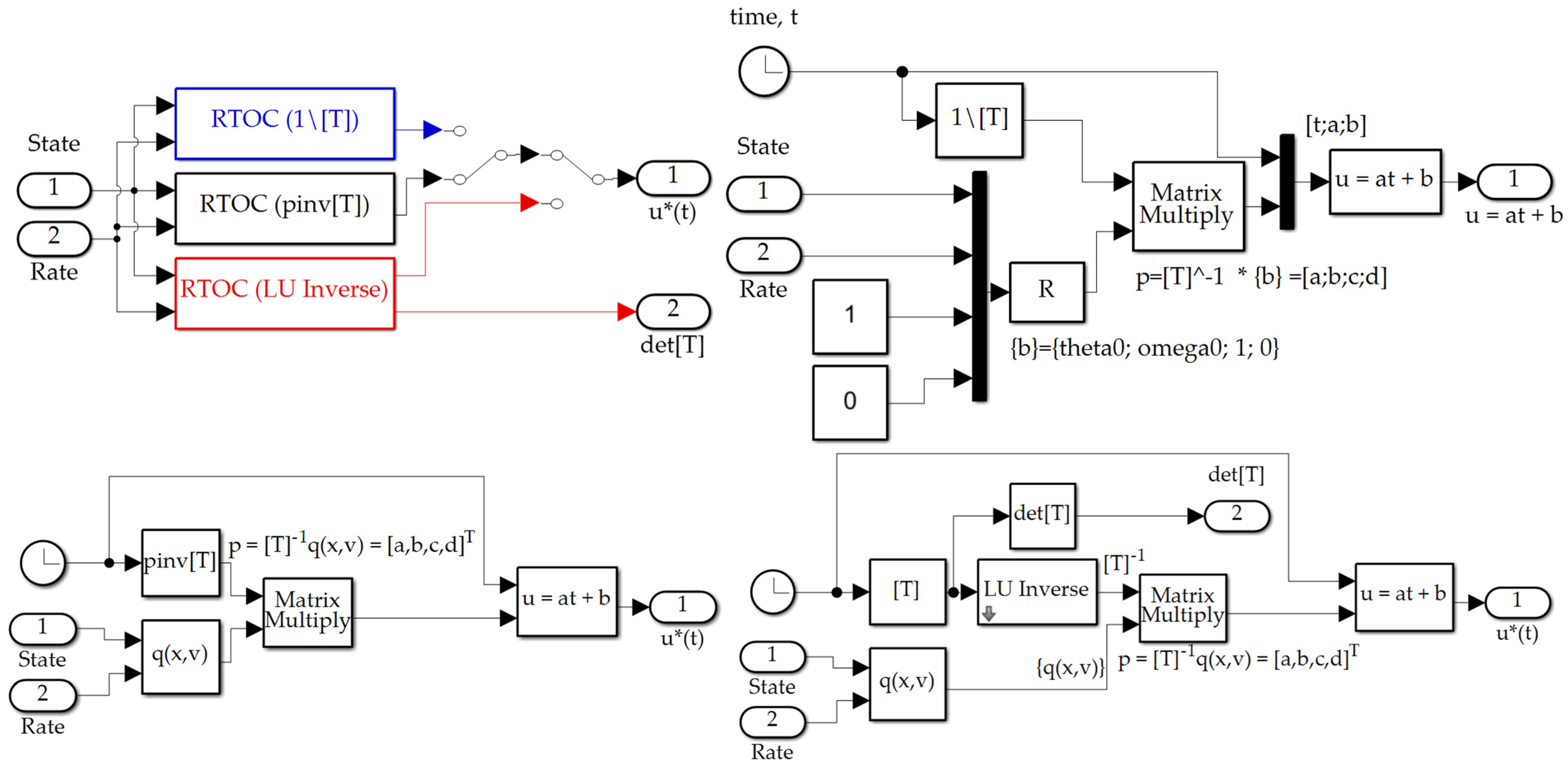

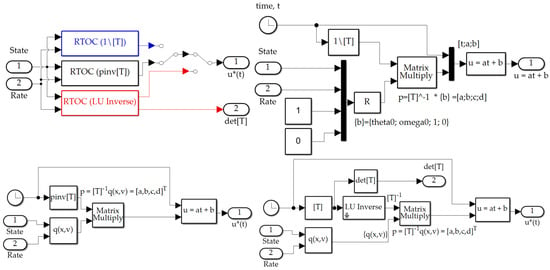

Figure 5 displays the SIMULINK subsystems used to implement the three instantiations of real-time optimization (labeled RTOC from provenance in optimal control) where the switching displayed in Figure 3b permits identical simulation experiments to be performed with all conditions fixed, varying only the proposed implementation. The subsystems execute Equation (39) with three variations of matrix inversion: (1) MATLAB’s backslash “\”, (2) Moore-Penrose pseudoinverse (pinv), (3) LU-inverse.

Figure 5.

SIMULINK subsystems; (a) real-time optimal decision switching topology; (b) real-time optimal calculation of Equation (39) using backslash\inverse; (c) real-time optimal calculation of Equation (39) using pinv inverse; (d) real-time optimal calculation of Equation (39) using LU inverse.

Section 2.10 presented SIMULINK subsystems used to implement the equations derived in the section. Table 1 displays the software configuration used to simulate the equations leading to the results presented immediately afterwards in Section 3.

Table 1.

Software configuration for simulations reported in Section 3.

3. Results

Section 2 derived several options for estimating state, rate, and control simultaneously as outputs of Pontryagin’s treatment of the problem formulated as Hamiltonian systems. Section 2.9 described implementation of sensor noise narratively, while Figure 3 illustrated the topological elaboration using SIMULINK including state and rate sensor with added Gaussian noise whose noise power was set in accordance with Section 2.9. SIMULINK subsystems were presented to aid repeatability (with callback codes in the Appendix B). Those subsystems were used to run more than ten-thousand simulations: a nominal simulation run for each technique with the remainder utilized to evaluate vulnerability to variations as described in Section 2.9. In Section 3.1, benchmarks of performance are established using classical methods for state and rate errors and optimum cost calculated in Section 2. Section 3.2, Section 3.3 and Section 3.4 describe real-time optimal utilization of feedback to establish online estimates of the solution of the modified boundary value problem described in Section 2. Each section respectively evaluates the three methods compared: backslash\inverse, pinv inverse, and LU inverse.

General lessons from the results include:

- Classical feedback estimation methods are very effective at achieving very low estimation errors, but at higher costs utilizing the estimates in the decision criteria (guidance or control).

- Backslash\inverse is relatively inferior to all other inverse methods

- Singular switching generally improves state and rate estimation and costs;

- LU inverse and pinv inverse methods perform alike with disparate strengths and weaknesses relative to each other.

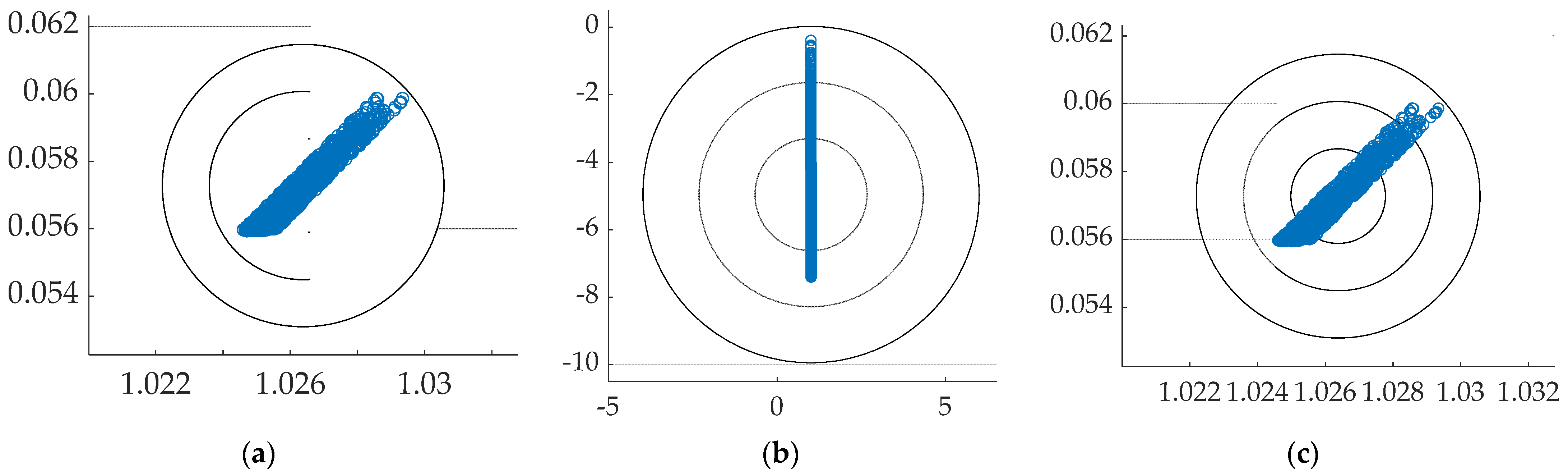

- Choosing the pinv inverse method as the chosen recommendation, Monte Carlo analysis reveals the residual sensitive to parameter variation is indistinguishable from the inherent sensitivity of the optimal solution when using the singular switching technique. Meanwhile, substantial vulnerability to parameter variation is revealed when singular switching is not used.

- Lastly, omitting the complicating cross-products in the problem results in an order of magnitude high estimation errors and several orders of magnitude higher parameter estimation error. Therefore, cross-product motion decoupling is strongly recommended for all instantiations of state and rate estimation.

3.1. Benchmark Classical Methods

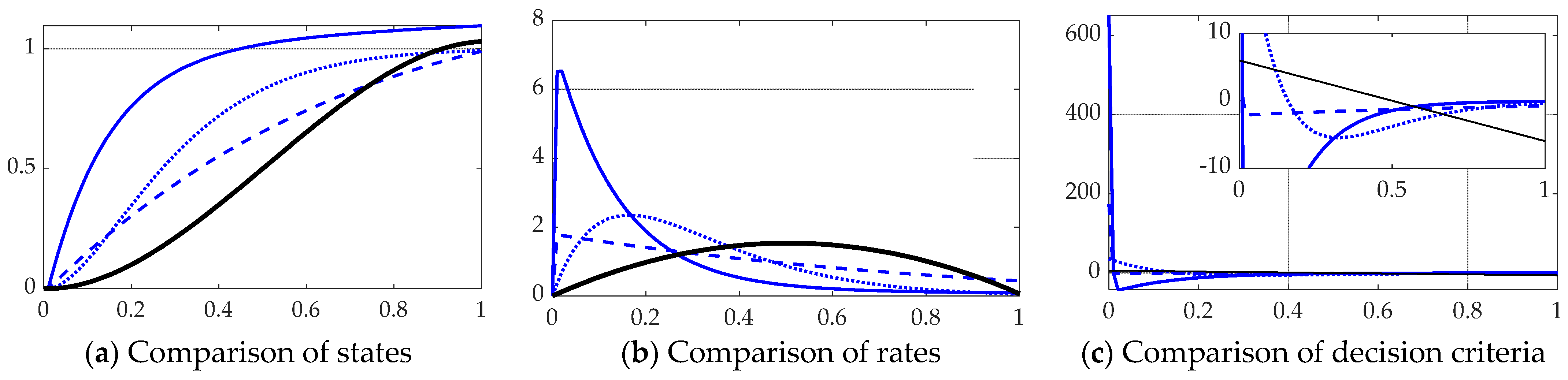

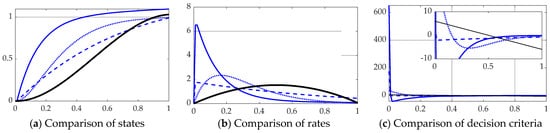

Classical methods as presented in [41,42] with nonlinear decoupling loops as proposed in Equation (42) depicted in Figure 3b were implemented in SIMULINK according to Figure 4a. Computer code implementing these classical methods is presented in Appendix B, Algorithm 1. Estimation was executed by feedback of proportional plus integral plus derivative (PID), proportional plus derivative (PD), and also proportional plus velocity, and the results displayed in Figure 6, establishing the benchmark for state and rate tracking. Table 2 displays quantitative data corresponding to Figure 6’s qualitative displays. Notice the optimal estimation of state, rate, and decision criteria is also included in Figure 6 and Table 2, since the optimal cost benchmark is established by Pontryagin’s treatment in Equation (31).

Figure 6.

Comparison of classical methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: thinner solid blue line is manually tuned PID, dashed line is linear quadratic optimal PD, dotted line is proportional plus velocity tuned to performance specification, thicker solid black line is open loop optimal per Pontryagin (Equation (31) provided for anticipated comparison). (a) States, (b) rates, and (c) decision criteria). Notice the solid black line representing the optimal open loop solution in subfigure (c) is initially positive to create movement in the desired direction, and the control switches exactly at the halfway point to a negative input, slowing progress towards the final desired end states (position and rate states). The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 2.

Comparison of classical decision methods.

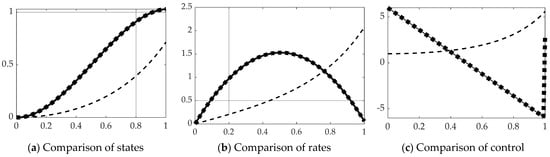

3.2. Real-Time Optimal Methods with Backslash

This section displays the results of real-time optimal estimation using the backslash\inverse depicted in Figure 5b with and without singular switching displayed in Figure 4d to inverse the [T] in Equation (39). The results are compared to open loop optimal results per Equation (31) displayed in Figure 4c. Figure 7 reveals real-time optimal state estimation performs relatively poorly using the MATLAB backslash\inverse, but performance is restored to near-optimal performance when augmented with singular switching. State and rate errors are restored to essentially optimal values, while cost is restored to very near the optimal case as evidenced by the quantitative results displayed in Table 3.

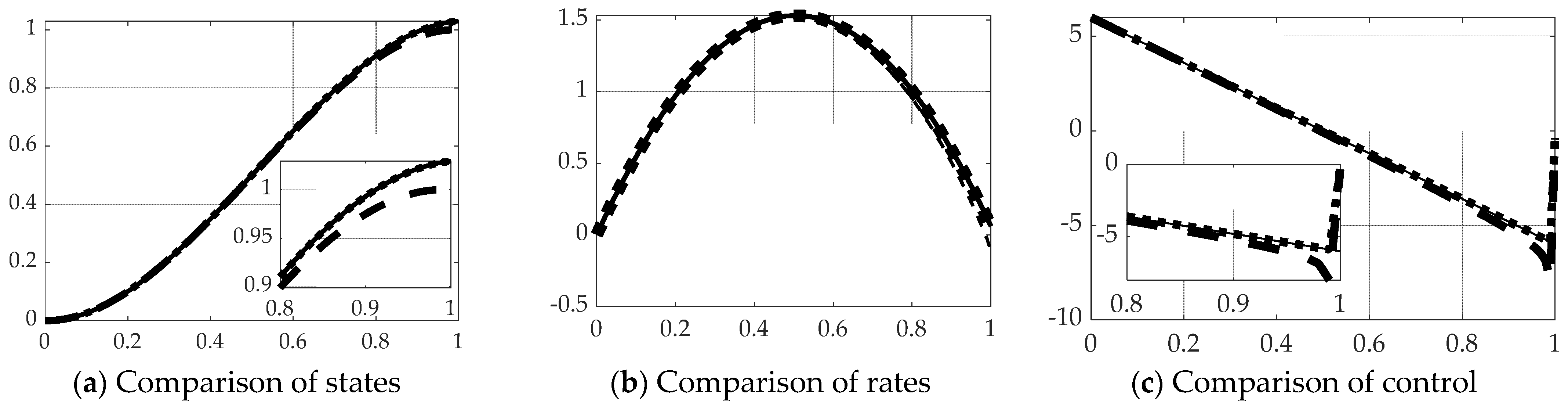

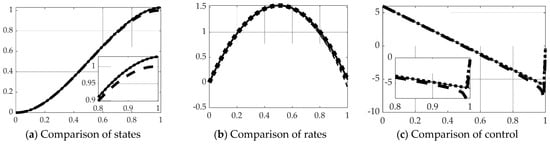

Figure 7.

Comparison of real-time optimal methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: solid line is open loop optimal (benchmark), dashed line is real-time optimal using backslash, thick dotted line is real-time optimal using backslash with singular switching. (a) States, (b) rates. Notice the solid black line representing the optimal open loop solution in subfigure (c) is initially positive to create movement in the desired direction, and the control switches exactly at the halfway point to a negative input, slowing progress towards the final desired end states (position and rate states). The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 3.

Comparison of real-time optimal decision methods using backslash matrix inversion.

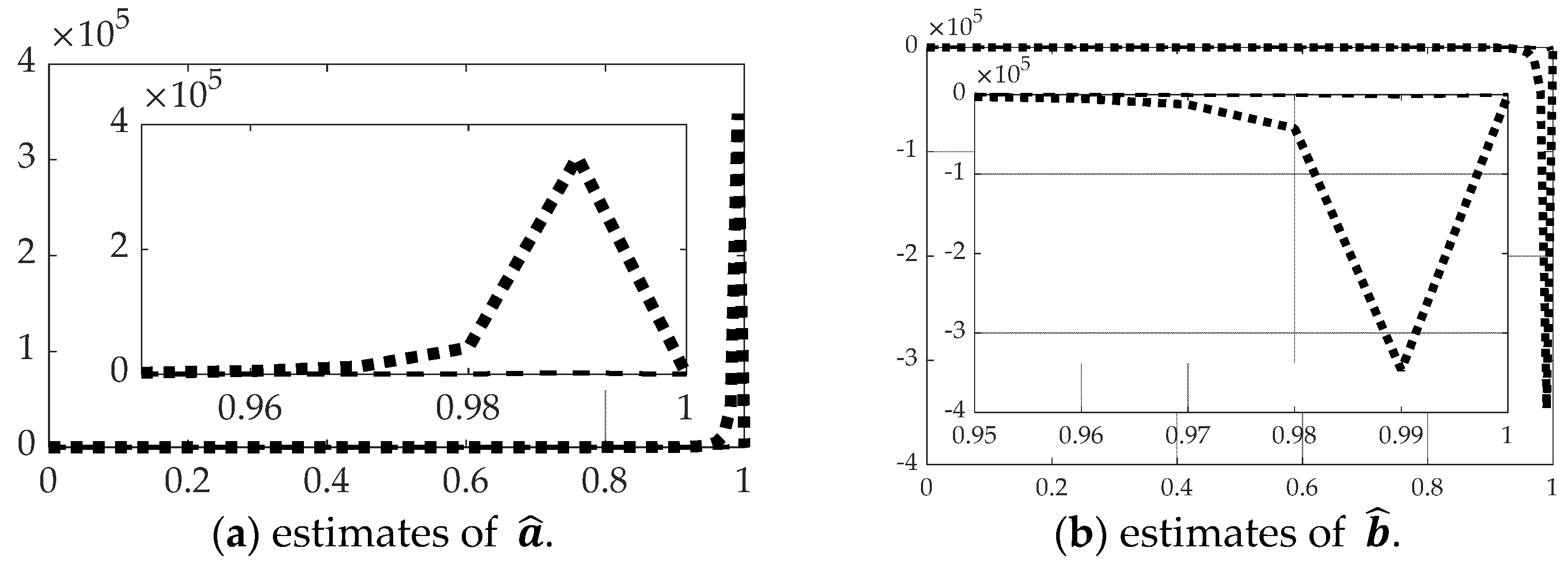

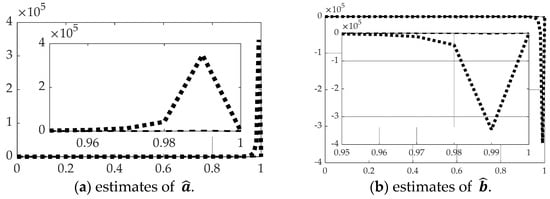

Figure 8 and Table 4 reveal the estimation performance of the constants of integration solving the modified two-point boundary value problem (BVP) using state and rate feedback to reset the initial conditions of the BVP. Oddly, despite relatively superior performance estimating the state and rates when using singular switching augmentation, parameter estimation is far inferior.

Figure 8.

Comparison of real-time optimal methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: dashed line is real-time optimal using backslash, dotted line is real-time optimal using backslash with singular switching. (a) Estimates of , (b) estimates of . The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 4.

Comparison of real-time optimal decision methods using backslash matrix inversion.

Section 3.1 presented the results of classical and optimal methods as benchmarks for performance. Meanwhile, Section 3.2 presented the results of implementing real-time optimal estimation with backslash\inverse with and without singular switching compared to the optimal benchmark. Next, Section 3.3 presents results using pinv inverse with and without singular switching.

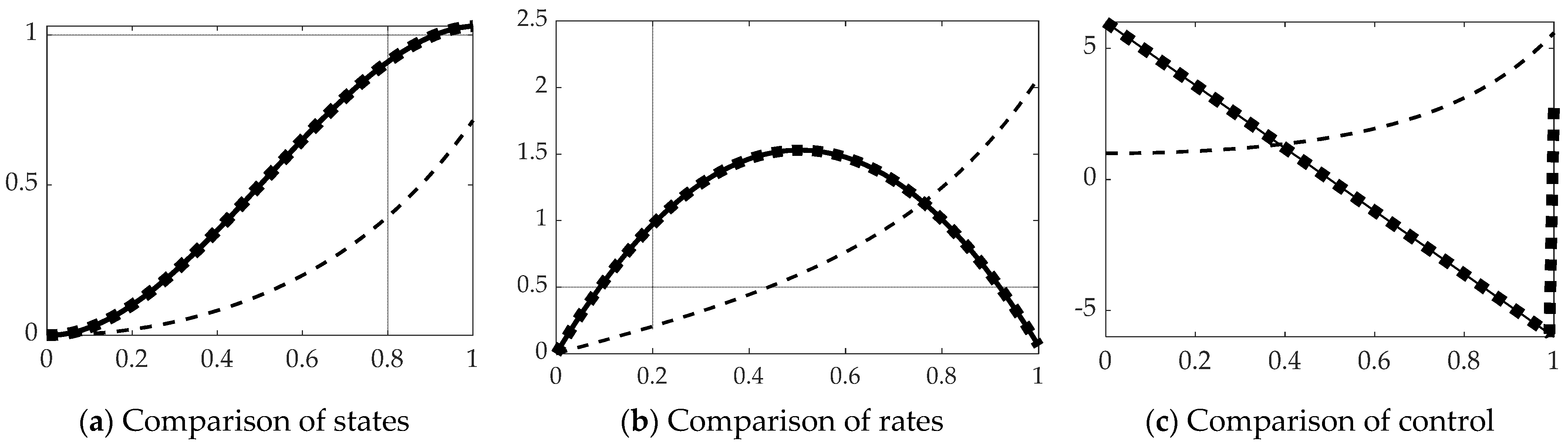

3.3. Real-Time Optimal Methods with Pinv

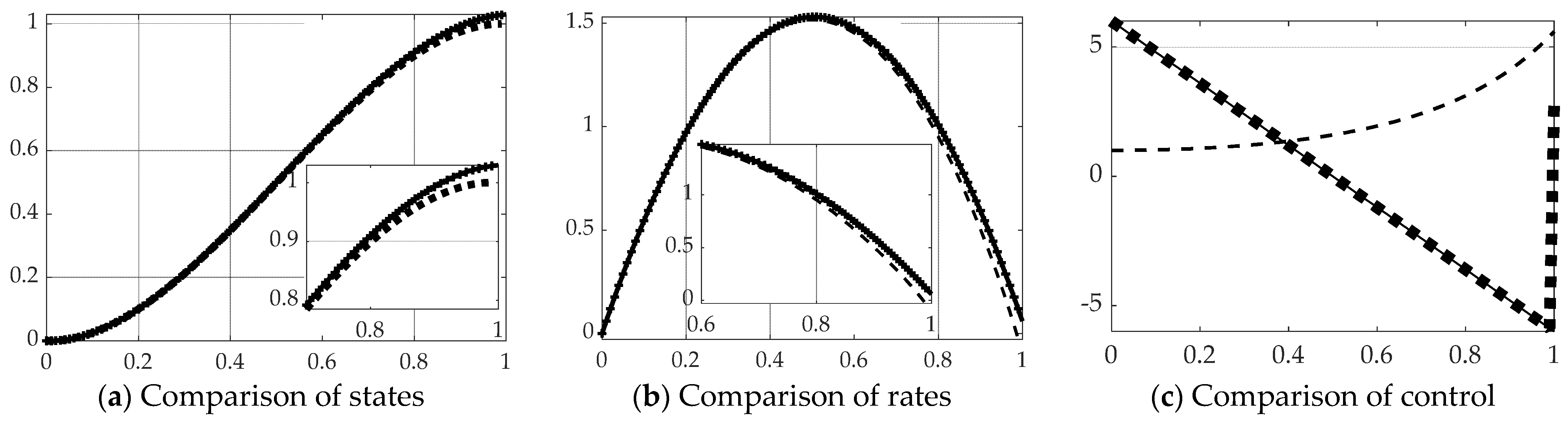

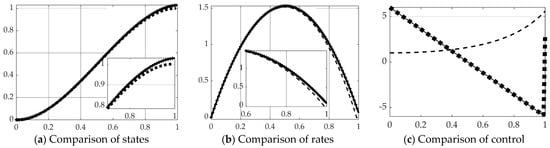

Inversion of the [T] matrix in Equation (39) was also accomplished by the Moore–Penrose pseudoinverse equation: . All other facets of the problem are left identical, while only the method of matrix inversion is modified resulting in state and rates estimates and comparison of control in Figure 9 with corresponding quantitative results in Table 5. Parameter estimation accuracy is displayed in Figure 10 and Table 6.

Figure 9.

Comparison of real-time optimal methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: solid line is open loop optimal (benchmark), dashed line is real-time optimal using backslash, thick dotted line is real-time optimal using pinv with singular switching. (a) States, (b) rates, and (c) decision criteria or control. The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 5.

Comparison of real-time optimal decision methods using pinv matrix inversion.

Figure 10.

Comparison of real-time optimal methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: dashed line is real-time optimal using backslash, dotted line is real-time optimal using pinv with singular switching. (a) Estimates of , (b) estimates of . The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 6.

Comparison of real-time optimal decision methods using p-inv matrix inversion.

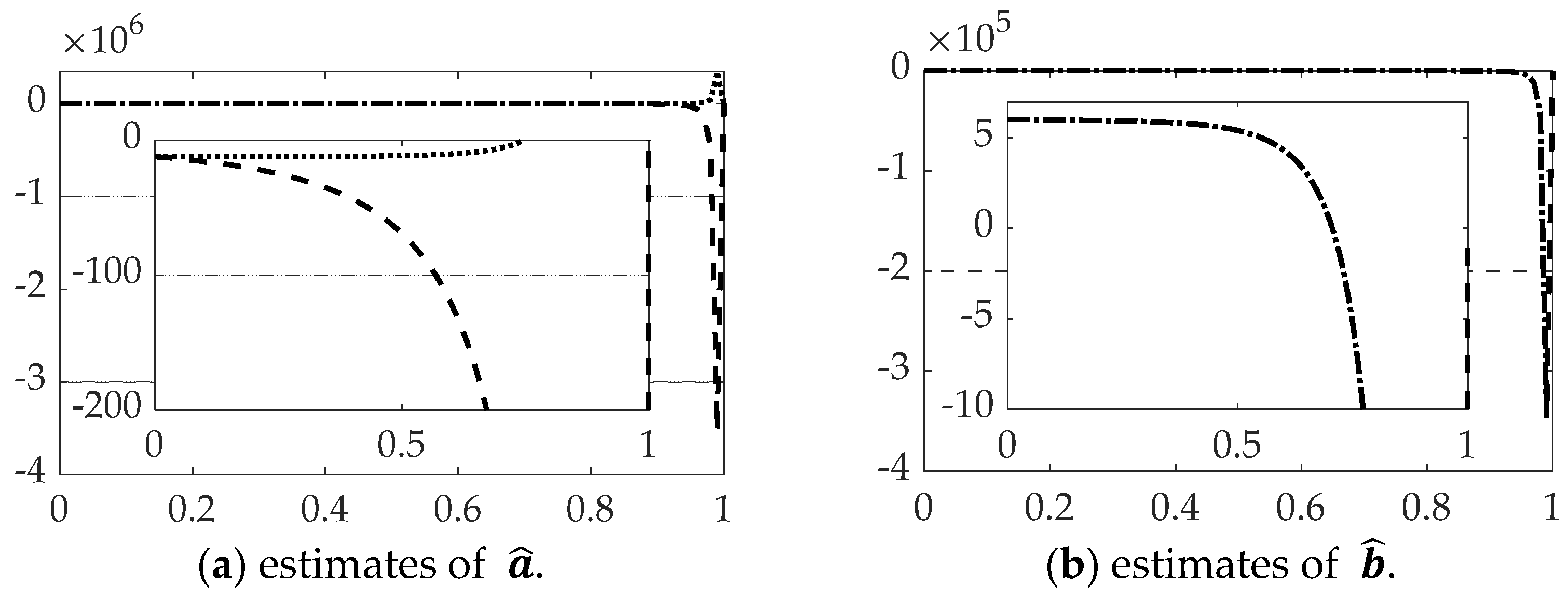

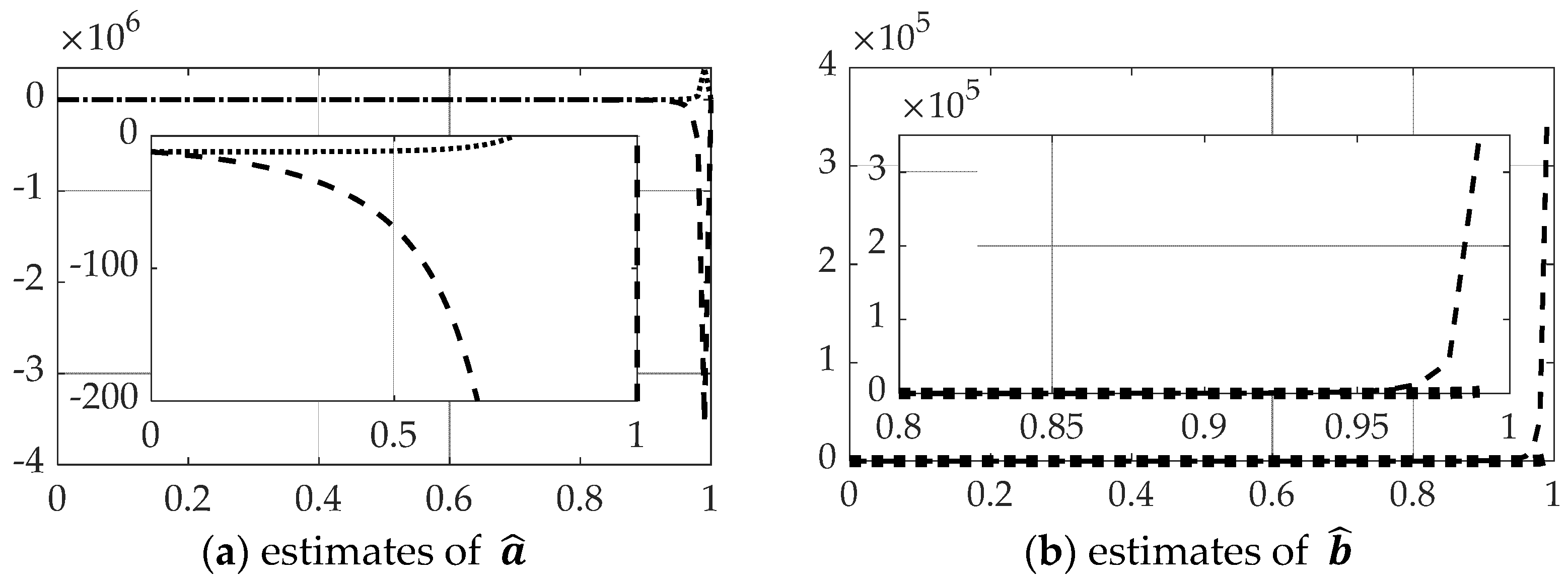

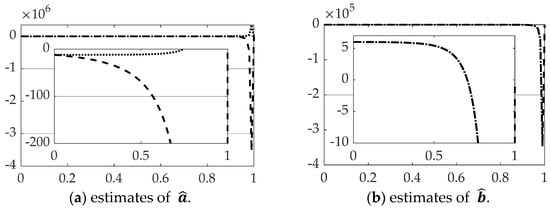

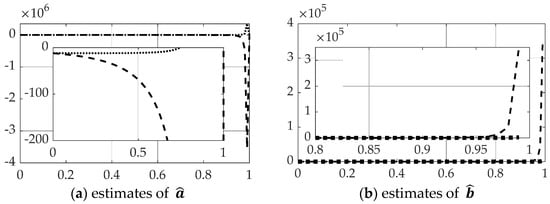

3.4. Real-Time Optimal Methods with Lu-Inverse

Inversion of the [T] matrix in Equation (39) was also accomplished by the LU-inverse, which first creates a pivoted version and then inverts the product of a lower triangular matrix, [L] and an upper triangular matrix [U] based on [T]: . All other facets of the problem are left identical, while only the method of matrix inversion is modified resulting in state and rates estimates and comparison of control in Figure 11 with corresponding quantitative results in Table 7. Parameter estimation accuracy is displayed in Figure 12 and Table 8.

Figure 11.

Comparison of real-time optimal methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: solid line is open loop optimal (benchmark), dashed line is real-time optimal using backslash, thick dotted line is real-time optimal using LU-inverse with singular switching. (a) States, (b) rates, and (c) decision criteria or control. The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 7.

Comparison of real-time optimal decision methods using LU-inverse matrix inversion.

Figure 12.

Comparison of real-time optimal methods with scaled time on the abscissae and respective ordinates titled in the subplot captions: dashed line is real-time optimal using backslash, dotted line is real-time optimal using LU-inverse with singular switching. (a) Estimates of , (b) estimates of . The ranges of the zoomed view in the inset are indicated by the respective scales.

Table 8.

Comparison of real-time optimal decision methods using LU-inverse matrix inversion.

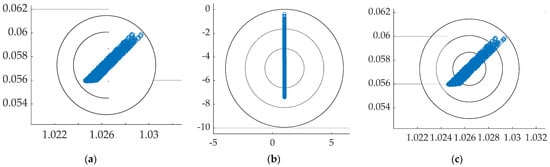

3.5. Monte Carlo Analysis Using Pinv (with Singular Switching) and Open Loop Optimal with Cross-Product Deoupling

Over ten-thousand simulation runs were performed with 10% uniformly random variations in plant parameters (mass and mass moment of inertia). Noise was added to state and rate sensors with zero mean and standard deviation 0.01, and the results are displayed in the “scatter” plots in Figure 13 with corresponding quantitative results displayed in Table 9. Feedback implemented by resetting the initial condition of the reformulated boundary value problem (when implemented with singular switching) yielded optimal results when augmented with cross-product decoupling.

Figure 13.

Comparison of the impacts of system variations on real-time optimal using (a) open loop optimal, (b) pinv without switching, (c) pinv with switching. Scaled state on the abscissae and scaled rate on the ordinates.

Table 9.

Impact of variations with real-time optimal decision methods using pinv inversion.

3.6. Comparison of Results

Section 3.1 presented the benchmark results produced by classical methods and open loop optimal mathematical solutions. Section 3.2 presented results utilizing MATLAB’s backslash\inversion, while Section 3.3 included results using Moore–Penrose pseudoinverse, pinv. Section 3.4 presented results using LU-inverse. Section 3.5 revealed robustness to variations in plan parameters with both state and rate sensor noise. This section consolidates the results into a single table of raw data depicted in Table 10. This data will be used to produce percent performance improvement as figures of merit in the Discussion (Section 4).

Table 10.

Comparison of real-time optimal decision methods.

4. Discussion

State and rate estimation algorithms fused with noisy sensor measurements using several of the proposed methodologies achieve state-of-the art accuracies with optimality that is analytic and deterministic rather than stochastic, and therefore use very simple equations with necessarily low computational burdens. Simple relationships with small numbers of multiplications and additions are required to be comparable to the simplicity of classical methods, but optimum results are produced that exceed the modern notion of linear-quadratic optimal estimation. Implementation of non-standard feedback achieves robustness with the additional computational cost of a matrix inverse, and therefore three optional inversion methods were compared. General lessons taken with manually tuned PID as a benchmark for state and rate estimation errors, while optimal loop optimal cost is the benchmark for the cost of utilization of state estimations for guidance and control:

- Classical feedback estimation methods (tuned per computer code is presented in Appendix B, Algorithm A1) are very effective at achieving very low estimation errors, but at higher costs utilizing the estimates in the decision criteria (guidance or control).

- Linear-quadratic optimal estimation achieved 87% better state estimates, but over 400% poorer rate estimates compared to classical PID with costs over 2000% open-loop optimal costs.

- Classical position plus velocity estimation achieved 90% improved state estimation with over 30% better rate estimation, but cost of implementation remains high (over 400% higher than the optimal benchmark).

- Open loop optimal estimation established the mathematical benchmark for cost, and achieved 72% improved state estimation and 33% rate estimation errors.

- Backslash\inverse is relatively inferior to all other inverse methods, producing 200% poorer state estimation and over 2000% poorer rate estimates with 52% reduced costs compared to the optimal benchmark;

- Singular switching generally improves state and rate estimation and costs;

- Singular switching with backslash\inverse produced 69% improvement in state estimation and 29% improvement in rate estimation with roughly optimal costs.

- Singular switching with LU-inverse produced 72% improvement in state estimation and 33% improvement in rate estimation with roughly optimal costs (3% better than optimal…a numerical curiosity).

- Singular switching with pinv inverse produced 69% improvement in state estimation and 29% improvement in rate estimation with roughly optimal costs (approximately identical improvement percentages to LU-inverse with singular switching).

- LU inverse and pinv inverse methods perform alike with disparate strengths and weaknesses relative to each other.

- Choosing the pinv inverse method as the chosen recommendation, Monte Carlo analysis reveals the residual sensitive to parameter variation is indistinguishable from the inherent sensitivity of the optimal solution when using the singular switching technique. Meanwhile, substantial vulnerability to parameter variation is revealed when singular switching is not used.

- Lastly, omitting the complicating cross-products in the problem results in an order of magnitude higher estimation errors and several orders of magnitude higher parameter estimation error. Therefore, cross-product motion decoupling is strongly recommended for all instantiations of state and rate estimation.

4.1. Notes on Percentages of Performance Improvements

The choice of benchmarks for establishing percentage performance improvements leads to seemingly exaggerated numbers. The current selection of benchmarks emphasizes the strengths of the respective methods: classical feedback estimation methods are designable to achieve high accuracy but suffer from high effort by the decision criteria associated with their use. Optimal methods as instantiated here emphasize minimization of decision effort, so the benchmark for control effort is selected as optimal open loop rather than classical feedback (e.g., manually tuned PID). Percent degradation over thirty thousand percent results when compared to the optimal value (of six) as a benchmark. If the calculation had instead used the manually tuned classical PID as a benchmark, the optimal effort would exhibit an improvement over ninety-nine percent.

The final line in Table 11 illustrates the extreme penalty of not using feedback decoupling of the vector cross-products in Equation (1) representing translation due to the rotating reference. The penalty embodies the deleterious effects of neglecting treatment of the nonlinear, coupled, full six-degree-of-freedom system of equations.

Table 11.

Percent improvement comparison of real-time optimal decision methods percent performance improvement.

4.2. Notes on Executability

Table 12 displays simulation run time for the eleven methods compared in the manuscript. Run-time was established by establishing the run start-time with the tic command, while simulation stop-time was the very first command (toc) following each simulation run. Simply neglecting the cross-product terms results in the fastest calculation. All the methods evaluated are of the same order of magnitude of computational burden. Simulations are depicted graphically in Figure 2, Figure 3, Figure 4 and Figure 5 and alphanumerically in Appendix B.

Table 12.

Simulation run-time comparison.

4.3. Future Research

Notice in Figure 3c sinusoidal wave input is coded using the identical time-index of the rest of the simulation. The next stages of future research will utilize this identical simulation to investigate efficacy of the proposed virtual sensoring amidst unknown wave actions. Secondly, hardware validation of key facets of this research is a logical next step.

5. Conclusions

Using variations of mathematical optimization to provide state, rate, and decision/control provides virtual sensing information useful as sensor replacements. In this instance, arbitrary position and rate sensors were modeled as ideal sensors, plus Gaussian random noise and algorithms were presented and compared that provide very smooth (not noisy) signals for position, rate, and acceleration (manifest in the decision/control). There was no acceleration sensor, so the notion of sensor replacement is manifest for acceleration, while the position and rate information was provided by the selected algorithm acting as a vital sensor. Real-time optimal (nonlinear) state estimation using the Moore–Penrose pseudoinverse (implemented in MATLAB using the pinv command) was revealed to be the most advised approach with very highly accurate estimates and essentially mathematically optimally low costs of utilization. The real-time optimal inverse calculation becomes poorly conditioned as the end-state is approached due to rank deficiency in the matrix inversion, so switching to the open loop optimal in the very end was implemented when the determinant of the matrix became nearly zero.

Funding

This research received no external funding. The APC was funded by the author.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

While the simulation codes used to produce these results are presented in the manuscript and the appendix, data supporting reported results can be obtained by contacting the corresponding author.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

This appendix contains a table of variable and acronym definitions used in the manuscript.

Table A1.

Variables and acronyms.

Table A1.

Variables and acronyms.

| Variable/Acronym | Definition |

|---|---|

| , | external force and torque, respectively |

| , | mass and mass moment of inertia, respectively |

| , | angular velocity and acceleration, respectively |

| , | position and velocity, and acceleration relative to rotating reference |

| & | double integrator plants |

| cross-product translation motion due to rotating reference frame | |

| cross-product translation motion due to rotating reference frame | |

| cross-product translation motion due to rotating reference frame | |

| cross-product translation motion due to rotating reference frame | |

| endpoint cost also known as “Mayer cost” | |

| running cost also known as “Lagrange cost” | |

| cost function | |

| endpoint constraints | |

| state vector of motion state and rate with initial condition and final conditions | |

| control vector | |

| Hamiltonian operator corresponding to system total energy | |

| adjoint operators, also called co-states (corresponding to each state) | |

| endpoint costates | |

| constants of integration | |

| estimates of constants of integration | |

| time variable | |

| states evaluated at the final endpoint time | |

| states evaluated at the initial time | |

| Dot notation for time-derivative | |

| notation for integration | |

| mass moment of inertia, normalized to unity by scaling in Section 2.1 | |

| output at discrete time index | |

| discretization time-interval (timestep) | |

| theoretical variance of output | |

| variance predicted in simulation of proposed methods | |

| critical damping ratio and natural frequency | |

| P+V | proportional plus velocity |

| PD,PI,PID | proportional plus derivative, integral respectively |

| det | determinant |

| RTOC | real-time optimal calculation |

| \ | backslash matrix inversion (a MathWorks technique) |

| pinv | Moore–Penrose pseudoinverse matrix inversion |

| LU | matrix inversion by factoring and inverting a row-pivoted variant |

Appendix B

The appendix contains computer codes inserted into the InifFcn callback and StopFcn call back, respectively. Together with figures, implementation of Equations (1), (31)–(46), this is the only computer code needed to achieve the results presented in this manuscript.

| Algorithm A1. InitFcn callbacks for SIMULINK model depicted in Figures |

| Includes classical feedback tuning methods |

|

| Algorithm A2. StopFcn callbacks for SIMULINK model depicted in Figures |

|

References

- Special Issue “Combining Sensors and Multibody Models for Applications in Vehicles, Machines, Robots and Humans”. Available online: https://www.mdpi.com/journal/sensors/special_issues/multibody_sensors (accessed on 29 May 2021).

- International Conference “Optimal Control and Differential Games” Dedicated to the 110th Anniversary of L. S. Pontryagin, Russia, 12–14 December 2018; Steklov Mathematical Institute of RAS: Moscow. Photo Originally Published in the Newspaper “Soviet Russia”, April 16, 1989. Used with mild Permission (29 June 2021) from the Head of the Information and Publishing Sector, Department of Computer Networks and Information Technology, Steklov Mathematical Institute of Russian Academy of Sciences. Available online: http://www.mathnet.ru/eng/conf1287 (accessed on 24 June 2021).

- Dunn, B. The Future for Unmanned Surface Vessels in the US Navy. Georgetown Security Studies Review. 28 October 2020. Available online: https://georgetownsecuritystudiesreview.org/2020/10/28/the-future-for-unmanned-surface-vessels-in-the-us-navy/ (accessed on 24 June 2021).

- Boat Motion Monitoring. Available online: https://www.sbg-systems.com/applications/ship-motion-monitoring/ (accessed on 29 May 2021).

- Hamilton, W.R. On a General Method in Dynamics; Royal Society: London, UK, 1834; pp. 247–308. [Google Scholar]

- Merz, J. A History of European Thought in the Nineteenth Century; Blackwood: London, UK, 1903; p. 5. [Google Scholar]

- Whittaker, E. A Treatise on the Analytical Dynamics of Particles and Rigid Bodies; Cambridge University Press: New York, NY, USA, 1904; p. 1937. [Google Scholar]

- Church, I.P. Mechanics of Engineering; Wiley: New York, NY, USA, 1908. [Google Scholar]

- Wright, T. Elements of Mechanics Including Kinematics, Kinetics, and Statics, with Applications; Nostrand: New York, NY, USA, 1909. [Google Scholar]

- Gray, A. A Treatise on Gyrostatics and Rotational Motion; MacMillan: London, UK, 1918; ISBN 978-1-4212-5592-7. [Google Scholar]

- Rose, M. Elementary Theory of Angular Momentum; John Wiley & Sons: New York, NY, USA, 1957; ISBN 978-0-486-68480-2. [Google Scholar]

- Greenwood, D. Principles of Dynamics; Prentice-Hall: Englewood Cliffs, NJ, USA, 1965; ISBN 9780137089741. [Google Scholar]

- Pontryagin, L.S.; Boltyanski, V.G.; Gamkrelidze, R.S.; Mishchenko, E.F. The Mathematical Theory of Optimal Processes; CRC Press: Boca Raton, FL, USA, 1962. [Google Scholar]

- Sands, T. Optimization Provenance of Whiplash Compensation for Flexible Space Robotics. Aerospace 2019, 6, 93. [Google Scholar] [CrossRef] [Green Version]

- Sands, T.; Bollino, K.; Kaminer, I.; Healey, A. Autonomous Minimum Safe Distance Maintenance from Submersed Obstacles in Ocean Currents. J. Mar. Sci. Eng. 2018, 6, 98. [Google Scholar] [CrossRef] [Green Version]

- Sands, T. Development of deterministic artificial intelligence for unmanned underwater vehicles (UUV). J. Mar. Sci. Eng. 2020, 8, 578. [Google Scholar] [CrossRef]

- Sands, T. Control of DC Motors to Guide Unmanned Underwater Vehicles. Appl. Sci. 2021, 11, 2144. [Google Scholar] [CrossRef]

- Sands, T.; Kim, J.J.; Agrawal, B.N. Spacecraft fine tracking pointing using adaptive control. In Proceedings of the 58th International Astronautical Congress, Hyderabad, India, 24–28 September 2007; International Astronautical Federation: Paris, France, 2007. [Google Scholar]

- Sands, T.; Kim, J.J.; Agrawal, B.N. Improved Hamiltonian adaptive control of spacecraft. In Proceedings of the IEEE Aerospace, Big Sky, MT, USA, 7–14 March 2009; IEEE Publishing: Piscataway, NJ, USA, 2009; pp. 1–10. [Google Scholar]

- Smeresky, B.; Rizzo, A.; Sands, T. Optimal Learning and Self-Awareness Versus PDI. Algorithms 2020, 13, 23. [Google Scholar] [CrossRef] [Green Version]

- Escalona, J.L.; Urda, P.; Muñoz, S. A Track Geometry Measuring System Based on Multibody Kinematics, Inertial Sensors and Computer Vision. Sensors 2021, 21, 683. [Google Scholar] [CrossRef] [PubMed]

- Cuadrado, J.; Michaud, F.; Lugrís, U.; Pérez Soto, M. Using Accelerometer Data to Tune the Parameters of an Extended Kalman Filter for Optical Motion Capture: Preliminary Application to Gait Analysis. Sensors 2021, 21, 427. [Google Scholar] [CrossRef]

- Trslić, P.; Omerdic, E.; Dooly, G.; Toal, D. Neuro-Fuzzy Dynamic Position Prediction for Autonomous Work-Class ROV Docking. Sensors 2020, 20, 693. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gruosso, G.; Storti Gajani, G.; Ruiz, F.; Valladolid, J.D.; Patino, D. A Virtual Sensor for Electric Vehicles’ State of Charge Estimation. Electronics 2020, 9, 278. [Google Scholar] [CrossRef] [Green Version]

- Yoon, S.; Choi, Y.; Koo, J.; Hong, Y.; Kim, R.; Kim, J. Virtual Sensors for Estimating District Heating Energy Consumption under Sensor Absences in a Residential Building. Energies 2020, 13, 6013. [Google Scholar] [CrossRef]

- Chau, A.; Dawson, J.; Mitchell, P.; Loh, T.H. Virtual Sensing Directional Hub MAC (VSDH-MAC) Protocol with Power Control. Electronics 2020, 9, 1219. [Google Scholar] [CrossRef]

- Oliveira, A.J.; Ferreira, B.M.; Cruz, N.A. A Performance Analysis of Feature Extraction Algorithms for Acoustic Image-Based Underwater Navigation. J. Mar. Sci. Eng. 2021, 9, 361. [Google Scholar] [CrossRef]

- Zhang, Z.; Wang, H.; Yao, H. Pulse Ranging Method Based on Active Virtual Time Reversal in Underwater Multi-Path Channel. J. Mar. Sci. Eng. 2020, 8, 883. [Google Scholar] [CrossRef]

- Barker, L.D.L.; Whitcomb, L.L. Performance Analysis of Ice-Relative Upward-Looking Doppler Navigation of Underwater Vehicles Beneath Moving Sea Ice. J. Mar. Sci. Eng. 2021, 9, 174. [Google Scholar] [CrossRef]

- Thomas, C.; Simetti, E.; Casalino, G. A Unifying Task Priority Approach for Autonomous Underwater Vehicles Integrating Homing and Docking Maneuvers. J. Mar. Sci. Eng. 2021, 9, 162. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, Y.; Zhang, Z.; Wang, H. Multicriteria Ship Route Planning Method Based on Improved Particle Swarm Optimization–Genetic Algorithm. J. Mar. Sci. Eng. 2021, 9, 357. [Google Scholar] [CrossRef]

- Anderlini, E.; Husain, S.; Parker, G.G.; Abusara, M.; Thomas, G. Towards Real-Time Reinforcement Learning Control of a Wave Energy Converter. J. Mar. Sci. Eng. 2020, 8, 845. [Google Scholar] [CrossRef]

- Davidson, J.; Kalmár-Nagy, T. A Real-Time Detection System for the Onset of Parametric Resonance in Wave Energy Converters. J. Mar. Sci. Eng. 2020, 8, 819. [Google Scholar] [CrossRef]

- Sirigu, S.A.; Foglietta, L.; Giorgi, G.; Bonfanti, M.; Cervelli, G.; Bracco, G.; Mattiazzo, G. Techno-Economic Optimisation for a Wave Energy Converter via Genetic Algorithm. J. Mar. Sci. Eng. 2020, 8, 482. [Google Scholar] [CrossRef]

- Veremey, E.I. Optimal Damping Concept Implementation for Marine Vessels’ Tracking Control. J. Mar. Sci. Eng. 2021, 9, 45. [Google Scholar] [CrossRef]

- Volkova, T.A.; Balykina, Y.E.; Bespalov, A. Predicting Ship Trajectory Based on Neural Networks Using AIS Data. J. Mar. Sci. Eng. 2021, 9, 254. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhao, Y.; Zhao, G.; Wang, H.; Zhao, Y. Path-Following Control Method for Surface Ships Based on a New Guidance Algorithm. J. Mar. Sci. Eng. 2021, 9, 166. [Google Scholar] [CrossRef]

- Martin, D.; Kühl, N.; Satzger, G. Virtual Sensors. Bus Inf. Syst. Eng. 2021, 63, 315–323. [Google Scholar] [CrossRef]

- Sands, T. Comparison and Interpretation Methods for Predictive Control of Mechanics. Algorithms 2019, 12, 232. [Google Scholar] [CrossRef] [Green Version]

- Brown, R.; Hwang, P. Introduction to Random Signals and Applied Kalman Filtering, 3rd ed.; Wiley: New York, NY, USA, 1997. [Google Scholar]

- Ogata, K. Modern Control Systems, 5th ed.; Prentice Hall: New York, NY, USA, 2010; ISBN 978-0-13-615673-4. [Google Scholar]

- Lurie, B.; Enright, P. Classical Feedback Control with Nonlinear Multi-Loop Systems, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2019; ISBN 978-1-1385-4114-6. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).