Systematic Review of Electricity Demand Forecast Using ANN-Based Machine Learning Algorithms

Abstract

1. Introduction

- We analyze the Key Performance Indicators (KPIs) used to evaluate the accuracy of the predictions and to compare the performance of different algorithms. In this regard, the predominance of some metrics in the literature (e.g., MAPE, the Mean Absolute Percentage Error) often leads to overlooking important quality parameters, such as the distribution of the error and the maximum forecast error.

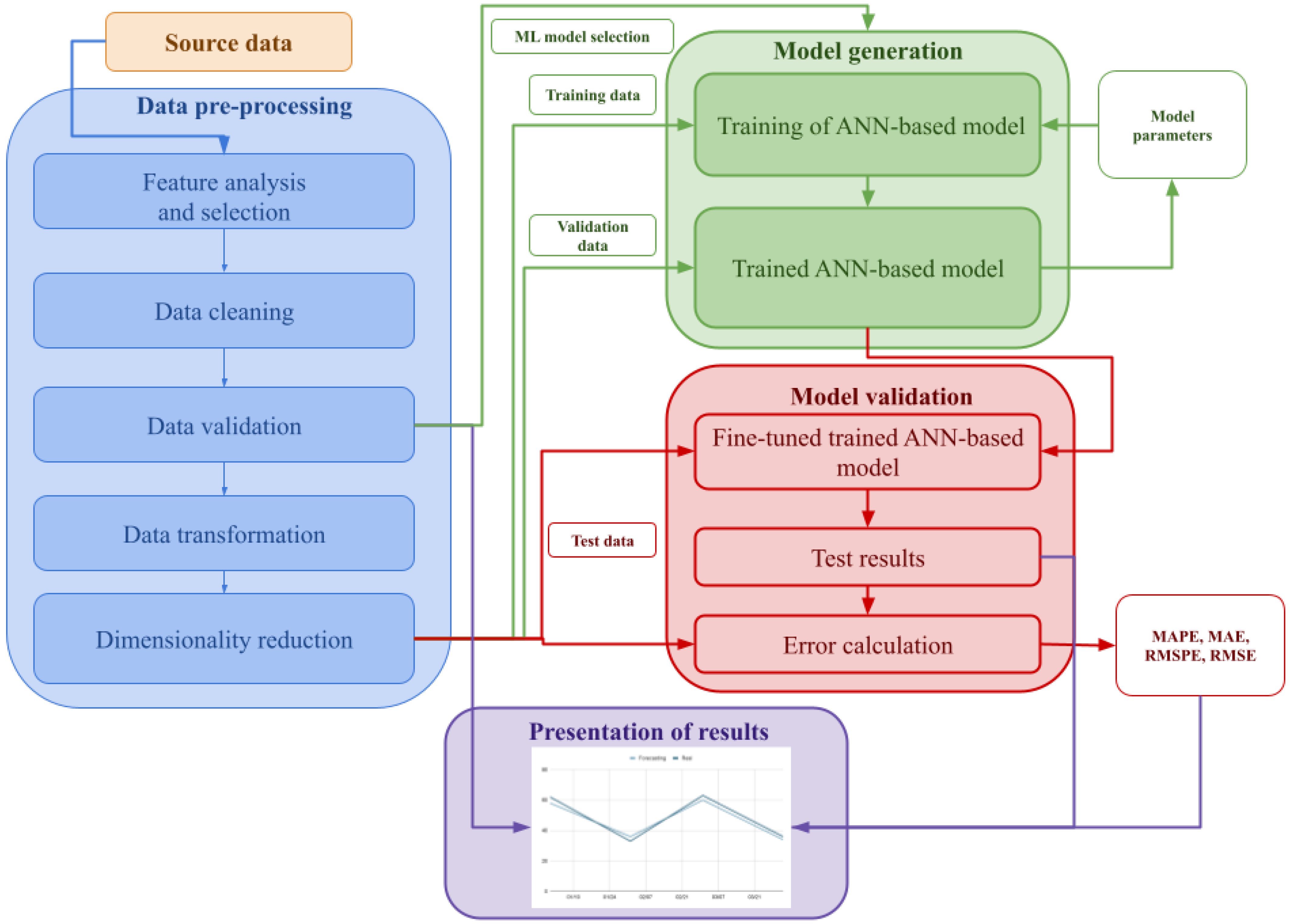

- We look at other fundamental aspects in ML problems, such as the data pre-processing techniques, the selection of training and validation sets, the tuning of the hyper-parameters of the model, the graphical representations and the presentation of the results.

- Last but not least, we discuss the ability to publicly access the datasets used to carry out the experiments and to validate the results and the code of each one of the selected papers. Lack of access makes the results of many papers very hard or impossible to reproduce, reducing their impact as sources of innovation and knowledge.

2. Methodology

- Type of problem to solve.

- Algorithms used.

- Supporting tools.

- Input variables.

- Dataset characteristics.

- Performance indicators.

- Results.

- Particularities.

3. State-of-the-Art ANN-Based Algorithms Used in Load Forecasting Problems

- The Multi-Layer Perceptron (MLP) refers to a canonical feedforward artificial neural network, which typically consists of one input layer, one output layer and a set of hidden layers in between. Early works showed that a single hidden layer is sufficient to yield a universal approximator of any continuous function, and so MLPs were commonly used in papers from the 1990s and early 2000s. However, they have been progressively replaced by more sophisticated recurrent algorithms, which can better capture the complex patterns of load time series. The most recent papers included in Section 5 show how recurrent ANN-based approaches typically outperform MLPs.

- Self-Organizing Maps (SOM) are neural network-based dimensionality reduction algorithms, generally used to represent a high-dimensional dataset as a two-dimensional discretized pattern. They are also called feature maps, as they are essentially re-training the features of the input data and grouping them according to similarity parameters. SOMs are used to recognize common patterns in the input space and train distinct ANNs to be used with the different patterns [35].

- Deep Learning refers to ANNs capable of unsupervised learning from data that are unstructured or unlabeled. The adjective “deep” comes from the use of multiple hidden layers in the network to progressively extract higher-level features from the raw input.

- Many authors (e.g., [20,47,52]) use variants of Recurrent Neural Networks (RNNs), which are capable of learning from previous load time series. Others use Long Short-Term Memory (LSTM) networks, a special kind of RNN that can learn long-term dependencies. These were introduced by Hochreiter and Schmidhuber [57] in 1997 and refined and popularized by many authors in subsequent works. Several of the most recent papers included in the review conclude that LSTM variants achieve low forecasting errors, outperforming the other algorithms in their experiments.

- ANN and Genetic Algorithms (ANN-GA). In these works, the genetic algorithm iteratively applies three operations (selection, crossover and mutation) in order to optimize different parameters of the ANNs. For example, Wang et al. [33] used the GA specifically to improve the back-propagation weights, whereas Azadeh et al. [36] used GAs to tune all the parameters of an MLP.

- ANN and Particle Swarm Optimization (ANN-PSO). PSO is another optimization technique that tries to improve a candidate solution in a search-space with regard to a given measure of quality. It is a metaheuristic (i.e., it makes few or no assumptions about the problem being optimized) that can search very large spaces of candidate solutions, but it cannot guarantee that an optimal solution is ever found. As an example, Son and Kim [58] used PSO to select the 10 most relevant variables to be used as input for SVR (Support Vector Machine Regression) and ANN algorithms. Likewise, He and Xu [22] proposed the use of PSO to optimize the back-propagation process to tune the parameters of an MLP.

- Adaptive Neuro-Fuzzy Inference System (ANFIS). Developed in 1993 by Jang [59], ANFIS combines ANNs and fuzzy logic to overcome the shortcomings of each. It is used in [3] to model load demand problems. Its internal layers use fuzzy inference, which makes the model less dependent on expert knowledge, improves its learning and makes it more adaptable.
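The selection, crossover and mutation loop described above for ANN-GA can be sketched as follows. This is only a minimal illustration and not the method of [33] or [36]: the toy objective `fitness`, the population size, the mutation scale and every function name are assumptions made for the example. In a real setting, the fitness would be the validation error of an ANN built from each candidate's parameters.

```python
import random

def fitness(candidate):
    # Hypothetical stand-in for "validation error of an ANN": lower is better.
    # The optimum of this toy objective is at (0.5, -0.25).
    target = (0.5, -0.25)
    return sum((c - t) ** 2 for c, t in zip(candidate, target))

def evolve(pop_size=30, generations=60, mutation_scale=0.1, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the better half of the population unchanged.
        population.sort(key=fitness)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Crossover: each gene is copied from one of the two parents.
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Mutation: small Gaussian perturbation of every gene.
            child = [g + rng.gauss(0, mutation_scale) for g in child]
            children.append(child)
        population = parents + children
    return min(population, key=fitness)

best = evolve()
```

Because the parents survive each generation unchanged, the best fitness in the population never gets worse from one generation to the next; this is a design choice of the sketch rather than a requirement of genetic algorithms in general.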

4. Particularities of Electric Load Demand As a Problem for ANNs

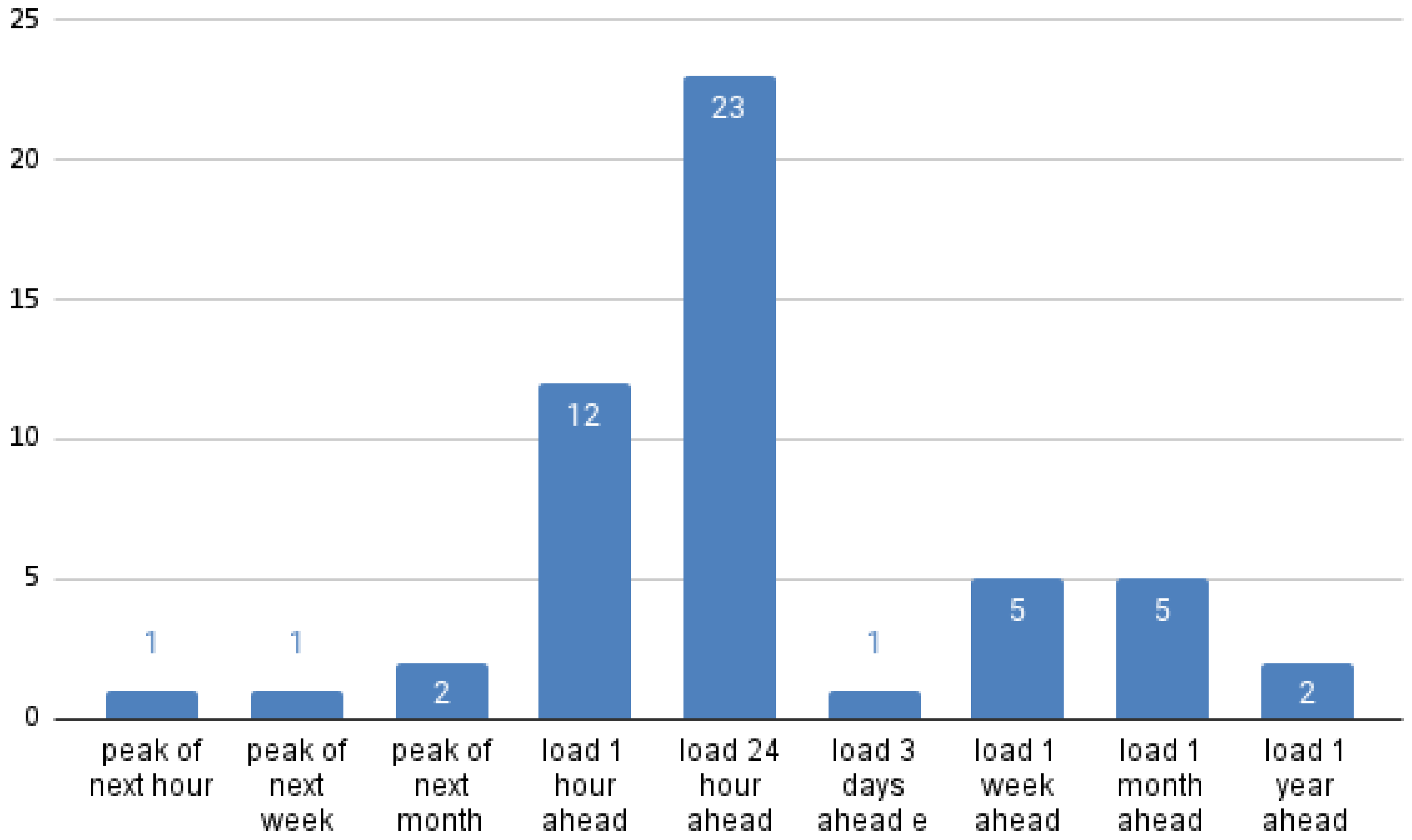

4.1. Prediction Range

- Short-term load forecasting (STLF) refers to predictions up to 1 day ahead.

- Medium-term load forecasting (MTLF) refers to 1 day to 1 year ahead.

- Long-term load forecasting (LTLF) refers to 1–10 years ahead.
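As a small illustration, the three ranges above can be expressed as a helper that labels a forecast horizon. The function name `forecast_type` and the boundary handling are assumptions of this sketch: a horizon of exactly one day is labelled STLF, one year is taken as 365 days, and anything beyond one year is labelled LTLF.

```python
from datetime import timedelta

def forecast_type(horizon: timedelta) -> str:
    """Label a forecast horizon as STLF, MTLF or LTLF."""
    if horizon <= timedelta(0):
        raise ValueError("the horizon must be positive")
    if horizon <= timedelta(days=1):
        return "STLF"  # up to 1 day ahead
    if horizon <= timedelta(days=365):
        return "MTLF"  # 1 day to 1 year ahead (365-day year assumed)
    return "LTLF"      # more than 1 year ahead

labels = [forecast_type(timedelta(hours=6)),
          forecast_type(timedelta(days=30)),
          forecast_type(timedelta(days=5 * 365))]
```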

4.2. Load Forecasting as a Sequence Prediction Problem

- Sequence prediction: from a sequence of values a single value is predicted. For example, from a time series of previous load values we obtain a prediction for the next load value.

- Sequence-to-Sequence (S2S) prediction: we do not obtain a single value but a sequence of predicted values, defining how the load will evolve in a range of future time steps.
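The difference between the two formulations shows up in how the training samples are built from the load series. The following sketch uses a sliding window; the function name `make_windows`, its parameters and the example values are hypothetical and chosen only for illustration.

```python
def make_windows(series, n_inputs, n_outputs=1):
    """Split a load series into (input window, target window) pairs."""
    samples = []
    last_start = len(series) - n_inputs - n_outputs
    for start in range(last_start + 1):
        x = series[start : start + n_inputs]
        y = series[start + n_inputs : start + n_inputs + n_outputs]
        samples.append((x, y))
    return samples

load = [10, 12, 15, 14, 13, 16, 18]  # made-up load values

# Sequence prediction: three past values are mapped to the next value.
single_step = make_windows(load, n_inputs=3)

# S2S prediction: three past values are mapped to the next two values.
multi_step = make_windows(load, n_inputs=3, n_outputs=2)
```

With this series, the first single-step sample is `([10, 12, 15], [14])` and the first S2S sample is `([10, 12, 15], [14, 13])`.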

4.3. Input Variables

4.3.1. Sources of Input Data

4.3.2. Pre-Processing of Input Variables

- Data cleaning. Due to errors either in the sensors or in the data processing, the time series may include invalid or missing data, making it necessary to apply conventional mechanisms to correct these values. For example, depending on the type and amount of missing data, different approaches can be used, such as dropping the variable or filling the gaps with the mean or the last observed value. Removal of duplicate rows may also be needed at this initial stage. Very few papers explain whether any of these techniques were used, even though they may have a significant effect on the model’s performance.

- Data validation. It is necessary to validate the data, especially when they come directly from AMI devices and they have not been obtained from public databases. Data visualization techniques can help to check if the data match expected patterns. It is worth noting that smart meters typically send the measured values using PLC (Power Line Communication) technologies, which may be affected by different electromagnetic interference sources [66]. This makes it especially important to verify the integrity of the data before training the model. Detection and removal of outlier values is typically performed to optimize the training of ANN-based models. Ref. [46] proposes the use of PCA (Principal Component Analysis) as an effective outlier detection approach.

- Data transformation. This phase includes different types of transformations of the data, such as change of units or data aggregation. Data aggregation from individual sources is a common practice to reduce the amount of data, change the scale and minimize variability. More advanced transformation techniques rescale the features so that the algorithm converges faster and more reliably and the forecasting error is minimized. The most common rescaling approaches are normalization and standardization:

- Normalization refers to the process of scaling the original data range to values between 0 and 1. It is useful when the data have varying scales and the used algorithm does not make assumptions about their distribution (as is the case of ANNs).

- Standardization consists of re-scaling the data so that the mean of the values is 0 and the standard deviation is 1. Variables that are measured at different scales would not contribute equally to the analysis and might end up creating biased results through the ANNs. Standardization also avoids problems that would stem from measurements expressed with different units.

- Dimensionality reduction techniques are typically used in machine learning problems in order to optimize the model generation by reducing the number of input variables. However, only a few of the reviewed papers require the use of these techniques, due to the low number of input variables.
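The cleaning and rescaling steps described above can be sketched in a few lines. The function names and the sample values are assumptions made for this illustration, and the sketch presumes that the series contains at least one valid value and is not constant (otherwise the rescaling would divide by zero).

```python
from statistics import mean, pstdev

def fill_missing(values):
    """Replace None with the last observed value.

    Leading gaps are filled with the first valid value (an assumption of this sketch).
    """
    last = next(v for v in values if v is not None)
    filled = []
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled

def normalize(values):
    """Rescale the values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Rescale the values to mean 0 and standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

raw = [2.0, None, 4.0, 6.0, None, 8.0]  # made-up readings with gaps
clean = fill_missing(raw)
scaled = normalize(clean)
zscores = standardize(clean)
```

In practice, the minimum, maximum, mean and standard deviation are usually computed on the training set only and then reused for the validation data, so that no information from the validation period leaks into the model.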

4.3.3. Non-Linearity with Respect to Input Variables

4.4. Output Variables

- A time series of expected demand for the future, i.e., a list of the demand values predicted for specific moments.

- The load peak value of the electric grid at some point in the future (e.g., next day or next week peak).

4.5. Measuring and Comparing Performance

- The Maximum Negative Error (MNE) and Maximum Positive Error (MPE) give the maximum negative and positive difference, respectively, between a predicted value and a real value. These values can be more relevant than the average error for some applications (e.g., to forecast the fuel stock in a power plant).

- The Residual Sum of Squares (RSS) is the sum of the squares of the residuals (the deviations of the predicted values from the actual values). It can be calculated from the RMSE as RSS = n · RMSE², where n is the number of samples. It measures the discrepancy between the data and an estimation model.

- The Standard Deviation of Residuals describes the difference in standard deviations of observed values versus predicted values as shown by points in a regression analysis.

- Some authors also measure quality by comparing the correlation between the time series produced by the different algorithms and the real validation set [13].
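Several of the indicators mentioned above can be computed together from the same residuals. The sketch below is illustrative only: the function name, the sample values and the sign convention (residual = predicted − actual) are assumptions, and MAPE requires that no actual value is zero.

```python
from math import sqrt

def forecast_errors(actual, predicted):
    """Compute MAPE, RMSE, RSS, MNE and MPE for one forecast."""
    # Assumed sign convention: a positive residual is an over-forecast.
    residuals = [p - a for a, p in zip(actual, predicted)]
    n = len(residuals)
    rss = sum(r * r for r in residuals)
    return {
        "MAPE": 100 * sum(abs(r / a) for r, a in zip(residuals, actual)) / n,
        "RMSE": sqrt(rss / n),
        "RSS": rss,
        "MNE": min(residuals),  # most negative difference
        "MPE": max(residuals),  # most positive difference
    }

actual = [100.0, 200.0, 400.0]     # made-up observed load
predicted = [110.0, 190.0, 400.0]  # made-up forecast
kpis = forecast_errors(actual, predicted)
```

In this example the MAPE is 5%, while the maximum errors are ±10 units, which shows how an average indicator can hide the size of the largest individual deviations. The result also satisfies RSS = n · RMSE².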

4.6. Forecasting Model Generation Process

- The ML algorithm proposed to build the forecasting model. The proposed models are typically compared with commonly used ML models. Section 5 includes an exhaustive list of both the ML algorithm being tested and the alternative options.

- As mentioned in Section 4.5, authors use different alternatives to analyze and compare the performance of the different ML algorithms [13,40,48]. However, the comparison of MAPE and RMSE values is the most typical choice.
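A comparison of this kind can be sketched as a ranking of several forecasts of the same validation set by MAPE and RMSE. The model names, the numbers and the function `compare_models` are hypothetical; the "persistence" forecast simply repeats the previous observed value and assumes a value of 90.0 just before the validation window.

```python
from math import sqrt

def mape(actual, predicted):
    return 100 * sum(abs((p - a) / a) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    return sqrt(sum((p - a) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def compare_models(actual, forecasts):
    """Return {model name: (MAPE, RMSE)} ordered by MAPE, best first."""
    scores = {name: (mape(actual, pred), rmse(actual, pred))
              for name, pred in forecasts.items()}
    return dict(sorted(scores.items(), key=lambda item: item[1][0]))

validation = [100.0, 120.0, 110.0, 130.0]  # made-up observed load
forecasts = {
    "persistence": [90.0, 100.0, 120.0, 110.0],  # previous observed value
    "candidate": [102.0, 117.0, 112.0, 127.0],   # hypothetical proposed model
}
ranking = compare_models(validation, forecasts)
```

Including a simple baseline such as persistence makes it easier to judge whether the improvement reported for a proposed model is meaningful.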

4.7. Reproducibility of Forecasting Experiments

5. Summary of the Reviewed Papers

- Title and reference.

- Year of publication.

- Goal.

- Algorithms and optimization techniques used.

- Performance of the best algorithm.

6. Conclusions

6.1. Performance Comparison

6.2. The Best Performing Algorithms

6.3. Influence of the Aggregation Level in Model Performance

6.4. Benchmarks and Reproducibility

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AMI | Advanced Metering Infrastructure |

| ANFIS | Adaptive Neuro-Fuzzy Inference System |

| ANN | Artificial Neural Network |

| ARIMA | Autoregressive integrated moving average |

| ARMAX | Autoregressive moving average with exogenous inputs |

| BFGS | Broyden–Fletcher–Goldfarb–Shanno |

| BP | Back-Propagation |

| BPN | Back-Propagation Network |

| BR | Bayesian Regularization |

| ENN | Evolving Neural Network |

| FFNN | Feed Forward Neural Network |

| FFANN | Feedforward Artificial Neural Network |

| GBRT | Gradient Boosted Regression Trees |

| LM | Levenberg Marquardt (BP algorithm) |

| DNN | Deep Neural Network |

| GA | Genetic Algorithm |

| GMDH | Group Method Data Handling |

| KPI | Key Performance Indicator |

| MAE | Mean Absolute Error |

| MAPE | Mean Absolute Percentage Error |

| MHS | Modified Harmony Search |

| ML | Machine Learning |

| MLP | Multi-Layer Perceptron |

| MLR | Multiple Linear Regression |

| MTLF | Medium-Term Load Forecast |

| LASSO | Least Absolute Shrinkage and Selection Operator |

| LSTM | Long Short-Term Memory networks |

| LTLF | Long-Term Load Forecast |

| PCA | Principal Component Analysis |

| PDF | Probability Distribution Function |

| PDRNN | Diagonal Recurrent Neural Networks |

| PJM | Pennsylvania, New Jersey, and Maryland |

| PSO | Particle Swarm Optimization |

| RBF | Radial Basis Function |

| RBM | Restricted Boltzmann Machine |

| RMSE | Root Mean Square Error |

| RMSPE | Root Mean Square Percentage Error |

| RNN | Recurrent Neural Network |

| SBMO | Sequential Model-Based Global Optimization |

| SFNN | Self-organising Fuzzy Neural Network |

| SOM | Self-Organizing Map |

| STLF | Short-Term Load Forecast |

| SVM | Support Vector Machine |

| SVR | Support Vector Machine Regression |

| WEFuNN | Weighted Evolving Fuzzy Neural Network |

References

- Lee, K.Y.; Park, J.H.; Chang, S.H. Short-term load forecasting using an artificial neural network. IEEE Trans. Power Syst. 1992, 7, 124–130.

- Peng, T.M.; Hubele, N.F.; Karady, G.G. A conceptual approach to the application of neural networks for short term load forecasting. In Proceedings of the IEEE International Symposium on Circuits and Systems, New Orleans, LA, USA, 1–3 May 1990; pp. 2942–2945.

- Zor, K.; Timur, O.; Teke, A. A state-of-the-art review of artificial intelligence techniques for short-term electric load forecasting. In Proceedings of the 2017 6th International Youth Conference on Energy (IYCE), Budapest, Hungary, 21–24 June 2017; pp. 1–7.

- Fallah, S.N.; Deo, R.C.; Shojafar, M.; Conti, M.; Shamshirband, S. Computational Intelligence Approaches for Energy Load Forecasting in Smart Energy Management Grids: State of the Art, Future Challenges, and Research Directions. Energies 2018, 11, 596.

- Alfares, H.; Mohammad, N. Electric load forecasting: Literature survey and classification of methods. Int. J. Syst. Sci. 2002, 33, 23–34.

- Mosavi, A.; Salimi, M.; Ardabili, S.F.; Rabczuk, T.; Shamshirband, S.; Varkonyi-Koczy, A.R. State of the Art of Machine Learning Models in Energy Systems, a Systematic Review. Energies 2019, 12, 1301.

- Metaxiotis, K.; Kagiannas, A.; Askounis, D.; Psarras, J. Artificial intelligence in short term electric load forecasting: A state-of-the-art survey for the researcher. Energy Convers. Manag. 2003, 44, 1525–1534.

- Moharari, N.S.; Debs, A.S. An artificial neural network based short term load forecasting with special tuning for weekends and seasonal changes. In Proceedings of the Second International Forum on Applications of Neural Networks to Power Systems, Yokohama, Japan, 19–22 April 1993; pp. 279–283.

- Mori, H.; Ogasawara, T. A recurrent neural network for short-term load forecasting. In Proceedings of the Second International Forum on Applications of Neural Networks to Power Systems, Yokohama, Japan, 19–22 April 1993; pp. 395–400.

- Srinivasa, N.; Lee, M.A. Survey of hybrid fuzzy neural approaches to electric load forecasting. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Part 5, Vancouver, BC, Canada, 22–25 October 1995; pp. 4004–4008.

- Mohammed, O.; Park, D.; Merchant, R.; Dinh, T.; Tong, C.; Azeem, A.; Farah, J.; Drake, C. Practical experiences with an adaptive neural network short-term load forecasting system. IEEE Trans. Power Syst. 1995, 10, 254–265.

- Marin, F.J.; Garcia-Lagos, F.; Joya, G.; Sandoval, F. Global model for short-term load forecasting using artificial neural networks. IEEE Gener. Transm. Distrib. 2002, 149, 121–125.

- Abu-El-Magd, M.A.; Findlay, R.D. A new approach using artificial neural network and time series models for short term load forecasting. In Proceedings of the CCECE 2003—Canadian Conference on Electrical and Computer Engineering. Toward a Caring and Humane Technology (Cat. No.03CH37436), Montreal, QC, Canada, 4–7 May 2003; Volume 3, pp. 1723–1726.

- Izzatillaev, J.; Yusupov, Z. Short-term load forecasting in grid-connected microgrid. In Proceedings of the 2019 7th International Istanbul Smart Grids and Cities Congress and Fair (ICSG), Istanbul, Turkey, 25–26 April 2019; pp. 71–75.

- Twanabasu, S.R.; Bremdal, B.A. Load forecasting in a Smart Grid oriented building. In Proceedings of the 22nd International Conference and Exhibition on Electricity Distribution (CIRED 2013), Stockholm, Sweden, 10–13 June 2013; pp. 1–4.

- Emre, A.; Hocaoglu, F.O. Electricity demand forecasting of a micro grid using ANN. In Proceedings of the 2018 9th International Renewable Energy Congress (IREC), Hammamet, Tunisia, 20–23 March 2018; pp. 1–5.

- Gezer, G.; Tuna, G.; Kogias, D.; Gulez, K.; Gungor, V.C. PI-controlled ANN-based energy consumption forecasting for smart grids. In Proceedings of the ICINCO 2015—12th International Conference on Informatics in Control, Automation and Robotics, Colmar, France, 21–23 July 2015; pp. 110–116.

- Huang, Q.; Li, Y.; Liu, S.; Liu, P. Hourly load forecasting model based on real-time meteorological analysis. In Proceedings of the 2016 8th International Conference on Computational Intelligence and Communication Networks (CICN), Tehri, India, 23–25 December 2016; pp. 488–492.

- Marino, D.L.; Amarasinghe, K.; Manic, M. Building energy load forecasting using Deep Neural Networks. In Proceedings of the IECON 2016—42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 24–27 October 2016; pp. 7046–7051.

- Shi, H.; Xu, M.; Li, R. Deep Learning for Household Load Forecasting—A Novel Pooling Deep RNN. IEEE Trans. Smart Grid 2018, 9, 5271–5280.

- Ryu, S.; Noh, J.; Kim, H. Deep neural network based demand side short term load forecasting. In Proceedings of the 2016 IEEE International Conference on Smart Grid Communications (SmartGridComm), Sydney, Australia, 6–9 November 2016; pp. 308–313.

- He, Y.; Xu, Q. Short-term power load forecasting based on self-adapting PSO-BP neural network model. In Proceedings of the 2012 Fourth International Conference on Computational and Information Sciences, Chongqing, China, 17–19 August 2012; pp. 1096–1099.

- Jigoria-Oprea, D.; Lustrea, B.; Kilyeni, S.; Barbulescu, C.; Kilyeni, A.; Simo, A. Daily load forecasting using recursive Artificial Neural Network vs. classic forecasting approaches. In Proceedings of the 2009 5th International Symposium on Applied Computational Intelligence and Informatics, Timisoara, Romania, 28–29 May 2009; pp. 487–490.

- Tee, C.Y.; Cardell, J.B.; Ellis, G.W. Short-term load forecasting using artificial neural networks. In Proceedings of the 41st North American Power Symposium, Starkville, MS, USA, 4–6 October 2009; pp. 1–6.

- Dudek, G. Short-term load cross-forecasting using pattern-based neural models. In Proceedings of the 2015 16th International Scientific Conference on Electric Power Engineering (EPE), Kouty nad Desnou, Czech Republic, 20–22 May 2015; pp. 179–183.

- Keitsch, K.A.; Bruckner, T. Input data analysis for optimized short term load forecasts. In Proceedings of the 2016 IEEE Innovative Smart Grid Technologies—Asia (ISGT-Asia), Melbourne, Australia, 28 November–1 December 2016; pp. 1–6.

- Singh, N.K.; Singh, A.K.; Paliwal, N. Neural network based short-term electricity demand forecast for Australian states. In Proceedings of the 2016 IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES), Delhi, India, 4–6 July 2016; pp. 1–4.

- Mitchell, G.; Bahadoorsingh, S.; Ramsamooj, N.; Sharma, C. A comparison of artificial neural networks and support vector machines for short-term load forecasting using various load types. In Proceedings of the 2017 IEEE Manchester PowerTech, Manchester, UK, 18–22 June 2017; pp. 1–4.

- Pan, X.; Lee, B. A comparison of support vector machines and artificial neural networks for mid-term load forecasting. In Proceedings of the 2012 IEEE International Conference on Industrial Technology, Athens, Greece, 19–21 March 2012; pp. 95–101.

- Narayan, A.; Hipel, K.W. Long short term memory networks for short-term electric load forecasting. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 2573–2578.

- Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-Term Residential Load Forecasting Based on LSTM Recurrent Neural Network. IEEE Trans. Smart Grid 2019, 10, 841–851.

- Bashari, M.; Rahimi-Kian, A. Forecasting electric load by aggregating meteorological and history-based deep learning modules. In Proceedings of the 2020 IEEE Power & Energy Society General Meeting (PESGM), Montreal, QC, Canada, 2–6 August 2020; pp. 1–5.

- Wang, Y.; Jing, Y.; Zhao, W.; Mao, Y. Dynamic neural network based genetic algorithm optimizing for short term load forecasting. In Proceedings of the 2010 Chinese Control and Decision Conference, Xuzhou, China, 26–28 May 2010; pp. 2701–2704.

- Darbellay, G.A.; Slama, M. Forecasting the short-term demand for electricity: Do neural networks stand a better chance? Int. J. Forecast. 2000, 16, 71–83.

- Hernández, L.; Baladrón, C.; Aguiar, J.M.; Carro, B.; Sánchez-Esguevillas, A.; Lloret, J. Artificial neural networks for short-term load forecasting in microgrids environment. Energy 2014, 75, 252–264.

- Azadeh, A.; Ghaderi, S.F.; Tarverdian, S.; Saberi, M. Integration of artificial neural networks and genetic algorithm to predict electrical energy consumption. Appl. Math. Comput. 2007, 186, 1731–1741.

- Chang, P.-C.; Fan, C.-Y.; Lin, J.-J. Monthly electricity demand forecasting based on a weighted evolving fuzzy neural network approach. Int. J. Electr. Power Energy Syst. 2011, 33, 17–27.

- Dash, P.K.; Satpathy, H.P.; Liew, A.C. A real-time short-term peak and average load forecasting system using a self-organising fuzzy neural network. Eng. Appl. Artif. Intell. 1998, 11, 307–316.

- Azadeh, A.; Ghaderi, S.F.; Sohrabkhani, S. Forecasting electrical consumption by integration of Neural Network, time series and ANOVA. Appl. Math. Comput. 2007, 186, 1753–1761.

- Azadeh, A.; Ghaderi, S.F.; Sohrabkhani, S. Annual electricity consumption forecasting by neural network in high energy consuming industrial sectors. Energy Convers. Manag. 2008, 49, 2272–2278.

- Santana, Á.L.; Conde, G.B.; Rego, L.P.; Rocha, C.A.; Cardoso, D.L.; Costa, J.C.W.; Bezerra, U.H.; Francês, C.R.L. PREDICT—Decision support system for load forecasting and inference: A new undertaking for Brazilian power suppliers. Int. J. Electr. Power Energy Syst. 2012, 38, 33–45.

- Bunnoon, P.; Chalermyanont, K.; Limsakul, C. Multi-substation control central load area forecasting by using HP-filter and double neural networks (HP-DNNs). Int. J. Electr. Power Energy Syst. 2013, 44, 561–570.

- Rahman, A.; Srikumar, V.; Smith, A.D. Predicting electricity consumption for commercial and residential buildings using deep recurrent neural networks. Appl. Energy 2018, 212, 372–385.

- Son, H.; Kim, C. A Deep Learning Approach to Forecasting Monthly Demand for Residential–Sector Electricity. Sustainability 2020, 12, 3103.

- He, Y.; Qin, Y.; Wang, S.; Wang, X.; Wang, C. Electricity consumption probability density forecasting method based on LASSO-Quantile Regression Neural Network. Appl. Energy 2019, 233–234, 565–575.

- Hernandez, L.; Baladrón, C.; Aguiar, J.M.; Carro, B.; Sanchez-Esguevillas, A.J.; Lloret, J. Short-Term Load Forecasting for Microgrids Based on Artificial Neural Networks. Energies 2013, 6, 1385–1408.

- Bouktif, S.; Fiaz, A.; Ouni, A.; Serhani, M.A. Optimal Deep Learning LSTM Model for Electric Load Forecasting using Feature Selection and Genetic Algorithm: Comparison with Machine Learning Approaches. Energies 2018, 11, 1636.

- Kandananond, K. Forecasting Electricity Demand in Thailand with an Artificial Neural Network Approach. Energies 2011, 4, 1246–1257.

- Ma, Y.-J.; Zhai, M.-Y. Day-Ahead Prediction of Microgrid Electricity Demand Using a Hybrid Artificial Intelligence Model. Processes 2019, 7, 320.

- Amjady, N.; Keynia, F. A New Neural Network Approach to Short Term Load Forecasting of Electrical Power Systems. Energies 2011, 4, 488–503.

- Zheng, H.; Yuan, J.; Chen, L. Short-Term Load Forecasting Using EMD-LSTM Neural Networks with a Xgboost Algorithm for Feature Importance Evaluation. Energies 2017, 10, 1168.

- Hossen, T.; Plathottam, S.J.; Angamuthu, R.K.; Ranganathan, P.; Salehfar, H. Short-term load forecasting using deep neural networks (DNN). In Proceedings of the 2017 North American Power Symposium (NAPS), Morgantown, WV, USA, 17–19 September 2017; pp. 1–6.

- Peng, Y.; Wang, Y.; Lu, X.; Li, H.; Shi, D.; Wang, Z.; Li, J. Short-term load forecasting at different aggregation levels with predictability analysis. In Proceedings of the 2019 IEEE Innovative Smart Grid Technologies—Asia (ISGT Asia), Chengdu, China, 21–24 May 2019; pp. 3385–3390.

- Anupiya, N.; Upeka, S.; Kok, W. Predicting Electricity Consumption using Deep Recurrent Neural Networks. arXiv 2019, arXiv:1909.08182.

- Hossen, T.; Nair, A.S.; Chinnathambi, R.A.; Ranganathan, P. Residential Load Forecasting Using Deep Neural Networks (DNN). In Proceedings of the 2018 North American Power Symposium (NAPS), Fargo, ND, USA, 9–11 September 2018; pp. 1–5.

- Bunnon, P.; Chalermyanont, K.; Limsakul, C. The Comparision of Mid Term Load Forecasting between Multi-Regional and Whole Country Area Using Artificial Neural Network. Int. J. Comput. Electr. Eng. 2010, 2, 334.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Son, H.; Kim, C. Short-term forecasting of electricity demand for the residential sector using weather and social variables. Resour. Conserv. Recycl. 2017, 123, 200–207.

- Jang, J.R. ANFIS: Adaptive-Network-Based Fuzzy Inference System. IEEE Trans. Syst. Man Cybern. 1993, 23, 665–685.

- Brownlee, J. Long Short-Term Memory Networks with Python. Develop Sequence Prediction Models With Deep Learning; Machine Learning Mastery: San Juan, PR, USA, 2017.

- Hernández, L.; Baladrón, C.; Aguiar, J.M.; Calavia, L.; Carro, B.; Sánchez-Esguevillas, A.; Cook, D.J.; Chinarro, D.; Gómez, J. A Study of the Relationship between Weather Variables and Electric Power Demand inside a Smart Grid/Smart World Framework. Sensors 2012, 12, 11571–11591.

- He, Y.; Liu, R.; Li, H.; Wang, S.; Lu, X. Short-term power load probability density forecasting method using kernel-based support vector quantile regression and Copula theory. Appl. Energy 2017, 185, 254–266.

- Zheng, J.; Zhang, L.; Chen, J.; Wu, G.; Ni, S.; Hu, Z.; Weng, C.; Chen, Z. Multiple-Load Forecasting for Integrated Energy System Based on Copula-DBiLSTM. Energies 2021, 14, 2188.

- Shove, E.; Pantzar, M.; Watson, M. The Dynamics of Social Practice: Everyday Life and How it Changes; SAGE Publications: Thousand Oaks, CA, USA, 2012.

- Ventseslav, S.; Vanya, M. Impact of data preprocessing on machine learning performance. In Proceedings of the International Conference on Information Technologies (InfoTech-2013), Singapore, 1–2 December 2013.

- Sendin, A.; Peña, I.; Angueira, P. Strategies for Power Line Communications Smart Metering Network Deployment. Energies 2014, 7, 2377–2420.

- He, Y.; Wang, Y. Short-term wind power prediction based on EEMD-LASSO-QRNN model. Appl. Soft Comput. 2021, 105, 107288.

- Taleb, N.N.; Bar-Yam, Y.; Cirillo, P. On single point forecasts for fat-tailed variables. Int. J. Forecast. 2020.

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 2014, 7, 1247–1250.

- Papers with Code. Available online: https://paperswithcode.com/ (accessed on 30 June 2021).

- Chen, J.; Do, Q.H. Forecasting daily electricity load by wavelet neural networks optimized by cuckoo search algorithm. In Proceedings of the 2017 6th IIAI International Congress on Advanced Applied Informatics (IIAI-AAI), Hamamatsu, Japan, 9–13 July 2017; pp. 835–840.

- Mohammad, F.; Lee, K.B.; Kim, Y.-C. Short Term Load Forecasting Using Deep Neural Networks. arXiv 2018, arXiv:1811.03242.

- Wang, Y.; Chen, Q.; Hong, T.; Kang, C. Review of Smart Meter Data Analytics: Applications, Methodologies, and Challenges. IEEE Trans. Smart Grid 2019, 10, 3125–3148.

| Publisher | Number of Papers | References |

|---|---|---|

| IEEE | 29 | [2,3,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33] |

| ScienceDirect | 12 | [34,35,36,37,38,39,40,41,42,43,44,45] |

| MDPI | 8 | [44,46,47,48,49,50,51] |

| arXiv | 3 | [52,53,54] |

| Others | 2 | [55,56] |

| Type of Forecast | Number of Papers |

|---|---|

| STLF | 46 |

| MTLF | 8 |

| LTLF | 2 |

| Input Variable | Number of Papers |

|---|---|

| Previous load time series | 37 |

| Previous load and weather time series | 10 |

| Previous load, weather and economic variables time series | 3 |

| Data | Number of Papers |

|---|---|

| Aggregated data from a geographic area | 34 |

| Aggregated data from microgrids | 8 |

| Individual meters deployed in the public power grid | 13 |

| Data Source | Number of Papers |

|---|---|

| Public data | 14 ([12,20,24,25,26,31,40,47,50,51,52,53,54,58]) |

| Private data | 37 |

| Tool | Number of Papers |

|---|---|

| Not mentioned | 19 |

| MATLAB | 12 |

| TensorFlow-based | 6 |

| Custom code | 3 |

| Title | Year | Goal | Algorithms | Best Algorithm |

|---|---|---|---|---|

| An artificial neural network-based short term load forecasting with special tuning for weekends and seasonal changes [8] | 1993 | To compare the performance of ANN using season, day of week, temperature and previous power peaks as inputs to forecast 1-week ahead peaks. | MLP | MAPE MLP: 1.60% |

| A recurrent neural network for short-term load forecasting [9] | 1993 | To compare the performance of recurrent and feedforward ANNs. | Feedforward 3-layer MLP; 3-layer recurrent neural network with BP and diffusion learning | MAPE RNN with diffusion learning: 2.07% |

| Practical experiences with an adaptive neural network short-term load forecasting system [11] | 1995 | To compare performance of statistical methods and MLP to forecast demand 7 days ahead in blocks of 3 h. | 3-layer MLP (hidden layer with 3 neurons) with daily, weekly and monthly adaptation | MAPE MLP: 6% |

| A real-time short-term peak and average load forecasting system using a self-organising fuzzy neural network [38] | 1998 | To predict the demand peak 1 day and 1 week ahead comparing the performance of SFNN (Self-organising Fuzzy Neural Network), FFN (Fuzzy Neural Network) and MLP. | SFNN, FFN and MLP | MAPE SFNN: 1.8% for 1 day ahead peak load forecast and 1.6% for 1 week ahead |

| Forecasting the short-term demand for electricity: Do neural networks stand a better chance? [34] | 2000 | To compare feedforward ANN with ARIMA and ARMAX using previous demand and temperature as inputs. To analyze the non-linearity of the demand forecast problem. | ARIMA, ARMAX and MLP | MAPE MLP: 0.8% |

| Global model for short-term load forecasting using artificial neural networks [12] | 2002 | To check performance of MLPs trained for classes defined using self-organizing maps with statistical methods. No comparison with other algorithms. | Kohonen’s self-organising map + Elman Recurrent Network | MAPE: 1.15–1.61% |

| A new approach using artificial neural network and time series models for short term load forecasting [13] | 2003 | To check accuracy of ANN to predict forecast using input variables selected depending on their correlation coefficient compared with ARIMA. | MLP using correlation coefficient to calculate weights | MAPE: 2.241% |

| Forecasting electrical consumption by integration of Neural Network, time series and ANOVA [39] | 2007 | To compare the performance of MLP to predict aggregated load from time series using analysis of variance and time series approach. Linear regression ANOVA and Duncan’s Multiple Range Tests are used to validate results. | MLP | MAPE: MLP 1.56% |

| Integration of artificial neural networks and genetic algorithm to predict electrical energy consumption [36] | 2007 | To check performance of MLP and GA for LTLF in the Iranian agricultural sector. | MLP + GA | MAPE MLP: 0.13% |

| Annual electricity consumption forecasting by neural network in high energy consuming industrial sectors [40] | 2008 | To check the performance of ANN algorithm to predict annual load of energy intensive industries using different input variables such as electricity price, number of consumers, fossil fuel price, previous load and industrial sector. ANOVA and Duncan’s multiple range test are used for formal comparison and validation. | MLP using different networks and regression. | MAPE: MLP 0.99% |

| Daily load forecasting using recursive Artificial Neural Network vs. classic forecasting approaches [23] | 2009 | To compare the performance of RNN with other analytical methods for 24-h ahead forecasts for a region of Romania. | RNN (using hyperbolic tangent as activation function). | RNN performs better. Least square value used instead of MAPE. |

| Short-term load forecasting using artificial neural networks [24] | 2009 | To compare the performance of ANN for 1-h ahead performance using previous load, weekday, month and temperature as input values with the results of other studios. ISO-New England control data are used to validate the algorithm. | Feed-Forward MLP using LM as BP algorithm. | MAPE: 0.439% (for ISO-New England) |

| Dynamic neural network-based genetic algorithm optimizing for short term load forecasting [33] | 2010 | To compare BP and Genetic Algorithm-based BP to find the optimal weights of a 3-layer MLP for one hour ahead load forecasts using load time series and weather variables | 3-layer MLP using BP and GA-BP | MAPE: GA-BP 1.6% (data calculated from results for day max load) |

| The comparison of mid term load forecasting between multi-regional and whole country area using Artificial Neural Network [56] | 2010 | To compare the forecasting results using MLP with data of Thailand as a whole or disaggregated in several regions. | MLP | MAPE monthly consumption multi-region: 1.45 peak: 2.48 |

| Forecasting electricity demand in Thailand with an Artificial Neural Network approach [48] | 2011 | To compare MLP with ARIMA and Multi-Linear Regression for LTLF for Thailand using previous load time series and economical variables. | Different topologies of MLP and RBF. | MAPE MLP: 0.96% |

| A new neural network approach to short term load forecasting of electrical power systems [50] | 2011 | To compare performance of ANN using MHS (Modified Harmony Search) learning algorithm with other techniques STLF forecast using PJM ISO data | ARMA, RBF, MLP trained by BR (Bayesian Regularization), MLP trained by BFGS (Broyden, Fletcher, Goldfarb, Shanno) and MLP neural network trained by LM | MAPE: MLP MHS 1.39% |

| PREDICT – Decision support system for load forecasting and inference: A new undertaking for Brazilian power suppliers [41] | 2011 | To analyze the use of wavelets, time series analysis methods and artificial neural networks, for both mid and long term forecasts. | MLP with BP and LM | MAPE: 0.72% |

| Monthly electricity demand forecasting based on a weighted evolving fuzzy neural network approach [37]. | 2011 | To compare WEFuNN (Weighted Evolving Fuzzy Neural Network) with ENN and BPN for 1-month ahead load forecast. | WEFuNN, Winter’s, MRA | MAPE WEFuNN: 6.43% |

| Short-term power load forecasting based on self-adapting PSO-BP neural network model [22] | 2012 | To show that PSO-BP algorithm can obtain optimal MLP parameters outperforming BP to forecast hourly 1-day ahead load demand for a city of China. | MLP getting the parameters with PSO-BP and BP | MAPE PSO-BP: 2.39% |

| A comparison of support vector machines and artificial neural networks for mid-term load forecasting [29] | 2012 | To compare the performance of SVM and ANN for MTLF with load and weather data. | MLP with several different numbers of neurons (2, 5, 8, 20/30). Usage of GA and PSO to obtain optimal SVMs models. | The authors conclude that both ANN and SVM are suitable, but SVM is more reliable and stable for load forecasting. |

| Load forecasting in a smart grid oriented building [15] | 2013 | To compare performance of ARIMA, MLP, SVM and STLF (next hour forecast) in university campus microgrid. | Seasonal ARIMA, MLP and SVM. | MAPE MLP: 5.3% |

| Short-term load forecasting for microgrids based on Artificial Neural Networks [46] | 2013 | To check ANN performance for load forecasting in a microgrid-sized Spanish region from previous load time series. | MLP (16 neurons in hidden layer) | MAPE: 2–5% |

| Multi-substation control central load area forecasting by using HP-filter and double neural networks (HP-DNNs) [42] | 2013 | To compare the use of HP (Hodris-Prescott) filter to decompose the previous load signals into trend and cyclical signals and DNN (Double Neural Network) for LTLF with other algorithms. | HP-DNN | MAPE HP-DNNS: 1.42–3.20% |

| Check the performance of MLP using SOM and k-means to find the right number of MLPs for STLF for a microgrid in Spain [35]. | 2014 | To check the performance of MLP using SOM and k-means to find the right number of MLPs for STLF for a microgrid in Spain. | 3-stage: SOM + k-means clustering and MLP. No other algorithms were tested. | MAPE: 2.73–3.22% |

| PI-controlled ANN-based energy consumption forecasting for smart grids [17]. | 2015 | To compare ANN and PI-ANN (Proportional Integral ANN) to predict consumption of individual devices. | PI-ANN and MLP. | N/A |

| Short-term load cross-forecasting using pattern-based neural models [25] | 2015 | To check if a combination of daily and weekly patterns performs better than the models individually for SLTF from previous load. | Unspecified neural model | MAPE cross- forecasting: 0.85% |

| Input data analysis for optimized short term load forecasts [26] | 2016 | To compare the performance of MLP, SVR and clustering for 24-ahead forecast for Germany load demand. | MLP(1,1,1) with (LM) algorithm, SVR and k-means cluster. | MAPE SRV: 2.1% |

| Hourly load forecasting model based on real-time meteorological analysis [18] | 2016 | To check the influence of weather variables in load forecast using MLP. | 3-layer MLP | MAPE (including weather variables) <2% |

| Neural network-based short-term electricity demand forecast for Australian states [27] | 2016 | To check the performance of FFNN (Feed Forward Neural Network) forecasting model for the different regions of Australia for STFL. | FFNN (using LM for training) | MAPE: 2.7233% |

| Building energy load forecasting using deep neural networks [19] | 2016 | To compare standard LSTM and LSTM-based Sequence to Sequence for STFL for 1-min resolution 1-h ahead predictions. | LSTM and LSTM-based S2S. | RMSE LSTM-S2S: 0.667 |

| Deep neural network-based demand side short term load forecasting [21] | 2016 | To compare DNN forecasting results for individual industrial consumers from South Korea with typical 3-layered shallow neural network (SNN), ARIMA, and Double Seasonal Holt-Winters (DSHW) model. | DNN (4 hidden layers with 150 neurons per layer and using RBM and ReLU), ARIMA, DSHW, MLP | DNN RBM: MAPE 8.84% RRMSE 10.62% |

| Forecasting daily electricity load by wavelet neural networks optimized by Cuckoo search algorithm [71] | 2017 | To check performance of MLP using wavelet for data-preprocessing and Cuckoo algorithm to obtain parameters. | MLP (using Wavelet and Cuckoo algorithm), ARIMA, MLR | MAPE Wavelet ANN-CS: 0.058 |

| Short-term forecasting of electricity demand for the residential sector using weather and social variables [58] | 2017 | To compare algorithms to forecast 1-month ahead demand in South Korea. | SVR, Fuzzy-rough feature selection with PSO, MLP, MLR and ARIMA. | MAPE SVR fuzzy-rough: 2.13% |

| A comparison of artificial neural networks and support vector machines for short-term load forecasting using various load types [28] | 2017 | To compare SVM and ANN to predict the load of Trinidad and Tobago for 3 industrial customers with different consumption patterns: continuous, batch, batch-continuous. | 3-layer MLP and SVM. | MAPE ANN: 1.04% |

| Short-term load forecasting using EMD(Empirical Mode Decomposition)-LSTM neural networks with a Xgboost algorithm for feature importance evaluation [51] | 2017 | To compare SD (Similar Days)-EMD-LSTM algorithm with others used for STLF. | SD-EMD-LSTM, LSTM SD-LSTM EMD-LSTM, ARIMA, BPNN, SVR | MAPE SD- EMD- LSTM 24 h: 1.04% 168 h: 1.56% |

| Deep learning for household load forecasting—A novel pooling deep RNN [20] | 2018 | To compare the performance of PDRNN (Diagonal Recurrent Neural Networks) with other algorithms for STLF household forecast. | PDRNN with ARIMA, SVR, DRNN, SIMple RNN. | MAPE PDRNN: 0.2510% |

| Long short term memory networks for short-term electric load forecasting [30] | 2017 | To compare algorithms for STLF regional load forecasting. | LSTM, MLP, ARIMA. | MAPE LSTM: 3.8% |

| A State-of-the-Art Review of Artificial Intelligence Techniques for Short-Term Electric Load Forecasting [3] | 2017 | To compare performance of ANFIS, MLP and SVM for STLF in a large region. | MLP, SVM and ANFIS | MAPE SVM: 1.790% |

| Short term load forecasting using deep neural networks (DNN) [72] | 2018 | To compare different transfer functions using MLP for STFL in an Iberian region. | MLP using different transfer functions: sigmoid, ReLU and ELU. | MAPE MLP ELU-ELU: 2.03% |

| Residential load forecasting using deep neural networks (DNN) [52] | 2018 | To compare DNN algorithms for STFL day-ahead for residentials users. | LSTM, GRU, RNN, ARIMA, GLM, RF, SVM, FFNN. | MAPE LSTM: 29% |

| Optimal deep learning LSTM model for electric load forecasting using Feature Selection and Genetic Algorithm: Comparison with Machine Learning Approaches [47] | 2018 | To find optimal algorithm for STLF and MTLF for region load, using GA to find optimal parameters. | LSTM + GA, Ridge Regression, Random Forest, Gradient Boosting, Neural network, Extra Trees. | RMSE LSTM 0.61% |

| Predicting electricity consumption for commercial and residential buildings using deep recurrent neural networks [43] | 2018 | To evaluate an LSTM-based algorithm using MLP for encoding for MTLF of different residential building load profiles. | LSTM + MLP + SMBO | N/A |

| Predicting electricity consumption using deep recurrent neural networks [53] | 2019 | To compare RNN and LSTM to predict load in STLF MTLF and LTLF. | RNN, LSTM, ARIMA, MLP, DNN | ARIMA for STLF RNN and LSTM for MTLF and LTLF. |

| Short-term load forecasting in grid-connected microgrid [14] | 2019 | To compare the performance of algorithms for STLF in microgrid. | GMDH, MLP-LM | RMSE MLP: 0.062% |

| Short-term load forecasting at different aggregation levels with predictability analysis [54] | 2019 | To compare different algorithms for STLF at different aggregation levels. | MLP, LSTM, GBRT, Linear regression, SVR | N/A |

| Short-term residential load forecasting based on LSTM recurrent neural network [31] | 2019 | To compare the performance of forecast algorithms depending on the level of aggregation of AMI data. | LSTM+BPNN variants, KNN and mean. | MAPE LSTM: ind 44.39%, aggregated forecast: 8.18%, forecast aggregation: 9.14% |

| Day-ahead prediction of microgrid electricity demand using a hybrid Artificial Intelligence model [49] | 2019 | To compare different optimization algorithms before using FFANN for STLF using load and economic input variables. | SA-FFANN, WT-SA- FFANN, GA-FFANN, BP-FFANN, (PSO)-FFANN | MAPE WT-SA -FFANN: 2.95% |

| Electricity consumption probability density forecasting method based on LASSO-Quantile Regression Neural Network [45] | 2019 | To compare LASSO-QRNN for electricity consumption probability density LTLF | LASSO-QRNN | MAPE LSTM: 0.02% |

| Forecasting electric load by aggregating meteorological and history-based Deep Learning modules [32] | 2020 | To compare the combination of LSTM and DNN for STLF with LSTM alone. | LSTM+DNN, LSTM and DNN | MAPE LSTM+DNN: 4.28% |

| A Deep Learning approach to forecasting monthly demand for residential–sector electricity [44] | 2020 | To compare LSTM with other algorithms for MTLF. | SVR, MLP, ARIMA, MLR, LSTM | MAPE LSTM: 0.07% |
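Most entries in the table report MAPE and a few report RMSE, while the maximum forecast error is rarely given. The following is a minimal sketch of how these indicators can be computed for the same forecast. The function names and the load values are hypothetical and do not come from any of the reviewed papers.

```python
import math

def mape(actual, forecast):
    """Mean Absolute Percentage Error, in percent (actual values must be non-zero)."""
    errors = [abs((a - f) / a) for a, f in zip(actual, forecast)]
    return 100.0 * sum(errors) / len(errors)

def rmse(actual, forecast):
    """Root Mean Square Error, in the same unit as the load."""
    squared = [(a - f) ** 2 for a, f in zip(actual, forecast)]
    return math.sqrt(sum(squared) / len(squared))

def max_ape(actual, forecast):
    """Largest absolute percentage error of any single sample, in percent."""
    return 100.0 * max(abs((a - f) / a) for a, f in zip(actual, forecast))

# Hypothetical hourly loads (e.g., in MW): three good forecasts and one large miss.
actual = [100.0, 100.0, 100.0, 100.0]
forecast = [101.0, 99.0, 100.0, 120.0]

print(f"MAPE: {mape(actual, forecast):.2f}%")
print(f"RMSE: {rmse(actual, forecast):.2f}")
print(f"Max APE: {max_ape(actual, forecast):.2f}%")
```

In this illustrative series the MAPE is 5.5% while the worst single-hour error is 20%, which shows why an average metric alone can hide large individual deviations.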

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Román-Portabales, A.; López-Nores, M.; Pazos-Arias, J.J. Systematic Review of Electricity Demand Forecast Using ANN-Based Machine Learning Algorithms. Sensors 2021, 21, 4544. https://doi.org/10.3390/s21134544
