Infrared Small Target Detection Method with Trajectory Correction Fuze Based on Infrared Image Sensor

Abstract

1. Introduction

- (1)

- On the basis of [5], the main parameters of the infrared image sensor were selected, and then the correctness was verified through outdoor experiments.

- (2)

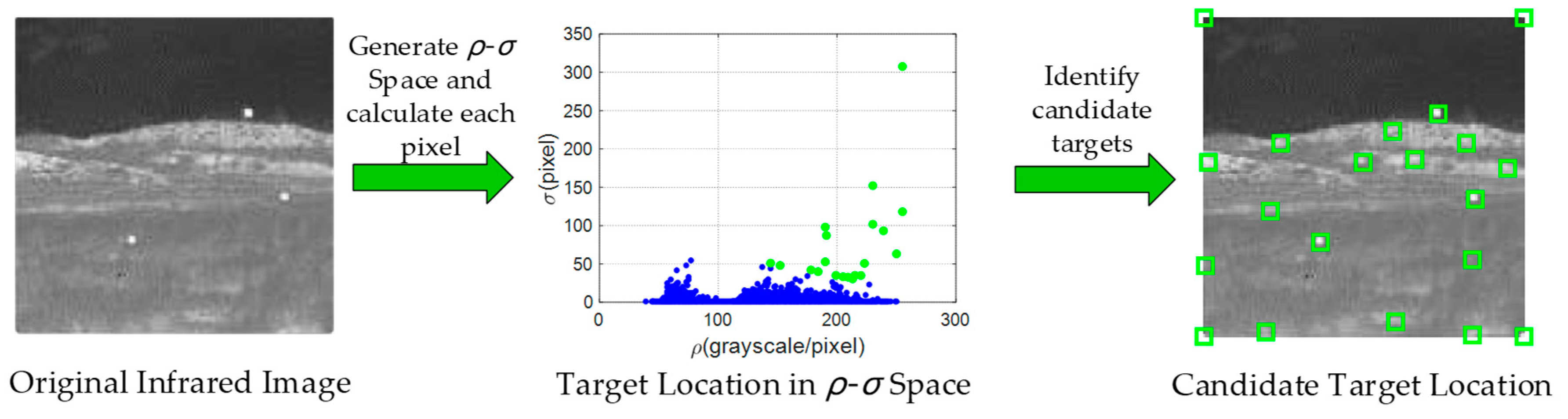

- Inspired by filtering methods, we proposed a novel two-dimensional density-distance space to obtain the density peak pixels by full use of image information.

- (3)

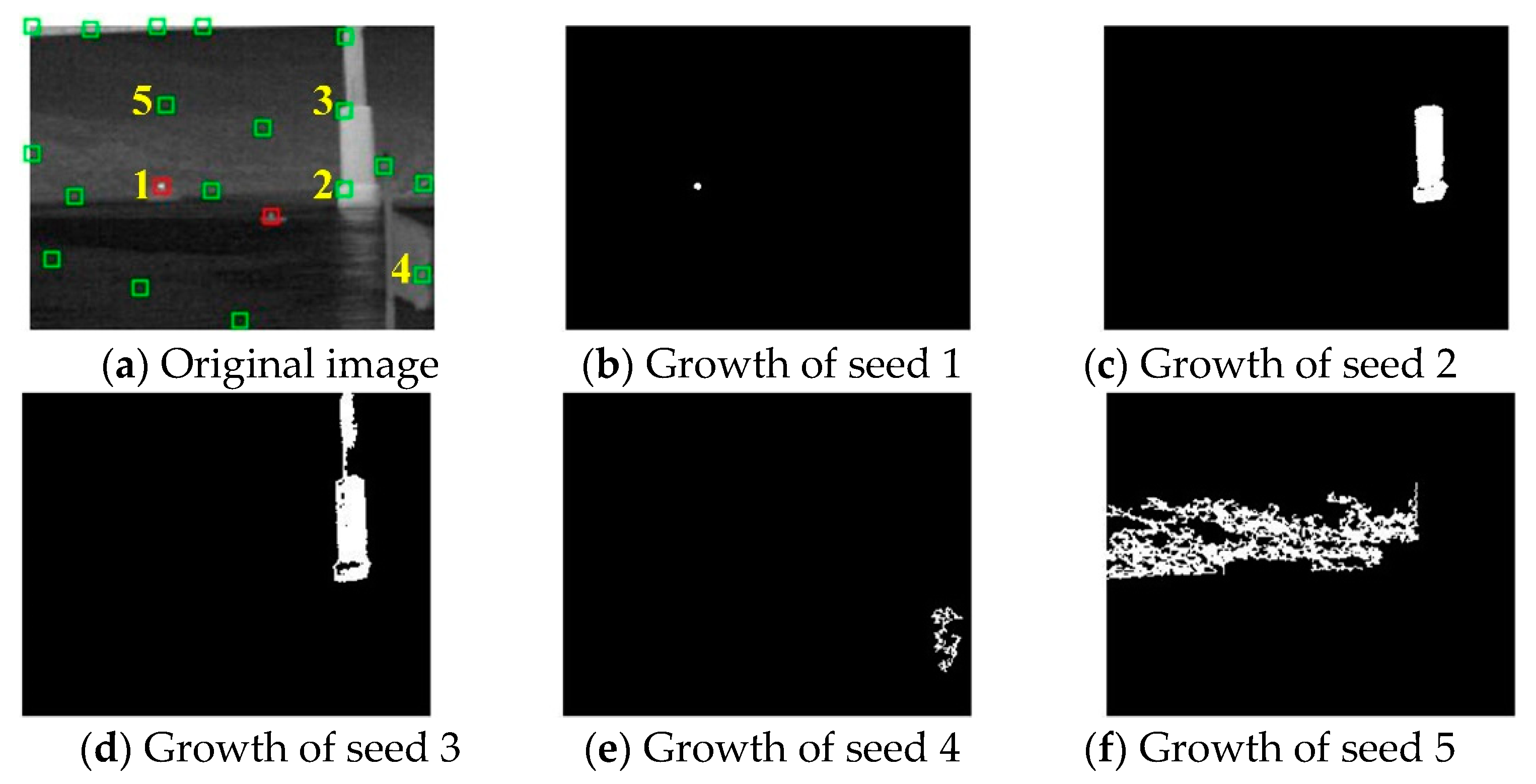

- A new pixel growth method was presented to effectively suppress clutters. Then, the real targets were selected from the density peak pixels.

- (4)

- Three experiments proved the robustness and effectiveness of the algorithm and the applicability of the trajectory correction fuze. Especially, our method maintained a good detection performance without increasing the processing time compared with the previous existing methods.
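Contributions (2) and (3) can be illustrated with a minimal sketch. It is not the authors' formulation: the definitions of density and distance are not reproduced in this text, so the sketch assumes the common density-peaks convention in which density is the raw gray value and distance is the Euclidean distance to the nearest brighter pixel. The function names, the `ratio` parameter, and the toy image are all hypothetical, and the growth step is a plain fixed-threshold region growing rather than the adaptive rule of Section 3.3.

```python
from collections import deque
from math import hypot


def density_distance(image):
    """Map each pixel (row, col) to (rho, delta).

    Assumption (not from the paper): rho is the raw gray value and
    delta is the Euclidean distance to the nearest pixel whose gray
    value is strictly higher. Brute force, quadratic in pixel count.
    """
    h, w = len(image), len(image[0])
    pixels = sorted(
        ((image[r][c], r, c) for r in range(h) for c in range(w)),
        reverse=True,
    )
    space = {}
    for i, (rho, r, c) in enumerate(pixels):
        higher = [(r2, c2) for rho2, r2, c2 in pixels[:i] if rho2 > rho]
        if higher:
            delta = min(hypot(r - r2, c - c2) for r2, c2 in higher)
        else:
            delta = hypot(h, w)  # convention for the global maximum
        space[(r, c)] = (rho, delta)
    return space


def density_peaks(image, k):
    """Return the k pixels with the largest rho * delta product."""
    space = density_distance(image)
    ranked = sorted(space, key=lambda p: space[p][0] * space[p][1], reverse=True)
    return ranked[:k]


def grow_region(image, seed, ratio=0.8):
    """Grow a 4-connected region of pixels >= ratio * seed gray value.

    Hypothetical fixed-threshold rule, used only for illustration.
    """
    h, w = len(image), len(image[0])
    threshold = ratio * image[seed[0]][seed[1]]
    region, queue = {seed}, deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < h and 0 <= nc < w
            if inside and (nr, nc) not in region and image[nr][nc] >= threshold:
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


# Toy 7 x 7 frame: flat background, two bright spots, one bright neighbour.
image = [[10] * 7 for _ in range(7)]
image[1][1] = 50
image[1][2] = 45
image[5][5] = 40

peaks = density_peaks(image, 2)
regions = {p: grow_region(image, p) for p in peaks}
```

In this toy frame the bright neighbour at (1, 2) has a high gray value but a distance of only 1 to a brighter pixel, so it ranks far below the two isolated spots. A size check on each grown region (for example, rejecting regions larger than the expected target footprint) is one possible way to separate small targets from extended clutter.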

2. Application Background

2.1. Design of the Fuze

2.2. Connection between Infrared Image Sensor and Fuze

3. Density-Distance Space

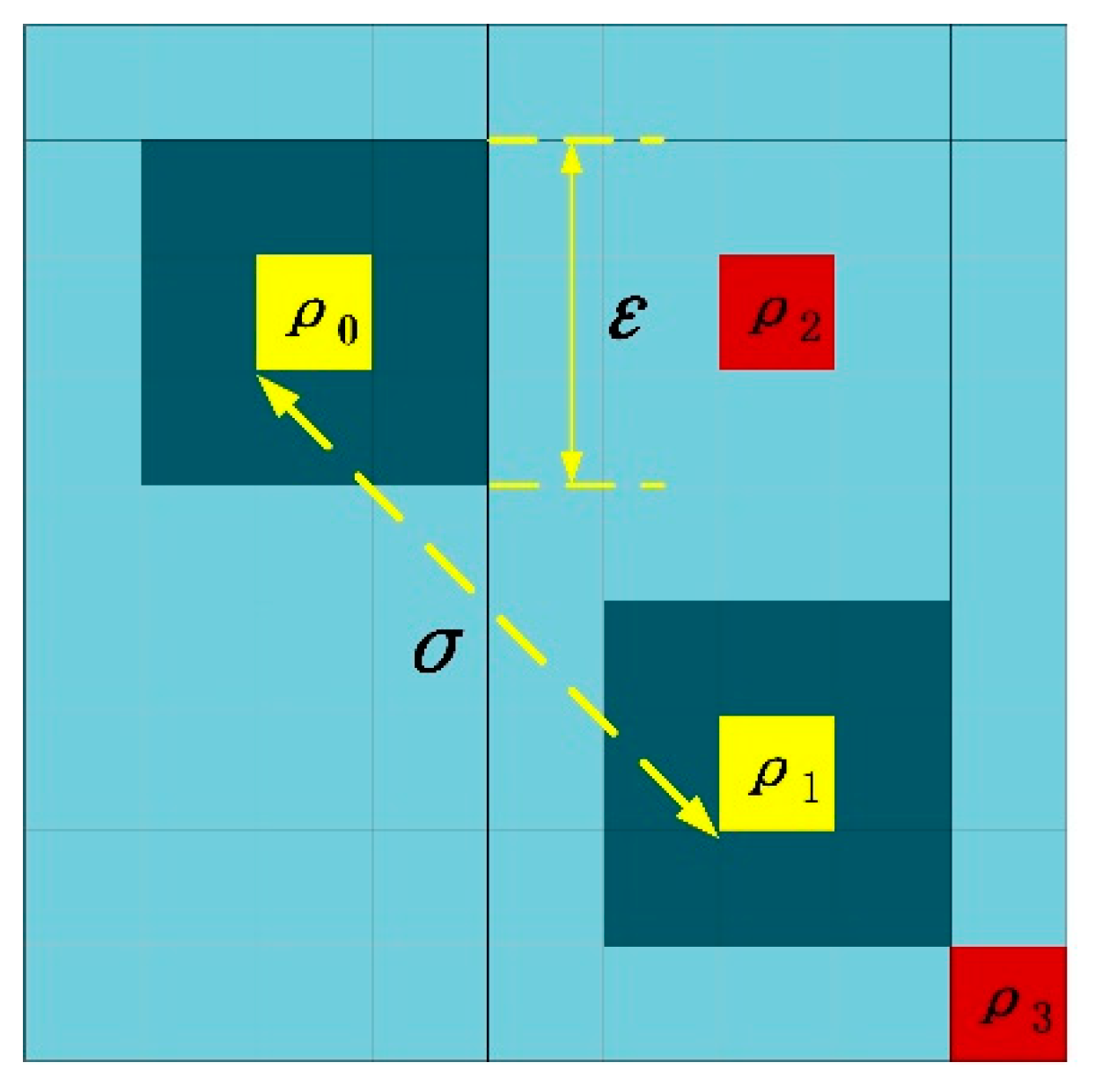

3.1. Definition of Density and Distance

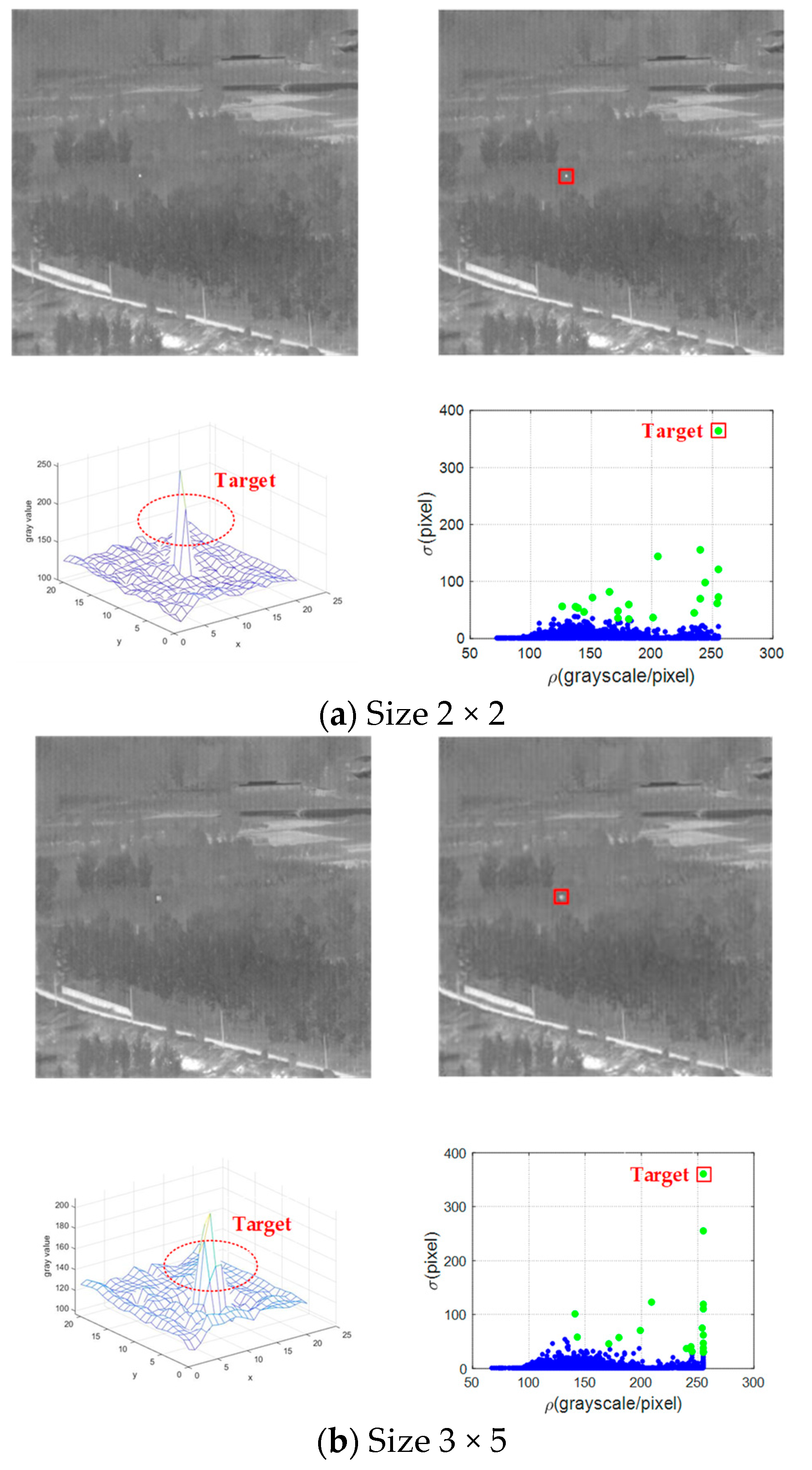

3.2. Density-Distance Space

3.3. Adaptive Pixel Growth

4. Experiments and Analysis

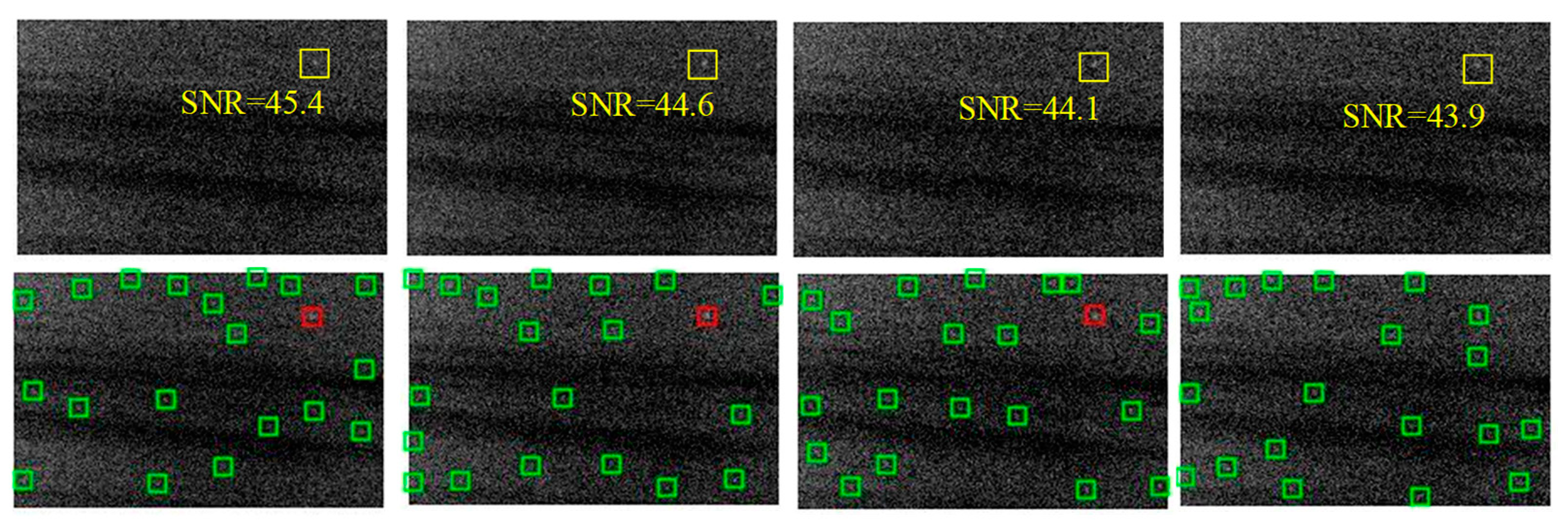

4.1. Equivalent Experiment about Detection Capability of the Infrared Image Sensor

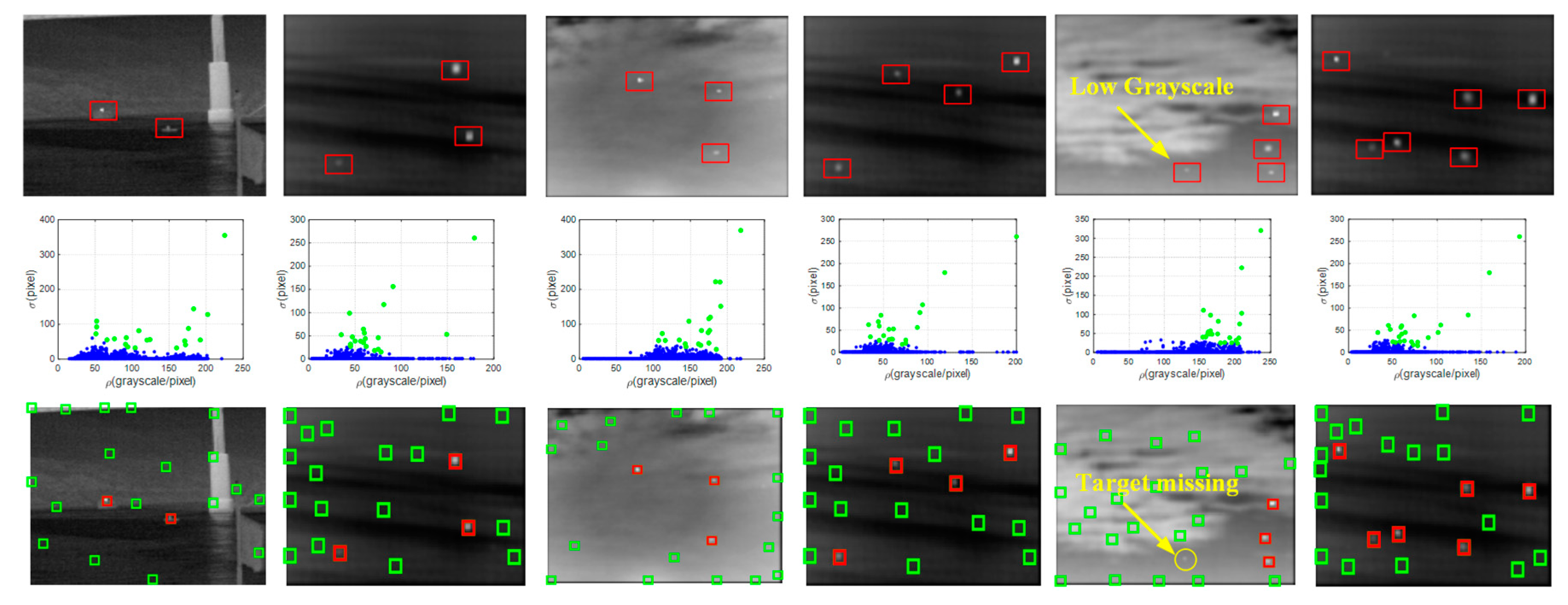

4.2. Simulation of Algorithms

4.3. HIL Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Deng, Z.; Shen, Q.; Deng, Z.; Cheng, J. Real-Time Estimation for Roll Angle of Spinning Projectile Based on Phase-Locked Loop on Signals from Single-Axis Magnetometer. Sensors 2019, 19, 839.

- Theodoulis, S.; Sève, F.; Wernert, P. Robust gain-scheduled autopilot design for spin-stabilized projectiles with a course-correction fuze. Aerosp. Sci. Technol. 2015, 42, 477–489.

- He, C.; Xiong, D.; Zhang, Q.; Liao, M. Parallel Connected Generative Adversarial Network with Quadratic Operation for SAR Image Generation and Application for Classification. Sensors 2019, 19, 871.

- Yue, R.; Wang, H.; Jin, T.; Gao, Y.; Sun, X.; Yan, T.; Zang, J.; Yin, K.; Wang, S. Image Motion Measurement and Image Restoration System Based on an Inertial Reference Laser. Sensors 2021, 21, 3309.

- Li, R.; Li, D.; Fan, J. Correction Strategy of Mortars with Trajectory Correction Fuze Based on Image Sensor. Sensors 2019, 19, 1211.

- Fresconi, F. Guidance and Control of a Projectile with Reduced Sensor and Actuator Requirements. J. Guid. Control. Dyn. 2011, 34, 1757–1766.

- Li, R.; Li, N.; Fan, J. Dynamic Response Analysis for a Terminal Guided Projectile with a Trajectory Correction Fuze. IEEE Access 2019, 7, 94994–95007.

- Zhang, C.; Li, D. Mechanical and Electronic Video Stabilization Strategy of Mortars with Trajectory Correction Fuze Based on Infrared Image Sensor. Sensors 2020, 20, 2461.

- Uzair, M.; Brinkworth, R.; Finn, A. Detecting Small Size and Minimal Thermal Signature Targets in Infrared Imagery Using Biologically Inspired Vision. Sensors 2021, 21, 1812.

- Chan, R.H.; Kan, K.K.; Nikolova, M.; Plemmons, R.J. A two-stage method for spectral–spatial classification of hyperspectral images. J. Math. Imaging Vis. 2020, 62, 790–807.

- Deshpande, S.D.; Er, M.H.; Venkateswarlu, R.; Chan, P. Max-mean and max-median filters for detection of small-targets. Proc. SPIE 1999, 3809, 74–83.

- Deng, L.; Zhu, H.; Zhou, Q.; Li, Y. Adaptive top-hat filter based on quantum genetic algorithm for infrared small target detection. Multimedia Tools Appl. 2017, 77, 10539–10551.

- Zhang, K.; Yang, K.; Li, S.; Chen, H.-B. A Difference-Based Local Contrast Method for Infrared Small Target Detection Under Complex Background. IEEE Access 2019, 7, 105503–105513.

- Lu, Y.; Dong, L.; Zhang, T.; Xu, W. A Robust Detection Algorithm for Infrared Maritime Small and Dim Targets. Sensors 2020, 20, 1237.

- Chen, F.; Huang, M.; Ma, Z.; Li, Y.; Huang, Q. An Iterative Weighted-Mean Filter for Removal of High-Density Salt-and-Pepper Noise. Symmetry 2020, 12, 1990.

- Kim, S.; Yang, Y.; Lee, J.; Park, Y. Small Target Detection Utilizing Robust Methods of the Human Visual System for IRST. J. Infrared Millimeter Terahertz Waves 2009, 30, 994–1011.

- Ming, Z.; Li, J.; Zhang, P. The Design of Top-hat Morphological Filter and Application to Infrared Target Detection. Infr. Phys. Technol. 2005, 48, 67–76.

- Huang, S.; Peng, Z.; Wang, Z.; Wang, X.; Li, M. Infrared Small Target Detection by Density Peaks Searching and Maximum-Gray Region Growing. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1919–1923.

- Wang, X.; Lv, G.; Xu, L. Infrared dim target detection based on visual attention. Infrared Phys. Technol. 2012, 55, 513–521.

- Chen, C.L.P.; Li, H.; Wei, Y.; Xia, T.; Tang, Y.Y. A Local Contrast Method for Small Infrared Target Detection. IEEE Trans. Geosci. Remote Sens. 2014, 52, 574–581.

- Deng, L.; Zhang, J.; Xu, G.; Zhu, H. Infrared small target detection via adaptive M-estimator ring top-hat transformation. Pattern Recognit. 2021, 112, 107729.

- Han, J.; Ma, Y.; Zhou, B.; Fan, F.; Liang, K.; Fang, Y. A Robust Infrared Small Target Detection Algorithm Based on Human Visual System. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2168–2172.

- Qin, Y.; Li, B. Effective Infrared Small Target Detection Utilizing a Novel Local Contrast Method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1890–1894.

- Han, J.; Liang, K.; Zhou, B.; Zhu, X.; Zhao, J.; Zhao, L. Infrared Small Target Detection Utilizing the Multiscale Relative Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2018, 15, 612–616.

- Wu, L.; Ma, Y.; Fan, F.; Wu, M.; Huang, J. A Double-Neighborhood Gradient Method for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2020, 1–5.

- Han, J.; Moradi, S.; Faramarzi, I.; Liu, C.; Zhang, H.; Zhao, Q. A Local Contrast Method for Infrared Small-Target Detection Utilizing a Tri-Layer Window. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1822–1826.

- Wei, Y.; You, X.; Li, H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recognit. 2016, 58, 216–226.

- Shi, Y.; Wei, Y.; Yao, H.; Pan, D.; Xiao, G. High-Boost-Based Multiscale Local Contrast Measure for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2017, 15, 33–37.

- Liu, J.; He, Z.; Chen, Z.; Shao, L. Tiny and Dim Infrared Target Detection Based on Weighted Local Contrast. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1780–1784.

- Wang, L.; Li, R.; Shi, H.; Sun, J.; Zhao, L.; Seah, H.S.; Quah, C.K.; Tandianus, B. Multi-Channel Convolutional Neural Network Based 3D Object Detection for Indoor Robot Environmental Perception. Sensors 2019, 19, 893.

- Gao, X.; Luo, H.; Wang, Q.; Zhao, F.; Ye, L.; Zhang, Y. A Human Activity Recognition Algorithm Based on Stacking Denoising Autoencoder and LightGBM. Sensors 2019, 19, 947.

- Ding, L.; Xu, X.; Cao, Y.; Zhai, G.; Yang, F.; Qian, L. Detection and tracking of infrared small target by jointly using SSD and pipeline filter. Digit. Signal Process. 2020, 110, 102949.

- Yang, X.; Wang, F.; Bai, Z.; Xun, F.; Zhang, Y.; Zhao, X. Deep Learning-Based Congestion Detection at Urban Intersections. Sensors 2021, 21, 2052.

- Wang, K.; Li, S.; Niu, S.; Zhang, K. Detection of Infrared Small Targets Using Feature Fusion Convolutional Network. IEEE Access 2019, 7, 146081–146092.

- López-Sastre, R.J.; Herranz-Perdiguero, C.; Guerrero-Gómez-Olmedo, R.; Oñoro-Rubio, D.; Maldonado-Bascón, S. Boosting Multi-Vehicle Tracking with a Joint Object Detection and Viewpoint Estimation Sensor. Sensors 2019, 19, 4062.

- Shi, J.; Chang, Y.; Xu, C.; Khan, F.; Chen, G.; Li, C. Real-time leak detection using an infrared camera and Faster R-CNN technique. Comput. Chem. Eng. 2020, 135, 106780.

- Srivastava, A.; Rodriguez, J.; Saco, P.; Kumari, N.; Yetemen, O. Global Analysis of Atmospheric Transmissivity Using Cloud Cover, Aridity and Flux Network Datasets. Remote Sens. 2021, 13, 1716.

- Moradi, S.; Moallem, P.; Sabahi, M.F. Fast and robust small infrared target detection using absolute directional mean difference algorithm. Signal Process. 2020, 177, 107727.

| Trajectory parameter | Value | Infrared imager parameter | Value |
|---|---|---|---|
| Detection distance (m) | 1500 | Focal length (mm) | 19 |
| Launch angle (degree) | 53 | Pixel pitch (μm) | 17 |
| Pitch range (degree) | 60.3–65.2 | FOV (degree) | 17 × 13 |
| Time (s) | 8 | Array format | 320 × 240 |
| Initial velocity (m/s) | 272 | Spectral band (μm) | 7.5–13.5 |
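The imager parameters in the table above can be cross-checked with the standard pinhole-camera relation FOV = 2·atan(N·p / 2f), where N is the number of pixels along an axis, p the pixel pitch, and f the focal length. The sketch below is only such a cross-check under the assumption of an ideal thin lens; the function name is hypothetical and the source does not state how the listed field of view was obtained.

```python
from math import atan, degrees


def field_of_view_deg(n_pixels, pitch_um, focal_mm):
    """Full field of view in degrees for an ideal pinhole camera."""
    sensor_mm = n_pixels * pitch_um * 1e-3  # active sensor length along one axis
    return degrees(2 * atan(sensor_mm / (2 * focal_mm)))


# Values taken from the table: 320 x 240 array, 17 um pitch, 19 mm focal length.
h_fov = field_of_view_deg(320, 17, 19)
v_fov = field_of_view_deg(240, 17, 19)
print(f"FOV ~ {h_fov:.1f} x {v_fov:.1f} degrees")
```

The computed values come out at about 16.3 × 12.3 degrees, slightly below the listed 17 × 13. The gap may reflect rounding or the lens vendor's specification.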

| Experimental Conditions | Detection Distance (m) | Target Size (m) | Temperature Difference (K) |
|---|---|---|---|
| Real Conditions | 1500 | 2.3 | 15 |
| Equivalent Conditions | 65 | 0.1 | 7 |
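One plausible reading of "equivalent" here is that the scaled-down target subtends the same angle at the sensor as the real target. The sketch below checks that reading with the small-angle approximation, using the 17 μm pixel pitch and 19 mm focal length from the imager table. The function names are hypothetical, and the reasoning behind the reduced temperature difference is not covered because the source text here does not give it.

```python
def angular_size_mrad(target_m, distance_m):
    """Small-angle approximation of the angle subtended by the target."""
    return 1e3 * target_m / distance_m


def pixels_on_target(target_m, distance_m, pitch_um=17.0, focal_mm=19.0):
    """Target extent in pixels along one axis for an ideal pinhole camera."""
    ifov_mrad = pitch_um / focal_mm  # um / mm equals milliradians per pixel
    return angular_size_mrad(target_m, distance_m) / ifov_mrad


real_mrad = angular_size_mrad(2.3, 1500)   # real conditions
equiv_mrad = angular_size_mrad(0.1, 65)    # equivalent conditions
real_px = pixels_on_target(2.3, 1500)
equiv_px = pixels_on_target(0.1, 65)
print(f"real: {real_mrad:.3f} mrad, {real_px:.2f} px")
print(f"equivalent: {equiv_mrad:.3f} mrad, {equiv_px:.2f} px")
```

Both cases give roughly 1.53 to 1.54 mrad, or about 1.7 pixels across, which is consistent with the target being a small target of only a few pixels in either setup.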

| No. | Background | Target Number | Image Size |
|---|---|---|---|
| 1 | Sea Building | 2 | 284 × 213 |
| 2 | Ground | 3 | 220 × 140 |
| 3 | Cloud Sky | 3 | 281 × 240 |
| 4 | Ground | 4 | 220 × 140 |
| 5 | Cloud Sky | 4 | 250 × 200 |
| 6 | Ground | 6 | 220 × 140 |

| Sequence | Top-Hat (s) | MinLocalLoG (s) | LS_SVM (s) | HBMLCM (s) | RLCM (s) | MPCM (s) | Proposed (s) |
|---|---|---|---|---|---|---|---|
| Seq.1 | 0.0058 | 0.0155 | 0.0115 | 0.0181 | 1.9756 | 0.0213 | 0.0214 |
| Seq.2 | 0.0059 | 0.0160 | 0.0120 | 0.0184 | 1.9616 | 0.0221 | 0.0214 |
| Seq.3 | 0.0059 | 0.0154 | 0.0115 | 0.0179 | 1.9817 | 0.0213 | 0.0214 |
| Seq.4 | 0.0061 | 0.0152 | 0.0113 | 0.0177 | 1.9799 | 0.0214 | 0.0211 |
| Seq.5 | 0.0061 | 0.0149 | 0.0112 | 0.0178 | 1.9691 | 0.0217 | 0.0202 |
| Average | 0.0060 | 0.0154 | 0.0115 | 0.0180 | 1.9735 | 0.0216 | 0.0211 |
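The Average row can be reproduced from the per-sequence values, and the averages can be turned into an approximate throughput. The sketch below does both. It assumes the listed times are per frame, which the table itself does not state, and the variable names are illustrative only.

```python
from statistics import mean

# Per-sequence processing times in seconds, copied from the table (Seq.1 to Seq.5).
times_s = {
    "Top-Hat": [0.0058, 0.0059, 0.0059, 0.0061, 0.0061],
    "MinLocalLoG": [0.0155, 0.0160, 0.0154, 0.0152, 0.0149],
    "LS_SVM": [0.0115, 0.0120, 0.0115, 0.0113, 0.0112],
    "HBMLCM": [0.0181, 0.0184, 0.0179, 0.0177, 0.0178],
    "RLCM": [1.9756, 1.9616, 1.9817, 1.9799, 1.9691],
    "MPCM": [0.0213, 0.0221, 0.0213, 0.0214, 0.0217],
    "Proposed": [0.0214, 0.0214, 0.0214, 0.0211, 0.0202],
}
# Average row as reported in the table.
reported_avg_s = {
    "Top-Hat": 0.0060, "MinLocalLoG": 0.0154, "LS_SVM": 0.0115,
    "HBMLCM": 0.0180, "RLCM": 1.9735, "MPCM": 0.0216, "Proposed": 0.0211,
}

computed_avg_s = {name: mean(vals) for name, vals in times_s.items()}
max_gap = max(abs(computed_avg_s[n] - reported_avg_s[n]) for n in times_s)

# Assumption: one listed time corresponds to one processed frame.
proposed_fps = 1.0 / reported_avg_s["Proposed"]
rlcm_ratio = reported_avg_s["RLCM"] / reported_avg_s["Proposed"]
print(f"max gap {max_gap:.5f} s, ~{proposed_fps:.0f} frames/s, RLCM/Proposed ~{rlcm_ratio:.0f}x")
```

Under that assumption the proposed method runs at roughly 47 frames per second, on a par with MPCM, slower than Top-Hat, MinLocalLoG, LS_SVM, and HBMLCM, and about two orders of magnitude faster than RLCM.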

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Li, D.; Qi, J.; Liu, J.; Wang, Y. Infrared Small Target Detection Method with Trajectory Correction Fuze Based on Infrared Image Sensor. Sensors 2021, 21, 4522. https://doi.org/10.3390/s21134522
