Abstract

Plant phenomics has advanced rapidly over the past few years. This advancement is attributed to increased innovation and the availability of new technologies that enable the high-throughput phenotyping of complex plant traits. The application of artificial intelligence in various domains of science has also grown exponentially in recent years. Notably, the computer vision, machine learning, and deep learning aspects of artificial intelligence have been successfully integrated into non-invasive imaging techniques. This integration is gradually improving the efficiency of data collection and analysis through the application of machine and deep learning for robust image analysis. In addition, artificial intelligence has fostered the development of software and tools applied in field phenotyping for data collection and management. These include open-source devices and tools that are enabling community-driven research and data sharing, thereby making available the large amounts of data required for the accurate study of phenotypes. This paper reviews more than one hundred state-of-the-art papers on AI-applied plant phenotyping published between 2010 and 2020. It provides an overview of current phenotyping technologies and the ongoing integration of artificial intelligence into plant phenotyping. Lastly, the limitations of current approaches and methods, and future directions, are discussed.

1. Introduction

According to data from the United Nations, the world population is expected to grow to nine billion by 2050 [1]. With food production needing to keep pace with this projected growth to prevent food insecurity, plant phenotyping is now at the forefront of plant breeding, having gained prominence relative to genotyping [2]. Plant phenotyping is defined as the assessment of complex traits such as growth, development, tolerance, resistance, architecture, physiology, ecology, and yield, as well as the basic measurement of individual quantitative parameters that form the basis for complex trait assessment [2]. Scientists are increasingly interested in using phenomic-level data to help correlate genomics with the variation in crop yields and plant health [3,4,5]. In this way, plant phenotyping has become an important aspect of crop improvement, providing data to assess traits for variety selection so that desirable traits can be identified and undesirable traits eliminated during the evaluation of plant populations [6]. Plant phenotyping has improved progressively over the past 30 years, although obtaining satisfactory phenotypic data for complex traits such as stress tolerance and yield potential remains challenging [7]. The large volumes of data required for the effective study of phenotypes have led to the development and use of high-throughput phenotyping technologies that characterize large numbers of plants at a fraction of the time, cost, and labor of traditional techniques. Traditional techniques required destructive measurements, whereby crops were harvested at particular growth stages in order to carry out genetic testing and the mapping of plant traits [8]. Since crop breeding programs require repeated experimental trials to ascertain which traits are of interest, the process was slow and costly, and it lagged significantly behind the DNA sequencing technologies that are necessary for crop improvement [9].

High-throughput phenotyping has been fostered by non-invasive imaging techniques, which have enabled the visualization of plant cell structures on a wider scale. As these imaging technologies develop, images carry more extractable information that supports biological interpretations of plant growth [10,11]. These techniques include thermal imaging [12], chlorophyll fluorescence [13,14], digital imaging [15], and spectroscopic imaging [16].

High-throughput phenotyping techniques are currently used for data acquisition in both laboratory and field settings, at the levels of data collection, data management, and analysis. They include imaging sensors, growth chambers, and data management and analysis software [7,17,18,19,20]. The integration of artificial intelligence into these technologies has contributed to the development of the non-invasive imaging aspect of phenomics. Artificial intelligence (AI) technologies in the form of computer vision and machine learning are increasingly being used to acquire and analyze plant image data. Computer vision systems process digital images of plants to detect specific attributes for object recognition purposes [21]. Machine learning employs various tools and approaches to ‘learn’ from large collections of crop phenotypes in order to classify unique data, identify new patterns and features, and predict novel trends [22,23]. Recent advances in deep learning, a subset of machine learning, have provided promising results for real-time image analysis. Deep learning takes advantage of the large plant datasets now available and uses them to carry out image analysis with convolutional neural networks [24]. In field phenotyping, AI is being applied in field equipment for ground and obstacle detection, the detection of plants and weeds during data collection, and the stable remote control of the equipment [25]. Although field phenotyping applications of AI are in relative infancy compared to laboratory phenotyping, their growth is notable because they provide phenotypic data on plants in their natural environment.

In addition to image analysis, AI applications that are widely used in other domains of science are now being integrated into the phenomics data management pipeline. Cyberinfrastructure (CI), a research environment that links researchers, data storage, and computing systems through high-performance networks, has been applied widely in the environmental sciences [26,27,28]. CI is now being applied to phenomics to facilitate collaboration among researchers [29]. Open-source devices and tools represent another fast-developing application of AI technologies [30]. In phenomics, these tools are addressing the challenges of expensive phenotyping equipment and proprietary or incompatible data formats. The growth of these applications of AI has expanded the field of phenomics, with industry companies investing in the manufacture and distribution of phenotyping technologies alongside government-funded agricultural institutions.

This review begins by considering the broader area of artificial intelligence and its integration and application in phenomics through machine learning and deep learning. Digital, fluorescence, spectroscopic, thermographic, and tomographic imaging, and the integration of artificial intelligence into their respective data management, are then highlighted. Thereafter, additional applications of AI, such as cyberinfrastructure and open-source devices and tools, are discussed. The current use of phenotyping technologies for field phenotyping, which is increasingly gaining ground over phenotyping in controlled environments, is then discussed briefly, highlighting their cross-applicability with artificial intelligence.

2. Artificial Intelligence

Artificial Intelligence (AI) is widely defined as the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. The term is applied to machines that exhibit traits associated with the human mind [31]. The recent emergence and growth of AI in academia have presented both an opportunity and a threat for various domains in the sciences. Although some predict that the application of AI through robotics could lead to technological unemployment, AI has enabled science to extend into previously unexplored areas and has made tasks easier to execute, for example, in medical diagnostics [32].

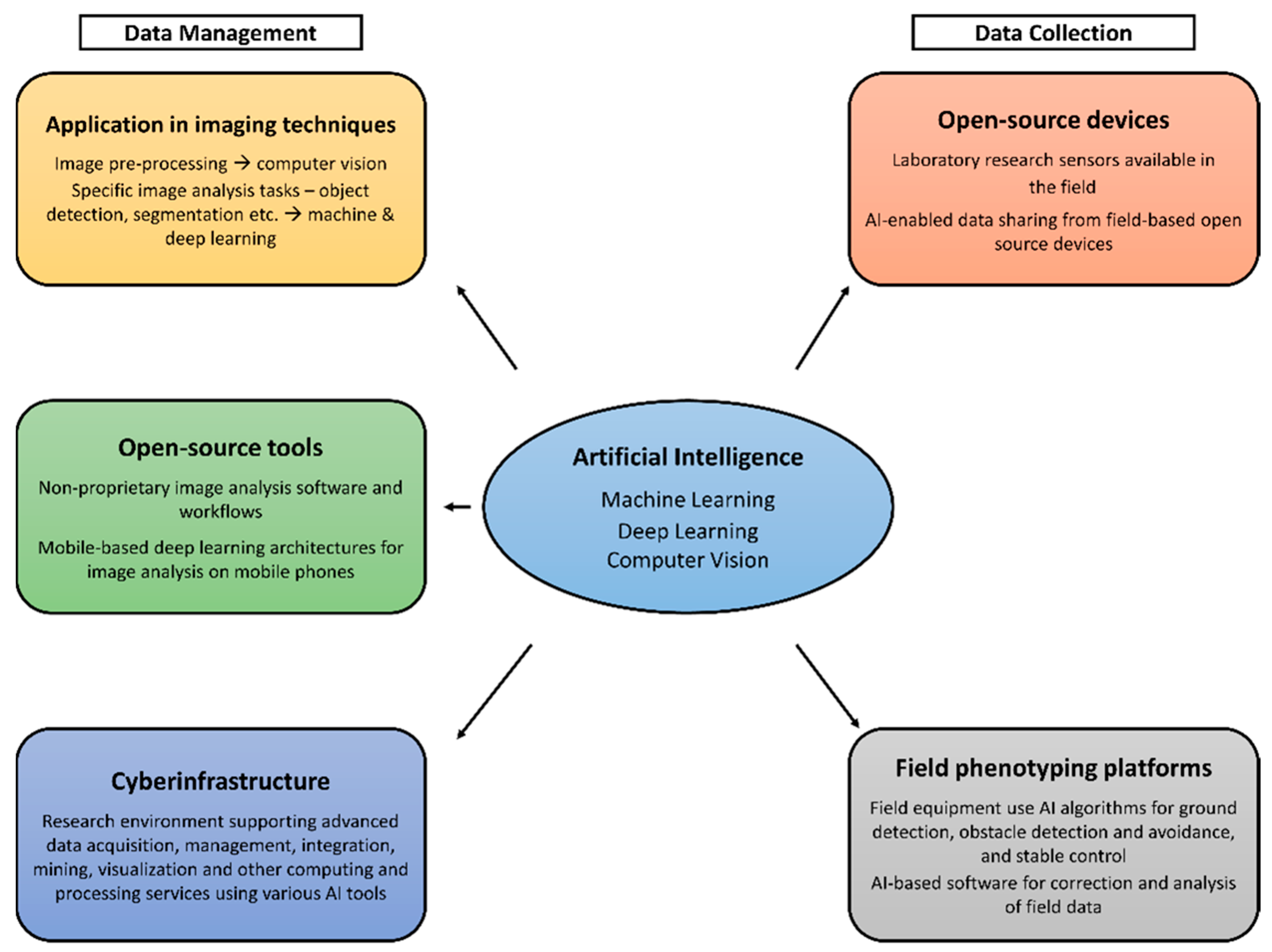

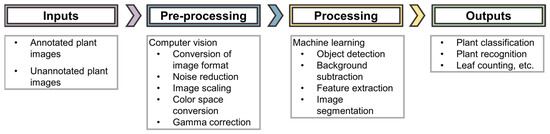

To program machines effectively for desired tasks, AI methods require large repositories of data. The algorithms used in AI methods need large training datasets to support decision-making, for example by enabling early detection [33]. The data acquisition process in non-destructive phenomics involves integrating the data from instruments and sensors (e.g., digital cameras and spectrometers), which usually have their own proprietary communication protocols, into the AI algorithms. The sensor outputs often require conversion to compatible digital formats before analysis [7]. Phenomic data management therefore involves three critical components where artificial intelligence is applied: algorithms and programs to convert the sensory data into phenotypic information; model development to understand genotype–phenotype relationships and their interactions with the environment; and the management of databases to allow for the sharing of information and resources [6]. The main aspects of AI, namely machine learning, deep learning, and computer vision, have so far been applied to a recognizable extent in phenomics (illustrated in Figure 1). Other areas of application are cyberinfrastructure and open-source devices and tools, which are discussed in detail later.
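The format-conversion step can be illustrated with a minimal sketch, assuming two hypothetical instruments that name their fields differently and report in different units. The snippet maps each reading onto one common (plot, trait, value, unit) record and writes CSV. The source names, field names, and scaling conventions are invented for illustration and do not describe any real instrument's output.

```python
import csv
import io

# Hypothetical vendor-specific readings: each "instrument" uses its own
# field names and units (illustrative only, not a real device format).
RAW_READINGS = [
    {"source": "thermal_cam_A", "plot": "P01", "tempK": 300.15},
    {"source": "spectro_B", "PlotID": "P01", "NDVI_x1000": 712},
]

def normalize(reading):
    """Map one vendor-specific reading to a common (plot, trait, value, unit) record."""
    if reading["source"] == "thermal_cam_A":
        # Convert kelvin to degrees Celsius.
        return {"plot": reading["plot"], "trait": "canopy_temperature",
                "value": round(reading["tempK"] - 273.15, 2), "unit": "degC"}
    if reading["source"] == "spectro_B":
        # This hypothetical device stores the index as an integer scaled by 1000.
        return {"plot": reading["PlotID"], "trait": "ndvi",
                "value": reading["NDVI_x1000"] / 1000, "unit": "dimensionless"}
    raise ValueError(f"unknown source: {reading['source']}")

def to_csv(records):
    """Serialize normalized records to CSV text with a fixed header."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["plot", "trait", "value", "unit"])
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()

records = [normalize(r) for r in RAW_READINGS]
print(to_csv(records))
```

Keeping the conversion logic per source in one function means a new sensor only needs one new branch, while downstream analysis sees a single schema.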

Figure 1.

Workflow illustrating application of AI in phenomics.

2.1. Machine Learning

Since the 1970s, AI research has focused on machine learning. Statistical machine learning frameworks and models such as the perceptron, support vector machines, and Bayesian networks have been designed. However, no single model works best for all tasks, and determining the best model for a given problem remains challenging [34]. According to Roscher et al. 2020 [35], the rise and success of neural networks, coupled with the abundance of data and high-level computational and data processing infrastructure, has led to the comprehensive use of machine learning (ML) models and algorithms despite the challenge of selecting a model for a given task.

The use of a range of imaging techniques for nondestructive phenotyping has driven the development of high-throughput phenotyping (HTP), whereby multiple imaging sensors collect plant data on near-real-time platforms. These sensors can collect large volumes of data, which has, in turn, made phenomics a big data problem well suited to the application of ML. The analysis and interpretation of these large datasets are challenging, but ML algorithms provide faster, more efficient, and better data analytics than traditional processing methods. Traditional processing methods include probability theory, decision theory, optimization, and statistics. ML tools leverage these methods to extract patterns and features from large amounts of data, enabling feature identification in complex tasks such as stress phenotyping [36].

According to Rahaman et al. 2019 [37], one of the advantages of using ML approaches in plant phenotyping is their ability to search large datasets and discover patterns by looking at a combination of features simultaneously, rather than analyzing each feature separately. This was previously difficult because the high dimensionality and sheer quantity of plant images made them hard to analyze with traditional processing methods [36]. Machine learning methods have so far been successfully applied to the identification and classification of plant diseases [38,39] and to plant organ segmentation, among other tasks, as shown in Table 1 below. This has been achieved by supervised learning, where the algorithms learn to identify diseased plants after being trained on labeled sample images from large datasets. However, this approach precludes the search for novel and unexpected phenotypic traits, which could otherwise be discovered by unsupervised ML, albeit with lower accuracy [40].
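The supervised/unsupervised distinction can be made concrete with a small sketch. It uses toy two-feature leaf descriptors (hypothetical mean greenness and lesion area fraction). A nearest-centroid classifier is trained on labeled examples (supervised), and k-means groups the same points without any labels (unsupervised). The features, values, and both algorithms are illustrative choices, not the methods of the cited studies.

```python
import math

# Hypothetical leaf descriptors: (mean greenness, lesion area fraction), with labels.
train = [((0.80, 0.02), "healthy"), ((0.75, 0.05), "healthy"),
         ((0.40, 0.30), "diseased"), ((0.35, 0.40), "diseased")]

def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def fit_nearest_centroid(samples):
    """Supervised: average the labeled feature vectors of each class."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(pts) for label, pts in by_label.items()}

def predict(model, features):
    """Assign the label whose class centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: math.dist(features, model[label]))

def kmeans(points, k, iters=10):
    """Unsupervised: group points without labels (initialized on the first k points)."""
    centers = list(points[:k])
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centers[j]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [centroid(c) if c else centers[j] for j, c in enumerate(clusters)]
    return clusters

model = fit_nearest_centroid(train)
print(predict(model, (0.45, 0.25)))   # diseased
clusters = kmeans([features for features, _ in train], k=2)
print(clusters)
```

Note that k-means returns groups but no names for them: deciding that one cluster corresponds to "diseased" still requires interpretation, which is the trade-off the paragraph describes.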

Table 1.

Examples of ML-based approaches that have been applied in phenotyping tasks.

2.2. Deep Learning

Deep learning is a rapidly advancing subset of machine learning tools that has created a paradigm shift in image-based plant phenotyping. It efficiently discovers complex structures in high-dimensional data and is thus applicable to a range of scientific research tasks [24]. Plant images collected with the various sensors are highly variable, which makes some machine learning techniques difficult to apply [47]. While traditional machine learning involves trial-and-error steps in the image feature extraction process, deep learning tools have enabled more reliable workflows for feature identification. They employ an automatic hierarchical feature extraction process using a large bank of non-linear filters before carrying out decision-making, such as classification. Deep learning networks have multiple hidden layers, each performing a simple operation on the output of the previous layer, which in succession increases their discrimination and prediction ability [48]. A wide range of deep learning architectures has been used in plant phenotyping through a process called transfer learning. This involves taking a network pre-trained on a large dataset somewhat similar to the one under investigation and retraining (fine-tuning) its weights on the new dataset. Table 2 details some deep learning architectures that have been applied using transfer learning for phenotyping.
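A single convolutional stage can be sketched in plain Python to show what one layer of this filtering does. A small filter slides over a grayscale image, a ReLU non-linearity is applied, and max pooling downsamples the result. The 5x5 toy image and the hand-set vertical-edge kernel are illustrative assumptions. In a trained CNN the kernel weights are learned, and in transfer learning they would start from values learned on another dataset rather than being set by hand.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (the operation CNN layers call 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(feature_map):
    """Element-wise non-linearity: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [[max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]

# Toy 5x5 grayscale patch: dark background (0) on the left, bright leaf (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-set vertical-edge detector (a trained network would learn such weights).
kernel = [[-1, 1],
          [-1, 1]]
features = max_pool(relu(conv2d(image, kernel)))
print(features)
```

Stacking several such stages, each consuming the previous stage's feature maps, is what produces the hierarchical features described above.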

Table 2.

Examples of deep learning architectures applied in plant phenotyping using transfer learning.

Despite the current influx of deep learning architectures, their integration into agricultural applications still faces several challenges. Publicly available annotated agricultural data remain limited, which reduces the possibility of obtaining high-performance feature extraction models through transfer learning. In addition, many agricultural images have high levels of occlusion (especially overlapping plant leaves) and background noise, leading to a higher likelihood of errors from confusing objects of interest with the background. This is partly due to environmental variations (e.g., cloudy skies or windy weather during field data collection) that significantly affect the images and make them harder to work with. Data samples are also sensitive to imaging angles, field terrain and conditions, and variation within plant genotypes. Hence, the robustness and adaptability requirements for deep learning models built for agricultural applications are significantly high [55].

So far, deep learning architectures in phenotyping have been used for leaf counting [56], the classification of plant morphology [57], plant recognition and identification [55], root and shoot feature identification [48], and plant stress identification and classification [58]. The few reported applications of machine learning or deep learning to stress prediction and quantification offer great opportunities for new research by plant scientists. A key challenge to overcome is that the underlying processes linking the inputs to the outputs are too complex to model mathematically.

3. Application of Artificial Intelligence in Phenotyping Technologies

3.1. Imaging Techniques

Traditionally, observable plant traits have been measured by destructive sampling followed by laboratory determinations to characterize phenotypes based on their genetic functions. Owing to technological advances in AI, imaging techniques (overview in Table 3) have emerged as important tools for non-destructive sampling, allowing image capture, data processing, and analysis to determine observable plant traits. According to Houle et al. 2010 [5], “imaging is ideal for phenomic studies because of the availability of many technologies that span molecular to organismal spatial scales, the intensive nature of the characterization, and the applicability of generic segmentation techniques to data.” Spatial or temporal data on many phenotype classes, such as morphology and geometric features, behavior, physiological state, and the locations of proteins and metabolites, can be captured in intensive detail by imaging. For that reason, imaging techniques have enabled high-throughput screening and real-time image analysis of physiological changes in plant populations. At the laboratory scale, the different imaging methods are tested individually. The best-suited method for crop surveillance, or a combination of methods, is then used in both controlled and field environments [5,59].

Table 3.

Visualization techniques and applications [60].

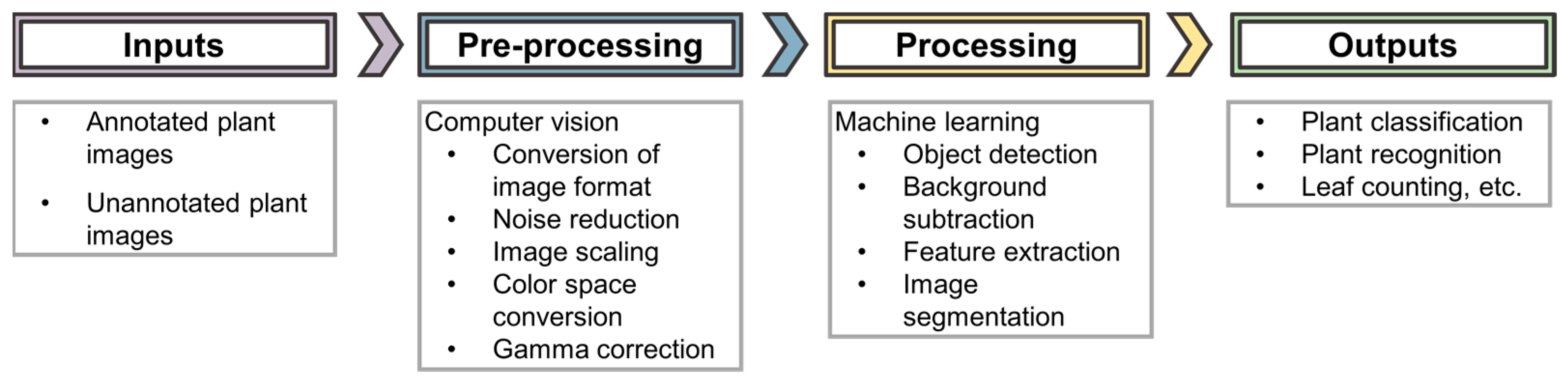

Computer vision is the main aspect of artificial intelligence applied in these imaging techniques. Zhuang et al. 2017 [34] comprehensively state that “computer vision aims to bring together factors derived from multiple research areas such as image processing and statistical learning to simulate human perception capability using the power of computational modeling of the visual domain.” Computer vision uses machine learning to recognize patterns and extract information from images. The computer vision workflow (shown in Figure 2) carries out visual tasks ranging from pre-processing (e.g., image format and color space conversions) to object detection with ML algorithms, with the aim of understanding images in a human-like way [34]. Computer vision transforms the images so that they can be used by an AI system. This process is computationally demanding, especially when working with images of varying formats from a range of sensors, as is the case with phenotyping imaging techniques [32]. A few of the different imaging techniques are discussed below.
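The pre-processing, segmentation, and feature-extraction stages of such a workflow can be sketched with Python's standard `colorsys` module. The sketch converts RGB pixels to HSV, flags pixels whose hue falls in an assumed "green" band as plant, and reports the plant pixel fraction as a simple feature. The hue band (60 to 180 degrees), the saturation cut-off, and the pixel values are illustrative assumptions that would need tuning for real imagery.

```python
import colorsys

def preprocess(rgb_pixels):
    """Pre-processing: scale 8-bit RGB to [0, 1] and convert to HSV color space."""
    return [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in rgb_pixels]

def segment(hsv_pixels, hue_range=(60 / 360, 180 / 360), min_saturation=0.2):
    """Segmentation: flag pixels whose hue lies in an assumed 'green' band."""
    lo, hi = hue_range
    return [lo <= h <= hi and s >= min_saturation for h, s, _ in hsv_pixels]

def extract_features(mask):
    """Feature extraction: fraction of pixels classified as plant."""
    return sum(mask) / len(mask)

# Hypothetical flattened image: two green leaf pixels, one brown soil pixel, one white pixel.
pixels = [(40, 160, 50), (60, 200, 80), (120, 80, 40), (250, 250, 250)]
mask = segment(preprocess(pixels))
print(mask, extract_features(mask))
```

Separating the three stages into functions mirrors the workflow: any one of them (for example, replacing the fixed hue threshold with a trained classifier) can be swapped out without touching the others.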

Figure 2.

AI workflow for image analysis.

3.1.1. Digital/RGB Imaging

Digital imaging is the lowest-cost and easiest-to-use imaging technique. Its images consist of pixels from a combination of the red, green, and blue (RGB) color channels. RGB camera sensors are sensitive to light in the visible spectral range (400–700 nm), within which they capture images that can depict significant physiological changes in a biological sample. RGB/digital imaging relies on the color variation of different biological samples and has contributed significantly to various aspects of plant phenotyping [75,76]. By tracking color changes, it directly helps in monitoring plant developmental stage, morphology, biomass, health, yield traits, and stress response mechanisms, which can be measured rapidly and accurately for large populations. Digital imaging also provides information on the size and color of plants, which enables the quantification of plant deterioration arising from, for example, nutrient deficiencies or pathogen infections. Using a combination of careful image capture, image analysis, and color classification, it is possible to follow the progression of lesions from infections and deficiencies quantitatively over time [8].
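Tracking plant size over time from RGB images can be sketched with a color index. The sketch below uses the Excess Green index, ExG = 2g - r - b, computed on chromatic coordinates (each channel divided by R + G + B). This is a widely used vegetation/background separator, named here as an illustrative choice rather than the method of the cited studies. The 0.1 threshold, the per-pixel ground area, and the tiny images are hypothetical.

```python
def excess_green(r, g, b):
    """Excess Green index on chromatic coordinates: ExG = 2g - r - b."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no color information
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn

def plant_pixel_count(image, threshold=0.1):
    """Count pixels whose ExG exceeds an assumed threshold (plant vs. background)."""
    return sum(1 for row in image for (r, g, b) in row if excess_green(r, g, b) > threshold)

SOIL, LEAF = (120, 90, 60), (50, 150, 40)
# Hypothetical 2x3 top-view images of one plant on two imaging days.
day_1 = [[SOIL, LEAF, SOIL], [SOIL, SOIL, SOIL]]
day_8 = [[LEAF, LEAF, SOIL], [SOIL, LEAF, LEAF]]
PIXEL_AREA_MM2 = 0.25  # assumed ground area covered by one pixel
for day, img in (("day 1", day_1), ("day 8", day_8)):
    print(day, plant_pixel_count(img) * PIXEL_AREA_MM2, "mm^2")
```

Normalizing by R + G + B makes the index less sensitive to overall brightness, which helps when illumination differs between imaging days.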

Advances in hardware and software for digital image processing have motivated the development of machine vision systems for the rapid analysis of RGB images. Being the simplest and most widely used technique has been an advantage, as many machine learning techniques and deep learning architectures can be applied effectively to RGB images. These techniques have been applied to the identification of plant growth stage [41], classification of plant images [43,44,45,46], disease detection and classification [38,52,53,65], identification of biotic and abiotic stress [49,58], weed detection [50,51], detection of flowering times [57], and leaf counting [54,55,56]. Although the extraction of useful phenotyping features from 2D digital images has been successful, advances in computer vision have led to an exploration of 3D imaging applications. This has been achieved using stereo vision, where two identical RGB cameras capture images in a setup similar to human binocular vision. These images are then used to reconstruct a 3D model of the plant for analysis using stereo-matching algorithms [60,77]. Approaches using multiple images from more than two RGB cameras placed at different viewing angles have been used successfully for larger plants with a higher degree of occlusion, thereby expanding the applications of digital imaging [78].
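The core of stereo reconstruction can be sketched in two steps. First, find how far a feature shifts between the left and right images (the disparity d) by block matching along a rectified scanline. Second, convert disparity to depth with the pinhole stereo relation Z = f·B/d, where f is the focal length in pixels and B is the camera baseline. The sum-of-absolute-differences (SAD) matcher, the scanlines, and the camera parameters below are illustrative assumptions. Practical stereo-matching algorithms add 2D windows, sub-pixel refinement, and consistency checks.

```python
def match_disparity(left_row, right_row, x, half_window=1, max_disparity=5):
    """Find the disparity of pixel x in the left scanline by minimizing the sum of
    absolute differences (SAD) over a small window in the rectified right scanline."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # window would fall off the left edge of the right image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Pinhole stereo geometry: Z = f * B / d (Z has the same unit as B)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical rectified scanlines: a bright leaf edge appears 3 px further left in the right image.
left = [10, 10, 10, 10, 10, 10, 200, 210, 220, 10, 10, 10]
right = [10, 10, 10, 200, 210, 220, 10, 10, 10, 10, 10, 10]
d = match_disparity(left, right, x=7)
# Hypothetical camera parameters: 100 px focal length, 6 cm baseline.
print(d, depth_from_disparity(d, focal_length_px=100, baseline_m=0.06))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant plant parts (small d) than for near ones.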

3.1.2. Spectroscopy

Spectroscopic imaging is a widely used technique that has been used to predict many properties of large plant populations [5]. It comprises multispectral and hyperspectral imaging. In multispectral imaging, images are captured at wavelengths between the visible and near-infrared, in up to fifteen spectral bands, whereas hyperspectral imaging provides hundreds of contiguous spectral wavebands. Previous phenotyping studies have shown that spectroscopy can be used to monitor plant photosynthetic pigment composition, assess water status, and detect abiotic or biotic plant stresses [79]. During plant development, varying growth conditions induce changes in surface and internal leaf structure, modifying the reflection of light from plant leaves or canopies. These changes can be visualized by spectroscopy, either in the visible spectrum or at near-infrared wavelengths undetectable by the human eye (0.7–1.3 µm) [18]. The application of spectroscopy is therefore important for the field monitoring of plant traits arising from gene expression in response to environmental factors [20].
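A common way of turning the visible/near-infrared reflectance difference into a single number is a spectral index. The sketch below computes the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), which exploits the fact that healthy vegetation reflects strongly in the near-infrared and absorbs red light. NDVI is not named in the text above and is used here only as a familiar example. The per-plot reflectance values are hypothetical.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance (0-1)."""
    denominator = nir + red
    if denominator == 0:
        return 0.0  # no signal in either band; treat as non-vegetated
    return (nir - red) / denominator

# Hypothetical per-plot mean reflectances: (red, near-infrared).
plots = {"well_watered": (0.05, 0.50), "drought_stressed": (0.10, 0.35)}
for name, (red, nir) in plots.items():
    print(name, round(ndvi(nir, red), 3))
```

The same function applies pixel by pixel to co-registered red and NIR bands of a multispectral image to produce an index map.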

Hyperspectral imaging has so far been successfully applied in both controlled environments (i.e., greenhouses and growth chambers) and field environments [80]. However, a major limitation to the utility of hyperspectral data in field phenotyping, besides the cost of the equipment, is the variability in environmental conditions during measurements. Spectrometers are highly sensitive and rely on solar radiation as the light source in the field, which makes image analysis difficult because of cloud cover, shadows cast by phenotyping platforms, and changes in solar angle during the photoperiod [8,80]. Another challenge in hyperspectral data analysis is data redundancy, due to the contiguous nature of the wavelengths and the similarity between neighboring bands. This has been alleviated by selecting effective wavelengths with algorithms such as the successive projections algorithm (SPA), the genetic algorithm (GA), Monte-Carlo uninformative variable elimination (MC-UVE), and boosted regression trees (BRT), the last of which is also an ML technique [81,82,83].
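The idea behind the successive projections algorithm can be sketched for one starting band. At each step, every remaining band (a column of the samples × bands matrix) is projected onto the subspace orthogonal to the most recently selected band. The band with the largest remaining norm, i.e., the least collinear with what has already been chosen, is then selected. The full method repeats this for several starting bands and keeps the subset that gives the best calibration model; that step is omitted here. The tiny 4 × 4 spectra matrix is hypothetical, with band 1 deliberately set to twice band 0 so that it is redundant.

```python
def column(matrix, j):
    """Extract column j (one spectral band across all samples)."""
    return [row[j] for row in matrix]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def successive_projections(spectra, start_band, n_bands):
    """Select n_bands minimally collinear band indices (one SPA chain).

    spectra: list of samples, each a list of reflectances (samples x bands).
    """
    n_total = len(spectra[0])
    cols = {j: column(spectra, j) for j in range(n_total)}
    selected = [start_band]
    for _ in range(n_bands - 1):
        ref = cols[selected[-1]]
        ref_norm2 = dot(ref, ref)
        best_j, best_norm2 = None, -1.0
        for j in range(n_total):
            if j in selected:
                continue
            # Project column j onto the subspace orthogonal to the last selected column.
            coef = dot(cols[j], ref) / ref_norm2 if ref_norm2 else 0.0
            cols[j] = [a - coef * b for a, b in zip(cols[j], ref)]
            norm2 = dot(cols[j], cols[j])
            if norm2 > best_norm2:
                best_j, best_norm2 = j, norm2
        selected.append(best_j)
    return selected

# Hypothetical spectra (4 samples x 4 bands); band 1 is exactly 2 x band 0.
spectra = [[1, 2, 0, 1],
           [0, 0, 2, 1],
           [1, 2, 0, 1],
           [0, 0, 1, 1]]
print(successive_projections(spectra, start_band=0, n_bands=3))
```

The redundant band's projection collapses to zero after the first step, so it is never chosen, which is exactly the redundancy reduction the text describes.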

Although these difficulties could potentially be remedied by robust computer vision algorithms, hyperspectral images have so far been used successfully only with machine learning algorithms and not with faster, more advanced deep learning algorithms. Nonetheless, hyperspectral imaging allows a wide variety of stresses to be detected and remains a promising way to detect specific signatures of a particular stressor [18].

3.1.3. Thermography

Thermography, also known as thermal imaging, is a technique that detects infrared radiation from an object and creates an image based on it. Thermographic cameras detect infrared radiation in the 9000–14,000 nm range of the electromagnetic spectrum [84]. Thermography has been used in plant research to monitor transpiration and canopy temperature. Transpiration is linked with nutrient uptake by the roots and, ultimately, with crop productivity; it also reflects water use efficiency. Canopy temperature has been widely used to infer crop water use and photosynthesis and, in some cases, to predict yield. In breeding programs aimed at selecting plants based on water use efficiency, thermography improves the speed and effectiveness of monitoring transpiration [18]. It has also been used in the field as a remote sensing tool to capture canopy temperature data for large numbers of plots, using microbolometer-based thermal cameras mounted above the crop on field phenotyping platforms such as helium balloons or manned aircraft [8]. Although thermography cannot detect pre-symptomatic changes in leaves, it can detect changes in leaf thickness [18]. This allows internal structural heterogeneity resulting from stresses or infections to be visualized and monitored.

In phenotyping, thermography is used in combination with other imaging techniques for effective diagnostics [85]. Photogrammetry algorithms such as structure-from-motion have been applied, without much success, to thermographic images [86] collected in field environments. Currently, data from the images are analyzed using standard equations and ML statistical methods such as probability theory, decision theory, and classifiers [87]. Thermography has a range of applications, from medical diagnostics to industrial metal defect detection, in which deep learning algorithms have been applied to thermal images [88].
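An example of the kind of standard equation applied to thermal data is the Crop Water Stress Index, CWSI = (Tc - Twet) / (Tdry - Twet). Here Tc is the canopy temperature, and Twet and Tdry are the temperatures of fully transpiring and non-transpiring references. CWSI is not named in the text above and is shown only as a widely used illustration. The plot temperatures and reference values are hypothetical, and the clipping to [0, 1] is a design choice of this sketch.

```python
def cwsi(canopy_temp, wet_ref_temp, dry_ref_temp):
    """Crop Water Stress Index from thermal readings (all in the same unit, e.g. degC).

    About 0: transpiring like a wet reference; about 1: like a non-transpiring dry reference.
    """
    span = dry_ref_temp - wet_ref_temp
    if span <= 0:
        raise ValueError("dry reference must be warmer than wet reference")
    index = (canopy_temp - wet_ref_temp) / span
    return min(1.0, max(0.0, index))  # clip to the physically meaningful range

# Hypothetical plot-mean canopy temperatures extracted from a thermal image (degC),
# with assumed wet/dry reference temperatures of 24 and 34 degC.
for plot, tc in {"plot_A": 26.0, "plot_B": 31.5}.items():
    print(plot, round(cwsi(tc, wet_ref_temp=24.0, dry_ref_temp=34.0), 2))
```

Normalizing by the wet/dry reference span is what makes the index comparable across days with different air temperature and humidity, which raw canopy temperature alone is not.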

3.1.4. Fluorescence

Fluorescence imaging, also known as fluorescence spectroscopy, measures photosynthetic function under stresses such as drought and infection by detecting the light emitted after the plant has been exposed to light of a specific wavelength. These stresses decrease photosynthesis, which in turn limits crop yield. Chlorophyll fluorescence imaging has enabled the early visualization of viral and fungal infections because it can achieve high resolution. It has also been used to determine plant leaf area [18]. For the rapid screening of plant populations, portable fluorometers are being used to obtain average measurements of whole plants or leaves at the same developmental stage. Portable fluorescence imaging has potential for the field-scale assessment of infections, even those that leave no visible trace [8,18,67]. Fluorescence imaging is usually used in combination with hyperspectral imaging, and image data extracted with this technique have been successfully analyzed with AI-based algorithms such as neural networks [89].
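A standard quantity derived from chlorophyll fluorescence images is the maximum quantum efficiency of photosystem II, Fv/Fm = (Fm - F0) / Fm. It is computed from the minimal (F0) and maximal (Fm) fluorescence of a dark-adapted leaf, and values around 0.8 are commonly reported for unstressed leaves. The parameter is not named in the text above and is shown as an illustration of per-pixel fluorescence analysis. The 2x2 images and the "infected" pixel are hypothetical.

```python
def fv_fm(f0, fm):
    """Maximum quantum efficiency of PSII: Fv/Fm = (Fm - F0) / Fm (dark-adapted leaf)."""
    if fm <= 0:
        raise ValueError("Fm must be positive")
    return (fm - f0) / fm

def fv_fm_map(f0_image, fm_image):
    """Apply Fv/Fm pixel by pixel to a pair of fluorescence images of the same shape."""
    return [[round(fv_fm(a, b), 3) for a, b in zip(row0, rowm)]
            for row0, rowm in zip(f0_image, fm_image)]

# Hypothetical 2x2 fluorescence images (arbitrary units): the bottom-right pixel
# sits in an infected patch where Fm is depressed.
f0 = [[200, 205], [198, 230]]
fm = [[1000, 1010], [990, 600]]
print(fv_fm_map(f0, fm))
```

A per-pixel map of this kind is what allows localized, possibly not yet visible, damage to stand out against the surrounding healthy tissue.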

3.1.5. Tomography

X-ray computed tomography (X-ray CT) is a technology that uses computer-processed X-rays to produce tomographic images of specific areas of scanned objects. It can generate a 3D image of the inside of an object from an extensive series of 2D radiographic images taken around a single axis of rotation [76]. X-ray CT has been used for several applications in plant phenotyping. It has been applied to observe root growth because it can capture the intricacies of the edaphic (soil) environment at high spatial resolution [90,91]. X-ray CT has also been used for the high-throughput measurement of rice tillers to determine grain yield; it was the preferred imaging technique for that study because rice tillers tend to overlap and are therefore not easily detectable by digital imaging [92]. According to Li et al. 2014 [76], however, “tomographic imaging remains low throughput, and its image segmentation and reconstruction need to be further improved to enable high throughput plant phenotyping.” Although this technology is effective in the early detection of plant stress symptoms, its effectiveness is further improved when it is combined with other imaging techniques. The simultaneous use of CT and positron emission tomography (PET) could provide insight into the effects of abiotic stress in particular [71,76]. Additionally, to provide satisfactory resolution, X-ray CT requires small pot sizes and controlled environments, making it unsuitable for field applications. For morphological root phenotyping, X-ray CT has been combined with machine learning to identify root tips and to segment roots from soil [93]. Despite the minimal use of tomography in phenotyping, its application in the medical field, where coupling it with AI algorithms (particularly CNNs) has proved beneficial for diagnostics, positions it as a powerful technique [94].
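The root–soil segmentation step can be illustrated with a simple, non-learning baseline: Otsu's method. It chooses the grey-level threshold that maximizes the between-class variance of the slice's intensity histogram. This is a classical thresholding technique swapped in for illustration; it is not the machine learning approach of [93]. The toy 8-bit CT slice is hypothetical and assumes root voxels are darker than the soil matrix. In real scans, roots can be hard to separate from air-filled pores by intensity alone, which is one reason learned methods are used.

```python
def otsu_threshold(values, levels=256):
    """Return the grey level t maximizing between-class variance (class 0: v <= t)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    weight_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue  # no voxels at or below t yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every voxel is already in the lower class
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

# Hypothetical 3x4 CT slice (8-bit grey levels); root voxels assumed darker than soil.
ct_slice = [[160, 155, 45, 150],
            [158, 40, 42, 165],
            [150, 48, 160, 170]]
voxels = [v for row in ct_slice for v in row]
t = otsu_threshold(voxels)
root_mask = [[v <= t for v in row] for row in ct_slice]
print(t, sum(sum(row) for row in root_mask))
```

For a 3D volume the same function can be applied to all voxels at once, since the histogram ignores spatial position.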

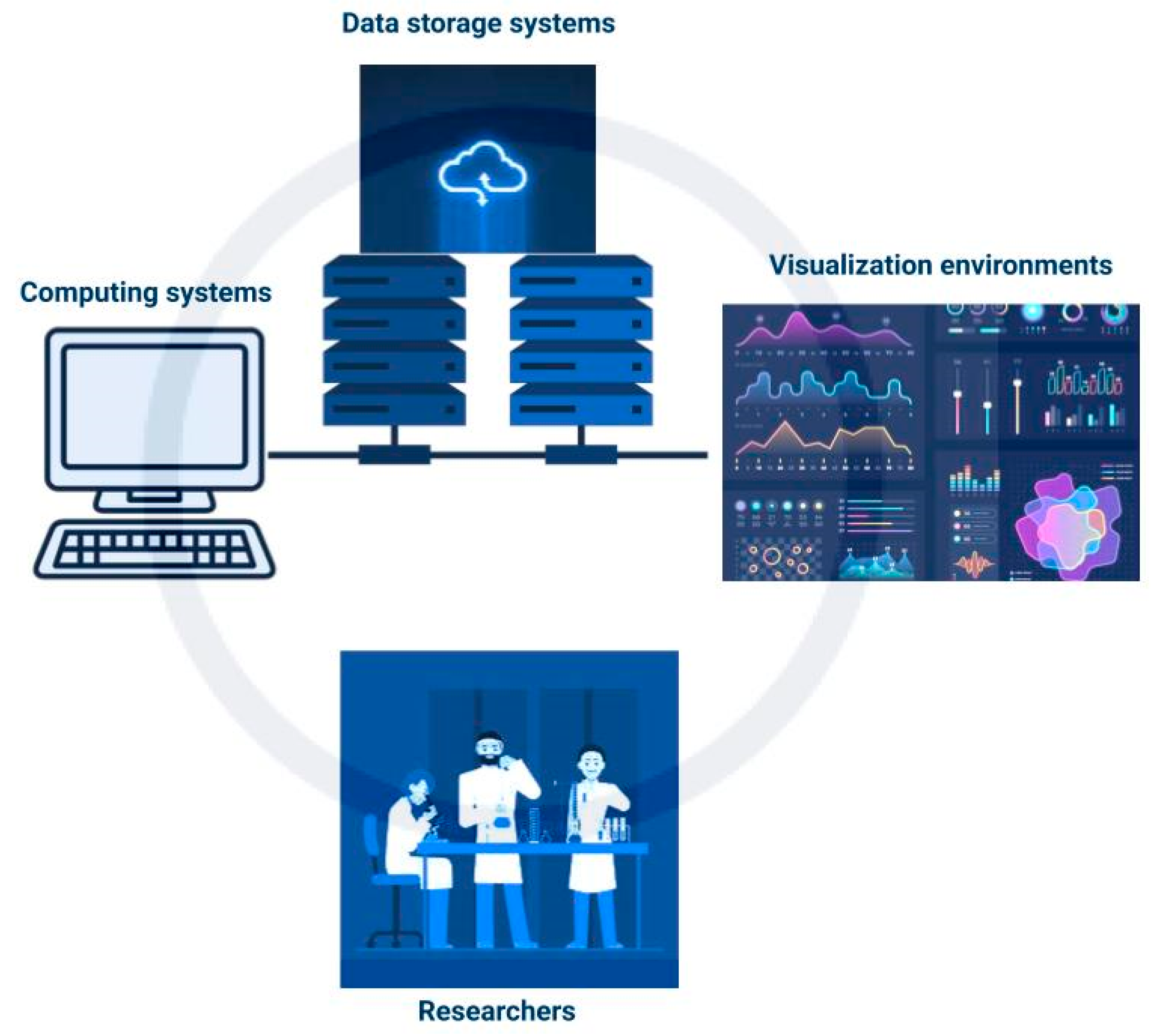

3.2. Cyberinfrastructure

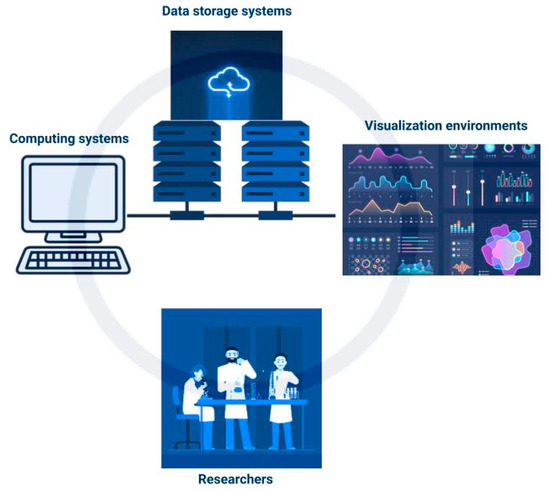

Cyberinfrastructure (CI) is described by Atkins et al. 2003 [95] as a “research environment that supports advanced data acquisition, storage, management, integration, mining, visualization and other computing and processing services distributed over the internet beyond the scope of a single institution.” It consists of computing systems, data storage systems, advanced instruments and data repositories, visualization environments, and people (shown in Figure 3), linked together by software and high-performance networks [27]. This improves research efficiency and productivity in the use of resources. Some CI systems support in-field data analysis, which can flag data collection errors for correction and identify further areas of interest for data collection.

Figure 3.

Simplified schematic of cyberinfrastructure.

CI has attracted more interest in recent years because of the growing quantities of data collected in science, increasing interdisciplinarity in research, the establishment of cutting-edge research sites around the world, and the spread of advanced technologies [96]. Because of the many components required, CI systems are expensive; a supercomputer alone can cost upwards of US$90 million. For this reason, some organizations that can invest in this infrastructure offer it to researchers as a paid service. For example, the University of Illinois at Chicago provides various cost models for access to its infrastructure [97].

CI has been applied in scientific disciplines ranging from biomedical to geospatial and environmental sciences. One such project is the distributed CI called the Function Biomedical Informatics Research Network (FBIRN), a large-scale biomedical research project funded by the U.S. National Institutes of Health (NIH) [96]. CI has also been applied in geospatial research through a range of initiatives under the National Spatial Data Infrastructure (NSDI). The NSDI focuses on the collection of spatial data from government and private sources and on its integration and sharing through its GeoPlatform (https://www.geo-platform.gov/, accessed on 26 June 2021) [98]. Another example in the geospatial domain is the Data Observation Network for Earth (DataONE), a CI platform for integrative biological and environmental research. It is designed to provide an underlying infrastructure that facilitates data preservation and re-use for research, with an initial focus on remote-sensed data for the biological and environmental sciences [99]. Because of the complexity of developing and setting up a CI, Wang et al. 2013 [100] proposed the Cyberaide Creative service, which uses virtual machine technologies to create a common platform decoupled from the underlying hardware and software and then deploys a cyberinfrastructure for its users. This allows end users to specify the necessary resource requirements and have them deployed immediately without needing to understand their configuration or the underlying infrastructure.

In plant phenotyping, a similar case has been made for CI, because non-invasive high-throughput phenotyping technologies collect large amounts of plant data. AI-based analysis methods for these data are being developed but face the challenges of integrating datasets and the poor scalability of the tools [29]. The large amounts of data generated by HTP platforms need to be efficiently archived and retrieved for analysis. Researchers affiliated with the United States National Science Foundation (NSF) have developed a form of CI called iPlant that incorporates artificial intelligence technologies to store and process plant data gathered from the various HTP platforms. This CI platform provides tools for data analysis and storage, with high-performance computing to access and analyze the data, as well as methods for integrating tools and datasets [29].

To support both genotyping and phenotyping, iPlant uses the BISQUE (Bio-Image Semantic Query User Environment) software system [101]. Its main functionality is image analysis, which it supports through core services covering image storage and management, metadata management and query, analysis execution, and client presentation. Its design is flexible enough to accommodate the variability in image analysis workflows between research labs. PhytoBISQUE, the plant-oriented version of BISQUE, provides an application programming interface integrated with iPlant for developing and deploying new algorithms, facilitating collaboration among researchers [29].
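To make the "image storage and management" and "metadata management and query" services more concrete, the toy catalog below stores images with free-form tags and returns the images matching a query. It is a hypothetical sketch only: `ImageRecord`, `ImageCatalog`, the file paths and the tag names are invented for illustration and are not the BISQUE or PhytoBISQUE API.

```python
from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    """One stored image plus key/value metadata (hypothetical schema)."""
    image_id: str
    path: str
    metadata: dict[str, str] = field(default_factory=dict)


class ImageCatalog:
    """Hypothetical in-memory catalog covering storage and metadata query only."""

    def __init__(self) -> None:
        self._records: dict[str, ImageRecord] = {}

    def add(self, record: ImageRecord) -> None:
        # Storage/management: register (or overwrite) an image by its ID.
        self._records[record.image_id] = record

    def annotate(self, image_id: str, **tags: str) -> None:
        # Metadata management: attach or update tags on an existing image.
        self._records[image_id].metadata.update(tags)

    def query(self, **criteria: str) -> list[str]:
        # Metadata query: IDs of images whose tags match every criterion.
        return sorted(
            rid
            for rid, rec in self._records.items()
            if all(rec.metadata.get(k) == v for k, v in criteria.items())
        )


catalog = ImageCatalog()
catalog.add(ImageRecord("img1", "plots/p01.png", {"species": "Arabidopsis", "day": "7"}))
catalog.add(ImageRecord("img2", "plots/p02.png", {"species": "maize", "day": "7"}))
catalog.annotate("img1", treatment="drought")
print(catalog.query(day="7"))                                  # ['img1', 'img2']
print(catalog.query(species="Arabidopsis", treatment="drought"))  # ['img1']
```

A real system would persist records in a database and expose these operations as web services; the in-memory dictionary only illustrates the idea of querying images by their metadata rather than by file name.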

3.3. Open-Source Devices and Tools

Open-source is a term generally used to refer to tools or software that, as stated by Aksulu & Wade, 2010 [30], “allows for the modification of source code, is freely distributed, is technologically neutral, and grants free subsidiary licensing rights.” Open-Source Systems (OSS) are characteristically voluntary and collaborative, and they last as long as someone is willing and able to maintain them [102]. They are affected by few of the traditional operational constraints, such as scope, time, and cost, and they have the added advantage of enhancing the skills of the people involved while producing tangible, cost-effective technology [30]. Some OSS development teams take advantage of crowdsourcing, which widens the scope and quality of ideas and reduces project cycle time [103].

In phenomics, new crop management strategies require the co-analysis of sensor data on crop status together with related environmental and genetic metadata. Unfortunately, these data are mostly restricted to larger, well-funded agricultural institutions, since the instruments for data collection are expensive. The data output by available phenotyping instruments are also challenging to interpret because of proprietary or incompatible formats, and many of the techniques being applied rely on off-the-shelf software packages that lack algorithms specific to interpreting these data. Phenotyping researchers, therefore, have to address the challenges of data interpretation and data sharing alongside limited access to instrumentation (especially instrumentation well suited to field phenotyping). Open-source tools and devices are a promising approach to these challenges. Those being applied in phenomics are more accessible and easier to use, while connecting users to a community that provides broader support and continuous improvement [104,105]. One such open-source device is the MultispeQ (PhotosynQ, East Lansing, MI, USA), an inexpensive instrument that provides useful data on plant performance and is linked to communities of researchers through the PhotosynQ platform (https://www.photosynq.com, accessed on 1 June 2021). The MultispeQ is rugged, field-deployable, open-source, and expandable to incorporate new sensors and techniques. The PhotosynQ platform connects the instrument to a community of researchers, breeders, and citizen scientists to foster field-based, community-driven phenotyping [105].
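The co-analysis of sensor data with environmental and genetic metadata usually starts with a join on a shared key such as a plot ID. The sketch below assumes that both sources can be reduced to simple records keyed by plot; the plot IDs, field names and values are invented for illustration and do not reflect the output format of the MultispeQ or any other instrument.

```python
from collections import defaultdict

# Hypothetical data: per-plot readings from one field instrument and
# genotype/environment metadata stored separately, linked by plot ID.
sensor_readings = [
    {"plot": "P01", "reading": 0.62},
    {"plot": "P02", "reading": 0.48},
    {"plot": "P03", "reading": 0.55},
    {"plot": "P04", "reading": 0.58},
]
plot_metadata = {
    "P01": {"genotype": "G1", "irrigation": "full"},
    "P02": {"genotype": "G2", "irrigation": "deficit"},
    "P04": {"genotype": "G2", "irrigation": "full"},
}


def join_on_plot(readings, metadata):
    """Merge metadata into each reading; report plots that have no metadata."""
    joined, unmatched = [], []
    for row in readings:
        meta = metadata.get(row["plot"])
        if meta is None:
            unmatched.append(row["plot"])  # track it instead of dropping silently
        else:
            joined.append({**row, **meta})
    return joined, unmatched


def mean_by(rows, key, value):
    """Average `value` within each group defined by `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {group: sum(vals) / len(vals) for group, vals in groups.items()}


joined, unmatched = join_on_plot(sensor_readings, plot_metadata)
print(unmatched)  # ['P03']
print(mean_by(joined, "irrigation", "reading"))
```

Reporting unmatched plots, rather than discarding them, is a deliberate choice here: with incompatible formats, missing or mistyped keys are a common and otherwise silent source of data loss.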

An open-source prediction approach called Dirichlet-aggregation regression (DAR) was put forward by Bauckhage and Kersting, 2013 [104], to address the challenges of manually labeling data and running supervised classification algorithms on hyperspectral data. Hyperspectral cameras record a spectrum of several hundred wavelength bands, ranging from approximately 300 nm to 2500 nm, which makes data handling a significant challenge in hyperspectral image analysis. Working with hyperspectral data therefore requires algorithms and architectures that can cope with massive amounts of data. Their research shows that DAR can effectively predict the level of drought stress in plants before the stress becomes visible to the human eye.
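To give a sense of scale for the data-handling problem, the arithmetic below compares the uncompressed size of one RGB frame with one hyperspectral cube of the same spatial size. The frame size (1000 × 1000 pixels), band count (300) and bit depths are illustrative assumptions, not specifications of any camera in the cited work.

```python
def cube_size_bytes(rows: int, cols: int, bands: int, bytes_per_sample: int) -> int:
    """Uncompressed size of an image cube with shape rows x cols x bands."""
    return rows * cols * bands * bytes_per_sample


# Assumed, illustrative settings (not taken from the cited study).
rgb = cube_size_bytes(1000, 1000, 3, 1)      # 8-bit RGB frame
hyper = cube_size_bytes(1000, 1000, 300, 2)  # 300 bands, 16-bit samples

print(rgb / 1e6, "MB vs", hyper / 1e6, "MB")  # 3.0 MB vs 600.0 MB
print(hyper // rgb)                           # 200
```

Under these assumptions a single hyperspectral capture is about 200 times larger than an RGB image, before any time series or multiple plots are considered.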

Open-source software and platforms have also been developed that simplify the computer vision image management pipeline. One such tool is the PlantCV image analysis software package, which is used to build workflows that extract data from images and sensors. It provides a common interface for data scientists and biologists through a set of extendable computational tools written in Python that can be combined according to the required image analysis task [106]. It also deploys additional open-source tools, such as LabelImg for image annotation [107]. PlantCV’s image processing library has been applied in Deep Plant Phenomics, an open-source software platform that implements deep convolutional neural networks for plant phenotyping [108]. Deep Plant Phenomics provides an image processing pipeline that has been used for complex, non-linear phenotyping tasks such as leaf counting, mutant classification, and age regression in Arabidopsis.
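A typical first step in such image analysis workflows is separating plant pixels from background and measuring projected plant area. The sketch below uses the excess green index (ExG = 2g − r − b on chromatic coordinates), a common vegetation index, as a swapped-in example; it is written in plain Python for illustration and is not PlantCV’s API, which provides its own thresholding functions. The threshold of 0.1 and the pixel values are assumptions.

```python
def excess_green(r: int, g: int, b: int) -> float:
    """ExG = 2g - r - b, where r, g, b are each channel divided by R+G+B."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: treat as background
    return (2 * g - r - b) / total


def segment_plant(image, threshold: float = 0.1):
    """Binary mask (True = plant) from rows of (R, G, B) tuples.

    The default threshold is an illustrative assumption; in practice it is
    tuned to the lighting and background of each imaging setup.
    """
    return [[excess_green(*px) > threshold for px in row] for row in image]


def plant_area(mask) -> int:
    """Projected plant area in pixels (number of True values in the mask)."""
    return sum(sum(row) for row in mask)


# Tiny hypothetical image: green leaf pixels, brown soil pixels, one black pixel.
image = [
    [(40, 160, 50), (120, 90, 60), (35, 150, 45)],
    [(120, 90, 60), (50, 170, 60), (0, 0, 0)],
]
mask = segment_plant(image)
print(mask)              # [[True, False, True], [False, True, False]]
print(plant_area(mask))  # 3
```

Normalizing by R+G+B makes the index less sensitive to overall brightness than raw channel differences, which is one reason chromatic indices are popular for images taken under varying light.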

Another group of open-source tools applied in phenomics are the MobileNet deep learning architectures. MobileNets are convolutional neural networks (CNNs) with reduced complexity and model size, suited to devices with low computational power such as mobile phones. They are designed to keep latency low on such hardware by providing small networks that still achieve substantial accuracy in real-world applications [109]. One example is MobileNetV2, which is built for the classification, detection, and segmentation of images. It uses the ReLU6 non-linearity, which is suited to low-precision computation [110]. Because mobile phones are widely accessible and commonplace, these networks are promising for field phenotyping. MobileNet models can be deployed with TensorFlow Lite, an open-source deep learning framework for running machine learning models on mobile devices [111].
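Much of the reduction in complexity comes from the original MobileNet design replacing standard convolutions with depthwise separable convolutions: a per-channel spatial filter followed by a 1 × 1 pointwise convolution. The sketch below counts multiply-adds for one layer both ways and defines ReLU6; the layer shape (3 × 3 kernel, 32 input and 64 output channels, 112 × 112 output) is an illustrative assumption rather than a specific MobileNet layer.

```python
def standard_conv_madds(dk: int, m: int, n: int, df: int) -> int:
    """Multiply-adds of a standard dk x dk convolution, m -> n channels, df x df output."""
    return dk * dk * m * n * df * df


def separable_conv_madds(dk: int, m: int, n: int, df: int) -> int:
    """Depthwise (one dk x dk filter per input channel) plus pointwise (1 x 1, m -> n)."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


def relu6(x: float) -> float:
    """ReLU6 clips activations to [0, 6], keeping them in a small fixed range."""
    return min(max(x, 0.0), 6.0)


std = standard_conv_madds(3, 32, 64, 112)
sep = separable_conv_madds(3, 32, 64, 112)
print(std, sep, round(sep / std, 4))  # 231211008 29302784 0.1267
```

The ratio equals 1/N + 1/D_K² (here 1/64 + 1/9), so for 3 × 3 kernels the separable layer needs roughly eight to nine times fewer operations, which is what makes such networks practical on phones.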

Variable lighting in outdoor settings can degrade the performance of open-source tools and CNNs, so networks need to be trained and tested on plant images collected in the field. In one study [112], a mobile-based CNN model was used for plant disease diagnosis, and inconsistent lighting was addressed by using an umbrella. The model, which ran offline, performed worse in the field because its training data differed from the images collected there; the study therefore highlighted the need to capture more images with mobile devices in typical field settings and to use those images to train the models in order to improve accuracy. A further challenge in deploying open-source platforms and CNNs is developing tools that can analyze a wide variety of phenotypes across different crops; a one-size-fits-all analysis platform has not yet been developed, although various platforms are already available for some phenotyping tasks.

4. Artificial Intelligence and Field Phenotyping

To screen plants for valuable traits (such as grain size, abiotic stress tolerance, product quality, or yield potential), experiments with repeated trials in different environments are required, depending on the objectives of the study. Much of the discussion of phenotyping has focused on measuring individual plants in controlled environments. However, controlled environments do not accurately represent plant growth in open-air conditions [7]. Field-based phenotyping (FBP) is increasingly recognized as the only approach that accurately depicts traits in actual cropping systems. Sensor systems suitable for high-throughput field phenotyping can now measure multiple plots simultaneously and fuse a multitude of traits recorded in different data formats [17]. By using vehicles that carry multiple sets of sensors, FBP platforms are transforming the characterization of plant populations for genetic research and crop improvement. The need to carry out FBP in a timely and cost-effective manner has led to the use of unmanned aircraft, wheeled vehicles, or agricultural robots to deploy multiple sensors that can measure plant traits at brief time intervals [7]. As a result, phenotyping in many crop breeding programs is now conducted on FBP platforms that combine instruments with novel technologies such as non-invasive imaging, robotics, and high-performance computing [8].

Unmanned aircraft are particularly attractive for data acquisition because they enable sensing at high spatial and spectral resolution for a relatively low cost. Unmanned helicopters (such as the one shown in Figure 4) can carry a variety of sensors, including larger ones. Their maneuverability and ability to fly at low speeds allow precise flight control in windy conditions and operation in cluttered environments. Replicated collection of sensor data can be achieved through automatic flight control when the helicopter is equipped with algorithms for ground detection, obstacle detection and avoidance, and stable, effective control [113]. Owing to rapid advances in technologies such as collision avoidance, optical sensors for machine vision, GPS, accelerometers, gyroscopes, and compasses, modern unmanned aerial systems (UAS) are better equipped to cope with harsh environmental conditions and obstacles. However, they are still limited by battery power, with electric batteries providing between 10 and 30 min of battery power [25].

Figure 4.

The CSIRO autonomous helicopter system. Adapted from Merz & Chapman, 2012 [113].

Although not commonly used, wheeled vehicles are sometimes employed in phenotyping research projects because they also enable proximal phenotyping. They can cover large areas and operate for longer periods, but they compact and damage the soil and are costly because of the human labor needed to operate them. According to White et al. 2012 [7], high-clearance tractors were expected to play a more central role in FBP as the wheeled vehicles of choice because of their high vertical clearance, availability, and ease of use. They can be used for continuous measurements at different stages of crop growth and can operate for longer periods than UAVs. Variants of high-clearance tractors in the form of mobile motorized platforms, which eliminate the need for human labor in the field, have been developed and tested in various phenotyping applications [114,115].

Phenotyping data collected with these FBP systems are affected by instability during motion and by changes in weather, which cause occlusion and misalignment in the images. This is partially addressed by proximal sensing at slower speeds to improve image resolution, although this limits the area covered in one flight and does not fully solve the misalignment. Processing of these data has improved significantly in recent years with AI-enabled software for snapshot and line-scanning imaging that is optimized specifically for unmanned aircraft, such as structure-from-motion photogrammetry [87,116]. One proposed approach to enhancing the use of these platforms for sensing in the field is to adapt field conditions to the HTP field techniques (rather than the traditional approach of adapting the instruments to the field), depending on the crop of interest and without compromising realistic crop evaluation under field conditions [6].
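The trade-off between flying slowly and covering ground can be estimated with simple arithmetic: area per flight is roughly speed × flight time × effective swath width. The sketch below assumes a 20 min flight (within the 10 to 30 min battery range cited for UAS), a 20 m sensor swath and 50% side overlap; all three values, and the simplification of straight parallel passes with turns ignored, are illustrative assumptions.

```python
def area_per_flight_ha(speed_m_s: float, flight_min: float, swath_m: float,
                       overlap: float = 0.0) -> float:
    """Approximate ground area covered in one flight, in hectares.

    Assumes straight parallel passes; `overlap` is the side-overlap fraction
    between adjacent passes. Turns, take-off and landing are ignored.
    """
    distance_m = speed_m_s * flight_min * 60          # path length flown
    effective_swath_m = swath_m * (1 - overlap)        # new ground per pass
    return distance_m * effective_swath_m / 10_000     # m^2 -> ha


fast = area_per_flight_ha(5, 20, 20, overlap=0.5)
slow = area_per_flight_ha(2, 20, 20, overlap=0.5)
print(f"{fast:.1f} ha vs {slow:.1f} ha")  # 6.0 ha vs 2.4 ha
```

Because coverage scales linearly with speed, slowing from 5 to 2 m/s for sharper images cuts the area surveyed per battery by the same factor of 2.5, which is the limitation on areal coverage noted above.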

5. Phenotyping Communities and Facilities

The growth in plant phenotyping research, coupled with the integration of AI technology, has fostered the development of laboratories and centers equipped with high-throughput phenotyping technologies. Some of these plant phenotyping centers are members of the International Plant Phenotyping Network (IPPN), which works with member organizations in academia and industry to distribute relevant information about plant phenotyping and increase its visibility [117]. Partner facilities such as the Australian Plant Phenomics Facility, a government-funded national facility, provide access to infrastructure such as glasshouse automation technologies, digital imaging technologies, and long-term data storage [118]. Such facilities have provided, and continue to provide, subsidized access to advanced AI-phenotyping technologies that would otherwise be inaccessible because of their operation and maintenance costs. In addition, as high-throughput phenotyping has become more common, data merging has emerged as an issue: many laboratories gather phenotypic data that rarely enter the public domain, where they could be accessed by other institutions to foster interdisciplinary research [8]. Networks such as the IPPN continue to provide access to phenotyping information generated by member organizations, which is key to enabling cooperation between organizations and advancing the phenomics agenda through collaborative research.

Besides academic institutions and government organizations, private companies (a few of which are highlighted here) are establishing themselves as key providers and facilitators of plant phenotyping AI technology around the world. Biopute Technology provides high-end research instruments, such as multispectral cameras for field phenotyping and drones for aerial photography, along with after-sales support services for its customers. In partnership with universities and research institutes, Biopute provides innovations that are contributing to the progress of plant phenotyping in China (http://www.bjbiopute.cn, accessed on 1 June 2021). KeyGene is an agricultural biotechnology company that provides tools for precision breeding and digital phenotyping and invests in deep learning-based algorithms and virtual reality for data visualization (https://www.keygene.com, accessed on 1 June 2021). PhenoTrait Technology Co., Ltd. focuses mainly on plant phenotyping based on the photosynthetic characteristics of plants and promotes the use of phenotyping technologies to improve crop quality, crop yield, and environmental conditions in China. Its products include high-throughput phenotyping instruments and chlorophyll fluorescence imaging systems (http://www.phenotrait.com, accessed on 1 June 2021). Photon Systems Instruments (PSI), a company in the Czech Republic, also supplies a range of phenotyping systems, both field- and laboratory-based, including root system phenotyping. It has also incorporated machine learning to integrate robotics into the systems it develops and so better automate the processes (https://psi.cz, accessed on 1 June 2021).

6. Conclusions

Recent advancements in high-throughput phenotyping technologies have led to significant strides in plant phenomics. The ongoing integration of artificial intelligence into these technologies promises smarter and much faster technologies with significantly lower input costs. In phenotyping image data analysis, AI has been integrated into the data management pipelines of tomography and thermography to a lesser extent than into those of other imaging techniques. Applying deep learning to the data analysis of these techniques is promising, as it has been successfully implemented in the analysis of composite materials [88] and in medical diagnostics [94]. Although field phenotyping is the most effective way to collect phenotypic data, it is still conducted on a smaller scale than is possible. Artificial intelligence technologies also require large amounts of data from various sources to improve their accuracy. This provides an opportunity to invest more in tailoring current technologies for field data collection and in using existing AI-adaptable technologies, such as smartphones, to increase the quantity of quality data. Smartphones have become widespread consumer products, and the simplicity and ease of use of their sensors suggest that their use in agriculture is worth exploring [104]. One challenge to be addressed is that advanced signal processing on smartphones has to cope with constraints such as low battery life, restricted computational power, and limited bandwidth [104]. Involving citizen scientists alongside professional researchers [119] in data collection also has the potential to increase the amount of data collected.

The overall goal of employing these approaches and technologies is to provide infrastructure that allows plant traits to be tracked as they progress throughout the growing season and that facilitates the coordination of data analysis, management, and utilization of results using AI methods.

Author Contributions

Conceptualization, S.N. and B.-K.C.; investigation, M.S.K. and I.B.; writing—original draft preparation, S.N.; writing—review and editing, H.-K.S. and M.S.K.; visualization, S.N. and I.B.; supervision, B.-K.C. and H.-K.S.; funding acquisition, B.-K.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Institute of Food Science and Technology (Project No.: PJ0156892021) of the Rural Development Administration, Korea.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- UN. United Nations Population Division. Available online: https://www.un.org/development/desa/pd/ (accessed on 10 September 2020).

- Costa, C.; Schurr, U.; Loreto, F.; Menesatti, P.; Carpentier, S. Plant phenotyping research trends, a science mapping approach. Front. Plant Sci. 2019, 9, 1–11.

- Arvidsson, S.; Pérez-Rodríguez, P.; Mueller-Roeber, B. A growth phenotyping pipeline for Arabidopsis thaliana integrating image analysis and rosette area modeling for robust quantification of genotype effects. New Phytol. 2011, 191, 895–907.

- Furbank, R.T. Plant phenomics: From gene to form and function. Funct. Plant Biol. 2009, 36, v–vi.

- Houle, D.; Govindaraju, D.R.; Omholt, S. Phenomics: The next challenge. Nat. Rev. Genet. 2010, 11, 855–866.

- Pauli, D. High-throughput phenotyping technologies in cotton and beyond. In Proceedings of the Advances in Field-Based High-Throughput Phenotyping and Data Management: Grains and Specialty Crops, Spokane, WA, USA, 9–10 November 2015; pp. 1–11.

- White, J.W.; Andrade-Sanchez, P.; Gore, M.A.; Bronson, K.F.; Coffelt, T.A.; Conley, M.M.; Feldmann, K.A.; French, A.N.; Heun, J.T.; Hunsaker, D.J.; et al. Field-based phenomics for plant genetics research. Field Crops Res. 2012, 133, 101–112.

- Furbank, R.T.; Tester, M. Phenomics—Technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011, 16, 635–644.

- Fahlgren, N.; Gehan, M.A.; Baxter, I. Lights, camera, action: High-throughput plant phenotyping is ready for a close-up. Curr. Opin. Plant Biol. 2015, 24, 93–99.

- Chen, D.; Neumann, K.; Friedel, S.; Kilian, B.; Chen, M.; Altmann, T.; Klukas, C. Dissecting the phenotypic components of crop plant growth and drought responses based on high-throughput image analysis. Plant Cell 2014, 26, 4636–4655.

- Walter, T.; Shattuck, D.W.; Baldock, R.; Bastin, M.E.; Carpenter, A.E.; Duce, S.; Ellenberg, J.; Fraser, A.; Hamilton, N.; Pieper, S.; et al. Visualization of image data from cells to organisms. Nat. Methods 2010, 7, S26–S41.

- Oerke, E.C.; Steiner, U.; Dehne, H.W.; Lindenthal, M. Thermal imaging of cucumber leaves affected by downy mildew and environmental conditions. J. Exp. Bot. 2006, 57, 2121–2132.

- Chaerle, L.; Pineda, M.; Romero-Aranda, R.; Van Der Straeten, D.; Barón, M. Robotized thermal and chlorophyll fluorescence imaging of pepper mild mottle virus infection in Nicotiana benthamiana. Plant Cell Physiol. 2006, 47, 1323–1336.

- Zarco-Tejada, P.J.; Berni, J.A.J.; Suárez, L.; Sepulcre-Cantó, G.; Morales, F.; Miller, J.R. Imaging chlorophyll fluorescence with an airborne narrow-band multispectral camera for vegetation stress detection. Remote Sens. Environ. 2009, 113, 1262–1275.

- Jensen, T.; Apan, A.; Young, F.; Zeller, L. Detecting the attributes of a wheat crop using digital imagery acquired from a low-altitude platform. Comput. Electron. Agric. 2007, 59, 66–77.

- Montes, J.M.; Utz, H.F.; Schipprack, W.; Kusterer, B.; Muminovic, J.; Paul, C.; Melchinger, A.E. Near-infrared spectroscopy on combine harvesters to measure maize grain dry matter content and quality parameters. Plant Breed. 2006, 125, 591–595.

- Bai, G.; Ge, Y.; Hussain, W.; Baenziger, P.S.; Graef, G. A multi-sensor system for high throughput field phenotyping in soybean and wheat breeding. Comput. Electron. Agric. 2016, 128, 181–192.

- Chaerle, L.; Van Der Straeten, D. Imaging techniques and the early detection of plant stress. Trends Plant Sci. 2000, 5, 495–501.

- Gupta, S.; Ibaraki, Y.; Trivedi, P. Applications of RGB color imaging in plants. Plant Image Anal. 2014, 41–62.

- Montes, J.M.; Melchinger, A.E.; Reif, J.C. Novel throughput phenotyping platforms in plant genetic studies. Trends Plant Sci. 2007, 12, 433–436.

- Casanova, J.J.; O’Shaughnessy, S.A.; Evett, S.R.; Rush, C.M. Development of a wireless computer vision instrument to detect biotic stress in wheat. Sensors 2014, 14, 17753–17769.

- Kruse, O.M.O.; Prats-Montalbán, J.M.; Indahl, U.G.; Kvaal, K.; Ferrer, A.; Futsaether, C.M. Pixel classification methods for identifying and quantifying leaf surface injury from digital images. Comput. Electron. Agric. 2014, 108, 155–165.

- Shakoor, N.; Lee, S.; Mockler, T.C. High throughput phenotyping to accelerate crop breeding and monitoring of diseases in the field. Curr. Opin. Plant Biol. 2017, 38, 184–192.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Hardin, P.J.; Lulla, V.; Jensen, R.R.; Jensen, J.R. Small Unmanned Aerial Systems (sUAS) for environmental remote sensing: Challenges and opportunities revisited. GIScience Remote Sens. 2019, 56, 309–322.

- Mookerjee, M.; Vieira, D.; Chan, M.A.; Gil, Y.; Goodwin, C.; Shipley, T.F.; Tikoff, B. We need to talk: Facilitating communication between field-based geoscience and cyberinfrastructure communities. GSA Today 2015, 34–35.

- Stewart, C.A.; Simms, S.; Plale, B.; Link, M.; Hancock, D.Y.; Fox, G.C. What is cyberinfrastructure? In Proceedings of the 38th Annual ACM SIGUCCS Fall Conference: Navigation and Discovery, Norfolk, VA, USA, 24–27 October 2010; pp. 37–44.

- Madhavan, K.; Elmqvist, N.; Vorvoreanu, M.; Chen, X.; Wong, Y.; Xian, H.; Dong, Z.; Johri, A. DIA2: Web-based cyberinfrastructure for visual analysis of funding portfolios. IEEE Trans. Vis. Comput. Graph. 2014, 20, 1823–1832.

- Goff, S.A.; Vaughn, M.; McKay, S.; Lyons, E.; Stapleton, A.E.; Gessler, D.; Matasci, N.; Wang, L.; Hanlon, M.; Lenards, A.; et al. The iPlant collaborative: Cyberinfrastructure for plant biology. Front. Plant Sci. 2011, 2, 1–16.

- Aksulu, A.; Wade, M. A comprehensive review and synthesis of open source research. J. Assoc. Inf. Syst. 2010, 11, 576–656.

- Frankenfield, J. Artificial Intelligence (AI). Available online: https://www.investopedia.com/terms/a/artificial-intelligence-ai.asp (accessed on 9 February 2021).

- Paschen, U.; Pitt, C.; Kietzmann, J. Artificial intelligence: Building blocks and an innovation typology. Bus. Horiz. 2020, 63, 147–155.

- Frey, L.J. Artificial intelligence and integrated genotype–Phenotype identification. Genes 2019, 10, 18.

- Zhuang, Y.T.; Wu, F.; Chen, C.; Pan, Y.H. Challenges and opportunities: From big data to knowledge in AI 2.0. Front. Inf. Technol. Electron. Eng. 2017, 18, 3–14.

- Roscher, R.; Bohn, B.; Duarte, M.F.; Garcke, J. Explainable Machine Learning for Scientific Insights and Discoveries. IEEE Access 2020, 8, 42200–42216.

- Singh, A.; Ganapathysubramanian, B.; Singh, A.K.; Sarkar, S. Machine Learning for High-Throughput Stress Phenotyping in Plants. Trends Plant Sci. 2016, 21, 110–124.

- Rahaman, M.M.; Ahsan, M.A.; Chen, M. Data-Mining Techniques for Image-based Plant Phenotypic Traits Identification and Classification. Sci. Rep. 2019, 9, 1–11.

- Huang, K.Y. Application of artificial neural network for detecting Phalaenopsis seedling diseases using color and texture features. Comput. Electron. Agric. 2007, 57, 3–11.

- Wetterich, C.B.; Kumar, R.; Sankaran, S.; Belasque, J.; Ehsani, R.; Marcassa, L.G. A comparative study on application of computer vision and fluorescence imaging spectroscopy for detection of citrus huanglongbing disease in USA and Brazil. Opt. InfoBase Conf. Pap. 2013, 2013.

- Sommer, C.; Gerlich, D.W. Machine learning in cell biology-teaching computers to recognize phenotypes. J. Cell Sci. 2013, 126, 5529–5539.

- Sadeghi-Tehran, P.; Sabermanesh, K.; Virlet, N.; Hawkesford, M.J. Automated method to determine two critical growth stages of wheat: Heading and flowering. Front. Plant Sci. 2017, 8, 1–14.

- Brichet, N.; Fournier, C.; Turc, O.; Strauss, O.; Artzet, S.; Pradal, C.; Welcker, C.; Tardieu, F.; Cabrera-Bosquet, L. A robot-assisted imaging pipeline for tracking the growths of maize ear and silks in a high-throughput phenotyping platform. Plant Methods 2017, 13, 1–12.

- Wilf, P.; Zhang, S.; Chikkerur, S.; Little, S.A.; Wing, S.L.; Serre, T. Computer vision cracks the leaf code. Proc. Natl. Acad. Sci. USA 2016, 113, 3305–3310.

- Sabanci, K.; Toktas, A.; Kayabasi, A. Grain classifier with computer vision using adaptive neuro-fuzzy inference system. J. Sci. Food Agric. 2017, 97, 3994–4000.

- Sabanci, K.; Kayabasi, A.; Toktas, A. Computer vision-based method for classification of wheat grains using artificial neural network. J. Sci. Food Agric. 2017, 97, 2588–2593. [Google Scholar] [CrossRef] [PubMed]

- Lin, P.; Li, X.L.; Chen, Y.M.; He, Y. A Deep Convolutional Neural Network Architecture for Boosting Image Discrimination Accuracy of Rice Species. Food Bioprocess Technol. 2018, 11, 765–773. [Google Scholar] [CrossRef]

- Singh, A.K.; Ganapathysubramanian, B.; Sarkar, S.; Singh, A. Deep Learning for Plant Stress Phenotyping: Trends and Future Perspectives. Trends Plant Sci. 2018, 23, 883–898. [Google Scholar] [CrossRef]

- Pound, M.P.; Atkinson, J.A.; Townsend, A.J.; Wilson, M.H.; Griffiths, M.; Jackson, A.S.; Bulat, A.; Tzimiropoulos, G.; Wells, D.M.; Murchie, E.H.; et al. Deep machine learning provides state-of-the-art performance in image-based plant phenotyping. GigaScience 2017, 6, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 2017, 17, 2022. [Google Scholar] [CrossRef] [PubMed]

- Abdalla, A.; Cen, H.; Wan, L.; Rashid, R.; Weng, H.; Zhou, W.; He, Y. Fine-tuning convolutional neural network with transfer learning for semantic segmentation of ground-level oilseed rape images in a field with high weed pressure. Comput. Electron. Agric. 2019, 167, 105091. [Google Scholar] [CrossRef]

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Vali, E.; Fountas, S. Combining generative adversarial networks and agricultural transfer learning for weeds identification. Biosyst. Eng. 2021, 204, 79–89. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. Impact of dataset size and variety on the effectiveness of deep learning and transfer learning for plant disease classification. Comput. Electron. Agric. 2018, 153, 46–53. [Google Scholar] [CrossRef]

- Wang, G.; Sun, Y.; Wang, J. Automatic Image-Based Plant Disease Severity Estimation Using Deep Learning. Comput. Intell. Neurosci. 2017, 2017. [Google Scholar] [CrossRef]

- Buzzy, M.; Thesma, V.; Davoodi, M.; Velni, J.M. Real-time plant leaf counting using deep object detection networks. Sensors 2020, 20, 6896. [Google Scholar] [CrossRef]

- Ghosal, S.; Zheng, B.; Chapman, S.C.; Potgieter, A.B.; Jordan, D.R.; Wang, X.; Singh, A.K.; Singh, A.; Hirafuji, M.; Ninomiya, S.; et al. A Weakly Supervised Deep Learning Framework for Sorghum Head Detection and Counting. Plant Phenomics 2019, 2019, 1–14. [Google Scholar] [CrossRef] [PubMed]

- Aich, S.; Stavness, I. Leaf counting with deep convolutional and deconvolutional networks. In Proceedings of the IEEE International Conference on Computer Vision (Workshops), Venice, Italy, 22–29 October 2017; pp. 2080–2089. [Google Scholar] [CrossRef]

- Wang, X.; Xuan, H.; Evers, B.; Shrestha, S.; Pless, R.; Poland, J. High-throughput phenotyping with deep learning gives insight into the genetic architecture of flowering time in wheat. GigaScience 2019, 8, 1–11. [Google Scholar] [CrossRef]

- Ghosal, S.; Blystone, D.; Singh, A.K.; Ganapathysubramanian, B.; Singh, A.; Sarkar, S. An explainable deep machine vision framework for plant stress phenotyping. Proc. Natl. Acad. Sci. USA 2018, 115, 4613–4618.

- Chaerle, L.; Van Der Straeten, D. Seeing is believing: Imaging techniques to monitor plant health. Biochim. Biophys. Acta Gene Struct. Expr. 2001, 1519, 153–166.

- Perez-Sanz, F.; Navarro, P.J.; Egea-Cortines, M. Plant phenomics: An overview of image acquisition technologies and image data analysis algorithms. GigaScience 2017, 6, 1–18.

- Cen, H.; Weng, H.; Yao, J.; He, M.; Lv, J.; Hua, S.; Li, H.; He, Y. Chlorophyll fluorescence imaging uncovers photosynthetic fingerprint of citrus Huanglongbing. Front. Plant Sci. 2017, 8, 1–11.

- Lichtenthaler, H.K.; Langsdorf, G.; Lenk, S.; Buschmann, C. Chlorophyll fluorescence imaging of photosynthetic activity with the flash-lamp fluorescence imaging system. Photosynthetica 2005, 43, 355–369.

- Ehlert, B.; Hincha, D.K. Chlorophyll fluorescence imaging accurately quantifies freezing damage and cold acclimation responses in Arabidopsis leaves. Plant Methods 2008, 4, 1–7.

- Zheng, H.; Zhou, X.; He, J.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Early season detection of rice plants using RGB, NIR-G-B and multispectral images from unmanned aerial vehicle (UAV). Comput. Electron. Agric. 2020, 169, 105223.

- Padmavathi, K.; Thangadurai, K. Implementation of RGB and grayscale images in plant leaves disease detection—Comparative study. Indian J. Sci. Technol. 2016, 9, 4–9.

- Wang, X.; Yang, W.; Wheaton, A.; Cooley, N.; Moran, B. Automated canopy temperature estimation via infrared thermography: A first step towards automated plant water stress monitoring. Comput. Electron. Agric. 2010, 73, 74–83.

- Munns, R.; James, R.A.; Sirault, X.R.R.; Furbank, R.T.; Jones, H.G. New phenotyping methods for screening wheat and barley for beneficial responses to water deficit. J. Exp. Bot. 2010, 61, 3499–3507.

- Urrestarazu, M. Infrared thermography used to diagnose the effects of salinity in a soilless culture. Quant. InfraRed Thermogr. J. 2013, 10, 1–8.

- Fittschen, U.E.A.; Kunz, H.H.; Höhner, R.; Tyssebotn, I.M.B.; Fittschen, A. A new micro X-ray fluorescence spectrometer for in vivo elemental analysis in plants. X-ray Spectrom. 2017, 46, 374–381.

- Chow, T.H.; Tan, K.M.; Ng, B.K.; Razul, S.G.; Tay, C.M.; Chia, T.F.; Poh, W.T. Diagnosis of virus infection in orchid plants with high-resolution optical coherence tomography. J. Biomed. Opt. 2009, 14, 014006.

- Garbout, A.; Munkholm, L.J.; Hansen, S.B.; Petersen, B.M.; Munk, O.L.; Pajor, R. The use of PET/CT scanning technique for 3D visualization and quantification of real-time soil/plant interactions. Plant Soil 2012, 352, 113–127.

- Ač, A.; Malenovský, Z.; Hanuš, J.; Tomášková, I.; Urban, O.; Marek, M.V. Near-distance imaging spectroscopy investigating chlorophyll fluorescence and photosynthetic activity of grassland in the daily course. Funct. Plant Biol. 2009, 36, 1006–1015.

- Vigneau, N.; Ecarnot, M.; Rabatel, G.; Roumet, P. Potential of field hyperspectral imaging as a non destructive method to assess leaf nitrogen content in Wheat. Field Crops Res. 2011, 122, 25–31.

- Behmann, J.; Steinrücken, J.; Plümer, L. Detection of early plant stress responses in hyperspectral images. ISPRS J. Photogramm. Remote Sens. 2014, 93, 98–111.

- Prey, L.; von Bloh, M.; Schmidhalter, U. Evaluating RGB imaging and multispectral active and hyperspectral passive sensing for assessing early plant vigor in winter wheat. Sensors 2018, 18, 2931.

- Li, L.; Zhang, Q.; Huang, D. A review of imaging techniques for plant phenotyping. Sensors 2014, 14, 20078–20111.

- Han, X.F.; Laga, H.; Bennamoun, M. Image-based 3D Object Reconstruction: State-of-the-Art and Trends in the Deep Learning Era. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1578–1604.

- Nguyen, C.V.; Fripp, J.; Lovell, D.R.; Furbank, R.; Kuffner, P.; Daily, H.; Sirault, X. 3D scanning system for automatic high-resolution plant phenotyping. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 30 November–2 December 2016.

- Matovic, M.D. Biomass: Detection, Production and Usage; BoD—Books on Demand: Norderstedt, Germany, 2011; ISBN 9533074922.

- Liu, H.; Bruning, B.; Garnett, T.; Berger, B. Hyperspectral imaging and 3D technologies for plant phenotyping: From satellite to close-range sensing. Comput. Electron. Agric. 2020, 175, 105621.

- Zhu, H.; Chu, B.; Fan, Y.; Tao, X.; Yin, W.; He, Y. Hyperspectral Imaging for Predicting the Internal Quality of Kiwifruits Based on Variable Selection Algorithms and Chemometric Models. Sci. Rep. 2017, 7, 1–13.

- Zhang, M.; Li, G. Visual detection of apple bruises using AdaBoost algorithm and hyperspectral imaging. Int. J. Food Prop. 2018, 21, 1598–1607.

- Gu, Q.; Sheng, L.; Zhang, T.; Lu, Y.; Zhang, Z.; Zheng, K.; Hu, H.; Zhou, H. Early detection of tomato spotted wilt virus infection in tobacco using the hyperspectral imaging technique and machine learning algorithms. Comput. Electron. Agric. 2019, 167, 105066.

- Ramesh, V. A Review on the Application of Deep Learning in Thermography. Int. J. Eng. Manag. Res. 2017, 7, 489–493.

- Pineda, M.; Barón, M.; Pérez-Bueno, M.L. Thermal imaging for plant stress detection and phenotyping. Remote Sens. 2021, 13, 68.

- Messina, G.; Modica, G. Applications of UAV thermal imagery in precision agriculture: State of the art and future research outlook. Remote Sens. 2020, 12, 1491.

- Maes, W.H.; Huete, A.R.; Steppe, K. Optimizing the processing of UAV-based thermal imagery. Remote Sens. 2017, 9, 476.

- Bang, H.T.; Park, S.; Jeon, H. Defect identification in composite materials via thermography and deep learning techniques. Compos. Struct. 2020, 246, 112405.

- Moshou, D.; Bravo, C.; West, J.; Wahlen, S.; McCartney, A.; Ramon, H. Automatic detection of “yellow rust” in wheat using reflectance measurements and neural networks. Comput. Electron. Agric. 2004, 44, 173–188.

- Flavel, R.J.; Guppy, C.N.; Tighe, M.; Watt, M.; McNeill, A.; Young, I.M. Non-destructive quantification of cereal roots in soil using high-resolution X-ray tomography. J. Exp. Bot. 2012, 63, 2503–2511.

- Gregory, P.J.; Hutchison, D.J.; Read, D.B.; Jenneson, P.M.; Gilboy, W.B.; Morton, E.J. Non-invasive imaging of roots with high resolution X-ray micro-tomography. Plant Soil 2003, 255, 351–359.

- Yang, W.; Xu, X.; Duan, L.; Luo, Q.; Chen, S.; Zeng, S.; Liu, Q. High-throughput measurement of rice tillers using a conveyor equipped with X-ray computed tomography. Rev. Sci. Instrum. 2011, 82, 1–8.

- Atkinson, J.A.; Pound, M.P.; Bennett, M.J.; Wells, D.M. Uncovering the hidden half of plants using new advances in root phenotyping. Curr. Opin. Biotechnol. 2019, 55, 1–8.

- Shi, F.; Wang, J.; Shi, J.; Wu, Z.; Wang, Q.; Tang, Z.; He, K.; Shi, Y.; Shen, D. Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2020, 14, 4–15.

- Atkins, D.E.; Droegemeier, K.K.; Feldman, S.I.; García Molina, H.; Klein, M.L.; Messerschmitt, D.G.; Messina, P.; Ostriker, J.P.; Wright, M.H.; et al. Revolutionizing Science and Engineering through Cyberinfrastructure. Science 2003, 84.

- Lee, C.P.; Dourish, P.; Mark, G. The human infrastructure of cyberinfrastructure. In Proceedings of the 2006 20th Anniversary Conference on Computer Supported Cooperative Work, Banff, AB, Canada, 4–8 November 2006; pp. 483–492.

- UIC Advanced Cyberinfrastructure for Education and Research. Available online: https://acer.uic.edu/get-started/resource-pricing/ (accessed on 4 September 2020).

- Yang, C.; Raskin, R.; Goodchild, M.; Gahegan, M. Geospatial Cyberinfrastructure: Past, present and future. Comput. Environ. Urban Syst. 2010, 34, 264–277.

- Michener, W.K.; Allard, S.; Budden, A.; Cook, R.B.; Douglass, K.; Frame, M.; Kelling, S.; Koskela, R.; Tenopir, C.; Vieglais, D.A. Participatory design of DataONE-Enabling cyberinfrastructure for the biological and environmental sciences. Ecol. Inform. 2012, 11, 5–15.

- Wang, L.; Chen, D.; Hu, Y.; Ma, Y.; Wang, J. Towards enabling Cyberinfrastructure as a Service in Clouds. Comput. Electr. Eng. 2013, 39, 3–14.

- Kvilekval, K.; Fedorov, D.; Obara, B.; Singh, A.; Manjunath, B.S. Bisque: A platform for bioimage analysis and management. Bioinformatics 2009, 26, 544–552.

- Shah, S.K. Motivation, governance, and the viability of hybrid forms in open source software development. Manag. Sci. 2006, 52, 1000–1014.

- Olson, D.L.; Rosacker, K. Crowdsourcing and open source software participation. Serv. Bus. 2013, 7, 499–511.

- Bauckhage, C.; Kersting, K. Data Mining and Pattern Recognition in Agriculture. KI Künstl. Intell. 2013, 27, 313–324.

- Kuhlgert, S.; Austic, G.; Zegarac, R.; Osei-Bonsu, I.; Hoh, D.; Chilvers, M.I.; Roth, M.G.; Bi, K.; TerAvest, D.; Weebadde, P.; et al. MultispeQ Beta: A tool for large-scale plant phenotyping connected to the open photosynQ network. R. Soc. Open Sci. 2016, 3.

- Gehan, M.A.; Fahlgren, N.; Abbasi, A.; Berry, J.C.; Callen, S.T.; Chavez, L.; Doust, A.N.; Feldman, M.J.; Gilbert, K.B.; Hodge, J.G.; et al. PlantCV v2: Image analysis software for high-throughput plant phenotyping. PeerJ 2017, 2017, 1–23.

- Tzutalin LabelImg. Available online: https://github.com/tzutalin/labelImg (accessed on 14 September 2020).

- Ubbens, J.R.; Stavness, I. Deep plant phenomics: A deep learning platform for complex plant phenotyping tasks. Front. Plant Sci. 2017, 8.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv 2016, arXiv:1603.04467.

- Ramcharan, A.; McCloskey, P.; Baranowski, K.; Mbilinyi, N.; Mrisho, L.; Ndalahwa, M.; Legg, J.; Hughes, D.P. A mobile-based deep learning model for cassava disease diagnosis. Front. Plant Sci. 2019, 10, 1–8.

- Merz, T.; Chapman, S. Autonomous Unmanned Helicopter System for Remote Sensing Missions in Unknown Environments. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXVIII-1, 143–148.

- Andrade-Sanchez, P.; Gore, M.A.; Heun, J.T.; Thorp, K.R.; Carmo-Silva, A.E.; French, A.N.; Salvucci, M.E.; White, J.W. Development and evaluation of a field-based high-throughput phenotyping platform. Funct. Plant Biol. 2014, 41, 68–79.

- Chawade, A.; Van Ham, J.; Blomquist, H.; Bagge, O.; Alexandersson, E.; Ortiz, R. High-throughput field-phenotyping tools for plant breeding and precision agriculture. Agronomy 2019, 9, 258.

- Virlet, N.; Sabermanesh, K.; Sadeghi-Tehran, P.; Hawkesford, M.J. Field Scanalyzer: An automated robotic field phenotyping platform for detailed crop monitoring. Funct. Plant Biol. 2017, 44, 143–153.

- IPPN International Plant Phenotyping Network. Available online: https://www.plant-phenotyping.org/ (accessed on 13 April 2020).

- APPF Australian Plant Phenomics Facility. Available online: https://www.plantphenomics.org.au/ (accessed on 13 April 2020).

- Cooper, C.B.; Shirk, J.; Zuckerberg, B. The Invisible Prevalence of Citizen Science in Global Research: Migratory Birds and Climate Change. PLoS ONE 2014, 9, e106508.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).