Trends of Human-Robot Collaboration in Industry Contexts: Handover, Learning, and Metrics

Abstract

1. Introduction

1.1. Analysis of Past Reviews

1.2. Purpose and Contribution

1.3. Paper Organization

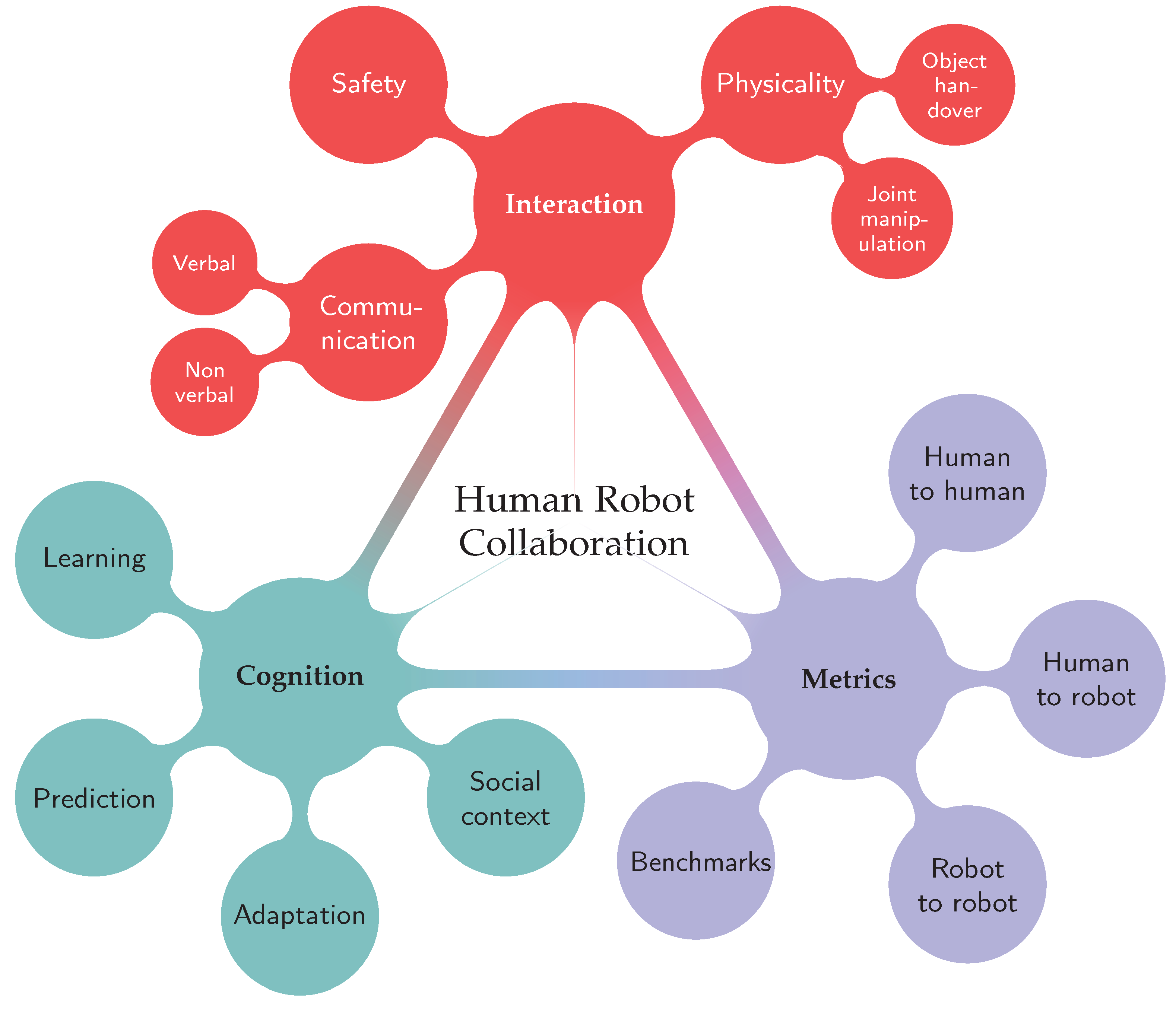

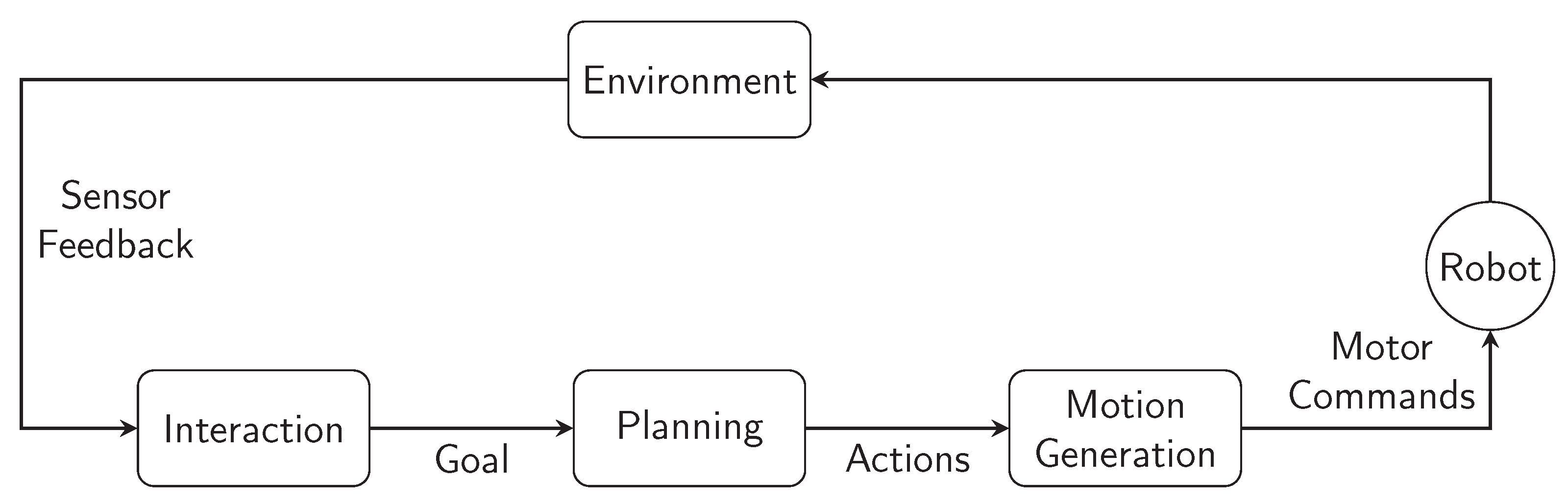

2. Human-Robot Collaboration Concepts

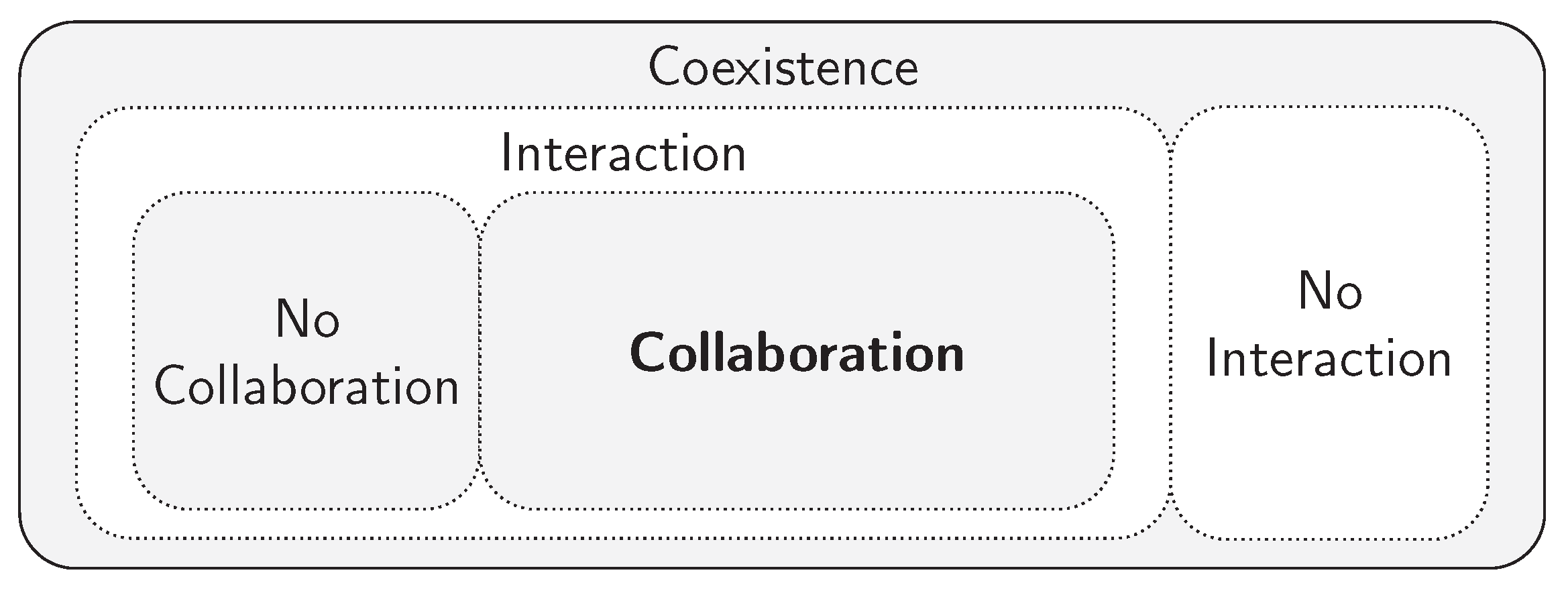

2.1. Interaction vs. Collaboration

2.2. Interfaces of Communication

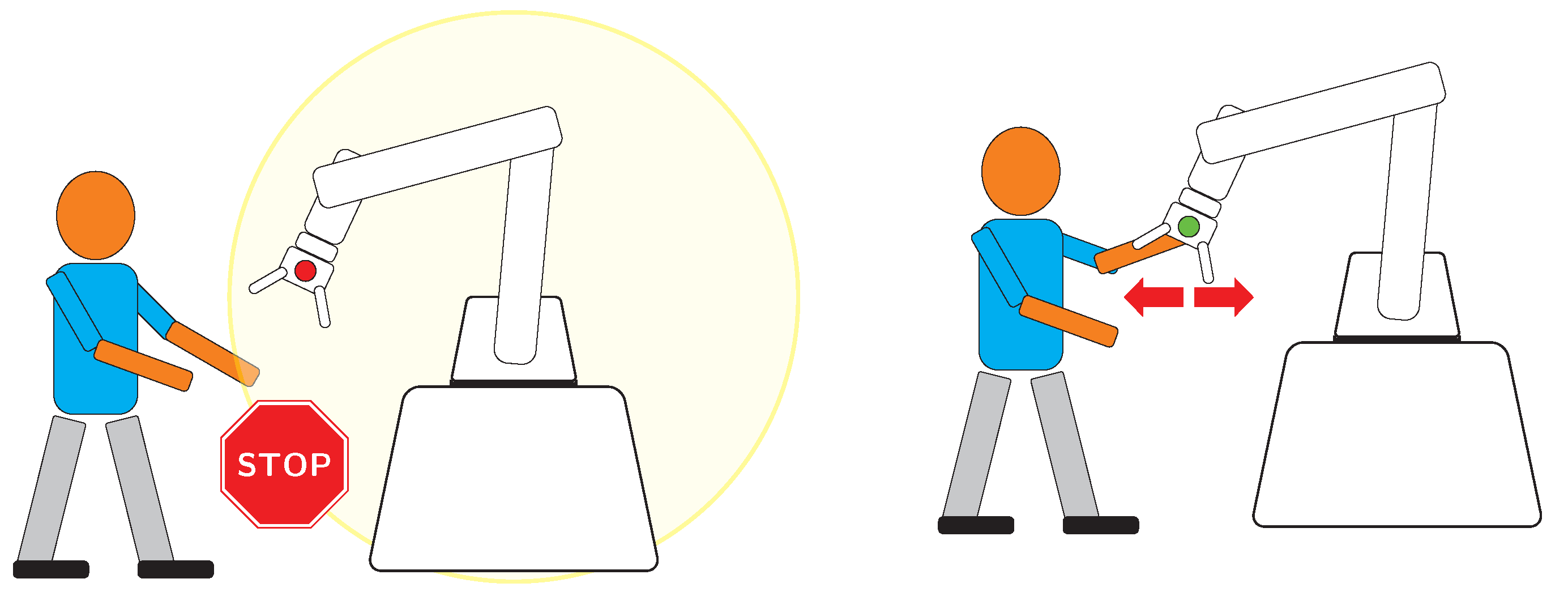

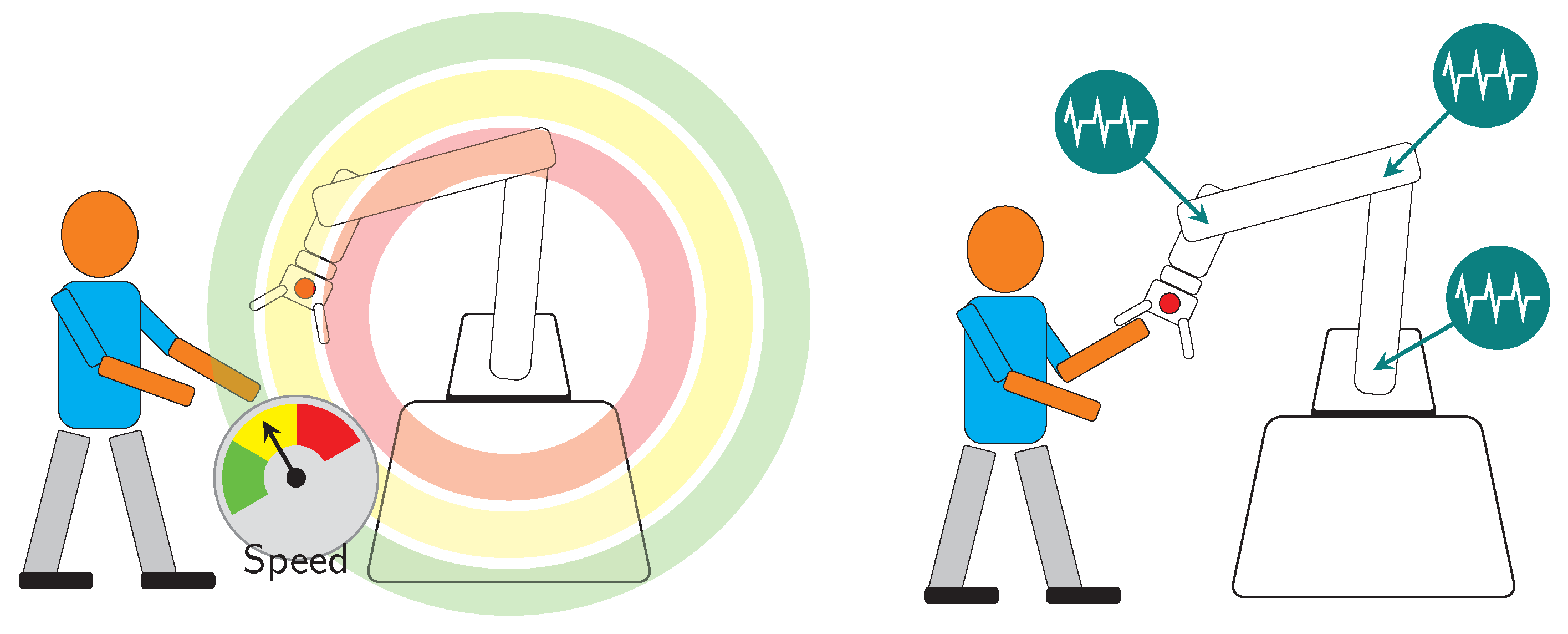

2.3. Safety in HRC

2.4. Physical Interaction

3. Current Advances and Trends

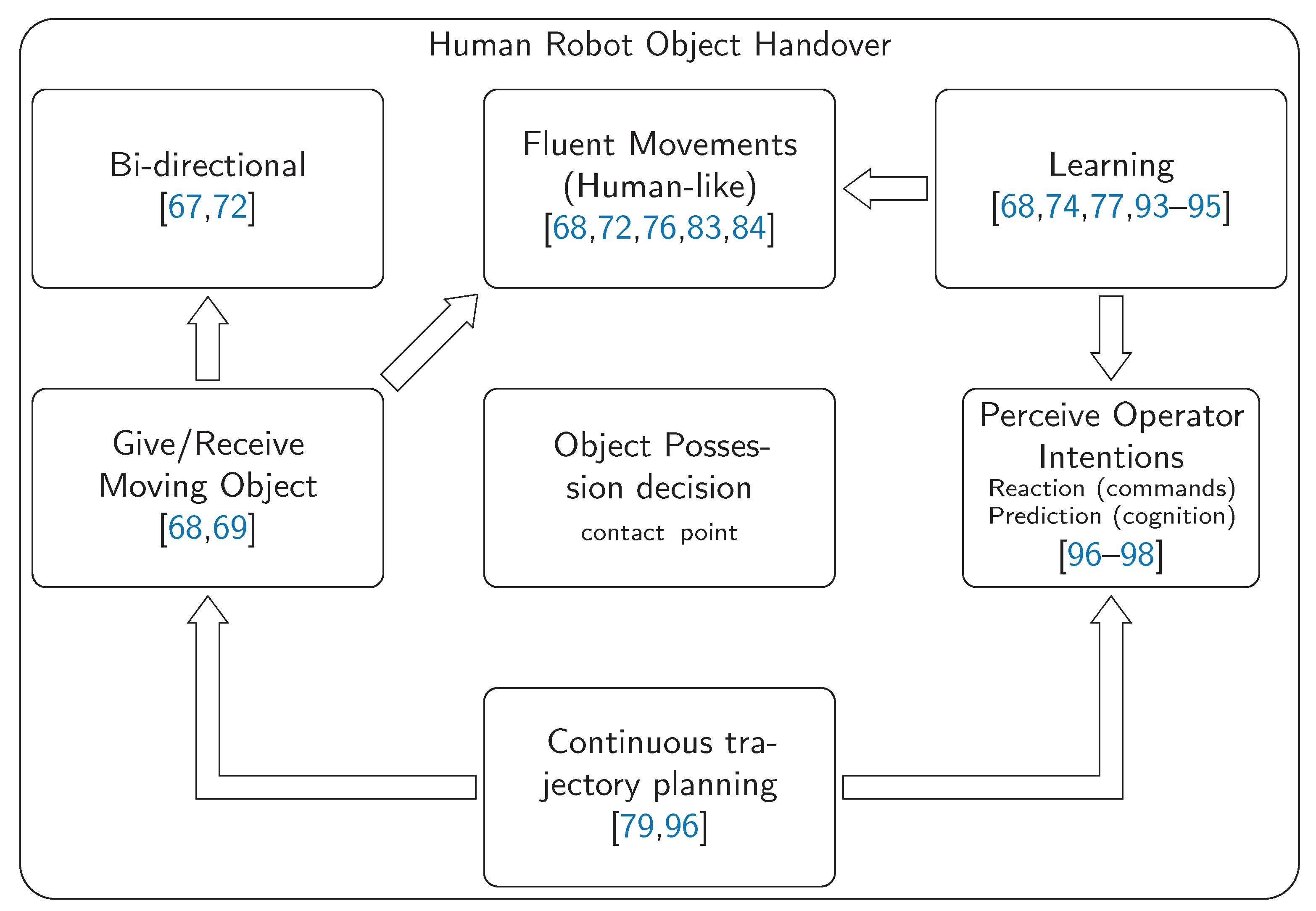

3.1. Object Handover

3.2. Robot Learning

3.3. Metrics in HRC

4. Future Challenges

4.1. Open Research Questions in Object Handover

4.2. Limitations and Opportunities for Cognitive Collaboration

4.2.1. Representations and Understanding of the World

4.2.2. Hierarchical Task Decomposition

4.2.3. Skill Reusability

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chandrasekaran, B.; Conrad, J.M. Human-robot collaboration: A survey. In Proceedings of the SoutheastCon 2015, Fort Lauderdale, FL, USA, 9–12 April 2015; pp. 1–8.

- Ajoudani, A.; Zanchettin, A.M.; Ivaldi, S.; Albu-Schäffer, A.; Kosuge, K.; Khatib, O. Progress and Prospects of the Human-Robot Collaboration. Auton. Robot. 2018, 42.

- Hentout, A.; Aouache, M.; Maoudj, A.; Akli, I. Human–robot interaction in industrial collaborative robotics: A literature review of the decade 2008–2017. Adv. Robot. 2019, 33, 764–799.

- El Zaatari, S.; Marei, M.; Li, W.; Usman, Z. Cobot programming for collaborative industrial tasks: An overview. Robot. Auton. Syst. 2019, 116, 162–180.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human-robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

- Matheson, E.; Minto, R.; Zampieri, E.G.G.; Faccio, M.; Rosati, G. Human-Robot Collaboration in Manufacturing Applications: A Review. Robotics 2019, 8, 100.

- Kumar, S.; Savur, C.; Sahin, F. Survey of Human-Robot Collaboration in Industrial Settings: Awareness, Intelligence, and Compliance. IEEE Trans. Syst. Man Cybern. Syst. 2021.

- Ogenyi, U.; Liu, J.; Yang, C.; Ju, Z.; Liu, H. Physical Human-Robot Collaboration: Robotic Systems, Learning Methods, Collaborative Strategies, Sensors, and Actuators. IEEE Trans. Cybern. 2019, 1–14.

- Grosz, B.J. Collaborative Systems (AAAI-94 Presidential Address). AI Mag. 1996, 17, 67.

- Green, S.A.; Billinghurst, M.; Chen, X.; Chase, J.G. Human-Robot Collaboration: A Literature Review and Augmented Reality Approach in Design. Int. J. Adv. Robot. Syst. 2008, 5, 1.

- Bauer, A.; Wollherr, D.; Buss, M. Human-Robot Collaboration: A Survey. Int. J. Humanoid Robot. 2008, 5, 47–66.

- De Luca, A.; Flacco, F. Integrated control for pHRI: Collision avoidance, detection, reaction and collaboration. In Proceedings of the 2012 4th IEEE RAS EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 288–295.

- Rozo, L.; Ben Amor, H.; Calinon, S.; Dragan, A.; Lee, D. Special issue on learning for human–robot collaboration. Auton. Robot. 2018, 42.

- Liu, H.; Wang, L. Gesture recognition for human-robot collaboration: A review. Int. J. Ind. Ergon. 2018, 68, 355–367.

- Chai, J.Y.; She, L.; Fang, R.; Ottarson, S.; Littley, C.; Liu, C.; Hanson, K. Collaborative Effort towards Common Ground in Situated Human-Robot Dialogue. In Proceedings of the 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Bielefeld, Germany, 3–6 March 2014; pp. 33–40.

- Maurtua, I.; Fernández, I.; Tellaeche, A.; Kildal, J.; Susperregi, L.; Ibarguren, A.; Sierra, B. Natural multimodal communication for human–robot collaboration. Int. J. Adv. Robot. Syst. 2017, 14, 1729881417716043.

- Coupeté, E.; Moutarde, F.; Manitsaris, S. A User-Adaptive Gesture Recognition System Applied to Human-Robot Collaboration in Factories. In MOCO ’16: Proceedings of the 3rd International Symposium on Movement and Computing; Association for Computing Machinery: New York, NY, USA, 2016.

- Peppoloni, L.; Brizzi, F.; Avizzano, C.; Ruffaldi, E. Immersive ROS-integrated framework for robot teleoperation. In Proceedings of the 2015 IEEE Symposium on 3D User Interfaces (3DUI), Arles, France, 23–24 March 2015; pp. 177–178.

- Barattini, P.; Morand, C.; Robertson, N.M. A proposed gesture set for the control of industrial collaborative robots. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 132–137.

- Mitra, S.; Acharya, T. Gesture Recognition: A Survey. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2007, 37, 311–324.

- Akkaladevi, S.C.; Heindl, C. Action recognition for human robot interaction in industrial applications. In Proceedings of the 2015 IEEE International Conference on Computer Graphics, Vision and Information Security (CGVIS), Bhubaneswar, India, 2–3 November 2015; pp. 94–99.

- Ramírez-Amaro, K.; Beetz, M.; Cheng, G. Understanding the intention of human activities through semantic perception: Observation, understanding and execution on a humanoid robot. Adv. Robot. 2015, 29, 345–362.

- Gustavsson, P.; Syberfeldt, A.; Brewster, R.; Wang, L. Human-robot Collaboration Demonstrator Combining Speech Recognition and Haptic Control. Procedia CIRP 2017, 63, 396–401.

- Kragic, D.; Gustafson, J.; Karaoguz, H.; Jensfelt, P.; Krug, R. Interactive, Collaborative Robots: Challenges and Opportunities. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, Stockholm, Sweden, 13–19 July 2018; pp. 18–25.

- Stenmark, M.; Nugues, P. Natural language programming of industrial robots. In Proceedings of the 2013 44th International Symposium on Robotics, ISR 2013, Seoul, Korea, 24–26 October 2013; pp. 1–5.

- Nakata, S.; Kobayashi, H.; Kumata, M.; Suzuki, S. Human speech ontology changes in virtual collaborative work. In Proceedings of the 4th International Conference on Human System Interaction, HSI 2011, Yokohama, Japan, 19–21 May 2011; pp. 363–368.

- Yamaguchi, A.; Atkeson, C.G. Combining finger vision and optical tactile sensing: Reducing and handling errors while cutting vegetables. In Proceedings of the 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, 15–17 November 2016; pp. 1045–1051.

- Kawasetsu, T.; Horii, T.; Ishihara, H.; Asada, M. Mexican-Hat-Like Response in a Flexible Tactile Sensor Using a Magnetorheological Elastomer. Sensors 2018, 18, 587.

- Kaboli, M.; Cheng, G. Novel Tactile Descriptors and a Tactile Transfer Learning Technique for Active In-Hand Object Recognition via Texture Properties. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots-Workshop Tactile Sensing for Manipulation: New Progress and Challenges, Cancun, Mexico, 15–17 November 2016.

- Kaboli, M.; Cheng, G. Robust Tactile Descriptors for Discriminating Objects From Textural Properties via Artificial Robotic Skin. IEEE Trans. Robot. 2018, 34, 985–1003.

- Yang, C.; Zeng, C.; Liang, P.; Li, Z.; Li, R.; Su, C. Interface Design of a Physical Human–Robot Interaction System for Human Impedance Adaptive Skill Transfer. IEEE Trans. Autom. Sci. Eng. 2018, 15, 329–340.

- Mangukiya, Y.; Purohit, B.; George, K. Electromyography (EMG) sensor controlled assistive orthotic robotic arm for forearm movement. In Proceedings of the 2017 IEEE Sensors Applications Symposium (SAS), Glassboro, NJ, USA, 13–15 March 2017; pp. 1–4.

- Faidallah, E.M.; Hossameldin, Y.H.; Abd Rabbo, S.M.; El-Mashad, Y.A. Control and modeling a robot arm via EMG and flex signals. In Proceedings of the 15th International Workshop on Research and Education in Mechatronics (REM), El Gouna, Egypt, 9–11 September 2014; pp. 1–8.

- Tzallas, A.T.; Giannakeas, N.; Zoulis, K.N.; Tsipouras, M.G.; Glavas, E.; Tzimourta, K.D.; Astrakas, L.G.; Konitsiotis, S. EEG Classification and Short-Term Epilepsy Prognosis Using Brain Computer Interface Software. In Proceedings of the 2017 IEEE 30th International Symposium on Computer-Based Medical Systems (CBMS), Thessaloniki, Greece, 22–24 June 2017; pp. 349–353.

- Guerin, K.R.; Riedel, S.D.; Bohren, J.; Hager, G.D. Adjutant: A framework for flexible human-machine collaborative systems. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 1392–1399.

- Pedersen, M.R.; Herzog, D.L.; Krüger, V. Intuitive skill-level programming of industrial handling tasks on a mobile manipulator. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4523–4530.

- Steinmetz, F.; Wollschläger, A.; Weitschat, R. RAZER—A HRI for Visual Task-Level Programming and Intuitive Skill Parameterization. IEEE Robot. Autom. Lett. 2018, 3, 1362–1369.

- Krüger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann. Manuf. Technol. 2009.

- Tsarouchi, P.; Makris, S.; Chryssolouris, G. Human–robot interaction review and challenges on task planning and programming. Int. J. Comput. Integr. Manuf. 2016, 29, 916–931.

- Robla-Gómez, S.; Becerra, V.M.; Llata, J.R.; González-Sarabia, E.; Torre-Ferrero, C.; Pérez-Oria, J. Working Together: A Review on Safe Human-Robot Collaboration in Industrial Environments. IEEE Access 2017, 5, 26754–26773.

- Bi, Z.M.; Luo, M.; Miao, Z.; Zhang, B.; Zhang, W.J.; Wang, L. Safety assurance mechanisms of collaborative robotic systems in manufacturing. Robot. Comput. Integr. Manuf. 2021.

- Gualtieri, L.; Rauch, E.; Vidoni, R. Emerging research fields in safety and ergonomics in industrial collaborative robotics: A systematic literature review. Robot. Comput. Integr. Manuf. 2021.

- Valori, M.; Scibilia, A.; Fassi, I.; Saenz, J.; Behrens, R.; Herbster, S.; Bidard, C.; Lucet, E.; Magisson, A.; Schaake, L.; et al. Validating safety in human-robot collaboration: Standards and new perspectives. Robotics 2021, 10, 65.

- Zanchettin, A.M.; Ceriani, N.M.; Rocco, P.; Ding, H.; Matthias, B. Safety in Human-Robot Collaborative Manufacturing Environments: Metrics and Control. IEEE Trans. Autom. Sci. Eng. 2016.

- Mauro, S.; Scimmi, L.S.; Pastorelli, S. Collision Avoidance System for Collaborative Robotics. In Proceedings of the International Conference on Robotics in Alpe-Adria Danube Region, Turin, Italy, 21–23 June 2017; pp. 344–352.

- Ragaglia, M.; Zanchettin, A.M.; Rocco, P. Trajectory generation algorithm for safe human-robot collaboration based on multiple depth sensor measurements. Mechatronics 2018.

- Scimmi, L.S.; Melchiorre, M.; Mauro, S.; Pastorelli, S. Multiple collision avoidance between human limbs and robot links algorithm in collaborative tasks. In Proceedings of the 15th International Conference on Informatics in Control, Automation and Robotics, Porto, Portugal, 29–31 July 2018; pp. 301–308.

- Kanazawa, A.; Kinugawa, J.; Kosuge, K. Adaptive Motion Planning for a Collaborative Robot Based on Prediction Uncertainty to Enhance Human Safety and Work Efficiency. IEEE Trans. Robot. 2019.

- Melchiorre, M.; Scimmi, L.S.; Pastorelli, S.P.; Mauro, S. Collison Avoidance using Point Cloud Data Fusion from Multiple Depth Sensors: A Practical Approach. In Proceedings of the 2019 23rd International Conference on Mechatronics Technology, ICMT, Salerno, Italy, 23–26 October 2019.

- Nikolakis, N.; Maratos, V.; Makris, S. A cyber physical system (CPS) approach for safe human-robot collaboration in a shared workplace. Robot. Comput. Integr. Manuf. 2019.

- Scimmi, L.S.; Melchiorre, M.; Mauro, S.; Pastorelli, S.P. Implementing a Vision-Based Collision Avoidance Algorithm on a UR3 Robot. In Proceedings of the 2019 23rd International Conference on Mechatronics Technology, ICMT, Salerno, Italy, 23–26 October 2019.

- Zanchettin, A.M.; Rocco, P.; Chiappa, S.; Rossi, R. Towards an optimal avoidance strategy for collaborative robots. Robot. Comput. Integr. Manuf. 2019.

- Huber, G.; Wollherr, D. An Online Trajectory Generator on SE(3) for Human-Robot Collaboration. Robotica 2020.

- Liu, Z.; Wang, X.; Cai, Y.; Xu, W.; Liu, Q.; Zhou, Z.; Pham, D.T. Dynamic risk assessment and active response strategy for industrial human-robot collaboration. Comput. Ind. Eng. 2020.

- Murali, P.K.; Darvish, K.; Mastrogiovanni, F. Deployment and evaluation of a flexible human-robot collaboration model based on AND/OR graphs in a manufacturing environment. Intell. Serv. Robot. 2020.

- Liu, H.; Wang, L. Collision-free human-robot collaboration based on context awareness. Robot. Comput. Integr. Manuf. 2021.

- Pupa, A.; Arrfou, M.; Andreoni, G.; Secchi, C. A Safety-Aware Kinodynamic Architecture for Human-Robot Collaboration. IEEE Robot. Autom. Lett. 2021.

- Scimmi, L.S.; Melchiorre, M.; Troise, M.; Mauro, S.; Pastorelli, S. A practical and effective layout for a safe human-robot collaborative assembly task. Appl. Sci. 2021, 11, 1763.

- Dahiya, R.S.; Mittendorfer, P.; Valle, M.; Cheng, G.; Lumelsky, V.J. Directions Toward Effective Utilization of Tactile Skin: A Review. IEEE Sens. J. 2013, 13, 4121–4138.

- Björkman, M.; Bekiroglu, Y.; Högman, V.; Kragic, D. Enhancing visual perception of shape through tactile glances. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 3180–3186.

- Li, M.; Bekiroglu, Y.; Kragic, D.; Billard, A. Learning of grasp adaptation through experience and tactile sensing. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 3339–3346.

- Li, K.; Fang, Y.; Zhou, Y.; Liu, H. Non-Invasive Stimulation-Based Tactile Sensation for Upper-Extremity Prosthesis: A Review. IEEE Sens. J. 2017, 17, 2625–2635.

- Gienger, M.; Ruiken, D.; Bates, T.; Regaieg, M.; Meißner, M.; Kober, J.; Seiwald, P.; Hildebrandt, A. Human-Robot Cooperative Object Manipulation with Contact Changes. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1354–1360.

- Noohi, E.; Žefran, M.; Patton, J.L. A Model for Human–Human Collaborative Object Manipulation and Its Application to Human–Robot Interaction. IEEE Trans. Robot. 2016, 32, 880–896.

- Magrini, E.; Flacco, F.; De Luca, A. Control of generalized contact motion and force in physical human-robot interaction. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2298–2304.

- Wojtara, T.; Uchihara, M.; Murayama, H.; Shimoda, S.; Sakai, S.; Fujimoto, H.; Kimura, H. Human–robot collaboration in precise positioning of a three-dimensional object. Automatica 2009, 45, 333–342.

- Roy, S.; Edan, Y. Investigating joint-action in short-cycle repetitive handover tasks: The role of giver versus receiver and its implications for human-robot collaborative system design. Int. J. Soc. Robot. 2018.

- Kupcsik, A.; Hsu, D.; Lee, W.S. Learning Dynamic Robot-to-Human Object Handover from Human Feedback. In Robotics Research: Volume 1; Springer: Cham, Switzerland, 2018.

- Sanchez-Matilla, R.; Chatzilygeroudis, K.; Modas, A.; Duarte, N.F.; Xompero, A.; Frossard, P.; Billard, A.; Cavallaro, A. Benchmark for human-to-robot handovers of unseen containers with unknown filling. IEEE Robot. Autom. Lett. 2020, 5, 1642–1649.

- Strabala, K.; Lee, M.K.; Dragan, A.; Forlizzi, J.; Srinivasa, S.S.; Cakmak, M.; Micelli, V. Toward Seamless Human-Robot Handovers. J. Hum. Robot Interact. 2013, 2, 112–132.

- Kshirsagar, A.; Kress-Gazit, H.; Hoffman, G. Specifying and Synthesizing Human-Robot Handovers. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Macau, China, 3–8 November 2019.

- Medina, J.R.; Duvallet, F.; Karnam, M.; Billard, A. A human-inspired controller for fluid human-robot handovers. In Proceedings of the 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, 15–17 November 2016; pp. 324–331.

- Chan, W.P.; Pan, M.K.; Croft, E.A.; Inaba, M. An Affordance and Distance Minimization Based Method for Computing Object Orientations for Robot Human Handovers. Int. J. Soc. Robot. 2020.

- van Hoof, H.; Hermans, T.; Neumann, G.; Peters, J. Learning robot in-hand manipulation with tactile features. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Korea, 3–5 November 2015; pp. 121–127.

- Rasch, R.; Wachsmuth, S.; König, M. An Evaluation of Robot-to-Human Handover Configurations for Commercial Robots. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Macau, China, 3–8 November 2019.

- Nemlekar, H.; Dutia, D.; Li, Z. Object Transfer Point Estimation for Fluent Human-Robot Handovers. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2627–2633.

- Maeda, G.J.; Neumann, G.; Ewerton, M.; Lioutikov, R.; Kroemer, O.; Peters, J. Probabilistic movement primitives for coordination of multiple human-robot collaborative tasks. Auton. Robot. 2017, 41, 593–612.

- Suay, H.B.; Sisbot, E.A. A position generation algorithm utilizing a biomechanical model for robot-human object handover. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3776–3781.

- Pan, M.K.X.J.; Knoop, E.; Bächer, M.; Niemeyer, G. Fast Handovers with a Robot Character: Small Sensorimotor Delays Improve Perceived Qualities. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 6735–6741.

- Moon, A.; Troniak, D.M.; Gleeson, B.; Pan, M.K.; Zheng, M.; Blumer, B.A.; MacLean, K.; Croft, E.A. Meet Me Where I’m Gazing: How Shared Attention Gaze Affects Human-Robot Handover Timing. In HRI ’14: Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction; Association for Computing Machinery: New York, NY, USA, 2014; pp. 334–341.

- Kshirsagar, A.; Lim, M.; Christian, S.; Hoffman, G. Robot Gaze Behaviors in Human-to-Robot Handovers. IEEE Robot. Autom. Lett. 2020.

- Bestick, A.; Pandya, R.; Bajcsy, R.; Dragan, A.D. Learning Human Ergonomic Preferences for Handovers. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 3257–3264.

- Rasch, R.; Wachsmuth, S.; König, M. A Joint Motion Model for Human-Like Robot-Human Handover. In Proceedings of the 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids), Beijing, China, 6–9 November 2018; pp. 180–187.

- Huang, C.M.; Cakmak, M.; Mutlu, B. Adaptive Coordination Strategies for Human-Robot Handovers. In Robotics: Science and Systems; Springer: Rome, Italy, 2015; Volume 11.

- Melchiorre, M.; Scimmi, L.S.; Mauro, S.; Pastorelli, S. Influence of human limb motion speed in a collaborative hand-over task. In Proceedings of the 15th International Conference on Informatics in Control, Automation and Robotics, Porto, Portugal, 29–31 July 2018.

- Duarte, N.F.; Chatzilygeroudis, K.; Santos-Victor, J.; Billard, A. From human action understanding to robot action execution: how the physical properties of handled objects modulate non-verbal cues. In Proceedings of the 2020 Joint IEEE 10th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), Valparaiso, Chile, 26–30 October 2020; pp. 1–6.

- Yang, W.; Paxton, C.; Cakmak, M.; Fox, D. Human Grasp Classification for Reactive Human-to-Robot Handovers. arXiv 2020, arXiv:2003.06000.

- Yang, W.; Paxton, C.; Mousavian, A.; Chao, Y.W.; Cakmak, M.; Fox, D. Reactive Human-to-Robot Handovers of Arbitrary Objects. arXiv 2020, arXiv:2011.08961.

- Rosenberger, P.; Cosgun, A.; Newbury, R.; Kwan, J.; Ortenzi, V.; Corke, P.; Grafinger, M. Object-Independent Human-to-Robot Handovers Using Real Time Robotic Vision. IEEE Robot. Autom. Lett. 2021, 6, 17–23.

- Parastegari, S.; Noohi, E.; Abbasi, B.; Žefran, M. A fail-safe object handover controller. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2003–2008.

- Pan, M.K.; Croft, E.A.; Niemeyer, G. Exploration of geometry and forces occurring within human-to-robot handovers. In Proceedings of the 2018 IEEE Haptics Symposium (HAPTICS), San Francisco, CA, USA, 25–28 March 2018; pp. 327–333.

- Han, Z.; Yanco, H. The Effects of Proactive Release Behaviors during Human-Robot Handovers. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Daegu, Korea, 11–14 March 2019.

- Wang, W.; Chen, Y.; Li, R.; Jia, Y. Learning and Comfort in Human-Robot Interaction: A Review. Appl. Sci. 2019, 9, 5152.

- Rozo, L.; Calinon, S.; Caldwell, D.G.; Jimenez, P.; Torras, C. Learning physical collaborative robot behaviors from human demonstrations. IEEE Trans. Robot. 2016, 32, 513–527.

- Lee, J. A survey of robot learning from demonstrations for human-robot collaboration. arXiv 2017, arXiv:1710.08789. [Google Scholar]

- Fishman, A.; Paxton, C.; Yang, W.; Ratliff, N.; Fox, D. Trajectory optimization for coordinated human-robot collaboration. arXiv 2019, arXiv:1910.04339. [Google Scholar]

- Liu, H.; Wang, L. Human motion prediction for human-robot collaboration. J. Manuf. Syst. 2017, 44, 287–294. [Google Scholar] [CrossRef]

- Bütepage, J.; Black, M.J.; Kragic, D.; Kjellström, H. Deep Representation Learning for Human Motion Prediction and Classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1591–1599. [Google Scholar] [CrossRef]

- Papageorgiou, X.S.; Chalvatzaki, G.; Tzafestas, C.S.; Maragos, P. Hidden markov modeling of human pathological gait using laser range finder for an assisted living intelligent robotic walker. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 6342–6347. [Google Scholar] [CrossRef]

- Kulic, D.; Croft, E.A. Affective State Estimation for Human–Robot Interaction. IEEE Trans. Robot. 2007, 23, 991–1000. [Google Scholar] [CrossRef]

- Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2018, 37, 421–436. [Google Scholar] [CrossRef]

- Calinon, S.; Evrard, P.; Gribovskaya, E.; Billard, A.; Kheddar, A. Learning collaborative manipulation tasks by demonstration using a haptic interface. In Proceedings of the 2009 International Conference on Advanced Robotics, Munich, Germany, 22–26 June 2009; pp. 1–6. [Google Scholar]

- Sidiropoulos, A.; Psomopoulou, E.; Doulgeri, Z. A human inspired handover policy using Gaussian Mixture Models and haptic cues. Auton. Robot. 2019, 43, 1327–1342. [Google Scholar] [CrossRef]

- Munzer, T.; Toussaint, M.; Lopes, M. Efficient behavior learning in human–robot collaboration. Auton. Robot. 2018, 42, 1103–1115. [Google Scholar] [CrossRef]

- Nemec, B.; Likar, N.; Gams, A.; Ude, A. Human robot cooperation with compliance adaptation along the motion trajectory. Auton. Robot. 2018, 42, 1023–1035. [Google Scholar] [CrossRef]

- Rajeswaran, A.; Kumar, V.; Gupta, A.; Vezzani, G.; Schulman, J.; Todorov, E.; Levine, S. Learning complex dexterous manipulation with deep reinforcement learning and demonstrations. arXiv 2017, arXiv:1709.10087. [Google Scholar]

- Murphy, R.R.; Schreckenghost, D. Survey of metrics for human-robot interaction. In Proceedings of the 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3–6 March 2013; pp. 197–198. [Google Scholar] [CrossRef]

- Shi, C.; Shiomi, M.; Smith, C.; Kanda, T.; Ishiguro, H. A Model of Distributional Handing Interaction for a Mobile Robot. In Proceedings of the Robotics: Science and Systems, Berlin, Germany, 24–28 June 2013. [Google Scholar] [CrossRef]

- Koene, A.; Endo, S.; Remazeilles, A.; Prada, M.; Wing, A.M. Experimental testing of the CogLaboration prototype system for fluent Human-Robot object handover interactions. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 249–254. [Google Scholar] [CrossRef]

- Hoffman, G. Evaluating Fluency in Human-Robot Collaboration. IEEE Trans. Hum. Mach. Syst. 2019, 49, 209–218. [Google Scholar] [CrossRef]

- Gervasi, R.; Mastrogiacomo, L.; Franceschini, F. A conceptual framework to evaluate human-robot collaboration. Int. J. Adv. Manuf. Technol. 2020. [Google Scholar] [CrossRef]

- Ortenzi, V.; Cosgun, A.; Pardi, T.; Chan, W.; Croft, E.; Kulic, D. Object handovers: A review for robotics. arXiv 2020, arXiv:2007.12952. [Google Scholar]

- Choi, Y.S.; Chen, T.; Jain, A.; Anderson, C.; Glass, J.D.; Kemp, C.C. Hand it over or set it down: A user study of object delivery with an assistive mobile manipulator. In Proceedings of the RO-MAN 2009-The 18th IEEE International Symposium on Robot and Human Interactive Communication, New Delhi, India, 14–18 October 2009; pp. 736–743. [Google Scholar]

- Micelli, V.; Strabala, K.; Srinivasa, S. Perception and Control Challenges for Effective Human-Robot Handoffs. In Proceedings of RSS 2011 RGB-D Workshop. 2011. Available online: https://www.ri.cmu.edu/pub_files/2011/6/2011%20-%20Micelli,%20Strabala,%20Srinivasa%20-%20Perception%20and%20Control%20Challenges%20for%20Effective%20Human-Robot%20Handoffs.pdf (accessed on 8 June 2021).

- Prada, M.; Remazeilles, A.; Koene, A.; Endo, S. Implementation and experimental validation of dynamic movement primitives for object handover. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2146–2153. [Google Scholar]

- Chan, W.P.; Parker, C.A.; Van der Loos, H.M.; Croft, E.A. A human-inspired object handover controller. Int. J. Robot. Res. 2013, 32, 971–983. [Google Scholar] [CrossRef]

- Konstantinova, J.; Krivic, S.; Stilli, A.; Piater, J.; Althoefer, K. Autonomous object handover using wrist tactile information. In Annual Conference Towards Autonomous Robotic Systems; Springer: Guildford, UK, 2017; pp. 450–463. [Google Scholar]

- Cakmak, M.; Srinivasa, S.S.; Lee, M.K.; Kiesler, S.; Forlizzi, J. Using spatial and temporal contrast for fluent robot-human hand-overs. In Proceedings of the 6th International Conference on Human-Robot Interaction, Lausanne, Switzerland, 6–9 March 2011; pp. 489–496. [Google Scholar]

- Bohren, J.; Rusu, R.B.; Jones, E.G.; Marder-Eppstein, E.; Pantofaru, C.; Wise, M.; Mösenlechner, L.; Meeussen, W.; Holzer, S. Towards autonomous robotic butlers: Lessons learned with the PR2. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 5568–5575. [Google Scholar]

- Grigore, E.C.; Eder, K.; Pipe, A.G.; Melhuish, C.; Leonards, U. Joint action understanding improves robot-to-human object handover. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 4622–4629. [Google Scholar]

- Aleotti, J.; Micelli, V.; Caselli, S. An affordance sensitive system for robot to human object handover. Int. J. Soc. Robot. 2014, 6, 653–666. [Google Scholar] [CrossRef]

- Cakmak, M.; Srinivasa, S.S.; Lee, M.K.; Forlizzi, J.; Kiesler, S. Human preferences for robot-human hand-over configurations. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1986–1993. [Google Scholar]

- Controzzi, M.; Singh, H.; Cini, F.; Cecchini, T.; Wing, A.; Cipriani, C. Humans adjust their grip force when passing an object according to the observed speed of the partner’s reaching out movement. Exp. Brain Res. 2018, 236, 3363–3377. [Google Scholar] [CrossRef]

- Dehais, F.; Sisbot, E.A.; Alami, R.; Causse, M. Physiological and subjective evaluation of a human–robot object hand-over task. Appl. Ergon. 2011, 42, 785–791. [Google Scholar] [CrossRef]

- Bestick, A.; Bajcsy, R.; Dragan, A.D. Implicitly assisting humans to choose good grasps in robot to human handovers. In International Symposium on Experimental Robotics; Springer: Roppongi, Tokyo, Japan, 2016; pp. 341–354. [Google Scholar]

- Koene, A.; Remazeilles, A.; Prada, M.; Garzo, A.; Puerto, M.; Endo, S.; Wing, A.M. Relative importance of spatial and temporal precision for user satisfaction in human-robot object handover interactions. In Proceedings of the Third International Symposium on New Frontiers in Human-Robot Interaction, London, UK, 1–4 April 2014. [Google Scholar]

- Aleotti, J.; Micelli, V.; Caselli, S. Comfortable robot to human object hand-over. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 771–776. [Google Scholar]

- Chen, M.; Soh, H.; Hsu, D.; Nikolaidis, S.; Srinivasa, S. Trust-aware decision making for human-robot collaboration: Model learning and planning. ACM Trans. Hum. Robot. Interact. 2020.

- Cooper, S.; Fensome, S.F.; Kourtis, D.; Gow, S.; Dragone, M. An EEG investigation on planning human-robot handover tasks. In Proceedings of the 2020 IEEE International Conference on Human-Machine Systems, ICHMS, Rome, Italy, 7–9 September 2020.

- Meissner, A.; Trübswetter, A.; Conti-Kufner, A.S.; Schmidtler, J. Friend or Foe? Understanding Assembly Workers’ Acceptance of Human-robot Collaboration. ACM Trans. Hum. Robot. Interact. 2020.

- Tang, K.H.; Ho, C.F.; Mehlich, J.; Chen, S.T. Assessment of handover prediction models in estimation of cycle times for manual assembly tasks in a human-robot collaborative environment. Appl. Sci. 2020, 10, 556.

- Costanzo, M.; De Maria, G.; Natale, C. Handover Control for Human-Robot and Robot-Robot Collaboration. Front. Robot. AI 2021.

- He, W.; Li, J.; Yan, Z.; Chen, F. Bidirectional Human-Robot Bimanual Handover of Big Planar Object With Vertical Posture. IEEE Trans. Autom. Sci. Eng. 2021.

- Melchiorre, M.; Scimmi, L.S.; Mauro, S.; Pastorelli, S.P. Vision-based control architecture for human–robot hand-over applications. Asian J. Control 2021.

- Sutiphotinun, T.; Neranon, P.; Vessakosol, P.; Romyen, A.; Hiransoog, C.; Sookgaew, J. A human-inspired control strategy: A framework for seamless human-robot handovers. J. Mech. Eng. Res. Dev. 2020, 43, 235–245.

- Neranon, P.; Sutiphotinun, T. A Human-Inspired Control Strategy for Improving Seamless Robot-To-Human Handovers. Appl. Sci. 2021, 11, 4437.

- Riccio, F.; Capobianco, R.; Nardi, D. Learning human-robot handovers through π-STAM: Policy improvement with spatio-temporal affordance maps. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Cancun, Mexico, 15–17 November 2016.

- Liu, H.; Fang, T.; Zhou, T.; Wang, Y.; Wang, L. Deep Learning-based Multimodal Control Interface for Human-Robot Collaboration. Procedia CIRP 2018.

- Zhao, X.; Chumkamon, S.; Duan, S.; Rojas, J.; Pan, J. Collaborative Human-Robot Motion Generation Using LSTM-RNN. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Beijing, China, 6–9 November 2018.

- Chen, X.; Wang, N.; Cheng, H.; Yang, C. Neural Learning Enhanced Variable Admittance Control for Human-Robot Collaboration. IEEE Access 2020.

- Roveda, L.; Maskani, J.; Franceschi, P.; Abdi, A.; Braghin, F.; Molinari Tosatti, L.; Pedrocchi, N. Model-Based Reinforcement Learning Variable Impedance Control for Human-Robot Collaboration. J. Intell. Robot. Syst. Theory Appl. 2020.

- Kshirsagar, A.; Hoffman, G.; Biess, A. Evaluating guided policy search for human-robot handovers. IEEE Robot. Autom. Lett. 2021.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Pierson, H.A.; Gashler, M.S. Deep learning in robotics: A review of recent research. Adv. Robot. 2017, 31, 821–835.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Halevy, A.; Norvig, P.; Pereira, F. The unreasonable effectiveness of data. IEEE Intell. Syst. 2009.

- Sünderhauf, N.; Brock, O.; Scheirer, W.; Hadsell, R.; Fox, D.; Leitner, J.; Upcroft, B.; Abbeel, P.; Burgard, W.; Milford, M.; et al. The limits and potentials of deep learning for robotics. Int. J. Robot. Res. 2018.

- Sutton, R.; Barto, A. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

- Wiering, M.; van Otterlo, M. Reinforcement Learning: State-of-the-Art; Springer: Berlin/Heidelberg, Germany, 2012.

- Kober, J.; Bagnell, J.A.; Peters, J. Reinforcement learning in robotics: A survey. Int. J. Robot. Res. 2013.

- Levine, S.; Finn, C.; Darrell, T.; Abbeel, P. End-to-End Training of Deep Visuomotor Policies. J. Mach. Learn. Res. 2016, 17, 1334–1373.

- Zhu, H.; Gupta, A.; Rajeswaran, A.; Levine, S.; Kumar, V. Dexterous manipulation with deep reinforcement learning: Efficient, general, and low-cost. In Proceedings of the IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019.

- Hua, J.; Zeng, L.; Li, G.; Ju, Z. Learning for a robot: Deep reinforcement learning, imitation learning, transfer learning. Sensors 2021, 21, 1278.

- Mahler, J.; Matl, M.; Satish, V.; Danielczuk, M.; DeRose, B.; McKinley, S.; Goldberg, K. Learning ambidextrous robot grasping policies. Sci. Robot. 2019.

- Lake, B.M.; Ullman, T.D.; Tenenbaum, J.B.; Gershman, S.J. Building machines that learn and think like people. Behav. Brain Sci. 2017.

- Bohg, J.; Hausman, K.; Sankaran, B.; Brock, O.; Kragic, D.; Schaal, S.; Sukhatme, G.S. Interactive perception: Leveraging action in perception and perception in action. IEEE Trans. Robot. 2017.

- Kroemer, O.; Niekum, S.; Konidaris, G. A review of robot learning for manipulation: Challenges, representations, and algorithms. J. Mach. Learn. Res. 2021, 22, 1–82.

- Ahmed, O.; Träuble, F.; Goyal, A.; Neitz, A.; Wüthrich, M.; Bengio, Y.; Schölkopf, B.; Bauer, S. CausalWorld: A Robotic Manipulation Benchmark for Causal Structure and Transfer Learning. arXiv 2020, arXiv:2010.04296.

- Hellström, T. The relevance of causation in robotics: A review, categorization, and analysis. J. Behav. Robot. 2021, 12, 238–255.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D.D. A survey of transfer learning. J. Big Data 2016.

- Devin, C.; Gupta, A.; Darrell, T.; Abbeel, P.; Levine, S. Learning modular neural network policies for multi-task and multi-robot transfer. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017.

- Hochreiter, S.; Younger, A.S.; Conwell, P.R. Learning to learn using gradient descent. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2001; pp. 87–94.

- Wang, J.X.; Kurth-Nelson, Z.; Kumaran, D.; Tirumala, D.; Soyer, H.; Leibo, J.Z.; Hassabis, D.; Botvinick, M. Prefrontal cortex as a meta-reinforcement learning system. Nat. Neurosci. 2018.

| Source | Definitions |
|---|---|
| [9] | Interaction: action on someone or something else. Collaboration: working jointly with someone or something. |
| [10] | Collaboration: working jointly with others or together, especially in an intellectual endeavor. |
| [11] | Interaction includes collaboration. Interaction: action on someone else. Collaboration: working with someone, aiming at reaching a common goal. |
| [12] | Collaboration: robot feature to perform complex tasks with direct human interaction and coordination. Physical interaction: a few nested behaviors that the robot must ensure (collaboration, coexistence and safety). |
| [1] | Efficient Human-Robot Collaboration: the robot should be capable of perceiving several communication mechanisms similar to those involved in human-human interaction. |
| [2] | Physical Human-Robot Collaboration: the moment when human(s), robot(s) and the environment come into contact with each other and form a tightly coupled dynamical system to accomplish a task. |
| [13] | Collaborative robot: able to understand its collaborator’s intentions and predict his actions, in order to adapt its behavior accordingly and provide assistance in a wide diversity of tasks. |
| [5] | HRC: requires a common goal that is pursued by both robot and human working together. HRI: the interaction between the human and the robot does not necessarily entail a common goal, thus falling within the definition of coexistence. |
| [6] | Coexistence: the human operator and the robot are in the same environment but do not interact with each other. Synchronised: the human and the robot work in the same space but at alternating times. Cooperation: the human and the robot work on separate tasks, but in the same space at the same time. Collaboration: the human operator and the robot work together on the same task. |
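The taxonomy attributed to [6] in the last row separates the levels by whether human and robot share the workspace, whether they occupy it at the same time, and whether they work on the same task. The decision logic can be sketched as below. This is a simplification introduced here for illustration only: the three boolean flags and the function `classify_interaction` are hypothetical and are not defined in [6].

```python
from enum import Enum


class InteractionLevel(Enum):
    """Interaction levels following the definitions attributed to [6]."""

    COEXISTENCE = "coexistence"
    SYNCHRONISED = "synchronised"
    COOPERATION = "cooperation"
    COLLABORATION = "collaboration"


def classify_interaction(same_workspace: bool, same_time: bool, same_task: bool) -> InteractionLevel:
    """Map three hypothetical boolean observations onto the levels of [6].

    same_workspace: human and robot operate in the same physical space.
    same_time:      they are active in that space simultaneously.
    same_task:      they work together on the same task.
    """
    # Collaboration: same task, carried out together in the same space and time.
    if same_workspace and same_time and same_task:
        return InteractionLevel.COLLABORATION
    # Cooperation: separate tasks, but same space at the same time.
    if same_workspace and same_time:
        return InteractionLevel.COOPERATION
    # Synchronised: same space, but at alternating times.
    if same_workspace:
        return InteractionLevel.SYNCHRONISED
    # Coexistence: same environment, no shared workspace or interaction.
    return InteractionLevel.COEXISTENCE


print(classify_interaction(same_workspace=True, same_time=False, same_task=True).value)
```

Under this encoding, a human and a robot that take turns on the same task in a shared cell fall under "synchronised", since [6] reserves "collaboration" for working together on the task.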

| Metrics | Descriptor | References |
|---|---|---|
| Objective | Success rate | [70,77,89,108,109,113,114,115] |
| | Interaction force | [72,90,91,116,117] |
| | Timings (idle & total) | [70,80,87,110,114,118,119,120,121] |
| | Joint effort | [109] |
| Subjective | Fluency | [70,80,87,110,114,122,123,124] |
| | Satisfaction | [109,113,115,123,125,126] |
| | Comfort | [91,109,115,121,124,126,127] |
| | Usage of interface | [90,109,113,115,125,126] |
| | Trust in the robot | [87,110,125] |
| | Human-like motion | [121] |
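Several of the objective descriptors in the metrics table (success rate, idle and total timings) reduce to simple aggregates over logged trials. The following is a minimal sketch under stated assumptions: the per-trial record `HandoverTrial`, its field names, and the choice to express idle time as a fraction of the summed task time are hypothetical illustrations, not the exact definitions used in the cited works, which differ from study to study.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class HandoverTrial:
    """One logged handover trial (hypothetical log format for illustration)."""

    success: bool        # True if the object was transferred as intended
    total_time_s: float  # total task time of the trial, in seconds
    human_idle_s: float  # time the human spent waiting for the robot, in seconds
    robot_idle_s: float  # time the robot spent waiting for the human, in seconds


def objective_metrics(trials: list[HandoverTrial]) -> dict[str, float]:
    """Aggregate success rate and timing descriptors over a set of trials."""
    if not trials:
        raise ValueError("at least one trial is required")
    total_time = sum(t.total_time_s for t in trials)
    if total_time <= 0:
        raise ValueError("total task time must be positive")
    return {
        # fraction of trials in which the handover succeeded
        "success_rate": sum(t.success for t in trials) / len(trials),
        # average duration of a single trial
        "mean_total_time_s": mean(t.total_time_s for t in trials),
        # idle times as fractions of the summed task time (assumed normalization)
        "human_idle_ratio": sum(t.human_idle_s for t in trials) / total_time,
        "robot_idle_ratio": sum(t.robot_idle_s for t in trials) / total_time,
    }


# Usage with two illustrative (not measured) trials
trials = [HandoverTrial(True, 10.0, 1.0, 2.0), HandoverTrial(False, 10.0, 3.0, 0.0)]
print(objective_metrics(trials))
```

With these two illustrative 10 s trials, one of which succeeds, the sketch reports a success rate of 0.5, a mean total time of 10 s, a human idle ratio of 0.2 and a robot idle ratio of 0.1. Subjective descriptors such as fluency, comfort or trust are usually collected through questionnaires and are not covered by this sketch.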

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Castro, A.; Silva, F.; Santos, V. Trends of Human-Robot Collaboration in Industry Contexts: Handover, Learning, and Metrics. Sensors 2021, 21, 4113. https://doi.org/10.3390/s21124113
