HyAdamC: A New Adam-Based Hybrid Optimization Algorithm for Convolution Neural Networks

Abstract

1. Introduction

- First, we show how HyAdamC identifies the current state of the optimization terrain from the past momentum and gradients.

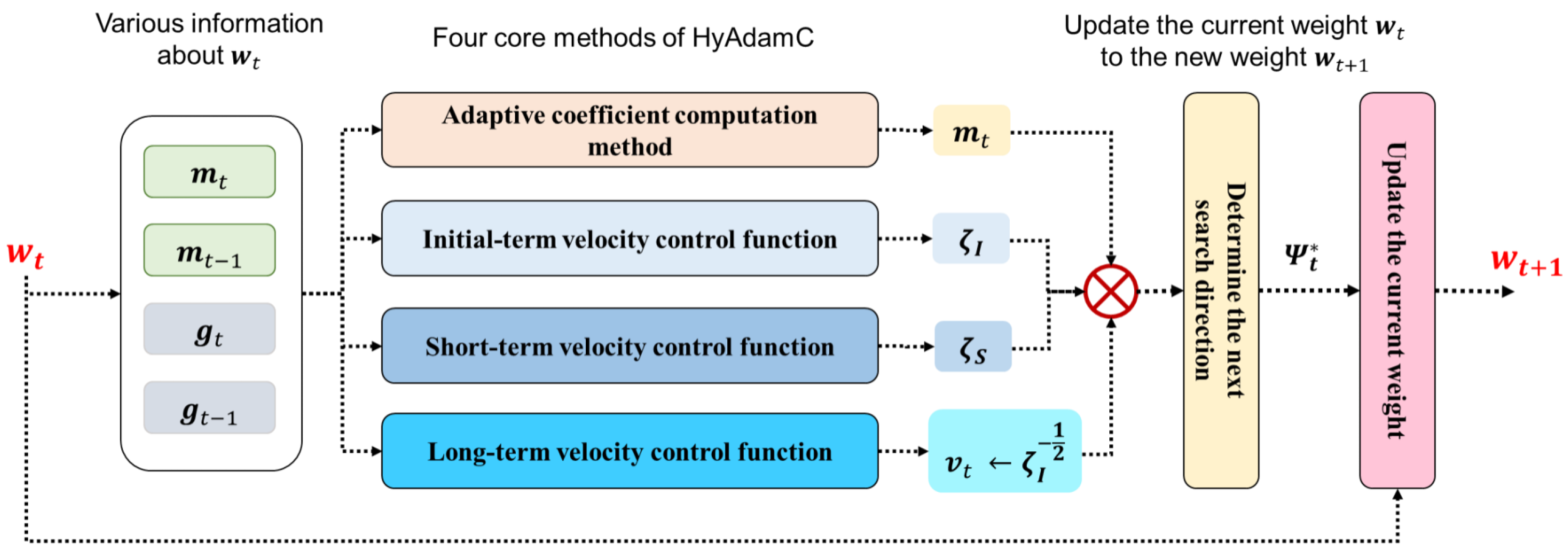

- Second, we propose three new velocity control functions that can adjust the search velocity elaborately depending on the current optimization states.

- Third, we propose a hybrid mechanism by concretely implementing how these are combined. Furthermore, we show how our hybrid method contributes to enhancing its optimization performance in training the CNNs.

- Fourth, we propose how the three velocity functions and the strategy to prevent outlier gradients are combined into one method. Accordingly, we show that such an elastic hybrid method can significantly contribute to overcoming the problems from which the existing optimization methods have suffered.

- First, we discovered a variety of useful information for searching for an optimal weight in a solution space with complicated terrain.

- Second, we showed that this terrain information could be utilized simply by applying it to scale the search direction.

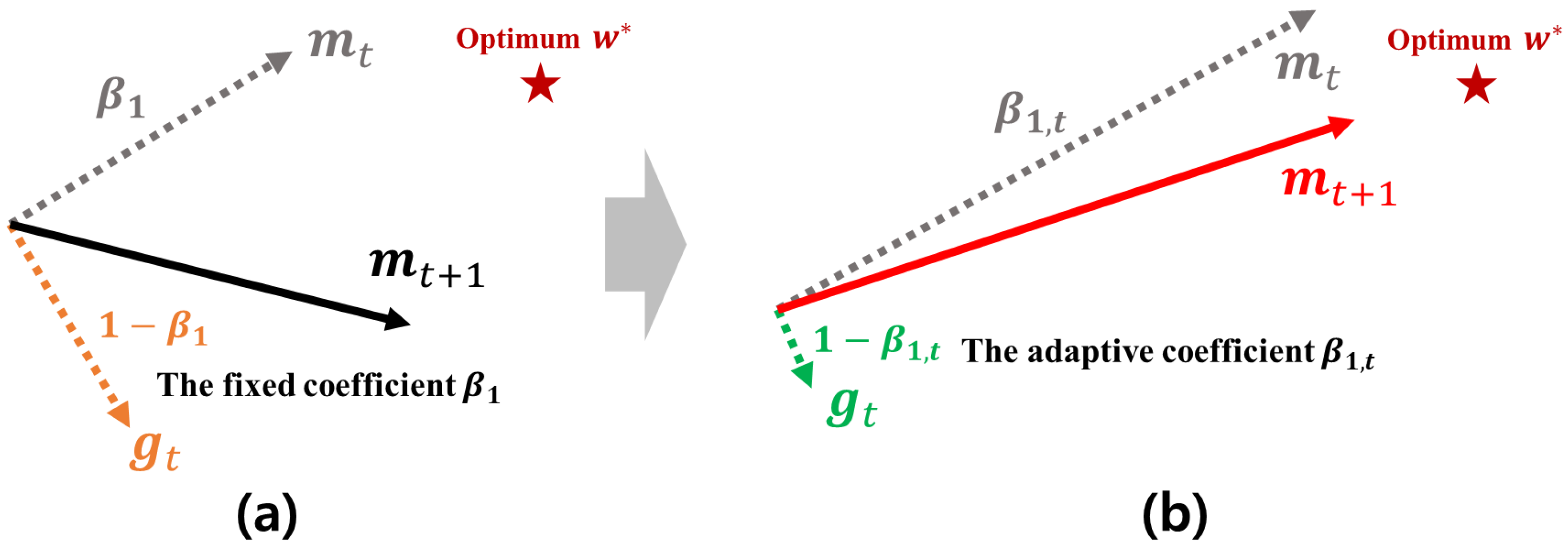

- Third, by implementing the adaptive coefficient computation methods, we found that minimizing the unexpected effects of outlier gradients could contribute significantly to determining a promising search direction.

- Fourth, we showed that they could be combined as a hybrid optimization method with a detailed implementation.

- Fifth, we confirmed that our HyAdamC was theoretically valid by proving its upper regret bound mathematically.

- Sixth, we validated the practical optimization ability of HyAdamC by conducting comprehensive experiments with various CNN models for the image classification and image segmentation tasks.

2. Preliminaries

3. Related Work

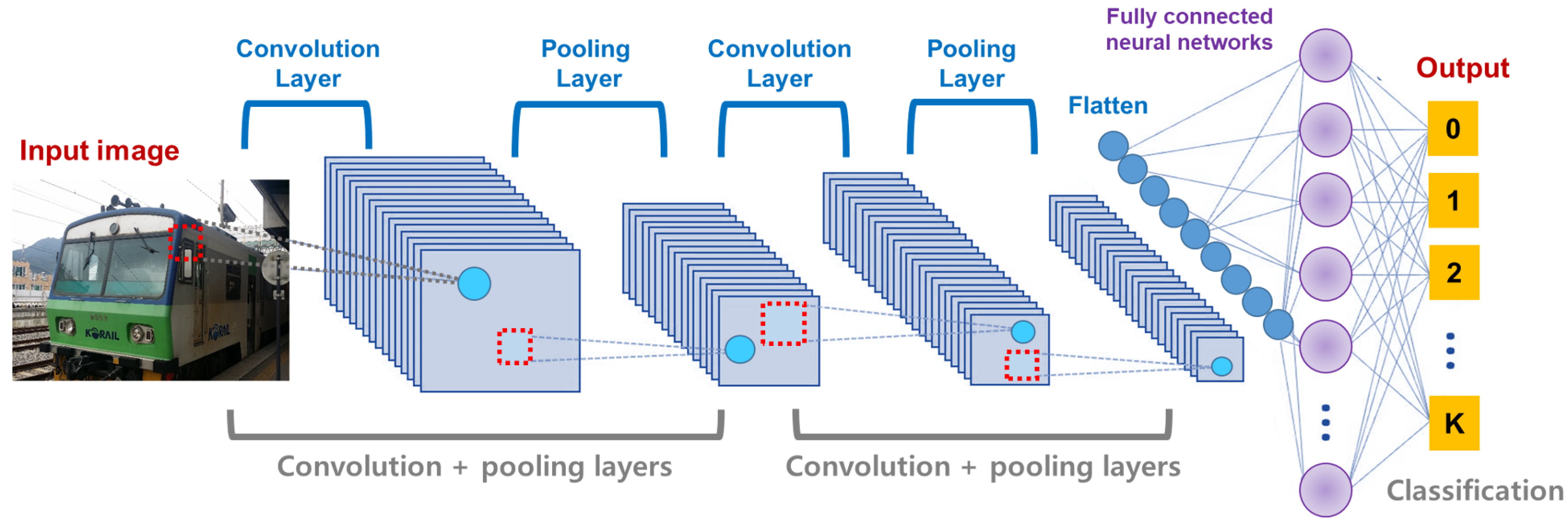

3.1. CNNs

3.2. Overview of Optimization Methods for Machine Learning

3.3. Optimization Methods to Train CNNs

| Algorithm 1: A pseudocode of Adam optimization algorithm |

| Algorithm: Adam Input: f, , , , Output: Begin Initialize while not converged do: , end while End Begin |
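
As a point of reference for Algorithm 1, the following is a minimal sketch of the standard Adam update of Kingma and Ba [18] in plain Python. The function names `adam_step` and `minimize_quadratic`, the toy quadratic objective, and the hyperparameter values are illustrative assumptions and are not taken from this paper; the sketch shows plain Adam only, not HyAdamC.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one standard Adam update to a flat list of parameters.

    theta, grad, m, v are lists of equal length; t is the 1-based step index.
    Returns the updated (theta, m, v) as new lists.
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        # Exponentially weighted averages of the gradient and squared gradient.
        m_i = beta1 * m_i + (1.0 - beta1) * g
        v_i = beta2 * v_i + (1.0 - beta2) * g * g
        # Bias correction compensates for the zero initialization of m and v.
        m_hat = m_i / (1.0 - beta1 ** t)
        v_hat = v_i / (1.0 - beta2 ** t)
        new_theta.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v


def minimize_quadratic(steps=2000, lr=0.05):
    """Toy example (assumed): minimize f(x, y) = (x - 3)^2 + (y + 1)^2."""
    theta = [0.0, 0.0]
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    for t in range(1, steps + 1):
        grad = [2.0 * (theta[0] - 3.0), 2.0 * (theta[1] + 1.0)]
        theta, m, v = adam_step(theta, grad, m, v, t, lr=lr)
    return theta
```

Calling `minimize_quadratic()` drives the parameters toward the minimizer (3, -1) of the toy objective. On the very first step, the bias-corrected ratio has magnitude close to 1, so each parameter moves by roughly the learning rate regardless of the gradient scale.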

4. Proposed Method

4.1. Introduction

4.2. Adaptive Coefficient Computation Method for the Robust First Momentum

4.3. Adaptive Velocity Control Functions

4.3.1. Initial-Term Velocity Control Function

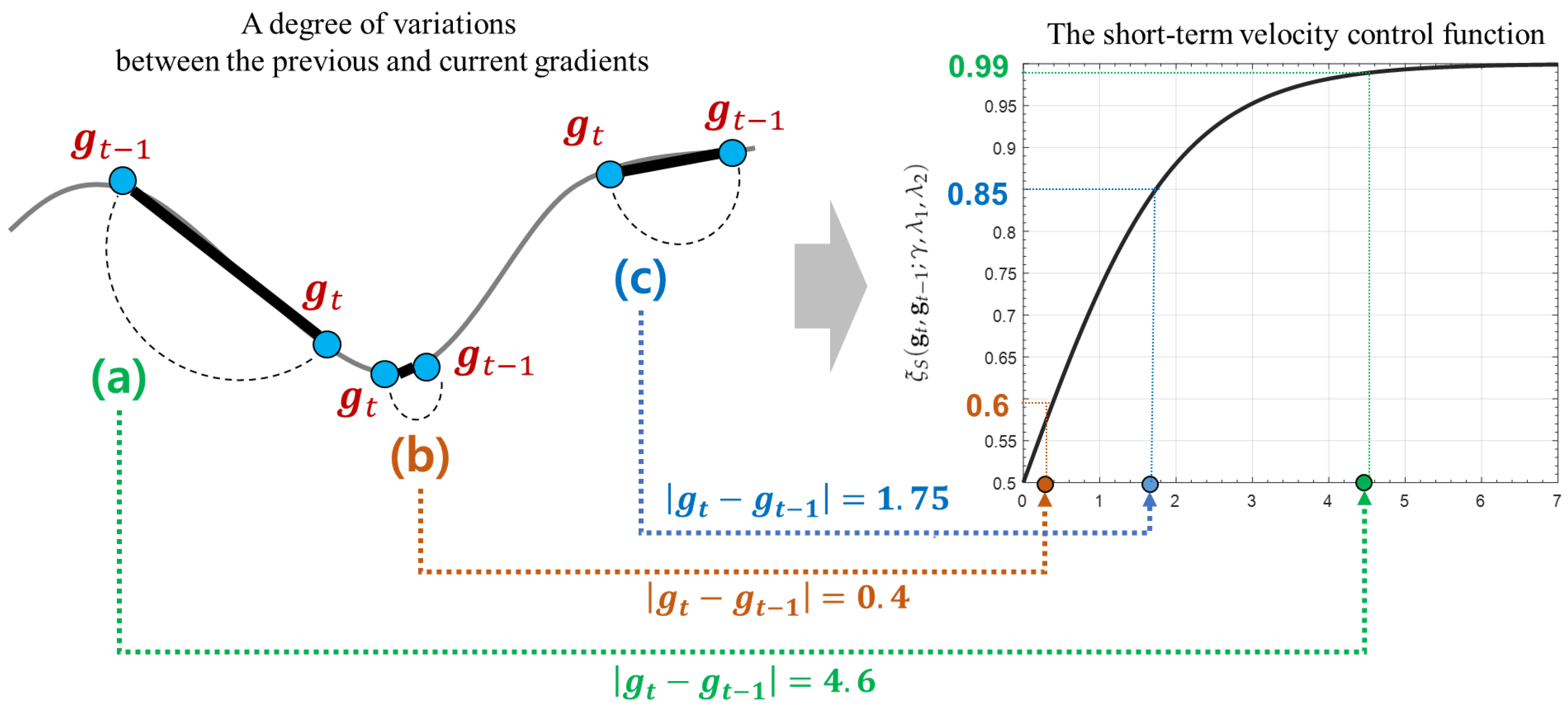

4.3.2. Short-Term Velocity Control Function

4.3.3. Long-Term Velocity Control Function

4.4. Parameter Update Methods and Implementations

| Algorithm 2: An implementation of HyAdamC |

| Algorithm: HyAdamC Input:L, , , , , , , Output: Begin while not converged do: end while End Begin |

4.5. Regret Bound Analysis

5. Experiments

5.1. Experimental Settings

- Compared optimization methods: To evaluate the optimization performance of HyAdamC, we adopted 11 first-order optimization methods for comparison, i.e., SGD [16], Adam [18], RMSProp [36], AdaDelta [47], AdaGrad [20], AdamW [18], Rprop [48], Yogi [21], Fromage [22], diffGrad [23], and TAdam [24]. These have been extensively utilized to train various deep learning models, including CNNs. Among them, Fromage, diffGrad, and TAdam are the latest optimization methods and have shown better optimization performance than the earlier methods. In our experiments, all their parameters were set to the default values reported in their papers. The detailed parameter settings of HyAdamC and the compared methods are listed in Table 1 (for all methods except RMSProp, the learning rate is denoted by the same symbol as in Algorithm 1).

- Baseline CNNs and benchmark datasets: In these experiments, VGG [12], ResNet [13], and DenseNet [14] were chosen as the baseline CNN models. In addition, we adopted the image classification task as a baseline task to evaluate the optimization performance of the VGG, ResNet, and DenseNet models trained by HyAdamC and the compared methods. Image classification is one of the most fundamental and important tasks in image processing and has been widely applied in many practical applications with CNNs. Moreover, we adopted a universal benchmark image dataset, i.e., the CIFAR-10 [49] image dataset, which is one of the most popular benchmark datasets used to evaluate image classification performance. In detail, the dataset involves 60,000 images with 50,000 training samples and 10,000 test ones. Each of them is a 32 × 32 color image and belongs to one of ten classes. Figure 9 describes several example images and their classes briefly. In our experiments, the images in the dataset were utilized to evaluate how accurately the CNN models trained by HyAdamC and the other algorithms could classify them.

- Metrics used in the experiments: In the experiments, we set the batch size of the train/test samples to 64 and 128, respectively. VGG, ResNet, and DenseNet were trained by HyAdamC and the compared methods. Their weight values were randomly initialized by the default functions provided in PyTorch. In detail, PyTorch initializes the weights in each layer depending on the layer generation function, such as Linear() and Conv2d(). For example, the weights in Conv2d layers are initialized by the Kaiming (He) uniform method unless the user explicitly declares an initialization method. Unlike ResNet and DenseNet, the implementation of VGG contains a function to initialize its weights. Thus, the weights of VGG were initialized by its own initialization method. Furthermore, to maintain the same initial weight values for all compared methods, we fixed the random seeds of PyTorch, CUDA, and NumPy to a constant when training them. Then, we compared their performance and learning curves for the first 200 epochs. The training and test accuracies, i.e., $acc_{train}$ and $acc_{test}$, were measured by $acc_{train} = \frac{1}{N_{train}} \sum_{i=1}^{N_{train}} \delta(y_i, \hat{y}_i)$ and $acc_{test} = \frac{1}{N_{test}} \sum_{i=1}^{N_{test}} \delta(y_i, \hat{y}_i)$, where $y_i$ is the GT class and $\hat{y}_i$ is the classified class for the ith sample, respectively; $\delta$ is the Kronecker delta; and $N_{train}$ and $N_{test}$ are the total numbers of training and test image samples. In addition, the train and validation losses in our experiments were measured by the cross-entropy method. When the CNN model solves a K-class classification problem, the cross-entropy loss for the ith input image is measured by $CE_i = -\sum_{j=1}^{K} t_{i,j} \log o_{i,j}$, where $t_{i,j}$ is the GT of the jth node and $o_{i,j}$ is the output value of the jth node for the ith input image, respectively.

- Experimental environments: HyAdamC and the other optimization methods were implemented and evaluated in Python 3.8.3 with PyTorch 1.7.1 and CUDA 11.0. In addition, we used the matplotlib 3.4.1 library to visualize our experimental results. Finally, all the experiments were performed on a Linux server with Ubuntu 7.5 OS, an Intel Core i7-7800X 3.50 GHz CPU, and an NVIDIA GeForce RTX 2080Ti GPU.
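
The accuracy and cross-entropy metrics described in the list above can be sketched in plain Python as follows. The function names and the small `eps` clamp are illustrative assumptions; in particular, the sketch takes class probabilities as input, whereas PyTorch's built-in cross-entropy loss takes raw logits and applies the log-softmax internally.

```python
import math


def kronecker_delta(a, b):
    """Return 1 if the two class labels match, otherwise 0."""
    return 1 if a == b else 0


def accuracy(true_classes, predicted_classes):
    """Fraction of samples whose classified class equals the GT class."""
    if len(true_classes) != len(predicted_classes):
        raise ValueError("label lists must have the same length")
    matches = sum(kronecker_delta(y, p)
                  for y, p in zip(true_classes, predicted_classes))
    return matches / len(true_classes)


def cross_entropy(target_one_hot, output_probs, eps=1e-12):
    """Cross-entropy loss of one sample for a K-class problem.

    target_one_hot: GT value of each of the K output nodes (one-hot).
    output_probs:   output value of each node, assumed to be probabilities.
    eps:            assumed clamp that avoids log(0).
    """
    return -sum(t * math.log(max(o, eps))
                for t, o in zip(target_one_hot, output_probs))
```

For instance, `accuracy([0, 1, 2, 2], [0, 1, 1, 2])` counts three matches out of four samples, and `cross_entropy([0, 1, 0], [0.2, 0.5, 0.3])` reduces to the negative logarithm of the probability assigned to the GT class.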

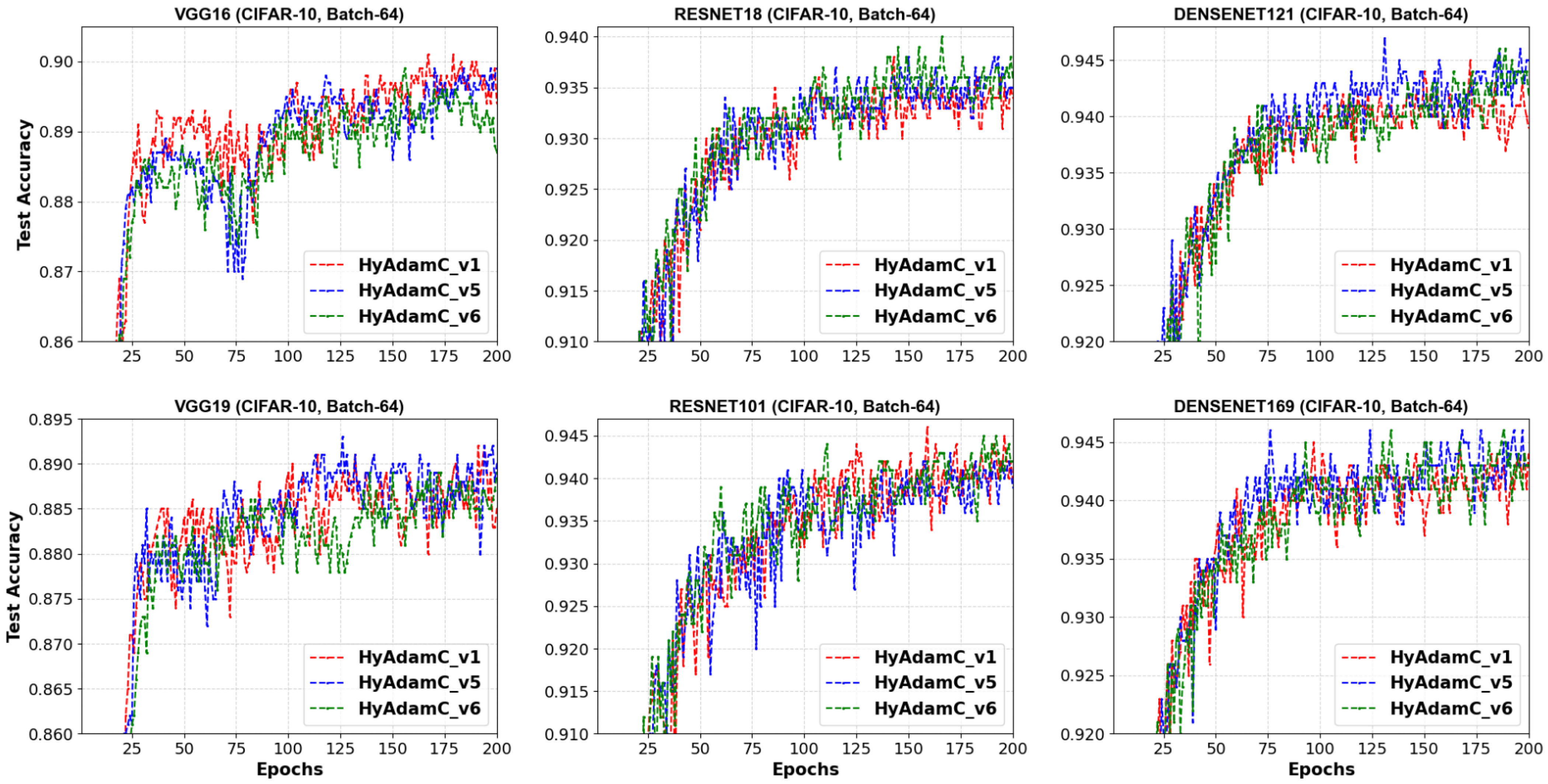

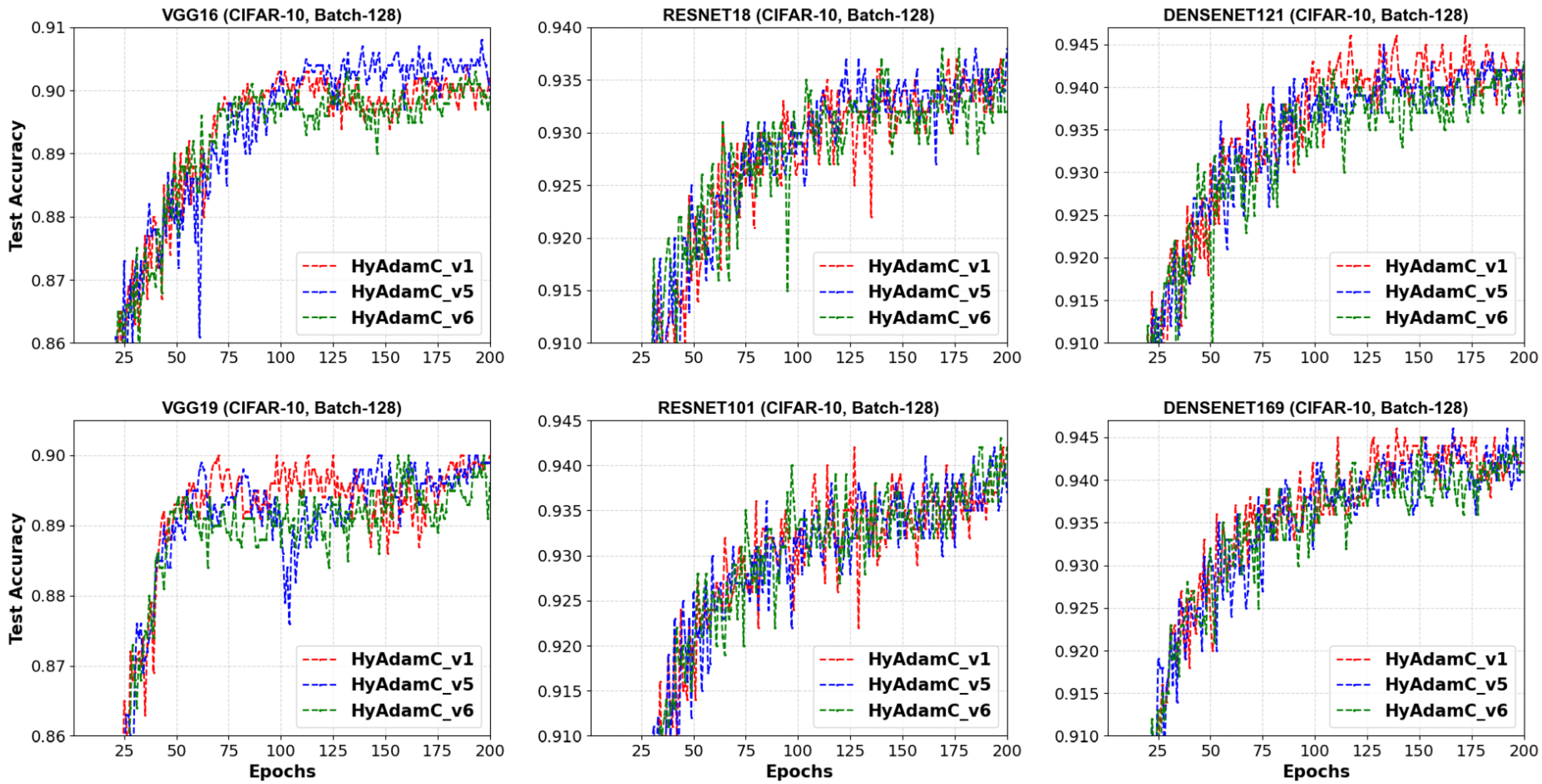

5.2. Experiments to Choose the Convergence Control Model of HyAdamC

- HyAdamC-v1 → HyAdamC-Basic,

- HyAdamC-v5 → HyAdamC-Scale (i.e., scaled HyAdamC)

5.3. Experimental Results in the Image Classification Tasks

5.3.1. Image Classification Tasks in VGG-16 and 19

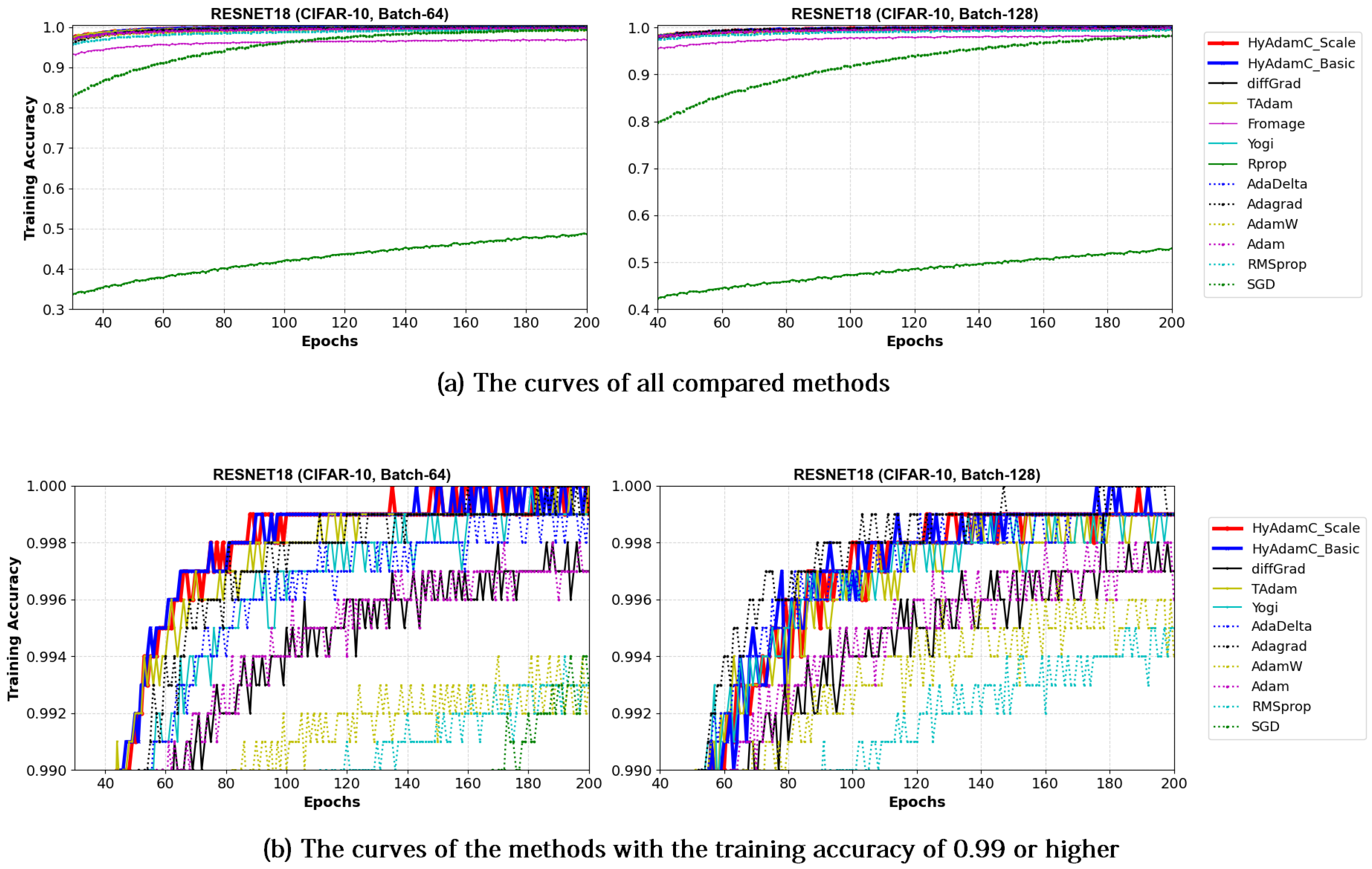

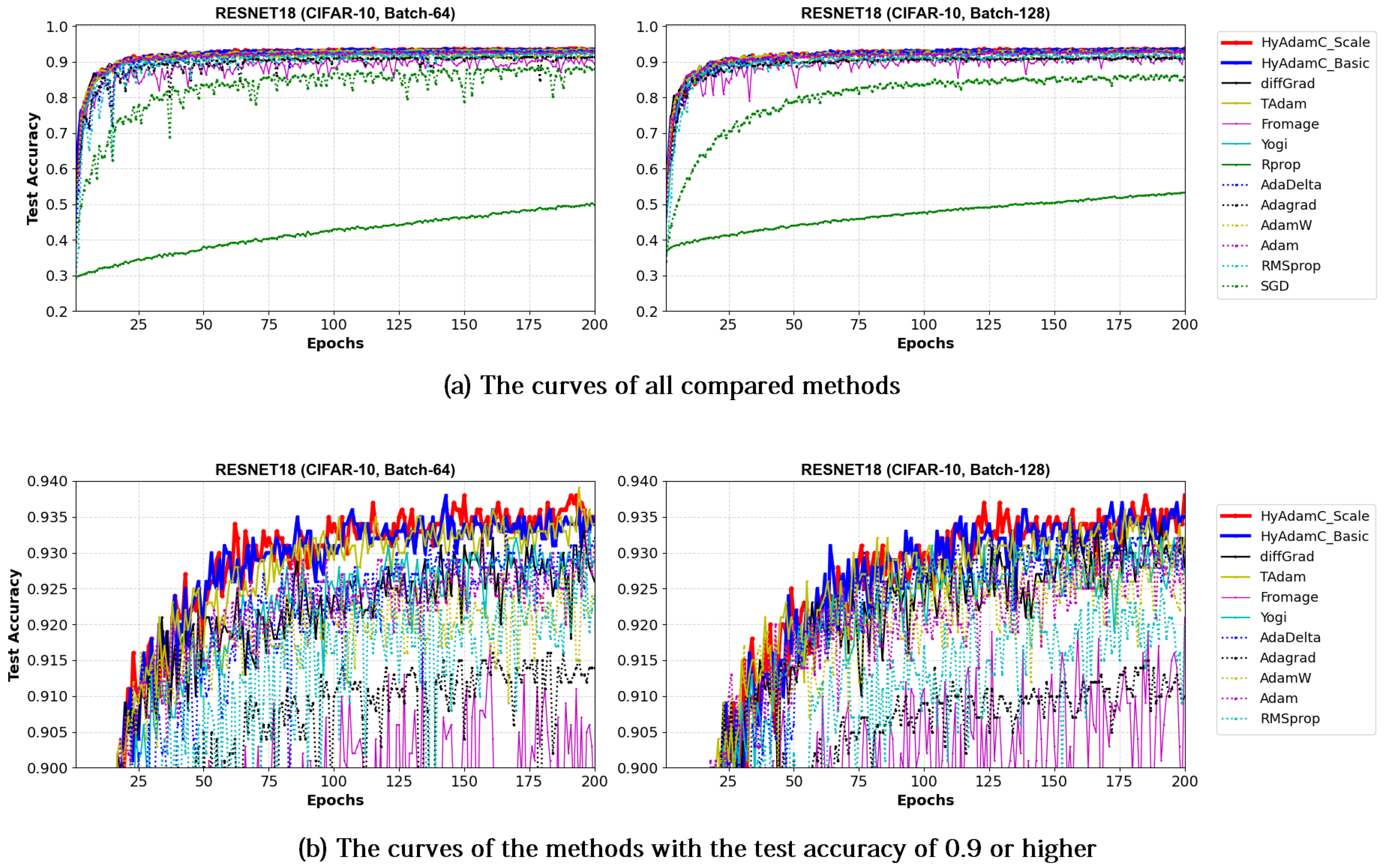

5.3.2. Image Classification Tasks in ResNet-18 and 101

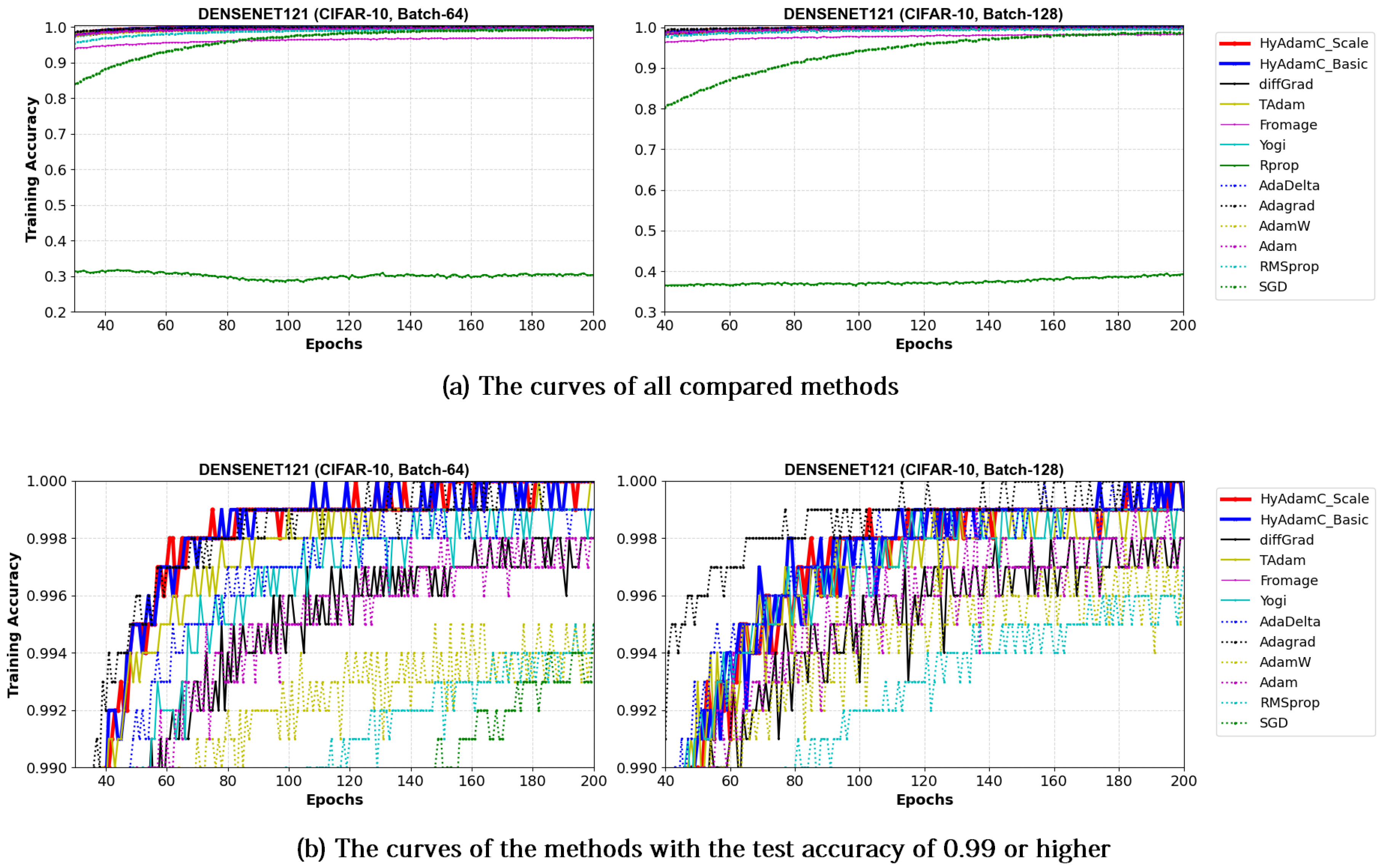

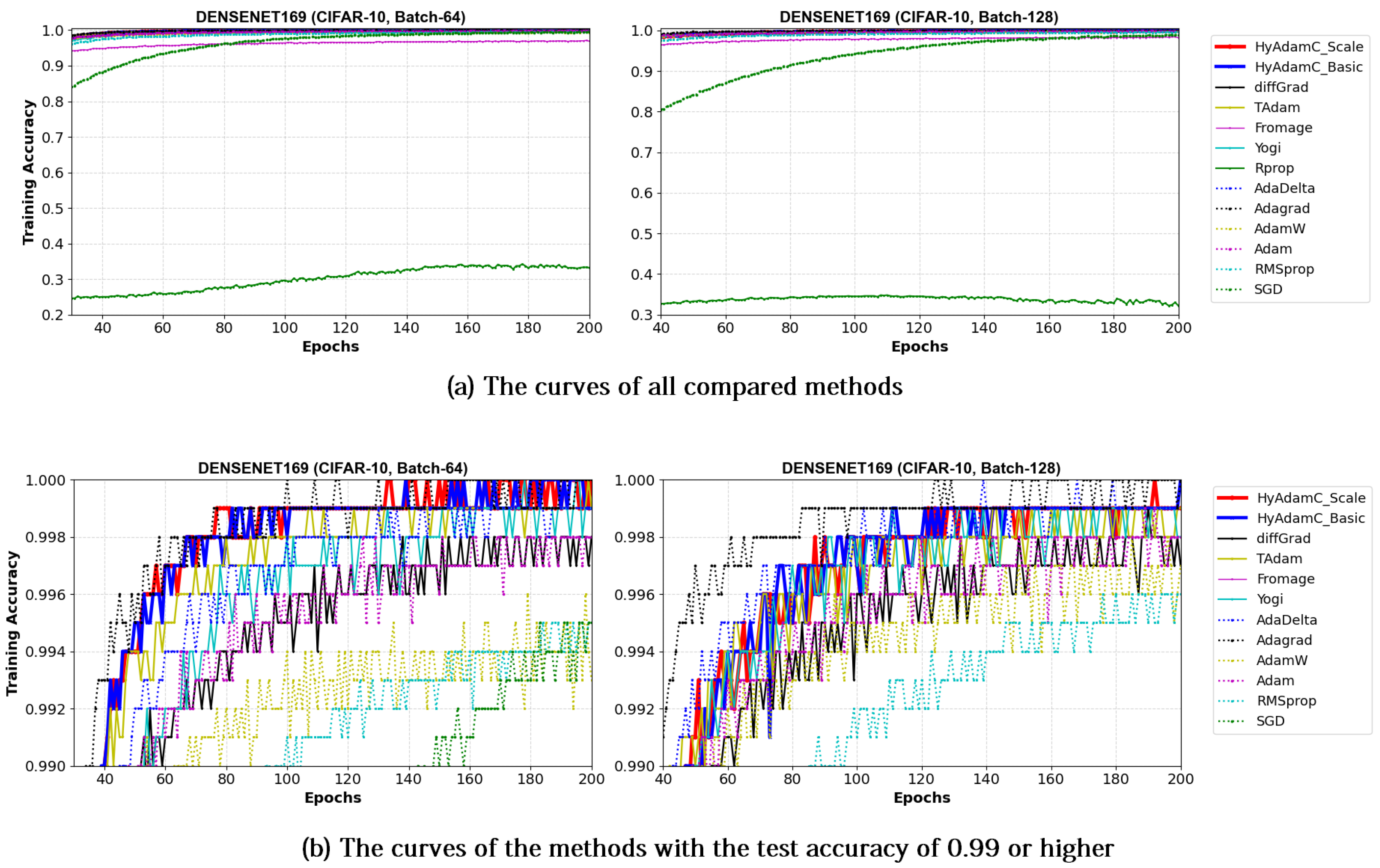

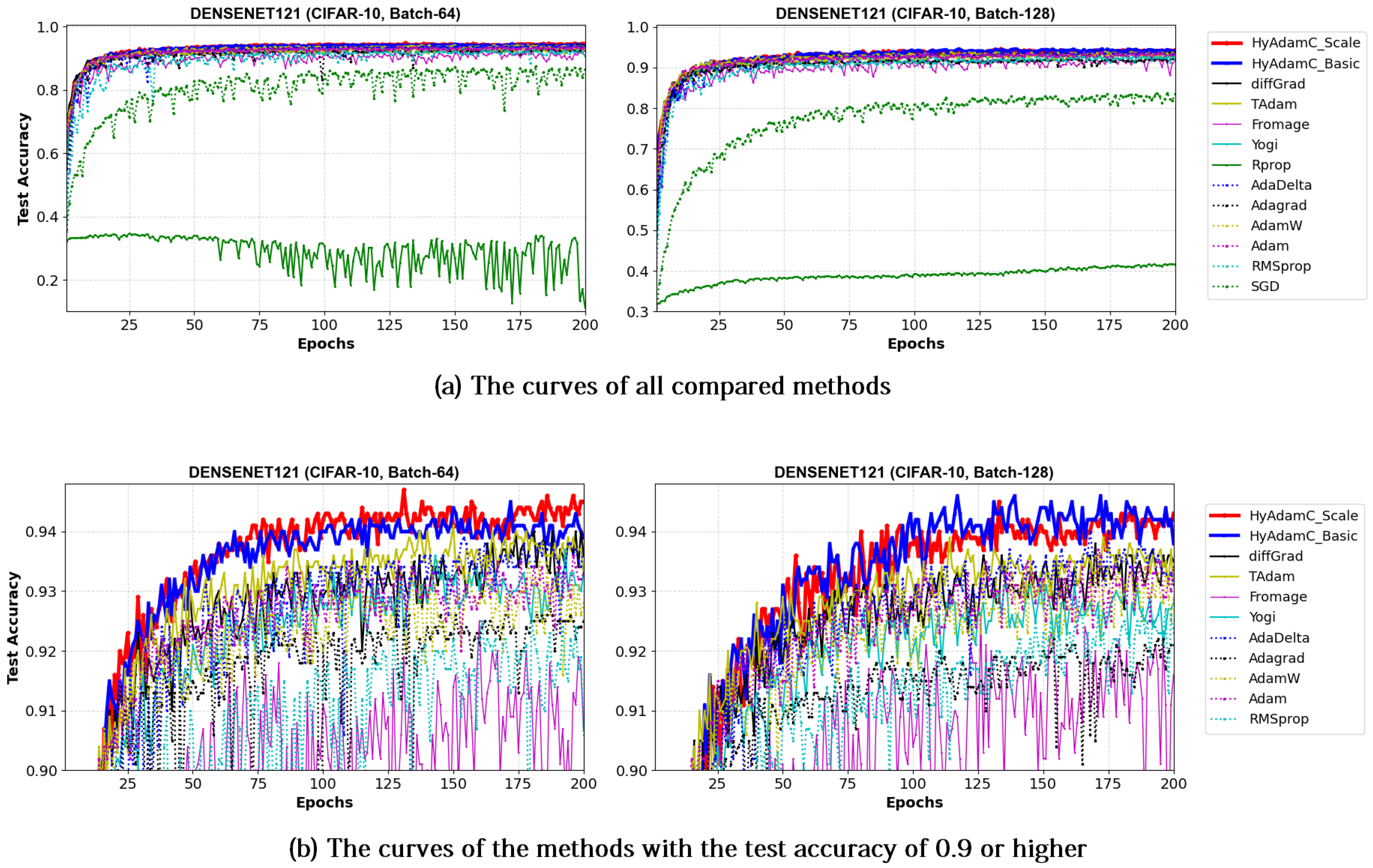

5.3.3. Image Classification Tasks in DenseNet-121 and 169

5.4. Additional Experiments to Evaluate the Performance of HyAdamC

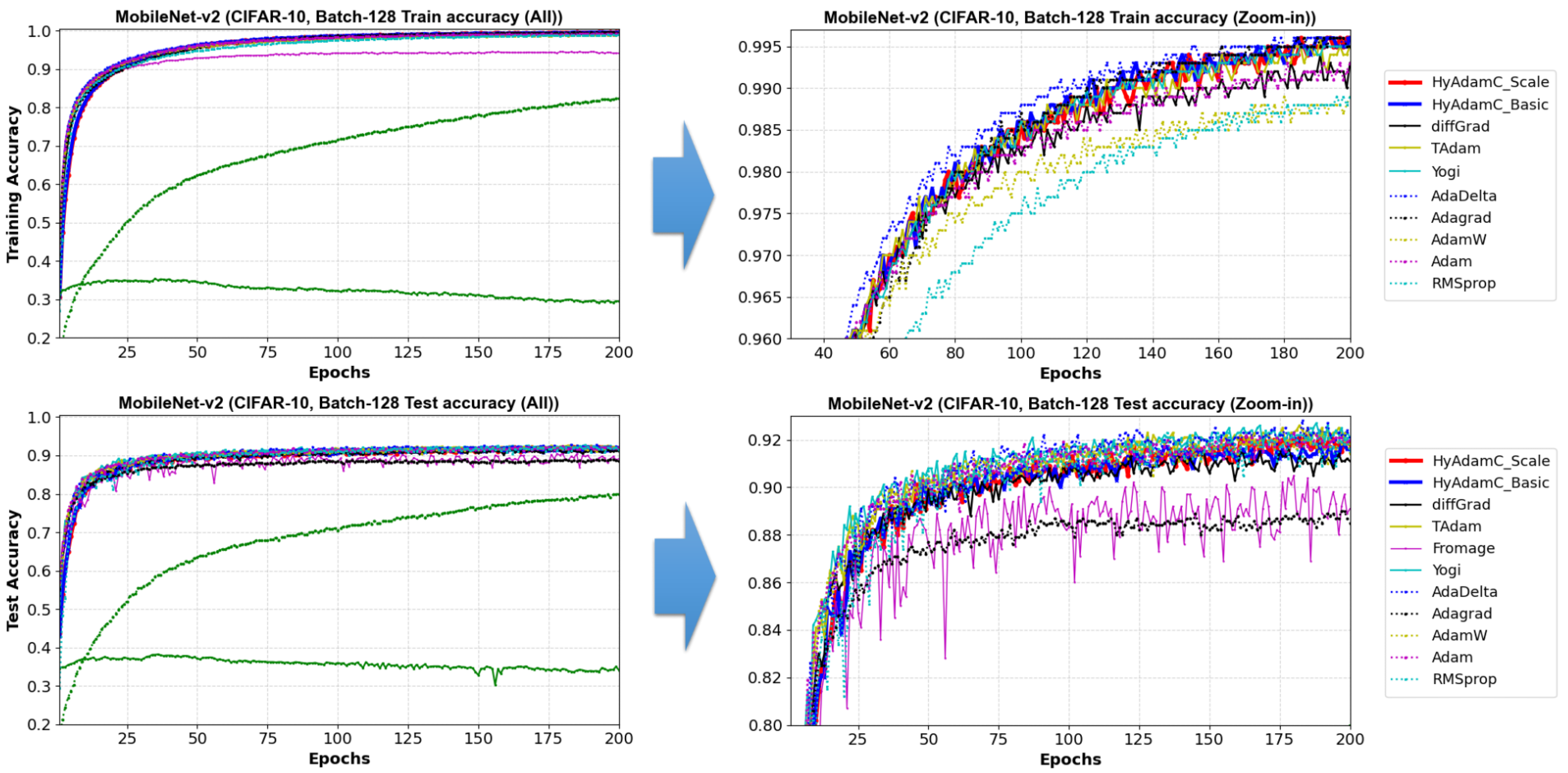

5.4.1. Image Classification Task in the Latest Lightweight CNN Model: MobileNet-V2

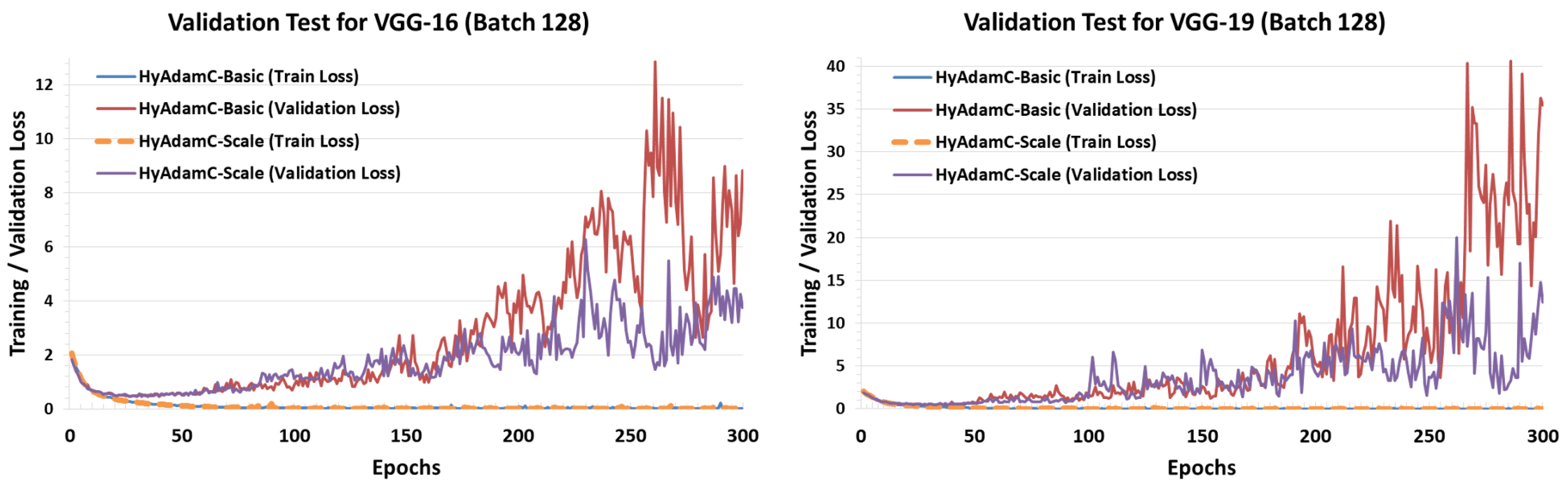

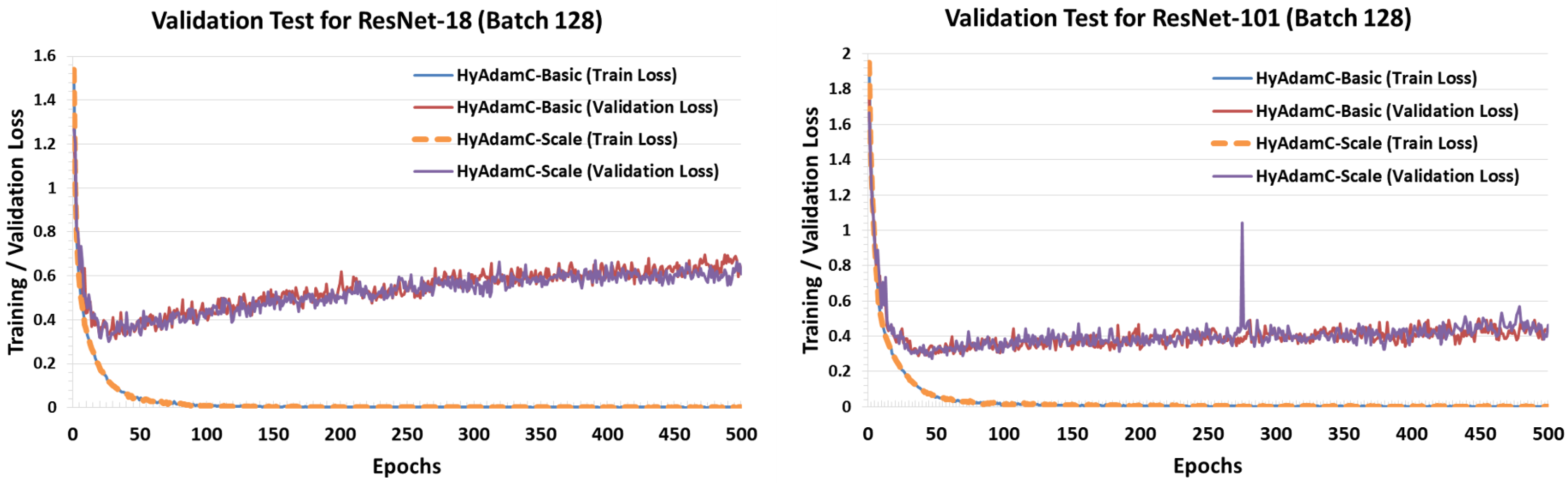

5.4.2. Validation Tests

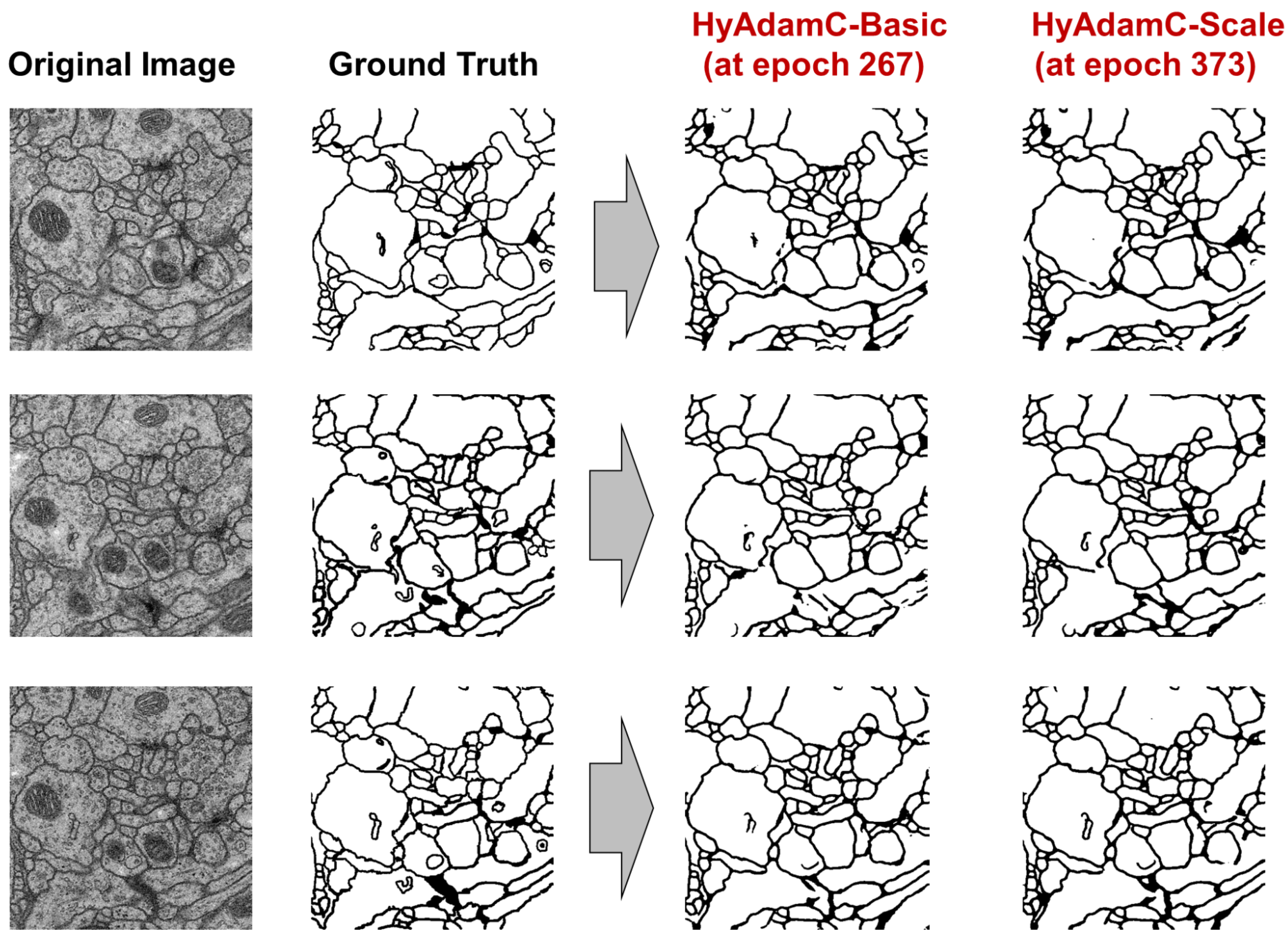

5.4.3. Image Segmentation in the U-Net

6. Discussions

- VGG: As explained previously, VGG is vulnerable to the vanishing gradient problem because it does not use residual connections [13]. Accordingly, existing methods such as SGD, AdaDelta, and Rprop showed considerably unstable convergence while training both VGG-16 and VGG-19, and RMSProp and AdamW failed to train them at all. On the other hand, HyAdamC showed the best convergence behavior, with the highest accuracies and the least oscillation among the compared SOTA methods. This implies that HyAdamC can perform stable and robust training even for CNN models that suffer from the vanishing gradient problem.

- ResNet: Unlike VGG, ResNet alleviates the vanishing gradient problem by introducing residual connections [13]. Accordingly, most of the compared methods, including HyAdamC, achieved high training accuracies of 0.95 or higher in the experiments for ResNet-18 and 101. Nevertheless, HyAdamC still showed better test accuracies, although it converged slightly more slowly than other SOTA methods. In particular, although AdaGrad converged faster than HyAdamC, its test accuracies were significantly lower than those of HyAdamC. This indicates that the velocity control methods of HyAdamC are effective for carefully searching for optimal weights in complicated CNN models with residual connections.

- DenseNet: As explained in Section 3, DenseNet has a more complicated architecture than ResNet because it constructs more residual connections [14]. Thus, the optimization terrain created in DenseNet is more complicated than that in ResNet. In the experiments for DenseNet-121 and 169, HyAdamC showed not only the best test accuracies but also the most stable convergence. In particular, Table 8 shows that the gap between HyAdamC and the other methods widened compared to the results for ResNet-18 and 101. These results show that HyAdamC maintains a robust and stable training ability with the highest accuracies even in this complex architecture. Our velocity control functions and adaptive coefficient computation methods provide useful information about the complicated solution space of DenseNet. Accordingly, HyAdamC can control its search strength and direction more elastically, which helps it avoid falling into local minima or oscillating excessively around them. This is the most distinguishing characteristic and merit of HyAdamC.

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| CNN | Convolution neural network |

| CV | Computer vision |

| DenseNet | Densely connected network |

| DNN | Deep neural network |

| EWA | Exponentially weighted average |

| GAN | Generative adversarial network |

| GD | Gradient descent |

| GPU | Graphic processing unit |

| GT | Ground truth |

| IoT | Internet of Things |

| NLP | Natural language processing |

| PDF | Probability density function |

| ResNet | Residual network |

| RNN | Recurrent neural network |

| SGD | Stochastic gradient descent |

| SGDM | Stochastic gradient descent with momentum |

| SOTA | State-of-the-art |

References

1. Mukherjee, H.; Ghosh, S.; Dhar, A.; Obaidullah, S.M.; Santosh, K.C.; Roy, K. Deep neural network to detect COVID-19: One architecture for both CT Scans and Chest X-rays. Appl. Intell. 2021, 51, 2777–2789.

2. Irfan, M.; Iftikhar, M.A.; Yasin, S.; Draz, U.; Ali, T.; Hussain, S.; Bukhari, S.; Alwadie, A.S.; Rahman, S.; Glowacz, A.; et al. Role of Hybrid Deep Neural Networks (HDNNs), Computed Tomography, and Chest X-rays for the Detection of COVID-19. Int. J. Environ. Res. Public Health 2021, 18, 3056.

3. Guo, J.; Lao, Z.; Hou, M.; Li, C.; Zhang, S. Mechanical fault time series prediction by using EFMSAE-LSTM neural network. Measurement 2021, 173, 108566.

4. Namasudra, S.; Dhamodharavadhani, S.; Rathipriya, R. Nonlinear Neural Network Based Forecasting Model for Predicting COVID-19 Cases. Neural Process. Lett. 2021.

5. Hong, T.; Choi, J.A.; Lim, K.; Kim, P. Enhancing Personalized Ads Using Interest Category Classification of SNS Users Based on Deep Neural Networks. Sensors 2021, 21, 199.

6. Shambour, Q. A deep learning based algorithm for multi-criteria recommender systems. Knowl. Based Syst. 2021, 211, 106545.

7. Zgank, A. IoT-Based Bee Swarm Activity Acoustic Classification Using Deep Neural Networks. Sensors 2021, 21, 676.

8. Kumar, A.; Sharma, K.; Sharma, A. Hierarchical deep neural network for mental stress state detection using IoT based biomarkers. Pattern Recognit. Lett. 2021, 145, 81–87.

9. Minaee, S.; Kalchbrenner, N.; Cambria, E.; Nikzad, N.; Chenaghlu, M.; Gao, J. Deep Learning–Based Text Classification: A Comprehensive Review. ACM Comput. Surv. 2021, 54.

10. Zhou, G.; Xie, Z.; Yu, Z.; Huang, J.X. DFM: A parameter-shared deep fused model for knowledge base question answering. Inf. Sci. 2021, 547, 103–118.

11. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper With Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

12. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

13. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

14. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

15. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

16. Bottou, L. Large-Scale Machine Learning with Stochastic Gradient Descent; Lechevallier, Y., Saporta, G., Eds.; Physica-Verlag HD: Heidelberg, Germany, 2010; pp. 177–186.

17. Qian, N. On the momentum term in gradient descent learning algorithms. Neural Netw. 1999, 12, 145–151.

18. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

19. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; Vol. 1. No. 2.; MIT Press: Cambridge, MA, USA, 2016.

20. Duchi, J.; Hazan, E.; Singer, Y. Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.

21. Zaheer, M.; Reddi, S.; Sachan, D.; Kale, S.; Kumar, S. Adaptive Methods for Nonconvex Optimization. In Advances in Neural Information Processing Systems; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

22. Bernstein, J.; Vahdat, A.; Yue, Y.; Liu, M.Y. On the distance between two neural networks and the stability of learning. arXiv 2021, arXiv:2002.03432.

23. Dubey, S.R.; Chakraborty, S.; Roy, S.K.; Mukherjee, S.; Singh, S.K.; Chaudhuri, B.B. diffGrad: An Optimization Method for Convolutional Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4500–4511.

24. Ilboudo, W.E.L.; Kobayashi, T.; Sugimoto, K. Robust Stochastic Gradient Descent With Student-t Distribution Based First-Order Momentum. IEEE Trans. Neural Netw. Learn. Syst. 2020, 1–14.

25. Brownlee, J. Better Deep Learning: Train Faster, Reduce Overfitting, and Make Better Predictions; Machine Learning Mastery: San Juan, PR, USA, 2018.

26. Ying, X. An Overview of Overfitting and its Solutions. J. Phys. Conf. Ser. 2019, 1168, 022022.

27. Sun, R.Y. Optimization for Deep Learning: An Overview. J. Oper. Res. Soc. China 2020, 8, 249–294.

28. Sun, S.; Cao, Z.; Zhu, H.; Zhao, J. A Survey of Optimization Methods From a Machine Learning Perspective. IEEE Trans. Cybern. 2020, 50, 3668–3681.

29. Xu, P.; Roosta, F.; Mahoney, M.W. Second-order Optimization for Non-convex Machine Learning: An Empirical Study. In Proceedings of the 2020 SIAM International Conference on Data Mining (SDM), Cincinnati, OH, USA, 7–9 May 2020; pp. 199–207.

30. Lesage-Landry, A.; Taylor, J.A.; Shames, I. Second-order Online Nonconvex Optimization. IEEE Trans. Autom. Control. 2020, 1.

31. Nguyen, H.T.; Lee, E.H.; Lee, S. Study on the classification performance of underwater sonar image classification based on convolutional neural networks for detecting a submerged human body. Sensors 2020, 20, 94.

32. Wang, W.; Liang, D.; Chen, Q.; Iwamoto, Y.; Han, X.H.; Zhang, Q.; Hu, H.; Lin, L.; Chen, Y.W. Medical image classification using deep learning. In Deep Learning in Healthcare; Springer: Berlin/Heidelberg, Germany, 2020; pp. 33–51.

33. Zhang, Q.; Bai, C.; Liu, Z.; Yang, L.T.; Yu, H.; Zhao, J.; Yuan, H. A GPU-based residual network for medical image classification in smart medicine. Inf. Sci. 2020, 536, 91–100.

34. Apostolopoulos, I.D.; Mpesiana, T.A. Covid-19: Automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020, 43, 635–640.

35. Cevikalp, H.; Benligiray, B.; Gerek, O.N. Semi-supervised robust deep neural networks for multi-label image classification. Pattern Recognit. 2020, 100, 107164.

36. Hinton, G.; Srivastava, N.; Swersky, K. Neural networks for machine learning lecture 6a overview of mini-batch gradient descent. Cited 2012, 14, 1–31.

37. Nazareth, J.L. Conjugate gradient method. WIREs Comput. Stat. 2009, 1, 348–353.

38. Head, J.D.; Zerner, M.C. A Broyden–Fletcher–Goldfarb–Shanno optimization procedure for molecular geometries. Chem. Phys. Lett. 1985, 122, 264–270.

39. Liu, D.C.; Nocedal, J. On the limited memory BFGS method for large scale optimization. Math. Program. 1989, 45, 503–528.

40. Cao, Y.; Zhang, H.; Li, W.; Zhou, M.; Zhang, Y.; Chaovalitwongse, W.A. Comprehensive Learning Particle Swarm Optimization Algorithm With Local Search for Multimodal Functions. IEEE Trans. Evol. Comput. 2019, 23, 718–731.

41. Tan, Y.; Zhou, M.; Zhang, Y.; Guo, X.; Qi, L.; Wang, Y. Hybrid Scatter Search Algorithm for Optimal and Energy-Efficient Steelmaking-Continuous Casting. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1814–1828.

42. Demidova, L.A.; Gorchakov, A.V. Research and Study of the Hybrid Algorithms Based on the Collective Behavior of Fish Schools and Classical Optimization Methods. Algorithms 2020, 13, 85.

43. Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449.

44. Liu, L.; Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Han, J. On the Variance of the Adaptive Learning Rate and Beyond. arXiv 2020, arXiv:1908.03265.

45. Reddi, S.J.; Kale, S.; Kumar, S. On the Convergence of Adam and Beyond. arXiv 2019, arXiv:1904.09237.

46. Zinkevich, M. Online convex programming and generalized infinitesimal gradient ascent. In Proceedings of the 20th International Conference on Machine Learning (ICML-03), Washington, DC, USA, 21–24 August 2003; pp. 928–936.

47. Zeiler, M.D. Adadelta: An adaptive learning rate method. arXiv 2012, arXiv:1212.5701.

48. Riedmiller, M.; Braun, H. A direct adaptive method for faster backpropagation learning: The RPROP algorithm. IEEE Int. Conf. Neural Netw. 1993, 1, 586–591.

49. Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (accessed on 1 May 2021).

50. Hu, Y.; Huber, A.; Anumula, J.; Liu, S.C. Overcoming the vanishing gradient problem in plain recurrent networks. arXiv 2019, arXiv:1801.06105.

51. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

52. Cardona, A.; Saalfeld, S.; Preibisch, S.; Schmid, B.; Cheng, A.; Pulokas, J.; Tomancak, P.; Hartenstein, V. An Integrated Micro- and Macroarchitectural Analysis of the Drosophila Brain by Computer-Assisted Serial Section Electron Microscopy. PLoS Biol. 2010, 8, 1–17.

53. Ghosh, R. A Recurrent Neural Network based deep learning model for offline signature verification and recognition system. Expert Syst. Appl. 2021, 168, 114249.

54. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.

| Algorithms | Parameter Settings |

|---|---|

| HyAdamC | , , , |

| SGD | |

| RMSProp | Learning rate = , , |

| Adam | , , |

| AdamW | , , |

| Adagrad | , , |

| AdaDelta | , , |

| Rprop | , , , step sizes |

| Yogi | , , , |

| Fromage | |

| TAdam | , , , , |

| diffGrad | , , |

| Models | |||

|---|---|---|---|

| HyAdamC-v1 | 0 | 0 | |

| HyAdamC-v2 | 0 | 1 | |

| HyAdamC-v3 | 1 | 0 | |

| HyAdamC-v4 | 1 | 1 | |

| HyAdamC-v5 | 2 | 0 | |

| HyAdamC-v6 | 2 | 1 | |

| Models | VGG-16, Batch 64 | VGG-16, Batch 128 | VGG-19, Batch 64 | VGG-19, Batch 128 |

|---|---|---|---|---|

| HyAdamC-v1 | 0.894 | 0.902 | 0.885 | 0.900 |

| HyAdamC-v2 | 0.894 | 0.894 | 0.887 | 0.885 |

| HyAdamC-v3 | 0.894 | 0.900 | 0.886 | 0.899 |

| HyAdamC-v4 | 0.888 | 0.906 | 0.881 | 0.894 |

| HyAdamC-v5 | 0.899 | 0.900 | 0.890 | 0.899 |

| HyAdamC-v6 | 0.887 | 0.899 | 0.889 | 0.896 |

| Models | ResNet-18, Batch 64 | ResNet-18, Batch 128 | ResNet-101, Batch 64 | ResNet-101, Batch 128 |

|---|---|---|---|---|

| HyAdamC-v1 | 0.934 | 0.935 | 0.940 | 0.939 |

| HyAdamC-v2 | 0.936 | 0.937 | 0.937 | 0.933 |

| HyAdamC-v3 | 0.934 | 0.936 | 0.940 | 0.937 |

| HyAdamC-v4 | 0.933 | 0.935 | 0.940 | 0.938 |

| HyAdamC-v5 | 0.935 | 0.938 | 0.939 | 0.937 |

| HyAdamC-v6 | 0.936 | 0.932 | 0.942 | 0.942 |

| Models | DenseNet-121, Batch 64 | DenseNet-121, Batch 128 | DenseNet-169, Batch 64 | DenseNet-169, Batch 128 |

|---|---|---|---|---|

| HyAdamC-v1 | 0.939 | 0.938 | 0.944 | 0.942 |

| HyAdamC-v2 | 0.942 | 0.938 | 0.943 | 0.942 |

| HyAdamC-v3 | 0.943 | 0.940 | 0.942 | 0.945 |

| HyAdamC-v4 | 0.941 | 0.943 | 0.944 | 0.942 |

| HyAdamC-v5 | 0.945 | 0.943 | 0.943 | 0.944 |

| HyAdamC-v6 | 0.942 | 0.943 | 0.943 | 0.941 |

| Methods | VGG-16, Batch 64 | VGG-16, Batch 128 | VGG-19, Batch 64 | VGG-19, Batch 128 |

|---|---|---|---|---|

| SGD | 0.820 | 0.674 | 0.790 | 0.688 |

| RMSProp | 0.100 | 0.100 | 0.100 | 0.100 |

| Adam | 0.100 | 0.871 | 0.100 | 0.100 |

| AdamW | 0.100 | 0.100 | 0.100 | 0.100 |

| Adagrad | 0.746 | 0.738 | 0.740 | 0.742 |

| AdaDelta | 0.100 | 0.100 | 0.100 | 0.100 |

| Rprop | 0.123 | 0.223 | 0.149 | 0.166 |

| Yogi | 0.100 | 0.100 | 0.100 | 0.100 |

| Fromage | 0.883 | 0.897 | 0.859 | 0.882 |

| TAdam | 0.875 | 0.889 | 0.871 | 0.887 |

| diffGrad | 0.875 | 0.886 | 0.100 | 0.878 |

| HyAdamC-Basic | 0.894 | 0.902 | 0.885 | 0.900 |

| HyAdamC-Scale | 0.899 | 0.900 | 0.890 | 0.899 |

| HyAdamC-Basic: W/T/L | 11/0/0 | 11/0/0 | 11/0/0 | 11/0/0 |

| HyAdamC-Scale: W/T/L | 11/0/0 | 11/0/0 | 11/0/0 | 11/0/0 |

| Methods | ResNet-18, Batch 64 | ResNet-18, Batch 128 | ResNet-101, Batch 64 | ResNet-101, Batch 128 |

|---|---|---|---|---|

| SGD | 0.881 | 0.860 | 0.854 | 0.825 |

| RMSProp | 0.924 | 0.920 | 0.912 | 0.911 |

| Adam | 0.931 | 0.923 | 0.934 | 0.929 |

| AdamW | 0.923 | 0.927 | 0.922 | 0.928 |

| Adagrad | 0.914 | 0.910 | 0.924 | 0.918 |

| AdaDelta | 0.932 | 0.931 | 0.931 | 0.936 |

| Rprop | 0.498 | 0.533 | 0.102 | 0.302 |

| Yogi | 0.929 | 0.927 | 0.928 | 0.931 |

| Fromage | 0.894 | 0.921 | 0.911 | 0.912 |

| TAdam | 0.934 | 0.932 | 0.939 | 0.936 |

| diffGrad | 0.926 | 0.928 | 0.933 | 0.938 |

| HyAdamC-Basic | 0.934 | 0.935 | 0.940 | 0.939 |

| HyAdamC-Scale | 0.935 | 0.938 | 0.939 | 0.937 |

| HyAdamC-Basic: W/T/L | 10/1/0 | 11/0/0 | 11/0/0 | 11/0/0 |

| HyAdamC-Scale: W/T/L | 11/0/0 | 11/0/0 | 10/1/0 | 10/0/1 |

| Methods | DenseNet-121, Batch 64 | DenseNet-121, Batch 128 | DenseNet-169, Batch 64 | DenseNet-169, Batch 128 |

|---|---|---|---|---|

| SGD | 0.865 | 0.835 | 0.866 | 0.830 |

| RMSProp | 0.906 | 0.923 | 0.925 | 0.921 |

| Adam | 0.933 | 0.934 | 0.933 | 0.937 |

| AdamW | 0.928 | 0.929 | 0.932 | 0.928 |

| Adagrad | 0.925 | 0.921 | 0.920 | 0.919 |

| AdaDelta | 0.937 | 0.931 | 0.932 | 0.938 |

| Rprop | 0.114 | 0.416 | 0.104 | 0.367 |

| Yogi | 0.933 | 0.928 | 0.927 | 0.916 |

| Fromage | 0.907 | 0.916 | 0.907 | 0.907 |

| TAdam | 0.933 | 0.931 | 0.937 | 0.937 |

| diffGrad | 0.936 | 0.935 | 0.937 | 0.933 |

| HyAdamC-Basic | 0.939 | 0.938 | 0.944 | 0.942 |

| HyAdamC-Scale | 0.945 | 0.943 | 0.943 | 0.944 |

| HyAdamC-Basic: W/T/L | 11/0/0 | 11/0/0 | 11/0/0 | 11/0/0 |

| HyAdamC-Scale: W/T/L | 11/0/0 | 11/0/0 | 11/0/0 | 11/0/0 |

| Methods | Test Accuracy | HyAdamC-Basic − Other Method | HyAdamC-Scale − Other Method |

|---|---|---|---|

| SGD | 0.8 | 0.116 | 0.118 |

| RMSProp | 0.915 | 0.001 | 0.003 |

| Adam | 0.92 | −0.004 | −0.002 |

| AdamW | 0.914 | 0.002 | 0.004 |

| Adagrad | 0.885 | 0.031 | 0.033 |

| AdaDelta | 0.92 | −0.004 | −0.002 |

| Rprop | 0.341 | 0.575 | 0.577 |

| Yogi | 0.921 | −0.005 | −0.003 |

| Fromage | 0.891 | 0.025 | 0.027 |

| TAdam | 0.918 | −0.002 | 0 |

| diffGrad | 0.911 | 0.005 | 0.007 |

| HyAdamC-Basic | 0.916 | - | - |

| HyAdamC-Scale | 0.918 | - | - |

| Win/Tie/Lose (HyAdamC-Basic) | 7/0/4 | ||

| Win/Tie/Lose (HyAdamC-Scale) | 7/1/3 | ||

| Epochs | HyAdamC-Basic, Train Loss | HyAdamC-Basic, Val. Acc. | HyAdamC-Scale, Train Loss | HyAdamC-Scale, Val. Acc. |

|---|---|---|---|---|

| 50 | 0.3075 | 0.8694 | 0.3052 | 0.8790 |

| 100 | 0.2616 | 0.9027 | 0.2859 | 0.8901 |

| 200 | 0.1739 | 0.9233 | 0.1834 | 0.9223 |

| 500 | 0.0973 | 0.9199 | 0.1227 | 0.9222 |

| 1000 | 0.0684 | 0.9184 | 0.0753 | 0.9192 |

| 2000 | 0.0524 | 0.9200 | 0.0554 | 0.9190 |

| Maximum Val. Acc. (epoch) | | 0.9254 (267) | | 0.9256 (373) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, K.-S.; Choi, Y.-S. HyAdamC: A New Adam-Based Hybrid Optimization Algorithm for Convolution Neural Networks. Sensors 2021, 21, 4054. https://doi.org/10.3390/s21124054
