Aircraft Fuselage Corrosion Detection Using Artificial Intelligence

Abstract

1. Introduction

The main contributions of this work are:

- an evaluation of state-of-the-art Deep Learning models for corrosion monitoring;

- a Deep Learning model that can be trained on a small number of corrosion images labeled by specialists, enabling automated corrosion analysis with limited annotated data;

- a Deep Learning model that identifies aircraft corrosion with high accuracy; and

- a robust model validation based on experimental data acquired with a portable non-destructive inspection device and supervised by specialists.

2. Materials and Methods

2.1. Aircraft Fuselage

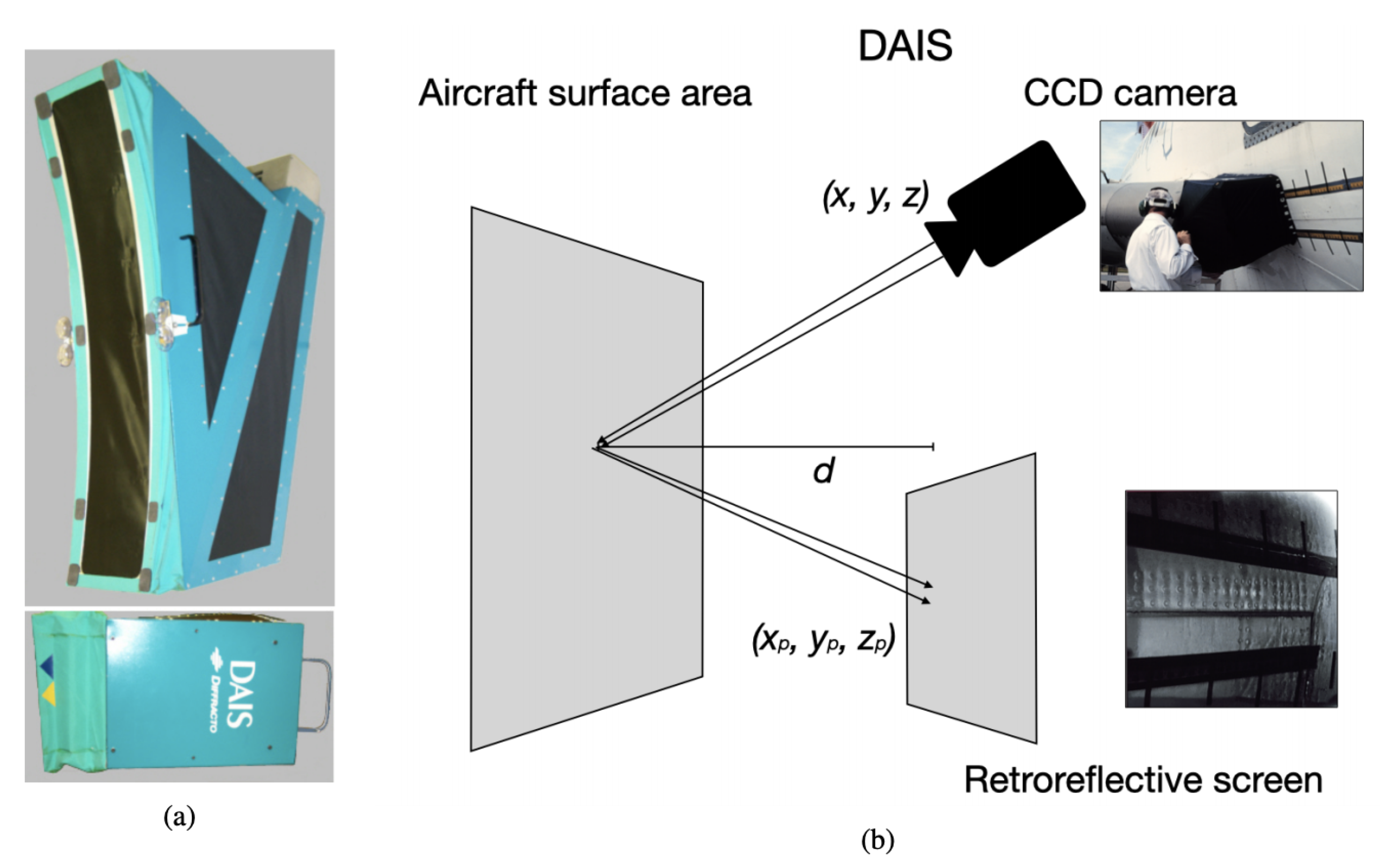

2.2. D-Sight Aircraft Inspection System (DAIS)

2.3. AI and Deep Neural Networks

2.3.1. CNN-Based Classification

2.3.2. Transfer Learning

3. Experimental Analysis

3.1. DAIS Image Dataset

3.2. Experimental Setup and Training

3.3. Evaluation Metrics

4. Results

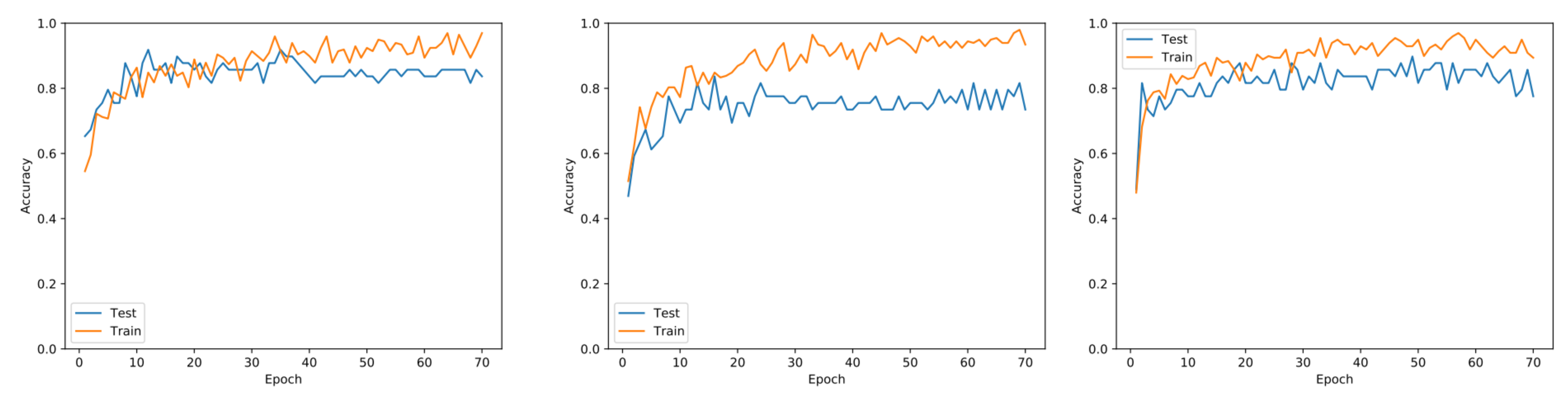

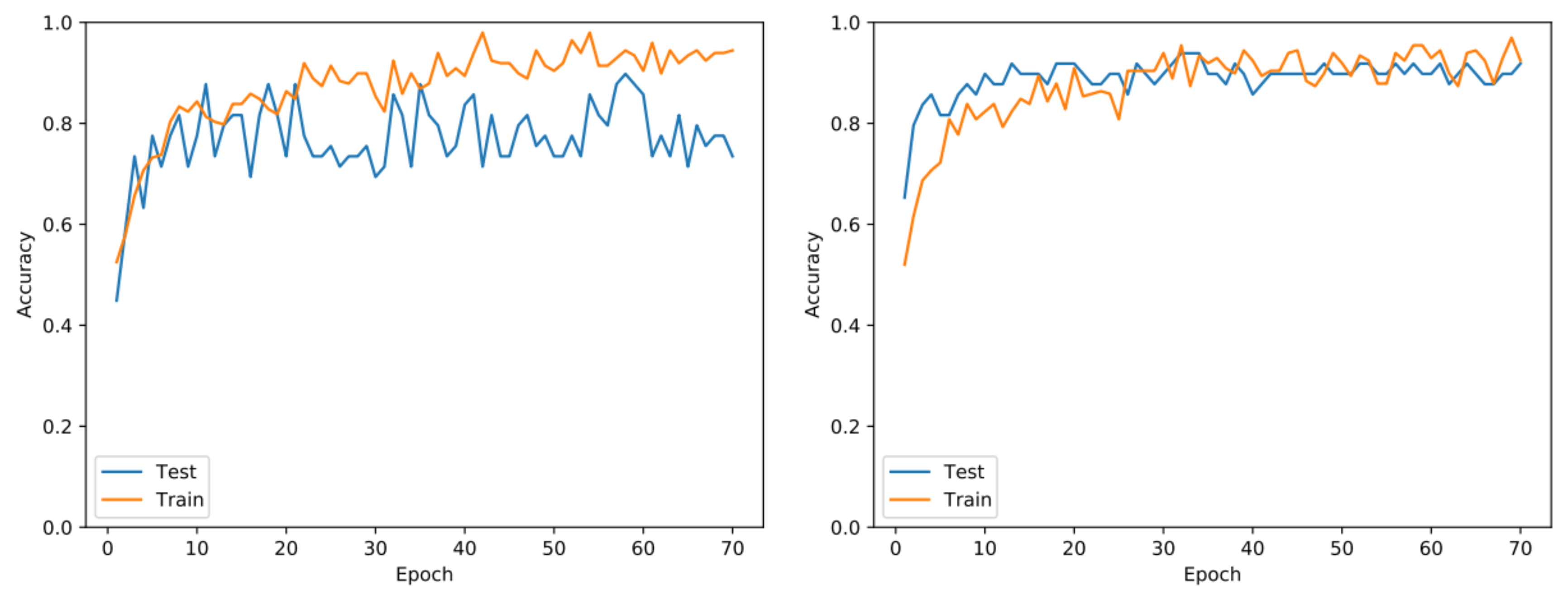

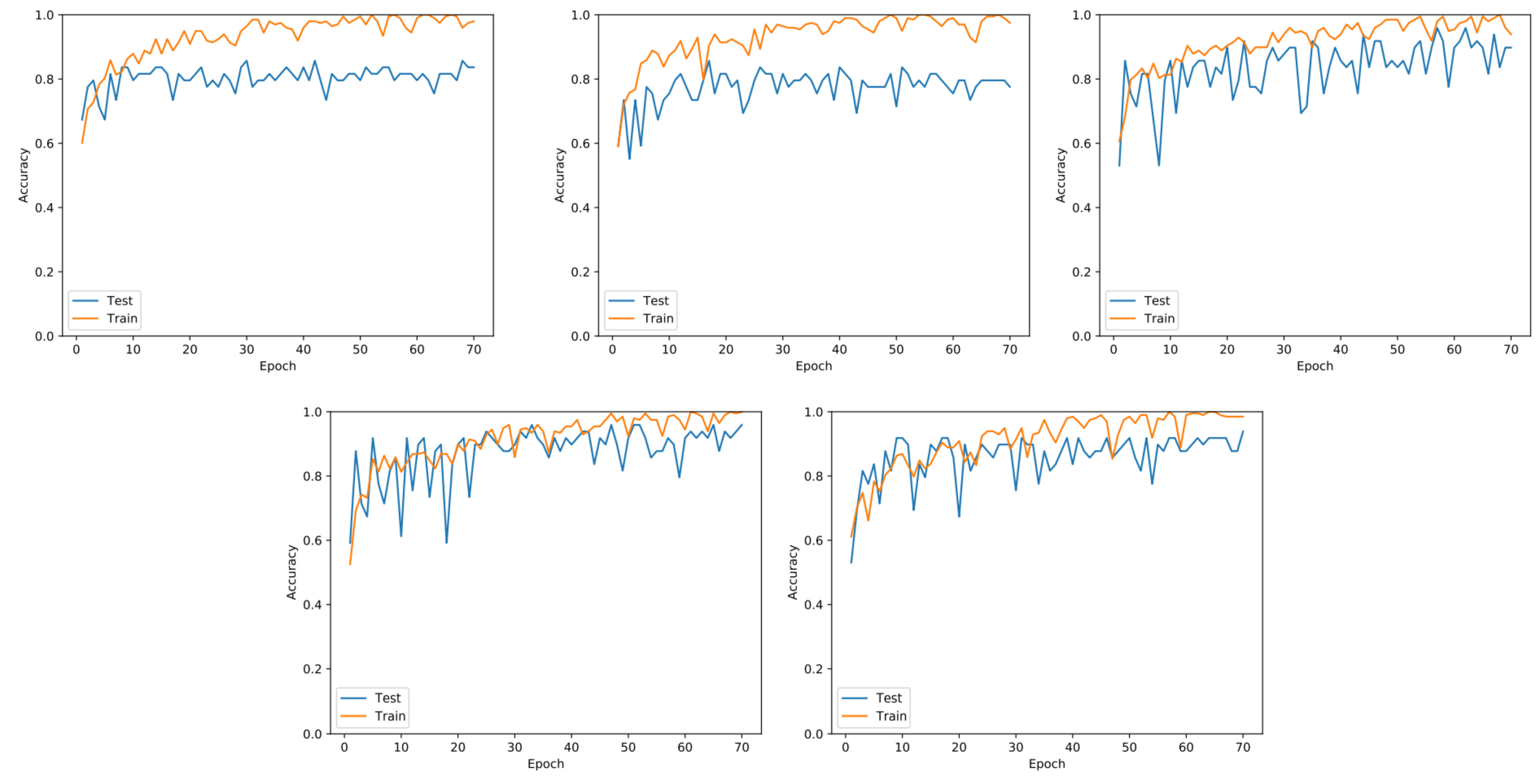

4.1. Performance Evaluation

4.2. Discussion

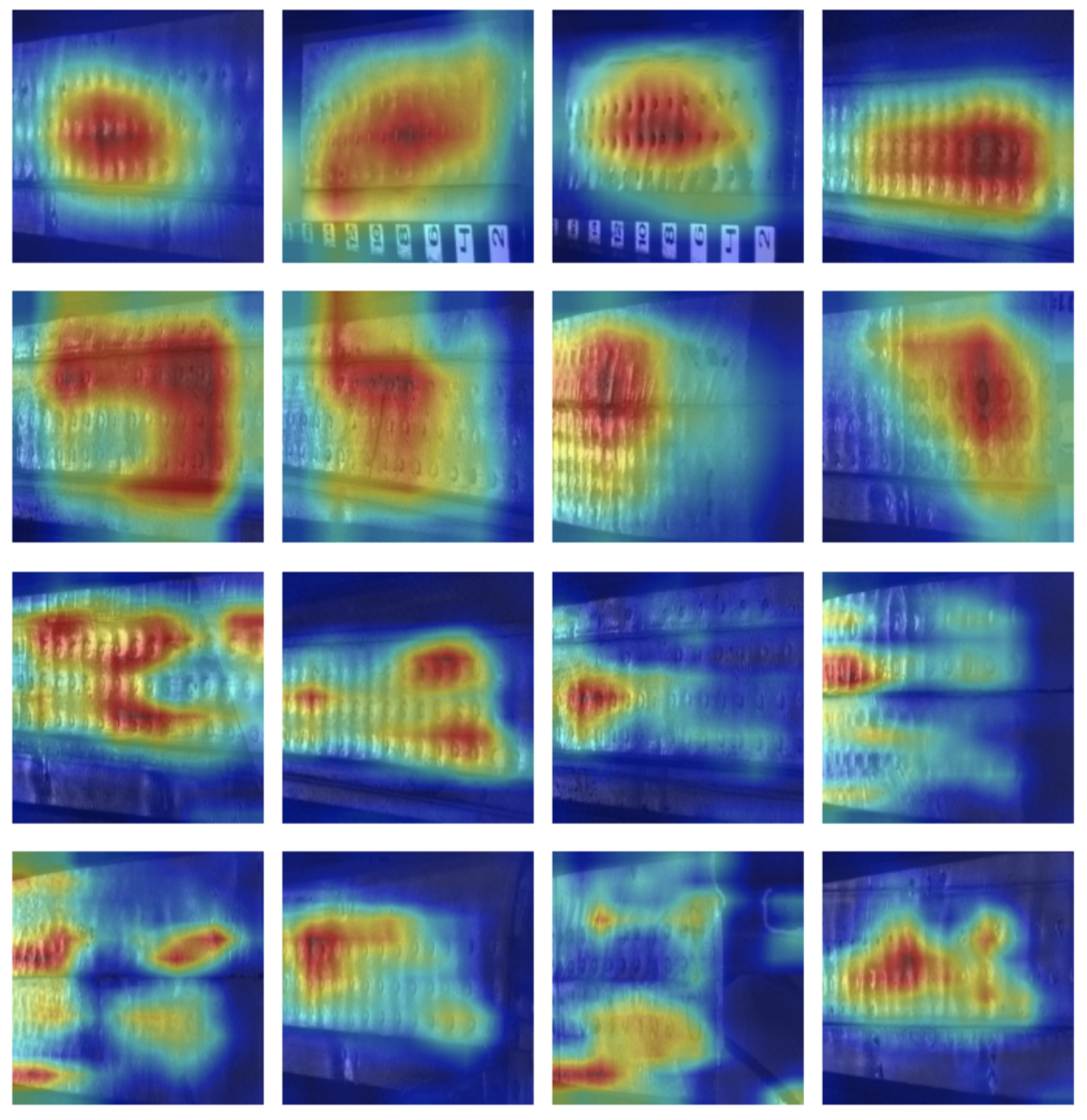

Visual Interpretability

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Sample Availability

References

- Bonnin-Pascual, F.; Ortiz, A. On the use of robots and vision technologies for the inspection of vessels: A survey on recent advances. Ocean Eng. 2019, 190, 106420.

- Bastian, B.T.; Jaspreeth, N.; Ranjith, S.K.; Jiji, C. Visual inspection and characterization of external corrosion in pipelines using deep neural network. NDT Int. 2019, 107, 102134.

- Ali, R.; Cha, Y.J. Subsurface damage detection of a steel bridge using deep learning and uncooled micro-bolometer. Constr. Build. Mater. 2019, 226, 376–387.

- Sajedi, S.; Huang, Q. Reliability-based life-cycle-cost comparison of different corrosion management strategies. Eng. Struct. 2019, 186, 52–63.

- Seidi, F.; Crespy, D. Fighting corrosion with stimuli-responsive polymer conjugates. Chem. Commun. 2020, 56, 11931–11940.

- Knight, S.; Salagaras, M.; Trueman, A. The study of intergranular corrosion in aircraft aluminium alloys using X-ray tomography. Corros. Sci. 2011, 53, 727–734.

- Ren, K.; Gao, S.; Liu, P.; Dong, Y. Influence of environmental factors on corrosion damage of aircraft structure. Theor. Appl. Mech. Lett. 2011, 1, 061004.

- Liao, M.; Bellinger, N.; Komorowski, J. Modeling the effects of prior exfoliation corrosion on fatigue life of aircraft wing skins. Int. J. Fatigue 2003, 25, 1059–1067.

- Zhang, S.; He, Y.; Zhang, T.; Wang, G.; Du, X. Long-Term Atmospheric Corrosion Behavior of Epoxy Prime Coated Aluminum Alloy 7075-T6 in Coastal Environment. Materials 2018, 11, 965.

- Liu, Z.; Forsyth, D.; Komorowski, J.P.; Hanasaki, K.; Kirubarajan, T. Survey: State of the Art in NDE Data Fusion Techniques. IEEE Trans. Instrum. Meas. 2007, 56, 2435–2451.

- Chulkov, A.; Nesteruk, D.; Vavilov, V.; Moskovchenko, A.; Saeed, N.; Omar, M. Optimizing input data for training an artificial neural network used for evaluating defect depth in infrared thermographic nondestructive testing. Infrared Phys. Technol. 2019, 102, 103047.

- Liao, M.; Renaud, G.; Bellinger, N. Fatigue modeling for aircraft structures containing natural exfoliation corrosion. Int. J. Fatigue 2007, 29, 677–686.

- Wang, D.; Zhang, W.; Wang, X.; Sun, B. Lamb-Wave-Based Tomographic Imaging Techniques for Hole-Edge Corrosion Monitoring in Plate Structures. Materials 2016, 9, 916.

- Sun, H.; Yi, J.; Xu, Y.; Wang, Y.; Qing, X. Identification and Compensation Technique of Non-Uniform Temperature Field for Lamb Wave-and Multiple Sensors-Based Damage Detection. Sensors 2019, 19, 2930.

- Chang, M.; Yuan, S.; Guo, F. Corrosion monitoring using a new compressed sensing-based tomographic method. Ultrasonics 2020, 101, 105988.

- Towsyfyan, H.; Biguri, A.; Boardman, R.; Blumensath, T. Successes and challenges in non-destructive testing of aircraft composite structures. Chin. J. Aeronaut. 2019.

- Prabhu, D.R.; Winfree, W.P. Neural Network Based Processing of Thermal NDE Data for Corrosion Detection. In Review of Progress in Quantitative Nondestructive Evaluation; Thompson, D.O., Chimenti, D.E., Eds.; Springer: Boston, MA, USA, 1993; Volume 12, pp. 775–782.

- Bellinger, N.C.; Komorowski, J.P. Corrosion pillowing stresses in fuselage lap joints. AIAA J. 1997, 35, 317–320.

- Tan, X.M.; Chen, Y.L.; Ping, J.I.N. Corrosion Fatigue Life Prediction of Aircraft Structure Based on Fuzzy Reliability Approach. Chin. J. Aeronaut. 2005, 18, 346–351.

- Hoeppner, D.W.; Arriscorreta, C.A. Exfoliation Corrosion and Pitting Corrosion and Their Role in Fatigue Predictive Modeling: State-of-the-Art Review. Int. J. Aerosp. Eng. 2012, 2012.

- Gialanella, S.; Malandruccolo, A. Corrosion. In Aerospace Alloys; Springer International Publishing: Berlin, Germany, 2020; pp. 439–499.

- Main, B.; Molent, L.; Singh, R.; Barter, S. Fatigue crack growth lessons from thirty-five years of the Royal Australian Air Force F/A-18 A/B Hornet Aircraft Structural Integrity Program. Int. J. Fatigue 2020, 133, 105426.

- Ganther, W.; Paterson, D.; Lewis, C.; Isaacs, P.; Galea, S.; Meunier, C.; Mangeon, G.; Cole, I. Monitoring Aircraft Microclimate and Corrosion. Procedia Eng. 2017, 188, 369–376.

- Li, L.; Chakik, M.; Prakash, R. A Review of Corrosion in Aircraft Structures and Graphene-Based Sensors for Advanced Corrosion Monitoring. Sensors 2021, 21, 2908.

- Phan, H.; Nguyen, H.L.; Chén, O.Y.; Pham, L.D.; Koch, P.; McLoughlin, I.V.; Mertins, A. Multi-view Audio and Music Classification. In Proceedings of the ICASSP 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021.

- Spadon, G.; Hong, S.; Brandoli, B.; Matwin, S.; Rodrigues, J.F., Jr.; Sun, J. Pay Attention to Evolution: Time Series Forecasting with Deep Graph-Evolution Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

- Gupta, T.; Kamath, A.; Kembhavi, A.; Hoiem, D. Towards General Purpose Vision Systems. arXiv 2021, arXiv:2104.00743.

- Malekzadeh, T.; Abdollahzadeh, M.; Nejati, H.; Cheung, N. Aircraft Fuselage Defect Detection using Deep Neural Networks. arXiv 2017, arXiv:1712.09213.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Miranda, J.; Veith, J.; Larnier, S.; Herbulot, A.; Devy, M. Machine learning approaches for defect classification on aircraft fuselage images aquired by an UAV. In Proceedings of the Fourteenth International Conference on Quality Control by Artificial Vision, Mulhouse, France, 15–17 May 2019; Cudel, C., Bazeille, S., Verrier, N., Eds.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2019; Volume 11172, pp. 49–56.

- Fernandes, L.C.; Souza, J.R.; Pessin, G.; Shinzato, P.Y.; Sales, D.O.; Mendes, C.C.T.; Prado, M.; Klaser, R.L.; Magalhães, A.C.; Hata, A.Y.; et al. CaRINA Intelligent Robotic Car: Architectural design and applications. J. Syst. Archit. 2014, 60, 372–392.

- Rodrigues, J.F., Jr.; Gutierrez, M.A.; Spadon, G.; Brandoli, B.; Amer-Yahia, S. LIG-Doctor: Efficient patient trajectory prediction using bidirectional minimal gated-recurrent networks. Inf. Sci. 2021, 545, 813–827.

- Dos Santos de Arruda, M.; Spadon, G.; Rodrigues, J.F.; Gonçalves, W.N.; Brandoli, B. Recognition of Endangered Pantanal Animal Species using Deep Learning Methods. In Proceedings of the 2018 International Joint Conference on Neural Networks, IJCNN 2018, Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Tetila, E.; Machado, B.B.; de Souza Belete, N.A.; Guimaraes, D.A.; Pistori, H. Identification of Soybean Foliar Diseases Using Unmanned Aerial Vehicle Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2190–2194.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015.

- Mitani, A.; Huang, A.; Venugopalan, S.; Corrado, G.S.; Peng, L.; Webster, D.R.; Hammel, N.; Liu, Y.; Varadarajan, A.V. Detection of anaemia from retinal fundus images via deep learning. Nat. Biomed. Eng. 2020, 4, 18–27.

- Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <1 MB model size. arXiv 2016, arXiv:1602.07360.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Flennerhag, S.; Rusu, A.A.; Pascanu, R.; Visin, F.; Yin, H.; Hadsell, R. Meta-Learning with Warped Gradient Descent. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020; Available online: OpenReview.net (accessed on 10 June 2021).

- Abati, D.; Tomczak, J.; Blankevoort, T.; Calderara, S.; Cucchiara, R.; Bejnordi, B.E. Conditional Channel Gated Networks for Task-Aware Continual Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 3930–3939.

- Jiang, X.; Ding, L.; Havaei, M.; Jesson, A.; Matwin, S. Task Adaptive Metric Space for Medium-Shot Medical Image Classification. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019; Springer International Publishing: Berlin, Germany, 2019.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Bressem, K.K.; Adams, L.C.; Erxleben, C.; Hamm, B.; Niehues, S.M.; Vahldiek, J.L. Comparing different deep learning architectures for classification of chest radiographs. Sci. Rep. 2020, 10, 13590.

- Xie, Q.; Luong, M.T.; Hovy, E.; Le, Q.V. Self-Training With Noisy Student Improves ImageNet Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10684–10695.

- Kolesnikov, A.; Beyer, L.; Zhai, X.; Puigcerver, J.; Yung, J.; Gelly, S.; Houlsby, N. Big Transfer (BiT): General Visual Representation Learning. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Berlin, Germany, 2020; pp. 491–507.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

| Architecture | Reference | Accuracy: Max Value | Accuracy: Avg Last 15 | Accuracy: Max Last 15 | Accuracy: Avg Last 5 |
|---|---|---|---|---|---|
| Inception-v3 | [40] | | | | |
| ResNet-101 | [44] | | | | |
| NASNet | [45] | | | | |
| SqueezeNet | [38] | | | | |
| DenseNet-121 | [39] | | | | |
| DenseNet-201 | [39] | | | | |
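The four accuracy columns summarize each architecture's training run from different angles: the single best value, and the average and maximum over the final stretch of training. The sketch below shows one way to compute such summaries. It assumes, as our own reading of the column names, that one validation accuracy is logged per epoch and that "Last 15" and "Last 5" refer to the final 15 and 5 epochs; the function name `summarize_accuracy` and the demo curve are hypothetical and are not data from this study.

```python
from statistics import mean
from typing import Dict, Sequence


def summarize_accuracy(history: Sequence[float]) -> Dict[str, float]:
    """Summarize a per-epoch accuracy curve into the table's four columns.

    Assumption (hypothetical): `history` holds one accuracy value per
    training epoch, in chronological order, with at least 15 entries.
    """
    if len(history) < 15:
        raise ValueError("need at least 15 epochs to compute 'Last 15' statistics")
    last15 = list(history[-15:])  # final 15 epochs
    last5 = list(history[-5:])    # final 5 epochs
    return {
        "Max Value": max(history),    # best accuracy over the whole run
        "Avg Last 15": mean(last15),  # mean over the final 15 epochs
        "Max Last 15": max(last15),   # best accuracy within the final 15 epochs
        "Avg Last 5": mean(last5),    # mean over the final 5 epochs
    }


if __name__ == "__main__":
    # Hypothetical 20-epoch curve, for illustration only.
    demo = [0.50 + 0.02 * epoch for epoch in range(20)]
    for name, value in summarize_accuracy(demo).items():
        print(f"{name}: {value:.3f}")
```

Averaging over the last epochs, rather than reporting only the maximum, makes the comparison less sensitive to a single favorable epoch. A large gap between "Max Value" and "Avg Last 15" would suggest an unstable or overfitting run.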

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Brandoli, B.; de Geus, A.R.; Souza, J.R.; Spadon, G.; Soares, A.; Rodrigues, J.F., Jr.; Komorowski, J.; Matwin, S. Aircraft Fuselage Corrosion Detection Using Artificial Intelligence. Sensors 2021, 21, 4026. https://doi.org/10.3390/s21124026
