Orientation-Invariant Spatio-Temporal Gait Analysis Using Foot-Worn Inertial Sensors

Abstract

1. Introduction

2. Materials and Methods

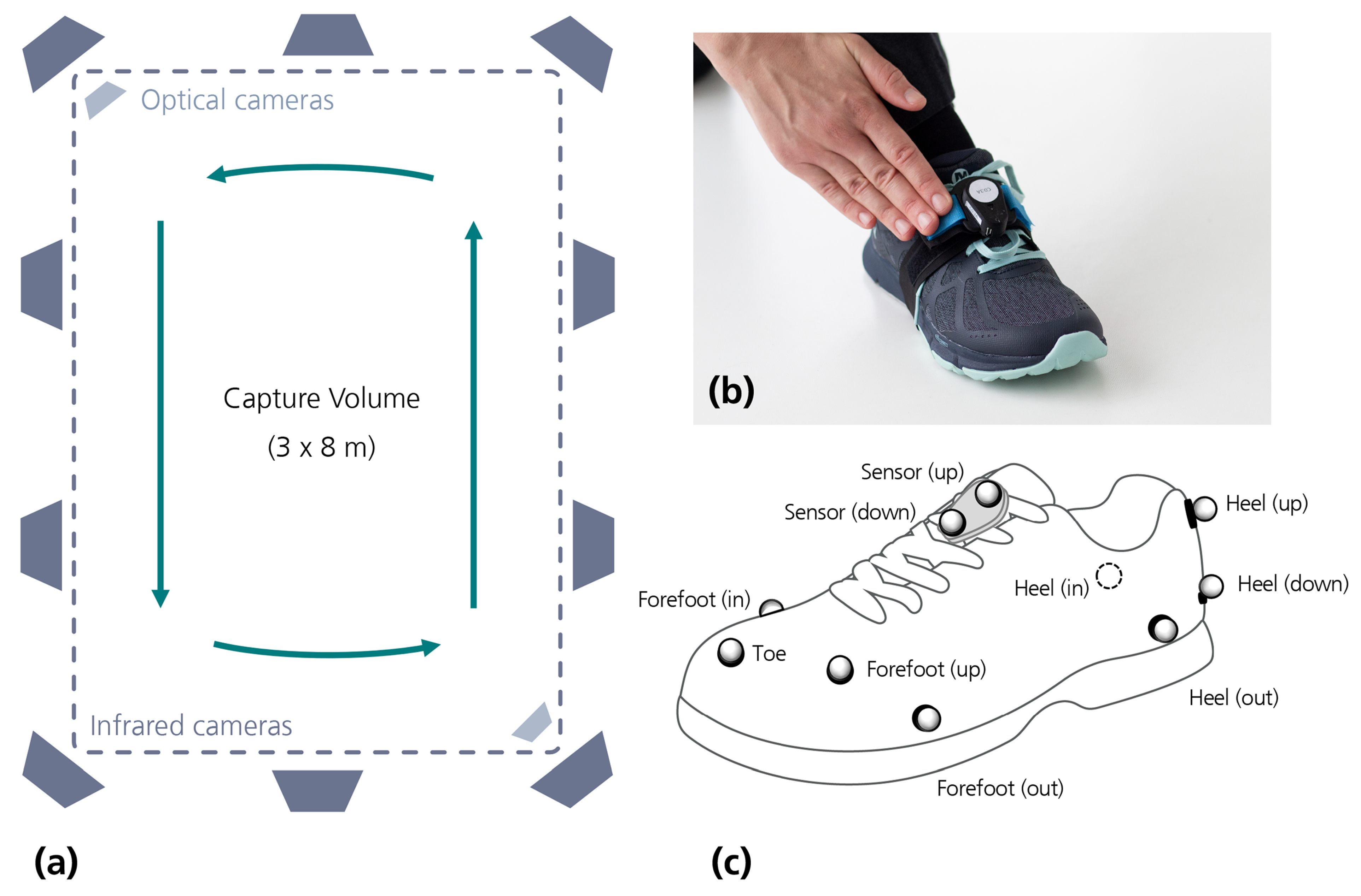

2.1. Wearable Sensors

2.2. Reference System

2.3. Data Collection

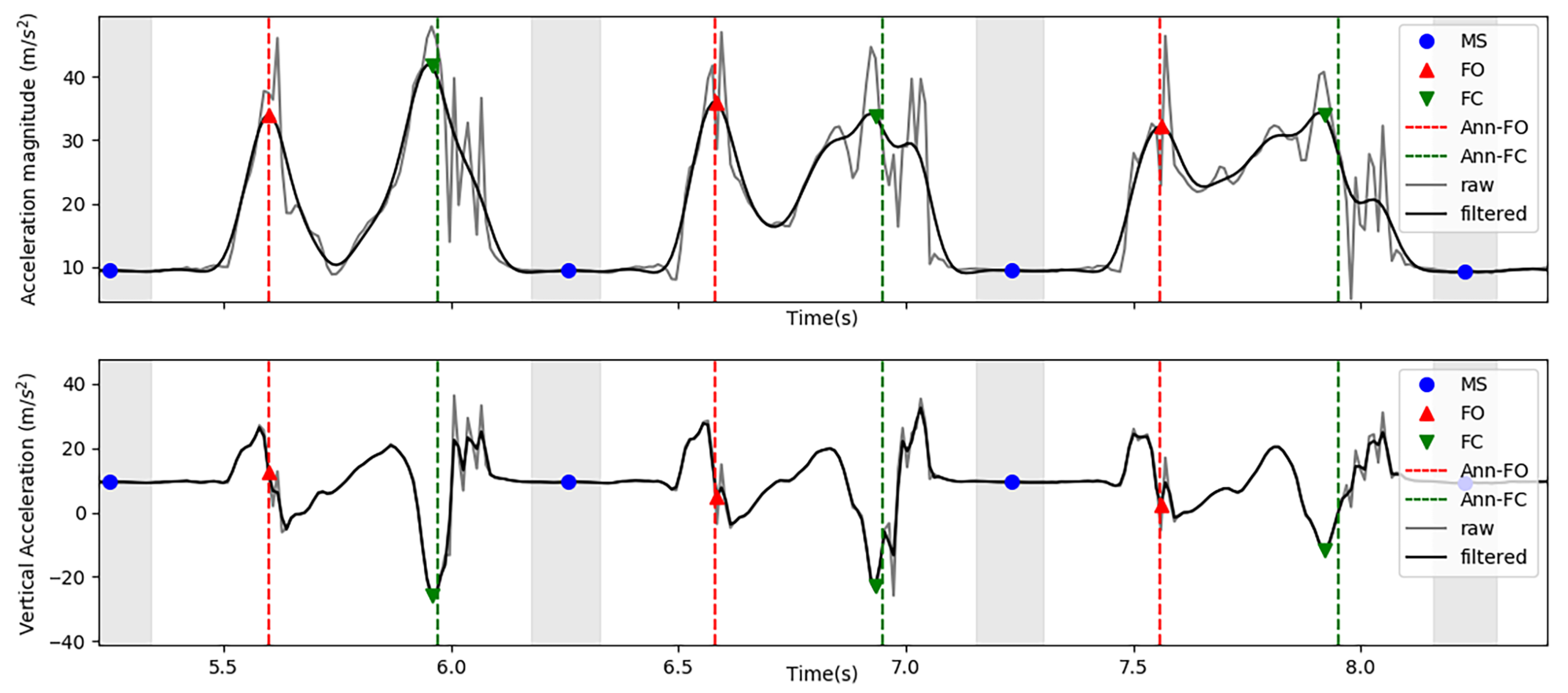

2.4. IMU Data Processing

2.4.1. Zero Velocity Detection

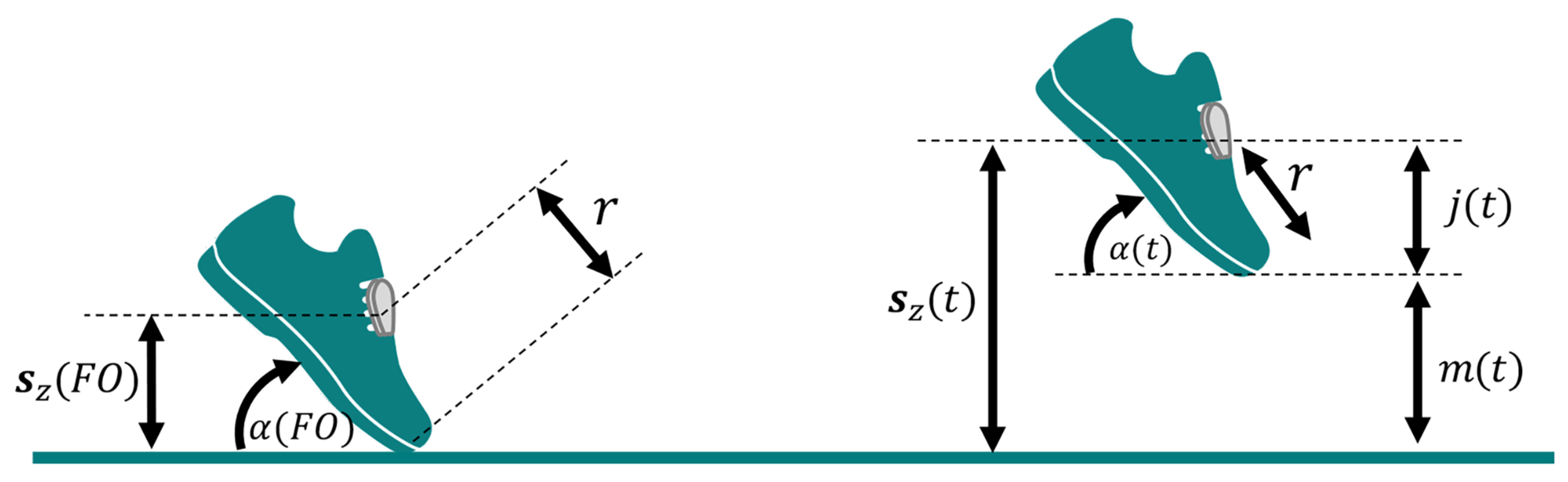
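The following is a minimal sketch of a zero velocity detector. It assumes raw accelerometer samples in m/s² and gyroscope samples in rad/s. A sample counts as stationary when two conditions hold: the angular-rate magnitude is small, and the acceleration magnitude is close to gravity. Only runs that last long enough are kept as zero velocity intervals (ZVI).

The function name, thresholds and minimum duration are illustrative assumptions, not the values used in this work. The detectors evaluated by Skog et al. (for example the SHOE detector) use windowed test statistics rather than this per-sample rule. Because only vector magnitudes enter the test, the result does not depend on how the sensor is mounted on the shoe.

```python
import math

GRAVITY = 9.81  # m/s^2, assumed local gravity magnitude


def detect_zero_velocity(acc, gyro, fs, gyro_thr=0.6, acc_thr=0.8, min_dur=0.05):
    """Return zero velocity intervals as (first, last) sample indices.

    acc: sequence of (ax, ay, az) in m/s^2; gyro: sequence of (gx, gy, gz) in rad/s.
    gyro_thr (rad/s), acc_thr (m/s^2) and min_dur (s) are hypothetical defaults.
    """
    still = []
    for a, w in zip(acc, gyro):
        w_norm = math.sqrt(sum(c * c for c in w))
        a_norm = math.sqrt(sum(c * c for c in a))
        # Magnitudes only: the test is unaffected by sensor mounting orientation
        still.append(w_norm < gyro_thr and abs(a_norm - GRAVITY) < acc_thr)

    min_len = max(1, round(min_dur * fs))
    intervals, start = [], None
    for i, s in enumerate(still + [False]):  # trailing False closes an open run
        if s and start is None:
            start = i
        elif not s and start is not None:
            if i - start >= min_len:
                intervals.append((start, i - 1))
            start = None
    return intervals
```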

2.4.2. Orientation Estimation
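TRIAD (tri-axial attitude determination) recovers a rotation from two non-parallel directions that are known both in the sensor frame and in a reference frame. One common use in foot-worn pipelines is to fix the initial attitude during a stance phase, with gravity as the first vector.

The sketch below makes the following assumptions:
- matrices are row-major 3 × 3 lists;
- the second direction pair is arbitrary (the choice of second vector is an assumption);
- `triad` and `rotate` are hypothetical helper names.

```python
import math


def _unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _triad_frame(v1, v2):
    """Orthonormal triad built from two non-parallel vectors (v1 kept exactly)."""
    t1 = _unit(v1)
    t2 = _unit(_cross(v1, v2))
    t3 = _cross(t1, t2)
    return [t1, t2, t3]


def triad(b1, b2, r1, r2):
    """Rotation matrix A (row-major) from the sensor frame to the reference frame.

    b1, b2: directions measured in the sensor frame (e.g. b1 = accelerometer at rest);
    r1, r2: the same directions in the reference frame (e.g. r1 = vertical).
    A maps b1 onto the direction of r1 exactly and places b2 in the plane of r1, r2.
    """
    tb = _triad_frame(b1, b2)
    tr = _triad_frame(r1, r2)
    # A = [t1r t2r t3r] [t1b t2b t3b]^T = sum_k tr_k tb_k^T
    return [[sum(tr[k][i] * tb[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotate(A, v):
    """Apply a 3 x 3 matrix to a 3-vector."""
    return [sum(A[i][j] * v[j] for j in range(3)) for i in range(3)]
```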

Gyroscope Integration
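Gyroscope integration propagates the orientation by composing, sample by sample, the small rotation measured by the gyroscope. The sketch below makes these assumptions:
- scalar-first quaternions describe the sensor-to-world rotation;
- rates are body-frame, in rad/s;
- the rate is held constant within each sample interval, so each step is an exact rotation of angle |ω|·Δt.

`quat_mul` and `integrate_gyro` are illustrative names, not the authors' code.

```python
import math


def quat_mul(p, q):
    """Hamilton product p * q, quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw)


def integrate_gyro(q0, gyro, dt):
    """Propagate q0 (sensor -> world) through body rates (rad/s) sampled every dt.

    Returns the list of orientations, starting with q0 (hypothetical helper).
    """
    qs, q = [q0], q0
    for wx, wy, wz in gyro:
        rate = math.sqrt(wx * wx + wy * wy + wz * wz)
        angle = rate * dt
        if angle > 1e-12:
            # Incremental rotation about the rate axis, applied in the body frame
            s = math.sin(angle / 2.0) / rate
            dq = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
            q = quat_mul(q, dq)
            n = math.sqrt(sum(c * c for c in q))
            q = tuple(c / n for c in q)  # renormalize to limit round-off drift
        qs.append(q)
    return qs
```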

Madgwick CF
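The gyroscope-plus-accelerometer (IMU) form of Madgwick's filter adds a normalized gradient-descent step to the gyroscope rate. This step pulls the predicted gravity direction toward the measured acceleration.

The sketch below follows the published IMU update, with the gradient written explicitly as the Jacobian transpose of the objective function. It assumes no magnetometer is used. The default gain `beta` is a placeholder, not the value selected in this work, and the function names are hypothetical.

```python
import math


def madgwick_imu_step(q, gyro, acc, dt, beta=0.1):
    """One Madgwick update using gyroscope and accelerometer only.

    q: (q0, q1, q2, q3), scalar first, rotation from sensor frame to earth frame.
    gyro: angular rate in rad/s (sensor frame); acc: accelerometer sample, any unit.
    beta: gradient-descent gain (placeholder value, not a tuned one).
    """
    q0, q1, q2, q3 = q
    gx, gy, gz = gyro
    # Quaternion derivative from the gyroscope: 0.5 * q (x) (0, gx, gy, gz)
    d0 = 0.5 * (-q1 * gx - q2 * gy - q3 * gz)
    d1 = 0.5 * (q0 * gx + q2 * gz - q3 * gy)
    d2 = 0.5 * (q0 * gy - q1 * gz + q3 * gx)
    d3 = 0.5 * (q0 * gz + q1 * gy - q2 * gx)

    norm_a = math.sqrt(sum(c * c for c in acc))
    if norm_a > 0.0:
        ax, ay, az = (c / norm_a for c in acc)
        # Objective f: gravity direction predicted by q minus the measured one
        f1 = 2.0 * (q1 * q3 - q0 * q2) - ax
        f2 = 2.0 * (q0 * q1 + q2 * q3) - ay
        f3 = 2.0 * (0.5 - q1 * q1 - q2 * q2) - az
        # Gradient of 0.5 * |f|^2, i.e. J^T f
        s0 = -2.0 * q2 * f1 + 2.0 * q1 * f2
        s1 = 2.0 * q3 * f1 + 2.0 * q0 * f2 - 4.0 * q1 * f3
        s2 = -2.0 * q0 * f1 + 2.0 * q3 * f2 - 4.0 * q2 * f3
        s3 = 2.0 * q1 * f1 + 2.0 * q2 * f2
        norm_s = math.sqrt(s0 * s0 + s1 * s1 + s2 * s2 + s3 * s3)
        if norm_s > 0.0:
            # Subtract a normalized gradient step scaled by beta
            d0 -= beta * s0 / norm_s
            d1 -= beta * s1 / norm_s
            d2 -= beta * s2 / norm_s
            d3 -= beta * s3 / norm_s

    # Euler integration followed by renormalization
    q0, q1, q2, q3 = q0 + d0 * dt, q1 + d1 * dt, q2 + d2 * dt, q3 + d3 * dt
    n = math.sqrt(q0 * q0 + q1 * q1 + q2 * q2 + q3 * q3)
    return (q0 / n, q1 / n, q2 / n, q3 / n)


def gravity_in_sensor(q):
    """Unit 'up' direction predicted by q, expressed in the sensor frame."""
    q0, q1, q2, q3 = q
    return (2.0 * (q1 * q3 - q0 * q2),
            2.0 * (q0 * q1 + q2 * q3),
            1.0 - 2.0 * (q1 * q1 + q2 * q2))
```

With a stationary sensor, repeated calls drive `gravity_in_sensor(q)` toward the normalized accelerometer reading. `beta` trades gyroscope trust against accelerometer correction.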

Euston CF
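The Euston CF is built on the explicit complementary filter of Mahony and co-workers. In that filter, the gyroscope rate is corrected by a proportional-integral term driven by the cross product of the measured and predicted gravity directions.

The sketch below shows only that generic gyroscope-plus-accelerometer update. Any vehicle-specific acceleration compensation from the original fixed-wing UAV formulation is omitted. The gains `kp` and `ki` and the function name are hypothetical.

```python
import math


def mahony_imu_step(q, gyro, acc, dt, integral, kp=1.0, ki=0.05):
    """One explicit complementary filter update (gyroscope + accelerometer).

    q: (q0, q1, q2, q3), scalar first, sensor -> earth rotation.
    integral: list of 3 floats holding the integrated error; updated in place.
    kp, ki: proportional and integral gains (hypothetical values).
    """
    q0, q1, q2, q3 = q
    gx, gy, gz = gyro
    norm_a = math.sqrt(sum(c * c for c in acc))
    if norm_a > 0.0:
        ax, ay, az = (c / norm_a for c in acc)
        # 'Up' direction predicted by the current estimate, in the sensor frame
        vx = 2.0 * (q1 * q3 - q0 * q2)
        vy = 2.0 * (q0 * q1 + q2 * q3)
        vz = q0 * q0 - q1 * q1 - q2 * q2 + q3 * q3
        # Error: cross product of measured and predicted directions
        ex = ay * vz - az * vy
        ey = az * vx - ax * vz
        ez = ax * vy - ay * vx
        # Integral term (absorbs slowly varying gyroscope bias)
        integral[0] += ki * ex * dt
        integral[1] += ki * ey * dt
        integral[2] += ki * ez * dt
        # Corrected rate: gyro + Kp * e + Ki * integral(e)
        gx += kp * ex + integral[0]
        gy += kp * ey + integral[1]
        gz += kp * ez + integral[2]

    # Integrate q_dot = 0.5 * q (x) (0, w) with one Euler step, then renormalize
    d0 = 0.5 * (-q1 * gx - q2 * gy - q3 * gz)
    d1 = 0.5 * (q0 * gx + q2 * gz - q3 * gy)
    d2 = 0.5 * (q0 * gy - q1 * gz + q3 * gx)
    d3 = 0.5 * (q0 * gz + q1 * gy - q2 * gx)
    q0, q1, q2, q3 = q0 + d0 * dt, q1 + d1 * dt, q2 + d2 * dt, q3 + d3 * dt
    n = math.sqrt(q0 * q0 + q1 * q1 + q2 * q2 + q3 * q3)
    return (q0 / n, q1 / n, q2 / n, q3 / n)
```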

2.4.3. Double Integration

Linear Dedrifting
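Linear dedrifting integrates acceleration over one stride, from the end of one zero velocity interval to the start of the next. Any velocity left at the second interval is treated as drift that grew linearly in time, and that drift is subtracted before integrating to position.

The sketch below makes these assumptions:
- it handles one axis at a time;
- input is earth-frame, gravity-free acceleration;
- trapezoidal integration is used;
- `cumtrapz` and `dedrift_stride` are hypothetical names.

```python
def cumtrapz(x, dt, x0=0.0):
    """Cumulative trapezoidal integral of uniformly sampled x, starting at x0."""
    out = [x0]
    for k in range(1, len(x)):
        out.append(out[-1] + 0.5 * (x[k - 1] + x[k]) * dt)
    return out


def dedrift_stride(acc, dt):
    """Linearly dedrifted velocity and position for one axis over one stride.

    acc: gravity-free, earth-frame acceleration (m/s^2) for one axis, from the
    last sample of one zero velocity interval to the first sample of the next
    (at least two samples). Velocity is assumed to be zero at both ends.
    """
    v = cumtrapz(acc, dt)
    n = len(v) - 1
    drift = v[-1]  # non-zero end velocity attributed to drift
    # Assume the drift grew linearly in time and subtract it
    v = [vk - drift * k / n for k, vk in enumerate(v)]
    p = cumtrapz(v, dt)  # displacement relative to the stride start
    return v, p
```

A constant accelerometer bias, for instance, produces a velocity ramp that this correction removes exactly.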

Direct and Reverse Integration
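Direct and reverse integration computes velocity twice over a stride and blends the two estimates:
- forward (direct), starting from zero velocity at the first sample;
- backward (reverse), starting from zero velocity at the last sample.

The direct estimate is trusted early in the stride and the reverse one late. The weighting function is an assumption here (linear by default), and `direct_reverse_velocity` is a hypothetical name.

```python
def linear_weight(s):
    """Share of the reverse estimate at normalized time s in [0, 1]."""
    return s


def direct_reverse_velocity(acc, dt, weight=linear_weight):
    """Blend direct (forward) and reverse (backward) velocity integration.

    acc: gravity-free, earth-frame acceleration for one axis over one stride,
    with zero velocity assumed at the first and the last sample.
    weight: function of normalized time; linear by default (an assumption).
    """
    n = len(acc) - 1
    # Direct integration from the start, with v = 0 at the first sample
    fwd = [0.0]
    for k in range(1, n + 1):
        fwd.append(fwd[-1] + 0.5 * (acc[k - 1] + acc[k]) * dt)
    # Reverse integration from the end, with v = 0 at the last sample
    rev = [0.0] * (n + 1)
    for k in range(n - 1, -1, -1):
        rev[k] = rev[k + 1] - 0.5 * (acc[k] + acc[k + 1]) * dt
    # Trust the direct estimate early in the stride, the reverse one late
    return [(1.0 - weight(k / n)) * fwd[k] + weight(k / n) * rev[k]
            for k in range(n + 1)]
```

Suppose both passes use the same trapezoidal increments. Then the reverse estimate equals the direct one minus its end value, so with the linear weight the blend reduces to `fwd[k] - (k / n) * fwd[n]`. That is the same velocity as linear dedrifting. A non-linear weight such as 3s² − 2s³ still meets both zero-velocity end conditions but distributes the correction differently along the stride.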

2.4.4. Events Detection

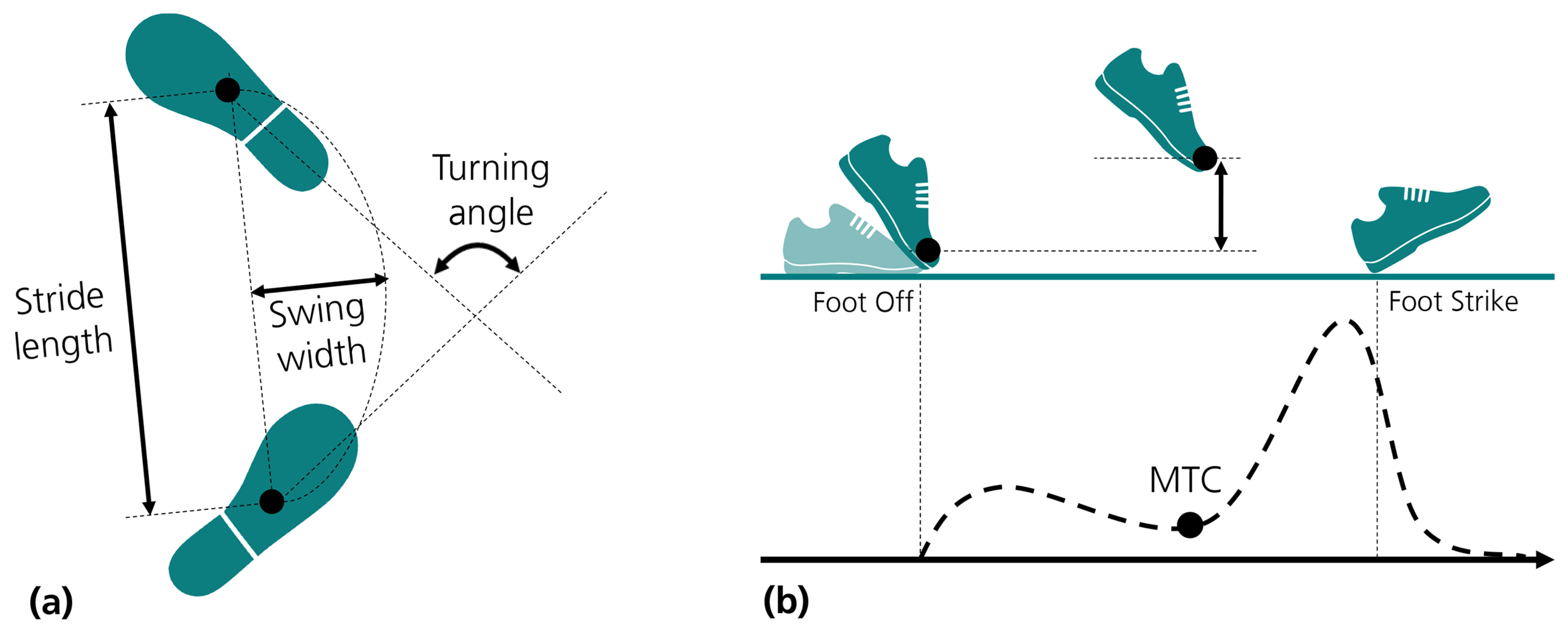

2.4.5. Gait Parameters Estimation
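Once the initial foot contact (FC) and foot off (FO) instants and the foot position during each stance are available, the basic spatio-temporal parameters follow from differences between consecutive strides.

The sketch below makes these assumptions:
- it processes one foot;
- FO[i] falls between FC[i] and FC[i + 1];
- the z axis is vertical;
- stride length (SL) is the horizontal distance between consecutive stance positions;
- the function and key names are hypothetical.

Cadence is expressed in steps per minute, counting two steps per stride.

```python
import math


def stride_parameters(fc, fo, stance_pos):
    """Per-stride spatio-temporal parameters for one foot (hypothetical helper).

    fc: initial foot contact times in s; stride i runs from fc[i] to fc[i + 1].
    fo: foot-off times in s, with fc[i] < fo[i] < fc[i + 1].
    stance_pos: (x, y, z) foot position in m during each stance, z vertical.
    """
    params = []
    for i in range(len(fc) - 1):
        stride_dur = fc[i + 1] - fc[i]
        stance_dur = fo[i] - fc[i]
        # Stride length: horizontal displacement between consecutive stances
        dx = stance_pos[i + 1][0] - stance_pos[i][0]
        dy = stance_pos[i + 1][1] - stance_pos[i][1]
        sl = math.hypot(dx, dy)
        params.append({
            "stride_dur": stride_dur,
            "stance_dur": stance_dur,
            "swing_dur": stride_dur - stance_dur,
            "cadence": 120.0 / stride_dur,  # steps/min, two steps per stride
            "stride_length": sl,
            "speed": sl / stride_dur,
        })
    return params
```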

2.5. Experiments

2.5.1. Parameter Tuning and Algorithm Selection

2.5.2. Instrument Comparison and Validation

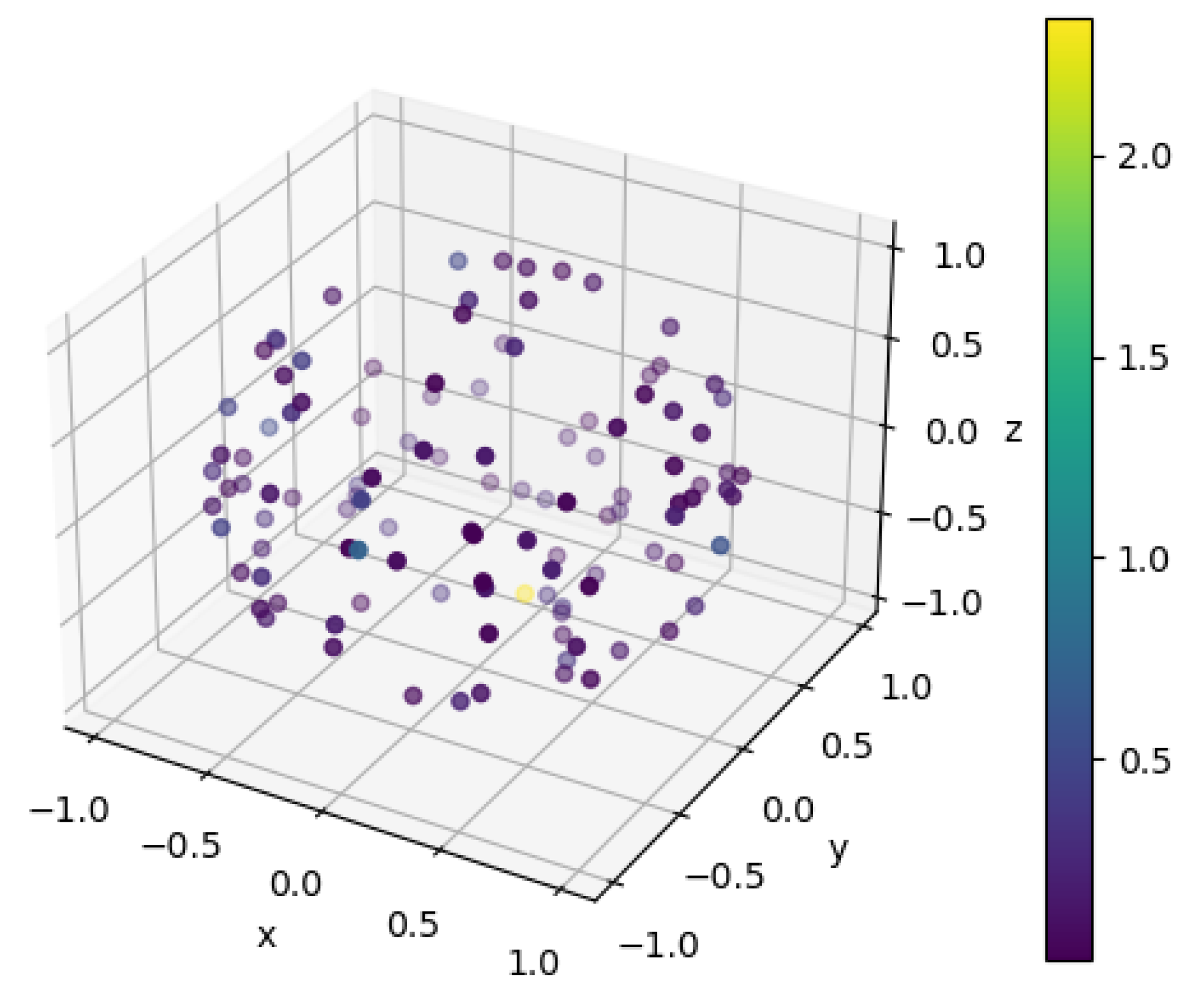
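The error measures reported against VICON (relative error, absolute error, limits of agreement and RMSE) can be computed from paired stride values as sketched below. The limits of agreement follow the Bland–Altman convention: mean difference ± 1.96 standard deviations of the differences.

Two choices are assumptions: the sign convention (IMU minus reference) and the use of a signed relative error in percent. `agreement` is a hypothetical name.

```python
import math
import statistics


def agreement(imu, ref):
    """Agreement statistics for paired IMU and reference values (hypothetical helper).

    Differences are taken as IMU minus reference (assumed convention). Needs at
    least two pairs and non-zero reference values.
    """
    d = [a - b for a, b in zip(imu, ref)]
    bias = statistics.mean(d)
    sd = statistics.stdev(d)  # sample standard deviation of the differences
    return {
        "bias": bias,
        "abs_error": statistics.mean([abs(x) for x in d]),
        "rel_error_pct": statistics.mean([100.0 * x / r for x, r in zip(d, ref)]),
        "rmse": math.sqrt(statistics.mean([x * x for x in d])),
        # Bland-Altman 95% limits of agreement
        "loa": (bias - 1.96 * sd, bias + 1.96 * sd),
    }
```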

2.5.3. Orientation Invariance
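Orientation invariance can be probed by applying a random rotation to the raw signals, as if the same foot motion had been recorded with the sensor mounted differently, and then re-running the pipeline.

The sketch below does two things:
- it draws uniformly distributed rotations with Shoemake's method;
- it applies one fixed rotation to every accelerometer and gyroscope sample of a recording.

The function names are hypothetical.

```python
import math
import random


def random_quaternion(rng=random):
    """Uniformly distributed random rotation (Shoemake's method), as (w, x, y, z)."""
    u1, u2, u3 = rng.random(), rng.random(), rng.random()
    a, b = math.sqrt(1.0 - u1), math.sqrt(u1)
    return (b * math.cos(2.0 * math.pi * u3),
            a * math.sin(2.0 * math.pi * u2),
            a * math.cos(2.0 * math.pi * u2),
            b * math.sin(2.0 * math.pi * u3))


def rotate(q, v):
    """Rotate the 3-vector v by the unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    r = ((1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)),
         (2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)),
         (2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)))
    return tuple(sum(r[i][j] * v[j] for j in range(3)) for i in range(3))


def remount(acc, gyro, q):
    """Apply the same fixed rotation q to every sample, emulating another mounting."""
    return [rotate(q, a) for a in acc], [rotate(q, g) for g in gyro]
```

Passing a seeded `random.Random` instance as `rng` makes the set of rotations reproducible across runs.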

3. Results

3.1. Algorithm Selection and Parameter Tuning

3.2. Validation

3.3. Orientation Invariance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CF | Complementary filter |
| FC | Initial foot contact |
| FO | Foot off |
| IMU | Inertial measurement unit |
| MTC | Minimum toe clearance |
| PCA | Principal component analysis |
| RMSD | Root mean square deviation |
| RMSE | Root mean square error |
| SL | Stride length |
| SW | Swing width |
| TRIAD | Tri-axial attitude determination |
| ZVI | Zero velocity interval |

References

- Akhtaruzzaman, M.; Shafie, A.A.; Khan, M.R. Gait analysis: Systems, technologies, and importance. J. Mech. Med. Biol. 2016, 16, 1630003.

- Chen, S.; Lach, J.; Lo, B.; Yang, G.Z. Toward Pervasive Gait Analysis With Wearable Sensors: A Systematic Review. IEEE J. Biomed. Health Inform. 2016, 20, 1521–1537.

- Marques, N.R.; Spinoso, D.H.; Cardoso, B.C.; Moreno, V.C.; Kuroda, M.H.; Navega, M.T. Is it possible to predict falls in older adults using gait kinematics? Clin. Biomech. 2018, 59, 15–18.

- Chhetri, J.K.; Chan, P.; Vellas, B.; Cesari, M. Motoric Cognitive Risk Syndrome: Predictor of Dementia and Age-Related Negative Outcomes. Front. Med. 2017, 4.

- Schoon, Y.; Bongers, K.; Van Kempen, J.; Melis, R.; Olde Rikkert, M. Gait speed as a test for monitoring frailty in community-dwelling older people has the highest diagnostic value compared to step length and chair rise time. Eur. J. Phys. Rehabil. Med. 2014, 50, 693–701.

- Mariani, B.; Hoskovec, C.; Rochat, S.; Büla, C.; Penders, J.; Aminian, K. 3D gait assessment in young and elderly subjects using foot-worn inertial sensors. J. Biomech. 2010, 43, 2999–3006.

- Mariani, B.; Jiménez, M.C.; Vingerhoets, F.J.G.; Aminian, K. On-Shoe Wearable Sensors for Gait and Turning Assessment of Patients With Parkinson’s Disease. IEEE Trans. Biomed. Eng. 2013, 60, 155–158.

- Rampp, A.; Barth, J.; Schülein, S.; Gaßmann, K.G.; Klucken, J.; Eskofier, B.M. Inertial sensor-based stride parameter calculation from gait sequences in geriatric patients. IEEE Trans. Biomed. Eng. 2015, 62, 1089–1097.

- Kanzler, C.M.; Barth, J.; Rampp, A.; Schlarb, H.; Rott, F.; Klucken, J.; Eskofier, B.M. Inertial sensor based and shoe size independent gait analysis including heel and toe clearance estimation. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5424–5427.

- Petraglia, F.; Scarcella, L.; Pedrazzi, G.; Brancato, L.; Puers, R.; Costantino, C. Inertial sensors versus standard systems in gait analysis: A systematic review and meta-analysis. Eur. J. Phys. Rehabil. Med. 2019, 55, 265–280.

- Tunca, C.; Pehlivan, N.; Ak, N.; Arnrich, B.; Salur, G.; Ersoy, C. Inertial Sensor-Based Robust Gait Analysis in Non-Hospital Settings for Neurological Disorders. Sensors 2017, 17, 825.

- Peruzzi, A.; Della Croce, U.; Cereatti, A. Estimation of stride length in level walking using an inertial measurement unit attached to the foot: A validation of the zero velocity assumption during stance. J. Biomech. 2011, 44, 1991–1994.

- Hannink, J.; Ollenschläger, M.; Kluge, F.; Roth, N.; Klucken, J.; Eskofier, B.M. Benchmarking Foot Trajectory Estimation Methods for Mobile Gait Analysis. Sensors 2017, 17, 1940.

- Sabatini, A.M.; Martelloni, C.; Scapellato, S.; Cavallo, F. Assessment of walking features from foot inertial sensing. IEEE Trans. Biomed. Eng. 2005, 52, 486–494.

- Kluge, F.; Gaßner, H.; Hannink, J.; Pasluosta, C.; Klucken, J.; Eskofier, B.M. Towards Mobile Gait Analysis: Concurrent Validity and Test-Retest Reliability of an Inertial Measurement System for the Assessment of Spatio-Temporal Gait Parameters. Sensors 2017, 17, 1522.

- Hori, K.; Mao, Y.; Ono, Y.; Ora, H.; Hirobe, Y.; Sawada, H.; Inaba, A.; Orimo, S.; Miyake, Y. Inertial Measurement Unit-Based Estimation of Foot Trajectory for Clinical Gait Analysis. Front. Physiol. 2019, 10, 1530.

- Madgwick, S.O.H.; Harrison, A.J.L.; Vaidyanathan, R. Estimation of IMU and MARG orientation using a gradient descent algorithm. In Proceedings of the 2011 IEEE International Conference on Rehabilitation Robotics, Zurich, Switzerland, 29 June–1 July 2011; pp. 1–7.

- Euston, M.; Coote, P.; Mahony, R.; Kim, J.; Hamel, T. A complementary filter for attitude estimation of a fixed-wing UAV. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 340–345.

- Basso, M.; Martinelli, A.; Morosi, S.; Sera, F. A Real-Time GNSS/PDR Navigation System for Mobile Devices. Remote Sens. 2021, 13, 1567.

- Leonardo, R.; Rodrigues, G.; Barandas, M.; Alves, P.; Santos, R.; Gamboa, H. Determination of the Walking Direction of a Pedestrian from Acceleration Data. In Proceedings of the 2019 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019; pp. 1–6.

- Falbriard, M.; Meyer, F.; Mariani, B.; Millet, G.P.; Aminian, K. Accurate Estimation of Running Temporal Parameters Using Foot-Worn Inertial Sensors. Front. Physiol. 2018, 9.

- Mariani, B.; Rochat, S.; Büla, C.J.; Aminian, K. Heel and toe clearance estimation for gait analysis using wireless inertial sensors. IEEE Trans. Biomed. Eng. 2012, 59, 3162–3168.

- Byun, S.; Lee, H.J.; Han, J.W.; Kim, J.S.; Choi, E.; Kim, K.W. Walking-speed estimation using a single inertial measurement unit for the older adults. PLoS ONE 2019, 14, e0227075.

- Hannink, J.; Kautz, T.; Pasluosta, C.F.; Barth, J.; Schülein, S.; Gaßmann, K.; Klucken, J.; Eskofier, B.M. Mobile Stride Length Estimation With Deep Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2018, 22, 354–362.

- Lambrecht, S.; Harutyunyan, A.; Tanghe, K.; Afschrift, M.; De Schutter, J.; Jonkers, I. Real-Time Gait Event Detection Based on Kinematic Data Coupled to a Biomechanical Model. Sensors 2017, 17, 671.

- Hreljac, A.; Marshall, R.N. Algorithms to determine event timing during normal walking using kinematic data. J. Biomech. 2000, 33, 783–786.

- Huxham, F.; Gong, J.; Baker, R.; Morris, M.; Iansek, R. Defining spatial parameters for non-linear walking. Gait Posture 2006, 23, 159–163.

- Skog, I.; Nilsson, J.; Händel, P. Evaluation of zero-velocity detectors for foot-mounted inertial navigation systems. In Proceedings of the 2010 International Conference on Indoor Positioning and Indoor Navigation, Zurich, Switzerland, 15–17 September 2010; pp. 1–6.

- Shuster, M.D.; Oh, S.D. Three-axis attitude determination from vector observations. J. Guid. Control 1981, 4, 70–77.

- Madgwick, S.O.H. An Efficient Orientation Filter for Inertial and Inertial/Magnetic Sensor Arrays; Technical Report, Report x-io; University of Bristol (UK): Bristol, UK, 2010.

- Altman, D.G.; Bland, J.M. Measurement in Medicine: The Analysis of Method Comparison Studies. Statistician 1983, 32, 307.

- Ravi, D.K.; Gwerder, M.; König Ignasiak, N.; Baumann, C.R.; Uhl, M.; van Dieën, J.H.; Taylor, W.R.; Singh, N.B. Revealing the optimal thresholds for movement performance: A systematic review and meta-analysis to benchmark pathological walking behaviour. Neurosci. Biobehav. Rev. 2020, 108, 24–33.

- Shoemake, K. Uniform Random Rotations. In Graphics Gems III (IBM Version); Elsevier: Amsterdam, The Netherlands, 1992; pp. 124–132.

- Mukaka, M. A guide to appropriate use of Correlation coefficient in medical research. Malawi Med. J. 2012, 24, 69–71.

- Mariani, B.; Rouhani, H.; Crevoisier, X.; Aminian, K. Quantitative estimation of foot-flat and stance phase of gait using foot-worn inertial sensors. Gait Posture 2013, 37, 229–234.

- Han, S.; Meng, Z.; Omisore, O.; Akinyemi, T.; Yan, Y. Random Error Reduction Algorithms for MEMS Inertial Sensor Accuracy Improvement—A Review. Micromachines 2020, 11, 1021.

| Metric | Development Set (7 Subjects/45 Samples) | Validation Set (19 Subjects/115 Samples) |
|---|---|---|
| Height (cm) | | |
| Gender (female/male) | 4/3 | 9/10 |
| Weight (kg) | | |
| Foot size (cm) | | |

| Type | Methods | Coarse Grid-Search | Fine Grid-Search |
|---|---|---|---|
| Orientation estimation | Madgwick CF | | |
| | Euston CF | | |
| | Gyroscope integration | Not applicable | Not applicable |
| Double integration | Direct and reverse integration | | |
| | Linear dedrifting | Not applicable | Not applicable |
| | Horizontal correction | {True, False} | {True} |

| Metric | IMU | VICON | Rel. Error | Abs. Error | RMSE |
|---|---|---|---|---|---|
| Stride dur. (s) | | | | | |
| Swing dur. (s) | | | | | |
| Stance dur. (s) | | | | | |
| Cad. (st/min) | | | | | |
| SL (cm) | | | | | |
| Speed (cm/s) | | | | | |
| SW (cm) | | | | | |
| MTC (cm) | | | | | |
| Turn angle (°) | | | | | |

| Metric | IMU | VICON | Rel. Error | Abs. Error | Lim. Agr. | RMSE | Corr. † | Equival. ‡ |
|---|---|---|---|---|---|---|---|---|
| Stride dur. (s) | | | | | | | | |
| Swing dur. (s) | | | | | | | | |
| Stance dur. (s) | | | | | | | | |
| Cad. (st/min) | | | | | | | | |
| SL (cm) | | | | | | | | |
| Speed (cm/s) | | | | | | | | |
| SW (cm) | | | | | | | | |
| MTC (cm) | | | | | | | | |
| Turn angle (°) | | | | | | | | |

| Metric | IMU | VICON | Rel. Error | Abs. Error | Lim. Agr. | RMSE | Corr. † | Equival. ‡ |
|---|---|---|---|---|---|---|---|---|
| Stride dur. (s) | | | | | | | | |
| Swing dur. (s) | | | | | | | | |
| Stance dur. (s) | | | | | | | | |
| Cad. (st/min) | | | | | | | | |
| SL (cm) | | | | | | | | |
| Speed (cm/s) | | | | | | | | |
| SW (cm) | | | | | | | | |
| MTC (cm) | | | | | | | | |
| Turn angle (°) | | | | | | | | |

| Metric | Original Orientation | Random Rotations | RMSD | Correlation † | Equivalence ‡ |
|---|---|---|---|---|---|
| Stride dur. (s) | | | | | |
| Swing dur. (s) | | | | | |
| Stance dur. (s) | | | | | |
| Cad. (st/min) | | | | | |
| SL (cm) | | | | | |
| Speed (cm/s) | | | | | |
| SW (cm) | | | | | |
| MTC (cm) | | | | | |
| Turn angle (°) | | | | | |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guimarães, V.; Sousa, I.; Correia, M.V. Orientation-Invariant Spatio-Temporal Gait Analysis Using Foot-Worn Inertial Sensors. Sensors 2021, 21, 3940. https://doi.org/10.3390/s21113940
